Vision-Based Mid-Air Object Detection and Avoidance Approach for Small Unmanned Aerial Vehicles with Deep Learning and Risk Assessment

Abstract

1. Introduction

1.1. Airborne Sensing Technologies and Collision Avoidance Strategies for UAVs

1.2. Vision-Based Object Detection with Deep Learning Technology

1.3. Vision-Based Reactive Avoidance Methods for UAVs

- (1) A comprehensive mid-air collision avoidance procedure for fixed-wing UAVs is proposed, based on a collision risk determined with a monocular camera.

- (2) The proposed detect-and-avoid (DAA) approach does not require the sizes of the intruders or the exact distance between an intruder and the host UAV to train the deep learning model.

- (3) The DAA can be triggered 10 s or more before a potential collision. To ensure sufficient reaction time, the sensing range in this study must therefore cover more than 10 s of flight before the fixed-wing intruder reaches the host UAV.

- (4)

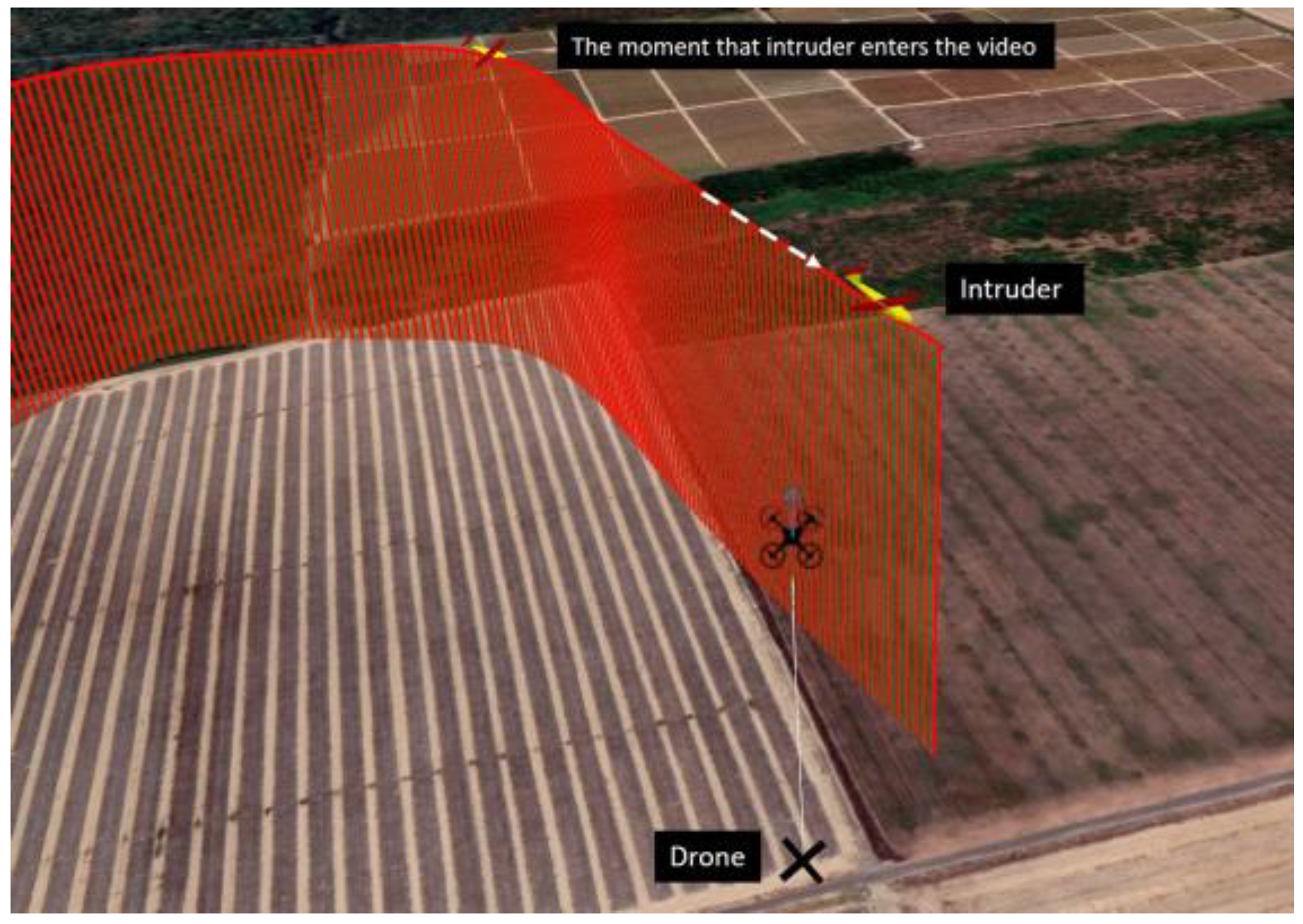

- Flight tests were conducted to demonstrate long-range object detection and collect data for the risk-based collision avoidance method.

- (5)

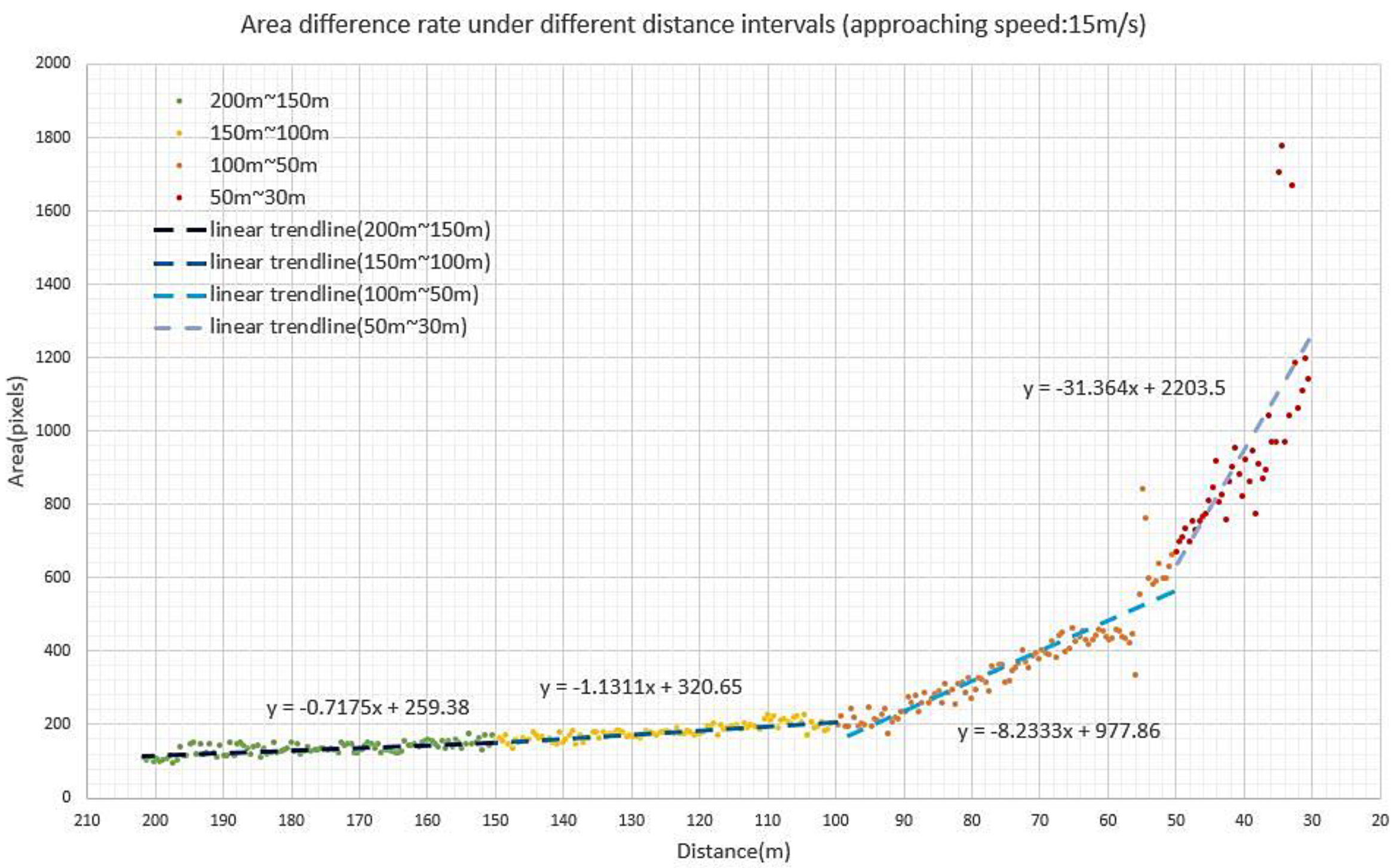

- A collision avoidance strategy is proposed and evaluated in the simulations to verify the developed avoidance strategy with the proposed collision risk assessment, which is determined by the area expansion rate and bearing angle of the intruder in the images.
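Two of the contributions listed here, the 10 s warning time and the use of the area expansion rate, can be illustrated with standard relations. The sketch below is not taken from the paper's equations. It assumes a pinhole camera, a constant closing speed, and an intruder of fixed physical size, so that the image area scales as 1/d² and the time to collision is approximately 2A/(dA/dt) without knowing the intruder's size or distance. The function names are hypothetical.

```python
def required_sensing_range(closing_speed_mps: float, warning_time_s: float = 10.0) -> float:
    """Distance the intruder covers during the warning time at a constant closing speed."""
    return closing_speed_mps * warning_time_s


def ttc_from_area(area_prev_px: float, area_curr_px: float, dt_s: float) -> float:
    """Approximate time to collision (s) from two successive image-area measurements.

    Assumes area ~ 1/d^2 and constant closing speed, which gives TTC = 2*A / (dA/dt).
    Returns infinity when the area is not growing (the intruder is not approaching).
    """
    expansion_rate = (area_curr_px - area_prev_px) / dt_s
    if expansion_rate <= 0.0:
        return float("inf")
    return 2.0 * area_curr_px / expansion_rate


if __name__ == "__main__":
    # Head-on example with both aircraft at 20 m/s (closing speed 40 m/s).
    print(required_sensing_range(40.0))
    # Synthetic areas for an intruder closing from 200.2 m to 200.0 m at 20 m/s.
    k = 1.0e6  # arbitrary scale: area = k / d^2
    print(ttc_from_area(k / 200.2**2, k / 200.0**2, dt_s=0.01))
```

The finite-difference estimate is sensitive to noise in the measured area, so a practical system would likely smooth the area over several frames before differentiating.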

2. Vision-Based Long-Range UAV Detection with Deep Learning Technology

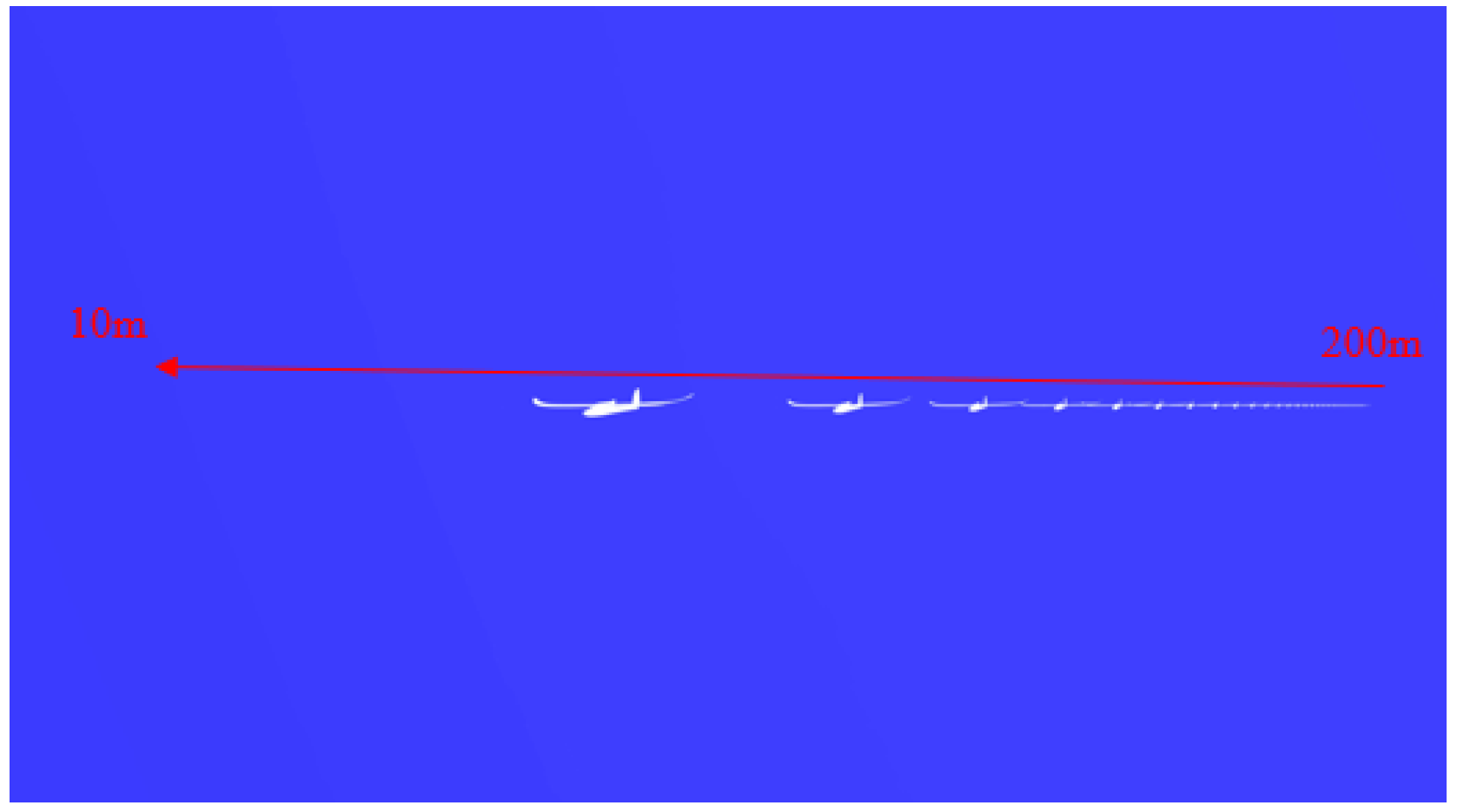

2.1. Long Distance Object Detection

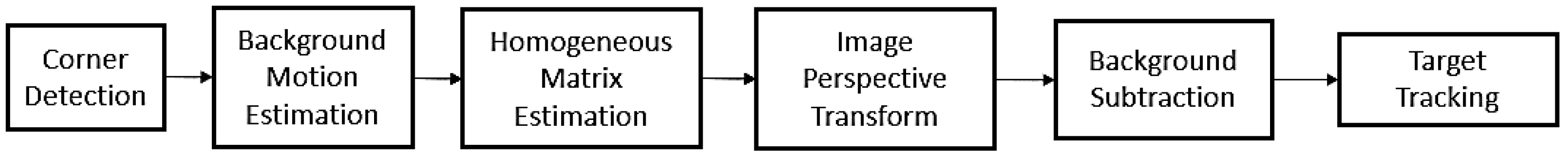

2.1.1. Homogeneous Matrix Estimation and Image Perspective Transform

2.1.2. Background Subtraction and Object Detection

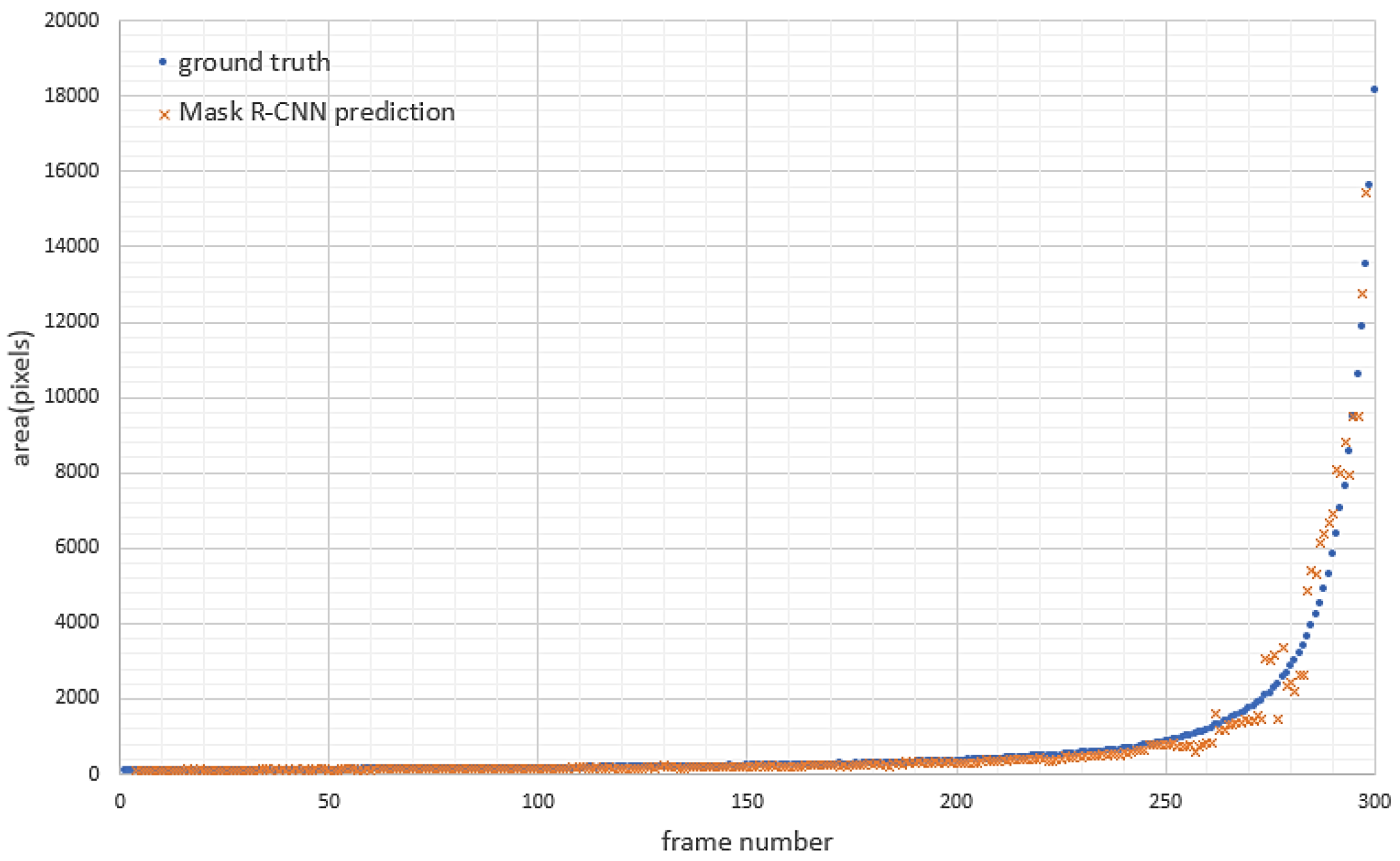

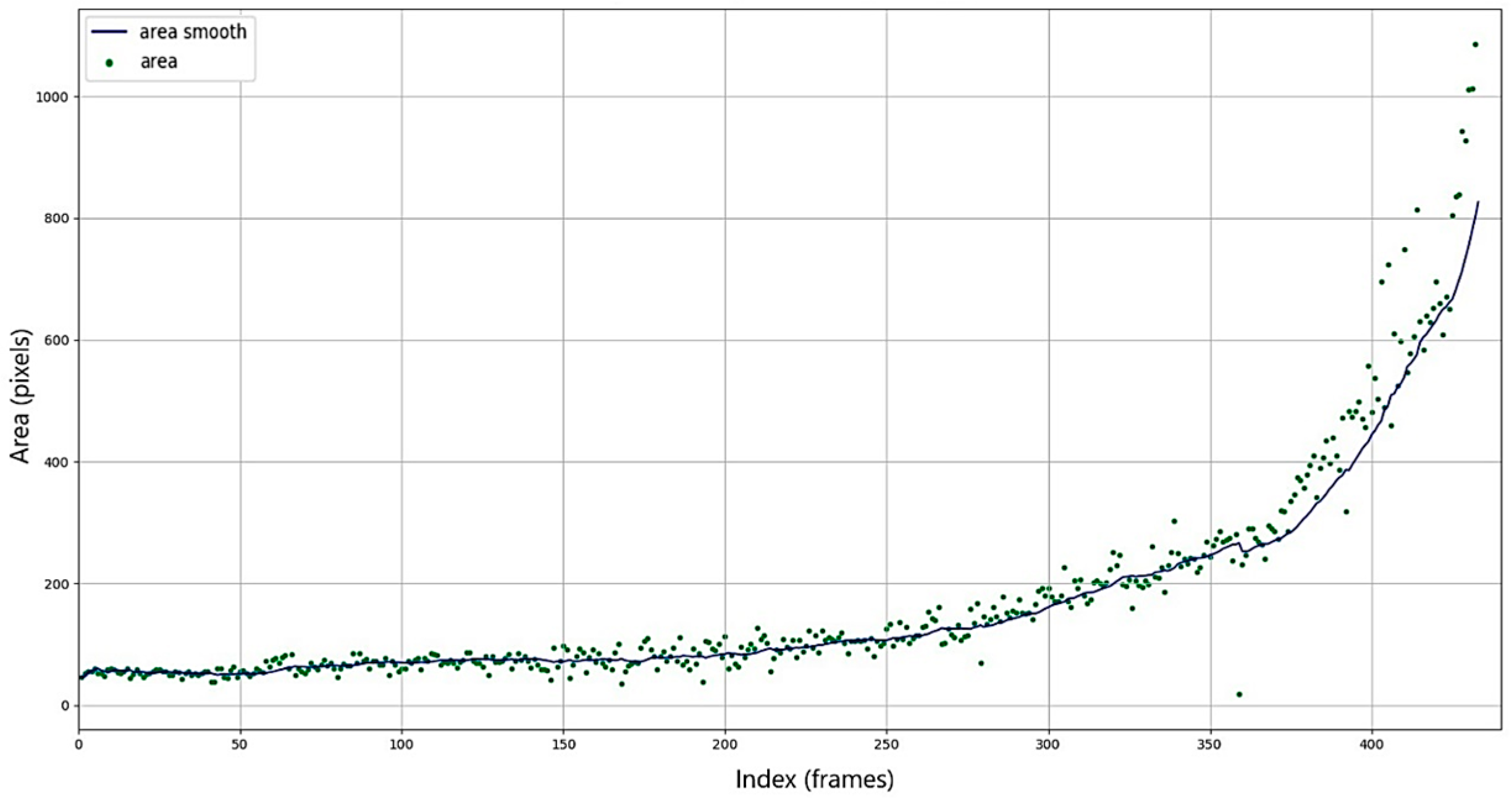

2.2. Object Area Estimation

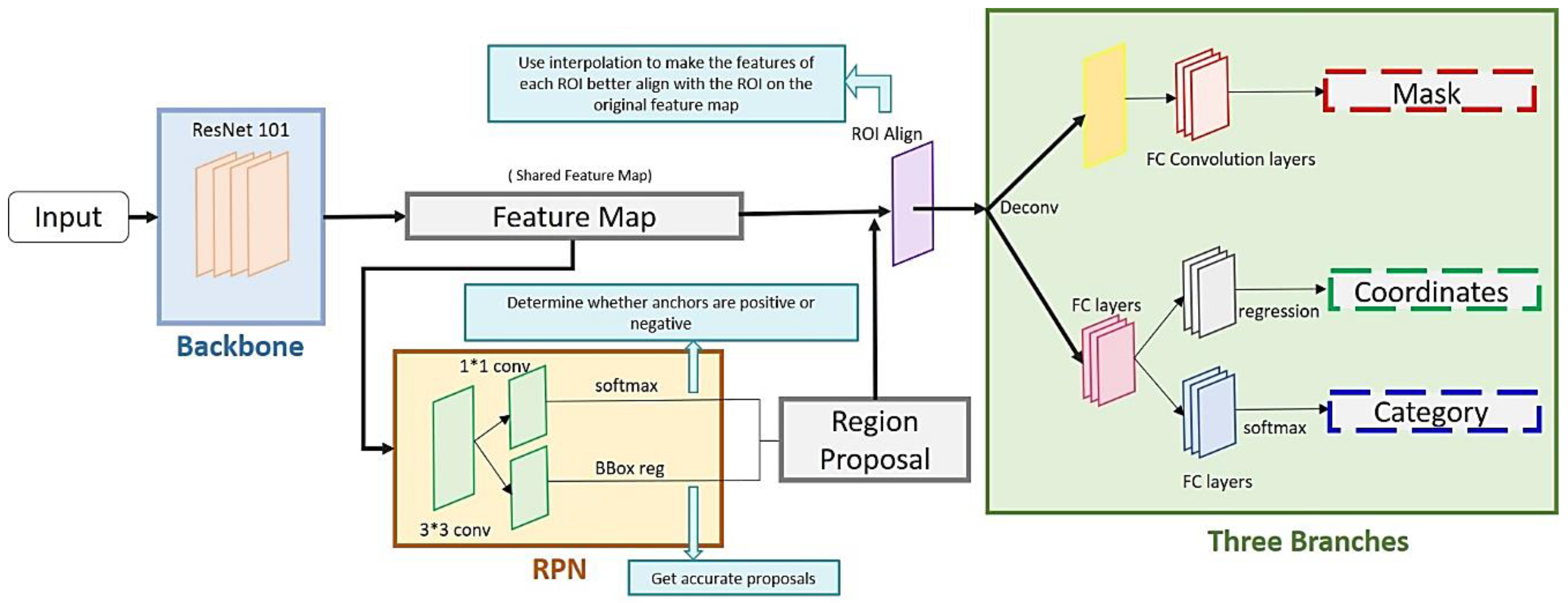

2.2.1. Mask R-CNN Detector

- (1) Compared with earlier R-CNN variants, Mask R-CNN adds a new output, the mask, which represents the area of the object.

- (2) As a two-stage detector, Mask R-CNN has relatively high accuracy in object instance segmentation.
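Because the mask is a per-pixel labeling of the object, its area in the image is simply the number of foreground pixels. The following minimal sketch assumes the mask has already been thresholded to a binary grid (nested lists of 0/1); it does not call any Mask R-CNN library, and the helper names are hypothetical.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]


def mask_area(mask: Mask) -> int:
    """Count the foreground pixels of a binary mask."""
    return sum(1 for row in mask for px in row if px)


def mask_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) of the foreground, or None if the mask is empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, px in enumerate(row) if px]
    if not rows:
        return None
    return (min(cols), min(rows), max(cols), max(rows))


if __name__ == "__main__":
    example = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 0, 0],
    ]
    print(mask_area(example), mask_bbox(example))
```

Tracking `mask_area` over successive frames yields the area expansion rate that the collision risk relies on.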

2.2.2. Training Process

2.2.3. Object Area Detection Results

3. Collision Risk and Avoidance Strategy

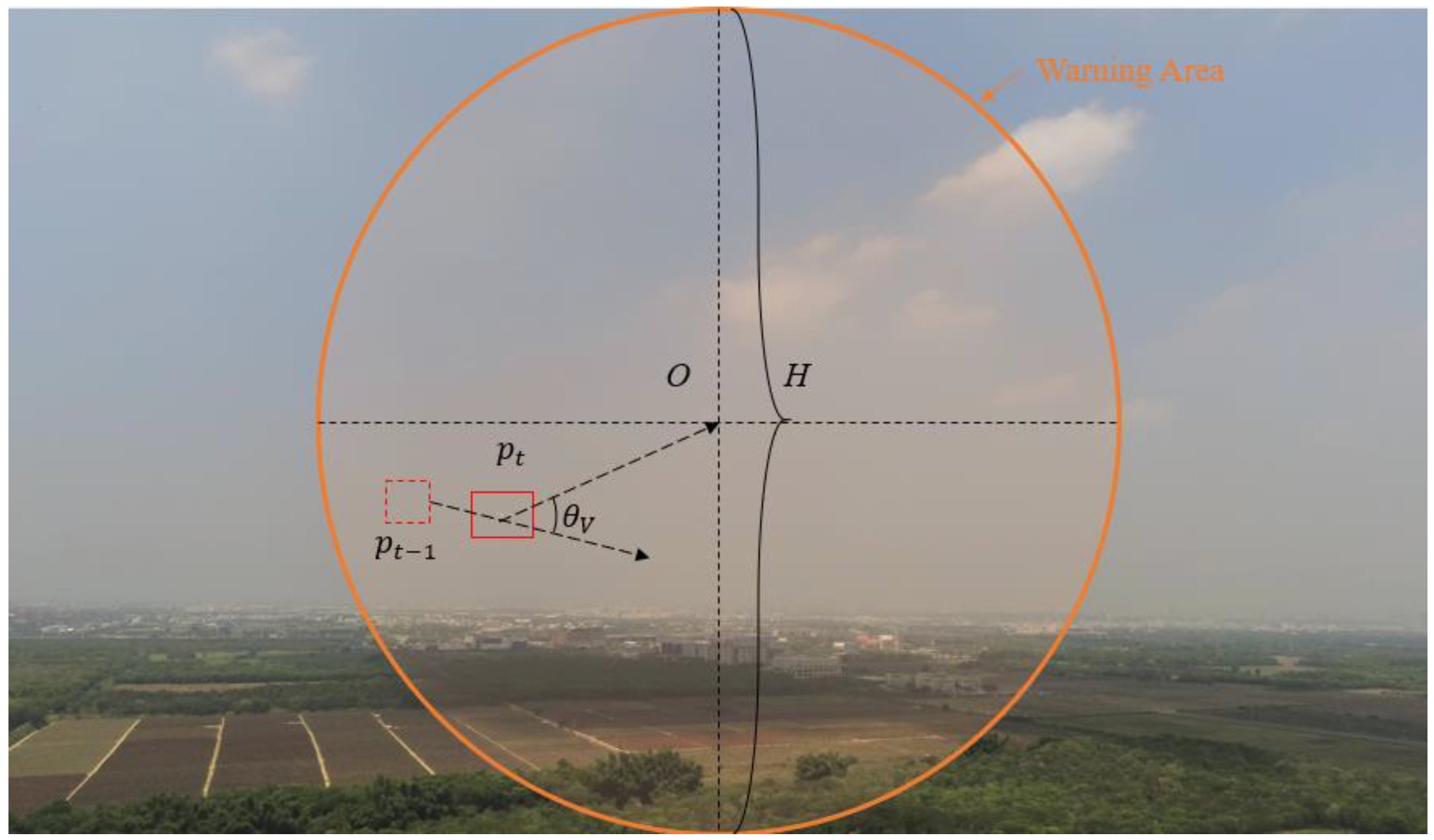

3.1. Proposed Collision Risk

3.2. Collision Avoidance Strategy Based on Collision Risk

- The host aircraft must have a nonzero initial velocity, as shown in Equation (7). If the host aircraft hovers at a fixed point, the avoidance maneuver has no effect on it.

- The angular velocity of the host aircraft has an upper bound (Equation (8)) to limit the avoidance maneuver to a reasonable range.

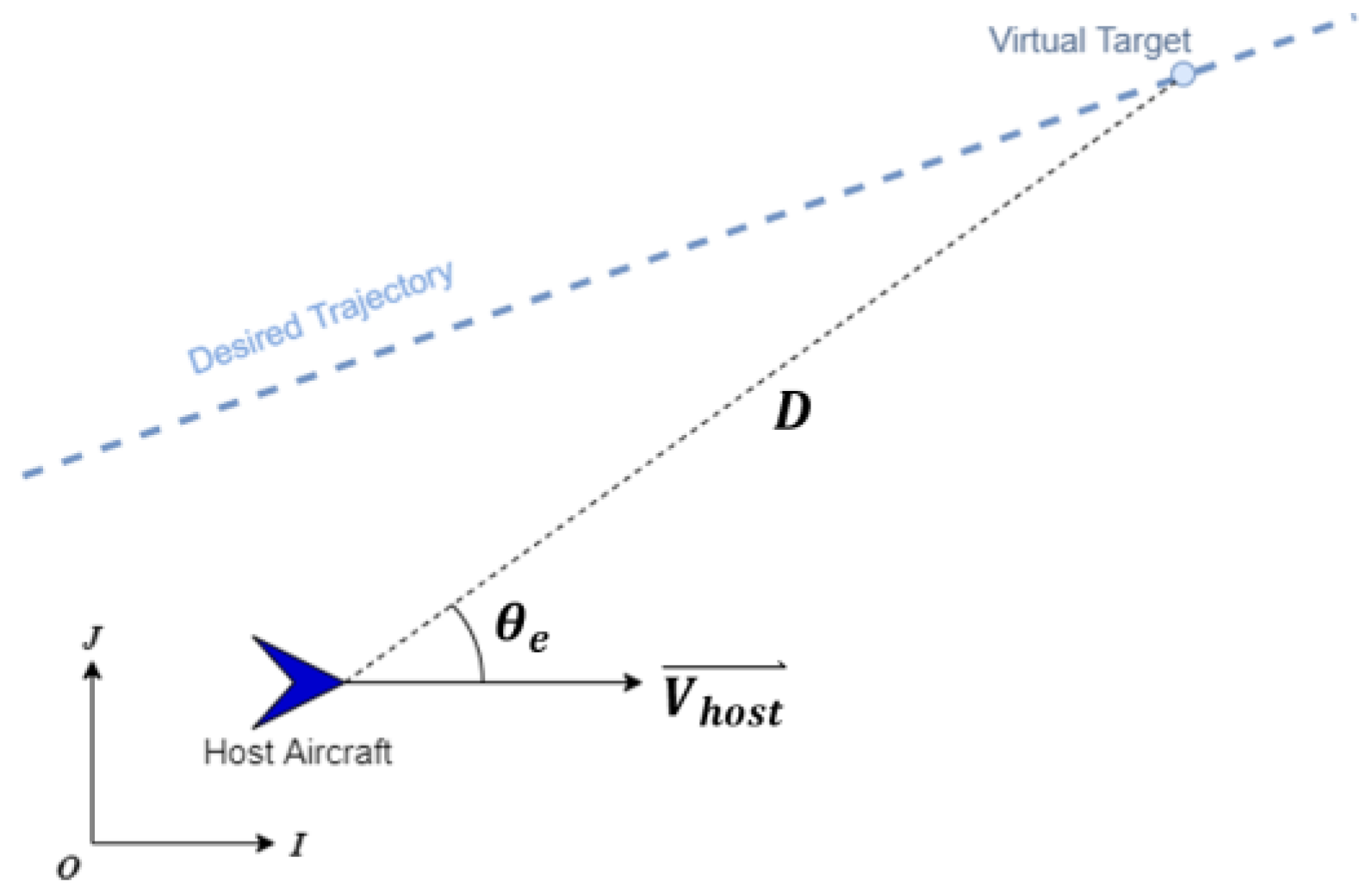

- The control law follows [33] and is composed of a waypoint-following term and a collision-avoidance term, as shown in Equation (9). Equation (10) defines the waypoint-following command, which uses a proportional gain. As shown in Equation (10) and Figure 13, this command is produced by a waypoint-following controller modified from [32], and D is the distance between the virtual target and the host aircraft.

- The proposed collision avoidance system aims to reduce the collision risk and maintain a minimum time to collision (TTC) between the intruder and the host aircraft.

- The intensity of the avoidance maneuver is another crucial factor. The intensity is equal to the angular velocity, and it is determined by the value of the collision risk through the function defined in Equation (11).

- Equation (11) is also modified from [32]. When the collision risk identifies an intruder UAV as a threat, the host UAV is forced to travel in a direction perpendicular to the line of sight from the host UAV to the intruder UAV. A weighting factor adjusts the magnitude of this avoidance term. If the collision risk of the intruder exceeds 0.5, the avoidance maneuver is held unchanged for 1 s.
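The structure described in this list (a waypoint-following term plus a risk-scaled avoidance term, a bounded angular velocity, and a 1 s hold once the risk exceeds 0.5) can be sketched as follows. This is an illustration only and does not reproduce Equations (7) to (11): the additive combination, the linear dependence of the avoidance term on the risk, and the names `k_wp`, `w_ca`, `omega_max`, and `turn_sign` are all assumptions. Only the 0.5 threshold and the 1 s hold come from the text.

```python
from dataclasses import dataclass, field


@dataclass
class HeadingRateController:
    k_wp: float                   # hypothetical proportional gain for waypoint following
    w_ca: float                   # hypothetical weighting on the avoidance term
    omega_max: float              # upper bound on the angular velocity (rad/s)
    risk_threshold: float = 0.5   # threshold stated in the text
    hold_time_s: float = 1.0      # hold duration stated in the text
    _held_cmd: float = field(default=0.0, init=False, repr=False)
    _hold_left_s: float = field(default=0.0, init=False, repr=False)

    def step(self, heading_error_rad: float, risk: float, turn_sign: float, dt_s: float) -> float:
        """Return the bounded angular-velocity command for one control step."""
        # Waypoint following: proportional to the heading error (assumed form).
        omega_wp = self.k_wp * heading_error_rad

        if self._hold_left_s > 0.0:
            # A high-risk maneuver is latched: keep the same avoidance command.
            omega_ca = self._held_cmd
            self._hold_left_s -= dt_s
        else:
            # Avoidance intensity grows with the risk (assumed linear);
            # turn_sign (+1 or -1) selects the side perpendicular to the line of sight.
            omega_ca = self.w_ca * risk * turn_sign
            if risk > self.risk_threshold:
                self._held_cmd = omega_ca
                self._hold_left_s = self.hold_time_s

        # Saturate the combined command at the angular-velocity bound.
        omega = omega_wp + omega_ca
        return max(-self.omega_max, min(self.omega_max, omega))
```

Calling `step` once per control cycle with the current heading error and collision risk returns a bounded command; while the hold is active, changes in the risk do not alter the avoidance term.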

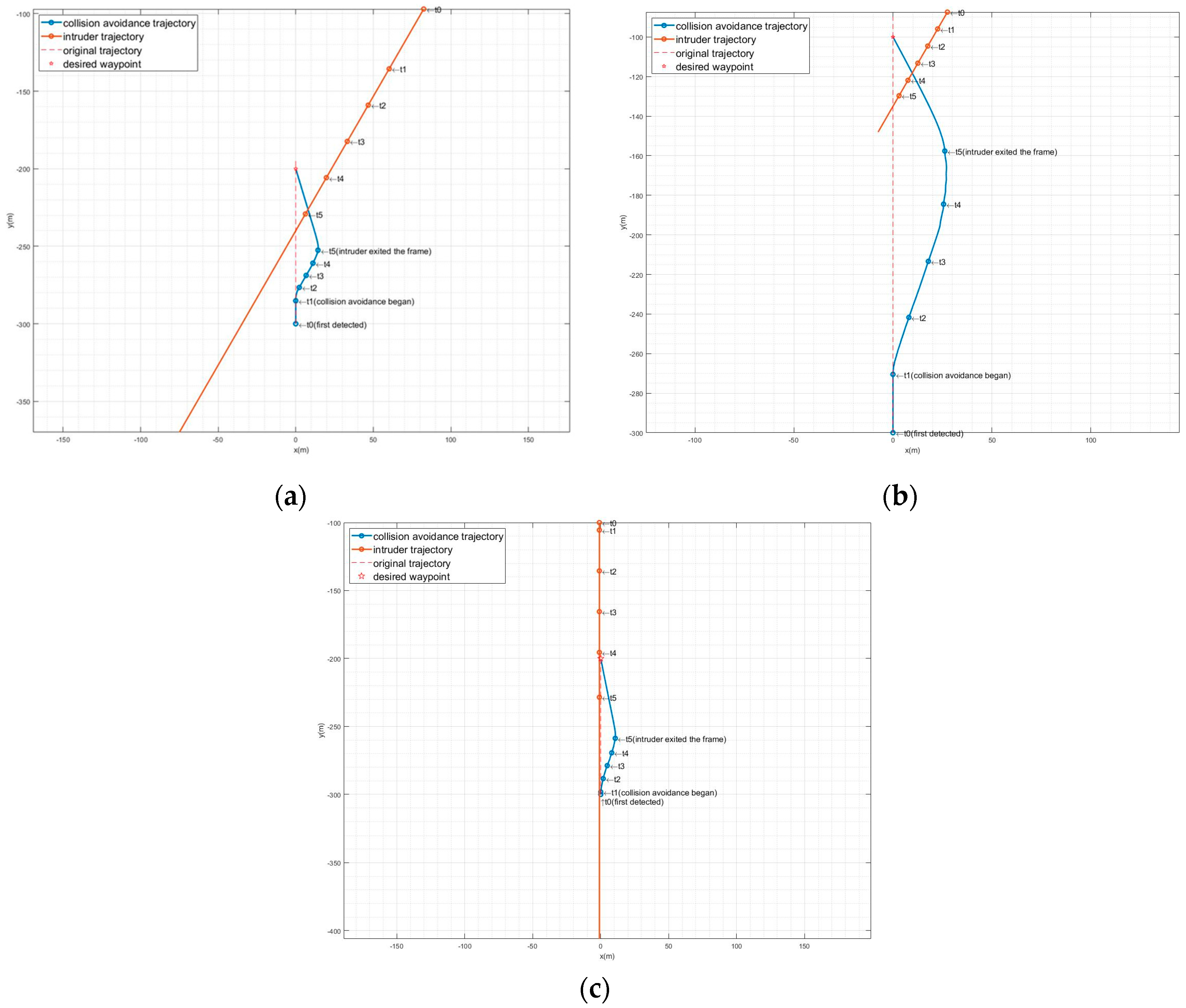

4. Simulation and Flight Experiment Results

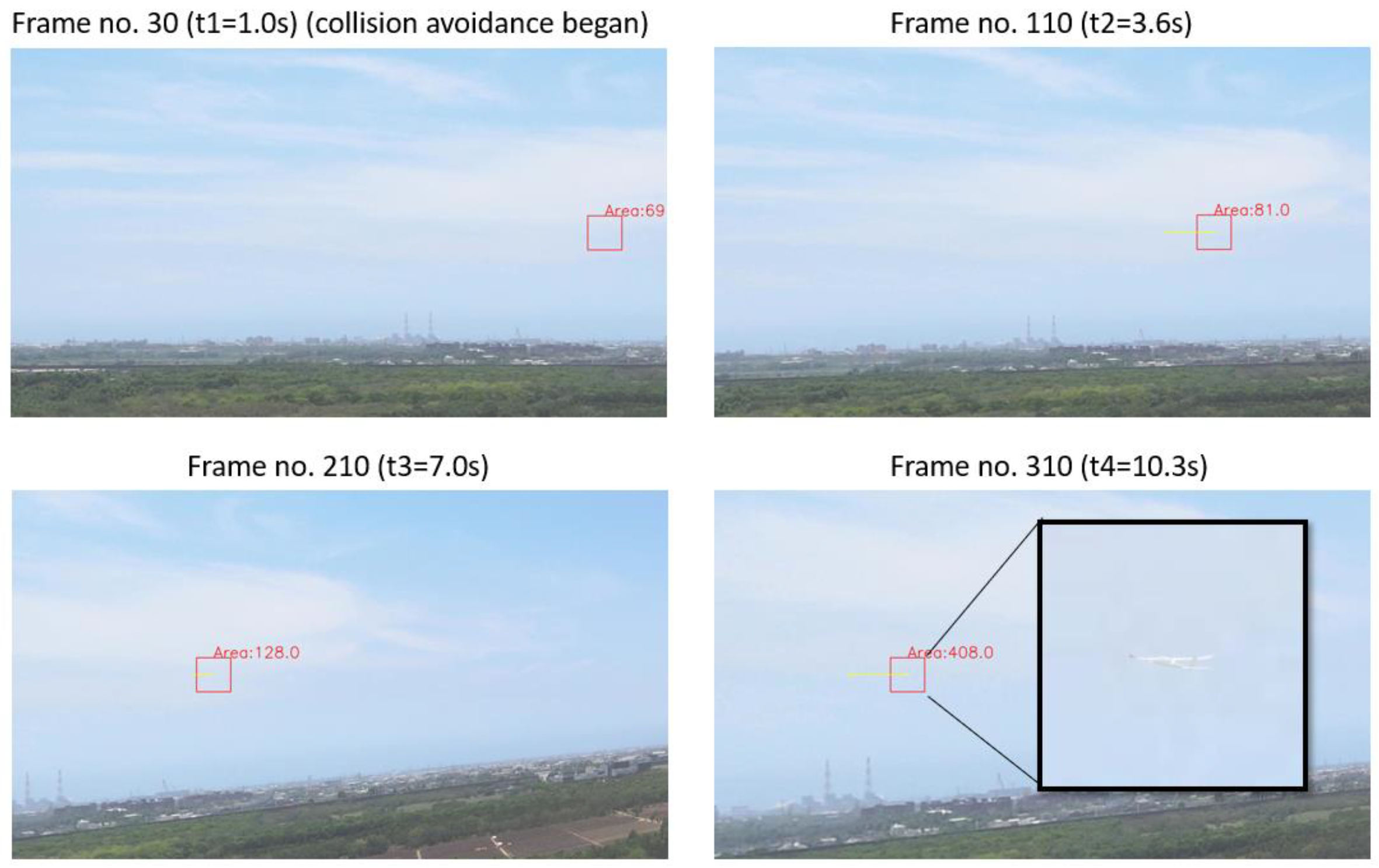

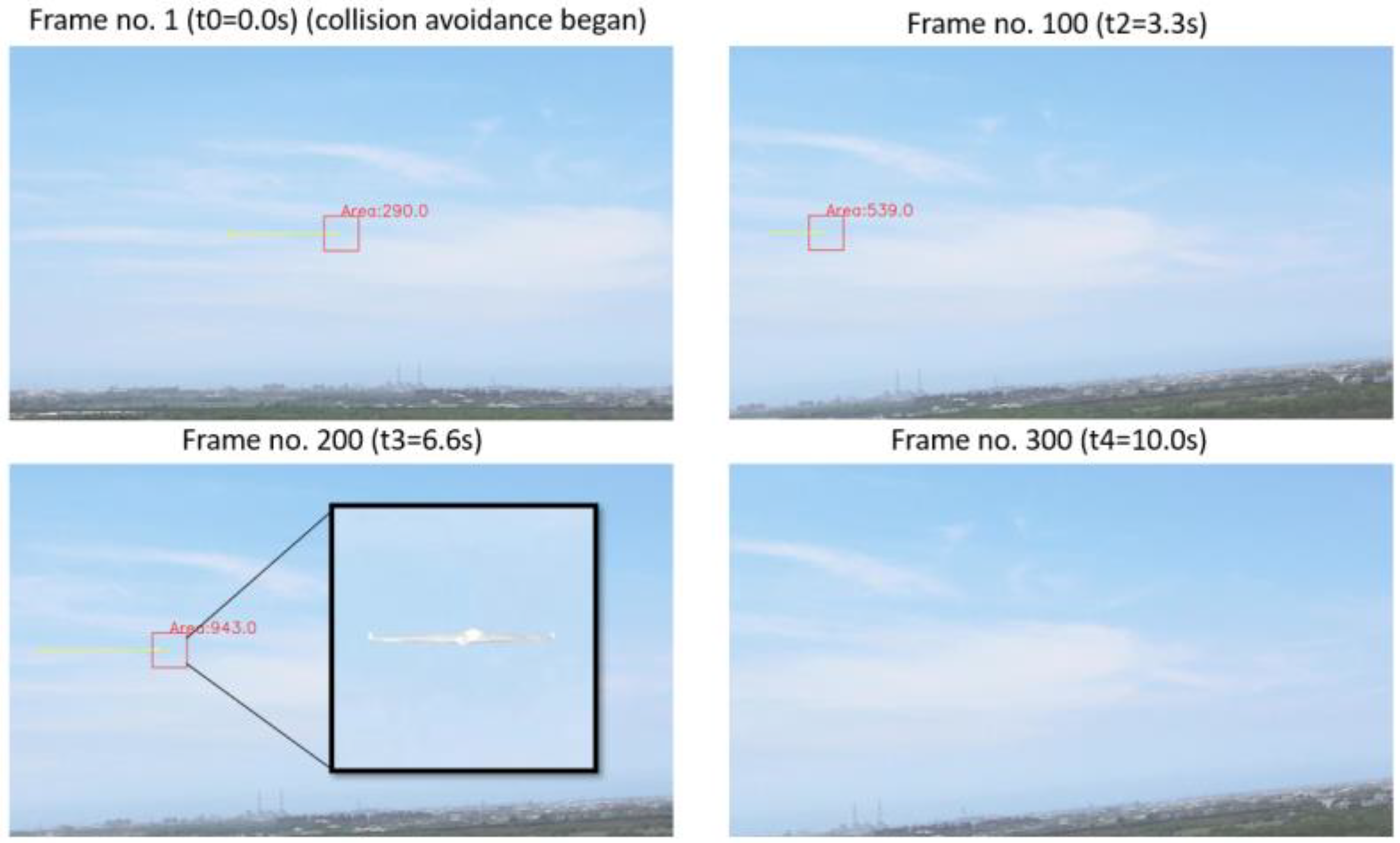

4.1. Real-Flight Experiments

4.2. Collision Avoidance Simulations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Belwafi, K.; Alkadi, R.; Alameri, S.A.; Al Hamadi, H.; Shoufan, A. Unmanned aerial vehicles’ remote identification: A tutorial and survey. IEEE Access 2022, 10, 87577–87601.

- Kopardekar, P. Unmanned Aerial System (UAS) Traffic Management (UTM): Enabling Low-Altitude Airspace and UAS Operations; National Aeronautics and Space Administration, Ames Research Center: Mountain View, CA, USA, 2014.

- Ferguson, A. Pathfinder Focus Area 2 Phase III Report. Available online: https://www.faapathfinderreport.com/ (accessed on 18 February 2024).

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Yu, X.; Zhang, Y. Sense and avoid technologies with applications to unmanned aircraft systems: Review and prospects. Prog. Aerosp. Sci. 2015, 74, 152–166.

- Lu, L.; Fasano, G.; Carrio, A.; Lei, M.; Bavle, H.; Campoy, P. A comprehensive survey on non-cooperative collision avoidance for micro aerial vehicles: Sensing and obstacle detection. J. Field Robot. 2023, 40, 1697–1720.

- Choe, R.; Cichella, V.; Xargay, E.; Hovakimyan, N.; Trujillo, A.C.; Kaminer, I. A Trajectory-Generation Framework for Time-Critical Cooperative Missions. In Proceedings of the AIAA Infotech@Aerospace (I@A) Conference, Boston, MA, USA, 19–22 August 2013; p. 4582.

- Cichella, V.; Kaminer, I.; Walton, C.; Hovakimyan, N. Optimal motion planning for differentially flat systems using Bernstein approximation. IEEE Control Syst. Lett. 2017, 2, 181–186.

- Subchan, S.; White, B.; Tsourdos, A.; Shanmugavel, M.; Zbikowski, R. Pythagorean hodograph (PH) path planning for tracking airborne contaminant using sensor swarm. In Proceedings of the 2008 IEEE Instrumentation and Measurement Technology Conference, Victoria, BC, Canada, 12–15 May 2008; pp. 501–506.

- Chandler, P.; Rasmussen, S.; Pachter, M. UAV cooperative path planning. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, Denver, CO, USA, 14–17 August 2000; p. 4370.

- Zhang, B.; Liu, W.; Mao, Z.; Liu, J.; Shen, L. Cooperative and geometric learning algorithm (CGLA) for path planning of UAVs with limited information. Automatica 2014, 50, 809–820.

- Chen, Y.-B.; Luo, G.-C.; Mei, Y.-S.; Yu, J.-Q.; Su, X.-L. UAV path planning using artificial potential field method updated by optimal control theory. Int. J. Syst. Sci. 2016, 47, 1407–1420.

- Carbone, C.; Ciniglio, U.; Corraro, F.; Luongo, S. A novel 3D geometric algorithm for aircraft autonomous collision avoidance. In Proceedings of the 45th IEEE Conference on Decision and Control, San Diego, CA, USA, 13–15 December 2006; pp. 1580–1585.

- Fasano, G.; Accardo, D.; Moccia, A.; Carbone, C.; Ciniglio, U.; Corraro, F.; Luongo, S. Multi-sensor-based fully autonomous non-cooperative collision avoidance system for unmanned air vehicles. J. Aerosp. Comput. Inf. Commun. 2008, 5, 338–360.

- Ren, W.; Beard, R.W. Trajectory tracking for unmanned air vehicles with velocity and heading rate constraints. IEEE Trans. Control Syst. Technol. 2004, 12, 706–716.

- Zhang, Y.; Wang, W.; Huang, P.; Jiang, Z. Monocular vision-based sense and avoid of UAV using nonlinear model predictive control. Robotica 2019, 37, 1582–1594.

- Viquerat, A.; Blackhall, L.; Reid, A.; Sukkarieh, S.; Brooker, G. Reactive collision avoidance for unmanned aerial vehicles using Doppler radar. In Field and Service Robotics; Springer: Berlin/Heidelberg, Germany, 2008; pp. 245–254.

- Lai, Y.-C.; Huang, Z.-Y. Detection of a moving UAV based on deep learning-based distance estimation. Remote Sens. 2020, 12, 3035.

- Saqib, M.; Khan, S.D.; Sharma, N.; Blumenstein, M. A study on detecting drones using deep convolutional neural networks. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–5.

- Schumann, A.; Sommer, L.; Klatte, J.; Schuchert, T.; Beyerer, J. Deep cross-domain flying object classification for robust UAV detection. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

- Opromolla, R.; Fasano, G.; Accardo, D. A vision-based approach to UAV detection and tracking in cooperative applications. Sensors 2018, 18, 3391.

- Jin, R.; Jiang, J.; Qi, Y.; Lin, D.; Song, T. Drone detection and pose estimation using relational graph networks. Sensors 2019, 19, 1479.

- Wu, M.; Xie, W.; Shi, X.; Shao, P.; Shi, Z. Real-time drone detection using deep learning approach. In Proceedings of the International Conference on Machine Learning and Intelligent Communications, Hangzhou, China, 6–8 July 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 22–32.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2023, 82, 9243–9275.

- Yang, Y.; Gao, X.; Wang, Y.; Song, S. VAMYOLOX: An accurate and efficient object detection algorithm based on visual attention mechanism for UAV optical sensors. IEEE Sens. J. 2022, 23, 11139–11155.

- Xing, J.; Liu, Y.; Zhang, G.-Z. Improved YOLOv5-based UAV pavement crack detection. IEEE Sens. J. 2023, 23, 15901–15909.

- Vera-Yanez, D.; Pereira, A.; Rodrigues, N.; Molina, J.P.; García, A.S.; Fernández-Caballero, A. Vision-based flying obstacle detection for avoiding midair collisions: A systematic review. J. Imaging 2023, 9, 194.

- Li, J.; Ye, D.H.; Chung, T.; Kolsch, M.; Wachs, J.; Bouman, C. Multi-target detection and tracking from a single camera in unmanned aerial vehicles (UAVs). In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 4992–4997.

- Woo, J.; Kim, N. Vision-based target motion analysis and collision avoidance of unmanned surface vehicles. Proc. Inst. Mech. Eng. Part M J. Eng. Marit. Environ. 2016, 230, 566–578.

- Lyu, Y.; Pan, Q.; Zhao, C.; Zhang, Y.; Hu, J. Vision-based UAV collision avoidance with 2D dynamic safety envelope. IEEE Aerosp. Electron. Syst. Mag. 2016, 31, 16–26.

- Marinho, T. Bio-Inspired Vision-Based Evasion Control: Collision Avoidance without Distance Measurement; University of Illinois at Urbana-Champaign: Champaign, IL, USA, 2019.

- Cichella, V.; Marinho, T.; Stipanović, D.; Hovakimyan, N.; Kaminer, I.; Trujillo, A. Collision avoidance based on line-of-sight angle. J. Intell. Robot. Syst. 2018, 89, 139–153.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Su, H.; Wei, S.; Yan, M.; Wang, C.; Shi, J.; Zhang, X. Object detection and instance segmentation in remote sensing imagery based on precise Mask R-CNN. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1454–1457.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Alecu, F. Blender institute–the institute for open 3D projects. Open Source Sci. J. 2010, 2, 36–45.

| Image Information | Value |
|---|---|
| Image Resolution | 1920 × 1080 |
| Focal Length | 35 mm |

| Attitude | Range (deg) |
|---|---|
| Roll | −15 to 15 |
| Pitch | −15 to 15 |
| Yaw | −75 to 75 |

| Specification | Value |
|---|---|
| Wingspan | 2.0 m |
| Overall Length | 1.1 m |
| Height | 0.25 m |
| Average Speed | 20 m/s |

| Case | Crossing | Head-On |
|---|---|---|
| **Video Information** | | |
| Resolution | 3840 × 2160 | 3420 × 1924 |
| Focal Length | 35 mm | 35 mm |
| FOV | 69 degrees | 69 degrees |
| Frames Per Second | 30 | 30 |
| Weather | Overcast | Cloudy |
| **Intruder’s Information** | | |
| Average Speed | 20 m/s | 20 m/s |
| Altitude | 100 m | 50 m |
| **Host’s Information** | | |
| Altitude | 90 m | 45 m |

| Video Information | Value |
|---|---|
| Resolution | 3840 × 2160 |
| Focal Length | 35 mm |
| FOV | 54 degrees |
| Video Length | 300 frames |
| Frames Per Second | 30 |
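As a rough plausibility check on these camera parameters, the pinhole model relates focal length to field of view and gives the apparent size of a distant aircraft in pixels. The sketch below assumes the 35 mm focal length is a full-frame equivalent (36 mm sensor width), which the table does not state. The 2.0 m wingspan and the range of roughly 292 m are borrowed from other tables in this article purely for illustration, and the function names are hypothetical.

```python
import math


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float = 36.0) -> float:
    """Horizontal field of view of a pinhole camera (sensor width is an assumption)."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def apparent_width_px(object_width_m: float, distance_m: float,
                      image_width_px: int, fov_deg: float) -> float:
    """Approximate width in pixels of an object seen broadside near the image center."""
    focal_px = (image_width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)
    return focal_px * object_width_m / distance_m


if __name__ == "__main__":
    print(horizontal_fov_deg(35.0))
    print(apparent_width_px(2.0, 292.3, 3840, 54.0))
```

Under these assumptions a 35 mm lens gives a horizontal field of view close to the 54 degrees listed, and a 2.0 m wingspan at about 292 m spans only a few tens of pixels in a 3840-pixel-wide frame, which illustrates why long-range detection is treated as a separate problem from area estimation.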

| Case | Sub-Case | Type of the Intruder (Wingspan) | Intruder Speed (Vint) | Host Speed (Vhost) |
|---|---|---|---|---|
| Crossing 1 | I | Fixed-wing (2.0 m) | 15 m/s | 5 m/s |
| Crossing 1 | II | Fixed-wing (1.4 m) | 15 m/s | 5 m/s |
| Crossing 2 | I | Fixed-wing (2.0 m) | 5 m/s | 15 m/s |
| Crossing 2 | II | Fixed-wing (1.4 m) | 5 m/s | 15 m/s |
| Crossing 3 | - | Flywing (3.5 m) | 15 m/s | 15 m/s |
| Head-on | I | Flywing (3.5 m) | 15 m/s | 5 m/s |
| Head-on | II | Flywing (3.5 m) | 5 m/s | 15 m/s |

| Case | Sub-Case | Detected Range (m) | Collision Avoidance Began (m) | Min TTC (s) | MSD (m) |
|---|---|---|---|---|---|
| Crossing 1 | I | 292.3 | 233.8 | 10.16 | 21.52 |
| Crossing 1 | II | 292.3 | 285.9 | 11.85 | 25.57 |
| Crossing 2 | I | 292.4 | 292.4 | 14.54 | 42.95 |
| Crossing 2 | II | 292.4 | 279.4 | 13.88 | 38.54 |
| Crossing 3 | - | 434.6 | 434.6 | 13.93 | 56.61 |
| Head-on | I | 300 | 300 | 5.01 | 15.90 |
| Head-on | II | 300 | 300 | 6.97 | 31.14 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lai, Y.-C.; Lin, T.-Y. Vision-Based Mid-Air Object Detection and Avoidance Approach for Small Unmanned Aerial Vehicles with Deep Learning and Risk Assessment. Remote Sens. 2024, 16, 756. https://doi.org/10.3390/rs16050756