1. Introduction

With the launch of numerous Earth observation satellites, multi-spectral (MS) images play an important role in agriculture, disaster assessment, environmental monitoring, land cover classification, and other applications. Ideally, MS images would be acquired in multiple wavelength bands with high spatial resolution. However, owing to limitations on the incoming radiation energy and on the volume of data that can be collected [1], this is not feasible. A common solution is to acquire two kinds of images: a high-spatial-resolution panchromatic (HR PAN) image and an MS image with fewer spatial details. These images contain both redundant and complementary information; hence, pan-sharpening, which refers to the fusion of a PAN image and an MS image to produce an HR MS image of the same size as the PAN image, has received significant attention [1,2].

Numerous efforts have been dedicated to the development of pan-sharpening algorithms over the past few decades. Conventional techniques fall into three categories: component substitution (CS) methods, multi-resolution analysis (MRA) approaches, and variational optimization-based (VO) techniques. CS methods inject spatial information by substituting a component of the transformed MS image with the PAN image. The most well-known algorithms are intensity–hue–saturation [3], principal component analysis [4], Brovey transforms [5], and Gram–Schmidt spectral sharpening [6]. These methods have low computational costs and can yield promising outcomes, but they sometimes suffer from spectral distortions. MRA approaches assume that spatial details can be obtained through a multi-resolution decomposition of the PAN image; injecting these high frequencies from the PAN image into the MS bands improves the spatial resolution. Depending on the decomposition algorithm, MRA methods include the decimated wavelet transform [7], smoothing filter-based intensity modulation [8], the à trous wavelet transform (ATWT) [9], and the contourlet transform [10]. Compared to CS and MRA approaches, VO-based approaches are relatively new. Methods in this category typically model the observed MS image as a degraded version of an ideal HR MS image. Based on this assumption, the HR MS image can be recovered by solving an optimization problem. Representative methods include model-based fusion using PCA and wavelets [11], the sparse representation of injected details [12], and model-based, reduced-rank pan-sharpening [13]. VO-based techniques can yield competitive results, but a large number of hyperparameters and insufficient feature representation can result in spatial and spectral distortions.
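To make the CS idea concrete, the following minimal NumPy sketch implements two textbook CS-style schemes: the Brovey transform and the generalized (fast) IHS substitution. It assumes that the MS image has already been up-sampled to the PAN grid and that the intensity component is the plain band average; practical implementations usually use sensor-specific band weights and histogram-match the PAN image to the intensity, both of which are omitted here. The function names are illustrative only.

```python
import numpy as np


def intensity(ms_up: np.ndarray) -> np.ndarray:
    """Intensity component I: plain band average (equal weights are an assumption)."""
    return ms_up.mean(axis=0)


def brovey(ms_up: np.ndarray, pan: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Brovey transform: rescale every band by PAN / I.

    ms_up: (C, H, W) MS image already up-sampled to the PAN grid.
    pan:   (H, W) PAN image, assumed to be histogram-matched to I.
    """
    ratio = pan / (intensity(ms_up) + eps)
    return ms_up * ratio[None, :, :]


def fast_ihs(ms_up: np.ndarray, pan: np.ndarray) -> np.ndarray:
    """Generalized (fast) IHS: replace I by PAN, i.e. add PAN - I to every band."""
    detail = pan - intensity(ms_up)
    return ms_up + detail[None, :, :]


rng = np.random.default_rng(0)
ms_up = rng.uniform(0.1, 1.0, size=(4, 8, 8))  # toy 4-band MS on an 8 x 8 PAN grid
pan = rng.uniform(0.1, 1.0, size=(8, 8))
fused = fast_ihs(ms_up, pan)
print(np.allclose(intensity(fused), pan))  # True: the substituted component is now PAN
```

Both schemes change every band by the same spatial pattern, which is why they are cheap but can distort spectra when the PAN image and the intensity component differ radiometrically.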

Over the past few years, deep learning has become increasingly popular, and researchers have attempted to exploit the high nonlinearity of convolutional neural networks (CNNs) for the pan-sharpening problem. In PNN [14], images are pan-sharpened by a simple CNN whose structure is similar to that of the super-resolution CNN (SRCNN) [15]. The method in Ref. [16] is similar to PNN; the difference is that the network super-resolves MS images in IHS space, after which the MS and PAN images are further processed by the GS transform to accomplish pan-sharpening. TFNet [17] first fuses the MS and PAN images at the feature level and then reconstructs the pan-sharpened images from the fused features. Yang et al. [18] propose a deep network architecture called PanNet, which learns the residuals between the up-sampled MS image and the HR MS image and is trained in the high-pass-filtered domain. PSGAN [19] introduces a generative adversarial network (GAN) to produce high-quality pan-sharpened images. MSDCNN [20] adds multi-scale modules to basic residual connections, and SRPPNN [21] uses high-pass residual modules to inject richer spatial information. LPPN [22] uses multi-scale structured sub-networks to fuse spatial details. These networks are trained in a fully supervised framework in the reduced-resolution domain, and the converged parameters are then used to fuse the target full-resolution PAN/MS images. This implicitly assumes that a mapping learned at reduced resolution transfers unchanged to full resolution, an assumption that does not hold exactly in practice. For this reason, the above-mentioned methods perform satisfactorily in the reduced-resolution domain but cannot ensure optimal performance in the target domain.

Other groups have made valuable efforts to overcome the deterioration in generalization ability caused by scale shift. Ref. [23] proposes a dual-output, cross-scale strategy in which each sub-network is equipped with an output terminal, generating reduced- and target-scale results, respectively. However, it involves separate training processes and cascaded sub-networks, which make it computationally inefficient. PancolorGAN [24] applies data augmentation during training by randomly varying the down-sampling ratio. Some GAN-based models, such as Pan-GAN [25], PercepPAN [26], and UCGAN [27], adopt unsupervised architectures to avoid the scale-shift assumption. Unsupervised architectures avoid the scale-shift issue by construction; however, GAN training is prone to instability [28], which can lead to suboptimal outcomes.

The Siamese network [29] was originally introduced to address signature verification as an image-matching problem. This architecture consists of two identical feed-forward sub-networks joined by an energy function at the final layer. The parameters are shared between the two sub-networks, i.e., both branches use exactly the same weights. This weight tying ensures that two similar images cannot be mapped to very different positions in the feature space. Each input of a pair is embedded as a representation in a high-dimensional space, and the similarity of the two samples is assessed by computing the cosine distance between the two representations. The network is optimized by minimizing this distance when the input pairs are from the same category and maximizing it when they belong to different categories.
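The weight-tying idea can be sketched in a few lines of NumPy: one set of weights defines a single embedding function that both inputs of a pair pass through, and the pair is compared by cosine distance. The two-layer tanh branch, the layer sizes, and the margin-based (contrastive-style) loss are illustrative assumptions, not necessarily the architecture or loss used in [29].

```python
import numpy as np

rng = np.random.default_rng(42)
# A single weight set, tied ("shared") between the two branches.
W1 = rng.standard_normal((16, 32)) / np.sqrt(32)
W2 = rng.standard_normal((8, 16)) / np.sqrt(16)


def embed(x: np.ndarray) -> np.ndarray:
    """Feed-forward branch: both inputs of a pair pass through this same function."""
    return W2 @ np.tanh(W1 @ x)


def cosine_distance(a: np.ndarray, b: np.ndarray) -> float:
    """1 - cos(angle) between two embeddings; 0 for identical directions."""
    return 1.0 - float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def pair_loss(x1: np.ndarray, x2: np.ndarray, same: bool, margin: float = 0.5) -> float:
    """Contrastive-style loss: small distance for same-class pairs, at least `margin` otherwise."""
    d = cosine_distance(embed(x1), embed(x2))
    return d if same else max(0.0, margin - d)


x = rng.standard_normal(32)
print(abs(pair_loss(x, x, same=True)) < 1e-9)  # True: identical inputs share one embedding
```

Because `embed` is literally the same function for both inputs, identical images are guaranteed to land at the same point in feature space, which is the property the weight tying is meant to provide.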

Several studies have applied Siamese networks to pan-sharpening. In [30], a Siamese network is applied in a cascaded up-sampling process in which multi-level local and global fusion blocks share network parameters, and the intermediate-scale MS outputs are included in the loss function. This method employs a Siamese fusion network to preserve cross-scale consistency, but a shift with respect to the full-resolution domain remains. Ref. [31] proposes a knowledge distillation framework for pan-sharpening that aims to imitate the ground-truth reconstruction process in both the feature space and the MS domain. The student network has the same architecture as the teacher network, and loss terms are applied at two intermediate feature layers. CMNet [32] combines classification and pan-sharpening networks in a multi-task learning manner, and the pan-sharpening network performs better under the guidance of the classification network. It learns ideal high-resolution MS images from an application-specific perspective but requires additional labels that are not easily accessible.

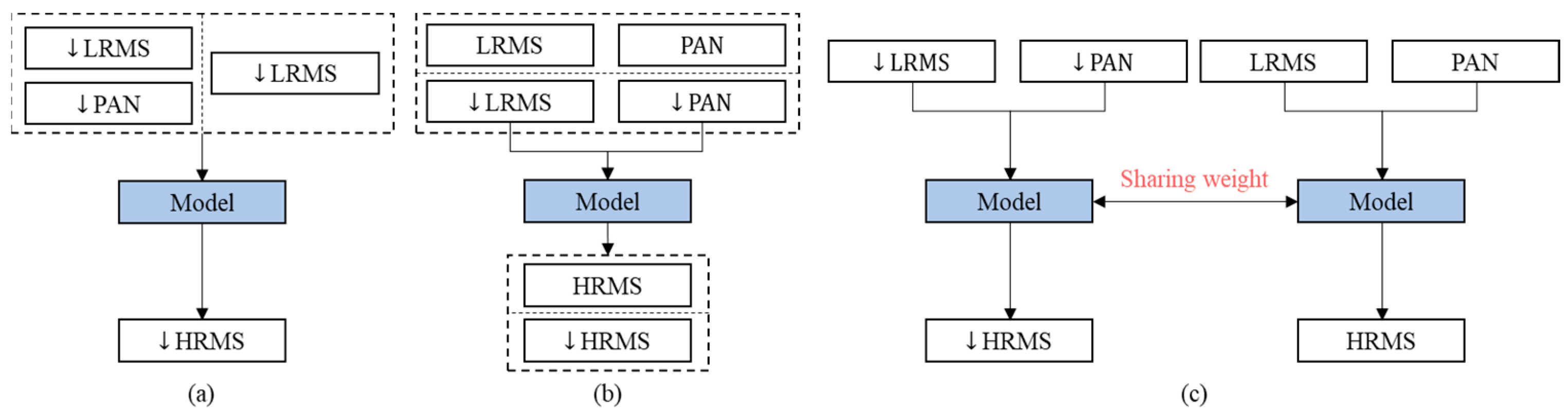

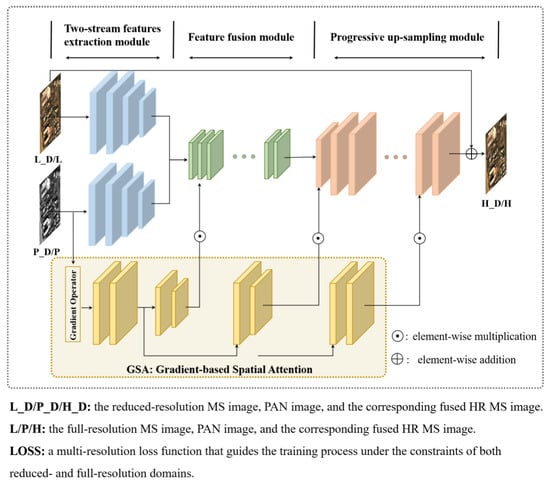

The scale-shift issue created by the lack of ground-truth in the full-resolution domain is still a challenge for deep-learning based methods. Thus, we propose a Siamese architecture named GSA-SiamNet equipped with a multi-resolution loss function. In

Figure 1, we also perform a comparison of common network architectures and our Siamese design. We could divide the previous network architectures into two main categories. One takes reduced-resolution MS images as inputs, or up-samples them first, and then stacks them with the PAN images. The networks learn a mapping function using original MS images. The other category is the two-stream network, which fuses the PAN and MS images in the feature domain. Some researchers still train the pan-sharpening mapping function using reduced-resolution images. However, unsupervised approaches, such as GANs, are trained in the full-resolution domain, and their training processes are susceptible to vulnerabilities. Compared to the previous methods, we consider pan-sharpening to be a multi-task problem that aims to retain information in both the reduced- and full-resolution domains. We adopt the Siamese network and take both a reduced-resolution image pair and a full-resolution image pair as inputs. It is worth noting that our approach differs slightly from conventional Siamese networks. Instead of using a similarity constraint for the last layer, a multi-resolution loss function is designed for both the reduced- and full-resolution domain to preserve spectral and spatial consistency. The details are illustrated in

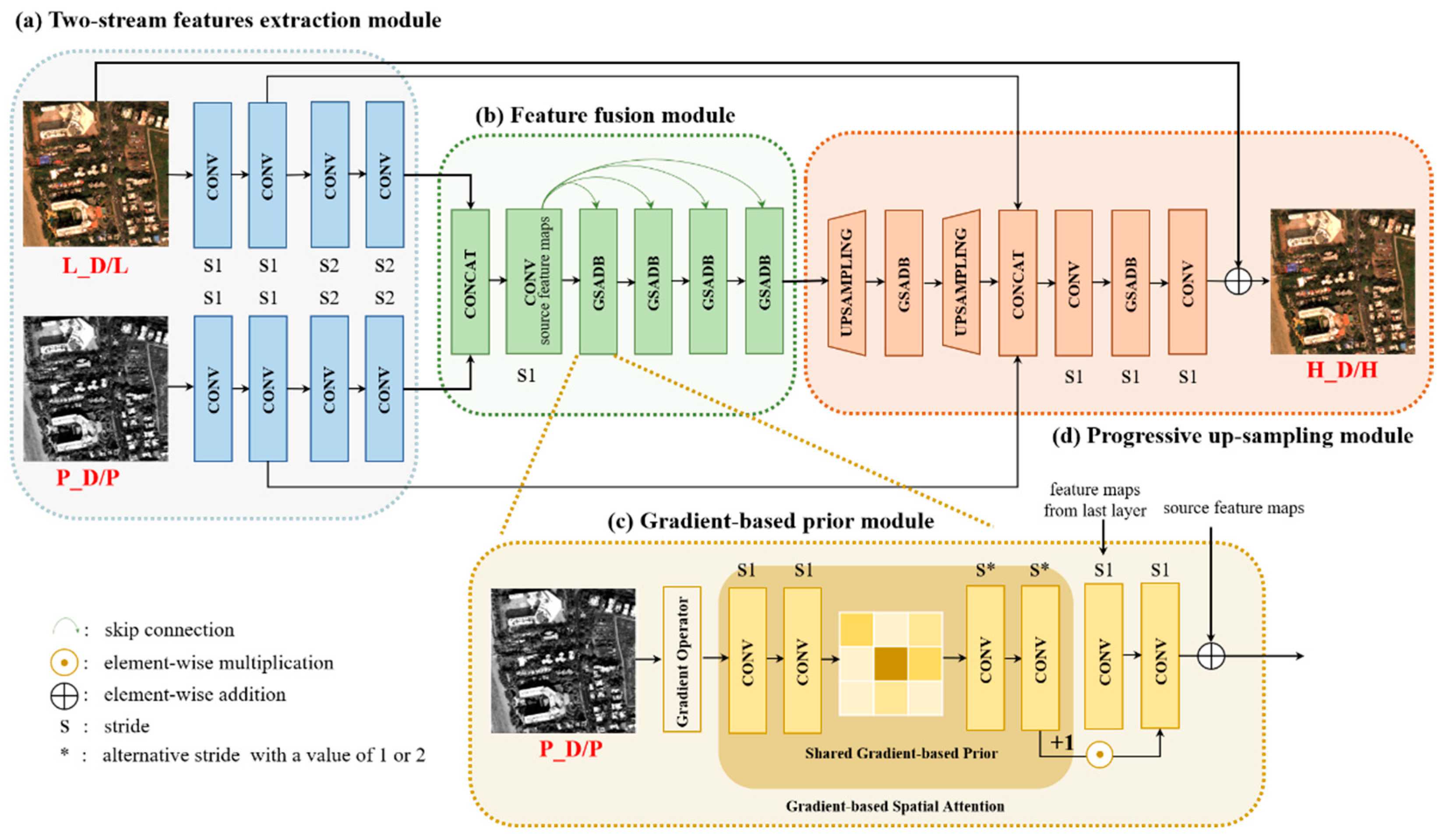

Figure 2.

The major contributions of this paper are summarized as follows. First, we propose a Gradient-based Spatial Attention (GSA) module to optimize the injection of spatial information into multi-level intermediate layers. High-pass information is extracted by gradient operators, and the learned gradient-based prior is then shared as the input of the GSA modules. This gradient-based prior serves as a spatial attention mask that guides texture recovery in different regions (with different levels of spatial detail). Second, a multi-resolution loss function is designed to constrain network training in both the reduced- and full-resolution domains. Full-resolution pan-sharpening, as an auxiliary task, markedly improves pan-sharpening performance, while the reduced-resolution pan-sharpening task supports spectral consistency. This multi-task strategy helps GSA-SiamNet adapt to scale shift better than other approaches.

This paper is organized as follows. In Section 1, we provide a detailed comparison with previous learning-based network architectures and introduce the background of the Siamese architecture. Section 2 presents the proposed GSA-SiamNet architecture in detail. Section 3 describes the experiments, followed by a series of comparisons with other methods. The conclusion and discussion are provided in Section 4.

2. Materials and Methods

In this section, we give a detailed description of our method. For convenience, we denote the original MS and PAN images as $M \in \mathbb{R}^{w \times h \times C}$ and $P \in \mathbb{R}^{W \times H}$, where $w$, $h$, and $C$ denote the width, height, and number of channels of the MS image, respectively, while $W$ and $H$ are the width and height of the PAN image. The ratio of spatial resolution between the MS and PAN images is defined as $r = W/w = H/h$. The reduced-resolution MS and PAN images are denoted as $M_L \in \mathbb{R}^{(w/r) \times (h/r) \times C}$ and $P_L \in \mathbb{R}^{w \times h}$, whose resolutions also satisfy the above relationship. The pan-sharpened HR MS image $\hat{M} \in \mathbb{R}^{W \times H \times C}$ has both high spectral and high spatial resolution.

The pan-sharpening task is to reconstruct high-quality MS images that combine the advantages of PAN and MS images. A general formulation of deep learning-based approaches can be defined as:

$$\hat{M}_k = \tilde{M}_k + g_k(\tilde{M}, P), \quad k = 1, \dots, C,$$

where the subscript $k$ denotes the $k$th spectral band, $\tilde{M}_k$ indicates the up-scaled version of the $k$th band of $M$, and $g_k(\cdot)$ denotes the detail extraction function learned by the network. Most state-of-the-art methods learn this function by minimizing an objective of the form:

$$\mathcal{L} = f_1(\hat{M}, M) + f_2(\hat{M}, P) + f_3(\hat{M}),$$

where $f_1$ enforces spectral consistency, $f_2$ enforces structural consistency, and $f_3$ represents other possible constraints on the sharpened MS image.
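The additive formulation above (an up-sampled band plus a detail term $g_k$) can be sketched directly. In the sketch below, the learned $g_k$ is replaced by a hand-crafted MRA-style stand-in (the PAN image minus its box-filtered version, injected identically into every band), and $\tilde{M}$ is produced by nearest-neighbor up-sampling; both choices, the filter size, and the function names are assumptions for illustration, not the network's learned function.

```python
import numpy as np


def upsample_nearest(ms: np.ndarray, r: int) -> np.ndarray:
    """M_tilde: nearest-neighbour up-sampling of a (C, h, w) MS image by integer ratio r."""
    return ms.repeat(r, axis=1).repeat(r, axis=2)


def box_lowpass(img: np.ndarray, k: int = 5) -> np.ndarray:
    """k x k moving-average low-pass filter with edge padding (k odd)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def mra_detail(ms_up: np.ndarray, pan: np.ndarray) -> np.ndarray:
    """Hand-crafted stand-in for g_k: the PAN high-pass component, the same for every band."""
    high_pass = pan - box_lowpass(pan)
    return np.broadcast_to(high_pass, ms_up.shape)


def inject(ms: np.ndarray, pan: np.ndarray, r: int, g=mra_detail) -> np.ndarray:
    """M_hat_k = M_tilde_k + g_k(M_tilde, P), evaluated for all bands at once."""
    ms_up = upsample_nearest(ms, r)
    return ms_up + g(ms_up, pan)


rng = np.random.default_rng(0)
ms = rng.uniform(size=(4, 16, 16))   # toy 4-band MS image
pan = rng.uniform(size=(64, 64))     # toy PAN image, r = 4
print(inject(ms, pan, r=4).shape)    # (4, 64, 64)
```

Swapping `g` for a trained network gives the learning-based variant; the residual structure means the model only has to produce the missing high-frequency content.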

2.1. Network Architecture

Pan-sharpening aims to preserve both the geometric details of the PAN image and the spectral information of the MS image. Unfortunately, there is no well-defined boundary between spatial and spectral information. Undesirable pan-sharpened results are caused by redundant spectral information in flat regions or by insufficient extraction of spatial characteristics at edges. Furthermore, a single-resolution loss function, which treats the original MS images as the ground truth, overlooks the importance of PAN images. Motivated by these observations and by the fact that the difficulty of enhancing an image varies spatially, we propose GSA-SiamNet for pan-sharpening. In this section, we introduce its four key components, shown in Figure 2. Because the Siamese network is symmetric, the following paragraphs describe only the branch that takes the full-resolution image pair as input.

2.1.1. Two-Stream Feature Extraction Module

In this paper, we use a two-stream network as the generator of pan-sharpened images [19]. Instead of directly stacking PAN and MS images [25], we perform fusion in the feature domain, which has been shown to reduce spectral distortion [33]. One sub-network takes the multi-band up-sampled MS image as input, while the other takes the single-band PAN image. Each sub-network uses four convolutional layers to extract features. The first two layers use a stride of 1, and the last two use a stride of 2 to compress the features. All convolutional layers are activated by a Leaky Rectified Linear Unit (Leaky ReLU) with a slope of 0.2.
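The effect of the stride pattern (1, 1, 2, 2) on feature-map size follows from the standard convolution output-size formula $\lfloor (n + 2p - k)/s \rfloor + 1$. The sketch below assumes 3 × 3 kernels with padding 1 and a 64-pixel input patch; these values are hypothetical, since the kernel size and patch size are not restated here. It also checks that a kernel of size 4 with stride 2 (and assumed padding 1) halves the size, which is the alignment used for the attention masks.

```python
def conv_out_size(n: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def leaky_relu(x: float, slope: float = 0.2) -> float:
    """Leaky ReLU with the slope of 0.2 used in the feature extractor."""
    return x if x >= 0 else slope * x


def extractor_sizes(n: int, kernel: int = 3, padding: int = 1) -> list[int]:
    """Feature-map side length after each of the four layers (strides 1, 1, 2, 2)."""
    sizes = []
    for stride in (1, 1, 2, 2):
        n = conv_out_size(n, kernel, stride, padding)
        sizes.append(n)
    return sizes


print(extractor_sizes(64))          # [64, 64, 32, 16]: features are compressed by 4
print(conv_out_size(64, 4, 2, 1))   # 32: kernel 4, stride 2 halves the size exactly
```

A total compression factor of 4 matches the two ×2 sub-pixel up-sampling steps of the progressive up-sampling module, so the output returns to the PAN size.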

2.1.2. Feature Fusion Module

This module enables the proper fusion of PAN and MS features in the feature space. Instead of a cascade connection, we introduce a skip connection scheme that shares the source features [34] among Gradient-based Spatial Attention Dense Blocks (GSADBs). The residual function of layer $b$ is:

$$H_b = \mathcal{G}(H_{b-1}) + H_0, \quad b = 1, \dots, B,$$

where $H_0$ refers to the feature maps from the first convolution layer of this module, and $B$ is set to 4 in this study. Each layer, called a Gain Block (GB), consists of two convolution layers with a Leaky ReLU activation function, and its output is modulated by a GSA mask. By multiplying the features element-wise by this mask, we adaptively amplify positions with high spatial correlation and enhance the network's ability to capture valid features. The details can be written as:

$$\mathcal{G}(H_{b-1}) = A \odot W_2\big(\sigma(W_1(H_{b-1}))\big),$$

where $W_1$ and $W_2$ denote the convolutional layers, $A$ denotes the spatial attention features (see the following section for a detailed description), $\odot$ denotes element-wise multiplication, $\sigma$ denotes the Leaky ReLU activation, and $H_b$ is the feature map produced by the $b$th GB of a GSADB. The output of the fusion module is a tensor that encodes both spatial and spectral features.
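A minimal NumPy sketch of the gated residual scheme described above: each gain block applies two convolutions with a Leaky ReLU in between, multiplies the result element-wise by a spatial attention mask, and the shared source features $H_0$ are added back after every layer. To stay short, the k × k convolutions are replaced by 1 × 1 (channel-mixing) convolutions, and the placement of the activation between the two convolutions is an assumption; all names and sizes are hypothetical.

```python
import numpy as np


def leaky_relu(x: np.ndarray, slope: float = 0.2) -> np.ndarray:
    return np.where(x >= 0, x, slope * x)


def conv1x1(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a k x k convolution: a 1 x 1 (channel-mixing) convolution."""
    return np.einsum("oc,chw->ohw", w, x)


def gain_block(h, w1, w2, attention):
    """G(h) = A * W2(sigma(W1(h))): two convolutions with Leaky ReLU, gated by the mask."""
    return attention * conv1x1(leaky_relu(conv1x1(h, w1)), w2)


def gsadb(h0, weights, attention):
    """H_b = G(H_{b-1}) + H_0: every layer re-adds the shared source features H_0."""
    h = h0
    for w1, w2 in weights:
        h = gain_block(h, w1, w2, attention) + h0
    return h


rng = np.random.default_rng(0)
channels, size, num_layers = 8, 16, 4  # B = 4 layers as in the text; sizes are toy values
h0 = rng.standard_normal((channels, size, size))
weights = [(0.1 * rng.standard_normal((channels, channels)),
            0.1 * rng.standard_normal((channels, channels))) for _ in range(num_layers)]
attention = rng.uniform(size=(1, size, size))  # one spatial mask broadcast over channels
out = gsadb(h0, weights, attention)
print(out.shape)  # (8, 16, 16)
```

Where the mask is close to zero (flat regions), each block passes $H_0$ through almost unchanged, which is how the gating limits disruption of spectral content there.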

2.1.3. Gradient-Based Spatial Attention Module

As part of the feature fusion module, the GSA module is a flexible plug-in that generates spatial attention features and refines the intermediate features within GSADBs. High-pass information extracted from the PAN image has been used in many variational methods and has been shown to be effective in enforcing structural consistency. Unlike these variational methods, we use high-pass information not merely as a constraint in the loss function but as an attention prior. First, we apply Laplacian and Sobel operators to the PAN image to obtain high-pass information as the attention prior; these gradient maps guide texture recovery in different regions. Second, the gradient-based prior helps to capture delicate textures. By learning the GSA, we obtain a spatial attention mask that enhances spatial texture and suppresses distortion of the original spectral information in flat regions. As a result, the feature fusion module becomes more sensitive to spatial details in edge regions and less disruptive to the spectral characteristics of smooth regions.

In detail, we take the gradient maps as input and use a two-layer branch to generate a gradient-based prior, which is shared among the GSA modules within the same stage. The convolutional kernel size is set to 3 with a stride of 1; where the attention masks must be aligned with down-sampled features, a kernel size of 4 and a stride of 2 are used instead. The shared gradient-based prior then passes through two successive convolutions to produce the spatial attention features.
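The fixed, non-learned part of the GSA input can be sketched as follows: the PAN image is filtered with a Laplacian and with the two Sobel kernels, and the absolute Laplacian response and the Sobel gradient magnitude are stacked as a two-channel gradient map. The particular 3 × 3 Laplacian variant, edge padding, and channel layout are assumptions; the learned two-layer branch that turns these maps into the shared prior is not reproduced.

```python
import numpy as np

LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float)
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3 x 3 cross-correlation with edge padding; the output has the same size as img."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def gradient_prior_input(pan: np.ndarray) -> np.ndarray:
    """Stack |Laplacian| and Sobel magnitude of the PAN image as a (2, H, W) input."""
    lap = np.abs(filter3x3(pan, LAPLACIAN))
    sobel = np.hypot(filter3x3(pan, SOBEL_X), filter3x3(pan, SOBEL_Y))
    return np.stack([lap, sobel])


step = np.zeros((8, 8))
step[:, 4:] = 1.0              # a vertical edge between columns 3 and 4
g = gradient_prior_input(step)
print(g[1, 0])                 # Sobel magnitude along one row: non-zero only at columns 3 and 4
```

Flat regions produce zero response, and edges produce large values, which is the spatial selectivity the attention mask is meant to inherit.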

2.1.4. Progressive Up-Sampling Module

The fused features are progressively up-sampled to the PAN image size by two sub-pixel convolution layers, which learn the parameters of the upscaling process. Each up-sampling branch is followed by a local residual block with a GSA module to refine the features. The skip concatenation helps inject details into higher layers, which noticeably eases training. A plain convolutional layer is applied last to generate the residual between the up-sampled MS image and the ideal HR MS image. This global residual learning [35] avoids the complicated transformation from input image pairs to targets; the network only needs to learn a residual map that restores the missing high-frequency details.
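The sub-pixel (pixel-shuffle) rearrangement behind the up-sampling layers, together with the global residual, can be sketched in NumPy. The channel ordering below matches the one documented for `torch.nn.PixelShuffle`. In the actual network, convolutions and GSA-refined residual blocks sit between the two shuffles; here they are omitted, and the feature sizes are toy values.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Sub-pixel rearrangement (C*r*r, H, W) -> (C, H*r, W*r).

    Output pixel (c, h*r + i, w*r + j) is input channel c*r*r + i*r + j at (h, w),
    which is the ordering used by torch.nn.PixelShuffle.
    """
    cr2, h, w = x.shape
    c = cr2 // (r * r)
    return x.reshape(c, r, r, h, w).transpose(0, 3, 1, 4, 2).reshape(c, h * r, w * r)


rng = np.random.default_rng(0)
feat = rng.standard_normal((64, 16, 16))  # toy fused features at 1/4 of the PAN size
stage1 = pixel_shuffle(feat, 2)           # (16, 32, 32)
stage2 = pixel_shuffle(stage1, 2)         # (4, 64, 64): residual for a 4-band MS image
ms_up = rng.uniform(size=(4, 64, 64))     # up-sampled MS image on the PAN grid
hr_ms = ms_up + stage2                    # global residual learning
print(hr_ms.shape)  # (4, 64, 64)
```

Two ×2 shuffles give the ×4 ratio progressively, so each stage only has to predict details at twice its input resolution.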

2.1.5. Loss Functions

In this paper, we decompose pan-sharpening into two sub-problems and regard it as a multi-task problem. The multi-resolution loss function $\mathcal{L}$ is proposed to retain both spectral and spatial information at different resolution scales. It is defined as follows:

$$\mathcal{L} = \mathcal{L}_{spe} + \lambda \mathcal{L}_{spa},$$

where $\lambda$ is the coefficient balancing the two loss terms, which is set to 0.1 in this paper. The first term, $\mathcal{L}_{spe}$, measures the pixel-wise difference between the spectral information of the HR MS image $\hat{M}_L$ fused from the reduced-resolution inputs and that of the original MS image $M$:

$$\mathcal{L}_{spe} = \frac{1}{N}\sum_{i=1}^{N}\big\|\hat{M}_L^{(i)} - M^{(i)}\big\|_1,$$

where $N$ is the size of a training batch. Although the $\ell_2$ loss has been widely used for this term, it can lead to a local minimum, which results in unsatisfactory performance in flat areas [17]. Here, we adopt the $\ell_1$ loss to measure the accuracy of the network's reconstruction.

The second term, $\mathcal{L}_{spa}$, measures the difference in gradients between the generated full-resolution HR MS image $\hat{M}$ and the original PAN image $P$, and is defined as follows:

$$\mathcal{L}_{spa} = \frac{1}{N}\sum_{i=1}^{N}\big\|\nabla\big(\phi(\hat{M}^{(i)})\big) - \nabla P^{(i)}\big\|_1,$$

where $\nabla$ denotes the gradient operator implemented with the Laplacian operator and $\phi(\cdot)$ is average pooling along the channel dimension. This loss takes advantage of the PAN image and provides a spatial constraint at the higher resolution.
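A minimal NumPy sketch of the multi-resolution loss with $\lambda = 0.1$: an $\ell_1$ spectral term between the reduced-resolution branch output and the original MS image, plus a spatial term comparing the Laplacian of the channel-averaged full-resolution output with the Laplacian of the PAN image. Using the $\ell_1$ norm for the spatial term, the specific 3 × 3 Laplacian kernel, and summing (rather than averaging) over pixels are assumptions of this sketch.

```python
import numpy as np

LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float)


def laplacian(img: np.ndarray) -> np.ndarray:
    """3 x 3 Laplacian response of a 2-D image (edge padding keeps the size)."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += LAPLACIAN[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def multi_resolution_loss(fused_reduced, ms, fused_full, pan, lam=0.1):
    """L = L_spe + lam * L_spa for one batch.

    fused_reduced: (N, C, h, w) output of the reduced-resolution branch
    ms:            (N, C, h, w) original MS images (spectral reference)
    fused_full:    (N, C, H, W) output of the full-resolution branch
    pan:           (N, H, W) original PAN images (spatial reference)
    """
    n = ms.shape[0]
    # Spectral term: per-sample l1 norm, averaged over the batch.
    l_spe = np.abs(fused_reduced - ms).sum() / n
    # Spatial term: channel-average pooling, then compare Laplacian responses with PAN.
    pooled = fused_full.mean(axis=1)
    l_spa = sum(np.abs(laplacian(pooled[i]) - laplacian(pan[i])).sum() for i in range(n)) / n
    return l_spe + lam * l_spa


rng = np.random.default_rng(0)
ms = rng.uniform(size=(2, 4, 16, 16))    # toy batch: N = 2, C = 4
pan = rng.uniform(size=(2, 64, 64))
fused_reduced = ms + 0.01 * rng.standard_normal(ms.shape)
fused_full = rng.uniform(size=(2, 4, 64, 64))
print(multi_resolution_loss(fused_reduced, ms, fused_full, pan))
```

Note that the two branches are scored against different references: the MS image constrains spectra at reduced resolution, while the PAN image constrains structure at full resolution, which is what lets the full-resolution branch learn without an HR MS ground truth.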

3. Experimental Results and Analysis

In this section, we compare the proposed GSA-SiamNet with six widely used techniques: AWLP, MTF-GLP, BDSD, PNN, PSGAN, and LPPN. The code of all compared methods is publicly available [2], and we train the learning-based methods on our datasets with their default parameters. Five reference-based quality metrics [36,37,38,39,40] are used to evaluate the performance of the proposed method at reduced resolution (see Section 3.4). The no-reference metrics QNR, $D_\lambda$, and $D_s$ are employed in the full-resolution validation.
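Three of the reference-based metrics used in the reduced-resolution validation (SAM, ERGAS, and PSNR) have short standard definitions, sketched below in NumPy. The sketch assumes images shaped (C, H, W), intensities normalized to [0, 1] for PSNR, and a resolution ratio of 4 for ERGAS; the remaining metrics (sCC and the vector quality index) are omitted.

```python
import numpy as np


def sam_degrees(ref: np.ndarray, fused: np.ndarray, eps: float = 1e-12) -> float:
    """Mean spectral angle (degrees) between per-pixel spectra of (C, H, W) images."""
    dot = (ref * fused).sum(axis=0)
    norms = np.linalg.norm(ref, axis=0) * np.linalg.norm(fused, axis=0)
    cos = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return float(np.degrees(np.arccos(cos)).mean())


def ergas(ref: np.ndarray, fused: np.ndarray, ratio: int = 4) -> float:
    """ERGAS = 100 / ratio * sqrt(mean_k(RMSE_k^2 / mu_k^2)); ratio = MS/PAN pixel-size ratio."""
    rmse2 = ((ref - fused) ** 2).mean(axis=(1, 2))
    mu2 = ref.mean(axis=(1, 2)) ** 2
    return float(100.0 / ratio * np.sqrt((rmse2 / mu2).mean()))


def psnr(ref: np.ndarray, fused: np.ndarray, peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB, assuming intensities in [0, peak]."""
    mse = ((ref - fused) ** 2).mean()
    return float(10 * np.log10(peak ** 2 / mse))


rng = np.random.default_rng(0)
gt = rng.uniform(0.1, 1.0, size=(8, 32, 32))        # toy 8-band reference
est = gt + 0.02 * rng.standard_normal(gt.shape)      # toy fused estimate
print(sam_degrees(gt, est), ergas(gt, est), psnr(gt, est))
```

SAM is insensitive to a per-pixel scaling of the spectrum, whereas ERGAS and PSNR penalize radiometric errors, which is why the metrics are reported together.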

3.1. Datasets

To validate the performance of the proposed method, a WorldView-3 (WV-3) dataset is used for the pan-sharpening experiments. The MS images acquired by WV-3 have 8 spectral bands (coastal, blue, green, yellow, red, red edge, near-IR1, and near-IR2). The sensors on the WV-3 satellite acquire MS images with a spatial resolution of 1.24 m and PAN images with a resolution of 0.31 m. The WV-3 dataset used in this paper was collected by the DigitalGlobe WV-3 satellite over Moscow, Mumbai, and San Juan. The images from these three regions were acquired at different times and differ significantly in their geographical settings.

3.2. Implementation Details

The mini-batch size is set to 16, and the parameters of GSA-SiamNet are updated by the Adam optimizer. GSA-SiamNet is implemented in PyTorch and trained in parallel on two NVIDIA GeForce GTX 1080 GPUs. The original MS and PAN images are used as the references for the spectral and spatial constraints, respectively. Each sample consists of a raw MS patch and the corresponding PAN patch. The training set, collected over Moscow and Mumbai, includes 2211 samples, one-tenth of which are used for validation. The test set contains 399 images collected over Moscow and San Juan. The number of training epochs is controlled by early stopping.
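The data split and the early-stopping rule can be sketched in plain Python. Holding out one-tenth of 2211 samples leaves 221 for validation and 1990 for training. The shuffling seed, the `patience` value, and the `min_delta` threshold are hypothetical, since the text does not state the early-stopping criterion.

```python
import random


def split_train_val(samples, val_fraction=0.1, seed=0):
    """Shuffle indices once and hold out `val_fraction` of the samples for validation."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    n_val = int(len(samples) * val_fraction)
    val = [samples[i] for i in idx[:n_val]]
    train = [samples[i] for i in idx[n_val:]]
    return train, val


class EarlyStopping:
    """Stop when the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 10, min_delta: float = 0.0):
        self.patience = patience      # hypothetical value; not given in the text
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


train, val = split_train_val(list(range(2211)))
print(len(train), len(val))  # 1990 221
```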

3.3. Ablation Study

3.3.1. Evaluation of GSA

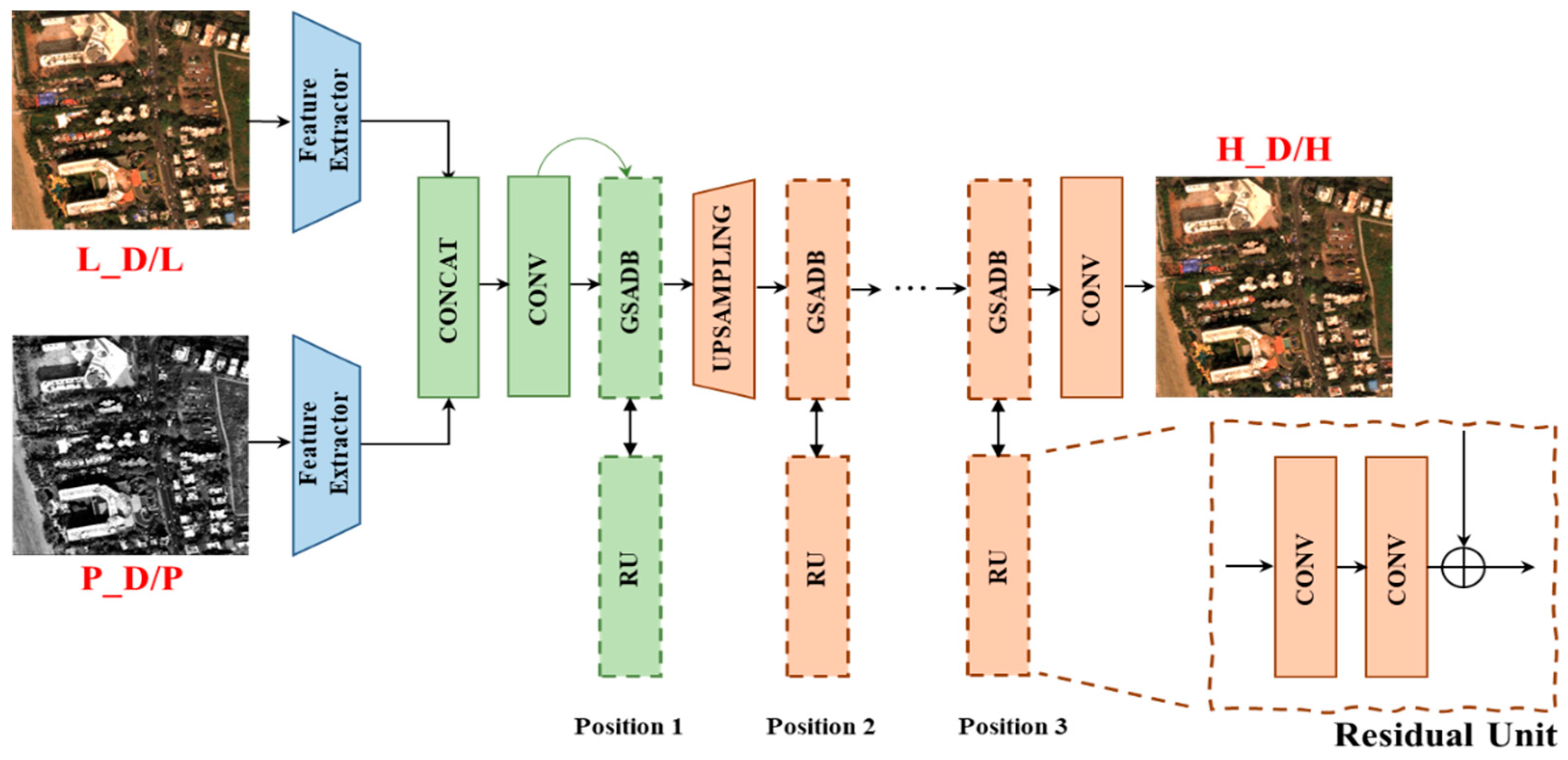

The GSA module can be flexibly inserted at any stage of the pan-sharpening process. As shown in Figure 3, we conducted an experiment to verify the effectiveness of the GSA module, evaluating its contribution at each stage by varying its integration position. For a fair comparison, each variant is compared with an architecture of the same depth that does not include GSA. The skip-connection residual block in the feature fusion module is also replaced with a single convolution operator. In Table 1, bold indicates the best result, and the average values and standard deviations for each configuration are listed. Quantitatively, the network's performance improves to different degrees regardless of the stage at which the module is inserted. In particular, three of the metrics, including PSNR, are optimal when the module is inserted in the fusion phase, while the remaining two metrics are suboptimal. These results indicate that the GSA layer leads to a valid performance improvement and improves the network's ability to handle spatial details with less spectral loss. The complete GSA-SiamNet therefore inserts GSA modules at all stages.

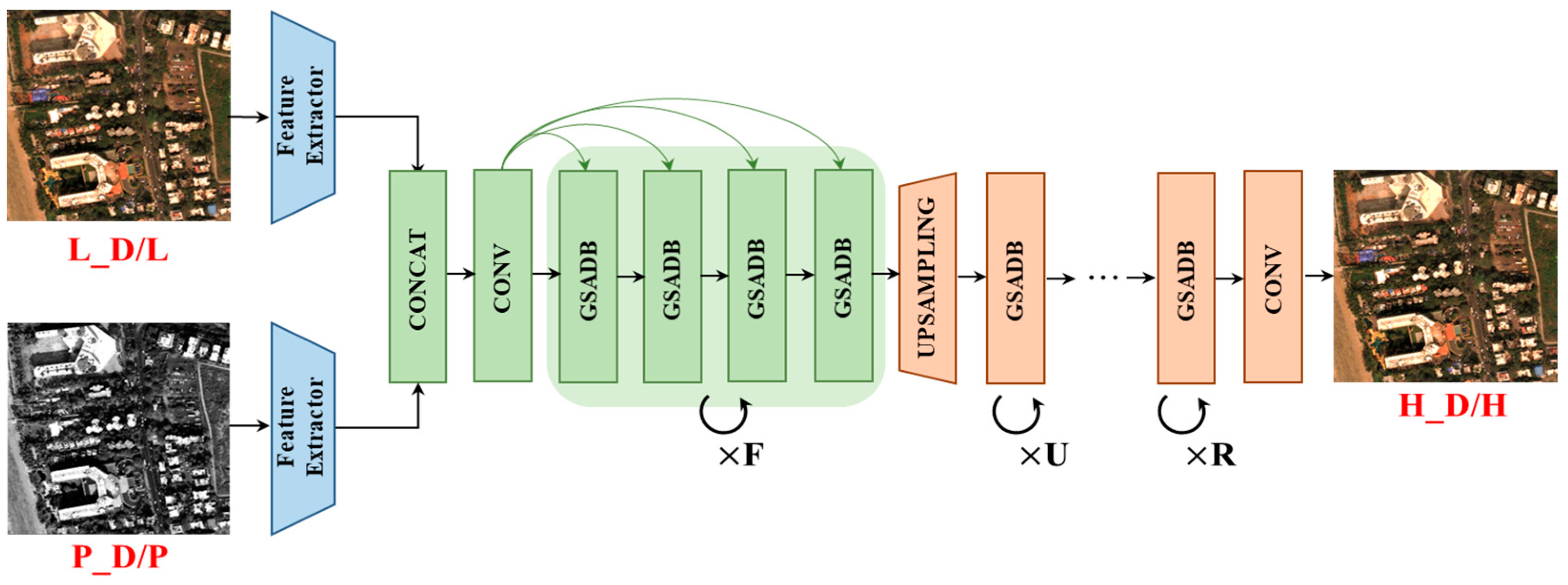

3.3.2. Evaluation of the Number of Recursive Blocks

Because the residual units within a recursive block share weights, the number of parameters remains the same as the network becomes deeper. As shown in Figure 4, we explore various combinations of F, U, and R to construct models of different depths and observe how these three parameters affect performance. Here, F, U, and R represent the number of recursions of the GSADB at each stage.
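Why recursion deepens the network without adding parameters can be seen in a toy example: a residual unit holding a single weight matrix is unrolled `recursions` times, re-adding the block input at each step. The tanh unit, the matrix-vector form, and the class name are hypothetical simplifications of a GSADB, chosen only to show the parameter count.

```python
import numpy as np


class RecursiveBlock:
    """A residual unit unrolled `recursions` times with ONE shared weight matrix (hypothetical)."""

    def __init__(self, channels: int, recursions: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w = 0.1 * rng.standard_normal((channels, channels))  # the only parameters
        self.recursions = recursions

    def num_params(self) -> int:
        return self.w.size  # does not depend on self.recursions

    def __call__(self, x: np.ndarray) -> np.ndarray:
        h0 = x
        h = x
        for _ in range(self.recursions):
            h = np.tanh(self.w @ h) + h0  # same weights reused at every recursion
        return h


for r in (2, 4, 6):
    block = RecursiveBlock(channels=16, recursions=r)
    print(r, block.num_params())  # 256 parameters for every depth
```

Depth, and therefore inference time, grows with the recursion number, while the model size stays fixed; this is the trade-off explored by varying F, U, and R.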

To clearly show the impact of the number of recursive blocks, we plot three sets of line graphs in Figure 5. The experiment was divided into two groups, in which one of the recursion numbers was fixed at 4 and 6, respectively. The data in the three subplots are the same, but in each subplot, the results sharing the same recursion number at one stage are connected by dashed lines; for example, subplot (a) connects the results with the same U. Darker dots represent larger recursion numbers within a group.

Figure 5 shows that increasing the recursion number makes the network deeper and improves performance, indicating that deeper networks are generally better. In intra-group comparisons, with the fixed parameter set to 4 or 6, increasing the recursion number of one stage yielded a greater performance improvement than increasing that of another stage at the same depth; for example, two such configurations (d = 15 and d = 16) achieve 39.54 and 39.55 dB, respectively. The performance gains diminish as depth increases, with two configurations at d = 16 forming obvious turning points. In addition, the slope of the polyline in (b) is steeper than that in (a); we therefore prefer to use the GSA module in shallower layers.

In inter-group comparisons, the performance improvement becomes less pronounced as the depth increases, especially when the fixed parameter is 6, which is consistent with the previous findings. Despite their different depths, three configurations achieve comparable performance (d = 6, 39.612 dB; d = 6, 39.614 dB; d = 5, 39.612 dB) and outperform the previous shallow networks. Under this recursive learning strategy, such configurations can achieve state-of-the-art results.

3.4. Reduced-Resolution Validation

Following Wald's protocol [41], we evaluate the methods at reduced resolution: the original MS and PAN images are filtered by a Gaussian blur kernel and further down-sampled with the nearest-neighbor technique by a factor of r (4 for WV-3, i.e., 1.24 m / 0.31 m). Five widely used metrics, including sCC, SAM, ERGAS, and PSNR, are used for the quantitative comparison of the proposed method.
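The degradation step of Wald's protocol can be sketched as a separable Gaussian blur followed by nearest-neighbor decimation (keeping every r-th pixel). The kernel size of 9 and σ = 2 are hypothetical, because the kernel parameters are not restated here; many pan-sharpening toolboxes instead use Gaussian filters matched to the sensor's modulation transfer function.

```python
import numpy as np


def gaussian_kernel_1d(size: int, sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel (sums to 1)."""
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def blur(img: np.ndarray, size: int = 9, sigma: float = 2.0) -> np.ndarray:
    """Separable Gaussian blur of a 2-D image with edge padding (size odd)."""
    k = gaussian_kernel_1d(size, sigma)
    padded = np.pad(img, size // 2, mode="edge")
    # Filter rows, then columns; "valid" mode removes exactly the padding.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def degrade(img: np.ndarray, r: int = 4) -> np.ndarray:
    """Wald's protocol: Gaussian blur, then nearest-neighbour decimation by r."""
    return blur(img)[::r, ::r]


rng = np.random.default_rng(0)
pan = rng.uniform(size=(64, 64))
ms = rng.uniform(size=(8, 16, 16))
pan_lr = degrade(pan)                              # (16, 16): plays the role of the new PAN
ms_lr = np.stack([degrade(band) for band in ms])   # (8, 4, 4): new LR MS input
print(pan_lr.shape, ms_lr.shape)  # (16, 16) (8, 4, 4)
```

After degradation, the original MS image serves as the reference for the fused result obtained from `ms_lr` and `pan_lr`.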

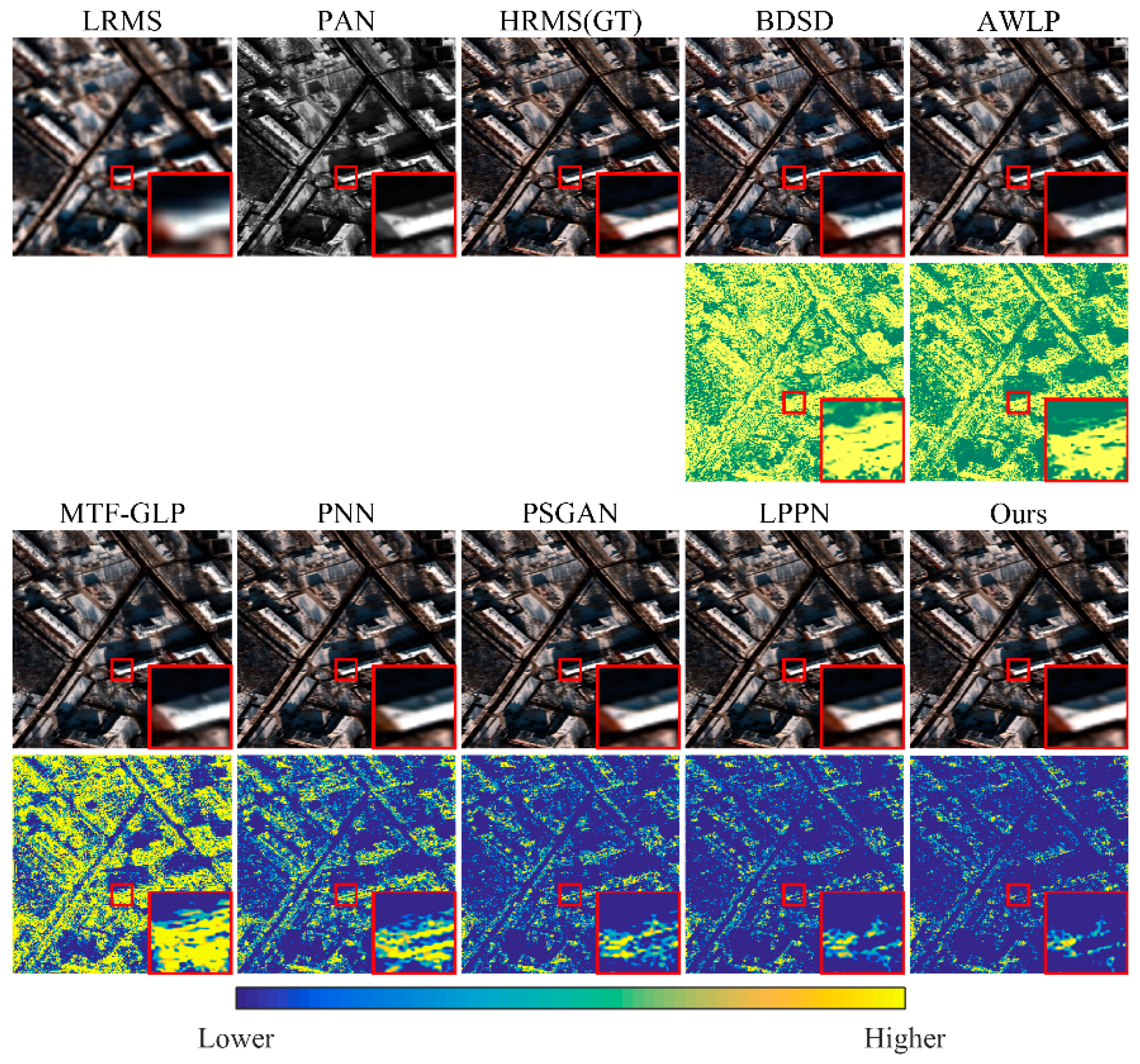

The fusion results are shown in Figure 6 and Figure 7. The first row shows the up-sampled LR MS image, the PAN image, the original HR MS image (GT), and the fusion results, with all MS images displayed in true color (RGB). The second row shows the corresponding error images, which take the original MS image as the ground truth. The error images are color-coded according to the color bar at the bottom of the figure, and an exponential transformation is applied to them for visual clarity. The error values are truncated so that small errors are not missed. From left to right, the colors represent values from minimum to maximum.

In Figure 6 and Figure 7, the geometric details are effectively preserved in the reduced-resolution domain, but some methods exhibit drawbacks such as spectral distortion. Overall, the error maps show significant spectral distortions for BDSD and AWLP. The three conventional methods inject high-frequency information in an overly simple and coarse manner, and their results perform poorly in terms of spectral consistency. PNN, PSGAN, LPPN, and our GSA-SiamNet have lower errors than the traditional methods, indicating that learned injection functions have an advantage over manually designed ones in terms of spectral preservation. In Figure 7, the colored ground objects suffer from varying degrees of spectral information loss in all pan-sharpened images. Among these methods, ours has the smallest mean error. GSA-SiamNet maintains smaller spectral distortion in low-frequency regions and injects high-frequency information selectively.

Quantitatively, Table 2 lists the results on the two sub-datasets, with the best results in bold. Learning-based pan-sharpening methods outperform traditional approaches, and among the learning-based approaches, GSA-SiamNet performs better than all other competitors in all metrics. This is consistent with the conclusions of the qualitative comparison. Consequently, GSA-SiamNet generates the fusion result closest to the reference MS image (the ground truth), both spectrally and spatially, in both qualitative and quantitative comparisons.

3.5. Full-Resolution Validation

We also evaluate the algorithms at the full resolution of the images. In this case, three no-reference metrics (QNR, $D_\lambda$, and $D_s$) are calculated on the full-resolution images.
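The structure of the no-reference QNR score can be sketched as follows: $D_\lambda$ measures how much the inter-band quality index Q changes between the MS image and the fused image, $D_s$ measures how much the band-to-PAN Q changes between the two resolutions, and QNR $= (1 - D_\lambda)^\alpha (1 - D_s)^\beta$. This sketch computes Q globally over the whole image, whereas standard implementations average Q over sliding windows, and it assumes the commonly used exponents $\alpha = \beta = 1$ and $p = q = 1$.

```python
import numpy as np


def q_index(a: np.ndarray, b: np.ndarray, eps: float = 1e-12) -> float:
    """Universal image quality index Q, computed globally (no sliding window)."""
    a = a.ravel().astype(float)
    b = b.ravel().astype(float)
    ma, mb = a.mean(), b.mean()
    cov = ((a - ma) * (b - mb)).mean()
    return float(4 * cov * ma * mb / ((a.var() + b.var()) * (ma ** 2 + mb ** 2) + eps))


def d_lambda(fused, ms, p=1):
    """Spectral distortion: change in inter-band Q between the MS and fused images."""
    c = fused.shape[0]
    diffs = [abs(q_index(fused[i], fused[j]) - q_index(ms[i], ms[j])) ** p
             for i in range(c) for j in range(c) if i != j]
    return (sum(diffs) / (c * (c - 1))) ** (1 / p)


def d_s(fused, ms, pan, pan_lr, q=1):
    """Spatial distortion: change in band-to-PAN Q between the two resolutions."""
    c = fused.shape[0]
    diffs = [abs(q_index(fused[i], pan) - q_index(ms[i], pan_lr)) ** q for i in range(c)]
    return (sum(diffs) / c) ** (1 / q)


def qnr(fused, ms, pan, pan_lr, alpha=1, beta=1):
    """QNR = (1 - D_lambda)^alpha * (1 - D_s)^beta; 1 is the ideal value."""
    return (1 - d_lambda(fused, ms)) ** alpha * (1 - d_s(fused, ms, pan, pan_lr)) ** beta


rng = np.random.default_rng(0)
ms = rng.uniform(size=(4, 16, 16))
pan_lr = rng.uniform(size=(16, 16))                  # PAN degraded to the MS grid
pan = pan_lr.repeat(4, axis=0).repeat(4, axis=1)
fused = ms.repeat(4, axis=1).repeat(4, axis=2)       # plain nearest-neighbour up-sampling
print(qnr(fused, ms, pan, pan_lr))                   # close to 1.0
```

The example also illustrates the caveat discussed for Table 3: a fused image that merely replicates the up-sampled MS image can score close to the ideal QNR, because the indices are computed relative to the MS input.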

In Table 3, the best values are marked in bold. Notably, GSA-SiamNet does not achieve the best performance in every metric. Validation at full resolution avoids the need for an unknown ground truth, but the index values are less reliable because they rely heavily on the up-sampled MS image. In other words, visual evaluation is more reliable than quantitative evaluation in the full-resolution experiment.

Furthermore, Table 4 reports the average inference time (A.T., in seconds) of each fusion method. Traditional methods run on CPUs, while deep-learning-based methods perform inference on GPUs. Because the two run in different environments, we only compare the computational cost of the deep-learning-based methods. On the test datasets, PNN has the shortest average inference time, while LPPN and GSA-SiamNet have slightly higher computational costs. The recursive structure makes the network deeper, which improves performance but increases inference time. In future work, we will investigate ways to improve computational efficiency.

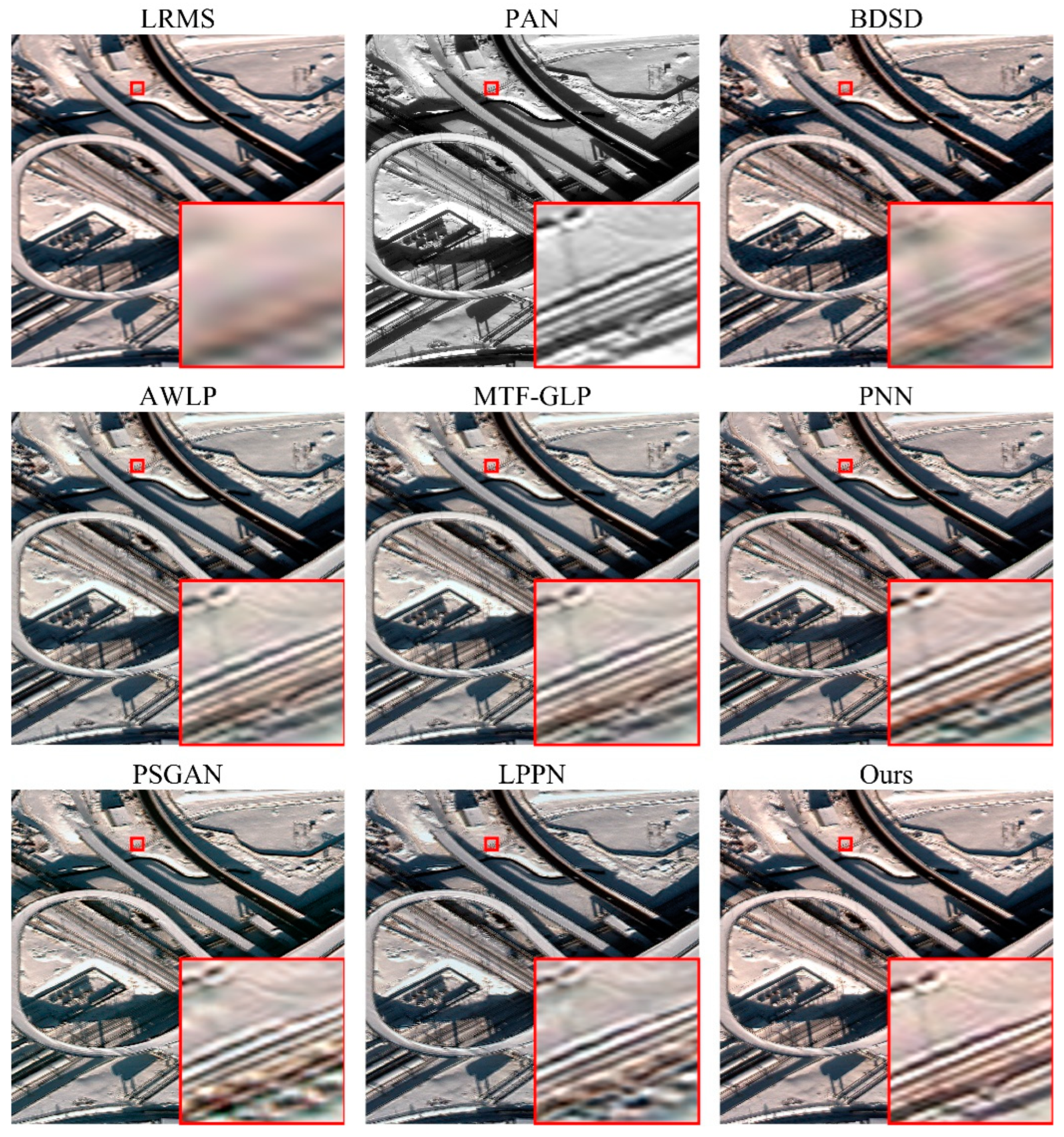

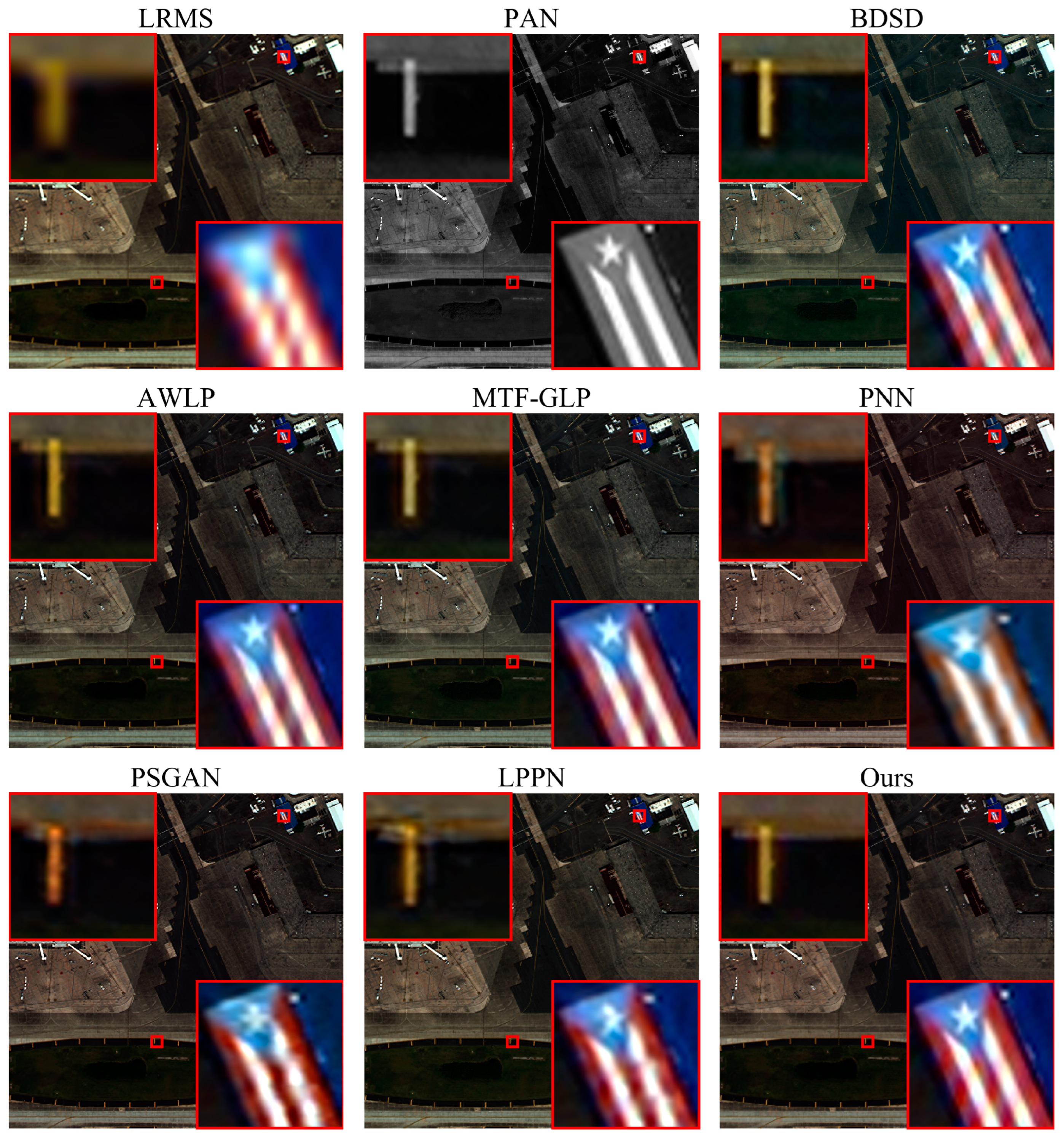

As shown in Figure 8 and Figure 9, we select a typical example of the results from each dataset. Discrepancies between the quantitative metrics and the visual appearance of the results can be observed. In Figure 8, BDSD yields the worst results, with details completely distorted. The zoomed-in views show that PSGAN and LPPN fail to recover the spatial characteristics, and their images appear distorted at the edges. AWLP, MTF-GLP, PNN, and our method preserve details that are as clear as those in the PAN image. Overall, only BDSD, PNN, and our method retain the spectral features of the original image on the snow surface.

Figure 9 shows a sample collected over San Juan, whose distribution differs significantly from that of the training set. In the zoomed-in views, the original LR MS image exhibits jagged edges, which lead to severe artifacts in BDSD and AWLP. PSGAN and LPPN yield spatial distortions, while MTF-GLP and GSA-SiamNet perform well from an anti-aliasing perspective. When the distribution of the test data differs from that of the training data, PNN, PSGAN, and LPPN lose their advantage in spectral preservation and introduce some blurring into the results. MTF-GLP suffers from color distortion. In this full-resolution validation, the pan-sharpened HR MS image generated by GSA-SiamNet is visually superior.

This section demonstrates the advantage of GSA-SiamNet at full resolution. Owing to its focus on high-frequency content, GSA-SiamNet is more robust than the other networks, and its strong training performance translates into good test performance on new images with different distributions.