Assessing Sentinel-2, Sentinel-1, and ALOS-2 PALSAR-2 Data for Large-Scale Wildfire-Burned Area Mapping: Insights from the 2017–2019 Canada Wildfires

Abstract

1. Introduction

- To the best of our knowledge, this is the first large-scale wildfire satellite image dataset that includes both pre-fire and post-fire images captured by the C-Band Sentinel-1, multispectral Sentinel-2, and L-Band ALOS-2 PALSAR-2 satellites.

- We systematically analyzed this large-scale multi-sensor satellite imagery dataset, quantitatively comparing MSI spectral and SAR backscatter responses between burned and unburned areas, as well as their temporal changes across various land cover types.

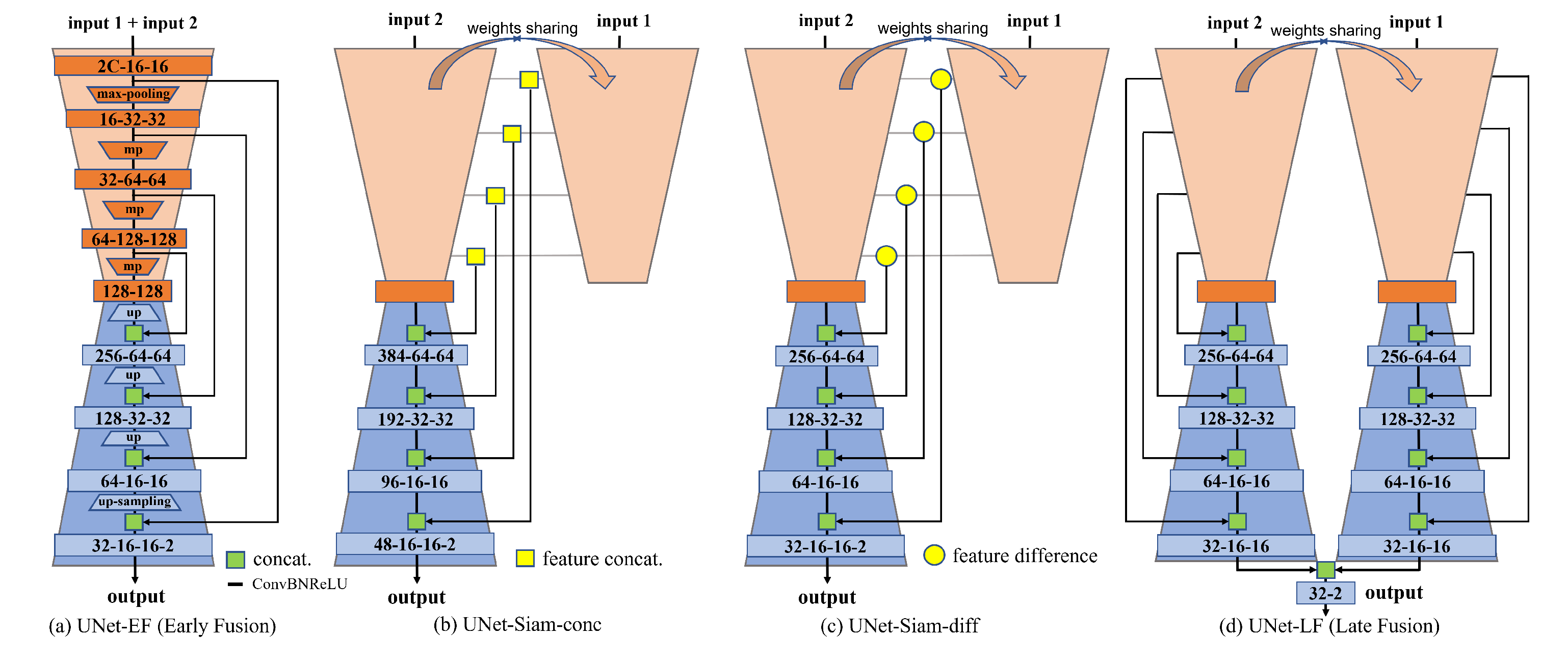

- We evaluated several simple but widely used deep learning architectures for wildfire-burned area mapping, namely U-Net and its Siamese variants. We also investigated three fusion strategies: early fusion, late fusion, and intermediate fusion.
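The three fusion strategies differ only in where the two input streams are combined. The NumPy sketch below illustrates this at the level of array shapes; it is not the study's implementation. The inputs `x_a` and `x_b` can be read either as pre-/post-fire images or as two sensors, `toy_encoder` is a hypothetical stand-in for a CNN encoder (2×2 average pooling), and weighted probability averaging is just one possible late-fusion rule, assumed here for illustration.

```python
import numpy as np

def early_fusion(x_a, x_b):
    """Early fusion: stack two (C, H, W) inputs on the channel axis, then run ONE network."""
    return np.concatenate([x_a, x_b], axis=0)

def toy_encoder(x):
    """Hypothetical stand-in for a CNN encoder: 2x2 average pooling per channel.

    Assumes H and W are even.
    """
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def intermediate_fusion(x_a, x_b):
    """Intermediate fusion: encode each input separately, then concatenate the features."""
    return np.concatenate([toy_encoder(x_a), toy_encoder(x_b)], axis=0)

def late_fusion(prob_a, prob_b, w_a=0.5):
    """Late fusion (assumed rule): weighted average of per-pixel burned probabilities
    produced by two independently run models."""
    return w_a * prob_a + (1.0 - w_a) * prob_b
```

For two 3-channel 4×4 inputs, early fusion yields a 6-channel 4×4 input, intermediate fusion yields 6 channels at the pooled 2×2 resolution, and late fusion operates only on the two output probability maps.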

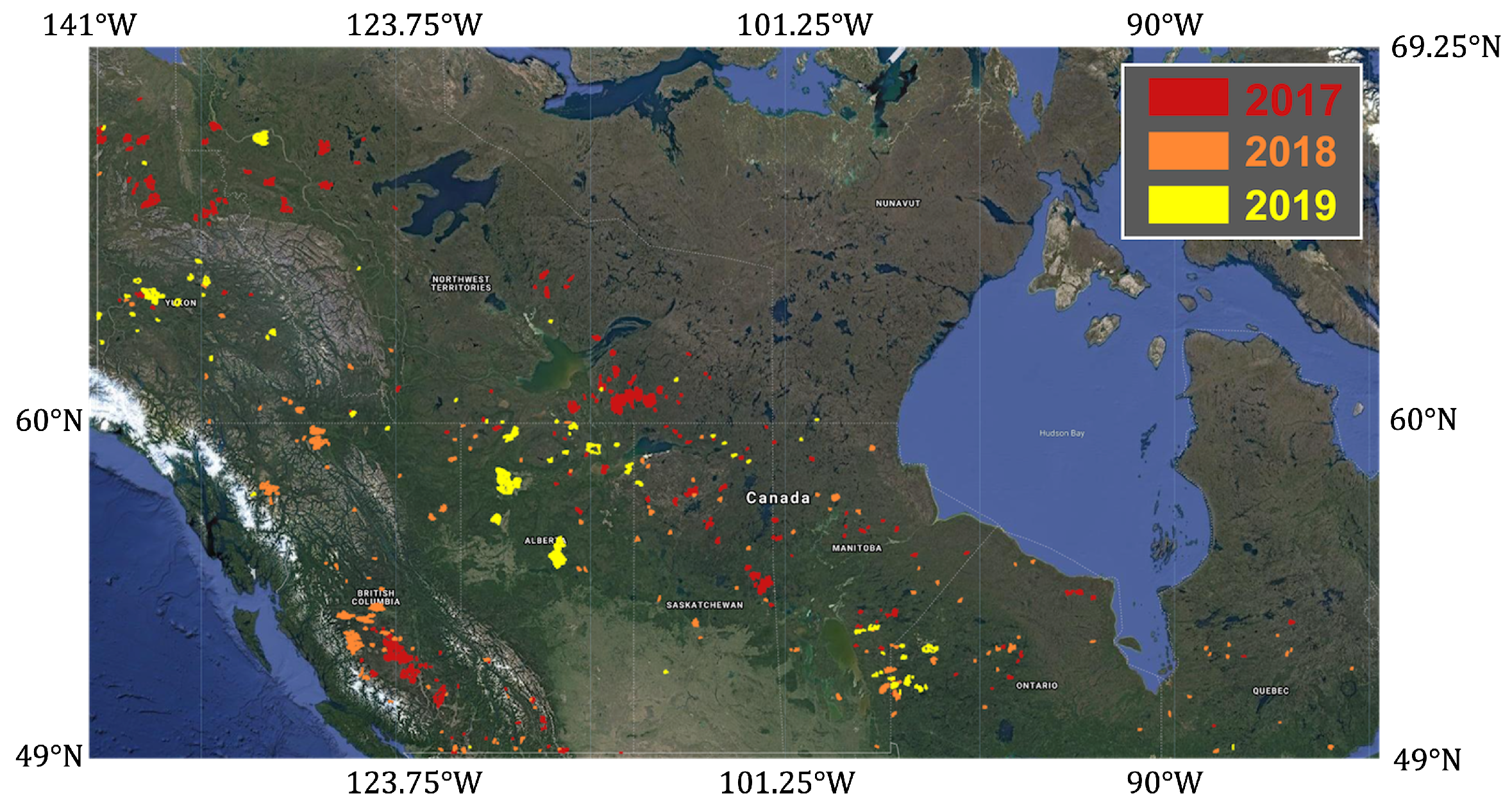

2. Wildfire-S1S2ALOS-Canada Dataset

2.1. Data Sources

2.2. Dataset Preparation

2.3. Dataset Structure

3. Optical and Radar Responses to Burned Areas

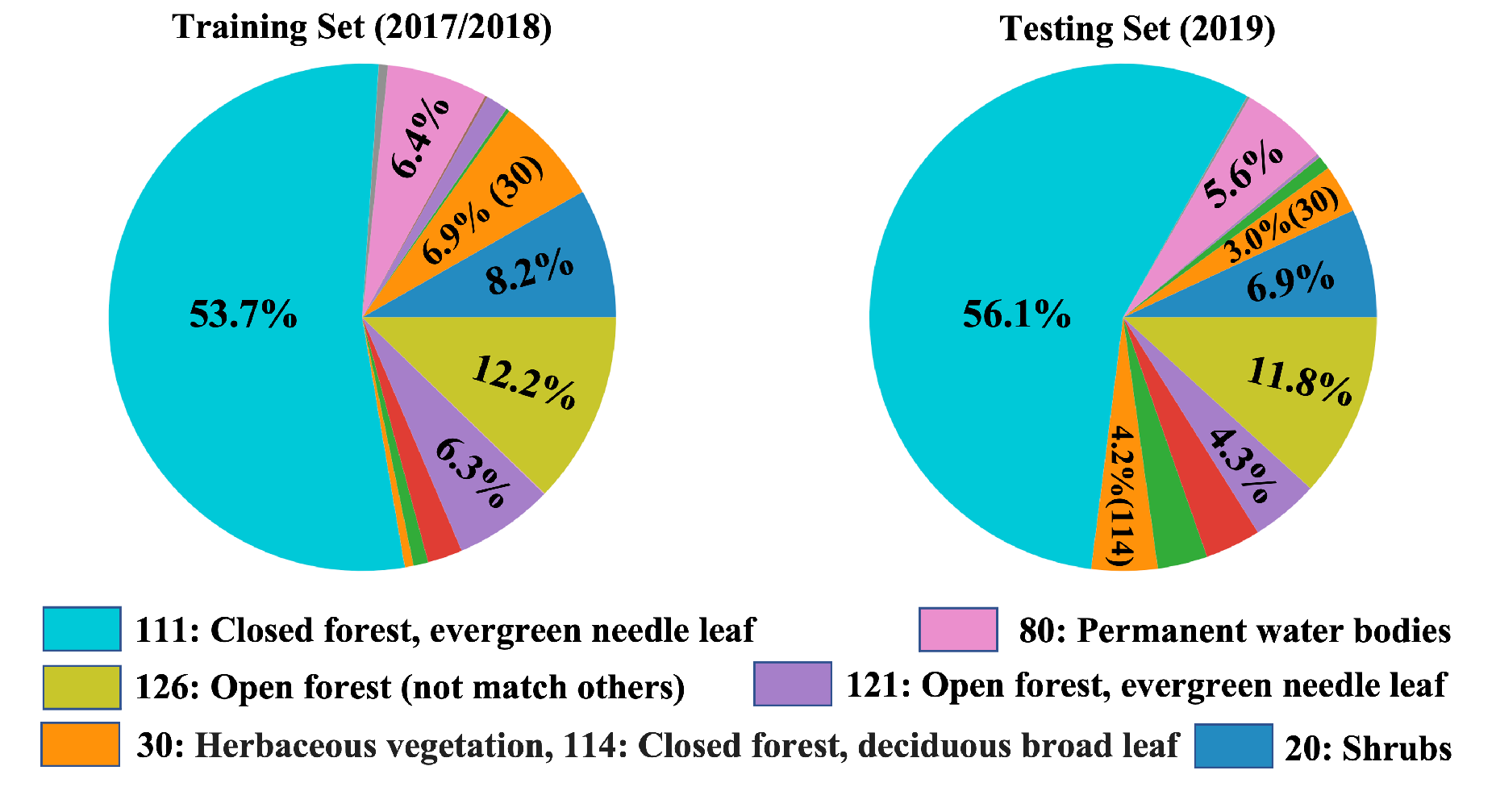

3.1. Land Cover Distribution

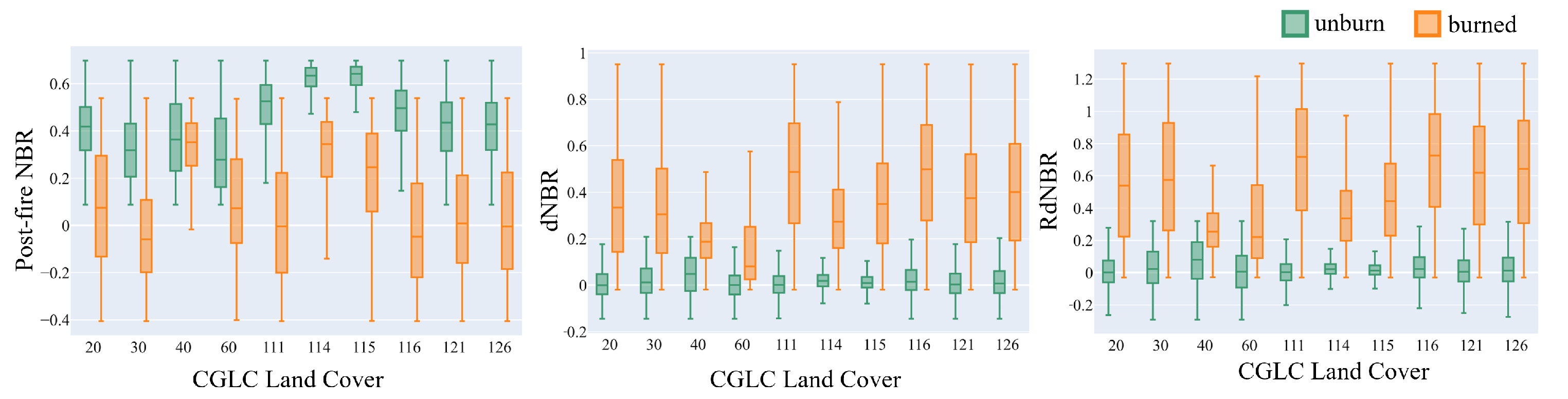

3.2. Sentinel-2 Spectral Responses

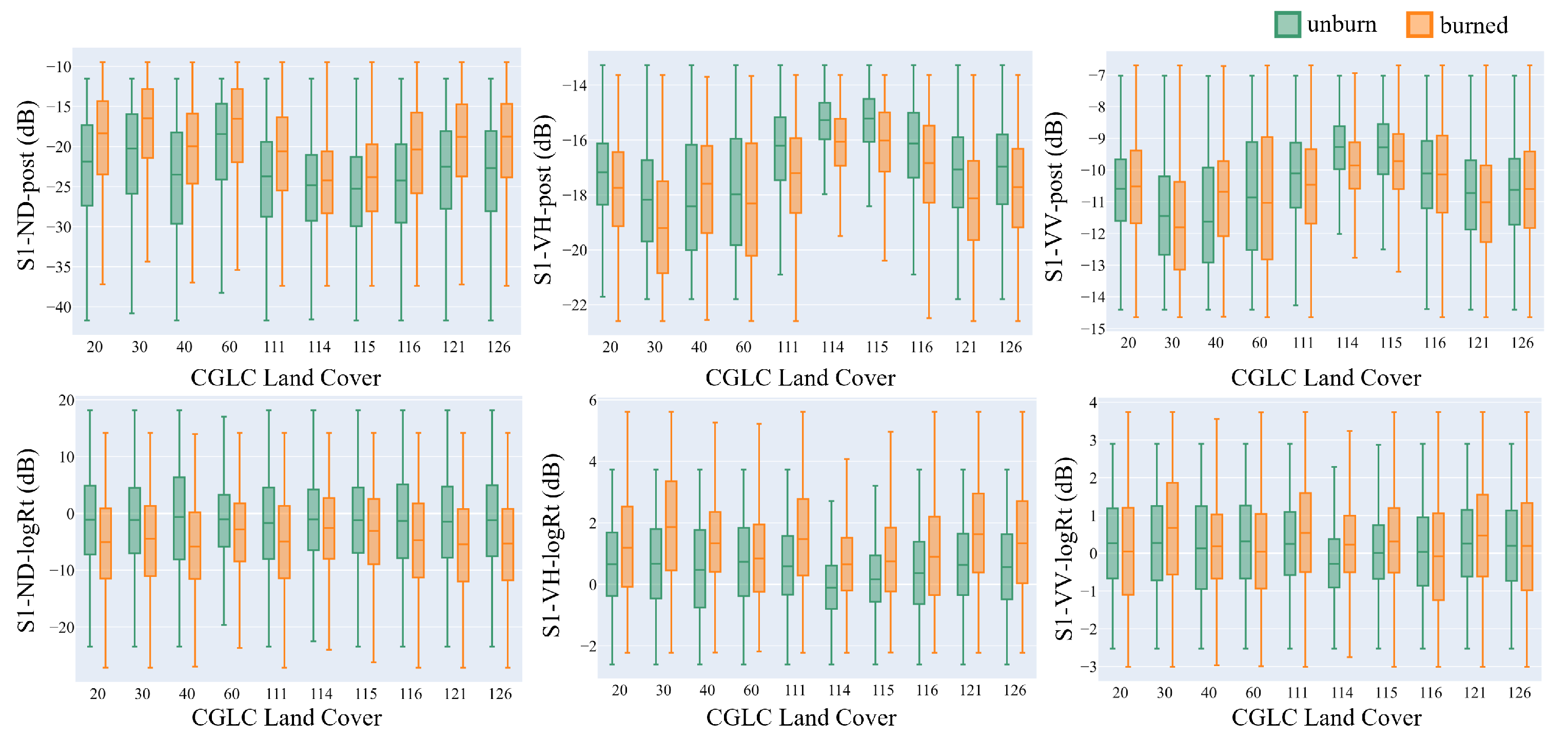

3.3. Sentinel-1 C-Band SAR Backscatter Responses

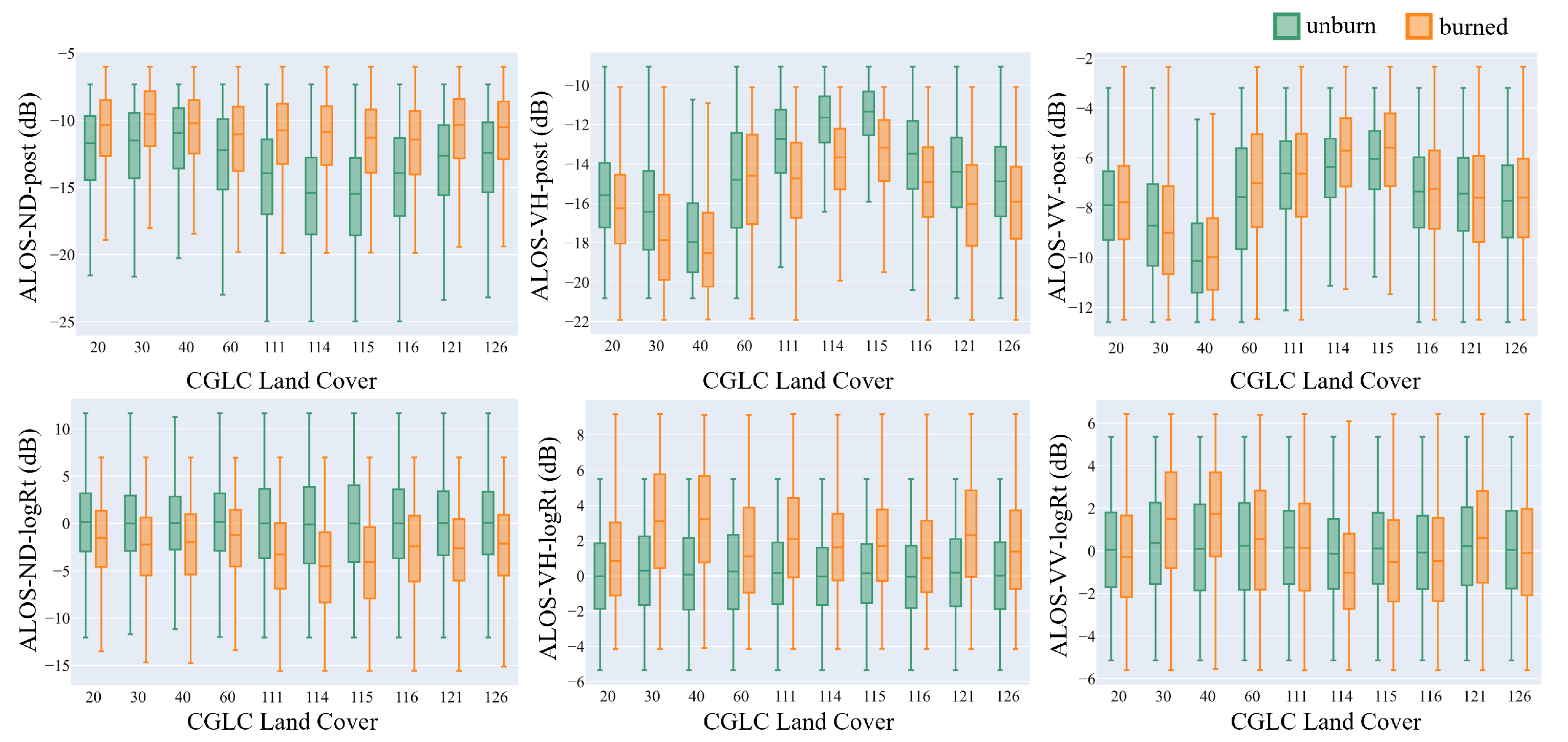

3.4. ALOS-2 PALSAR-2 L-Band SAR Backscatter Responses

4. Deep Learning for Wildfire-Burned Area Mapping

- Siam-UNet-Conc [27]: Siamese U-Net with intermediate feature concatenation, in which two encoder branches process the pre-fire and post-fire images separately, and their feature maps are concatenated along the channel dimension with the decoder features of the same width and height (see Figure 7b);
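At a single decoder level, this concatenation is tensor bookkeeping: three feature maps with equal height and width are stacked along the channel axis. The sketch below assumes NumPy arrays in (C, H, W) layout and uses hypothetical helper names. In the original Siamese formulation of Daudt et al. [27] the two encoder branches share weights, and its companion FC-Siam-diff variant feeds the absolute feature difference to the decoder instead of the concatenated pair; both are shown for contrast.

```python
import numpy as np

def siam_conc_skip(f_pre, f_post, dec):
    """Decoder input at one level of a Siam-UNet-Conc-style network.

    f_pre, f_post: encoder features of the pre- and post-fire images, shape (C, H, W).
    dec: upsampled decoder features from the level below, shape (C_dec, H, W).
    """
    if not (f_pre.shape[1:] == f_post.shape[1:] == dec.shape[1:]):
        raise ValueError("feature maps must share height and width")
    # Channel axis is axis 0 in CHW layout.
    return np.concatenate([dec, f_pre, f_post], axis=0)

def siam_diff_skip(f_pre, f_post, dec):
    """FC-Siam-diff-style alternative from [27]: |f_pre - f_post| replaces the pair."""
    if not (f_pre.shape == f_post.shape and f_pre.shape[1:] == dec.shape[1:]):
        raise ValueError("incompatible feature map shapes")
    return np.concatenate([dec, np.abs(f_pre - f_post)], axis=0)
```

With 16 decoder channels and 8 channels per encoder branch, the decoder convolution at that level receives 32 input channels under concatenation, versus 24 under the difference variant.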

5. Experimental Results

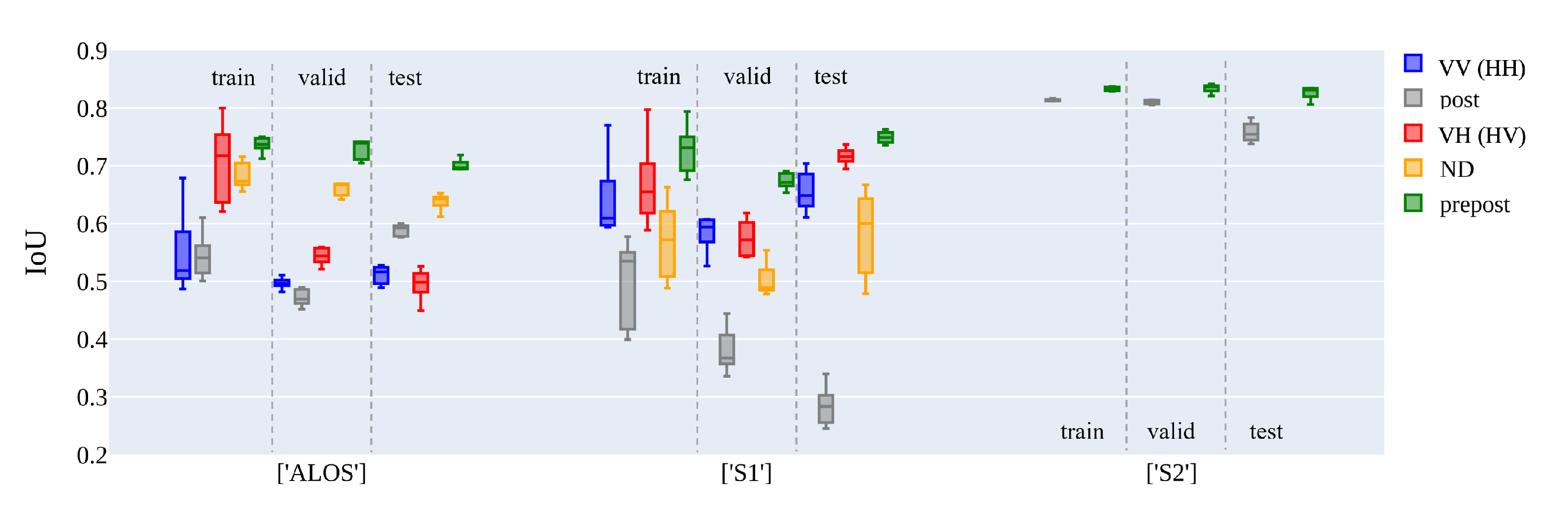

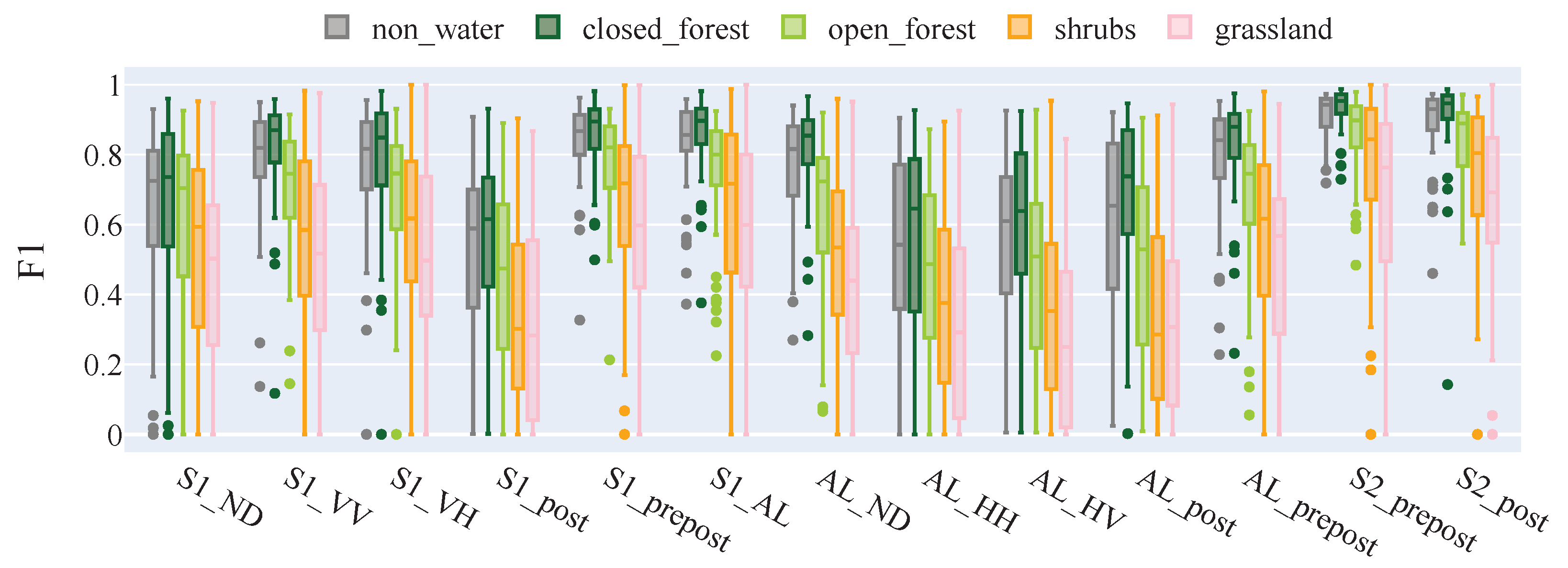

5.1. Channel Evaluation with U-Net

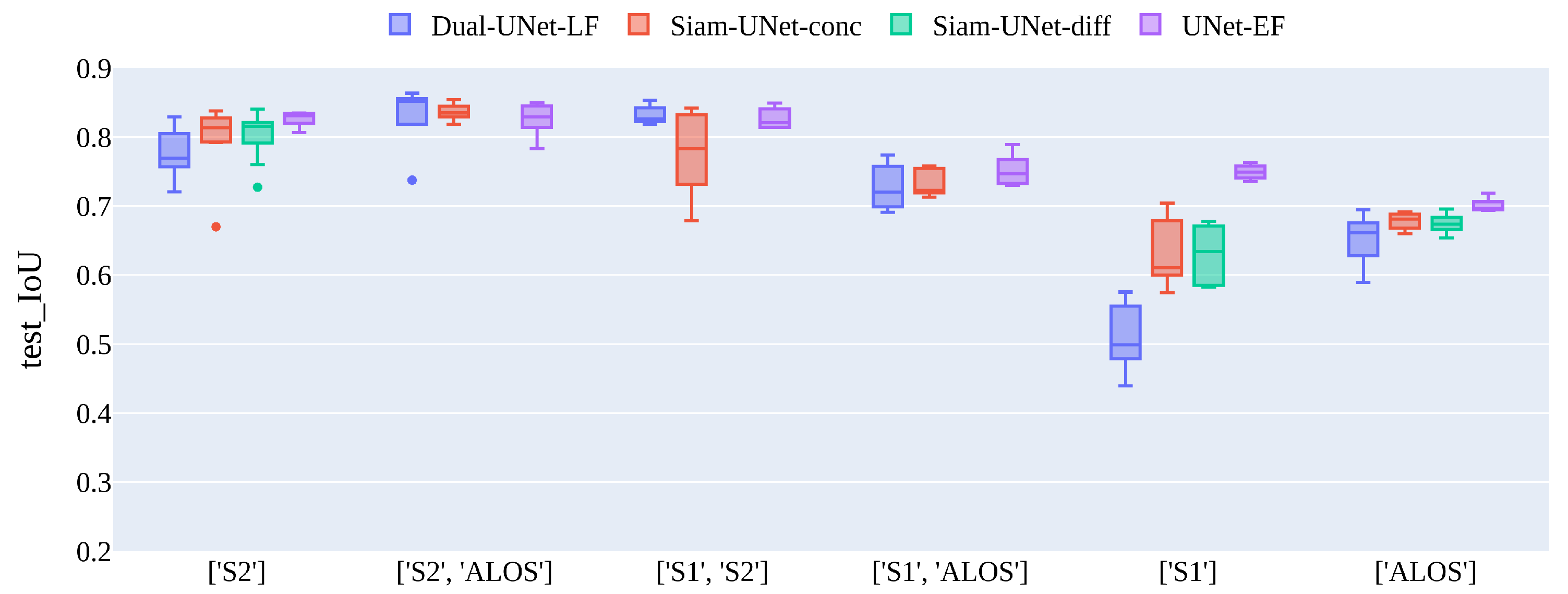

5.2. Single-Sensor vs. Multi-Sensor Fusion

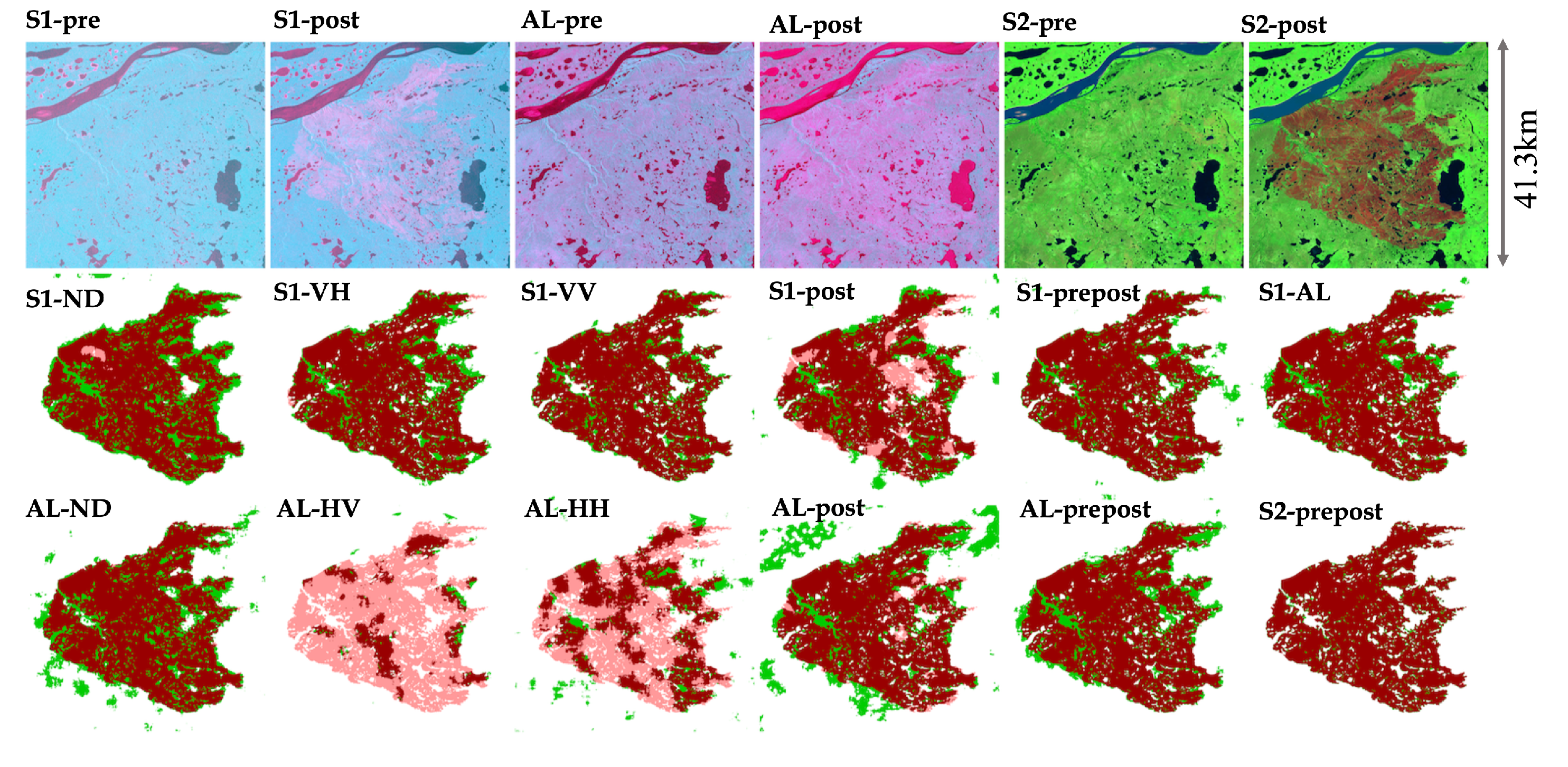

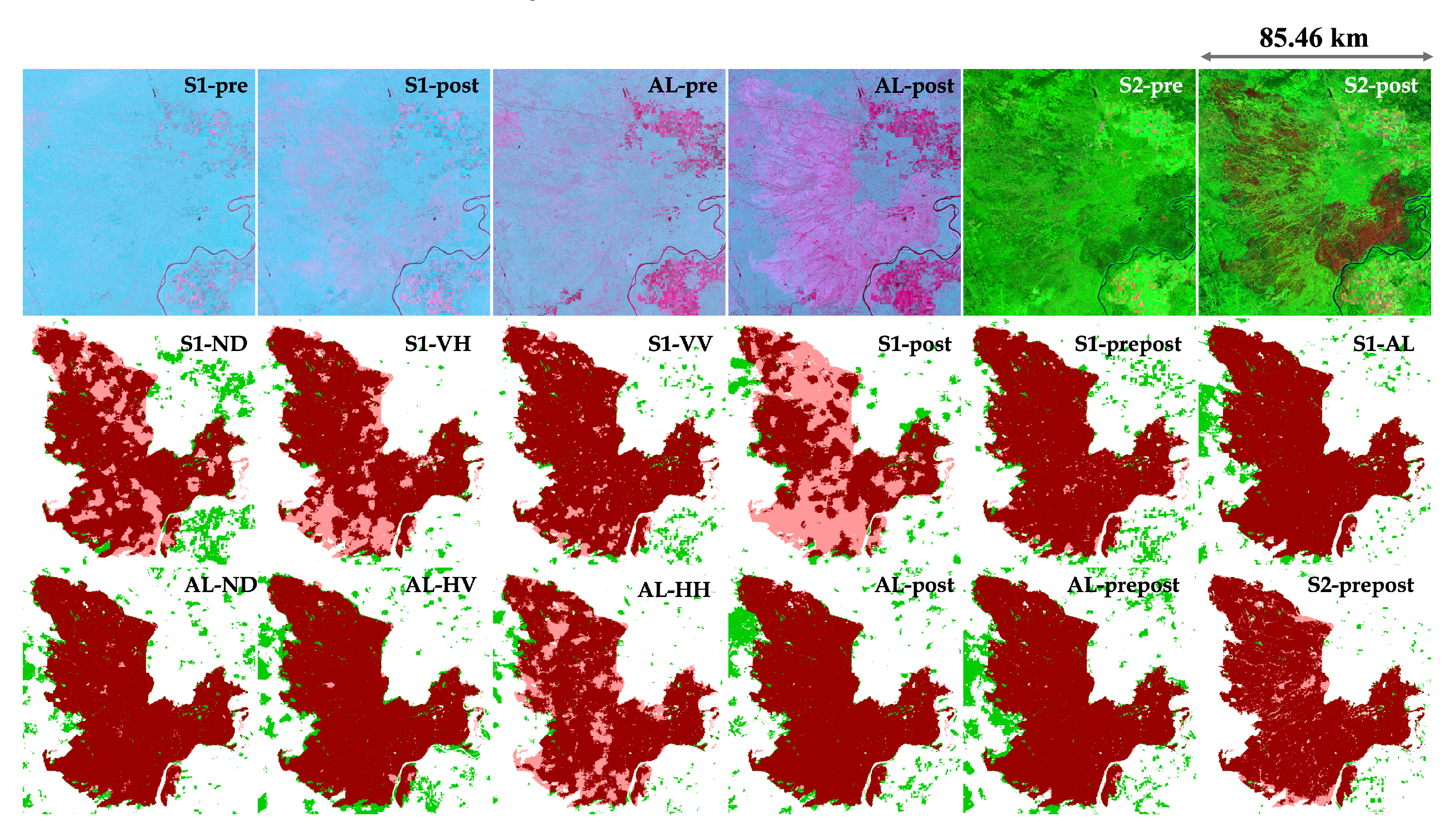

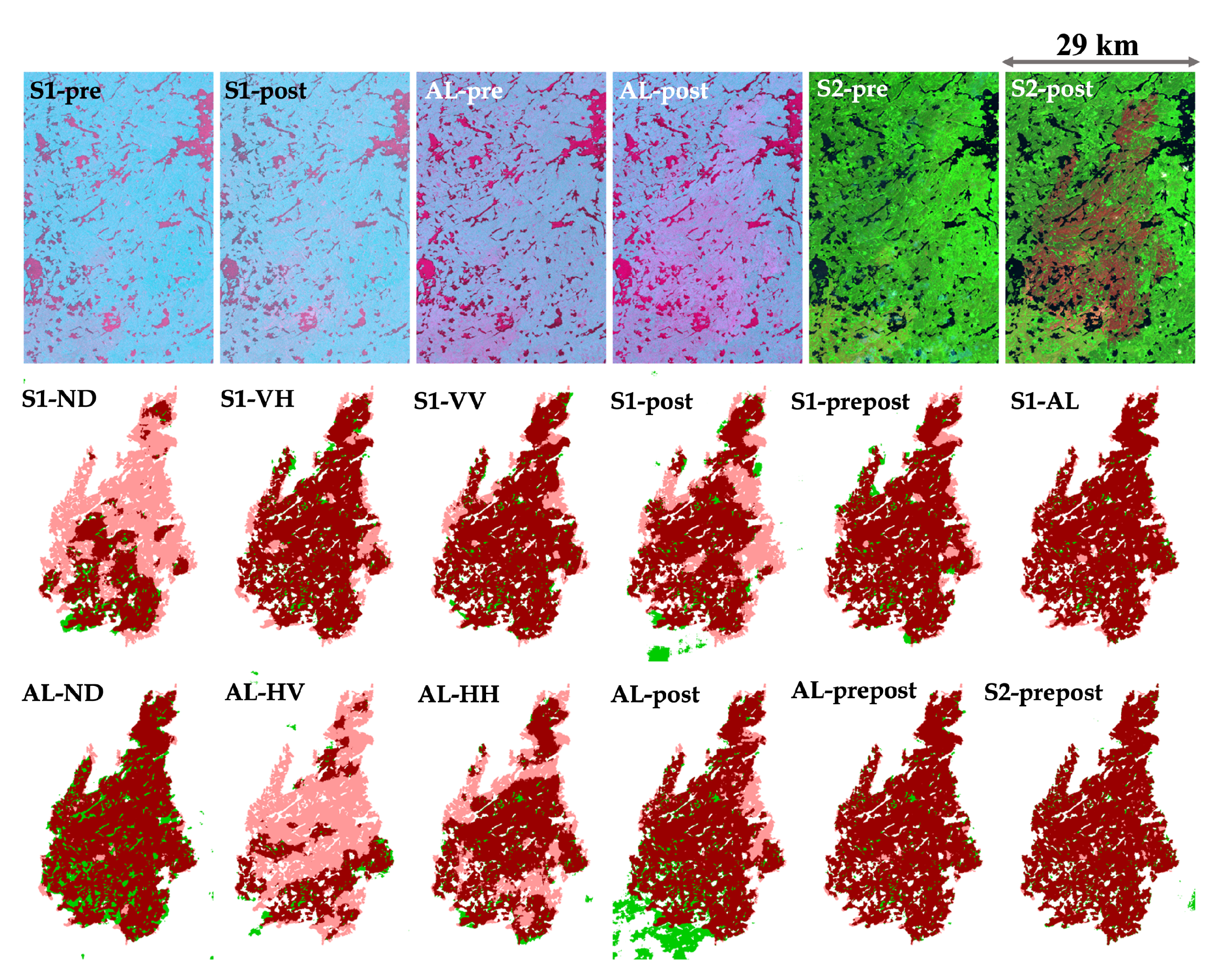

5.3. Visual Comparison

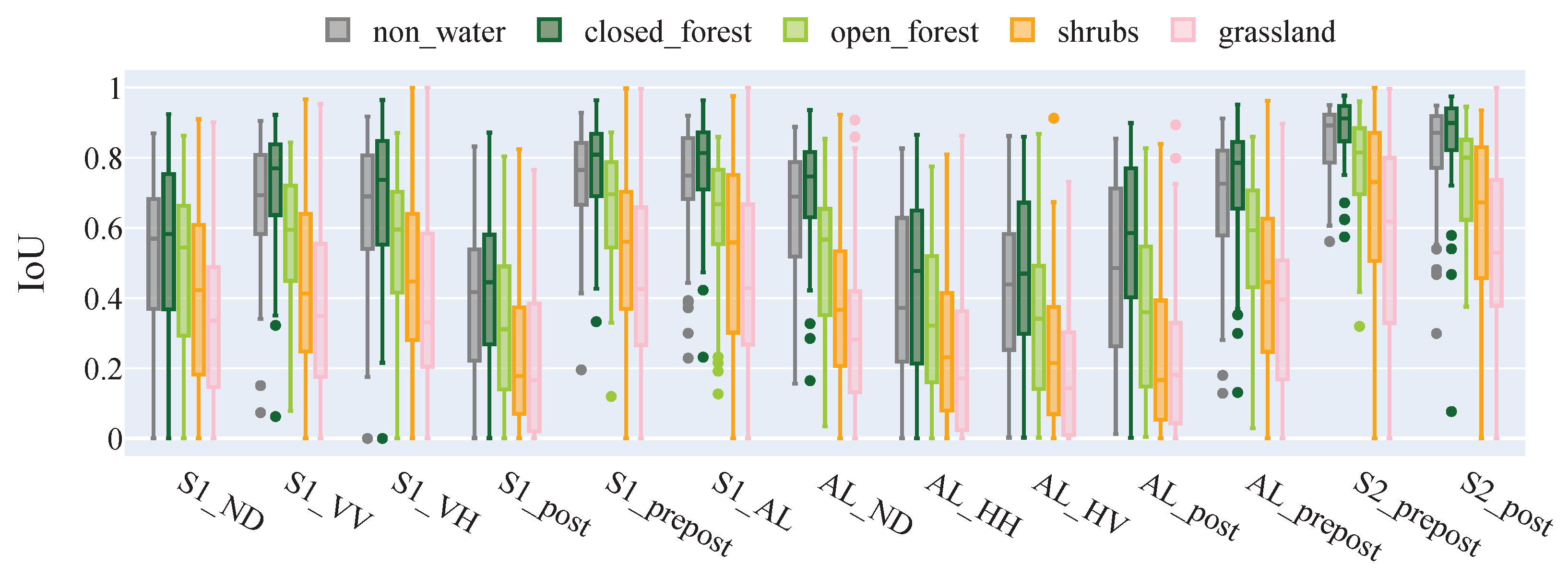

5.4. Land Cover-Specific Assessment

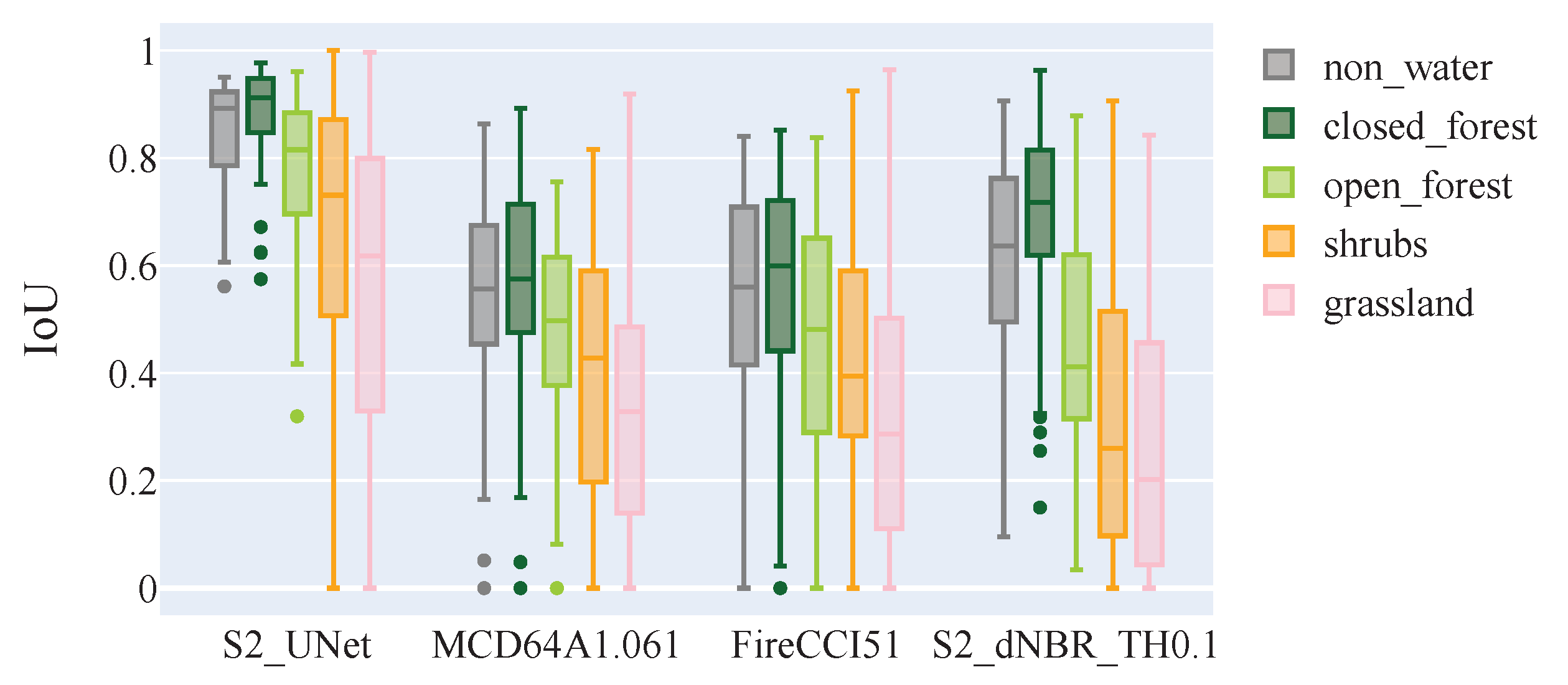

5.5. Comparison between Sentinel-2 and MODIS-Based Burned Area Products

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lizundia-Loiola, J.; Otón, G.; Ramo, R.; Chuvieco, E. A spatio-temporal active-fire clustering approach for global burned area mapping at 250 m from MODIS data. Remote Sens. Environ. 2020, 236, 111493. [Google Scholar] [CrossRef]

- Shi, K.; Touge, Y. Characterization of global wildfire burned area spatiotemporal patterns and underlying climatic causes. Sci. Rep. 2022, 12, 644. [Google Scholar] [CrossRef] [PubMed]

- Loehman, R.A.; Reinhardt, E.; Riley, K.L. Wildland Fire Emissions, Carbon, and Climate: Seeing the Forest and the Trees—A Cross-Scale Assessment of Wildfire and Carbon Dynamics in Fire-prone, Forested Ecosystems. For. Ecol. Manag. 2014, 317, 9–19. [Google Scholar] [CrossRef]

- Lasslop, G.; Coppola, A.I.; Voulgarakis, A.; Yue, C.; Veraverbeke, S. Influence of Fire on the Carbon Cycle and Climate. Curr. Clim. Chang. Rep. 2019, 5, 112–123. [Google Scholar] [CrossRef]

- Palm, E.C.; Suitor, M.J.; Joly, K.; Herriges, J.D.; Kelly, A.P.; Hervieux, D.; Russell, K.L.; Bentzen, T.W.; Larter, N.C.; Hebblewhite, M. Increasing Fire Frequency and Severity will Increase Habitat Loss for a Boreal Forest Indicator Species. Ecol. Appl. 2022, 32, e2549. [Google Scholar] [CrossRef] [PubMed]

- Hu, X.; Zhang, P.; Ban, Y. Large-scale burn severity mapping in multispectral imagery using deep semantic segmentation models. ISPRS J. Photogramm. Remote Sens. 2023, 196, 228–240. [Google Scholar] [CrossRef]

- Chuvieco, E.; Mouillot, F.; van der Werf, G.R.; San Miguel, J.; Tanase, M.; Koutsias, N.; García, M.; Yebra, M.; Padilla, M.; Gitas, I.; et al. Historical Background and Current Developments for Mapping Burned Area from Satellite Earth Observation. Remote Sens. Environ. 2019, 225, 45–64. [Google Scholar] [CrossRef]

- Roy, D.P.; Boschetti, L.; Trigg, S.N. Remote Sensing of Fire Severity: Assessing the Performance of the Normalized Burn Ratio. IEEE Geosci. Remote Sens. Lett. 2006, 3, 112–116. [Google Scholar] [CrossRef]

- Massetti, A.; Rüdiger, C.; Yebra, M.; Hilton, J. The Vegetation Structure Perpendicular Index (VSPI): A forest condition index for wildfire predictions. Remote Sens. Environ. 2019, 224, 167–181. [Google Scholar] [CrossRef]

- Katagis, T.; Gitas, I.Z. Assessing the accuracy of MODIS MCD64A1 C6 and FireCCI51 burned area products in Mediterranean ecosystems. Remote Sens. 2022, 14, 602. [Google Scholar] [CrossRef]

- Hansen, M.C.; Potapov, P.V.; Moore, R.; Hancher, M.; Turubanova, S.A.; Tyukavina, A.; Thau, D.; Stehman, S.V.; Goetz, S.J.; Loveland, T.R.; et al. High-resolution global maps of 21st-century forest cover change. Science 2013, 342, 850–853. [Google Scholar] [CrossRef]

- Tyukavina, A.; Potapov, P.; Hansen, M.C.; Pickens, A.H.; Stehman, S.V.; Turubanova, S.; Parker, D.; Zalles, V.; Lima, A.; Kommareddy, I.; et al. Global trends of forest loss due to fire from 2001 to 2019. Front. Remote Sens. 2022, 3, 825190. [Google Scholar] [CrossRef]

- Tanase, M.A.; Santoro, M.; de la Riva, J.; Pérez-Cabello, F.; Le Toan, T. Sensitivity of X-, C-, and L-band SAR backscatter to burn severity in Mediterranean pine forests. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3663–3675. [Google Scholar] [CrossRef]

- Tanase, M.A.; Santoro, M.; Wegmüller, U.; de la Riva, J.; Pérez-Cabello, F. Properties of X-, C- and L-band repeat-pass interferometric SAR coherence in Mediterranean pine forests affected by fires. Remote Sens. Environ. 2010, 114, 2182–2194. [Google Scholar] [CrossRef]

- Reiche, J.; Verhoeven, R.; Verbesselt, J.; Hamunyela, E.; Wielaard, N.; Herold, M. Characterizing tropical forest cover loss using dense Sentinel-1 data and active fire alerts. Remote Sens. 2018, 10, 777. [Google Scholar] [CrossRef]

- Engelbrecht, J.; Theron, A.; Vhengani, L.; Kemp, J. A simple normalized difference approach to burnt area mapping using multi-polarisation C-Band SAR. Remote Sens. 2017, 9, 764. [Google Scholar] [CrossRef]

- Belenguer-Plomer, M.A.; Tanase, M.A.; Fernandez-Carrillo, A.; Chuvieco, E. Burned area detection and mapping using Sentinel-1 backscatter coefficient and thermal anomalies. Remote Sens. Environ. 2019, 233, 111345. [Google Scholar] [CrossRef]

- Ban, Y.; Zhang, P.; Nascetti, A.; Bevington, A.R.; Wulder, M.A. Near real-time wildfire progression monitoring with Sentinel-1 SAR time series and deep learning. Sci. Rep. 2020, 10, 1322. [Google Scholar] [CrossRef] [PubMed]

- Zhang, P.; Ban, Y.; Nascetti, A. Learning U-Net without forgetting for near real-time wildfire monitoring by the fusion of SAR and optical time series. Remote Sens. Environ. 2021, 261, 112467. [Google Scholar] [CrossRef]

- Zhang, Q.; Ge, L.; Zhang, R.; Metternicht, G.I.; Du, Z.; Kuang, J.; Xu, M. Deep-Learning-Based Burned Area Mapping Using the Synergy of Sentinel-1&2 Data. Remote Sens. Environ. 2021, 264, 112575. [Google Scholar]

- Belenguer-Plomer, M.A.; Tanase, M.A.; Chuvieco, E.; Bovolo, F. CNN-based burned area mapping using radar and optical data. Remote Sens. Environ. 2021, 260, 112468. [Google Scholar] [CrossRef]

- Zhang, P.; Ban, Y. Unsupervised Geospatial Domain Adaptation for Large-Scale Wildfire Burned Area Mapping Using Sentinel-2 MSI and Sentinel-1 SAR Data. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS’2023), Pasadena, CA, USA, 16–21 July 2023; pp. 5742–5745. [Google Scholar]

- Loehman, R.A. Drivers of wildfire carbon emissions. Nat. Clim. Chang. 2020, 10, 1070–1071. [Google Scholar] [CrossRef]

- Zhang, P.; Hu, X.; Ban, Y. Wildfire-S1S2-Canada: A Large-Scale Sentinel-1/2 Wildfire Burned Area Mapping Dataset Based on the 2017–2019 Wildfires in Canada. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS’2022), Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 7954–7957. [Google Scholar]

- Hall, R.; Skakun, R.; Metsaranta, J.; Landry, R.; Fraser, R.; Raymond, D.; Gartrell, M.; Decker, V.; Little, J. Generating Annual Estimates of Forest Fire Disturbance in Canada: The National Burned Area Composite. Int. J. Wildland Fire 2020, 29, 878–891. [Google Scholar] [CrossRef]

- Claverie, M.; Ju, J.; Masek, J.G.; Dungan, J.L.; Vermote, E.F.; Roger, J.C.; Skakun, S.V.; Justice, C. The Harmonized Landsat and Sentinel-2 surface reflectance data set. Remote Sens. Environ. 2018, 219, 145–161. [Google Scholar] [CrossRef]

- Daudt, R.C.; Le Saux, B.; Boulch, A. Fully Convolutional Siamese Networks for Change Detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4063–4067. [Google Scholar]

- Hafner, S.; Nascetti, A.; Azizpour, H.; Ban, Y. Sentinel-1 and Sentinel-2 Data Fusion for Urban Change Detection Using a Dual Stream U-Net. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

| Satellite | Setting Name | Bands | Pre-Fire | Post-Fire |
|---|---|---|---|---|
| Sentinel-2 (S2) | post | B4, B8, B12 | | √ |
| | prepost | B4, B8, B12 | √ | √ |
| Sentinel-1 (S1) | VV | VV | √ | √ |
| | VH | VH | √ | √ |
| | ND | ND | √ | √ |
| | post | ND, VH, VV | | √ |
| | prepost | ND, VH, VV | √ | √ |
| ALOS-2 PALSAR-2 (AL) | HH | HH | √ | √ |
| | HV | HV | √ | √ |
| | ND | ND | √ | √ |
| | post | ND, HV, HH | | √ |
| | prepost | ND, HV, HH | √ | √ |
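Both Sentinel-2 settings include B8 (NIR) and B12 (SWIR), the two bands of the Normalized Burn Ratio (NBR) assessed by Roy et al. [8]. The baseline named S2_dNBR_TH0.1 in the land cover table suggests a differenced NBR (dNBR = NBR_pre − NBR_post) thresholded at 0.1; the sketch below follows that reading, which is an assumption inferred from the row name rather than a documented procedure. It assumes surface reflectance arrays of equal shape, and the function names are hypothetical.

```python
import numpy as np

def nbr(nir, swir):
    """Normalized Burn Ratio (NIR - SWIR) / (NIR + SWIR), e.g. S2 B8 and B12."""
    nir = np.asarray(nir, dtype=np.float64)
    swir = np.asarray(swir, dtype=np.float64)
    denom = nir + swir
    # Return 0 where NIR + SWIR == 0 (e.g. no-data pixels) instead of dividing by zero.
    return np.divide(nir - swir, denom, out=np.zeros_like(denom), where=denom != 0)

def dnbr_burned_mask(pre_b8, pre_b12, post_b8, post_b12, threshold=0.1):
    """Flag pixels as burned where dNBR = NBR_pre - NBR_post exceeds `threshold`."""
    dnbr = nbr(pre_b8, pre_b12) - nbr(post_b8, post_b12)
    return dnbr > threshold
```

Burning typically lowers NIR and raises SWIR reflectance, so NBR drops after a fire and dNBR becomes positive. A single global threshold is simple but insensitive to land cover, which is consistent with the weaker S2_dNBR_TH0.1 scores outside closed forest in the table.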

| Metric | Product | Non_Water | Closed_Forest | Open_Forest | Shrubs | Grassland | Others |
|---|---|---|---|---|---|---|---|
| IoU | S2_UNet | 0.89 | 0.91 | 0.82 | 0.73 | 0.62 | 0.67 |
| | MCD64A1.061 | 0.56 | 0.58 | 0.50 | 0.43 | 0.33 | 0.40 |
| | FireCCI51 | 0.56 | 0.60 | 0.48 | 0.39 | 0.29 | 0.38 |
| | S2_dNBR_TH0.1 | 0.64 | 0.72 | 0.41 | 0.26 | 0.20 | 0.25 |
| F1 | S2_UNet | 0.94 | 0.95 | 0.90 | 0.84 | 0.76 | 0.80 |
| | MCD64A1.061 | 0.71 | 0.73 | 0.66 | 0.60 | 0.49 | 0.57 |
| | FireCCI51 | 0.72 | 0.75 | 0.65 | 0.56 | 0.45 | 0.55 |
| | S2_dNBR_TH0.1 | 0.78 | 0.84 | 0.58 | 0.41 | 0.34 | 0.40 |
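One plausible way to obtain land cover-specific scores like those above is to count true positives (TP), false positives (FP), and false negatives (FN) of the burned class only inside each land cover mask. The sketch below assumes boolean prediction, reference, and mask arrays of equal shape; the function names and the masking scheme are illustrative assumptions, not the study's evaluation code. It also shows that, for a single confusion matrix, F1 = 2·IoU / (1 + IoU).

```python
import numpy as np

def iou_f1(pred, ref, valid=None):
    """IoU and F1 of the burned class, counted only where `valid` is True."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    valid = np.ones_like(ref) if valid is None else np.asarray(valid, dtype=bool)
    tp = int(np.sum(pred & ref & valid))
    fp = int(np.sum(pred & ~ref & valid))
    fn = int(np.sum(~pred & ref & valid))
    if tp + fp + fn == 0:
        # Neither prediction nor reference contains burned pixels here.
        return float("nan"), float("nan")
    iou = tp / (tp + fp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)  # algebraically equal to 2 * iou / (1 + iou)
    return iou, f1

def per_landcover_scores(pred, ref, landcover_masks):
    """Apply iou_f1 inside each land cover mask (dict: name -> boolean array)."""
    return {name: iou_f1(pred, ref, mask) for name, mask in landcover_masks.items()}
```

As a check against the table, an IoU of 0.89 gives F1 = 2(0.89)/1.89 ≈ 0.94, matching the S2_UNet Non_Water entries; small mismatches elsewhere can arise from rounding the IoU to two decimals before conversion.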

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, P.; Hu, X.; Ban, Y.; Nascetti, A.; Gong, M. Assessing Sentinel-2, Sentinel-1, and ALOS-2 PALSAR-2 Data for Large-Scale Wildfire-Burned Area Mapping: Insights from the 2017–2019 Canada Wildfires. Remote Sens. 2024, 16, 556. https://doi.org/10.3390/rs16030556
