2.1. Maximum Rayleigh Altitude

The MRA characterizes the highest altitude at which MUA-LIDAR can detect Rayleigh echo signals from the Earth’s atmosphere. Several factors influence this altitude, including weather conditions, the LIDAR power, the telescope receiving area, and the status of the transmitter–receiver. When the MUA-LIDAR parameters remain constant, the MRA is predominantly influenced by low-altitude weather. This is because the outgoing laser beam and the returning high-altitude echo both traverse the lower atmosphere, so the signal crosses it twice. Previous research has indicated that a higher MRA enhances the detection of metal layers in the middle and upper atmosphere [15]. In addition, the MRA can be conveniently inferred from metal-layer LIDAR data by simply excluding data at higher altitudes, making it an important evaluation index for the operational status of metal-layer LIDAR systems [16].

Typically, the MRA is inferred from the signal-to-noise ratio of the photon-counted raw echo signal [17]. The atmospheric backscatter signal of the laser is weak, which makes it susceptible to noise interference and can lead to significant errors in the MRA calculation. The echo signals must therefore be processed when the photon counting method is used, so as to minimize these errors.

As illustrated in Equation (1), the signal-to-noise ratio (SNR) is computed as the ratio of the echo-signal photon counts ($S$) to the photon noise arising from the background:

$$\mathrm{SNR}=\frac{S}{\sqrt{N_s}}=\frac{N_s-N_b}{\sqrt{N_s}},\tag{1}$$

where $N_s$ represents the number of photons in the detected altitude band and $N_b$ represents the number of photons in the background altitude band. Both $N_s$ and $N_b$ can be retrieved directly from the LIDAR echo signal. With the photon counting method, the noise follows a Poisson distribution, so the standard deviation of a count $N$ is $\sqrt{N}$.
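A minimal Python sketch of the per-bin SNR calculation follows. It assumes that Equation (1) takes the common photon-counting form SNR = (N_s − N_b)/√N_s. It also assumes that N_b is estimated as the mean count per bin in a signal-free band above a hypothetical altitude `z_bg_m`. Both the form and the band choice are illustrative assumptions, not the authors' settings.

```python
import numpy as np


def background_per_bin(counts, altitudes_m, z_bg_m):
    """Mean photon count per bin in a signal-free band above z_bg_m (hypothetical choice)."""
    counts = np.asarray(counts, dtype=float)
    altitudes_m = np.asarray(altitudes_m, dtype=float)
    return counts[altitudes_m >= z_bg_m].mean()


def snr_profile(n_s, n_b):
    """Per-bin SNR = (N_s - N_b) / sqrt(N_s), i.e. Poisson noise on the total count N_s.

    Bins with zero counts are assigned SNR = 0 instead of dividing by zero.
    """
    n_s = np.asarray(n_s, dtype=float)
    safe = np.where(n_s > 0, n_s, 1.0)  # avoid sqrt(0) in the denominator
    snr = (n_s - n_b) / np.sqrt(safe)
    return np.where(n_s > 0, snr, 0.0)


# Two bins with 100 and 25 total counts and 19 background counts per bin:
# (100 - 19) / 10 = 8.1 and (25 - 19) / 5 = 1.2
example = snr_profile([100, 25], 19.0)
```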

In our MRA calculation, we defined the effective detection range as the altitude region in which the SNR exceeds 3 [18,19]. Under this criterion, the signal is well above the noise level, so the measurements show low variability and can be reproduced under different experimental conditions. The collected data were filtered, denoised, smoothed with a moving average, and downsampled. We first applied the SNR criterion to exclude noise-dominated data. We then applied the Savitzky–Golay filter, which is based on local polynomial least-squares fitting in the time domain. This filter is widely used to smooth and denoise data streams because it removes noise while largely preserving the shape and width of signal features [20]. Next, a moving average was computed by taking 12 consecutive data points, removing the maximum and minimum values, and averaging the remaining points [21]. This operation reduces the influence of individual data points, smooths irregular fluctuations, and suppresses the effect of outliers on the results. The data collection system generated roughly two data points per minute, and downsampling was used to reduce the data volume and improve computational efficiency. Together, these processing steps minimized noise interference in the MRA calculations.

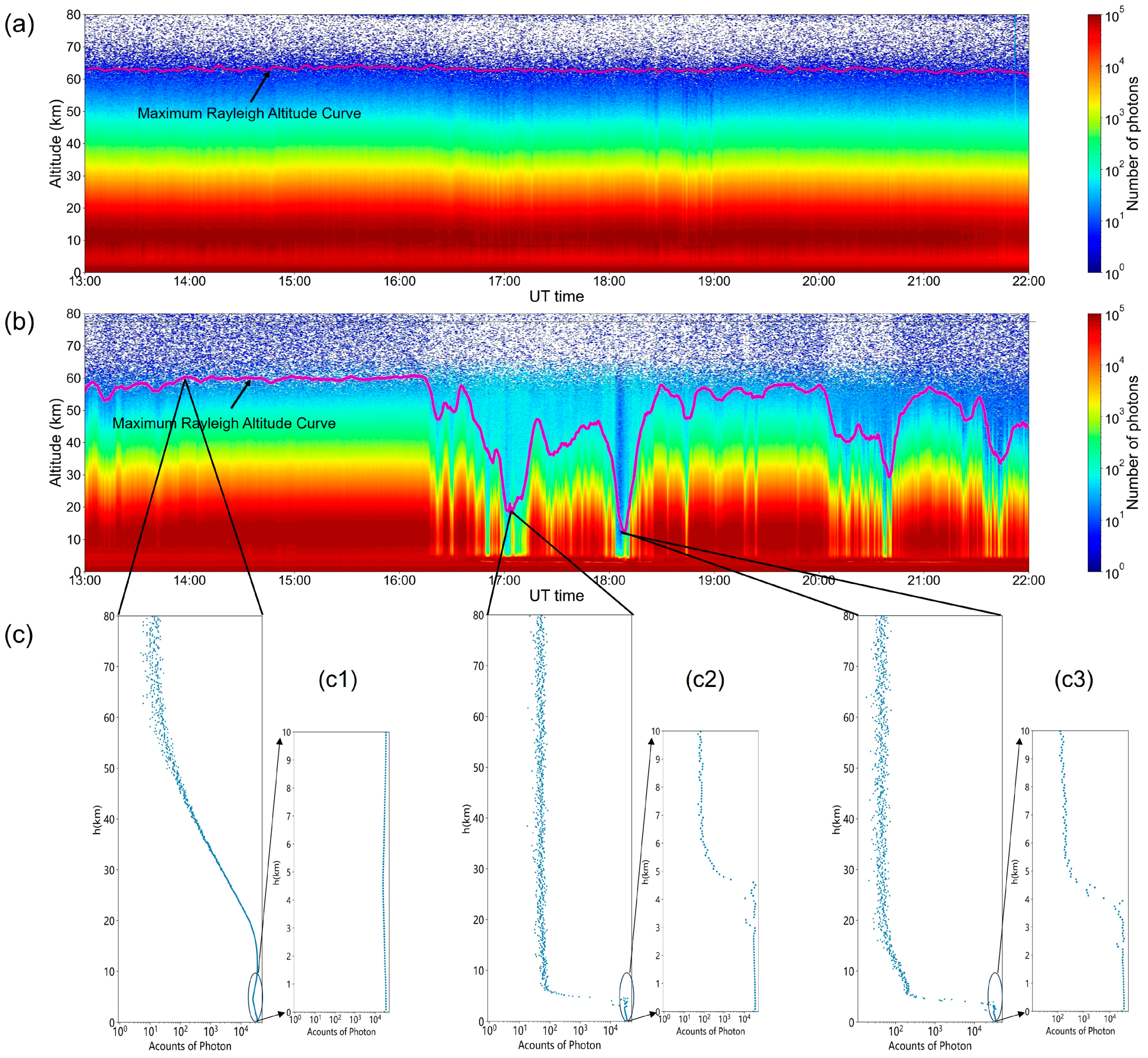

As shown in Figure 1, a large volume of MUA-LIDAR echo-signal data collected at each station was processed with this procedure.

Figure 1a,b illustrate the trends in the echo-signal photon counts and the MRA at the Beijing Yanqing station on the nights of 8 January 2023 and 16 January 2023, respectively. These MRA cases, recorded under fair and partly cloudy night weather, were selected for further analysis. Fair weather prevailed during the 16 January observation and did not affect the MUA-LIDAR observations. Consequently, both the echo-signal photon counts and the MRA remained stable throughout the night without significant fluctuations. This is favorable for retrieving temperature, wind speed, and other parameters in the MUA. Conversely, during the 8 January observation, the MRA of the MUA-LIDAR system fluctuated significantly for part of the night owing to interference from clouds. A lower and more variable MRA reduces the usefulness of the data for scientific research.

Figure 1c shows the echo-signal photon counts versus detection altitude at selected moments from Figure 1b. At the moment shown in Figure 1(c1), the weather was fair, the echo-signal photon counts were unaffected, and the MRA reached 63,014 m. The adverse effect of clouds on the MRA is evident in Figure 1(c2). There, the echo-signal photon counts peak at 4512 m, indicating interference from a cloud layer approximately 288 m thick, and the MRA was only 20,985 m. The moment shown in Figure 1(c3) was also affected by clouds. The photon counts peak at 3936 m, indicating a cloud layer about 288 m thick, and the MRA was merely 15,331 m.

To summarize, weather has a significant impact on the MRA of MUA-LIDAR systems. The MRA is a critical indicator that reflects current weather conditions, the LIDAR power, and the matching status of the transmitter–receiver. Using the proposed methodology for MRA calculation, we found that the MRA remained generally stable throughout the night under clear weather and a constant laser state. Peak-to-peak fluctuations stayed below 2 km within short intervals (30 min). When weather conditions change, the MRA changes accordingly, demonstrating high real-time sensitivity. This enables an accurate assessment of the MRA status of the MUA-LIDAR system.
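The stability criterion quoted above (peak-to-peak fluctuation below 2 km within 30 min) can be checked with a rolling window. This sketch assumes an MRA series at a 2-min cadence. A hypothetical 15-sample window therefore stands in for roughly 30 min.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def rolling_peak_to_peak(mra_m, window=15):
    """Max minus min of the MRA within each run of `window` consecutive samples."""
    windows = sliding_window_view(np.asarray(mra_m, dtype=float), window)
    return windows.max(axis=1) - windows.min(axis=1)


def is_stable(mra_m, window=15, limit_m=2000.0):
    """True if every window's peak-to-peak MRA fluctuation stays below limit_m."""
    return bool(np.all(rolling_peak_to_peak(mra_m, window) < limit_m))
```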

2.2. Characteristics of Night Sky Images

Typically, MUA-LIDAR systems employ high-power lasers and large-aperture telescopes to capture faint echo light signals from the MUA. However, MUA-LIDAR systems cannot operate normally under adverse weather conditions such as rain, snow, strong winds, or dust storms. The intensity of rain and snow is typically measured as the amount of precipitation per unit time. Strong winds are defined as winds with a force greater than 5 on the Beaufort scale. Dust and floating-dust weather can be evaluated using the air quality index, which reflects the concentration of atmospheric particulate matter with diameters of 10 microns or less (PM10), together with humidity and wind speed [22,23]. Even in clear or partly cloudy weather, aerosols and haze significantly impair these systems’ ability to detect the MUA. Clear weather is defined as a cloud cover of 0–10%, and partly cloudy weather as a cloud cover of 10–30% [24]. These atmospheric constituents, together with other factors near the LIDAR station, attenuate the transmitted laser energy and generate substantial background optical noise. They also further weaken the already faint echo light signals from the MUA before these reach the telescope. This reduces the optical transmittance of the LIDAR system and diminishes its capacity to monitor the upper atmosphere. Obtaining real-time weather information at LIDAR stations is therefore a challenge that must be addressed to use MUA-LIDAR technology effectively. As depicted in Figure 2, the 0–10 km low-altitude range is the weather region in which clouds, haze, high winds, and precipitation (including rain and snow) occur. These atmospheric conditions significantly affect the safety and operational efficiency of MUA-LIDAR systems [25,26].
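The weather thresholds above can be encoded directly. In this sketch, a cloud cover of exactly 10% is assigned to "clear", because the text's two ranges share that boundary. The label for cover above 30% is hypothetical. Wind speed is converted to a Beaufort number with the standard empirical relation v = 0.836·B^(3/2) m/s, which the text does not specify.

```python
def classify_sky(cloud_cover_pct):
    """Sky class from cloud cover in percent: clear 0-10, partly cloudy 10-30."""
    if not 0.0 <= cloud_cover_pct <= 100.0:
        raise ValueError("cloud cover must be within 0-100 %")
    if cloud_cover_pct <= 10.0:
        return "clear"
    if cloud_cover_pct <= 30.0:
        return "partly cloudy"
    return "cloudy"  # hypothetical label: cover above 30 % is not classified in the text


def beaufort_force(wind_speed_ms):
    """Beaufort number from wind speed via the empirical relation v = 0.836 * B**1.5 m/s."""
    return round((wind_speed_ms / 0.836) ** (2.0 / 3.0))


def is_strong_wind(wind_speed_ms):
    """Strong wind as defined in the text: Beaufort force greater than 5."""
    return beaufort_force(wind_speed_ms) > 5
```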

Far above this 10 km weather layer, the numerous stars of the Milky Way are highly stable light sources whose brightness changes little over short periods, so astronomers can assign each star a fixed brightness classification. Seen from the Earth’s surface, however, the apparent brightness of stars is altered by the atmosphere: turbulence makes them twinkle, while clouds and aerosols attenuate their light. On clear, cloudless nights, aerosols and haze scatter and absorb little starlight, so stars appear brighter and are easier to observe. Under cloudy or otherwise adverse conditions (including aerosols and haze), atmospheric scattering and absorption increase. Starlight weakens, and stars appear distorted or disappear from view altogether, which reduces both the number of observable stars and their overall brightness. Based on this principle, an astronomical camera can be installed at the LIDAR station to capture the distribution of stars. The number and brightness of the stars can then be extracted and compared with star charts to determine local weather conditions. Predicting the MRA of the MUA-LIDAR system from these data makes it possible to assess objectively the impact of local weather on the LIDAR’s observation capability. This enables real-time monitoring and forecasting of the MRA and weather conditions, providing a valuable means of analyzing MUA-LIDAR performance.

During MUA-LIDAR observation, scattered light originates primarily from the 0–60 km range, fluorescence predominantly from the 80–150 km range, and starlight from beyond the Earth’s atmosphere. All of these components must pass through the lower atmosphere before reaching the ground. The state of the lower atmosphere therefore governs both the reception of scattered light and fluorescence and the resolution of starlight, which is what allows distinctive celestial features to be captured. We placed a quartz spherical hood over the wide-angle camera. Quartz hoods offer a wide spectral transmission range and good resistance to wear. The material transmits visible light and other wavelengths of electromagnetic radiation while withstanding external pressure, shock, and vibration, which makes it well suited to long-term stable observation. To capture night sky images consistently, the wide-angle camera parameters must be configured appropriately. As described in Table 1, the camera’s shooting interval was set to 2 min. The exposure time, gain coefficients, and other parameters were fixed to ensure clear, real-time acquisition of night sky images.

We selected approximately 300,000 images from multiple MUA-LIDAR stations to establish a dataset for recognizing the stars present in night sky images. Morphological recognition, template matching, and spot detection methods were employed to identify the stars in these images.

Morphological recognition algorithms extract features such as star size and shape through operations such as dilation and erosion. In this study, the night sky images were first converted to grayscale, which retains star brightness information while reducing computational effort. Gaussian denoising was then applied to reduce the impact of noise on subsequent processing. Gaussian filtering is a widely used smoothing method that replaces each pixel with a Gaussian-weighted average of its neighborhood, reducing noise interference [27]. A fundamental morphological operation, dilation followed by erosion, was then performed. Dilation expanded the boundaries of the stars, making them more continuous and complete. Erosion then removed slight noise and irregular edges, which aided the extraction of the shape and structural features of each star. Finally, the image was binarized to separate the stars from the background. This makes the star regions appear distinctly white and facilitates subsequent contour extraction. With these steps, the algorithm performed well at recognizing stars in night sky images with large star areas and scattered star distributions.
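The following is a minimal sketch of the morphological pipeline (grayscale conversion, Gaussian denoising, dilation followed by erosion, binarization) using `scipy.ndimage`. The following are assumptions rather than the authors' settings:

- the library choice;
- σ = 1;
- the 3 × 3 structuring element;
- the binarization threshold of 60.

Connected-component labelling stands in for contour extraction.

```python
import numpy as np
from scipy import ndimage


def to_gray(rgb):
    """Luma conversion with ITU-R BT.601 weights (keeps star brightness information)."""
    return np.asarray(rgb, dtype=float)[..., :3] @ np.array([0.299, 0.587, 0.114])


def morphological_stars(rgb, sigma=1.0, se_size=3, threshold=60.0):
    """Grayscale -> Gaussian denoising -> dilation then erosion -> binarization -> labelling."""
    gray = to_gray(rgb)
    smooth = ndimage.gaussian_filter(gray, sigma=sigma)             # Gaussian denoising
    dilated = ndimage.grey_dilation(smooth, size=(se_size, se_size))
    closed = ndimage.grey_erosion(dilated, size=(se_size, se_size))
    binary = closed > threshold                                     # stars white, sky black
    labels, n_stars = ndimage.label(binary)
    centers = ndimage.center_of_mass(binary.astype(float), labels, list(range(1, n_stars + 1)))
    return n_stars, centers
```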

Template-matching algorithms are more efficient for night sky images with relatively stable attributes, such as consistent star shapes and sizes. The process involves selecting a template image, converting the night sky image to grayscale, performing template matching, and thresholding. Stars are recognized as regions of the night sky image that are similar to the template image, and these steps allow faster star detection. However, the templates must be regularly revised and fine-tuned to maintain recognition accuracy across different scenes and targets. This makes the algorithm more complex and more difficult to use than morphological recognition.
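Template matching can be sketched with normalized cross-correlation. This scores every image window by its similarity to the template and is followed by thresholding and local-maximum selection. The Gaussian star template, its size and width, and the 0.8 score threshold are hypothetical. The vectorized window view trades memory for brevity, so the sketch is only suitable for small images.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view
from scipy import ndimage


def gaussian_template(size=7, sigma=1.2):
    """Hypothetical star template: a 2-D Gaussian point-spread function."""
    r = np.arange(size) - size // 2
    yy, xx = np.meshgrid(r, r, indexing="ij")
    return np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))


def match_stars(gray, template, threshold=0.8):
    """Normalized cross-correlation matching, thresholding, and local-maximum picking.

    Returns (row, col) image coordinates of the centres of matching windows.
    """
    gray = np.asarray(gray, dtype=float)
    h, w = template.shape
    t = template - template.mean()
    windows = sliding_window_view(gray, (h, w))
    wz = windows - windows.mean(axis=(-2, -1), keepdims=True)
    num = (wz * t).sum(axis=(-2, -1))
    den = np.sqrt((wz**2).sum(axis=(-2, -1)) * (t**2).sum())
    score = np.divide(num, den, out=np.zeros_like(num), where=den > 0)  # flat windows -> 0
    peaks = (score >= threshold) & (score == ndimage.maximum_filter(score, size=(h, w)))
    return np.argwhere(peaks) + np.array([h // 2, w // 2])
```

An isolated hot pixel correlates only weakly with a broad Gaussian template, so it tends to fall below the threshold. This sketch is therefore not a model of the misclassifications reported for Figure 3c.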

Both morphological recognition and template-matching algorithms are susceptible to interference. For instance, dark spots of high, fixed intensity and thermal noise of low, random intensity within the camera can impede the accurate identification of star features. These problems lead to higher misclassification rates, which ultimately reduce the accuracy of star recognition.

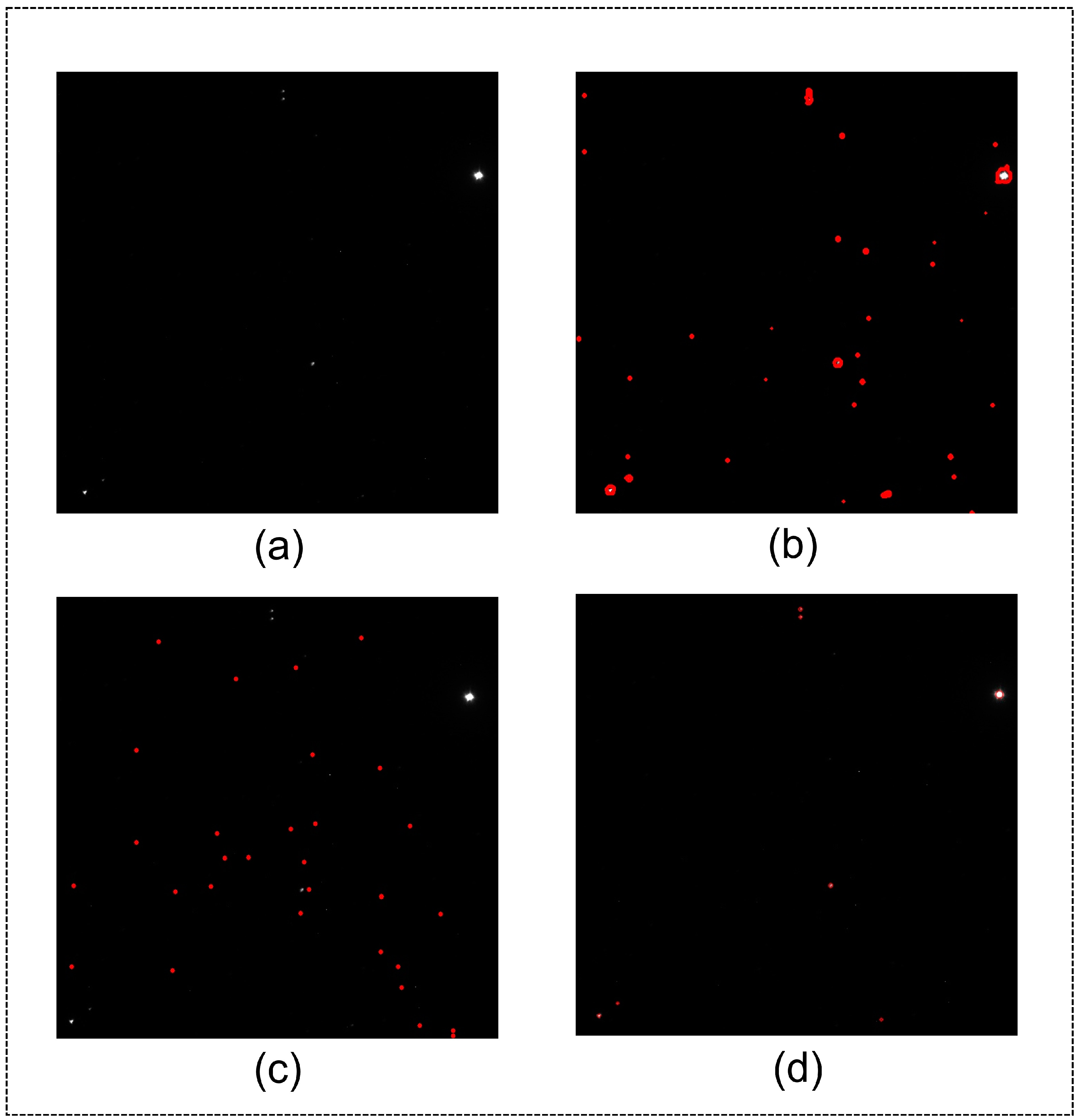

Figure 3a shows a section of the complete night sky image captured above the Yanqing station. The images were processed using three algorithms (morphological recognition, template matching, and spot detection), with identified stars marked in red.

Figure 3b shows the results of the morphological recognition algorithm. It correctly identified stars with larger areas, but it also incorrectly labeled certain interfering points as stars, making the identification inaccurate.

Figure 3c shows the results after employing the template-matching algorithm. The algorithm’s reliance on a specific template choice led to the misclassification of noise points as stars, compromising the accuracy of the recognition.

Figure 3d shows the results of the spot detection algorithm. Unlike the two preceding algorithms, the spot detection algorithm detects variations in brightness within the image to identify point targets and thereby recognize the stars in the night sky image (Figure 4).

This process comprised several steps: threshold processing, connected-component extraction, spot grouping, spot calculation, and feature filtering. In the thresholding step, a starting threshold, a step threshold, and a termination threshold were defined.

- **Starting threshold.** To ensure effective detection of stars of varying sizes and brightness, this was set to 0. Detection therefore began at the lowest possible threshold, and no relatively small or faint stars were missed. This setting keeps the algorithm sensitive across the full range of star brightness.
- **Step threshold.** To improve efficiency, this was set to 3, avoiding unnecessary calculations and detections. A lower threshold produces more candidate spots, whereas a higher threshold produces fewer. Increasing the step reduces computation while retaining the ability to detect brighter spots.
- **Termination threshold.** This was set to 255 so that detection covered the entire brightness range of the image. This setting allows the algorithm to adapt to brightness and contrast changes between images, improving its adaptability and robustness.

Connected components were then extracted at each threshold level, and their centers were computed. Spot grouping requires a minimum distance between spots. Trial and error showed that reducing this distance can cause over-segmentation, splitting larger spots into several smaller ones. Increasing it, by contrast, can cause small spots to be ignored or incorrectly filtered out. To improve the reliability and accuracy of spot detection, we set the minimum spot distance to 4 pixels. Candidate spots closer together than this distance were treated as a single spot.
The estimated center and radius of each spot describe its location and size. The center locates the spot in the image and allows it to be tracked, enabling further analysis of its characteristics, trajectory, or other relevant information. The size describes the spot’s scale and morphology. This is important for distinguishing spots of different sizes, analyzing changes in their shape, and eliminating the effect of noise. Finally, the spots were filtered based on color, area, roundness, inertia ratio, and convexity. Through spot grouping, spot calculation, and feature filtering, this approach effectively mitigated interference from dark spots and thermal noise in the night sky images and identified the stars accurately. The approach is also controllable, robust, tunable, and scalable, which makes it advantageous.
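The spot-detection steps map closely onto the parameters of OpenCV’s `SimpleBlobDetector` (`minThreshold`, `thresholdStep`, `maxThreshold`, `minDistBetweenBlobs`), although the text does not name a library. The sketch below is a simplified re-implementation with `scipy.ndimage`. It uses the thresholds (0, 3, 255) and the minimum spot distance (4 pixels) given above. It keeps only bright spots and filters by area and inertia ratio only; roundness and convexity filters are omitted. Its area limits, inertia cutoff, and repeatability count are hypothetical.

```python
import numpy as np
from scipy import ndimage


def _inertia_ratio(ys, xs):
    """Minor/major eigenvalue ratio of the pixel-coordinate covariance (1 = round, 0 = line)."""
    if ys.size < 2:
        return 1.0
    ev = np.linalg.eigvalsh(np.cov(np.vstack([ys, xs])))
    return 1.0 if ev[1] <= 0 else float(ev[0] / ev[1])


def detect_spots(gray, t_start=0, t_step=3, t_stop=255, min_dist=4.0,
                 min_area=2, max_area=200, min_inertia=0.3, min_repeat=2):
    """Multi-threshold bright-spot detection; returns a list of (row, col, radius)."""
    gray = np.asarray(gray, dtype=float)
    groups = []  # each group collects the same spot seen at several thresholds
    for t in range(t_start, t_stop, t_step):
        labels, _ = ndimage.label(gray > t)             # connected bright components
        for idx, sl in enumerate(ndimage.find_objects(labels), start=1):
            if sl is None:
                continue
            ys, xs = np.nonzero(labels[sl] == idx)
            area = ys.size
            if not min_area <= area <= max_area:         # area filter
                continue
            ys = ys + sl[0].start
            xs = xs + sl[1].start
            if _inertia_ratio(ys, xs) < min_inertia:     # elongated -> not a star
                continue
            center = np.array([ys.mean(), xs.mean()])
            for g in groups:                              # spot grouping by minimum distance
                if np.linalg.norm(g["center"] - center) < min_dist:
                    g["centers"].append(center)
                    g["areas"].append(area)
                    g["center"] = np.mean(g["centers"], axis=0)
                    break
            else:
                groups.append({"center": center, "centers": [center], "areas": [area]})
    spots = []
    for g in groups:
        if len(g["centers"]) >= min_repeat:              # must persist over several levels
            radius = float(np.sqrt(np.median(g["areas"]) / np.pi))
            spots.append((float(g["center"][0]), float(g["center"][1]), radius))
    return spots
```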

Our subsequent analysis relied on the precise identification of stars in night sky images.

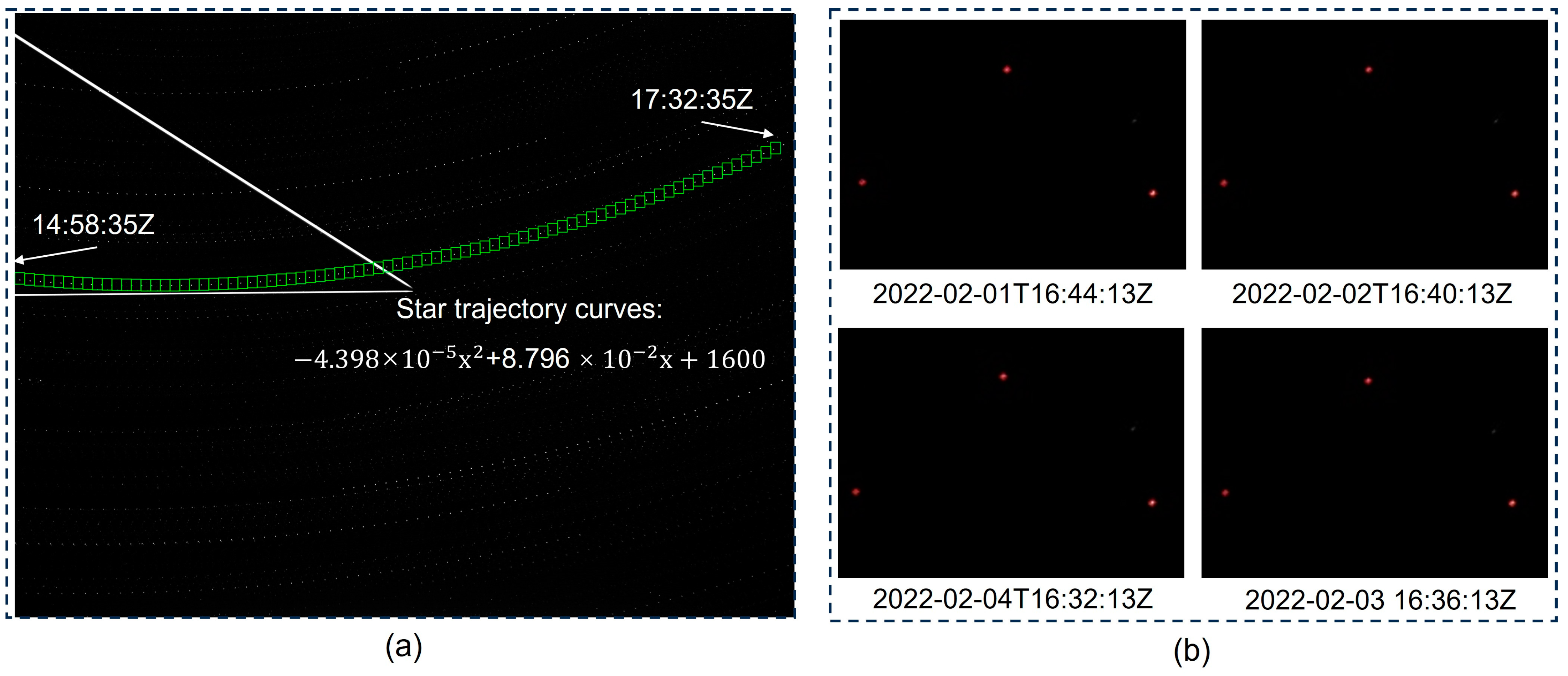

Figure 5a shows a composite of the gray values of the night sky images captured at the Yanqing station between 22:58 local time on 25 February 2022 and 01:32:35 on 26 February 2022. A coordinate system was established with the upper-left corner of the image as the origin. The trajectory of a star was then fitted so that its position within the camera’s field of view could be tracked at any moment. The camera’s field of view is fixed and the Earth rotates at a nearly constant angular rate. As a result, a star near the center of the wide-angle camera’s field of view moves at approximately 35 pixels per minute horizontally and approximately 4 pixels per minute vertically, and it takes at least 2 h to leave the field of view. Further results are presented in Figure 5b, where night sky images corresponding to specific moments on four consecutive clear nights from 1 to 3 February 2022 were selected for demonstration. Setting weather conditions aside, a given star observed above a LIDAR station through a fixed wide-angle camera reappears at the same position in the field of view approximately 3 min 56 s earlier each day. This has two causes. First, the Earth’s rotation period relative to the stars (the sidereal day) is 23 h 56 min 4 s, about 3 min 56 s shorter than the 24 h solar day, so the stars return to the same position slightly earlier each day. Second, the Earth rotates at a nearly constant rate of about 15° per hour, so the apparent motion of the stars is regular. Using these two properties, the positions of the stars above the LIDAR station can be determined at any time during the night. This provides a theoretical basis for comparing the area, brightness, and other characteristics of the same stars on different dates.
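The timing argument reduces to simple arithmetic. This sketch computes the daily advance from the sidereal day quoted above. It also extrapolates a star’s pixel position using the approximate drift rates reported for Figure 5a. The linear drift model, its sign convention, and the helper names are assumptions, and the model only holds near the image center.

```python
SOLAR_DAY_S = 24 * 3600                   # 86 400 s
SIDEREAL_DAY_S = 23 * 3600 + 56 * 60 + 4  # 86 164 s: rotation period relative to the stars


def daily_advance_s():
    """How much earlier (clock time) a star returns to the same position each day."""
    return SOLAR_DAY_S - SIDEREAL_DAY_S


def rotation_rate_deg_per_hour():
    """Earth's rotation rate relative to the stars."""
    return 360.0 / (SIDEREAL_DAY_S / 3600.0)


def reappearance_clock_time_s(t0_s, n_days):
    """Time of day (s after midnight) at which a star seen at t0_s returns n_days later."""
    return (t0_s - n_days * daily_advance_s()) % SOLAR_DAY_S


def predict_pixel(x0, y0, minutes, vx=35.0, vy=4.0):
    """Hypothetical linear drift near the image centre; vx, vy in pixels per minute."""
    return x0 + vx * minutes, y0 + vy * minutes
```

For example, a star seen at 22:00:00 returns to the same pixel at 21:56:04 the next night, and the rotation rate evaluates to about 15.04° per hour.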

We selected several night sky images taken at corresponding moments on different nights for analysis. We identified the star positions within the images and applied a consistent grayscale threshold. Here, “star area” is the number of pixels whose gray values exceed this threshold, and “star brightness” is the sum of the gray values of those pixels. Over many nights, a large volume of data on the number, area, and brightness of the stars above the LIDAR station was accumulated. This dataset was then used to predict the MRA of the MUA-LIDAR system.
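Following the definitions above, the three image features can be computed from a grayscale image and a fixed threshold. This sketch counts stars as connected bright regions, which is an assumption; in the study, stars were identified with the spot-detection algorithm.

```python
import numpy as np
from scipy import ndimage


def star_features(gray, threshold):
    """Star count, star area, and star brightness for one night sky image."""
    gray = np.asarray(gray, dtype=float)
    mask = gray > threshold               # pixels brighter than the fixed grayscale threshold
    _, count = ndimage.label(mask)        # connected bright regions counted as stars
    area = int(mask.sum())                # "star area": number of pixels above the threshold
    brightness = float(gray[mask].sum())  # "star brightness": sum of their gray values
    return count, area, brightness
```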

2.3. Different MRA Prediction Models

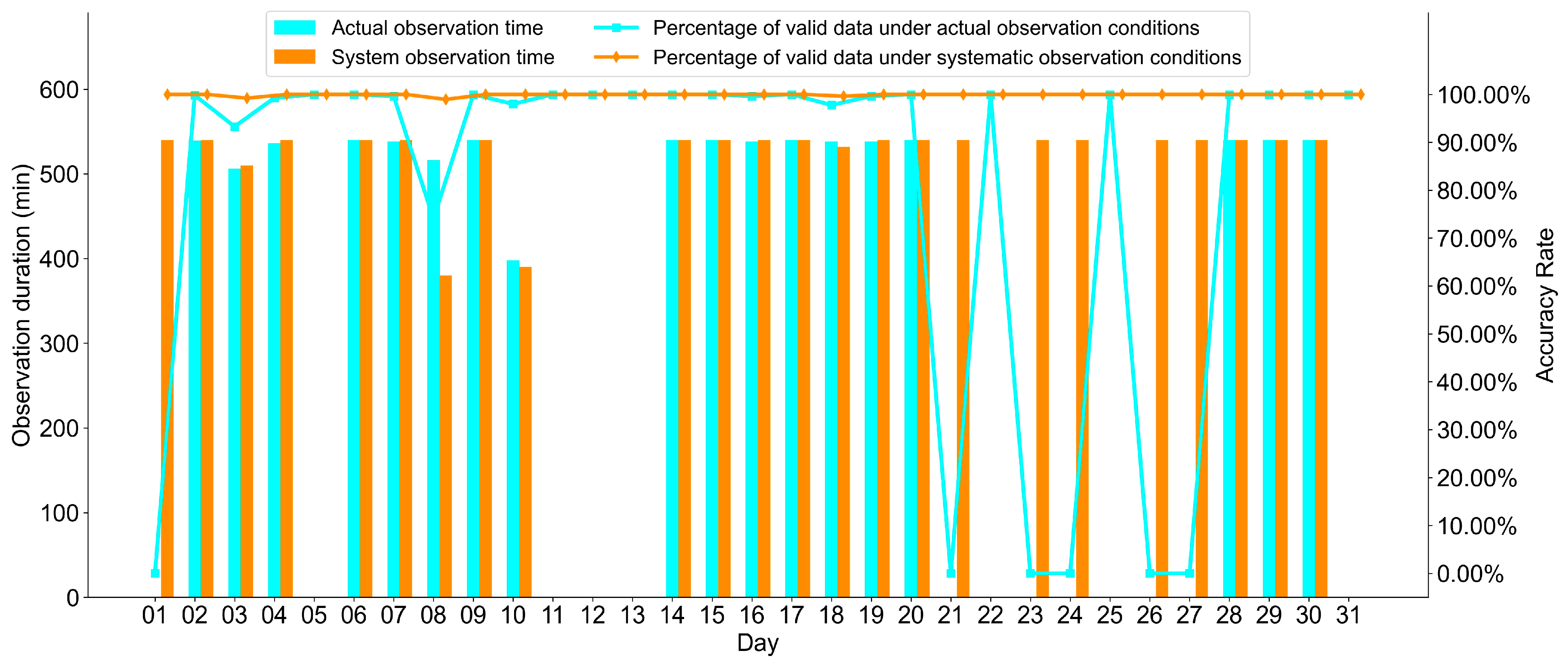

In this study, we used the night sky images and echo signals collected at the Yanqing MUA-LIDAR station from January 2022 to April 2023 as data sources. Before analysis and modeling, the data were carefully preprocessed to remove duplicate records, missing values, and outliers, thereby improving data accuracy and quality. Night sky images were collected every 2 min, whereas echo-signal data were collected every 30 s. The echo-signal data were therefore downsampled and then combined with the star count, star area, and star brightness derived from the night sky images. These preprocessing steps made the data more accurate, standardized, and complete, which improved the performance of the machine learning algorithms on the prediction task.
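The alignment step can be sketched with pandas. The sketch assumes that echo-derived MRA values and per-image star features are held in DataFrames with a shared `time` column; the column names are hypothetical. It proceeds as follows:

1. Duplicates and missing values are dropped.
2. The 30-s MRA series is averaged onto the 2-min image cadence.
3. Each image is matched to the nearest downsampled MRA value.

Outlier screening is omitted because the text does not specify the rule.

```python
import pandas as pd


def align_echo_and_images(echo, images, cadence="2min", tolerance="1min"):
    """Join star features with MRA values averaged onto the image cadence.

    echo:   columns ["time", "mra"], sampled every 30 s (hypothetical layout)
    images: columns ["time", "n_stars", "star_area", "star_brightness"]
    """
    echo = echo.drop_duplicates("time").dropna().sort_values("time")
    images = images.drop_duplicates("time").dropna().sort_values("time")
    # Downsample: mean MRA within each 2-min bin.
    echo_ds = (
        echo.set_index("time")["mra"]
        .resample(cadence)
        .mean()
        .dropna()
        .reset_index()
    )
    # Match each image to the nearest downsampled MRA value within the tolerance.
    merged = pd.merge_asof(
        images, echo_ds, on="time", direction="nearest", tolerance=pd.Timedelta(tolerance)
    )
    return merged.dropna().reset_index(drop=True)
```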

Linear multiple regression, nonlinear multiple regression, and autoregressive integrated moving average (ARIMA) models were used to predict the MRA of the MUA-LIDAR system. Our aim was to compare the predictive ability of the different models on this dataset. The models were implemented in Python, whose data-processing and scientific-computing libraries are well suited to the data handling, modeling, and prediction tasks of this study.

Multiple regression modeling is a well-established statistical method for predicting the value of a dependent (response) variable by modeling its relationship with multiple independent (explanatory) variables [28]. To predict the MRA, we performed a multiple regression analysis. The number, area, and brightness of stars served as the independent variables, and the corresponding MRA served as the dependent variable. We first examined the data for intrinsic patterns to confirm that a reasonable estimate of the MUA-LIDAR system’s MRA could be made from them. Based on multiple regression theory, linear and nonlinear model expressions were established. The linear model is

$$y=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_3,$$

where $y$ represents the MRA of the MUA-LIDAR system, $x_1$ is the number of stars, $x_2$ denotes the star area, and $x_3$ signifies the star brightness; these serve as the independent variables for the model inputs. $\beta_0$–$\beta_3$ are the regression coefficients of the linear multiple regression model. The nonlinear multiple regression model uses the same inputs with an intercept and a single regression coefficient. These coefficients and independent variables are used to predict the value of $y$.
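Fitting the linear model MRA = β0 + β1·N + β2·A + β3·B (N: star count, A: star area, B: star brightness) can be sketched with ordinary least squares in NumPy. The text does not state the estimation method, so least squares is an assumption here.

```python
import numpy as np


def _design(n_stars, star_area, star_brightness):
    """Design matrix with an intercept column followed by the three star features."""
    return np.column_stack([np.ones(len(n_stars)), n_stars, star_area, star_brightness])


def fit_mlr(n_stars, star_area, star_brightness, mra_m):
    """Ordinary least-squares fit; returns the coefficients [b0, b1, b2, b3]."""
    X = _design(n_stars, star_area, star_brightness)
    coef, *_ = np.linalg.lstsq(X, np.asarray(mra_m, dtype=float), rcond=None)
    return coef


def predict_mlr(coef, n_stars, star_area, star_brightness):
    """Predicted MRA for new star features."""
    return _design(n_stars, star_area, star_brightness) @ coef
```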

When the linear multiple regression model was used to make predictions, the model parameters obtained were , , , and . When the nonlinear multiple regression model was used to predict the MRA, its intercept parameter was excessively large, while its regression coefficient was of minute magnitude, measuring at . The nonlinear model therefore did not fit the training data properly and failed to capture the relationship between the independent and dependent variables. Moreover, the large intercept implies that the model would predict a large MRA even when all independent variables were zero. Hence, the nonlinear multiple regression model lacks robustness and is unsuitable for predicting the MRA. We therefore used time series data (time, the number of stars, star area, and star brightness) as inputs for modeling and prediction with the ARIMA model.

ARIMA is a widely used approach to modeling and predicting time series data [29]. It comprises three components: autoregression, integration (differencing), and moving average. ARIMA can model and predict key features of a series, including trends and, in its seasonal form, periodic patterns. In an ARIMA($p$, $d$, $q$) model, $p$ represents the autoregressive order, $d$ represents the number of differences, and $q$ represents the moving average order. The modeling process typically comprises three steps: model identification, parameter estimation, and model testing. Model identification entails determining the autoregressive order $p$ and the moving average order $q$ from the autocorrelation function and partial autocorrelation function of the time series. The number of differences $d$ can be determined with a unit root test. Using the optimized ARIMA($p$, $d$, $q$) model, we predicted MRA values from time series data including time, the number of stars, star area, and star brightness.

We used the mean squared error (MSE), root mean squared error (RMSE), and mean absolute error (MAE) to evaluate the predictive performance of the models:

$$\mathrm{MSE}=\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i-y_i\right)^2,$$

$$\mathrm{RMSE}=\sqrt{\mathrm{MSE}}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i-y_i\right)^2},$$

$$\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i-y_i\right|,$$

where $i$ is the sequence number of a prediction point, $\hat{y}_i$ and $y_i$ are the predicted and actual MRA values at the $i$th prediction point, respectively, and $n$ is the number of predicted data points.

- **MSE** is the average of the squared differences between the predicted and actual values. It reflects both the predictive precision of the model and the magnitude of its prediction errors.
- **RMSE** is the square root of MSE. It is expressed in the same units as the MRA while retaining the magnitude of the error, which makes it easier to interpret.
- **MAE** is the average of the absolute differences between the predicted and actual values. It also measures predictive accuracy and error magnitude but is less sensitive to outliers than MSE or RMSE.

The predictive performance for the MRA was evaluated comprehensively with these three metrics. Low MSE, RMSE, and MAE values indicate that the model has high predictive accuracy; high values indicate the opposite.
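The three metrics translate directly into NumPy, as sketched below.

```python
import numpy as np


def regression_metrics(y_pred, y_true):
    """MSE, RMSE, and MAE between predicted and observed MRA values."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    mse = float(np.mean(err**2))
    return {"MSE": mse, "RMSE": float(np.sqrt(mse)), "MAE": float(np.mean(np.abs(err)))}
```

Because the errors are squared, a single large miss raises MSE and RMSE more than MAE. This is why MAE is the less outlier-sensitive of the three.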

The linear multiple regression model (MLRM) and the ARIMA model were both used to predict the MRA, and their predictive performance was compared. As shown in Table 2, the ARIMA model outperformed the MLRM, capturing the temporal trends and periodic patterns in the time series data (time, the number of stars, star area, and star brightness).

Moreover, the ARIMA model trained faster and produced more precise and stable predictions, making it well suited to practical applications. In contrast, the MLRM assumes a linear relationship between the inputs and the MRA. We therefore selected the ARIMA model for predicting the MRA of the MUA-LIDAR system.