Real-Time Environmental Contour Construction Using 3D LiDAR and Image Recognition with Object Removal

Abstract

1. Introduction

1.1. Background

1.2. Related Research

- (1) Vehicle Detection

- (2) Bounding Box Fitting Algorithms

- (3) Environmental Contour Extraction in Point Cloud

1.3. Main Work

2. Methods

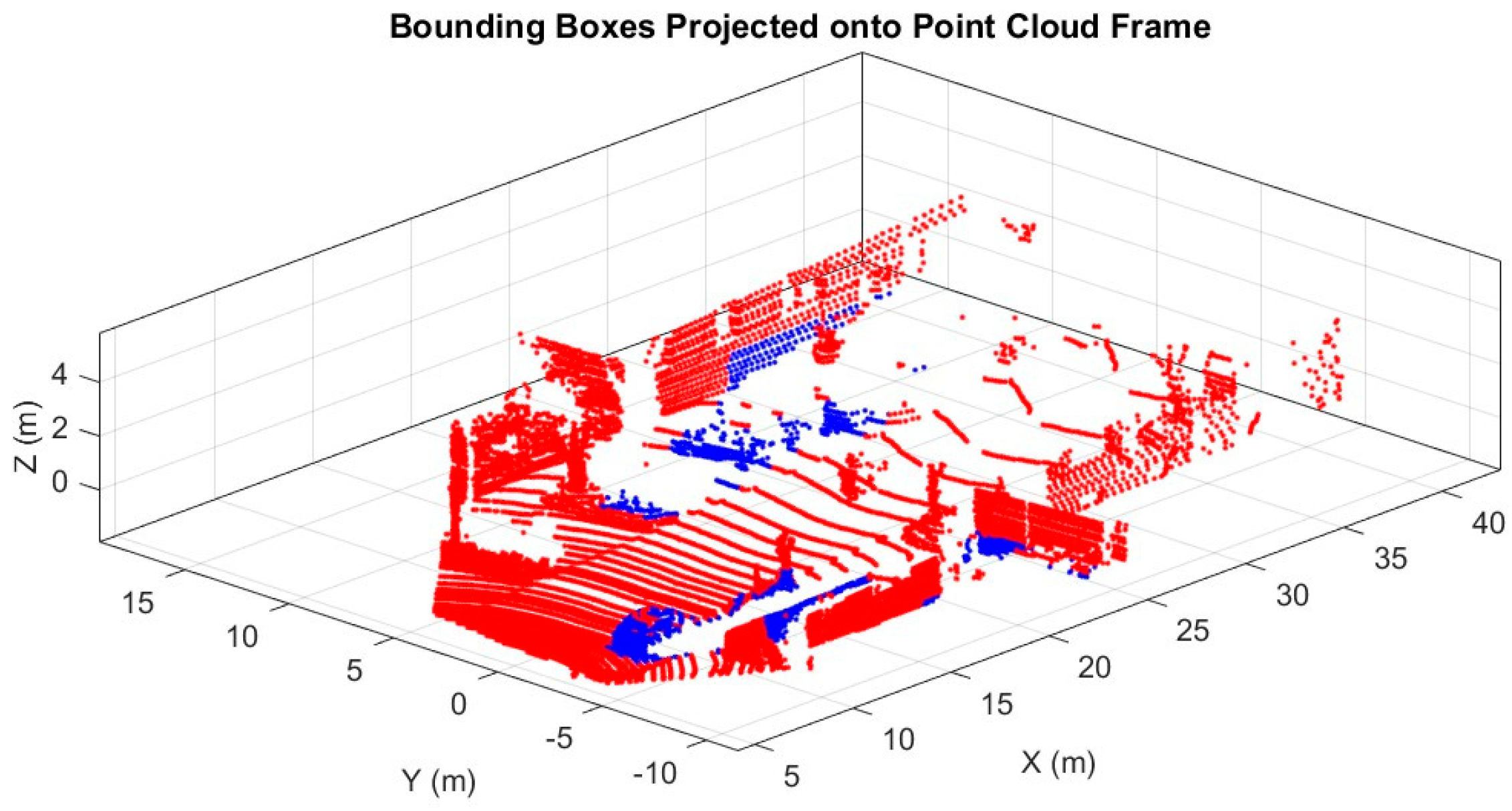

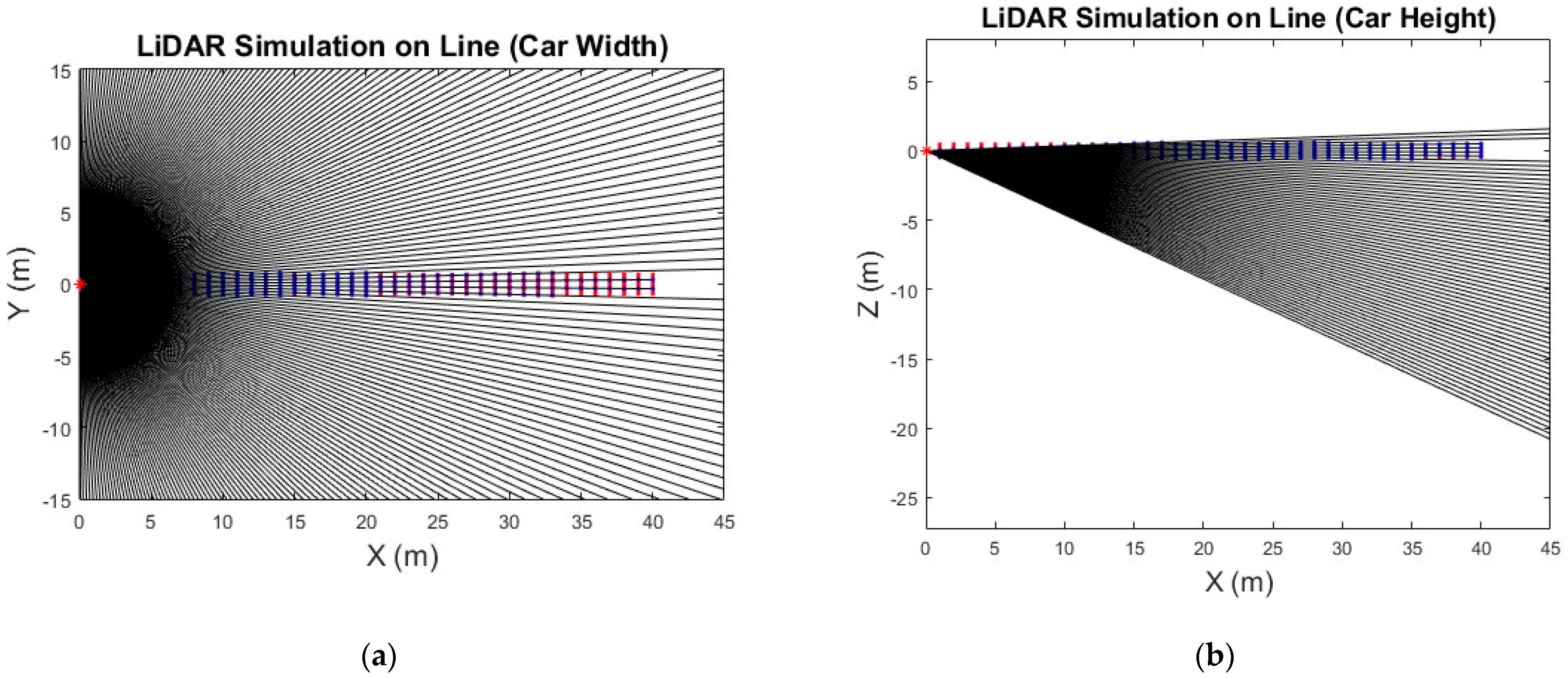

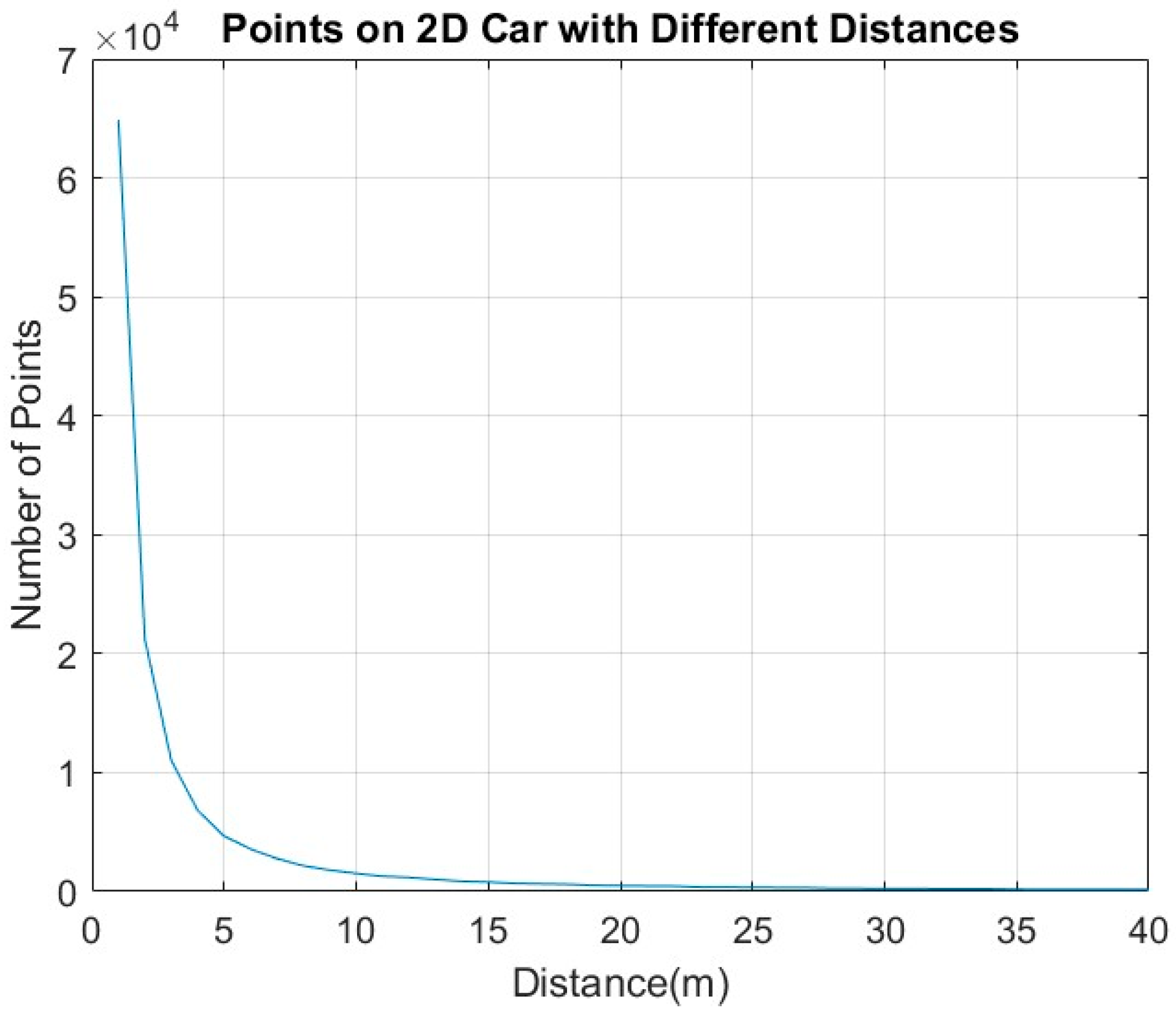

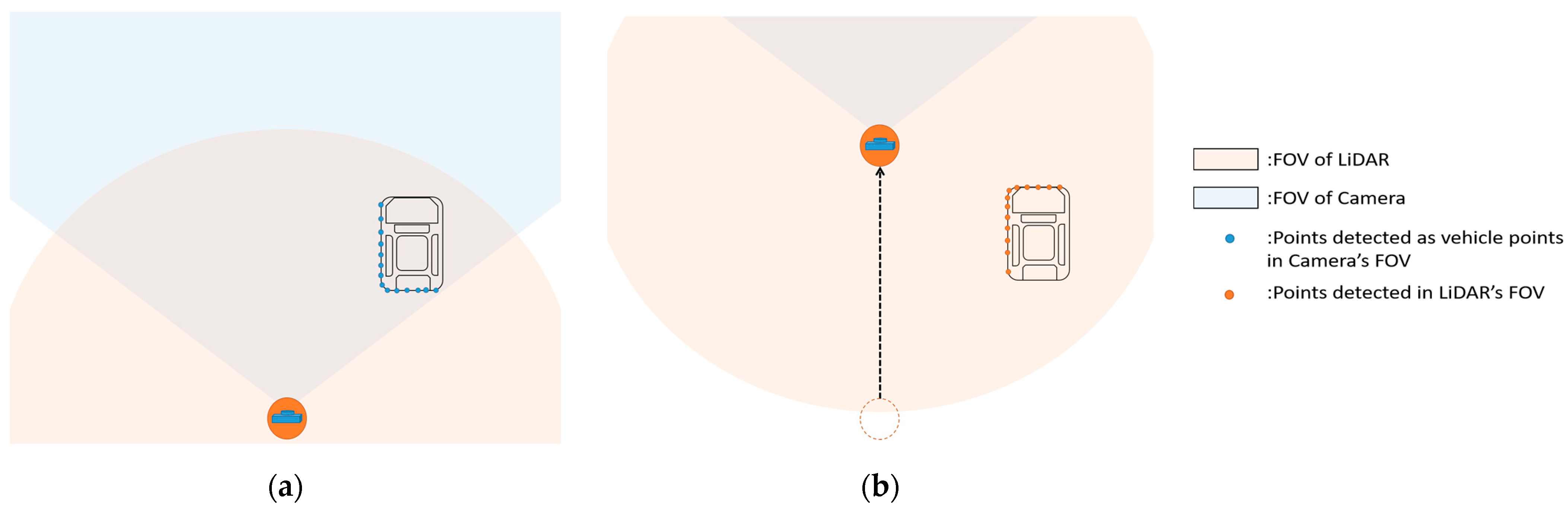

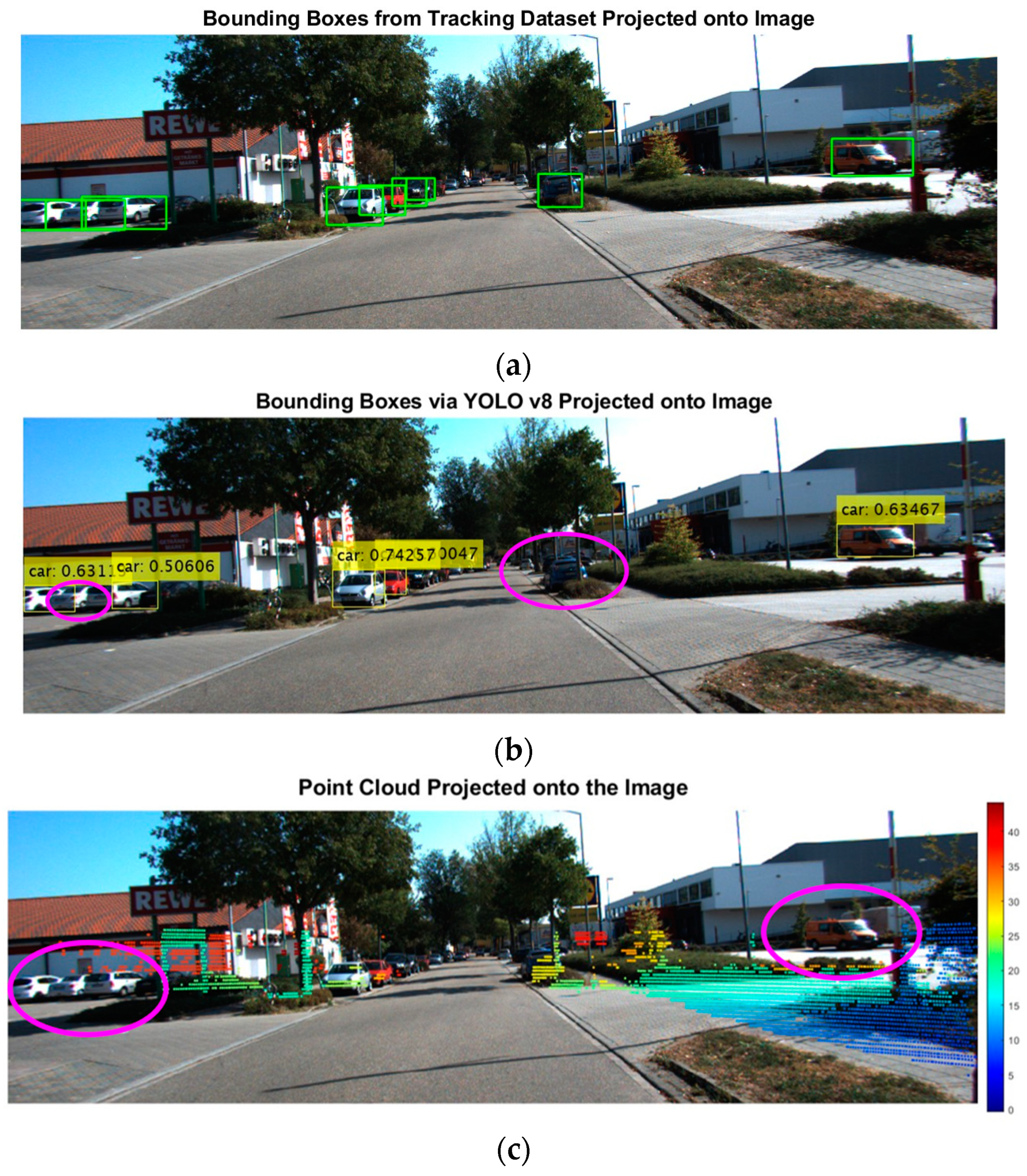

2.1. LiDAR-Camera Fusion

2.2. DBSCAN Algorithm for Object Points Extraction Refinement

| Algorithm 1 Adaptive DBSCAN-based Vehicle Point Extraction Algorithm |
| --- |
| Input: BoundingBoxPoint: points enclosed by each bounding box within the camera’s FOV. Parameters for calculating the number of points on the vehicle’s width and height based on distance. |
| Output: VehiclePoint: points classified as belonging to vehicles. BoundingBoxBoundary: refined bounding boxes defined by the detected vehicles. |
| 01: For each bounding box i from 1 to NumberofBboxes: |
| 02: Find the points enclosed by bounding box i as BoundingBoxPoint |
| 03: Calculate the distance from the sensor to the mean position of BoundingBoxPoint |
| 04: Determine the expected number of points Pts based on the distance |
| 05: Set the DBSCAN parameters |
| 06: Apply Adaptive DBSCAN to cluster BoundingBoxPoint |
| 07: If a valid cluster j is detected: |
| 08: Identify the points belonging to cluster j as VehiclePoint |
| 09: Else: |
| 10: Apply DBSCAN with looser parameters to BoundingBoxPoint |
| 11: Set cluster j to the cluster with the highest number of points |
| 12: Identify the points belonging to cluster j as VehiclePoint |
| 13: End If |
| 14: Calculate BoundingBoxBoundary enclosing VehiclePoint |
| 15: End For |
| 16: Return BoundingBoxBoundary, VehiclePoint |
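
The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the expected-point model (angular resolutions `h_res_deg` and `v_res_deg`, nominal vehicle size `width_m` and `height_m`), the `fraction` used to derive MinPts, the fixed `eps`, and the choice of the largest cluster in both branches are all hypothetical. A brute-force DBSCAN is included only to keep the sketch self-contained.

```python
import numpy as np

def dbscan(points, eps, min_pts):
    """Minimal brute-force DBSCAN; returns one label per point, -1 for noise."""
    n = len(points)
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    neighbors = [np.flatnonzero(row <= eps) for row in dists]  # includes the point itself
    labels = np.full(n, -1)
    visited = np.zeros(n, dtype=bool)
    cluster = 0
    for i in range(n):
        if visited[i]:
            continue
        visited[i] = True
        if len(neighbors[i]) < min_pts:
            continue  # not a core point: stays noise unless a cluster claims it later
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # core or border point joins the cluster
            if not visited[j]:
                visited[j] = True
                if len(neighbors[j]) >= min_pts:
                    queue.extend(neighbors[j])  # expand only through core points
        cluster += 1
    return labels

def extract_vehicle_points(box_points, h_res_deg=0.2, v_res_deg=2.0,
                           width_m=1.8, height_m=1.5, eps=0.5, fraction=0.1):
    """Return (vehicle_points, (min_corner, max_corner)) for one bounding box."""
    box_points = np.asarray(box_points, dtype=float)
    if len(box_points) == 0:
        return box_points, None
    # Distance from the sensor (assumed at the origin) to the mean point position
    dist = max(np.linalg.norm(box_points.mean(axis=0)), 1e-6)
    # Assumed model: points across the width and height fall off linearly with distance
    n_w = width_m / (dist * np.radians(h_res_deg))
    n_h = height_m / (dist * np.radians(v_res_deg))
    expected_pts = n_w * n_h
    min_pts = max(3, int(fraction * expected_pts))  # hypothetical MinPts rule
    labels = dbscan(box_points, eps, min_pts)
    if labels.max() < 0:
        # No cluster found: retry with looser parameters
        labels = dbscan(box_points, 2.0 * eps, max(2, min_pts // 2))
    if labels.max() < 0:
        return box_points[:0], None
    best = np.bincount(labels[labels >= 0]).argmax()  # largest cluster
    vehicle = box_points[labels == best]
    return vehicle, (vehicle.min(axis=0), vehicle.max(axis=0))

# Synthetic demo: a dense blob about 10 m ahead plus sparse background returns
rng = np.random.default_rng(0)
car = np.array([10.0, 0.0, 0.0]) + rng.uniform(-0.3, 0.3, size=(80, 3))
background = np.array([[25.0 + 3.0 * k, 5.0, 0.0] for k in range(8)])
vehicle, bbox = extract_vehicle_points(np.vstack([car, background]))
```

In this demo the isolated background returns are labeled as noise, so the refined box tightens around the dense blob. The pairwise distance matrix is quadratic in the number of points, which is acceptable for the points inside a single bounding box but would likely need a spatial index for full scans.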

2.3. Object Bounding Box Tracking Behind the Camera FOV

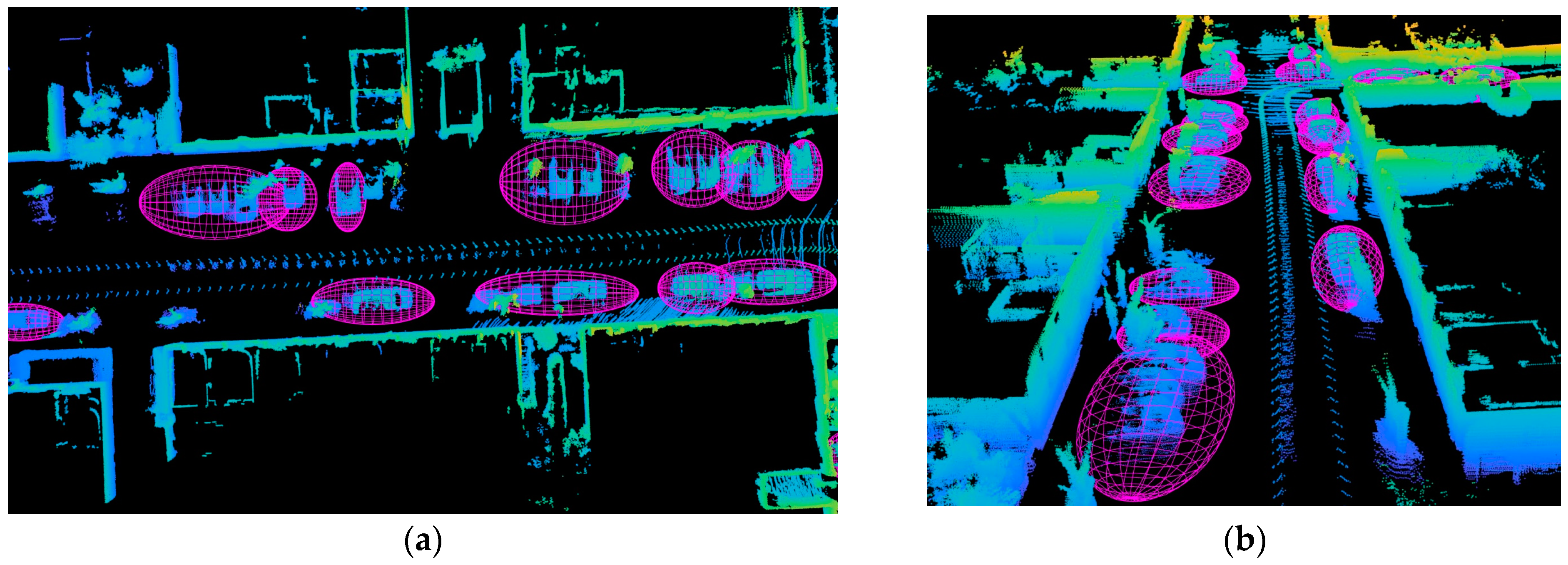

2.4. Object Enclosed Ellipsoid

| Algorithm 2 MVEE algorithm |
| --- |
| Input: Q (data points matrix P of size d × n with a row of ones appended, so Q has size (d + 1) × n), tolerance |
| Output: A (d × d matrix for the ellipsoid), c (center of the ellipsoid) |
| 01: Initialize u as a vector of size n with each element set to 1/n |
| 02: While err > tolerance do |
| 03: Compute X = Q * diag(u) * Q’ |
| 04: Compute M = diag(Q’ * inv(X) * Q) |
| 05: Find the maximum value maximum in M and its index j |
| 06: Calculate step_size = (maximum − d − 1)/((d + 1) * (maximum − 1)) |
| 07: Compute new_u by scaling u by (1 − step_size) |
| 08: Set new_u(j) = new_u(j) + step_size |
| 09: Compute err = norm(new_u − u) |
| 10: Update u to new_u |
| 11: After the loop ends, compute the ellipsoid parameters: |
| 12: Set U = diag(u) |
| 13: Calculate A = (1/d) * inv(P * U * P’ − (P * u) * (P * u)’) |
| 14: Set c = P * u |
| 15: Return A, c |
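
The iteration in Algorithm 2 maps onto a few lines of NumPy. The sketch below assumes the points arrive as an (n, d) array and that Q is formed by appending a row of ones to P, as in the common Khachiyan-style formulation; the function name `mvee`, the default tolerance, and the `max_iter` guard are illustrative choices rather than details from the paper.

```python
import numpy as np

def mvee(points, tol=1e-4, max_iter=10000):
    """Approximate minimum-volume enclosing ellipsoid.

    points: (n, d) array. Returns A (d, d) and c (d,) such that
    (x - c)^T A (x - c) <= 1 holds (approximately) for every input point.
    """
    P = np.asarray(points, dtype=float).T        # d x n
    d, n = P.shape
    Q = np.vstack([P, np.ones(n)])               # (d + 1) x n, lifted points
    u = np.full(n, 1.0 / n)                      # uniform initial weights
    for _ in range(max_iter):                    # guard against slow convergence
        X = Q @ (u[:, None] * Q.T)               # Q diag(u) Q'
        # diag(Q' inv(X) Q) without forming the full n x n product
        M = np.einsum("ij,ij->j", Q, np.linalg.solve(X, Q))
        j = int(np.argmax(M))
        step = (M[j] - d - 1.0) / ((d + 1.0) * (M[j] - 1.0))
        new_u = (1.0 - step) * u
        new_u[j] += step                         # only the j-th weight is increased
        err = np.linalg.norm(new_u - u)
        u = new_u
        if err <= tol:
            break
    c = P @ u                                    # ellipsoid center
    A = np.linalg.inv(P @ (u[:, None] * P.T) - np.outer(c, c)) / d
    return A, c

# Demo: the four corners of a 4 x 2 rectangle centered at the origin
corners = np.array([[2.0, 1.0], [2.0, -1.0], [-2.0, 1.0], [-2.0, -1.0]])
A, c = mvee(corners)
```

For the rectangle corners the enclosing ellipse is x²/8 + y²/2 = 1, so `A` comes out as diag(1/8, 1/2) with `c` at the origin. The linear solve fails when the points are degenerate (for example, all coplanar in 3D), so real point clusters may need a check or a small regularization before this step.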

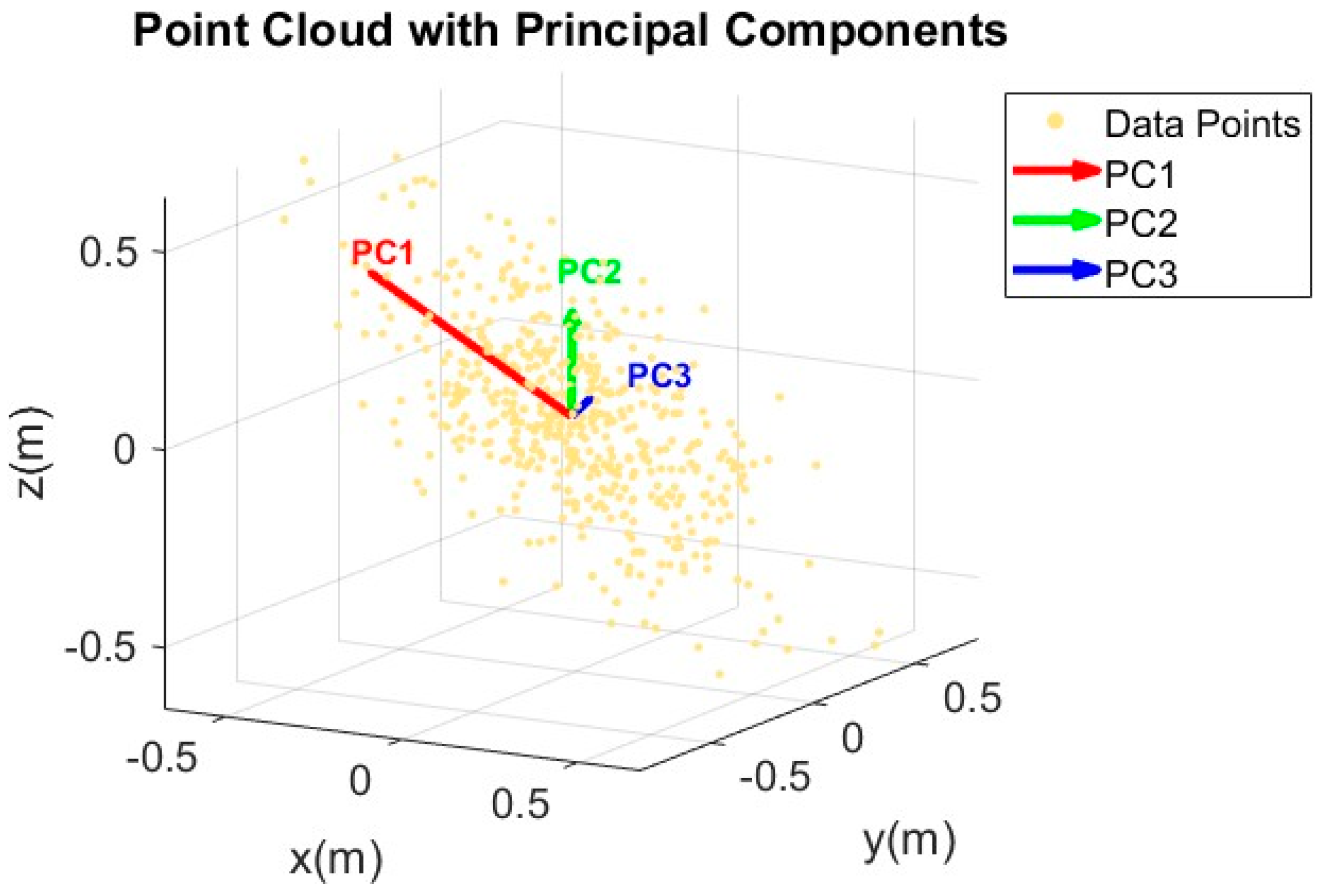

2.5. PCA for Edge Detection

| Algorithm 3 PCA-based Edge Detection in Point Clouds |
| --- |
| Input: Q (data points matrix), k (number of nearest neighbors) |
| Output: edge points |
| 01: For each point i in Q do |
| 02: Find the k nearest neighbors of point i using a KD-Tree |
| 03: Extract the neighbors’ coordinates |
| 04: Compute the centroid of the neighbors |
| 05: Calculate the 3 × 3 covariance matrix of the neighbors |
| 06: Perform SVD on the covariance matrix to get the eigenvalues |
| 07: Set Curvature from the eigenvalues |
| 08: If Curvature > Cur_thresh then |
| 09: Define the point as an edge point |
| 10: End If |
| 11: End For |
| 12: Return edge points |
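
A compact sketch of Algorithm 3 follows. Two substitutions are made and should be read as assumptions: the curvature is taken to be the surface-variation measure (smallest eigenvalue divided by the sum of the eigenvalues), because the exact formula is not recoverable from the algorithm listing, and a brute-force neighbor search replaces the KD-Tree so the example depends only on NumPy. The threshold value and the function name `pca_edge_points` are hypothetical.

```python
import numpy as np

def pca_edge_points(points, k=10, cur_thresh=0.05):
    """Flag points whose local PCA curvature exceeds cur_thresh.

    points: (n, 3) array. Returns (is_edge, curvature), both of length n.
    """
    pts = np.asarray(points, dtype=float)
    # Brute-force k nearest neighbors (stand-in for a KD-Tree query);
    # each neighborhood includes the query point itself.
    d2 = ((pts[:, None, :] - pts[None, :, :]) ** 2).sum(axis=2)
    nn_idx = np.argsort(d2, axis=1)[:, :k]
    curvature = np.empty(len(pts))
    for i, idx in enumerate(nn_idx):
        nbrs = pts[idx]
        centered = nbrs - nbrs.mean(axis=0)          # subtract the centroid
        cov = centered.T @ centered / len(nbrs)      # 3 x 3 covariance matrix
        # For a symmetric PSD matrix the singular values equal the eigenvalues,
        # returned in descending order.
        eigvals = np.linalg.svd(cov, compute_uv=False)
        # Assumed curvature: surface variation, smallest eigenvalue over the sum
        curvature[i] = eigvals[-1] / max(eigvals.sum(), 1e-12)
    return curvature > cur_thresh, curvature

# Demo: a floor (z = 0) and a wall (x = 0) meeting along the y axis
ys = np.linspace(0.0, 1.0, 11)
steps = np.linspace(0.1, 1.0, 10)
floor = np.array([[x, y, 0.0] for x in steps for y in ys])
wall = np.array([[0.0, y, z] for z in steps for y in ys])
fold = np.array([[0.0, y, 0.0] for y in ys])
cloud = np.vstack([floor, wall, fold])
is_edge, curvature = pca_edge_points(cloud, k=10, cur_thresh=0.03)
```

Points in the middle of the floor have a neighborhood that is exactly planar, so their curvature is essentially zero, while points on the fold line see neighbors from both planes and exceed the threshold. On real scans the threshold and k would have to be tuned to the point density and sensor noise.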

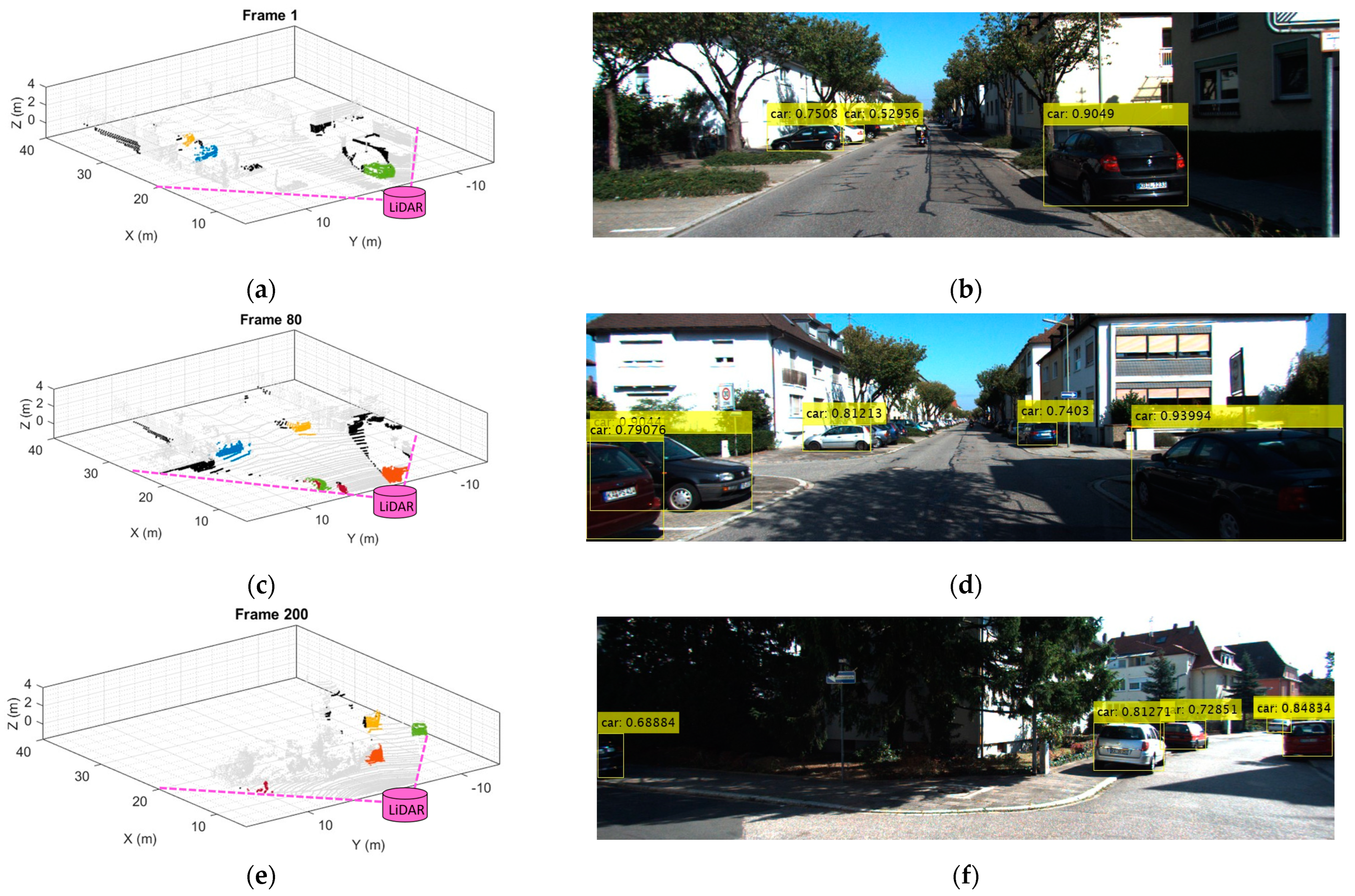

3. Experimental Results

- A.

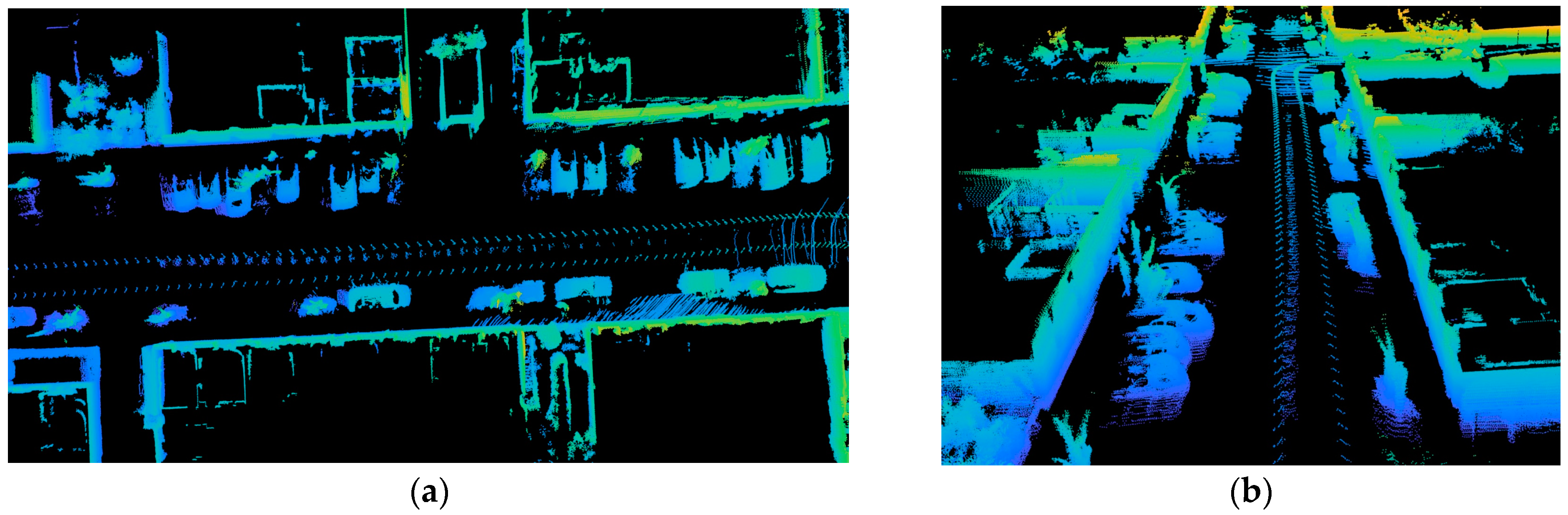

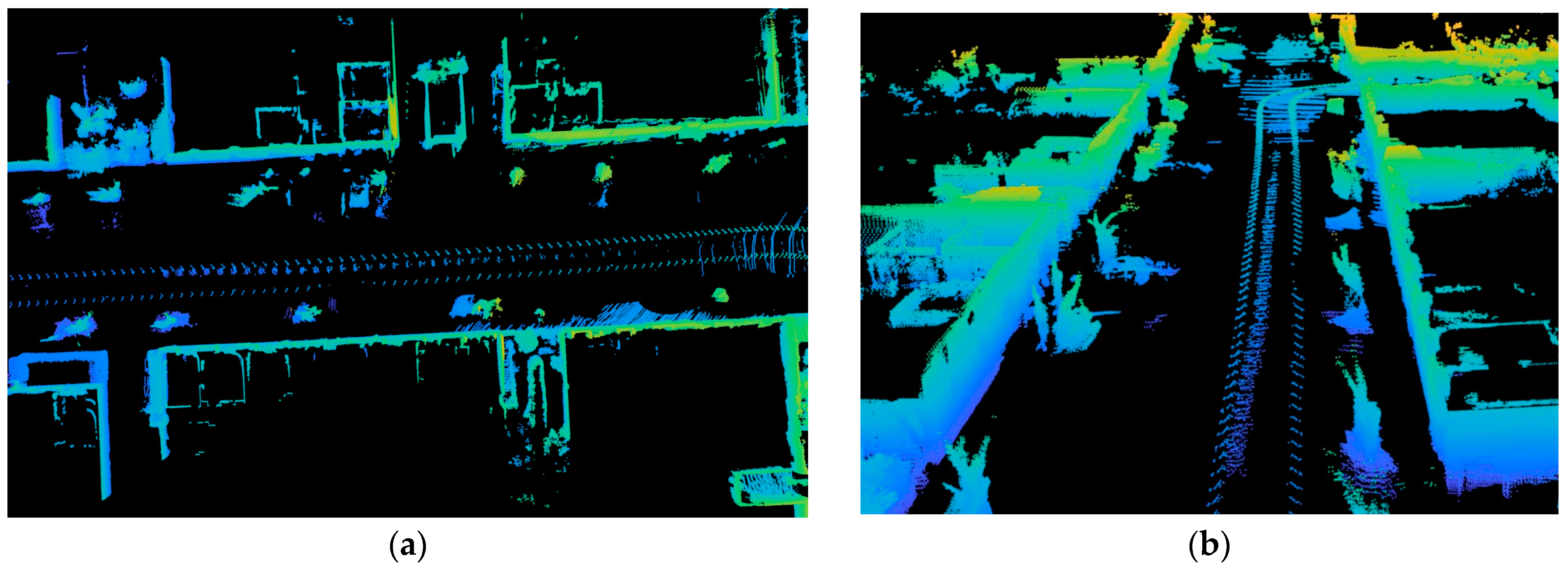

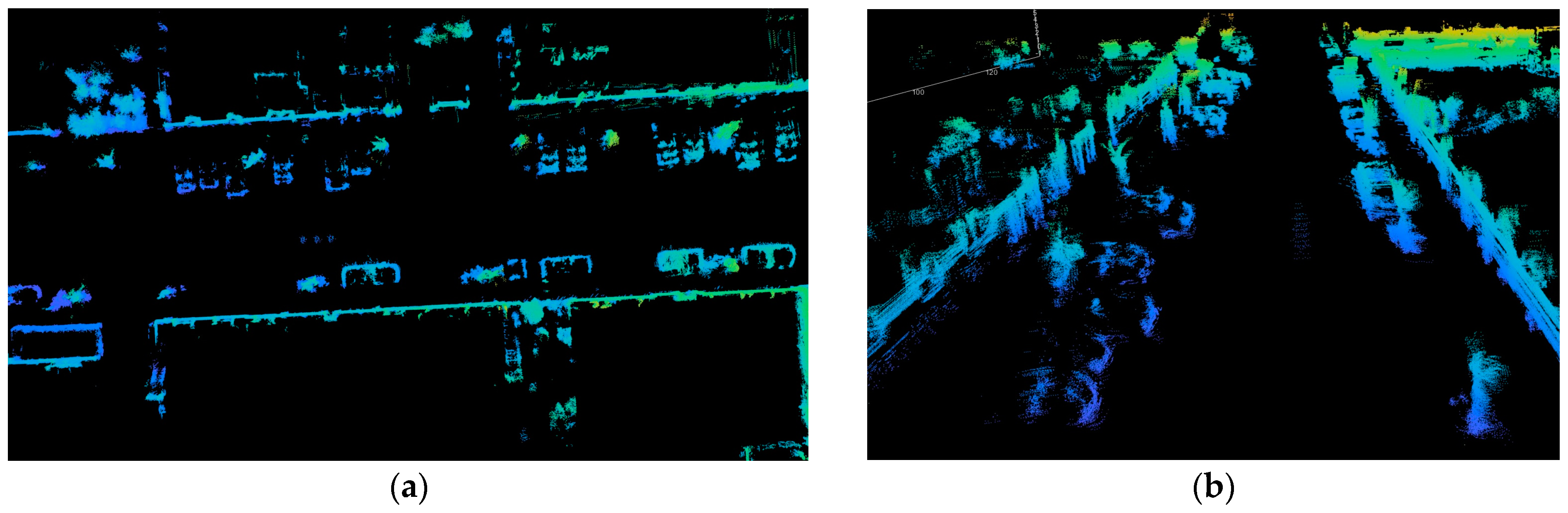

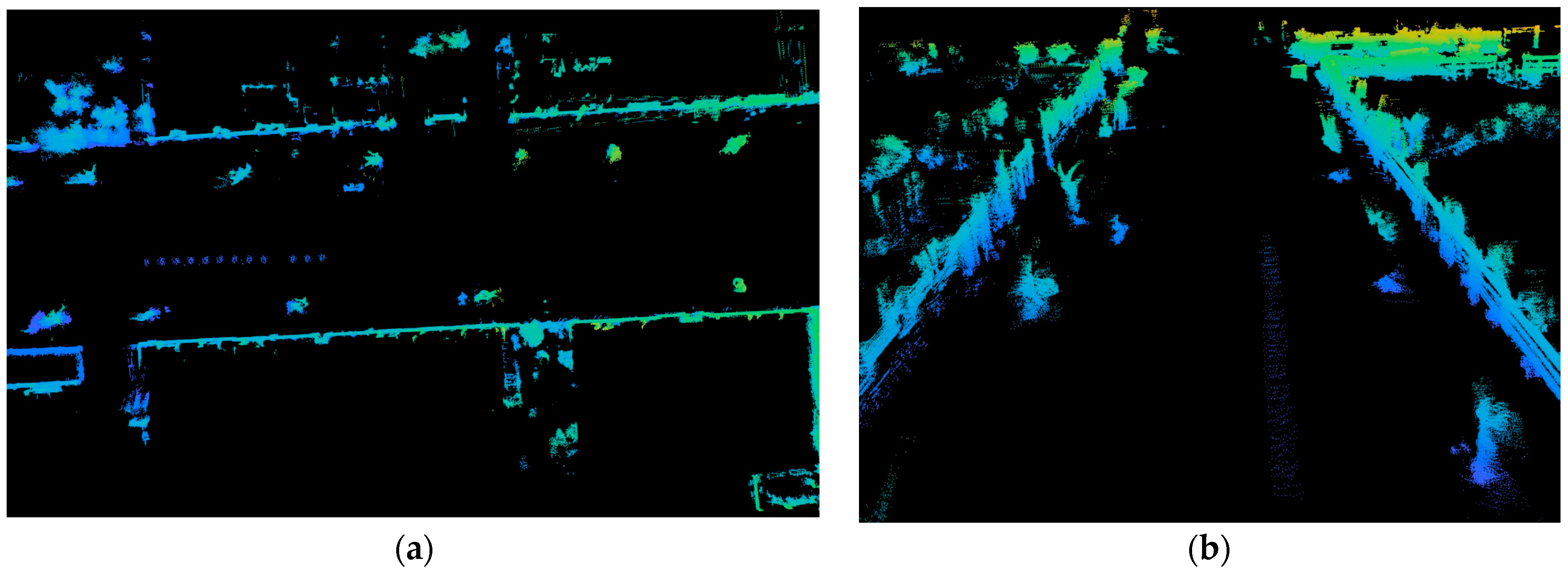

- High-Dense Point Cloud

- B.

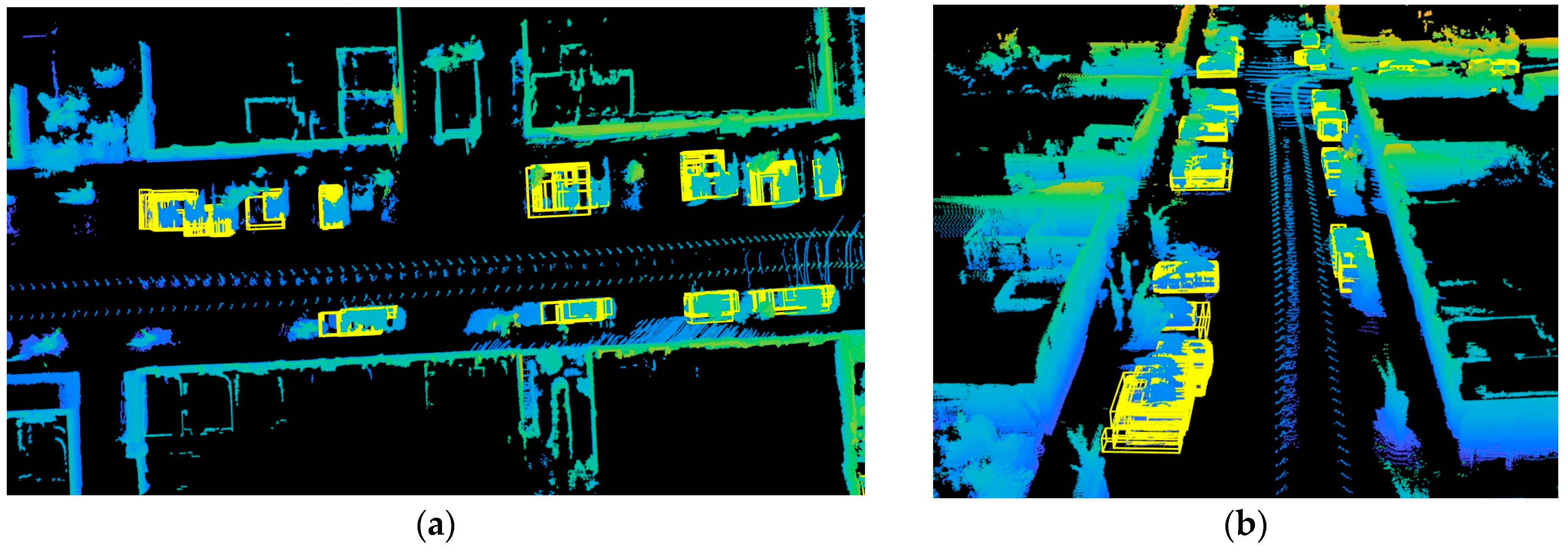

- Bounding Box Detection by Point Cloud-Image Fusion and Adaptive DBSCAN

- C. Minimum Volume Enclosing Ellipsoid

- D. Object Removal

- E. Environmental Edge Contour

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| MinPts | Bounding Boxes | Detected Vehicles |
| --- | --- | --- |
| 300 | 3 | 4 |
| 400 | 3 | 3 |
| 500 | 3 | 3 |
| 600 | 3 | 3 |
| Proposed Adaptive DBSCAN Algorithm | 3 | 3 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, T.-J.; He, R.; Peng, C.-C. Real-Time Environmental Contour Construction Using 3D LiDAR and Image Recognition with Object Removal. Remote Sens. 2024, 16, 4513. https://doi.org/10.3390/rs16234513
