Near Real-Time Flood Monitoring Using Multi-Sensor Optical Imagery and Machine Learning by GEE: An Automatic Feature-Based Multi-Class Classification Approach

Abstract

1. Introduction

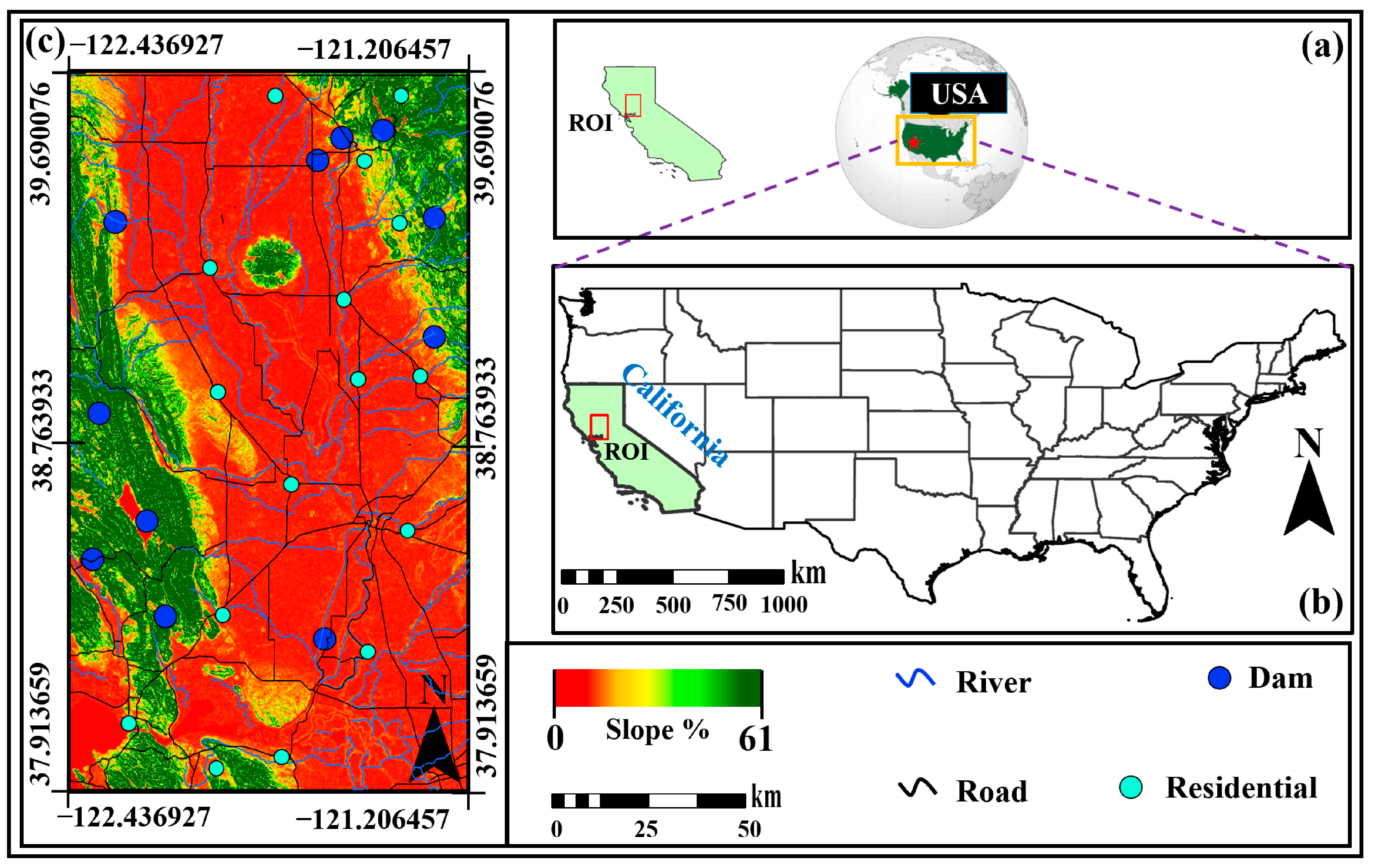

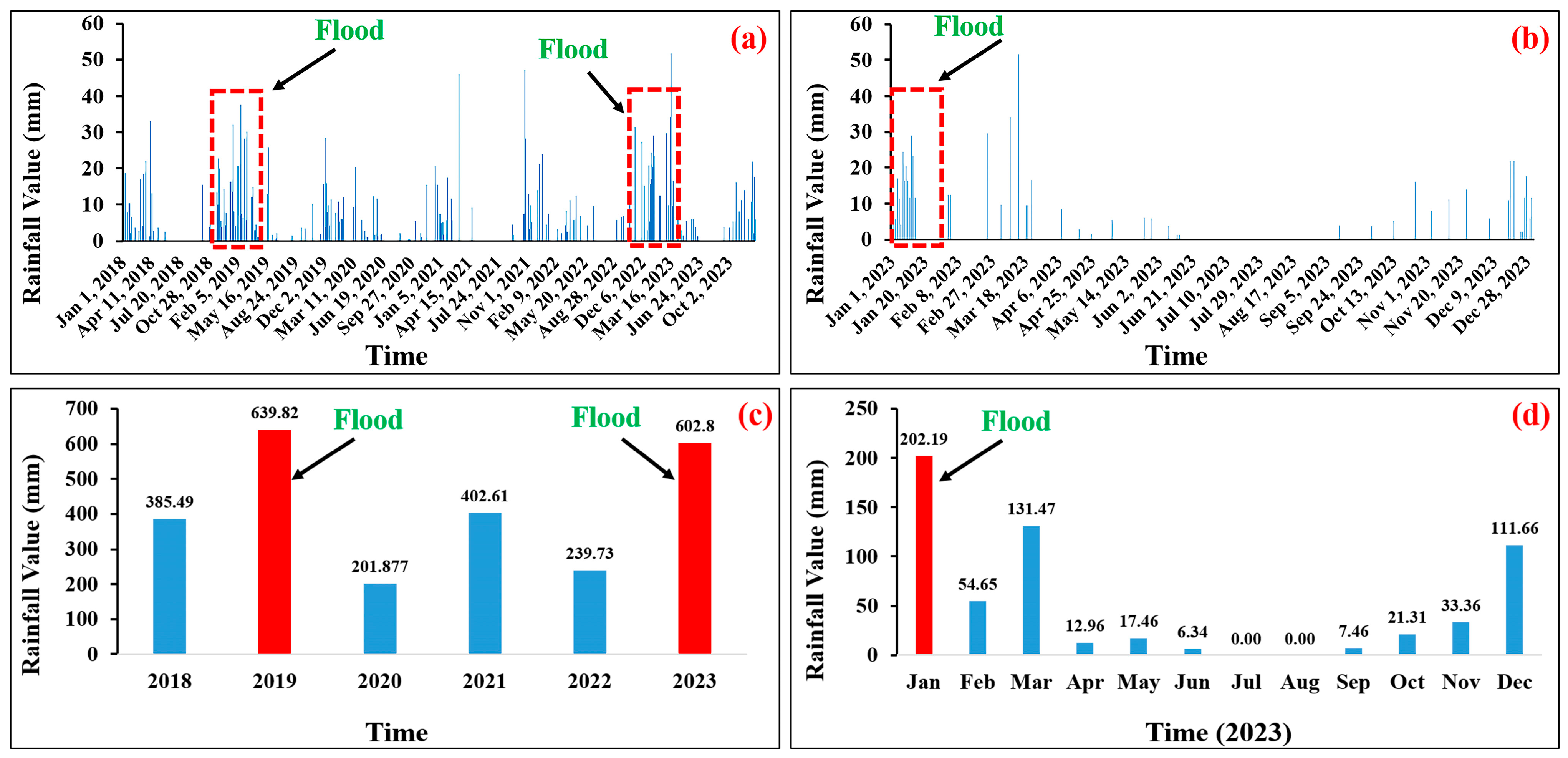

2. Study Area and Data Collection

2.1. Study Areas

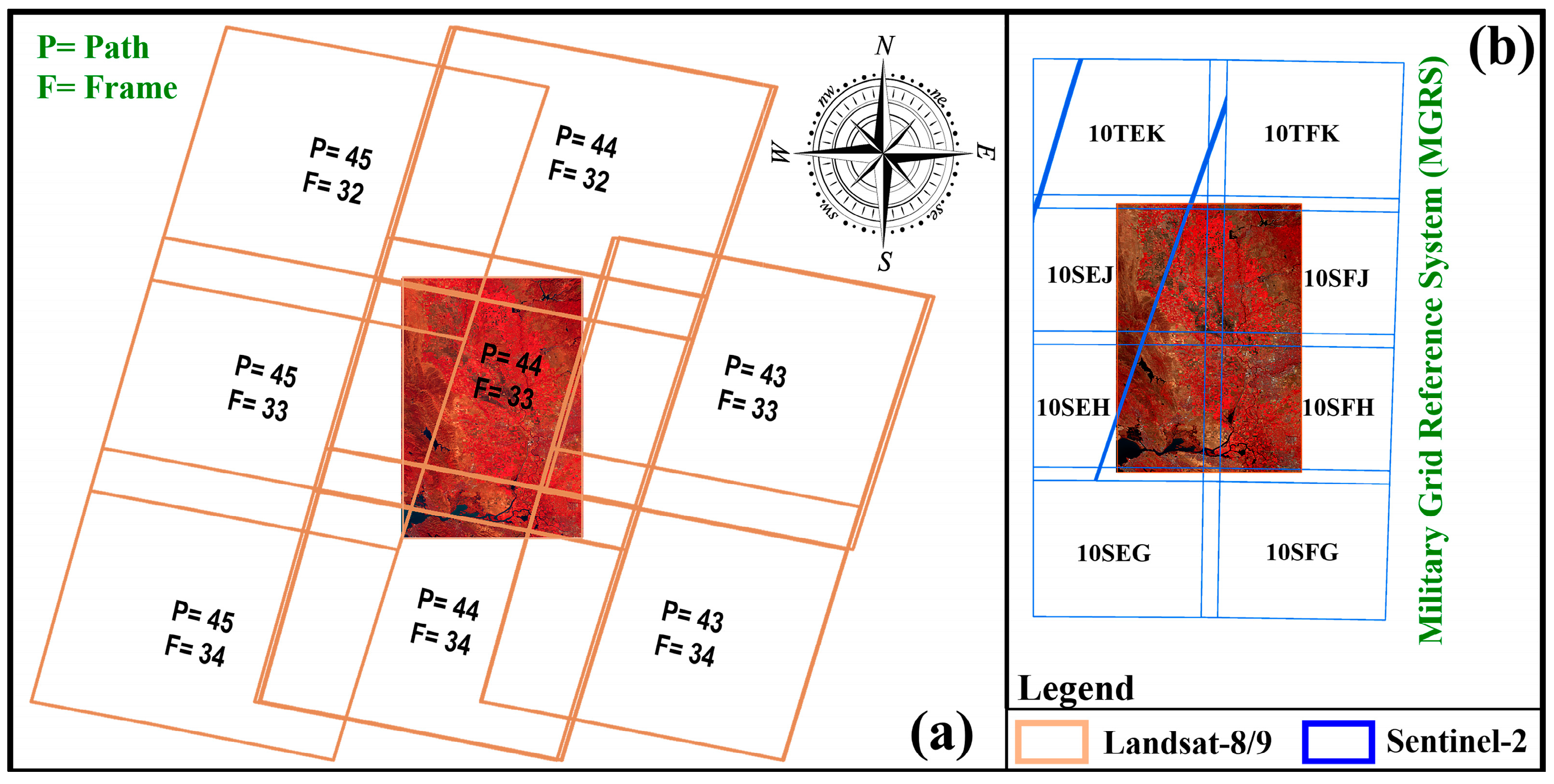

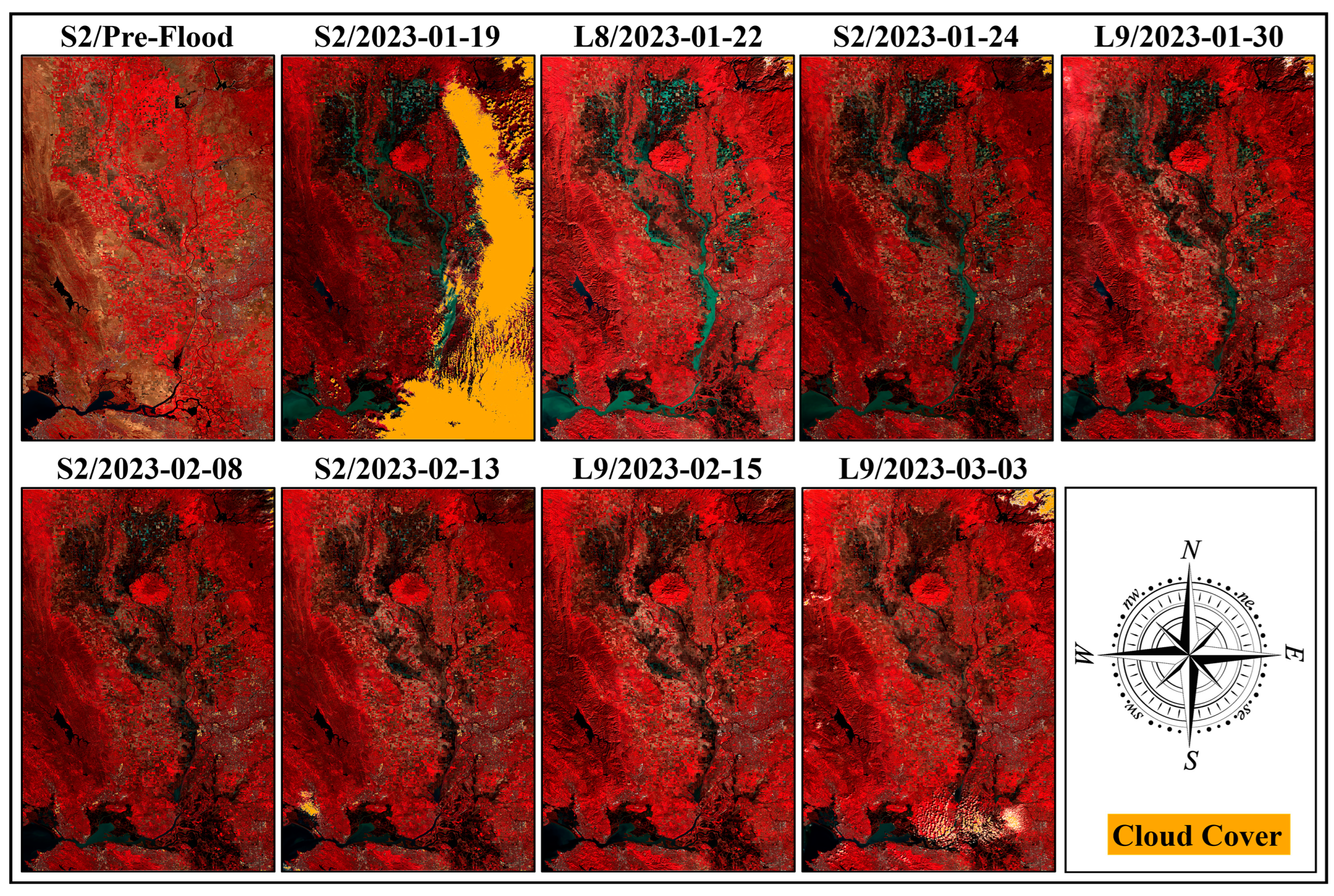

2.2. Data Collection and Data Pre-Processing

2.2.1. Sentinel-2 Satellite Imagery

2.2.2. Landsat-8/9 Satellite Imagery

2.2.3. Test Samples Data

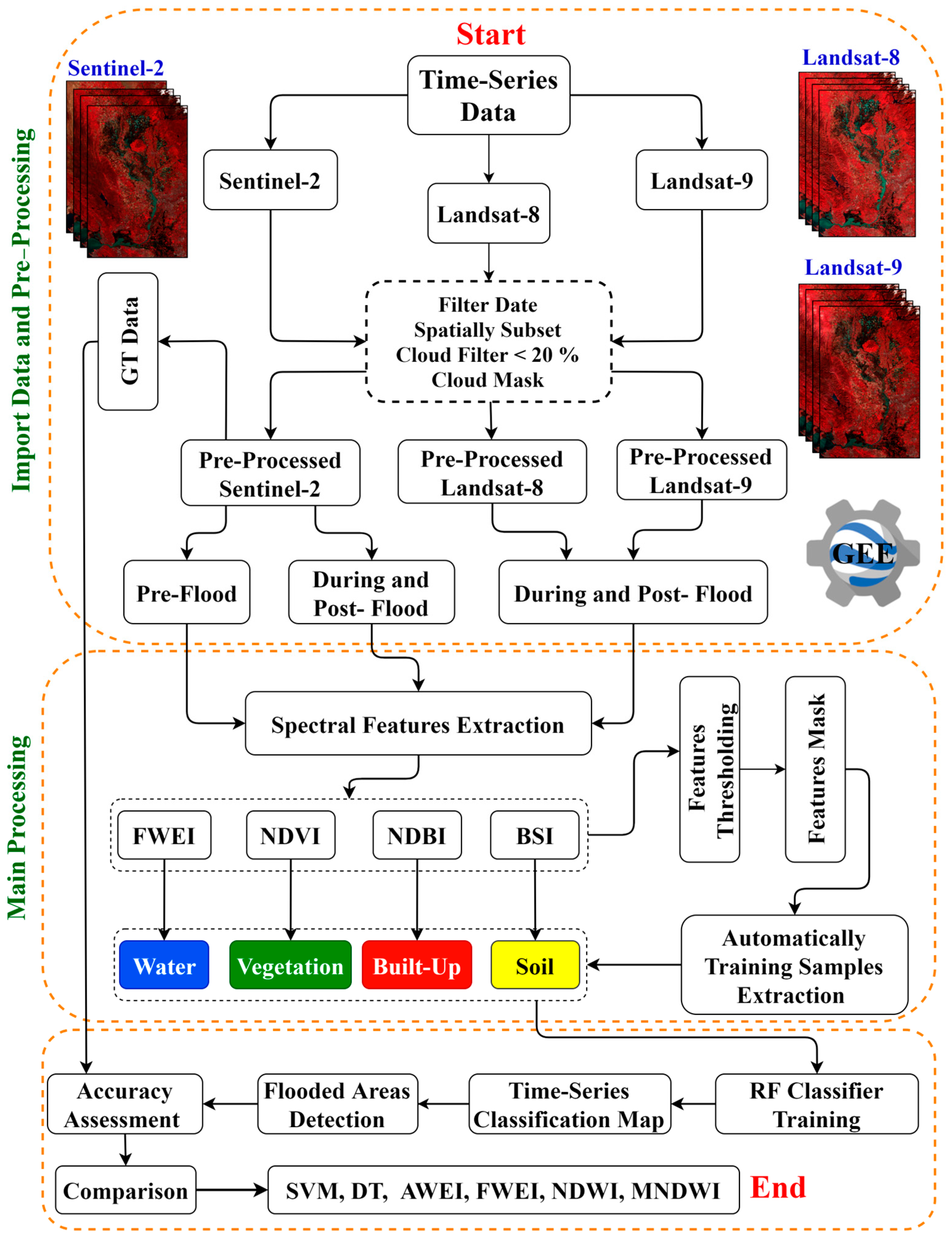

3. Methodology of the Research

3.1. Data Pre-Processing in GEE

3.2. Extraction of Spectral Features

3.2.1. Flood/Water Extraction Index (FWEI)

3.2.2. Normalized Difference Vegetation Index (NDVI)

3.2.3. Normalized Difference Built-Up Index (NDBI)

3.2.4. Bare Soil Index (BSI)
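NDVI, NDBI and BSI are conventionally defined as normalized differences of surface-reflectance bands: NDVI = (NIR - Red)/(NIR + Red), NDBI = (SWIR1 - NIR)/(SWIR1 + NIR) and BSI = ((SWIR1 + Red) - (NIR + Blue))/((SWIR1 + Red) + (NIR + Blue)). The sketch below applies these commonly published forms to single pixel values. The band assignments (for example Sentinel-2 B2/B4/B8/B11 or Landsat-8/9 B2/B4/B5/B6) and the pixel values are assumptions. FWEI is not sketched because its definition is not given in this text.

```python
def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b); 0.0 when the denominator is zero (e.g. no-data)."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def ndvi(nir: float, red: float) -> float:
    # Vegetation reflects strongly in NIR and absorbs red, giving positive values.
    return normalized_difference(nir, red)


def ndbi(swir1: float, nir: float) -> float:
    # Built-up surfaces tend to reflect more in SWIR1 than in NIR.
    return normalized_difference(swir1, nir)


def bsi(swir1: float, red: float, nir: float, blue: float) -> float:
    # Bare soil: SWIR1 + red tends to exceed NIR + blue.
    return normalized_difference(swir1 + red, nir + blue)


# Hypothetical surface reflectances for a vegetated pixel.
pixel = {"blue": 0.04, "red": 0.05, "nir": 0.40, "swir1": 0.20}
print(round(ndvi(pixel["nir"], pixel["red"]), 3))  # 0.778
print(round(ndbi(pixel["swir1"], pixel["nir"]), 3))  # -0.333
print(round(bsi(pixel["swir1"], pixel["red"], pixel["nir"], pixel["blue"]), 3))  # -0.275
```

In practice the same arithmetic would be applied band-wise over whole images rather than per pixel.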

3.3. Feature Thresholding for Land Cover Mask Generation
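The rule for choosing the mask thresholds is not spelled out in this text. A common fully automatic option is Otsu's method (Otsu, 1979), which selects the cut that maximizes the between-class variance of a feature's histogram. The sketch below assumes an Otsu-style rule applied to one index (for example NDVI) at a time; the function name and bin count are illustrative, and the rule actually used here may differ.

```python
from typing import Sequence


def otsu_threshold(values: Sequence[float], bins: int = 256) -> float:
    """Return the threshold that maximizes between-class variance (Otsu's method)."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return lo  # constant feature: nothing to split
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centers))

    w0, sum0 = 0, 0.0
    best_var, best_t = -1.0, lo
    for i in range(bins - 1):
        w0 += hist[i]  # pixels in bins 0..i form the lower class
        sum0 += hist[i] * centers[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2  # unnormalized between-class variance
        if between > best_var:
            best_var, best_t = between, lo + (i + 1) * width  # upper edge of bin i
    return best_t


# Hypothetical index values: three low (e.g. non-vegetated) and three high pixels.
sample = [-0.5, -0.45, -0.4, 0.3, 0.35, 0.4]
t = otsu_threshold(sample)
mask = [v > t for v in sample]  # True = pixel assigned to the class mask
print(mask)  # [False, False, False, True, True, True]
```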

Automated Generation of Training Samples

3.4. Time-Series Classification of Flooded Areas

3.5. NRT Cumulative Flood Mapping
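If "cumulative" flood mapping is read as accumulating per-date flood maps as new Sentinel-2, Landsat-8 and Landsat-9 scenes arrive, a pixel-wise count and union expresses the idea. The sketch below is hypothetical: it assumes each acquisition has already been classified into a boolean flood mask on a common grid, and the function names are illustrative only.

```python
from typing import List

Mask = List[List[bool]]  # True = pixel classified as flooded on that date


def cumulative_flood_counts(masks: List[Mask]) -> List[List[int]]:
    """Count, per pixel, how many acquisitions classified it as flooded."""
    if not masks:
        return []
    rows, cols = len(masks[0]), len(masks[0][0])
    counts = [[0] * cols for _ in range(rows)]
    for mask in masks:  # masks ordered by acquisition date
        for r in range(rows):
            for c in range(cols):
                if mask[r][c]:
                    counts[r][c] += 1
    return counts


def cumulative_flood_extent(masks: List[Mask]) -> Mask:
    """Union of all masks so far: flooded on at least one date."""
    return [[n > 0 for n in row] for row in cumulative_flood_counts(masks)]


# Two hypothetical 2x2 masks from successive acquisitions.
day1 = [[True, False], [False, False]]
day2 = [[True, True], [False, False]]
print(cumulative_flood_counts([day1, day2]))  # [[2, 1], [0, 0]]
print(cumulative_flood_extent([day1, day2]))  # [[True, True], [False, False]]
```

Under this reading, appending each newly classified scene and recomputing gives the near-real-time update, and the per-pixel count indicates how persistently an area stayed inundated.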

3.6. Results Evaluation

4. Results and Discussion

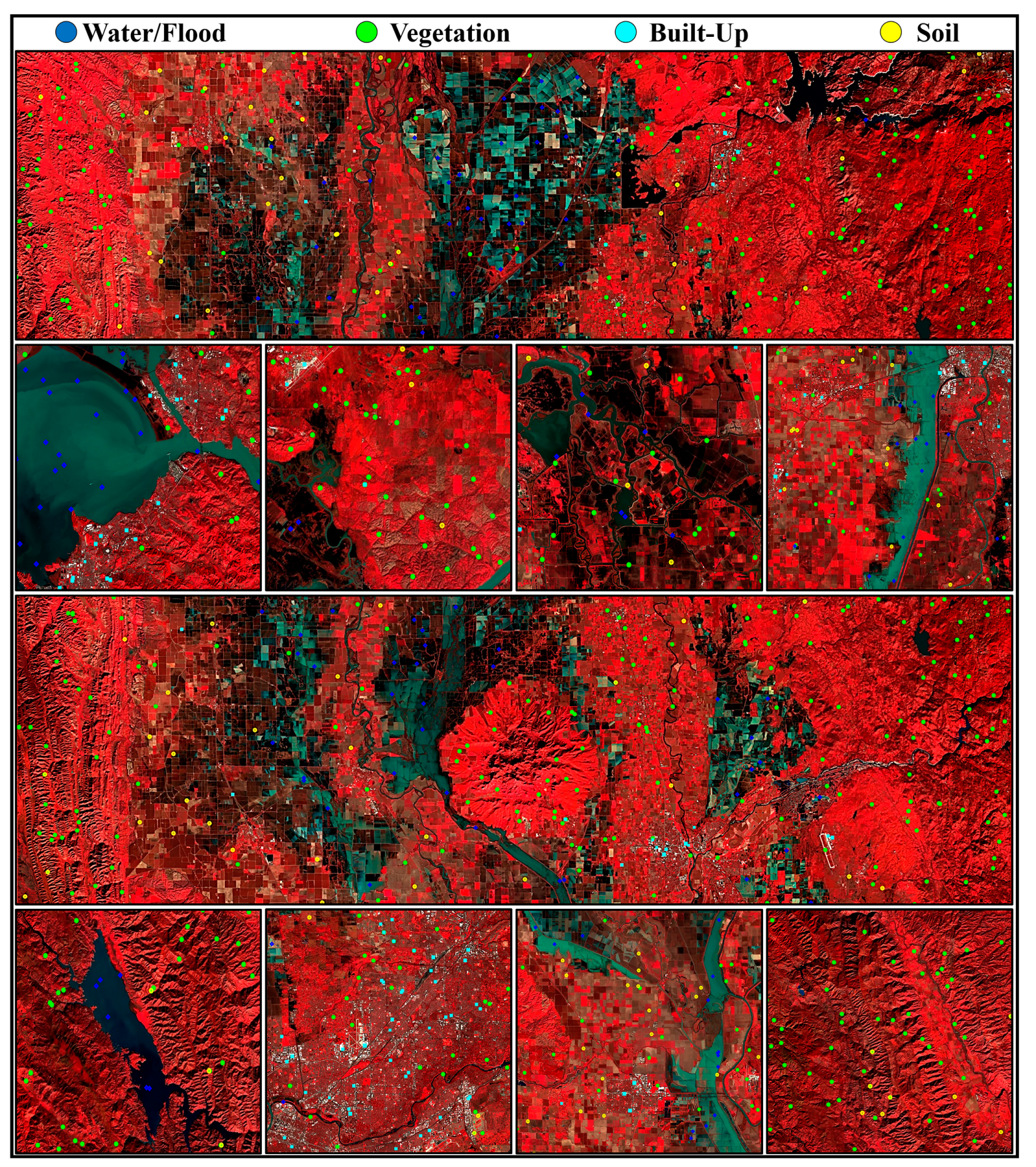

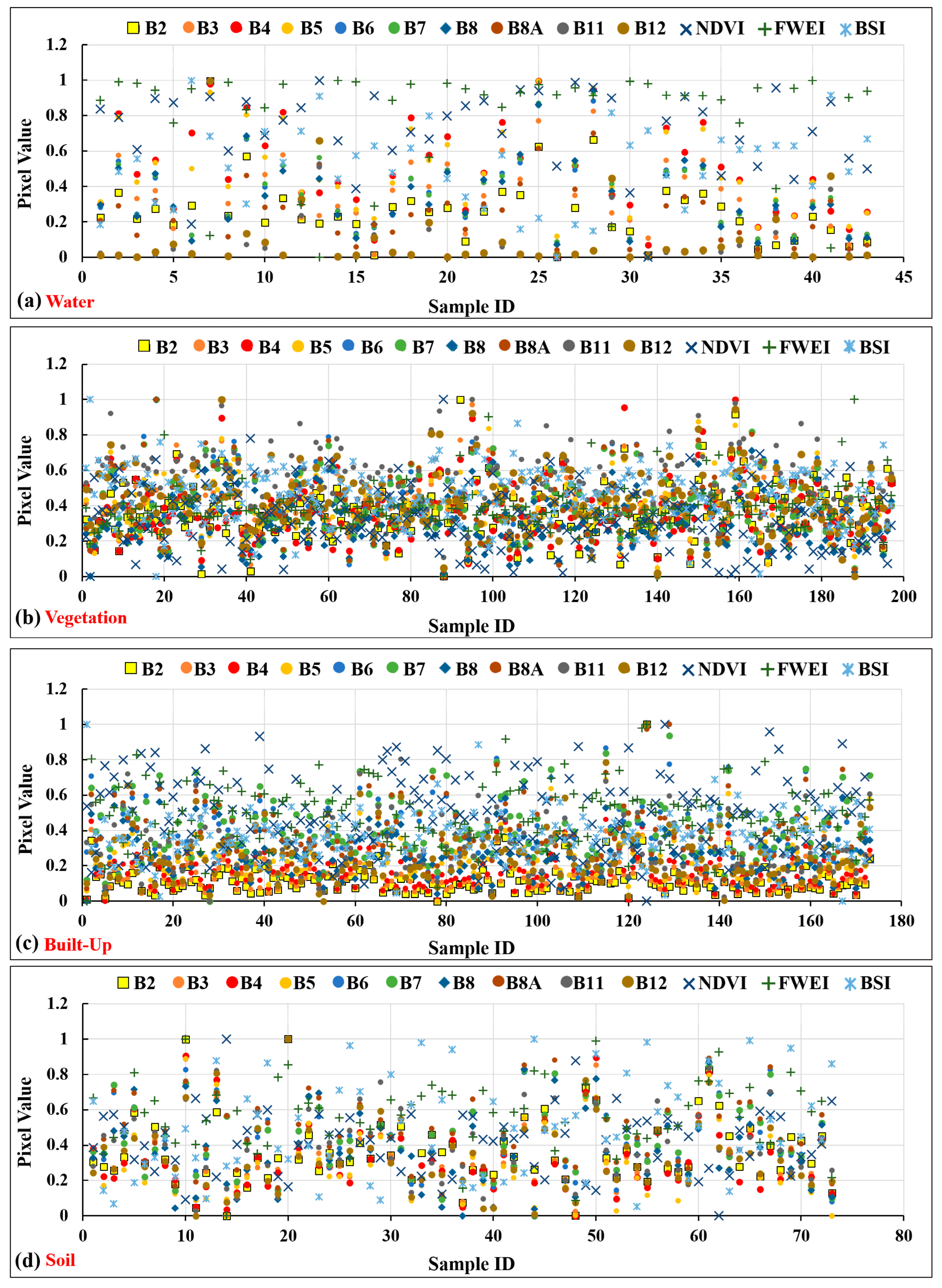

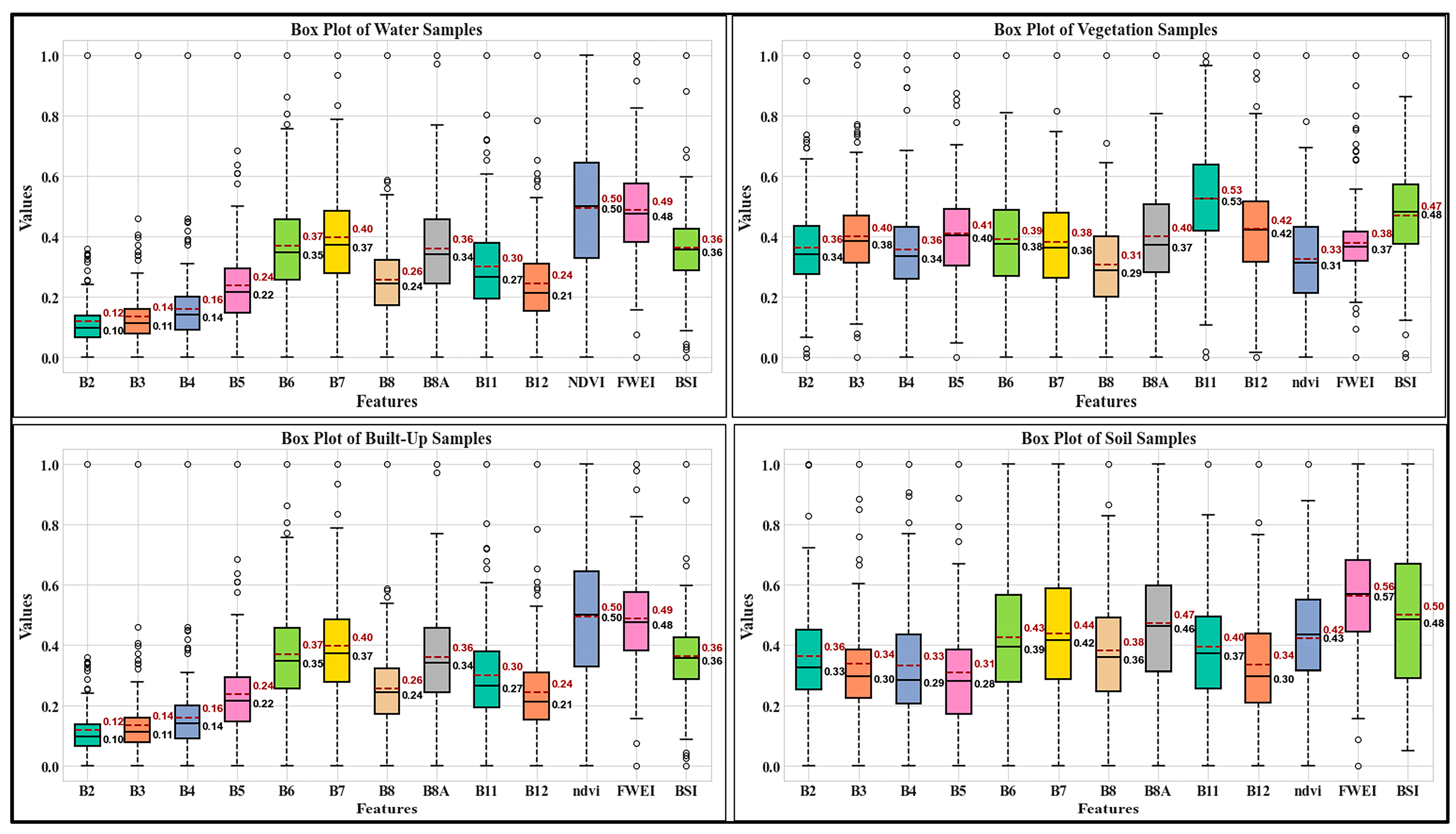

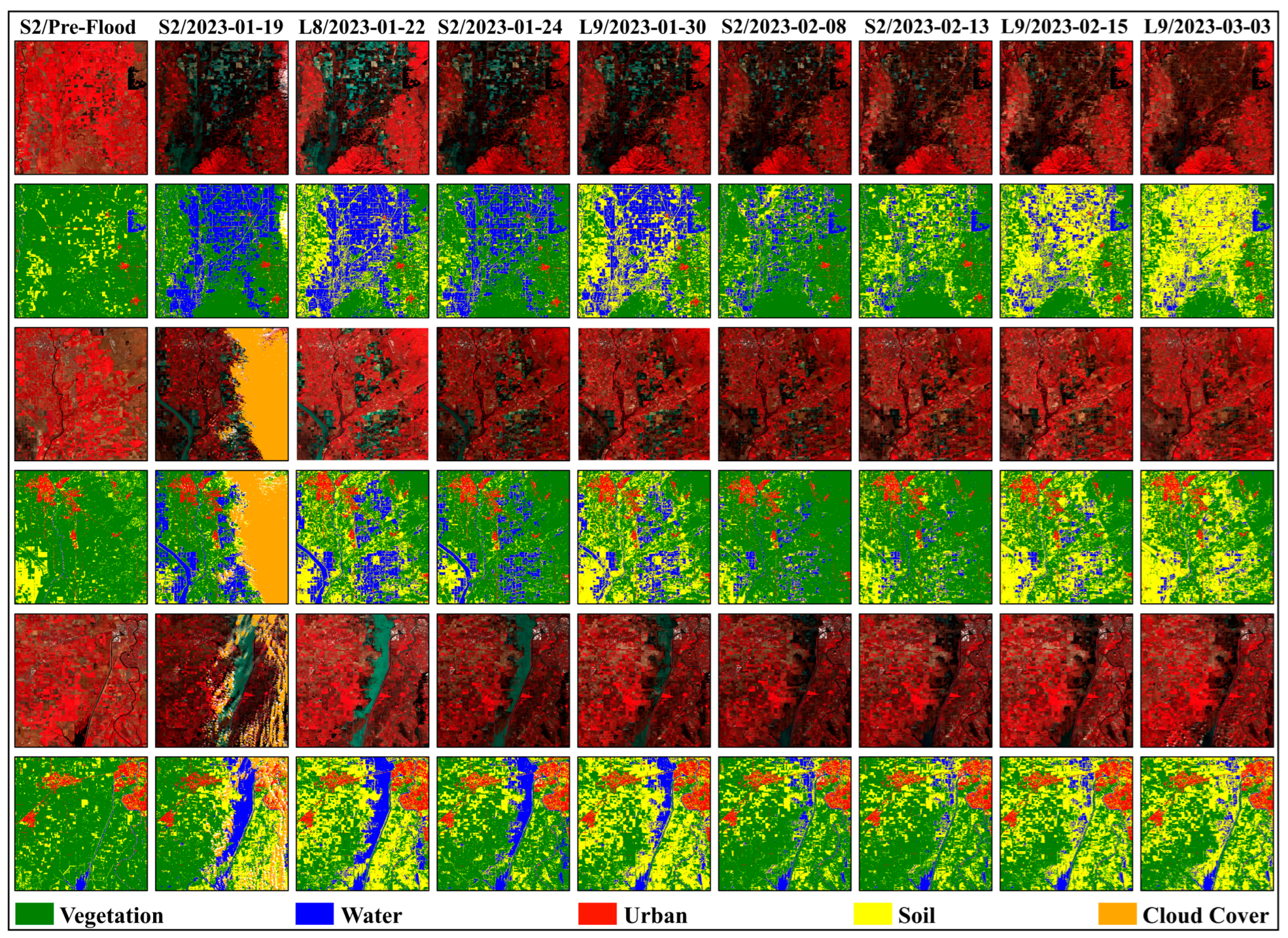

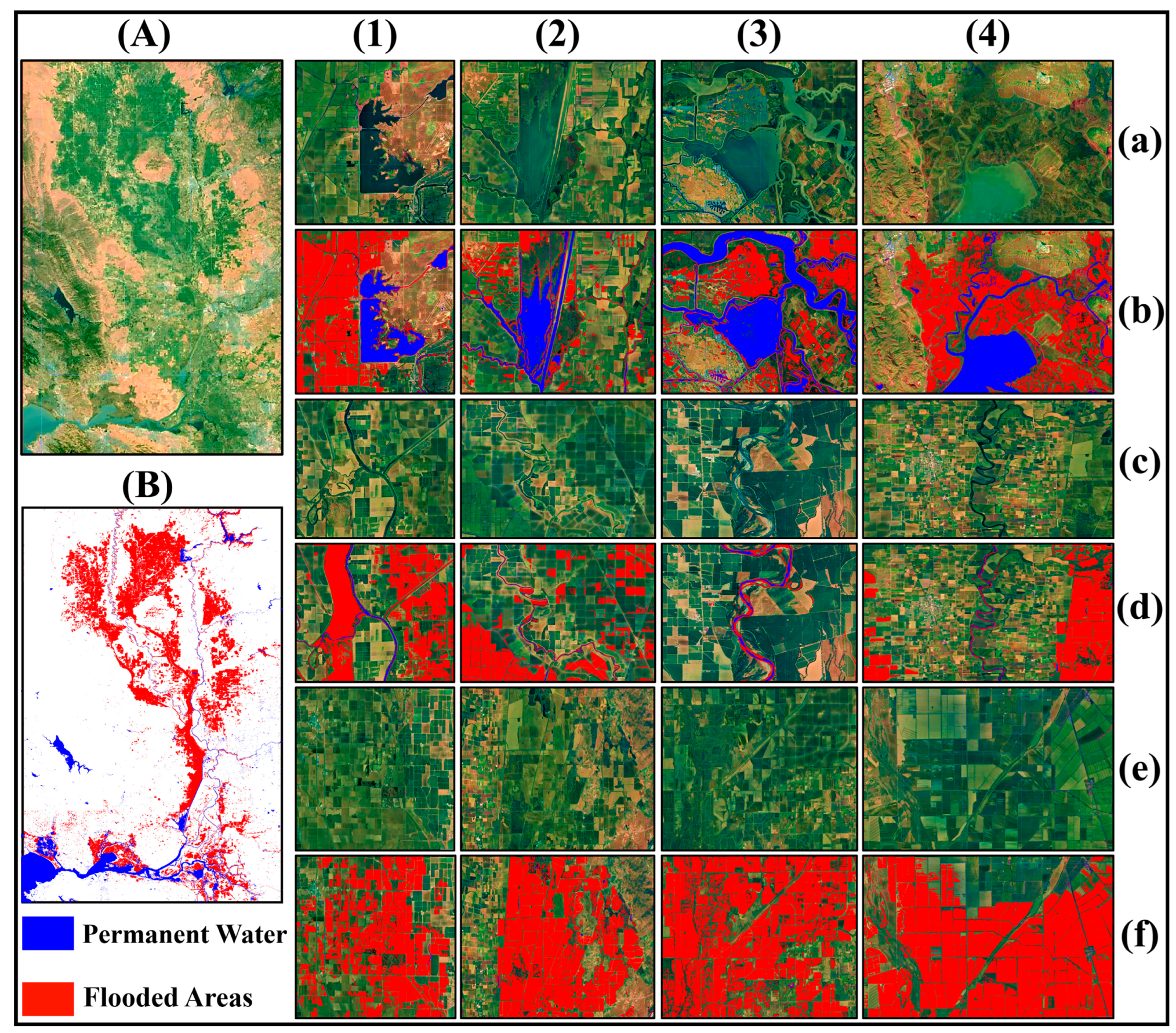

4.1. Result of Training Samples Generation

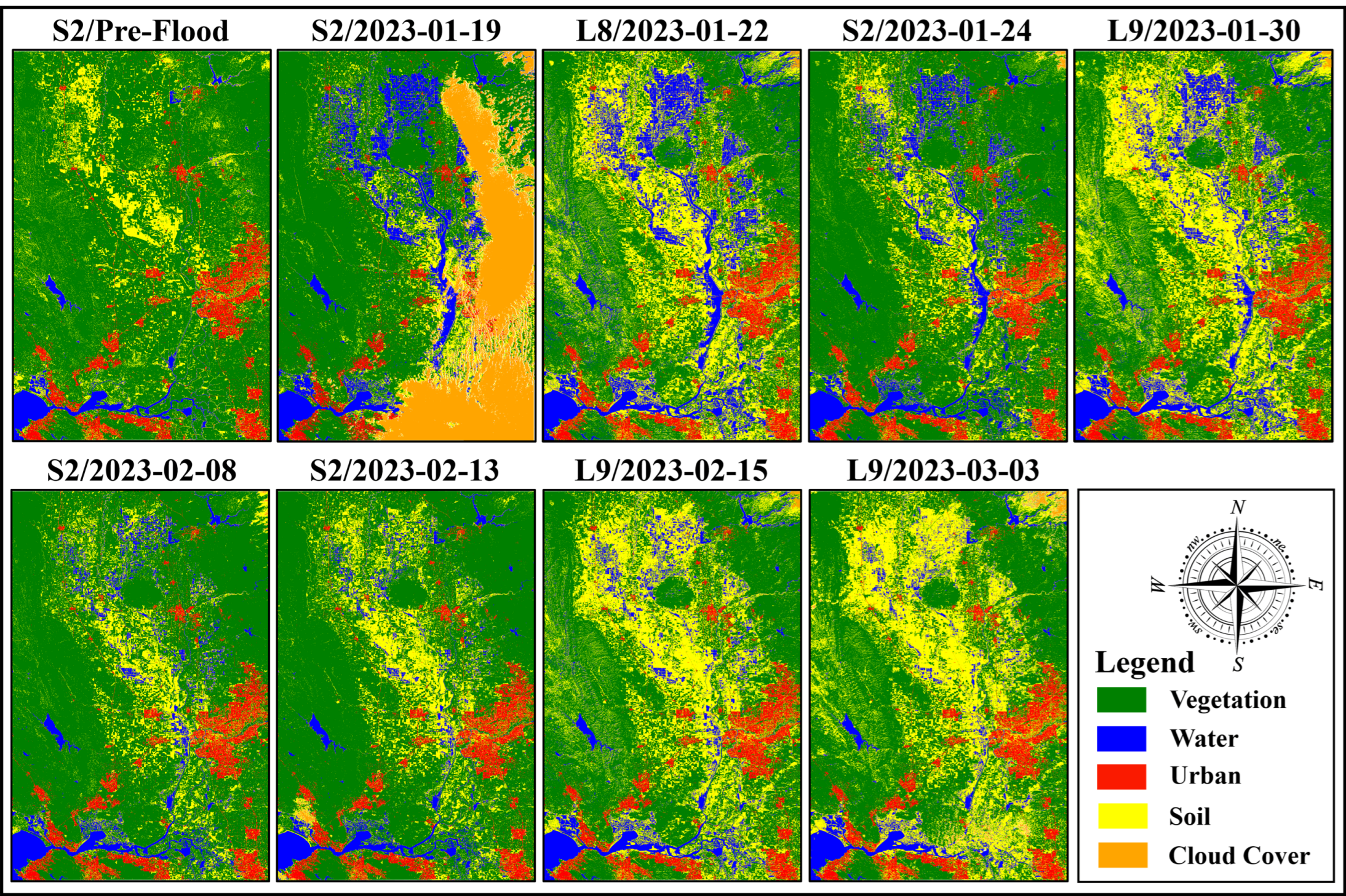

4.2. Result of Time-Series Classification Map

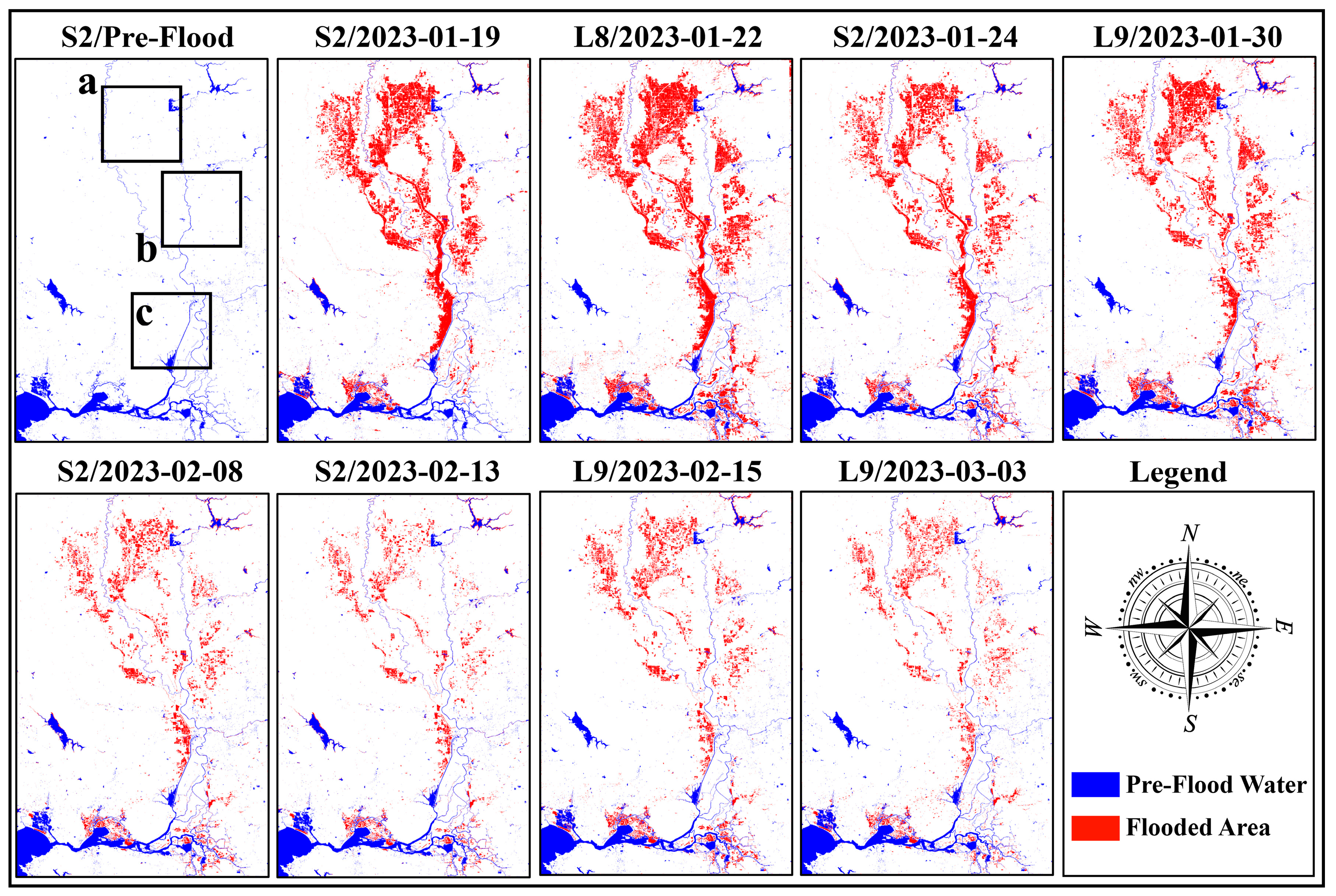

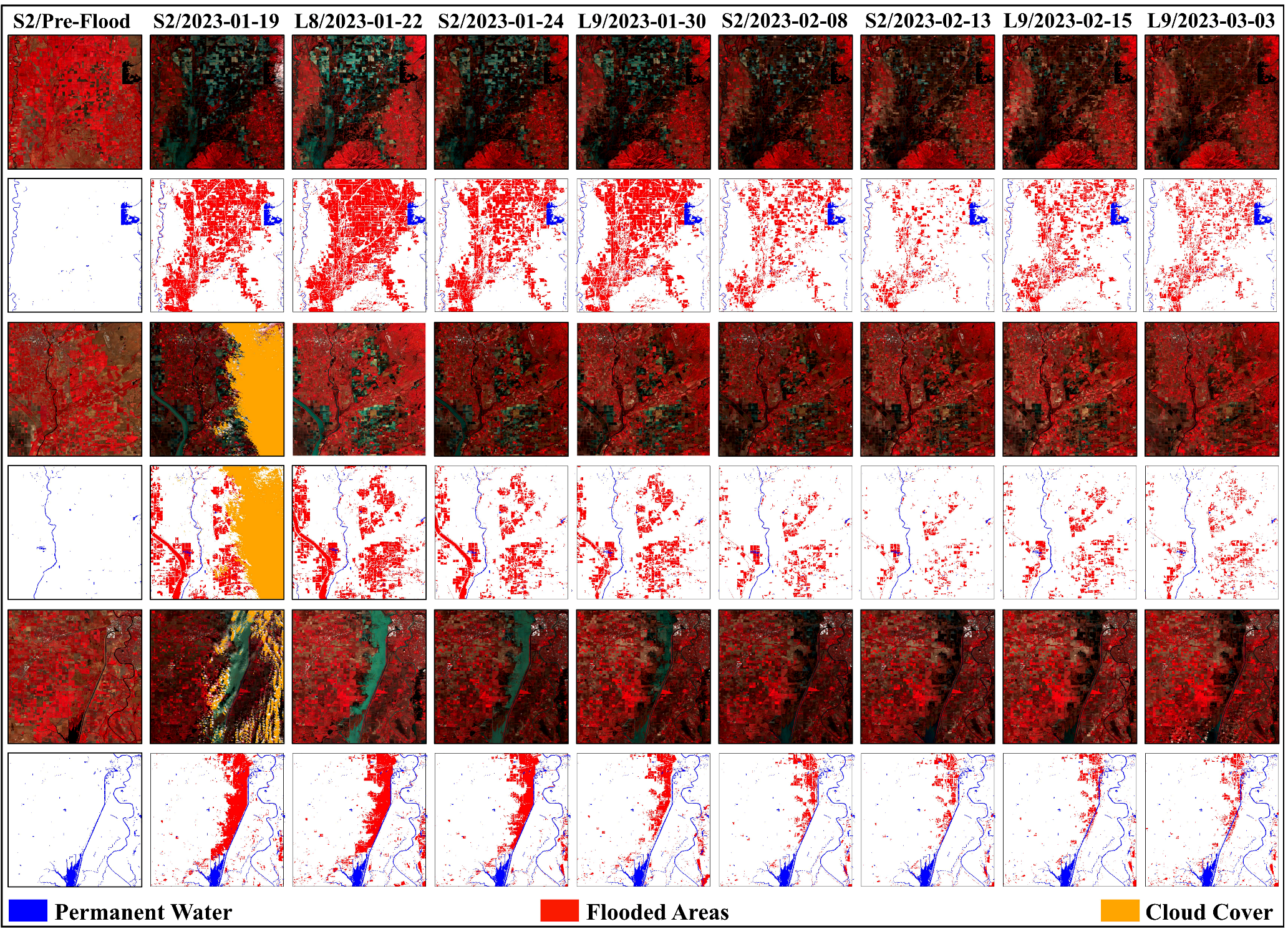

4.3. Result of NRT Flood Mapping

4.4. Extraction of Water and Flooded Areas Using the Proposed Method

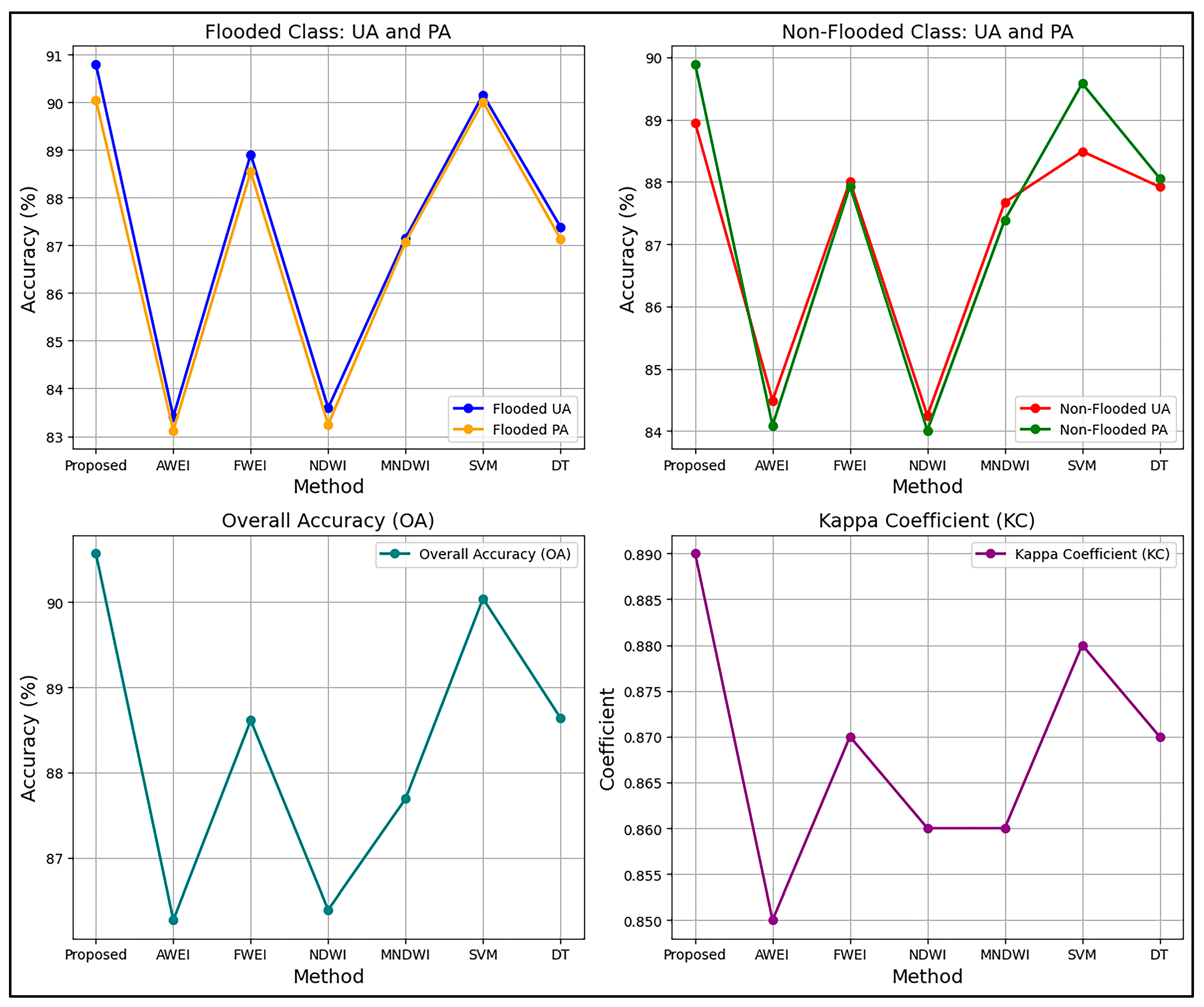

4.5. Accuracy Assessment of the Results

4.5.1. Land Cover Classification Accuracy Assessment

4.5.2. Flood Detection Accuracy Assessment

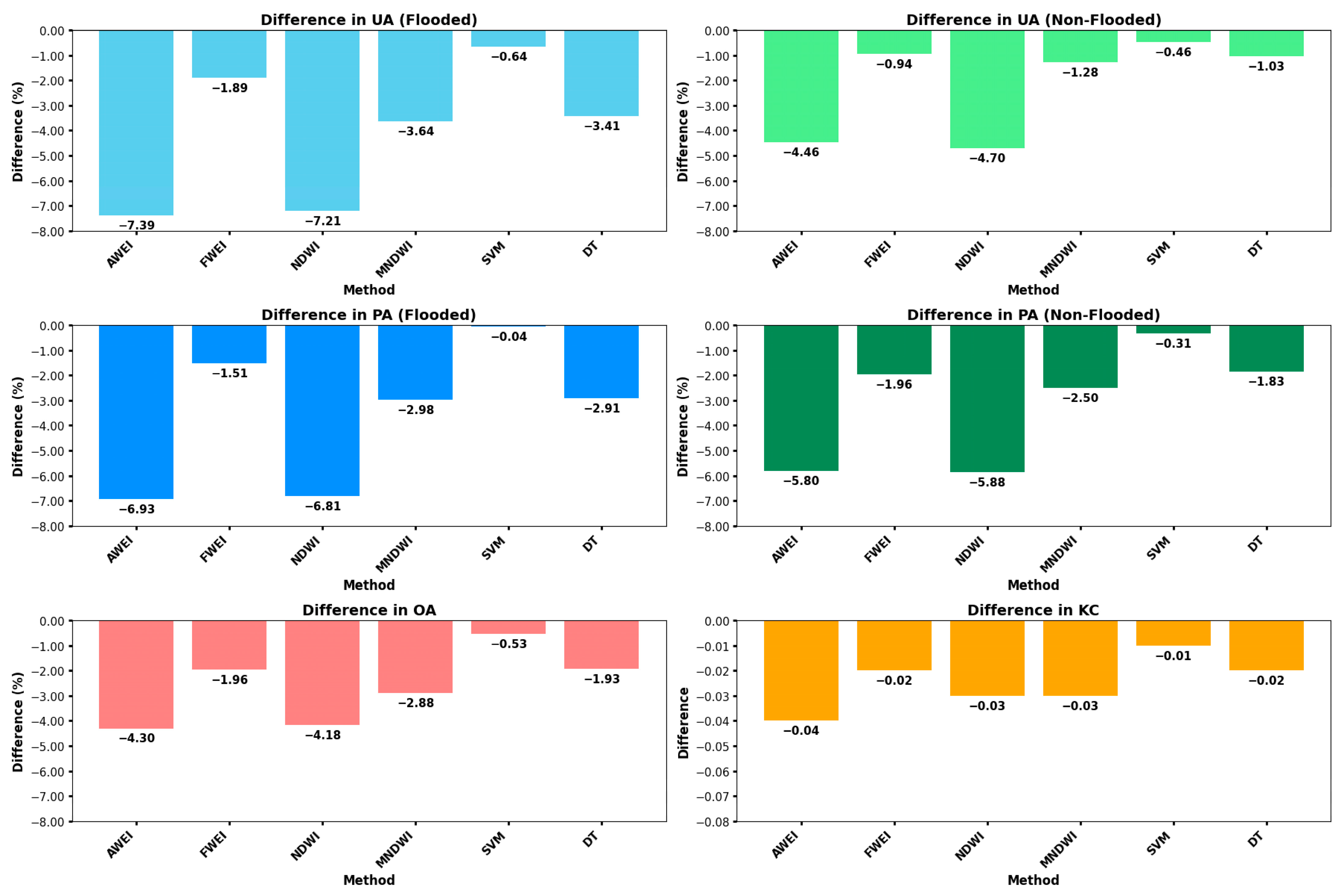

4.5.3. Comparing the Accuracy of the Proposed Method and Other Methods

4.6. Impact of Training Sample Size on Classification Accuracy Metrics

4.7. Advantages and Limitations of the Proposed Method

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Image Scene | Class | UA (%) | PA (%) | OA (%) | KC |
|---|---|---|---|---|---|
| S2-2023/01/24 | Flood/Water | 91.23 | 92.42 | 89.96 | 0.89 |
|  | Vegetation | 90.14 | 90.17 |  |  |
|  | Built-Up | 68.64 | 69.12 |  |  |
|  | Soil | 79.34 | 80.05 |  |  |
| L8-2023/01/22 | Flood/Water | 90.58 | 90.87 | 87.68 | 0.86 |
|  | Vegetation | 89.93 | 88.99 |  |  |
|  | Built-Up | 66.49 | 67.06 |  |  |
|  | Soil | 79.64 | 79.85 |  |  |
| L9-2023/02/15 | Flood/Water | 90.05 | 90.59 | 88.84 | 0.88 |
|  | Vegetation | 90.43 | 90.62 |  |  |
|  | Built-Up | 69.26 | 70.09 |  |  |
|  | Soil | 79.81 | 80.28 |  |  |

UA = user's accuracy, PA = producer's accuracy, OA = overall accuracy, KC = kappa coefficient; OA and KC are reported once per image scene.
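The UA, PA, OA and KC values follow the standard confusion-matrix definitions. A minimal sketch, assuming rows hold the reference (test sample) classes and columns hold the predicted classes; the function name and the toy matrix are illustrative and are not the study's data.

```python
from typing import Dict, List


def accuracy_metrics(cm: List[List[int]]) -> Dict[str, object]:
    """cm[i][j] = number of reference samples of class i predicted as class j."""
    k = len(cm)
    n = sum(sum(row) for row in cm)
    diag = [cm[i][i] for i in range(k)]
    ref_tot = [sum(cm[i]) for i in range(k)]                        # row totals
    pred_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # column totals

    # Producer's accuracy: correct / reference total (complement of omission error).
    pa = [diag[i] / ref_tot[i] if ref_tot[i] else 0.0 for i in range(k)]
    # User's accuracy: correct / predicted total (complement of commission error).
    ua = [diag[j] / pred_tot[j] if pred_tot[j] else 0.0 for j in range(k)]
    oa = sum(diag) / n
    # Expected chance agreement, then Cohen's kappa.
    pe = sum(ref_tot[i] * pred_tot[i] for i in range(k)) / (n * n)
    kappa = (oa - pe) / (1 - pe) if pe != 1 else float("nan")  # undefined if pe == 1
    return {"UA": ua, "PA": pa, "OA": oa, "KC": kappa}


# Toy 2-class matrix: rows = reference (flooded, non-flooded), columns = predicted.
m = accuracy_metrics([[45, 5], [10, 40]])
print(f"OA={m['OA']:.2f}, KC={m['KC']:.2f}")  # OA=0.85, KC=0.70
```

Multiplying UA, PA and OA by 100 gives the percentage form used in the tables.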

| Image Scene | Class | UA (%) | PA (%) | OA (%) | KC |
|---|---|---|---|---|---|
| S2-2023/01/24 | Flooded | 92.24 | 92.58 | 92.03 | 0.91 |
|  | Non-Flooded | 89.39 | 90.61 |  |  |
| L8-2023/01/22 | Flooded | 90.19 | 90.49 | 89.14 | 0.88 |
|  | Non-Flooded | 88.66 | 89.63 |  |  |
| L9-2023/02/15 | Flooded | 89.97 | 90.09 | 90.54 | 0.89 |
|  | Non-Flooded | 88.81 | 89.42 |  |  |
| Average | Flooded | 90.8 | 90.05 | 90.57 | 0.89 |
|  | Non-Flooded | 88.95 | 89.89 |  |  |

| Method | Class | UA (%) | PA (%) | OA (%) | KC |
|---|---|---|---|---|---|
| Proposed (Average) | Flooded | 90.8 | 90.05 | 90.57 | 0.89 |
|  | Non-Flooded | 88.95 | 89.89 |  |  |
| AWEI | Flooded | 83.41 | 83.12 | 86.27 | 0.85 |
|  | Non-Flooded | 84.49 | 84.09 |  |  |
| FWEI | Flooded | 88.91 | 88.54 | 88.61 | 0.87 |
|  | Non-Flooded | 88.01 | 87.93 |  |  |
| NDWI | Flooded | 83.59 | 83.24 | 86.39 | 0.86 |
|  | Non-Flooded | 84.25 | 84.01 |  |  |
| MNDWI | Flooded | 87.16 | 87.07 | 87.69 | 0.86 |
|  | Non-Flooded | 87.67 | 87.39 |  |  |
| SVM | Flooded | 90.16 | 90.01 | 90.04 | 0.88 |
|  | Non-Flooded | 88.49 | 89.58 |  |  |
| DT | Flooded | 87.39 | 87.14 | 88.64 | 0.87 |
|  | Non-Flooded | 87.92 | 88.06 |  |  |
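The water-index baselines in this comparison have standard published definitions, sketched below for single-pixel surface-reflectance values. NDWI is assumed to be the green/NIR form of McFeeters (1996), MNDWI the green/SWIR1 form of Xu (2006), and AWEI the formulas of Feyisa et al. (2014). Which AWEI variant and which thresholds were used is not stated here, so both variants are shown, and the zero threshold in the usage lines is only the conventional default.

```python
def _nd(a: float, b: float) -> float:
    """Normalized difference (a - b) / (a + b); 0.0 when the denominator is zero."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def ndwi(green: float, nir: float) -> float:
    # McFeeters NDWI: water reflects more green than NIR.
    return _nd(green, nir)


def mndwi(green: float, swir1: float) -> float:
    # Xu MNDWI: SWIR1 replaces NIR to suppress built-up noise.
    return _nd(green, swir1)


def awei_nsh(green: float, nir: float, swir1: float, swir2: float) -> float:
    # AWEI without shadow handling.
    return 4 * (green - swir1) - (0.25 * nir + 2.75 * swir2)


def awei_sh(blue: float, green: float, nir: float, swir1: float, swir2: float) -> float:
    # AWEI variant designed to also suppress shadow pixels.
    return blue + 2.5 * green - 1.5 * (nir + swir1) - 0.25 * swir2


# Hypothetical reflectances for an open-water pixel.
w = {"blue": 0.08, "green": 0.10, "nir": 0.03, "swir1": 0.01, "swir2": 0.005}
print(ndwi(w["green"], w["nir"]) > 0)  # True
print(mndwi(w["green"], w["swir1"]) > 0)  # True
print(awei_nsh(w["green"], w["nir"], w["swir1"], w["swir2"]) > 0)  # True
print(awei_sh(w["blue"], w["green"], w["nir"], w["swir1"], w["swir2"]) > 0)  # True
```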

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Farhadi, H.; Ebadi, H.; Kiani, A.; Asgary, A. Near Real-Time Flood Monitoring Using Multi-Sensor Optical Imagery and Machine Learning by GEE: An Automatic Feature-Based Multi-Class Classification Approach. Remote Sens. 2024, 16, 4454. https://doi.org/10.3390/rs16234454