Abstract

Improving the detection of small objects in remote sensing imagery is essential for its extensive use in many applications. The small size of these objects, coupled with the complex backgrounds of remote sensing images, complicates detection. Moreover, operations such as downsampling during feature extraction can cause a significant loss of spatial information for small objects, reducing detection accuracy. To tackle these issues, we propose ESL-YOLO, which combines feature enhancement, feature fusion, and a local attention pyramid. The model includes (1) an innovative plug-and-play feature enhancement module that incorporates multi-scale local contextual information to improve small object detection; (2) a spatial-context-guided multi-scale feature fusion framework that effectively integrates shallow features, thereby minimizing spatial information loss; and (3) a local attention pyramid module that suppresses background noise while highlighting small object characteristics. Evaluations on the publicly available remote sensing datasets AI-TOD and DOTAv1.5 show that ESL-YOLO significantly outperforms other contemporary object detection frameworks. In particular, compared with YOLOv8s, ESL-YOLO improves the mean average precision (mAP) by 10% and 1.1% on the AI-TOD and DOTAv1.5 datasets, respectively. The model is particularly well suited to small object detection in remote sensing imagery and holds significant potential for practical applications.

1. Introduction

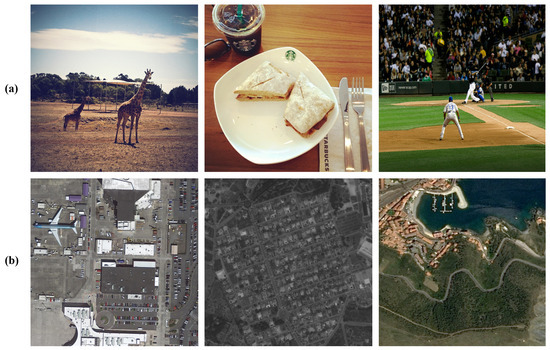

With the swift progress of remote sensing technology and the growing volume of remote sensing image data, object detection has found extensive applications across various domains [1,2,3,4,5], including urban monitoring [6], aerospace [7], and early forest fire detection [8]. Improving the effectiveness of object detection is essential to fulfill the practical requirements of these areas. In natural image datasets like COCO [9] and ImageNet [10], objects tend to be larger and are usually set against clearer backgrounds, offering rich feature representation for effective object detection, as shown in the upper section of Figure 1. Consequently, numerous leading object detection algorithms have achieved strong performance on these datasets. Conversely, remote sensing images frequently present more intricate backgrounds and include many small objects (smaller than 32 × 32 pixels) and tiny objects (smaller than 16 × 16 pixels) [4,11], as shown in the lower section of Figure 1. The complexity of the background and the prevalence of small objects significantly hinder the effectiveness of object detection.

Figure 1.

Comparison of natural images and remote sensing images: (a) natural images; (b) remote sensing images.
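The small and tiny size thresholds quoted above can be made concrete with a small helper. This is a minimal sketch that assumes the 16 × 16 and 32 × 32 limits are applied to box area (the COCO convention); some papers compare side lengths instead. The function name `size_category` is hypothetical.

```python
def size_category(width: float, height: float) -> str:
    """Label a box as 'tiny', 'small', or 'larger' by its area in pixels.

    Assumption: thresholds are applied to area (w * h), as in the COCO convention.
    Tiny objects are a subset of small objects; the most specific label is returned.
    """
    area = width * height
    if area < 16 * 16:
        return "tiny"
    if area < 32 * 32:
        return "small"
    return "larger"


if __name__ == "__main__":
    for w, h in [(10, 12), (20, 30), (16, 16), (40, 40)]:
        print((w, h), size_category(w, h))
```

Note that a box of exactly 16 × 16 pixels falls into the "small" bucket because the thresholds are strict ("smaller than").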

Recently, notable progress has been achieved in the detection of larger objects within remote sensing images. Nonetheless, detecting small objects remains a significant challenge. Conventional object detection algorithms that depend on handcrafted features like HOG [12] and SIFT [13] are limited in their ability to capture object details and lack adaptive learning capabilities, making them unsuitable for small object detection tasks.

The advent of deep learning has led to the widespread adoption of convolutional neural network-based object detection methods, thanks to their strong representation learning abilities. Detection methods can be categorized into two types: two-stage and one-stage detection. Two-stage detection approaches begin by generating candidate regions, then extract features via convolutional neural networks, followed by classification and bounding box regression. Common examples include R-CNN [14], Fast R-CNN [15], and Faster R-CNN [16]. However, the need for real-time processing in contemporary applications has led to the development of one-stage detection methods. The YOLO series reframes object detection as a single regression problem, accomplishing both bounding box regression and object classification in one forward pass. YOLOv1 [17] provides faster inference than two-stage detectors, but it is less effective at capturing details. YOLOv2 [18] improves multi-scale detection accuracy by introducing an anchor box mechanism, though its performance against complicated backgrounds is still limited. YOLOv3 [19] advances this by integrating residual network architectures and multi-scale prediction, boosting performance but increasing model complexity. YOLOv4 [20] further refines the network design with techniques such as Mosaic data augmentation and the CIoU loss function, improving detection accuracy but raising computational costs. In contrast, YOLOv5 employs a lighter architecture, built on optimized training strategies and a restructured model, which improves detection accuracy while enabling rapid deployment. The later YOLOv6 [21] and YOLOv7 [22] versions further refined the algorithms and training approaches. YOLOv8, a leading one-stage object detection model, continues the trend of lightweight design and fast deployment, enabling real-time detection while outperforming earlier versions in both performance and scalability.
Consequently, we have chosen YOLOv8s as the foundational framework for our small object detection model.

The lack of small object information and the interference from intricate backgrounds in remote sensing images present considerable challenges for small object detection. Additionally, as the depth of the feature extraction backbone network increases, operations like downsampling and pooling may result in the loss of spatial location information for numerous small objects [23], negatively impacting the accuracy of bounding box regression. Thus, this study aims to develop an enhanced model based on YOLOv8 to improve the accuracy of small object detection in remote sensing images, addressing practical application needs.
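A back-of-the-envelope calculation illustrates this loss. Each stride-s stage maps an s × s pixel region to one feature-map cell, so an object's footprint shrinks by the stride. The sketch below assumes a hypothetical 12-pixel object (close to the average AI-TOD object size mentioned in Section 4.2) and strides from 4 to 32; 8, 16, and 32 are the strides of YOLOv8's three detection levels. The function name is hypothetical.

```python
def footprint_cells(object_side_px: float, stride: int) -> float:
    """Side length, in feature-map cells, of an object after downsampling by `stride`."""
    return object_side_px / stride


if __name__ == "__main__":
    side = 12  # hypothetical object size in pixels
    for stride in (4, 8, 16, 32):
        cells = footprint_cells(side, stride)
        print(f"stride {stride:>2}: {cells:.3f} cells per side")
```

At stride 32 such an object covers well under half a cell per side, so its position can only be encoded coarsely, which is why shallower, higher-resolution features matter for small objects.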

We aim to mitigate the issues of insufficient small object information and loss of spatial information during feature extraction through enhancement and fusion of features. We also propose a background suppression module to reduce the impact of complex backgrounds. For feature enhancement, we introduce an effective feature enhancement module (EFEM) that employs multi-branch separable convolutions for feature extraction and integrates receptive field information across multiple scales to improve small object perception. Regarding feature fusion, we develop a multi-scale fusion architecture (SCGBiFPN) that combines shallow features rich in spatial information and incorporates skip connections to enhance feature transmission across various levels. Lastly, we present a background suppression module (LAPM) that concentrates on local features and utilizes normalization and activation functions to minimize interference from complex backgrounds, employing an attention mechanism for local areas of different sizes organized in a pyramid structure.

The main contributions of this paper consist of the following:

- (1) We present EFEM, an effective feature enhancement module designed to address the issue of insufficient small object information. By integrating local features across various scales, this module enhances the perception of small objects and boosts detection accuracy.

- (2) We propose a novel multi-scale feature fusion architecture, SCGBiFPN, which integrates shallow features abundant in spatial context information to reduce spatial information loss for small objects. The addition of skip connections facilitates smoother feature transmission across different levels, retaining more spatial and semantic information.

- (3) We are the first to incorporate LAPM, a background suppression module, into the YOLO model, effectively diminishing the impact of complex backgrounds and significantly enhancing small object detection performance.

2. Related Works

2.1. Feature Enhancement Method

Detecting small objects challenges the representation learning capabilities of deep learning networks. To tackle this issue, researchers have investigated feature enhancement strategies to enrich information capture. Cheng et al. [24] applied spatial and channel attention mechanisms prior to feature fusion, significantly augmenting both spatial and semantic information. Yi et al. [25] introduced CloAttention, which integrates global and local attention mechanisms to enhance the backbone network’s feature extraction, effectively focusing on both global and local details. Zhang et al. [26] improved feature representation along both the spatial and channel dimensions. Shen et al. [5] applied a coordinate attention mechanism at different feature levels, which strengthened contextual relationships and improved the network’s ability to capture features at multiple scales. Li et al. [27] combined self-attention and cross-attention mechanisms to effectively capture object context and correlations. Tang et al. [28] adopted the CBAM hybrid attention mechanism to emphasize critical features during feature extraction. Wang et al. [29] employed the RFB module to enhance the extraction of global contextual information by expanding the model’s receptive field.

Furthermore, other studies employed multi-branch deep convolutional networks to capture multi-scale contexts and emphasize local information [30]. Large kernel selection networks were also developed to adaptively adjust kernel sizes based on object scale [31], integrating background information to improve feature representation.

2.2. Feature Fusion Method

Precise localization of small objects depends on sufficient spatial information. However, as feature extraction networks deepen, the spatial information of small objects often diminishes. To counteract this, researchers have used multi-scale feature fusion techniques to improve feature integrity and minimize information loss. Lin et al. [32] first proposed the feature pyramid network (FPN), which significantly improves object detection performance by fusing features from different levels. Subsequently, numerous FPN variants with optimized network architectures and weighting strategies have been developed, such as PANet [33], NAS-FPN [34], and BiFPN [35], all of which have achieved effective detection results. For small object detection, several researchers have optimized feature fusion structures in various ways. Wang et al. [36] applied a query mechanism to shallow features to extract coarse features relevant to small objects, followed by a self-sparse fusion operation to integrate the related features, thereby preserving more information about small objects. Zhang et al. [37] enhanced the fusion of feature information by combining deformable convolutions with a coordinate attention mechanism. Li et al. [38] designed an effective feature fusion module that balances the use of local details and global contextual information, facilitating the efficient capture of fine details for small objects. Jiang et al. [39] and Li et al. [40] advanced feature fusion by incorporating multi-scale features and adopting an effective BiFPN fusion architecture, respectively. Thus, a well-designed multi-scale fusion structure is essential for enhancing object detection performance, which prompted us to design the SCGBiFPN fusion structure tailored to small object detection.

Additionally, some researchers have transformed standard downsampling convolutions into depthwise convolutions [23] and constructed object reconstruction modules [41] to preserve more feature information for detection tasks. Nonetheless, these approaches often lead to increased complexity in model training.

2.3. Background Suppression Method

Complex background interference significantly affects the accuracy of small object detection. To tackle this challenge, Zhang et al. [42] modeled foreground information to lessen the impact of intricate background features. Wang et al. [43] created a visual attention network that connects the feature extraction and classification/regression networks, enhancing object features while reducing background distractions. Ma et al. [44] proposed the Object-Specific Enhancement (OSE) strategy, which aims to strengthen object features. Fan et al. [45] improved the recognition of small objects in complex backgrounds by using large kernel depthwise convolutions to capture broad contextual information. Dong et al. [46] proposed a background separation strategy to highlight features of interest and suppress the influence of background noise. Zhao et al. [47] introduced a scene-context-guided feature enhancement module to reduce background noise and emphasize the characteristics of small objects.

Although these approaches effectively mitigate background interference, they often involve numerous trainable parameters and predominantly address the problem from a global feature perspective, overlooking the local features of small objects. In response, we introduce the local attention pyramid (LAP) [48], which adds no trainable parameters. LAP divides the feature map into multiple patches and performs background suppression and object enhancement within each patch, thereby focusing on the local characteristics of small objects.

3. Materials and Methods

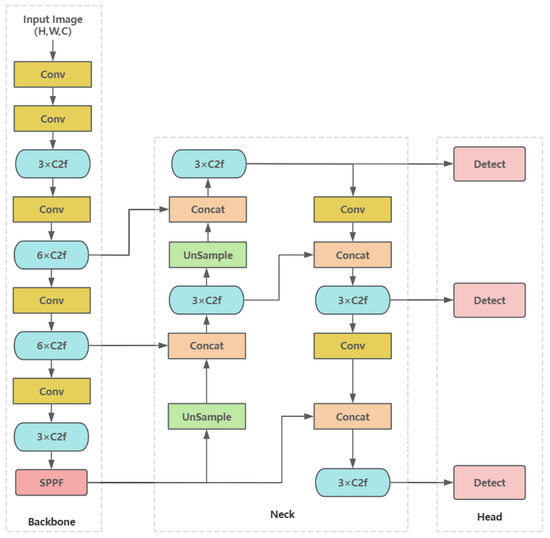

The YOLO series has undergone continuous development and refinement. Its efficiency, strong scalability, and outstanding performance in object detection have attracted considerable attention and extensive research. To meet the diverse needs of various tasks, researchers have developed several YOLO variants, facilitating its application across a wide range of fields. YOLOv8’s architecture consists of three primary components, the backbone, neck, and head, as illustrated in Figure 2. The backbone performs feature extraction and comprises downsampling convolutions, C2f modules, and an SPPF module. Although the C2f module improves YOLOv8’s backbone over that of YOLOv5, the backbone still lacks sufficient capability to extract features from small objects, which motivates our feature enhancement module. The neck employs a PA-FPN to perform multi-scale feature fusion; however, its effectiveness in small object detection leaves room for improvement. The detection head uses a decoupled design that separates object classification from localization, thereby enhancing detection accuracy. Although YOLOv8 performs strongly in many respects, opportunities for optimization remain. We therefore build on YOLOv8 and propose a feature enhancement module, a multi-scale feature fusion structure better suited to small object detection, and a background suppression module to enhance overall detection performance.

Figure 2.

Structure of YOLOv8.

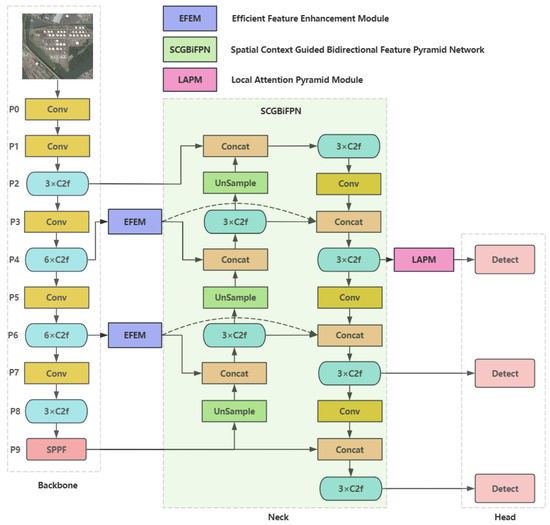

Despite the baseline YOLOv8 model’s strong performance on certain small object datasets, it has limitations in accurately detecting small objects in remote sensing images. To address these challenges, we developed an enhanced model tailored to this task, named ESL-YOLO, as illustrated in Figure 3. Given the small size, limited information, and restricted receptive field of small objects, the backbone network has difficulty capturing their features effectively. First, to overcome this, we proposed EFEM to process the outputs of the P4 and P6 layers of the backbone network before these features are integrated in the neck. This module enhances the backbone’s feature representations and the neck’s ability to capture high-level semantic information. Second, we observed that the shallow features of the backbone network are rich in spatial contextual information, while the deep features contain substantial semantic information. However, small object detection is particularly sensitive to spatial positional information due to complex background interference, and the original YOLOv8 neck does not fully utilize shallow spatial information to guide feature fusion. To address this, we redesigned the neck network and proposed the SCGBiFPN structure. This structure incorporates the output of the P2 layer of the backbone, which is rich in spatial contextual information, and fuses it with deep features that contain semantic information. Following fusion, three C2f modules are added to enrich spatial and semantic information, ensuring adequate spatial information in multi-scale feature fusion to improve small object detection. Third, because noise from complex remote sensing backgrounds frequently impairs small object detection, we added LAPM before the detection head, effectively reducing background interference and enhancing accuracy.

Figure 3.

Structure of ESL-YOLO.
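The P2, P4, P6, and P9 names used here appear to refer to layer indices of the stock YOLOv8 backbone, whose layers 2, 4, 6, and 9 output feature maps at strides 4, 8, 16, and 32. Under that assumption (our reading, not stated explicitly in the text), the sketch below computes the spatial size of each map for the 800 × 800 AI-TOD training resolution given in Section 4.1, showing how much finer the P2 map is than the deepest one. `LAYER_STRIDE` and `feature_map_sizes` are hypothetical names.

```python
# Assumed mapping: backbone layer index -> downsampling stride in stock YOLOv8.
LAYER_STRIDE = {"P2": 4, "P4": 8, "P6": 16, "P9": 32}


def feature_map_sizes(input_size: int) -> dict:
    """Side length of each backbone output map for a square input image."""
    return {name: input_size // stride for name, stride in LAYER_STRIDE.items()}


if __name__ == "__main__":
    sizes = feature_map_sizes(800)
    print(sizes)
    # How many times finer each map is than the deepest (P9) map, per side
    print({name: side // sizes["P9"] for name, side in sizes.items()})
```

Under this reading, the P2 map keeps eight times more resolution per side than P9, which is the spatial detail SCGBiFPN feeds into the fusion.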

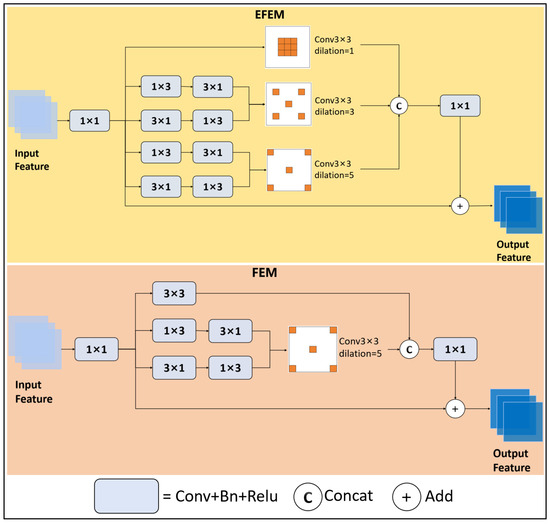

3.1. Effective Feature Enhancement Module

To tackle the challenges arising from the limited information carried by small objects, the insufficient receptive field of traditional models, and the limitations of YOLOv8 in capturing small object features, we proposed an Effective Feature Enhancement Module (EFEM), inspired by the FEM structure [49], as shown in Figure 4. This study focuses on enhancing features by enriching them and expanding receptive fields at multiple scales. EFEM extracts rich semantic information using multi-branch 1 × 3 and 3 × 1 convolutions. Because small objects carry limited information, concentrating on their local information is vital for improving detection. Although the dilated convolutions in FEM effectively enhance features, their capacity to capture local details is still limited: dilated convolutions with large dilation rates cannot focus adequately on the fine-grained details of small objects. EFEM addresses this issue by employing dilated convolutions with smaller dilation rates and integrating multi-scale local contextual information.

Figure 4.

Structures of EFEM and FEM.

In EFEM, a 1 × 1 standard convolution is first applied in each branch to adjust the number of input channels for subsequent processing. A multi-branch architecture with dilated convolutions then extracts discriminative semantic features and captures multi-scale local information. Specifically, the first branch employs a 3 × 3 dilated convolution (dilation rate = 1). The second and third branches use 1 × 3 and 3 × 1 convolutions, followed by a 3 × 3 dilated convolution (dilation rate = 3), to extract richer feature information and capture local details. The fourth and fifth branches adopt analogous convolution combinations, ending with a 3 × 3 dilated convolution (dilation rate = 5). The branch outputs are fused and passed through a 1 × 1 convolution for further channel modeling. To preserve the original contextual information, EFEM incorporates a residual connection that adds the input features to the multi-branch output.
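To see how the branches cover different local scales, the sketch below computes each branch's receptive field. It assumes stride-1 convolutions, dilation 1 for the 1 × 1, 1 × 3, and 3 × 1 layers, and our reading of the branch layout: branches 2 and 4 use a 1 × 3 convolution and branches 3 and 5 a 3 × 1 convolution before the final dilated 3 × 3. A k-tap kernel with dilation d spans k + (k − 1)(d − 1) pixels. The branch names and helper functions are hypothetical.

```python
def effective_kernel(k: int, dilation: int) -> int:
    """Span of a k-tap kernel with the given dilation: k + (k - 1) * (d - 1)."""
    return k + (k - 1) * (dilation - 1)


def receptive_field(layers):
    """(height, width) receptive field of a stride-1 stack of (kh, kw, dilation) convs."""
    rf_h, rf_w = 1, 1
    for kh, kw, d in layers:
        rf_h += effective_kernel(kh, d) - 1
        rf_w += effective_kernel(kw, d) - 1
    return rf_h, rf_w


# Assumed EFEM branch layouts, each as (kernel_h, kernel_w, dilation) per layer.
BRANCHES = {
    "branch1": [(1, 1, 1), (3, 3, 1)],
    "branch2": [(1, 1, 1), (1, 3, 1), (3, 3, 3)],
    "branch3": [(1, 1, 1), (3, 1, 1), (3, 3, 3)],
    "branch4": [(1, 1, 1), (1, 3, 1), (3, 3, 5)],
    "branch5": [(1, 1, 1), (3, 1, 1), (3, 3, 5)],
}

if __name__ == "__main__":
    for name, layers in BRANCHES.items():
        print(name, receptive_field(layers))
```

In this reading the largest branch sees a 13 × 11 window, so every branch stays local, consistent with the text's aim of using small dilation rates to preserve fine-grained detail; fusing the branch outputs then mixes these scales.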

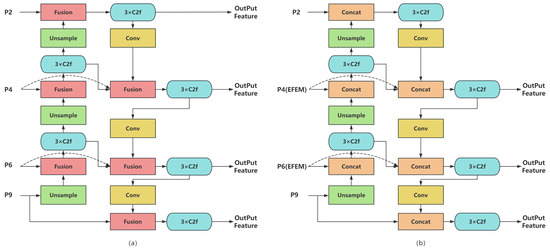

3.2. Spatial-Context-Guided Bidirectional Feature Pyramid Network

As backbone depth increases, spatial information for small objects is often lost. To mitigate this, we restructured YOLOv8’s original PA-FPN multi-scale fusion and introduced the Spatial-Context-Guided Bidirectional Feature Pyramid Network (SCGBiFPN), illustrated in Figure 5. SCGBiFPN includes two paths: bottom-up and top-down. In the bottom-up path, we fuse the P9 layer features from the backbone network with the enhanced P4 and P6 features, as well as the P2 features, which are rich in spatial context information. The fused features are then passed through the top-down path, ensuring that spatial context information flows throughout the entire pyramid network. By leveraging the top-level spatial information to enhance the pyramid structure, SCGBiFPN effectively shortens the information flow between the top and bottom levels. Inspired by the bidirectional connection strategy in BiFPN [35], we added skip connections to the P4 and P6 layers, allowing features to be transmitted directly across different levels. This design better preserves spatial and semantic information and enhances multi-scale feature fusion. Unlike BiFPN, SCGBiFPN neither adds a detection head for the P2 layer nor employs weighted fusion. This design choice aims to control model complexity while preserving more feature information.

Figure 5.

Structures of BiFPN and SCGBiFPN: (a) BiFPN; (b) SCGBiFPN.
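For contrast, the weighted fusion that SCGBiFPN leaves out is BiFPN's fast normalized fusion [35], O = Σ_i w_i · I_i / (ε + Σ_j w_j), where each learnable weight w_i is kept non-negative by a ReLU. The sketch below applies it to same-shaped inputs flattened to lists; ε = 1e-4 follows the BiFPN paper, and the function and variable names are hypothetical. It is not part of SCGBiFPN; the stock YOLOv8 neck instead concatenates its inputs along the channel dimension.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """BiFPN-style weighted fusion of same-shaped feature maps (flattened to lists).

    ReLU keeps each learnable weight non-negative; dividing by their sum plus eps
    keeps every normalized weight in [0, 1) without a softmax.
    """
    w = [max(0.0, wi) for wi in weights]  # ReLU on the raw weights
    norm = eps + sum(w)
    # zip(*inputs) walks all inputs position by position
    return [sum(wi * v for wi, v in zip(w, column)) / norm for column in zip(*inputs)]


if __name__ == "__main__":
    p_top = [1.0, 2.0]   # hypothetical upsampled deeper feature
    p_skip = [3.0, 4.0]  # hypothetical same-level skip feature
    print(fast_normalized_fusion([p_top, p_skip], [1.0, 1.0]))   # close to the mean
    print(fast_normalized_fusion([p_top, p_skip], [1.0, -0.5]))  # second input zeroed by ReLU
```

Dropping these weights, as SCGBiFPN does, removes one learnable scalar per fused input, which is consistent with the stated aim of controlling model complexity.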

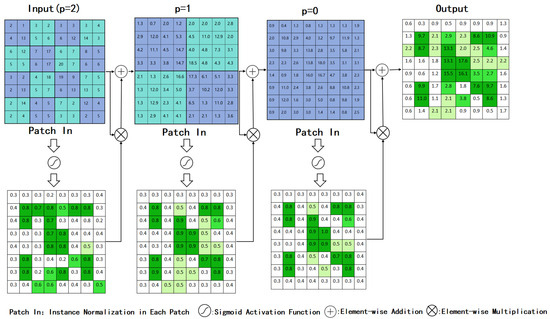

3.3. Local Attention Pyramid Module

After feature fusion, the resulting feature maps take different values across channels; each channel captures distinct characteristics and thus generates focused responses in different regions. However, some channels may produce broad responses over larger regions, potentially hindering the accurate recognition of multiple objects. Given these differences in feature distribution among channels, it is essential to concentrate on local high-response regions (regions with higher values relative to adjacent channels), and applying independent normalization to these regions can mitigate this issue. We therefore introduce the local attention pyramid module LAPM [48], as illustrated in Figure 6. The core idea of LAPM is to partition the feature map of each channel into patches of varying sizes, apply independent instance normalization to each patch, and use the sigmoid activation function to enhance local high-response areas while suppressing background noise. The attention maps of the patches are then recombined into a complete channel attention map. Because small objects vary in size, LAPM employs a pyramid structure that hierarchically divides the map into patches of different sizes, ensuring that local features at various scales are captured.

Figure 6.

Structure of LAPM.
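The patch-wise normalization at the heart of LAPM can be sketched for a single channel in plain Python. This is a minimal illustration under stated assumptions, not the LAP reference implementation: the patch side at pyramid level p is assumed to be H / 2^p (so level 0 covers the whole map), instance normalization has no learnable affine parameters, and eps = 1e-5 is an arbitrary stabilizer. How the per-level maps are weighted and combined into the final output is defined by the paper's equations and is not reproduced here. All names are hypothetical.

```python
import math


def patch_attention(fmap, patch, eps=1e-5):
    """Attention map for one channel: instance-normalize each patch, then sigmoid.

    fmap is an H x W list of lists; `patch` must divide both H and W.
    """
    H, W = len(fmap), len(fmap[0])
    att = [[0.0] * W for _ in range(H)]
    for r0 in range(0, H, patch):
        for c0 in range(0, W, patch):
            cells = [(r, c) for r in range(r0, r0 + patch) for c in range(c0, c0 + patch)]
            vals = [fmap[r][c] for r, c in cells]
            mean = sum(vals) / len(vals)
            std = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals) + eps)
            for r, c in cells:
                z = (fmap[r][c] - mean) / std  # normalized within this patch only
                att[r][c] = 1.0 / (1.0 + math.exp(-z))  # sigmoid
    return att


def pyramid_attention(fmap, n=2):
    """One attention map per level p = n, ..., 1, 0 (assumed patch side H // 2**p)."""
    H = len(fmap)
    return {p: patch_attention(fmap, H // 2 ** p) for p in range(n, -1, -1)}


if __name__ == "__main__":
    fmap = [[0.0] * 4 for _ in range(4)]
    fmap[0][0] = 10.0  # one strong local response on a flat background
    for p, att in pyramid_attention(fmap).items():
        print(p, [round(v, 3) for v in att[0]])
```

In this toy example, flat background patches map to exactly 0.5, pixels sharing a patch with the strong response are pushed below 0.5, and the response itself is pushed toward 1, which is the local enhance-and-suppress behavior the text describes.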

Assume the feature map F has dimensions H × W (with H = W) and the pyramid consists of n layers, indexed p = n, n − 1, …, 1, 0. At each layer, the input feature map is divided into patches of size h × w, whose height and width are given by Equations (1) and (2):

The attention map generated at each layer and the resulting output feature map are computed as follows:

PatchIn denotes instance normalization applied to the patches, and Sigmoid denotes the sigmoid activation function. A weighting parameter controls the influence of the attention map; its default value is 0.5. In this work, p is set to 2, giving three pyramid levels (p = 2, 1, 0) with three corresponding feature maps and three generated attention maps. Based on Equations (3) and (4), the final output feature map of LAPM is computed as follows:

4. Experimental Section

To thoroughly elucidate the performance of ESL-YOLO in remote sensing image object detection, this section will detail the experimental design we have established. Section 4.1 will outline the experimental environment, model training strategies, and evaluation metrics. Section 4.2 will provide a comprehensive description of the datasets used in the experiments. Finally, Section 4.3 and Section 4.4 will conduct a comprehensive analysis of ESL-YOLO’s performance through ablation studies and comparative experiments, respectively.

4.1. Experimental Details and Evaluation Metrics

All experiments in this study were carried out on a server with an NVIDIA A100 GPU (Santa Clara, CA, USA). We used YOLOv8s as the baseline model, implemented in PyTorch 2.0. Training ran for 200 epochs with a batch size of 16, using stochastic gradient descent (SGD) as the optimizer. The learning rate, momentum, and weight decay were set to their default values, and we used YOLOv8’s default loss functions: the CIoU localization loss and the BCE classification loss. During training, images from the AI-TOD-v2 and DOTAv1.5 datasets were resized to 800 × 800 pixels and 1024 × 1024 pixels, respectively.
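For reference, the CIoU term used as the localization loss is CIoU = IoU − ρ²(b, b_gt)/c² − αv, where ρ is the distance between box centres, c is the diagonal of the smallest enclosing box, v = (4/π²)(arctan(w_gt/h_gt) − arctan(w/h))² measures aspect-ratio mismatch, and α = v / ((1 − IoU) + v). The plain-Python sketch below follows that standard definition for boxes in (x1, y1, x2, y2) form. It is an illustration, not YOLOv8's implementation, and the small eps guards are our own choice.

```python
import math


def ciou_loss(box, gt, eps=1e-7):
    """Complete-IoU loss, 1 - CIoU, for two boxes given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU
    iw = max(0.0, min(x2, gx2) - max(x1, gx1))
    ih = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = iw * ih
    iou = inter / (w * h + gw * gh - inter + eps)

    # Squared centre distance over the squared diagonal of the enclosing box
    cw = max(x2, gx2) - min(x1, gx1)
    ch = max(y2, gy2) - min(y1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = ((x1 + x2 - gx1 - gx2) ** 2 + (y1 + y2 - gy1 - gy2) ** 2) / 4

    # Aspect-ratio consistency term and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(w / (h + eps))) ** 2
    alpha = v / (1 - iou + v + eps)

    return 1 - (iou - rho2 / c2 - alpha * v)


if __name__ == "__main__":
    print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))   # identical boxes
    print(ciou_loss((0, 0, 10, 10), (20, 0, 30, 10)))  # disjoint boxes
```

Unlike a plain IoU loss, which is stuck at 1 for any pair of non-overlapping boxes, the centre-distance term still gives disjoint boxes a loss that shrinks as they move closer.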

Model performance was evaluated using four metrics: precision (P), recall (R), mAP50, and mAP50:95. Precision measures the proportion of correct detections among all predictions, while recall measures the proportion of ground-truth objects that are correctly detected. Both are computed from the numbers of true positives (TP), false positives (FP), and false negatives (FN), and are defined as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

mAP50 evaluates detection performance at a single IoU threshold of 0.5, while mAP50:95 averages over IoU thresholds from 0.5 to 0.95 in steps of 0.05, giving a more comprehensive view of model reliability under varied localization requirements. For each class, the average precision (AP) is computed from P and R as the area under the precision-recall curve. The mean average precision (mAP) is then obtained by averaging AP over all classes, offering an overall measure of detection effectiveness. AP and mAP are defined as follows:

$$AP = \int_0^1 P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

Here, N denotes the total number of classes.
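These definitions can be checked with a short sketch. It computes P and R from counts, and AP for one class by all-point interpolation of the precision-recall curve (precision made monotonically non-increasing, then integrated over recall). It assumes each detection has already been marked as a true or false positive at a fixed IoU threshold (0.5 for mAP50; repeating for 0.50, 0.55, …, 0.95 and averaging gives mAP50:95). Evaluation toolkits differ in interpolation details (COCO, for instance, samples 101 recall points), so values may differ slightly from those reported by YOLOv8. The function names are hypothetical.

```python
def precision_recall(tp: int, fp: int, fn: int):
    """Precision and recall from detection counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class (num_gt must be > 0).

    scores: confidence of each detection; is_tp: whether that detection matched
    a ground-truth box at the chosen IoU threshold; num_gt: ground-truth count.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make it non-increasing as recall grows
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the step-shaped precision-recall curve
    return sum((recalls[k] - recalls[k - 1]) * precisions[k] for k in range(1, len(recalls)))


def mean_average_precision(ap_per_class):
    """mAP: the mean of per-class AP values over N classes."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    print(precision_recall(3, 1, 1))
    ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4)
    print(ap, mean_average_precision([ap, 0.375]))
```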

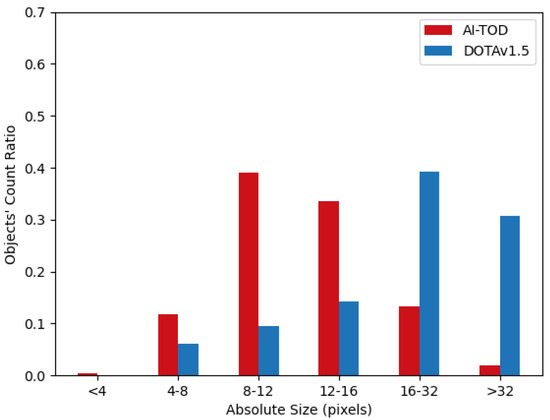

4.2. Experimental Datasets Description

The AI-TOD dataset [50] was developed to improve the detection of small objects in aerial imagery. It comprises a substantial collection of aerial images and contains a significant number of annotated instances across various categories, including examples such as airplanes, bridges, storage tanks, and vehicles. A notable feature of this dataset is that the average size of the labeled objects is around a dozen pixels, emphasizing its focus on identifying small objects within intricate aerial environments.

The DOTA dataset [51], introduced in 2018, is a comprehensive remote sensing dataset whose images typically have high resolutions. The dataset has since been released in three versions (v1.0, v1.5, and v2.0); this study uses DOTAv1.5. To ensure consistent image sizes during model training, the DOTAv1.5 images are cropped to 1024 × 1024 pixels, yielding a total of 174,336 instances in the cropped images. The dataset covers 16 categories, including plane, ship, storage tank, and soccer field, as well as infrastructure such as bridges and harbors.

Figure 7 illustrates the distribution of different pixel-size objects in the AI-TOD and DOTAv1.5 datasets. Among them, the proportion of objects classified as small objects in the AI-TOD and DOTAv1.5 datasets is 97.98% and 69.3%, respectively. Both datasets include a substantial number of small objects, making them ideal for assessing the performance of the ESL-YOLO model.

Figure 7.

Distribution of different pixel-size objects in AI-TOD and DOTAv1.5.

4.3. Ablation Experiments

To evaluate the effectiveness of each module of the proposed model for small object detection in remote sensing images, ablation experiments were conducted on the AI-TOD dataset, which contains a large number of small objects. Under identical experimental conditions, the model configurations were YOLOv8s, YOLOv8s + EFEM, YOLOv8s + SCGBiFPN, YOLOv8s + LAPM, YOLOv8s + EFEM + SCGBiFPN, and ESL-YOLO. This experiment assessed the contributions of EFEM, SCGBiFPN, and LAPM to the performance of ESL-YOLO. Table 1 displays the results, with a “✓” symbol indicating the addition of each module. In addition to common evaluation metrics such as precision (P), recall (R), mAP50, and mAP50:95, the experiment also considered testing time and model parameters to analyze changes in detection speed and efficiency after the improvements.

Table 1.

Results of ablation experiments.

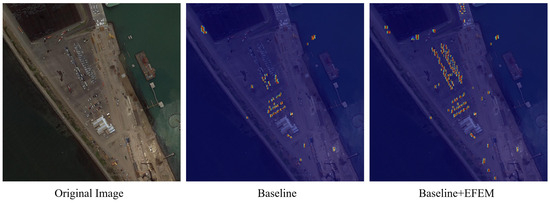

Table 1 shows that adding EFEM to YOLOv8s improved precision from 0.51 to 0.59, recall from 0.427 to 0.494, mAP50 from 0.418 to 0.478, and mAP50:95 from 0.186 to 0.21. Although EFEM increased testing time by 0.6 ms and parameter size by 0.55 MB, these increases are modest compared with the substantial performance gains. Additionally, the feature heatmaps in Figure 8 illustrate how object features change after EFEM is introduced, further confirming its effectiveness in extracting and integrating local contextual features, which facilitates a clearer distinction between objects and background. We also compared EFEM and FEM on the AI-TOD dataset; the results, summarized in Table 2, show that EFEM outperforms FEM overall in small object detection.

Figure 8.

Visual comparison of small object features with and without EFEM.

Table 2.

Comparison of EFEM and FEM.

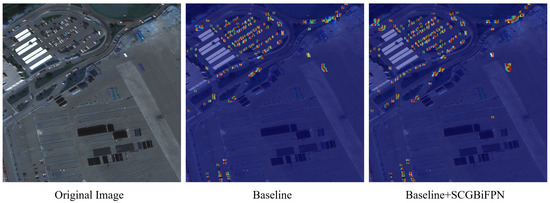

Table 1 shows that incorporating SCGBiFPN into YOLOv8s increased precision from 0.51 to 0.607, recall from 0.427 to 0.522, mAP50 from 0.418 to 0.511, and mAP50:95 from 0.186 to 0.238, highlighting significant performance gains. Testing time and parameter size increased by only 0.2 ms and 0.28 MB, without significantly affecting detection efficiency. The feature heatmaps in Figure 9 show that SCGBiFPN enriches the detail of the features extracted by the model. To confirm SCGBiFPN’s effectiveness, we compared SCGBiFPN and BiFPN on the AI-TOD dataset, as summarized in Table 3. The results show that SCGBiFPN surpasses BiFPN in small object detection.

Figure 9.

Visual comparison of small object features with and without SCGBiFPN.

Table 3.

Comparison of SCGBiFPN and BiFPN.

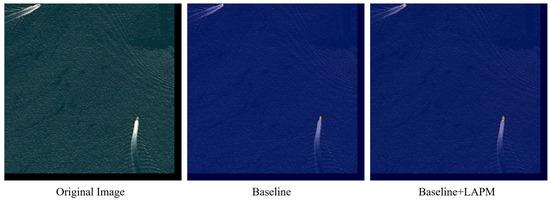

After LAPM is incorporated into YOLOv8s, Table 1 shows a slight decrease in precision, whereas recall, mAP50, and mAP50:95 all improve significantly. By the definitions of precision (P) and recall (R), this indicates that more objects are detected and fewer are missed, albeit with more false positives. Because the gain in recall outweighs the drop in precision, overall detection performance improves. LAPM adds no model parameters, and the minimal change in testing time has no significant impact on detection efficiency. The feature maps in Figure 10 clearly show that small object characteristics become more prominent after LAPM is added, further confirming its advantage in reducing background interference and enhancing small object features.

Figure 10.

Visual comparison of small object features with and without LAPM.

Adding both EFEM and SCGBiFPN to the baseline model led to notable improvements across all evaluation metrics, surpassing the gains observed with either module alone. This indicates that EFEM strengthens the feature representation of the backbone network, enabling SCGBiFPN to integrate high-level semantic features more effectively and thus enhancing detection. Integrating EFEM, SCGBiFPN, and LAPM together boosts precision and recall and raises mAP50 to 0.518. This comprehensive improvement confirms the effectiveness of the proposed modifications in improving the model’s overall detection capabilities.

4.4. Comparative Experiments

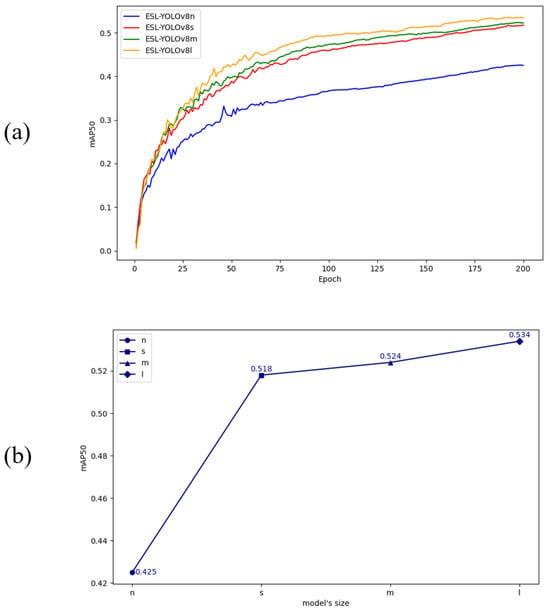

To assess the impact of the proposed improvements on ESL-YOLO at different parameter sizes, we conducted comparative experiments with four model scales of ESL-YOLO (n, s, m, l), as illustrated in Figure 11. Figure 11a shows that as model size increases, the performance curve rises more steeply, resulting in higher detection accuracy. Compared with the other three model sizes, ESL-YOLOv8n improves more slowly, primarily because its smaller number of parameters and comparatively weaker feature extraction capabilities prevent it from reaching higher detection accuracy within a short time. Furthermore, Figure 11b shows that mAP50 rises with model size, with ESL-YOLOv8n, ESL-YOLOv8s, ESL-YOLOv8m, and ESL-YOLOv8l attaining values of 0.425, 0.518, 0.524, and 0.534, respectively.

Figure 11.

Comparative experiments of ESL-YOLO at different model scales: (a) mAP50 curves; (b) best mAP50.

To comprehensively evaluate the performance advantages of ESL-YOLO, two groups of comparative experiments were conducted on the AI-TOD dataset. First, ESL-YOLO was compared with mainstream object detection algorithms, including YOLOv5s, YOLOv6s, YOLOv8s, and YOLOv9s [52], as well as the latest models in the YOLO series, YOLOv10s [53] and YOLOv11s. Second, ESL-YOLO was compared with representative object detection models proposed in recent years and evaluated on the AI-TOD dataset, such as M-CenterNet [50], DetectoRS + NWD [54], SP-YOLOv8s [55], SAFF-SSD [56], STODNet [57], and TTFNet + GA (Darknet-53) [58]. The comparative results are shown in Table 4.

Table 4.

Results of comparison experiments on AI-TOD.

Table 4 shows that ESL-YOLO clearly outperforms YOLOv5s, YOLOv6s, YOLOv8s, YOLOv9s, YOLOv10s, and YOLOv11s on the key metrics of precision (P), recall (R), mAP50, and mAP50:95. Among the standard YOLO models, YOLOv9s achieved the highest precision and mAP50, and ESL-YOLO exceeded it on these metrics by 3.8% and 4.6%, respectively. YOLOv10s achieved the highest recall and mAP50:95 among the standard models, and ESL-YOLO exceeded it by 3.7% in recall and 2.3% in mAP50:95. Compared with the latest YOLOv11s, ESL-YOLO improved precision, recall, mAP50, and mAP50:95 by 5%, 5.7%, 5.8%, and 2.8%, respectively. However, ESL-YOLO has a slightly longer testing time and a marginally larger parameter size than YOLOv8s, YOLOv9s, YOLOv10s, and YOLOv11s, although it is considerably more efficient than YOLOv6s on both counts. YOLOv5s has fewer parameters than ESL-YOLO but requires 1.3 ms more testing time. Table 4 also compares ESL-YOLO with models developed for the AI-TOD dataset in recent years. ESL-YOLO achieved the best mAP50 score, demonstrating superior overall detection performance. Compared with M-CenterNet, DetectoRS+NWD, SAFF-SSD, SP-YOLOv8s, and TTFNet+GA (Darknet-53), ESL-YOLO also achieved the highest mAP50:95. Although SP-YOLOv8s has fewer parameters than ESL-YOLO, its testing time is 24.1 ms longer, and ESL-YOLO surpasses it in precision, recall, mAP50, and mAP50:95 by 3%, 6.4%, 3.5%, and 0.8%, respectively.
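Because the comparisons above rest on accuracy metrics of the mAP family (commonly reported for YOLO models as mAP50 and mAP50:95), a minimal single-class sketch of how such values are usually computed may help. Detections are ranked by confidence, greedily matched to unmatched ground-truth boxes at an IoU threshold, the precision envelope is integrated over recall (all-point interpolation), and mAP50:95 averages AP over the ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. This is a simplified illustration, not the evaluation code behind Tables 4 and 5: official evaluators differ in details (COCO, for instance, samples precision at 101 recall points and also averages over classes), and the boxes below are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, thr):
    """All-point interpolated AP for one class.

    dets: list of (score, box) predictions; gts: list of ground-truth boxes.
    """
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    tp_flags = []
    for _, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):  # best still-unmatched ground truth
            if not matched[j]:
                overlap = iou(box, g)
                if overlap > best:
                    best, best_j = overlap, j
        if best_j >= 0 and best >= thr:
            matched[best_j] = True
            tp_flags.append(1)       # true positive
        else:
            tp_flags.append(0)       # false positive
    recalls, precisions, tp = [], [], 0
    for rank, flag in enumerate(tp_flags, start=1):
        tp += flag
        recalls.append(tp / len(gts))
        precisions.append(tp / rank)
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # make precision non-increasing
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def map50_95(dets, gts):
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
print(average_precision(dets, gts, 0.5))  # 1.0
print(map50_95(dets, gts))                # 0.7
```

The second detection is shifted by only one pixel, yet on a 10 × 10 box that lowers IoU to about 0.68: it counts as a hit for mAP50 but as a miss at thresholds of 0.70 and above. This is one reason mAP50:95 gains on tiny objects tend to be much smaller than mAP50 gains.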

To validate the advantages and generalization performance of ESL-YOLO, this study also conducted two sets of comparative experiments on the DOTAv1.5 dataset. First, ESL-YOLO was compared with a series of YOLO models. Second, ESL-YOLO was compared with other object detection models based on the DOTAv1.5 dataset, including DCFL [59], RHINO (Swin tiny) [60], Transformer-based Model [61], and PKINet-S [62]. The experimental results are shown in Table 5.

Table 5.

Results of comparison experiments on DOTAv1.5.

According to Table 5, ESL-YOLO achieved the best mAP50 and mAP50:95 among the YOLO series models, reaching 74.7% and 53.4%, respectively. It also has the highest recall, meaning it detects more objects. Its precision, however, is slightly lower: 3.9% below that of YOLOv8s, which has the highest precision. ESL-YOLO also requires a longer testing time than the YOLO series models, 3.5 ms more than YOLOv8s, which has the shortest testing time. Its parameter size is smaller than that of YOLOv6s but larger than those of YOLOv5s, YOLOv8s, YOLOv9s, YOLOv10s, and YOLOv11s, with an increase of 0.86 MB over the baseline YOLOv8s. Although testing time and parameter size increase, these changes have limited impact on detection speed, while ESL-YOLO improves detection performance. In addition, compared with DCFL, RHINO (Swin tiny), the Transformer-based Model, and PKINet-S, ESL-YOLO achieves a higher mAP50 and has fewer parameters than DCFL and PKINet-S.

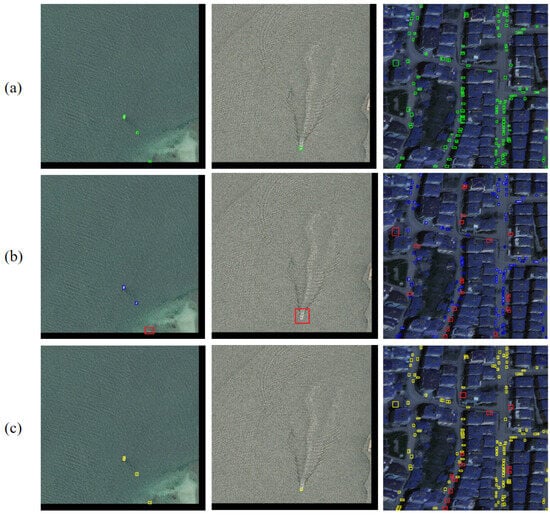

In summary, the results on both datasets demonstrate that ESL-YOLO has strong detection capability on AI-TOD and DOTAv1.5, with design improvements that strengthen small object detection while maintaining high performance across datasets. To further evaluate ESL-YOLO, we randomly selected sample images from both datasets, ran detection with both the baseline model and ESL-YOLO, and compared the results with the corresponding ground-truth boxes. The results are presented in Figure 12 and Figure 13.

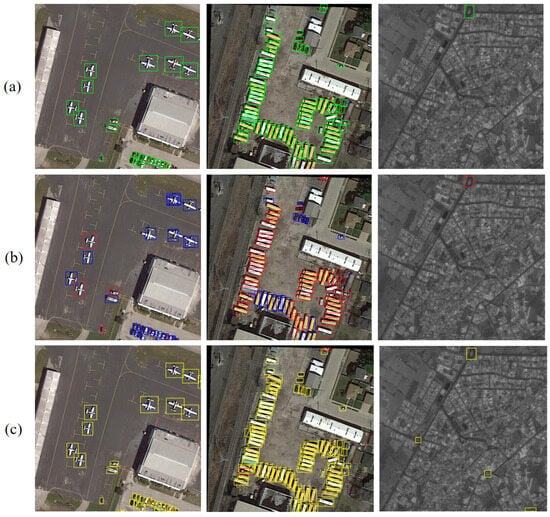

Figure 12.

Visualizing the detection results in AI-TOD: (a) Ground Truth; (b) Baseline; (c) ESL-YOLO. Note: Green boxes indicate ground-truth boxes, blue boxes indicate detections from the Baseline model, yellow boxes indicate detections from ESL-YOLO, and red boxes indicate missed objects.

Figure 13.

Visualizing the detection results in DOTAv1.5: (a) Ground Truth; (b) Baseline; (c) ESL-YOLO. Note: Green boxes indicate ground-truth boxes, blue boxes indicate detections from the Baseline model, yellow boxes indicate detections from ESL-YOLO, and red boxes indicate missed objects.

Figure 12 and Figure 13 show detection examples from the AI-TOD and DOTAv1.5 datasets, using the box colors described in the figure captions. Comparing the outcomes across multiple images shows that ESL-YOLO identifies more small objects in complex backgrounds and dense scenes, substantially reducing missed detections and demonstrating superior detection performance.
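The red "undetected" boxes in Figures 12 and 13 correspond to ground-truth objects that no prediction matches. One plausible way to derive them is sketched below, assuming greedy, confidence-ordered matching at an IoU threshold of 0.5 (the threshold actually used for the figures is not stated). The boxes and the `baseline` and `esl` prediction lists are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def missed_ground_truths(gts, preds, thr=0.5):
    """Indices of ground-truth boxes that no prediction matches at IoU >= thr.

    gts:   list of (x1, y1, x2, y2) ground-truth boxes.
    preds: list of (score, box) predictions from one model.
    """
    matched = set()
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts):
            if j in matched:
                continue  # each ground truth can be claimed only once
            overlap = iou(box, g)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
    return [j for j in range(len(gts)) if j not in matched]


# Three hypothetical 8 x 8 pixel objects and two models' predictions.
gts = [(0, 0, 8, 8), (20, 20, 28, 28), (40, 40, 48, 48)]
baseline = [(0.7, (0, 0, 8, 8))]
esl = [(0.8, (0, 0, 8, 8)), (0.6, (20, 21, 28, 29))]
print(missed_ground_truths(gts, baseline))  # [1, 2]
print(missed_ground_truths(gts, esl))       # [2]
```

Counting the returned indices per image gives the number of red boxes, which is the quantity the qualitative comparison above is about.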

5. Conclusions

Detecting small objects in remote sensing images is particularly challenging because of limited object information and complex backgrounds. Operations such as strided pooling and downsampling convolutions aggravate the problem by discarding substantial spatial information, which significantly reduces detection accuracy. To address these issues, this paper proposes ESL-YOLO, a small object detection model built on YOLOv8. ESL-YOLO integrates three key modules (EFEM, SCGBiFPN, and LAPM), each serving a distinct role in enhancing detection performance. EFEM is a plug-and-play feature enhancement component that uses multi-branch convolutions with varying receptive field sizes to aggregate multi-scale local contextual information, improving the model's ability to perceive small objects and mitigating the problem of insufficient object information. SCGBiFPN, an efficient multi-scale feature fusion structure, reduces spatial information loss by integrating spatially rich shallow features and introducing skip connections, thereby preserving both spatial and semantic information. LAPM, which is designed to add no extra parameters or computational cost, focuses on multi-scale local features, highlights small-object characteristics, and suppresses background noise. Experimental results on the AI-TOD and DOTAv1.5 datasets demonstrate that ESL-YOLO outperforms other mainstream models in small object detection. ESL-YOLO has also shown promising practical applicability: under constrained hardware conditions, it has been successfully embedded into a remote sensing object detection system, delivering significant improvements in small object detection. These improvements, however, come with a modest increase in computational cost.

Future research will aim to develop algorithms optimized for small object detection in remote sensing images, prioritizing reduced computational overhead and enhanced performance.
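The spatial information loss caused by downsampling can be quantified with simple arithmetic: an object w pixels wide spans only w / s cells on a feature map of stride s. The sketch below uses the standard strides of YOLOv8's P3 to P5 detection levels (8, 16, 32) plus a stride-4 P2 level; whether SCGBiFPN draws on a P2 level specifically is an assumption made here for illustration, not something stated in this section.

```python
# Output strides of a YOLOv8-style feature pyramid. P3-P5 are YOLOv8's default
# detection levels; P2 (stride 4) is an assumed extra shallow, high-resolution level.
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def cells_spanned(object_px: float, stride: int) -> float:
    """Width of an object, in feature-map cells, after downsampling by `stride`."""
    return object_px / stride


for size in (8, 16, 32):  # widths around the tiny (16 px) and small (32 px) thresholds
    spans = ", ".join(f"{lvl}: {cells_spanned(size, s):g}" for lvl, s in STRIDES.items())
    print(f"{size:>2} px object -> {spans}")
#  8 px object -> P2: 2, P3: 1, P4: 0.5, P5: 0.25
# 16 px object -> P2: 4, P3: 2, P4: 1, P5: 0.5
# 32 px object -> P2: 8, P3: 4, P4: 2, P5: 1
```

A 16-pixel object thus covers only half a cell at stride 32, so its evidence is blended with the surrounding background; fusing shallower, higher-resolution features keeps several cells of object-specific signal available to the detector.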

Author Contributions

Conceptualization, X.Z.; data curation, X.Z. and Y.Q.; investigation, T.L. and P.J.; methodology, X.Z.; software, X.Z.; writing—original draft, X.Z.; writing—review and editing, X.Z. and G.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tong, K.; Wu, Y.; Zhou, F. Recent advances in small object detection based on deep learning: A review. Image Vis. Comput. 2020, 97, 103910.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Mei, S.; Li, X.; Liu, X.; Cai, H.; Du, Q. Hyperspectral image classification using attention-based bidirectional long short-term memory network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–12.

- Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards large-scale small object detection: Survey and benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

- Shen, C.; Qian, J.; Wang, C.; Yan, D.; Zhong, C. Dynamic sensing and correlation loss detector for small object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5627212.

- Han, J.; Zhang, D.; Cheng, G.; Guo, L.; Ren, J. Object detection in optical remote sensing images based on weakly supervised learning and high-level feature learning. IEEE Trans. Geosci. Remote Sens. 2014, 53, 3325–3337.

- Zhang, B.; Wu, Y.; Zhao, B.; Chanussot, J.; Hong, D.; Yao, J.; Gao, L. Progress and challenges in intelligent remote sensing satellite systems. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1814–1822.

- Han, Y.; Duan, B.; Guan, R.; Yang, G.; Zhen, Z. LUFFD-YOLO: A Lightweight Model for UAV Remote Sensing Forest Fire Detection Based on Attention Mechanism and Multi-Level Feature Fusion. Remote Sens. 2024, 16, 2177.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Shi, T.; Gong, J.; Hu, J.; Zhi, X.; Zhang, W.; Zhang, Y.; Zhang, P.; Bao, G. Feature-enhanced CenterNet for small object detection in remote sensing images. Remote Sens. 2022, 14, 5488.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Sunkara, R.; Luo, T. No more strided convolutions or pooling: A new CNN building block for low-resolution images and small objects. In Machine Learning and Knowledge Discovery in Databases, ECML PKDD 2022, Grenoble, France, 19–23 September 2022; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13715, pp. 503–518.

- Cheng, G.; Lang, C.; Wu, M.; Xie, X.; Yao, X.; Han, J. Feature enhancement network for object detection in optical remote sensing images. J. Remote Sens. 2021, 2021, 9805389.

- Yi, H.; Liu, B.; Zhao, B.; Liu, E. Small object detection algorithm based on improved YOLOv8 for remote sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 1734–1747.

- Zhang, K.; Shen, H. Multi-stage feature enhancement pyramid network for detecting objects in optical remote sensing images. Remote Sens. 2022, 14, 579.

- Li, W.; Shi, M.; Hong, Z. SCAResNet: A ResNet variant optimized for tiny object detection in transmission and distribution towers. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6011105.

- Tang, S.; Zhang, S.; Fang, Y. HIC-YOLOv5: Improved YOLOv5 for small object detection. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 6614–6619.

- Wang, Z.; Men, S.; Bai, Y.; Yuan, Y.; Wang, J.; Wang, K.; Zhang, L. Improved Small Object Detection Algorithm CRL-YOLOv5. Sensors 2024, 24, 6437.

- Guo, M.H.; Lu, C.Z.; Hou, Q.; Liu, Z.; Cheng, M.M.; Hu, S.M. SegNeXt: Rethinking convolutional attention design for semantic segmentation. Adv. Neural Inf. Process. Syst. 2022, 35, 1140–1156.

- Li, Y.; Hou, Q.; Zheng, Z.; Cheng, M.M.; Yang, J.; Li, X. Large selective kernel network for remote sensing object detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 1117–1126.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning scalable feature pyramid architecture for object detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10792.

- Wang, H.; Liu, C.; Cai, Y.; Chen, L.; Li, Y. YOLOv8-QSD: An improved small object detection algorithm for autonomous vehicles based on YOLOv8. IEEE Trans. Instrum. Meas. 2024, 73, 2513916.

- Zhang, M.; Wang, Z.; Song, W.; Zhao, D.; Zhao, H. Efficient Small-Object Detection in Underwater Images Using the Enhanced YOLOv8 Network. Appl. Sci. 2024, 14, 1095.

- Li, Y.; Zhou, Z.; Qi, G.; Hu, G.; Zhu, Z.; Huang, X. Remote Sensing Micro-Object Detection under Global and Local Attention Mechanism. Remote Sens. 2024, 16, 644.

- Jiang, L.; Yuan, B.; Du, J.; Chen, B.; Xie, H.; Tian, J.; Yuan, Z. MFFSODNet: Multi-Scale Feature Fusion Small Object Detection Network for UAV Aerial Images. IEEE Trans. Instrum. Meas. 2024, 73, 5015214.

- Li, X.; Wei, Y.; Li, J.; Duan, W.; Zhang, X.; Huang, Y. Improved YOLOv7 Algorithm for Small Object Detection in Unmanned Aerial Vehicle Image Scenarios. Appl. Sci. 2024, 14, 1664.

- Liu, D.; Zhang, J.; Qi, Y.; Wu, Y.; Zhang, Y. Tiny object detection in remote sensing images based on object reconstruction and multiple receptive field adaptive feature enhancement. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5616213.

- Zhang, T.; Zhang, X.; Zhu, P.; Chen, P.; Tang, X.; Li, C.; Jiao, L. Foreground refinement network for rotated object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.

- Wang, C.; Bai, X.; Wang, S.; Zhou, J.; Ren, P. Multiscale visual attention networks for object detection in VHR remote sensing images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 310–314.

- Ma, W.; Li, N.; Zhu, H.; Jiao, L.; Tang, X.; Guo, Y.; Hou, B. Feature split–merge–enhancement network for remote sensing object detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Fan, X.; Hu, Z.; Zhao, Y.; Chen, J.; Wei, T.; Huang, Z. A small ship object detection method for satellite remote sensing data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11886–11898.

- Dong, Y.; Yang, H.; Liu, S.; Gao, G.; Li, C. Optical remote sensing object detection based on background separation and small object compensation strategy. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024. Early Access.

- Zhao, Z.; Du, J.; Li, C.; Fang, X.; Xiao, Y.; Tang, J. Dense Tiny Object Detection: A Scene Context Guided Approach and a Unified Benchmark. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5606913.

- Shim, S.H.; Hyun, S.; Bae, D.; Heo, J.P. Local attention pyramid for scene image generation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 432–441.

- Zhang, Y.; Ye, M.; Zhu, G.; Liu, Y.; Guo, P.; Yan, J. FFCA-YOLO for small object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5611215.

- Wang, J.; Yang, W.; Guo, H.; Zhang, R.; Xia, G.S. Tiny object detection in aerial images. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 3271–3277.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2011–2020.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. In Computer Vision—ECCV 2024, 18th European Conference, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Wang, J.; Xu, C.; Yang, W.; Yu, L. A normalized Gaussian Wasserstein distance for tiny object detection. arXiv 2021, arXiv:2110.13389.

- Ma, M.; Pang, H. SP-YOLOv8s: An improved YOLOv8s model for remote sensing image tiny object detection. Appl. Sci. 2023, 13, 8161.

- Huo, B.; Li, C.; Zhang, J.; Xue, Y.; Lin, Z. SAFF-SSD: Self-attention combined feature fusion-based SSD for small object detection in remote sensing. Remote Sens. 2023, 15, 3027.

- Bai, X.; Li, X. STODNet: Sparse Convolution for Super Tiny Object Detection from Remote Sensing Image. In Proceedings of the IGARSS 2024—2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 7760–7764.

- Zhang, F.; Zhou, S.; Wang, Y.; Wang, X.; Hou, Y. Label Assignment Matters: A Gaussian Assignment Strategy for Tiny Object Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5633112.

- Xu, C.; Ding, J.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.S. Dynamic coarse-to-fine learning for oriented tiny object detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 8652–8661.

- Lee, H.; Song, M.; Koo, J.; Seo, J. Hausdorff distance matching with adaptive query denoising for rotated detection transformer. arXiv 2023, arXiv:2305.07598.

- Ren, B.; Xu, B.; Pu, Y.; Wang, J.; Deng, Z. Improving Detection in Aerial Images by Capturing Inter-Object Relationships. arXiv 2024, arXiv:2404.04140.

- Cai, X.; Lai, Q.; Wang, Y.; Wang, W.; Sun, Z.; Yao, Y. Poly kernel inception network for remote sensing detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 27706–27716.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).