1. Introduction

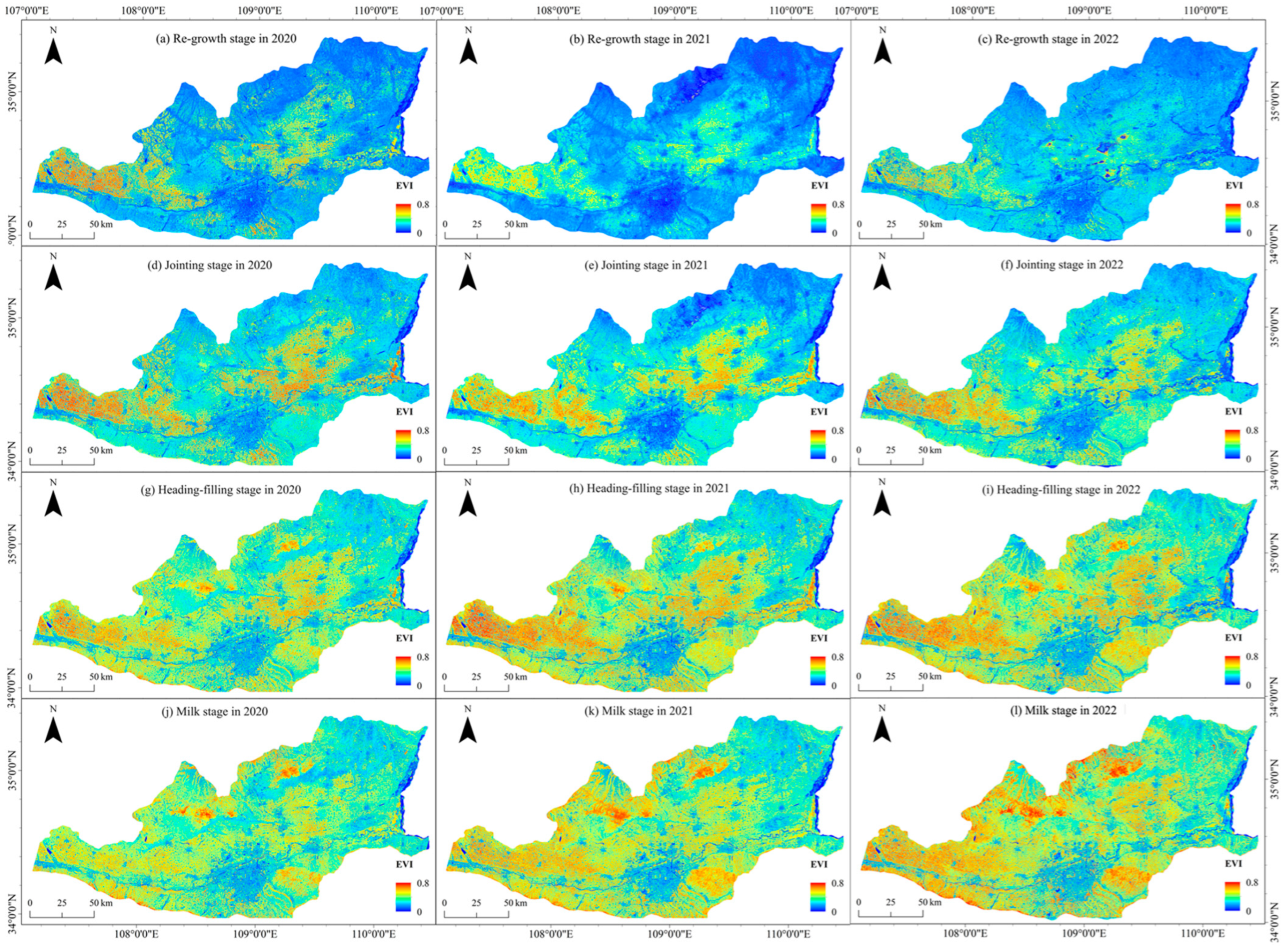

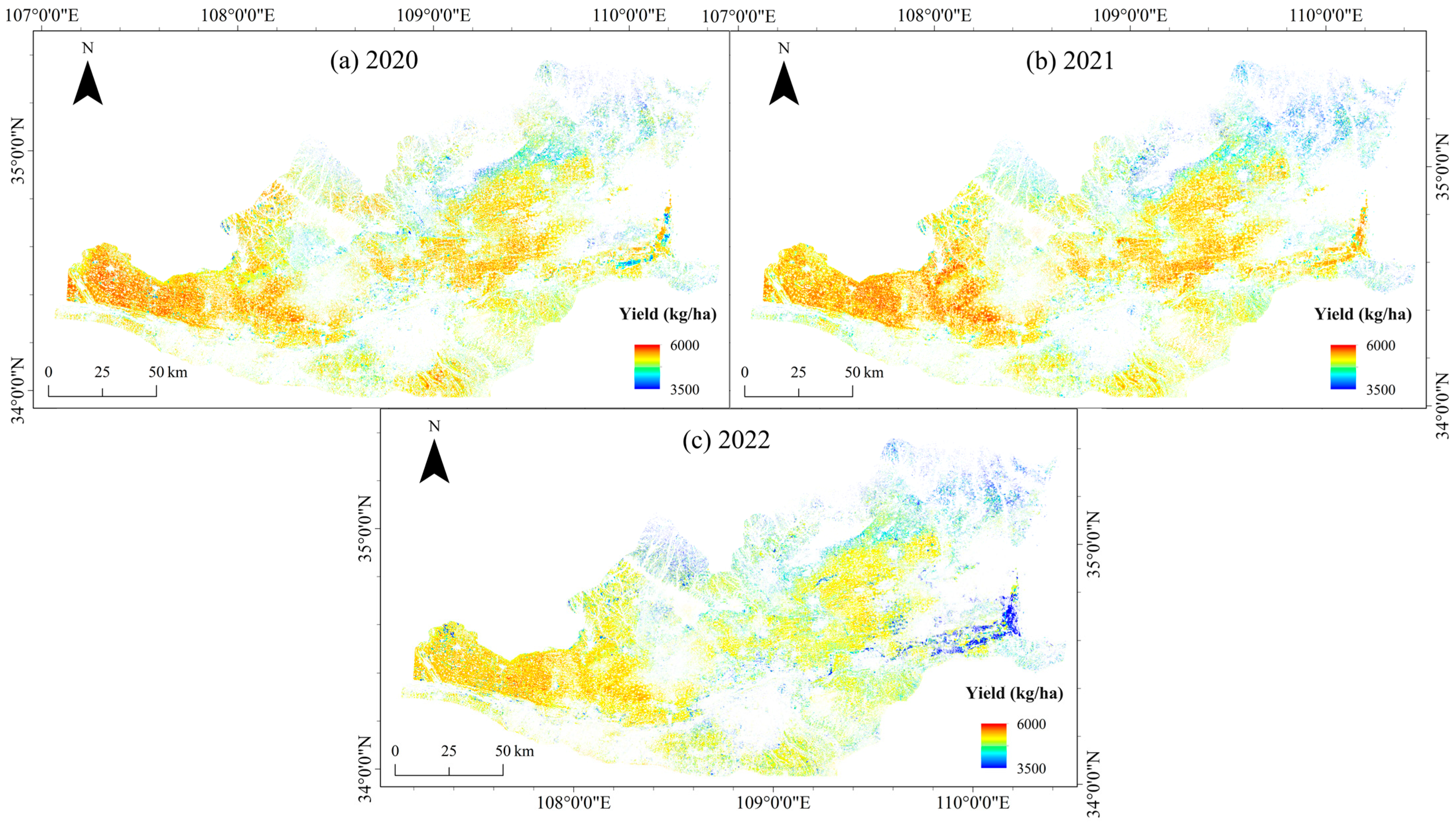

Winter wheat (WW) is one of the main grain crops in northern China, and WW growth indices at different growth stages correlate to varying degrees with the final yield (Y). Continuous time-series growth monitoring during the WW growing period (from the regrowth stage to the milk stage) is therefore important for Y estimation [1,2]. Compared with observation data from ground and meteorological stations, remote sensing (RS) technology can capture the continuous spatial distribution of crop growth. Vegetation indices (VIs), which are calculated from multispectral reflectance data, are among the most commonly used tools for estimating crop growth status because they are simple and easy to use. The multispectral VIs most commonly employed for estimating crop growth include the normalized difference vegetation index (NDVI) [3], soil-adjusted vegetation index (SAVI) [4], and enhanced vegetation index (EVI) [5]. The SAVI and EVI were developed from the NDVI to account for soil background and atmospheric effects, respectively. Relevant studies have shown that the NDVI has a favourable linear or nonlinear relationship with vegetation coverage [6,7], whereas the SAVI and EVI correlate better with the leaf area index than the NDVI does [8,9].
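As a brief illustration of how the three indices named above are computed from band reflectances, the following minimal sketch uses the standard published forms of the NDVI, SAVI, and EVI. The function names, the default soil adjustment factor of 0.5, the commonly used MODIS-style EVI coefficients, and the sample reflectances are assumptions chosen for illustration and are not taken from this study.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index from NIR and red reflectance."""
    return (nir - red) / (nir + red)


def savi(nir, red, soil_factor=0.5):
    """Soil-adjusted vegetation index; soil_factor is the adjustment term L."""
    return (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def evi(nir, red, blue, gain=2.5, c1=6.0, c2=7.5, canopy=1.0):
    """Enhanced vegetation index with the commonly used coefficient set."""
    return gain * (nir - red) / (nir + c1 * red - c2 * blue + canopy)


# Made-up reflectances for a single densely vegetated pixel (illustration only).
nir, red, blue = 0.45, 0.05, 0.03
print(ndvi(nir, red), savi(nir, red), evi(nir, red, blue))
```

The SAVI adds a soil term to the NDVI denominator, while the EVI additionally uses the blue band to reduce atmospheric influence, which is why the three indices respond differently to the same pixel.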

Over the past few decades, numerous VI products have been developed using high-temporal-resolution (HTR) satellite sensors, including the NOAA Advanced Very-High-Resolution Radiometer (AVHRR), the Terra/Aqua Moderate Resolution Imaging Spectroradiometer (MODIS), and the Fengyun-3 (FY-3) Medium Resolution Spectral Imager (MERSI). These products typically exhibit high precision in uniformly distributed farmland areas and can achieve a temporal resolution (TR) as high as 0.5 days. Among them, the FY-3 series comprises the second-generation polar-orbiting sun-synchronous meteorological satellites developed in China. The FY-3D satellite, launched in November 2017, carries a MERSI with 25 channels, including four visible and near-infrared channels with a spatial resolution (SR) of 250 m, which are the essential spectral bands for calculating VI products. FY-3D passes over at approximately 14:00 local time, and its TR can reach 0.5 days. VIs such as the NDVI and EVI derived from satellite sensors such as MODIS have been widely used to estimate the Y of various crops [10,11]. Johnson et al. [11] estimated the Y of barley, spring wheat, and rapeseed on the Canadian prairies via the MODIS NDVI and EVI; their results revealed that the July MODIS NDVI can effectively predict the Y of all three crops and that combining it with the MODIS EVI further improves the precision of the Y forecasts. Compared with crop Y estimation models based on VIs from a single growing stage, models that integrate VIs across multiple growing stages may yield more accurate estimates [12,13,14]. Zhao et al. [12] estimated the winter wheat yield (WWY) in Henan Province based on the MODIS NDVI from March to May, and the results revealed that a multiple linear regression model constructed with the NDVI from multiple months achieved high Y estimation precision for WW. Beyond crop Y estimation, VI products based on HTR satellite sensors have also been widely used in crop growth monitoring, biomass estimation [15,16], drought monitoring [17,18], and phenology monitoring [19,20]. VI products based on the FY-3 satellites were developed later than the more mature RS satellite products of the USA, but they now play an increasingly significant role in agricultural monitoring and meteorological disaster prevention and mitigation in China and other countries, especially the “Belt & Road” [21] countries in Asia, Africa, and South America [22,23,24,25]. To date, the application of FY-3 satellites in agricultural monitoring has formed a basic operational framework that provides regular services and data support to the relevant departments of countries around the world [26].

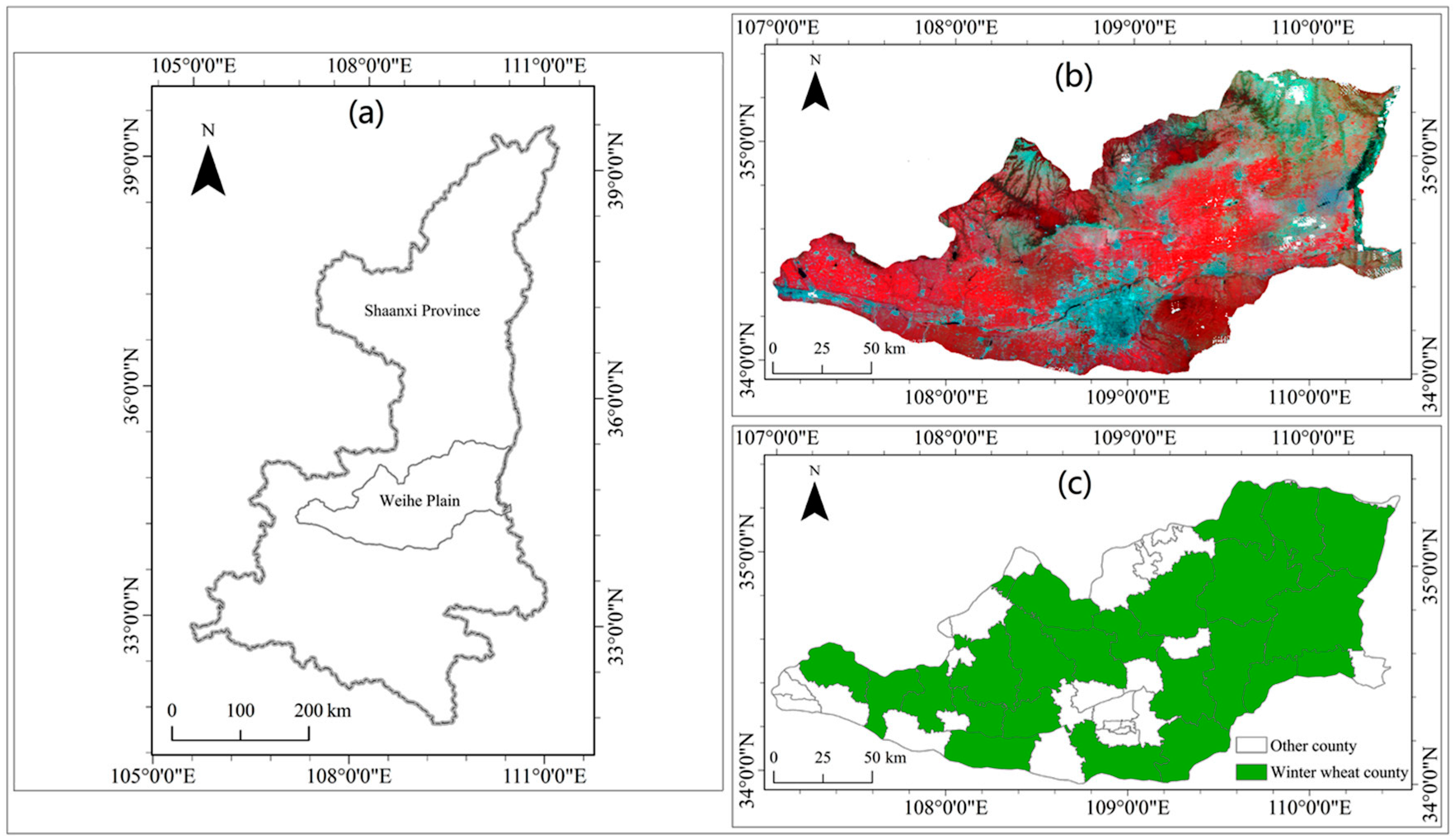

However, the SR (250–1000 m) of the above HTR satellite sensors is significantly coarser than the farmland scale (approximately 20 m) in most regions of China, such as the Weihe Plain in Shaanxi Province. Because other vegetation and artificial land lie between the farmlands in these regions, crop growth monitoring results based on these sensor data are uncertain. In contrast, the SRs (10–30 m) of the Landsat-5 Thematic Mapper (TM), Landsat-8 Operational Land Imager (OLI), and Sentinel-2 MultiSpectral Instrument (MSI) data are very close to the farmland scale in most regions of China, and croplands and artificial lands can be clearly distinguished in these images. Among these satellites, Sentinel-2A and Sentinel-2B were launched in 2015 and 2017, respectively, and each carries an MSI with 13 channels spanning the visible, near-infrared, and shortwave-infrared ranges, with SRs of 10–60 m. The swath width of Sentinel-2 imagery is 290 km, and the entire area of most provinces in China can be covered by a single orbit of Sentinel-2. VIs based on Landsat and Sentinel-2 data have been widely used in monitoring at the farmland scale [27,28,29,30]. However, due to the low TR (5–16 days) of the Landsat and Sentinel-2 images, it is difficult to obtain sufficient cloud-free data during periods with few sunny days. Therefore, methods for crop growth monitoring at the farmland scale based on data with high spatiotemporal resolution urgently need further development.

The spatiotemporal data fusion (STDF) model can combine the advantages of fine-spatial-resolution (FSR) satellite imagery (such as Landsat) and HTR satellite imagery (such as MODIS), thereby enabling continuous time-series monitoring of crop growth at the farmland scale. Existing STDF methods include weight function-based, unmixing-based, Bayesian-based, machine learning-based, deep learning-based, and hybrid methods [31,32]. The spatial and temporal adaptive reflectance fusion model (STARFM) [33] and its improved version, the enhanced STARFM (ESTARFM) [34], are widely employed STDF methods that are easy to use and perform stably [35]. However, these methods exhibit high uncertainties in areas with varying land cover types and complex vegetation phenology variations. Several deep learning-based STDF models have since emerged, including the deep convolutional spatiotemporal fusion network (DCSTFN), which is based on a convolutional neural network autoencoder [36]. The DCSTFN approach assumes that the variations in ground features in FSR imagery are the same as those in coarse-spatial-resolution (CSR) imagery; consequently, it still exhibits uncertainty in areas with complex and diverse land cover types. To improve the fusion precision in such areas, Tan et al. [37] proposed the enhanced DCSTFN (EDCSTFN), which uses a residual encoder to learn the variation in ground features in FSR imagery from the input FSR and CSR imagery. Their results showed that the fusion precision of the EDCSTFN is greater than that of weight function-based models such as STARFM in areas with complex land cover changes. STDF models have been widely used in different geographical regions, including China, South Asia, and the USA, and in many fields, such as agriculture, ecology, and land cover classification [31]. In agriculture, STDF has been widely used in crop growth monitoring [38,39], crop Y estimation [40,41,42], biomass estimation [43,44], drought monitoring [45,46], and phenology monitoring [47,48], indicating its high potential for improving precision agricultural monitoring. However, deep learning-based STDF models such as the EDCSTFN have so far seen relatively few applications in agricultural monitoring, even though such models are important for accurate farmland-scale monitoring during periods of complex variation in crop phenology.
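The basic idea behind weight function-based fusion can be sketched under a strong simplifying assumption: if every coarse pixel is homogeneous, the fine-resolution value at the prediction date equals the fine-resolution value at the base date plus the temporal change observed at coarse resolution. This is only the starting relation of STARFM, not the ESTARFM or EDCSTFN models discussed above; the full STARFM additionally weights spectrally similar neighbouring pixels within a moving window. The function name and the sample values below are hypothetical, and the coarse images are assumed to have been resampled to the fine grid already.

```python
def predict_fine(fine_t0, coarse_t0, coarse_t1):
    """Add the coarse-scale temporal change (t0 -> t1) to the fine image at t0.

    All inputs are 2-D lists of equal shape on the same (fine) grid.
    Assumes homogeneous coarse pixels, so no neighbour weighting is applied.
    """
    return [
        [f0 + (c1 - c0) for f0, c0, c1 in zip(f_row, c0_row, c1_row)]
        for f_row, c0_row, c1_row in zip(fine_t0, coarse_t0, coarse_t1)
    ]


# Made-up NDVI values: one coarse pixel covering a 2 x 2 block of fine pixels.
fine_t0 = [[0.30, 0.60], [0.32, 0.58]]
coarse_t0 = [[0.45, 0.45], [0.45, 0.45]]
coarse_t1 = [[0.55, 0.55], [0.55, 0.55]]

fine_t1 = predict_fine(fine_t0, coarse_t0, coarse_t1)
print(fine_t1)
```

In this toy case every fine pixel is shifted by the same coarse-scale change, which shows why such methods struggle where land cover is mixed: pixels with different phenology inside one coarse pixel receive an identical correction.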

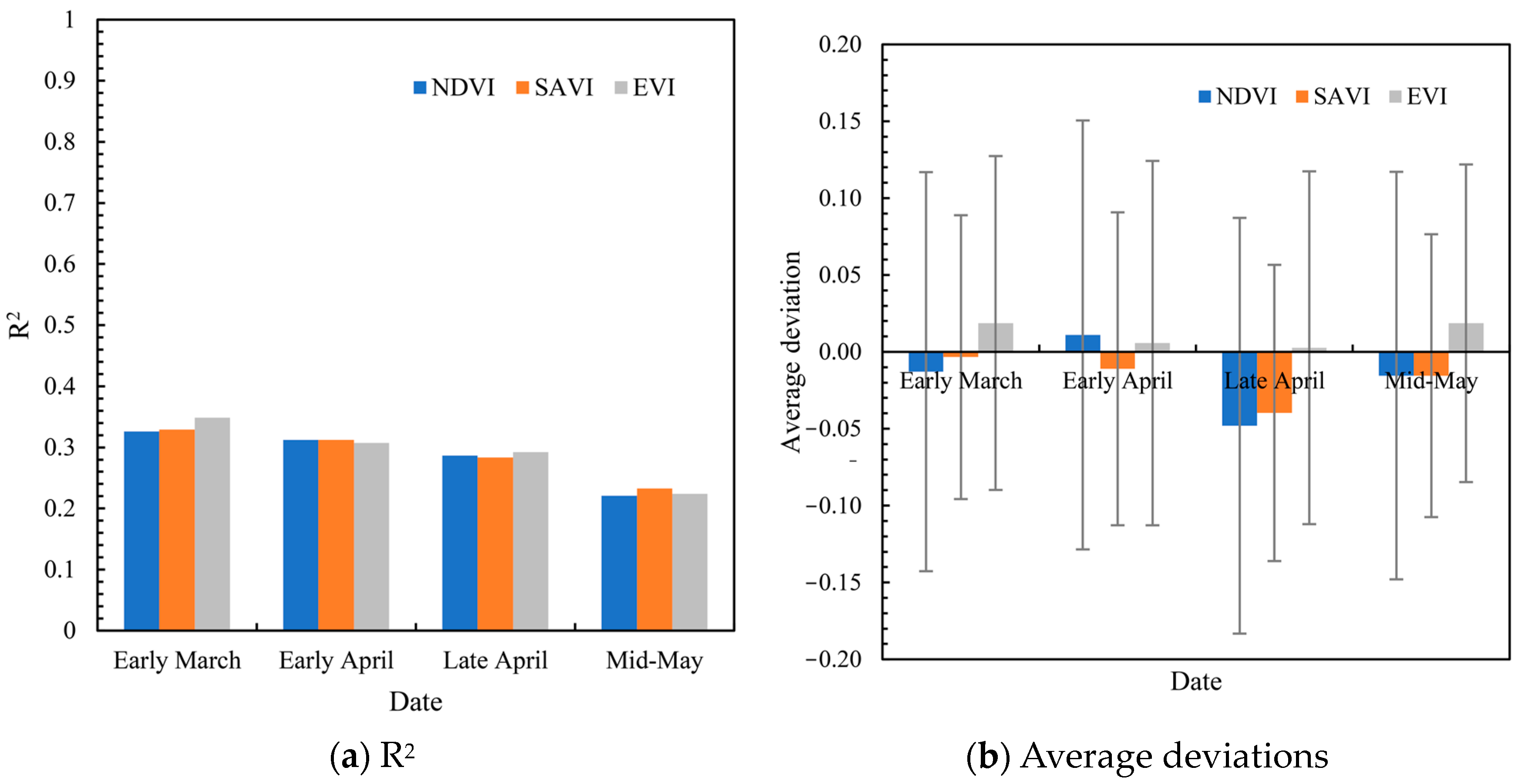

At present, most existing STDF studies are based on satellite sensors operated by the USA, such as MODIS, and most involve fusion between satellites that overpass in the morning. STDF studies that combine FY-3 series meteorological satellite data with FSR satellite data are rare, as are studies that fuse data from a morning-overpass satellite with data from an afternoon-overpass satellite, such as fusion between the VIs of the recently launched FY-3D satellite and those of the Sentinel-2 satellites. To extend the application of the FY-3D satellite to farmland-scale precision agricultural monitoring, an STDF model framework must be constructed for FY-3D and FSR satellite data such as Sentinel-2. The satellite data sources used in STDF models differ in their spectral response bands, overpass times, and the precisions of their atmospheric and geometric corrections, all of which affect the precision of the STDF models to varying degrees. The Sentinel-2 satellites pass over at approximately 10:00 a.m. local time, while the FY-3D satellite passes over at approximately 2:00 p.m. In addition, the spectral responses of the corresponding bands of the two sensors are slightly different. Therefore, for the spatiotemporal fusion of VIs (including the NDVI, SAVI and EVI) from Sentinel-2 and FY-3D, it is necessary to assess the consistency of the VIs from the two sensors, properly adjust the parameters of the STDF models, retrain the deep learning-based fusion models, and then evaluate the performance of each STDF model under fusion periods of different lengths.

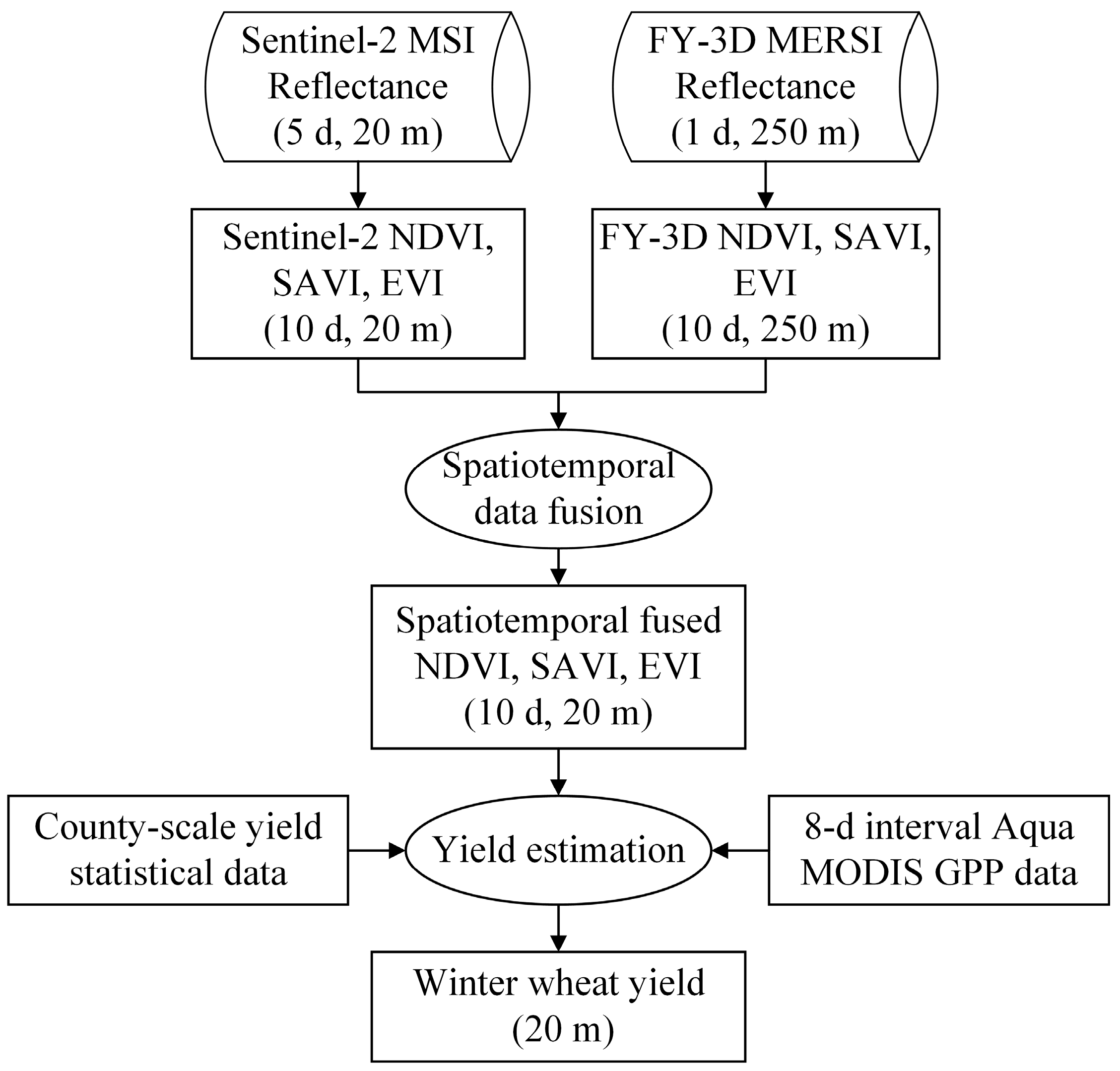

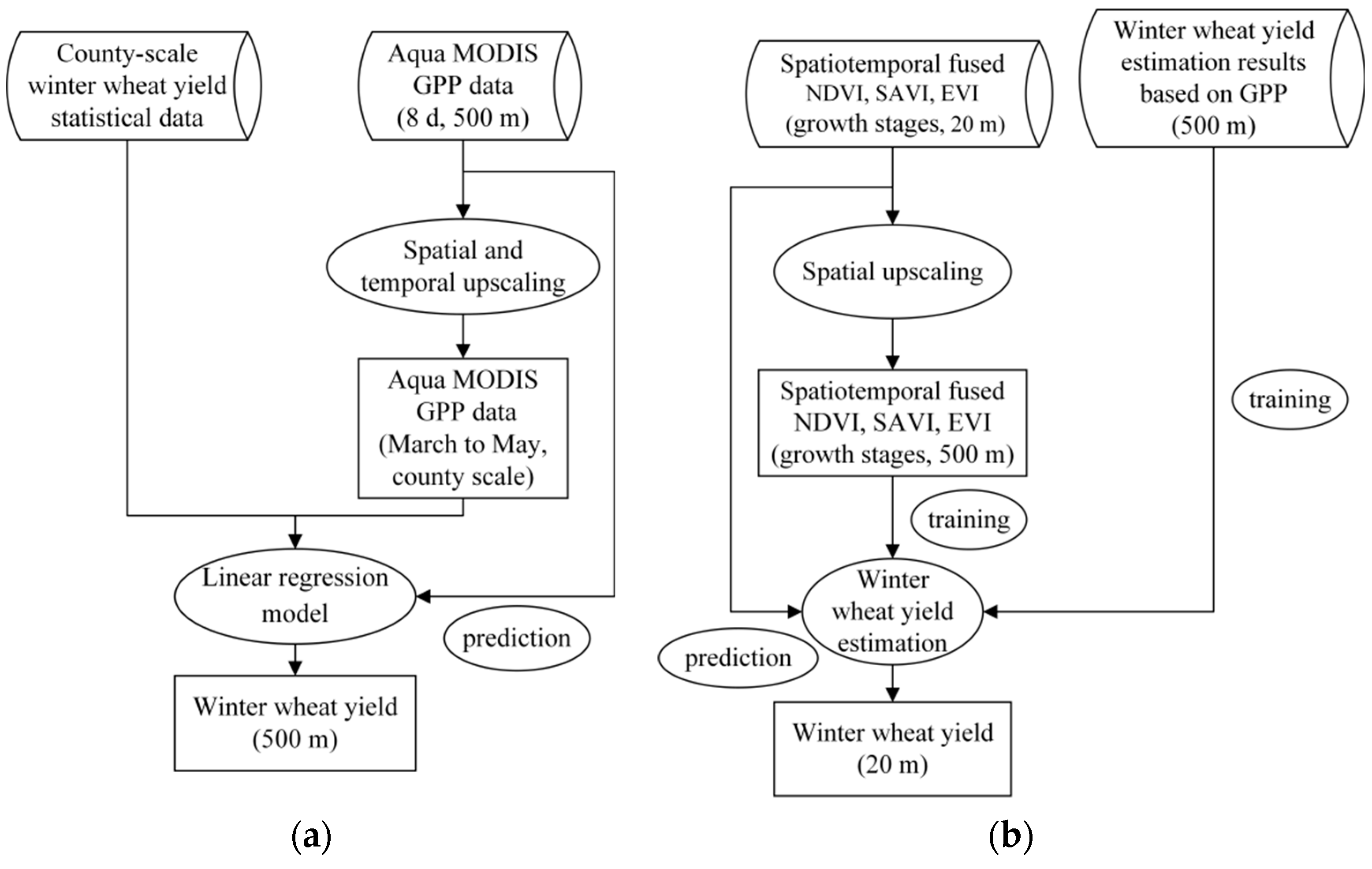

The main purpose of this study is to propose a framework for reconstructing time-series, farmland-scale FY-3D-based VI imagery (including the NDVI, SAVI and EVI) over the main WW growing period through fusion with Sentinel-2 data using a recently developed deep learning-based STDF model, and to use the reconstructed FY-3D VI imagery for farmland-scale WWY estimation. The Weihe Plain in Shaanxi Province, a typical WW planting area whose climate and farmland characteristics are similar to those of other WW planting areas in China, was selected as the study region. Specifically, the work comprises two steps: (1) constructing STDF models based on the ESTARFM and EDCSTFN to fuse the 10-day-interval VI imagery from Sentinel-2 and FY-3D, comparing the precision of the two models under fusion periods of different lengths, and selecting the model with the optimal precision to reconstruct farmland-scale VI imagery for each 10-day period during the main WW growing period; (2) establishing a linear regression model to estimate WWY imagery at a 500 m SR based on Aqua MODIS gross primary productivity (GPP) data and county-scale WWY data, and then constructing a back propagation neural network (BPNN) model based on the reconstructed farmland-scale FY-3D-based VI imagery and the 500 m SR Y estimates to realize farmland-scale WWY estimation.
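The linear regression part of step (2) can be illustrated with a minimal sketch. It assumes, for illustration only, that county-mean GPP is regressed against county-scale yield by ordinary least squares and that the fitted line is then applied to individual 500 m GPP pixels; the exact predictors and aggregation used in this study are not specified in this section. All names and numbers below are hypothetical, and the values are synthetic and unitless.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic county-level samples lying exactly on y = 2x + 1 (not real data).
county_mean_gpp = [1.0, 2.0, 3.0, 4.0]
county_yield = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(county_mean_gpp, county_yield)

# Apply the county-scale relation to individual (synthetic) 500 m GPP pixels.
pixel_gpp = [1.5, 2.5]
pixel_yield = [slope * g + intercept for g in pixel_gpp]
print(slope, intercept, pixel_yield)
```

Under these assumptions, the pixel-level yield estimates would then serve as the training target for the BPNN, with the reconstructed farmland-scale VI imagery as its input.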