Three-Dimensional Geometric-Physical Modeling of an Environment with an In-House-Developed Multi-Sensor Robotic System

Abstract

1. Introduction

2. Related Work

2.1. Geometric Modeling

2.2. Physical Modeling

2.3. Information Fusion of Sensors

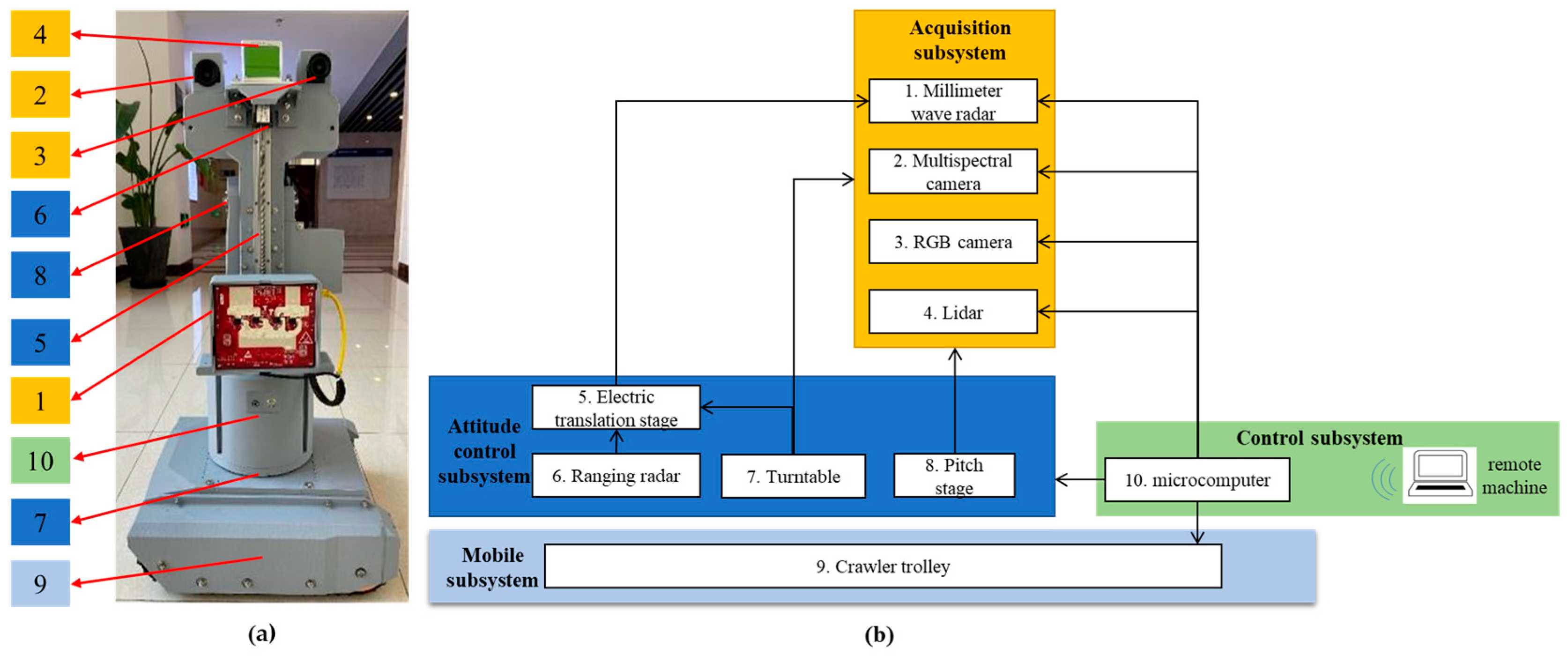

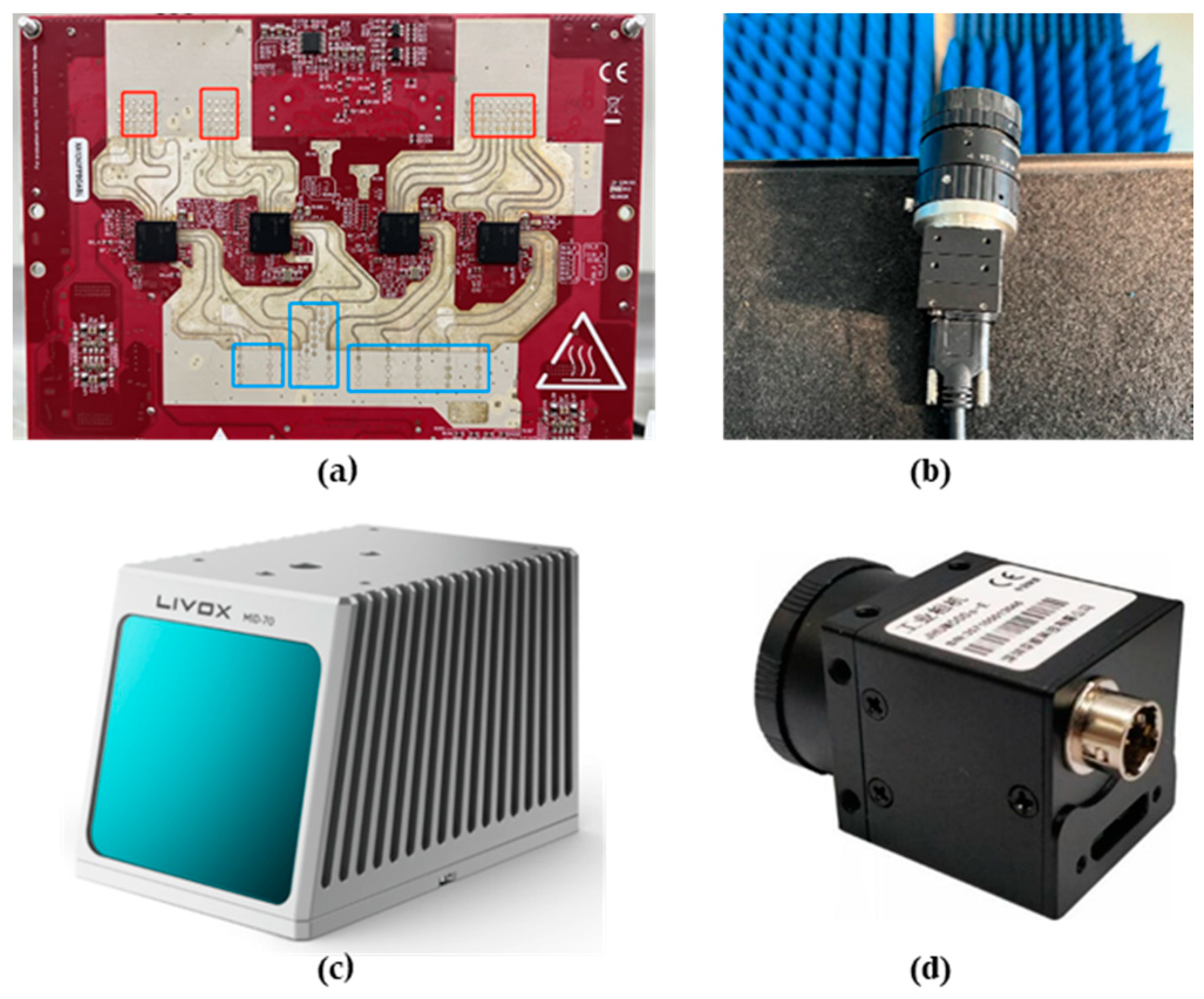

3. FUSEN: The Multi-Sensor Robotic System for Enhanced Environmental Perception

3.1. FUSEN System Architecture

3.1.1. Subsystem Composition

3.1.2. Sensor Installation

3.1.3. Sensor Parameters

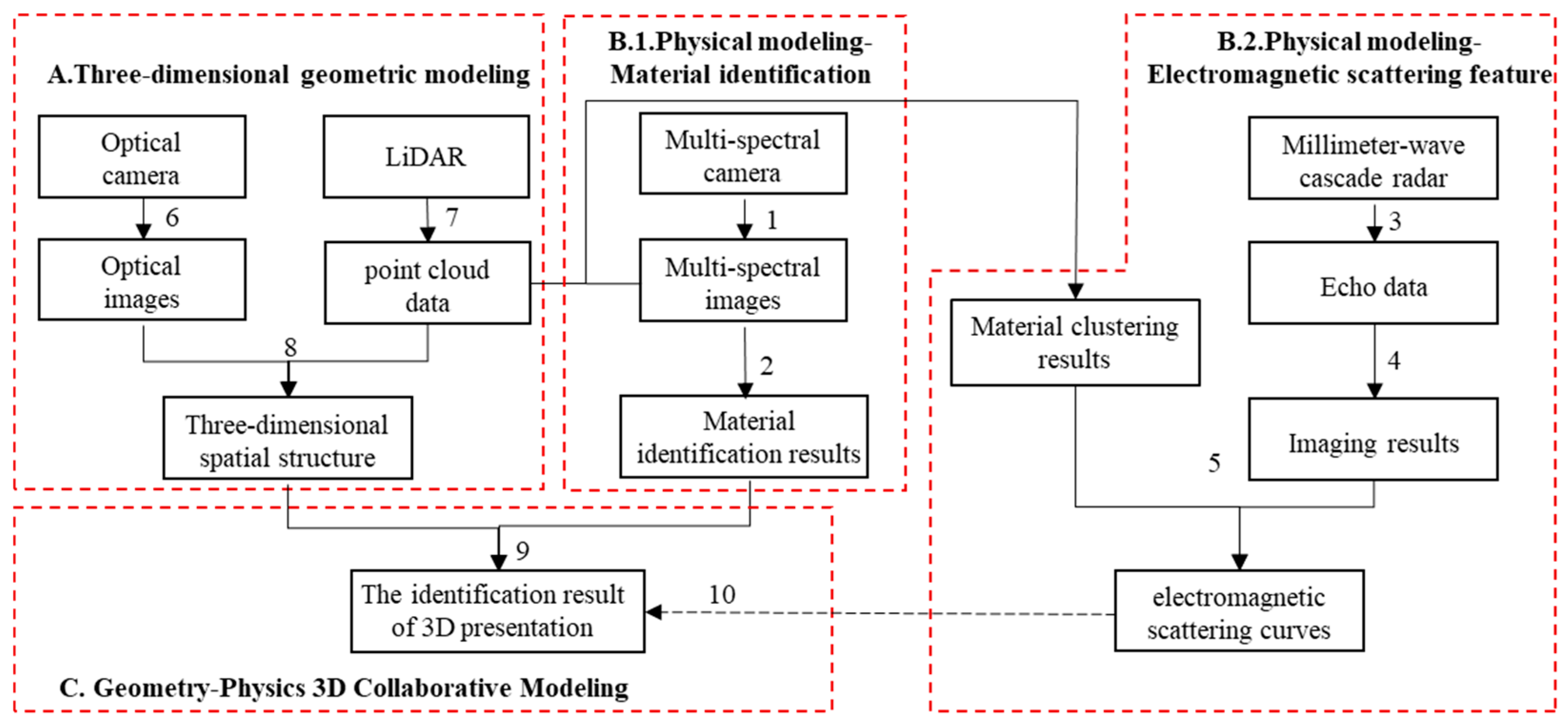

3.2. FUSEN Workflow

4. Multi-Sensor Acquisition of Environmental Physical Properties

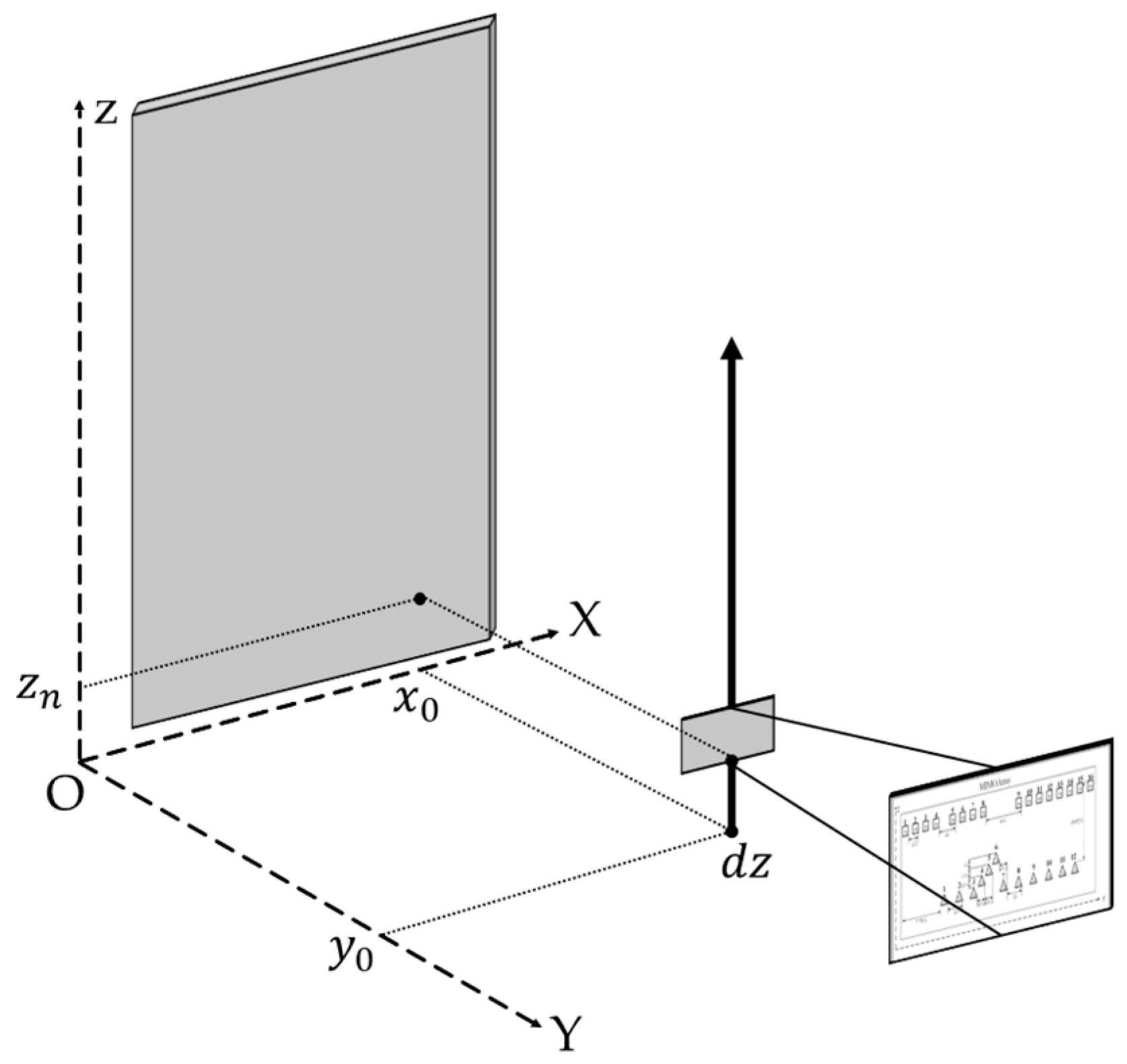

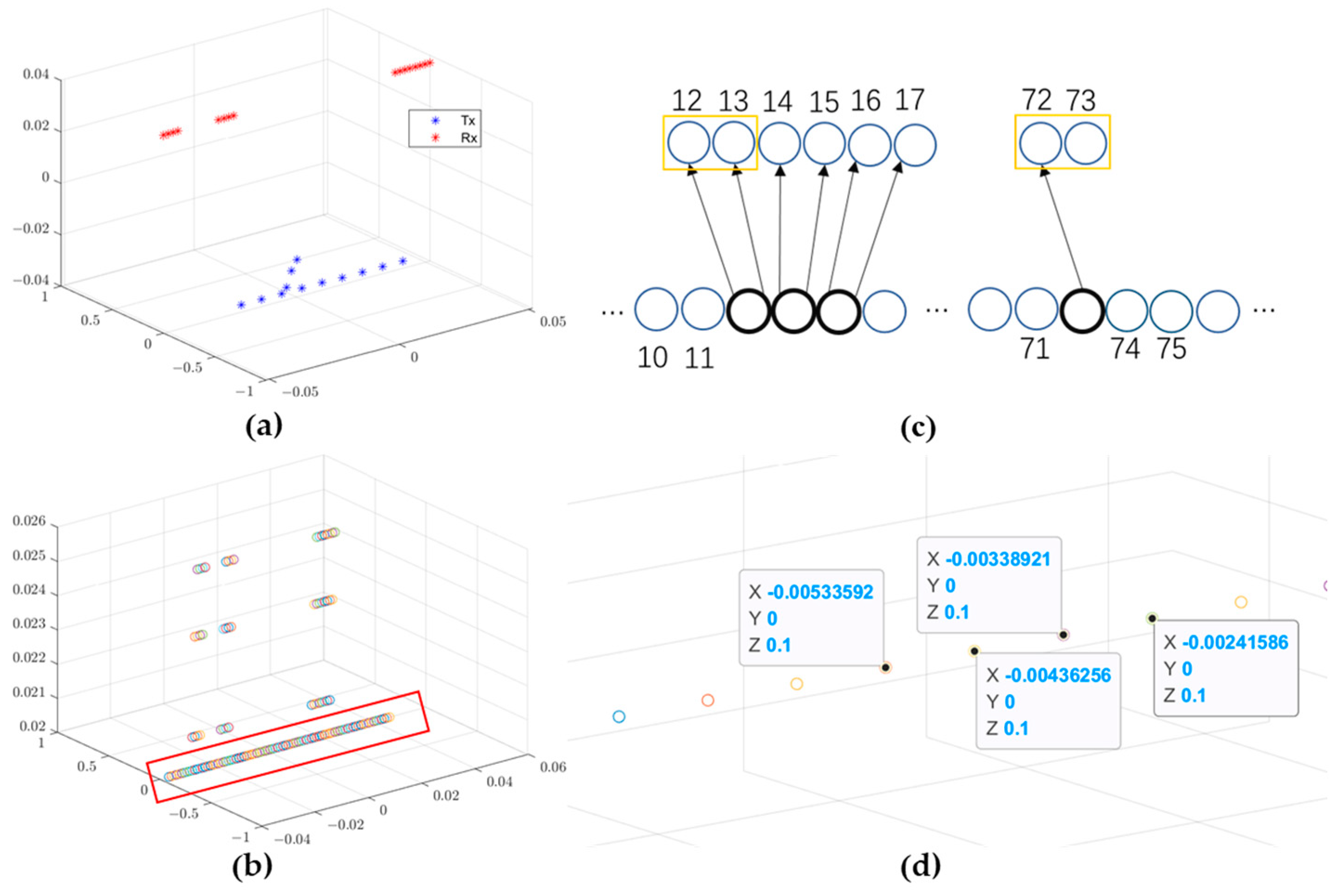

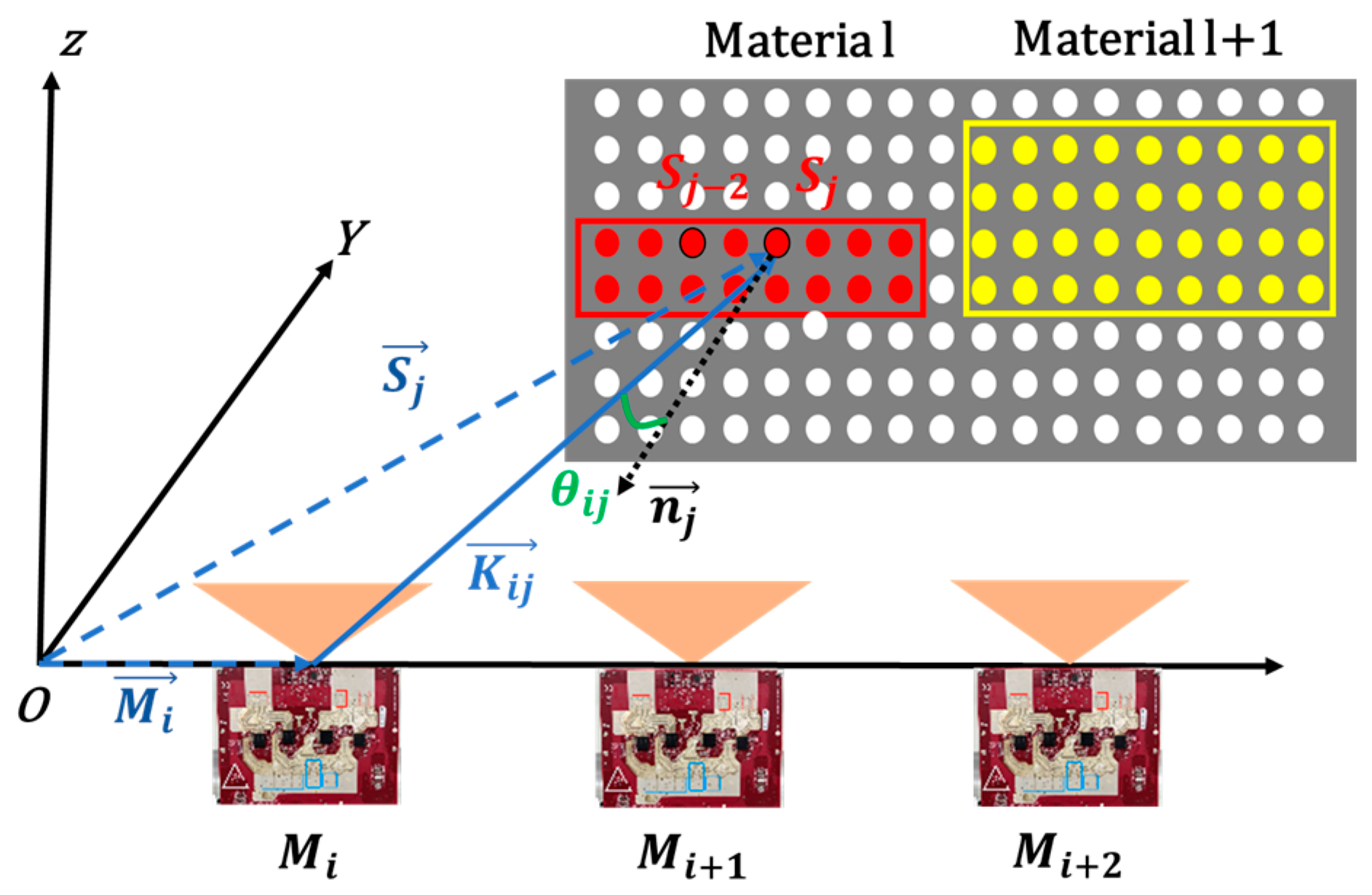

4.1. Millimeter-Wave Radar Imaging


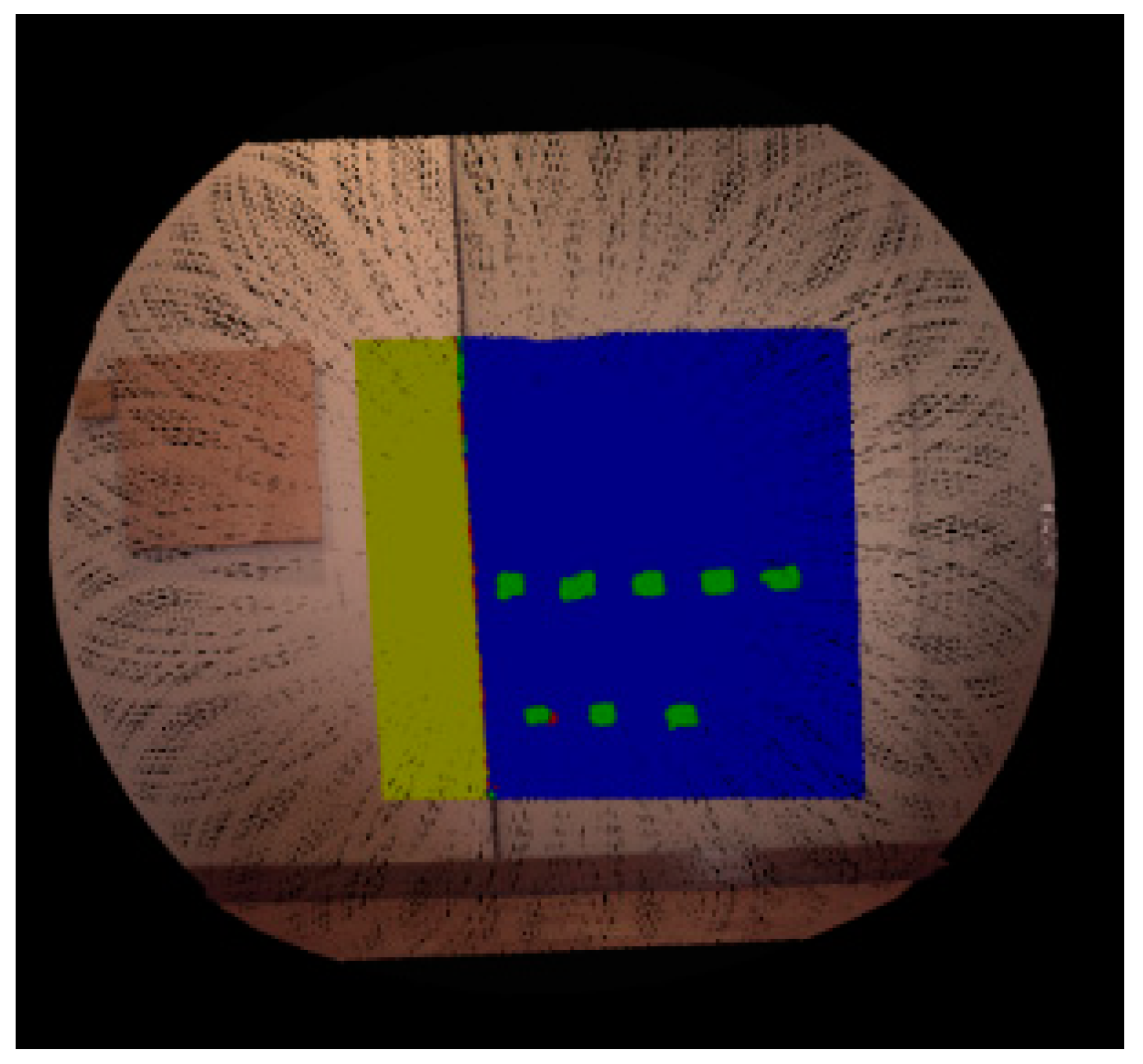

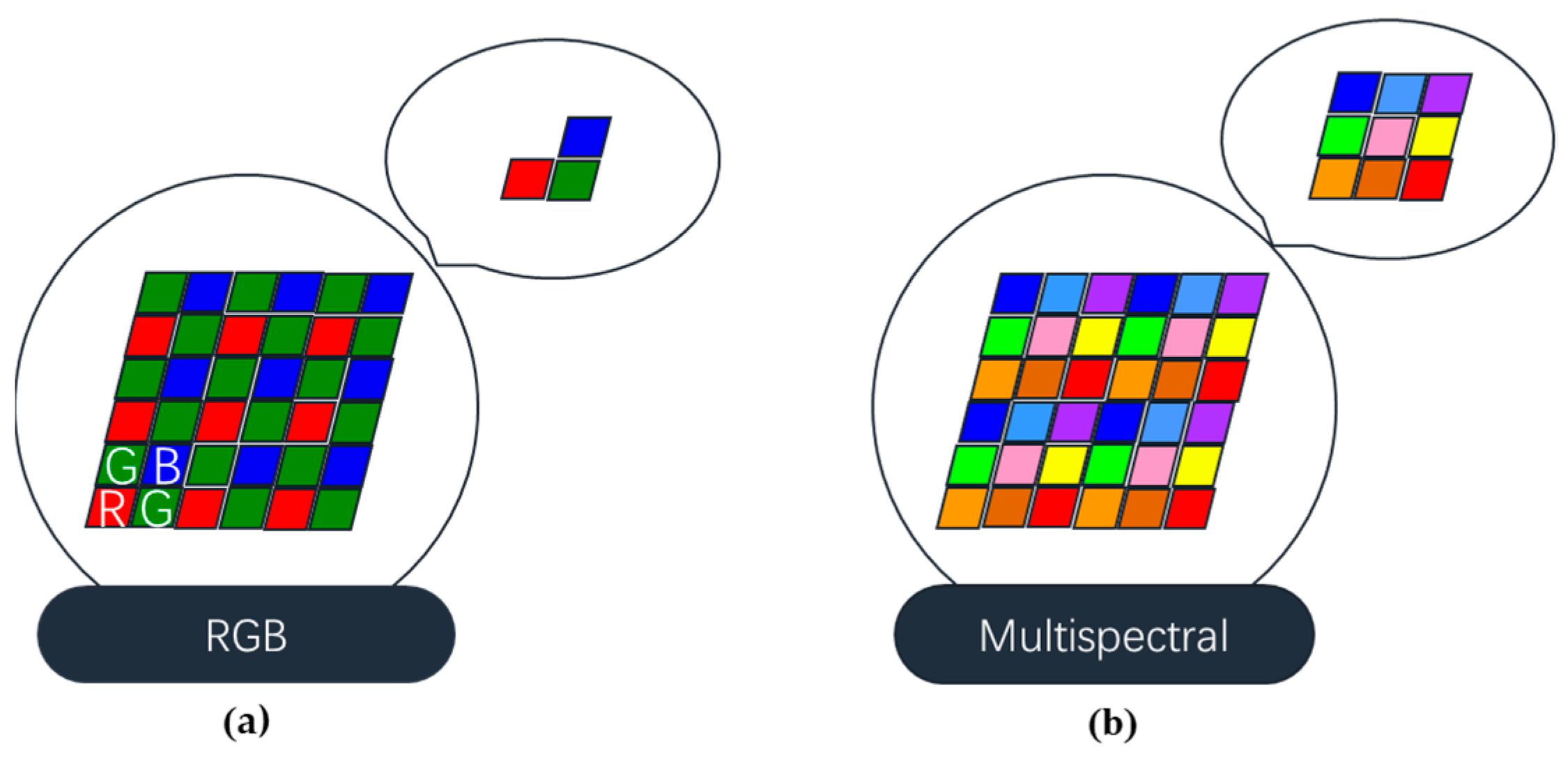

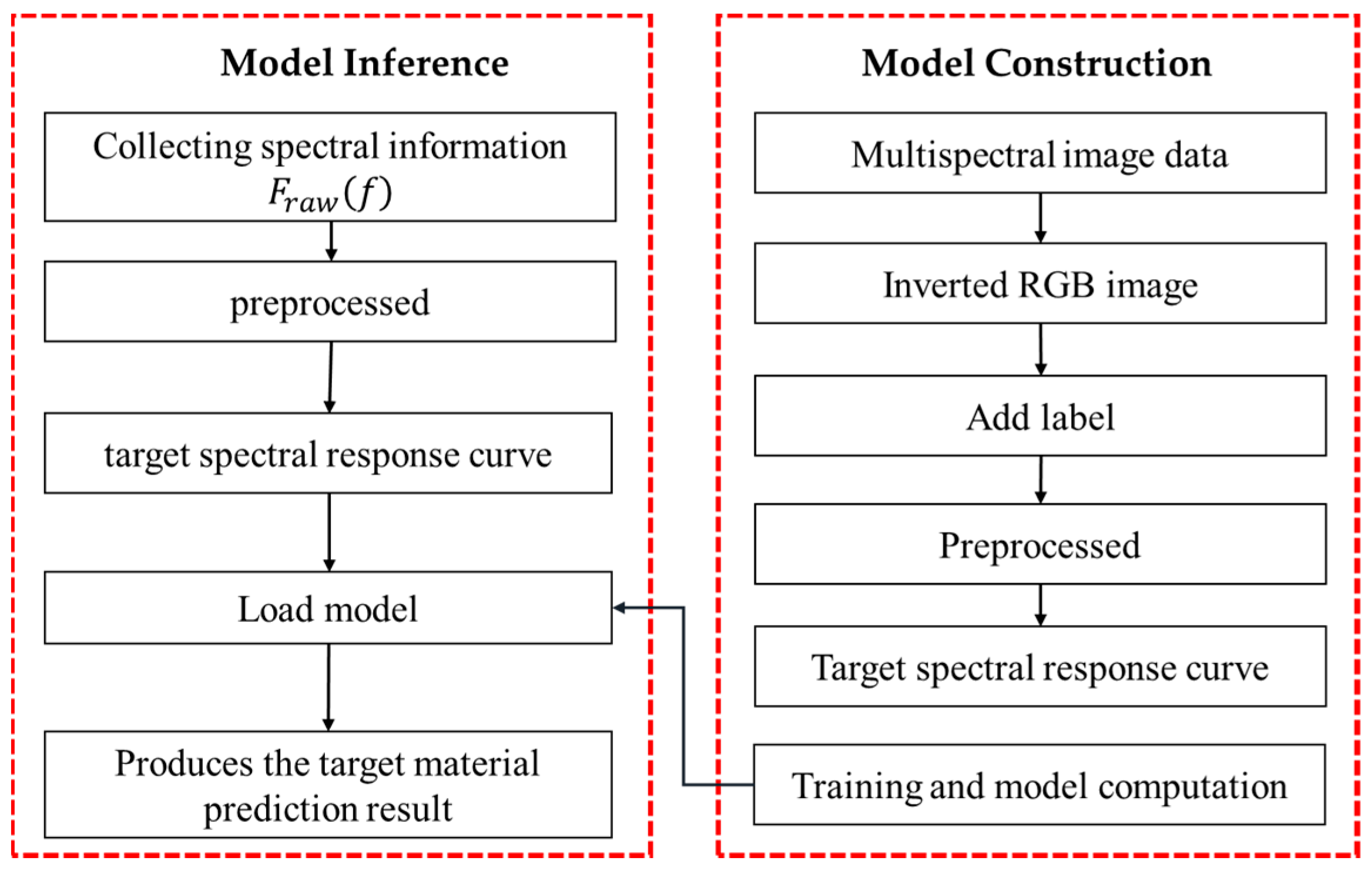

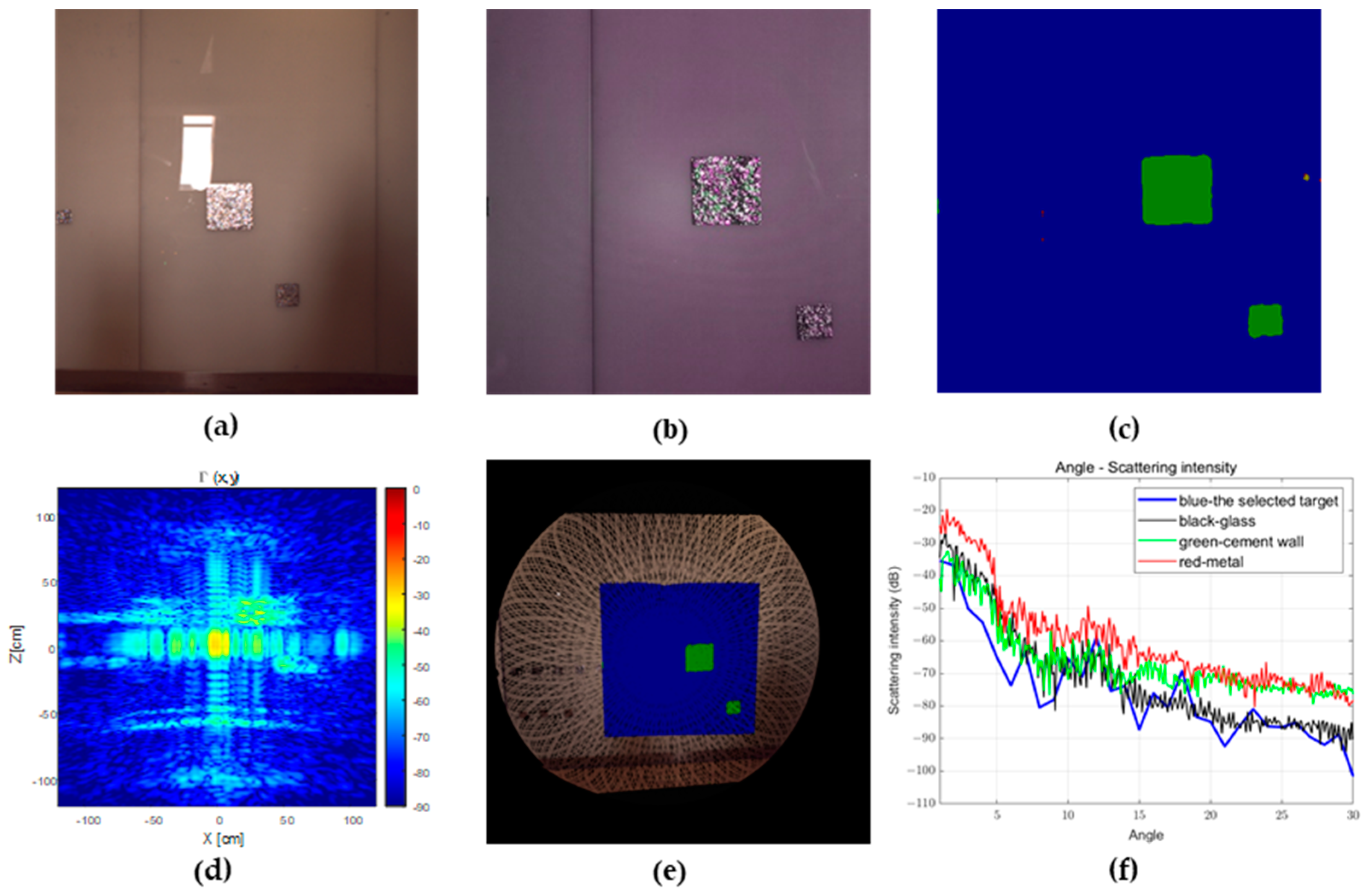

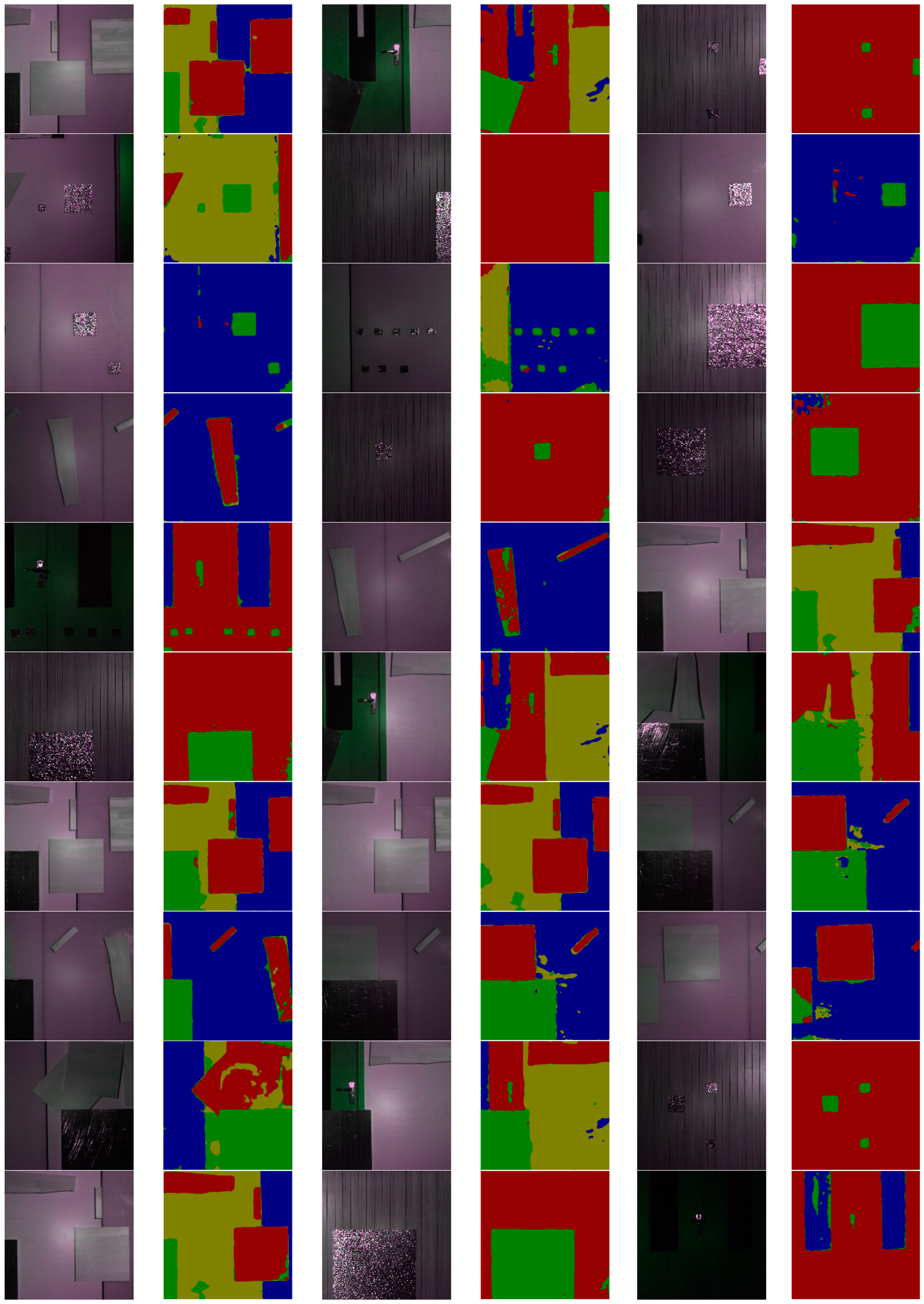

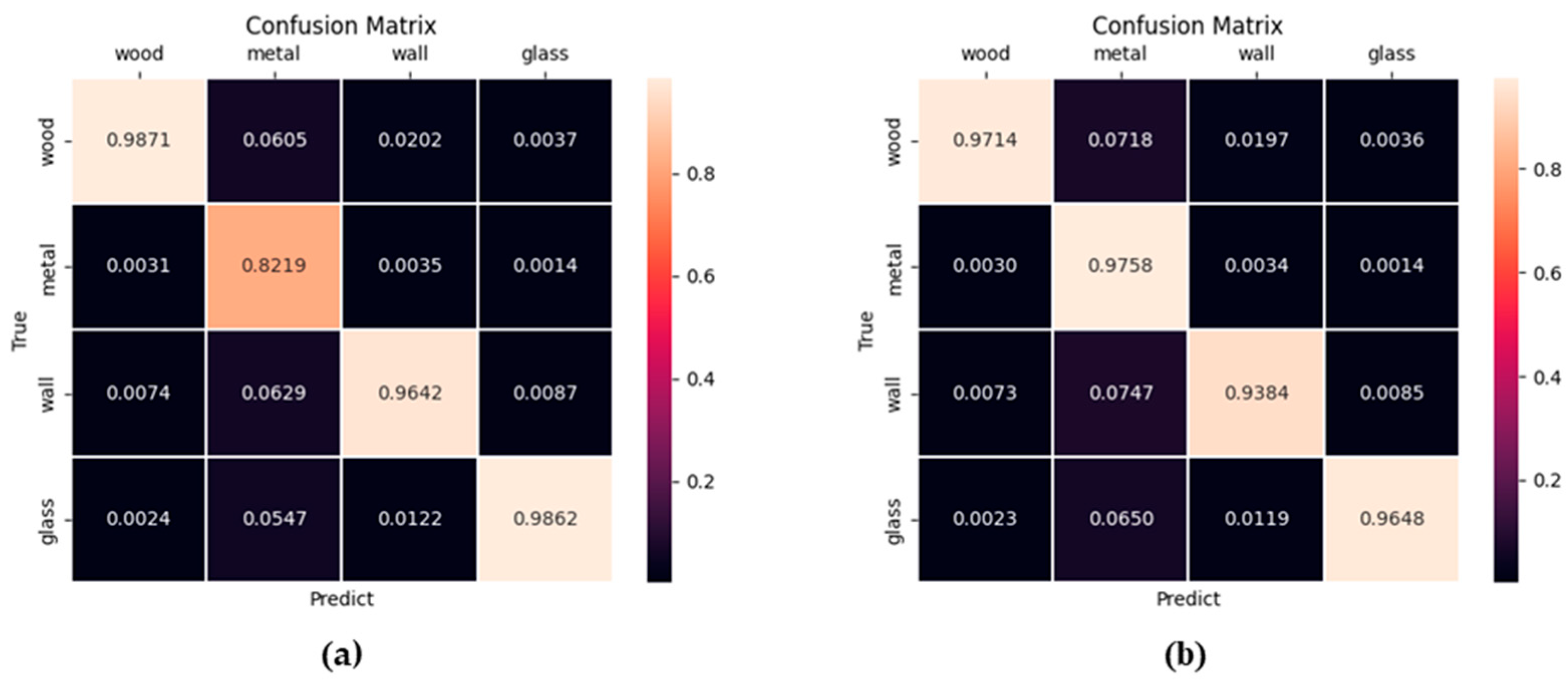

4.2. Material Recognition through Multispectral Data

5. Geometric Modeling of an Environment Based on Multi-Sensor Integration

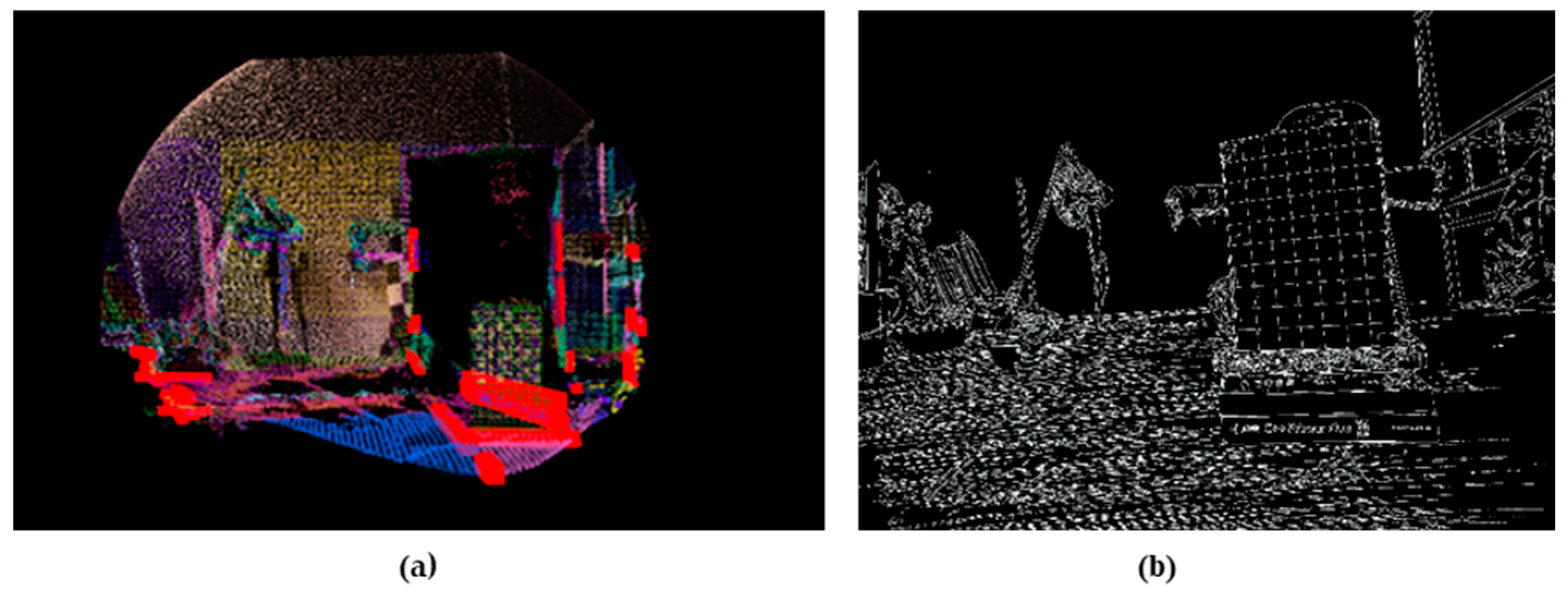

5.1. Registration of Optical Camera and LiDAR

- Edge feature extraction: The point cloud is divided into 0.1 m × 0.1 m voxels. For each voxel, the RANSAC algorithm is applied repeatedly to fit and extract the planes contained within it. Planes whose mutual angle lies within a certain range are paired, and the intersection line of each pair is solved, as shown in Figure 11a. In the figure, squares of the same color indicate the voxels that were set;

- Edge matching: Each extracted LiDAR point cloud edge must be matched with its corresponding edge in the RGB image. Multiple points are sampled on each extracted LiDAR edge, as shown in Figure 11b, and each sampled point is transformed into the camera coordinate system using the preliminary extrinsic parameter matrix obtained earlier;

- Mismatch elimination: In addition to the sampled LiDAR edge points, the edge direction is projected onto the image plane, and its perpendicularity with the edge features is verified. This effectively eliminates false matches where two non-parallel lines lie close together on the image plane;

- Calculation of the exact extrinsic parameter matrix.
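
The geometric core of the first two steps can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`plane_intersection`, `sample_edge`, `project_to_image`), the plane parameterization n·x + d = 0, the distortion-free pinhole model, and all numeric values are hypothetical. The sketch assumes the planes have already been fitted (for example by RANSAC) and that the preliminary external (extrinsic) parameter matrix is available as a 4 × 4 transform.

```python
import numpy as np


def plane_intersection(n1, d1, n2, d2, eps=1e-8):
    """Intersection line of the planes n1.x + d1 = 0 and n2.x + d2 = 0.

    Returns (point, unit_direction), or None when the planes are nearly parallel.
    """
    n1 = np.asarray(n1, dtype=float)
    n2 = np.asarray(n2, dtype=float)
    direction = np.cross(n1, n2)
    norm = np.linalg.norm(direction)
    if norm < eps:
        return None
    # The third row selects the point on the line that is closest to the origin.
    A = np.vstack([n1, n2, direction])
    b = np.array([-d1, -d2, 0.0])
    point = np.linalg.solve(A, b)
    return point, direction / norm


def sample_edge(point, direction, half_length, n_samples):
    """Evenly spaced 3D samples on the edge segment centered at `point`."""
    t = np.linspace(-half_length, half_length, n_samples)
    return point + t[:, None] * direction


def project_to_image(points_lidar, T_cam_lidar, K):
    """Map N x 3 LiDAR points to pixel coordinates (u, v).

    T_cam_lidar: 4 x 4 extrinsic matrix (LiDAR frame -> camera frame).
    K: 3 x 3 pinhole intrinsic matrix. Lens distortion is ignored, and all
    points are assumed to lie in front of the camera (positive depth).
    """
    pts = np.asarray(points_lidar, dtype=float)
    homogeneous = np.hstack([pts, np.ones((pts.shape[0], 1))])
    cam = (T_cam_lidar @ homogeneous.T).T[:, :3]
    uvw = (K @ cam.T).T
    return uvw[:, :2] / uvw[:, 2:3]


# Illustrative values only: the planes x = 0 and z = 2 meet in a line 2 m ahead.
point, direction = plane_intersection([1, 0, 0], 0.0, [0, 0, 1], -2.0)
samples = sample_edge(point, direction, half_length=0.5, n_samples=3)
T = np.eye(4)  # hypothetical preliminary extrinsic matrix
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
pixels = project_to_image(samples, T, K)
print(pixels)
```

The projected samples are what would be compared against the image edges. The mismatch check and the final optimization of the extrinsic matrix are left out of this sketch.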

5.2. Construction of 3D Geometric Model

- Voxel-grid filtering [25], which downsamples the point cloud so that it is more regular and ordered than the original data;

- Statistical outlier removal, which eliminates outliers according to a preset outlier threshold;

- The greedy algorithm [26], which makes the locally optimal choice at each step, selects the points to be connected according to certain topological and geometric constraints and thereby realizes the three-dimensional reconstruction of the filtered point cloud.
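
The two filtering steps above can be illustrated with a small NumPy sketch. It rests on assumptions that the text does not state: the voxel filter replaces each occupied voxel by the centroid of its points, and the outlier test compares each point's mean distance to its `k` nearest neighbors against the global mean plus `std_ratio` standard deviations. The function names, parameters, and brute-force neighbor search are hypothetical and only suitable for small clouds. The greedy surface reconstruction step is not covered here.

```python
import numpy as np


def voxel_grid_filter(points, voxel_size):
    """Downsample by replacing the points in each occupied voxel with their centroid."""
    pts = np.asarray(points, dtype=float)
    voxel_index = np.floor(pts / voxel_size).astype(np.int64)
    # `inverse` maps every point to the row of its voxel in the sorted unique list.
    _, inverse = np.unique(voxel_index, axis=0, return_inverse=True)
    inverse = inverse.ravel()
    n_voxels = int(inverse.max()) + 1
    sums = np.zeros((n_voxels, 3))
    np.add.at(sums, inverse, pts)
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]


def statistical_outlier_removal(points, k=8, std_ratio=1.0):
    """Drop points whose mean k-nearest-neighbor distance is unusually large."""
    pts = np.asarray(points, dtype=float)
    # Brute-force pairwise distances: O(N^2) memory, acceptable for a sketch.
    diff = pts[:, None, :] - pts[None, :, :]
    dist = np.linalg.norm(diff, axis=2)
    dist.sort(axis=1)
    mean_knn = dist[:, 1:k + 1].mean(axis=1)  # column 0 is the self-distance
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return pts[mean_knn <= threshold]


# Illustrative data: a 3 x 3 x 3 grid with 1 m spacing plus one distant stray point.
axis = np.arange(3.0)
grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1).reshape(-1, 3)
cloud = np.vstack([grid, [[100.0, 100.0, 100.0]]])

cleaned = statistical_outlier_removal(cloud, k=4, std_ratio=1.0)
downsampled = voxel_grid_filter(cleaned, voxel_size=2.0)
print(len(cloud), len(cleaned), len(downsampled))
```

The order of the two filters and the parameter values are choices made for this example. In practice, the voxel size and outlier threshold would be tuned to the sensor's point density.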

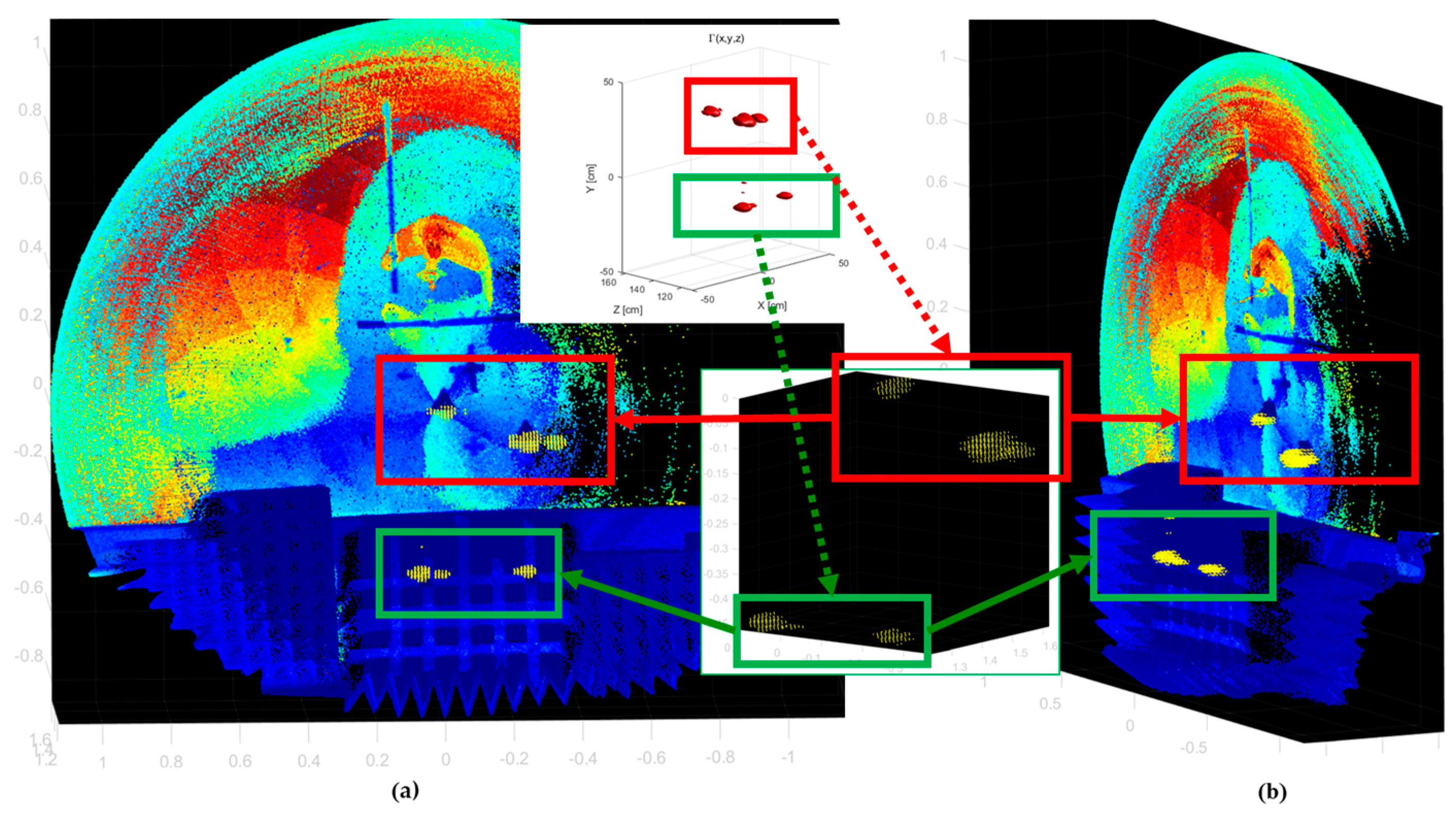

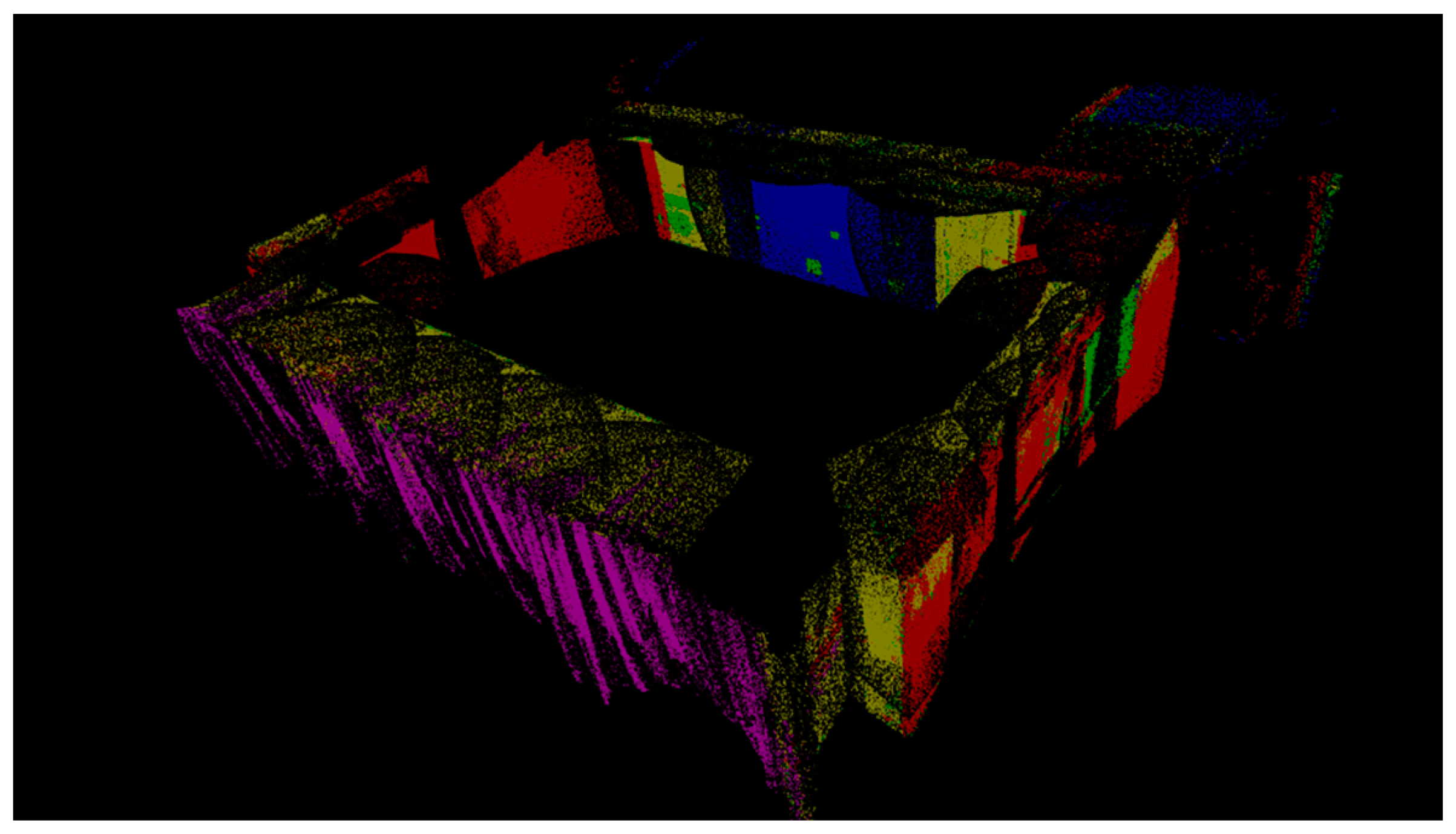

6. Multi-Sensor Data Fusion

7. Experimental Results

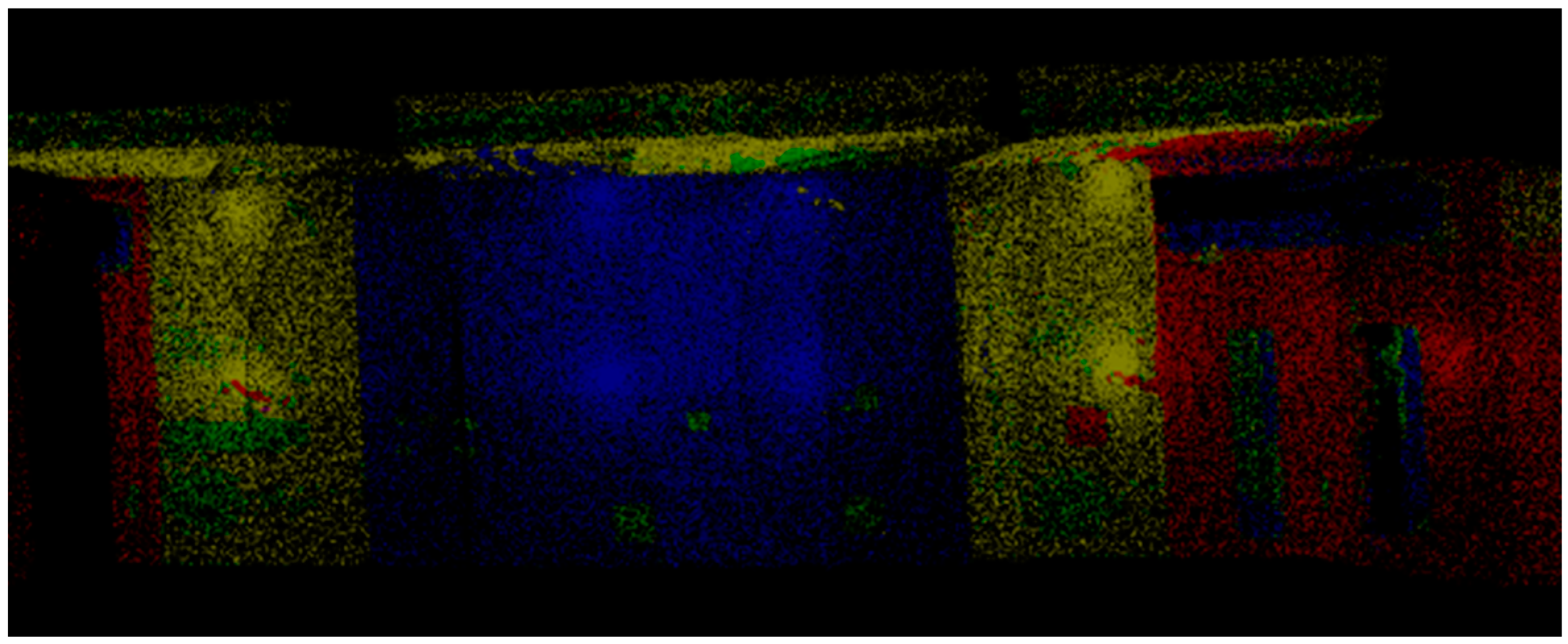

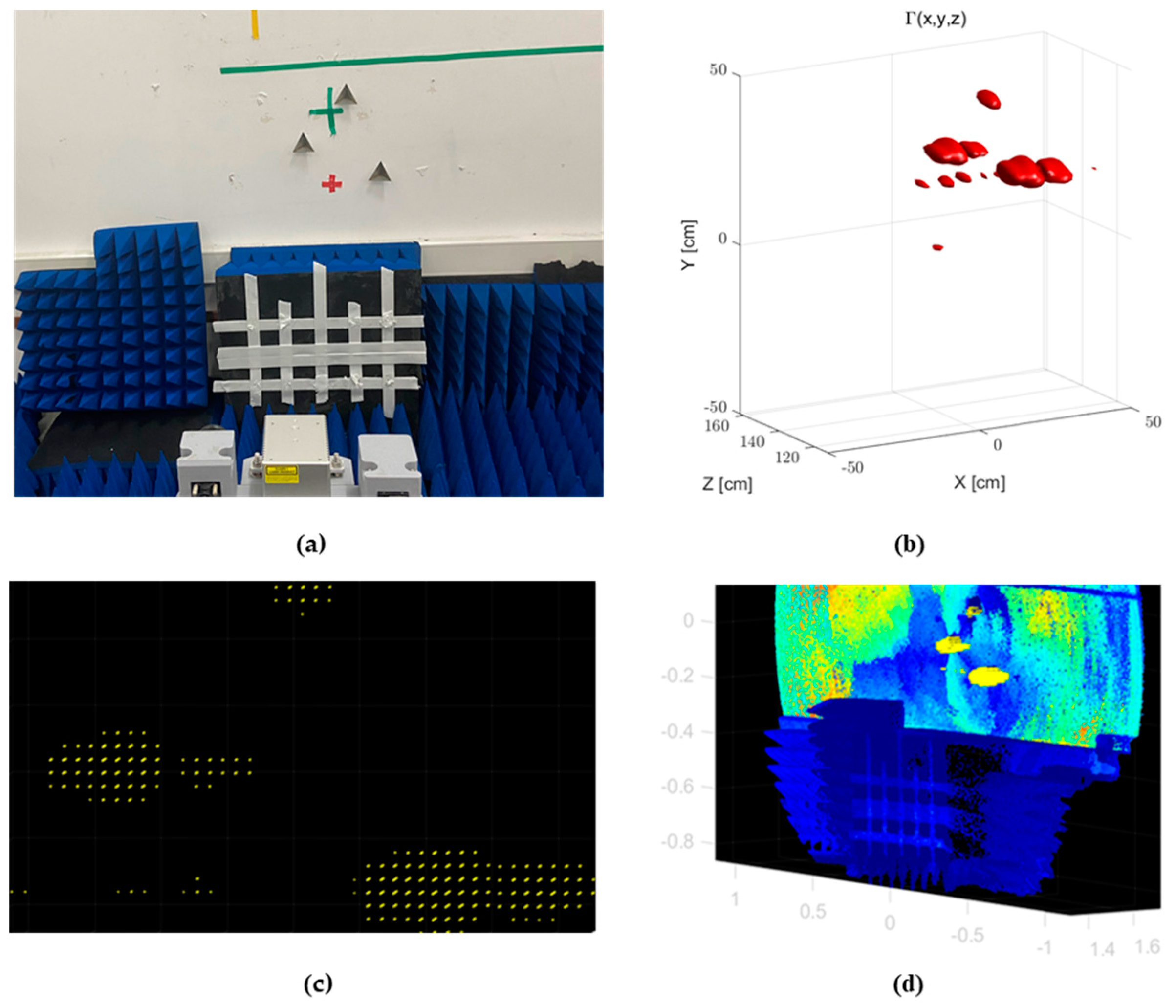

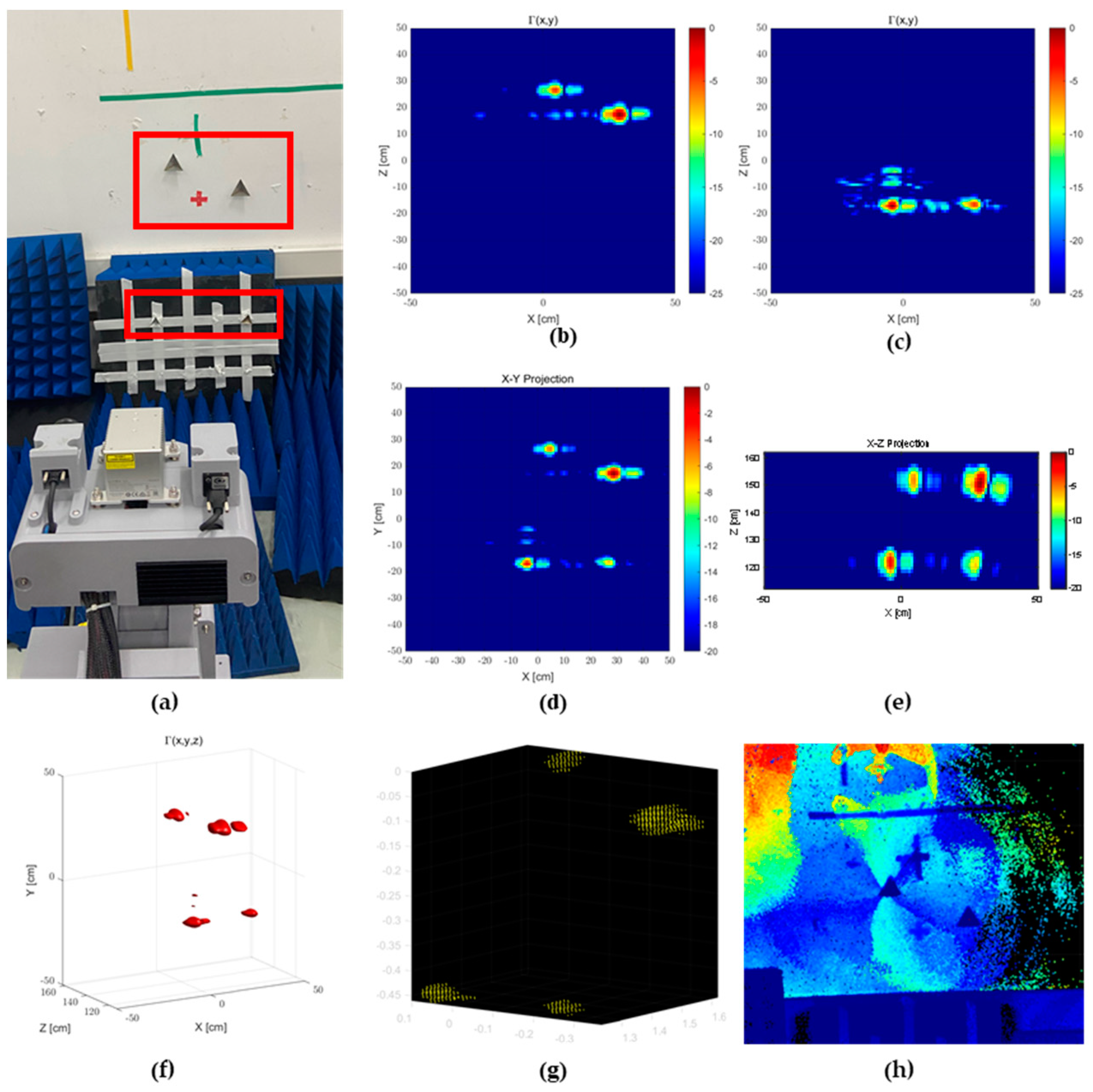

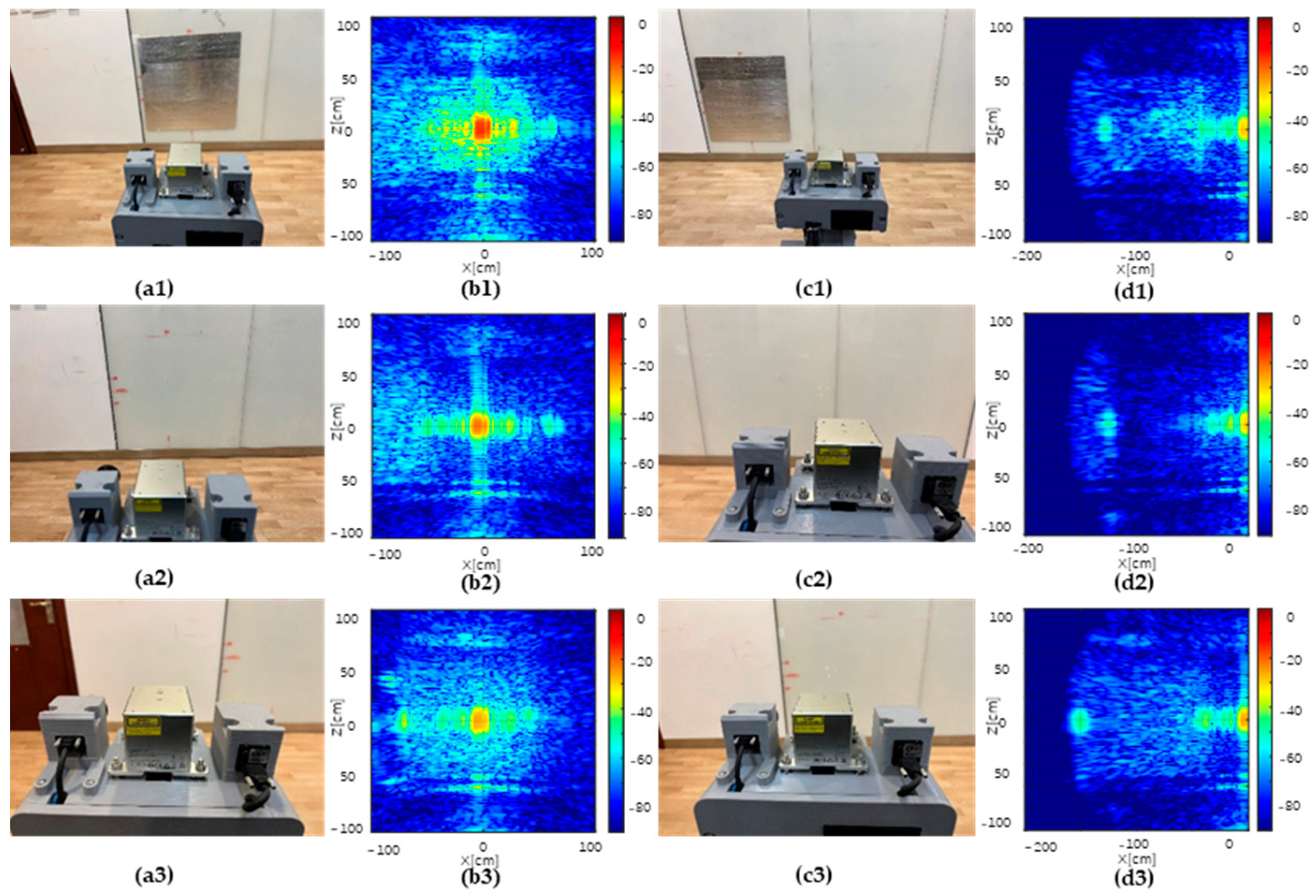

7.1. Electromagnetic Characteristic Sample Imaging Experiment

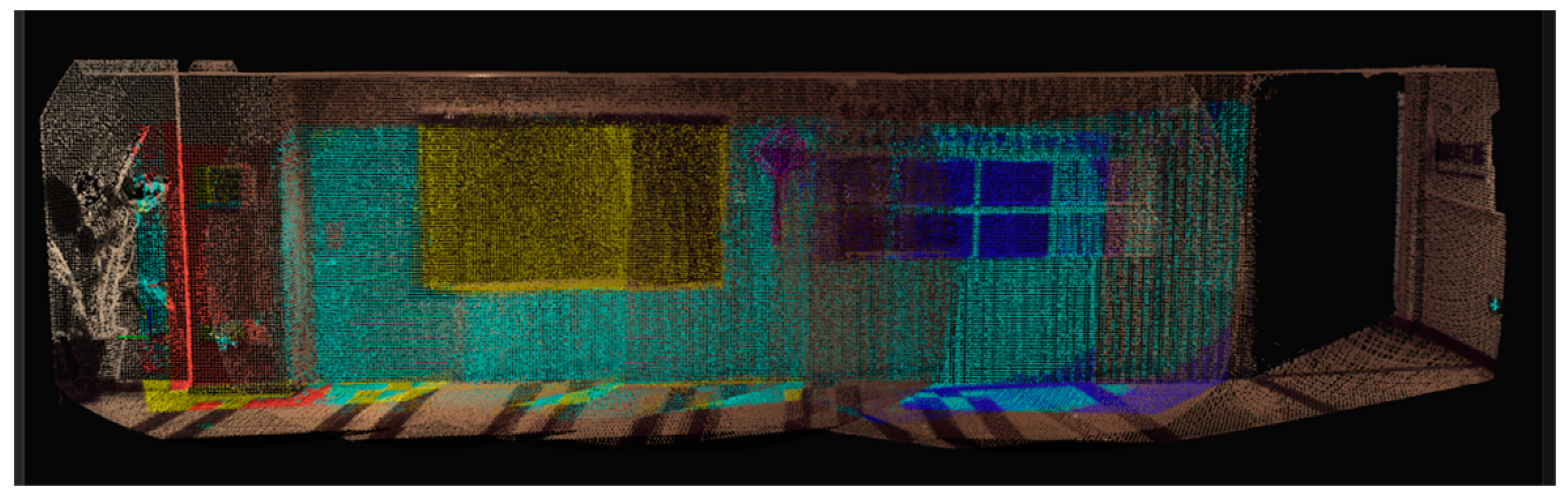

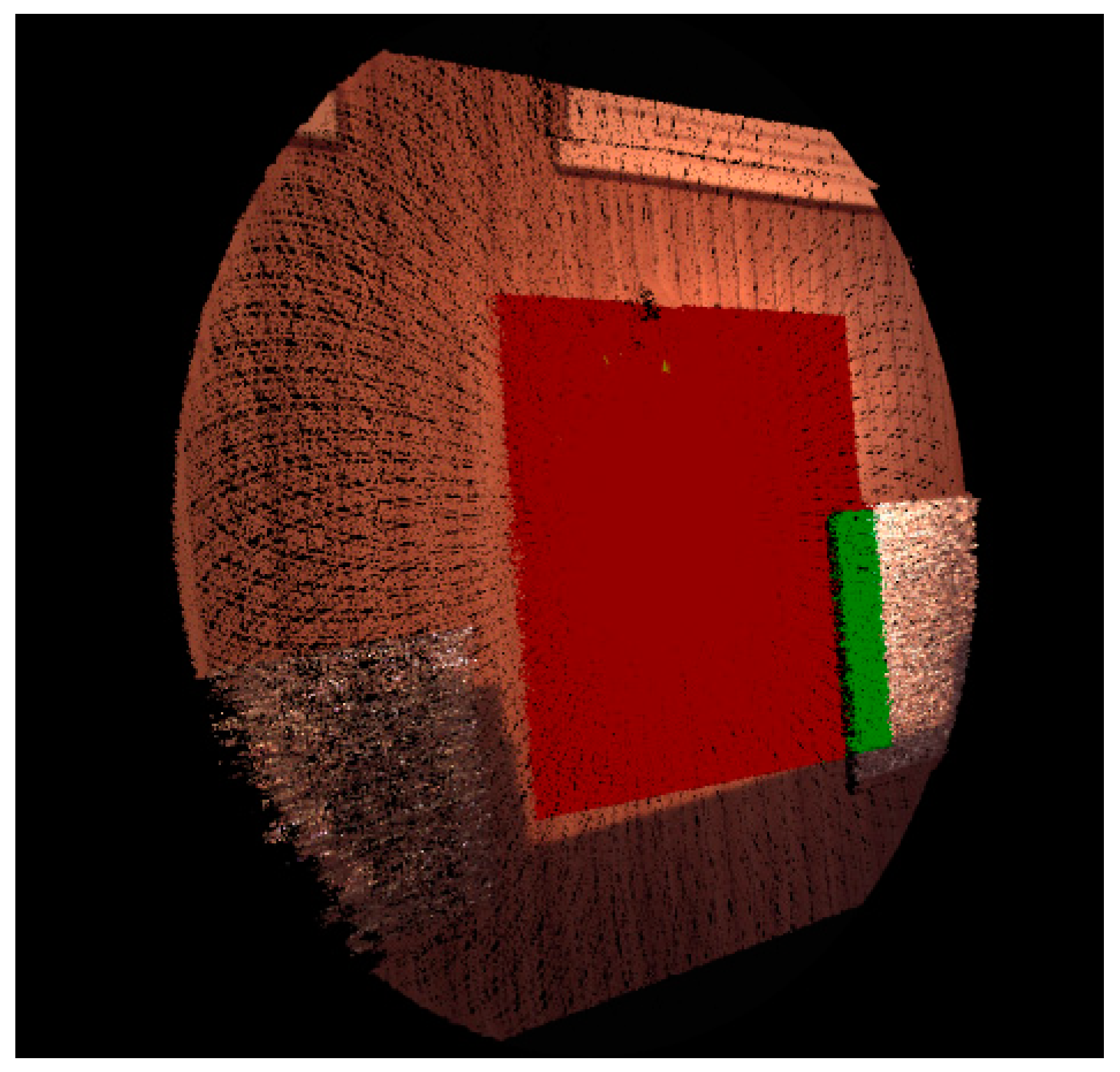

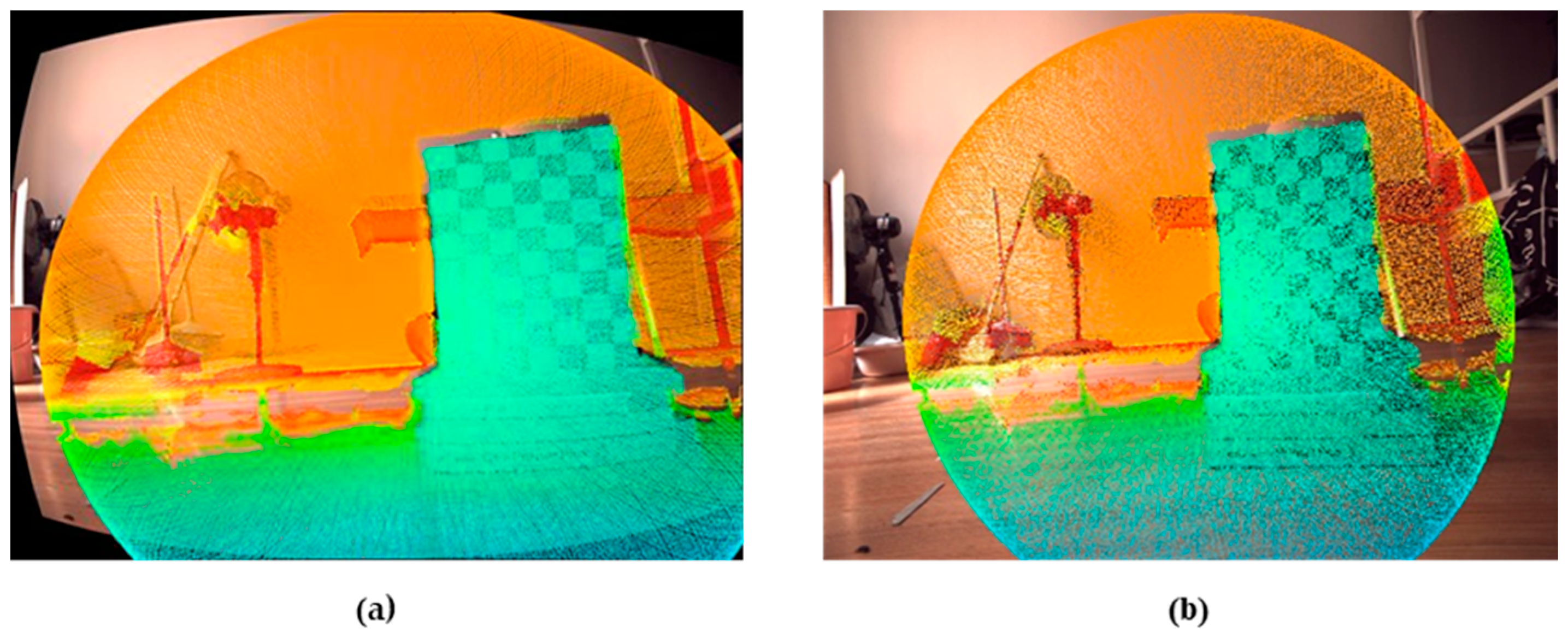

7.2. Small-Area Environment Experiment

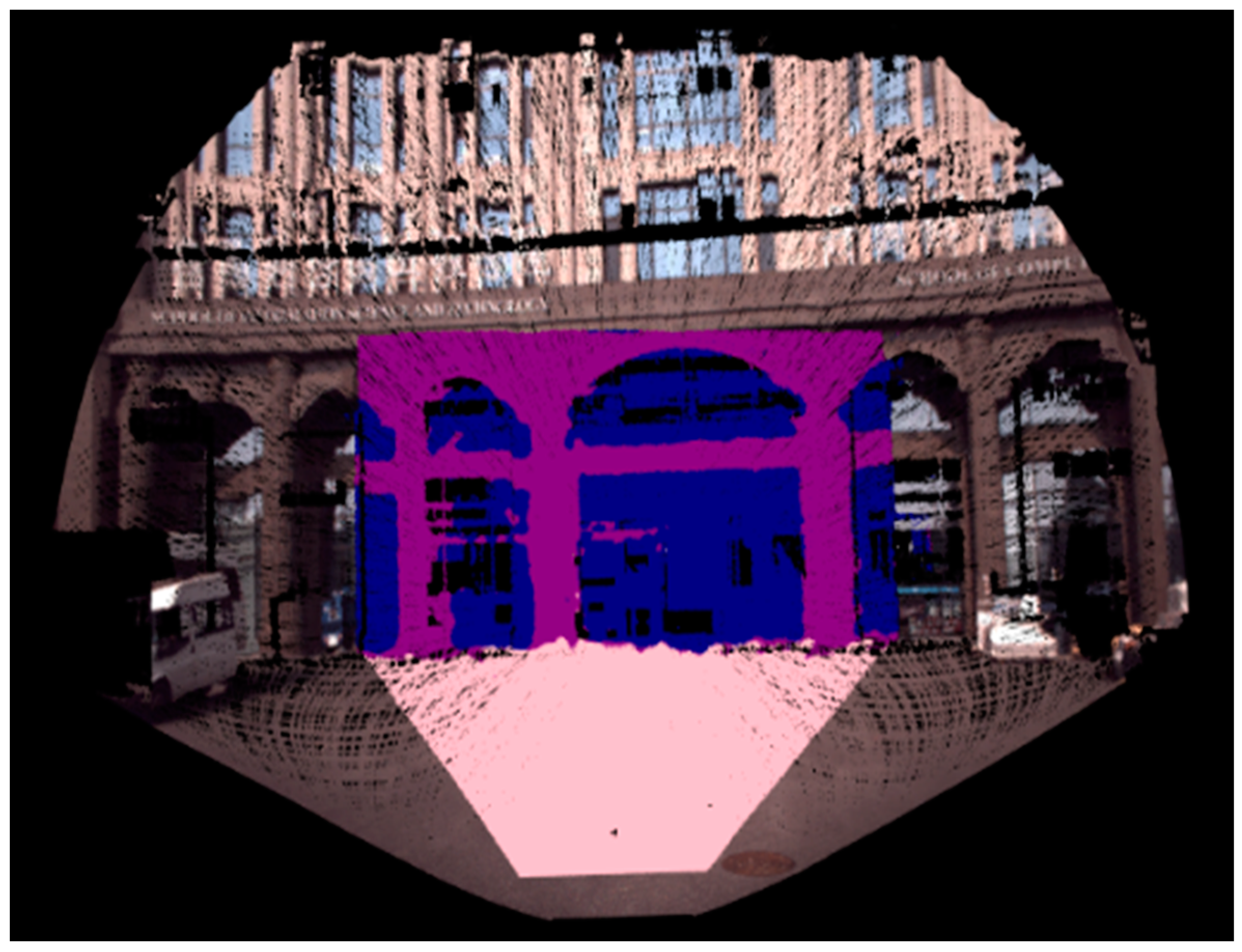

7.3. Whole-Room Experiment

7.4. Material Recognition Accuracy Experiment

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

1. Herrera, D.; Escudero-Villa, P.; Cárdenas, E.; Ortiz, M.; Varela-Aldás, J. Combining Image Classification and Unmanned Aerial Vehicles to Estimate the State of Explorer Roses. AgriEngineering 2024, 6, 1008–1021.

2. Hercog, D.; Lerher, T.; Truntič, M.; Težak, O. Design and Implementation of ESP32-Based IoT Devices. Sensors 2023, 23, 6739.

3. Bae, I.; Hong, J. Survey on the Developments of Unmanned Marine Vehicles: Intelligence and Cooperation. Sensors 2023, 23, 4643.

4. Zhang, Z.; Fu, M. Research on Unmanned System Environment Perception System Methodology. In International Workshop on Advances in Civil Aviation Systems Development; Springer Nature: Cham, Switzerland, 2023; pp. 219–233.

5. Zhang, Z.; Wu, Z.; Ge, R. Generative-Model-Based Autonomous Intelligent Unmanned Systems. In Proceedings of the 2023 International Annual Conference on Complex Systems and Intelligent Science (CSIS-IAC), Shenzhen, China, 20–22 October 2023; pp. 766–772.

6. Qiu, L.; Liu, C.; Dong, H.; Li, W.; Hu, B. Tightly-coupled LiDAR-Visual-Inertial SLAM Considering 3D-2D Line Feature Correspondences. IEEE Trans. Robot. 2022, 38, 1580–1596.

7. Gao, Y.; Xu, L.; Zhou, W.; Li, Z.; Wang, Z. D3VIL-SLAM: 3D Visual Inertial LiDAR SLAM for Outdoor Environments. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4850–4861.

8. Gao, T.; Xu, P.; Zhao, Q.; Wu, H. LMVI-SLAM: Robust Low-Light Monocular Visual-Inertial Simultaneous Localization and Mapping. IEEE Robot. Autom. Lett. 2021, 6, 2204–2211.

9. Wang, Y.; Chen, L.; Zhang, J.; Yu, F. BVT-SLAM: A Binocular Visible-Thermal Sensors SLAM System in Low-Light Conditions. IEEE Sens. J. 2023, 23, 1078–1086.

10. Ye, Q.; Shen, S.; Zeng, Q.; Wu, F.; Yang, Y. Vehicle Detection and Localization using 3D LIDAR Point Cloud and Image Data Fusion. IEEE Sens. J. 2021, 21, 17444–17455.

11. Zhang, T.; Chen, S.; Hu, W.; Zhao, Y.; Wang, L. Environment Perception Technology for Intelligent Robots in Complex Environments. IEEE Robot. Autom. Mag. 2022, 29, 45–56.

12. Uddin, M.J.; Qin, Y.; Ghazali, S.A.; Ma, W.; Islam, T.; Ghosh, R. Progress in Active Infrared Imaging for Defect Detection in the Renewable and Electronic Industries. Electronics 2023, 12, 334.

13. Felton, M.; Wilson, C.; Retief, C.; Schwartz, A.; Eismann, M. Target Detection over the Diurnal Cycle Using a Multispectral Infrared Sensor. Sensors 2023, 23, 10234.

14. Mendoza, F.; Lu, R.; Cen, H.; Ariana, D.; McClendon, R.; Li, W. Fruit Quality Evaluation Using Spectroscopy Technology: A Review. Foods 2023, 12, 812.

15. Soumya, A.; Mohan, C.K.; Cenkeramaddi, L.R. Recent Advances in mmWave-Radar-Based Sensing, Its Applications, and Machine Learning Techniques: A Review. Sensors 2023, 23, 8901.

16. Zhang, F.; Luo, C.; Fu, Y.; Zhang, W.; Yang, W.; Yu, R.; Yan, S. Frequency Domain Imaging Algorithms for Short-Range Synthetic Aperture Radar. Remote Sens. 2023, 15, 5684.

17. Xie, H.; Lv, M.; Chen, G.; Xie, Q.; Zhang, J. Survey of Multi-Sensor Information Fusion Filtering and Control. IEEE Trans. Ind. Inform. 2021, 17, 3412–3426.

18. Ren, B.; Yang, L.T.; Zhang, Q.; Feng, J.; Nie, X. Modern Computing: Vision and Challenges. Telemat. Inform. Rep. 2024, 13, 2772–5030.

19. Gao, S.; Zhu, X.; Wang, Y.; Li, L. Through Fog High-Resolution Imaging Using Millimeter Wave Radar. IEEE Trans. Veh. Technol. 2022, 71, 4484–4496.

20. Tan, K.; Wu, S.; Wang, Y. Two-dimensional sparse MIMO array topologies for UWB high-resolution imaging. Chin. J. Radio Sci. 2016, 31, 779–785.

21. Ulander, L.M.H.; Hellsten, H.; Stenstrom, G. Synthetic Aperture Radar Processing Using Fast Factorized Back-Projection. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 760–776.

22. Lad, L.E.; Tinkham, W.T.; Sparks, A.M.; Smith, A.M.S. Evaluating Predictive Models of Tree Foliar Moisture Content for Application to Multispectral UAS Data: A Laboratory Study. Remote Sens. 2023, 15, 5703.

23. Park, Y.; Yun, S.; Won, C.S.; Cho, K.; Um, K.; Sim, S. Calibration between Color Camera and 3D LiDAR Instruments with a Polygonal Planar Board. Sensors 2014, 14, 5333–5353.

24. Yuan, C.; Liu, X.; Hong, X.; Zhang, F. Pixel-Level Extrinsic Self Calibration of High Resolution LiDAR and Camera in Targetless Environments. IEEE Robot. Autom. Lett. 2021, 6, 7517–7524.

25. Miknis, M.; Davies, R.; Plassmann, P.; Ware, A. Efficient point cloud pre-processing using the point cloud library. Int. J. Image Process. 2016, 10, 63–72.

26. Maurya, S.R.; Magar, G.M. Performance of greedy triangulation algorithm on reconstruction of coastal dune surface. In Proceedings of the 2018 3rd International Conference for Convergence in Technology (I2CT), Pune, India, 6–8 April 2018; pp. 1–6.

| Num | X (m) | Y (m) | Z (m) | U Error (Pixel) | V Error (Pixel) | Num | X (m) | Y (m) | Z (m) | U Error (Pixel) | V Error (Pixel) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.63 | 0.197 | 0.16 | 4.21137 | 4.21285 | 13 | 0.739 | 0.284 | 0.142 | 12.3822 | 10.7165 |
| 2 | 0.629 | −0.185 | 0.033 | 11.731 | 9.74915 | 14 | 0.847 | −0.074 | 0.023 | 15.1389 | 4.91434 |
| 3 | 0.597 | −0.056 | −0.352 | 9.53926 | 9.21146 | 15 | 0.781 | 0.035 | −0.352 | 1.38927 | 4.52074 |
| 4 | 0.608 | 0.334 | −0.233 | 28.3947 | 7.5767 | 16 | 0.691 | 0.408 | −0.231 | 23.837 | 4.14517 |
| 5 | 1.288 | 0.018 | 0.149 | 15.0048 | 8.87346 | 17 | 0.851 | 0 | 0.162 | 13.4654 | 7.22812 |
| 6 | 1.254 | −0.34 | 0.031 | 13.2537 | 2.12468 | 18 | 0.786 | −0.371 | 0.033 | 16.3814 | 1.67815 |
| 7 | 1.251 | −0.225 | −0.334 | 5.24488 | 23.9537 | 19 | 0.77 | −0.242 | −0.348 | 8.37366 | 1.0941 |
| 8 | 1.307 | 0.144 | −0.217 | 20.3375 | 13.714 | 20 | 0.836 | 0.129 | −0.221 | 17.7853 | 6.703 |
| 9 | 0.841 | 0.084 | 0.153 | 15.4376 | 7.5648 | 21 | 0.667 | 0.013 | 0.16 | 18.8464 | 0.440737 |
| 10 | 0.815 | −0.287 | 0.029 | 19.0026 | 2.73421 | 22 | 0.609 | −0.353 | 0.032 | 4.63924 | 3.27094 |
| 11 | 0.79 | −0.161 | −0.34 | 1.18581 | 14.203 | 23 | 0.623 | −0.233 | −0.358 | 9.14083 | 12.0381 |
| 12 | 0.818 | 0.208 | −0.218 | 14.7613 | 9.14319 | 24 | 0.693 | 0.148 | −0.225 | 12.14 | 3.8012 |
| U average error (pixel) | 12.9843 | V average error (pixel) | 7.31718 | | | | | | | | |

| Num | X (m) | Y (m) | Z (m) | U Error (Pixel) | V Error (Pixel) | Num | X (m) | Y (m) | Z (m) | U Error (Pixel) | V Error (Pixel) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.63 | 0.197 | 0.16 | 2.72956 | 0.865458 | 13 | 0.739 | 0.284 | 0.142 | 0.4435 | 11.2433 |
| 2 | 0.629 | −0.185 | 0.033 | 8.60092 | 7.93531 | 14 | 0.847 | −0.074 | 0.023 | 17.6698 | 5.11057 |
| 3 | 0.597 | −0.056 | −0.352 | 14.7296 | 8.89681 | 15 | 0.781 | 0.035 | −0.352 | 6.76452 | 2.28919 |
| 4 | 0.608 | 0.334 | −0.233 | 2.12415 | 1.12118 | 16 | 0.691 | 0.408 | −0.231 | 10.9912 | 6.25845 |
| 5 | 1.288 | 0.018 | 0.149 | 1.78096 | 1.63105 | 17 | 0.851 | 0 | 0.162 | 6.84806 | 1.74991 |
| 6 | 1.254 | −0.34 | 0.031 | 3.4407 | 5.33538 | 18 | 0.786 | −0.371 | 0.033 | 3.32046 | 0.698927 |
| 7 | 1.251 | −0.225 | −0.334 | 3.55262 | 3.77052 | 19 | 0.77 | −0.242 | −0.348 | 8.38159 | 8.65486 |
| 8 | 1.307 | 0.144 | −0.217 | 3.07098 | 0.330994 | 20 | 0.836 | 0.129 | −0.221 | 8.20325 | 2.83447 |
| 9 | 0.841 | 0.084 | 0.153 | 4.25411 | 0.69731 | 21 | 0.667 | 0.013 | 0.16 | 16.9157 | 1.65777 |
| 10 | 0.815 | −0.287 | 0.029 | 6.38252 | 1.13283 | 22 | 0.609 | −0.353 | 0.032 | 5.79765 | 3.16712 |
| 11 | 0.79 | −0.161 | −0.34 | 0.877178 | 4.06908 | 23 | 0.623 | −0.233 | −0.358 | 0.911411 | 3.21334 |
| 12 | 0.818 | 0.208 | −0.218 | 5.25818 | 0.528076 | 24 | 0.693 | 0.148 | −0.225 | 11.6263 | 1.33696 |
| U average error (pixel) | 6.44479 | V average error (pixel) | 3.52204 | | | | | | | | |

| Material | Measuring Distance (R = 1 m) | Measuring Distance (R = 1.2 m) | Measuring Distance (R = 1.5 m) | Total Area |
|---|---|---|---|---|
| Cement | 0 m² | 1.927 m² | 11.377 m² | 13.304 m² |
| Glass | 0 m² | 0.448 m² | 12.942 m² | 13.390 m² |
| Metal | 0.682 m² | 0.065 m² | 4.288 m² | 5.035 m² |
| Wood | 8.641 m² | 0.146 m² | 9.874 m² | 18.661 m² |
| Total | 9.323 m² | 2.586 m² | 38.481 m² | 50.390 m² |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Yu, M.; Chen, H.; Zhang, M.; Tan, K.; Chen, X.; Wang, H.; Xu, F. Three-Dimensional Geometric-Physical Modeling of an Environment with an In-House-Developed Multi-Sensor Robotic System. Remote Sens. 2024, 16, 3897. https://doi.org/10.3390/rs16203897
