1. Introduction

Accurately capturing spatiotemporal changes in water bodies is crucial for managing water resources, responding to water-related disasters, and monitoring the impacts of climate change in a timely manner [1,2,3,4,5]. Analyzing the spatiotemporal distribution patterns and evolution of water bodies is therefore of great significance.

Currently, with the continuous development and deployment of Earth observation systems, remote sensing technology has become a vital tool for multi-temporal water body detection owing to its extensive coverage and, for some sensors, its all-weather, day-and-night imaging capability [6,7]. The most commonly used remote sensing images are optical imagery and SAR imagery. Optical imagery, especially high-resolution imagery, offers rich spectral information, clear geometric structures, and texture features, and is widely used in tasks such as object recognition [8] and change detection [9]. Traditional methods for water body detection from optical remote sensing imagery mainly include the water index method and the image classification method [10]. The water index method constructs a water index from spectral bands and separates water from non-water areas using a threshold that is manually selected on the basis of empirical values. As early as 1996, Gao et al. [11] proposed the normalized difference water index (NDWI) using the green and near-infrared bands. However, the NDWI is strongly affected by building shadows, which makes it difficult to achieve satisfactory detection results in urban areas. Subsequently, various other water indices emerged, such as the modified NDWI (MNDWI) [12,13], the enhanced MNDWI (E-MNDWI) [14], and the automated water extraction index (AWEI) [15]. Although the water index method is simple and fast, its results are often unsatisfactory because of the spectral similarity between water and other low-reflectance objects, as well as the spectral variability of water bodies themselves. In addition, the method requires an appropriate threshold, and selecting the most suitable threshold for extracting water bodies remains a major challenge [16]. Moreover, because of cost and practical constraints, current high-resolution optical imagery often includes only the red, green, blue, and near-infrared bands; the lack of shortwave infrared bands, on which indices such as MNDWI depend, limits the application of the water index method. The image classification method detects water bodies from spectral, texture, and geometric edge features using machine learning classifiers. Common classifiers include decision trees [17], support vector machines [18], and random forests [19]. The image classification method can achieve better water body detection results than the water index method. However, it requires the features of water and non-water areas to be constructed manually for the classifier, and such hand-crafted features are often subjective and of limited applicability. In summary, traditional methods for water body detection based on optical imagery face the following challenges. (1) The shape and size of water bodies can change due to natural environmental factors and human activities, leading to spatial diversity in water body regions, which increases the difficulty of water body extraction and segmentation. (2) “Same spectrum, different objects” is a major challenge in the interpretation of optical remote sensing imagery: shadows cast by tall objects have spectral characteristics similar to those of water bodies, and floating vegetation or sediment on the water surface alters its reflectance, increasing the risk of false negatives or false positives. (3) The cost of acquiring high-resolution imagery is high. These challenges hinder the widespread application of traditional methods in multi-scene and multi-temporal water body detection.
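As a minimal illustration of the water index method described above, the following Python sketch computes NDWI in its green/near-infrared form, NDWI = (Green − NIR)/(Green + NIR), and MNDWI, which replaces NIR with a shortwave infrared band, and then applies a fixed threshold. The function names, the toy reflectance values, and the default threshold of 0 are illustrative assumptions, not settings used in this study; in practice the threshold is exactly the empirical choice identified above as a weakness of the method.

```python
from typing import List

Band = List[List[float]]  # a 2-D grid of surface reflectance values


def normalized_difference(a: Band, b: Band) -> Band:
    """Per-pixel (a - b) / (a + b); pixels where a + b == 0 are set to 0.0."""
    return [
        [(x - y) / (x + y) if (x + y) != 0 else 0.0 for x, y in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def ndwi(green: Band, nir: Band) -> Band:
    # Green/NIR form of NDWI: water reflects more green than near-infrared light.
    return normalized_difference(green, nir)


def mndwi(green: Band, swir: Band) -> Band:
    # MNDWI swaps NIR for a shortwave infrared band, which many
    # high-resolution sensors (RGB + NIR only) do not provide.
    return normalized_difference(green, swir)


def threshold_mask(index: Band, threshold: float = 0.0) -> List[List[int]]:
    """1 = water, 0 = non-water; the threshold is a hypothetical empirical choice."""
    return [[1 if v > threshold else 0 for v in row] for row in index]


# Toy example: the first pixel behaves like water, the second like vegetation.
green = [[0.10, 0.08]]
nir = [[0.05, 0.30]]
mask = threshold_mask(ndwi(green, nir))
```

A fixed threshold of 0 keeps the example simple; the data-driven alternative is to derive the threshold from the index histogram, for instance with Otsu's method.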

The application of SAR imagery to water body detection began relatively late, but it has developed rapidly in recent years because SAR can image through clouds and fog, and it is now widely used in water body detection [20]. Current methods for water body extraction from SAR imagery mainly include grayscale threshold segmentation, DEM-based filtering, and texture methods based on the gray-level co-occurrence matrix (GLCM) [21]. Cao et al. [22] used a threshold-based method with ASAR data to detect water bodies. Hong et al. [23] combined SAR, optical imagery, and DEM data to improve the accuracy of water body information extraction. Klemenja et al. [24] combined morphological filtering and supervised classification to select training samples automatically, achieving water body detection across multiple scenes. However, morphological filtering can produce unsmooth water body edges because of the structuring elements used, which lowers detection accuracy. Lyu et al. [25] combined the GLCM with a support vector machine (SVM) for water body extraction, effectively reducing the impact of terrain. However, the GLCM method has high computational complexity, a significant amount of texture information is lost during grayscale quantization, and selecting the optimal window size for texture extraction requires extensive manual tuning. In summary, while SAR imagery can provide valuable information for water body detection in shallow water and shadowed areas, its low spatial resolution and high noise levels make it difficult to extract water bodies accurately and effectively. In addition, existing methods are constrained by threshold selection, high computational complexity, and low accuracy, which makes them unsuitable for multi-temporal water body detection.
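To make the quantization and window-size issues concrete, the sketch below quantizes continuous SAR values (for example, backscatter in dB) into a small number of gray levels, builds a symmetric, normalized gray-level co-occurrence matrix (GLCM) for a horizontal one-pixel offset, and computes the GLCM contrast feature for every window of a given size. The number of gray levels, the offset, the choice of contrast as the texture feature, and all helper names are illustrative assumptions, not the settings used by Lyu et al. [25].

```python
from typing import List

Grid = List[List[float]]
Levels = List[List[int]]


def quantize(img: Grid, levels: int, vmin: float, vmax: float) -> Levels:
    """Map continuous values (e.g., backscatter in dB) to gray levels 0..levels-1."""
    span = (vmax - vmin) or 1.0  # guard against a zero-width range
    out = []
    for row in img:
        q_row = []
        for v in row:
            q = int((v - vmin) / span * levels)
            q_row.append(min(max(q, 0), levels - 1))  # clip to the valid range
        out.append(q_row)
    return out


def glcm(window: Levels, levels: int, dx: int = 1, dy: int = 0) -> Grid:
    """Symmetric, normalized co-occurrence matrix for the pixel offset (dy, dx)."""
    m = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(window), len(window[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = window[r][c], window[r2][c2]
                m[i][j] += 1
                m[j][i] += 1  # count the pair in both directions
    total = sum(sum(row) for row in m)
    return [[v / total for v in row] for row in m] if total else m


def contrast(p: Grid) -> float:
    """GLCM contrast: sum of P(i, j) * (i - j)^2 over all gray-level pairs."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def contrast_map(q: Levels, levels: int, win: int) -> Grid:
    """Contrast for every full win x win window (valid positions only, no padding)."""
    h, w = len(q), len(q[0])
    return [
        [contrast(glcm([row[c:c + win] for row in q[r:r + win]], levels))
         for c in range(w - win + 1)]
        for r in range(h - win + 1)
    ]
```

Fewer gray levels make each matrix smaller and cheaper to compute but merge distinct backscatter values, which is the information loss mentioned above; the window size `win` trades spatial detail against the stability of the texture estimate and, as the text notes, usually has to be tuned by hand. Every window requires its own matrix, which is where the computational cost comes from.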

With advances in artificial intelligence and in hardware and software technologies, deep learning has been widely applied in fields such as object detection, image classification, and natural language processing owing to its powerful representation capabilities [26,27,28,29,30,31], and it also shows potential for multi-scene and multi-temporal water body detection [32,33,34]. For multi-scene water body detection, Long et al. [28] first proposed the fully convolutional network (FCN); since then, FCN and its variants (such as FCN8s and UNet) have achieved great success in water body detection tasks [33,34,35]. However, these methods rely primarily on semantic information for image segmentation and often overlook the intrinsic characteristics of water bodies, which lowers detection accuracy. To enrich water body features and semantic information, Zhang et al. [36] proposed MECNet, a network that integrates multi-feature extraction and combination modules and enables water body detection across different backgrounds. Parajuli et al. [37] proposed an attention-dense convolutional network (ADCNN) that effectively extracted water bodies from Sentinel-2 data; however, in complex scenes, ADCNN tends to overestimate water body extent. Research on multi-temporal water body detection is relatively limited. For instance, Guo [38] employed a PCNN-based image fusion method combined with UNet to detect changes in water bodies in Ningxiang, China, in June 2017. However, the simple sampling scheme of UNet makes it difficult to capture changes in small water bodies. Yang [39] constructed CNN_LSTM and Convolution Seq2Seq models to perform a multi-temporal analysis of the water bodies between the Qiala Reservoir and the Daxihai Reservoir in Yuli County, Xinjiang, China, with some success. However, the dataset used was labeled manually, which is time-consuming and highly subjective; as a result, model performance depended heavily on the training set, which biased the multi-temporal water body detection results. Although most models achieve good detection results across multiple scenes, they perform less well in long-term water body detection. Therefore, constructing an integrated multi-modal model for multi-scene and multi-temporal water body detection remains a key challenge and an active research topic. In addition, efficiently obtaining sufficient high-quality training data is a major challenge in deep learning-based water body detection.

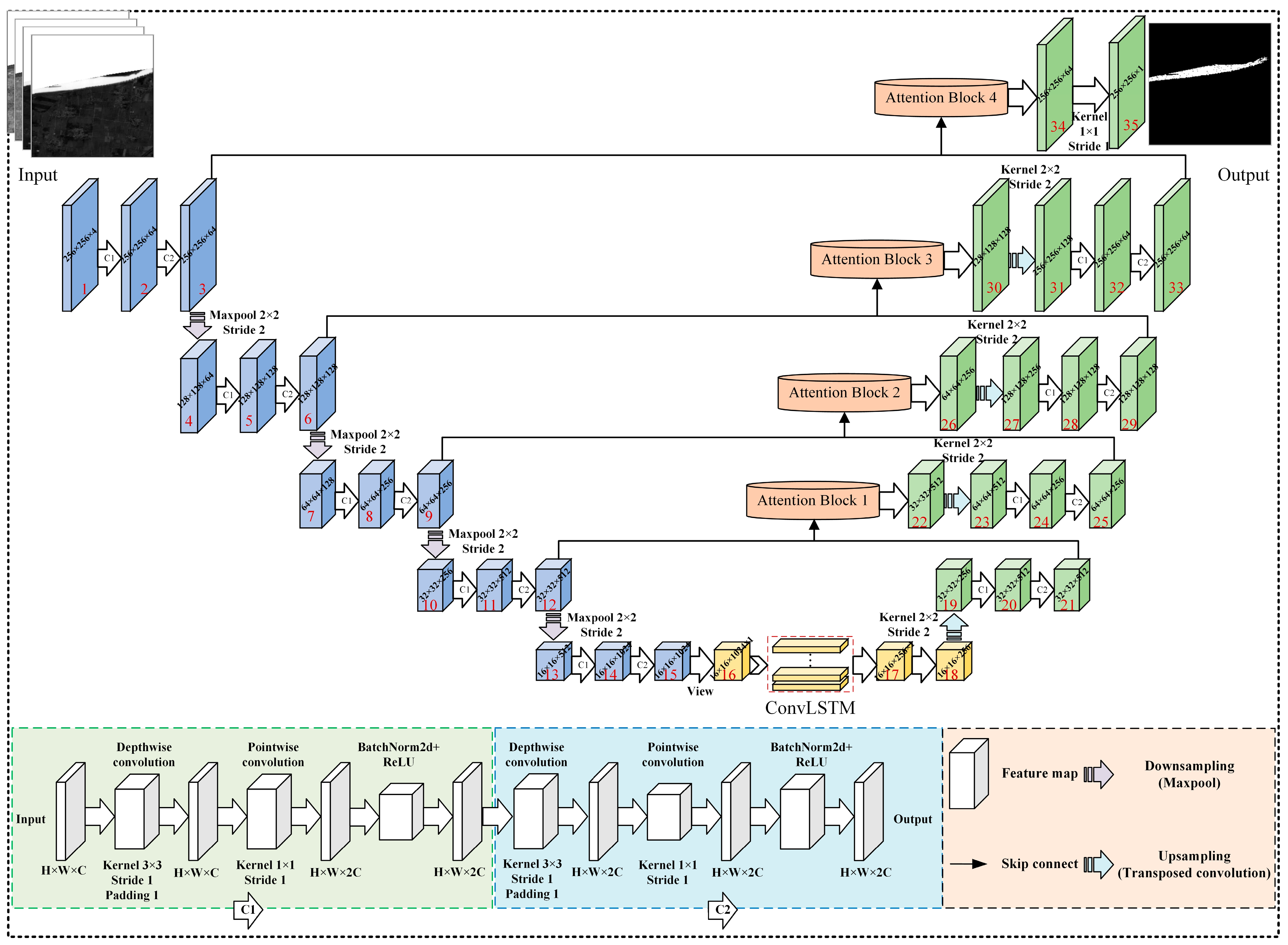

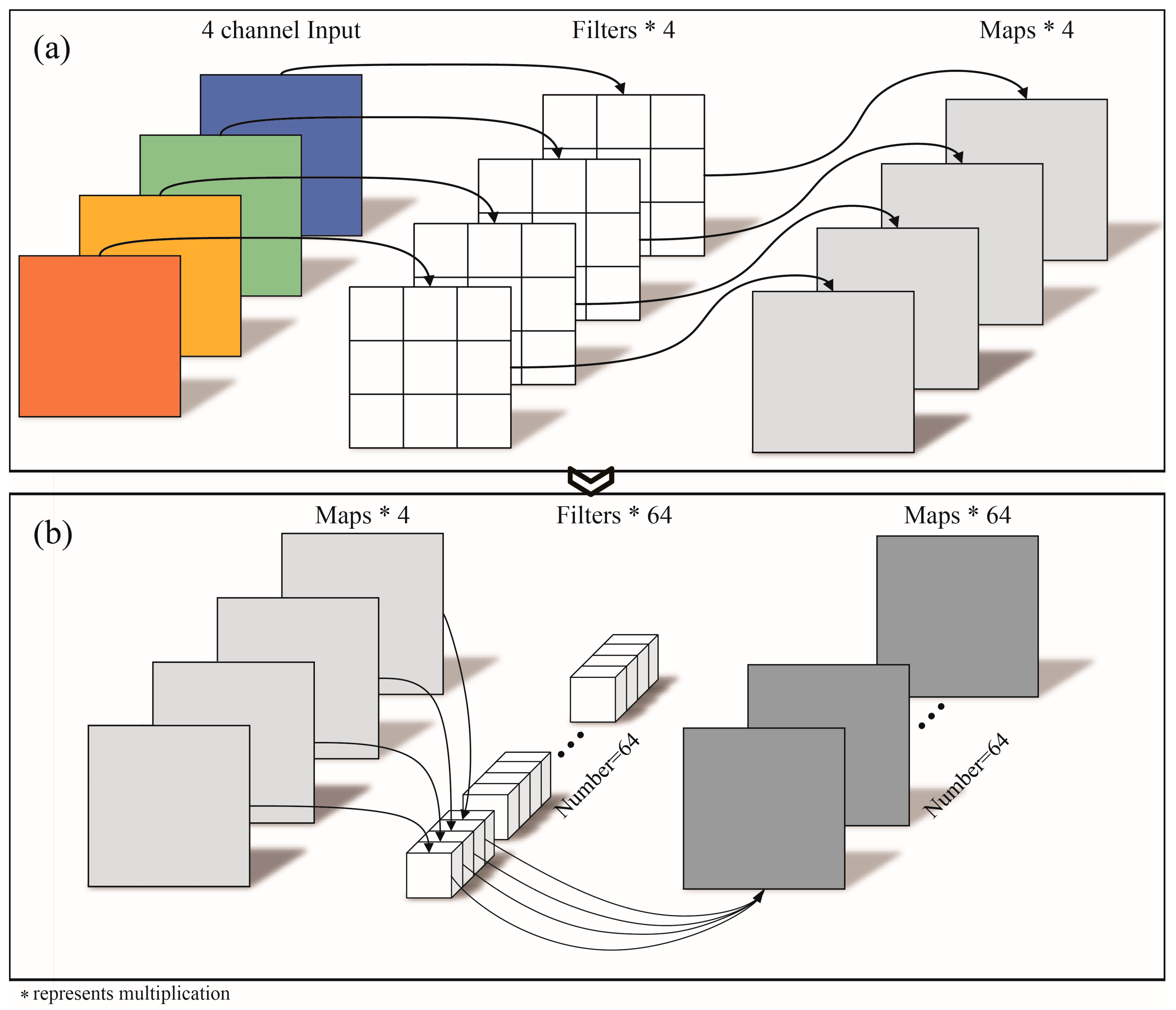

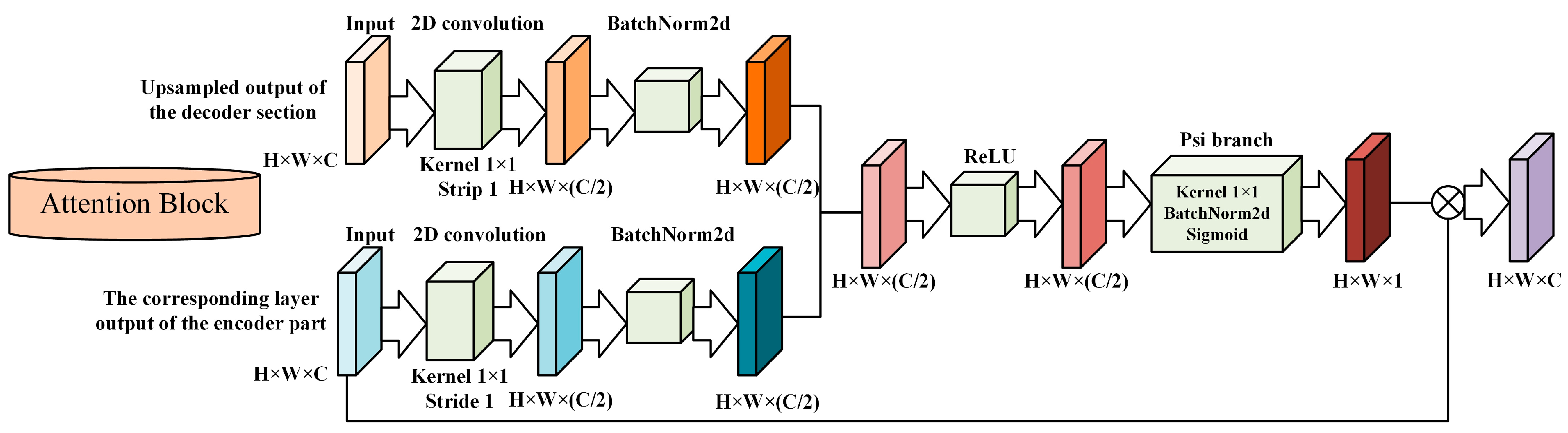

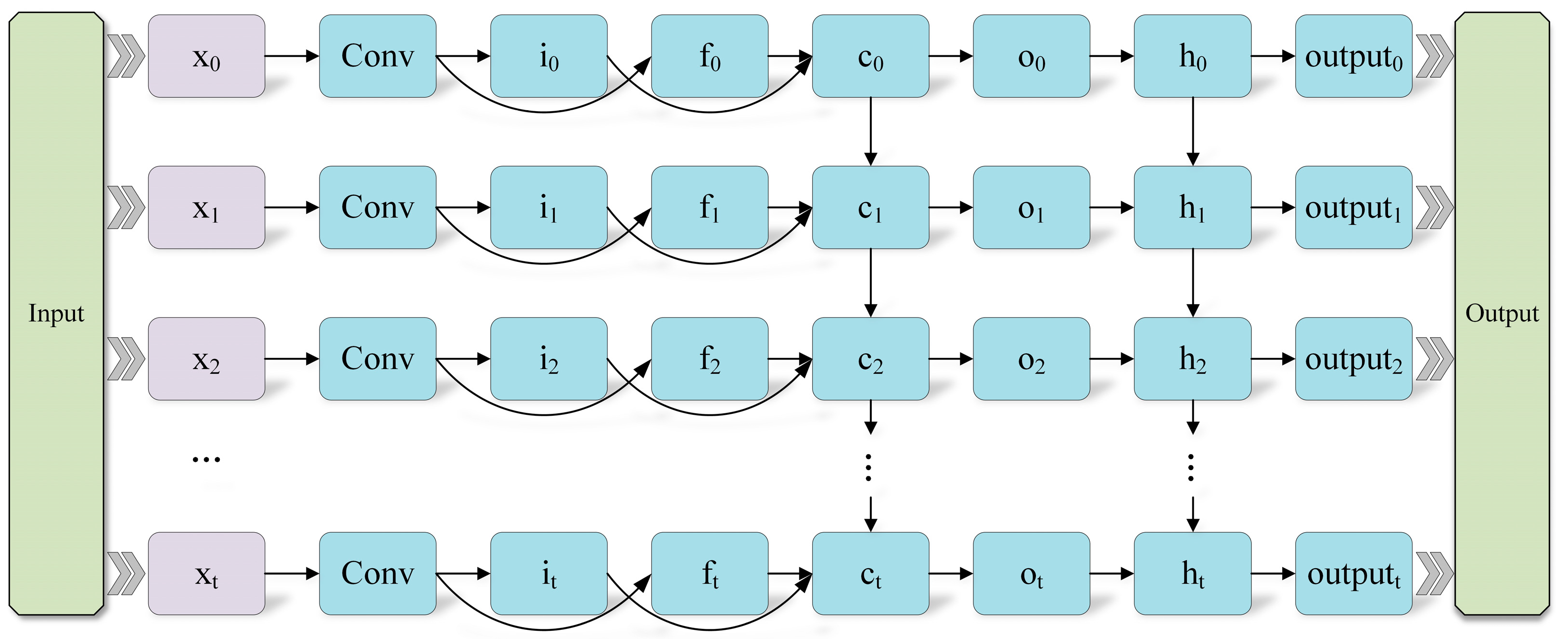

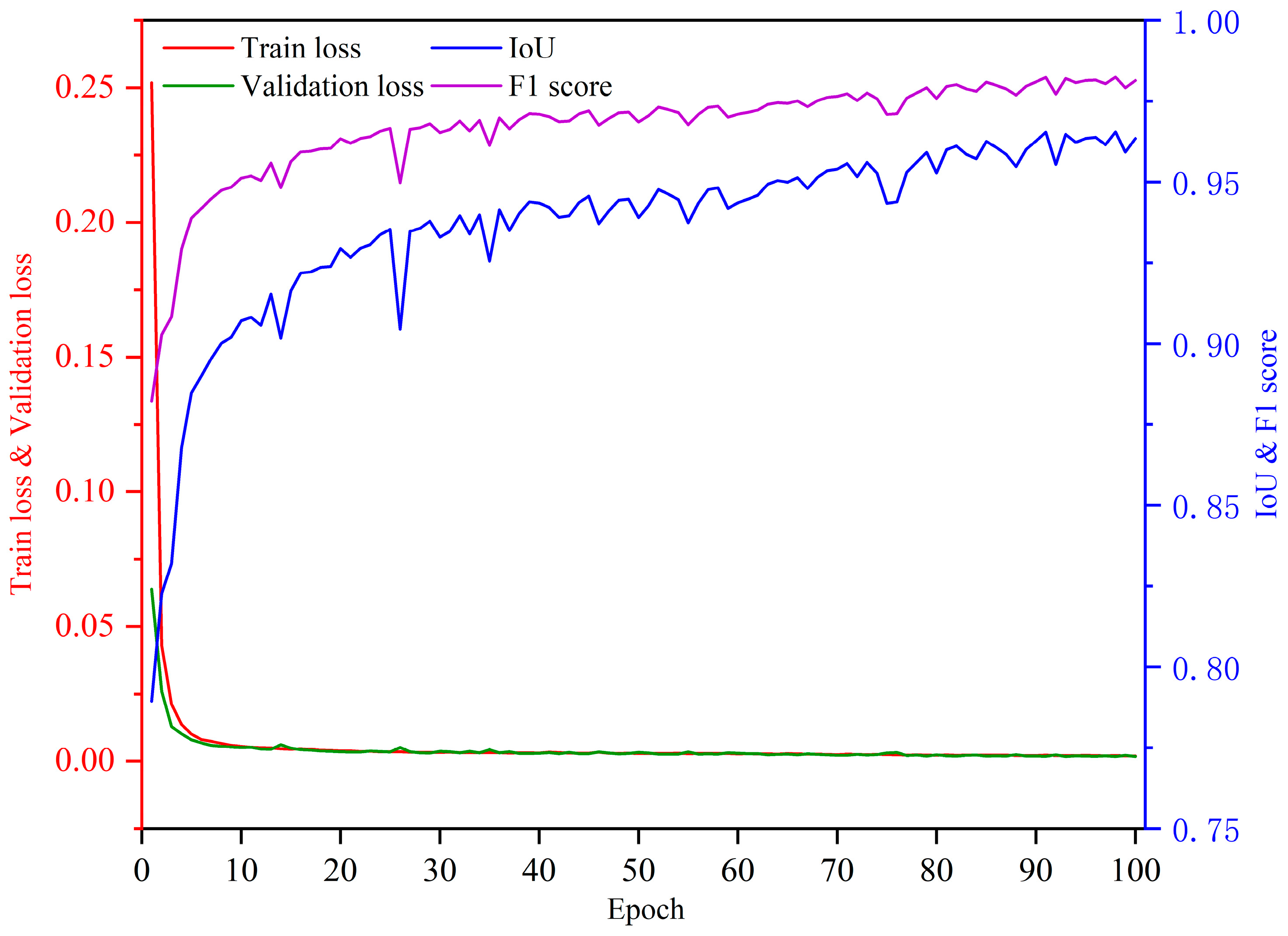

To address the limitations of the existing methods, such as their inability to effectively adapt to varying environmental conditions across different scenes and time periods, as well as the challenge of acquiring sufficient and diverse high-quality training data, this study proposes a spatiotemporal scale-based water body detection model called TSAE-UNet. TSAE-UNet integrates convolutional neural networks (CNN) [

40,

41], depthwise separable convolutions [

42], ConvLSTM [

43], and attention mechanisms [

44] to enhance the accuracy and robustness of water body detection by capturing multi-scale spatiotemporal features and establishing long-range dependencies. The model generates multi-scale feature maps using dual-stream parallel branches and enhances feature representation capabilities through lightweight attention modules and sub-pixel upsampling modules. Temporal sequence analysis is employed to capture the spatiotemporal patterns of water body changes, effectively addressing the diversity of complex environmental scenes and land cover types. Additionally, to ensure efficient model training, a rapid training dataset generation method based on Otsu [

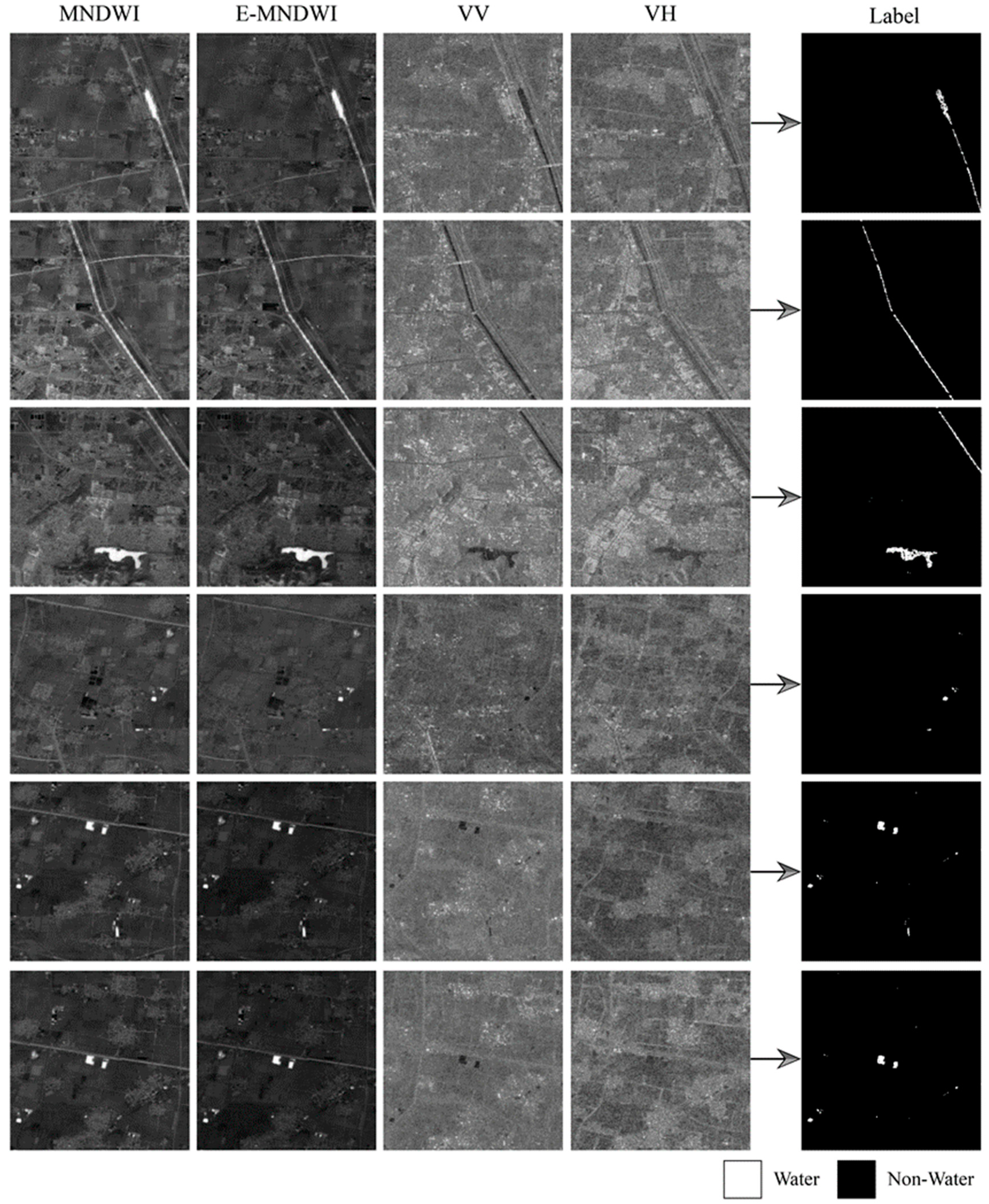

45,

46] is used, integrating the advantages of both active and passive remote sensing data.
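The Otsu-based labeling step is described here only at a high level. As a hedged sketch of the underlying technique, the following Python code implements Otsu's method on a flat list of values: it builds a histogram and selects the threshold that maximizes the between-class variance. Applying it to SAR backscatter in dB and labeling values below the threshold as water reflects the usual assumption that smooth water surfaces appear dark in SAR imagery; for an optical water index such as NDWI, the comparison would be reversed. The bin count, the helper names, and the toy values are assumptions, and how this study combines the SAR and optical results is not reproduced here.

```python
from typing import List, Sequence


def otsu_threshold(values: Sequence[float], bins: int = 256) -> float:
    """Threshold that maximizes the between-class variance of a histogram (Otsu)."""
    vmin, vmax = min(values), max(values)
    if vmin == vmax:
        return vmin  # a single value: nothing to separate
    width = (vmax - vmin) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - vmin) / width), bins - 1)] += 1
    centers = [vmin + (k + 0.5) * width for k in range(bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centers))

    best_score, best_k = -1.0, 0
    w0, sum0 = 0, 0.0
    for k in range(bins - 1):  # candidate split between bin k and bin k + 1
        w0 += hist[k]
        sum0 += hist[k] * centers[k]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        score = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if score > best_score:
            best_score, best_k = score, k
    return vmin + (best_k + 1) * width  # upper edge of the last "low" bin


def label_dark_as_water(values: Sequence[float], threshold: float) -> List[int]:
    """1 = water (below threshold, e.g., low SAR backscatter), 0 = non-water."""
    return [1 if v < threshold else 0 for v in values]


# Toy backscatter values in dB: four dark (water-like) and four bright pixels.
sigma0_db = [-22.0, -21.0, -23.0, -20.0, -8.0, -7.0, -9.0, -6.0]
t = otsu_threshold(sigma0_db)
mask = label_dark_as_water(sigma0_db, t)
```

Because the threshold is derived from each image's own histogram, no empirical value has to be chosen per scene, which is what makes this kind of step attractive for generating labels quickly over many dates.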

The structure of this paper is as follows.

Section 2 introduces the training dataset’s construction method and the architecture of the TSAE-UNet model,

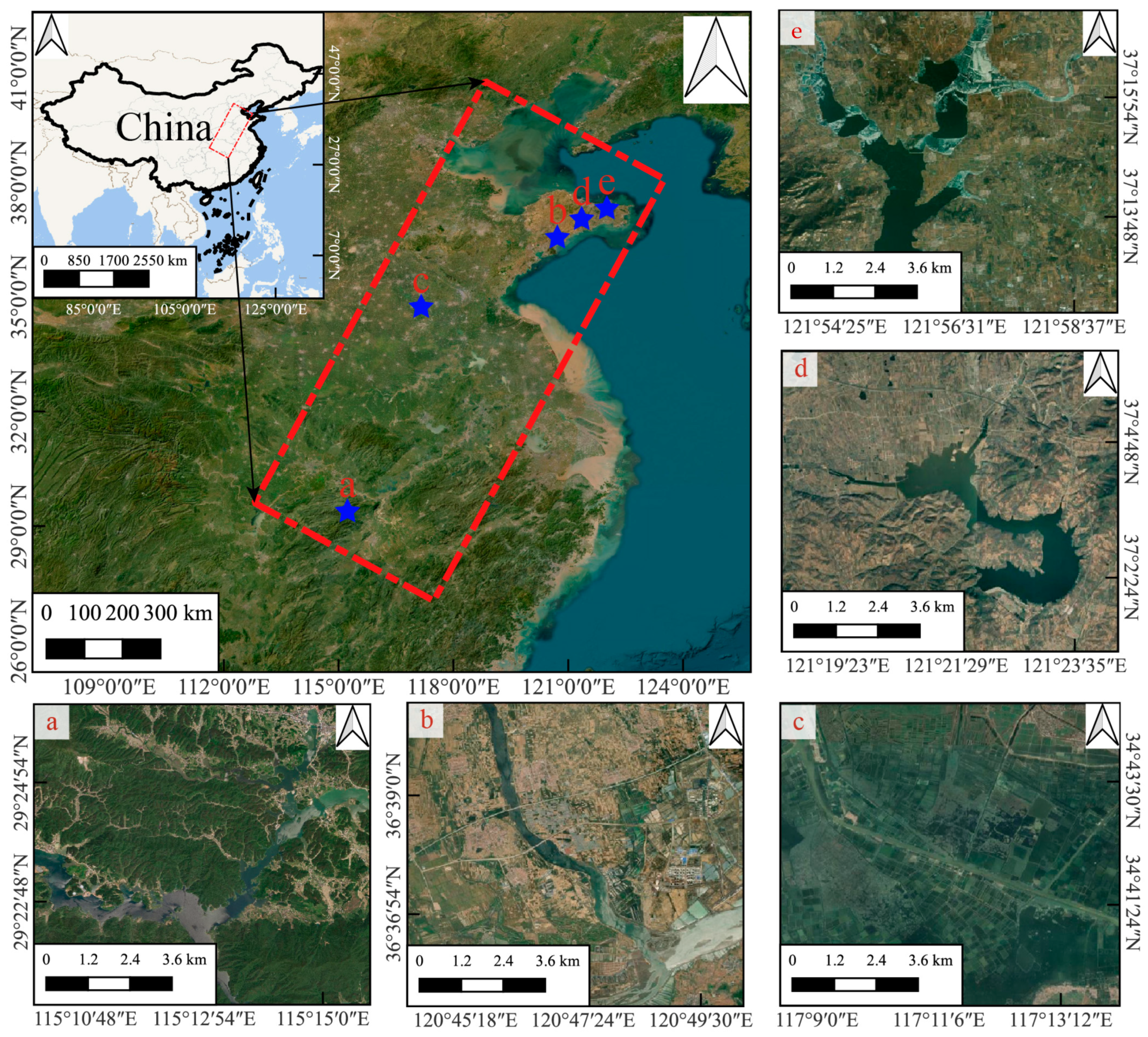

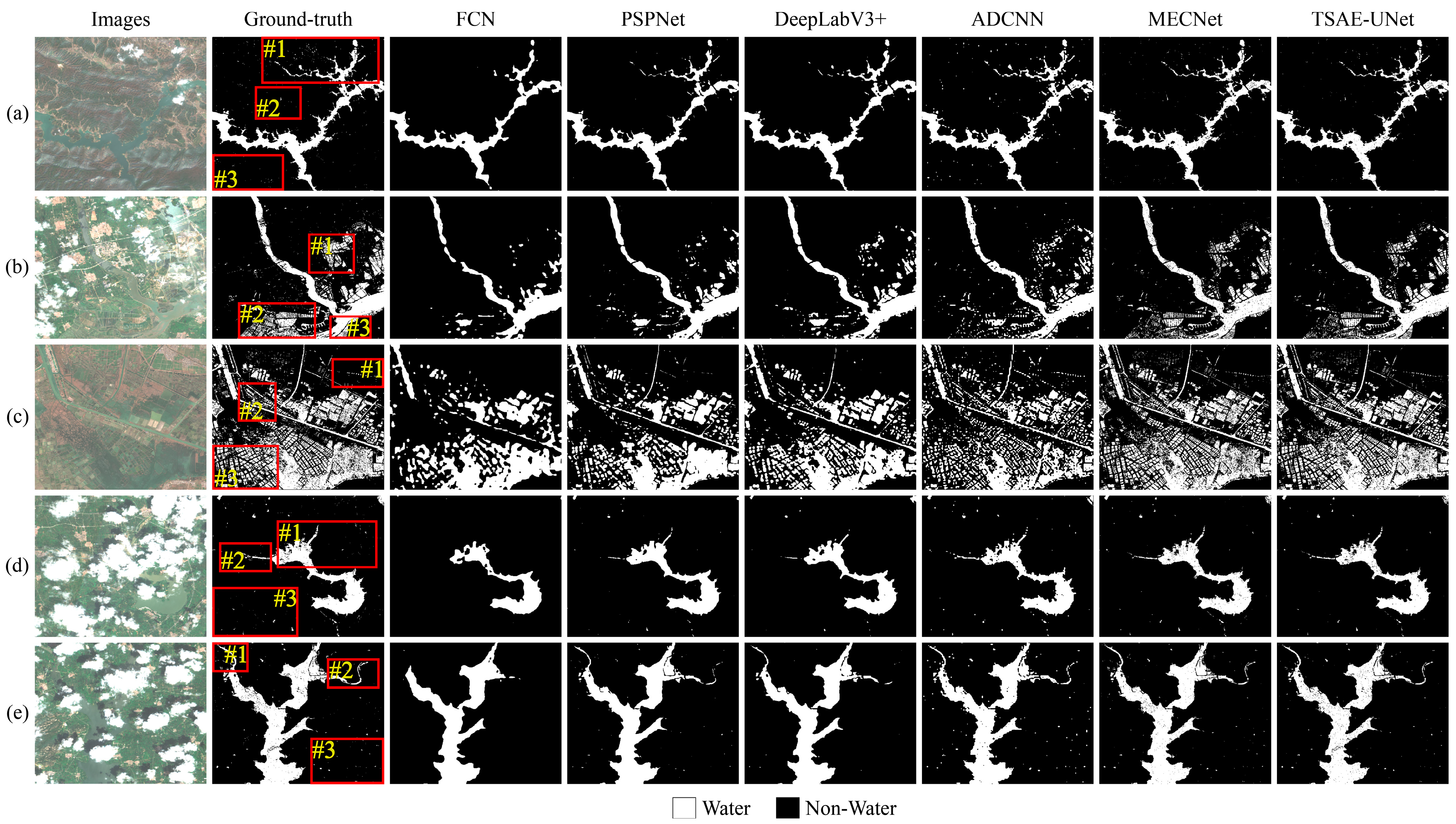

Section 3 presents the experiments on multi-scene water body detection,

Section 4 covers the experiments on multi-temporal water body detection,

Section 5 is the discussion section, and

Section 6 concludes the study with a summary of the findings.

5. Discussion

5.1. Analysis of the Applicability and Limitations of the TSAE-UNet Model

The TSAE-UNet model demonstrated strong applicability in multi-scene and multi-temporal water body detection tasks. By integrating ConvLSTM layers and attention mechanisms, the model effectively captured and exploited the dynamic changes of water bodies across spatial and temporal dimensions, which allowed it to adapt to various environmental conditions. This was particularly evident in areas where water body boundaries changed significantly over time. This design also allowed the model to maintain high detection accuracy when processing large-scale medium-resolution remote sensing data, highlighting its practical utility.
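The ConvLSTM component referred to above is not written out in this section. For readers who want the recurrence explicitly, the widely used ConvLSTM formulation is reproduced below, where $*$ denotes convolution, $\circ$ the Hadamard (element-wise) product, $\sigma$ the logistic sigmoid, $X_t$ the input feature map at time step $t$, and $H_t$ and $C_t$ the hidden and cell states. Whether TSAE-UNet uses exactly this variant, for example including the peephole terms $W_{ci} \circ C_{t-1}$, $W_{cf} \circ C_{t-1}$, and $W_{co} \circ C_t$, is an assumption rather than something stated here.

```latex
\begin{aligned}
i_t &= \sigma\left(W_{xi} * X_t + W_{hi} * H_{t-1} + W_{ci} \circ C_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_{xf} * X_t + W_{hf} * H_{t-1} + W_{cf} \circ C_{t-1} + b_f\right) \\
C_t &= f_t \circ C_{t-1} + i_t \circ \tanh\left(W_{xc} * X_t + W_{hc} * H_{t-1} + b_c\right) \\
o_t &= \sigma\left(W_{xo} * X_t + W_{ho} * H_{t-1} + W_{co} \circ C_t + b_o\right) \\
H_t &= o_t \circ \tanh\left(C_t\right)
\end{aligned}
```

Because $X_t$, $H_t$, and $C_t$ are spatial feature maps and every state transition is a convolution, the recurrent state keeps the spatial layout of the scene, which is what allows a ConvLSTM layer to relate water boundaries at the same location across time steps.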

However, TSAE-UNet also has some limitations. First, since medium-resolution data (e.g., Sentinel-1A and Sentinel-2) were used, the model may struggle to accurately identify smaller or narrower water bodies due to insufficient image resolution. Additionally, in complex terrain or scenes with diverse land cover, the model’s performance may be somewhat affected, primarily due to the resolution limitations of the input data, which restrict the model’s ability to handle fine-grained features. To overcome these limitations, future work will involve incorporating high-resolution remote sensing data and applying multi-resolution data fusion strategies, aiming to enable the model to capture details more accurately in complex scenarios.

5.2. Global Application Potential and Key Challenges of the TSAE-UNet Model

Although the TSAE-UNet model designed in this study has so far been tested only in typical regions of China, where it yielded promising results, its architectural design provides a solid foundation for global water body detection tasks. The integration of spatiotemporal feature extraction and attention mechanisms gives the model potential for broader application worldwide. However, the significant geographical and climatic differences across the globe present a challenge. The current model was trained primarily on data from China, which may limit its generalization in regions with very different climate and terrain characteristics, such as tropical rainforests, deserts, or polar areas. In these regions, the model may not fully adapt to the varying environmental conditions, potentially reducing the accuracy of water body detection.

Additionally, obtaining Sentinel satellite data of sufficient quality may be challenging in certain regions. For example, in polar areas or tropical regions with persistent cloud cover, acquiring usable Sentinel-2 optical imagery can be difficult, which directly limits the scope of application of the TSAE-UNet model. If high-quality remote sensing data cannot be obtained, the model’s detection performance will be significantly affected, posing a practical challenge to its global applicability.

Addressing the key challenges of dataset expansion and data acquisition in specific regions would give the TSAE-UNet model the potential to play a more extensive and significant role in global water body detection and environmental monitoring.

In addition, when applying the TSAE-UNet model to larger areas, several strategies can be considered to improve efficiency and scalability. These include parallelization and optimization of the model to enhance computational efficiency, a multi-resolution approach to process large and small areas with varying levels of detail, and incremental learning with region-based processing, allowing the model to adapt to diverse geographical conditions without the need for complete retraining. Implementing these strategies would enable a more effective application of the model to large-scale water body detection tasks across different regions.
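Of the strategies listed above, region-based processing is the simplest to illustrate. The sketch below splits a large scene into overlapping tiles, which could be processed independently (and therefore in parallel), and merges per-tile water probabilities by averaging where tiles overlap. The tile size, the overlap, the averaging rule, and all function names are hypothetical choices for illustration; the model call itself is left out, and the probabilities passed to `stitch_mean` stand in for its output.

```python
from typing import List, Sequence, Tuple

Window = Tuple[int, int, int, int]  # (row0, col0, row1, col1), end-exclusive


def _starts(size: int, tile: int, stride: int) -> List[int]:
    """Start offsets along one axis; the last tile is shifted to end at the edge."""
    if size <= tile:
        return [0]
    starts = list(range(0, size - tile + 1, stride))
    if starts[-1] + tile < size:
        starts.append(size - tile)
    return starts


def tile_windows(height: int, width: int, tile: int, overlap: int) -> List[Window]:
    """Overlapping tiles that together cover a height x width scene."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    stride = tile - overlap
    return [
        (r, c, min(r + tile, height), min(c + tile, width))
        for r in _starts(height, tile, stride)
        for c in _starts(width, tile, stride)
    ]


def stitch_mean(height: int, width: int, windows: Sequence[Window],
                tile_preds: Sequence[List[List[float]]]) -> List[List[float]]:
    """Merge per-tile water probabilities by averaging where tiles overlap."""
    acc = [[0.0] * width for _ in range(height)]
    cnt = [[0] * width for _ in range(height)]
    for (r0, c0, r1, c1), pred in zip(windows, tile_preds):
        for i in range(r1 - r0):
            for j in range(c1 - c0):
                acc[r0 + i][c0 + j] += pred[i][j]
                cnt[r0 + i][c0 + j] += 1
    # Every pixel is covered by at least one tile, so cnt is never zero here.
    return [[acc[i][j] / cnt[i][j] for j in range(width)] for i in range(height)]
```

Overlap lets predictions near tile borders, where a convolutional model sees less context, be averaged with predictions from neighboring tiles instead of producing visible seams.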

5.3. Impact of Environmental Factors on Water Body Detection Results

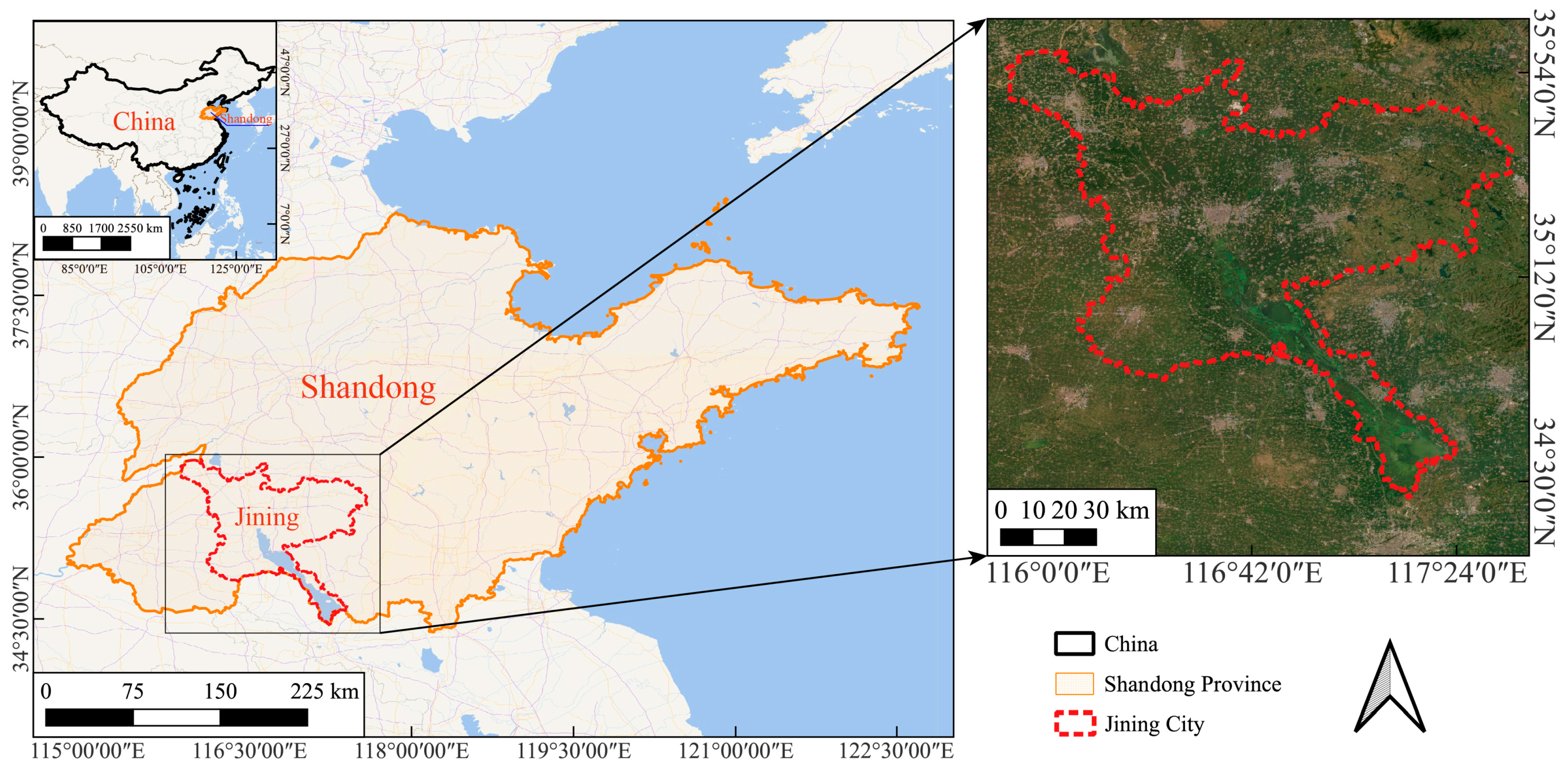

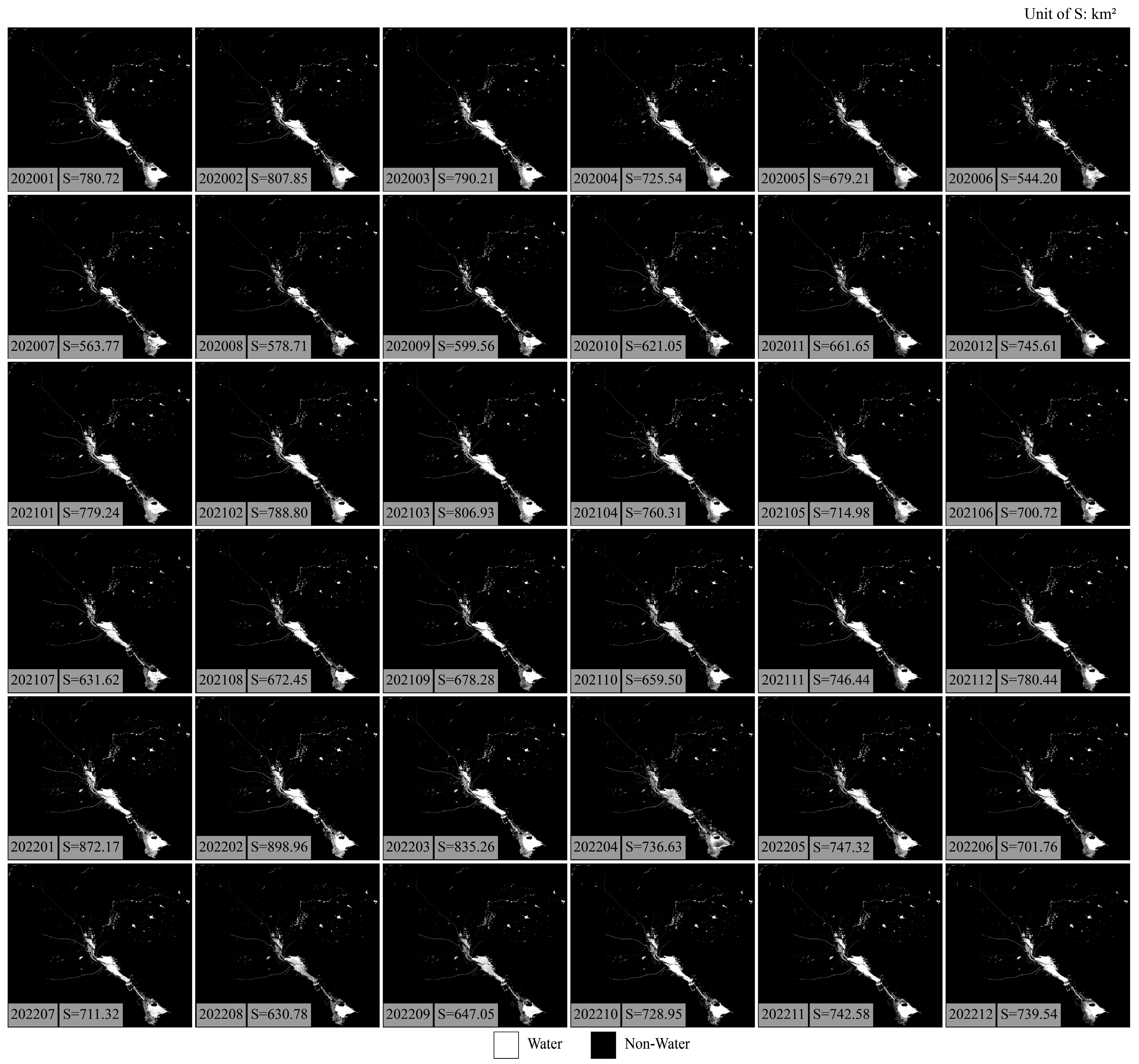

In water body detection, environmental factors such as temperature, evaporation, and precipitation can affect the accuracy of the detection results. This section examines how these factors influence the detection results, using water body area as the primary evaluation metric. The data sources included publicly available datasets such as MODIS, CHIRPS, and Landsat-8, with resolutions of 1 km, 0.05 degrees, and 30 m, respectively. Because MODIS data for December 2022 were unavailable, the analysis focused on environmental changes in Jining City from January 2020 to November 2022 and their impact on water body area.

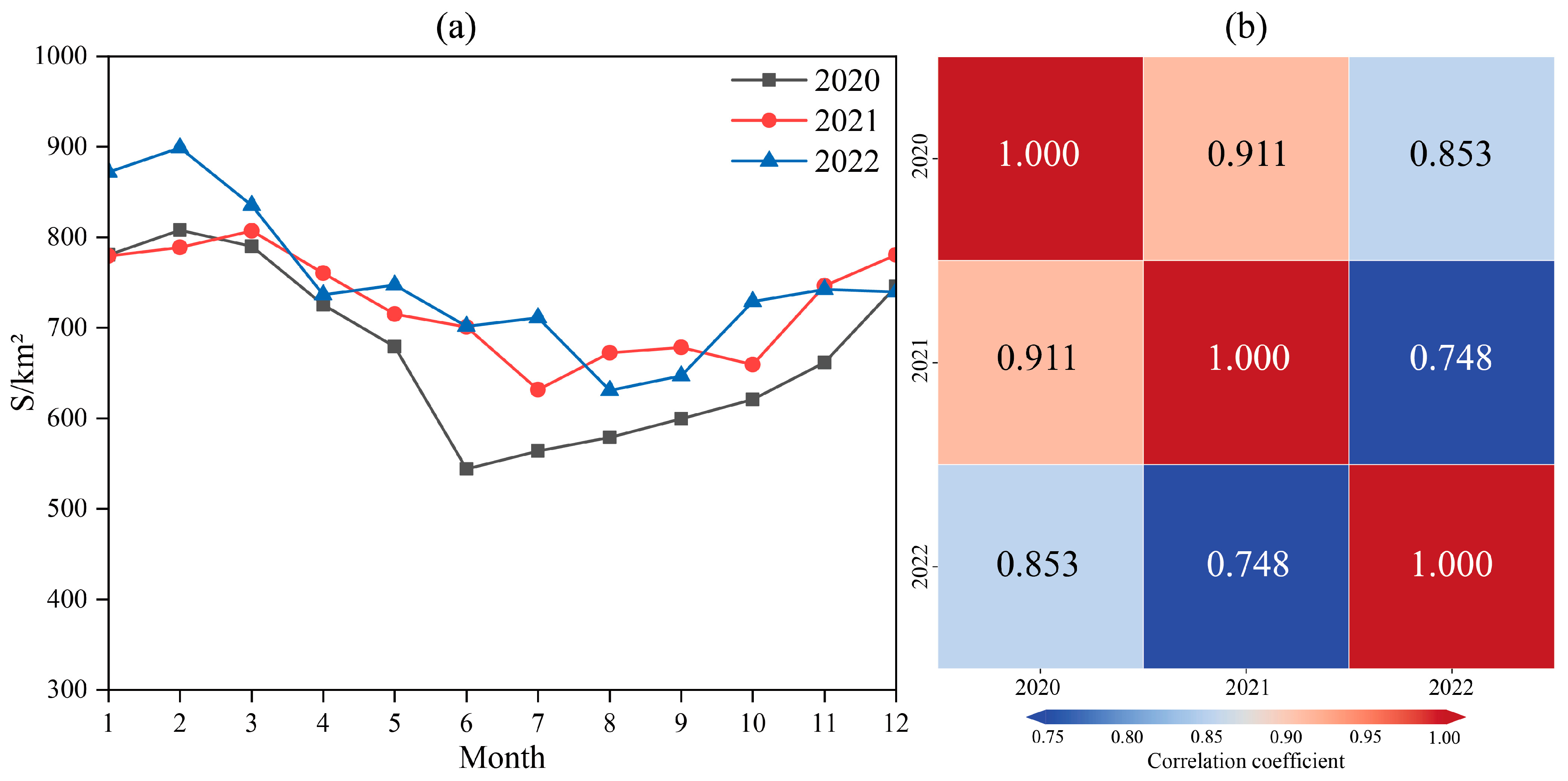

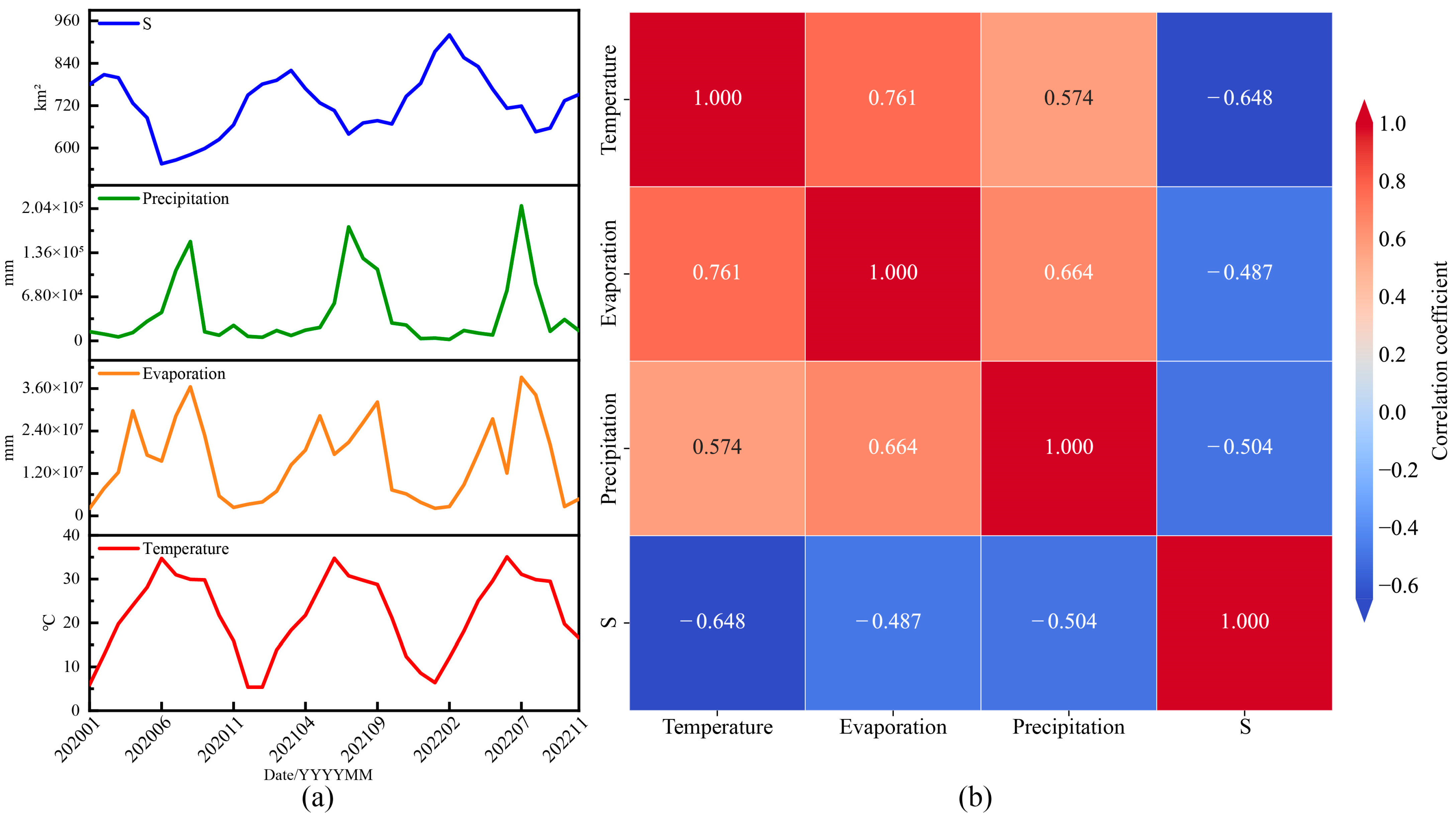

We analyzed the average temperature, total evaporation, total precipitation, and water body area from January 2020 to November 2022 and plotted their time series (Figure 14a) and a correlation heatmap (Figure 14b). The results showed significant correlations between water body area and these environmental factors. Specifically, temperature was significantly negatively correlated with water body area, with a correlation coefficient of −0.648, indicating that the water body area tended to decrease as temperature increased. Evaporation was also negatively correlated with water body area, with a correlation coefficient of −0.487, which is consistent with the hypothesis that higher temperatures increase evaporation and thereby reduce water body area. The correlation coefficient between precipitation and water body area was −0.504; although increased precipitation may replenish water bodies, the area did not increase significantly, likely because of higher surface runoff and evaporation rates.
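The coefficients above are reported without the computation behind them. Assuming they are Pearson correlation coefficients between monthly series (an assumption; the text does not name the coefficient), the sketch below shows how such a value is computed, together with the t statistic commonly used to test whether a correlation differs from zero. The resulting t value would be compared against a t distribution with n − 2 degrees of freedom, for example using a statistics library; function names are illustrative.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t value for H0: rho = 0, with n - 2 degrees of freedom."""
    if abs(r) >= 1:
        return math.copysign(math.inf, r)  # perfect correlation
    return r * math.sqrt((n - 2) / (1 - r * r))
```

A correlation heatmap such as the one in Figure 14b is simply this coefficient evaluated for every pair of variables; reporting the corresponding t or p values would make the word "significant" verifiable.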

These analytical results indicate that environmental factors have a significant impact on water body detection results. Therefore, understanding and accounting for these environmental changes is crucial for improving the accuracy of the detection results in long-term water body monitoring. To address these challenges, the TSAE-UNet model and related models could, in the future, attempt to integrate environmental variables as inputs to enhance their ability to adapt to dynamic changes in water bodies. Additionally, adaptive fine-tuning of the model in regions with different climatic characteristics could improve its adaptability and robustness under various environmental conditions. Furthermore, improving the data processing methods, such as applying more advanced cloud removal algorithms and image enhancement techniques, would also help ensure the quality of input data, thereby enhancing the model’s detection performance in changing environments.

6. Conclusions

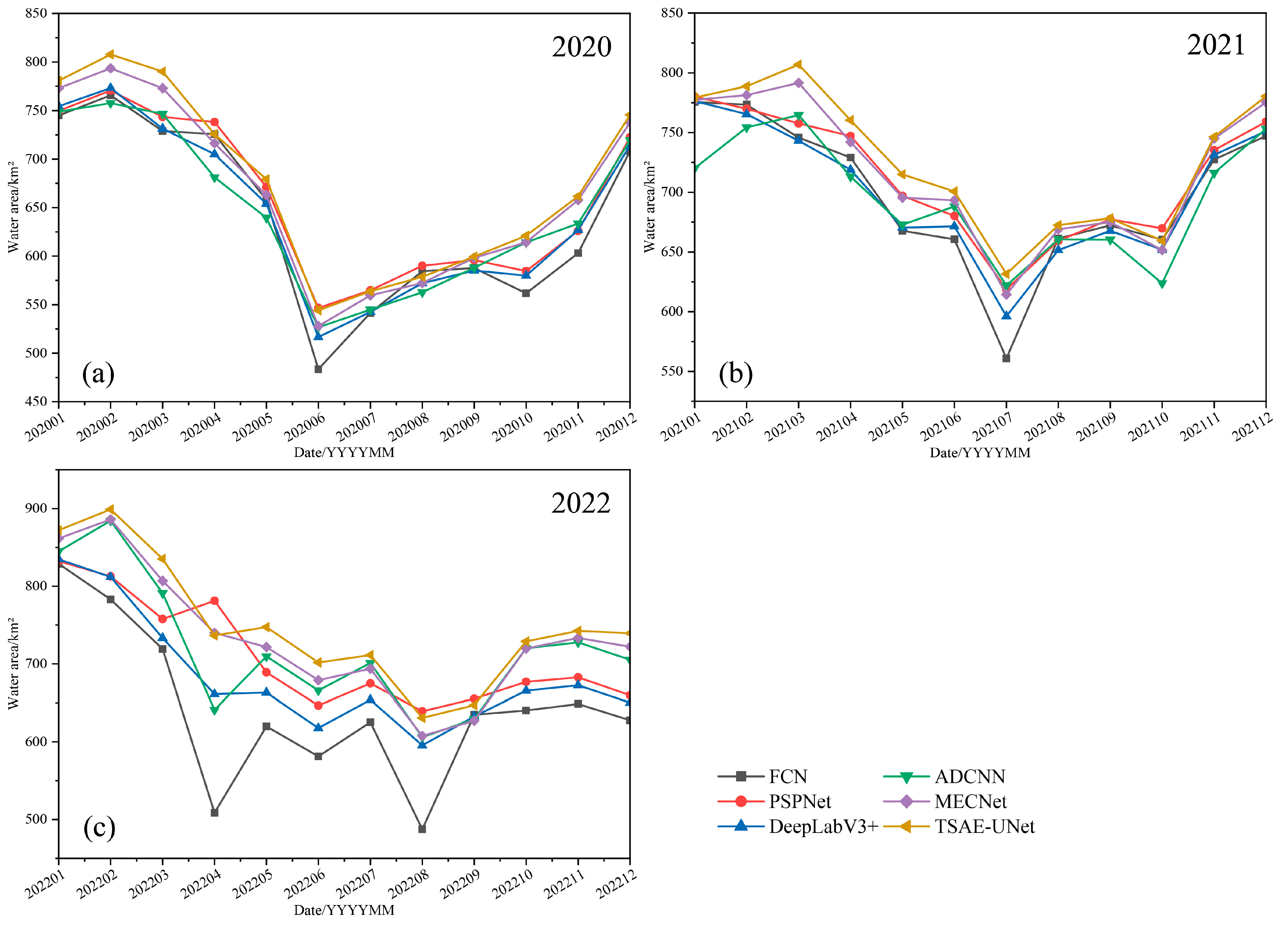

In this study, the TSAE-UNet model was evaluated against the FCN, PSPNet, DeepLabV3+, ADCNN, and MECNet models for detecting water bodies and their long-term trends in different environments. The main conclusions are as follows.

In multi-scene water body detection, TSAE-UNet performed excellently in urban areas, farmlands, and complex terrain. Compared with the other models, TSAE-UNet significantly reduced false positives and false negatives, demonstrating higher detection accuracy. In all tested scenarios, TSAE-UNet achieved the best precision, recall, overall accuracy, and Kappa values, with overall accuracy ranging from 91.49% to 99.05% (0.13% to 16.97% higher than the other methods) and Kappa values ranging from 0.80 to 0.94 (0.01 to 0.38 higher than the other methods).
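For reference, the metrics cited above can all be derived from a binary confusion matrix. The sketch below assumes pixel-level counts with water as the positive class (the exact evaluation protocol is not restated here), and computes precision, recall, overall accuracy, and Cohen's Kappa, where the expected chance agreement $p_e$ is taken from the row and column totals. The function name and toy counts are illustrative.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Precision, recall, overall accuracy (OA), and Cohen's Kappa for water vs. non-water."""
    n = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    oa = (tp + tn) / n  # observed agreement
    # Chance agreement: (predicted water * actual water + predicted non-water * actual non-water) / n^2
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (oa - pe) / (1 - pe) if pe < 1 else float("nan")  # undefined when pe == 1
    return {"precision": precision, "recall": recall, "oa": oa, "kappa": kappa}


# Toy counts: 40 correct water pixels, 40 correct background pixels, 20 errors.
m = binary_metrics(tp=40, fp=10, fn=10, tn=40)
```

Kappa discounts the agreement expected by chance, which is why it can differ considerably between models whose overall accuracies are close, particularly when water occupies only a small fraction of a scene.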

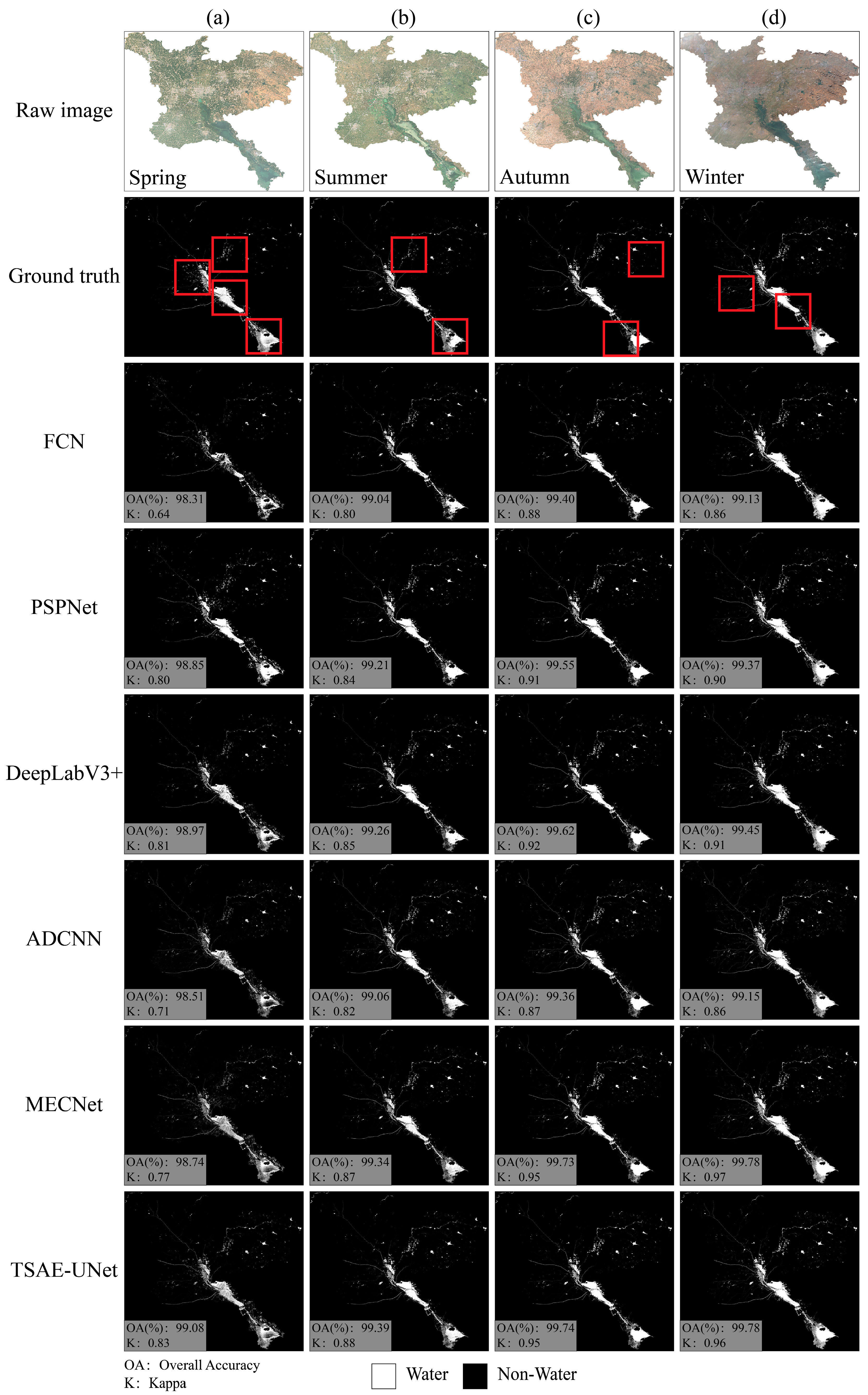

In the multi-temporal water body detection for Jining City from 2020 to 2022, TSAE-UNet produced consistent and accurate monthly detection results. Across the three years of detection data, the water body areas detected by TSAE-UNet had the highest inter-annual correlation coefficients, at 0.911, 0.852, and 0.749, indicating its superior performance in long-term monitoring tasks. In the quarterly water body detection for Jining City in 2022, TSAE-UNet achieved overall accuracies of 99.08% to 99.78% (0.05% to 1.47% higher than the other methods) and Kappa values of 0.83 to 0.96 (0.01 to 0.32 higher than the other methods).

We also explored the relationship between changes in water body area in Jining City from 2020 to 2022 and environmental factors such as temperature, evaporation, and precipitation. Correlation analysis revealed a significant negative correlation between temperature and water body area, with a correlation coefficient of −0.648; the correlation coefficients between water body area and evaporation and precipitation were −0.487 and −0.504, respectively.

In summary, the TSAE-UNet model performs excellently in diverse scenarios and long-term monitoring tasks, demonstrating high accuracy and consistency, making it an effective tool for supporting water resource management and environmental monitoring.