Abstract

Carbon dioxide is one of the most influential greenhouse gases affecting human life. CO2 data can be obtained through three methods: ground-based, airborne, and satellite-based observations. However, ground-based monitoring relies on sparsely distributed stations, and airborne monitoring has limited coverage and spatial resolution; neither can fully reflect the spatiotemporal distribution of CO2. Satellite remote sensing plays a crucial role in monitoring the global distribution of atmospheric CO2, offering high observation accuracy and wide coverage. However, satellite remote sensing still faces spatiotemporal constraints, such as interference from clouds (or aerosols) and limitations from satellite orbits, which can lead to significant data loss. Therefore, the reconstruction of satellite-based CO2 data becomes particularly important. This article summarizes methods for reconstructing satellite-based CO2 data, including interpolation, data fusion, and super-resolution reconstruction techniques, together with their advantages and disadvantages. It also provides a comprehensive overview of the classification and applications of super-resolution reconstruction techniques. Finally, the article offers future perspectives, suggesting that approaches such as image super-resolution reconstruction represent the future trend in the field of satellite-based CO2 data reconstruction.

1. Introduction

Climate change is one of the most significant challenges for the planet’s future [,,]. It has enormous ecological, social, and economic impacts across the globe, including an increase in extreme weather events, rising sea levels, melting glaciers, reduced biodiversity, and threats to food security. Carbon dioxide (CO2) is one of the greenhouse gases with the most significant impact on human life [,,]. Continuing increases in CO2 concentrations will significantly accelerate temperature rise [,,]. The Earth’s annual carbon emissions have exceeded its natural absorptive capacity, resulting in rising atmospheric CO2 concentrations [,]. Over the past few decades, as the global economy and population have grown, human activities have led to a steady rise in CO2 emissions, and atmospheric CO2 concentrations are still increasing at a rate of more than 2 ppm/yr (ppm: parts per million, yr: year) []. If uncontrolled, the global average CO2 concentrations are projected to exceed 415 ppmv (parts per million by volume, meaning 415 volume units of CO2 per one million volume units of air) by 2030, contributing to more extreme weather events.

In response to climate change, governments have set policies and targets for greenhouse gas emissions reductions to hold CO2 concentrations within specific limits []. On 12 December 2015, 197 countries joined the Paris Agreement, which aims to hold the increase in the global average temperature to well below 2 °C above pre-industrial levels [,]. In December 2018, the parties to the United Nations Framework Convention on Climate Change convened in Katowice, Poland. The conference aimed to develop rules and guidelines for implementing the Paris Agreement and accelerate global action to reduce emissions to meet the challenge of climate change []. To effectively control and reduce CO2 emissions and achieve the dual carbon targets (carbon peaking and carbon neutrality), carbon monitoring is first needed to understand the characteristics and trends of the spatial and temporal distributions of atmospheric CO2. However, the spatial distribution of CO2 is not uniform and is closely related to human activities. Therefore, obtaining accurate CO2 monitoring data is essential for understanding the spatial and temporal distribution characteristics of CO2.

Satellite carbon dioxide data reconstruction uses satellite observation data and ancillary data to infer and estimate the spatial distribution and spatial–temporal variations in CO2 in the Earth’s atmosphere through data processing and analysis methods. The method makes up for the shortcomings of satellite observation, improves the precision and coverage of observation, and supports CO2 concentration data on a global scale.
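To make the idea of reconstruction concrete, the following is a minimal sketch of one of the simplest gap-filling approaches, inverse distance weighting (IDW), applied to scattered CO2 soundings. It is an illustration only and not a method taken from any specific study: the function name `idw_fill`, its parameters, and the planar distance approximation are all assumptions made for this example.

```python
import numpy as np

def idw_fill(lon, lat, values, target_lon, target_lat, power=2.0, eps=1e-12):
    """Estimate CO2 at target points by inverse distance weighting.

    lon, lat, values: 1-D arrays describing the valid soundings
    (degrees, degrees, ppm). target_lon, target_lat: 1-D arrays of
    locations where data are missing. Distances are planar in degree
    space (an assumption), which is only reasonable for small regions
    away from the poles.
    """
    obs = np.column_stack([lon, lat]).astype(float)                 # (n_obs, 2)
    tgt = np.column_stack([target_lon, target_lat]).astype(float)   # (n_tgt, 2)
    vals = np.asarray(values, dtype=float)
    # Pairwise distances between every target and every sounding.
    dist = np.linalg.norm(tgt[:, None, :] - obs[None, :, :], axis=2)
    # Closer soundings receive larger weights; eps avoids division by zero.
    weights = 1.0 / (dist + eps) ** power
    return (weights @ vals) / weights.sum(axis=1)

if __name__ == "__main__":
    # Two hypothetical soundings and one gap halfway between them.
    est = idw_fill([0.0, 2.0], [0.0, 0.0], [410.0, 414.0], [1.0], [0.0])
    print(est)
```

IDW uses only the geometry of the observations; the interpolation, data fusion, and super-resolution methods reviewed later differ mainly in how much additional statistical structure or ancillary data they bring in.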

Currently, there are three main ways to monitor CO2: ground based, airborne, and satellite based.

Ground-based monitoring is one of the critical methods for obtaining the spatial and temporal distributions of CO2 and is one of the older means of CO2 monitoring. In this method, the carbon dioxide concentration is monitored in real-time by ground stations or towers, such as the National Oceanic and Atmospheric Administration—Earth System Research Laboratory (NOAA-ESRL) [] and the Total Column Carbon Observing Network (TCCON) []. This method has the advantage of high accuracy and high temporal resolution and therefore is often analyzed in comparison with results from satellite data inversion. However, the global ground-based monitoring network consists of sparsely distributed stations with limited coverage, low spatial resolution, and no real-time capability, so it cannot fully reflect the spatial and temporal distributions of CO2 []. Therefore, in the late 1970s, airborne monitoring methods were introduced.

Airborne monitoring is a method of monitoring the CO2 concentration in the Earth’s atmosphere in real-time [,,]. Airborne refers to meteorological observations conducted from platforms that are flying or floating in the air. This method has the advantages of global coverage, high spatial and temporal resolution, long-term observation, and uncrewed operation. It helps to understand climate and environmental change trends. However, technical challenges, atmospheric disturbances, and calibration verification are disadvantages that must be overcome to ensure data accuracy and validity.

Satellite-based monitoring provides real-time or continuous monitoring of the CO2 concentration in the Earth’s atmosphere by launching dedicated satellites and utilizing high-resolution remote sensing technology [,,]. Compared with station-based observation, satellite monitoring is largely free from time and space constraints and offers comprehensive coverage, stable observation, long-term time series, and three-dimensional observation, and its observation accuracy is gradually improving []. However, it is the most costly of the three approaches and is limited by technical complexity, data processing challenges, and weather impacts, which need to be addressed to maximize its monitoring potential.

With the launch of more and more carbon satellites, work on validating the large volume of CO2 data has gradually begun. At present, scholars mainly validate the accuracy of CO2 products inverted from different satellites by combining real-time ground station data, aircraft route measurement data, and model simulation data [,]. However, due to the limitations of the validation methods, the validation of satellite CO2 data usually focuses only on evaluating the data accuracy and the temporal variation characteristics of the errors, neglecting the spatial distribution of the errors. In addition, because of clouds and aerosols and the limitations of satellite orbits, a large amount of data is missing from satellite inversions, so the reconstruction of satellite CO2 data becomes particularly important [].
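The accuracy evaluation described above usually reduces to a few aggregate statistics computed over collocated satellite and ground values. The sketch below shows one plausible form, assuming the two series have already been matched in space and time; the function name `validation_stats` and the choice of metrics (mean bias, RMSE, Pearson correlation) are assumptions for illustration rather than a prescribed protocol.

```python
import numpy as np

def validation_stats(satellite_ppm, ground_ppm):
    """Aggregate agreement metrics for collocated CO2 values (ppm).

    Both inputs are 1-D sequences of equal length, where element i of
    each sequence refers to the same place and time (collocation is
    assumed to have been done beforehand).
    """
    sat = np.asarray(satellite_ppm, dtype=float)
    ref = np.asarray(ground_ppm, dtype=float)
    diff = sat - ref
    return {
        "bias": float(diff.mean()),                  # mean satellite minus ground
        "rmse": float(np.sqrt((diff ** 2).mean())),  # root-mean-square error
        "r": float(np.corrcoef(sat, ref)[0, 1]),     # Pearson correlation
    }

if __name__ == "__main__":
    ground = [410.2, 411.0, 412.5, 413.1]
    satellite = [411.0, 411.4, 413.6, 413.0]
    print(validation_stats(satellite, ground))
```

Note that these metrics collapse all locations into single numbers, which is exactly why they say little about the spatial distribution of the errors.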

Addressing uncertainties and errors in carbon satellite data reconstruction requires a comprehensive approach encompassing data processing, model validation, and error correction. First, optimizing data calibration and noise reduction techniques can enhance the quality of the raw observational data. Second, improving retrieval algorithms, such as through multi-model validation and error propagation analysis, can increase the processing accuracy. Additionally, integrating multi-source data and analyzing temporal sequences can enhance the spatial and temporal coverage of observations, mitigating the impact of data gaps. Concurrently, combining ground validation and simulation experiments for model calibration ensures result reliability. Finally, quantifying uncertainties through sensitivity analysis and statistical methods provides confidence intervals for the data results. These measures collectively reduce uncertainties and errors in the reconstruction process, thereby improving the accuracy and utility of CO2 data.
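As one example of the statistical step mentioned above, a percentile bootstrap can attach a confidence interval to the mean difference between reconstructed and reference CO2 values. This is a generic sketch under stated assumptions, not a procedure drawn from the reviewed literature; `bootstrap_ci`, its defaults, and the sample values are hypothetical.

```python
import numpy as np

def bootstrap_ci(differences, n_boot=2000, level=0.95, seed=0):
    """Percentile-bootstrap confidence interval for the mean difference.

    differences: 1-D sequence of (reconstructed - reference) values in ppm.
    Returns (lower, upper) bounds for the chosen confidence level.
    """
    d = np.asarray(differences, dtype=float)
    rng = np.random.default_rng(seed)
    # Resample with replacement: each row is one bootstrap replicate.
    idx = rng.integers(0, d.size, size=(n_boot, d.size))
    means = d[idx].mean(axis=1)
    alpha = (1.0 - level) / 2.0
    lower, upper = np.quantile(means, [alpha, 1.0 - alpha])
    return float(lower), float(upper)

if __name__ == "__main__":
    diffs = [0.5, 1.2, -0.3, 0.8, 1.0, 0.1, 0.6, 0.9]  # hypothetical errors (ppm)
    print(bootstrap_ci(diffs))
```

The bootstrap treats the differences as independent samples, which is an assumption; spatially or temporally correlated errors would call for a block bootstrap or a model-based interval instead.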

In the field of carbon remote sensing, many researchers and scholars have summarized the work of the time and published excellent review papers.

In 2015, Schimel et al. [] assessed the current development of terrestrial ecosystems and the carbon cycle through satellite observations. They presented the available satellite remote sensing data products such as vegetation index, surface temperature, chlorophyll fluorescence, land surface elevation, and their limitations. In addition, the article discusses the applications of satellite remote sensing technology in monitoring the carbon cycle and climate change and predicts future trends in satellite remote sensing technology and data products. Those trends include improving data resolution and accuracy, enhancing the real-time and spatial and temporal coverage of data, developing technologies such as multi-source data fusion and machine learning, and strengthening the integration of satellite observations with ground-based observations and model simulations to better understand the dynamics of terrestrial ecosystems.

In 2016, Yue et al. [] synthesized the latest research results and development trends of space and ground-based CO2 concentration measurement technologies, outlining the research progress of CO2 inversion algorithms, spatial interpolation methods, and ground-based observation data. In addition, they elaborated on the latest research results of CO2 concentration measurement techniques and the application of these techniques in global climate change and carbon cycle research and looked forward to future research directions.

In 2019, Xiao et al. [] provided an overview of the terrestrial carbon cycle and carbon fluxes. They outlined critical milestones in the remote sensing of the terrestrial carbon cycle and synthesized the platforms/sensors, methods, research results, and challenges faced in the remote sensing of carbon fluxes. In addition, they explored the uncertainty and validity of carbon flux and stock estimates and provided an outlook on future research directions for the remote sensing of the terrestrial carbon cycle.

In 2021, Pan et al. [] assessed the ability of CO2 satellites to serve as an objective, independent, and potentially low-cost and external data source while comparing the significance of nighttime optical satellite data for proxy CO2 monitoring to distinguish the importance of direct CO2 satellite monitoring.

In 2022, Kerimov et al. [] reviewed the application of machine learning models to greenhouse gas emissions estimation and modeling. They provided an overview of the methodology for applying machine learning in greenhouse gas emissions estimation and the main challenges faced.

As mentioned above, several past reviews have focused on CO2 monitoring, the carbon cycle, and carbon emissions. Although these reviews have covered the outlook of mapping the spatial and temporal distributions of CO2, they still lack content on CO2 data reconstruction methods. Therefore, a study focusing on satellite CO2 data reconstruction methods is urgently needed to help researchers interested in this field understand the latest developments in satellite CO2 data reconstruction.

This paper takes interpolation, data fusion, and super-resolution reconstruction as its main topics and systematically reviews the satellite CO2 data reconstruction methods used to construct high-resolution and high-precision CO2 data, so that readers can quickly understand the research hotspots of CO2 spatial and temporal distributions.

The contributions of this paper include the following:

(1) This paper provides a comprehensive review of CO2 monitoring methods and data sources.

(2) This paper describes in detail the comparative analysis of satellite CO2 data reconstruction methods on the basis of limited CO2 measurement data, with interpolation, data fusion, and super-resolution reconstruction as horizontal tasks, and traditional methods and machine/deep learning methods as vertical tasks. Combining these two types of analyses allows for a comprehensive evaluation and comparison of different CO2 data reconstruction methods. To the knowledge of the authors of this paper, this is the first review of CO2 reconstruction methods.

(3) This paper proposes the future development direction of satellite CO2 data reconstruction, namely, CO2 data with a longer period, a larger spatial range, a higher spatial resolution, and higher data accuracy can be obtained by super-resolution reconstruction and other methods.

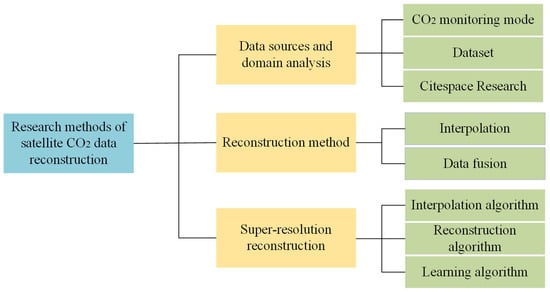

This paper is divided into four main parts. As shown in Figure 1, the first part primarily introduces the research background and the necessity of reconstructing CO2 data. Through a summary of previous reviews, it also summarizes the innovative aspects and major contributions of this review. The second part discusses the monitoring methods for CO2 and the sources of data. In this part, CiteSpace, a tool for visualization and analysis of scientific literature, is used to visualize the development trend and research focus in this field. The third part delves into the specific applications of interpolation and data fusion methods in the reconstruction of CO2 data to achieve high-precision CO2 data reconstruction. The fourth part provides an in-depth exploration of super-resolution reconstruction methods and offers suggestions for the future development of carbon dioxide data reconstruction. The authors of this paper believe that this review will serve as an important reference for the reconstruction of satellite-based CO2 data.

Figure 1.

Schematic diagram of the structure of this paper.

2. Data Sources and Analysis in the Field

2.1. Data Sources

2.1.1. Ground-Based Monitoring

Ground-based monitoring is an essential method for obtaining the spatial and temporal distributions of CO2. Major ground-based CO2 monitoring networks include WDCGG (World Data Centre for Greenhouse Gases), GLOBALVIEW-CO2, NOAA-ESRL, COCCON, and TCCON.

WDCGG, established by the World Meteorological Organization, focuses on the global collection, storage, and sharing of greenhouse gas data. The WDCGG dataset includes information from multiple sources: on one hand, it contains a substantial amount of in situ measurement data from ground-based observation stations, which directly measure atmospheric CO2 concentrations at the ground level; on the other hand, WDCGG also integrates remote sensing data from satellite instruments, which measure atmospheric CO2 concentrations above the ground stations. This integration of remote sensing data complements ground-based observations by capturing a broader spatial distribution and the vertical profiles of atmospheric CO2 concentrations.

GLOBALVIEW-CO2 is a dataset maintained by international collaborative organizations, aiming to provide CO2 measurement data on a global scale. This dataset primarily consists of CO2 measurement data from ground-based observation stations, provided by fixed stations located around the world, recording atmospheric CO2 concentrations at the ground level. Unlike WDCGG, the GLOBALVIEW-CO2 dataset does not include remote sensing data, relying solely on ground-based observations to monitor and analyze global CO2 levels.

NOAA-ESRL is a research laboratory within the National Oceanic and Atmospheric Administration of the United States. In climate change, NOAA-ESRL contributes to data support through the Global Greenhouse Gas Reference Network [,]. The network comprises 106 stations, primarily in developed countries, including 8 high-tower stations and 4 baseline observatories. These stations provide discrete weekly CO2 sample collection, continuous in situ CO2 measurements, and measurements of other gases. Data from NOAA-ESRL are widely used to study temporal and spatial trends in global CO2 concentrations and sources and sinks of CO2. In addition, NOAA-ESRL has developed the CarbonTracker data assimilation system. The system utilizes continuous CO2 time series data from around the globe in conjunction with Earth system models to infer global CO2 sources and sinks, as well as CO2 uptake and release processes.

COCCON (Collaborative Carbon Column Observing Network) is a global observation network focused on high-precision ground-based measurements of atmospheric greenhouse gases, such as carbon dioxide and methane []. It aims to provide accurate column concentration data to support and validate satellite observations, particularly in areas where satellite measurements are affected by cloud cover or complex terrain. Using the micro Fourier Transform Infrared Spectrometer, a portable and low-cost yet high-precision instrument, COCCON measures the solar spectrum to determine the total column concentrations of CO2, CH4, and carbon monoxide. These measurements offer high temporal and spatial resolution, making COCCON a valuable resource for calibrating satellite data, improving climate models, studying the carbon cycle, and informing climate policy. Through its global network, COCCON addresses gaps in satellite observations and plays a crucial role in advancing greenhouse gas monitoring and climate change research.

TCCON, as a global CO2 observation network comprised of ground-based measurement sites, has a key objective of providing comprehensive information on global CO2 concentration changes through high-precision and high-resolution measurements []. As shown in Figure 2, this network gathers observational sites from around the world, spanning multiple countries and regions including North America, South America, Europe, Asia, Australia, and Antarctica. Each site is equipped with high-precision ground-based infrared spectrometers. The measurement data from the TCCON network exhibit exceptional accuracy and high temporal resolution, allowing for the provision of CO2 concentration change information at hourly or even shorter intervals for research purposes. These data are also widely utilized to investigate important questions such as the spatial distribution of CO2 in the atmosphere, seasonal variations, and variations among different regions [,].

Figure 2.

Global TCCON site location distribution (https://tccondata.org/, accessed on 1 October 2024).

Ground-based monitoring is a crucial means of understanding changes in atmospheric CO2 concentrations, and its advantages lie in high precision and high temporal resolution. Therefore, it is often used for comparative analysis with satellite and airborne data, particularly for validating satellite data retrieval results. However, this approach also has some limitations, chiefly limited spatial coverage. Ground monitoring stations are sparsely distributed and cannot comprehensively cover the entire globe, especially in remote and oceanic regions where monitoring data are relatively scarce. This limitation may impact the accuracy of global CO2 concentration change analysis. In conclusion, accurately depicting the spatiotemporal distribution of CO2 from ground observations alone can be quite challenging.

2.1.2. Airborne Monitoring

Researchers have successfully supplemented ground-based monitoring with the use of airborne monitoring methods. This approach involves measuring atmospheric CO2 concentrations and vertical distribution using commercial flights or specially equipped research aircraft. It provides vital information about the distribution of CO2 and carbon cycling in the atmosphere.

On the one hand, commercial flights typically use Automatic Air Sampling Equipment or Continuous CO2 Measuring Equipment to collect air samples or continuously measure CO2 concentrations. On the other hand, research aircraft can carry various instruments to measure atmospheric CO2 concentration and vertical distribution, such as the Laser Absorption Spectrometer [] and the Infrared Absorption Spectrometer [].

Airborne monitoring can provide high-resolution data because the aircraft can collect information at different altitudes and times, resulting in more detailed spatiotemporal information. Airborne CO2 monitoring has become an important part of the global atmospheric monitoring network. More detailed data can be obtained through projects such as the Integrated Aircraft Trace Gases Observation Network [], the Carbon Cycle Greenhouse Gases Aircraft Program of the National Oceanic and Atmospheric Administration (NOAA) Earth System Research Laboratory, and NASA’s Airborne Greenhouse Gas Emissions Observation System. IAGOS (In-service Aircraft for a Global Observing System) is also a global program that utilizes sensors and instruments on commercial flights to measure atmospheric composition and meteorological parameters. By installing sensors on regular commercial flights, IAGOS aims to collect atmospheric data on a global scale to supplement data from ground stations and satellite observations. IAGOS provides data on the concentration of carbon dioxide in the upper atmosphere. These data can be used to study the concentration and vertical distribution of CO2 in the atmosphere and to assess the impact of global climate change.

Airborne monitoring also has some limitations. Firstly, the coverage and sampling frequency of airborne monitoring are constrained by flight routes and schedules. Despite the establishment of a global aviation monitoring network, the number of monitoring stations and sampling frequencies remains limited due to factors such as cost. Additionally, since airborne monitoring instruments are installed on commercial aircraft, they can be influenced by factors such as weather, flight altitude, and aircraft type, which can affect the accuracy and reliability of the monitoring data.

2.1.3. Satellite Monitoring

During the past two decades, continuous advances in sensor technology and inversion methods have led to the maturation of satellite remote sensing for CO2 detection. Optical sensors onboard satellites are widely used for atmospheric CO2 observations. Currently, many satellites and instruments have been successfully launched into space, including the Scanning Imaging Absorption Spectrometer for Atmospheric Chartography (SCIAMACHY) [], the Atmospheric Infrared Sounder (AIRS) [], the Greenhouse Gases Observing Satellite (GOSAT) [], the Orbiting Carbon Observatory (OCO-2, OCO-3) [,,], and TanSat []. Future satellite launch programs for monitoring CO2 are in the pipeline. Table 1 summarizes the CO2 monitoring satellites that have been launched and those planned for launch until 2028 worldwide.

Table 1.

Carbon dioxide monitoring satellites launched and planned (to be launched by 2028) globally.

Europe has been an early pioneer in satellite remote sensing for greenhouse gases. On 28 February 2002, the European Space Agency (ESA) launched the Environmental Satellite (ENVISAT) carrying the SCIAMACHY instrument. SCIAMACHY had three observation modes: limb, nadir, and occultation. It was the first satellite sensor capable of detecting changes in boundary layer CO2 concentrations. SCIAMACHY confirmed the feasibility of measuring near-surface CO2 concentrations in the near-infrared spectral range []. The retrieval algorithm for SCIAMACHY involved a combination of radiative transfer models and atmospheric inversion techniques to derive CO2 concentrations from the observed spectra. This approach was critical in validating the satellite’s capability to detect CO2 variations at different altitudes. Furthermore, ESA has initiated the European Copernicus anthropogenic CO2 monitoring mission. These satellites not only provide precise observations with high resolution and high signal-to-noise ratios but also can simultaneously image various parameters [].

On 23 January 2009, Japan successfully launched GOSAT, the world’s first satellite specifically designed for the detection of atmospheric greenhouse gases, including CO2 and CH4 []. GOSAT employs the Fourier Transform Spectroscopy (FTS) method, which involves using a Michelson interferometer to capture spectral data across a broad range of wavelengths. The retrieval algorithm processes these data using radiative transfer models and inversion techniques to estimate CO2 and CH4 concentrations. Following GOSAT, the GOSAT-2 satellite was successfully launched on 29 October 2018, equipped with higher-performance sensors to provide more accurate greenhouse gas concentration data. However, both GOSAT and GOSAT-2 had limitations in terms of their ability to continuously sample the atmosphere due to their coarse spatial resolution. To enhance the spatial coverage capability for atmospheric greenhouse gases, Japan initiated the GOSAT-GW project, which adopted a grating-based spectral detection mode similar to OCO, Sentinel-5, and TanSat.

The United States’ first carbon satellite, OCO, experienced a launch failure in 2009 because the launch vehicle’s payload fairing failed to separate. However, in July 2014, OCO-2 was successfully launched []. NASA (National Aeronautics and Space Administration) released the initial global CO2 concentration distribution maps for OCO-2 on 8 December 2014, covering the period from 1 October 2014 to 11 November 2014. The retrieval algorithm for OCO-2 utilizes a combination of high-resolution spectroscopic measurements and advanced inversion techniques to derive CO2 concentrations from the captured solar absorption spectra. Following OCO-2, OCO-3 was successfully launched on 4 May 2019 and installed on the International Space Station, continuing the CO2 observation mission. Compared to OCO-2, OCO-3 has a larger observation range, meaning it can cover a wider swath of the Earth’s surface in each orbit, and it operates in the orbit of the space station. This orbit allows for target and snapshot observations of a given point at different times of day. Additionally, it can provide continuous observations of CO2 and Solar-Induced Fluorescence data from dawn to dusk within a day, significantly enhancing its observational capabilities for local and point source targets [].

Satellite resolution plays a crucial role in the remote sensing detection of point sources such as power plants. To resolve such sources, commercial satellite companies in Canada and the United States have initiated greenhouse gas satellite remote sensing programs with high spatial resolution [,]. Canada’s GHGSat company launched three satellites in 2016, 2020, and 2021. These satellites are capable of capturing greenhouse gas remote sensing data with a resolution of 25 m and an accuracy of 4 ppm for the Column-Averaged Dry Air Mole Fraction of CO2 (XCO2) and 18 parts per billion for the Column-Averaged Dry Air Mole Fraction of CH4 (XCH4). This innovative solution provides high-precision estimation for point source emissions. Meanwhile, Planet Labs, a private Earth imaging company based in the United States, plans to launch two Carbon Mapper satellites in 2024. These satellites will collect high signal-to-noise ratio spectral data with a resolution of 30 m, an 18 km swath, and a spectral range of 400–2500 nanometers. These data can provide high-precision greenhouse gas measurements, with the capability to detect emissions from sources as small as 50 kg/h and as large as 300 t/h CO2. This detection capability is sufficient for effectively monitoring over of the world’s coal-fired power plants, making a significant contribution to greenhouse gas emission monitoring.
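The column-averaged dry air mole fraction quoted above is, in essence, a pressure-weighted mean of the CO2 profile. The following simplified sketch illustrates that weighting; it assumes dry air and constant gravity, ignores the averaging kernels used in real retrievals, and the function name `column_average` and the sample profile are hypothetical.

```python
import numpy as np

def column_average(co2_ppm, pressure_edges_hpa):
    """Pressure-weighted column average of a layered CO2 profile.

    co2_ppm: CO2 mole fraction in each layer (n values, ppm).
    pressure_edges_hpa: pressures at the layer boundaries (n + 1 values),
    ordered from the surface to the top of the atmosphere.
    Each layer is weighted by its pressure thickness, which is
    proportional to the mass of dry air it holds under the stated
    simplifications.
    """
    c = np.asarray(co2_ppm, dtype=float)
    p = np.asarray(pressure_edges_hpa, dtype=float)
    dp = np.abs(np.diff(p))
    if dp.size != c.size:
        raise ValueError("need one more pressure edge than CO2 layers")
    weights = dp / dp.sum()
    return float(weights @ c)

if __name__ == "__main__":
    # Hypothetical three-layer profile: more CO2 near the surface.
    print(column_average([420.0, 415.0, 410.0], [1000.0, 700.0, 300.0, 0.0]))
```

Because near-surface enhancements are diluted over the whole column, a strong local source shifts the column average by only a few ppm, which is why the ppm-level accuracies quoted for point-source missions matter.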

In recent years, China has made significant progress in the field of greenhouse gas remote sensing detection. First, on 22 December 2016, China successfully launched its first carbon satellite, TanSat, which has achieved a series of important results in global atmospheric CO2 concentration and chlorophyll fluorescence monitoring, among other aspects. The Institute of Atmospheric Physics of the Chinese Academy of Sciences obtained XCO2 from TanSat observations using IAPCAS (Institute of Atmospheric Physics Carbon Retrieval Algorithm System), a carbon retrieval system developed independently by its team []. The IAPCAS retrieval algorithm integrates spectral data with radiative transfer models to provide accurate CO2 measurements. Following that, on 15 November 2017, the Fengyun-3D (FY-3D) meteorological satellite was successfully launched at the Taiyuan Satellite Launch Center. FY-3D carries the Greenhouse Gas Monitoring Instrument, which measures global CO2 and CH4 column concentrations using shortwave infrared interferometry. The retrieval algorithm for FY-3D processes interferometric data to estimate greenhouse gas concentrations with high precision. Furthermore, on 9 May 2018, the Gaofen-5 (GF-5) satellite was successfully launched at the Taiyuan Satellite Launch Center. The GF-5 satellite carries the Greenhouse Gases Monitor Instrument, primarily designed for quantitative monitoring of the distribution and changes in CO2 and CH4 concentrations globally. The Gaofen-5B (GF-5B) satellite, launched on 7 September 2021, is a crucial part of China’s high-resolution Earth observation system. It features advanced remote sensing instruments, including multi-spectral, hyperspectral, infrared, and ultraviolet sensors, for precise monitoring of the atmosphere, land, and oceans. The retrieval algorithms for GF-5B are tailored to handle complex datasets from these various sensors, ensuring accurate greenhouse gas measurements.

In the future, the Fengyun-3 No.08 polar-orbiting satellite is planned to be launched, carrying a high-spectral-resolution greenhouse gas monitoring instrument. This instrument will achieve high-precision quantitative inversion of global atmospheric greenhouse gases through continuous high-resolution measurements in the near-infrared and shortwave infrared spectral bands. Here, high resolution refers to the ability to distinguish and resolve fine details within the spectral bands, enabling the accurate detection and analysis of trace gas concentrations.

2.2. Datasets

Various datasets are used to measure and monitor global atmospheric CO2 concentrations. Currently, researchers utilize ground-based, satellite, and other ancillary datasets.

(1) Ground-based datasets

Ground-based data refer to the continuous monitoring and recording of CO2 concentrations in the atmosphere by setting up meteorological stations, observatories, and other equipment on the Earth’s surface. These datasets contain extensive observations of atmospheric CO2 concentrations, typically on hourly, daily, monthly, or yearly time scales. They cover variations in atmospheric CO2 concentrations across different regions globally. Additionally, these data are usually maintained and published collectively by multiple institutions and organizations. Data processing and quality control also undergo rigorous standardization and calibration procedures. As shown in Table 2, there are currently three main ground-based datasets: TCCON, WDCGG, and GLOBALVIEW-CO2.

TCCON sites use Fourier Transform Spectrometers (FTSs) to make hyperspectral observations with a spectral resolution of 0.02 cm⁻¹ and a temporal resolution of about 90 s. Spectral data are recorded for direct solar radiation in the 4000 to 9000 cm⁻¹ range. The spectra measured for CO2 include two weak CO2 absorption bands located at 6220 and 6339 cm⁻¹, and one O2 absorption band located at 7885 cm⁻¹. The spectral data are standardized, stable, and obtained in continuous observation mode at the TCCON site. The accuracy of the atmospheric XCO2 products inverted from the FTS observation spectra reaches 0.8 ppm under clear or less cloudy conditions. The sites are selected according to a uniform criterion, and most are located within 100 km of human activity impacts.
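FTS band positions are conventionally quoted as wavenumbers, whereas satellite instruments are often described by wavelength. Assuming the band positions above are wavenumbers in cm⁻¹ (the usual convention for FTS spectra), the short sketch below converts them to micrometres using λ(µm) = 10⁴ / ν̃(cm⁻¹); the function name is an assumption made for this example.

```python
def wavenumber_to_wavelength_um(wavenumber_cm1):
    """Convert a wavenumber in cm^-1 to a wavelength in micrometres."""
    if wavenumber_cm1 <= 0:
        raise ValueError("wavenumber must be positive")
    # 1 cm = 1e4 micrometres, so wavelength (um) = 1e4 / wavenumber (cm^-1).
    return 1.0e4 / wavenumber_cm1

if __name__ == "__main__":
    for band in (6220.0, 6339.0, 7885.0):
        print(f"{band:.0f} cm^-1 -> {wavenumber_to_wavelength_um(band):.3f} um")
```

The two CO2 bands fall near 1.61 µm and 1.58 µm and the third band near 1.27 µm, all within the shortwave infrared range that the satellite instruments discussed in this review also observe.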

WDCGG was established by the World Meteorological Organization and is dedicated to the collection, storage, and sharing of greenhouse gas data on a global scale []. The dataset includes various sources of data: first, ground-based observations, which involve direct measurements at observation sites, such as the atmospheric CO2 concentration measurements initiated by the Mauna Loa Observatory in Hawaii in 1958 []; second, mobile observations, which include measurements from ships, aircraft, and high-altitude balloons and help capture spatial variations and vertical distributions of atmospheric CO2 concentrations; and finally, remote sensing data, which consist of measurements of the atmosphere from satellite instruments and provide supplementary information to ground-based and mobile observations. By the late 1970s, NOAA’s Global Monitoring Division had conducted extensive measurements of atmospheric CO2 concentrations worldwide, encompassing both ground-based and mobile observations. In 1989, the World Meteorological Organization established the Global Atmosphere Watch, which now includes 51 countries that collectively establish observation sites and contribute data, integrating ground-based, mobile, and remote sensing technologies to provide a comprehensive view of atmospheric CO2 levels.

The GLOBALVIEW-CO2 dataset, provided by NOAA-ESRL, integrates observational data from multiple stations worldwide, offering long-term CO2 concentration data []. This dataset is essential for researching atmospheric carbon cycling, climate change, and identifying sources of CO2 emissions.

Table 2.

Current major ground-based carbon observation datasets.


| Database | Brief Introduction | Reference |
|---|---|---|
| TCCON | TCCON is recognized as a standard network for CO2 | [] |
| WDCGG | WDCGG focuses on the collection, management and dissemination of observational data | [] |
| GLOBALVIEW-CO2 | The observation platforms include ground-based stations, tall towers, ships, and aircraft | [] |

- (2) Satellite datasets

Currently, the inversion of CO2 concentrations from satellite shortwave infrared observations has become the most effective method for obtaining CO2 data. Effectiveness here refers to the ability of satellite datasets to provide global coverage and frequent observations, which ground-based or airborne datasets cannot achieve. Satellites can monitor remote and inaccessible areas, offering a more complete and consistent picture of CO2 distribution on a global scale. As shown in Table 3, the available satellite data include ENVISAT (with SCIAMACHY), the GOSAT series, the OCO series, and TanSat, each of which provides products from several inversion algorithms.

Table 3.

Major satellite carbon observation datasets.

Three main algorithms are used to estimate the concentration of CO2 in the atmosphere from SCIAMACHY spectral data []: the Differential Optical Absorption Spectroscopy (DOAS) algorithm, the Weighting Function Modified DOAS (WFM-DOAS) algorithm, and the Bremen Optimal Estimation DOAS (BESD) algorithm. The DOAS algorithm is based on the absorption characteristics of the spectrum. It uses the absorption properties of different gas molecules at various wavelengths of light to infer the concentrations of gases in the atmosphere. The WFM-DOAS algorithm builds upon the DOAS approach by considering the propagation path of light through the atmosphere. This enhances measurement precision and also takes into account the vertical distribution of gases in the atmosphere, which can affect the results. The BESD algorithm is particularly useful for measuring low-concentration gases like methane and CO2. This method relies on the absorption and emission characteristics of gas molecules in the atmosphere. By measuring the spectral emissions from the atmosphere, it can estimate the concentrations of methane and CO2. Together, these algorithms provide valuable tools for assessing atmospheric gas concentrations, each with its own strengths and applications.

GOSAT is positioned in a sun-synchronous orbit at an inclination of 98° and an altitude of approximately 666 km. It completes one orbit approximately every 100 min, has a revisit period of three days, and has a local overpass time of 13:00 []. The GOSAT satellite series includes GOSAT, GOSAT-2, and the planned GOSAT-GW. GOSAT carries the Thermal And Near-infrared Sensor for carbon Observation Fourier Transform Spectrometer (TANSO-FTS) and the Cloud and Aerosol Imager (TANSO-CAI). GOSAT-2 carries the successor instruments TANSO-FTS-2 and TANSO-CAI-2. Satellite data products are categorized into four levels. Level 1 includes raw data, such as radiance measurements. Level 2 provides column-averaged concentrations of greenhouse gases like CO2 and CH4. Level 3 aggregates data spatially and temporally into gridded maps of gas concentrations. Level 4 comprises higher-level products, including model outputs like regional CO2 flux data. These data level descriptions are consistent across the GOSAT series, with each new satellite improving upon its predecessor’s capabilities.
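The step from Level 2 to Level 3 described above can be illustrated with a minimal gridding sketch. It is not the operational GOSAT processing; the tuple layout `(lat, lon, xco2_ppm)`, the function name `grid_level2`, and the sample values are assumptions made only for illustration.

```python
from collections import defaultdict
from math import floor

def grid_level2(soundings, cell_deg=1.0):
    """Average point soundings into regular lat/lon cells.

    soundings: iterable of (lat, lon, xco2_ppm) tuples (hypothetical layout).
    Returns {(lat_index, lon_index): mean_xco2}; cells without data are absent,
    which is exactly the gap that reconstruction methods try to fill.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for lat, lon, xco2 in soundings:
        key = (floor(lat / cell_deg), floor(lon / cell_deg))
        sums[key] += xco2
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}

# Illustrative values only, not real retrievals.
soundings = [
    (35.2, 139.4, 412.0),
    (35.7, 139.9, 414.0),   # falls in the same 1-degree cell as the first sounding
    (-12.3, 130.8, 409.5),
]
level3 = grid_level2(soundings)
```

Operational Level 3 products also apply quality filtering and temporal windows, which this sketch leaves out.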

The OCO series of satellites includes OCO-2 and OCO-3. OCO-2 is a NASA-sponsored Earth observation mission whose primary goal is to detect CO2 column concentrations with high accuracy, precision, and spatial resolution. OCO-2’s CO2 products offer excellent precision and accuracy and can characterize CO2 sources, sinks, and regional variations. In 2014, OCO-2 was launched into the sun-synchronous EOS A-train orbit at 705 km, becoming the first satellite in the A-train constellation. Each orbit has a period of 98.8 min, with an overpass time of 13:36 local time and a revisit period of 16 days. OCO-2 carries a three-band grating imaging spectrometer to capture the reflected solar spectrum in the 0.765 µm, 1.61 µm, and 2.06 µm bands. In nadir view, the spectrometer collects eight consecutive observation images at a frequency of 3 Hz, with each image covering a 2 km by 1 km area on the Earth’s surface. OCO-3 is specialized in measuring gases such as CO2 and methane in the atmosphere. The data products of the OCO series satellites are divided into two levels: Level 1 and Level 2. Level 1 data products contain raw radiometric and image data from OCO-2 and OCO-3. Level 2 data products mainly cover vertical profiles of the CO2 column concentration and apparent absorption rate, which are crucial for studying the global carbon cycle and climate change.

TanSat is China’s first satellite dedicated to observing CO2 from space. Launched on 22 December 2016, TanSat was placed into a sun-synchronous orbit at an altitude of 700 km with an inclination angle of 98.2°, and its local overpass time is 13:30. The satellite carries two main instruments: the Atmospheric Carbon dioxide Grating Spectrometer (ACGS) and the Cloud and Aerosol Polarization Imager (CAPI). ACGS is a grating spectrometer designed to record the solar backscatter spectra for retrieving CO2 and oxygen concentrations. CAPI is a multi-band imaging spectrometer covering spectral ranges from ultraviolet to near-infrared in five bands (365–408 nm, 660–685 nm, 862–877 nm, 1360–1390 nm, and 1628–1654 nm). It is used to gather information about aerosols and clouds, with the 660–685 nm and 1628–1654 nm bands specifically employed for scattering detection. Using inversion algorithms developed by the Institute of Atmospheric Physics, Chinese Academy of Sciences, TanSat data have been inverted to obtain global CO2 distributions. Comparisons with observation data from TCCON at the Sudan Gurusha and Karlsruhe stations indicate absolute biases of 2.1 ppm and 2.9 ppm, respectively []. Additionally, the ACOS (Atmospheric CO2 Observations from Space) inversion algorithm has been applied to retrieve CO2 column concentrations from TanSat data, yielding a product bias of 0.85 ppm []. Furthermore, the Department of Ecological and Environmental Informatics at the State Key Laboratory of Resources and Environmental Information System in China has developed a TanSat inversion algorithm that combines the SCIAMACHY radiative transfer model with optimization estimation methods. Validation against TCCON data shows a bias of 2.62 ppm and a standard deviation of 1.41 ppm [].
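The bias and standard deviation figures quoted above are statistics of satellite-minus-reference differences at collocated soundings. The sketch below shows one way such numbers could be computed. The function name and sample values are hypothetical, and the collocation step (matching satellite soundings to TCCON measurements in space and time) is assumed to have been done already.

```python
from statistics import mean, stdev

def validation_stats(satellite, reference):
    """Return (bias, standard deviation) of satellite minus reference, in ppm.

    Both inputs are equal-length sequences of collocated column CO2 values.
    """
    diffs = [s - r for s, r in zip(satellite, reference)]
    return mean(diffs), stdev(diffs)

# Illustrative values only, not real retrievals.
satellite = [411.0, 413.5, 409.0, 415.5]
reference = [410.0, 412.0, 408.5, 413.0]
bias, spread = validation_stats(satellite, reference)
```

A positive bias means the satellite product reads higher than the ground reference on average. The spread describes the scatter that remains after removing that mean offset.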

- (3) Auxiliary datasets

The auxiliary CO2 datasets provide emission information at the national, regional, and city levels, documenting emissions from sources such as coal combustion, oil, natural gas, cement production, and iron and steel production. These datasets are instrumental in analyzing climate change trends, formulating mitigation policies, and assessing national progress in reducing emissions. Standard anthropogenic CO2 emission datasets, listed in Table 4, are based on various methodologies and data sources.

Table 4.

Common auxiliary datasets for CO2.

The CHRED (China High Resolution Emission Database) is an energy consumption database published by the China Energy Research Institute. It provides data on energy consumption across various regions of China, supporting research on energy policy, regional energy planning, and environmental analysis []. This dataset includes information on energy consumption by provinces and prefecture-level administrative units, covering a range of energy types such as coal, crude oil, natural gas, and electricity. It may also include consumption data by different industries and uses, as well as related economic and environmental indicators. Researchers, policymakers, and environmental protection agencies can use the CHRED dataset to analyze energy consumption, environmental impacts, and economic benefits across China.

A team of researchers from Peking University (PKU) has released a series of global emissions inventory datasets that cover various pollutants and time scales []. The datasets include global emissions data for CO2, CO, and PM2.5. These global emissions inventory data were developed using a bottom–up approach, estimating pollutant emissions on a pollutant-by-pollutant basis. The dataset has a spatial resolution of 0.1° by 0.1°, providing detailed geographic information, and covers the period from 1960 to 2014 with a monthly temporal resolution. It represents 64 to 88 individual emission sources, encompassing different sectors and fuel combinations.

The ODIAC (Open-Data Inventory for Anthropogenic Carbon dioxide) dataset estimates anthropogenic CO2 emissions in various regions of the globe using satellite observations, energy statistics, and ground-based data []. With a resolution of 1 km, this dataset can be used to study the spatial distribution, spatiotemporal variations, and trends of global carbon emissions [].

The EDGAR (Emissions Database for Global Atmospheric Research) dataset provides global anthropogenic emissions data with a resolution of 0.1° × 0.1°, offering high accuracy, spatial and temporal resolution, and timeliness [].

Additionally, CARMA [], WDI [], MEIC [], and CEADS [] are standard auxiliary CO2 datasets that enhance the transparency of global carbon emissions and support mitigation actions. These datasets aggregate information from multiple sources, including government reports, energy statistics, and other publicly available data.

2.3. CiteSpace-Based Analysis of Satellite CO2 Data Reconstruction

CiteSpace is a tool for visualizing and analyzing scientific literature, primarily used for studying knowledge evolution and academic collaboration networks within academic fields [,]. It assists researchers in identifying research hotspots, key authors, field collaborations, and trends in knowledge evolution, thereby facilitating academic research and decision-making. In this section, CiteSpace 6.1.R2 is employed to visualize and analyze literature related to global satellite CO2 data reconstruction and to review research on satellite CO2 data reconstruction over the past 20 years, focusing on keyword mapping and author contributions.

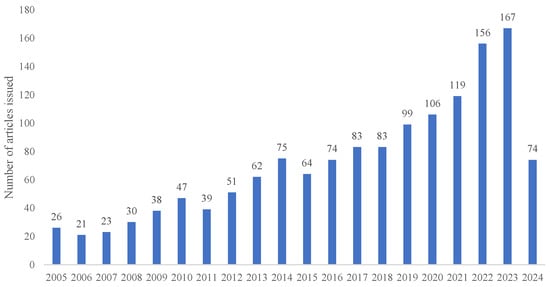

With the increase in CO2 emissions, satellite CO2 data reconstruction has become widely utilized. Figure 3 illustrates that the number of papers in the field of satellite CO2 reconstruction has shown an overall increasing trend from 2005 to 2024, with the most significant rise occurring between 2021 and 2022. This indicates that satellite CO2 data reconstruction has garnered considerable attention in recent years and is highly valued by scholars.

Figure 3.

Number of papers issued on satellite CO2 data reconstruction (total per year until May 2024).

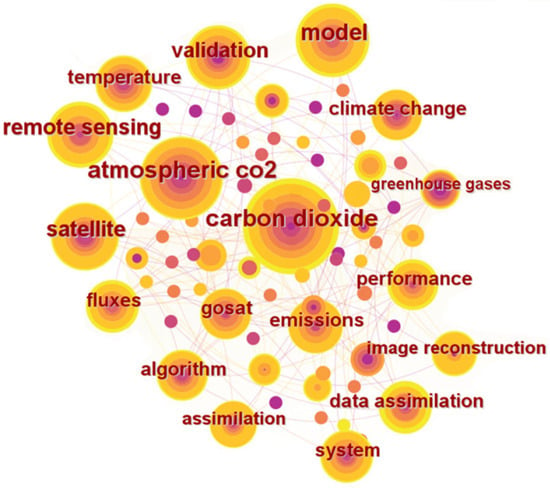

This paper searched the Web of Science using the keywords “carbon dioxide” and “reconstruction”, collecting about 1437 relevant articles from the past two decades. The size of each circle in the keyword map is proportional to the frequency of the keyword. The hierarchy of the circles represents the passage of time, with the circles moving from the inside to the outside, indicating a progression from the past to the present. Red circles highlight key nodes, demonstrating that the relevant literature in the field has attracted significant attention. Connecting lines show associations between different keywords (data source: Web of Science Core Collection).

As shown in Figure 4, the CO2 node is the most prominent and frequent keyword on the map, followed by “satellite”, “remote sensing”, “reconstruction”, “assimilation”, and “model”. These high-frequency keywords reflect the core concepts, key technologies, and research directions in the reconstruction of satellite CO2 data, highlighting the hotspots and concerns in the field. This information helps researchers gain a deeper understanding of the field’s development trends and knowledge structure.

Figure 4.

Keyword mapping analysis of carbon satellite data reconstruction based on CiteSpace.

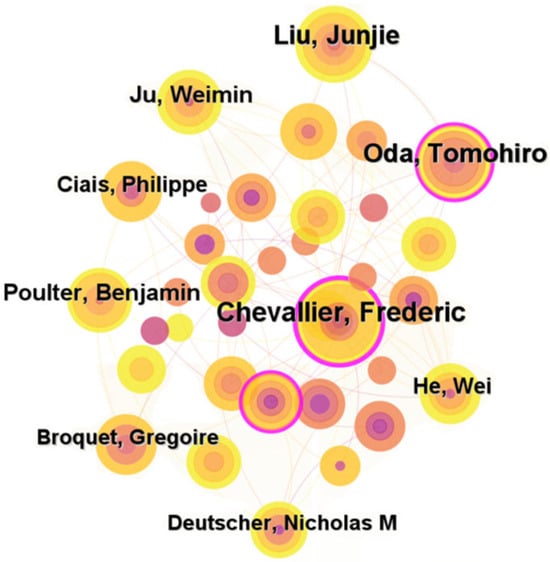

As shown in Figure 5, authors such as Frederic Chevallier and Tomohiro Oda appear with high frequency in CiteSpace’s author mapping. The high frequency of these authors reflects their academic reputation, leadership, collaborative networks, and key innovations, as well as the significance of their academic contributions and activities in the field of satellite CO2 data reconstruction. Readers can stay informed about the research frontiers in this field by following the work of these prominent authors.

Figure 5.

Author mapping analysis in the field of carbon satellite data reconstruction based on CiteSpace.

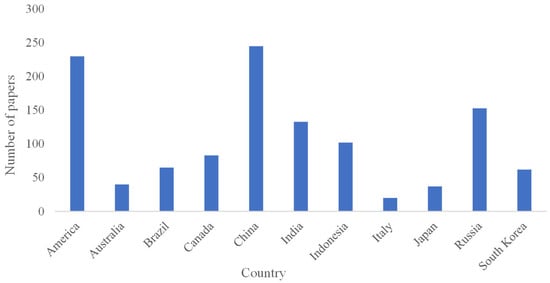

It can be observed from Figure 6 that China and the United States lead in paper contributions. Compared to developing countries, developed nations have made more rapid progress in the field of satellite remote sensing and have paid greater attention to satellite CO2 data reconstruction, resulting in a higher volume of published papers. This trend indicates that countries worldwide are focusing on advancing this field, highlighting it as a significant area of development at the international level.

Figure 6.

Number of papers on CO2 in selected countries in the period January 2020 to May 2024.

3. Satellite CO2 Reconstruction Methods

Scholars have conducted CO2 data reconstruction studies across multiple satellites and developed a series of high-precision CO2 datasets over extended periods, considering the spatial and temporal coverage as well as the data accuracy of various CO2 satellites.

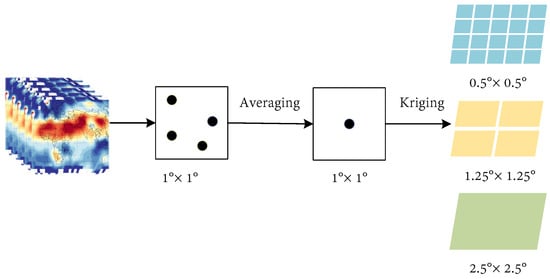

Interpolation is a method used to estimate information about a function’s value or its derivative at discrete points. It involves estimating approximate values at other points based on a finite number of known points. Interpolation techniques can be used to fill in unobserved spatial data within a single image or unobserved temporal data across multiple images over a fixed observation area. Kriging is a specific interpolation method that not only utilizes known data points but also models the spatial correlation structure between these points to make more accurate predictions. It achieves this by fitting a semivariogram to quantify the spatial correlation and then using this model to appropriately weight the known data points when estimating values at unknown locations. In CO2 data reconstruction, Kriging can estimate CO2 concentrations at locations without measurements by leveraging the spatial patterns observed in known data. However, not all CO2 data reconstruction methods use Kriging. Some methods may employ other statistical or machine learning techniques that do not involve the spatial correlation modeling characteristic of Kriging.
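The Kriging procedure described above (model the spatial correlation with a semivariogram, then use it to weight the known points) can be sketched in a few lines of NumPy. This is a minimal illustration of Ordinary Kriging under stated assumptions, not the implementation used in any of the cited studies. The exponential semivariogram and its parameters (`nugget`, `sill`, `corr_range`) are assumed to be known here, whereas in practice they are fitted to an empirical semivariogram. The coordinates and values are illustrative.

```python
import numpy as np

def exp_semivariogram(h, nugget, sill, corr_range):
    """Exponential semivariogram model; gamma(0) = 0 by definition."""
    h = np.asarray(h, dtype=float)
    gamma = nugget + (sill - nugget) * (1.0 - np.exp(-3.0 * h / corr_range))
    return np.where(h > 0, gamma, 0.0)

def ordinary_kriging(xy, values, target, nugget=0.0, sill=1.0, corr_range=10.0):
    """Estimate the value at `target` from known points `xy` with `values`.

    Returns (estimate, kriging_variance, weights).
    """
    xy = np.asarray(xy, dtype=float)
    values = np.asarray(values, dtype=float)
    target = np.asarray(target, dtype=float)
    n = len(values)

    # Left-hand side: semivariances between all pairs of known points, bordered
    # by ones for the unbiasedness constraint (weights sum to one).
    pair_dist = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=-1)
    lhs = np.ones((n + 1, n + 1))
    lhs[:n, :n] = exp_semivariogram(pair_dist, nugget, sill, corr_range)
    lhs[n, n] = 0.0

    # Right-hand side: semivariances between known points and the target.
    rhs = np.ones(n + 1)
    rhs[:n] = exp_semivariogram(np.linalg.norm(xy - target, axis=1),
                                nugget, sill, corr_range)

    solution = np.linalg.solve(lhs, rhs)   # weights followed by the Lagrange multiplier
    weights = solution[:n]
    estimate = float(weights @ values)
    variance = float(solution @ rhs)       # sum_i w_i * gamma_i0 + mu
    return estimate, variance, weights

# Four illustrative column CO2 values (ppm) at the corners of a 10 x 10 square.
xy = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
values = [410.0, 412.0, 411.0, 413.0]
center_estimate, center_variance, center_weights = ordinary_kriging(xy, values, (5.0, 5.0))
```

Because the target is equidistant from all four corners, the weights come out equal and the estimate is the plain average. With unevenly spaced soundings, closer and less redundant points receive larger weights.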

Data fusion is an information processing technique where computers analyze and synthesize observational data from multiple sensors collected over time under specific guidelines to perform decision-making or assessment tasks. It typically targets a specific observation area and integrates information from sources such as satellite carbon remote sensing, meteorological data, and Digital Elevation Models (DEMs) to address missing spatiotemporal data.

In this section, interpolation and data fusion methods will be explored in depth to enhance the spatial resolution of CO2 data, fill observational gaps, and improve data accuracy. This approach will provide more comprehensive information and support for CO2 concentration research and applications.

3.1. Data Reconstruction Based on Interpolation

Interpolation involves estimating values between several discrete data points using specific methods. These data points are often collected over a defined time or spatial range, though data may not be available at every point within that range. The goal of interpolation is to fill in these gaps to create a more continuous and complete dataset. It is important to note that interpolation methods generate estimates for unknown data points rather than actual measurements. Consequently, the interpolation error should be evaluated for each case, and the uncertainty of the data must be considered. Table 5 compares different interpolation techniques to provide a comprehensive understanding. In different interpolation methods, the local trend, the information of coordinates, and the stratification have specific meanings. The local trend refers to systematic changes or trends within a local area of data, which can be linear or nonlinear and are usually caused by local factors rather than the overall trend of the data. The information of coordinates refers to the specific spatial locations of data points, which are used to determine the spatial relationships and distances between data points, and are fundamental for calculating spatial correlation and performing interpolation. Stratification involves dividing data into multiple subsets or layers based on certain criteria, such as geographic, geological, or statistical characteristics. This method is particularly useful for areas with significantly different characteristics, as the data within each layer may have distinct statistical properties and spatial variability structures. During interpolation, stratification can enhance the model’s accuracy and reliability by ensuring the data within each layer are more homogeneous and consistent.
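Since interpolated values are estimates rather than measurements, one common way to evaluate the interpolation error is leave-one-out cross-validation: each known point is withheld in turn and predicted from the rest. The sketch below uses inverse distance weighting as a simple stand-in interpolator. This is an assumption for brevity, and any interpolator with the same call signature, including a Kriging routine, could be passed in instead. The function names and sample values are illustrative.

```python
from math import hypot, sqrt

def idw(points, target, power=2.0):
    """Inverse-distance-weighted estimate at `target` from (x, y, value) points."""
    numerator = denominator = 0.0
    for x, y, value in points:
        distance = hypot(x - target[0], y - target[1])
        if distance == 0.0:
            return value          # target coincides with a known point
        weight = distance ** -power
        numerator += weight * value
        denominator += weight
    return numerator / denominator

def leave_one_out_rmse(points, interpolate=idw):
    """Withhold each point in turn, predict it from the rest, and return the RMSE."""
    errors = []
    for i, (x, y, value) in enumerate(points):
        rest = points[:i] + points[i + 1:]
        errors.append(interpolate(rest, (x, y)) - value)
    return sqrt(sum(e * e for e in errors) / len(errors))

# Illustrative values only.
sample = [(0.0, 0.0, 410.0), (1.0, 0.0, 412.0), (0.0, 1.0, 411.0), (1.0, 1.0, 413.0)]
rmse = leave_one_out_rmse(sample)
```

For spatiotemporal methods the same idea applies, with points withheld in space, in time, or both.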

Table 5.

Comparison of different Kriging interpolation methods.

Kriging interpolation methods include several variants for estimating attribute values at unknown locations. Simple Kriging (SK) assumes that the attribute values follow a normal distribution with a constant mean. Ordinary Kriging (OK) takes into account local trends and semivariance functions. Universal Kriging (UK) is suitable for cases with external trends, that is, systematic changes in the data that are caused by known external factors rather than random spatial variation and that can be described by one or more known external variables. Simple Co-Kriging (SCK) and Ordinary Co-Kriging (OCK) are suitable for multi-attribute interpolation, considering covariance and local spatial trends, respectively. Probability Kriging (PCK) considers the probability distribution of the attribute values. Simple Collocated Co-Kriging (SCCK) and Ordinary Collocated Co-Kriging (OCCK) are also used in multi-attribute contexts and consider correlations and local trends between attributes. The appropriate Kriging method depends on the data characteristics and requirements, and a suitable choice can provide accurate and reliable spatial interpolation results.
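The difference between the first three variants can be made concrete with their standard textbook estimators. The notation is introduced here for illustration and does not appear in the original: $z(x_i)$ are the $n$ known values, $x_0$ is the target location, $\lambda_i$ are the Kriging weights, $m$ is a known constant mean, and $f_k$ are known trend functions.

Simple Kriging uses the known mean directly:

$$\hat{z}_{\mathrm{SK}}(x_0) = m + \sum_{i=1}^{n} \lambda_i \left[ z(x_i) - m \right]$$

Ordinary Kriging treats the mean as unknown but locally constant and removes it through a constraint on the weights:

$$\hat{z}_{\mathrm{OK}}(x_0) = \sum_{i=1}^{n} \lambda_i \, z(x_i), \qquad \sum_{i=1}^{n} \lambda_i = 1$$

Universal Kriging keeps the same linear estimator but models the mean as a trend $\sum_{k} \beta_k f_k(x)$ with unknown coefficients $\beta_k$, which leads to one constraint per trend function:

$$\sum_{i=1}^{n} \lambda_i \, f_k(x_i) = f_k(x_0) \quad \text{for every } k$$

With a single constant trend function $f_1(x) = 1$, the Universal Kriging constraint reduces to the Ordinary Kriging constraint.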

Kriging interpolation is widely used in satellite CO2 data reconstruction []. Interpolation methods based on discrete data points can be used to fill in missing or blank parts of satellite observations to obtain a complete picture of the CO2 distribution []. These methods use statistical properties and spatial correlations between known observations to infer CO2 concentrations at unobserved locations.

In order to accurately reflect the spatial and temporal distributions of CO2, both temporal and spatial interpolation are essential. However, capturing spatial and temporal variability in satellite data reconstruction is challenging using only spatial interpolation methods. In contrast, spatiotemporal interpolation methods can capture spatiotemporal variability more accurately, and the model’s accuracy can be assessed by cross-validation in space and time. Next, spatial interpolation and combined spatiotemporal interpolation methods will be introduced.

3.1.1. Spatially Interpolated Data Reconstruction

Traditional statistical methods have widely used spatial Kriging interpolation to generate data products. However, interpolation methods that use only spatial correlation do not consider the time-dependent structure of CO2 data. As a result, temporal variations in CO2, including annual growth and seasonal cycles, are left out and still need to be adequately considered []. Table 6 lists the results obtained by researchers in recent years in satellite data reconstruction using spatial interpolation methods.

Table 6.

Papers related to spatial interpolation.

In 2008, Tomosada et al. [] used a spatial statistical approach to obtain CO2 column concentrations from GOSAT data. In 2012, Hammerling et al. [] used a spatial interpolation approach to generate maps at high spatial and temporal resolutions without relying on atmospheric transport models or estimates of CO2 uptake and emissions. In 2014, Jing et al. [] combined GOSAT and SCIAMACHY measurements and proposed a gap-filling method that models the spatial correlation structure of the CO2 concentration. The method addresses the limited CO2 data available from a single satellite due to cloud effects and characterizes the spatial and temporal distributions of atmospheric CO2 concentration more accurately. In 2020, Bhattacharjee et al. [] proposed a Kriging-based method that interpolates the CO2 column concentration separately with two auxiliary inputs, the CO2 source points in the emission inventory and the land use/cover types, and then combines the two interpolation results to obtain a more accurate prediction of the CO2 column concentration. The researchers also demonstrated that the method can be applied to predict the CO2 column concentration in other regions.

Although spatial interpolation can be used to reconstruct satellite CO2 data and address the problem of insufficient CO2 data, it has some limitations. First, interpolation methods estimate values between known measurement points and may introduce errors, especially when data are sparse or when extrapolation beyond the range of measurements is required. Second, purely spatial interpolation does not accurately capture the true spatial and temporal variability because it does not account for temporal autocorrelation in the data. This can lead to biased or noisy maps, particularly where measurement points are sparse, resulting in a loss of information. In contrast, spatiotemporal interpolation methods can better address these issues.

3.1.2. Spatiotemporal Interpolation Data Reconstruction

Spatiotemporal interpolation methods can capture spatiotemporal variability more accurately, and their accuracy can be assessed by cross-validation in space and time. Additionally, spatiotemporal interpolation methods can use multiple data sources, including satellite observations, ground-based observations, and model simulations, to enhance spatiotemporal resolution and accuracy. However, these methods primarily focus on estimating values between existing data points, whereas data fusion methods aim to integrate data from different sources to create a more comprehensive and consistent representation. Data fusion typically involves calibrating and merging data sources to reduce systematic errors and improve the overall reliability of the results, potentially providing higher precision and consistency when handling different types of data. Table 7 lists representative articles from recent years on satellite CO2 data reconstruction using spatiotemporal interpolation methods.

Table 7.

Papers related to spatiotemporal interpolation.

In 2015, Tadić et al. [] proposed a flexible moving-window Kriging method, which can serve as an effective technique for imputing missing data and reconstructing datasets. This method was demonstrated to generate high spatial and temporal resolution maps using satellite data, and its feasibility was validated using CO2 data from the GOSAT satellite and the GOME-2 instrument. In 2017, Zeng et al. [] employed spatiotemporal geostatistical methods, effectively utilizing the spatial and temporal correlations between observational data, to establish a global land-based mapping dataset of total CO2 amounts from satellite measurements. They conducted cross-validation and verification at the TCCON sites. The results revealed a correlation coefficient of 0.94 between the dataset and observational values, with an overall bias of 0.01 ppmv.

Because the spatial and temporal resolution of CO2 concentration data is limited, more data sources are needed. If spatiotemporal interpolation is performed with few observational data sources, the uncertainty of the interpolated data increases significantly. Finding suitable data sources with high-quality, long time series of CO2 concentrations is therefore another critical task for the future. To create the longest possible time series and to improve accuracy by using multiple measurements where possible, Zhonghua He and colleagues [] developed an accuracy-weighted spatiotemporal Kriging method for integrating and mapping CO2 observed by multiple satellites. The method fills the data gaps of the individual satellites and generates continuous global data at 1° × 1° resolution every eight days from 2003 to 2016.
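The idea of weighting observations by their accuracy can be illustrated with inverse-variance weighting of collocated values from several satellites. This is a deliberately simplified stand-in and not the accuracy-weighted spatiotemporal Kriging method cited above. The function name, the `(value, sigma)` input layout, and the numbers are assumptions for illustration.

```python
def inverse_variance_merge(estimates):
    """Merge collocated estimates, trusting precise ones more.

    estimates: list of (value_ppm, sigma_ppm) pairs, one per satellite.
    Returns (merged_value, merged_sigma).
    """
    weights = [1.0 / (sigma * sigma) for _, sigma in estimates]
    total = sum(weights)
    merged = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return merged, (1.0 / total) ** 0.5

# Two hypothetical satellites observing the same cell: the first is twice as precise.
merged_value, merged_sigma = inverse_variance_merge([(410.0, 1.0), (412.0, 2.0)])
```

The merged value lies closer to the more precise observation, and the merged uncertainty is smaller than either input uncertainty, assuming independent errors.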

To better reconstruct the satellite CO2 data and to observe the spatiotemporal variations of CO2, an extended gstat package was proposed. It reuses the spacetime classes to estimate spatiotemporal covariance/semivariance models and performs spatiotemporal interpolation. However, it is challenging to select reliable and reasonable semivariance models and their parameters in spatiotemporal Kriging interpolation. Inappropriate choices of models and parameters may lead to significantly inaccurate and inefficient interpolation results. Ma et al. [] investigated the multi-fractal scaling behavior of the time series of atmospheric CO2 concentration in China from 2010 to 2018 and its spatial distribution. They gained insights into the dynamical mechanisms of the CO2 concentration changes and proposed an improved spatiotemporal interpolation method (spatiotemporal thin-plate spline interpolation) for the atmospheric CO2 concentration. In 2023, Sheng et al. [] generated a global terrestrial dataset with a grid resolution of 1° and a temporal resolution of 3 days, covering the period from 2009 to 2020, based on the spatiotemporal geostatistical method.
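Before a spatiotemporal semivariance model can be selected and fitted, an empirical spatiotemporal semivariogram is usually computed from the data. The sketch below shows that step in plain Python. It is not the gstat implementation. The observation layout `(x, y, t, value)`, the bin widths, and the sample values are assumptions for illustration.

```python
from collections import defaultdict
from math import hypot

def st_semivariogram(obs, space_bin=1.0, time_bin=1.0):
    """Empirical spatiotemporal semivariogram.

    obs: list of (x, y, t, value) tuples.
    Returns {(space_bin_index, time_bin_index): gamma}, where gamma is half the
    mean squared difference over all pairs falling in that lag bin.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for i in range(len(obs)):
        xi, yi, ti, vi = obs[i]
        for j in range(i + 1, len(obs)):
            xj, yj, tj, vj = obs[j]
            key = (int(hypot(xi - xj, yi - yj) // space_bin),
                   int(abs(ti - tj) // time_bin))
            sums[key] += (vi - vj) ** 2
            counts[key] += 1
    return {key: sums[key] / (2 * counts[key]) for key in sums}

# Two locations observed at two times (illustrative values).
obs = [
    (0.0, 0.0, 0.0, 410.0),
    (1.0, 0.0, 0.0, 411.0),
    (0.0, 0.0, 1.0, 412.0),
    (1.0, 0.0, 1.0, 413.0),
]
gamma = st_semivariogram(obs)
```

A parametric model is then fitted to these binned values, and the quality of that fit is what drives the sensitivity to model and parameter choice noted above.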

In summary, although interpolation methods can, to a certain extent, solve some of the missing data problems and maintain the spatial continuity of the data, some interpolation methods rely heavily on the number and quality of samples, meaning that they primarily depend on the distribution and accuracy of existing data points to estimate values at unmeasured locations. This can lead to several issues: firstly, if the number of samples is insufficient, the interpolation results may not adequately reflect the true spatial variability of the data, thereby reducing estimation accuracy. Secondly, if the sample quality is poor, these errors may be propagated into the interpolation results, further affecting their reliability. Additionally, these methods sometimes neglect the spatial characteristics of the geographic data, such as topographic variations or environmental conditions, which can lead to results that do not accurately represent the real situation, causing discrepancies from the actual data. In addition, due to the limitations of the data itself, interpolation reconstruction often fails to improve the data accuracy significantly. Therefore, when applying interpolation methods, it is necessary to carefully choose appropriate variants and parameters and make adjustments and corrections according to the actual situation.

3.2. Data Reconstruction Based on Data Fusion

Data fusion combines data from different data sources, sensors, or observation methods to obtain more comprehensive, accurate, and reliable information [].

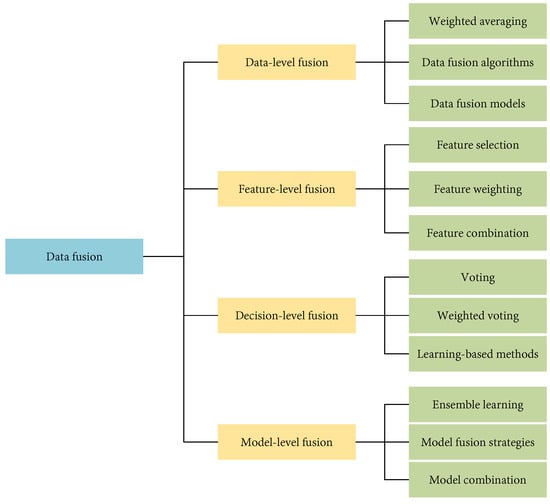

Data fusion methods and techniques are diverse. Their advantage lies in their ability to fully use information from multiple data sources, thereby increasing the accuracy and confidence of the data while reducing uncertainty. Data fusion can also fill gaps and compensate for deficiencies while providing more comprehensive spatial and temporal coverage. Figure 7 shows the categorization of data fusion methods. In Figure 7, voting aggregates predictions from multiple models by selecting the most frequent outcome, thereby enhancing accuracy through consensus. Weighted voting refines this approach by assigning different weights to each model’s predictions based on their accuracy, thus giving more influence to the more reliable models [].

Figure 7.

Classification of data fusion methods.
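The voting and weighted voting schemes named in Figure 7 can be sketched as follows. The labels and weights are hypothetical. In practice the weights would come from each model's measured accuracy on validation data.

```python
from collections import defaultdict

def weighted_vote(predictions, weights=None):
    """Return the label with the largest total weight.

    predictions: one label per model.
    weights: one non-negative weight per model; equal weights (plain majority
    voting) are used when omitted.
    """
    if weights is None:
        weights = [1.0] * len(predictions)
    scores = defaultdict(float)
    for label, weight in zip(predictions, weights):
        scores[label] += weight
    return max(scores, key=scores.get)

predictions = ["cloudy", "clear", "clear"]
plain = weighted_vote(predictions)                      # majority wins
weighted = weighted_vote(predictions, [0.9, 0.3, 0.4])  # the reliable model outweighs the other two
```

With equal weights the two models that agree win. Once the first model is given a weight larger than the other two combined, its prediction prevails.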

Fusion methods are categorized into data-level fusion, feature-level fusion, decision-level fusion, and model-level fusion. Data-level fusion enhances the coverage and credibility of data by combining raw data from different sources.

Feature-level fusion extracts features from different data sources and improves the expressiveness of the data. Extracting features means identifying and extracting variables or information that are useful for a specific task. For example, in image data, this might include extracting edge, texture, or shape features; in time series data, it could involve extracting trends, periodicities, or anomalies. The process of feature extraction typically includes steps such as data preprocessing, feature selection, and feature calculation. Enhancing the expressiveness of the data means integrating and optimizing features extracted from different data sources to make the data more accurate in representing and reflecting the actual situation. This can be achieved by increasing the detail and richness of the data, enabling subsequent analyses or models to better capture the underlying patterns and complexities of the data.

Decision-level fusion integrates the decision results from different data sources to improve the credibility of classification and decision-making. Model-level fusion integrates outputs from different models to enhance overall model performance. First, different models may excel at handling specific types of data or tasks, and by combining their outputs, we can leverage each model’s strengths and address the weaknesses of individual models, improving overall prediction accuracy and robustness. Second, a single model might overfit the training data, leading to poor performance on new data. Model fusion helps mitigate this risk by incorporating diverse learning strategies and perspectives, thus enhancing generalization. Additionally, different models may react differently to noise and outliers in the data; combining multiple models can reduce the errors introduced by any single model and increase overall result stability. Finally, various models might use different feature sets, algorithms, or training methods, and model fusion can effectively utilize these diverse information sources to better handle complex data. In summary, model-level fusion combines the strengths of multiple models to improve prediction accuracy and overall model performance. Choosing the appropriate fusion method depends on the problem requirements and the nature of the data.

In satellite CO2 reconstruction, heavy clouds (or aerosols) and the limitations of satellite orbits leave large gaps in satellite retrievals, which limits the study of global CO2 sources and sinks. Data fusion is therefore a promising approach to satellite CO2 reconstruction. It can improve spatial resolution, extend temporal and spatial coverage, and increase data precision and accuracy. By integrating information from different data sources, data fusion can generate more detailed, continuous, and comprehensive maps of CO2 distribution, contributing to a deeper understanding of the carbon cycle.

Data fusion can be performed based on statistics, modeling, and learning algorithms. The choice of the specific method depends on the nature of the data, the purpose of the fusion, and the application requirements. These three approaches are described below to help understand satellite CO2 data reconstruction better.

3.2.1. Data Fusion Method Based on Statistics

Statistics-based data fusion is a commonly used technique that integrates information from multiple data sources by applying statistical principles and methods to obtain more accurate and reliable estimates. Table 8 shows the results achieved by researchers in recent years in satellite CO2 data reconstruction using statistics-based data fusion methods.

Table 8.

Data fusion method based on statistics.

In 2013, Reuter et al. [] applied an ensemble median merging algorithm (EMMA) and used grid-weighted averaging to fuse CO2 data, resulting in a new dataset. In 2014, Jing et al. [] fused measurements from GOSAT and SCIAMACHY and proposed a gap-filling method based on the spatial correlation structure of CO2 concentrations. This method enabled the creation of global land CO2 distribution maps with high spatiotemporal resolution. In the same year, Hai et al. [] employed dimension-reduced Kalman smoothing and a spatial random effects model to merge CO2 observational data from the GOSAT, AIRS, and OCO-2 satellites. In 2015, Zhou et al. [] introduced an improved fixed-rank Kriging method based on GOSAT and AIRS data. The results demonstrated a better correlation between the fused dataset and meteorological analysis data. In 2017, Zhao et al. [] introduced a method called high accuracy surface modeling data fusion (HASM-DF). This approach used the CO2 concentration outputs of a chemical transport model as a driving field and ground-based CO2 concentration observations as accuracy control conditions to merge the two types of CO2 data. In 2023, Meng et al. [] used retrievals from GOSAT and OCO-2 to create a global, spatiotemporally continuous dataset called “Mapping-XCO2”. Mapping-XCO2 revealed spatiotemporal characteristics of global XCO2 similar to those observed in the CarbonTracker model data.

Statistics-based data fusion methods can derive spatial and temporal distributions of XCO2. These distributions are more accurate, have higher resolution, and span longer periods than results generated from a single satellite dataset alone.
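The general idea of merging several gridded products cell by cell can be sketched as follows. This is only an illustration in the spirit of median-based merging, not the EMMA algorithm or any other published method: it assumes the products are already on a common grid, uses `None` to mark cells without a retrieval, and all values are hypothetical.

```python
from statistics import median


def fuse_grids(grids):
    """Cell-wise median across gridded products that share one grid.

    grids : list of 2D lists with identical shape; None marks a data gap.
    Returns a 2D list in which a cell is None only if every product is missing.
    """
    n_rows, n_cols = len(grids[0]), len(grids[0][0])
    fused = [[None] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        for c in range(n_cols):
            values = [g[r][c] for g in grids if g[r][c] is not None]
            if values:
                fused[r][c] = median(values)
    return fused


# Hypothetical 2 x 2 XCO2 grids (ppm) from three products with different gaps.
product_a = [[400.0, None], [402.0, 401.0]]
product_b = [[401.0, 403.0], [None, 405.0]]
product_c = [[405.0, None], [None, 402.0]]

fused = fuse_grids([product_a, product_b, product_c])
print(fused)
```

Each input grid has at least one gap, while the fused grid is complete, which is the coverage gain that motivates fusion. The median also limits the influence of a single outlying product in cells where several products overlap.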

3.2.2. Data Fusion Method Based on Model Simulation

Model-based simulation is a common approach to data fusion, mainly using chemical transport models (CTMs) to simulate atmospheric CO2. CTMs can infer the distribution of CO2 concentrations and fluxes in various regions of the globe and, at the same time, correct the simulation results using observational data to improve their accuracy. Commonly used CTMs include CarbonTracker and GEOS-Chem. They obtain optimized carbon flux and CO2 concentration distributions in various regions of the globe by assimilating data such as bottle-sampled CO2, continuous CO2 series from towers, and XCO2 detected by satellites. These two commonly used chemical transport models are described in detail next.

(1) GEOS-Chem

GEOS-Chem is a global chemical transport model dedicated to simulating chemical reactions and the transport of substances in the atmosphere []. The model employs a three-dimensional grid to represent atmospheric changes in space and time. It accounts for many critical atmospheric processes, including radiation, convection, turbulence, wet and dry deposition, and chemical reactions. GEOS-Chem is widely applied in atmospheric composition modeling, generating spatially and temporally continuous simulations of gases such as CO2, CH4, CO, and isoprene. It has been extensively used to evaluate satellite-detected data as well as ground-based observations.

GEOS-Chem is a 3D Eulerian transport model with a spatial resolution of 4° × 5° and 47 vertical levels, extending from the surface to a pressure level of 0.01 hPa. During assimilation, the model uses a four-dimensional variational (4D-Var) approach, iterating the model’s equations to minimize the cost function J:

$$J(c) = \frac{1}{2}\sum_{i=1}^{N}\left(y_i - H(c)\right)^{T} R_i^{-1}\left(y_i - H(c)\right) + \frac{1}{2}\left(c - c_b\right)^{T} B^{-1}\left(c - c_b\right)$$

where N is the number of time steps of the observation data, c is the optimized model state, $y_i$ is the observation data at the ith time step, $H$ is the observation operator, which transfers the model state c to the observation space, $R_i$ is the observation error covariance matrix at the ith time step, $c_b$ is the background estimation, and $B$ is the background error covariance matrix.
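To make the structure of such a cost function concrete, the sketch below evaluates the standard 4D-Var form: a background term that penalizes departures of the state from the background estimate, plus one observation term per time step that penalizes the mismatch between observations and the state mapped into observation space. It is not GEOS-Chem code. It assumes diagonal error covariance matrices (so only variances are needed), and the toy observation operator, the function names, and all numbers are hypothetical.

```python
def cost_function(c, c_b, b_var, observations, obs_operator, r_vars):
    """Evaluate a 4D-Var style cost J(c) with diagonal error covariances.

    c            : candidate model state (list of floats)
    c_b          : background estimate of the state
    b_var        : background error variances (diagonal of B)
    observations : list of observation vectors, one per time step
    obs_operator : function (state, time index) -> simulated observations
    r_vars       : observation error variances per time step (diagonal of R_i)
    """
    # Background term: 0.5 * (c - c_b)^T B^-1 (c - c_b)
    j_background = 0.5 * sum(
        (x - xb) ** 2 / v for x, xb, v in zip(c, c_b, b_var)
    )
    # Observation term: 0.5 * sum_i (y_i - H(c))^T R_i^-1 (y_i - H(c))
    j_obs = 0.0
    for i, (y_i, r_i) in enumerate(zip(observations, r_vars)):
        simulated = obs_operator(c, i)
        j_obs += 0.5 * sum(
            (y - h) ** 2 / v for y, h, v in zip(y_i, simulated, r_i)
        )
    return j_background + j_obs


def column_mean_operator(state, time_index):
    """Toy observation operator: one observation equal to the state mean."""
    return [sum(state) / len(state)]


# Hypothetical two-element state (ppm) observed at two time steps.
state = [410.0, 412.0]
background = [409.0, 412.0]
j_value = cost_function(
    state,
    background,
    b_var=[1.0, 4.0],
    observations=[[411.5], [410.0]],
    obs_operator=column_mean_operator,
    r_vars=[[0.25], [1.0]],
)
print(j_value)
```

A real assimilation system would minimize this function iteratively over the state, using the adjoint of the transport model to obtain the gradient; the sketch only shows how a single evaluation is assembled.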

Before the reconstruction of satellite CO2 data, it is critical to assess the applicability of chemical transport models in CO2 simulations through ground-based observations of atmospheric CO2 and satellite-measured XCO2 []. Several studies have investigated the contribution of terrestrial ecosystems to atmospheric CO2 concentrations through chemical model simulations. In 2011, Feng et al. [] evaluated the accuracy of a global chemical transport model for CO2 simulations from 2003 to 2006 using ground-based observations, aircraft measurements, and AIRS satellite data. In 2013, Lei et al. [] compared the spatial and temporal variations in atmospheric CO2 between June 2009 and May 2010 using XCO2 from GOSAT and GEOS-Chem, and analyzed the CO2 differences between the land regions of China and the United States to assess the plausibility and uncertainty of the satellite observations and model simulations. In 2017, Li et al. [] evaluated regional chemical model simulations of CO2 concentrations in 2012 using GOSAT observations and ground-based measurements.

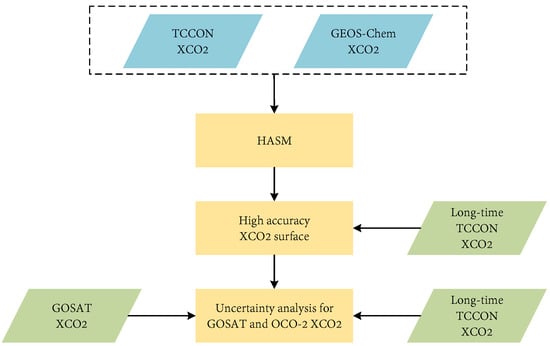

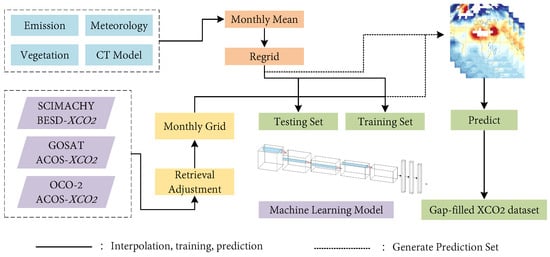

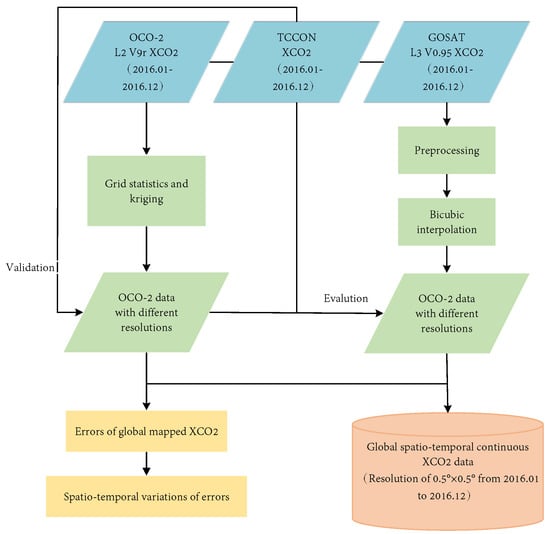

Due to limitations in data availability and precision, the spatiotemporal patterns of XCO2 have not been well characterized at the regional scale. Researchers have utilized XCO2 data from GOSAT to investigate the spatiotemporal patterns of XCO2 in the Chinese region. They employed a high-resolution nested grid GEOS-Chem model to construct XCO2 []. In 2017, Zhang et al. [] compared the results of XCO2 derived from the fusion of TCCON measurements with the GEOS-Chem model to the satellite observations. They found that the global OCO-2 XCO2 estimates were closer to the HASM XCO2. The primary methodological workflow can be seen in Figure 8.

Figure 8.

Flowchart for obtaining high-precision XCO2 and evaluating the effectiveness of GOSAT and OCO-2 satellite observations (Zhang et al. []).

GEOS-Chem is essential in atmospheric chemistry, air quality research, climate change, and pollution management. However, CTM simulations still carry considerable uncertainty, primarily due to the limited knowledge of a priori fluxes, errors in the simulated atmospheric transport processes, and inaccuracies in the observational CO2 data being assimilated, particularly satellite-acquired data.

Errors in the representation of atmospheric transport in chemical transport models have long been recognized as a major source of uncertainty in atmospheric CO2 inversion analyses. Improving transport models is critical for enhancing the accuracy of CO2 inversions. Current efforts to improve model transport focus on two key areas: refining the parameterization of unresolved transport, particularly in coarse offline CTMs, and increasing the spatial and temporal resolutions of model simulations to better capture atmospheric transport processes. As transport models evolve, it will be crucial to regularly evaluate their ability to accurately represent large-scale atmospheric dynamics [].

(2) CarbonTracker

CarbonTracker is a CO2 measurement and modeling system developed by NOAA to track CO2 sources and sinks globally []. The CarbonTracker model typically uses the Transport Model 5 (TM5) chemical transport model to simulate the atmospheric transport of CO2 and other trace gases. It integrates ground-based observations, airborne observations, satellite observations, and model simulations to provide high-resolution estimates of CO2 concentration and spatial distribution through data assimilation techniques. The current release is CarbonTracker 2022, which covers the period from January 2000 to December 2020 with global surface–atmosphere CO2 flux estimates.

In CO2 flux inversion, errors induced by atmospheric transport models can contribute to the uncertainty in inferring surface fluxes, in addition to biases in retrieval. Furthermore, model grid cells are often relatively coarse and may have different representational capabilities compared to satellite observations. Therefore, analyzing the differences between the simulated CO2 and satellite-derived results and assessing the uncertainty in model transport outcomes is crucial. To better understand CO2 trends in the Asian region, Mustafa et al. conducted a comparison in 2020 between XCO2 obtained from CarbonTracker and XCO2 obtained from GOSAT and OCO-2 satellite observations []. The results revealed good consistency between CarbonTracker and the two satellite datasets, allowing the use of any of these datasets to understand CO2 in the context of carbon budgets, climate change, and air quality.
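A comparison of this kind usually reduces to a few agreement statistics computed over co-located pairs of model and satellite values. The sketch below computes mean bias, root-mean-square error, and the Pearson correlation coefficient. It assumes the two series are already matched in space and time; the function name `compare_xco2` and the sample values are hypothetical and are not taken from the cited study.

```python
import math


def compare_xco2(model, satellite):
    """Agreement statistics for co-located model and satellite XCO2 (ppm).

    Returns a dict with mean bias (model minus satellite), RMSE, and the
    Pearson correlation coefficient r.
    """
    n = len(model)
    diffs = [m - s for m, s in zip(model, satellite)]
    bias = sum(diffs) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)

    mean_m = sum(model) / n
    mean_s = sum(satellite) / n
    cov = sum((m - mean_m) * (s - mean_s) for m, s in zip(model, satellite))
    var_m = sum((m - mean_m) ** 2 for m in model)
    var_s = sum((s - mean_s) ** 2 for s in satellite)
    r = cov / math.sqrt(var_m * var_s)
    return {"bias": bias, "rmse": rmse, "r": r}


# Hypothetical co-located values (ppm).
model_xco2 = [410.0, 411.0, 412.0, 413.0]
satellite_xco2 = [409.0, 411.0, 411.0, 413.0]
stats = compare_xco2(model_xco2, satellite_xco2)
print(stats)
```

Because model grid cells are coarser than satellite footprints, the satellite soundings would normally be averaged onto the model grid before such statistics are computed.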

CarbonTracker reconstructs atmospheric carbon emission and absorption processes by integrating multiple observations and simulation models. Satellite CO2 data are crucial in this process, as they provide high temporal and spatial resolution observations that can compensate for the lack of ground-based observations, monitor carbon emission sources and sinks, and validate and improve models. However, due to the coarse spatial resolution, data extracted by CarbonTracker may not capture the spatial heterogeneity of CO2.

In the field of carbon dioxide reconstruction, in addition to commonly used physical models like GEOS-Chem and CarbonTracker, many other models are widely applied. Numerical Weather Prediction (NWP) models, such as WRF, provide high-precision meteorological background data and can be coupled with Chemical Transport Models (CTMs) like CAMS (Copernicus Atmosphere Monitoring Service) to enhance the simulation of CO2 transport [,]. Radiative Transfer Models (RTMs), such as MODTRAN and SCIATRAN, simulate the propagation of light through the atmosphere, providing a crucial foundation for satellite remote sensing retrieval []. Additionally, General Circulation Models (GCMs), like the GISS model, aim to simulate atmospheric circulation in the global climate system and are often combined with CTMs for long-term climate analysis []. By integrating observational data across varying temporal and spatial scales, these models enable the accurate reconstruction of atmospheric CO2 distribution and trends, providing essential scientific support for addressing climate change.

3.2.3. Data Fusion Method Based on Learning Algorithms

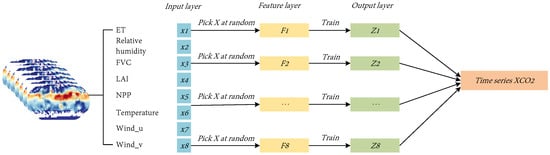

Data fusion methods based on learning algorithms are an emerging technology. They use neural networks, ensemble learning, convolutional neural networks, generative adversarial networks, and transfer learning to learn and fuse information from multiple data sources automatically []. Learning algorithms are widely used in action recognition [], image enhancement [,], and semantic segmentation [,]. These methods reveal complex relationships between data by training on a large amount of data, extracting feature representations, and merging information from different data sources to obtain more accurate and comprehensive data fusion results [].

Machine learning methods utilize existing observations and associated features as inputs, and models are trained to learn data relationships to predict and fill in missing data [,]. Deep learning methods, on the other hand, utilize deep neural network models for satellite data reconstruction. These deep learning models have multiple hidden layers to learn more advanced feature representations from the data [,,]. The success of machine learning and deep learning methods in satellite CO2 data reconstruction depends on adequate training data and appropriate feature engineering.
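The basic pattern of learning-based gap filling, training on cells where a retrieval exists and predicting where it is missing, can be shown with the simplest possible learner. The sketch below fits an ordinary least-squares line between one auxiliary feature and the observed values and uses it to fill the gaps. It is a deliberately minimal stand-in for the multi-feature machine learning and deep learning models discussed here; the single feature, the function names, and all values are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def fill_gaps(feature, target):
    """Fill None entries of target using a line fitted on the observed pairs.

    feature : auxiliary variable available everywhere (list of floats)
    target  : satellite values with None where no retrieval exists
    """
    pairs = [(f, t) for f, t in zip(feature, target) if t is not None]
    slope, intercept = fit_line([p[0] for p in pairs], [p[1] for p in pairs])
    return [
        t if t is not None else slope * f + intercept
        for f, t in zip(feature, target)
    ]


# Hypothetical auxiliary feature and XCO2 retrievals (ppm) with two gaps.
feature = [1.0, 2.0, 3.0, 4.0, 5.0]
xco2 = [410.0, 412.0, None, 416.0, None]
filled = fill_gaps(feature, xco2)
print(filled)
```

Observed values are left untouched and only the gaps are predicted. Replacing `fit_line` with a nonlinear learner trained on many features gives the general form of the machine learning approaches, at the cost of needing far more training data.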

With the assistance of multi-source data, even simple multiple linear regression models can obtain good fitting results. However, because CO2 transport between terrestrial ecosystems, marine ecosystems, and the atmosphere is complex, linear models often lack sufficient fitting ability. To overcome this limitation, recent studies have applied machine learning to derive continuous distributions and to reconstruct satellite CO2 data. Table 9 summarizes the results of data fusion methods based on learning algorithms achieved by researchers in satellite CO2 data reconstruction in recent years.

Table 9.

Data fusion method based on learning algorithms.