Ghost Removal from Forward-Scan Sonar Views near the Sea Surface for Image Enhancement and 3-D Object Modeling

Abstract

1. Introduction

2. Materials and Methods

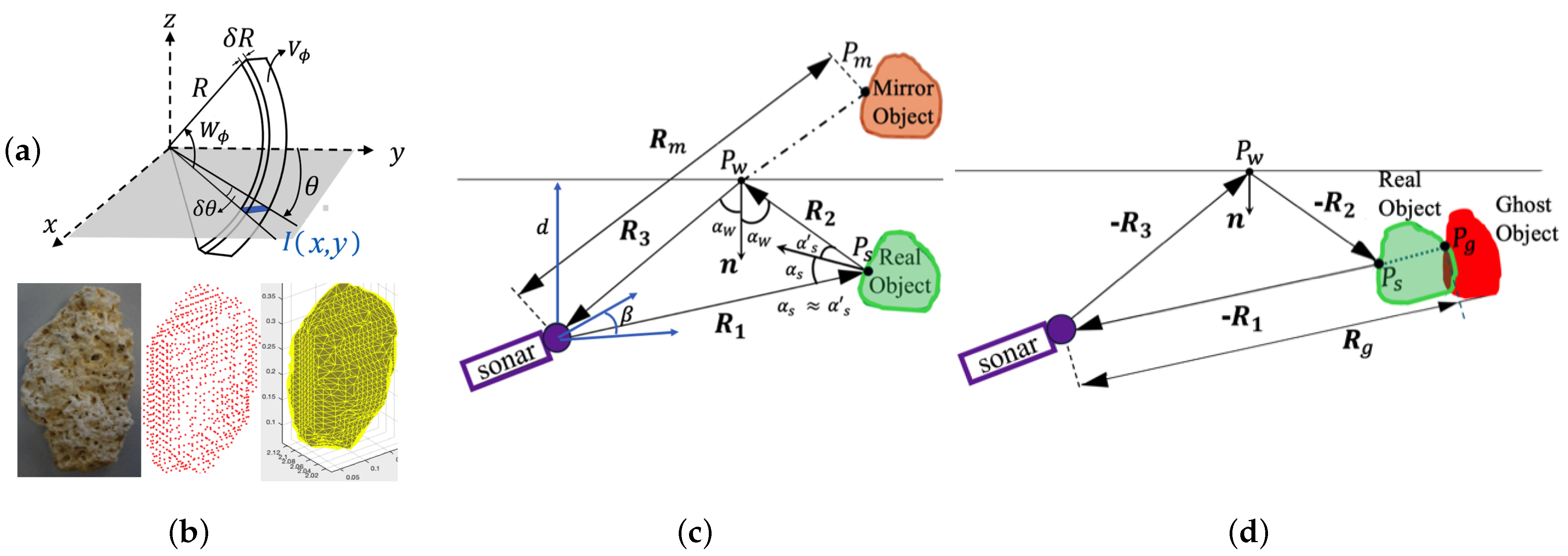

2.1. Notation, Coordinate Transformation and FSS Image Formation

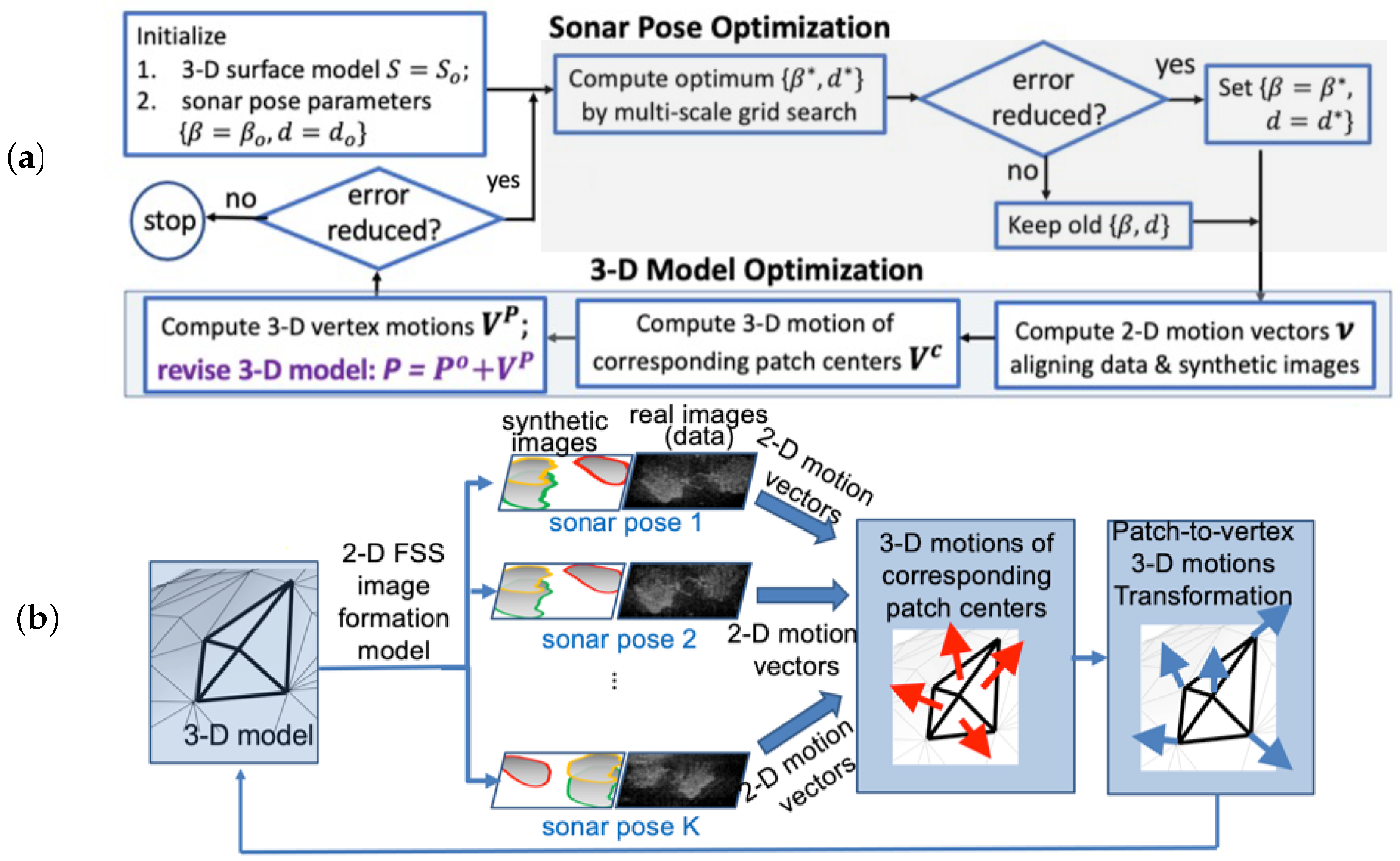

2.2. 3-D Object Modeling

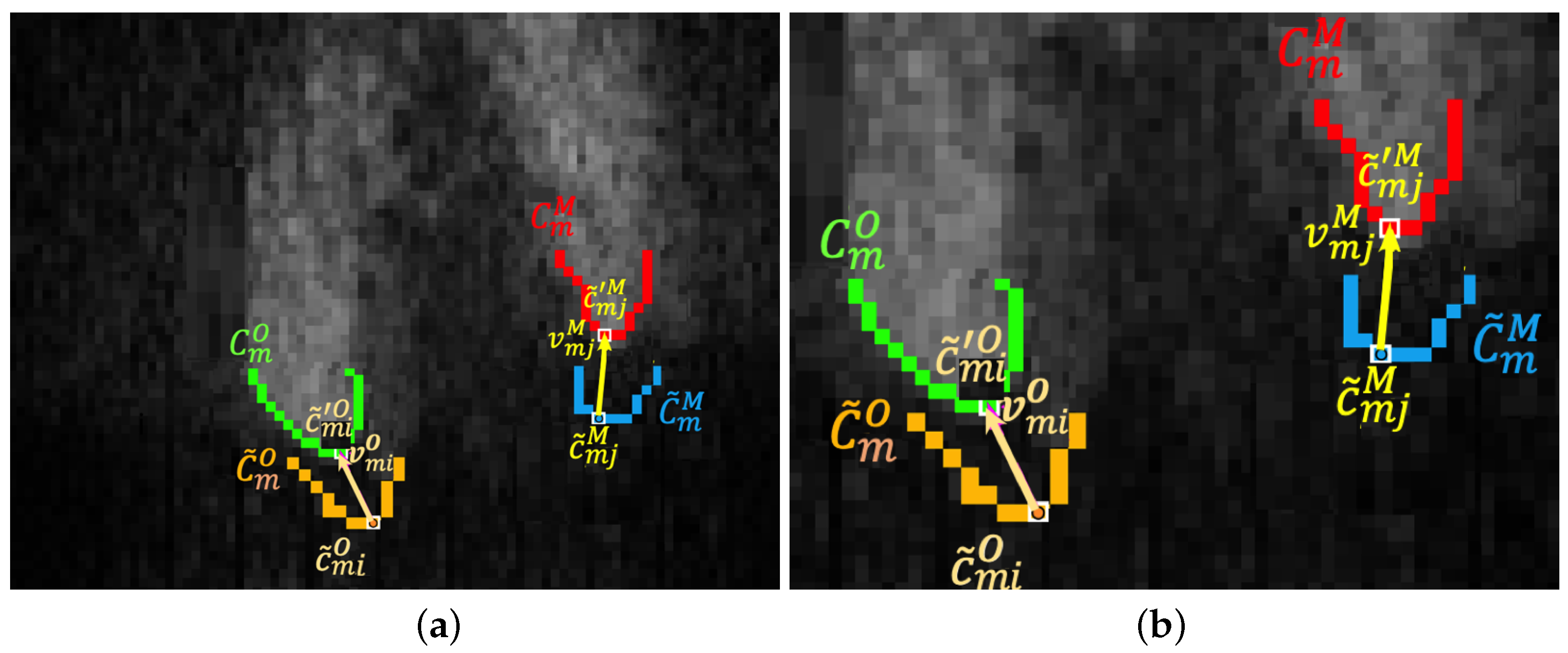

2.3. Unified Optimization Framework

2.4. Sonar Pose Optimization

2.5. Error Metric

2.6. Enhanced Target Images

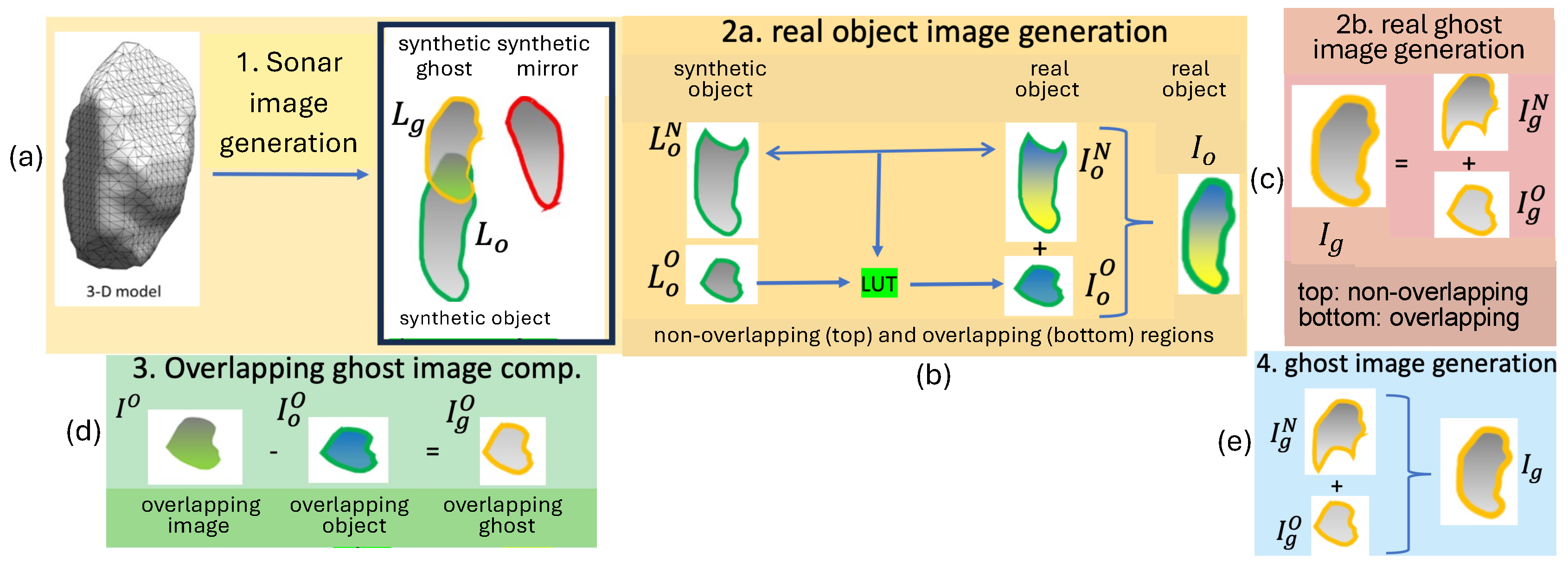

- Generation of a synthetic logarithmic object image by applying the sonar image model in [26] to the 3-D object model (step 1);

- Segmentation of the non-overlapping and overlapping object regions (step 2a), and generation of normalized intensity values with zero mean and unit variance;

- Calculation of the mean-variance pair of the intensity values, and application of the transformation;

- Construction of a look-up table (LUT) that maps one set of normalized intensities to the other by matching their histograms (step 2a);

- Computation of scaled intensity values within the overlapping object region, and application of the LUT to map them to their histogram-matched values (step 2a).
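The intensity-transfer steps above amount to a normalize, histogram-match and rescale procedure. The following is a minimal Python/NumPy sketch under stated assumptions, not the authors' implementation. The synthetic logarithmic image from step 1 (model in [26]) and the two region masks are taken as given inputs. We assume that the LUT maps normalized synthetic intensities to normalized real intensities, both estimated over the non-overlapping (ghost-free) region, and is then applied to the scaled synthetic intensities inside the overlapping region. The function names (`zscore`, `build_lut`, `apply_lut`, `enhance_overlap`) are hypothetical, and the LUT is built by CDF (quantile) matching, which is one common way to realize histogram matching.

```python
import numpy as np


def zscore(values):
    """Normalize to zero mean and unit variance; also return (mean, std)."""
    mu = float(np.mean(values))
    sigma = float(np.std(values))
    if sigma == 0.0:
        sigma = 1.0  # avoid division by zero for a constant region
    return (values - mu) / sigma, (mu, sigma)


def build_lut(source, reference, n_bins=256):
    """Histogram-matching LUT built by CDF (quantile) matching.

    Each bin center c is mapped to the reference value whose cumulative
    probability equals the empirical CDF of `source` evaluated at c.
    """
    lo = float(min(source.min(), reference.min()))
    hi = float(max(source.max(), reference.max()))
    if hi <= lo:
        hi = lo + 1.0  # keep bin centers strictly increasing for np.interp
    centers = np.linspace(lo, hi, n_bins)
    src_sorted = np.sort(source)
    # Fraction of source samples <= each bin center, in [0, 1].
    cdf = np.searchsorted(src_sorted, centers, side="right") / src_sorted.size
    lut = np.quantile(reference, cdf)
    return centers, lut


def apply_lut(values, centers, lut):
    """Piecewise-linear LUT lookup; values outside the LUT range are clamped."""
    return np.interp(values, centers, lut)


def enhance_overlap(real_log, synth_log, nonoverlap_mask, overlap_mask, n_bins=256):
    """Hypothetical ghost suppression in the overlapping object region."""
    # Normalized intensities in the ghost-free (non-overlapping) region.
    real_n, (mu_r, sd_r) = zscore(real_log[nonoverlap_mask])
    synth_n, (mu_s, sd_s) = zscore(synth_log[nonoverlap_mask])
    # LUT from normalized synthetic to normalized real intensities.
    centers, lut = build_lut(synth_n, real_n, n_bins)
    # Scale synthetic intensities in the overlap region with the same statistics.
    synth_overlap_n = (synth_log[overlap_mask] - mu_s) / sd_s
    # Map through the LUT, then undo the real-image normalization.
    out = np.array(real_log, dtype=float, copy=True)
    out[overlap_mask] = apply_lut(synth_overlap_n, centers, lut) * sd_r + mu_r
    return out
```

Quantile matching yields a non-decreasing LUT, so the ordering of the synthetic intensities is preserved, and `np.interp` clamps inputs beyond the LUT range to its end values. Pixels outside the overlapping region are returned unchanged.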

3. Results

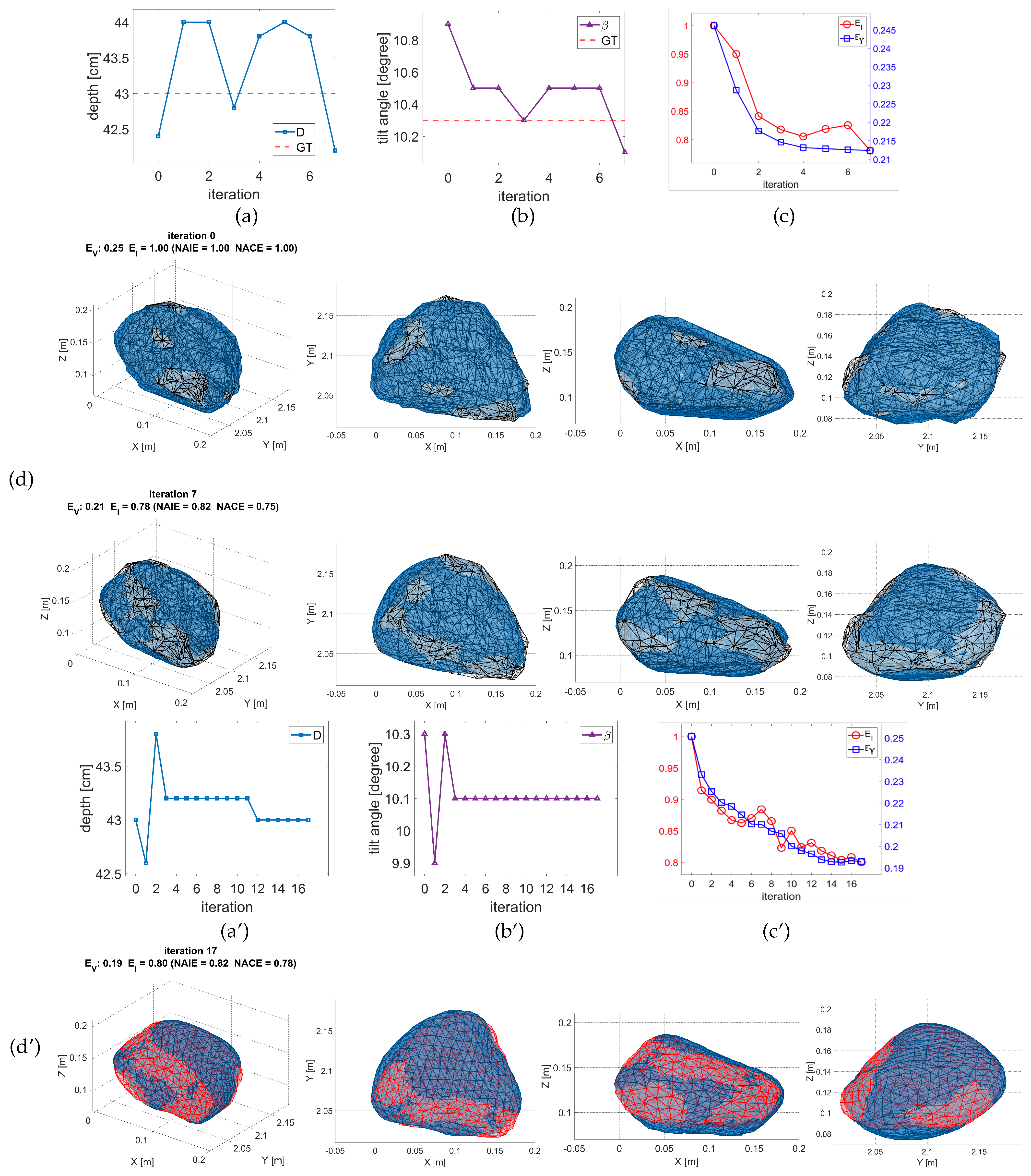

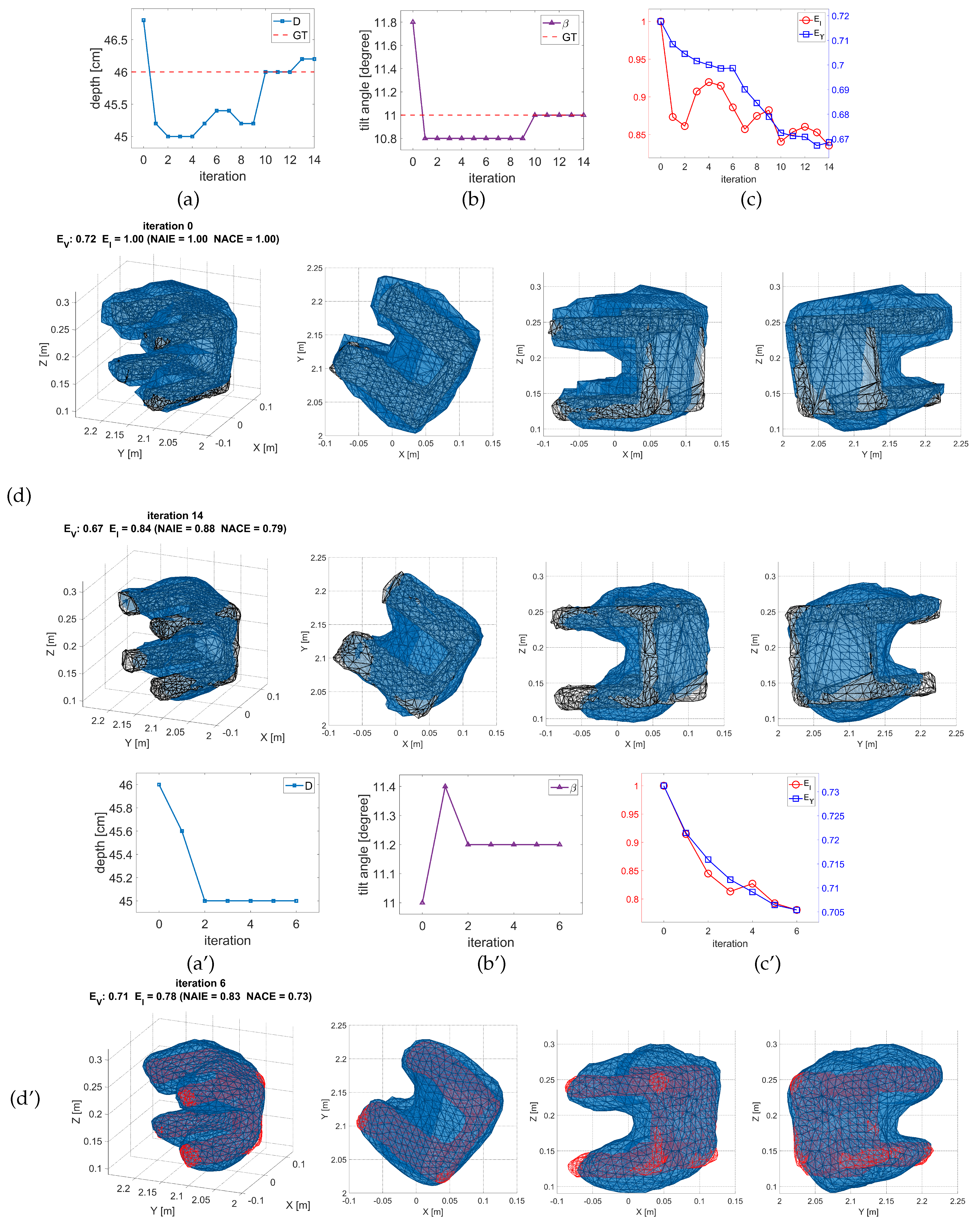

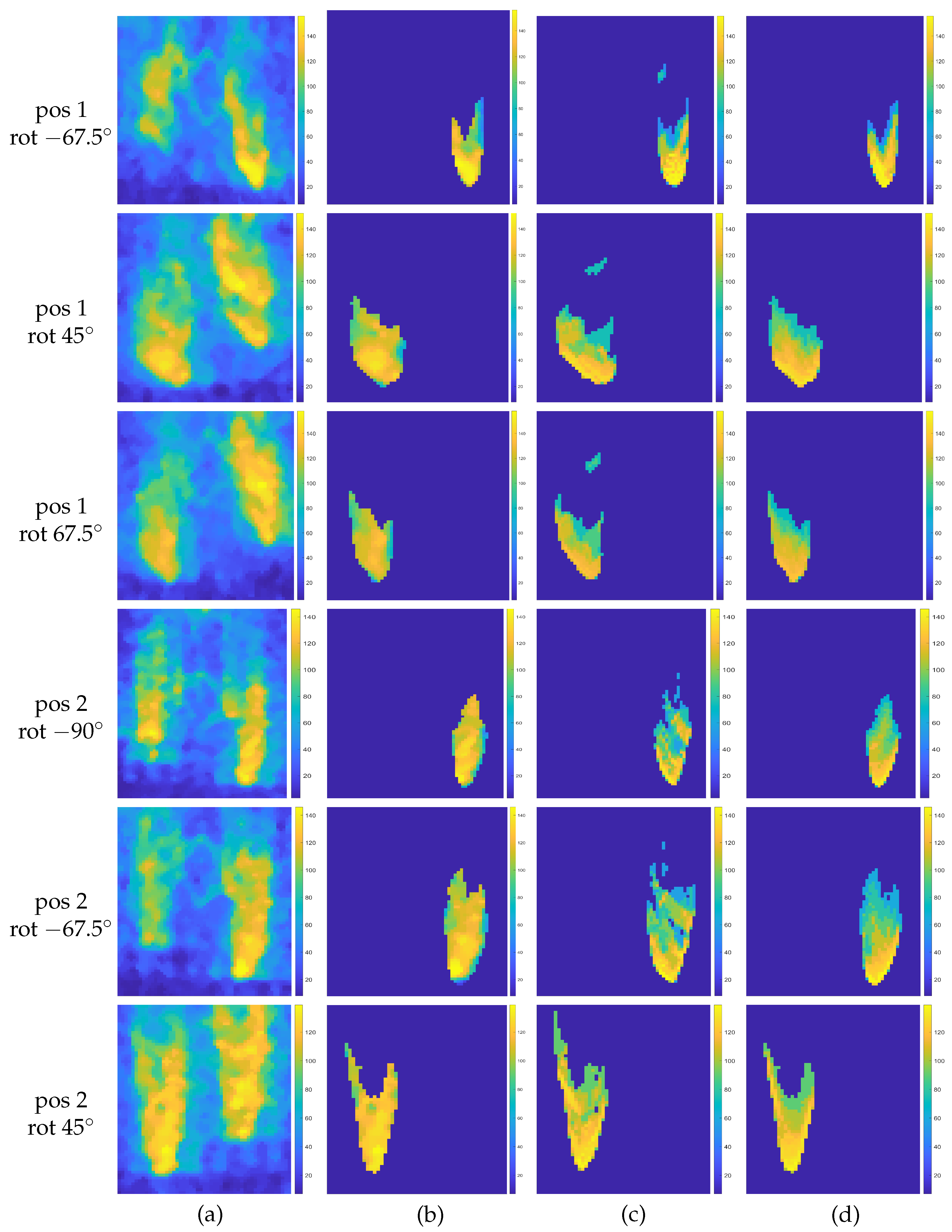

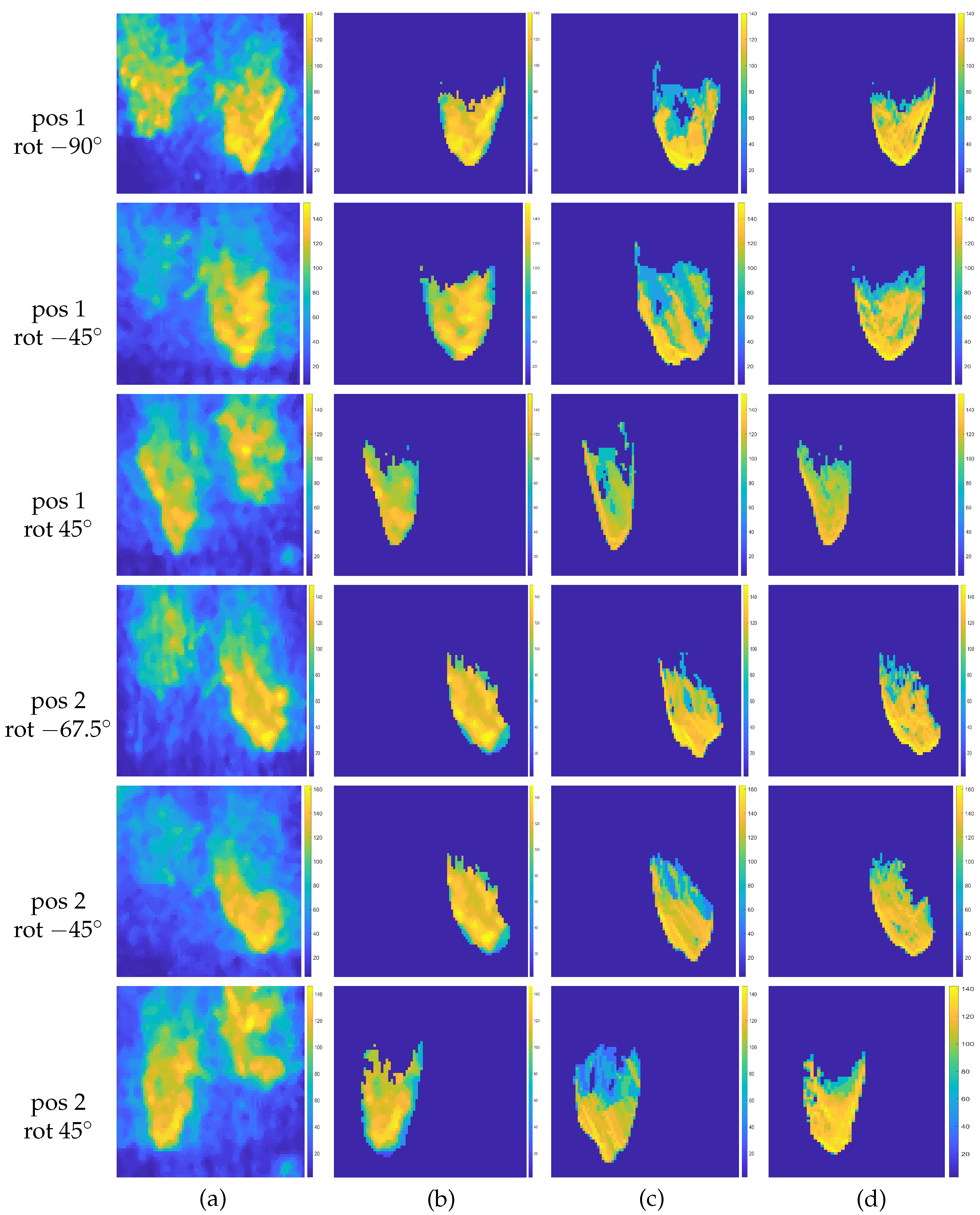

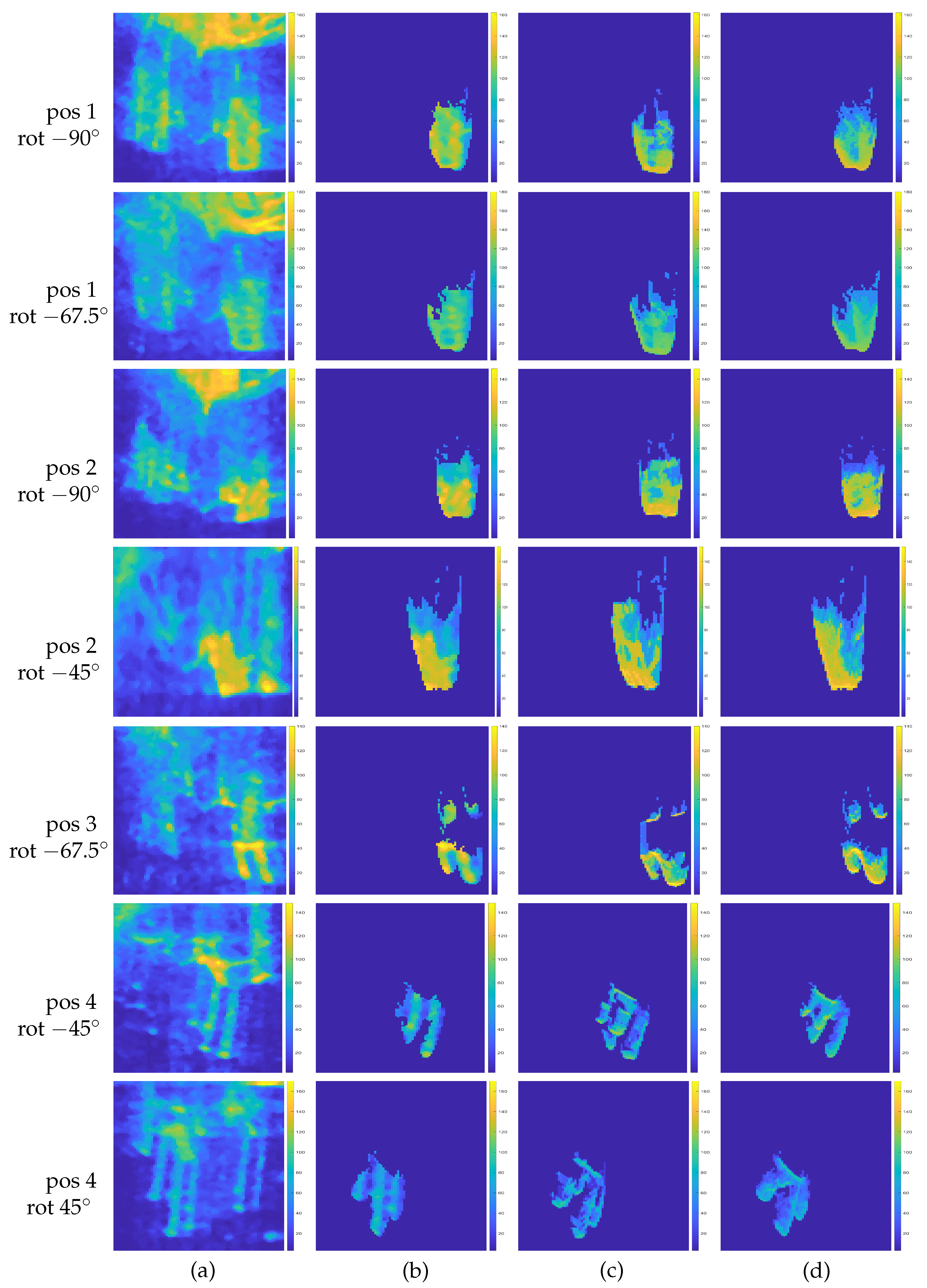

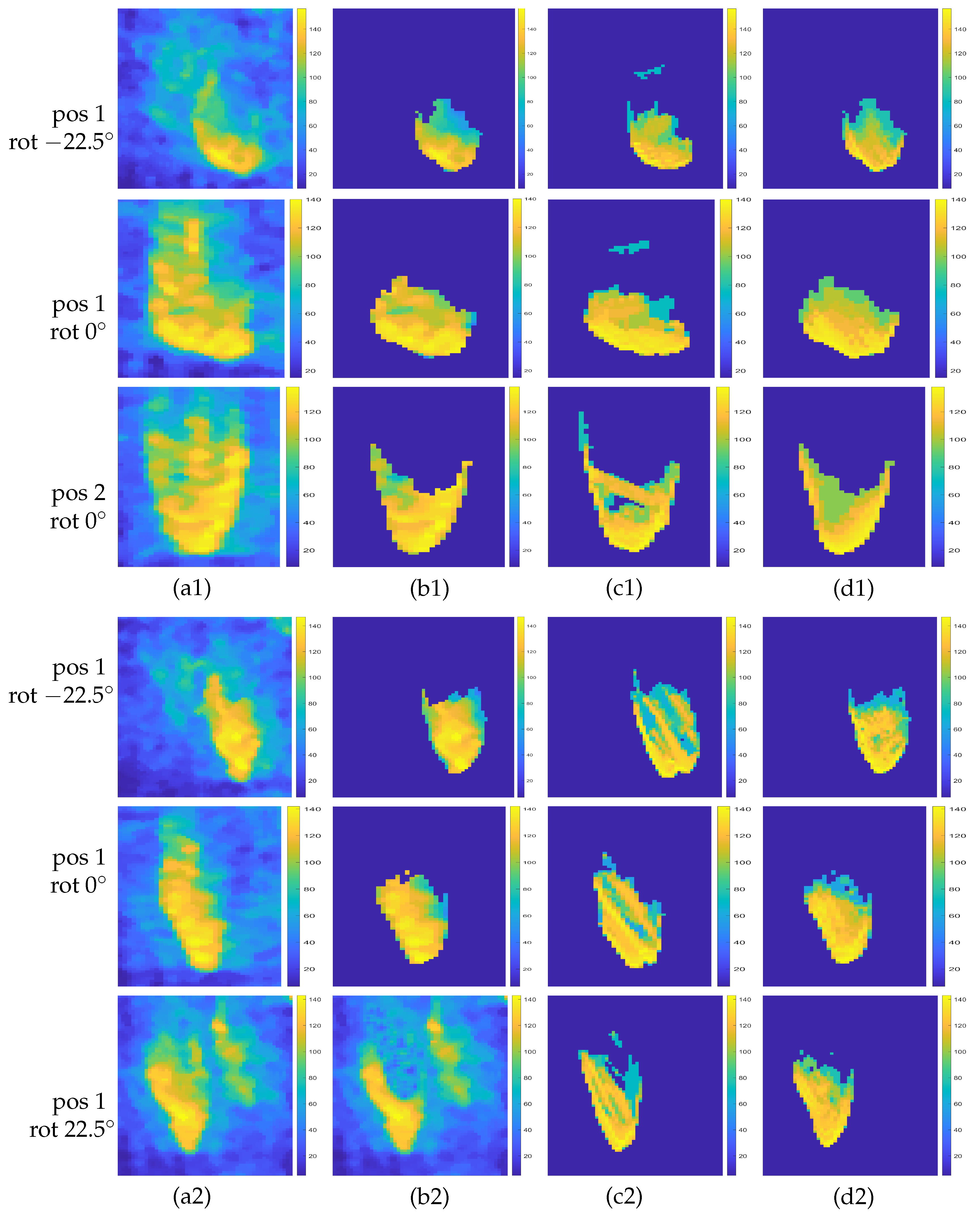

3.1. Experiments with Synthetic Data

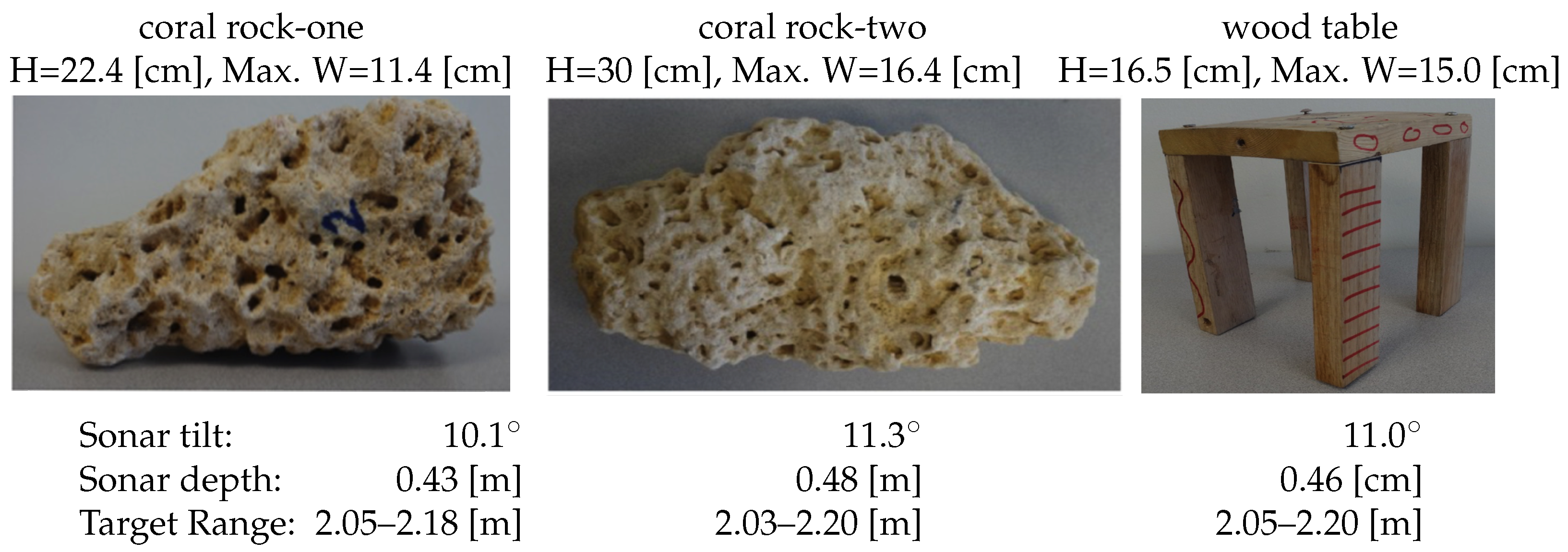

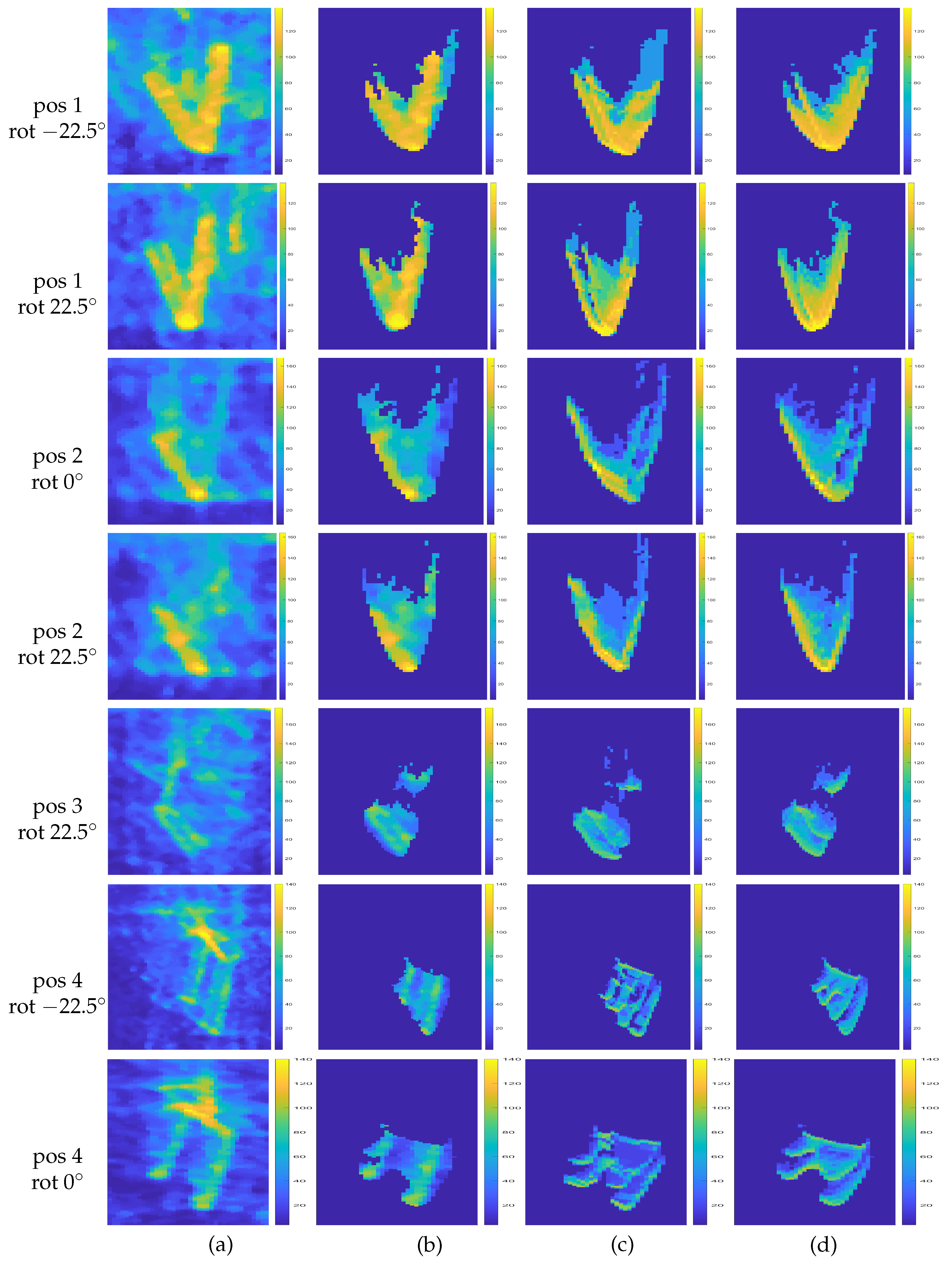

3.2. Experiments with Real Data

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Available online: https://oceanexplorer.noaa.gov/edu/materials/multibeam-sonar-fact-sheet.pdf (accessed on 5 October 2024).

- Available online: https://www.uio.no/studier/emner/matnat/ifi/INF-GEO4310/h12/undervisningsmateriale/sonar_introduction_2012_compressed.pdf (accessed on 5 October 2024).

- Available online: https://en.wikipedia.org/wiki/Side-scan_sonar (accessed on 5 October 2024).

- Burguera, A.; Oliver, G. High-resolution underwater mapping using side-scan sonar. PLoS ONE 2016, 11, e0146396.

- Hansen, R.E. Synthetic aperture sonar technology review. Mar. Technol. Soc. J. 2013, 47, 117–127.

- Hayes, M.P.; Gough, P.T. Synthetic aperture sonar: A review of current status. IEEE J. Ocean. Eng. 2009, 34, 207–224.

- Available online: https://www.sonardyne.com/applications/obstacle-avoidance/#:~:text=When%20your%20marine%20operation%20takes,warn%20of%20potential%20collision%20hazards (accessed on 5 October 2024).

- Available online: https://www.simrad-yachting.com/sonar-and-transducers/forwardscan-sonar/ (accessed on 5 October 2024).

- Available online: https://www.teledynemarine.com/en-us/products/product-line/Pages/forward-looking-sonars.aspx (accessed on 5 October 2024).

- Available online: http://www.soundmetrics.com/Products/DIDSON-Sonars (accessed on 5 October 2024).

- Available online: https://www.seascapesubsea.com/product/oculus-m750d/#:~:text=Description,horizontal%20fields%20of%20view%20respectively (accessed on 5 October 2024).

- Available online: https://www.tritech.co.uk/products/gemini-720is (accessed on 5 October 2024).

- Ferreira, F.; Djapic, V.; Micheli, M.; Caccia, M. Forward looking sonar mosaicing for mine countermeasures. Annu. Rev. Control. 2015, 40, 212–226.

- Hurtos, N.; Petillot, Y.; Salvi, J. Fourier-based registrations for two-dimensional forward-looking sonar image mosaicing. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’12), Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 5298–5305.

- Hurtos, N.; Nagappa, S.; Palomeras, N.; Salvi, J. Real-time mosaicing with two-dimensional forward-looking sonar. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’14), Hong Kong, China, 31 May–7 June 2014; pp. 601–606.

- Kim, K.; Intrator, N.; Neretti, N. Image registration and mosaicing of noisy acoustic camera images. In Proceedings of the IEEE International Conference on Electronics, Circuits and Systems (ICECS’04), Tel Aviv, Israel, 15 December 2004; pp. 527–530.

- Negahdaripour, S.; Aykin, M.D.; Sinnarajah, S. Dynamic scene analysis and mosaicing of benthic habitats by FS sonar imaging—Issues and complexities. In Proceedings of the OCEANS’11 MTS/IEEE, Waikoloa, HI, USA, 19–22 September 2011.

- Geiger, A. Computer Vision - Lecture 8.1 (Shape-from-X: Shape-from-Shading). Available online: https://www.youtube.com/watch?v=YQ5QOiyoF9U (accessed on 5 October 2024).

- Liu, Y. 3-D Object Modeling from 2-D Underwater Forward-Scan Sonar Imagery in the Presence of Multipath near Sea Surface. Master’s Thesis, University of Miami, Coral Gables, FL, USA, 2022.

- Liu, Y.; Negahdaripour, S. Object modeling from underwater forward-scan sonar imagery with sea-surface multipath. IEEE J. Ocean. Eng. 2024, 1–14.

- Cho, H.; Kim, B.; Yu, S. AUV-based underwater 3-D point cloud generation using acoustic lens-based multibeam sonar. IEEE J. Ocean. Eng. 2018, 43, 856–872.

- Guerneve, T.; Subr, K.; Petillot, Y. Three-dimensional reconstruction of underwater objects using wide-aperture imaging sonar. J. Field Robot. 2018, 35, 890–905.

- Westman, E.; Gkioulekas, I.; Kaess, M. A volumetric albedo framework for 3D imaging sonar reconstruction. In Proceedings of the IEEE International Conference on Robotics and Automation, Paris, France, 31 May–31 August 2020; pp. 9645–9651.

- Zerr, B.; Stage, B. Three-dimensional reconstruction of underwater objects from a sequence of sonar images. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 19 September 1996; Volume 3, pp. 927–930.

- Wang, Y.; Ji, Y.; Liu, D.; Tsuchiya, H.; Yamashita, A.; Asama, H. Elevation angle estimation in 2D acoustic images using pseudo front view. IEEE Robot. Autom. Lett. 2021, 6, 1535–1542.

- Aykin, M.D.; Negahdaripour, S. Modeling 2-D lens-based forward-scan sonar imagery for targets with diffuse reflectance. IEEE J. Ocean. Eng. 2016, 41, 569–582.

- Wang, Y.; Wu, C.; Ji, Y.; Tsuchiya, H.; Asama, H.; Yamashita, A. 2D forward looking sonar simulation with ground echo modeling. arXiv 2023, arXiv:2304.0814.

- Kraus, F.; Scheiner, N.; Dietmayer, K. Using machine learning to detect ghost images in automotive radar. In Proceedings of the IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC’20), Rhodes, Greece, 20–23 September 2020.

- Kraus, F.; Scheiner, N.; Ritter, W.; Dietmayer, K. The radar ghost dataset—An evaluation of ghost objects in automotive radar data. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 8570–8577.

- Liu, C.; Liu, S.; Zhang, C.; Huang, Y.; Wang, H. Multipath propagation analysis and ghost target removal for FMCW automotive radars. In Proceedings of the IET International Radar Conference, Online, 4–6 November 2020; pp. 330–334.

- Longman, O.; Villeval, S.; Bilik, I. Multipath ghost targets mitigation in automotive environments. In Proceedings of the IEEE Radar Conference, Atlanta, GA, USA, 7–14 May 2021; pp. 1–5.

- Swl Marine Bronze Submersible Level Sensor. Available online: https://www.sensorsone.com/swl-marine-bronze-submersible-level-sensor/ (accessed on 5 October 2024).

- Tdk-Tmrsensor. Available online: https://product.tdk.com/en/techlibrary/productoverview/tmr-angle-sensors.html/ (accessed on 5 October 2024).

- Barrault, G. Modeling the forward Look Sonar. Master’s Thesis, Florida Atlantic University, Boca Raton, FL, USA, 2001.

- Choi, W.S.; Olson, D.; Davis, D.; Zhang, M.; Racson, A.; Bingham, B.; McCarrin, M.; Vogt, C.; Herman, J. Physics-based modeling and simulation of multibeam echosounder perception for autonomous underwater manipulation. Front. Robot. AI 2021, 8, 706646.

- Liu, D.; Wang, Y.; Ji, Y.; Tsuchiya, H.; Yamashita, A.; Asama, H. CycleGAN-based realistic image dataset generation for forward-looking sonar. Adv. Robot. 2021, 35, 242–254.

- Negahdaripour, S. Calibration of DIDSON forward-scan acoustic video camera. In Proceedings of the OCEANS 2005 MTS/IEEE, Washington, DC, USA, 17–23 September 2005.

- Kutulakos, K.N.; Seitz, S.M. A theory of shape by space carving. Int. J. Comput. Vis. 2000, 38, 199–218.

- Aykin, M.D.; Negahdaripour, S. Three-dimensional target reconstruction from multiple 2-D forward-scan sonar views by space carving. IEEE J. Ocean. Eng. 2017, 42, 574–589.

- Negahdaripour, S.; Milenkovic, V.M.; Salarieh, N.; Mirzargar, M. Refining 3-D object models constructed from multiple FS sonar images by space carving. In Proceedings of the IEEE/MTS Oceans Conference-Anchorage, Anchorage, AK, USA, 18–21 September 2017; pp. 1–9.

- Gu, J.-H.; Joe, H.-G.; Yu, S.-C. Development of image sonar simulator for underwater object recognition. In Proceedings of the OCEANS’13, San Diego, CA, USA, 23–27 September 2013.

- Kwak, S.; Ji, Y.; Yamashita, A.; Asama, H. Development of acoustic camera-imaging simulator based on novel model. In Proceedings of the IEEE 15th International Conference on Environment and Electrical Engineering (EEEIC’15), Rome, Italy, 10–13 June 2015; pp. 1719–1724.

- Potokar, E.; Lay, K.; Norman, K.; Benham, D.; Neilsen, T.B.; Kaess, M.; Mangelson, J.G. HoloOcean: Realistic sonar simulation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Kyoto, Japan, 23–27 October 2022.

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Chen, Y.; Medioni, G. Object modeling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155.

- Bergström, P.; Edlund, O. Robust registration of point sets using iteratively reweighted least squares. Comput. Optim. Appl. 2014, 58, 543–561.

- Bergström, P.; Edlund, O. Robust registration of surfaces using a refined iterative closest point algorithm with a trust region approach. Numer. Algorithms 2017, 74, 755–779.

- Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2017, arXiv:1609.04747v2.

- Azure Kinect DK. Available online: https://www.microsoft.com/en-us/d/azure-kinect-dk/8pp5vxmd9nhq?activetab=pivot:overviewtab (accessed on 5 October 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Negahdaripour, S. Ghost Removal from Forward-Scan Sonar Views near the Sea Surface for Image Enhancement and 3-D Object Modeling. Remote Sens. 2024, 16, 3814. https://doi.org/10.3390/rs16203814


Chicago/Turabian Style: Liu, Yuhan, and Shahriar Negahdaripour. 2024. "Ghost Removal from Forward-Scan Sonar Views near the Sea Surface for Image Enhancement and 3-D Object Modeling" Remote Sensing 16, no. 20: 3814. https://doi.org/10.3390/rs16203814
