Abstract

Synthetic Aperture Radar (SAR) imaging is essential for monitoring geomorphic changes, urban transformations, and natural disasters. However, the inherent complexities of SAR, particularly pronounced speckle noise, often lead to numerous false detections. To address these challenges, we propose the Multidirectional Attention Fusion Network (MDAF-Net), an advanced framework that significantly enhances image quality and detection accuracy. Firstly, we introduce the Multidirectional Filter (MF), which employs side-window filtering techniques and eight directional filters. This approach supports multidirectional image processing, effectively suppressing speckle noise and precisely preserving edge details. By utilizing deep neural network components, such as average pooling, the MF dynamically adapts to different noise patterns and textures, thereby enhancing image clarity and contrast. Building on this innovation, MDAF-Net integrates multidirectional feature learning with a multiscale self-attention mechanism. This design utilizes local edge information for robust noise suppression and combines global and local contextual data, enhancing the model’s contextual understanding and adaptability across various scenarios. Rigorous testing on six SAR datasets demonstrated that MDAF-Net achieves superior detection accuracy compared with other methods. On average, the Kappa coefficient improved by approximately 1.14%, substantially reducing errors and enhancing change detection precision.

1. Introduction

Change detection [1] is essential for monitoring dynamic transformations of the Earth's surface and plays a significant role in disaster management [2], urban planning [3], and environmental monitoring [4]. Synthetic Aperture Radar (SAR) [5,6] imaging, valued for delivering high-resolution [7] imagery regardless of atmospheric conditions, has become an indispensable tool in these areas [8]. Despite these capabilities, however, SAR imaging faces several challenges that undermine the accurate detection of changes. Chief among them is speckle noise [9,10], a granular distortion inherent to radar data that obscures essential image features and complicates the detection of genuine changes. Furthermore, the complexity of the SAR imaging mechanism often results in a high incidence of false detections.

The complexity of natural landscapes significantly heightens the challenges faced in SAR imaging. These landscapes exhibit a wide array of dynamic changes, necessitating preprocessing techniques such as image denoising to maintain the integrity of information while reducing noise [11,12]. Edge-preserving filtering techniques such as bilateral filters [13], guided filters [14], and anisotropic local filters [15] have been developed to enhance image quality in SAR imaging by reducing noise without compromising essential details like edges and textures. These techniques improve noise reduction by considering the similarity between pixels, thereby maintaining the structural clarity of the image and effectively preventing the unwanted diffusion of features towards the boundaries.

However, these techniques predominantly utilize local information and do not effectively integrate global contextual data, which is critical for interpreting complex, multiscale changes across diverse natural environments. Natural objects within these landscapes, such as vegetation and water bodies, exhibit strong regional and inter-class heterogeneity [16,17]. These variations underline the multiscale nature of natural features in environmental monitoring [18], where the precise detection of changes demands not only local but also global contextual understanding.

Recent advances in deep learning, particularly convolutional neural networks (CNNs) [19,20], have improved SAR image processing [21,22] by providing robust feature extraction. The effectiveness of CNNs in change detection rests largely on their ability to learn the local details needed to interpret textures and edges. Because CNNs focus on local textural features and scale invariance, however, they are limited in modeling the long-range spatial dependencies that accurate change interpretation requires. Integrating CNNs with transformer [23] architectures has proven effective in addressing this limitation: the hybrid combines the strength of CNNs in detecting local features with the ability of transformers to model long-range relationships, improving change detection and interpretation in SAR images. Building on this idea, this paper introduces a novel framework called the Multidirectional Attention Feature Learning Fusion Network (MDAF-Net), which enhances change detection in SAR images. The main contributions of this paper are as follows:

- We develop the Multidirectional Filter (MF), a technique designed specifically for SAR imagery that reduces noise and enhances feature clarity. It suppresses speckle noise while preserving essential edge details.

- The Multidirectional Adaptive Filter (MDAF) combines multidirectional learning with a multiscale self-attention mechanism, enabling joint analysis of local and global information in SAR images and improving the model's understanding of complex spatial relationships.

- MDAF-Net integrates advanced noise reduction techniques with spatial context awareness to accurately identify real changes in SAR imagery, significantly reducing false positives.

The Multidirectional Attention Fusion Network (MDAF-Net) has been tested on six diverse SAR datasets (Farmland-A, Farmland-B, Inland River, Ottawa, Red River, and JLR), which cover complex scenarios such as agricultural changes, river dynamics, and urban transformations. It outperforms several state-of-the-art methods, substantially reducing false positives and false negatives and improving overall accuracy. These results demonstrate MDAF-Net's robustness and efficiency in detecting changes across varied and challenging environments.

The remainder of this article is organized as follows. Section 2 reviews related work. Section 3 describes the proposed method. Section 4 presents experiments that demonstrate the effectiveness of the proposed network and compare it with other methods. Finally, Section 5 concludes the article.

2. Related Work

2.1. SAR Denoising

SAR image denoising has evolved from traditional methods such as mean, median, and Gaussian filtering, which reduce noise effectively but often blur critical edge details. To address this, more advanced techniques have been developed, including bilateral filters, guided filters, non-uniform local filters, and multiscale geometric transforms such as the contourlet transform; these methods are particularly effective at capturing directional edges and fine textures. Among them, the Side Window Filter [24] introduced by Yin et al. stands out: it preserves edge details by aligning the side or corner of each filter window, rather than its center, with the target pixel, which minimizes edge blurring during noise reduction. Additionally, a real-time image dimensionality reduction filter has been proposed that locates image edges with a thresholding-based method. Spatial filters, by contrast, smooth over a fixed window, which can produce artifacts around objects and occasionally oversmooths the image, causing visual blurring [25].

The integration of deep learning into SAR denoising represents a significant advance. Feng et al. [26] introduced a dual-component deep learning network that first generates a Texture Level Map (TLM) to assess texture randomness and scale, then learns the spatial variance between noisy and clean images, allowing the network to adaptively preserve details or smooth noise. Liu et al. [27] developed the Multiscale Residual Dense Dual Attention Network (MRDDANet), which uses multiscale modules to extract features from noisy images and processes them through a dense dual attention mechanism, balancing noise suppression with texture preservation. Dong et al. [28] introduced ShearNet, which augments a conventional CNN with a shearlet transform-based denoising layer that minimizes the impact of noise by applying hard-threshold shrinkage to high-frequency subbands; combined with a new noise-robust loss function adapted for noisy labels, this improves the network's ability to handle SAR images in change detection tasks. These approaches significantly improve the precision and applicability of SAR image denoising.

Our approach does not denoise SAR images directly; instead, it processes difference features extracted from SAR data. These features inherit the typical noise characteristics of SAR imagery, which, if left unaddressed, can obscure significant temporal changes. To overcome the limitations of traditional denoising methods, we propose a strategy tailored to these difference features. By targeting residual noise that the initial processing stage may leave behind, it improves the accuracy and reliability of change detection while avoiding over-smoothing and the loss of crucial spatial details, so that the detected changes are both authentic and significant.

2.2. Change Detection in Remote Sensing

Deep learning models, characterized by their multiple hidden layers, are highly effective at extracting discriminative features. These models have achieved significant advancements in various domains such as natural language processing [29,30], saliency detection [31], and anomaly detection [32]. In recent years, the remote sensing community has increasingly turned its attention to deep learning methods. Numerous deep learning techniques have been proposed to address the challenge of change detection (CD) [33].

Gong et al. [34] proposed an innovative deep learning approach for CD in multi-temporal SAR images. They trained a deep neural network to generate CD maps directly from the two source images without creating a difference image (DI), thereby reducing the CD problem to a classification task. Zhang et al. [35] proposed an enhanced deep Siamese convolutional neural network (CNN) that processes paired images with a Siamese architecture, learning the differences between them and extracting discriminative features for the changed and unchanged classes. Chen et al. [36] proposed a Siamese spatiotemporal attention neural network for change detection that integrates a new CD self-attention module into the feature extraction process. The approach uses standard CNNs for deep feature extraction and spatiotemporal attention modules to model the relationships between bi-temporal images, thereby optimizing the feature representation. Meiet et al. [37] proposed a structure consistency-based CD method that detects changes by comparing the structures of two images rather than their pixel values, demonstrating strong robustness.

Some approaches incorporate transformer [38,39] modules to overcome the limited ability of CNNs to capture long-range dependencies. Chen et al. [40] proposed the bitemporal image transformer (BIT), which uses a transformer encoder and two decoders to capture temporal and spatial context. Zhang et al. [41] built a pure transformer Siamese CD network from Swin transformer modules. Mubashir et al. [42] proposed ScratchFormer, an end-to-end CD method based on transformers with random sparse attention manipulation. Zhang et al. [43] proposed a bitemporal feature alignment (BiFA) model to generate accurate CD maps. It introduces a bitemporal interaction (BI) module for channel-level alignment, an alignment difference flow field (ADFF) module for spatial alignment, and an implicit neural alignment decoder (IND) for multiscale alignment.

Combining CNNs and transformers [44] leverages the strengths of both architectures: CNNs capture local features and texture details, while transformers model long-range dependencies and global context. Integrating the two improves change detection by balancing local detail preservation with comprehensive contextual understanding [45]. Following this idea, this paper presents MDAF-Net, a SAR image change detection network based on multidirectional attention feature learning fusion, which combines CNN-extracted local features with transformer-based global context modeling to achieve strong local and global feature representation.

3. Proposed Method

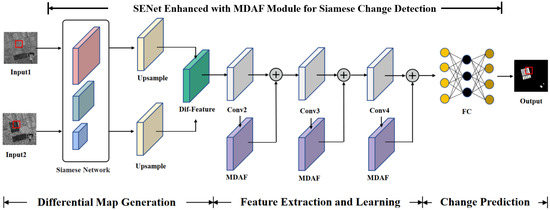

The overall change detection network, MDAF-Net, is illustrated in Figure 1. The input consists of bi-temporal SAR [46] images, denoted Input1 and Input2, and the final output is the change detection result, Output. The framework consists of three main components:

- Difference Map Generation Module: uses a Siamese network [47] to extract features from the bi-temporal images and generates the difference map.

- Feature Learning Module: enhances the feature representation through multiscale self-attention and multidirectional feature learning.

- Change Prediction Module: uses a multilayer perceptron (MLP) [48] to classify the extracted features and output the change detection result.

Figure 1.

Multidirectional Attention Fusion Network Framework diagram.

We use a multilayer convolutional framework with kernel sizes of 1 × 1, 3 × 3, and 5 × 5, each paired with batch normalization, to process image features efficiently. The computational complexity of processing the feature maps in each such layer is $O(C_{\text{in}} \cdot C_{\text{out}} \cdot K^2 \cdot H \cdot W)$, where $C_{\text{in}}$ and $C_{\text{out}}$ are the numbers of input and output channels, K is the kernel size, and H and W are the height and width of the feature maps.
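To make the per-layer cost concrete, the short sketch below evaluates the multiply-accumulate (MAC) count $C_{\text{in}} \cdot C_{\text{out}} \cdot K^2 \cdot H \cdot W$ for the three kernel sizes. The channel counts (10 in, 16 out) are hypothetical, the 7 × 7 map size borrows the patch size chosen in Section 4.2, and bias terms and batch normalization are ignored.

```python
def conv_macs(c_in: int, c_out: int, k: int, h: int, w: int) -> int:
    """Multiply-accumulate count of a stride-1, same-padded k x k convolution."""
    return c_in * c_out * k * k * h * w


# Hypothetical layer: 10 input channels, 16 output channels, 7 x 7 feature map.
for k in (1, 3, 5):
    print(f"{k}x{k}: {conv_macs(10, 16, k, 7, 7):,} MACs")
```

With these numbers the loop reports 7,840, 70,560, and 196,000 MACs: the 5 × 5 branch costs 25 times as much as the 1 × 1 branch, because the cost grows with K².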

3.1. Difference Map Generation Module

The difference map generation [49] module uses a Siamese network to extract features from the input images. The Siamese network consists of convolutional layers with kernel sizes of 1 × 1, 3 × 3, and 5 × 5 that run in parallel, so that the different kernel sizes capture features over different receptive fields. The extracted features are concatenated along the channel dimension, giving the fused features multiscale representation capability. The results are then upsampled using bilinear interpolation [50], as described in Equation (1).
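The parallel multiscale branches can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' implementation: fixed averaging kernels stand in for the learned 1 × 1, 3 × 3, and 5 × 5 convolutions, zero padding keeps the spatial size unchanged, and batch normalization is omitted. Returning the three branch outputs as a list of channels mirrors the channel-wise concatenation.

```python
from typing import List

Image = List[List[float]]


def box_conv(img: Image, k: int) -> Image:
    """Same-size k x k averaging convolution with zero padding (stand-in for a learned kernel)."""
    h, w, r = len(img), len(img[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:  # zero padding outside the image
                        total += img[yy][xx]
            out[y][x] = total / (k * k)
    return out


def multiscale_features(img: Image) -> List[Image]:
    """Run the 1x1, 3x3 and 5x5 branches in parallel and stack their outputs as channels."""
    return [box_conv(img, k) for k in (1, 3, 5)]
```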

$$f(x, y) \approx \frac{f(Q_{11})(x_2 - x)(y_2 - y) + f(Q_{21})(x - x_1)(y_2 - y) + f(Q_{12})(x_2 - x)(y - y_1) + f(Q_{22})(x - x_1)(y - y_1)}{(x_2 - x_1)(y_2 - y_1)} \tag{1}$$

In the bilinear interpolation method represented by Equation (1), $f(Q_{11})$, $f(Q_{21})$, $f(Q_{12})$, and $f(Q_{22})$ are the pixel values at the coordinates $Q_{11} = (x_1, y_1)$, $Q_{21} = (x_2, y_1)$, $Q_{12} = (x_1, y_2)$, and $Q_{22} = (x_2, y_2)$, respectively. These are the four nearest pixels surrounding the target point $(x, y)$, and $f(x, y)$ is the interpolated value to be calculated. The method interpolates linearly first in the x-direction and then in the y-direction, enlarging the image or feature map while preserving its spatial structure.
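The following plain-Python sketch implements the bilinear interpolation of Equation (1) for unit pixel spacing, so the denominator $(x_2 - x_1)(y_2 - y_1)$ equals 1. The `upsample` helper assumes align-corners sampling positions (output corners map exactly onto input corners), a convention the text does not specify; inputs must be at least 2 × 2.

```python
import math
from typing import List

Image = List[List[float]]


def bilinear_sample(img: Image, x: float, y: float) -> float:
    """Interpolate img at continuous (x, y); x indexes columns and y indexes rows."""
    h, w = len(img), len(img[0])
    x1 = min(int(math.floor(x)), w - 2)  # left neighbour column
    y1 = min(int(math.floor(y)), h - 2)  # upper neighbour row
    x2, y2 = x1 + 1, y1 + 1
    # Four weighted corner values, as in Equation (1) with unit spacing.
    return (img[y1][x1] * (x2 - x) * (y2 - y)      # f(Q11)
            + img[y1][x2] * (x - x1) * (y2 - y)    # f(Q21)
            + img[y2][x1] * (x2 - x) * (y - y1)    # f(Q12)
            + img[y2][x2] * (x - x1) * (y - y1))   # f(Q22)


def upsample(img: Image, scale: int) -> Image:
    """Enlarge img by an integer factor using align-corners sampling positions."""
    h, w = len(img), len(img[0])
    big_h, big_w = h * scale, w * scale
    return [[bilinear_sample(img, c * (w - 1) / (big_w - 1), r * (h - 1) / (big_h - 1))
             for c in range(big_w)]
            for r in range(big_h)]
```

For example, sampling `[[0.0, 10.0], [20.0, 30.0]]` at the cell center (0.5, 0.5) weights all four corners equally and gives 15.0.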

The Siamese network measures the similarity between two inputs by extracting features from both images with the same convolutional layers. Because the two branches share parameters, unchanged content yields matching features, so the remaining feature differences are more pronounced and more likely to reflect genuine changes, which benefits change detection. The bi-temporal features produced by this network can therefore be combined into a difference map, as shown in Equation (2).

$$D(i, j) = \left(F_{t_1}(i, j) - F_{t_2}(i, j)\right)^2 \tag{2}$$

In this formula, $F_{t_1}(i, j)$ and $F_{t_2}(i, j)$ represent the feature values at position $(i, j)$ of the SAR images acquired at time points $t_1$ and $t_2$, respectively, and $D(i, j)$ is the squared difference between them, which highlights changes over time. Difference map generation plays a critical role in change detection. By directly comparing images from different time points, difference maps provide an intuitive visualization of changed areas, facilitating analysis and understanding. Comparing features also reduces change detection to a classification task, lowering algorithmic complexity. Finally, difference maps amplify subtle changes, making it easier for algorithms to capture small changed areas and thus improving detection accuracy.
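The shared-weight comparison and the squared-difference map can be sketched in a few lines. `toy_extractor` is a hypothetical stand-in for the shared CNN branch; what matters is that both dates pass through the same function before the per-pixel squared difference is taken, so unchanged pixels map exactly to zero.

```python
from typing import Callable, List

Image = List[List[float]]


def siamese_difference_map(img1: Image, img2: Image,
                           extract: Callable[[Image], Image]) -> Image:
    """Apply one shared extractor to both dates, then square the per-pixel difference."""
    f1, f2 = extract(img1), extract(img2)  # identical weights for both branches
    return [[(a - b) ** 2 for a, b in zip(row1, row2)] for row1, row2 in zip(f1, f2)]


def toy_extractor(img: Image) -> Image:
    """Hypothetical stand-in for the shared CNN branch: a fixed gain and offset."""
    return [[0.5 * v + 1.0 for v in row] for row in img]
```

For instance, `siamese_difference_map([[1, 2], [3, 4]], [[1, 2], [3, 8]], toy_extractor)` returns `[[0.0, 0.0], [0.0, 4.0]]`: only the pixel that changed between the two dates is non-zero.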

3.2. Feature Extraction and Noise Reduction

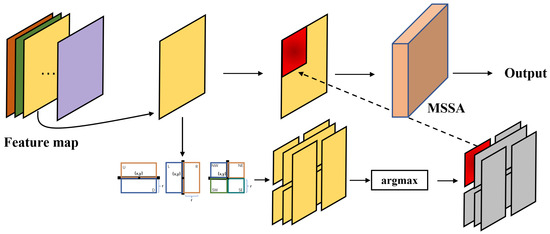

The proposed MDAF module, shown in Figure 2, integrates SENet (Squeeze-and-Excitation Network) [51] as the backbone network to extract features from the difference map. SENet, known for its ability to adaptively recalibrate channel feature responses, serves as the baseline network to capture essential feature representations. To enhance feature learning and global feature utilization, the MDAF module employs a Multidirectional Filter (MF) and a multiscale self-attention (MSSA) mechanism.
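For reference, a single squeeze-and-excitation block following the general SENet recipe (global average pooling, a two-layer fully connected bottleneck, and sigmoid channel gates) can be sketched as below. Biases are omitted, and the weight matrices `w1` and `w2` are hypothetical inputs; the exact backbone configuration used in MDAF-Net is not given here.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed [channel][row][col]


def relu(v: float) -> float:
    return max(0.0, v)


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def se_block(x: FeatureMap, w1: List[List[float]], w2: List[List[float]]) -> FeatureMap:
    """Squeeze-and-excitation: channel descriptors -> FC-ReLU-FC-sigmoid gates -> rescaled channels.

    w1 has shape (C/r) x C and w2 has shape C x (C/r); biases are omitted.
    """
    # Squeeze: global average pooling yields one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: bottleneck fully connected layer with ReLU, then expansion with sigmoid.
    hidden = [relu(sum(a * b for a, b in zip(row, z))) for row in w1]
    gates = [sigmoid(sum(a * b for a, b in zip(row, hidden))) for row in w2]
    # Scale: multiply every value of channel c by its gate.
    return [[[v * gates[c] for v in row] for row in ch] for c, ch in enumerate(x)]
```

The gates lie in (0, 1), so the block can only reweight channels relative to one another; this is the adaptive channel recalibration the text refers to.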

Figure 2.

MDAF module structure diagram. The module combines convolutional operations to denoise and enhance the feature layer while keeping it learnable.

After SENet extracts features from the input difference map, the resulting feature maps are split into two branches. The first branch applies the MF, which reduces noise and preserves edge details to strengthen local feature extraction; the filtered features then pass through the MSSA module within the MDAF, whose multiscale self-attention captures intricate spatial interactions and relationships within the feature maps. The second branch carries the features forward without global feature enhancement. The MSSA output is fused with the second branch, combining detailed local features with enriched global context into a more robust and comprehensive representation. Finally, the fused features are processed by subsequent convolutional and fully connected layers that predict, for each pixel, whether it has changed or remains unchanged. The output is a change detection map that highlights the changed areas between the two input images, built on the feature representations obtained through the SENet backbone, the Multidirectional Filter, and the multiscale self-attention mechanism.

3.3. MDAF Integration

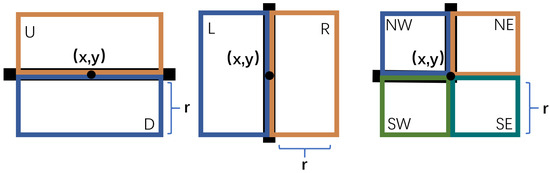

3.3.1. Multidirectional Fine-Grained Denoising

To denoise the input feature map and enhance its edge features, the MDAF module uses a side-window approach with eight directional windows, as shown in Figure 3. The directional windows for denoising, $\omega_n$ ($n = 1, \dots, 8$), correspond to eight directions: left, right, up, down, northwest, northeast, southwest, and southeast. The first four match vertical and horizontal lines, while the latter four align with diagonal edges. Each window is anchored at the central feature point $(x, y)$, and r is the window radius. By varying r and the window direction while keeping $(x, y)$ fixed, the size, direction, and position of the window can be adjusted. With the center fixed, applying a filter kernel F within each directional window produces eight outputs:

$$I_n = \frac{1}{N_n} \sum_{j \in \omega_n} w_{ij}\, q_j, \qquad N_n = \sum_{j \in \omega_n} w_{ij}, \qquad n = 1, \dots, 8$$

Figure 3.

Multidirectional filter window design.

Here, $q_i$ is the central input feature, $q_j$ are the input features within directional window $\omega_n$, and $w_{ij}$ are the weights of the filter window. $I_n$ is the filtered output of directional window n, and the normalization factor $N_n$ scales the weights so that the output reflects the relative importance of each feature within the window. The outputs of the eight directional windows are then compared with the central feature, and the one with the smallest L2 distance [52] is selected. The final output, $I_m$, is thus the directional output with the smallest Euclidean distance to the original input feature $q_i$, which ensures minimal distortion:

$$I_m = \underset{I_n,\; n = 1, \dots, 8}{\arg\min} \left\lVert q_i - I_n \right\rVert_2^2$$

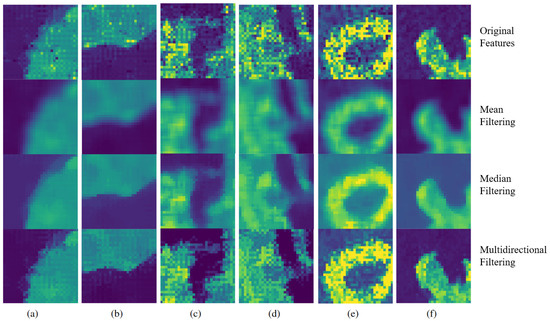

Unlike traditional methods that simply replace the original value with the closest match, this approach takes the filtered features of the most similar region and integrates them with the original features. This enhances regions similar to the central feature and attenuates noisy areas, improving feature clarity for subsequent classification. The method retains edge details and avoids the edge-texture diffusion seen in median filtering, thus reducing noise while preserving edges. Figure 4 visualizes the feature layers: the unprocessed original features are compared with the results of mean filtering, median filtering, and the proposed multidirectional window feature enhancement. The first two methods fail to preserve edge details sufficiently, causing edge diffusion and blurring [53]. In contrast, the proposed method shows excellent noise reduction and edge preservation and is well suited to feature-layer processing.
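A minimal plain-Python sketch of the eight-window selection follows, assuming uniform weights ($w_{ij} = 1$, so each directional output is a window mean) and windows clipped at the image border. Because the text says the selected output is integrated with the original features without specifying how, the `alpha` blend is a hypothetical fusion rule; with `alpha = 1.0` the function reduces to a plain side window filter. A clipped box filter is included for comparison.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]


def side_windows(r: int) -> Dict[str, Tuple[range, range]]:
    """Row and column offset ranges of the eight side windows of radius r."""
    full, neg, pos = range(-r, r + 1), range(-r, 1), range(0, r + 1)
    return {"L": (full, neg), "R": (full, pos), "U": (neg, full), "D": (pos, full),
            "NW": (neg, neg), "NE": (neg, pos), "SW": (pos, neg), "SE": (pos, pos)}


def multidirectional_filter(img: Image, r: int = 1, alpha: float = 1.0) -> Image:
    """Side-window mean filter: keep the directional output closest to the centre value.

    alpha blends the selected output with the original value; this fusion rule is a
    hypothetical choice, and alpha = 1.0 gives the plain side window filter.
    """
    h, w = len(img), len(img[0])
    windows = side_windows(r)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            q = img[y][x]
            best = None
            for rows, cols in windows.values():
                # Uniform weights (w_ij = 1), so N_n is the number of in-image pixels.
                vals = [img[y + dy][x + dx] for dy in rows for dx in cols
                        if 0 <= y + dy < h and 0 <= x + dx < w]
                i_n = sum(vals) / len(vals)
                # Keep the directional output with the smallest squared L2 distance to q.
                if best is None or (q - i_n) ** 2 < (q - best) ** 2:
                    best = i_n
            out[y][x] = alpha * best + (1.0 - alpha) * q
    return out


def mean_filter(img: Image, r: int = 1) -> Image:
    """Ordinary (2r+1) x (2r+1) box filter, clipped at the borders, for comparison."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - r), min(h, y + r + 1))
                    for xx in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out
```

On a vertical step edge such as `[[0.0, 0.0, 10.0, 10.0]] * 4`, the sketch returns the input unchanged, because at every pixel one window lies entirely on the pixel's own side of the edge, whereas the 3 × 3 mean filter smears the step (10/3 next to the edge). An isolated spike of 9.0 in a zero background is reduced to 2.25, the mean of the smallest (corner) window that contains it.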

Figure 4.

Feature layer visualization using various filtering methods. Each group (a–f) shows original features, mean filtering, median filtering, and multidirectional filtering results.

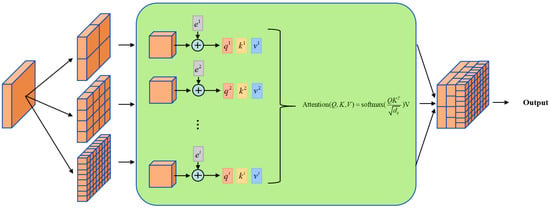

3.3.2. Global Context with Multidirectional Attention

The denoised and enhanced feature layers mainly contain local information, which is not conducive to learning global semantic information. Passing these features through the multiscale self-attention (MSSA) module integrates global and local information [54], as shown in Figure 5. In the MSSA module, the input feature layer has dimensions of 16 × 16 × 10 and is first divided into blocks. To learn global information at multiple scales, the model uses three parallel partitioning modes: four 8 × 8 × 10 blocks, sixteen 4 × 4 × 10 blocks, and sixty-four 2 × 2 × 10 blocks. For each self-attention operation, the blocks are flattened and each block is treated as a token. Because flattening discards the inherent positional information of the features, a positional encoding based on each token's original position is added to it. This encoding indicates where neighboring blocks lie and how far they are from the current block.
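The three partitioning modes can be checked with the sketch below, which splits a 16 × 16 × 10 map into flat tokens and adds positional encodings. The text states that positional encoding is added but not which kind, so the transformer-style sinusoidal encoding here is an assumption, as is the row-major token order.

```python
import math
from typing import List

Tensor3 = List[List[List[float]]]  # indexed [row][col][channel], e.g. 16 x 16 x 10


def partition_tokens(x: Tensor3, block: int) -> List[List[float]]:
    """Split an H x W x C map into non-overlapping block x block patches, one flat token each."""
    h, w = len(x), len(x[0])
    return [[v for y in range(by, by + block) for xx in range(bx, bx + block) for v in x[y][xx]]
            for by in range(0, h, block)          # blocks in row-major order
            for bx in range(0, w, block)]


def sinusoidal_position(pos: int, dim: int) -> List[float]:
    """Transformer-style sinusoidal encoding of token index pos (assumed encoding type)."""
    return [math.sin(pos / 10000 ** (i / dim)) if i % 2 == 0
            else math.cos(pos / 10000 ** ((i - 1) / dim)) for i in range(dim)]


def add_positions(tokens: List[List[float]]) -> List[List[float]]:
    """Add each token's positional encoding element-wise."""
    return [[t + p for t, p in zip(tok, sinusoidal_position(i, len(tok)))]
            for i, tok in enumerate(tokens)]


feature = [[[0.0] * 10 for _ in range(16)] for _ in range(16)]
for block in (8, 4, 2):
    tokens = add_positions(partition_tokens(feature, block))
    print(f"{block}x{block} blocks: {len(tokens)} tokens of length {len(tokens[0])}")
```

It prints 4 tokens of length 640, 16 tokens of length 160, and 64 tokens of length 40, matching the block counts listed above: finer partitions trade token length for token count.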

Figure 5.

MSSA structure diagram. The MSSA module integrates global and local information.

Next, the tokens are fed into the self-attention [55] module for similarity computation and feature learning. Features at every location interact with features at all other locations, so the module learns position-dependent relationships among them, which addresses the poor use of long-range information in purely local models. In single-scale self-attention, features may become discontinuous at block boundaries because of the partitioning; the multiscale design compensates for this by integrating boundary information across scales. The outputs of the self-attention operations are concatenated and fused, yielding multiscale, denoised, and enhanced feature layers that capture long-range information. This fused feature layer is the output of the MSSA module.
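The attention step and the multiscale concatenation can be sketched as follows. This is a simplified illustration: queries, keys, and values are the tokens themselves (no learned projections, a single head), positional encoding is omitted for brevity, and concatenating the three scale outputs along the channel axis is an assumed reading of "concatenated and fused", with any subsequent learned fusion layer left out.

```python
import math
from typing import List

Tensor3 = List[List[List[float]]]  # indexed [row][col][channel]


def softmax(v: List[float]) -> List[float]:
    m = max(v)  # subtract the maximum for numerical stability
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]


def self_attention(tokens: List[List[float]]) -> List[List[float]]:
    """Scaled dot-product self-attention with Q = K = V = tokens (no learned projections)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)  # each token attends to every token, near or far
        out.append([sum(wt * v[j] for wt, v in zip(weights, tokens)) for j in range(d)])
    return out


def to_tokens(x: Tensor3, b: int) -> List[List[float]]:
    """Flatten non-overlapping b x b blocks (row-major) into tokens."""
    return [[v for y in range(by, by + b) for xx in range(bx, bx + b) for v in x[y][xx]]
            for by in range(0, len(x), b) for bx in range(0, len(x[0]), b)]


def from_tokens(tokens: List[List[float]], h: int, w: int, b: int, c: int) -> Tensor3:
    """Inverse of to_tokens: scatter each token back to its block position."""
    x: Tensor3 = [[[] for _ in range(w)] for _ in range(h)]
    per_row = w // b
    for t, tok in enumerate(tokens):
        by, bx = (t // per_row) * b, (t % per_row) * b
        for k in range(b * b):
            dy, dx = divmod(k, b)
            x[by + dy][bx + dx] = tok[k * c:(k + 1) * c]
    return x


def mssa(x: Tensor3, blocks=(8, 4, 2)) -> Tensor3:
    """Attend within each partitioning scale, then concatenate the scales along channels."""
    h, w, c = len(x), len(x[0]), len(x[0][0])
    scales = [from_tokens(self_attention(to_tokens(x, b)), h, w, b, c) for b in blocks]
    # Channel-wise concatenation is an assumed fusion rule (C -> 3C channels).
    return [[[v for s in scales for v in s[y][xx]] for xx in range(w)] for y in range(h)]
```

Applied to a 16 × 16 × 10 input, `mssa` returns a 16 × 16 × 30 map. The boundaries of the 2 × 2 and 4 × 4 partitions fall inside blocks of the coarser partitions, which is how the scales can complement one another at block edges.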

4. Experimental Results and Analysis

In this section, we first describe the datasets used in the experiments. Then, we provide a detailed explanation of the evaluation metrics. Next, we conduct an extensive study of several key parameters that influence change detection performance. Finally, we compare the proposed method with several state-of-the-art methods.

4.1. Dataset and Evaluation Criteria

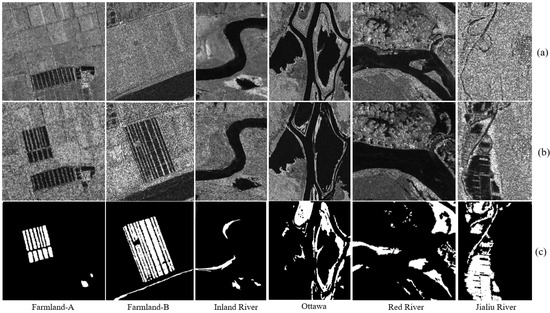

This study uses six sets of SAR images and their corresponding change detection reference maps, shown in Figure 6. The specifications of the datasets are listed in Table 1, and each dataset is described in detail below.

Figure 6.

This figure presents geographical datasets from various extracted regions and river systems. The datasets include Farmland-A, Farmland-B, and the Inland River originating from the Yellow River Estuary, as well as datasets from the Ottawa River, Red River, and Jialu River. Specifically, (a) illustrates the data corresponding to Time Phase 1, (b) displays the data for Time Phase 2, and (c) serves as a reference change map for comparison.

Table 1.

Detailed Overview of SAR Image Datasets for Change Detection.

4.1.1. Yellow River Estuary Dataset

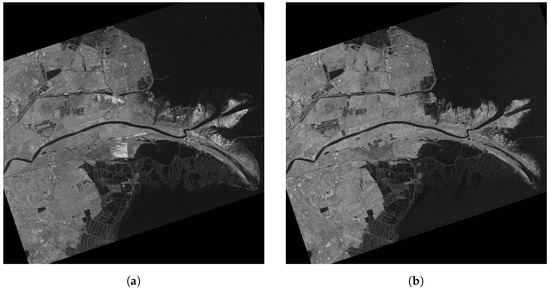

The dual-temporal SAR images of the Yellow River estuary are shown in Figure 7. This dataset is located in the Yellow River estuary area, captured by the Canadian Radarsat satellite sensor, with a resolution of 8 m. Figure 7a represents the first temporal image, taken in June 2008, and Figure 7b represents the second temporal image, taken in June 2009. These two temporal images demonstrate the changes in the Yellow River estuary area. Three typical regions were extracted from the Yellow River estuary as datasets: Farmland-A (291 × 306), Farmland-B (257 × 289), and Inland River (291 × 444).

Figure 7.

Dual-temporal SAR images of Yellow River estuary dataset. (a) Taken on 18 June 2008, (b) Taken on 19 June 2009.

4.1.2. Ottawa Dataset

The Ottawa dataset is located in Ottawa, Canada, and was captured by the Canadian Radarsat satellite sensor. This subset of the image, provided by the Canadian Department of National Defence, has a size of 290 × 350 with a resolution of 8 m. Figure 6a represents the first temporal image, taken in May 1997, and Figure 6b represents the second temporal image, taken in August 1997. These images illustrate the areas affected by flooding, with the white regions in Figure 6c indicating the change areas.

4.1.3. Red River Dataset

The Red River dataset is located in the Red River area of Vietnam and was captured by the European Space Agency’s ERS-2 satellite sensor. This subset has a size of 512 × 512. Figure 6a represents the first temporal image, taken on 24 August 1996, and Figure 6b represents the second temporal image, taken on 14 August 1999. These images illustrate the areas affected by flooding, with the white regions in Figure 6c indicating the change areas.

4.1.4. Jialu River Dataset

The Jialu River dataset is located in the Jialu River area of Zhengzhou, Henan Province, China, and was captured by the Chinese GF-3 satellite sensor. This subset has a size of 300 × 400. Figure 6a represents the first temporal image, taken on 15 July 2021, and Figure 6b represents the second temporal image, taken on 28 July 2021. These images illustrate the areas affected by flooding, with the white regions in Figure 6c indicating the change areas.

4.1.5. Evaluation Criteria for CD

For the predicted results of the remote sensing SAR change detection model, effective metrics are needed to measure the performance and classification accuracy of the change detection methods. The evaluation criteria include the following. True positives (TP) refer to the number of correctly detected positive samples, which are pixels that have changed and are correctly classified as changed. False positives (FP) are the number of incorrectly detected positive samples, where pixels that have not changed are incorrectly classified as changed. True negatives (TN) involve the correct detection of negative samples, specifically pixels that have not changed and are correctly classified as unchanged. False negatives (FN) denote the number of incorrectly detected negative samples, which are pixels that have changed but are incorrectly classified as unchanged.

- Overall Error (OE): the total number of incorrect detections, calculated as: $OE = FP + FN$

- Overall Classification Accuracy (PCC): the proportion of correctly classified samples, calculated as: $PCC = \dfrac{TP + TN}{N}$

- Kappa Coefficient: a statistical measure that compares the observed accuracy with the accuracy expected by chance, calculated as: $\kappa = \dfrac{PCC - PRE}{1 - PRE}$, where $PRE = \dfrac{(TP + FP)\,N_c + (FN + TN)\,N_u}{N^2}$ and $N = TP + TN + FP + FN$.

Here N is the total number of image pixels, $N_c = TP + FN$ is the number of pixels that have truly changed, and $N_u = FP + TN$ is the number of pixels that have not changed. The Kappa value ranges from −1 to 1, depending on the values of TP, TN, FN, and FP. Kappa therefore reflects the quality of the change detection result, with higher values indicating better detection performance.
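The three metrics can be computed directly from the confusion-matrix counts, as in the sketch below. The counts in the example are made up for illustration and are not results from this paper.

```python
from typing import Dict


def cd_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Overall error, overall classification accuracy (PCC), and Kappa from pixel counts."""
    n = tp + fp + tn + fn
    oe = fp + fn                                        # all wrongly classified pixels
    pcc = (tp + tn) / n
    n_c, n_u = tp + fn, fp + tn                         # truly changed / unchanged pixels
    pre = ((tp + fp) * n_c + (fn + tn) * n_u) / (n * n)  # agreement expected by chance
    kappa = (pcc - pre) / (1 - pre)
    return {"OE": oe, "PCC": pcc, "Kappa": kappa}


print(cd_metrics(tp=40, fp=10, tn=45, fn=5))
```

For these counts, $N_c = 45$, $N_u = 55$, and PRE = 0.5, so κ = (0.85 − 0.5)/(1 − 0.5) = 0.7: a PCC of 85% corresponds to 70% of the possible improvement over chance agreement.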

4.2. Parameters Analysis of the Proposed MDAF-Net

Experiments were run on an Ubuntu 18.04 server with an Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20 GHz and an NVIDIA GeForce RTX 2080 Ti GPU, using Python 3.8.12 and the PyTorch 1.8 deep learning framework. The model is trained with the cross-entropy loss for classification and the Adaptive Moment Estimation (Adam) optimizer, which adapts the step size of each parameter using running averages of past gradients and squared gradients. This typically speeds up convergence and damps oscillation near the optimum.
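The Adam update itself is short. The sketch below applies it to a single scalar parameter, following the standard formulation with bias-corrected first- and second-moment estimates; the learning rate of 0.1 and the toy objective $f(x) = x^2$ are illustrative choices, not the training settings used for MDAF-Net.

```python
import math
from typing import Callable, List


def adam_minimize(grad: Callable[[float], float], x0: float, lr: float = 0.1,
                  beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
                  steps: int = 100) -> List[float]:
    """Run Adam on a scalar parameter and return the trajectory of x."""
    x, m, v, traj = x0, 0.0, 0.0, [x0]
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias corrections for the zero initialisation
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
        traj.append(x)
    return traj


traj = adam_minimize(lambda x: 2 * x, x0=5.0)  # gradient of f(x) = x^2
```

On the first step the normalized update $\hat{m}/\sqrt{\hat{v}}$ is essentially 1, so x moves from 5.0 to about 4.9, one learning rate; this scale-free step size makes Adam relatively insensitive to the raw gradient magnitude.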

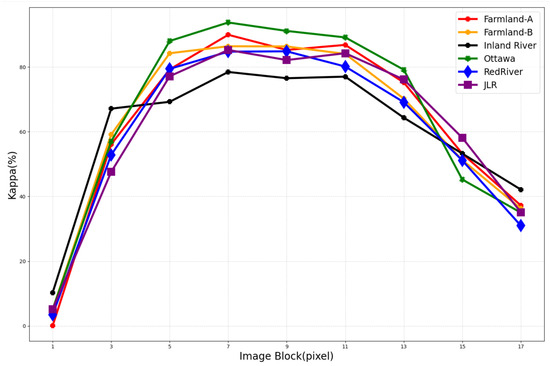

Key Parameter Analysis and Experimental Setup

In this model, the performance of both the MDAF and MSSA modules depends on a key parameter, the input image patch size, which significantly affects the overall performance of the model. We analyze patch sizes of 1, 3, 5, 7, 9, 11, 13, 15, and 17 and report the Kappa coefficient on the Farmland-A, Farmland-B, Inland River, Ottawa, Red River, and JLR datasets in Figure 8.

Figure 8.

Parameter variation indicators for different image patch sizes.

When the patch size is too small, the network cannot learn from neighboring regions and must rely on information learned from other areas, which disrupts the relative positional information between patches. Because the change state of a pixel is closely related to that of its neighbors, this leads to significant prediction errors. As the patch size increases, the Kappa coefficient improves gradually as more local semantic information becomes available. Beyond a patch size of 7, however, Kappa declines slowly: the greater distance between edge and center pixels introduces more interference and complexity, reducing prediction performance. The experiment shows that a patch size of 7 is the most appropriate.
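Per-pixel patch extraction can be sketched as follows, with the selected size of 7 as the default. Zero padding at the image border is an assumption; the text does not state how border pixels are handled.

```python
from typing import List

Image = List[List[float]]


def center_patch(img: Image, y: int, x: int, size: int = 7, pad: float = 0.0) -> Image:
    """size x size neighbourhood centred on (y, x); positions outside the image get pad."""
    r = size // 2
    h, w = len(img), len(img[0])
    return [[img[yy][xx] if 0 <= yy < h and 0 <= xx < w else pad
             for xx in range(x - r, x + r + 1)]
            for yy in range(y - r, y + r + 1)]


def pixel_patches(img: Image, size: int = 7) -> List[Image]:
    """One patch per pixel in row-major order, so each pixel is classified with its context."""
    return [center_patch(img, y, x, size) for y in range(len(img)) for x in range(len(img[0]))]
```

Each pixel is thus classified from a 7 × 7 neighborhood (49 values), so its prediction can draw on the states of the surrounding pixels, as the analysis above requires.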

MDAF-Net uses the cross-entropy loss for classification, which suits the binary classification setting of change detection. The loss is computed as

$$\mathcal{L}(x, c) = -\log \frac{\exp(x_c)}{\sum_{j} \exp(x_j)} = -x_c + \log \sum_{j} \exp(x_j)$$

where x is the output vector of the last network layer and $c \in \{0, 1\}$ is the target label. In addition, MDAF-Net is optimized with the Adaptive Moment Estimation (Adam) optimizer, which is known for handling sparse gradients efficiently and converging quickly; it adjusts the learning rate based on the history of the gradients, allowing more precise parameter updates. ReLU activation is applied at several stages: after batch normalization of each convolution output during feature extraction, to help the network learn non-linear features, and, before classification, separately to the features derived from the MF and CNN branches to ensure effective non-linearity and integration. Finally, a fully connected layer followed by a Sigmoid activation produces the classification decision, giving a probabilistic output suited to binary outcomes.
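A numerically stable version of this cross-entropy loss, written with the log-sum-exp trick, is sketched below. `logits` plays the role of the last-layer outputs x and `target` the class label; the function name is illustrative.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target], computed stably with the log-sum-exp trick."""
    m = max(logits)  # shift by the maximum so exp() cannot overflow
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]
```

With equal logits the loss is log 2 ≈ 0.693 for either label, and subtracting the maximum logit keeps the computation finite even for large values such as `[1000.0, 0.0]`.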

4.3. Comparative Algorithm Explanation

To verify the effectiveness of the proposed MDAF-Net, we compared it with six closely related methods: GKI [56], CNN [57], PCAKM [58], DCNet [59], BIT [40], and MutSimNet [60]. Each method is briefly introduced below:

1. GKI: applies generalized minimum-error thresholding for unsupervised change detection in SAR images, adapting to non-Gaussian data distributions.

2. CNN: applies convolutional layers to learn feature representations from the input images and detects changes between multitemporal images.

3. PCAKM: uses principal component analysis (PCA) to reduce dimensionality and then k-means clustering to partition the data into changed and unchanged classes based on the most significant features.

4. DCNet: detects changes in SAR images with a channel weighting-based deep cascade network, improving sensitivity and accuracy.

5. BIT: uses a bitemporal image transformer that represents the images as compact sets of semantic tokens and models their context in the spatial–temporal domain with a transformer encoder.

6. MutSimNet: introduces a mutually reinforcing similarity network that applies similarity learning and a self-attention mechanism within a feature pyramid network. By integrating multilayer features and focusing on learning edge contours, it aims to minimize false alarms and reduce misjudgments along change boundaries.
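Of the baselines above, PCAKM is the one whose whole pipeline fits in a short example. The sketch below follows the general recipe of PCA features plus two-class k-means: an absolute difference image, a PCA basis learned from non-overlapping h × h blocks, projection of each pixel's h × h neighborhood onto that basis, and k-means with two clusters. It is a simplified illustration, not a reference implementation of [58] and not the configuration used for the comparisons in this section. The block size, the fixed number of components, the edge padding, and the seeding of k-means at the least and most changed pixels are all our assumptions, and `pcakm` is a hypothetical helper name.

```python
import numpy as np


def pcakm(img_t1, img_t2, h=3, n_components=3, n_iter=50):
    """Binary change map via PCA features + 2-means, loosely in the style of PCAKM."""
    if h % 2 == 0 or n_components > h * h:
        raise ValueError("h must be odd and n_components <= h * h")
    d = np.abs(img_t2.astype(float) - img_t1.astype(float))  # difference image
    rows, cols = d.shape
    # 1) Non-overlapping h x h blocks of the difference image train the PCA basis.
    blocks = np.array([d[i:i + h, j:j + h].ravel()
                       for i in range(0, rows - h + 1, h)
                       for j in range(0, cols - h + 1, h)])
    mean = blocks.mean(axis=0)
    _, eigvecs = np.linalg.eigh(np.cov(blocks, rowvar=False))  # ascending eigenvalues
    basis = eigvecs[:, ::-1][:, :n_components]  # leading principal directions
    # 2) Each pixel's h x h neighbourhood is projected onto that basis.
    r = h // 2
    padded = np.pad(d, r, mode="edge")
    neigh = np.array([padded[i:i + h, j:j + h].ravel()
                      for i in range(rows) for j in range(cols)])
    feats = (neigh - mean) @ basis
    # 3) Two-cluster k-means, seeded with the least and most changed pixels.
    flat = d.ravel()
    centers = feats[[flat.argmin(), flat.argmax()]].copy()
    for _ in range(n_iter):
        dist = ((feats[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        new_centers = np.array([feats[labels == k].mean(axis=0) if np.any(labels == k)
                                else centers[k] for k in range(2)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # 4) The cluster with the larger mean difference value is labelled "changed".
    cluster_means = [flat[labels == k].mean() if np.any(labels == k) else -np.inf
                     for k in range(2)]
    return (labels == int(np.argmax(cluster_means))).reshape(rows, cols)


# Usage on a synthetic pair: a bright 6 x 6 square appears at the second date.
rng = np.random.default_rng(1)
t1 = rng.normal(0.0, 0.1, size=(20, 20))
t2 = t1 + rng.normal(0.0, 0.1, size=(20, 20))
t2[7:13, 7:13] += 5.0
change_map = pcakm(t1, t2)
print(int(change_map.sum()))
```

Because each feature vector summarizes a whole neighborhood, isolated speckle-like outliers are partly averaged out, but so are thin structures, which is consistent with the weaker edge behavior reported for PCAKM in the comparisons below.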

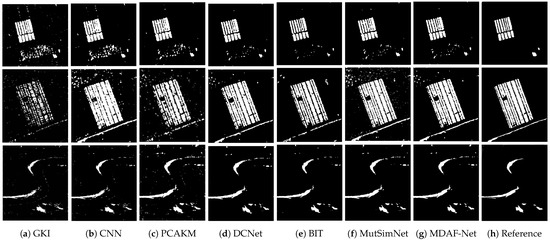

By comparing MDAF-Net with these methods, we aim to demonstrate the advantages of our approach in terms of change detection accuracy. The first experiments were conducted on three typical regions of the Yellow River estuary, namely Farmland-A, Farmland-B, and Inland River. The detailed comparison of prediction results is presented in Table 2, and the visualized prediction results are shown in Figure 9a–f.

Table 2.

Comparison of change detection (CD) methods on the Yellow River estuary dataset.

Figure 9.

Prediction results of MDAF-Net and comparison methods on Farmland-A, Farmland-B and Inland River datasets.

4.3.1. Results on the Yellow River Estuary Dataset

As Figure 9 shows, speckle noise significantly affects the models’ predictions, with GKI and the CNN affected most. Their image-wide misjudgments caused by noise indicate weak resistance to interference. PCAKM suppresses some noise but captures local edge information inadequately, as evidenced by the apparent “sticking” lines, indicating a weaker ability to learn edge textures. DCNet suppresses noise more strongly but learns less global information, leading to incorrect predictions in localized block regions. BIT shows a good global understanding of the data and produces less noise, yet its result on the second image shows noticeable “missing corners”. MutSimNet has no significant omissions but handles noise poorly, leaving prominent noise artifacts in the first and second images. Compared with these methods, MDAF-Net suppresses noise more completely. Although its false positives (FP) and false negatives (FN) are not individually the lowest, their sum, the overall error (OE), is the lowest, and it preserves edge information more completely. Owing to its global information learning, the model effectively distinguishes erroneous block regions in the image, producing smooth textures and intact shapes. These results indicate that MDAF-Net captures topographical changes well, whether they are variations in agricultural grid patterns or changes in river width. It can therefore reveal not only conventional geomorphological changes, such as alterations in land use and vegetation cover, but also subtler shifts in topographical features, such as the movement of riverbanks and adjustments of agricultural boundaries.
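The discussion above relies on FP, FN, their sum OE, and the Kappa coefficient. The sketch below computes these pixel-level metrics from a predicted binary change map and a reference map, using the definitions that are common in the SAR change detection literature (percentage correct classification PCC, chance agreement PRE, and Kappa = (PCC − PRE) / (1 − PRE)). We assume, but this section does not state, that Tables 2 to 5 use exactly these definitions; `cd_metrics` is a hypothetical helper name.

```python
import numpy as np


def cd_metrics(pred, ref):
    """Pixel-level change detection metrics for binary maps (1 = changed)."""
    pred = np.asarray(pred, dtype=bool).ravel()
    ref = np.asarray(ref, dtype=bool).ravel()
    tp = int(np.sum(pred & ref))
    tn = int(np.sum(~pred & ~ref))
    fp = int(np.sum(pred & ~ref))  # unchanged pixels flagged as changed
    fn = int(np.sum(~pred & ref))  # changed pixels that were missed
    n = tp + tn + fp + fn
    oe = fp + fn  # overall error
    pcc = (tp + tn) / n  # percentage correct classification
    # Expected agreement by chance, from the marginal class frequencies.
    pre = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    # If both maps contain a single identical class, chance agreement is 1;
    # we report perfect agreement in that degenerate case.
    kappa = (pcc - pre) / (1 - pre) if pre < 1 else 1.0
    return {"FP": fp, "FN": fn, "OE": oe, "PCC": pcc, "Kappa": kappa}


# Usage on a toy 10-pixel example.
print(cd_metrics([1, 1, 0, 1, 0, 0, 0, 0, 0, 0],
                 [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]))
# FP = 1, FN = 1, OE = 2, PCC = 0.8, Kappa = 11/21 (about 0.524)
```

Kappa discounts the agreement expected by chance, which matters here because unchanged pixels usually dominate SAR scenes: a map that labels almost everything "unchanged" can reach a high PCC while scoring a low Kappa.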

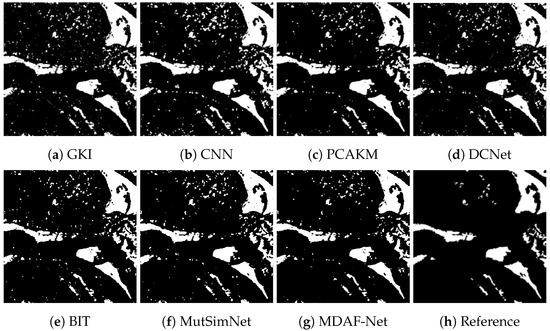

4.3.2. Results on the Ottawa Dataset

We also conducted change detection experiments on the Ottawa dataset, comparing the proposed MDAF-Net with the methods above. The detailed comparative analysis of the prediction results is presented in Table 3, and the predictions are visualized in Figure 10a–f. The predicted change maps in Figure 10 show that distinguishing changes along the river edges is particularly challenging. GKI produces significant noise around the river edges and less smooth line connections, indicating weak noise resistance. Both the CNN and PCAKM perform poorly in areas with complex changes, such as river bifurcations, with many misclassifications in thin, broken regions. DCNet struggles to predict the shapes of void areas; it is disturbed by local features and is less robust, which leads to noticeable blurring. BIT suppresses noise effectively but does not accurately capture the width of terrain features, which can impede precise depiction of the landscape. MutSimNet occasionally overlooks subtle changes, possibly because it captures the broader context inadequately. These observations highlight the continuing difficulty of resolving fine details and complex spatial structures, particularly in dynamic environmental settings. In contrast, MDAF-Net produces more accurate predictions while keeping texture and edge information complete. As shown in Table 3, MDAF-Net improves performance by at least 0.18% compared with the other methods. These results indicate that MDAF-Net performs well on flood-related data, accurately capturing and analyzing surface changes.

Table 3.

Comparative analysis of CD algorithms on Ottawa dataset.

Figure 10.

Prediction results of MDAF-Net and comparison methods on Ottawa dataset.

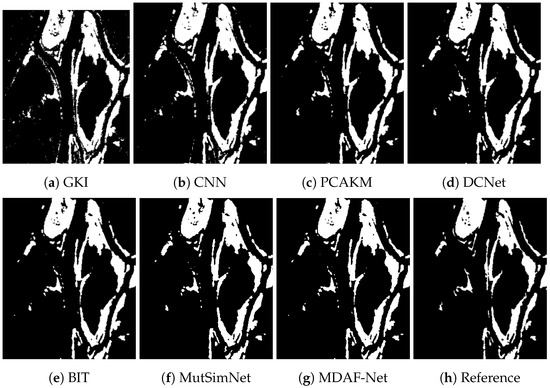

4.3.3. Results on the Red River Dataset

We conducted change detection experiments on the Red River dataset, comparing the aforementioned algorithms with the proposed MDAF-Net. The predicted results are shown in Figure 11a–f, and the comparison of specific prediction metrics is presented in Table 4. The change maps in Figure 11 contain many misclassified spots in the upper part of the image, indicating that this region is complex. Both GKI and the CNN display numerous noise blocks, largely caused by anomalous noise in the original input features. PCAKM and DCNet show slightly less noise but struggle to identify the change characteristics of the riverbank sandbars. BIT and MutSimNet both handle noise poorly. In contrast, MDAF-Net learns features in dispersed regions well, accurately excluding false detection areas and achieving superior detection performance. As reflected in Table 4, the Kappa coefficient of MDAF-Net exceeds those of the other methods by at least 2.17%.

Figure 11.

Prediction results of MDAF-Net and comparison methods on Red River Dataset.

Table 4.

Comparison of CD methods on Red River dataset.

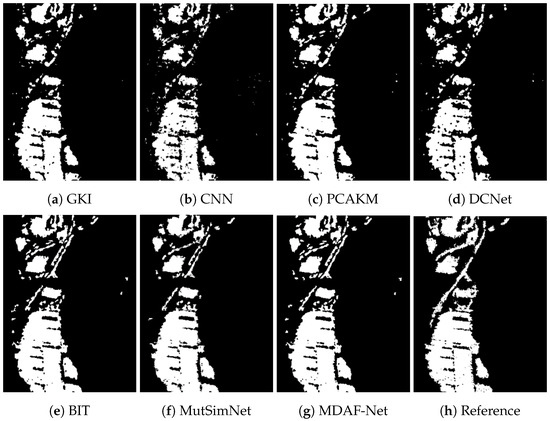

4.3.4. Results on the Jialu River Dataset

The change detection experiment on the Jialu River dataset likewise compares the other algorithms with the proposed MDAF-Net. The prediction results are shown in Figure 12a–f, and a detailed comparative analysis of the prediction metrics is presented in Table 5. Table 5 and Figure 12 show that the CNN method leaves numerous voids in the “flood” area, with dense scattered points in the central and right parts of the image; its flood-edge predictions are not smooth, resulting in rough boundaries. GKI and PCAKM are less affected by speckle noise and denoise strongly; however, they also lose some information, for example by smoothing out local edge protrusions, which increases the number of missed detections. DCNet detects changes well overall, but its predicted change area in the upper part of the image is abruptly interrupted, indicating instability in complex areas. BIT performs well globally but shows considerable noise in the details. MutSimNet, by contrast, has fewer noise issues and produces more continuous connecting lines, though it performs only moderately in densely connected areas. The proposed MDAF-Net removes noise effectively and preserves edge features more completely. Its Kappa coefficient is 2.66% higher than those of the other methods, confirming its strong competitiveness.

Figure 12.

Prediction results of MDAF-Net and comparison methods on Jialu River dataset.

Table 5.

Comparison of CD methods on the Jialu River (JLR) dataset.

5. Conclusions

This paper addresses three weaknesses of previous SAR image change detection methods: weak denoising, severe damage to edge information, and insufficient use of global semantic information. We propose a SAR image change detection method based on multidirectional attention feature learning and fusion. From the perspective of feature learning and fusion, we construct a network that integrates multidirectional windows with multiscale attention. This structure learns local regional features, preserves edges, and reduces noise while simultaneously capturing global information, so that it effectively models the relationships between long-range and local features. The proposed method was tested on six datasets and compared with six competing methods. Both the quantitative metrics and the visual results demonstrate the accuracy and reliability of the proposed model.

Author Contributions

Conceptualization, L.L. and Q.L.; methodology, L.L.; software, X.L.; validation, Q.L., G.C. and F.L.; formal analysis, F.L.; investigation, G.C.; resources, L.J.; data curation, X.L.; writing—original draft preparation, L.L.; writing—review and editing, L.J. and P.C.; visualization, X.L.; supervision, L.J.; project administration, P.C.; funding acquisition, P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was generously supported by several grants, for which we express our gratitude: the Key Scientific Technological Innovation Research Project by the Ministry of Education; the National Natural Science Foundation of China Innovation Research Group Fund (Grant 61621005); the State Key Program and the Foundation for Innovative Research Groups of the National Natural Science Foundation of China (Grant 61836009); the Major Research Plan of the National Natural Science Foundation of China (Grants 91438201, 91438103, 91838303); further support by the National Natural Science Foundation of China (Grants U1701267, 62076192, 62006177, 61902298, 61573267, 61906150, 62276199); the 111 Project; the Program for Cheung Kong Scholars and Innovative Research Team in University (Grant IRT 15R53); the ST Innovation Project from the Chinese Ministry of Education; the Key Research and Development Program in Shaanxi Province of China (Grant 2019ZDLGY03-06); the National Science Basic Research Plan in Shaanxi Province of China (Grants 2019JQ-659, 2022JQ-607); the China Postdoctoral Fund (Grant 2022T150506); the Scientific Research Project of the Education Department in Shaanxi Province of China (Grant 20JY023); the Fundamental Research Funds for the Central Universities (Grants XJS201901, XJS201903, JBF201905, JB211908); and the CAAI-Huawei MindSpore Open Fund. Each funding source has significantly propelled our research forward, and we are profoundly grateful for their support.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Pacifici, F.; Longbotham, N.; Emery, W.J. The Importance of Physical Quantities for the Analysis of Multitemporal and Multiangular Optical Very High Spatial Resolution Images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6241–6256.

2. Walter, V. Object-based classification of remote sensing data for change detection. ISPRS J. Photogramm. Remote Sens. 2004, 58, 225–238.

3. Stilla, U.; Xu, Y. Change detection of urban objects using 3D point clouds: A review. ISPRS J. Photogramm. Remote Sens. 2023, 197, 228–255.

4. Zhu, Q.; Guo, X.; Li, Z.; Li, D. A review of multi-class change detection for satellite remote sensing imagery. Geo-Spat. Inf. Sci. 2024, 27, 1–15.

5. Li, L.; Ma, L.; Jiao, L.; Liu, F.; Sun, Q.; Zhao, J. Complex contourlet-CNN for polarimetric SAR image classification. Pattern Recognit. 2020, 100, 107110.

6. Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. A SAR dataset of ship detection for deep learning under complex backgrounds. Remote Sens. 2019, 11, 765.

7. Shang, L.; Zhou, Y.; Su, P.G. Super-resolution restoration of MMW image based on sparse representation method. Neurocomputing 2014, 137, 79–88.

8. Saha, S.; Bovolo, F.; Bruzzone, L. Building change detection in VHR SAR images via unsupervised deep transcoding. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1917–1929.

9. Singh, P.; Diwakar, M.; Shankar, A.; Shree, R.; Kumar, M. A Review on SAR Image and its Despeckling. Arch. Comput. Methods Eng. 2021, 28, 4633–4653.

10. Han, Y.; Feng, X.C.; Baciu, G.; Wang, W.W. Nonconvex sparse regularizer based speckle noise removal. Pattern Recognit. 2013, 46, 989–1001.

11. Jie, L.; Zheng, B.; Chen, H.W.; Flynn, M.P. A cascaded noise-shaping SAR architecture for robust order extension. IEEE J. Solid-State Circuits 2020, 55, 3236–3247.

12. Upla, K.P.; Joshi, M.V.; Gajjar, P.P. An edge preserving multiresolution fusion: Use of contourlet transform and MRF prior. IEEE Trans. Geosci. Remote Sens. 2014, 53, 3210–3220.

13. Paris, S.; Kornprobst, P.; Tumblin, J.; Durand, F. Bilateral filtering: Theory and applications. Found. Trends Comput. Graph. Vis. 2009, 4, 1–73.

14. Mishiba, K. Fast guided median filter. IEEE Trans. Image Process. 2023, 32, 737–749.

15. Ochotorena, C.N.; Yamashita, Y. Anisotropic guided filtering. IEEE Trans. Image Process. 2019, 29, 1397–1412.

16. Tian, S.; Zhong, Y.; Zheng, Z.; Ma, A.; Tan, X.; Zhang, L. Large-scale deep learning based binary and semantic change detection in ultra high resolution remote sensing imagery: From benchmark datasets to urban application. ISPRS J. Photogramm. Remote Sens. 2022, 193, 164–186.

17. Zhang, L.; Zhang, L. Artificial intelligence for remote sensing data analysis: A review of challenges and opportunities. IEEE Geosci. Remote Sens. Mag. 2022, 10, 270–294.

18. Guo, P.; Du, G.; Wei, L.; Lu, H.; Chen, S.; Gao, C.; Chen, Y.; Li, J.; Luo, D. Multiscale face recognition in cluttered backgrounds based on visual attention. Neurocomputing 2022, 469, 65–80.

19. Chua, L.O. CNN: A vision of complexity. Int. J. Bifurc. Chaos 1997, 7, 2219–2425.

20. Huang, X.; Lu, Q.; Zhang, L. A multi-index learning approach for classification of high-resolution remotely sensed images over urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 90, 36–48.

21. Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning deep CNN denoiser prior for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3929–3938.

22. Chierchia, G.; Cozzolino, D.; Poggi, G.; Verdoliva, L. SAR image despeckling through convolutional neural networks. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 5438–5441.

23. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

24. Thakur, R.K.; Maji, S.K. Agsdnet: Attention and gradient-based sar denoising network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4506805.

25. Reich, S.; Wörgötter, F.; Dellen, B. A Real-Time Edge-Preserving Denoising Filter. In Proceedings of the VISIGRAPP (4: VISAPP), Madeira, Portugal, 27–29 January 2018; pp. 85–94.

26. Yin, H.; Gong, Y.; Qiu, G. Side window filtering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8758–8766.

27. Liu, S.; Lei, Y.; Zhang, L.; Li, B.; Hu, W.; Zhang, Y.D. MRDDANet: A Multiscale Residual Dense Dual Attention Network for SAR Image Denoising. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

28. Dong, H.; Jiao, L.; Ma, W.; Liu, F.; Liu, X.; Li, L.; Yang, S. Deep shearlet network for change detection in sar images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5241115.

29. Priya, P.; Firdaus, M.; Ekbal, A. Computational politeness in natural language processing: A survey. ACM Comput. Surv. 2024, 56, 1–42.

30. Raza, S.; Garg, M.; Reji, D.J.; Bashir, S.R.; Ding, C. Nbias: A natural language processing framework for BIAS identification in text. Expert Syst. Appl. 2024, 237, 121542.

31. Guo, R.; Ying, X.; Qi, Y.; Qu, L. UniTR: A Unified TRansformer-based Framework for Co-object and Multi-modal Saliency Detection. IEEE Trans. Multimed. 2024, 26, 7622–7635.

32. Huo, Y.; Cheng, X.; Lin, S.; Zhang, M.; Wang, H. Memory-augmented Autoencoder with Adaptive Reconstruction and Sample Attribution Mining for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5518118.

33. Shafique, A.; Cao, G.; Khan, Z.; Asad, M.; Aslam, M. Deep learning-based change detection in remote sensing images: A review. Remote Sens. 2022, 14, 871.

34. Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change detection in synthetic aperture radar images based on deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 125–138.

35. Zhan, Y.; Fu, K.; Yan, M.; Sun, X.; Wang, H.; Qiu, X. Change detection based on deep siamese convolutional network for optical aerial images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1845–1849.

36. Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

37. Mei, L.; Ye, Z.; Xu, C.; Wang, H.; Wang, Y.; Lei, C.; Yang, W.; Li, Y. SCD-SAM: Adapting Segment Anything Model for Semantic Change Detection in Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5626713.

38. Chen, S.; Zhang, L.; Zhang, L. MSDformer: Multi-scale Deformable Transformer for Hyperspectral Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5525614.

39. Pinasthika, K.; Laksono, B.S.P.; Irsal, R.B.P.; Yudistira, N. SparseSwin: Swin Transformer with Sparse Transformer Block. Neurocomputing 2024, 580, 127433.

40. Chen, H.; Qi, Z.; Shi, Z. Remote sensing image change detection with transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607514.

41. Zhang, C.; Wang, L.; Cheng, S.; Li, Y. SwinSUNet: Pure transformer network for remote sensing image change detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5224713.

42. Noman, M.; Fiaz, M.; Cholakkal, H.; Narayan, S.; Muhammad Anwer, R.; Khan, S.; Shahbaz Khan, F. Remote Sensing Change Detection with Transformers Trained from Scratch. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4704214.

43. Zhang, H.; Chen, H.; Zhou, C.; Chen, K.; Liu, C.; Zou, Z.; Shi, Z. BiFA: Remote Sensing Image Change Detection with Bitemporal Feature Alignment. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5614317.

44. Yuan, F.; Zhang, Z.; Fang, Z. An effective CNN and Transformer complementary network for medical image segmentation. Pattern Recognit. 2023, 136, 109228.

45. Wang, X.; Guo, Z.; Feng, R. A CNN-and Transformer-Based Dual-Branch Network for Change Detection with Cross-Layer Feature Fusion and Edge Constraints. Remote Sens. 2024, 16, 2573.

46. Pantze, A.; Santoro, M.; Fransson, J.E. Change detection of boreal forest using bi-temporal ALOS PALSAR backscatter data. Remote Sens. Environ. 2014, 155, 120–128.

47. He, A.; Luo, C.; Tian, X.; Zeng, W. A twofold siamese network for real-time object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4834–4843.

48. Tolstikhin, I.O.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J.; et al. Mlp-mixer: An all-mlp architecture for vision. Adv. Neural Inf. Process. Syst. 2021, 34, 24261–24272.

49. Bruzzone, L.; Prieto, D.F. Automatic analysis of the difference image for unsupervised change detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1171–1182.

50. Gribbon, K.T.; Bailey, D.G. A novel approach to real-time bilinear interpolation. In Proceedings of the DELTA 2004. Second IEEE International Workshop on Electronic Design, Test and Applications, Perth, WA, Australia, 28–30 January 2004; pp. 126–131.

51. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

52. Tian, Y.; Fan, B.; Wu, F. L2-net: Deep learning of discriminative patch descriptor in euclidean space. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 661–669.

53. Immerkær, J. Use of blur-space for deblurring and edge-preserving noise smoothing. IEEE Trans. Image Process. 2001, 10, 837–840.

54. Yang, Y.; Xu, D.; Nie, F.; Yan, S.; Zhuang, Y. Image clustering using local discriminant models and global integration. IEEE Trans. Image Process. 2010, 19, 2761–2773.

55. Shen, T.; Zhou, T.; Long, G.; Jiang, J.; Wang, S.; Zhang, C. Reinforced self-attention network: A hybrid of hard and soft attention for sequence modeling. arXiv 2018, arXiv:1801.10296.

56. Moser, G.; Serpico, S.B. Generalized minimum-error thresholding for unsupervised change detection from SAR amplitude imagery. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2972–2982.

57. Gong, M.; Yang, H.; Zhang, P. Feature learning and change feature classification based on deep learning for ternary change detection in SAR images. ISPRS J. Photogramm. Remote Sens. 2017, 129, 212–225.

58. Celik, T. Unsupervised change detection in satellite images using principal component analysis and k-means clustering. IEEE Geosci. Remote Sens. Lett. 2009, 6, 772–776.

59. Gao, Y.; Gao, F.; Dong, J.; Wang, S. Change detection from synthetic aperture radar images based on channel weighting-based deep cascade network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4517–4529.

60. Liu, X.; Liu, Y.; Jiao, L.; Li, L.; Liu, F.; Yang, S.; Hou, B. MutSimNet: Mutually Reinforcing Similarity Learning for RS Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4403613.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).