1. Introduction

Synthetic Aperture Radar (SAR) is an active Earth observation system. Compared with optical Earth observation systems, SAR can observe the Earth all day and in all weather, which gives it important application value in fields such as military reconnaissance, resource surveys, and disaster warning [1,2,3].

SAR ship target detection is one of the important applications of SAR images. Early methods used the constant false alarm rate (CFAR) detector [4,5,6], a ship detection algorithm that models the image background clutter with a statistical distribution. However, because this scheme relies on manual parameter selection, its detection results are often unsatisfactory.
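To make the idea of modelling background clutter statistically concrete, the sketch below implements the classic cell-averaging CFAR (CA-CFAR) on a one-dimensional power profile. It assumes exponentially distributed clutter after square-law detection, for which the threshold factor α = N(P_fa^(−1/N) − 1) over N training cells holds the false alarm rate at P_fa. The methods cited above may use other clutter distributions (for example gamma or K), and the function name and window sizes are illustrative choices, not taken from those works.

```python
from typing import List


def ca_cfar(power: List[float], num_train: int = 8, num_guard: int = 2,
            pfa: float = 1e-3) -> List[bool]:
    """Cell-averaging CFAR on a 1-D power profile.

    Assumes exponentially distributed clutter (square-law detector), for which
    alpha = N * (pfa ** (-1 / N) - 1) keeps the false alarm rate at pfa,
    where N is the total number of training cells around the cell under test.
    """
    n_total = 2 * num_train
    alpha = n_total * (pfa ** (-1.0 / n_total) - 1.0)
    half = num_train + num_guard
    detections = [False] * len(power)
    # Only test cells whose full training window lies inside the profile.
    for i in range(half, len(power) - half):
        leading = power[i - half:i - num_guard]          # num_train cells before the guard band
        lagging = power[i + num_guard + 1:i + half + 1]  # num_train cells after the guard band
        noise = (sum(leading) + sum(lagging)) / n_total  # local clutter level estimate
        detections[i] = power[i] > alpha * noise         # adaptive threshold test
    return detections
```

With a single strong return in otherwise flat clutter, only that cell exceeds the adaptive threshold; on SAR images the same test is usually run with a two-dimensional sliding window.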

The emergence of neural network algorithms has led to significant breakthroughs in areas such as target detection. AlexNet [7] pioneered the use of deep convolutional neural networks (CNNs) for image recognition and won the 2012 ImageNet Large Scale Visual Recognition Challenge. Subsequent architectures, such as ResNet [8] and DenseNet [9], addressed network degradation during training through shortcut (residual and dense) connections. Additionally, CSPNet [10] reduced training costs by reducing duplicated gradient computations. Target detection algorithms can generally be classified into two categories: single-stage and two-stage. Two-stage algorithms, represented by the R-CNN family [11,12,13], first generate a large number of candidate boxes (region proposals) in an image and then classify and refine each candidate with a convolutional network. In contrast, single-stage algorithms, such as YOLO [14,15,16], SSD [17], and RetinaNet [18], predict directly from whole-image convolutional features, which makes training and inference faster and more efficient. Although two-stage models initially outperformed single-stage models in terms of generalisation ability, single-stage models gradually surpassed them and achieved better performance as the YOLO model was continuously updated and iterated.

In the evolution of the YOLO series of algorithms, several modules have been added to enhance the model’s performance. The FPN [19] network structure fuses multi-scale feature information along a top-down pathway. This is because, during feature extraction, high-level feature maps carry stronger semantic information but lose the detail of small targets, whereas low-level feature maps preserve small targets but carry weaker semantic information. The PAN [20] structure further improves performance by adding a bottom-up pathway to the FPN, enhancing the model’s robustness and detection ability.
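As a toy illustration of the two pathways, the sketch below fuses three one-dimensional, single-channel "feature maps" at successive scales: the top-down pass (FPN) upsamples coarse levels and adds them to finer ones, and the bottom-up pass (PAN) downsamples fine levels and adds them back to coarser ones. The lateral 1 × 1 convolutions and stride-2 convolutions of the real structures are replaced here by identity maps and plain subsampling, and fusion uses element-wise addition (YOLOV5’s neck concatenates instead), so this shows only the information flow, not the actual layers.

```python
from typing import List, Tuple

Feature = List[float]  # toy single-channel, 1-D "feature map"


def upsample2(x: Feature) -> Feature:
    """Nearest-neighbour upsampling by a factor of 2."""
    return [v for v in x for _ in range(2)]


def downsample2(x: Feature) -> Feature:
    """Stride-2 subsampling, standing in for a stride-2 convolution."""
    return x[::2]


def add(a: Feature, b: Feature) -> Feature:
    assert len(a) == len(b), "feature maps must have the same length"
    return [u + v for u, v in zip(a, b)]


def fpn_pan(c3: Feature, c4: Feature, c5: Feature) -> Tuple[Feature, Feature, Feature]:
    """c3 is the finest level (length 4n), c5 the coarsest (length n)."""
    # Top-down pathway (FPN): semantic information flows from coarse to fine.
    p5 = c5
    p4 = add(c4, upsample2(p5))
    p3 = add(c3, upsample2(p4))
    # Bottom-up pathway (PAN): localisation detail flows from fine to coarse.
    n3 = p3
    n4 = add(p4, downsample2(n3))
    n5 = add(p5, downsample2(n4))
    return n3, n4, n5
```

With the coarsest map set to [1, 2] and the others set to zero, its values reach the finest level through the top-down pass and are reinforced again at the coarsest level by the bottom-up pass.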

In addition to improving the network structure, target detection algorithms can also optimise performance through data augmentation [21], loss function design [22], and post-processing. Data augmentation increases the diversity of samples and improves the generalisation ability of the model by applying operations such as rotation, scaling, and translation to the training data. In terms of loss function design, Focal Loss [18] introduces a modulating factor that down-weights easy examples, effectively alleviating the imbalance between positive and negative samples in target detection and improving the ability to detect small targets. Post-processing methods, such as non-maximum suppression (NMS), eliminate overlapping detection results and improve the accuracy and efficiency of detection.
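The loss and post-processing steps mentioned above can be sketched in a few lines. The block below shows the binary form of Focal Loss, FL(p_t) = −α_t (1 − p_t)^γ log(p_t), with the commonly used defaults α = 0.25 and γ = 2, and a greedy NMS that keeps the highest-scoring box and discards any lower-scoring box whose IoU with an already kept box exceeds a threshold. Boxes are assumed to be (x1, y1, x2, y2) corner tuples; this is a plain-Python sketch, not the implementation of any particular detector.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Binary focal loss FL(p_t) = -alpha_t * (1 - p_t) ** gamma * log(p_t)."""
    eps = 1e-12
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thr: float = 0.5) -> List[int]:
    """Greedy NMS: indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any already kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_thr for k in keep):
            keep.append(i)
    return keep
```

Because the modulating factor (1 − p_t)^γ shrinks towards zero for confidently correct predictions, the many easy background samples contribute little to the total loss.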

To improve the generalisation ability of target detection models on SAR maritime ship targets, Guo et al. [21] proposed an SAR ship detection model called Masked Efficient Adaptive Network (MEA-Net), which is lightweight and highly accurate on unbalanced datasets. Tang et al. [23] designed a Pyramid Mixed Attention Module (PPAM) to mitigate the effect of background noise on ship detection, while its parallel component facilitates the processing of multiple ship sizes. In addition, Hu et al. [24] proposed attention mechanisms in the spatial and channel dimensions to adaptively assign the importance of features at different scales.

However, the research on improving generalisation ability mentioned above mainly focuses on training and testing on the same dataset, and there are few existing studies on cross-domain detection. Among recent studies, Huang et al. [25] divided the target detection model into off-the-shelf and adaptation layers to dynamically analyse the cross-domain capability of each module, and proposed a method that uses multi-source data for domain adaptation to reduce the difference in feature distribution between the source and target domains. Tang et al. [26] proposed a DETR-based cross-domain weakly supervised object detection (CDWSOD) method, which aims to adapt the detector from the source domain to the target domain through weak supervision.

The aforementioned studies have enhanced the capabilities of CNNs in SAR target detection to some extent. However, they rarely take the following aspects into account: 1. The trained networks exhibit high generalisation ability only on the dataset they were trained and tested on, and lack good cross-domain generalisation ability. 2. The learning of image features is limited to single-polarised SAR images; when the training data contain fully polarised data, the correlation between different polarisations is often ignored, so the learned feature information is limited and further breakthroughs become difficult once a certain level of generalisation is reached. 3. The coupling of the classification and localisation tasks in single-stage target detection makes the model vulnerable to interference from complex backgrounds.

This paper proposes a multipolarisation fusion cross-domain adaptive network that is adapted to complex backgrounds. The network implements end-to-end transfer learning, which enables it to adapt to different scenarios. Additionally, the network makes effective use of existing SAR image resources to fully extract the latent characteristics of the images.

The main contributions of this paper are as follows:

A method for achieving deep domain adaptation on SAR ship target detection is proposed through cross-domain adversarial learning.

A channel fusion module is proposed to combine SAR image features from four polarisations, enhancing the information and association of the features.

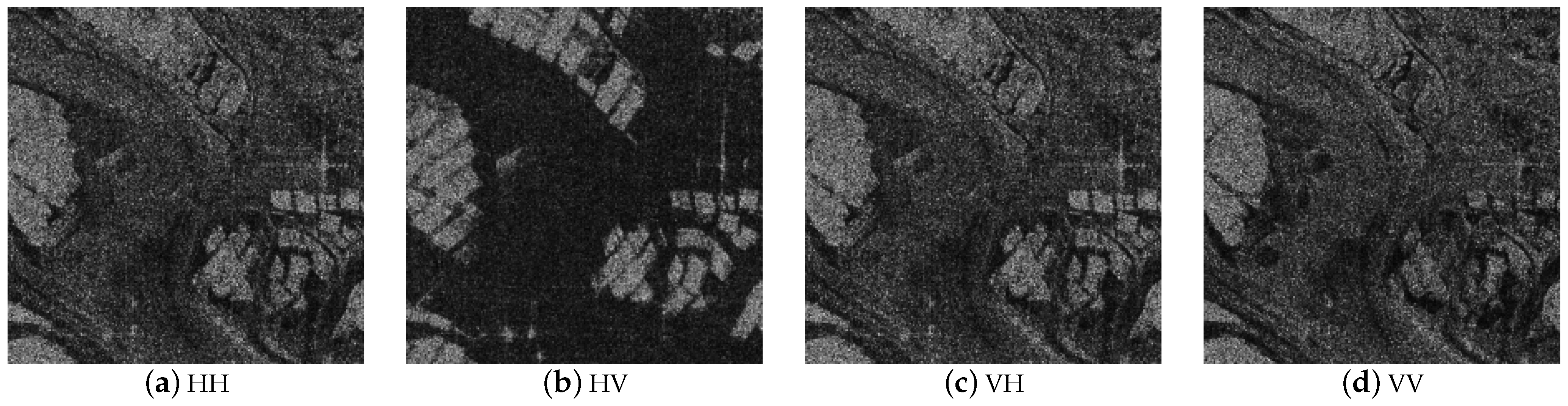

Figure 1 shows the four polarised images under a single scene.

An anti-interference head is proposed to improve the generalisation ability of the model under complex backgrounds.

The remainder of this article is structured as follows. Section 2 introduces the related work, Section 3 describes the materials and methods, Section 4 reports the experimental process and results, and Section 5 discusses the experimental results and provides future research directions.

Compared with YOLOV8s, the best-performing of the compared models, the proposed model improves precision by 4.9%, recall by 3.3%, AP by 2.4%, and F1 by 3.9%.

3. Materials and Methods

3.1. Overarching Framework

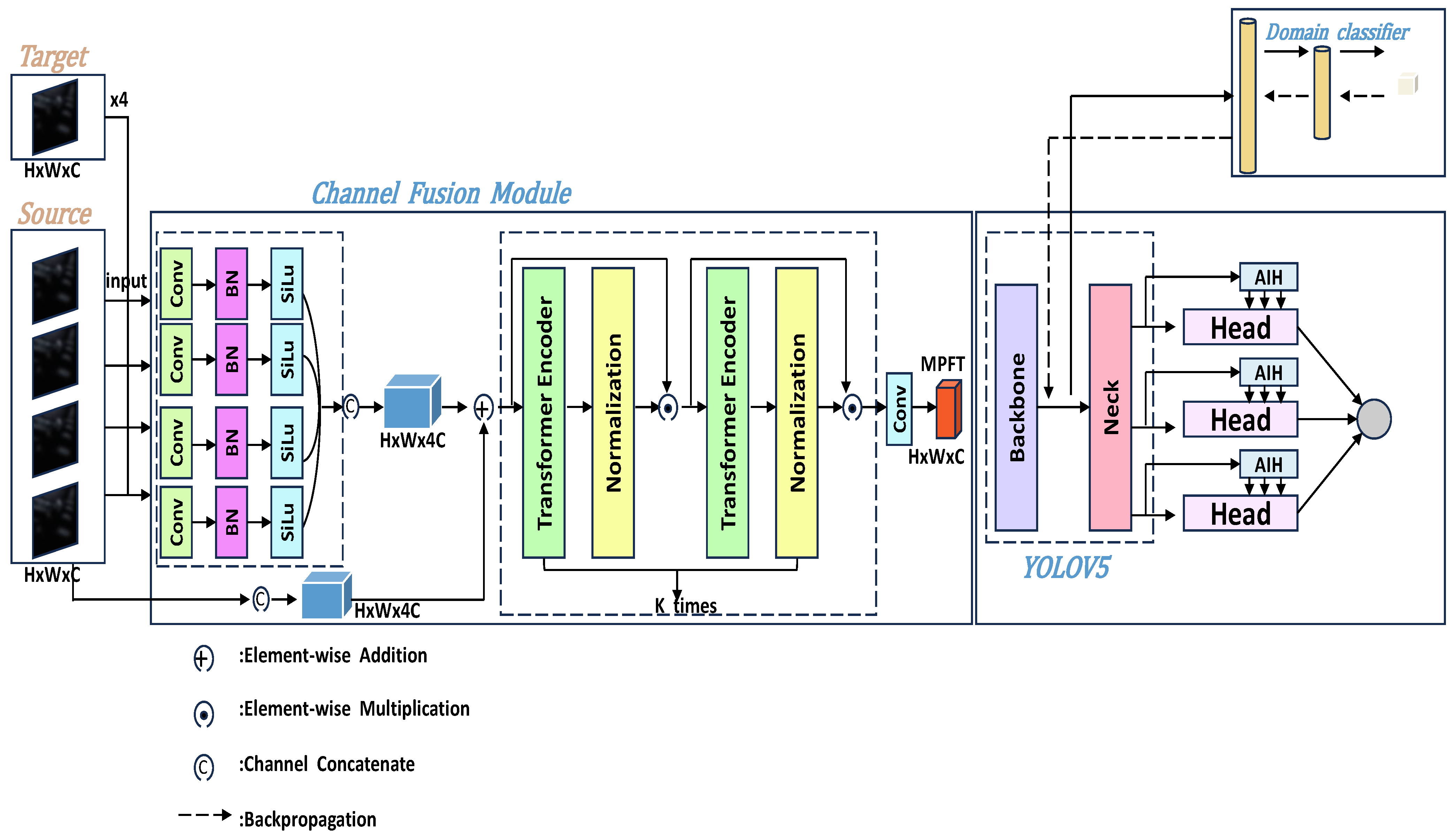

This paper proposes a complex background SAR ship target detection method based on fusion tensor and cross-domain adversarial learning, as illustrated in

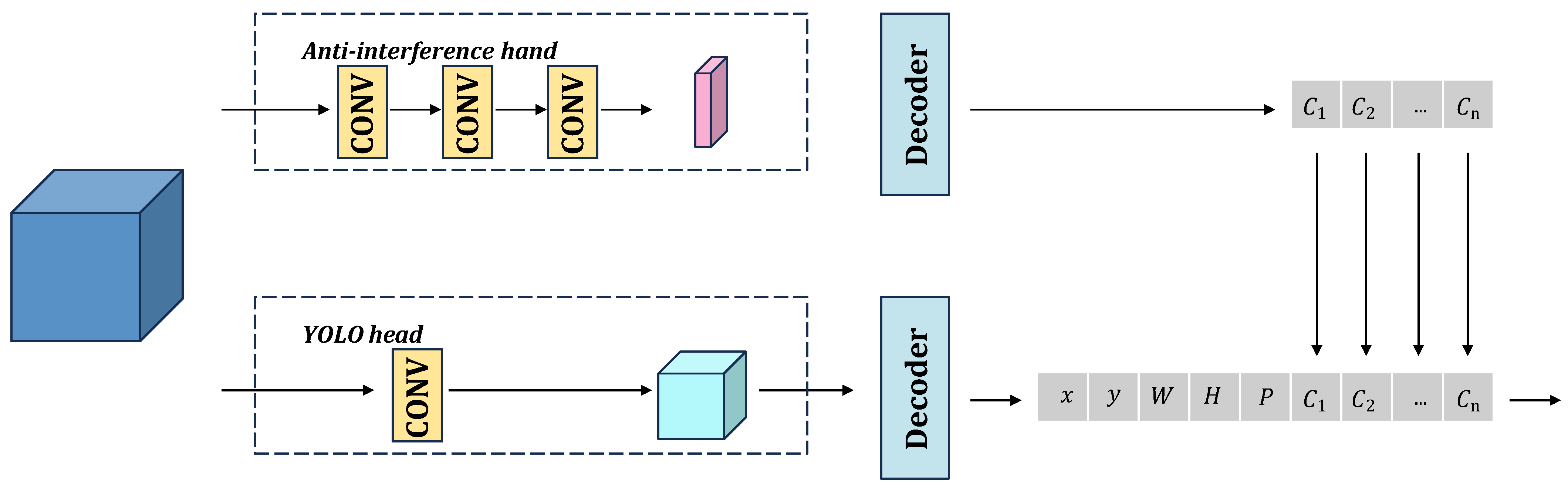

Figure 2. The model incorporates the CALM based on the YOLOV5s model, which achieves mutual adaptation between domains by inverting the gradient to bring the feature distribution distances between the source and target domains closer. Based on this approach, the proposed CFM extracts unique features from the fully polarised image and common features through convolutional neural network and Transformer, respectively. This completes the fusion and feature extraction of the fully polarised image, significantly enriching the model’s feature information. The decoupling method forms AIH that separates the classifiers from the traditional detection head. This greatly alleviates the problem of susceptibility to complex background interference caused by the coupling of the detection head and reduces the occurrence of False Positives.

All four polarised images are of size H × W × C. In the CFM, the four polarisation images of the source domain are input and their unique features are extracted separately. These features are then concatenated along the channel dimension to form a fusion tensor of size H × W × 4C, with a residual connection to the images that have not undergone feature extraction. A Transformer Encoder then extracts the common features of the four polarisations and compresses the concatenated tensor into a tensor of size H × W × C. This compressed tensor is referred to in this paper as the Multi-Polarisation Fusion Tensor (MPFT). Because the MPFT contains both the unique features of the four polarisations and their common features, it carries rich information.
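The tensor shapes in the CFM can be traced with a small sketch. It represents each polarisation as a nested H × W × C list, concatenates the four along the channel axis to obtain the H × W × 4C fusion tensor, and then compresses it back to H × W × C. The per-polarisation CBS step and the residual connection are omitted, and the compression uses a hypothetical per-pixel linear projection in place of the Transformer Encoder, so only the shapes, not the learned features, match the description.

```python
from typing import List

Tensor = List[List[List[float]]]  # indexed as t[h][w][c], i.e. H x W x C


def shape(t: Tensor) -> tuple:
    return (len(t), len(t[0]), len(t[0][0]))


def concat_channels(tensors: List[Tensor]) -> Tensor:
    """Concatenate H x W x C tensors along the channel axis -> H x W x (n * C)."""
    H, W, _ = shape(tensors[0])
    return [[[v for t in tensors for v in t[h][w]] for w in range(W)]
            for h in range(H)]


def project_channels(t: Tensor, weights: List[List[float]]) -> Tensor:
    """Per-pixel linear map from C_in to C_out channels; weights is C_out x C_in.

    Hypothetical stand-in for the Transformer Encoder that compresses the
    H x W x 4C fusion tensor to H x W x C: it reproduces the shapes only.
    """
    return [[[sum(wi * x for wi, x in zip(row, pix)) for row in weights]
             for pix in line] for line in t]


def constant(H: int, W: int, C: int, value: float) -> Tensor:
    return [[[value] * C for _ in range(W)] for _ in range(H)]


# Four H x W x C polarisation tensors (HH, HV, VH, VV); CBS extraction omitted.
H, W, C = 2, 3, 1
hh, hv, vh, vv = (constant(H, W, C, v) for v in (1.0, 2.0, 3.0, 4.0))
fused = concat_channels([hh, hv, vh, vv])           # H x W x 4C
mpft = project_channels(fused, [[0.25] * (4 * C)])  # one output row, so C_out = 1 = C here
```

Here the projection simply averages the four polarisations at each pixel, giving a 2 × 3 × 1 tensor; a learned encoder would instead mix the channels according to their common features.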

The training process combines YOLOV5s with the CALM. The backbone of YOLOV5 extracts features from the MPFTs generated from both the source and target domains. These features are used for domain classification and, for the source domain, for feature fusion in the neck of YOLOV5. The resulting losses are the domain classification loss and the target detection loss. Note that, because training on the target domain is unsupervised, the features extracted from the target domain do not enter the neck: they contribute only to the domain classification loss and not to the target detection loss.

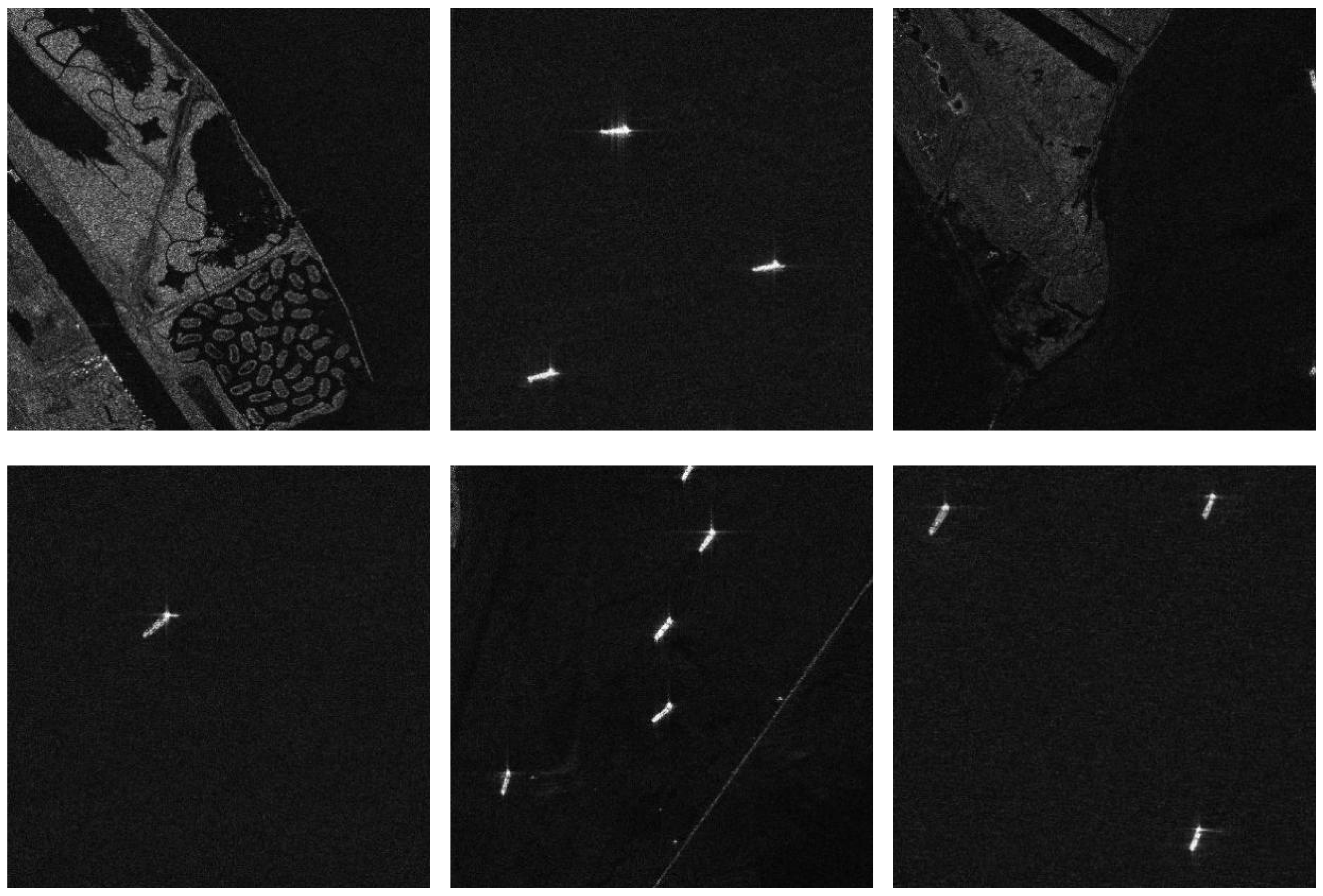

This paper uses fully polarised SAR images as the training set, as illustrated in Figure 1.

In the detection head section of YOLOV5, the traditional YOLOV5 detection head and the AIH proposed in this paper compute their losses simultaneously. The traditional detection head computes the localisation loss, confidence loss, and category probability loss, whereas the AIH computes only the category probability loss, and its result replaces that of the traditional detection head in the final output. The experiments in this paper use single-polarised images as the target domain; to match the four-polarisation input of the source domain, each target-domain image is replicated four times.
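The data flow during training can be summarised as follows: a fully polarised source sample supplies its four polarisation images directly, a single-polarised target sample is replicated four times, and only source samples reach the neck and detection heads. In the sketch below, the backbone, neck-and-head, domain classifier, and loss functions are hypothetical stubs passed in as callables, and `training_losses` is an illustrative name, not part of YOLOV5.

```python
from typing import Callable, Dict, List


def prepare_input(images: List[object]) -> List[object]:
    """Return the four polarisation inputs (HH, HV, VH, VV) expected by the CFM."""
    if len(images) == 4:   # fully polarised source sample
        return list(images)
    if len(images) == 1:   # single-polarised target sample: replicate four times
        return [images[0]] * 4
    raise ValueError("expected 1 or 4 polarisation images")


def training_losses(sample: Dict, is_source: bool, backbone: Callable,
                    neck_and_head: Callable, domain_clf: Callable,
                    det_loss: Callable, dom_loss: Callable) -> Dict[str, float]:
    """Route one sample through the model and collect the losses it contributes."""
    feats = backbone(prepare_input(sample["images"]))
    domain_label = 0 if is_source else 1  # source = 0, target = 1
    losses = {"domain": dom_loss(domain_clf(feats), domain_label)}
    if is_source:  # only labelled source samples enter the neck and detection heads
        losses["detection"] = det_loss(neck_and_head(feats), sample["targets"])
    return losses
```

Keeping the routing in one place makes it explicit that an unlabelled target sample can only ever contribute a domain classification loss.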

3.2. Cross-Domain Adversarial Learning Module

SAR images are captured in complex and variable scenes, and image resources are often expensive and scarce. Directly applying a model trained on one dataset to a scene with a different feature distribution often leads to unsatisfactory results.

Ganin et al. [33] proposed a scheme to effectively bring two different domain-distributed datasets closer together, achieving domain adaptation between real SAR datasets and simulated SAR images, and improving the cross-domain generalisation ability of the detection model.

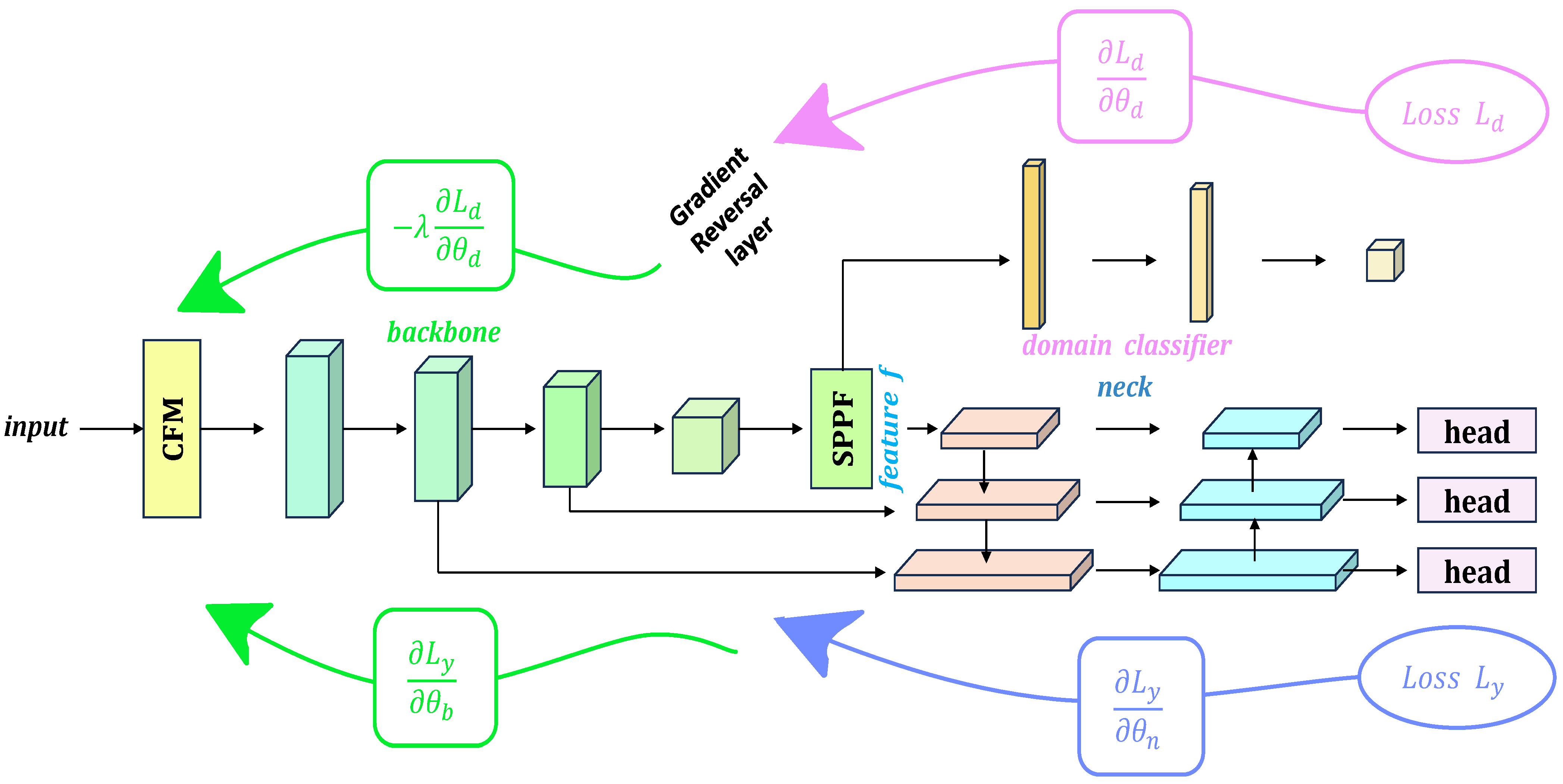

This paper proposes a cross-domain adversarial learning module, as shown in Figure 3. The module assumes datasets from two different domain distributions, where the source domain is a labelled dataset and the target domain is an unlabelled dataset, with a certain distance between the two domain distributions before the model is trained. The CALM trains a domain classifier to distinguish images from different domains by minimising the domain classification loss. It then reverses the gradient flowing back from the domain classifier to narrow the distance between the source and target domains. This achieves transfer learning with high generalisation ability.

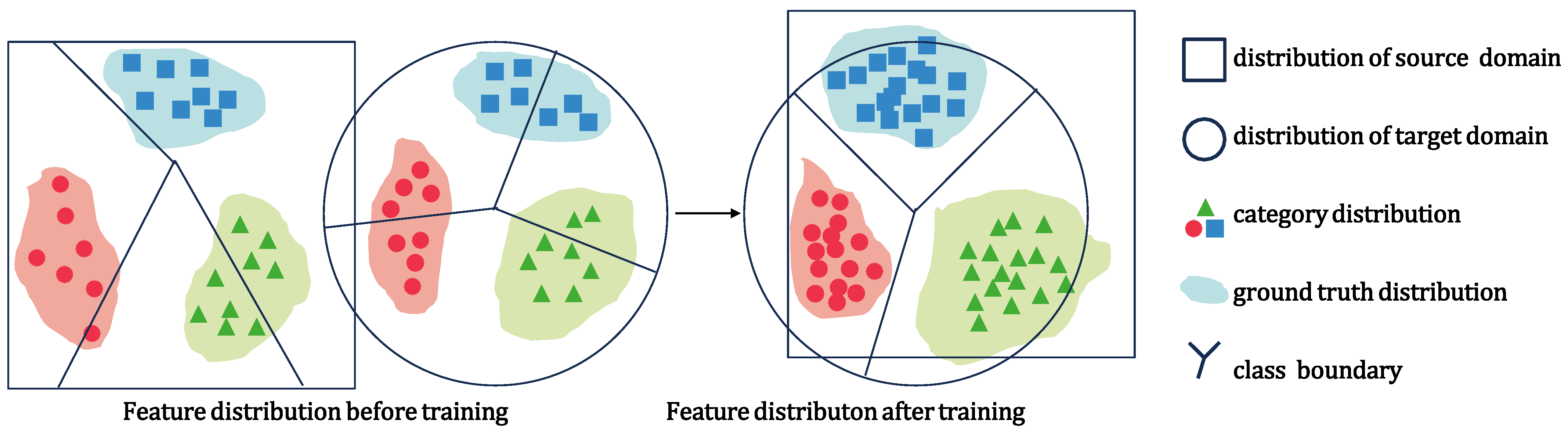

Assume that an input sample x is mapped by the CNN to an output in Y, where Y is the feature distribution space of the output. The square and the circle in Figure 4 denote the feature distribution spaces of the source and target domains, respectively, and each space contains multiple samples belonging to different categories. To allow a model trained on the source domain to predict accurately on the differently distributed target domain, the CALM needs to complete two tasks. The first task is to distinguish the different categories within the source domain, i.e., the basic YOLO target detection task: minimising the target detection loss moves the class boundary from a random state before training to an accurate classification state after training. The second task is to reduce the distance between the source and target feature distributions so that the two domains have similar feature distributions. In this way, even though the target domain has no labels, it can be predicted directly and effectively. The specific process is described below.

Each input sample x has a domain label indicating the domain to which it belongs: 0 for the source domain and 1 for the target domain. In the experiments of this paper, the source domain is the labelled fully polarised dataset, which enters the model after feature fusion. The target domain is the single-polarised dataset HRSID, which is trained without labels and also enters the model through the polarisation fusion module. As shown in Figure 3, the mapping of the whole model is divided into three parts. The first part is the feature extraction module (the backbone), which runs from the input of the model to the end of the SPPF module of YOLOV5 and outputs the feature f; this feature extractor is parameterised by the feature extraction parameters. The second part is YOLOV5’s feature fusion module (the neck), which outputs the target detection results and is parameterised by the feature fusion parameters. The third part is the domain classifier, which learns the domain to which the sample x belongs and is parameterised by the domain classifier parameters. During training, target-domain samples pass only through the backbone and the domain classifier, whereas source-domain samples pass through all three modules and contribute to all the corresponding losses.

Training requires the features to be discriminative, i.e., able to perform target detection correctly; on the source domain, this means minimising the YOLO target detection loss. In addition, f needs to be domain-invariant, i.e., the feature distributions of the source and target domains should be as close to each other as possible. To achieve this, the domain classifier learns the domain to which each sample belongs, while the feature extractor is trained to bring the two domains closer: the domain classification loss is minimised with respect to the domain classifier parameters and maximised with respect to the feature extraction parameters. To maximise the loss with respect to the feature extraction parameters, a gradient reversal layer (GRL) multiplies the gradient by a negative number when the gradient of the domain classifier is backpropagated to the backbone. Because the error passed through this layer changes sign, the networks before and after the GRL have opposite training objectives, which produces the adversarial effect.
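The gradient reversal idea can be checked on a toy model. In a framework such as PyTorch, a GRL is usually written as a custom autograd function; to keep the sketch dependency-free, the gradients below are derived by hand for a scalar feature extractor f = w·x and a logistic domain classifier s = sigmoid(v·f), which are illustrative stand-ins for the backbone and the domain classifier. One update step lowers the domain loss through the classifier parameter v while raising it through the feature parameter w, which is the adversarial behaviour described above.

```python
import math


class GradientReversal:
    """Identity in the forward pass; multiplies the incoming gradient by -lam backward."""

    def __init__(self, lam: float):
        self.lam = lam

    def forward(self, f: float) -> float:
        return f

    def backward(self, grad_out: float) -> float:
        return -self.lam * grad_out


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def domain_loss(w: float, v: float, x: float, d: int) -> float:
    """Binary cross-entropy of the toy domain classifier (d = 0 source, 1 target)."""
    s = sigmoid(v * w * x)
    return -(d * math.log(s) + (1 - d) * math.log(1.0 - s))


def domain_step(w: float, v: float, x: float, d: int, lam: float, lr: float = 0.1):
    """One SGD step on the domain loss; feature f = w * x, classifier s = sigmoid(v * f)."""
    grl = GradientReversal(lam)
    f = w * x                           # backbone feature
    h = grl.forward(f)                  # the GRL is the identity going forward
    s = sigmoid(v * h)                  # predicted probability of "target domain"
    dz = s - d                          # dL_d / dz for binary cross-entropy
    grad_v = dz * h                     # classifier gradient: ordinary descent
    grad_w = grl.backward(dz * v) * x   # backbone gradient: sign flipped by the GRL
    return w - lr * grad_w, v - lr * grad_v
```

Applying only the classifier update lowers the domain loss, and applying only the backbone update raises it, so the two parts of the network pull in opposite directions during a single backward pass.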

3.2.1. Loss Function

For the CALM as a whole, the following loss functions should be considered. In the source domain:

For the target domain:

where i denotes the ith training sample.

is the loss for YOLO target detection, which is calculated only for the source domain.

is the loss for domain classification, where

takes a negative value (the minus sign indicates that the gradient direction is reversed), and

is the learning rate. Thus, the overall loss of the whole model is:

where

is the overall loss of the model.

During training, the overall loss is optimised as follows:

Here, training the feature extraction and feature fusion parameters minimises the target detection loss, ensuring correct target detection results. Meanwhile, the domain classifier parameters are trained to minimise the domain classification loss, whereas the reversed gradient drives the feature extraction parameters to maximise it, thus bringing the feature distribution spaces of the source and target domains closer together.
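For reference, the saddle-point objective of domain-adversarial training in the form given by Ganin et al. can be written as below. The notation is assumed here and may differ from the authors’: n is the number of labelled source samples, N the number of all source and target samples, θ_f, θ_y, and θ_d the feature extraction, feature fusion (detection), and domain classifier parameters, L_y^i the detection loss, and L_d^i the domain classification loss of sample i.

```latex
\begin{aligned}
E(\theta_f,\theta_y,\theta_d) &= \sum_{i=1}^{n} L_y^{i}(\theta_f,\theta_y) \;-\; \lambda \sum_{i=1}^{N} L_d^{i}(\theta_f,\theta_d),\\
(\hat{\theta}_f,\hat{\theta}_y) &= \arg\min_{\theta_f,\theta_y} E(\theta_f,\theta_y,\hat{\theta}_d),\\
\hat{\theta}_d &= \arg\max_{\theta_d} E(\hat{\theta}_f,\hat{\theta}_y,\theta_d).
\end{aligned}
```

Maximising E with respect to θ_d is the same as minimising the domain classification loss, while the −λ term makes the minimisation over θ_f push that loss up; the GRL realises both directions in a single backward pass.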

3.2.2. Model Optimisation

It is worth noting that the adaptation factor

is not fixed: to suppress the noisy signal from the domain classifier at an early stage of training,

is gradually increased from 0 to 1 as training proceeds, i.e.,

where

is a hyperparameter with an initial value of 10.

p is the ratio of the current training round to the total number of rounds, so it increases from 0 to 1 as training progresses. Thus,

has an initial value of 0 and gradually approaches 1 as training progresses, which suppresses the early noise from the domain classifier.
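The schedule described above matches the one used by Ganin et al., λ_p = 2 / (1 + exp(−γp)) − 1; the sketch below assumes that form, with γ = 10 and p the ratio of the current training round to the total number of rounds. The function name is illustrative.

```python
import math


def adaptation_factor(epoch: int, total_epochs: int, gamma: float = 10.0) -> float:
    """Assumed DANN-style schedule: lambda_p = 2 / (1 + exp(-gamma * p)) - 1."""
    p = epoch / total_epochs  # training progress, from 0 to 1
    return 2.0 / (1.0 + math.exp(-gamma * p)) - 1.0
```

A training loop would evaluate `adaptation_factor(epoch, total_epochs)` at the start of each round and use it as the GRL coefficient; with γ = 10 the factor rises quickly during early training and ends just below 1.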

3.3. Channel Fusion Module

Fully polarised SAR typically produces images with four polarisation channels: HH, HV, VH, and VV. These channels provide more comprehensive scattering information about the observed target, which is beneficial for detecting ship targets. Even if the target-domain images are single-polarised, when the training data contain fully polarised data, the correlation between the polarisation channels should be fully exploited to improve the feature extraction capability of the network. This paper presents the CFM, illustrated in Figure 2.

Multimodal fusion can be performed at three levels: decision, feature, and pixel. However, pixel-level fusion is not recommended due to the high computational cost. To extract both local features under unipolarisation and global features under full polarisation simultaneously, this paper employs feature-level fusion.

First, the unique features of each single polarisation are extracted using the basic Conv+BN+SiLU structure. To keep the feature tensor size unchanged after extraction, the convolution output size must be controlled by choosing the convolution stride and padding accordingly.
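The size condition can be checked with the standard convolution output-size formula, out = ⌊(n + 2p − k)/s⌋ + 1: with stride s = 1 and padding p = ⌊k/2⌋ for an odd kernel size k, the output keeps the input size. The sketch below applies this to a single-channel CBS block (convolution, inference-mode batch normalisation with given statistics, and SiLU). It is a plain-Python illustration under those assumptions; the real CBS layers are multi-channel with learned parameters.

```python
import math
from typing import List

Image = List[List[float]]


def conv_output_size(n: int, k: int, s: int, p: int) -> int:
    """Output length along one axis: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


def cbs(x: Image, kernel: Image, gamma: float = 1.0, beta: float = 0.0,
        mean: float = 0.0, var: float = 1.0, eps: float = 1e-5) -> Image:
    """Single-channel Conv (stride 1, zero padding k // 2) + BN (inference) + SiLU."""
    k = len(kernel)
    pad = k // 2  # with stride 1 and odd k, this keeps H x W unchanged
    H, W = len(x), len(x[0])
    out = []
    for i in range(conv_output_size(H, k, 1, pad)):
        row = []
        for j in range(conv_output_size(W, k, 1, pad)):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    r, c = i + u - pad, j + v - pad
                    if 0 <= r < H and 0 <= c < W:  # zero padding outside the image
                        acc += kernel[u][v] * x[r][c]
            y = gamma * (acc - mean) / math.sqrt(var + eps) + beta  # BatchNorm
            row.append(y / (1.0 + math.exp(-y)))                    # SiLU(y) = y * sigmoid(y)
        out.append(row)
    return out
```

For a 416 × 416 SFPD image, a 3 × 3 convolution with stride 1 and padding 1 keeps the 416 × 416 size, whereas stride 2 would reduce it to 208 × 208.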

where the four inputs represent the HH, HV, VH, and VV feature maps, respectively, CBS(·) represents the Conv+BN+SiLU operation, and the four outputs represent the quad-polarised feature tensors after CBS(·) extraction.

Extracting features from the four polarised images before fusion makes this a feature-level fusion, which benefits the subsequent feature extraction from the fused tensor. Compared with pixel-level fusion, which directly concatenates the input images of the four polarisations, this method effectively saves computation and separates the differences between polarisations more clearly, greatly enriching the semantic information of the fused tensor. The feature fusion process is described as:

where ⊕ stands for element-wise addition.

is the result after feature fusion.

After the feature fusion tensor is obtained, the features shared across the full polarisation are extracted with a Transformer [34,35]. Compared with a CNN, a Transformer can learn long-range dependencies in feature maps, because the computation of self-attention is independent of the distance between pixels. The fused feature tensor is passed through k Transformer blocks with residual connections. The process is described as:

where

denotes the normalisation operation.

represents Transformer operation. ⊙ stands for element-wise multiplication.

denotes the result after the ith Transformer operation.
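Why self-attention is insensitive to pixel distance can be seen in a minimal sketch. If the H × W × C tensor is flattened into H·W tokens of length C, each output token is a softmax-weighted average of all tokens, with weights determined only by feature similarity. The sketch below uses a single head with identity query, key, and value projections and no positional encoding; these are simplifying assumptions, and it omits the normalisation and residual structure of the Transformer blocks described above.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention with identity Q/K/V projections.

    Every token attends to every other token, so the weight between two pixels
    depends on their feature similarity, not on how far apart they are.
    """
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, tokens)) for c in range(d)])
    return out
```

Two tokens with identical features receive identical outputs wherever they sit in the sequence, and reversing the token order simply reverses the outputs.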

3.4. Anti-Interference Head

The interference from the complex background of SAR images often leads to False Positive detection results, a problem made worse by coastal facilities sharing similar features with target ships. To address this issue, this paper proposes the AIH, inspired by the decoupled head of YOLOX [36,37]. It aims to better separate the target from the complex and variable background and reduce the background’s influence on target detection.

In YOLOX, the authors note that the classification and localisation tasks of the model differ significantly and argue that the traditional coupled head can harm the final detection results. To address this, they split the original detection head into three separate heads that predict the target’s category, localisation, and confidence, respectively, and report that this improves generalisation in both classification and localisation. This paper argues that decoupling the classification head from the traditional coupled head improves the model’s ability to classify different categories. Decoupling also helps YOLO distinguish between target and background information, which can effectively reduce background interference. In practical SAR ship applications, the model’s detection results are not substantially affected by the confidence level, so there is no need to separate the confidence into its own head; only the classification head needs to be stripped out of the original detection head. To cope with the interference of complex backgrounds, this yields the AIH illustrated in

Figure 5 is obtained. The process is as follows:

YOLOV5 has detection heads at three scales, H × W × (256, 512, 1024), which predict small, medium, and large targets, respectively. For a single detection head, the traditional YOLO algorithm compresses the feature map with one convolutional layer into a tensor of size H × W × ((4 + 1 + C) × anchor) for the final decoding, where 4 stands for the centre-point coordinates (X, Y) and the width and height (w, h) of the detection box, 1 stands for the confidence level, C stands for the number of predicted categories, and anchor stands for the number of anchor boxes per grid cell.

In this paper, a classification head of size H × W × (C × anchor) is separated from the traditional coupled head using three convolutional layers. Decoding this tensor gives the conditional class probability of each detection box, and from it the probability that the box is background. According to the above discussion, this result distinguishes strongly between background and target. In parallel, a localisation and confidence head of size H × W × 5 is obtained with one convolutional layer to compute the box coordinates and confidence. Finally, the outputs of the two heads are combined to obtain the model’s prediction.
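The channel bookkeeping of the coupled and decoupled heads, and one possible way to merge their outputs, can be sketched as follows. The traditional head outputs (4 + 1 + C) × anchor channels per grid cell and the AIH classification head C × anchor. The text gives the localisation and confidence head as H × W × 5; the sketch assumes this means 5 channels per anchor. The merge step assumes the YOLOV5 convention of multiplying the sigmoid confidence by the sigmoid class probability; the paper does not state exactly how the two heads are combined, so treat `combine` as hypothetical.

```python
import math
from typing import Dict, List, Tuple


def head_channels(num_classes: int, num_anchors: int) -> Dict[str, int]:
    """Output channels per grid cell of the coupled head and the two AIH branches."""
    return {
        "coupled": (4 + 1 + num_classes) * num_anchors,  # traditional YOLOV5 head
        "classification": num_classes * num_anchors,      # AIH classification head
        "loc_conf": 5 * num_anchors,                       # assumed: 4 box + 1 confidence per anchor
    }


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def combine(cls_logits: List[float], conf_logit: float) -> Tuple[int, float]:
    """Hypothetical merge for one anchor: score = confidence * class probability."""
    cls_prob = [sigmoid(z) for z in cls_logits]
    best = max(range(len(cls_prob)), key=lambda i: cls_prob[i])
    return best, sigmoid(conf_logit) * cls_prob[best]
```

For a single ship category and three anchors, the two branches together carry the same 18 channels as the coupled head; only the way they are computed differs.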

During training, a large amount of SAR image data is used, and through repeated iterative training the model gradually learns feature representations that effectively distinguish the target from the background. Eventually, the AIH is able to accurately distinguish target ships from coastal facilities in the target detection task with a low false alarm rate.

4. Experiments

In this section, specific experiments are carried out on the scheme proposed above. The main purpose of the experiments is to demonstrate that the CALM has a degree of cross-domain generalisation capability, and that CFM and AIH can effectively improve the cross-domain generalisation capability of the model further. The independently constructed SAR Full Polarisation Dataset (SFPD) is used as the source domain, and the HRSID dataset is used as the target domain. In addition, to reflect real-time target detection, the target-domain images involved in training are not used to evaluate the model.

In the experiments, the cross-domain generalisation ability of several commonly used models is tested first; after certain patterns are identified, the models are improved through deep domain adaptation; finally, some detection results of the trained models are shown.

The models are trained and evaluated on the Windows platform with an NVIDIA RTX 3090 GPU.

4.1. Model Evaluation Methodology

The model evaluation metrics in this paper are precision, recall, F1, and AP (average precision). Before calculating them, the confusion matrix of the trained model on the test set needs to be computed. If the intersection over union (IoU) between a detected box and a ground-truth box is greater than 0.5, the detection is considered correct and counts as a True Positive (TP). If the background is wrongly predicted as a target, it is a False Positive (FP), and if a target is missed, it is a False Negative (FN). The model’s precision, given by Formula (9), is the proportion of correct positive predictions among all positive predictions.

The recall of the model, given by Formula (10), is the proportion of actual positives that are correctly predicted.

Precision and recall usually trade off against each other, so they are combined into the final evaluation metrics, F1 and AP. F1 is calculated by Formula (11).
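Assuming Formulas (9) to (11) follow the standard definitions, P = TP/(TP + FP), R = TP/(TP + FN), and F1 = 2PR/(P + R), the harmonic mean of precision and recall. The small helper below computes all three from confusion-matrix counts and returns 0 when a denominator is zero, which is a convention chosen here.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """P = TP/(TP+FP), R = TP/(TP+FN), F1 = 2PR/(P+R); 0.0 for empty denominators."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, 8 correct detections with 2 false alarms and 8 missed ships give P = 0.8, R = 0.5, and F1 = 8/13, showing how F1 is pulled towards the weaker of the two metrics.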

Calculating AP is more involved. In YOLO, AP is calculated as follows:

For each category, first sort all prediction boxes by confidence from high to low.

For each prediction box, calculate its IoU (Intersection over Union) with all the ground-truth boxes of the same category, and find the ground-truth box with the largest IoU.

If this IoU is greater than a set threshold (usually 0.50), the prediction box is considered a correct prediction; otherwise, it is considered an incorrect prediction.

For each confidence threshold, calculate the precision and recall at that threshold.

In short, AP is calculated by deciding whether each prediction box is correct from its IoU with the ground-truth boxes, computing precision and recall at different confidence thresholds, plotting the PR curve, and taking the area under this curve as the AP.
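The four steps can be sketched for a single category in a single image. The block below matches predictions greedily in confidence order (each ground-truth box can be matched only once), records precision and recall after each prediction, and integrates the PR curve with a monotone precision envelope (all-point interpolation). YOLOV5’s own implementation interpolates at 101 recall points by default, so its values can differ slightly; the function names are illustrative.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: List[Tuple[float, Box]], gts: List[Box],
                      iou_thr: float = 0.5) -> float:
    """AP for one category in one image; preds are (confidence, box) pairs."""
    if not gts:
        return 0.0
    preds = sorted(preds, key=lambda p: p[0], reverse=True)  # step 1: sort by confidence
    matched = [False] * len(gts)
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for _, box in preds:
        # Steps 2-3: the ground-truth box with the largest IoU decides correctness.
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_iou > iou_thr and not matched[best_j]:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1
        # Step 4: precision and recall with this confidence as the threshold.
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(gts))
    # Area under the PR curve using a non-increasing precision envelope.
    mrec = [0.0] + recalls + [1.0]
    mpre = [1.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
```

With two ships and three predictions ranked correct, false, correct, the PR curve holds precision 1 up to recall 0.5 and 2/3 up to recall 1, giving AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.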

4.2. Data Analyses

4.2.1. HRSID

The HRSID dataset [38], released in January 2020, is a high-resolution SAR image dataset primarily used for ship detection, semantic segmentation, and instance segmentation tasks. Its ship images were captured by the Sentinel-1B, TerraSAR-X, and TanDEM-X satellites. The imaged areas were selected from ports with high cargo throughput and busy canals crossing trading cities. The images were captured in StripMap imaging mode with a resolution better than 3 m and with HH, HV, and VV polarisation modes. The scanning range is 270 km. The dataset comprises 5604 high-resolution SAR images, each of 800 × 800 pixels, and 16,951 ship instances.

Figure 6 displays the specific images.

This paper uses HRSID as the target domain for model training and evaluation. HRSID was chosen because it contains a large number of images and, like SFPD, is a high-resolution SAR image dataset of ship targets.

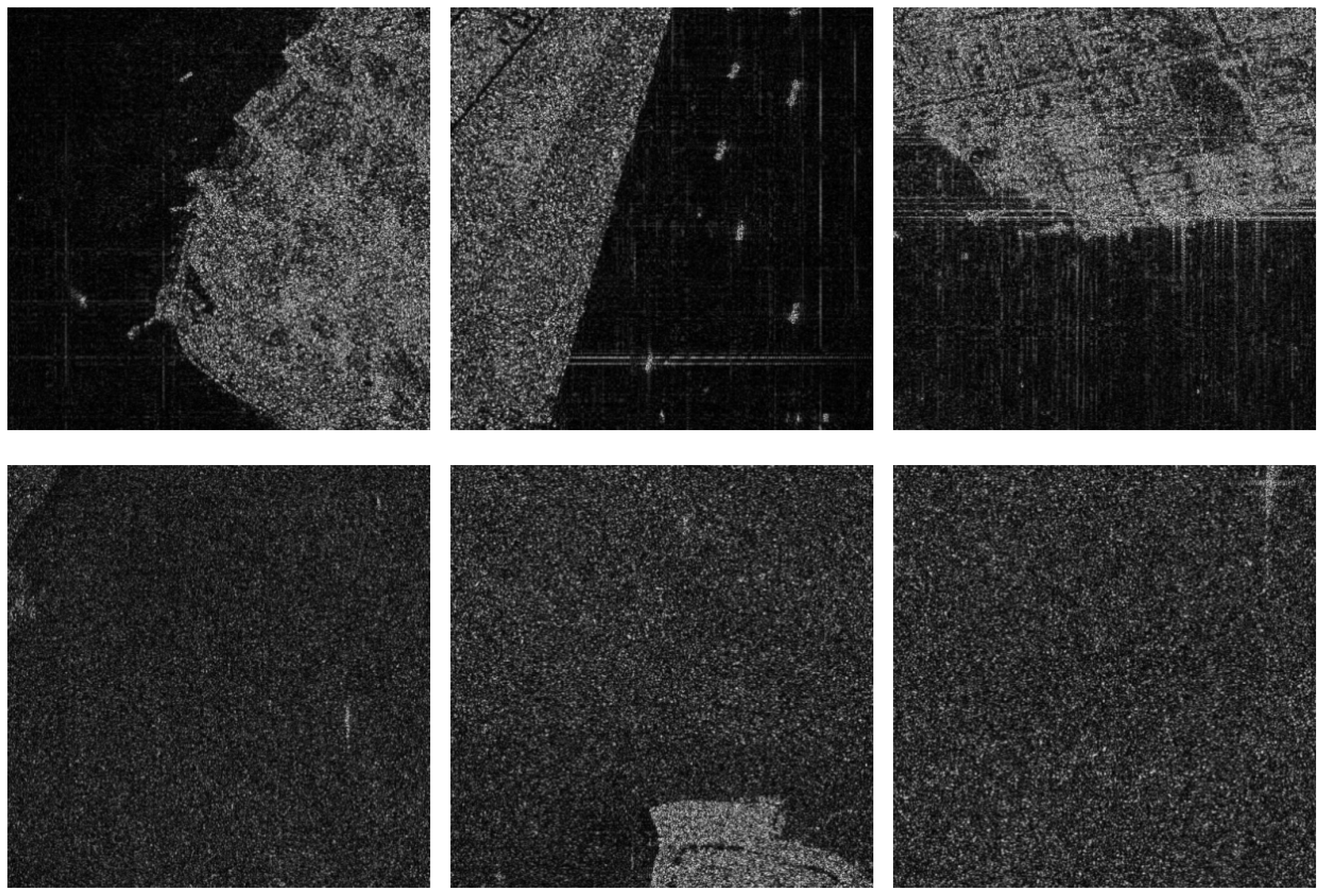

4.2.2. SAR Full Polarisation Dataset

The dataset captured harbour and maritime targets using on-board SAR with 8 m resolution and QPSI as the imaging mode. Full polarisation was employed, resulting in 4668 images, each measuring 416 × 416 pixels. In total, 15,212 targets were identified, with an average pixel value of 412.53.

Figure 7 displays some of the images.

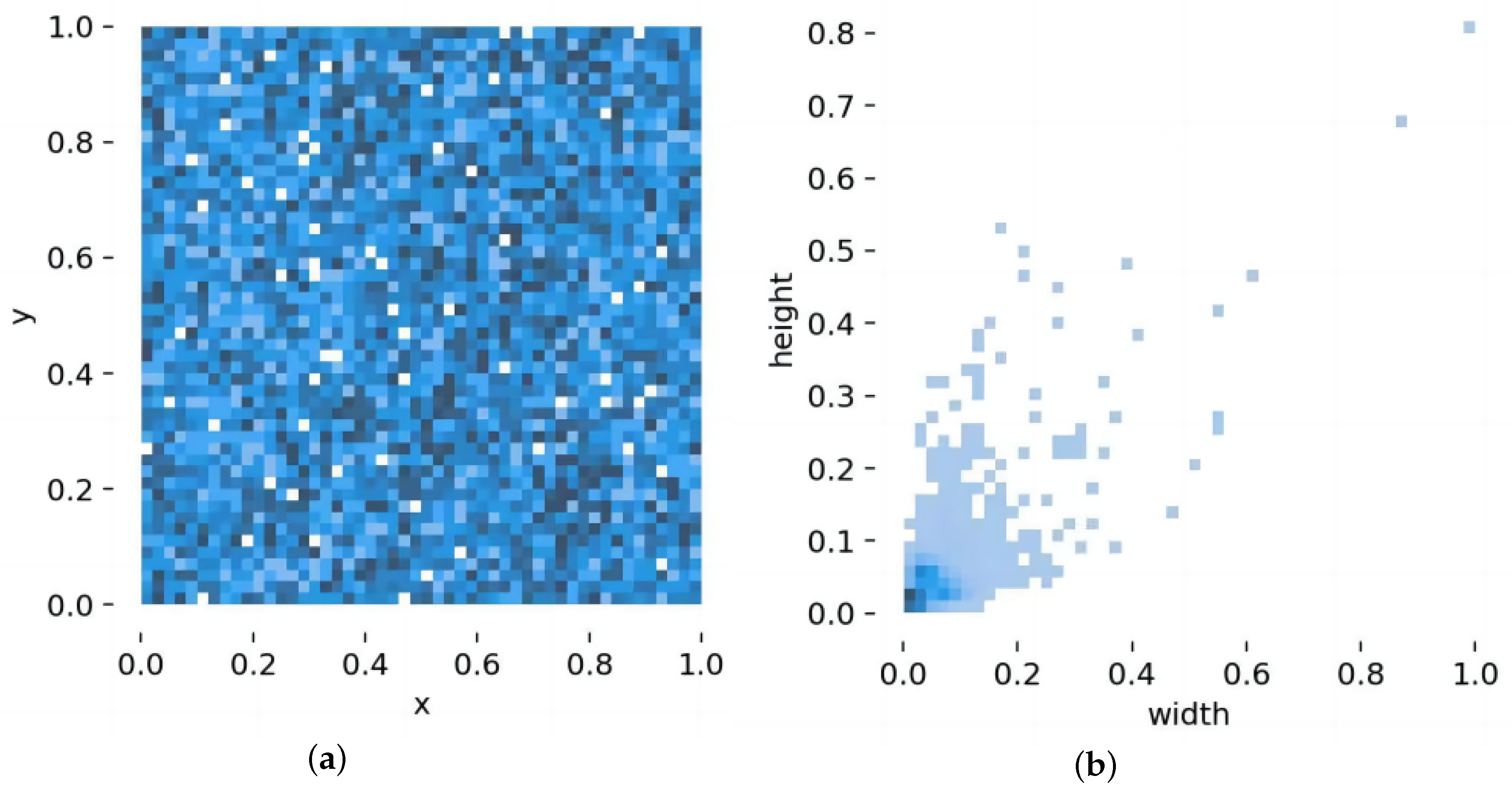

Figure 8a shows the heat map of the positional distribution of the images, indicating a more uniform distribution of colour blocks and therefore a more uniform distribution of target frames.

Figure 8b displays the heat map of the aspect distribution of the target box, revealing a concentration of dark colour blocks in the lower left corner of the image, indicating a higher number of small targets.

4.3. Multi-Model Comparative Experiments

The experiments in this paper begin by exploring the cross-domain generalisation capability of several currently dominant target detection models. Usually, the learning ability of a model improves as the network becomes deeper.

However, as shown in Table 1, the experiments in this paper find that, under the same structure, the cross-domain generalisation detection ability of the YOLOV5 model decreases as the model deepens. Compared with YOLOV5s, YOLOV5m decreases precision by 4.4%, recall by 1.2%, AP by 2.1%, and F1 by 2.4%. Compared with YOLOV5m, YOLOV5l decreases precision by 1.6%, recall by 0.4%, AP by 1.9%, and F1 by 0.8%. We argue that this decrease is to be expected: although models with more parameters learn more semantic features from the source domain and therefore generalise better on the source domain, this stronger fit to the source domain is likely to become overfitting with respect to the target domain. The two-stage Faster-RCNN model reaches an AP of only 18.7%, showing essentially no cross-domain generalised detection ability. Moreover, Azizpour et al. [39] pointed out that performance can be improved by increasing the width and depth of the network when the source and target domains are close. However, excessive parameterisation may damage feature information, causing the learned ability to deviate from the target domain. Therefore, in transfer learning, models and datasets should be selected with caution to avoid the negative impact of increasing the parameter count.

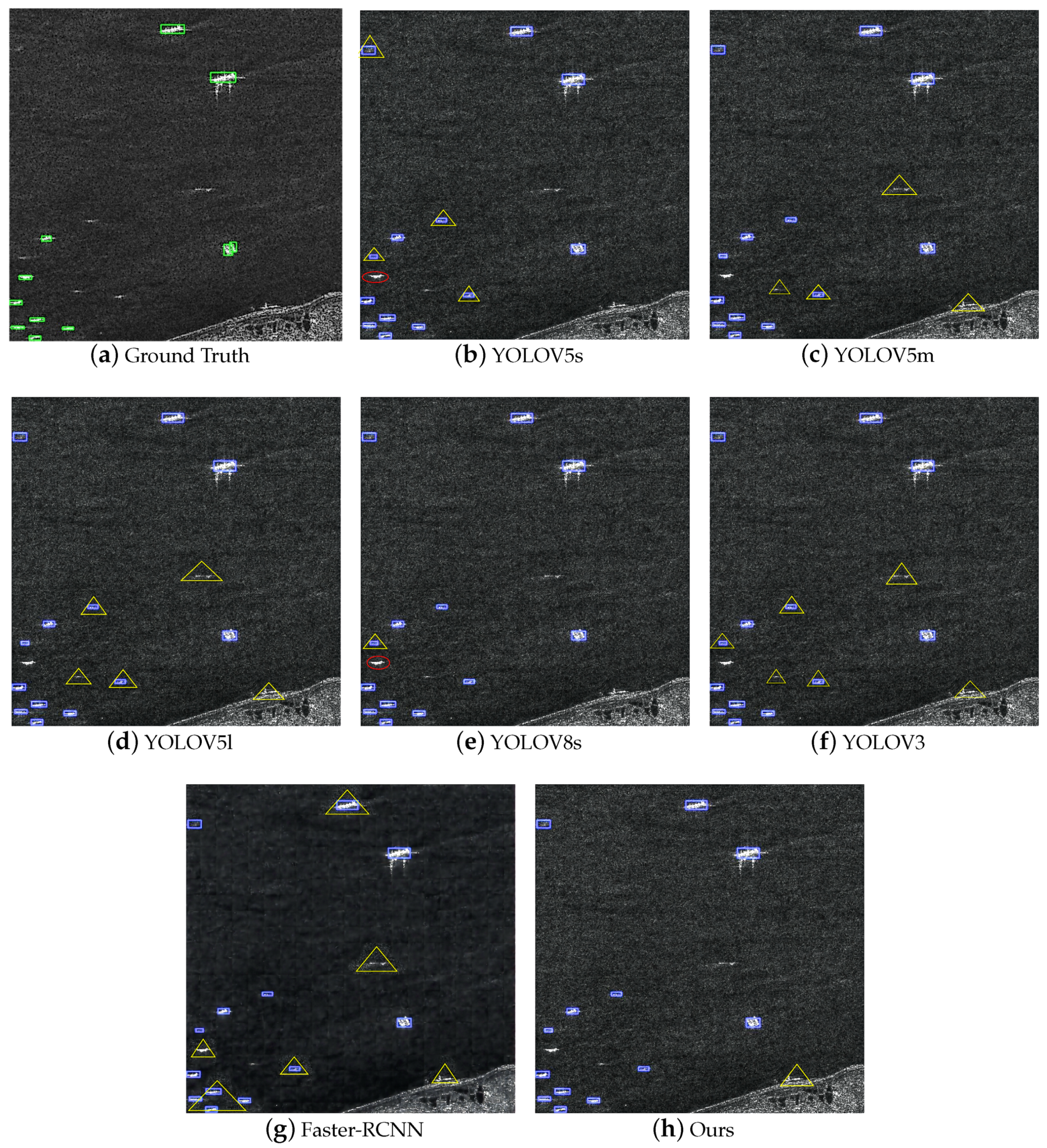

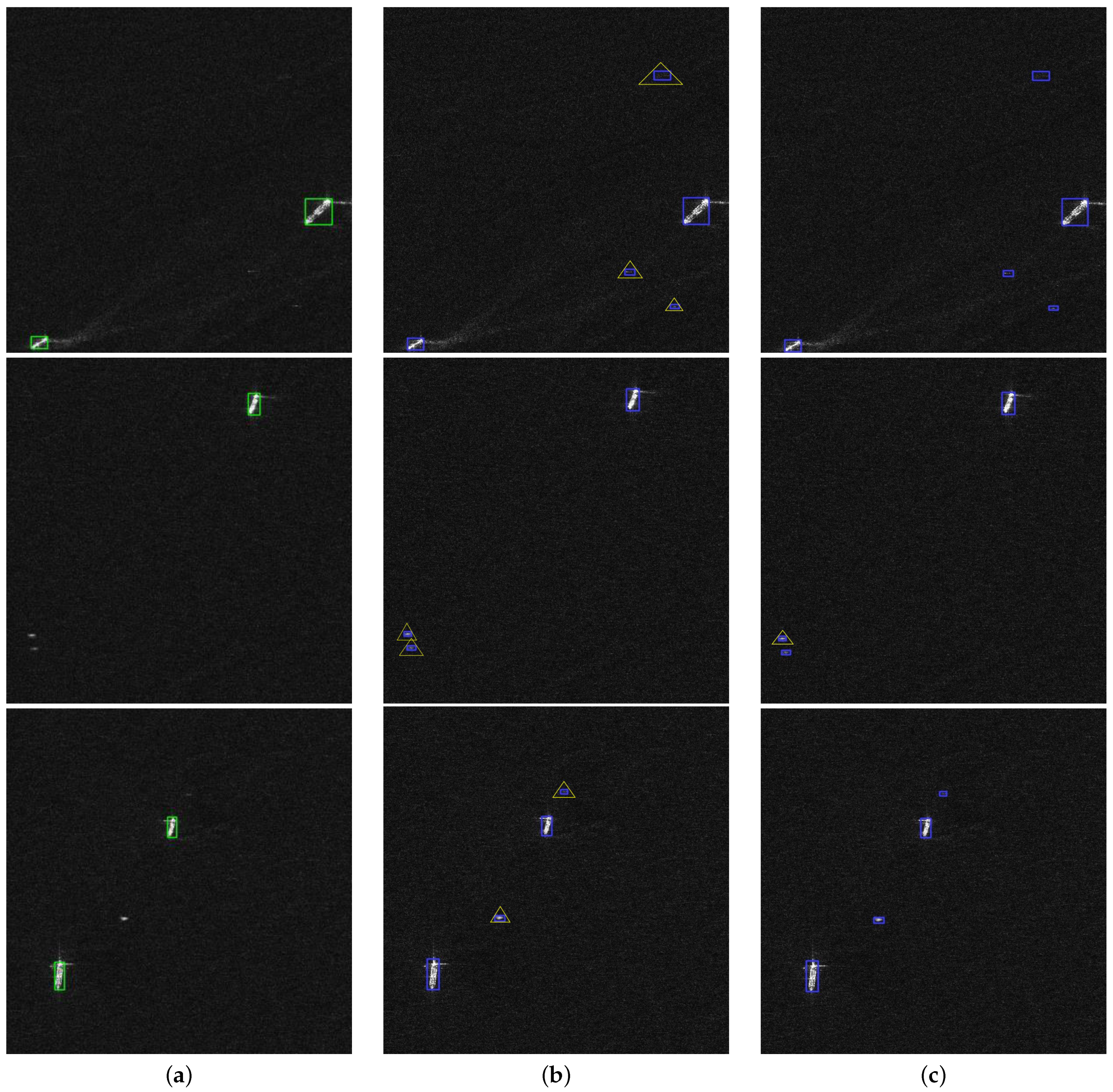

The detection images of various models are shown in Figure 9, where the green boxes are Ground Truth, the blue boxes are model detection results, the red ellipses mark False Negatives, and the yellow triangles mark False Positives. Models with more parameters are more likely to produce False Positives, which we attribute to overfitting. Although Faster-RCNN successfully detects some targets, its many False Positives make it unusable in practice.

4.4. Model Improvement Results

This paper proposes a complex background SAR ship target detection method based on fusion tensor and cross-domain adversarial learning, aimed at the low cross-domain generalised detection capability of traditional models. Fusing the CALM with YOLOV5s turns the model into a cross-domain model, and the CFM and AIH modules are then added in sequence to enhance the model’s target detection ability in the cross-domain generalisation problem. Note that, unlike traditional ablation experiments, this paper does not separately validate the effects of CFM + AIH, because both modules were designed on top of the CALM cross-domain model to exploit the correlation between fully polarised image data and to improve the model’s resistance to complex background interference. The final model improves precision by 2.3%, recall by 5.2%, AP by 4.1%, and F1 by 4.2% compared with the baseline model based on YOLOV5s. The specific experimental results are shown in Table 2. Compared with YOLOV8s, the best-performing model among all the models tested, precision is improved by 4.9%, recall by 3.3%, AP by 2.4%, and F1 by 3.9%.

4.4.1. Analysis of CALM Results

After implementing the CALM structure, precision increased by 0.5%, recall by 1.8%, AP by 2.8%, and F1 by 1.4%. All metrics improved, but the gain in precision is small. This is because the CALM forces the source and target domains closer together, so the target domain also learns source-domain features that interfere with its own detection, which limits the reduction in False Positives. However, in practical applications, the primary objective is to find the targets in a single detection pass while missing as few as possible. Therefore, this paper argues that recall is the more crucial metric in real-time detection of SAR images. The CFM and AIH proposed in this paper significantly improve precision and further enhance recall, as detailed in the experiments below.

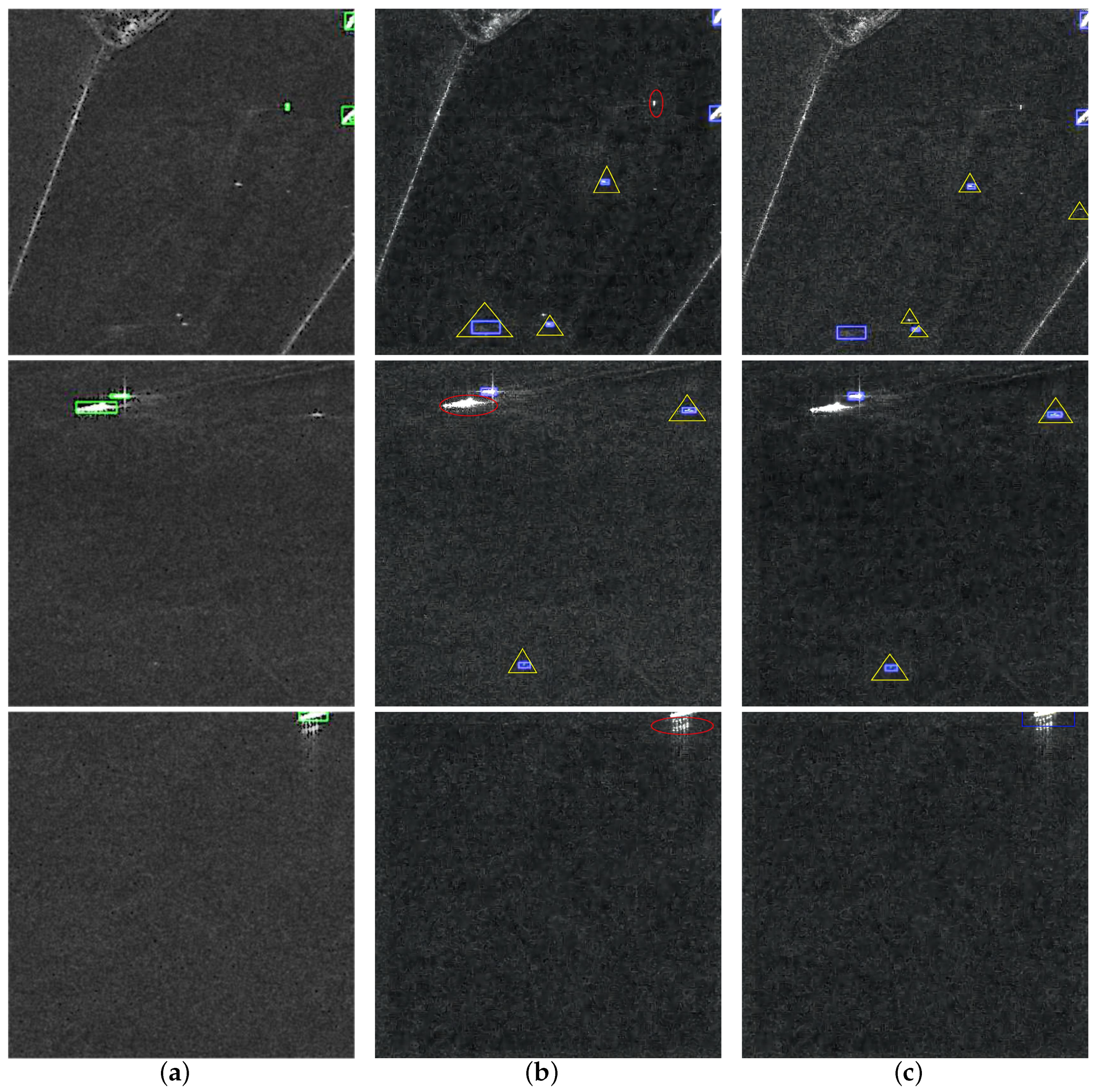

Figure 10 shows detection results from the CALM experiment. Adding CALM clearly reduces False Negatives (missed ships), but it does not noticeably reduce False Positives. To address this issue, this paper designs two further modules, CFM and AIH.

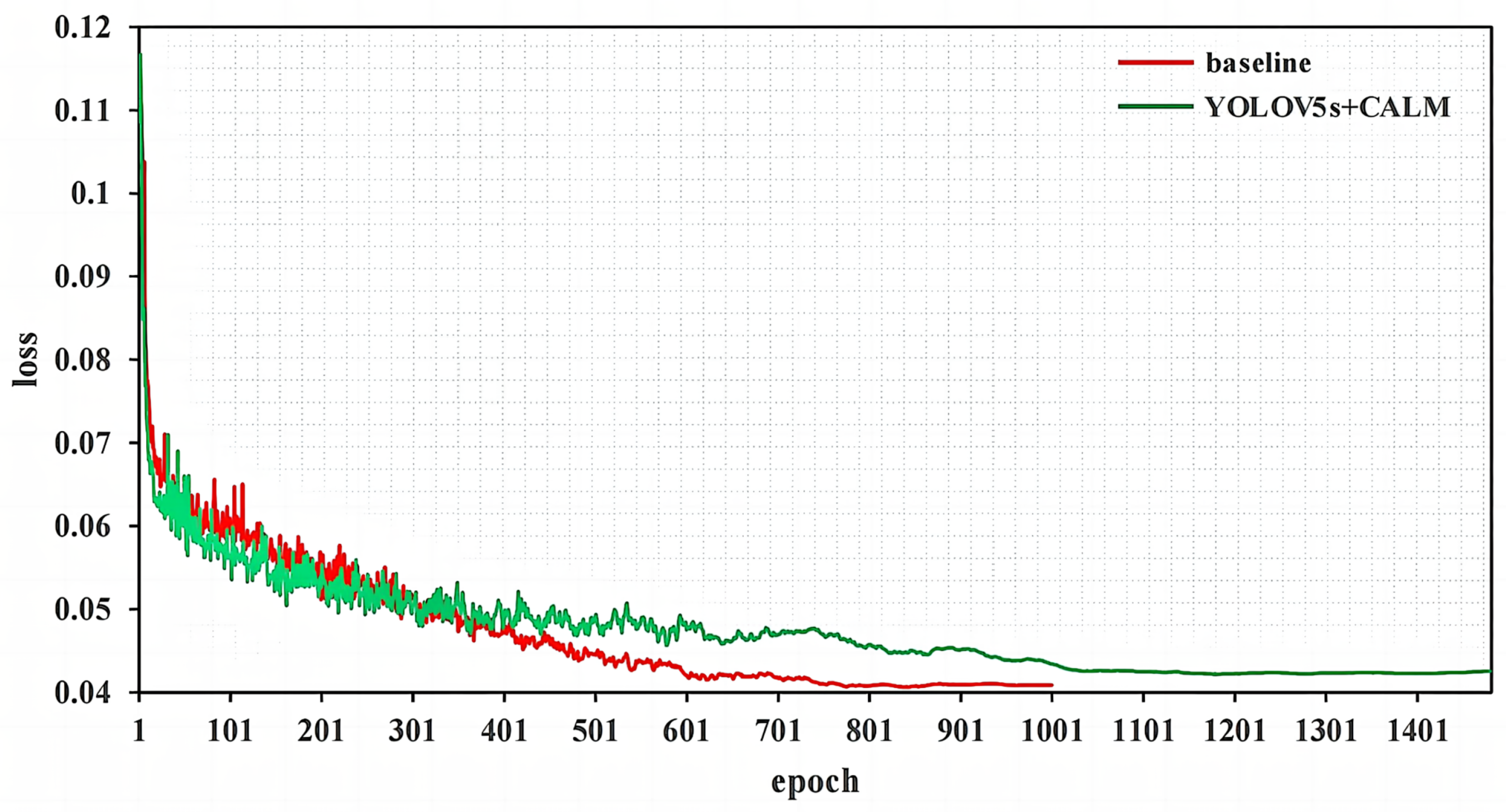

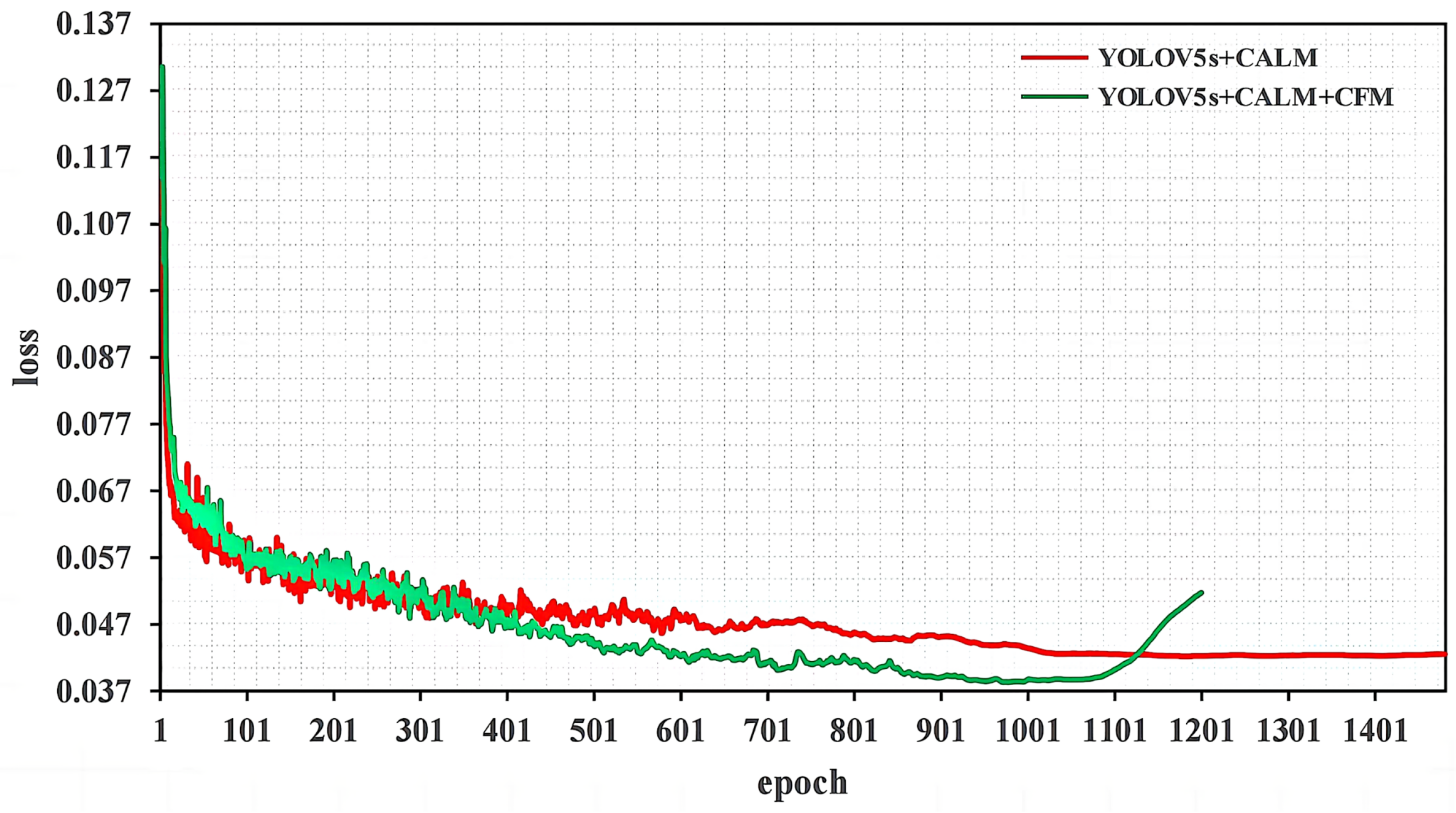

Figure 11 plots the source-domain loss against training epochs. The YOLOV5 + ATL model converges more slowly than the baseline and settles at a higher loss. We conjecture, without direct experimental evidence, that the detection loss is disturbed because source- and target-domain images enter the feature-extraction module simultaneously. This interference does not act directly on detection in the target domain. However, it weakens the target detection ability the model learns, and therefore affects target-domain detection indirectly.

We expect that using separate feature-extraction modules for the source and target domains would mitigate this effect, but this requires further experimental investigation.
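CALM's exact loss is not given in this section. As an assumption, the block below writes out the standard domain-adversarial (gradient-reversal, DANN-style) objective, to show why a shared feature extractor receives gradients from both the detection loss and the domain-alignment loss. The symbols are introduced here for illustration: $\theta_f$ is the shared feature extractor, $\theta_y$ the detection head, $\theta_D$ a domain discriminator, $\mathcal{S}$ and $\mathcal{T}$ the labelled source and unlabelled target sets, $\lambda$ a trade-off weight, and $\eta$ the learning rate.

```latex
\begin{aligned}
E(\theta_f,\theta_y,\theta_D) &= \mathcal{L}_{\mathrm{det}}(\theta_f,\theta_y;\,\mathcal{S})
  - \lambda\,\mathcal{L}_{\mathrm{dom}}(\theta_f,\theta_D;\,\mathcal{S}\cup\mathcal{T}) \\
(\hat{\theta}_f,\hat{\theta}_y) &= \arg\min_{\theta_f,\theta_y} E(\theta_f,\theta_y,\hat{\theta}_D),
  \qquad \hat{\theta}_D = \arg\max_{\theta_D} E(\hat{\theta}_f,\hat{\theta}_y,\theta_D) \\
\theta_f &\leftarrow \theta_f - \eta\left(\frac{\partial \mathcal{L}_{\mathrm{det}}}{\partial \theta_f}
  - \lambda\,\frac{\partial \mathcal{L}_{\mathrm{dom}}}{\partial \theta_f}\right)
\end{aligned}
```

Under this assumed formulation, the second term of the $\theta_f$ update acts on the same parameters the detection loss relies on, which is consistent with the interference conjectured for Figure 11; separate extractors would split $\theta_f$ into domain-specific parameters.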

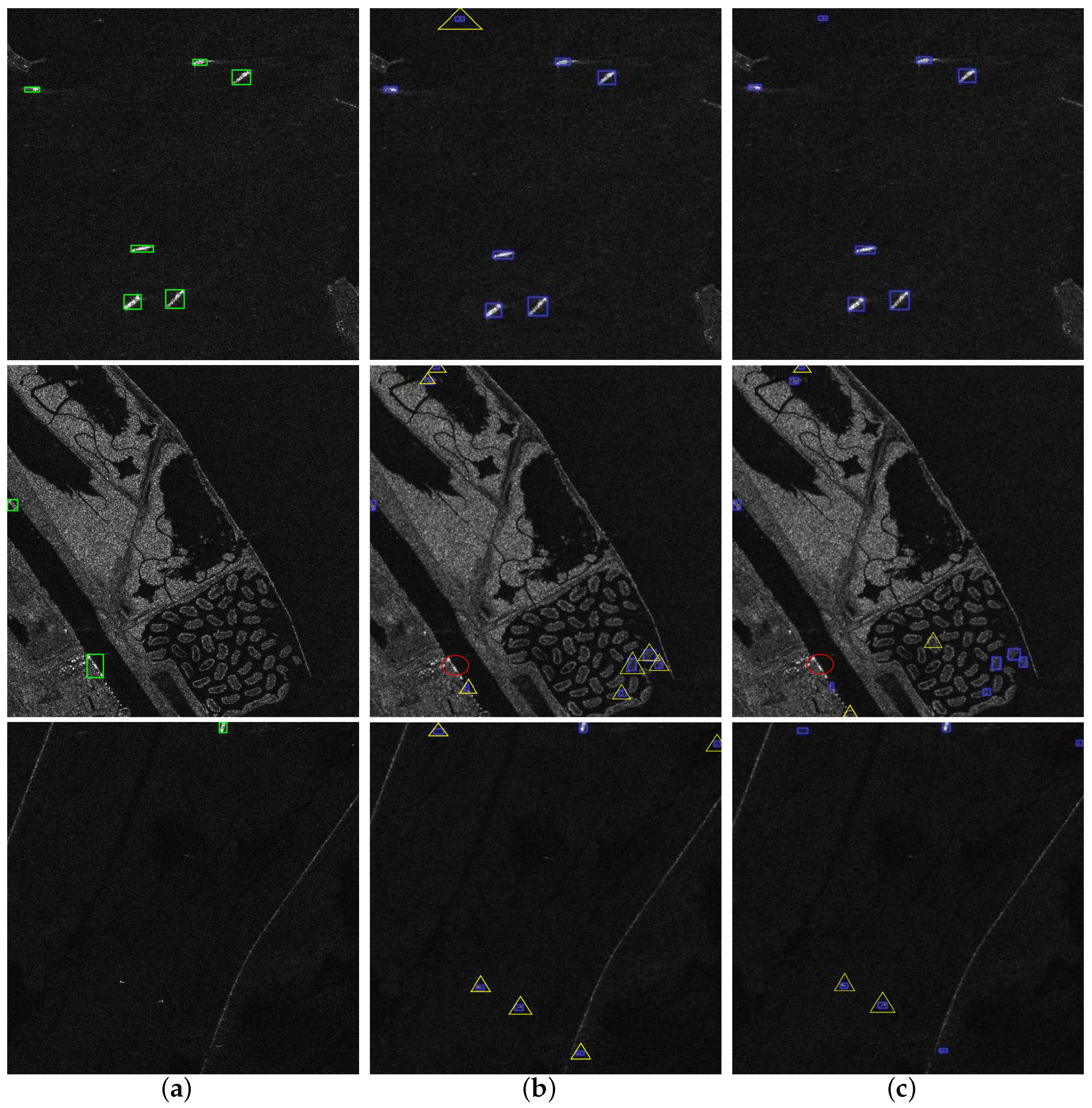

4.4.2. Analysis of CFM Results

Adding the designed CFM module improves precision by 0.1%, recall by 2%, and F1 by 1.3%. These results indicate that CFM fuses the features of the four polarisations and uses them to enrich the model's semantic features, which further improves its cross-domain generalisation ability.
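The internal design of CFM is not described in this section. Purely as an illustration of multi-polarisation fusion, the sketch below combines the four quad-pol channels (HH, HV, VH, VV) into one map with softmax-normalised channel weights, which is equivalent to a single-output 1x1 convolution. The function names and the score values are hypothetical; in a real network the scores would be learned parameters, and this is not the CFM architecture.

```python
import math

Image = list[list[float]]  # H x W map for one polarisation channel


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_polarisations(channels: dict[str, Image], scores: dict[str, float]) -> Image:
    """Per-pixel weighted sum of polarisation maps (hypothetical fusion rule)."""
    names = sorted(channels)
    weights = softmax([scores[n] for n in names])
    h, w = len(channels[names[0]]), len(channels[names[0]][0])
    fused = [[0.0] * w for _ in range(h)]
    for name, weight in zip(names, weights):
        for i in range(h):
            for j in range(w):
                fused[i][j] += weight * channels[name][i][j]
    return fused


# Toy 1x2 maps; equal (hypothetical) scores reduce the fusion to a plain average.
maps = {"HH": [[1.0, 2.0]], "HV": [[3.0, 4.0]], "VH": [[5.0, 6.0]], "VV": [[7.0, 8.0]]}
print(fuse_polarisations(maps, {"HH": 0.0, "HV": 0.0, "VH": 0.0, "VV": 0.0}))  # [[4.0, 5.0]]
```

Normalising the weights with softmax keeps the fused map on the same scale as the inputs while still letting one polarisation dominate when its score is larger.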

Figure 12 shows detection results after CFM is added; the model produces fewer False Positives.

Figure 13 plots the validation loss during the CFM experiments against the training batch. With CFM, the loss converges faster and reaches a lower final value. After 1000 rounds, however, the loss gradually rises again, a sign of overfitting that this paper refers to as network degradation. We attribute this overfitting on the target domain to CFM extracting too many features from the source domain. CFM nevertheless has a clear enhancing effect, and the overfitting can be kept under control by limiting the number of training epochs appropriately. The AIH experiments in Section 4.4.3 further show that adding the designed AIH module effectively reduces this network degradation.
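Limiting the number of training epochs, as suggested above, is commonly automated with early stopping on the validation loss. The sketch below is a generic version of that idea, not the training schedule used in this paper; the function name, the `patience` and `min_delta` parameters, and the loss values are all hypothetical.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 3, min_delta: float = 0.0) -> int:
    """Return the epoch index whose checkpoint should be kept.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best_epoch, best_loss = 0, float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch  # new best checkpoint
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # loss has been rising or flat for too long
    return best_epoch


# Hypothetical validation curve that falls, then rises as overfitting sets in.
curve = [1.0, 0.8, 0.6, 0.55, 0.56, 0.58, 0.61, 0.65]
print(early_stopping_epoch(curve, patience=3))  # 3
```

A larger `patience` tolerates the noisy, jittery loss curves seen in these experiments at the cost of training a few extra epochs.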

CFM not only improves the cross-domain model proposed in this paper but also performs well when used alone in a conventional, non-cross-domain model. To show this, a model with CFM was trained and evaluated on the SFPD dataset alone; the results are shown in Table 3. Compared with the single-polarisation model, polarisation fusion with CFM improves precision by 1.5%, recall by 9.6%, AP by 5.2%, and F1 by 5.9%, while Params and GFlops increase by only 1.37 and 0.8, respectively. This highlights the value of exploiting multi-polarised data.

4.4.3. Analysis of AIH Results

Adding the designed AIH module to CALM + CFM improves precision by 1.7%, recall by 1.4%, AP by 2.3%, and F1 by 1.5%. With AIH, recall continues to rise and precision improves further. This indicates that AIH resists background interference well: it separates complex background from the targets to be detected and thereby reduces False Positives.

The detection results after adding AIH are shown in Figure 14. False Positives decrease markedly once AIH is added. Without AIH, bright spots in the complex background that resemble ship returns were misjudged as targets; with the AIH module, many of these falsely detected boxes are eliminated.

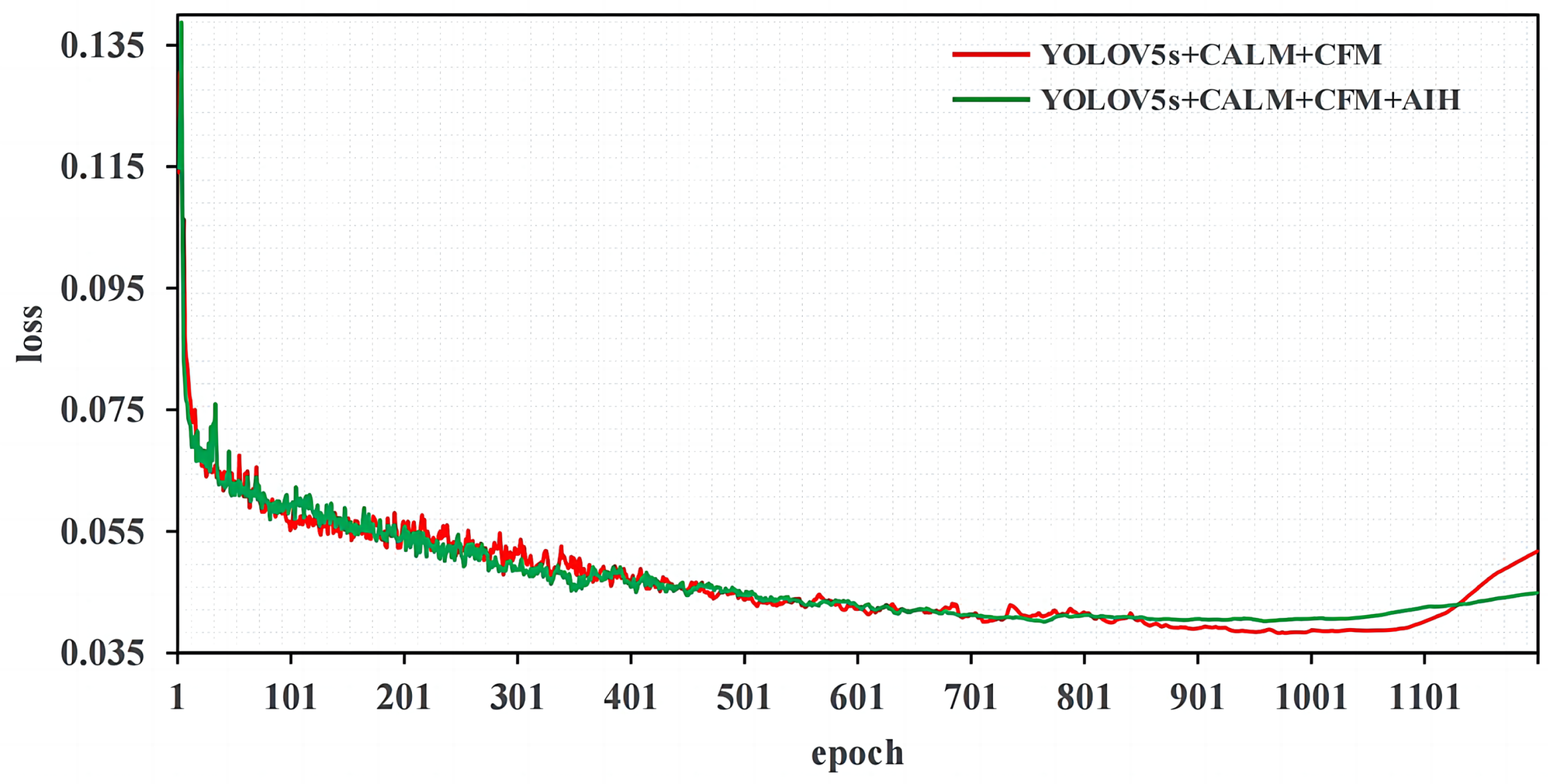

Figure 15 plots the validation loss during the AIH experiments against the training batch. With AIH, the loss fluctuates less and the network degradation is alleviated to some extent. We attribute this to AIH's decoupled design, which separates the classification task from the localisation task and so makes training more stable.
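The internal structure of AIH is not detailed in this section. The sketch below only illustrates the general idea of a decoupled head: classification and box regression each get their own branch on a shared feature, so parameters serving one task do not affect the other's output. The layer sizes, the `PARAMS` values, and the function names are hypothetical toy choices, and this is not the AIH implementation.

```python
import copy


def linear(weights: list[list[float]], bias: list[float], x: list[float]) -> list[float]:
    """Dense layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: list[float]) -> list[float]:
    return [max(0.0, t) for t in v]


def decoupled_head(feature: list[float], params: dict) -> tuple[list[float], list[float]]:
    """Separate branches: ship-class logit and 4 box values from one shared feature."""
    cls_hidden = relu(linear(params["cls_w1"], params["cls_b1"], feature))
    cls_logits = linear(params["cls_w2"], params["cls_b2"], cls_hidden)
    box_hidden = relu(linear(params["box_w1"], params["box_b1"], feature))
    box = linear(params["box_w2"], params["box_b2"], box_hidden)
    return cls_logits, box


# Hypothetical toy parameters for a 2-dimensional shared feature.
PARAMS = {
    "cls_w1": [[1.0, 0.0], [0.0, 1.0]], "cls_b1": [0.0, 0.0],
    "cls_w2": [[1.0, 1.0]], "cls_b2": [0.0],
    "box_w1": [[1.0, 0.0], [0.0, 1.0]], "box_b1": [0.0, 0.0],
    "box_w2": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]], "box_b2": [0.0] * 4,
}

cls_logits, box = decoupled_head([0.5, 2.0], PARAMS)
print(cls_logits, box)  # [2.5] [0.5, 2.0, 2.5, -1.5]

# Changing only the regression branch leaves the classification output untouched.
changed = copy.deepcopy(PARAMS)
changed["box_w2"][0][0] = 10.0
print(decoupled_head([0.5, 2.0], changed)[0] == cls_logits)  # True
```

In a coupled head, by contrast, one shared output layer produces both the class score and the box, so updates driven by one task also shift the other.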

5. Discussion

This paper proposes a SAR ship target detection method for complex backgrounds based on a fusion tensor and cross-domain adversarial learning. Three modules, CALM, CFM, and AIH, are designed to improve the cross-domain generalisation of current target detection models. The model makes full use of the rich feature information in the fully polarised dataset and alleviates two problems: missing target features, and susceptibility to interference from complex backgrounds caused by coupling in the detection model. Compared with YOLOV8s, the best-performing of the typical mainstream models tested, the proposed model improves precision by 4.9%, recall by 3.3%, AP by 2.4%, and F1 by 3.9%.

The experiments in this paper show that deeper models tend to lose cross-domain generalisation ability, which we attribute to overfitting.

In the CALM experiment, recall improved markedly, but precision changed little. Our results suggest that this may be due to the difference in distribution between the source and target domains: domain adaptation makes the target domain learn a feature distribution that is not its own, which can degrade the final detection results. Future work will further investigate cross-domain transfer learning for SAR images to address this issue.