FD-Net: A Single-Stage Fire Detection Framework for Remote Sensing in Complex Environments

Abstract

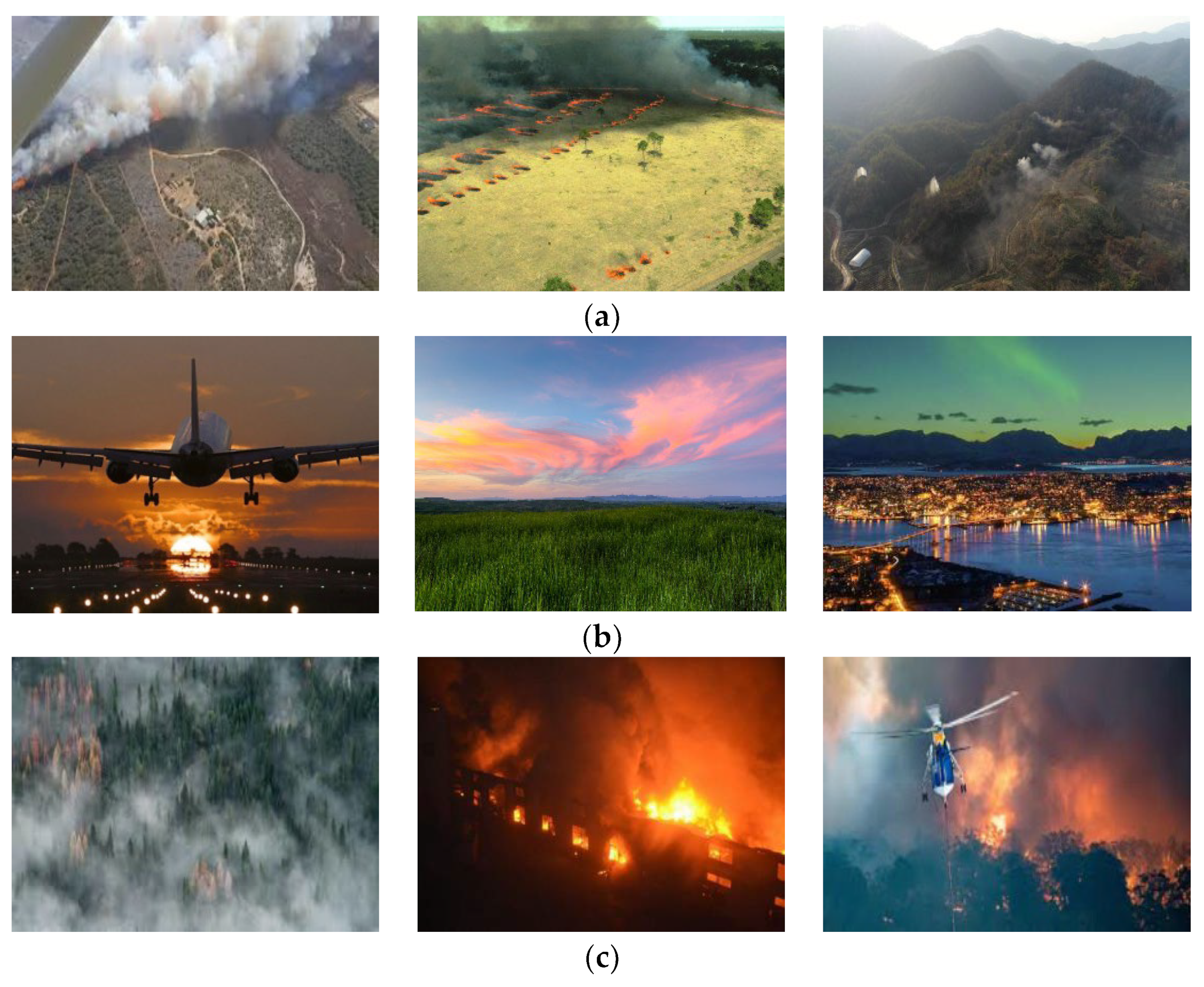

1. Introduction

- I.

- We collected two large-scale fire detection datasets.

- II.

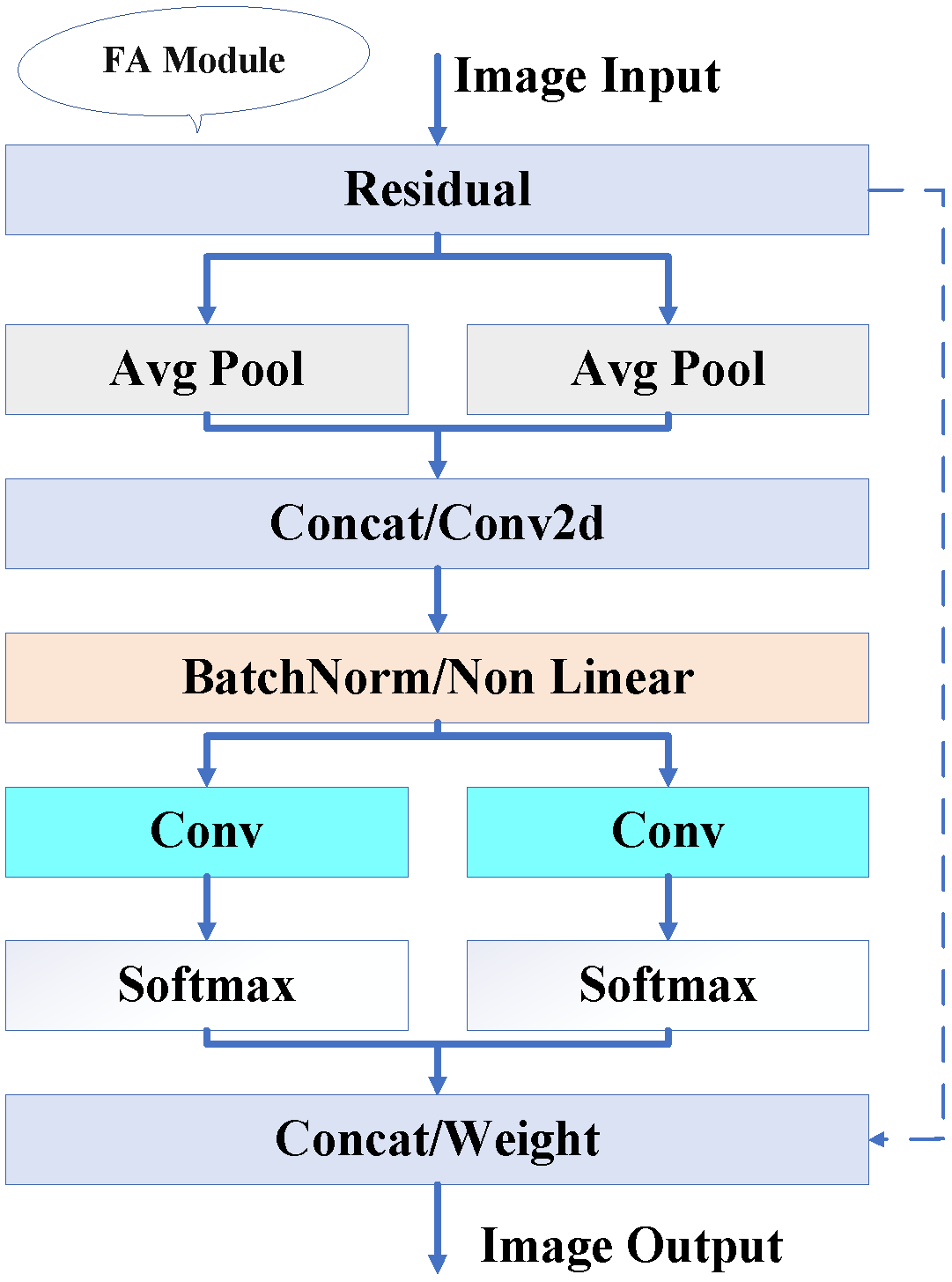

- We proposed a new attention mechanism called the Fire Attention (FA) mechanism.

- III.

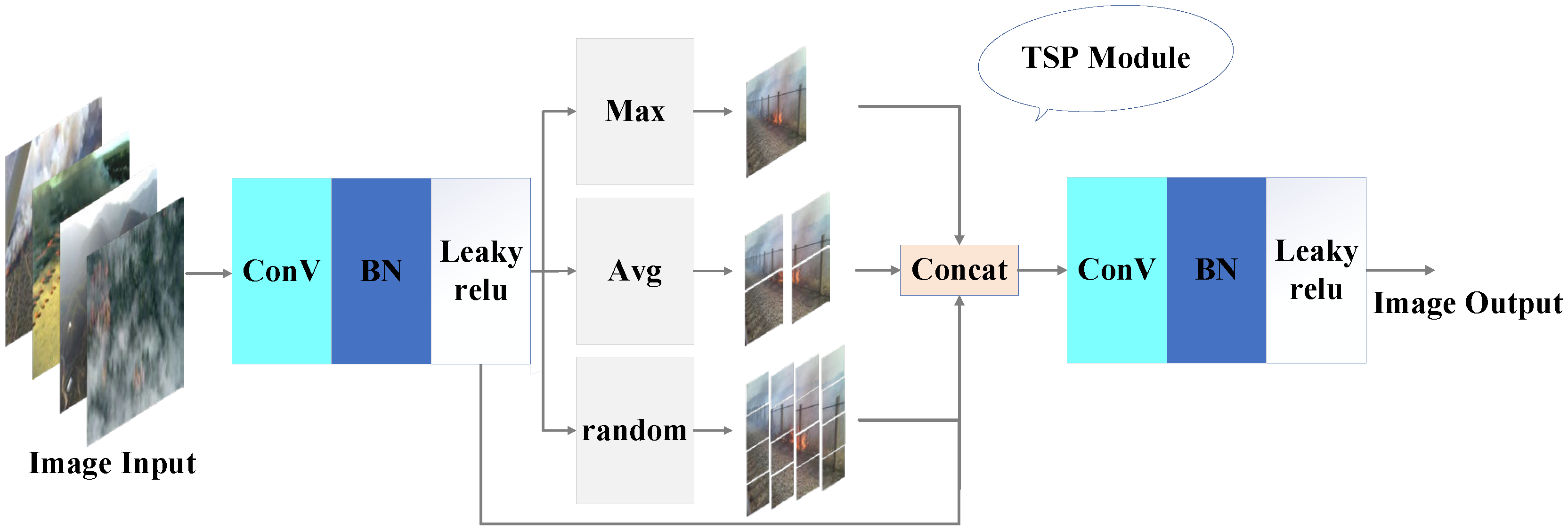

- We developed the Three-Scale Pooling (TSP) module to correct geometric distortion in fire images.

- IV.

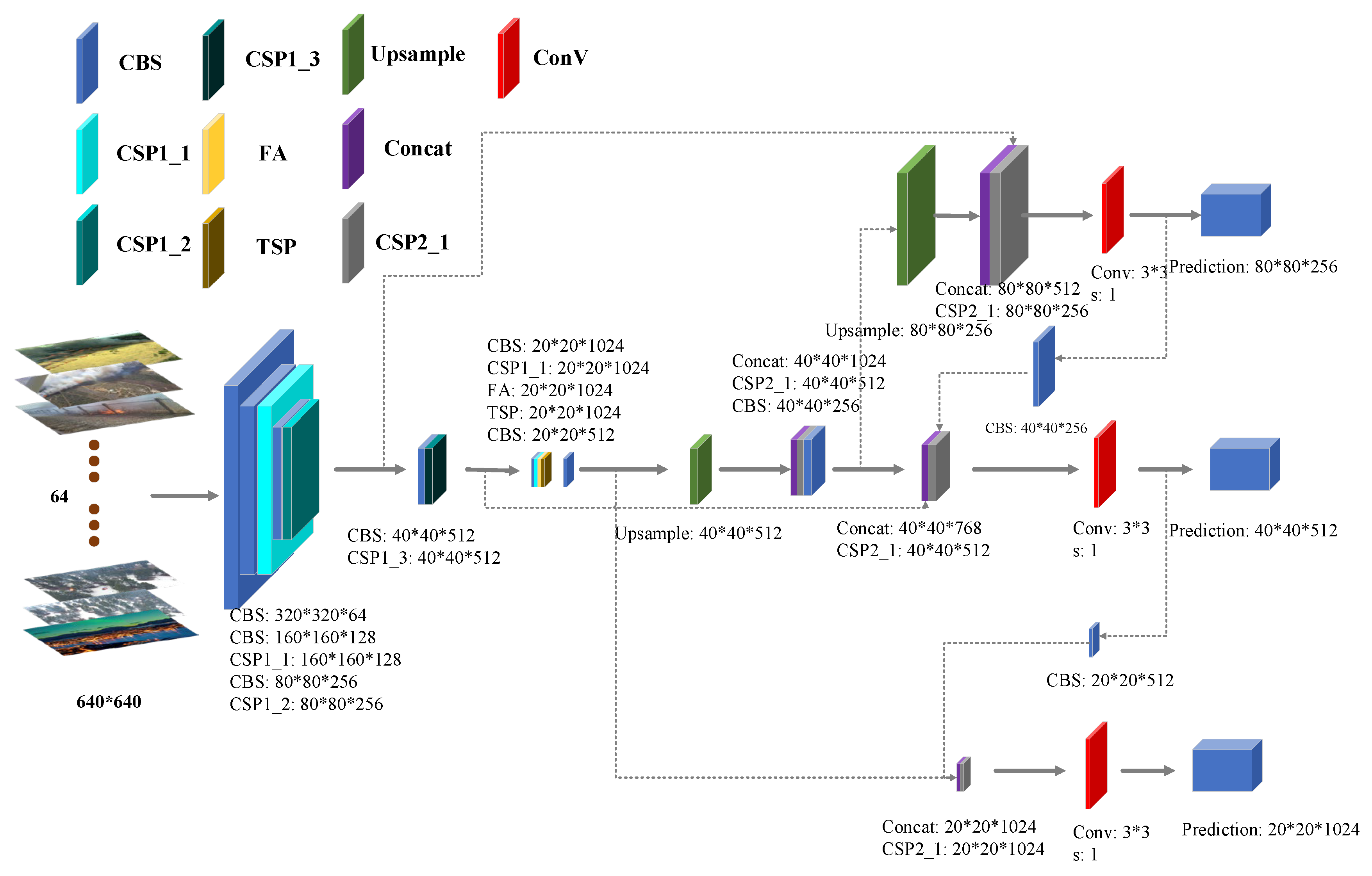

- We fine-tuned the “Neck” of the YOLOv5 network and proposed a new Fire Fusion (FF) module to enhance the precision of fire image detection.

2. Related Works

2.1. One-Stage Methods

2.2. Two-Stage Methods

2.3. Other Methods

3. Proposed Methods

3.1. FA Mechanism

3.2. TSP Module

3.3. FF Module

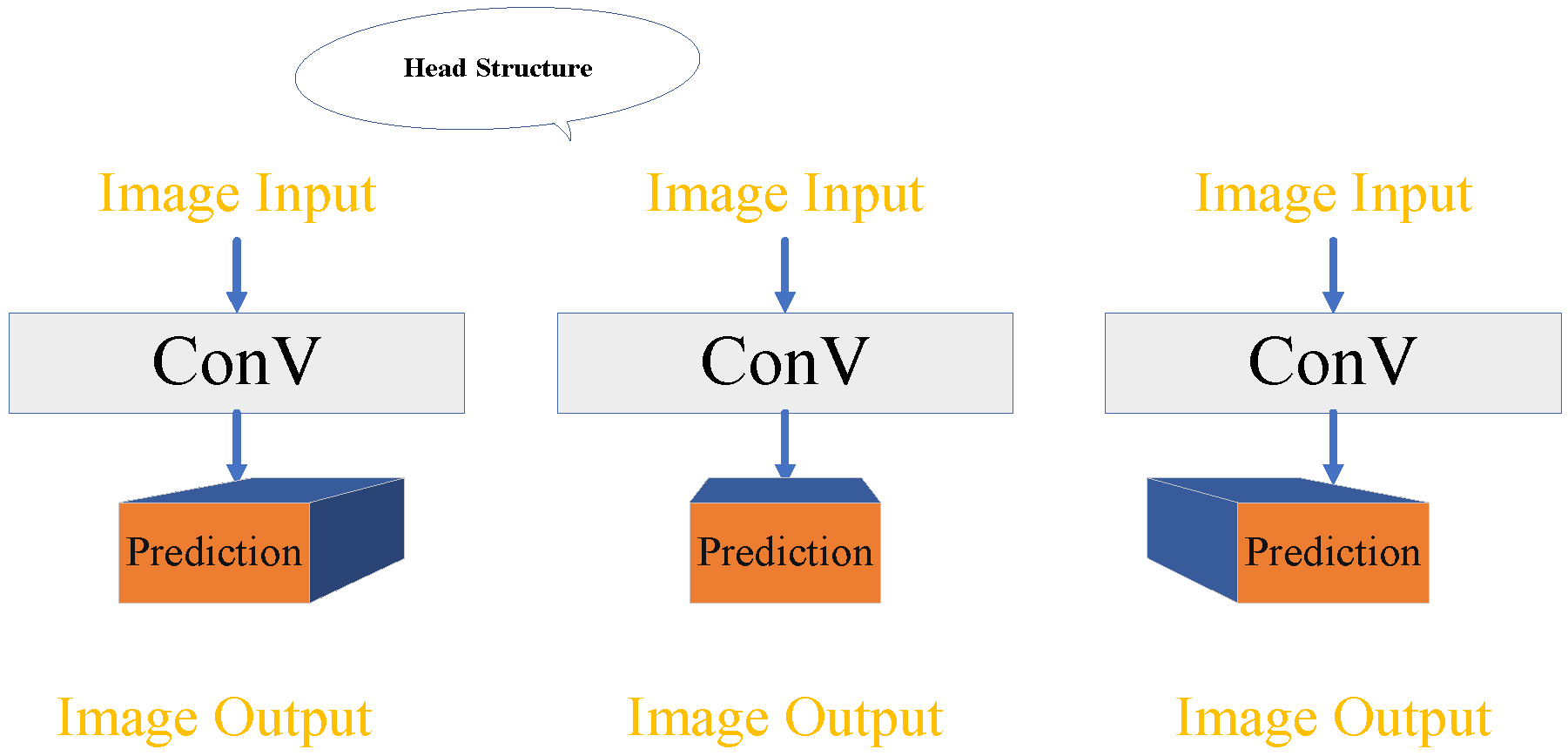

3.4. Prediction Head

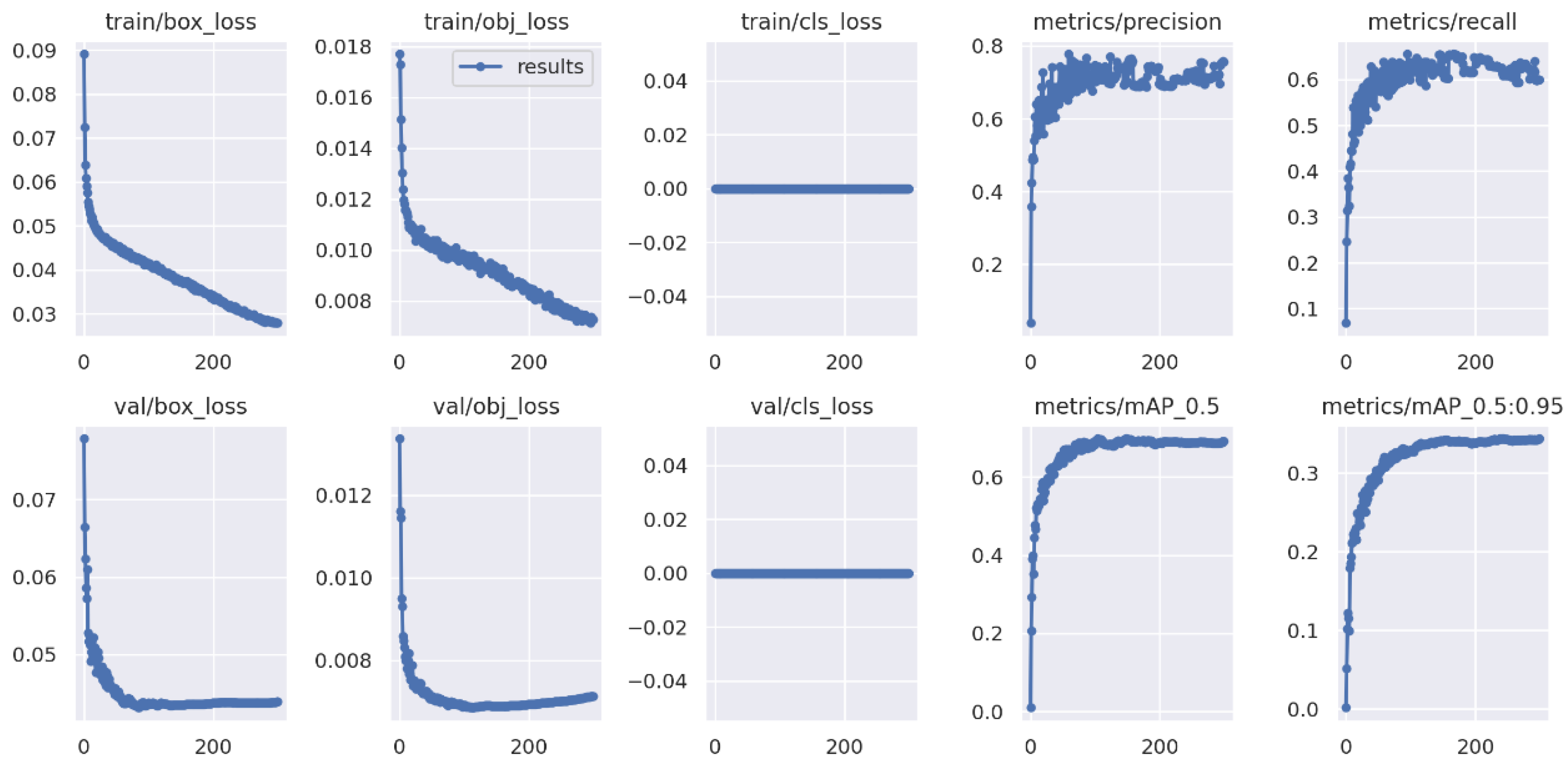

4. Experimental Results

4.1. Experimental Settings

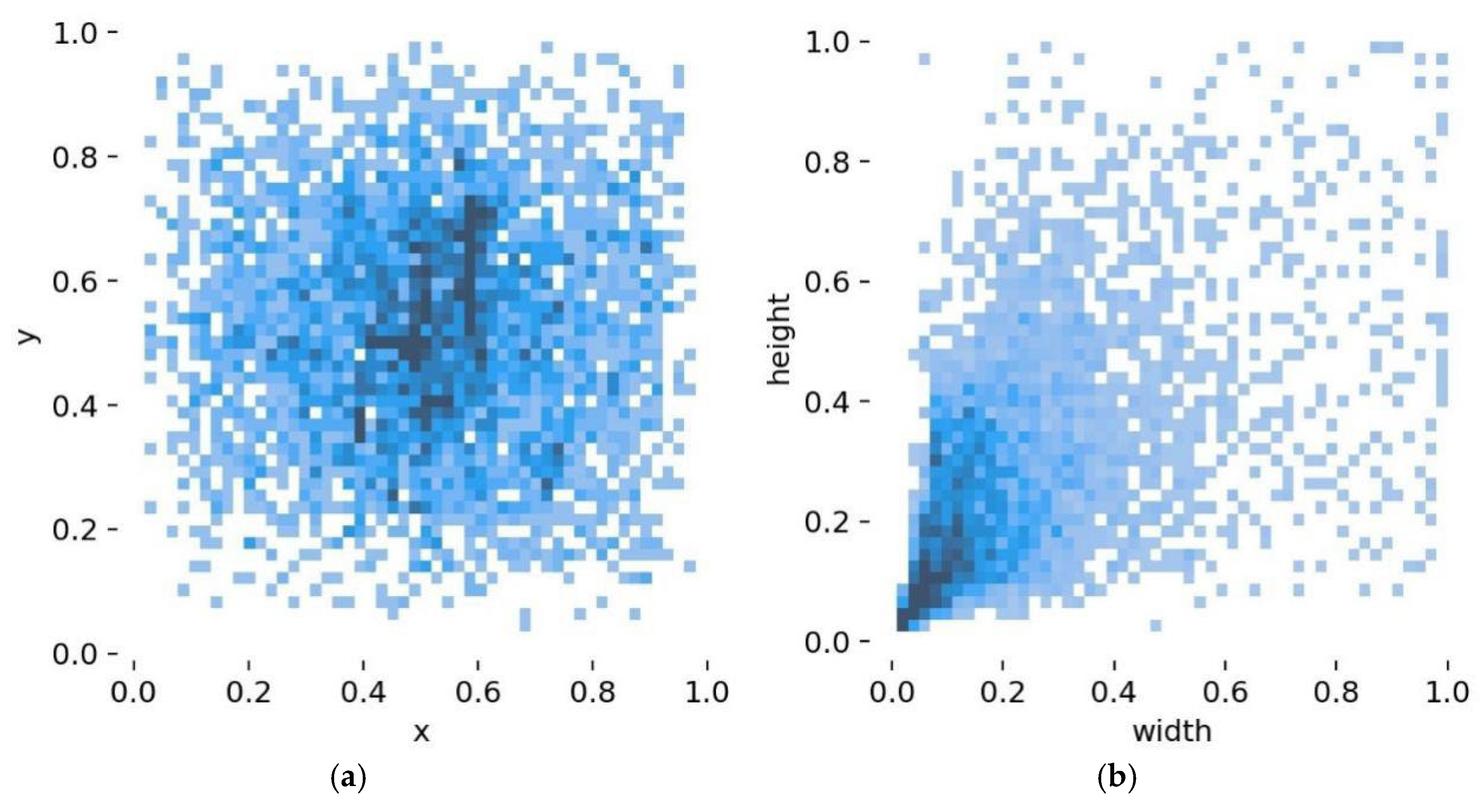

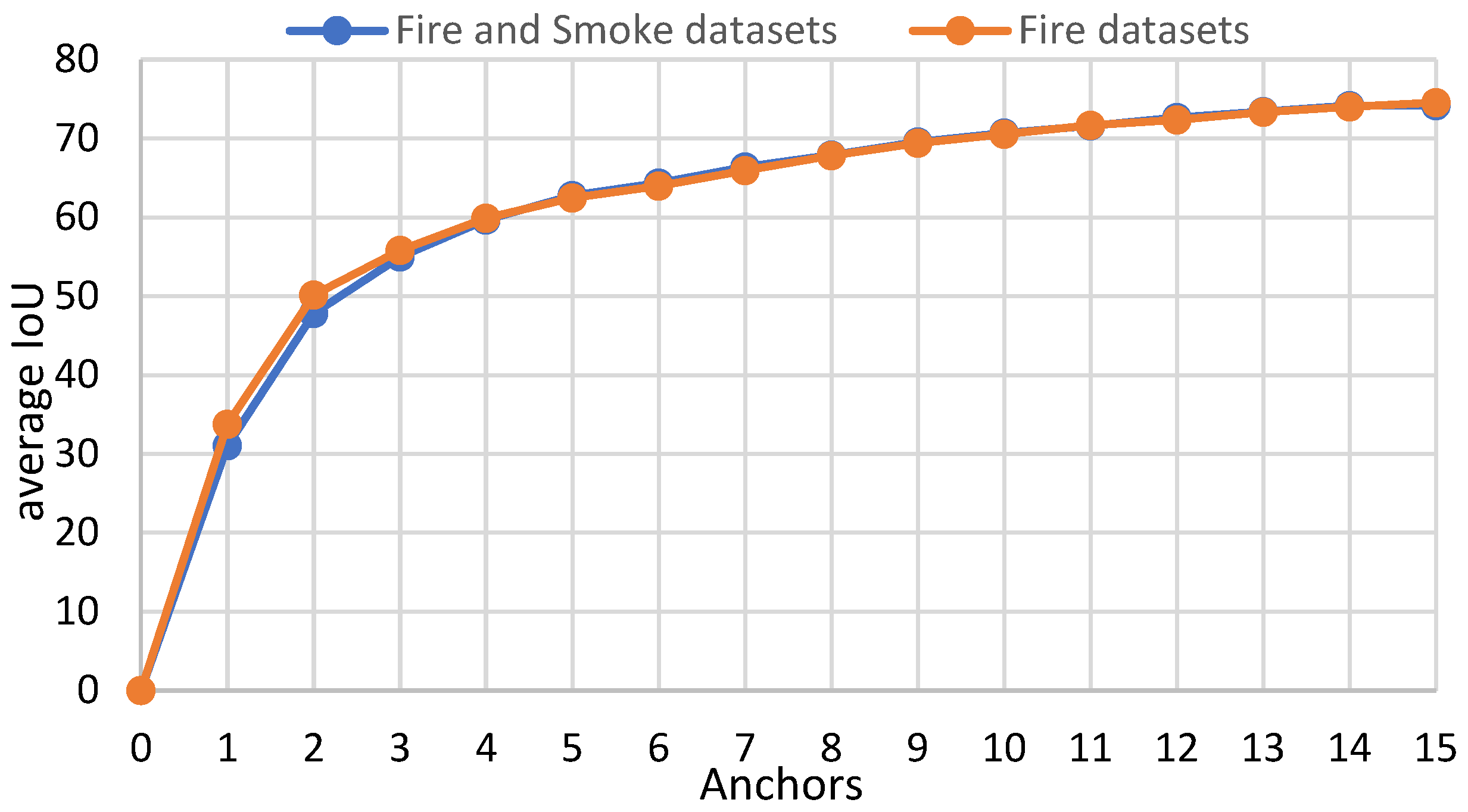

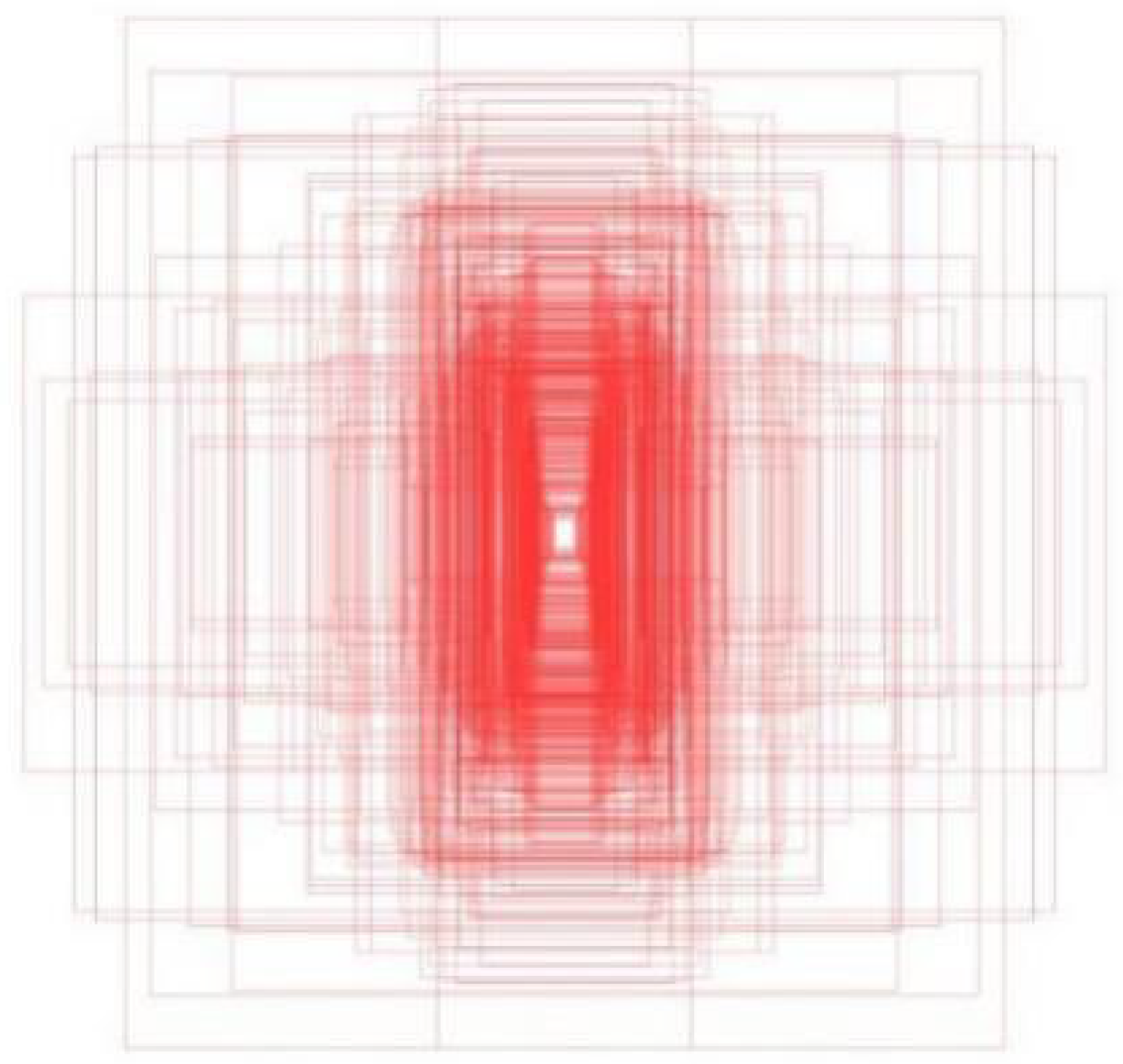

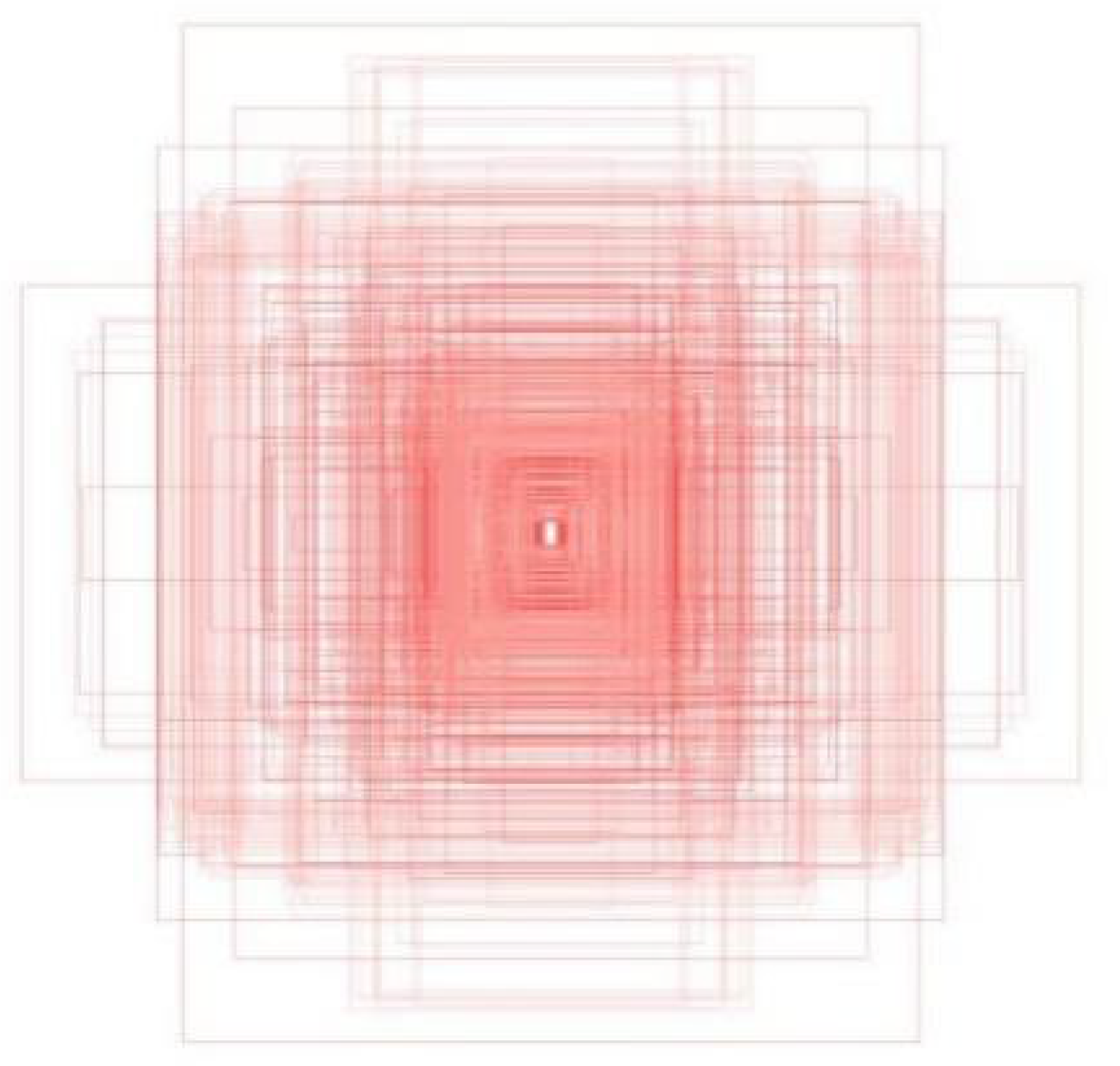

4.2. K-Means

4.3. Evaluation Metrics

4.4. Quantitative Comparison

4.5. Performance Comparisons

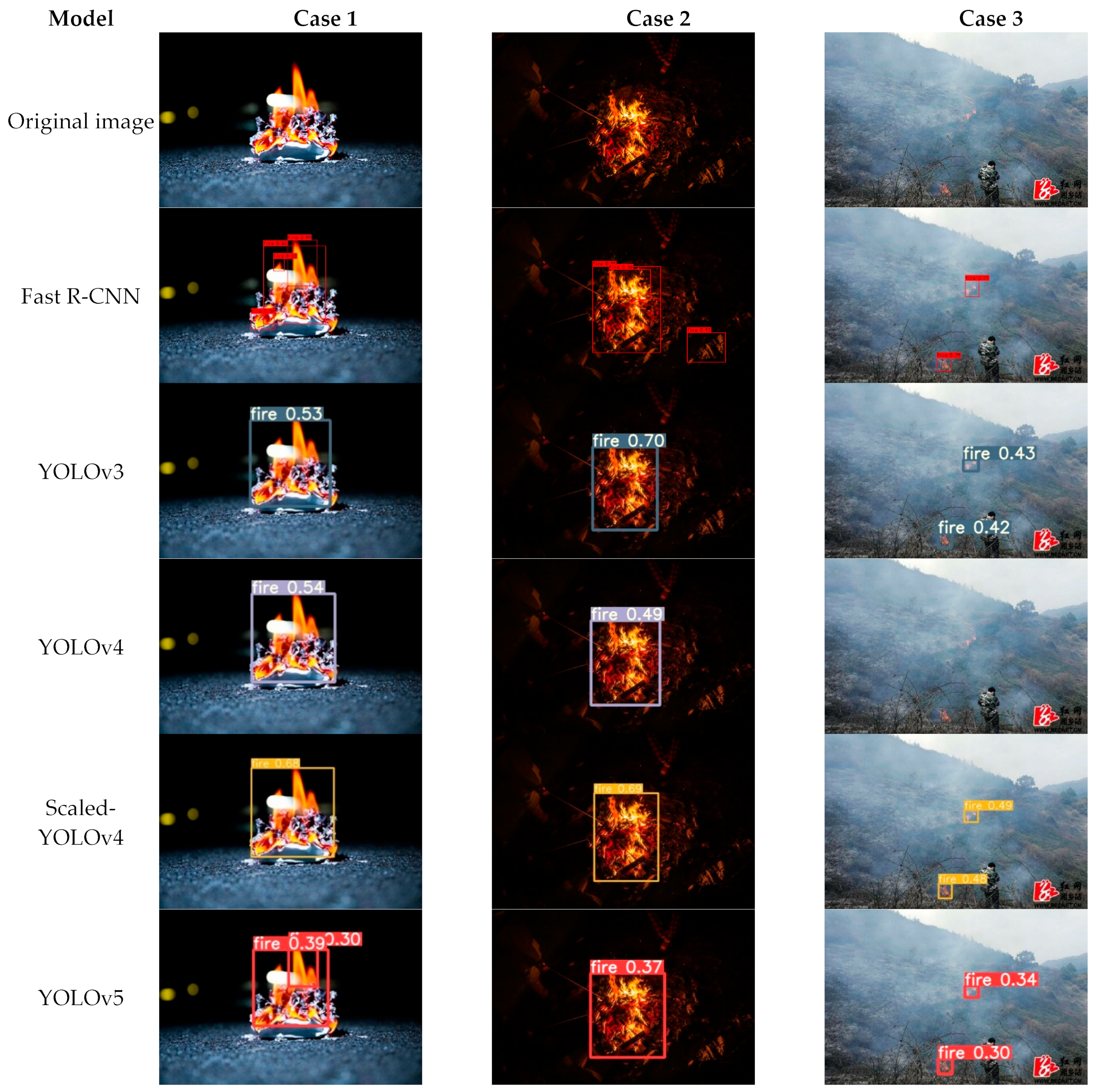

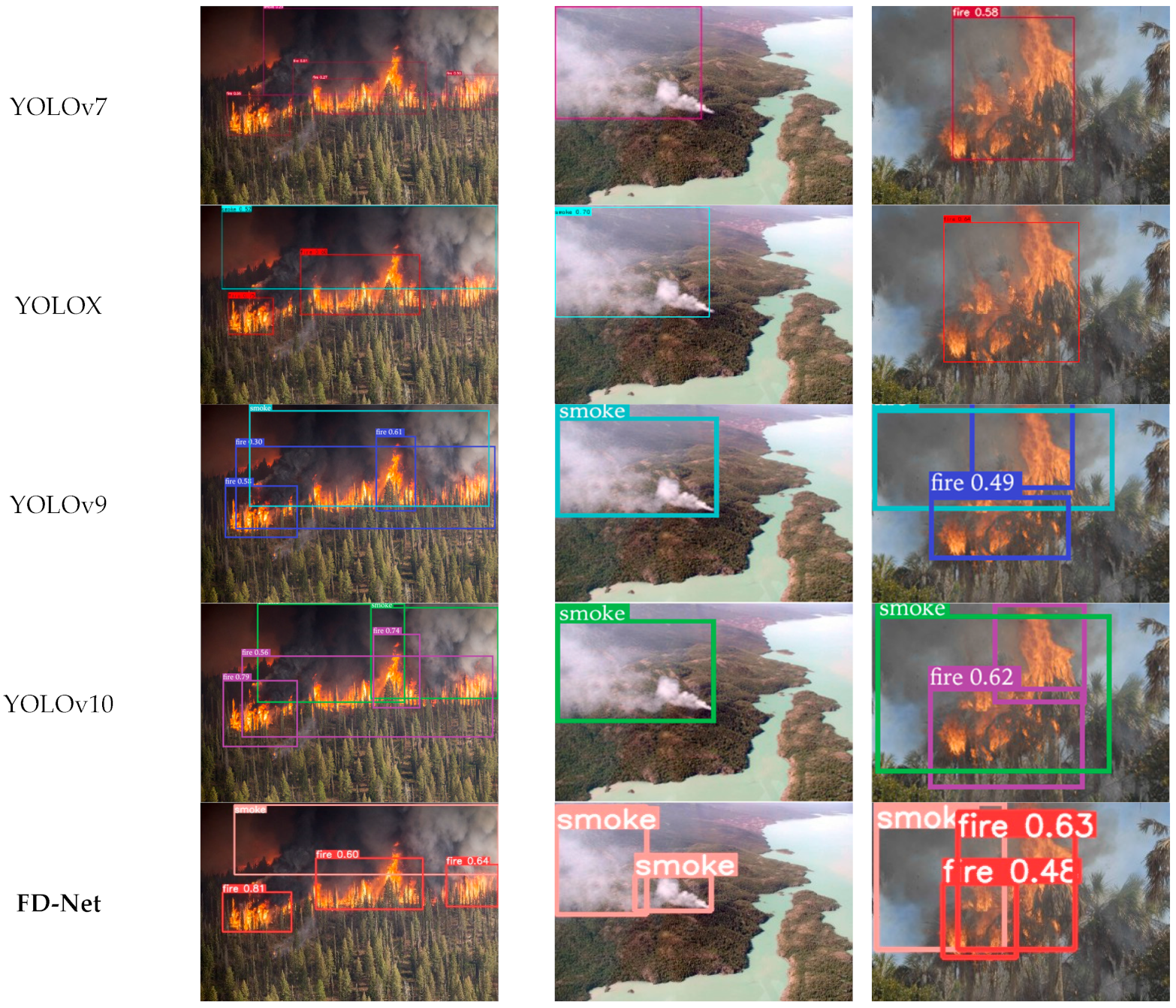

4.6. Qualitative Comparison

5. Extended Experiment

5.1. Quantitative Comparison

5.2. Performance Comparisons

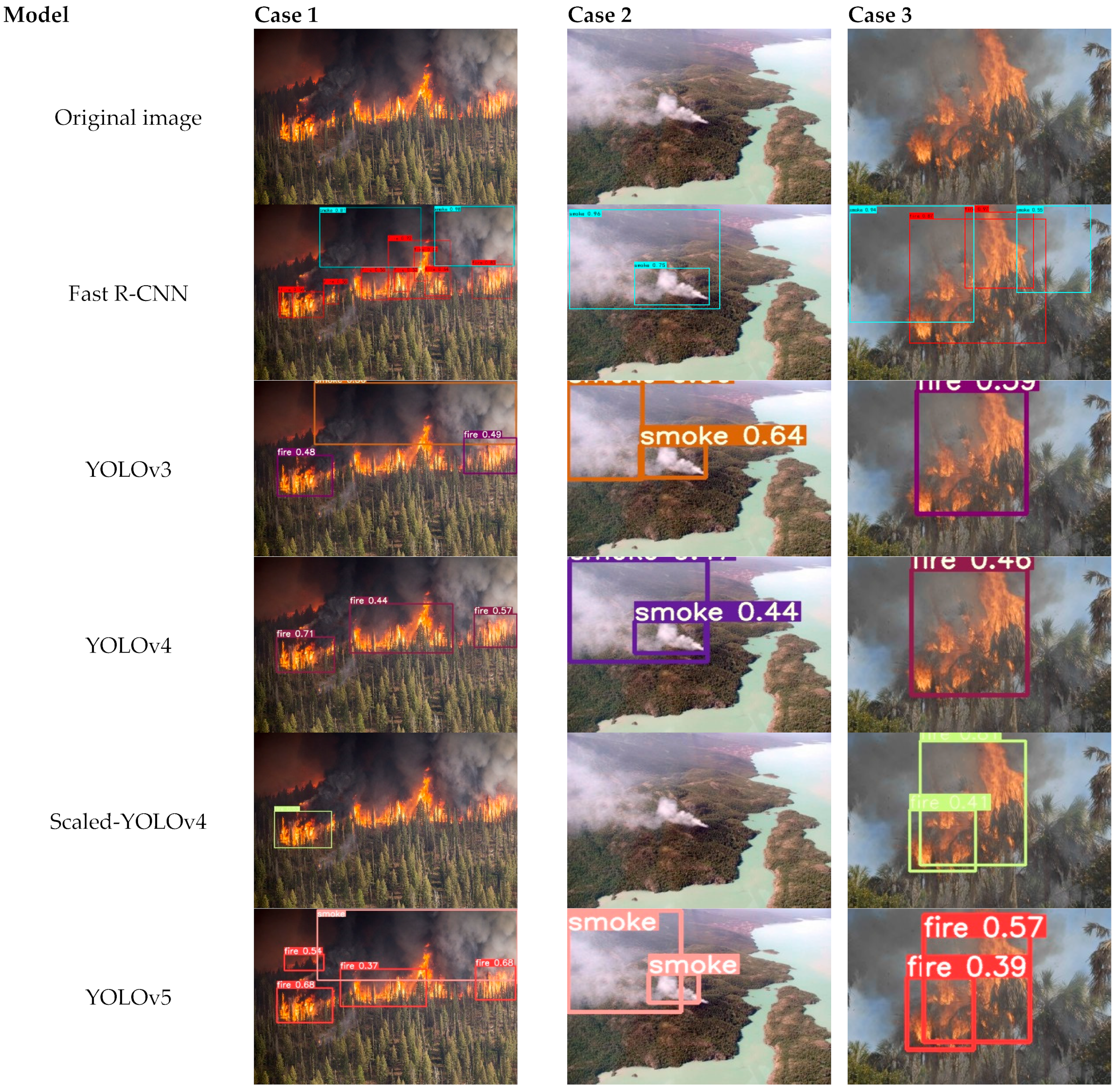

5.3. Qualitative Comparison

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Yang, H.; Wang, J.; Wang, J. Efficient Detection of Forest Fire Smoke in UAV Aerial Imagery Based on an Improved YOLOv5 Model and Transfer Learning. Remote Sens. 2023, 15, 5527. [Google Scholar] [CrossRef]

- Yuan, J.; Ma, X.; Han, G. Research on Lightweight Disaster Classification Based on High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 2577. [Google Scholar] [CrossRef]

- Chen, S.; Cao, Y.; Feng, X.; Lu, X. Global2Salient: Self-adaptive feature aggregation for remote sensing smoke detection. Neurocomputing 2021, 466, 202–220. [Google Scholar] [CrossRef]

- Ma, J.; Chen, J.; Ng, M.; Anne, L. Loss odyssey in medical image segmentation. Med. Image Anal. 2021, 71, 1361–8415. [Google Scholar] [CrossRef]

- Cheng, S.; Wu, Y.; Li, Y.; Yao, F. TWD-SFNN: Three-way decisions with a single hidden layer feedforward neural network. Inf. Sci. 2021, 579, 15–32. [Google Scholar] [CrossRef]

- Bodapati, S.; Bandarupally, H. Comparison and Analysis of RNN-LSTMs and CNNs for Social Reviews Classification. Adv. Intell. Syst. Comput. 2021, 1319, 49–59. [Google Scholar]

- Abdel-Magied, M.F.; Loparo, K.A.; Lin, W. Fault detection and diagnosis for rotating machinery: A model-based approach. In Proceedings of the 1998 American Control Conference, Philadelphia, PA, USA, 26 June 1998; pp. 3291–3296. [Google Scholar]

- Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788. [Google Scholar]

- Liu, W.; Fu, C.-Y. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV, Amsterdam, The Netherlands, 11–14 October 2016; p. 9905. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327. [Google Scholar] [CrossRef]

- Yar, H.; Khan, Z.A.; Ullah, F.U.M.; Ullah, W.; Baik, S.W. A modified YOLOv5 architecture for efficient fire detection in smart cities. Expert Syst. Appl. 2023, 231, 120465. [Google Scholar] [CrossRef]

- Mahaveerakannan, R.; Anitha, C.; Thomas, A.K.; Rajan, S.; Muthukumar, T.; Rajulu, G.G. An IoT based forest fire detection system using integration of cat swarm with LSTM model. Comput. Commun. 2023, 211, 37–45. [Google Scholar] [CrossRef]

- Shees, A.; Ansari, M.S.; Varshney, A.; Asghar, M.N.; Kanwaly, N. FireNet-v2: Improved Lightweight Fire Detection Model for Real-Time IoT Applications. Procedia Comput. Sci. 2023, 218, 2233–2242. [Google Scholar] [CrossRef]

- Jadon, A.; Omama, M.; Varshney, A. FireNet: A specialized lightweight fire & smoke detection model for real-time IoT applications. arXiv 2019, arXiv:1905.11922. [Google Scholar]

- Jiang, H.; Learned-Miller, E. Face detection with the faster R-CNN. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 650–657. [Google Scholar]

- Bharati, P.; Pramanik, A. Deep learning techniques—R-CNN to mask R-CNN: A survey. In Computational Intelligence in Pattern Recognition; Springer: Singapore, 2020; pp. 657–668. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162. [Google Scholar]

- Almeida, J.S.; Jagatheesaperumal, S.K.; Nogueira, F.G.; de Albuquerque, V.H. EdgeFireSmoke++: A novel lightweight algorithm for real-time forest fire detection and visualization using internet of things-human machine interface. Expert Syst. Appl. 2023, 221, 119747. [Google Scholar] [CrossRef]

- Pritam, D.; Dewan, J.H. Detection of fire using image processing techniques with LUV color space. In Proceedings of the 2017 2nd International Conference for Convergence in Technology (I2CT), Mumbai, India, 7–9 April 2017; pp. 1158–1162. [Google Scholar]

- Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. Int. J. Comput. Vis. 2020, 128, 642–656. [Google Scholar] [CrossRef]

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787. [Google Scholar]

- Chen, J.; He, Y.; Wang, J. Multi-feature fusion based fast video flame detection. Build. Environ. 2010, 45, 1113–1122. [Google Scholar] [CrossRef]

- Khatami, A.; Mirghasemi, S.; Khosravi, A.; Lim, C.P.; Nahavandi, S. A new PSO-based approach to fire flame detection using K-Medoids clustering. Expert Syst. Appl. 2017, 68, 69–80. [Google Scholar] [CrossRef]

- Chen, K.; Cheng, Y.; Bai, H.; Mou, C.; Zhang, Y. Research on Image Fire Detection Based on Support Vector Machine. In Proceedings of the 2019 9th International Conference on Fire Science and Fire Protection Engineering (ICFSFPE), Chengdu, China, 18–20 October 2019; pp. 1–7. [Google Scholar]

- Xia, D.; Wang, S. Research on Detection Method of Uncertainty Fire Signal Based on Fire Scenario. In Proceedings of the 2006 6th World Congress on Intelligent Control and Automation, Dalian, China, 21–23 June 2006; pp. 4185–4189. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141. [Google Scholar]

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13708–13717. [Google Scholar]

- Wang, J.; Yu, J.; He, Z. DECA: A novel multi-scale efficient channel attention module for object detection in real-life fire images. Appl. Intell. 2022, 52, 1362–1375. [Google Scholar] [CrossRef]

- Woo, S.; Park, J.; Lee, J.Y. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; p. 11211. [Google Scholar]

- Oksum, E.; Le, D.V.; Vu, M.D.; Nguyen, T.H.; Pham, L.T. A novel approach based on the fast sigmoid function for interpretation of potential field data. Bull. Geophys. Oceanogr. 2021, 62, 543–556. [Google Scholar]

- You, H.; Yu, L.; Tian, S. MC-Net: Multiple max-pooling integration module and cross multi-scale deconvolution network. Knowl. -Based Syst. 2021, 231, 107456. [Google Scholar] [CrossRef]

- Wang, S.-H.; Khan, M.A.; Govindaraj, V.; Fernandes, S.L.; Zhu, Z.; Zhang, Y.-D. Deep Rank-Based Average Pooling Network for COVID-19 Recognition. Comput. Mater. Contin. 2022, 70, 2797–2813. [Google Scholar] [CrossRef]

- Zhang, Y.D.; Satapathy, S.C.; Liu, S. A five-layer deep convolutional neural network with stochastic pooling for chest CT-based COVID-19 diagnosis. Mach. Vis. Appl. 2021, 32, 14. [Google Scholar] [CrossRef]

- Yang, Z. Activation Function: Cell Recognition Based on yolov5s/m. J. Comput. Commun. 2021, 9, 1–16. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J.A. A method for stochastic optimization, arXiv 2014. arXiv 2020, arXiv:1412.6980. [Google Scholar]

- Cantrell, K.; Erenas, M.M.; de Orbe-Payá, I. Use of the hue parameter of the hue, saturation, value color space as a quantitative analytical parameter for bitonal optical sensors. Anal. Chem. 2010, 82, 531–542. [Google Scholar] [CrossRef]

- Dong, P.; Galatsanos, N.P. Affine transformation resistant watermarking based on image normalization. Int. Conf. Image Process. 2002, 3, 489–492. [Google Scholar]

- Verma, V.; Lamb, A.; Beckham, C. Manifold Mixup: Better Representations by Interpolating Hidden States. Int. Conf. Mach. Learn. 2018, 97, 6438–6447. [Google Scholar]

- Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6022–6031. [Google Scholar]

- Zhong, Z.; Zheng, L.; Kang, G. Random erasing data augmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13001–13008. [Google Scholar]

- Jacksi, K.; Ibrahim, R.K.; Zeebaree, S.R.M.; Zebari, R.R.; Sadeeq, M.A.M. Clustering Documents Based on Semantic Similarity Using HAC and K-Mean Algorithms. In Proceedings of the 2020 International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 23–24 December 2020; pp. 205–210. [Google Scholar]

- Redmon, J.; Farhadi, A. yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13024–13033. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y. YOLOv9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458. [Google Scholar]

- Cao, Y.; Tang, Q.; Wu, X. EFFNet: Enhanced Feature Foreground Network for Video Smoke Source Prediction and Detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1820–1833. [Google Scholar] [CrossRef]

| Model | mAP (%) | GFLOPs | Params | Weight (M) | FPS |

|---|---|---|---|---|---|

| Fast R-CNN [16] | 60.90 | 370 | 137098724 | 113.5 | 20 |

| YOLOv3 [44] | 66.00 | 155.276 | 61529119 | 246.5 | 111 |

| YOLOv4 [8] | 65.20 | 141.917 | 63943071 | 256.3 | 83 |

| Scaled-YOLOv4 [45] | 64.21 | 166 | 70274000 | 141.2 | 90 |

| YOLOv5 [9] | 67.30 | 4.2 | 1766623 | 3.9 | 250 |

| YOLOv7 [46] | 62.72 | 105.1 | 37201950 | 74.8 | 125 |

| YOLOX [47] | 68.70 | 156 | 54208895 | 36 | 50 |

| YOLOv9 [48] | 68.63 | 28.9 | 8205369 | 66.9 | 32 |

| YOLOv10 [49] | 72.39 | 103.3 | 23156890 | 169.7 | 62 |

| FD-Net (ours) | 69.23 | 4.4 | 1980895 | 4.4 | 220 |

| Model | Aps | Apm | Apl |

|---|---|---|---|

| Fast R-CNN [16] | 0.582 | 0.6204 | 0.584 |

| YOLOv3 [44] | 0.422 | 0.624 | 0.537 |

| YOLOv4 [8] | 0.453 | 0.611 | 0.530 |

| Scaled-YOLOv4 [45] | 0.45 | 0.564 | 0.471 |

| YOLOv5 [9] | 0.454 | 0.627 | 0.522 |

| YOLOv7 [46] | 0.348 | 0.602 | 0.487 |

| YOLOX [47] | 0.510 | 0.632 | 0.594 |

| YOLOv9 [48] | 0.476 | 0.622 | 0.598 |

| YOLOv10 [49] | 0.536 | 0.640 | 0.530 |

| FD-Net (ours) | 0.492 | 0.651 | 0.534 |

| Model | mAP | fire | Apm(F) | Apl(F) | Smoke | Apm(S) | Apl(S) |

|---|---|---|---|---|---|---|---|

| Fast R-CNN [16] | 56.27 | 42.00 | 54.10 | 57.00 | 70.00 | 12.50 | 86.00 |

| YOLOv3 [44] | 60.20 | 45.60 | 36.60 | 39.40 | 74.80 | 19.20 | 79.90 |

| YOLOv4 [8] | 63.90 | 51.50 | 41.00 | 40.40 | 76.20 | 21.30 | 81.40 |

| Scaled-YOLOv4 [45] | 62.50 | 48.90 | 27.80 | 33.80 | 76.10 | 16.80 | 71.10 |

| YOLOv5 [9] | 60.30 | 46.40 | 39.90 | 40.80 | 74.20 | 15.90 | 79.40 |

| YOLOv7 [46] | 59.70 | 46.90 | 38.30 | 41.50 | 72.50 | 11.90 | 79.40 |

| YOLOX [47] | 66.42 | 55.00 | 72.10 | 59.00 | 78.00 | 24.30 | 94.00 |

| YOLOv9 [48] | 64.65 | 49.28 | 58.66 | 46.20 | 80.02 | 16.30 | 74.85 |

| YOLOv10 [49] | 65.02 | 53.12 | 45.69 | 46.91 | 76.91 | 14.69 | 71.31 |

| FD-Net(ours) | 65.10 | 52.60 | 44.00 | 47.70 | 77.60 | 16.90 | 80.60 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yuan, J.; Wang, H.; Li, M.; Wang, X.; Song, W.; Li, S.; Gong, W. FD-Net: A Single-Stage Fire Detection Framework for Remote Sensing in Complex Environments. Remote Sens. 2024, 16, 3382. https://doi.org/10.3390/rs16183382

Yuan J, Wang H, Li M, Wang X, Song W, Li S, Gong W. FD-Net: A Single-Stage Fire Detection Framework for Remote Sensing in Complex Environments. Remote Sensing. 2024; 16(18):3382. https://doi.org/10.3390/rs16183382

Chicago/Turabian StyleYuan, Jianye, Haofei Wang, Minghao Li, Xiaohan Wang, Weiwei Song, Song Li, and Wei Gong. 2024. "FD-Net: A Single-Stage Fire Detection Framework for Remote Sensing in Complex Environments" Remote Sensing 16, no. 18: 3382. https://doi.org/10.3390/rs16183382

APA StyleYuan, J., Wang, H., Li, M., Wang, X., Song, W., Li, S., & Gong, W. (2024). FD-Net: A Single-Stage Fire Detection Framework for Remote Sensing in Complex Environments. Remote Sensing, 16(18), 3382. https://doi.org/10.3390/rs16183382