ICTH: Local-to-Global Spectral Reconstruction Network for Heterosource Hyperspectral Images

Abstract

1. Introduction

The main contributions of this paper are as follows:

- (1) We propose ICTH for heterosource hyperspectral image reconstruction. It combines a CNN and a Transformer in a coarse-to-fine reconstruction scheme and achieves the best or near-best scores on three hyperspectral datasets;

- (2) We propose an efficient, plug-and-play spatial–spectral attention mechanism (S2AM) that extracts fine-grained features in the spatial and spectral dimensions simultaneously, while its computational complexity grows only linearly with spatial size;

- (3) We refine the preprocessing of heterosource image data to improve spectral reconstruction (SR) accuracy;

- (4) We present a vegetation-index (VI)-based assessment of the effectiveness of spectral reconstruction.
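Contribution (2) says that S2AM's complexity scales linearly with spatial size. The internals of S2AM are not given in this section, so the sketch below is only a hedged illustration of one well-known way to get that property: spectral-wise (channel) self-attention in the style of MST++ and Restormer, where each band is a token and the attention matrix is C × C instead of (HW) × (HW). This is not the authors' S2AM. It covers only the spectral branch, and the function names, the fixed `temperature`, and the random projection weights `w_q`, `w_k`, `w_v` are all hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along one axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def spectral_attention_map(x, w_q, w_k, temperature=1.0):
    """C x C attention between bands; x is (C, H*W), one token per band."""
    q, k = w_q @ x, w_k @ x                      # 1x1 projections, (C, H*W) each
    # L2-normalise each band token over the spatial axis (cosine-style logits).
    q = q / (np.linalg.norm(q, axis=1, keepdims=True) + 1e-8)
    k = k / (np.linalg.norm(k, axis=1, keepdims=True) + 1e-8)
    # (C, H*W) @ (H*W, C): O(C^2 * H * W) work, linear in the pixel count.
    return softmax((q @ k.T) / temperature, axis=-1)


def spectral_attention(feat, w_q, w_k, w_v, temperature=1.0):
    """Spectral-wise self-attention on a (C, H, W) feature map."""
    c, h, w = feat.shape
    x = feat.reshape(c, h * w)
    attn = spectral_attention_map(x, w_q, w_k, temperature)   # (C, C)
    # (C, C) @ (C, H*W): again linear in H * W.
    return (attn @ (w_v @ x)).reshape(c, h, w)


# Usage with random weights (hypothetical sizes).
rng = np.random.default_rng(0)
bands = 8
feat = rng.standard_normal((bands, 16, 16))
w_q, w_k, w_v = (rng.standard_normal((bands, bands)) * 0.1 for _ in range(3))
out = spectral_attention(feat, w_q, w_k, w_v)
print(out.shape)  # (8, 16, 16)
```

Because the attention matrix is indexed by bands rather than pixels, it stays C × C however large the input tile is; full pixel-to-pixel attention would need an (HW) × (HW) matrix, which is quadratic in spatial size. How S2AM combines this kind of spectral mixing with its spatial branch is not specified here.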

2. Related Work

2.1. Hyperspectral Image Reconstruction

2.2. Vision Transformer

3. Materials and Methods

3.1. Study Area and Experimental Design

3.2. Aerial Image Acquisition and Data Preprocessing

3.3. Remote Sensing Image Preprocessing

3.3.1. RGB and Multispectral Image Mosaic

3.3.2. Geometric Correction and Ortho-Stitching of Hyperspectral Data

3.4. Remote Sensing Image Alignment
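Section 5.1 stresses the importance of geographic alignment, and the alignment table reports much worse scores in the "w/o G" rows. The exact alignment procedure is not described in this section. As a hedged sketch of the basic idea, the code below resamples a source raster onto a target raster's georeferenced grid so that pixel (r, c) in both images covers the same ground location. It assumes north-up affine geotransforms in the GDAL order `(x0, dx, 0, y0, 0, dy)` and nearest-neighbour sampling; the function names are hypothetical, and a production pipeline would typically use a GIS library and a better resampling kernel.

```python
import numpy as np


def pixel_centers_to_map(gt, rows, cols):
    """Map coordinates of pixel centres for a north-up geotransform."""
    x0, dx, _, y0, _, dy = gt
    return x0 + (cols + 0.5) * dx, y0 + (rows + 0.5) * dy


def resample_to_grid(src, src_gt, dst_shape, dst_gt, fill=np.nan):
    """Nearest-neighbour resampling of src (bands, H, W) onto a target grid.

    Target pixels that fall outside the source footprint are set to `fill`.
    """
    for gt in (src_gt, dst_gt):
        if gt[2] != 0 or gt[4] != 0:
            raise ValueError("only north-up (unrotated) geotransforms are handled")
    h, w = dst_shape
    rows, cols = np.mgrid[0:h, 0:w]
    x, y = pixel_centers_to_map(dst_gt, rows, cols)
    sx0, sdx, _, sy0, _, sdy = src_gt
    # Invert the source geotransform to find which source pixel holds (x, y).
    src_cols = np.floor((x - sx0) / sdx).astype(int)
    src_rows = np.floor((y - sy0) / sdy).astype(int)
    valid = ((src_rows >= 0) & (src_rows < src.shape[1])
             & (src_cols >= 0) & (src_cols < src.shape[2]))
    out = np.full((src.shape[0], h, w), fill, dtype=float)
    out[:, valid] = src[:, src_rows[valid], src_cols[valid]]
    return out


# Usage: a 1-band 4x4 raster with 1 m pixels, resampled to a 2 m grid.
src = np.arange(16, dtype=float).reshape(1, 4, 4)
gt_src = (100.0, 1.0, 0.0, 200.0, 0.0, -1.0)
coarse = resample_to_grid(src, gt_src, (2, 2), (100.0, 2.0, 0.0, 200.0, 0.0, -2.0))
```

Working in map coordinates rather than raw pixel indices is what lets images from different sensors, with different resolutions and footprints, be paired pixel by pixel.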

3.5. Model Construction

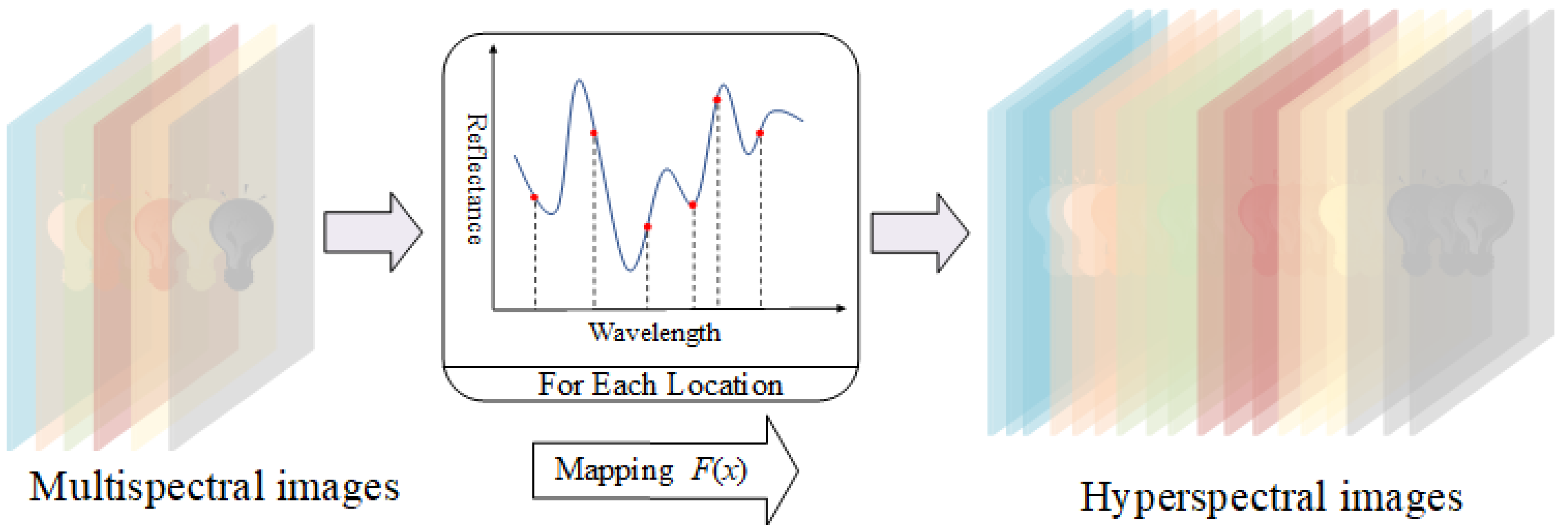

3.5.1. Problem Formulation
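As a generic statement of the task, with symbols chosen here for illustration rather than taken from the paper, spectral reconstruction learns a mapping from a co-registered image with few bands (for example RGB or multispectral, c bands) to a hyperspectral image with C ≫ c bands over the same ground area, using N aligned training pairs:

```latex
\begin{aligned}
&\mathbf{X} \in \mathbb{R}^{H \times W \times c}, \qquad
 \mathbf{Y} \in \mathbb{R}^{H \times W \times C}, \qquad C \gg c, \\
&\hat{\mathbf{Y}} = f_{\Theta}(\mathbf{X}), \qquad
 \Theta^{*} = \arg\min_{\Theta} \sum_{n=1}^{N}
 \mathcal{L}\big(f_{\Theta}(\mathbf{X}_n),\, \mathbf{Y}_n\big)
\end{aligned}
```

The loss \(\mathcal{L}\) is left abstract because its form is not stated in this section; a relative absolute error is a common choice in the spectral reconstruction literature, but that is an assumption here.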

3.5.2. Network Architecture

3.5.3. Spatial–Spectral Conv3D Block
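The block's exact layout (kernel sizes, layer count, activations, residual connections) is not given in this section. The sketch below only illustrates what a single 3-D convolution does on a hyperspectral cube: the kernel slides jointly along the spectral axis and both spatial axes, so each output value mixes neighbouring bands and neighbouring pixels. It uses zero "same" padding and computes cross-correlation, as deep-learning frameworks do; `conv3d_same` is a hypothetical name.

```python
import numpy as np


def conv3d_same(volume, kernel):
    """Single-filter 3-D cross-correlation with zero 'same' padding.

    volume: (D, H, W), where D is the spectral (band) axis.
    kernel: (kd, kh, kw) with odd sizes.
    """
    kd, kh, kw = kernel.shape
    pd, ph, pw = kd // 2, kh // 2, kw // 2
    padded = np.pad(volume, ((pd, pd), (ph, ph), (pw, pw)))  # zeros by default
    d, h, w = volume.shape
    out = np.zeros((d, h, w), dtype=float)
    # Shift-and-add: each kernel tap weights a shifted copy of the cube.
    for i in range(kd):
        for j in range(kh):
            for k in range(kw):
                out += kernel[i, j, k] * padded[i:i + d, j:j + h, k:k + w]
    return out


# Usage: a (3, 1, 1) kernel of ones sums each band with its spectral neighbours.
vol = np.arange(12, dtype=float).reshape(3, 2, 2)
spectral_sum = conv3d_same(vol, np.ones((3, 1, 1)))
```

A 2-D convolution would instead treat the bands as independent input channels with no notion of spectral adjacency; the 3-D kernel shares weights along the band axis.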

3.5.4. Spatial–Spectral Attention Mechanism

3.5.5. High-Frequency Extractor
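The extractor's design is not described in this section. One common, simple way to isolate high-frequency content (edges, fine texture) is to subtract a low-pass version of an image from the image itself. The sketch below uses a box blur with edge padding as the low-pass filter; this filter choice and the function names are assumptions, and the paper's extractor may well be learned rather than fixed.

```python
import numpy as np


def box_blur(img, radius=1):
    """Mean filter over a (2r+1) x (2r+1) window with edge padding; img is (H, W)."""
    k = 2 * radius + 1
    p = np.pad(img, radius, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            acc += p[dy:dy + h, dx:dx + w]
    return acc / (k * k)


def high_frequency(img, radius=1):
    """High-pass residual: the image minus its low-pass (blurred) version."""
    return img - box_blur(img, radius)


# Usage: a vertical step edge produces a response only next to the edge.
step = np.zeros((5, 6))
step[:, 3:] = 1.0
hf = high_frequency(step)
```

Flat regions give zero response, while pixels on either side of the step get opposite-signed values, which is the kind of detail signal a high-frequency branch is meant to preserve.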

3.6. Model Performance Evaluation

3.6.1. Visual Quality Evaluation Metrics
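The result tables report MARE, RMSE, PSNR, SSIM and SAM. Their exact definitions (data scaling, PSNR peak value, SAM units, per-image versus whole-dataset averaging) are not given in this section, so the sketch below uses common textbook forms as assumptions: reflectance-like data in [0, 1], a PSNR peak of 1.0, and SAM in radians averaged over pixels. SSIM is omitted; scikit-image provides `skimage.metrics.structural_similarity` for it.

```python
import numpy as np


def mare(pred, gt, eps=1e-8):
    """Mean absolute relative error, |pred - gt| / |gt| averaged over all values."""
    return float(np.mean(np.abs(pred - gt) / (np.abs(gt) + eps)))


def rmse(pred, gt):
    """Root-mean-square error over all bands and pixels."""
    return float(np.sqrt(np.mean((pred - gt) ** 2)))


def psnr(pred, gt, peak=1.0):
    """Peak signal-to-noise ratio in dB, assuming values scaled to [0, peak]."""
    return float(20.0 * np.log10(peak / rmse(pred, gt)))


def sam(pred, gt, eps=1e-8):
    """Mean spectral angle in radians; cubes are (bands, H, W)."""
    dot = np.sum(pred * gt, axis=0)
    norms = np.linalg.norm(pred, axis=0) * np.linalg.norm(gt, axis=0)
    cos = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return float(np.mean(np.arccos(cos)))


# Usage: a uniform +0.1 offset on a flat 0.5 reference cube.
gt = np.full((4, 2, 2), 0.5)
pred = gt + 0.1
scores = {"MARE": mare(pred, gt), "RMSE": rmse(pred, gt),
          "PSNR": psnr(pred, gt), "SAM": sam(pred, gt)}
```

The example also shows why the metrics are complementary: a uniform brightness offset raises MARE and RMSE but leaves SAM near zero, because SAM measures only the shape of each spectrum, not its magnitude.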

3.6.2. Application Evaluation Metrics
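Contribution (4) and Section 4.2.1 assess reconstruction through vegetation indices rather than pixel errors alone. Which indices the paper uses is not stated in this section, so the sketch below takes NDVI as an example: it picks the bands nearest assumed red (670 nm) and near-infrared (800 nm) wavelengths, computes NDVI for a cube, and scores agreement between reconstructed and reference NDVI with the coefficient of determination. The wavelength grid, band centres and function names are hypothetical.

```python
import numpy as np


def band_index(wavelengths, target_nm):
    """Index of the band whose centre wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(np.asarray(wavelengths) - target_nm)))


def ndvi(cube, wavelengths, red_nm=670.0, nir_nm=800.0, eps=1e-8):
    """NDVI = (NIR - Red) / (NIR + Red) for a (bands, H, W) cube."""
    red = cube[band_index(wavelengths, red_nm)]
    nir = cube[band_index(wavelengths, nir_nm)]
    return (nir - red) / (nir + red + eps)


def r_squared(y_true, y_pred):
    """Coefficient of determination between reference and predicted values."""
    y_true, y_pred = np.ravel(y_true), np.ravel(y_pred)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


# Usage with a hypothetical 400-1000 nm grid at 10 nm spacing (61 bands).
wl = np.arange(400, 1001, 10)
# With real data: r_squared(ndvi(reference_cube, wl), ndvi(reconstructed_cube, wl))
```

An index-level check like this asks whether the reconstructed spectra are good enough for the downstream agronomic quantity, which pixel metrics such as RMSE do not answer directly.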

3.7. Training Setting

3.7.1. Dataset

3.7.2. Implementation Details

4. Results

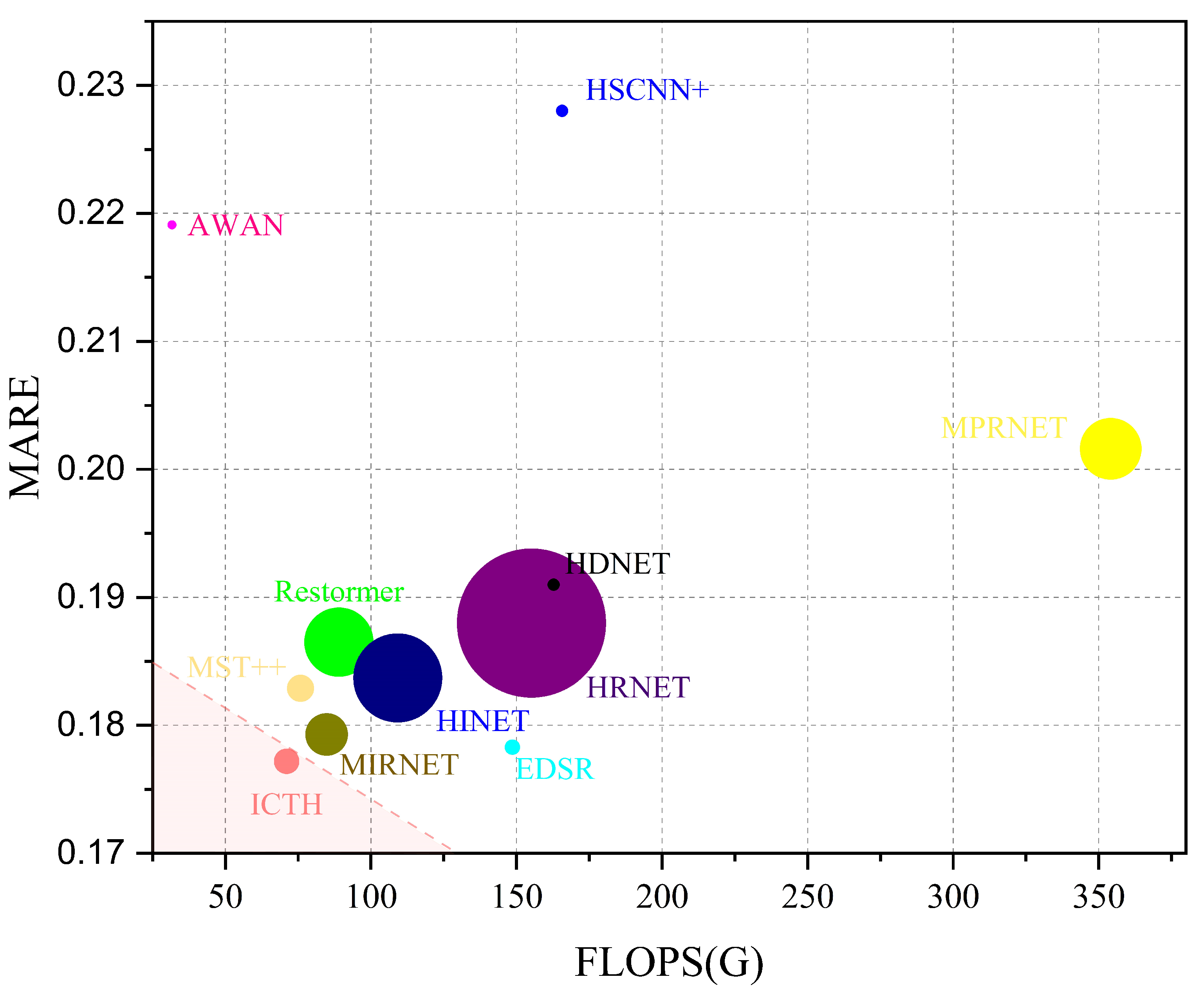

4.1. Comparison with SOTA Methods

4.1.1. Quantitative Results

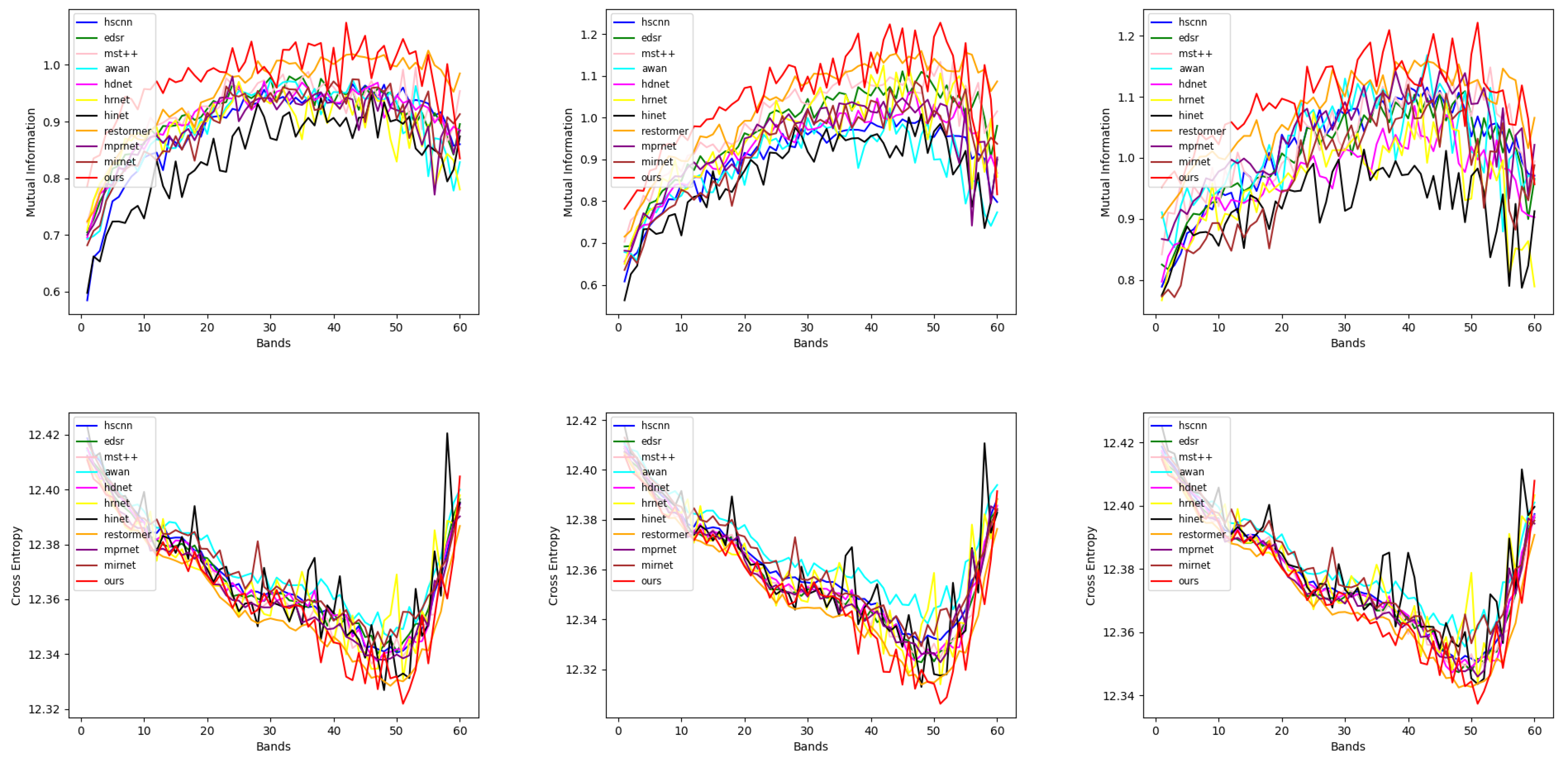

4.1.2. Qualitative Results

4.1.3. Integrated Assessment

4.2. Application Validation

4.2.1. Validation of the Application of VI

4.2.2. Verification of Generalizability

4.3. Ablation Study

4.3.1. Decomposition Ablation

4.3.2. Attention Comparison

5. Discussion

5.1. Importance of Geographic Alignment

5.2. Scalability Challenges in Spectral Reconstruction of UAV Hyperspectral Imagery

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Flight Setting | Value | Flight Setting | Value |
|---|---|---|---|
| Flight altitude | 50 m | Main flight line angle | 182° |
| Flight speed | 2.3 m/s | Gimbal (camera) pitch angle | −90° |
| Forward (heading) overlap | 94% | Distance between photos | Forward: 3.7 m; side: 9.8 m |
| Side overlap | 89% | Photo interval | 2.0 s |

| Model | MARE (NTIRE 2022) | RMSE (NTIRE 2022) | PSNR, dB (NTIRE 2022) | SSIM (NTIRE 2022) | SAM (NTIRE 2022) | MARE (Rice Field) | RMSE (Rice Field) | PSNR, dB (Rice Field) | SSIM (Rice Field) | SAM (Rice Field) |
|---|---|---|---|---|---|---|---|---|---|---|
| HSCNN+ | 0.4048 | 0.0593 | 26.03 | 0.823 | 0.243 | 0.2280 | 0.0190 | 34.51 | 0.915 | 0.164 |
| HRNET | 0.4178 | 0.0570 | 26.31 | 0.839 | 0.182 | 0.1880 | 0.0221 | 33.24 | 0.911 | 0.169 |
| HINET | 0.3705 | 0.0503 | 27.80 | 0.871 | 0.140 | 0.1837 | 0.0193 | 34.46 | 0.910 | 0.180 |
| EDSR | 0.3331 | 0.0456 | 27.99 | 0.879 | 0.201 | 0.1783 | 0.0199 | 34.19 | 0.919 | 0.164 |
| HDNET | 0.2682 | 0.0373 | 29.87 | 0.915 | 0.128 | 0.1910 | 0.0192 | 34.46 | 0.915 | 0.169 |
| AWAN | 0.2499 | 0.0367 | 31.22 | 0.916 | 0.101 | 0.2191 | 0.0224 | 33.14 | 0.908 | 0.171 |
| MIRNET | 0.2012 | 0.0287 | 32.72 | 0.943 | 0.092 | 0.2016 | 0.0207 | 33.80 | 0.912 | 0.170 |
| MPRNET | 0.2032 | 0.0284 | 32.87 | 0.946 | 0.102 | 0.1793 | 0.0201 | 34.06 | 0.918 | 0.162 |
| Restormer | 0.1842 | 0.0280 | 33.22 | 0.945 | 0.094 | 0.1865 | 0.0188 | 34.86 | 0.920 | 0.162 |
| MST++ | 0.1836 | 0.0279 | 33.41 | 0.951 | 0.085 | 0.1829 | 0.0192 | 34.42 | 0.918 | 0.162 |
| Ours | 0.1672 | 0.0246 | 34.26 | 0.952 | 0.088 | 0.1772 | 0.0186 | 34.76 | 0.922 | 0.160 |

| Model | MARE | RMSE | PSNR (dB) | SSIM | SAM |
|---|---|---|---|---|---|
| HSCNN+ | 0.4732 | 0.0200 | 34.02 | 0.872 | 0.264 |
| HRNET | 0.3605 | 0.0167 | 35.64 | 0.893 | 0.246 |
| HINET | 0.3783 | 0.0184 | 34.79 | 0.876 | 0.296 |
| EDSR | 0.3895 | 0.0176 | 35.15 | 0.891 | 0.250 |
| HDNET | 0.4103 | 0.0175 | 35.19 | 0.888 | 0.249 |
| AWAN | 0.4688 | 0.0206 | 33.77 | 0.875 | 0.252 |
| MIRNET | 0.4423 | 0.0186 | 34.64 | 0.874 | 0.263 |
| MPRNET | 0.4444 | 0.0189 | 34.54 | 0.878 | 0.258 |
| Restormer | 0.3740 | 0.0168 | 35.55 | 0.893 | 0.254 |
| MST++ | 0.3912 | 0.0170 | 35.45 | 0.891 | 0.249 |
| Ours | 0.3278 | 0.0162 | 35.90 | 0.900 | 0.249 |

| CSSM | HFE | MSA | MARE | RMSE | PSNR | SSIM | SAM |

|---|---|---|---|---|---|---|---|

| 0.2385 | 0.0211 | 33.62 | 0.891 | 0.200 | |||

| ✔ | W-MSA | 0.2018 | 0.0194 | 34.32 | 0.906 | 0.175 | |

| ✔ | 0.2180 | 0.0193 | 34.42 | 0.912 | 0.167 | ||

| ✔ | ✔ | W-MSA | 0.1914 | 0.0191 | 34.47 | 0.914 | 0.169 |

| ✔ | ✔ | S-MSA | 0.1859 | 0.0192 | 34.42 | 0.907 | 0.167 |

| ✔ | ✔ | SS2M | 0.1772 | 0.0186 | 34.76 | 0.922 | 0.160 |

| Experimental Area | Alignment | MARE | RMSE | PSNR (dB) | SSIM | SAM |
|---|---|---|---|---|---|---|
| Area 1 | G | 0.1772 | 0.0186 | 34.76 | 0.922 | 0.160 |
| Area 1 | w/o G | 0.3745 | 0.0463 | 26.73 | 0.787 | 0.306 |
| Area 2 | G | 0.3278 | 0.0162 | 35.90 | 0.900 | 0.248 |
| Area 2 | w/o G | 11.9138 | 0.0602 | 24.66 | 0.645 | 0.434 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, H.; Liu, Z.; Huang, Z.; Wang, X.; Su, W.; Zhang, Y. ICTH: Local-to-Global Spectral Reconstruction Network for Heterosource Hyperspectral Images. Remote Sens. 2024, 16, 3377. https://doi.org/10.3390/rs16183377
