Visual Localization Method for Unmanned Aerial Vehicles in Urban Scenes Based on Shape and Spatial Relationship Matching of Buildings

Abstract

1. Introduction

- (1) In the shape and spatial relationship matching (SSRM) method, vector e-map data (stored in .shp format) are used as geo-referenced data instead of pre-collected images or image-based map data. The e-map data comprehensively reflect both the individual characteristics of buildings and the spatial relationships among them, while also reducing the amount of data that must be prestored on the UAV.

- (2) We propose a scene matching method that uses the shape information and spatial relationships of buildings to match UAV images with geo-referenced data. Compared with existing map-based matching methods, it gives greater weight to the spatial relationships between buildings, which makes the matching process considerably more robust.

- (3)

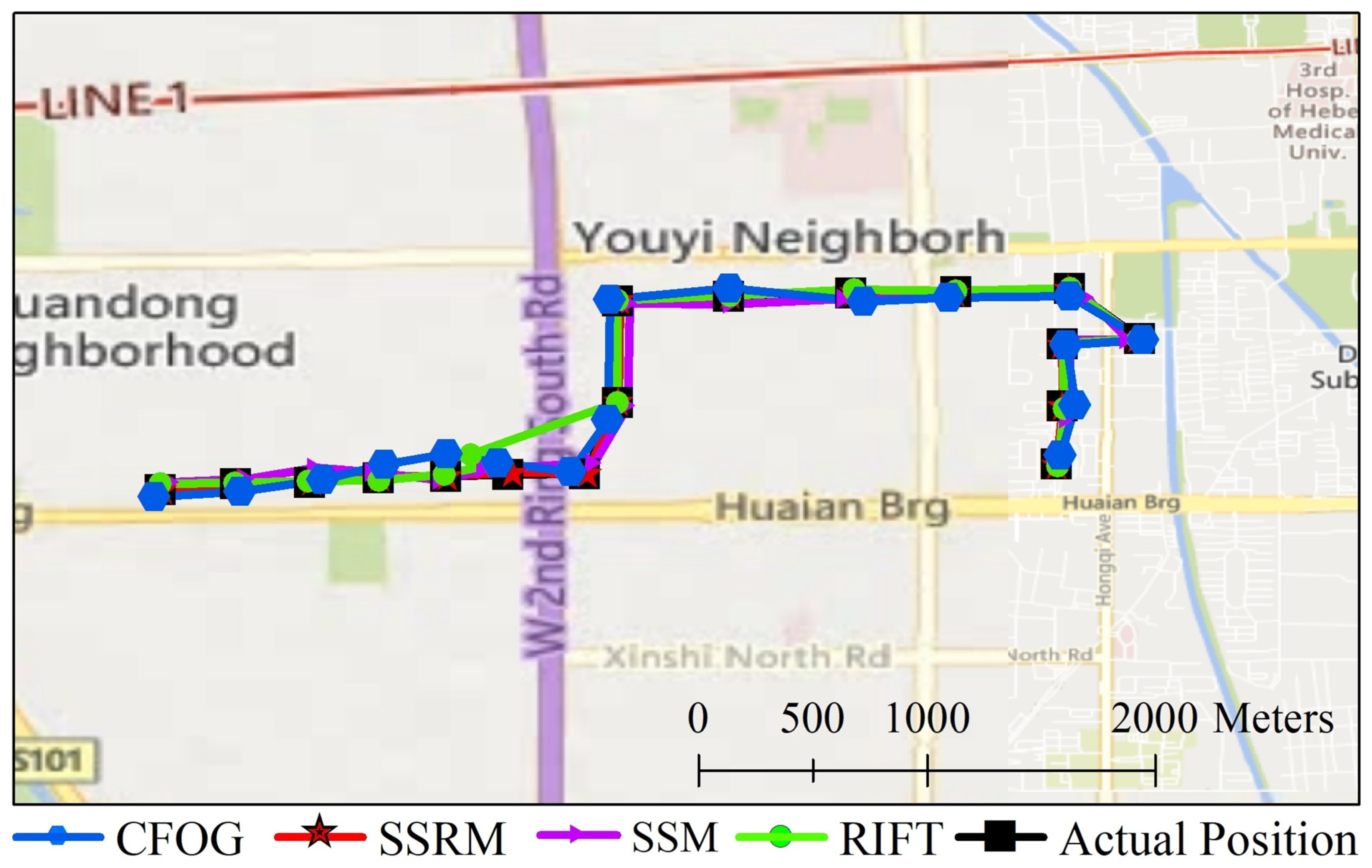

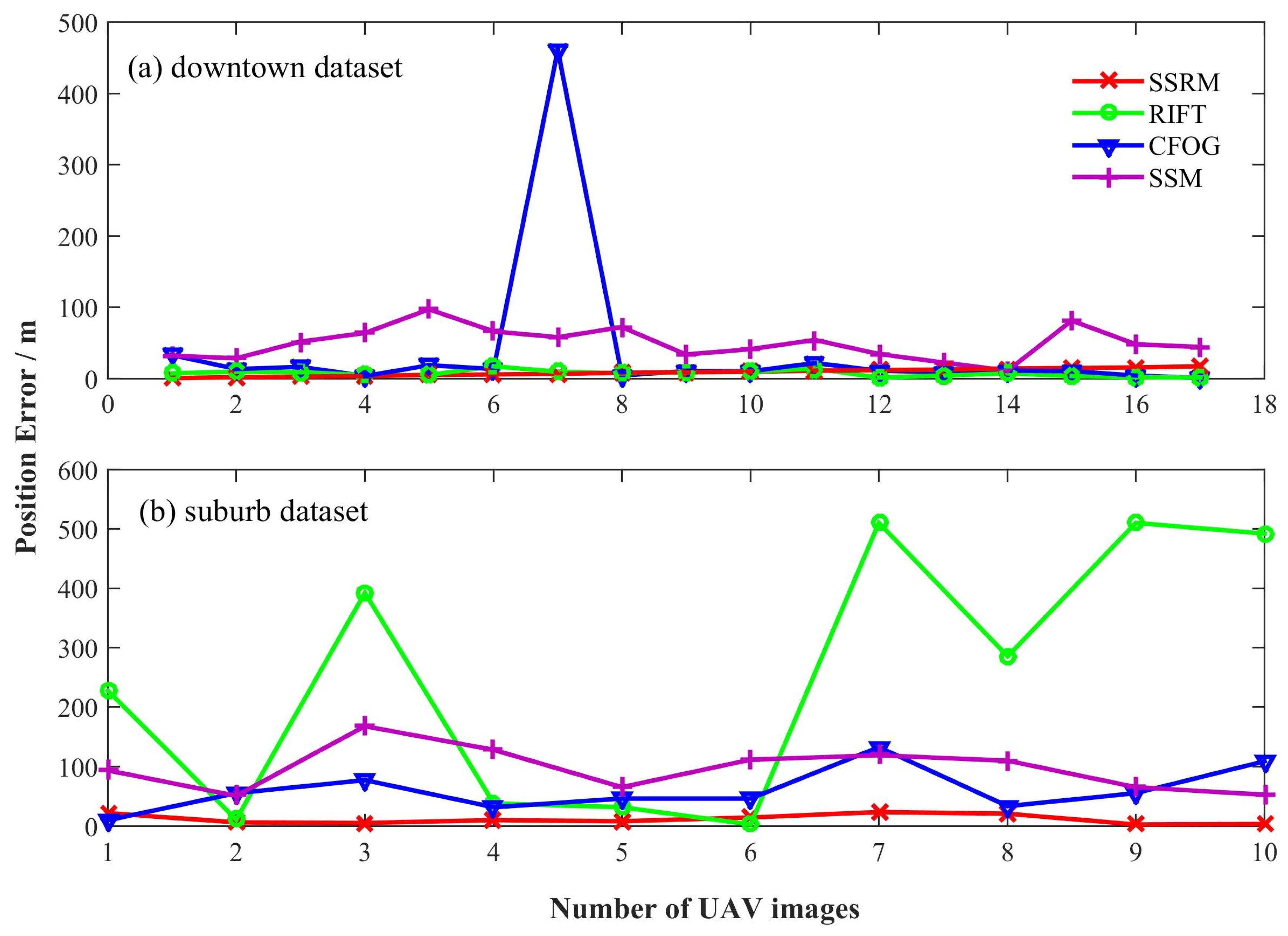

- The effectiveness of the SSRM method is verified via simulation flight data. Moreover, we compare the SSRM method with the radiation-variation insensitive feature transform (RIFT) feature matching algorithm [13], the channel features of oriented gradient (CFOG) template matching algorithm [14], and the SSM map-based algorithm [10]. The consideration of the shape and spatial relationships of buildings ensures the accuracy of scene matching and provides far better localization accuracy.
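To make the idea in contribution (2) concrete, the following is a minimal sketch of matching building footprints by shape and by spatial relationship. It is not the authors' SSRM implementation: the shape descriptor (footprint area), the spatial descriptor (pairwise centroid distance), the weight `w_spatial`, the brute-force search, and all function names are assumptions chosen for illustration. It also assumes the footprints extracted from the UAV image are already north-aligned and scaled to metres.

```python
import math
from itertools import permutations


def area_and_centroid(polygon):
    """Return (area, (cx, cy)) of a simple polygon via the shoelace formula."""
    signed = cx = cy = 0.0
    n = len(polygon)
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        signed += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    signed *= 0.5
    return abs(signed), (cx / (6.0 * signed), cy / (6.0 * signed))


def assignment_cost(img, ref, assign, w_spatial=1.0):
    """Cost of pairing image building i with map building assign[i]."""
    # Shape term (hypothetical descriptor): relative difference in area.
    cost = sum(
        abs(img[i][0] - ref[j][0]) / max(img[i][0], ref[j][0])
        for i, j in enumerate(assign)
    )
    # Spatial term: relative difference in centroid distance for every pair.
    for i in range(len(assign)):
        for k in range(i + 1, len(assign)):
            d_img = math.dist(img[i][1], img[k][1])
            d_ref = math.dist(ref[assign[i]][1], ref[assign[k]][1])
            cost += w_spatial * abs(d_img - d_ref) / max(d_img, d_ref, 1e-9)
    return cost


def match_buildings(img_polys, ref_polys):
    """Brute-force the lowest-cost assignment; only viable for a few buildings."""
    img = [area_and_centroid(p) for p in img_polys]
    ref = [area_and_centroid(p) for p in ref_polys]
    best = min(
        permutations(range(len(ref)), len(img)),
        key=lambda a: assignment_cost(img, ref, a),
    )
    # Mean centroid shift from image coordinates to map coordinates.
    n = len(best)
    dx = sum(ref[j][1][0] - img[i][1][0] for i, j in enumerate(best)) / n
    dy = sum(ref[j][1][1] - img[i][1][1] for i, j in enumerate(best)) / n
    return best, (dx, dy)


def rect(x, y, w, h):
    """Axis-aligned rectangle as a counter-clockwise vertex list."""
    return [(x, y), (x + w, y), (x + w, y + h), (x, y + h)]


# Toy map footprints (metres) and three of them as seen in a shifted image frame.
map_buildings = [rect(0, 0, 10, 10), rect(30, 0, 20, 10),
                 rect(0, 40, 10, 30), rect(50, 50, 15, 15)]
image_buildings = [rect(-5, 43, 10, 30), rect(-5, 3, 10, 10), rect(25, 3, 20, 10)]

assignment, offset = match_buildings(image_buildings, map_buildings)
print(assignment, offset)
```

In this toy case the image footprints are the third, first, and second map buildings shifted by (-5, 3) m, so the recovered offset is the translation that maps image coordinates back onto the map. The pairwise-distance term is what lets the spatial layout disambiguate buildings whose shapes alone are similar.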

2. Related Works

2.1. Image-Based Matching Methods

2.1.1. Template Matching Methods

2.1.2. Feature Matching Methods

2.1.3. Deep Learning-Based Matching Methods

2.2. Map-Based Matching Methods

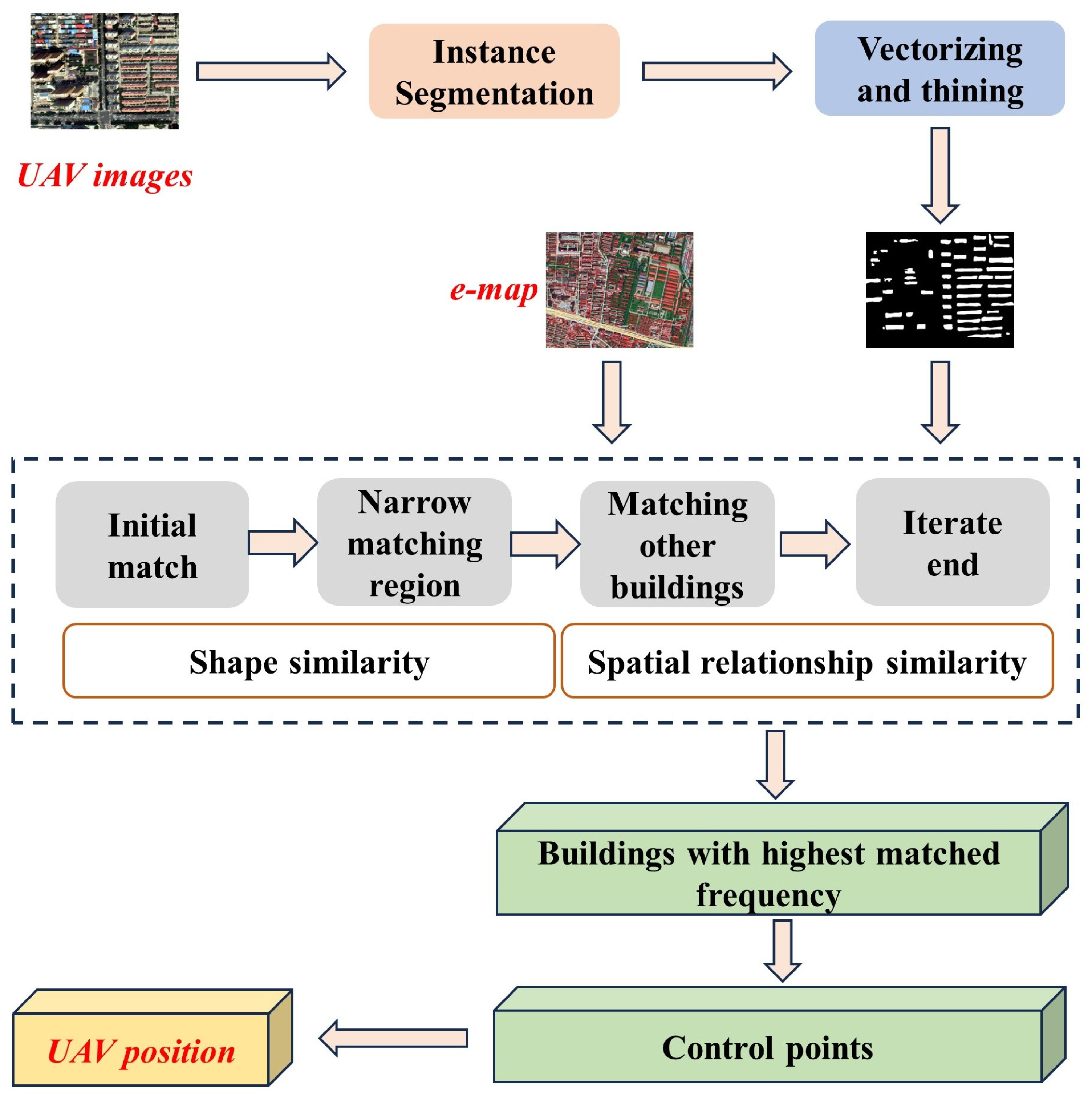

3. Methodology

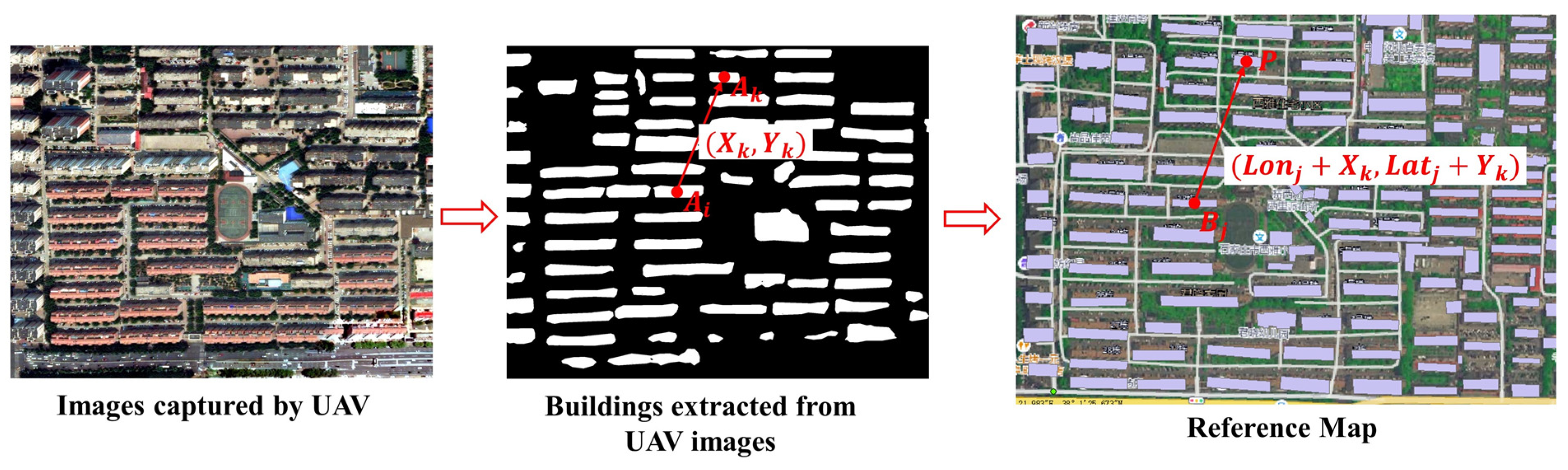

3.1. Individual Building Extraction

3.2. Scene Matching and UAV Position Determination

4. Data and Experiments

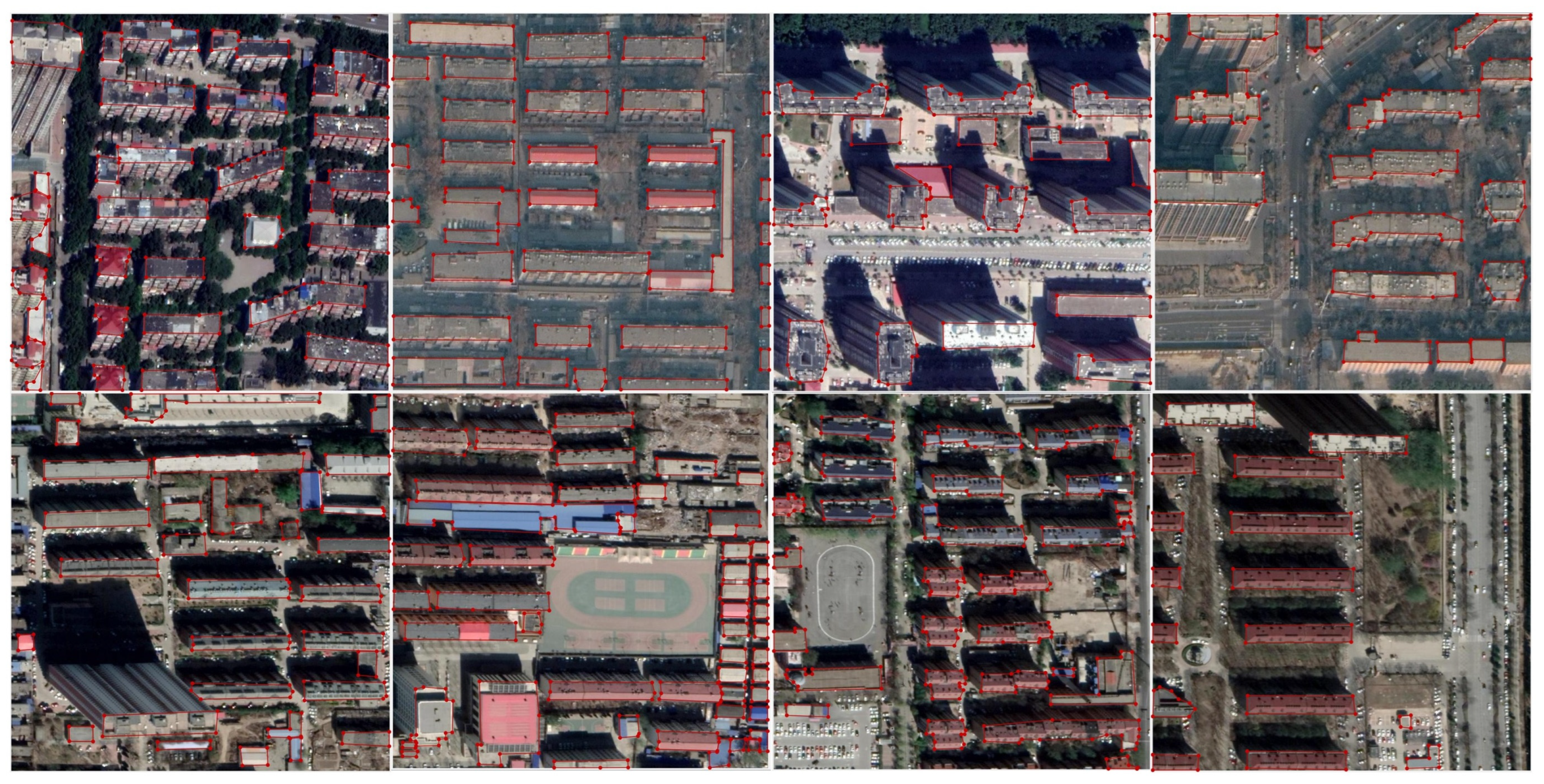

4.1. Instance Segmentation Dataset

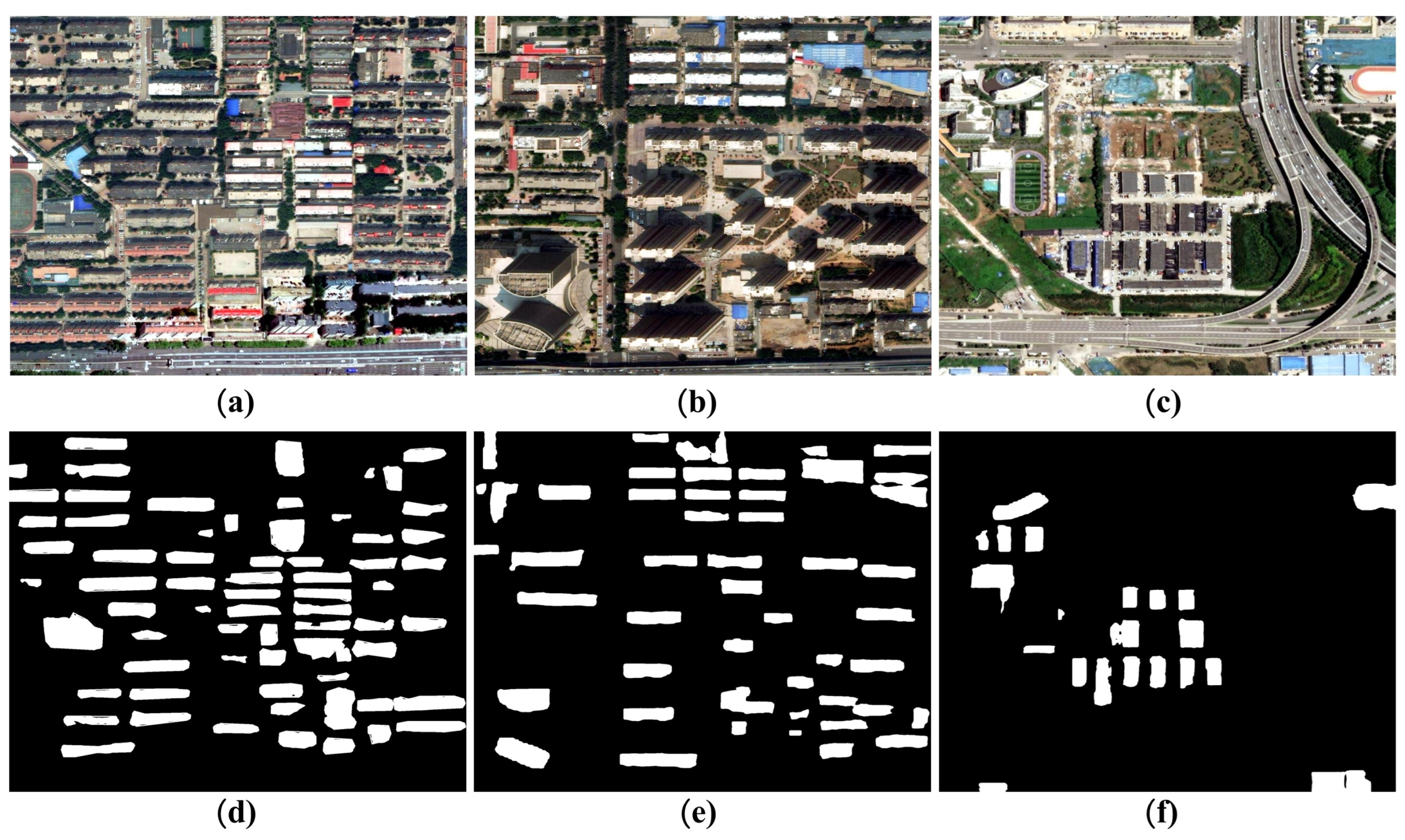

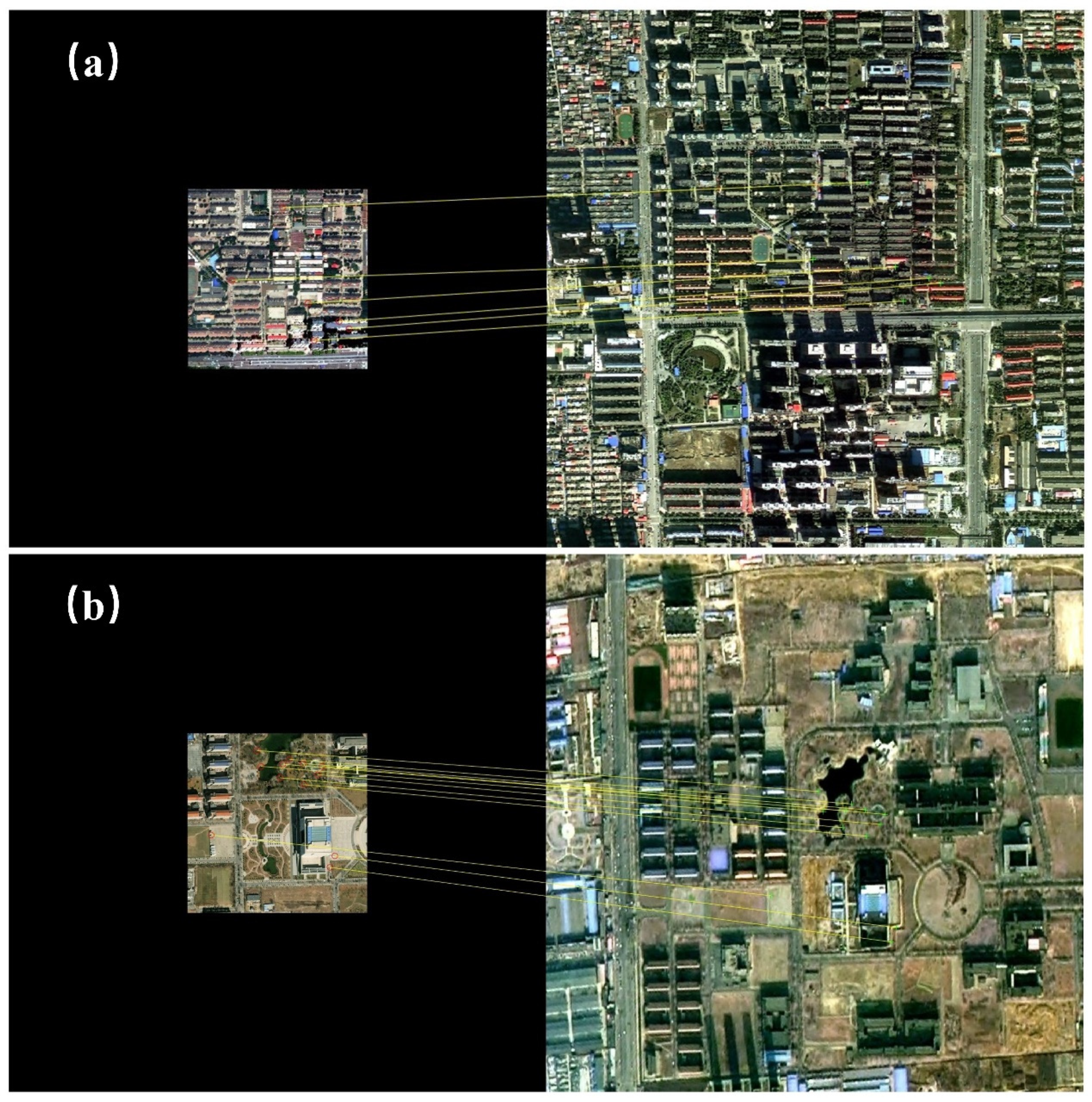

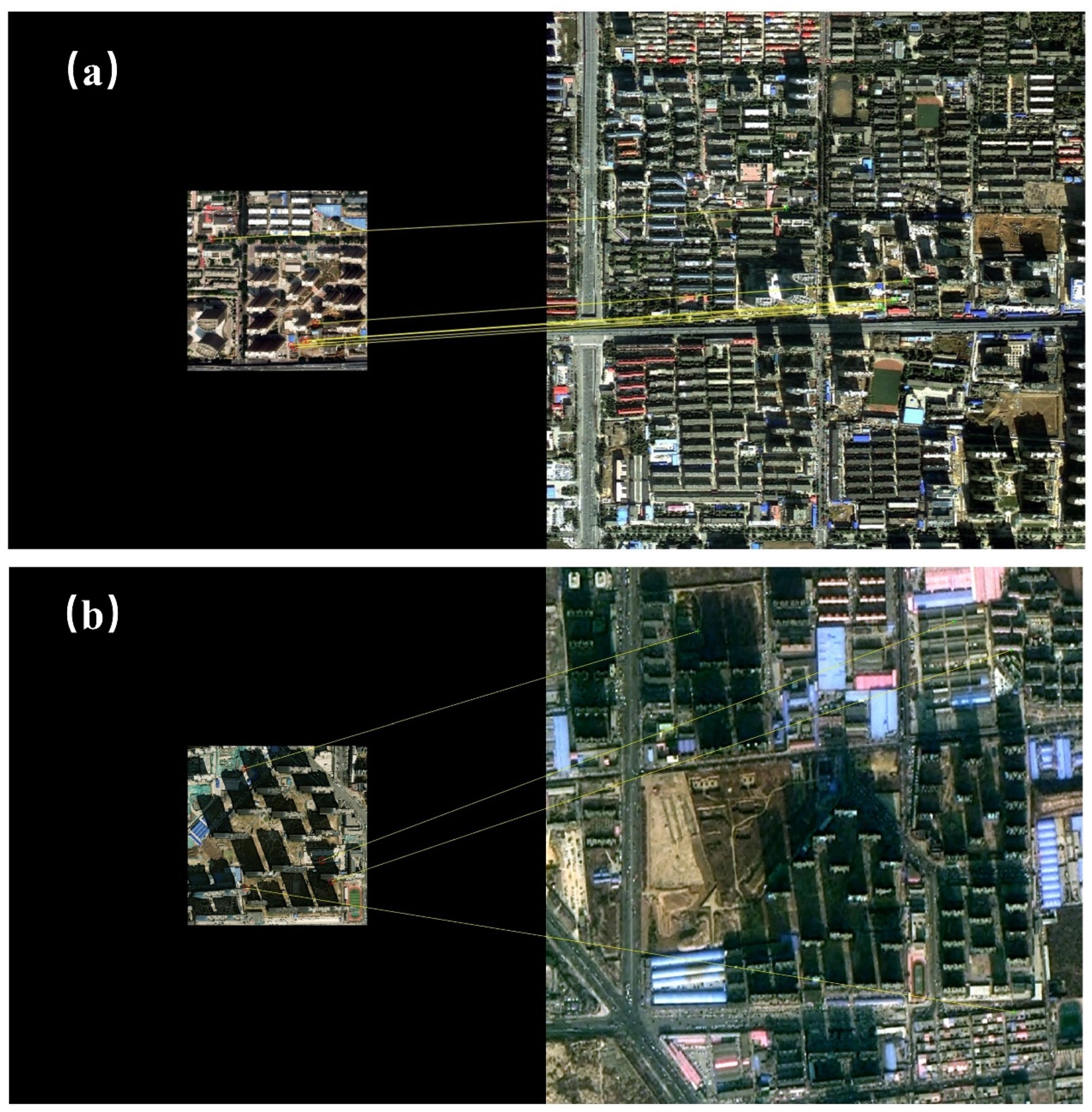

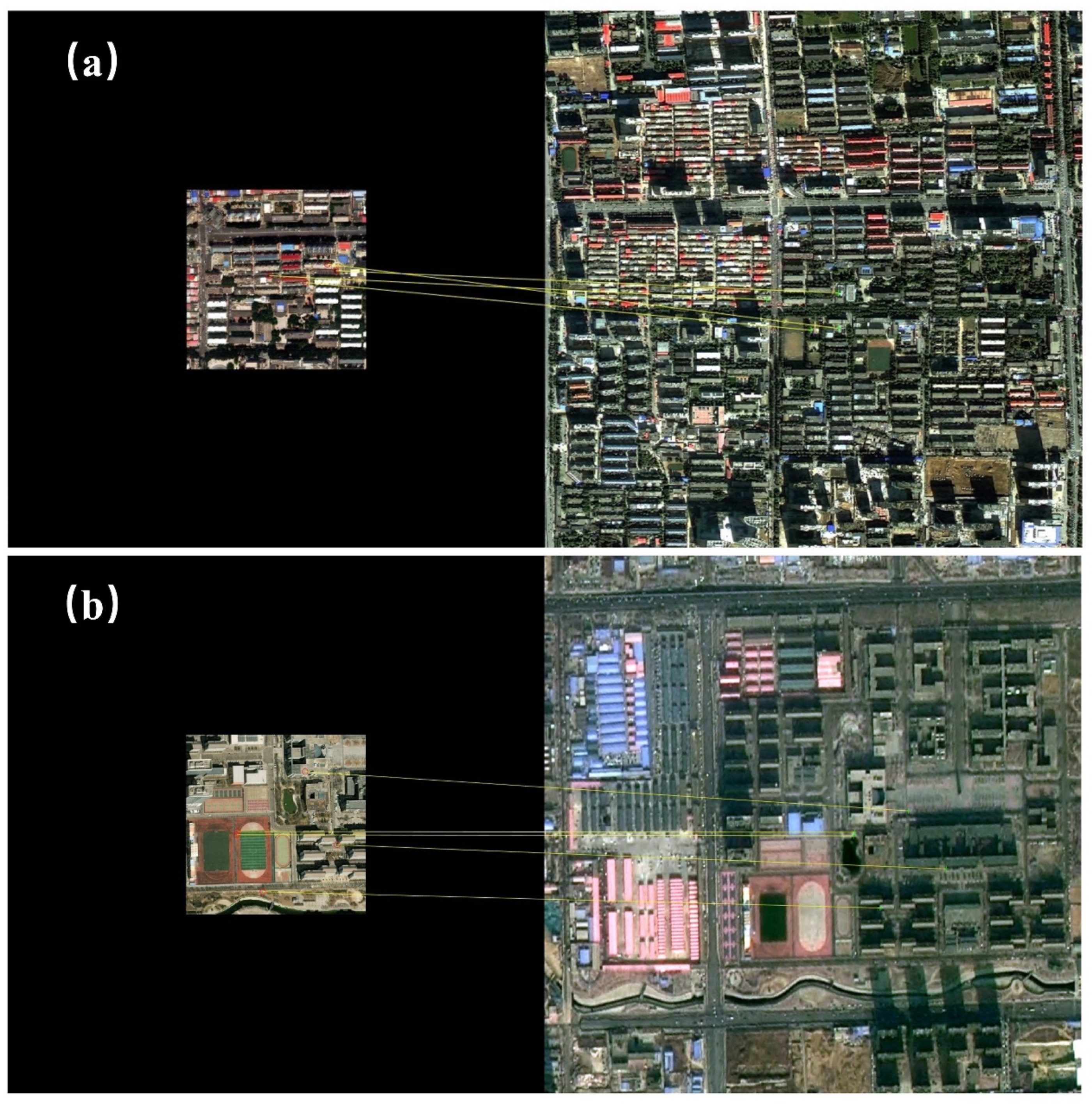

4.2. Geolocalization Dataset

4.3. E-Map Dataset

4.4. Comparison Experiments

5. Results

5.1. Instance Segmentation Results

5.2. Scene Matching Results

5.3. UAV Localization Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Couturier, A.; Akhloufi, M.A. A review on absolute visual localization for UAV. Robot. Auton. Syst. 2021, 135, 103666.

2. Kinnari, J.; Verdoja, F.; Kyrki, V. GNSS-Denied Geolocalization of UAVs by Visual Matching of Onboard Camera Images with Orthophotos. In Proceedings of the 20th International Conference on Advanced Robotics, ICAR 2021, Manhattan, NY, USA, 6–10 December 2021; pp. 555–562.

3. Gui, J.; Gu, D.; Wang, S.; Hu, H. A review of visual inertial odometry from filtering and optimisation perspectives. Adv. Robot. 2015, 29, 1289–1301.

4. Liu, Y.; Bai, J.; Wang, G.; Wu, X.; Sun, F.; Guo, Z.; Geng, H. UAV Localization in Low-Altitude GNSS-Denied Environments Based on POI and Store Signage Text Matching in UAV Images. Drones 2023, 7, 451.

5. Li, W. Research on UAV Localization Method based on Image Registration and System Design. Master's Thesis, University of Electronic Science and Technology, Chengdu, China, 2023.

6. Boiteau, S.; Vanegas, F.; Gonzalez, F. Framework for Autonomous UAV Navigation and Target Detection in Global-Navigation-Satellite-System-Denied and Visually Degraded Environments. Remote Sens. 2024, 16, 471.

7. Zhao, C.; Zhou, Y.; Lin, Z.; Hu, J.; Pan, Q. Review of scene matching visual navigation for unmanned aerial vehicles. Sci. Sin. Inf. 2019, 49, 507–519.

8. Chen, J.; Chen, C.; Chen, Y. Fast algorithm for robust template matching with M-estimators. IEEE Trans. Signal Process. 2003, 51, 230–243.

9. Xu, Y.; Pan, L.; Du, C.; Li, J.; Jing, N.; Wu, J. Vision-based UAVs Aerial Image Localization: A Survey. In Proceedings of the 2nd ACM SIGSPATIAL International Workshop on AI for Geographic Knowledge Discovery, Seattle, WA, USA, 6 November 2018; pp. 8–18.

10. Nassar, A.; Amer, K.; ElHakim, R.; ElHelw, M. A Deep CNN-Based Framework For Enhanced Aerial Imagery Registration with Applications to UAV Geolocalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1594–1604.

11. Choi, J.; Myung, H. BRM Localization: UAV localization in GNSS-denied environments based on matching of numerical map and UAV images. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 4537–4544.

12. Buck, A.R.; Keller, J.M.; Skubic, M. A modified genetic algorithm for matching building sets with the histograms of forces. In Proceedings of the IEEE Congress on Evolutionary Computation, Brisbane, Australia, 10–15 June 2012; pp. 1–7.

13. Li, J.; Hu, Q.; Ai, M. RIFT: Multi-Modal Image Matching Based on Radiation-Variation Insensitive Feature Transform. IEEE Trans. Image Process. 2020, 29, 3296–3310.

14. Ye, Y.; Bruzzone, L.; Shan, J.; Bovolo, F.; Zhu, Q. Fast and Robust Matching for Multimodal Remote Sensing Image Registration. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9059–9070.

15. Zhang, X.; Leng, C.; Hong, Y.; Pei, Z.; Cheng, I.; Basu, A. Multimodal Remote Sensing Image Registration Methods and Advancements: A Survey. Remote Sens. 2021, 13, 5128.

16. Lewis, J.P. Fast Template Matching. In Vision Interface 95, Quebec, Canada, 15–19 May 1995; Canadian Image Processing and Pattern Recognition Society: Toronto, ON, Canada; Volume 95, pp. 120–123.

17. Wan, X.; Liu, J.; Yan, H.; Morgan, G.L.K. Illumination-invariant image matching for autonomous UAV localisation based on optical sensing. ISPRS J. Photogramm. Remote Sens. 2016, 119, 198–213.

18. Patel, B.; Barfoot, T.D.; Schoellig, A.P. Visual Localization with Google Earth Images for Robust Global Pose Estimation of UAVs. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 6491–6497.

19. Sibiryakov, A. Fast and high-performance template matching method. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1417–1424.

20. Ye, Y.; Shan, J.; Hao, S.; Bruzzone, L.; Qin, Y. A local phase based invariant feature for remote sensing image matching. ISPRS J. Photogramm. Remote Sens. 2018, 142, 205–221.

21. Fan, Z.; Zhang, L.; Liu, Y.; Wang, Q.; Zlatanova, S. Exploiting High Geopositioning Accuracy of SAR Data to Obtain Accurate Geometric Orientation of Optical Satellite Images. Remote Sens. 2021, 13, 3535.

22. Haigang, S.; Chang, L.; Zhe, G.; Zhengjie, J.; Chuan, X. Overview of multi-modal remote sensing image matching methods. Acta Geod. Cartogr. Sin. 2022, 51, 1848–1861.

23. Mantelli, M.; Pittol, D.; Neuland, R.; Ribacki, A.; Maffei, R.; Jorge, V.; Prestes, E.; Kolberg, M. A novel measurement model based on abBRIEF for global localization of a UAV over satellite images. Robot. Auton. Syst. 2019, 112, 304–319.

24. Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A Trainable CNN for Joint Detection and Description of Local Features. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8084–8093.

25. Kumar, B.V.; Carneiro, G.; Reid, I. Learning local image descriptors with deep siamese and triplet convolutional networks by minimising global loss functions. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5385–5394.

26. DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

27. Sarlin, P.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperGlue: Learning Feature Matching with Graph Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 4937–4946.

28. Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-Free Local Feature Matching with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8918–8927.

29. Masselli, A.; Hanten, R.; Zell, A. Localization of Unmanned Aerial Vehicles Using Terrain Classification from Aerial Images. Intell. Auton. Syst. 2016, 13, 831–842.

30. Yun, H.; Ziyang, M.; Jiawen, A.; Yuanqing, W. Localization method by aerial image matching in urban environment based on semantic segmentation. J. Huazhong University Sci. Technol. (Nat. Sci. Ed.) 2022, 11, 79–84.

31. Wang, H.; Cheng, Y.; Liu, N.; Zhao, Y.; Chan, H.C. An illumination-invariant shadow-based scene matching navigation approach in low-altitude flight. Remote Sens. 2022, 14, 3869.

32. Shan, M.; Wang, F.; Lin, F.; Gao, Z.; Tang, Y.Z.; Chen, B.M. Google map aided visual navigation for UAVs in GPS-denied environment. In Proceedings of the IEEE Conference on Robotics and Biomimetics, Zhuhai, China, 6–9 December 2015; pp. 114–119.

33. Yol, A.; Delabarre, B.; Dame, A.; Dartois, J.; Marchand, E. Vision-based absolute localization for unmanned aerial vehicles. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, IL, USA, 14–18 September 2014; pp. 3429–3434.

34. Sun, L.; Sun, Y.; Yuan, S.; Ai, M. A survey of instance segmentation research based on deep learning. CAAI Trans. Intell. Syst. 2022, 17, 16.

35. Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. SOLOv2: Dynamic and Fast Instance Segmentation. Adv. Neural Inf. Process. Syst. 2020, 33, 17721–17732.

36. Douglas, D.H.; Peucker, T.K. Algorithms for the reduction of the number of points required to represent a digitized line or its caricature. Int. J. Geogr. Inf. Sci. 1973, 2, 112–122.

37. Wang, J.; Meng, L.; Li, W.; Yang, W.; Yu, L.; Xia, G.S. Learning to Extract Building Footprints From Off-Nadir Aerial Images. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 1294–1301.

| Method | Error/m (Downtown) | Error/m (Suburb) | RMSE/m (Downtown) | RMSE/m (Suburb) | Time/s |
| --- | --- | --- | --- | --- | --- |
| RIFT | 38.46 | 250.11 | 105.82 | 196.77 | 18.65 |
| CFOG | 49.59 | 59.85 | 21.45 | 35.08 | 2.01 |
| SSM | 43.74 | 96.44 | 18.12 | 35.81 | 0.748 |
| SSRM | 7.38 | 11.92 | 4.12 | 7.57 | 3.58 |
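As a worked reading of the table above, the short sketch below computes how much lower the SSRM localization error is relative to each baseline. The numbers are copied from the table; the assumption that the first two numeric columns are the downtown and suburb values of Error/m follows the header order, and the function name `error_reduction` is purely illustrative.

```python
# Localization error in metres, copied from the Error/m columns of the table.
# Column order (downtown, suburb) is assumed from the two header rows.
error_m = {
    "RIFT": {"downtown": 38.46, "suburb": 250.11},
    "CFOG": {"downtown": 49.59, "suburb": 59.85},
    "SSM": {"downtown": 43.74, "suburb": 96.44},
    "SSRM": {"downtown": 7.38, "suburb": 11.92},
}


def error_reduction(baseline, area, proposed="SSRM"):
    """Percentage by which `proposed` lowers the error of `baseline` in `area`."""
    base = error_m[baseline][area]
    new = error_m[proposed][area]
    return 100.0 * (base - new) / base


for area in ("downtown", "suburb"):
    for method in ("RIFT", "CFOG", "SSM"):
        print(f"{area:8s} vs {method:4s}: {error_reduction(method, area):.1f}% lower error")
```

Under that reading, the reduction is roughly 80% or more against every baseline in both areas, while the Time/s column shows SSRM is slower than SSM and CFOG but faster than RIFT.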

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.; Bai, J.; Sun, F. Visual Localization Method for Unmanned Aerial Vehicles in Urban Scenes Based on Shape and Spatial Relationship Matching of Buildings. Remote Sens. 2024, 16, 3065. https://doi.org/10.3390/rs16163065
