Study on the Estimation of Leaf Area Index in Rice Based on UAV RGB and Multispectral Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design and Study Area

2.2. UAV Data Acquisition and Processing

2.3. Ground Data Acquisition and LAI Measurement

2.4. Feature Variable Extraction and Screening

2.4.1. Feature Variable Extraction

2.4.2. Feature Variable Screening

2.5. Modelling Methods and Accuracy Validation

3. Results and Analysis

3.1. Feature Variable Extraction Results

3.2. Results of LAI Estimation Based on Single Features

3.2.1. Results of Single-Variable Analysis under Single Features

3.2.2. Results of Multi-Variable Analysis under Single Features

3.3. Results of LAI Estimation Based on Mixed Multiple Features

4. Discussion

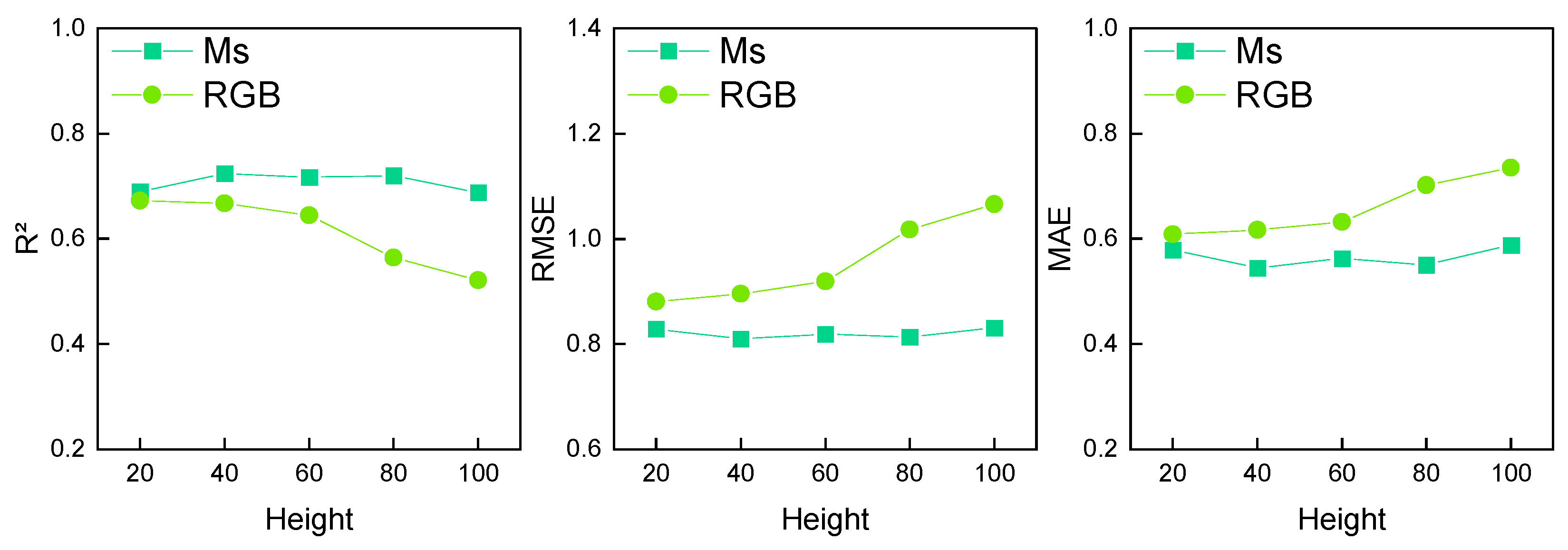

4.1. Comparison of Accuracy between Image Down-Sampling and Data Acquisition by UAV

4.2. Effect of Rice Heading on LAI Estimation

4.3. Analysis of the Application Potential of the Research Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Van Nguyen, N.; Ferrero, A. Meeting the challenges of global rice production. Paddy Water Environ. 2006, 4, 1–9.

2. Muthayya, S.; Sugimoto, J.D.; Montgomery, S.; Maberly, G.F. An overview of global rice production, supply, trade, and consumption. Ann. N. Y. Acad. Sci. 2014, 1324, 7–14.

3. Tang, L.; Risalat, H.; Cao, R.; Hu, Q.; Pan, X.; Hu, Y.; Zhang, G. Food Security in China: A Brief View of Rice Production in Recent 20 Years. Foods 2022, 11, 3324.

4. Bandumula, N. Rice Production in Asia: Key to Global Food Security. Proc. Natl. Acad. Sci. India Sect. B Biol. Sci. 2017, 88, 1323–1328.

5. Qiu, Z.; Ma, F.; Li, Z.; Xu, X.; Ge, H.; Du, C. Estimation of nitrogen nutrition index in rice from UAV RGB images coupled with machine learning algorithms. Comput. Electron. Agric. 2021, 189, 106421.

6. Yan, G.; Hu, R.; Luo, J.; Weiss, M.; Jiang, H.; Mu, X.; Xie, D.; Zhang, W. Review of indirect optical measurements of leaf area index: Recent advances, challenges, and perspectives. Agric. For. Meteorol. 2019, 265, 390–411.

7. Jonckheere, I.; Fleck, S.; Nackaerts, K.; Muys, B.; Coppin, P.; Weiss, M.; Baret, F. Review of methods for in situ leaf area index determination: Part I. Theories, sensors and hemispherical photography. Agric. For. Meteorol. 2004, 121, 19–35.

8. Fang, H.; Baret, F.; Plummer, S.; Schaepman-Strub, G. An Overview of Global Leaf Area Index (LAI): Methods, Products, Validation, and Applications. Rev. Geophys. 2019, 57, 739–799.

9. Rosati, A. Estimating Canopy Light Interception and Absorption Using Leaf Mass Per Unit Leaf Area in Solanum melongena. Ann. Bot. 2001, 88, 101–109.

10. Weiss, M.; Baret, F.; Smith, G.J.; Jonckheere, I.; Coppin, P. Review of methods for in situ leaf area index (LAI) determination: Part II. Estimation of LAI, errors and sampling. Agric. For. Meteorol. 2004, 121, 37–53.

11. Fang, H.; Li, W.; Wei, S.; Jiang, C. Seasonal variation of leaf area index (LAI) over paddy rice fields in NE China: Intercomparison of destructive sampling, LAI-2200, digital hemispherical photography (DHP), and AccuPAR methods. Agric. For. Meteorol. 2014, 198–199, 126–141.

12. Yang, R.; Liu, L.; Liu, Q.; Li, X.; Yin, L.; Hao, X.; Ma, Y.; Song, Q. Validation of leaf area index measurement system based on wireless sensor network. Sci. Rep. 2022, 12, 4668.

13. Confalonieri, R.; Foi, M.; Casa, R.; Aquaro, S.; Tona, E.; Peterle, M.; Boldini, A.; De Carli, G.; Ferrari, A.; Finotto, G.; et al. Development of an app for estimating leaf area index using a smartphone. Trueness and precision determination and comparison with other indirect methods. Comput. Electron. Agric. 2013, 96, 67–74.

14. Johnson, L.; Roczen, D.; Youkhana, S.; Nemani, R.; Bosch, D. Mapping vineyard leaf area with multispectral satellite imagery. Comput. Electron. Agric. 2003, 38, 33–44.

15. Qiao, L.; Gao, D.; Zhao, R.; Tang, W.; An, L.; Li, M.; Sun, H. Improving estimation of LAI dynamic by fusion of morphological and vegetation indices based on UAV imagery. Comput. Electron. Agric. 2022, 192, 106603.

16. Zheng, G.; Moskal, L.M. Retrieving Leaf Area Index (LAI) Using Remote Sensing: Theories, Methods and Sensors. Sensors 2009, 9, 2719–2745.

17. Jacquemoud, S.; Verhoef, W.; Baret, F.; Bacour, C.; Zarco-Tejada, P.J.; Asner, G.P.; François, C.; Ustin, S.L. PROSPECT+SAIL models: A review of use for vegetation characterization. Remote Sens. Environ. 2009, 113, S56–S66.

18. Wei, C.; Huang, J.; Mansaray, L.; Li, Z.; Liu, W.; Han, J. Estimation and Mapping of Winter Oilseed Rape LAI from High Spatial Resolution Satellite Data Based on a Hybrid Method. Remote Sens. 2017, 9, 488.

19. Wang, Y.; Fang, H. Estimation of LAI with the LiDAR Technology: A Review. Remote Sens. 2020, 12, 3457.

20. Wu, M.; Wu, C.; Huang, W.; Niu, Z.; Wang, C. High-resolution Leaf Area Index estimation from synthetic Landsat data generated by a spatial and temporal data fusion model. Comput. Electron. Agric. 2015, 115, 1–11.

21. Tian, J.; Wang, L.; Li, X.; Gong, H.; Shi, C.; Zhong, R.; Liu, X. Comparison of UAV and WorldView-2 imagery for mapping leaf area index of mangrove forest. Int. J. Appl. Earth Obs. Geoinf. 2017, 61, 22–31.

22. Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A Review on UAV-Based Applications for Precision Agriculture. Information 2019, 10, 349.

23. Olson, D.; Anderson, J. Review on unmanned aerial vehicles, remote sensors, imagery processing, and their applications in agriculture. Agron. J. 2021, 113, 971–992.

24. Xie, C.; Yang, C. A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 2020, 178, 105731.

25. Shi, Y.; Gao, Y.; Wang, Y.; Luo, D.; Chen, S.; Ding, Z.; Fan, K. Using Unmanned Aerial Vehicle-Based Multispectral Image Data to Monitor the Growth of Intercropping Crops in Tea Plantation. Front. Plant Sci. 2022, 13, 820585.

26. Lin, Y. LiDAR: An important tool for next-generation phenotyping technology of high potential for plant phenomics? Comput. Electron. Agric. 2015, 119, 61–73.

27. Zhang, F.; Hassanzadeh, A.; Kikkert, J.; Pethybridge, S.J.; van Aardt, J. Evaluation of Leaf Area Index (LAI) of Broadacre Crops Using UAS-Based LiDAR Point Clouds and Multispectral Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4027–4044.

28. Luo, S.; Chen, J.M.; Wang, C.; Gonsamo, A.; Xi, X.; Lin, Y.; Qian, M.; Peng, D.; Nie, S.; Qin, H. Comparative Performances of Airborne LiDAR Height and Intensity Data for Leaf Area Index Estimation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 300–310.

29. Li, S.; Yuan, F.; Ata-Ul-Karim, S.T.; Zheng, H.; Cheng, T.; Liu, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cao, Q. Combining Color Indices and Textures of UAV-Based Digital Imagery for Rice LAI Estimation. Remote Sens. 2019, 11, 1763.

30. Zhang, Y.; Ta, N.; Guo, S.; Chen, Q.; Zhao, L.; Li, F.; Chang, Q. Combining Spectral and Textural Information from UAV RGB Images for Leaf Area Index Monitoring in Kiwifruit Orchard. Remote Sens. 2022, 14, 1063.

31. Zhang, X.; Zhang, K.; Sun, Y.; Zhao, Y.; Zhuang, H.; Ban, W.; Chen, Y.; Fu, E.; Chen, S.; Liu, J.; et al. Combining Spectral and Texture Features of UAS-Based Multispectral Images for Maize Leaf Area Index Estimation. Remote Sens. 2022, 14, 331.

32. Yuan, W.; Meng, Y.; Li, Y.; Ji, Z.; Kong, Q.; Gao, R.; Su, Z. Research on rice leaf area index estimation based on fusion of texture and spectral information. Comput. Electron. Agric. 2023, 211, 108016.

33. Zhang, Y.; Yang, Y.; Zhang, Q.; Duan, R.; Liu, J.; Qin, Y.; Wang, X. Toward Multi-Stage Phenotyping of Soybean with Multimodal UAV Sensor Data: A Comparison of Machine Learning Approaches for Leaf Area Index Estimation. Remote Sens. 2022, 15, 7.

34. Carlson, T.N.; Ripley, D.A. On the relation between NDVI, fractional vegetation cover, and leaf area index. Remote Sens. Environ. 1997, 62, 241–252.

35. Xiao, Z.; Wang, T.; Liang, S.; Sun, R. Estimating the Fractional Vegetation Cover from GLASS Leaf Area Index Product. Remote Sens. 2016, 8, 337.

36. Zhang, D.; Liu, J.; Ni, W.; Sun, G.; Zhang, Z.; Liu, Q.; Wang, Q. Estimation of forest leaf area index using height and canopy cover information extracted from unmanned aerial vehicle stereo imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 471–481.

37. Wang, F.-m.; Huang, J.-f.; Lou, Z.-h. A comparison of three methods for estimating leaf area index of paddy rice from optimal hyperspectral bands. Precis. Agric. 2010, 12, 439–447.

38. Siegmann, B.; Jarmer, T. Comparison of different regression models and validation techniques for the assessment of wheat leaf area index from hyperspectral data. Int. J. Remote Sens. 2015, 36, 4519–4534.

39. Colombo, R. Retrieval of leaf area index in different vegetation types using high resolution satellite data. Remote Sens. Environ. 2003, 86, 120–131.

40. Nguyen, H.T.; Lee, B.-W. Assessment of rice leaf growth and nitrogen status by hyperspectral canopy reflectance and partial least square regression. Eur. J. Agron. 2006, 24, 349–356.

41. Li, X.; Zhang, Y.; Bao, Y.; Luo, J.; Jin, X.; Xu, X.; Song, X.; Yang, G. Exploring the Best Hyperspectral Features for LAI Estimation Using Partial Least Squares Regression. Remote Sens. 2014, 6, 6221–6241.

42. Durbha, S.S.; King, R.L.; Younan, N.H. Support vector machines regression for retrieval of leaf area index from multiangle imaging spectroradiometer. Remote Sens. Environ. 2007, 107, 348–361.

43. Liang, L.; Di, L.; Huang, T.; Wang, J.; Lin, L.; Wang, L.; Yang, M. Estimation of Leaf Nitrogen Content in Wheat Using New Hyperspectral Indices and a Random Forest Regression Algorithm. Remote Sens. 2018, 10, 1940.

44. Chen, Z.; Jia, K.; Xiao, C.; Wei, D.; Zhao, X.; Lan, J.; Wei, X.; Yao, Y.; Wang, B.; Sun, Y.; et al. Leaf Area Index Estimation Algorithm for GF-5 Hyperspectral Data Based on Different Feature Selection and Machine Learning Methods. Remote Sens. 2020, 12, 2110.

45. Zhang, J.; Wang, C.; Yang, C.; Xie, T.; Jiang, Z.; Hu, T.; Luo, Z.; Zhou, G.; Xie, J. Assessing the effect of real spatial resolution of in situ UAV multispectral images on seedling rapeseed growth monitoring. Remote Sens. 2020, 12, 1207.

46. Petras, V.; Petrasova, A.; McCarter, J.B.; Mitasova, H.; Meentemeyer, R.K. Point Density Variations in Airborne Lidar Point Clouds. Sensors 2023, 23, 1593.

47. Kamal, M.; Phinn, S.; Johansen, K. Assessment of multi-resolution image data for mangrove leaf area index mapping. Remote Sens. Environ. 2016, 176, 242–254.

48. Li, W.; Wang, J.; Zhang, Y.; Yin, Q.; Wang, W.; Zhou, G.; Huo, Z. Combining Texture, Color, and Vegetation Index from Unmanned Aerial Vehicle Multispectral Images to Estimate Winter Wheat Leaf Area Index during the Vegetative Growth Stage. Remote Sens. 2023, 15, 5715.

49. Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

50. Guo, A.; Ye, H.; Huang, W.; Qian, B.; Wang, J.; Lan, Y.; Wang, S. Inversion of maize leaf area index from UAV hyperspectral and multispectral imagery. Comput. Electron. Agric. 2023, 212, 108020.

51. Lyu, X.; Li, X.; Gong, J.; Li, S.; Dou, H.; Dang, D.; Xuan, X.; Wang, H. Remote-sensing inversion method for aboveground biomass of typical steppe in Inner Mongolia, China. Ecol. Indic. 2021, 120, 106883.

52. Kira, O.; Nguy-Robertson, A.L.; Arkebauer, T.J.; Linker, R.; Gitelson, A.A. Toward Generic Models for Green LAI Estimation in Maize and Soybean: Satellite Observations. Remote Sens. 2017, 9, 318.

53. Liu, Y.; Fan, Y.; Feng, H.; Chen, R.; Bian, M.; Ma, Y.; Yue, J.; Yang, G. Estimating potato above-ground biomass based on vegetation indices and texture features constructed from sensitive bands of UAV hyperspectral imagery. Comput. Electron. Agric. 2024, 220, 108918.

54. Sekrecka, A.; Wierzbicki, D.; Kedzierski, M. Influence of the Sun Position and Platform Orientation on the Quality of Imagery Obtained from Unmanned Aerial Vehicles. Remote Sens. 2020, 12, 1040.

55. Kennedy, S.; Burbach, M. Great Plains Ranchers Managing for Vegetation Heterogeneity: A Multiple Case Study. Great Plains Res. 2020, 30, 137–148.

56. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

57. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

58. Fitzgerald, G.J.; Rodriguez, D.; Christensen, L.K.; Belford, R.; Sadras, V.O.; Clarke, T.R. Spectral and thermal sensing for nitrogen and water status in rainfed and irrigated wheat environments. Precis. Agric. 2006, 7, 233–248.

59. Jiang, Z.; Huete, A.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

60. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

61. Panigada, C.; Rossini, M.; Busetto, L.; Meroni, M.; Fava, F.; Colombo, R. Chlorophyll concentration mapping with MIVIS data to assess crown discoloration in the Ticino Park oak forest. Int. J. Remote Sens. 2010, 31, 3307–3332.

62. Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.

63. Meyer, G.E.; Hindman, T.W.; Laksmi, K. Machine vision detection parameters for plant species identification. In Proceedings of the Precision Agriculture and Biological Quality, Boston, MA, USA, 3–4 November 1999; pp. 327–335.

64. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

65. Mao, W.; Wang, Y.; Wang, Y. Real-time detection of between-row weeds using machine vision. In Proceedings of the 2003 ASAE Annual Meeting, Las Vegas, NV, USA, 27–30 July 2003; p. 1.

66. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.

67. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

68. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

69. Seifert, E.; Seifert, S.; Vogt, H.; Drew, D.; van Aardt, J.; Kunneke, A.; Seifert, T. Influence of Drone Altitude, Image Overlap, and Optical Sensor Resolution on Multi-View Reconstruction of Forest Images. Remote Sens. 2019, 11, 1252.

70. Feng, L.; Wu, W.; Wang, J.; Zhang, C.; Zhao, Y.; Zhu, S.; He, Y. Wind Field Distribution of Multi-rotor UAV and Its Influence on Spectral Information Acquisition of Rice Canopies. Remote Sens. 2019, 11, 602.

71. Sun, B.; Li, Y.; Huang, J.; Cao, Z.; Peng, X. Impacts of Variable Illumination and Image Background on Rice LAI Estimation Based on UAV RGB-Derived Color Indices. Appl. Sci. 2024, 14, 3214.

72. He, J.; Zhang, N.; Su, X.; Lu, J.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Estimating Leaf Area Index with a New Vegetation Index Considering the Influence of Rice Panicles. Remote Sens. 2019, 11, 1809.

73. Makino, Y.; Hirooka, Y.; Homma, K.; Kondo, R.; Liu, T.-S.; Tang, L.; Nakazaki, T.; Xu, Z.-J.; Shiraiwa, T. Effect of flag leaf length of erect panicle rice on the canopy structure and biomass production after heading. Plant Prod. Sci. 2022, 25, 1–10.

74. Zhang, H.; Wang, L.; Tian, T.; Yin, J. A Review of Unmanned Aerial Vehicle Low-Altitude Remote Sensing (UAV-LARS) Use in Agricultural Monitoring in China. Remote Sens. 2021, 13, 1221.

75. Liu, Y.; Feng, H.; Yue, J.; Fan, Y.; Bian, M.; Ma, Y.; Jin, X.; Song, X.; Yang, G. Estimating potato above-ground biomass by using integrated unmanned aerial system-based optical, structural, and textural canopy measurements. Comput. Electron. Agric. 2023, 213, 108229.

76. Zou, M.; Liu, Y.; Fu, M.; Li, C.; Zhou, Z.; Meng, H.; Xing, E.; Ren, Y. Combining spectral and texture feature of UAV image with plant height to improve LAI estimation of winter wheat at jointing stage. Front. Plant Sci. 2023, 14, 1272049.

77. Zu, J.; Yang, H.; Wang, J.; Cai, W.; Yang, Y. Inversion of winter wheat leaf area index from UAV multispectral images: Classical vs. deep learning approaches. Front. Plant Sci. 2024, 15, 1367828.

78. Du, R.; Lu, J.; Xiang, Y.; Zhang, F.; Chen, J.; Tang, Z.; Shi, H.; Wang, X.; Li, W. Estimation of winter canola growth parameter from UAV multi-angular spectral-texture information using stacking-based ensemble learning model. Comput. Electron. Agric. 2024, 222, 109074.

79. Liu, Z.; Ji, Y.; Ya, X.; Liu, R.; Liu, Z.; Zong, X.; Yang, T. Ensemble Learning for Pea Yield Estimation Using Unmanned Aerial Vehicles, Red Green Blue, and Multispectral Imagery. Drones 2024, 8, 227.

80. Yu, M.; He, J.; Li, W.; Zheng, H.; Wang, X.; Yao, X.; Cheng, T.; Zhang, X.; Zhu, Y.; Cao, W.; et al. Estimation of Rice Leaf Area Index Utilizing a Kalman Filter Fusion Methodology Based on Multi-Spectral Data Obtained from Unmanned Aerial Vehicles (UAVs). Remote Sens. 2024, 16, 2073.

81. Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Fan, Y.; Chen, R.; Bian, M.; Ma, Y.; Song, X.; Yang, G. Improved potato AGB estimates based on UAV RGB and hyperspectral images. Comput. Electron. Agric. 2023, 214, 108260.

82. Niu, X.; Chen, B.; Sun, W.; Feng, T.; Yang, X.; Liu, Y.; Liu, W.; Fu, B. Estimation of Coastal Wetland Vegetation Aboveground Biomass by Integrating UAV and Satellite Remote Sensing Data. Remote Sens. 2024, 16, 2760.

| Data Type | Effective Pixels | Format | Image Size | Channels/Bands |
|---|---|---|---|---|
| RGB | 20 MP | JPG | 5472 × 3648 | R, G, B (0–255) |
| Ms (Multispectral) | 2.08 MP | JPG + TIF | 1600 × 1300 | $R_B$, $R_G$, $R_R$, $R_{RE}$, $R_{NIR}$ |

| Flying Height | Time of Day | Average Flying Time (RGB/Ms) | Number of Images (RGB/Ms) | Spatial Resolution (RGB/Ms) |
|---|---|---|---|---|
| 20 m | 11:00 a.m.–2:00 p.m. | 19 min/35 min | 450/840 | 0.4 cm/1.1 cm |
| 40 m | 11:00 a.m.–2:00 p.m. | 5 min/10 min | 124/229 | 1.0 cm/2.2 cm |
| 60 m | 11:00 a.m.–2:00 p.m. | 3 min/5 min | 60/110 | 1.4 cm/3.5 cm |
| 80 m | 11:00 a.m.–2:00 p.m. | 2 min/3 min | 36/66 | 2.0 cm/4.6 cm |
| 100 m | 11:00 a.m.–2:00 p.m. | 1 min/2 min | 23/45 | 2.9 cm/5.6 cm |
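Spatial resolution coarsens with flying height, and Section 4.1 compares down-sampled imagery with imagery acquired at each height. The resampling method is not stated alongside this table, so the sketch below assumes simple block averaging of a single 20 m band by an integer factor equal to the ratio of target to source resolution (e.g. 2.0 cm / 0.4 cm = 5 for RGB at 80 m). Non-integer ratios such as 2.9 / 0.4 = 7.25 are rounded, which only approximates the target resolution. `block_average_downsample` and `downsample_factor` are hypothetical helper names.

```python
from typing import List, Sequence

Image = List[List[float]]


def block_average_downsample(img: Sequence[Sequence[float]], factor: int) -> Image:
    """Down-sample one band by averaging non-overlapping factor x factor blocks.

    Assumption: mean aggregation; incomplete blocks at the right/bottom edges are dropped.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    n_rows = len(img) // factor
    n_cols = len(img[0]) // factor if img else 0
    out: Image = []
    for i in range(n_rows):
        row = []
        for j in range(n_cols):
            block = [
                img[i * factor + di][j * factor + dj]
                for di in range(factor)
                for dj in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def downsample_factor(source_res_cm: float, target_res_cm: float) -> int:
    """Integer block size that maps the source resolution to roughly the target one."""
    return max(1, round(target_res_cm / source_res_cm))
```

For example, `downsample_factor(0.4, 2.0)` gives 5, so a 20 m RGB band would be averaged over 5 × 5 pixel blocks to approximate the 80 m resolution.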

| Acquisition Date (Month/Day) | Samples | Min | Max | Mean | SD | CV (%) |
|---|---|---|---|---|---|---|
| 6/13 | 43 | 0.39 | 1.70 | 0.93 | 0.26 | 27.96 |
| 7/13 | 43 | 1.49 | 4.61 | 2.91 | 0.75 | 25.77 |
| 8/9 | 43 | 2.39 | 6.72 | 4.27 | 1.32 | 30.91 |
| 9/7 | 43 | 1.15 | 5.64 | 3.19 | 1.22 | 37.81 |
| All Data | 172 | 0.39 | 6.72 | 2.82 | 1.55 | 54.96 |
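The CV column is the standard deviation expressed as a percentage of the mean; for 6/13, 0.26 / 0.93 × 100 ≈ 27.96%. A minimal sketch of the per-date summary follows. It assumes the sample standard deviation (n − 1 denominator), which the table does not specify, and `describe_lai` is a hypothetical name.

```python
import statistics
from typing import Dict, Sequence


def describe_lai(values: Sequence[float]) -> Dict[str, float]:
    """Min, max, mean, SD and CV (%) of LAI samples from one acquisition date."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1); assumed, not stated in the table
    return {
        "samples": len(values),
        "min": min(values),
        "max": max(values),
        "mean": mean,
        "sd": sd,
        "cv_percent": 100.0 * sd / mean,
    }
```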

| VI/CI | Formula | Ref |
|---|---|---|
| Ratio vegetation index (RVI) | $R_{NIR}/R_R$ | [55] |
| Normalized difference vegetation index (NDVI) | $(R_{NIR} - R_R)/(R_{NIR} + R_R)$ | [56] |
| Green normalized difference vegetation index (GNDVI) | $(R_{NIR} - R_G)/(R_{NIR} + R_G)$ | [57] |
| Normalized difference red edge index (NDRE) | $(R_{NIR} - R_{RE})/(R_{NIR} + R_{RE})$ | [58] |
| Two-band enhanced vegetation index (EVI2) | $2.5 \times (R_{NIR} - R_R)/(1 + R_{NIR} + 2.4 \times R_R)$ | [59] |
| Optimized soil-adjusted vegetation index (OSAVI) | $1.16 \times (R_{NIR} - R_R)/(R_{NIR} + R_R + 0.16)$ | [60] |
| MERIS terrestrial chlorophyll index (MTCI) | $(R_{NIR} - R_{RE})/(R_{RE} - R_R)$ | [61] |
| Red-edge chlorophyll index (CI-re) | $R_{NIR}/R_{RE} - 1$ | [62] |
| Excess red vegetation index (EXR) | $1.4r - g$ | [63] |
| Excess green vegetation index (EXG) | $2g - r - b$ | [64] |
| Excess green minus excess red vegetation index (EXGR) | $\mathrm{EXG} - \mathrm{EXR}$ | [64] |
| Green leaf algorithm index (GLA) | $(2g - r - b)/(2g + r + b)$ | [65] |
| Visible atmospherically resistant index (VARI) | $(g - r)/(g + r - b)$ | [66] |
| Vegetative index (VEG) | $g/(r^{0.667} \times b^{0.333})$ | [67] |
| Normalized green–red difference index (NGRDI) | $(g - r)/(g + r)$ | [67] |
| Red green blue vegetation index (RGBVI) | $(g^2 - b \times r)/(g^2 + b \times r)$ | [68] |
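The formulas above translate directly into per-pixel or per-plot functions. This sketch assumes the multispectral inputs are reflectances and that the lowercase r, g, b in the color indices are chromatic coordinates normalized by R + G + B, a common convention for EXG and EXR that the table does not state. GLA, VARI, VEG, NGRDI and RGBVI are unaffected by that normalization, because each is a ratio of terms of equal degree. The function names are hypothetical.

```python
from typing import Dict


def multispectral_vis(green: float, red: float, red_edge: float, nir: float) -> Dict[str, float]:
    """Vegetation indices (VIs) from band reflectances R_G, R_R, R_RE and R_NIR."""
    return {
        "RVI": nir / red,
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (nir - green) / (nir + green),
        "NDRE": (nir - red_edge) / (nir + red_edge),
        "EVI2": 2.5 * (nir - red) / (1 + nir + 2.4 * red),
        "OSAVI": 1.16 * (nir - red) / (nir + red + 0.16),
        "MTCI": (nir - red_edge) / (red_edge - red),
        "CI-re": nir / red_edge - 1,
    }


def color_indices(red_dn: float, green_dn: float, blue_dn: float) -> Dict[str, float]:
    """Color indices (CIs) from RGB digital numbers (0-255).

    Assumption: r, g, b are chromatic coordinates, e.g. r = R / (R + G + B).
    """
    total = red_dn + green_dn + blue_dn
    if total == 0:
        raise ValueError("black pixel: chromatic coordinates are undefined")
    r, g, b = red_dn / total, green_dn / total, blue_dn / total
    exg = 2 * g - r - b
    exr = 1.4 * r - g
    return {
        "EXR": exr,
        "EXG": exg,
        "EXGR": exg - exr,
        "GLA": (2 * g - r - b) / (2 * g + r + b),
        "VARI": (g - r) / (g + r - b),
        "VEG": g / (r ** 0.667 * b ** 0.333),
        "NGRDI": (g - r) / (g + r),
        "RGBVI": (g ** 2 - b * r) / (g ** 2 + b * r),
    }
```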

| Feature Variable | Height | SLR R² | SLR RMSE | SLR MAE | ER R² | ER RMSE | ER MAE | LR R² | LR RMSE | LR MAE |
|---|---|---|---|---|---|---|---|---|---|---|
| VI (EVI2) | 20 m | 0.608 | 1.091 | 0.722 | 0.618 | 1.077 | 0.693 | 0.554 | 1.164 | 0.801 |
| | 40 m | 0.598 | 1.105 | 0.735 | 0.618 | 1.076 | 0.698 | 0.536 | 1.187 | 0.825 |
| | 60 m | 0.600 | 1.103 | 0.739 | 0.608 | 1.092 | 0.709 | 0.542 | 1.179 | 0.818 |
| | 80 m | 0.602 | 1.099 | 0.734 | 0.609 | 1.089 | 0.718 | 0.545 | 1.175 | 0.816 |
| | 100 m | 0.592 | 1.108 | 0.737 | 0.601 | 1.091 | 0.721 | 0.530 | 1.189 | 0.828 |
| CI (RGBVI) | 20 m | 0.399 | 1.351 | 0.952 | 0.346 | 1.409 | 1.042 | 0.429 | 1.316 | 0.902 |
| | 40 m | 0.389 | 1.362 | 0.975 | 0.337 | 1.418 | 1.057 | 0.416 | 1.331 | 0.923 |
| | 60 m | 0.394 | 1.356 | 0.986 | 0.346 | 1.408 | 1.054 | 0.426 | 1.322 | 0.929 |
| | 80 m | 0.387 | 1.365 | 0.973 | 0.332 | 1.423 | 1.075 | 0.411 | 1.339 | 0.937 |
| | 100 m | 0.388 | 1.363 | 0.973 | 0.336 | 1.418 | 1.063 | 0.409 | 1.341 | 0.943 |
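The single-variable results compare three curve forms fitted to one feature. The abbreviations are not expanded alongside the table; the sketch assumes SLR, ER and LR denote simple linear, exponential and logarithmic regression of LAI on the feature, and that R² is the coefficient of determination 1 − SS_res/SS_tot (some studies report squared Pearson r instead, which coincides only for in-sample least-squares linear fits with an intercept). The exponential curve is fitted by the usual log-linearization, so it needs LAI > 0 and minimizes error in log space rather than LAI space; the logarithmic curve needs feature values > 0. All function names are hypothetical.

```python
import math
from typing import Callable, Dict, Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a * x + b; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx
    return a, my - a * mx


def fit_single_variable_models(
    x: Sequence[float], y: Sequence[float]
) -> Dict[str, Callable[[float], float]]:
    """Fit linear, exponential and logarithmic curves of y (LAI) on one feature x."""
    a, b = fit_line(x, y)
    # Exponential y = c * exp(k * x), linearized as ln(y) = ln(c) + k * x (needs y > 0).
    k, ln_c = fit_line(x, [math.log(v) for v in y])
    # Logarithmic y = p * ln(x) + q (needs x > 0).
    p, q = fit_line([math.log(v) for v in x], y)

    def slr(v: float) -> float:
        return a * v + b

    def er(v: float) -> float:
        return math.exp(ln_c + k * v)

    def lr(v: float) -> float:
        return p * math.log(v) + q

    return {"SLR": slr, "ER": er, "LR": lr}


def accuracy(y_true: Sequence[float], y_pred: Sequence[float]) -> Tuple[float, float, float]:
    """Return (R², RMSE, MAE) with R² = 1 - SS_res / SS_tot."""
    n = len(y_true)
    mean = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    return r2, rmse, mae
```

In use, each fitted curve would be applied to the validation features and scored with `accuracy` against measured LAI, giving one R²/RMSE/MAE triple per curve form.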

| Feature Variable | Height | SLR R² | SLR RMSE | SLR MAE | ER R² | ER RMSE | ER MAE | LR R² | LR RMSE | LR MAE |
|---|---|---|---|---|---|---|---|---|---|---|
| M_TF ($R_{NIR}$-Mea) | 20 m | 0.484 | 1.243 | 0.860 | 0.413 | 1.341 | 0.998 | 0.482 | 1.257 | 0.883 |
| | 40 m | 0.496 | 1.225 | 0.842 | 0.425 | 1.325 | 0.983 | 0.491 | 1.242 | 0.853 |
| | 60 m | 0.484 | 1.242 | 0.861 | 0.415 | 1.336 | 0.995 | 0.486 | 1.250 | 0.872 |
| | 80 m | 0.486 | 1.249 | 0.863 | 0.418 | 1.329 | 0.981 | 0.487 | 1.248 | 0.869 |
| | 100 m | 0.491 | 1.227 | 0.841 | 0.423 | 1.329 | 0.983 | 0.492 | 1.239 | 0.848 |
| R_TF (G-Ent) | 20 m | 0.363 | 1.392 | 1.101 | 0.369 | 1.395 | 1.112 | 0.359 | 1.396 | 1.102 |
| | 40 m | 0.358 | 1.396 | 1.103 | 0.358 | 1.396 | 1.119 | 0.355 | 1.399 | 1.106 |
| | 60 m | 0.353 | 1.400 | 1.128 | 0.352 | 1.403 | 1.121 | 0.342 | 1.412 | 1.129 |
| | 80 m | 0.139 | 1.616 | 1.421 | 0.154 | 1.602 | 1.417 | 0.138 | 1.617 | 1.427 |
| | 100 m | 0.114 | 1.641 | 1.437 | 0.121 | 1.634 | 1.441 | 0.113 | 1.642 | 1.437 |
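The texture variables (e.g. G-Ent, R-Sec) appear to be grey-level co-occurrence matrix (GLCM) statistics of a single band. As an assumption, the suffixes are read here as mean (Mea), homogeneity (Hom), entropy (Ent) and second moment (Sec, the angular second moment). The pure-Python sketch quantizes a band to a few grey levels, builds a symmetric, normalized GLCM for one pixel offset and computes those four statistics. Window size, number of grey levels, offsets and the logarithm base for entropy (natural log here) are unstated choices, and all names are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Grid = List[List[int]]


def quantize(img: Sequence[Sequence[float]], levels: int) -> Grid:
    """Linearly rescale pixel values to integer grey levels 0..levels-1."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    span = (hi - lo) or 1.0  # constant patch: every pixel maps to level 0
    return [[min(levels - 1, int((v - lo) / span * levels)) for v in row] for row in img]


def glcm(q: Grid, levels: int, dx: int = 1, dy: int = 0) -> List[List[float]]:
    """Symmetric, normalized grey-level co-occurrence matrix for pixel offset (dx, dy)."""
    m = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(q), len(q[0])
    for i in range(rows):
        for j in range(cols):
            i2, j2 = i + dy, j + dx
            if 0 <= i2 < rows and 0 <= j2 < cols:
                a, b = q[i][j], q[i2][j2]
                m[a][b] += 1
                m[b][a] += 1  # count both directions so the matrix is symmetric
    total = sum(map(sum, m))
    return [[v / total for v in row] for row in m] if total else m


def glcm_features(p: List[List[float]]) -> Dict[str, float]:
    """Mean, homogeneity, entropy (natural log) and angular second moment of a GLCM."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    return {
        "Mea": sum(i * v for i, _, v in cells),
        "Hom": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "Ent": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "Sec": sum(v * v for _, _, v in cells),
    }
```

For a plot-level value one would typically compute these on the band pixels inside each sampling plot (or average a moving-window result over the plot).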

| Feature | Height | MLR R² | MLR RMSE | MLR MAE | SVR R² | SVR RMSE | SVR MAE | RFR R² | RFR RMSE | RFR MAE |
|---|---|---|---|---|---|---|---|---|---|---|
| VIs (NDRE, GNDVI, OSAVI, and EVI2) | 20 m | 0.642 | 0.921 | 0.717 | 0.665 | 0.890 | 0.687 | 0.673 | 0.887 | 0.661 |
| | 40 m | 0.647 | 0.919 | 0.716 | 0.666 | 0.891 | 0.683 | 0.675 | 0.886 | 0.659 |
| | 60 m | 0.642 | 0.924 | 0.721 | 0.654 | 0.904 | 0.690 | 0.665 | 0.896 | 0.669 |
| | 80 m | 0.648 | 0.913 | 0.714 | 0.662 | 0.899 | 0.689 | 0.670 | 0.890 | 0.673 |
| | 100 m | 0.639 | 0.923 | 0.728 | 0.650 | 0.909 | 0.705 | 0.661 | 0.898 | 0.689 |
| CIs (EXG, GLA, EXGR, and RGBVI) | 20 m | 0.578 | 1.002 | 0.728 | 0.588 | 0.989 | 0.712 | 0.607 | 0.966 | 0.709 |
| | 40 m | 0.519 | 1.068 | 0.784 | 0.523 | 1.064 | 0.773 | 0.551 | 1.028 | 0.753 |
| | 60 m | 0.482 | 1.110 | 0.829 | 0.498 | 1.092 | 0.797 | 0.544 | 1.042 | 0.764 |
| | 80 m | 0.421 | 1.174 | 0.874 | 0.447 | 1.147 | 0.822 | 0.454 | 1.139 | 0.805 |
| | 100 m | 0.405 | 1.186 | 0.894 | 0.420 | 1.164 | 0.854 | 0.445 | 1.148 | 0.812 |
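Each multi-variable model uses four screened variables (e.g. NDRE, GNDVI, OSAVI and EVI2). The screening criterion is not given alongside the tables; a frequently used option is ranking candidates by the absolute Pearson correlation with measured LAI, sketched below as an assumption with hypothetical function names. Ranking alone ignores collinearity between similar indices, so it can return redundant variables.

```python
import math
from typing import Dict, List, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def screen_features(
    candidates: Dict[str, Sequence[float]], lai: Sequence[float], k: int = 4
) -> List[Tuple[str, float]]:
    """Return the k candidates with the largest |r| against measured LAI, with their r."""
    scored = [(name, pearson_r(values, lai)) for name, values in candidates.items()]
    scored.sort(key=lambda item: abs(item[1]), reverse=True)
    return scored[:k]
```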

| Feature | Height | MLR R² | MLR RMSE | MLR MAE | SVR R² | SVR RMSE | SVR MAE | RFR R² | RFR RMSE | RFR MAE |
|---|---|---|---|---|---|---|---|---|---|---|
| M_TFs ($R_{NIR}$-Mea, $R_{NIR}$-Hom, $R_{NIR}$-Ent, and $R_{NIR}$-Sec) | 20 m | 0.530 | 1.056 | 0.789 | 0.560 | 1.023 | 0.746 | 0.571 | 1.008 | 0.731 |
| | 40 m | 0.538 | 1.049 | 0.783 | 0.574 | 1.012 | 0.734 | 0.581 | 0.991 | 0.724 |
| | 60 m | 0.525 | 1.066 | 0.802 | 0.555 | 1.031 | 0.764 | 0.569 | 1.016 | 0.752 |
| | 80 m | 0.533 | 1.054 | 0.788 | 0.560 | 1.026 | 0.759 | 0.572 | 1.010 | 0.732 |
| | 100 m | 0.539 | 1.047 | 0.779 | 0.571 | 1.014 | 0.737 | 0.585 | 0.981 | 0.716 |
| R_TFs (R-Sec, G-Ent, G-Sec, and B-Sec) | 20 m | 0.533 | 1.052 | 0.790 | 0.561 | 1.019 | 0.744 | 0.530 | 1.054 | 0.787 |
| | 40 m | 0.502 | 1.085 | 0.829 | 0.541 | 1.043 | 0.765 | 0.510 | 1.078 | 0.803 |
| | 60 m | 0.427 | 1.154 | 0.881 | 0.463 | 1.127 | 0.841 | 0.419 | 1.163 | 0.893 |
| | 80 m | 0.384 | 1.215 | 0.941 | 0.423 | 1.169 | 0.892 | 0.392 | 1.199 | 0.921 |
| | 100 m | 0.377 | 1.224 | 0.950 | 0.403 | 1.198 | 0.914 | 0.368 | 1.213 | 0.946 |
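MLR, one of the three multi-variable regressors in these tables, reduces to least squares on the screened variables plus an intercept. The dependency-free sketch below solves the normal equations by Gaussian elimination with partial pivoting; the function names are hypothetical. SVR and RFR would normally come from a library such as scikit-learn (`sklearn.svm.SVR`, `sklearn.ensemble.RandomForestRegressor`) and are not shown. Because related indices (for example the red/NIR-based VIs) are strongly correlated, the normal equations can be ill-conditioned; a QR- or SVD-based solver such as `numpy.linalg.lstsq` is the more robust choice in practice.

```python
from typing import List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("singular system (collinear features?)")
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_mlr(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Least-squares coefficients [intercept, b1, ..., bp] from the normal equations."""
    rows = [[1.0, *row] for row in X]  # prepend the intercept column
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)


def predict_mlr(coef: Sequence[float], features: Sequence[float]) -> float:
    """Evaluate intercept + sum(b_i * x_i) for one sample."""
    return coef[0] + sum(c * v for c, v in zip(coef[1:], features))
```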

| Data Type | 20 m R² | 20 m RMSE | 20 m MAE | 40 m R² | 40 m RMSE | 40 m MAE | 60 m R² | 60 m RMSE | 60 m MAE | 80 m R² | 80 m RMSE | 80 m MAE | 100 m R² | 100 m RMSE | 100 m MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ms | 0.690 | 0.829 | 0.579 | 0.724 | 0.810 | 0.545 | 0.717 | 0.819 | 0.563 | 0.720 | 0.814 | 0.551 | 0.688 | 0.831 | 0.588 |
| RGB | 0.673 | 0.881 | 0.609 | 0.667 | 0.896 | 0.617 | 0.645 | 0.919 | 0.632 | 0.564 | 1.018 | 0.702 | 0.521 | 1.066 | 0.735 |

| Data Type | Features | R² | RMSE | MAE |
|---|---|---|---|---|
| Ms | VIs | 0.675 | 0.886 | 0.659 |
| Ms | VIs + M_CC | 0.703 | 0.847 | 0.527 |
| Ms | VIs + M_TFs | 0.724 | 0.810 | 0.545 |
| Ms | VIs + M_TFs + M_CC | 0.712 | 0.828 | 0.573 |
| RGB | CIs | 0.607 | 0.966 | 0.709 |
| RGB | CIs + R_CC | 0.671 | 0.884 | 0.606 |
| RGB | CIs + R_TFs | 0.673 | 0.881 | 0.609 |
| RGB | CIs + R_TFs + R_CC | 0.668 | 0.912 | 0.625 |
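M_CC and R_CC are presumably canopy coverage derived from the multispectral and RGB imagery, respectively. How coverage was segmented is not described alongside the tables, so the sketch below assumes a per-pixel excess-green (EXG) threshold on RGB data with a hypothetical threshold of 0.1; Otsu's method, or an NDVI threshold for multispectral data, are common alternatives. Coverage is then the fraction of plot pixels classed as vegetation. Function names are hypothetical.

```python
from typing import Sequence, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B) digital numbers


def excess_green(red: float, green: float, blue: float) -> float:
    """EXG = 2g - r - b on chromatic coordinates (r = R / (R + G + B), etc.)."""
    total = red + green + blue
    if total == 0:
        return 0.0  # black pixel: treated as non-vegetation
    return (2 * green - red - blue) / total


def canopy_coverage(pixels: Sequence[Pixel], threshold: float = 0.1) -> float:
    """Fraction of pixels whose EXG exceeds a (hypothetical) vegetation threshold."""
    if not pixels:
        raise ValueError("no pixels in plot")
    vegetation = sum(
        1 for red, green, blue in pixels if excess_green(red, green, blue) > threshold
    )
    return vegetation / len(pixels)
```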

| Mode | Number of Variables | Mixed Features | R² | RMSE | MAE |
|---|---|---|---|---|---|
| 1 | 8 | VIs + CIs | 0.725 | 0.808 | 0.520 |
| 2 | 9 | VIs + CIs + M_CC | 0.723 | 0.817 | 0.534 |
| 3 | 9 | VIs + CIs + R_CC | 0.728 | 0.809 | 0.517 |
| 4 | 12 | VIs + CIs + M_TFs | 0.740 | 0.796 | 0.489 |
| 5 | 12 | VIs + CIs + R_TFs | 0.730 | 0.803 | 0.510 |
| 6 | 16 | VIs + CIs + M_TFs + R_TFs | 0.731 | 0.800 | 0.509 |
| 7 | 17 | VIs + CIs + M_TFs + R_TFs + M_CC | 0.722 | 0.814 | 0.545 |
| 8 | 17 | VIs + CIs + M_TFs + R_TFs + R_CC | 0.724 | 0.814 | 0.535 |
| 9 | 18 | VIs + CIs + M_TFs + R_TFs + M_CC + R_CC | 0.729 | 0.807 | 0.519 |

| Data Type | Features | Pre-Hs R² | Pre-Hs RMSE | Pre-Hs MAE | Pos-Hs R² | Pos-Hs RMSE | Pos-Hs MAE |
|---|---|---|---|---|---|---|---|
| Ms | VIs | 0.691 | 0.638 | 0.464 | 0.420 | 1.178 | 0.953 |
| Ms | VIs + M_CC | 0.715 | 0.615 | 0.446 | 0.456 | 1.146 | 0.919 |
| Ms | VIs + M_TFs | 0.734 | 0.609 | 0.424 | 0.449 | 1.154 | 0.935 |
| Ms | VIs + M_TFs + M_CC | 0.734 | 0.608 | 0.427 | 0.443 | 1.155 | 0.941 |
| RGB | CIs | 0.618 | 0.701 | 0.549 | 0.355 | 1.239 | 1.018 |
| RGB | CIs + R_CC | 0.677 | 0.653 | 0.484 | 0.412 | 1.183 | 0.957 |
| RGB | CIs + R_TFs | 0.689 | 0.645 | 0.472 | 0.405 | 1.191 | 0.970 |
| RGB | CIs + R_TFs + R_CC | 0.683 | 0.648 | 0.478 | 0.408 | 1.189 | 0.963 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Y.; Jiang, Y.; Xu, B.; Yang, G.; Feng, H.; Yang, X.; Yang, H.; Liu, C.; Cheng, Z.; Feng, Z. Study on the Estimation of Leaf Area Index in Rice Based on UAV RGB and Multispectral Data. Remote Sens. 2024, 16, 3049. https://doi.org/10.3390/rs16163049

Zhang Y, Jiang Y, Xu B, Yang G, Feng H, Yang X, Yang H, Liu C, Cheng Z, Feng Z. Study on the Estimation of Leaf Area Index in Rice Based on UAV RGB and Multispectral Data. Remote Sensing. 2024; 16(16):3049. https://doi.org/10.3390/rs16163049

Chicago/Turabian Style: Zhang, Yuan, Youyi Jiang, Bo Xu, Guijun Yang, Haikuan Feng, Xiaodong Yang, Hao Yang, Changbin Liu, Zhida Cheng, and Ziheng Feng. 2024. "Study on the Estimation of Leaf Area Index in Rice Based on UAV RGB and Multispectral Data." Remote Sensing 16, no. 16: 3049. https://doi.org/10.3390/rs16163049

APA Style: Zhang, Y., Jiang, Y., Xu, B., Yang, G., Feng, H., Yang, X., Yang, H., Liu, C., Cheng, Z., & Feng, Z. (2024). Study on the Estimation of Leaf Area Index in Rice Based on UAV RGB and Multispectral Data. Remote Sensing, 16(16), 3049. https://doi.org/10.3390/rs16163049