Towards Robust Pansharpening: A Large-Scale High-Resolution Multi-Scene Dataset and Novel Approach

Abstract

1. Introduction

- We construct PanBench, a large-scale, high-resolution, multi-scene pansharpening dataset that covers the mainstream satellites and includes six finely annotated land-cover classes. The dataset can also be extended to other tasks, such as super-resolution and colorization.

- We propose CMFNet, a high-fidelity fusion network for pansharpening. Experimental results show that it generalizes well and significantly surpasses currently representative pansharpening methods.

- PanBench is suited not only to pansharpening in remote sensing but also to other computer vision tasks, such as image super-resolution and image colorization, which demonstrates its adaptability and extensibility across tasks.

2. Related Works

2.1. Datasets for Pansharpening

2.2. Algorithms for Pansharpening

3. PanBench Dataset

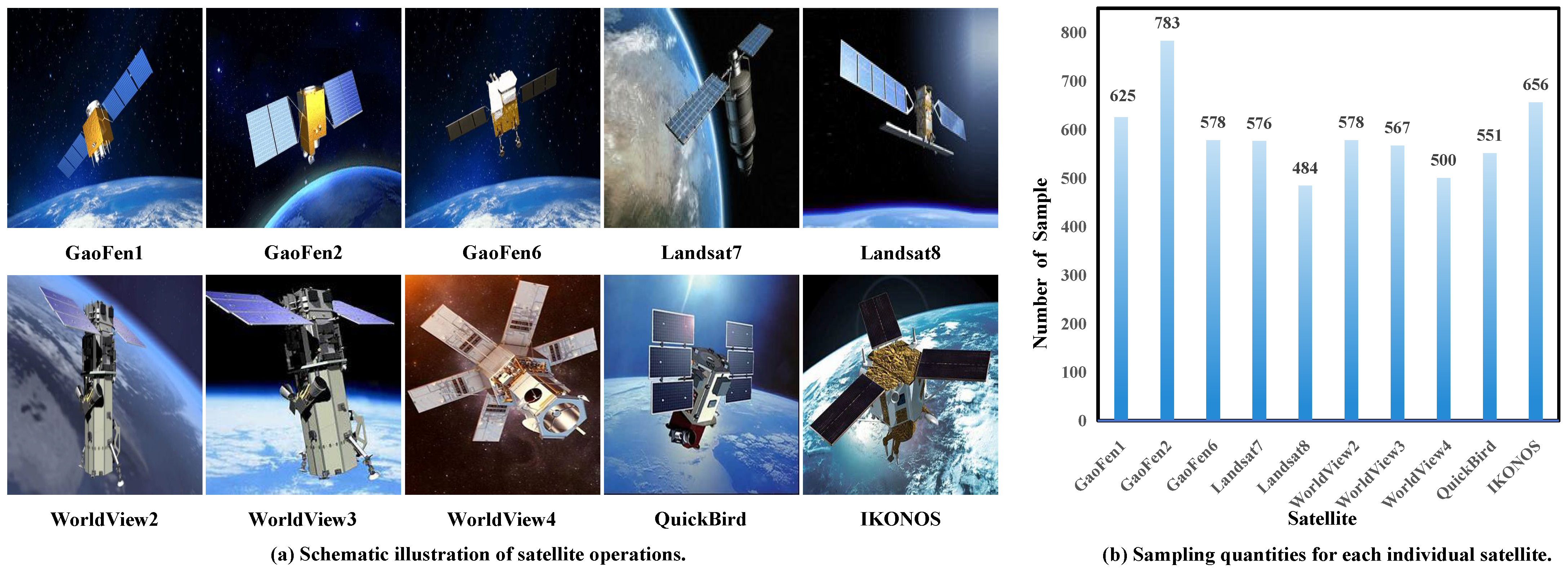

3.1. Multi-Source Satellite

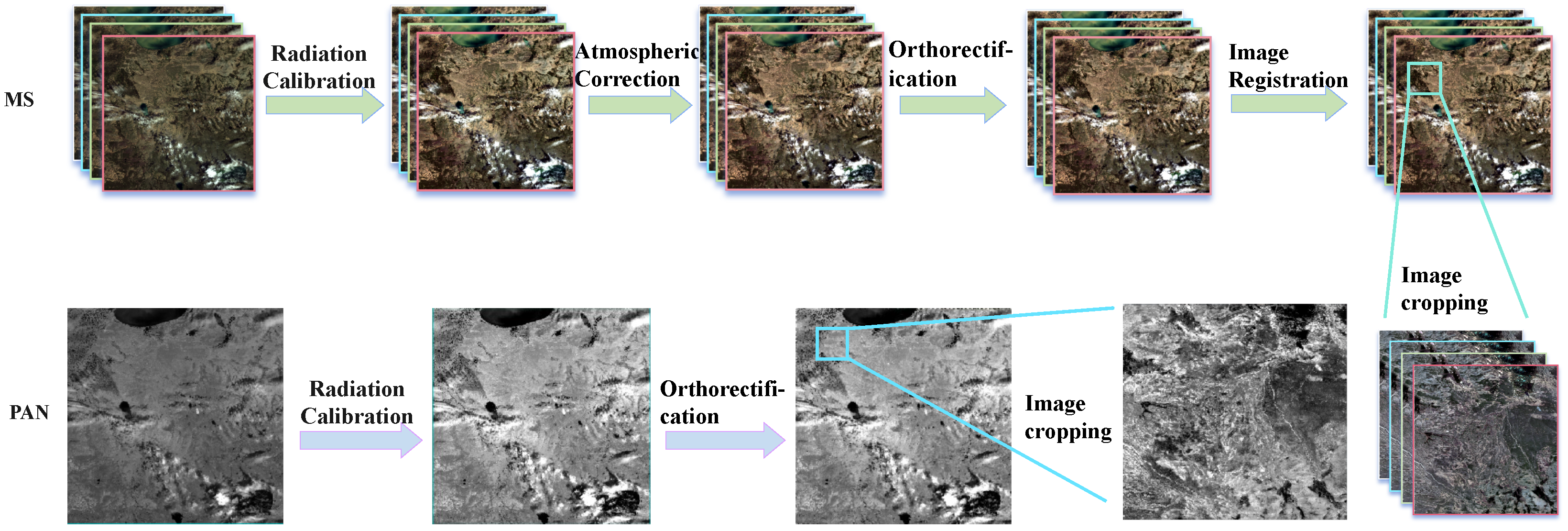

3.2. Data Processing

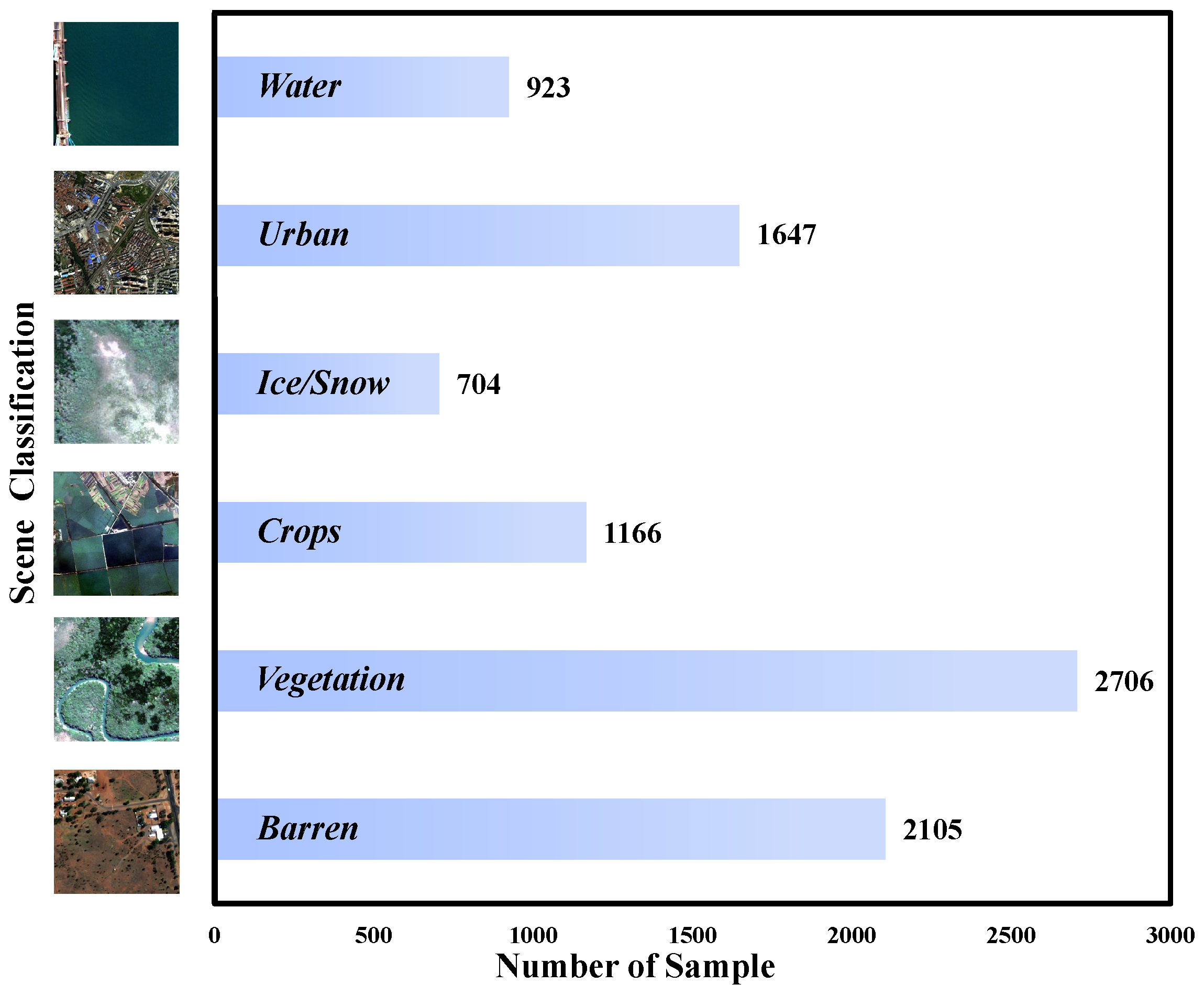

3.3. Scene Classification

4. Methodology

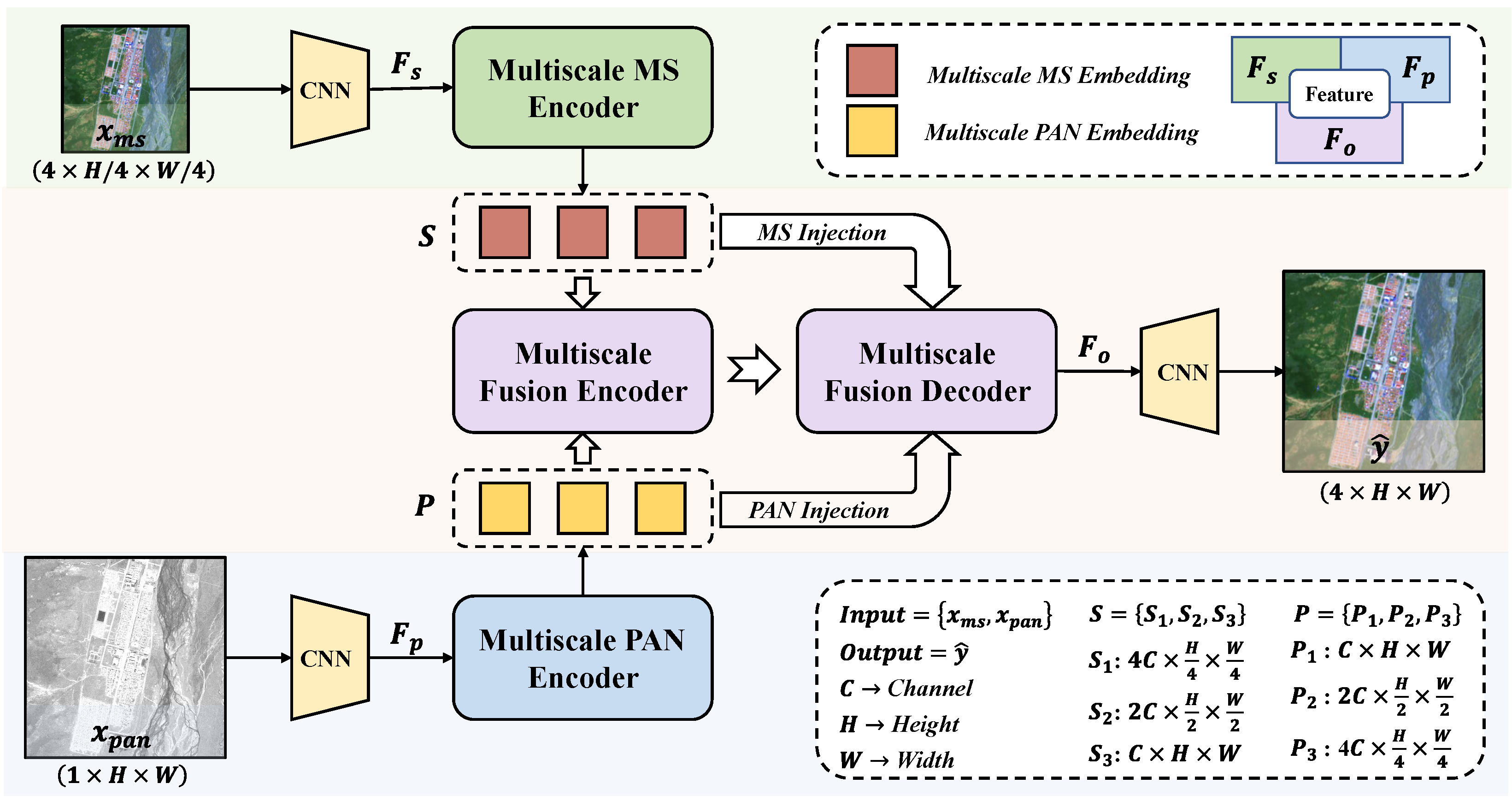

4.1. Overall Framework

4.1.1. Problem Definition

4.1.2. Overall Pipeline

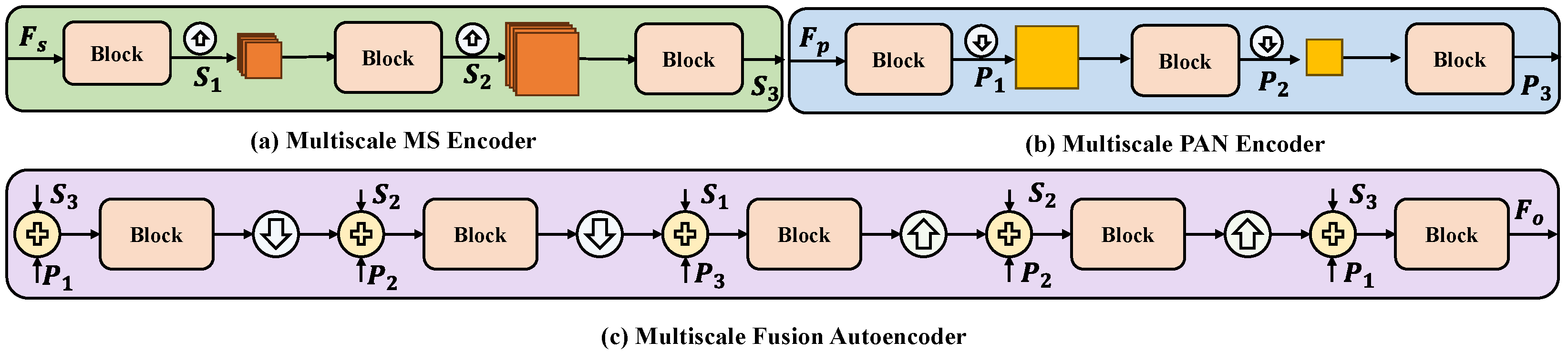

- Multiscale MS encoder: The MS image first passes through a convolutional layer with a 3 × 3 kernel to obtain shallow features. These features are then passed through the multiscale MS encoder, which produces hierarchical image features at three resolutions.

- Multiscale PAN encoder: As in the multiscale MS encoder, the PAN features are first extracted without changing the image size. The multiscale PAN encoder then produces three features whose scales match those of the MS features.

- Multiscale fusion autoencoder: The features at the three corresponding scales from the multiscale MS encoder and the multiscale PAN encoder are fused scale by scale. Finally, a convolutional layer maps the fused features to the output.
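
The three stages above can be traced at the level of tensor shapes. The following is a minimal sketch, not the authors' implementation: the function names, the channel width of 32, the halving of height and width per scale, and the doubling of channels per scale are all illustrative assumptions, as is the premise that the MS input has already been resampled to the PAN grid.

```python
def conv3x3_same(shape, out_channels):
    """Shape after a 3x3 convolution with stride 1 and padding 1 (spatial size kept)."""
    _, height, width = shape
    return (out_channels, height, width)


def multiscale_encoder(shape, num_scales=3):
    """Shapes of the hierarchical features produced by one encoder.

    Assumption: each further scale halves H and W and doubles the channels.
    """
    channels, height, width = shape
    return [
        (channels * 2 ** k, height // 2 ** k, width // 2 ** k)
        for k in range(num_scales)
    ]


def trace_cmfnet_shapes(ms_shape, pan_shape, width=32):
    """Trace (C, H, W) shapes through the MS encoder, PAN encoder, and fusion stage."""
    # Pre-extraction: a 3x3 convolution that keeps the image size.
    ms_feats = multiscale_encoder(conv3x3_same(ms_shape, width))
    pan_feats = multiscale_encoder(conv3x3_same(pan_shape, width))

    # Fusion is only possible if the two encoders agree at every scale.
    for ms_f, pan_f in zip(ms_feats, pan_feats):
        if ms_f != pan_f:
            raise ValueError(f"scale mismatch: {ms_f} vs {pan_f}")

    # The fusion autoencoder returns to the finest scale; a final convolution
    # maps the fused features back to the number of MS bands.
    out_shape = conv3x3_same(ms_feats[0], ms_shape[0])
    return ms_feats, pan_feats, out_shape


if __name__ == "__main__":
    ms, pan, out = trace_cmfnet_shapes((4, 256, 256), (1, 256, 256))
    print("MS features: ", ms)
    print("PAN features:", pan)
    print("Output:      ", out)
```

The explicit shape check mirrors the requirement that the PAN features correspond to the MS scales before they are fused.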

4.2. Cascaded Multiscale Fusion Network

4.2.1. Multiscale MS Encoder

4.2.2. Multiscale PAN Encoder

4.2.3. Multiscale Fusion Autoencoder

- Multiscale fusion encoder: The encoder gradually downsamples its input and extracts high-level semantic features [15,53,54]. To make full use of the information from the multiscale MS encoder and the multiscale PAN encoder, their features must be deeply fused at the same scale. The two encoders produce two feature sets, representing the MS and PAN images, respectively. Since a high-resolution MS image must have both high spatial and high spectral resolution, its features must carry both spatial and spectral information. To achieve this, the MS and PAN features are added at each of the three scales. After each addition, the block, which is the same as the one used in the multiscale MS encoder, encodes the combined feature maps into a more compact representation. The output of the multiscale fusion encoder (Figure 5c) is a feature set that encodes the spatial and spectral information of the two input images.

- Multiscale fusion decoder: The decoder (Figure 5c) mirrors the encoder: each upsampled feature map is fused with the feature map of the corresponding encoder scale, which helps to recover the detail and texture information of the image [55]. Specifically, we upsample the decoder features and fuse them with the encoder features of the corresponding scale. Because downsampling in the encoder reduces resolution, some details and local information may be lost. Therefore, during decoding, multiscale injection of the encoder features lets the decoder recover these details and local information. This effectively connects and fuses the low-level and high-level features, and the decoder finally outputs the fusion result.
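
The same-scale addition in the encoder and the skip-style injection in the decoder can be illustrated on toy data. This is a minimal sketch under stated assumptions, not the network itself: 1-D lists stand in for feature maps, `block` is an identity placeholder for the learned block, average pooling and nearest-neighbour repetition stand in for the down- and upsampling operators, and the cascade wiring (adding the downsampled previous output to the next scale) is an assumption made for illustration.

```python
def add(a, b):
    """Element-wise addition of two equally sized feature vectors."""
    if len(a) != len(b):
        raise ValueError("features must have the same scale")
    return [x + y for x, y in zip(a, b)]


def downsample(x):
    """Halve the resolution by averaging neighbouring pairs (assumed operator)."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def upsample(x):
    """Double the resolution by repeating each value (assumed operator)."""
    return [v for v in x for _ in range(2)]


def block(x):
    """Identity placeholder for the learned block applied after each addition."""
    return list(x)


def fusion_encoder(ms_feats, pan_feats):
    """Add MS and PAN features at each scale, cascading from fine to coarse."""
    fused = []
    previous = None
    for ms_k, pan_k in zip(ms_feats, pan_feats):
        f = add(ms_k, pan_k)                 # same-scale MS + PAN addition
        if previous is not None:
            f = add(f, downsample(previous))  # assumed cascade from the finer scale
        previous = block(f)
        fused.append(previous)
    return fused


def fusion_decoder(fused):
    """Upsample from the coarsest scale and inject the encoder features."""
    out = fused[-1]
    for skip in reversed(fused[:-1]):
        out = block(add(upsample(out), skip))
    return out


if __name__ == "__main__":
    ms_feats = [[1, 1, 1, 1], [2, 2], [3]]    # three scales, fine to coarse
    pan_feats = [[1, 1, 1, 1], [1, 1], [1]]
    fused = fusion_encoder(ms_feats, pan_feats)
    print(fused)
    print(fusion_decoder(fused))
```

Reusing the encoder outputs as skip inputs is what lets the decoder see the finer-scale information that downsampling would otherwise discard.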

5. Experiments and Results

5.1. Implementation Details

5.1.1. Training Settings

5.1.2. Evaluation Metrics
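
Three of the reduced-resolution metrics reported in the result tables (PSNR, SAM, and ERGAS) have widely used textbook definitions, sketched below in plain Python. This is an illustrative sketch, not the evaluation code used for the experiments: the image layout (a list of bands, each a flat list of pixel values), the peak value `max_val=1.0`, the resolution ratio `ratio=4`, and the choice to report SAM in radians are assumptions.

```python
import math


def mse(ref, est):
    """Mean squared error over all bands and pixels."""
    count = sum(len(band) for band in ref)
    total = sum(
        (r - e) ** 2
        for ref_band, est_band in zip(ref, est)
        for r, e in zip(ref_band, est_band)
    )
    return total / count


def psnr(ref, est, max_val=1.0):
    """Peak signal-to-noise ratio in dB (higher is better)."""
    err = mse(ref, est)
    return math.inf if err == 0 else 10 * math.log10(max_val ** 2 / err)


def sam(ref, est):
    """Mean spectral angle in radians between per-pixel spectra (lower is better)."""
    angles = []
    for ref_vec, est_vec in zip(zip(*ref), zip(*est)):
        dot = sum(r * e for r, e in zip(ref_vec, est_vec))
        norm = math.sqrt(sum(r * r for r in ref_vec)) * math.sqrt(
            sum(e * e for e in est_vec)
        )
        if norm == 0:
            continue  # the angle is undefined for an all-zero spectrum
        cos = max(-1.0, min(1.0, dot / norm))
        angles.append(math.acos(cos))
    return sum(angles) / len(angles) if angles else 0.0


def ergas(ref, est, ratio=4):
    """Relative dimensionless global error in synthesis (lower is better)."""
    terms = []
    for ref_band, est_band in zip(ref, est):
        band_mse = sum((r - e) ** 2 for r, e in zip(ref_band, est_band)) / len(ref_band)
        band_mean = sum(ref_band) / len(ref_band)
        terms.append(band_mse / band_mean ** 2)
    return 100.0 / ratio * math.sqrt(sum(terms) / len(terms))


if __name__ == "__main__":
    reference = [[0.5, 0.5], [0.5, 0.5]]   # two bands, two pixels each
    estimate = [[0.6, 0.6], [0.6, 0.6]]
    print(psnr(reference, estimate), sam(reference, estimate), ergas(reference, estimate))
```

In this toy case the estimate is a uniform brightening of the reference, so PSNR and ERGAS register the error while the spectral angle stays near zero.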

5.1.3. Quantitative Comparison

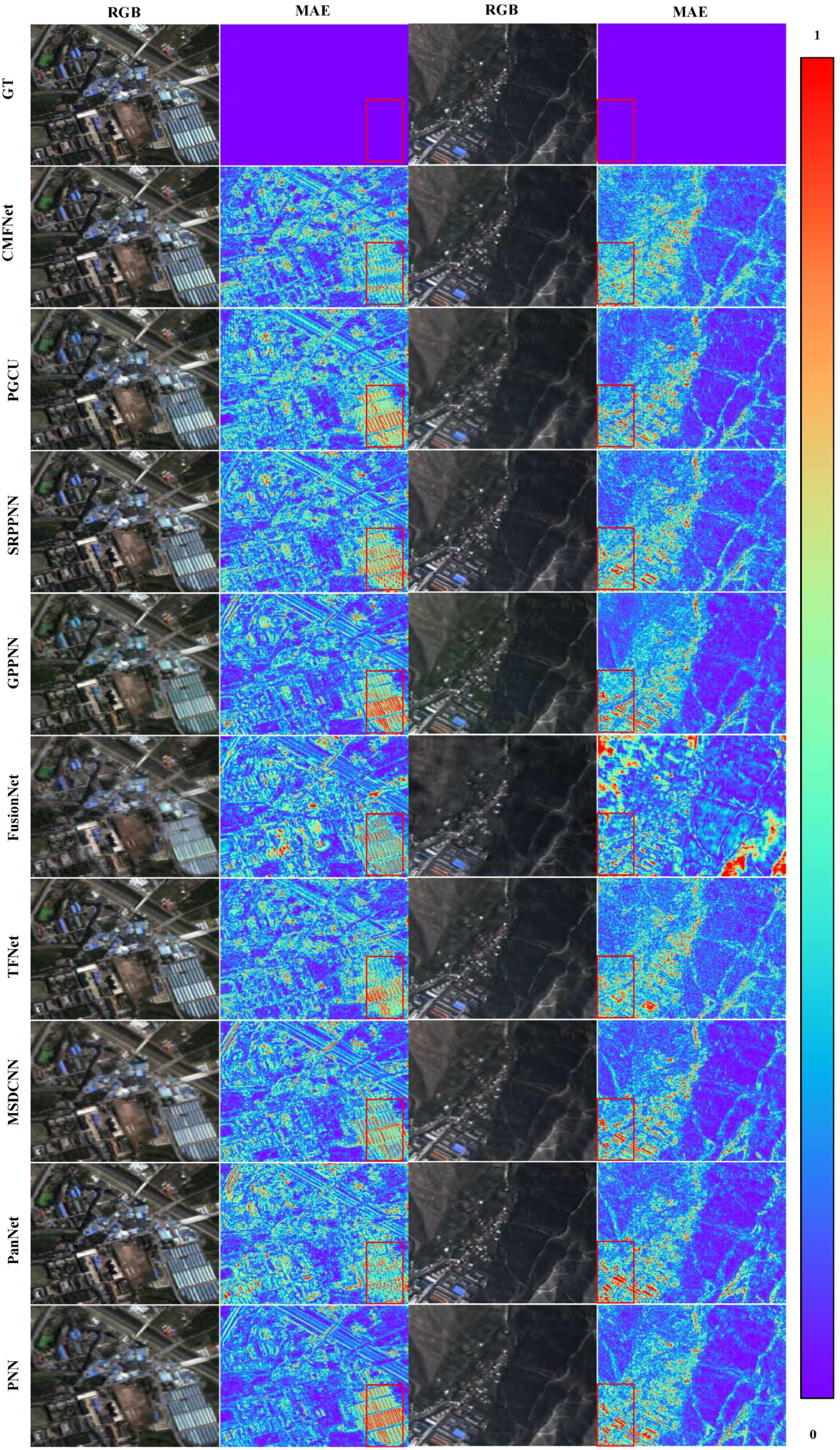

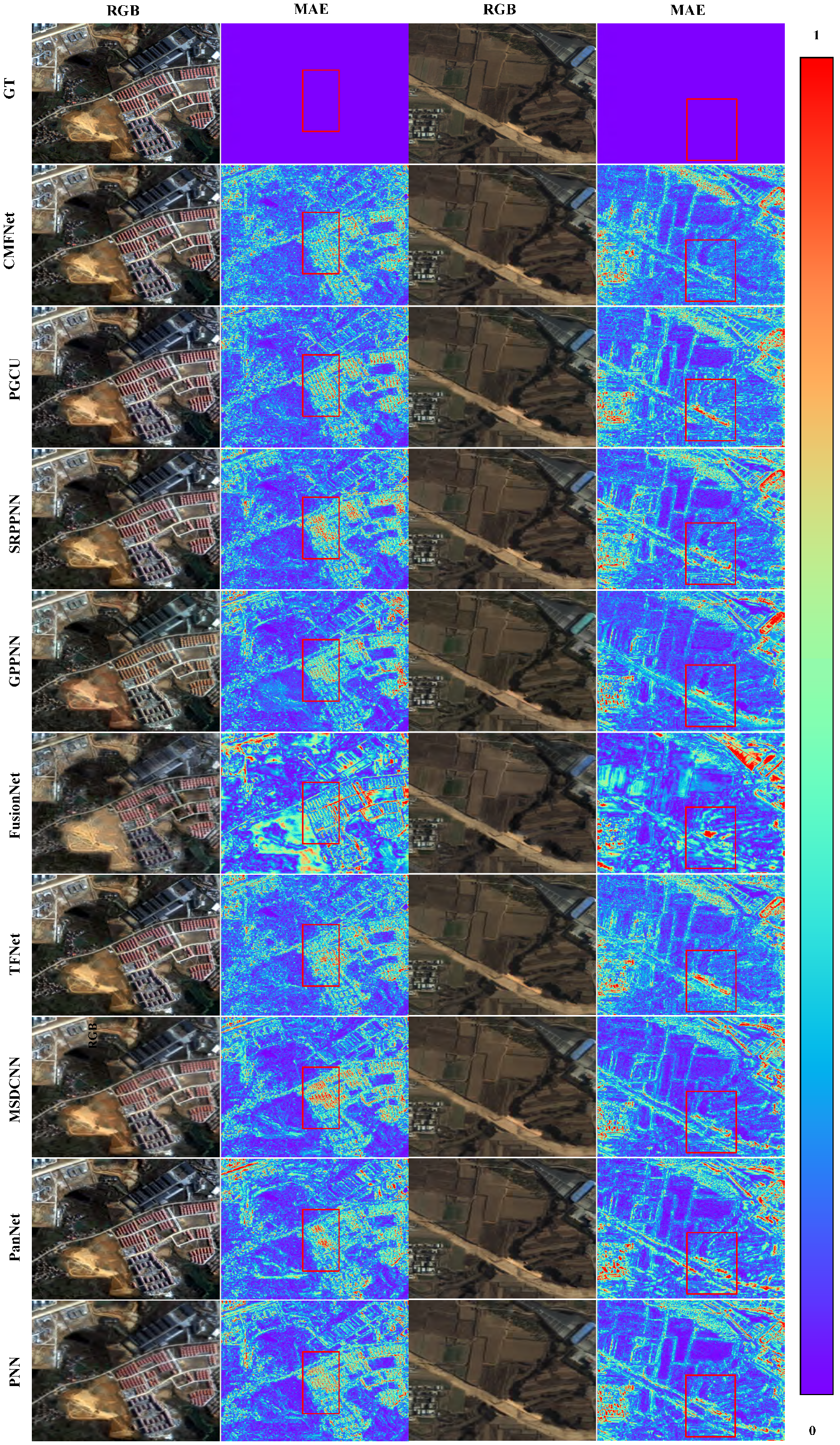

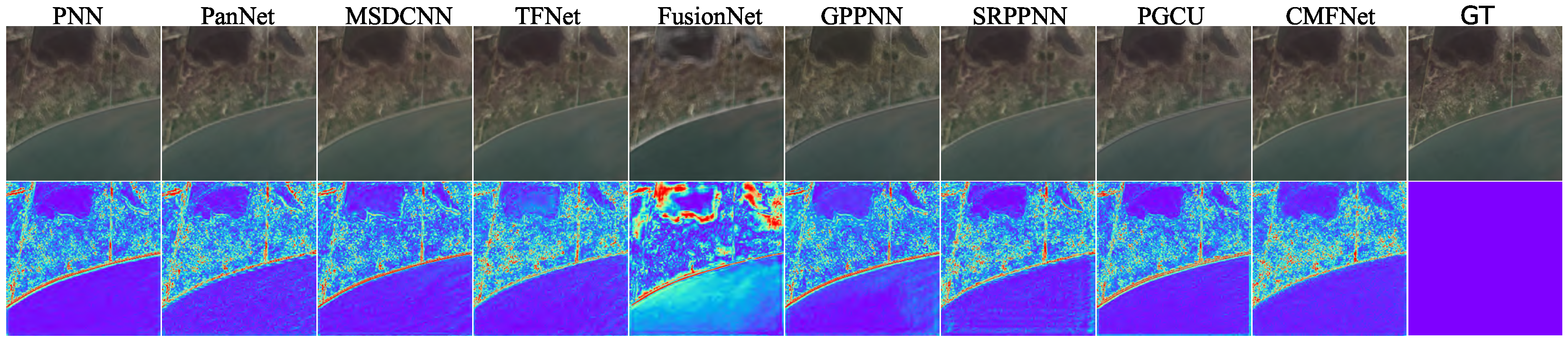

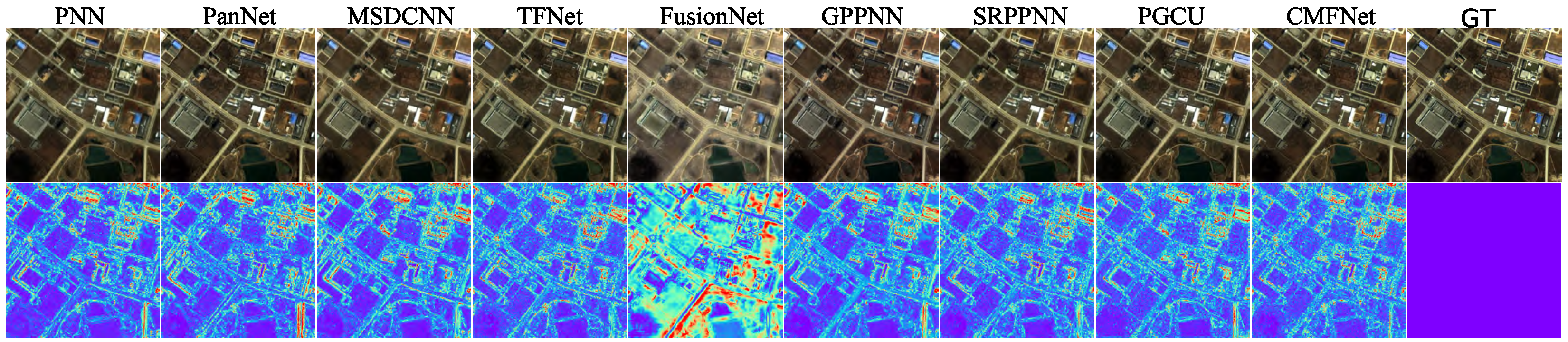

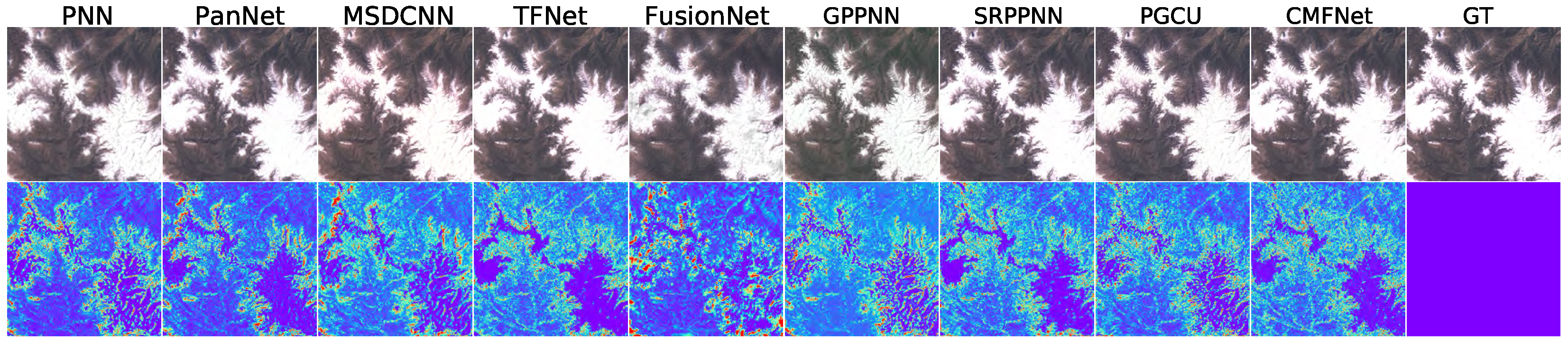

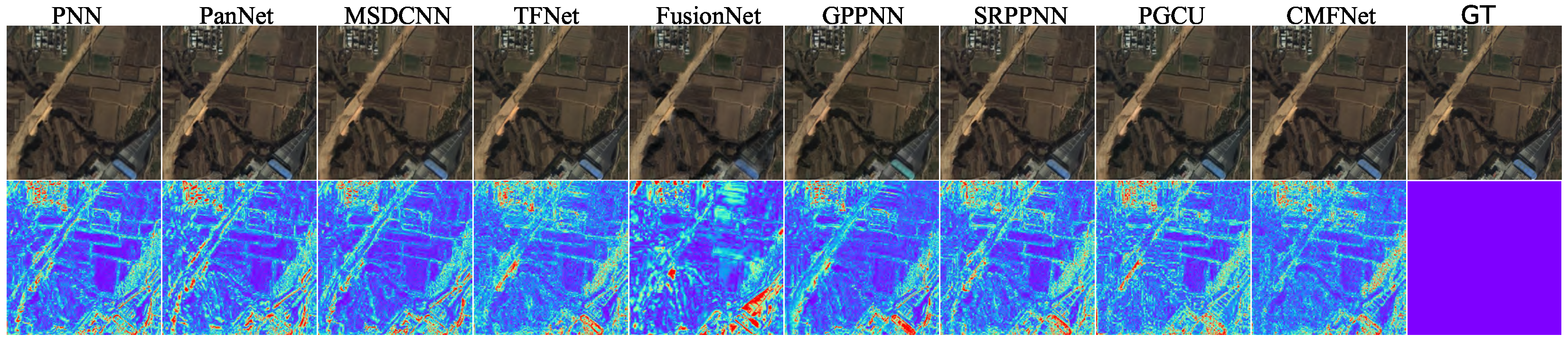

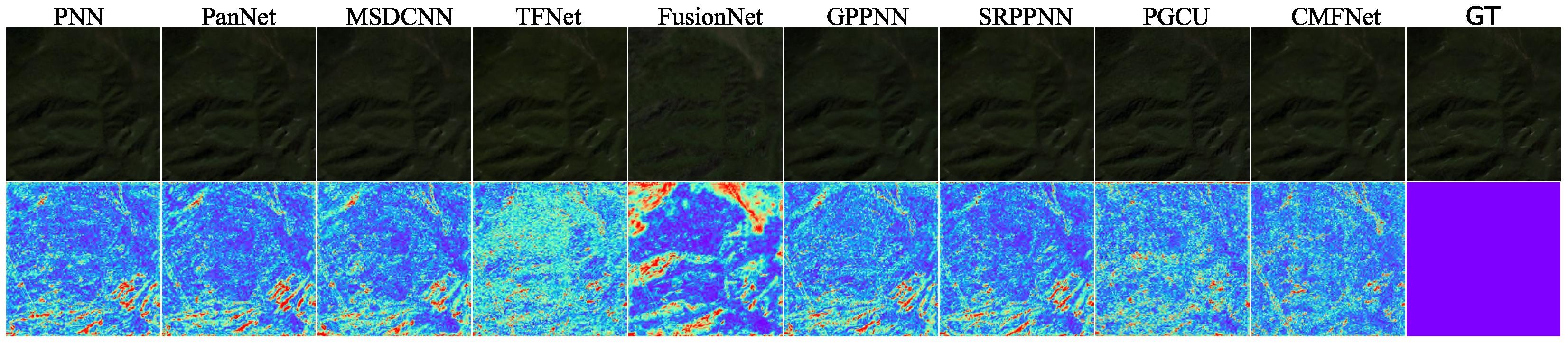

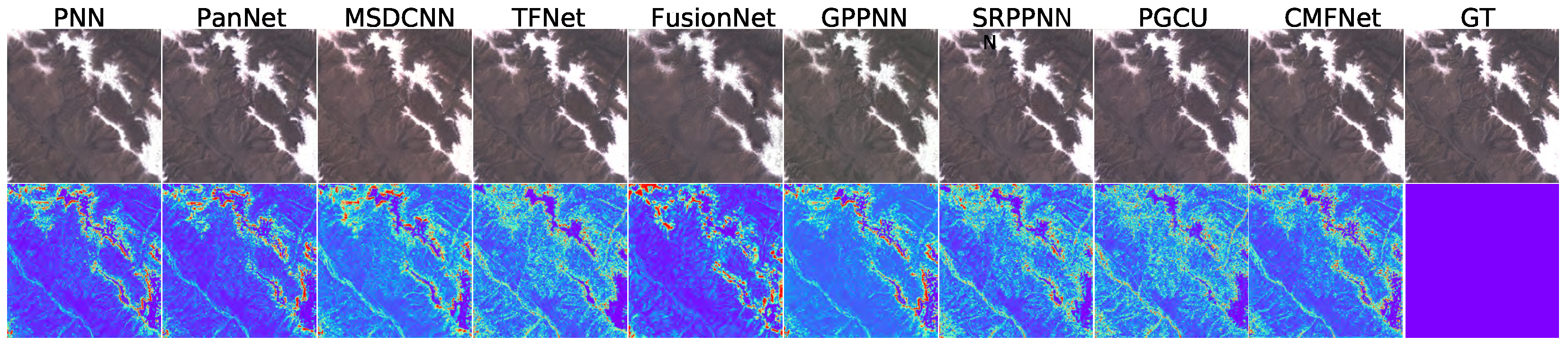

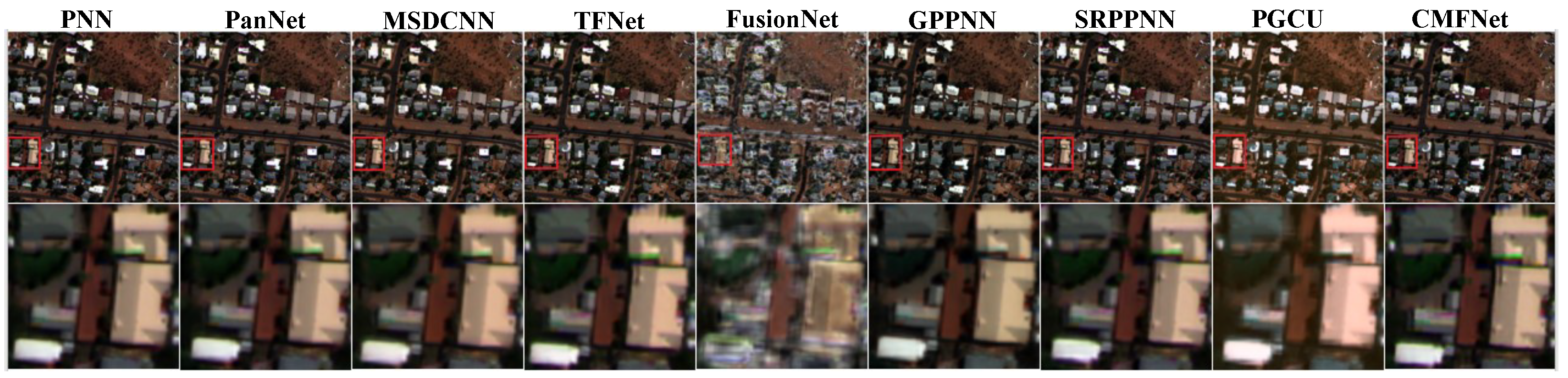

5.1.4. Qualitative Comparison

5.2. Ablation Studies

5.2.1. Impact of the Multiscale Cascading

5.2.2. Impact of the Cascaded Injection

5.2.3. Impact of the Block

5.2.4. Scalability of the PanBench Dataset

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, H.; Ma, J. GTP-PNet: A residual learning network based on gradient transformation prior for pansharpening. ISPRS J. Photogramm. Remote Sens. 2021, 172, 223–239.

- Cao, X.; Yao, J.; Xu, Z.; Meng, D. Hyperspectral Image Classification with Convolutional Neural Network and Active Learning. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4604–4616.

- Lv, Z.; Zhang, P.; Sun, W.; Benediktsson, J.A.; Li, J.; Wang, W. Novel Adaptive Region Spectral-Spatial Features for Land Cover Classification with High Spatial Resolution Remotely Sensed Imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5609412.

- Liu, J.; Li, S.; Zhou, C.; Cao, X.; Gao, Y.; Wang, B. SRAF-Net: A Scene-Relevant Anchor-Free Object Detection Network in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5405914.

- Li, X.; Wang, Z.; Zhang, B.; Sun, F.; Hu, X. Recognizing object by components with human prior knowledge enhances adversarial robustness of deep neural networks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 8861–8873.

- Asokan, A.; Anitha, J. Change detection techniques for remote sensing applications: A survey. Earth Sci. Inform. 2019, 12, 143–160.

- Wu, C.; Du, B.; Zhang, L. Fully convolutional change detection framework with generative adversarial network for unsupervised, weakly supervised and regional supervised change detection. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9774–9788.

- Cao, X.; Fu, X.; Xu, C.; Meng, D. Deep spatial-spectral global reasoning network for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5504714.

- Jiang, K.; Wang, Z.; Yi, P.; Jiang, J.; Xiao, J.; Yao, Y. Deep distillation recursive network for remote sensing imagery super-resolution. Remote Sens. 2018, 10, 1700.

- Wang, Z.; Jiang, K.; Yi, P.; Han, Z.; He, Z. Ultra-dense GAN for satellite imagery super-resolution. Neurocomputing 2020, 398, 328–337.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

- Girshick, R. Fast R-CNN. In Proceedings of the ICCV, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Shao, Z.; Lu, Z.; Ran, M.; Fang, L.; Zhou, J.; Zhang, Y. Residual Encoder–Decoder Conditional Generative Adversarial Network for Pansharpening. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1573–1577.

- Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pansharpening method for remote sensing image fusion. Inf. Fusion 2020, 62, 110–120.

- Hu, X.; Li, K.; Zhang, W.; Luo, Y.; Lemercier, J.M.; Gerkmann, T. Speech separation using an asynchronous fully recurrent convolutional neural network. NeurIPS 2021, 34, 22509–22522.

- Li, K.; Yang, R.; Hu, X. An efficient encoder-decoder architecture with top-down attention for speech separation. arXiv 2022, arXiv:2209.15200.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by Convolutional Neural Networks. Remote Sens. 2016, 8, 594.

- Yuan, Q.; Wei, Y.; Meng, X.; Shen, H.; Zhang, L. A Multiscale and Multidepth Convolutional Neural Network for Remote Sensing Imagery Pan-Sharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 978–989.

- Xu, S.; Zhang, J.; Zhao, Z.; Sun, K.; Liu, J.; Zhang, C. Deep Gradient Projection Networks for Pan-Sharpening. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021; pp. 1366–1375.

- Yang, J.; Fu, X.; Hu, Y.; Huang, Y.; Ding, X.; Paisley, J. PanNet: A Deep Network Architecture for Pan-Sharpening. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 5449–5457.

- Liu, X.; Liu, Q.; Wang, Y. Remote sensing image fusion based on two-stream fusion network. Inf. Fusion 2020, 55, 1–15.

- Deng, L.J.; Vivone, G.; Jin, C.; Chanussot, J. Detail Injection-Based Deep Convolutional Neural Networks for Pansharpening. IEEE Trans. Geosci. Remote Sens. 2020, 59, 6995–7010.

- Zhou, M.; Huang, J.; Yan, K.; Yu, H.; Fu, X.; Liu, A.; Wei, X.; Zhao, F. Spatial-Frequency Domain Information Integration for Pan-Sharpening. In Proceedings of the ECCV, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 274–291.

- Zhou, M.; Yan, K.; Huang, J.; Yang, Z.; Fu, X.; Zhao, F. Mutual Information-Driven Pan-Sharpening. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 1798–1808.

- Pohl, C.; Moellmann, J.; Fries, K. Standardizing quality assessment of fused remotely sensed images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 863–869.

- Liu, Q.; Zhou, H.; Xu, Q.; Liu, X.; Wang, Y. PSGAN: A Generative Adversarial Network for Remote Sensing Image Pan-Sharpening. IEEE Trans. Geosci. Remote Sens. 2020, 59, 10227–10242.

- Jin, X.; Huang, S.; Jiang, Q.; Lee, S.J.; Wu, L.; Yao, S. Semisupervised Remote Sensing Image Fusion Using Multiscale Conditional Generative Adversarial Network with Siamese Structure. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7066–7084.

- Zhu, Z.; Cao, X.; Zhou, M.; Huang, J.; Meng, D. Probability-Based Global Cross-Modal Upsampling for Pansharpening. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14039–14048.

- Meng, Q.; Shi, W.; Li, S.; Zhang, L. PanDiff: A Novel Pansharpening Method Based on Denoising Diffusion Probabilistic Model. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5611317.

- Liu, Q.; Meng, X.; Shao, F.; Li, S. Supervised-unsupervised combined deep convolutional neural networks for high-fidelity pansharpening. Inf. Fusion 2023, 89, 292–304.

- Zhou, B.; Shao, F.; Meng, X.; Fu, R.; Ho, Y.S. No-Reference Quality Assessment for Pansharpened Images via Opinion-Unaware Learning. IEEE Access 2019, 7, 40388–40401.

- Agudelo-Medina, O.A.; Benitez-Restrepo, H.D.; Vivone, G.; Bovik, A. Perceptual quality assessment of pansharpened images. Remote Sens. 2019, 11, 877.

- Vivone, G.; Dalla Mura, M.; Garzelli, A.; Pacifici, F. A benchmarking protocol for pansharpening: Dataset, preprocessing, and quality assessment. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6102–6118.

- Meng, X.; Xiong, Y.; Shao, F.; Shen, H.; Sun, W.; Yang, G.; Yuan, Q.; Fu, R.; Zhang, H. A large-scale benchmark data set for evaluating pansharpening performance: Overview and implementation. IEEE Geosci. Remote Sens. Mag. 2020, 9, 18–52.

- Cai, J.; Huang, B. Super-Resolution-Guided Progressive Pansharpening Based on a Deep Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5206–5220.

- Gastineau, A.; Aujol, J.F.; Berthoumieu, Y.; Germain, C. Generative Adversarial Network for Pansharpening with Spectral and Spatial Discriminators. IEEE Trans. Geosci. Remote Sens. 2021, 60, 4401611.

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. DeepGlobe 2018: A Challenge to Parse the Earth through Satellite Images. In Proceedings of the CVPRW, Salt Lake City, UT, USA, 18–22 June 2018; pp. 172–181.

- Cheng, G.; Han, J.; Lu, X. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.

- Li, S.; Yang, B. A new pansharpening method using a compressed sensing technique. IEEE Trans. Geosci. Remote Sens. 2010, 49, 738–746.

- Huang, W.; Xiao, L.; Wei, Z.; Liu, H.; Tang, S. A New Pan-Sharpening Method with Deep Neural Networks. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1037–1041.

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Verde, N.; Mallinis, G.; Tsakiri-Strati, M.; Georgiadis, C.; Patias, P. Assessment of Radiometric Resolution Impact on Remote Sensing Data Classification Accuracy. Remote Sens. 2018, 10, 1267.

- Lin, S.; Gu, J.; Yamazaki, S.; Shum, H.Y. Radiometric calibration from a single image. In Proceedings of the CVPR, Washington, DC, USA, 27 June–2 July 2004; Volume 2, p. II.

- Liang, S.; Fang, H.; Chen, M. Atmospheric correction of Landsat ETM+ land surface imagery. I. Methods. IEEE Trans. Geosci. Remote Sens. 2001, 39, 2490–2498.

- Barzaghi, R.; Carrion, D.; Carroccio, M.; Maseroli, R.; Stefanelli, G.; Venuti, G. Gravity corrections for the updated Italian levelling network. Appl. Geomat. 2023, 15, 773–780.

- Meng, Q.; Zhao, M.; Zhang, L.; Shi, W.; Su, C.; Bruzzone, L. Multilayer Feature Fusion Network with Spatial Attention and Gated Mechanism for Remote Sensing Scene Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6510105.

- Li, K.; Yang, R.; Sun, F.; Hu, X. IIANet: An Intra-and Inter-Modality Attention Network for Audio-Visual Speech Separation. In Proceedings of the Forty-first International Conference on Machine Learning (ICML), Vienna, Austria, 7–12 July 2024.

- Li, K.; Luo, Y. Subnetwork-To-Go: Elastic Neural Network with Dynamic Training and Customizable Inference. In Proceedings of the ICASSP, Seoul, Republic of Korea, 22–27 May 2024; pp. 6775–6779.

- Zou, X.; Li, K.; Xing, J.; Zhang, Y.; Wang, S.; Jin, L.; Tao, P. DiffCR: A Fast Conditional Diffusion Framework for Cloud Removal from Optical Satellite Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5612014.

- Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple Baselines for Image Restoration. In Proceedings of the ECCV, Tel Aviv, Israel, 23–27 October 2022; pp. 17–33.

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

- Li, K.; Chen, G. SPMamba: State-space model is all you need in speech separation. arXiv 2024, arXiv:2404.02063.

- Li, K.; Xie, F.; Chen, H.; Yuan, K.; Hu, X. An audio-visual speech separation model inspired by cortico-thalamo-cortical circuits. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 10, 76–80.

- Li, K.; Luo, Y. On the design and training strategies for RNN-based online neural speech separation systems. In Proceedings of the ICASSP, Rhodes, Greece, 4–9 June 2023; pp. 1–5.

- Liu, M.; Zhang, W.; Orabona, F.; Yang, T. Adam+: A Stochastic Method with Adaptive Variance Reduction. arXiv 2021, arXiv:2011.11985.

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Yuhas, R.H.; Goetz, A.F.; Boardman, J.W. Discrimination among semi-arid landscape endmembers using the Spectral Angle Mapper (SAM) algorithm. In Proceedings of the JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 1–5 June 1992; Volume 1, pp. 147–149.

- Renza, D.; Martinez, E.; Arquero, A. A New Approach to Change Detection in Multispectral Images by Means of ERGAS Index. IEEE Geosci. Remote Sens. Lett. 2012, 10, 76–80.

- Zhou, J.; Civco, D.L.; Silander, J.A. A wavelet transform method to merge Landsat TM and SPOT panchromatic data. Int. J. Remote Sens. 1998, 19, 743–757.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS + Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Vivone, G.; Restaino, R.; Chanussot, J. Full scale regression-based injection coefficients for panchromatic sharpening. IEEE Trans. Image Process. 2018, 27, 3418–3431.

- Huang, J.; Li, K.; Wang, X. Single image super-resolution reconstruction of enhanced loss function with multi-gpu training. In Proceedings of the ISPA/BDCloud/SocialCom/Su, Xiamen, China, 16–18 December 2019; pp. 559–565.

- Saharia, C.; Ho, J.; Chan, W.; Salimans, T.; Fleet, D.J.; Norouzi, M. Image Super-Resolution via Iterative Refinement. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4713–4726.

- Li, K.; Yang, S.; Dong, R.; Wang, X.; Huang, J. Survey of single image super-resolution reconstruction. IET Image Process. 2020, 14, 2273–2290.

- Saharia, C.; Chan, W.; Chang, H.; Lee, C.; Ho, J.; Salimans, T.; Fleet, D.; Norouzi, M. Palette: Image-to-Image Diffusion Models. In Proceedings of the ACM SIGGRAPH, Vancouver, BC, Canada, 7–11 August 2022; pp. 1–10.

- Zhou, M.; Yan, K.; Pan, J.; Ren, W.; Xie, Q.; Cao, X. Memory-augmented deep unfolding network for guided image super-resolution. Int. J. Comput. Vis. 2023, 131, 215–242.

| Method | Publication | Year | Satellites covered (out of GF1, GF2, GF6, LC7, LC8, WV2, WV3, WV4, QB, IN) | PAN |
|---|---|---|---|---|
| PNN [17] | Remote Sens. | 2016 | 2 | 132 * |
| PanNet [20] | ICCV | 2017 | 3 | 400 * |
| MSDCNN [18] | J-STARS | 2018 | 3 | 164 * |
| TFNet [21] | Inform. Fusion | 2020 | 2 | 512 * |
| FusionNet [22] | TGRS | 2020 | 4 | 64 * |
| PSGAN [26] | TGRS | 2020 | 3 | 256 * |
| GPPNN [19] | CVPR | 2021 | 3 | 128 * |
| SRPPNN [35] | TGRS | 2021 | 3 | 256 * |
| MDSSC-GAN [36] | TGRS | 2021 | 1 | 512 * |
| MIDP [24] | CVPR | 2022 | 3 | 128 * |
| SFIIN [23] | ECCV | 2022 | 3 | 128 * |
| PanDiff [29] | TGRS | 2023 | 4 | 64 * |
| USSCNet [30] | Inform. Fusion | 2023 | 3 | 256 * |
| PGCU [28] | CVPR | 2023 | 3 | 128 * |
| CMFNet [Ours] | - | 2024 | 10 (all) | 1024 * |

| Model | Full Resolution | | | Reduced Resolution | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | D_λ ↓ | D_s ↓ | QNR ↑ | PSNR ↑ | SSIM ↑ | SAM ↓ | ERGAS ↓ | SCC ↑ | MSE ↓ |
| GSA [61] | 0.0711 | 0.1334 | 0.8085 | 17.3500 | 0.3237 | 0.0807 | 5.0289 | 0.8770 | 33.3673 |
| MTF-GLP [62] | 0.1189 | 0.1308 | 0.7716 | 16.9708 | 0.3005 | 0.0931 | 5.6847 | 0.8707 | 36.4232 |
| PNN [17] | 0.0559 | 0.1298 | 0.8223 | 28.9029 | 0.7887 | 0.0750 | 4.4998 | 0.8992 | 25.5223 |
| PanNet [20] | 0.0640 | 0.1184 | 0.8319 | 30.1465 | 0.8497 | 0.0702 | 3.8053 | 0.9234 | 18.5474 |
| MSDCNN [18] | 0.0542 | 0.0981 | 0.8557 | 29.2675 | 0.8237 | 0.0761 | 4.1677 | 0.9139 | 21.7871 |
| TFNet [21] | 0.0565 | 0.1105 | 0.8404 | 32.7018 | 0.8931 | 0.0600 | 2.8816 | 0.9486 | 11.9169 |
| FusionNet [22] | 0.0998 | 0.1663 | 0.7505 | 24.4378 | 0.7175 | 0.0880 | 7.5029 | 0.7913 | 70.0308 |
| GPPNN [19] | 0.0671 | 0.1074 | 0.8369 | 28.8901 | 0.8211 | 0.0842 | 4.2720 | 0.9124 | 22.3945 |
| SRPPNN [35] | 0.0513 | 0.0907 | 0.8640 | 31.3186 | 0.8647 | 0.0666 | 3.3526 | 0.9344 | 15.5632 |
| PGCU [28] | 0.1171 | 0.0961 | 0.7994 | 29.9692 | 0.8244 | 0.0759 | 3.9113 | 0.9167 | 19.5983 |
| CMFNet [Ours] | 0.0567 | 0.1012 | 0.8515 | 34.4921 | 0.9153 | 0.0511 | 2.3984 | 0.9601 | 8.8862 |

| Satellite | Model | Reduced Resolution | | | | | | Full Resolution | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | | PSNR ↑ | SSIM ↑ | SAM ↓ | ERGAS ↓ | SCC ↑ | MSE ↓ | D_λ ↓ | D_s ↓ | QNR ↑ |
| GF1 | PNN | 36.5457 | 0.8278 | 0.0313 | 2.4438 | 0.8742 | 0.0011 | 0.0698 | 0.0904 | 0.8469 |
| | PanNet | 38.2636 | 0.9052 | 0.0278 | 1.8354 | 0.9016 | 0.0006 | 0.0958 | 0.1607 | 0.7596 |
| | MSDCNN | 35.5714 | 0.8428 | 0.0374 | 2.4953 | 0.8737 | 0.0011 | 0.0731 | 0.2003 | 0.7417 |
| | TFNet | 39.4532 | 0.9379 | 0.0275 | 1.5140 | 0.9404 | 0.0003 | 0.0828 | 0.1745 | 0.7568 |
| | FusionNet | 29.2258 | 0.7811 | 0.0503 | 5.0309 | 0.6753 | 0.0044 | 0.1203 | 0.1457 | 0.7509 |
| | GPPNN | 33.3889 | 0.8360 | 0.0570 | 3.0394 | 0.8647 | 0.0012 | 0.0877 | 0.0951 | 0.8263 |
| | SRPPNN | 38.5106 | 0.9146 | 0.0273 | 1.7626 | 0.9198 | 0.0005 | 0.0679 | 0.1609 | 0.7819 |
| | PGCU | 35.8450 | 0.8383 | 0.0353 | 2.4158 | 0.8704 | 0.0010 | 0.0757 | 0.0859 | 0.8462 |
| | CMFNet | 42.8653 | 0.9591 | 0.0196 | 1.0458 | 0.9580 | 0.0001 | 0.0686 | 0.0866 | 0.8517 |
| GF2 | PNN | 28.6084 | 0.8093 | 0.0726 | 5.4655 | 0.9212 | 0.0021 | 0.0591 | 0.1021 | 0.8468 |
| | PanNet | 30.8310 | 0.8897 | 0.0656 | 4.1091 | 0.9560 | 0.0011 | 0.0566 | 0.0732 | 0.8744 |
| | MSDCNN | 30.0148 | 0.8615 | 0.0710 | 4.6657 | 0.9450 | 0.0015 | 0.0551 | 0.0790 | 0.8709 |
| | TFNet | 34.1263 | 0.9351 | 0.0523 | 2.8889 | 0.9797 | 0.0005 | 0.0665 | 0.0744 | 0.8643 |
| | FusionNet | 25.1434 | 0.7246 | 0.0966 | 8.7656 | 0.8212 | 0.0041 | 0.0588 | 0.0868 | 0.8598 |
| | GPPNN | 30.1646 | 0.8666 | 0.0725 | 4.5182 | 0.9472 | 0.0014 | 0.0633 | 0.0822 | 0.8605 |
| | SRPPNN | 32.0361 | 0.9060 | 0.0586 | 3.4941 | 0.9663 | 0.0009 | 0.0602 | 0.0741 | 0.8708 |
| | PGCU | 30.4483 | 0.8477 | 0.0753 | 4.4774 | 0.9474 | 0.0012 | 0.0685 | 0.0768 | 0.8616 |
| | CMFNet | 36.6737 | 0.9554 | 0.0394 | 2.2247 | 0.9870 | 0.0003 | 0.0550 | 0.0771 | 0.8725 |
| GF6 | PNN | 29.1462 | 0.8080 | 0.0590 | 2.7313 | 0.9464 | 0.0013 | 0.0568 | 0.0795 | 0.8687 |
| | PanNet | 30.1292 | 0.8445 | 0.0519 | 2.4281 | 0.9580 | 0.0010 | 0.0570 | 0.0747 | 0.8730 |
| | MSDCNN | 29.6711 | 0.8398 | 0.0607 | 2.5947 | 0.9544 | 0.0011 | 0.0570 | 0.0720 | 0.8756 |
| | TFNet | 33.0839 | 0.9010 | 0.0415 | 1.7560 | 0.9779 | 0.0005 | 0.0577 | 0.0818 | 0.8656 |
| | FusionNet | 24.9037 | 0.7577 | 0.0708 | 4.7497 | 0.8708 | 0.0036 | 0.0626 | 0.1351 | 0.8119 |
| | GPPNN | 29.5826 | 0.8383 | 0.0604 | 2.6056 | 0.9538 | 0.0012 | 0.0608 | 0.0789 | 0.8656 |
| | SRPPNN | 31.3415 | 0.8688 | 0.0492 | 2.1116 | 0.9675 | 0.0008 | 0.0580 | 0.0743 | 0.8724 |
| | PGCU | 30.4135 | 0.8387 | 0.0549 | 2.3715 | 0.9606 | 0.0010 | 0.0588 | 0.0699 | 0.8759 |
| | CMFNet | 34.2858 | 0.9165 | 0.0361 | 1.5311 | 0.9826 | 0.0004 | 0.0571 | 0.0852 | 0.8628 |
| LC7 | PNN | 29.4185 | 0.8439 | 0.0172 | 1.9653 | 0.9700 | 0.0014 | 0.0353 | 0.0799 | 0.8872 |
| | PanNet | 30.9216 | 0.8936 | 0.0154 | 1.6598 | 0.9783 | 0.0010 | 0.0302 | 0.0784 | 0.8935 |
| | MSDCNN | 30.1564 | 0.8901 | 0.0308 | 1.7492 | 0.9799 | 0.0011 | 0.0300 | 0.0899 | 0.8824 |
| | TFNet | 35.3150 | 0.9310 | 0.0145 | 0.9734 | 0.9900 | 0.0003 | 0.0288 | 0.0781 | 0.8950 |
| | FusionNet | 25.6248 | 0.8052 | 0.0178 | 3.0744 | 0.9286 | 0.0034 | 0.0322 | 0.1258 | 0.8462 |
| | GPPNN | 29.9320 | 0.8782 | 0.0378 | 1.8414 | 0.9781 | 0.0011 | 0.0281 | 0.0790 | 0.8949 |
| | SRPPNN | 33.8618 | 0.9152 | 0.0147 | 1.1585 | 0.9862 | 0.0004 | 0.0304 | 0.0774 | 0.8943 |
| | PGCU | 32.2866 | 0.8931 | 0.0196 | 1.3951 | 0.9831 | 0.0007 | 0.0299 | 0.0814 | 0.8908 |
| | CMFNet | 36.6353 | 0.9391 | 0.0110 | 0.8417 | 0.9918 | 0.0002 | 0.0294 | 0.0776 | 0.8949 |
| LC8 | PNN | 25.8213 | 0.7790 | 0.1062 | 4.7706 | 0.9082 | 0.0028 | 0.0900 | 0.1370 | 0.7861 |
| | PanNet | 27.5038 | 0.8588 | 0.0950 | 3.7852 | 0.9407 | 0.0019 | 0.0623 | 0.1099 | 0.8349 |
| | MSDCNN | 26.8183 | 0.8381 | 0.1015 | 4.1062 | 0.9326 | 0.0022 | 0.0781 | 0.1283 | 0.8040 |
| | TFNet | 29.3548 | 0.8927 | 0.0808 | 2.8930 | 0.9629 | 0.0012 | 0.0539 | 0.1162 | 0.8363 |
| | FusionNet | 23.4355 | 0.7377 | 0.1122 | 6.7343 | 0.8310 | 0.0049 | 0.0807 | 0.0734 | 0.8521 |
| | GPPNN | 26.5420 | 0.8324 | 0.1107 | 4.1318 | 0.9287 | 0.0023 | 0.1294 | 0.1599 | 0.7322 |
| | SRPPNN | 28.0687 | 0.8622 | 0.0914 | 3.3472 | 0.9502 | 0.0016 | 0.0557 | 0.1150 | 0.8359 |
| | PGCU | 27.3723 | 0.8407 | 0.0983 | 3.6750 | 0.9418 | 0.0019 | 0.1090 | 0.1512 | 0.7576 |
| | CMFNet | 30.0631 | 0.9055 | 0.0719 | 2.6224 | 0.9685 | 0.0010 | 0.0533 | 0.1201 | 0.8332 |

| Satellite | Model | Reduced Resolution | | | | | | Full Resolution | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | | PSNR ↑ | SSIM ↑ | SAM ↓ | ERGAS ↓ | SCC ↑ | MSE ↓ | D_λ ↓ | D_s ↓ | QNR ↑ |
| QB | PNN | 31.3753 | 0.8939 | 0.0713 | 2.9642 | 0.9628 | 0.0008 | 0.0539 | 0.1051 | 0.8488 |
| | PanNet | 31.6202 | 0.9050 | 0.0679 | 2.9175 | 0.9651 | 0.0008 | 0.0551 | 0.1038 | 0.8485 |
| | MSDCNN | 30.9598 | 0.8935 | 0.0725 | 3.1480 | 0.9592 | 0.0009 | 0.0500 | 0.0989 | 0.8572 |
| | TFNet | 35.3911 | 0.9457 | 0.0592 | 1.8994 | 0.9851 | 0.0003 | 0.0550 | 0.1134 | 0.8397 |
| | FusionNet | 25.4156 | 0.7978 | 0.0830 | 6.1690 | 0.8616 | 0.0032 | 0.0484 | 0.1180 | 0.8394 |
| | GPPNN | 30.3634 | 0.8783 | 0.0866 | 3.4097 | 0.9530 | 0.0011 | 0.0591 | 0.0997 | 0.8479 |
| | SRPPNN | 33.9285 | 0.9309 | 0.0631 | 2.2163 | 0.9790 | 0.0005 | 0.0581 | 0.1171 | 0.8340 |
| | PGCU | 32.3178 | 0.8940 | 0.0726 | 2.7943 | 0.9643 | 0.0007 | 0.0665 | 0.1298 | 0.8144 |
| | CMFNet | 37.4593 | 0.9581 | 0.0506 | 1.4965 | 0.9897 | 0.0002 | 0.0537 | 0.1175 | 0.8367 |
| IN | PNN | 22.3923 | 0.5686 | 0.1005 | 6.3841 | 0.8280 | 0.0069 | 0.0575 | 0.0991 | 0.8497 |
| | PanNet | 23.0846 | 0.6518 | 0.1001 | 5.9124 | 0.8482 | 0.0059 | 0.0654 | 0.0952 | 0.8462 |
| | MSDCNN | 22.5448 | 0.6052 | 0.1019 | 6.2599 | 0.8381 | 0.0066 | 0.0714 | 0.1117 | 0.8257 |
| | TFNet | 24.1673 | 0.6913 | 0.0912 | 5.2883 | 0.8674 | 0.0049 | 0.0682 | 0.1021 | 0.8370 |
| | FusionNet | 18.6515 | 0.4653 | 0.1092 | 9.8336 | 0.6865 | 0.0152 | 0.0669 | 0.1707 | 0.7762 |
| | GPPNN | 22.5994 | 0.6158 | 0.1106 | 6.2129 | 0.8400 | 0.0065 | 0.0714 | 0.1157 | 0.8216 |
| | SRPPNN | 23.4979 | 0.6485 | 0.0958 | 5.6708 | 0.8543 | 0.0055 | 0.0628 | 0.1049 | 0.8393 |
| | PGCU | 23.1044 | 0.6274 | 0.1011 | 5.9427 | 0.8465 | 0.0060 | 0.0757 | 0.1196 | 0.8147 |
| | CMFNet | 25.1965 | 0.7549 | 0.0836 | 4.7192 | 0.8960 | 0.0039 | 0.0723 | 0.1219 | 0.8152 |
| WV2 | PNN | 29.5103 | 0.8598 | 0.0942 | 4.3577 | 0.9472 | 0.0013 | 0.0919 | 0.0905 | 0.8271 |
| | PanNet | 30.1567 | 0.8792 | 0.0886 | 4.1544 | 0.9545 | 0.0011 | 0.0803 | 0.0832 | 0.8580 |
| | MSDCNN | 29.0844 | 0.8510 | 0.0971 | 4.6167 | 0.9428 | 0.0014 | 0.0957 | 0.0894 | 0.8246 |
| | TFNet | 33.3759 | 0.9290 | 0.0676 | 2.8346 | 0.9783 | 0.0005 | 0.0812 | 0.0899 | 0.8372 |
| | FusionNet | 25.1853 | 0.7841 | 0.1091 | 7.8640 | 0.8693 | 0.0038 | 0.0948 | 0.1101 | 0.8063 |
| | GPPNN | 28.8612 | 0.8377 | 0.1006 | 4.7538 | 0.9363 | 0.0015 | 0.1104 | 0.0947 | 0.8068 |
| | SRPPNN | 31.4856 | 0.9023 | 0.0804 | 3.4571 | 0.9662 | 0.0008 | 0.0901 | 0.0924 | 0.8272 |
| | PGCU | 30.1465 | 0.8659 | 0.0946 | 4.0744 | 0.9553 | 0.0011 | 0.0994 | 0.1047 | 0.8076 |
| | CMFNet | 34.8766 | 0.9424 | 0.0577 | 2.3661 | 0.9839 | 0.0004 | 0.0785 | 0.0874 | 0.8420 |
| WV3 | PNN | 29.8229 | 0.7151 | 0.0933 | 8.7425 | 0.6751 | 0.0049 | 0.1007 | 0.3563 | 0.5778 |
| | PanNet | 31.5740 | 0.8472 | 0.0893 | 6.5003 | 0.7651 | 0.0026 | 0.1156 | 0.2724 | 0.6416 |
| | MSDCNN | 31.1376 | 0.8219 | 0.0891 | 7.0228 | 0.7535 | 0.0030 | 0.0981 | 0.2707 | 0.6563 |
| | TFNet | 33.0056 | 0.9052 | 0.0821 | 5.1618 | 0.8213 | 0.0016 | 0.1213 | 0.3206 | 0.5934 |
| | FusionNet | 27.9152 | 0.6996 | 0.1035 | 10.1020 | 0.6110 | 0.0055 | 0.1224 | 0.1961 | 0.7041 |
| | GPPNN | 30.8794 | 0.8239 | 0.1003 | 7.1749 | 0.7639 | 0.0031 | 0.1351 | 0.2322 | 0.6631 |
| | SRPPNN | 31.8867 | 0.8586 | 0.0929 | 6.2988 | 0.7775 | 0.0025 | 0.1115 | 0.2980 | 0.6221 |
| | PGCU | 30.5010 | 0.7917 | 0.1037 | 7.2780 | 0.7306 | 0.0035 | 0.1114 | 0.2396 | 0.7565 |
| | CMFNet | 35.2994 | 0.9350 | 0.0682 | 4.0791 | 0.8546 | 0.0010 | 0.0951 | 0.1307 | 0.7895 |
| WV4 | PNN | 25.7747 | 0.7873 | 0.1208 | 5.3634 | 0.9525 | 0.0032 | 0.0608 | 0.0887 | 0.8581 |
| | PanNet | 26.2031 | 0.8142 | 0.1178 | 5.1480 | 0.9579 | 0.0029 | 0.0517 | 0.0737 | 0.8622 |
| | MSDCNN | 25.8597 | 0.7938 | 0.1123 | 5.2353 | 0.9557 | 0.0031 | 0.0640 | 0.0897 | 0.8538 |
| | TFNet | 28.3349 | 0.8539 | 0.0992 | 3.9341 | 0.9743 | 0.0018 | 0.0547 | 0.0807 | 0.8712 |
| | FusionNet | 17.1025 | 0.5800 | 0.1399 | 14.1302 | 0.7322 | 0.0281 | 0.0576 | 0.2316 | 0.7266 |
| | GPPNN | 25.7128 | 0.8052 | 0.1216 | 5.3345 | 0.9537 | 0.0033 | 0.0588 | 0.0899 | 0.8584 |
| | SRPPNN | 27.5470 | 0.8352 | 0.1109 | 4.3418 | 0.9682 | 0.0021 | 0.0570 | 0.0895 | 0.8606 |
| | PGCU | 26.4050 | 0.8126 | 0.1181 | 4.9106 | 0.9606 | 0.0028 | 0.0571 | 0.1046 | 0.8470 |
| | CMFNet | 29.7377 | 0.8751 | 0.0894 | 3.4016 | 0.9796 | 0.0013 | 0.0566 | 0.0779 | 0.8720 |

PSNR through MSE are reduced-resolution metrics; $D_\lambda$, $D_s$, and QNR are full-resolution metrics.

| Scene | Model | PSNR ↑ | SSIM ↑ | SAM ↓ | ERGAS ↓ | SCC ↑ | MSE ↓ | $D_\lambda$ ↓ | $D_s$ ↓ | QNR ↑ |
|---|---|---|---|---|---|---|---|---|---|---|
| Water | PNN | 37.3836 | 0.8954 | 0.0469 | 3.7171 | 0.8253 | 0.0012 | 0.0923 | 0.1158 | 0.8058 |
| | PanNet | 38.0401 | 0.9162 | 0.0436 | 3.1765 | 0.8388 | 0.0009 | 0.1150 | 0.1427 | 0.7625 |
| | MSDCNN | 36.7225 | 0.9035 | 0.0505 | 3.5042 | 0.8332 | 0.0011 | 0.0876 | 0.2030 | 0.7297 |
| | TFNet | 38.9414 | 0.9339 | 0.0463 | 2.6433 | 0.8790 | 0.0006 | 0.1052 | 0.1652 | 0.7494 |
| | FusionNet | 29.9043 | 0.8263 | 0.0694 | 7.9718 | 0.6324 | 0.0105 | 0.1338 | 0.2384 | 0.6554 |
| | GPPNN | 35.1459 | 0.9037 | 0.0665 | 3.9257 | 0.8344 | 0.0012 | 0.1153 | 0.1244 | 0.7780 |
| | SRPPNN | 38.5920 | 0.9237 | 0.0429 | 2.9622 | 0.8578 | 0.0007 | 0.0905 | 0.1540 | 0.7718 |
| | PGCU | 36.8776 | 0.9068 | 0.0550 | 3.3634 | 0.8252 | 0.0010 | 0.1014 | 0.1528 | 0.7644 |
| | CMFNet | 41.7801 | 0.9453 | 0.0363 | 2.1098 | 0.8990 | 0.0004 | 0.0932 | 0.1274 | 0.7948 |
| Urban | PNN | 25.8327 | 0.7519 | 0.0963 | 5.9530 | 0.9059 | 0.0037 | 0.0700 | 0.1106 | 0.8285 |
| | PanNet | 27.3584 | 0.8424 | 0.0927 | 4.8631 | 0.9434 | 0.0024 | 0.0666 | 0.1275 | 0.8156 |
| | MSDCNN | 26.4527 | 0.7995 | 0.0936 | 5.3637 | 0.9292 | 0.0029 | 0.0683 | 0.1240 | 0.8171 |
| | TFNet | 30.4813 | 0.9025 | 0.0737 | 3.4755 | 0.9707 | 0.0013 | 0.0687 | 0.1440 | 0.7986 |
| | FusionNet | 21.7787 | 0.6560 | 0.1073 | 9.0889 | 0.8153 | 0.0085 | 0.0671 | 0.1467 | 0.7975 |
| | GPPNN | 26.3670 | 0.8010 | 0.1026 | 5.3858 | 0.9280 | 0.0030 | 0.0796 | 0.1193 | 0.8118 |
| | SRPPNN | 28.7109 | 0.8651 | 0.0873 | 4.2278 | 0.9544 | 0.0019 | 0.0724 | 0.1454 | 0.7944 |
| | PGCU | 27.3457 | 0.8043 | 0.0963 | 4.9847 | 0.9354 | 0.0026 | 0.0769 | 0.1226 | 0.8115 |
| | CMFNet | 32.4034 | 0.9299 | 0.0632 | 2.8182 | 0.9807 | 0.0009 | 0.0653 | 0.1029 | 0.8393 |
| Ice/snow | PNN | 27.3929 | 0.8155 | 0.0531 | 3.2067 | 0.9579 | 0.0021 | 0.0579 | 0.0816 | 0.8661 |
| | PanNet | 28.8623 | 0.8734 | 0.0474 | 2.6352 | 0.9713 | 0.0015 | 0.0431 | 0.0768 | 0.8835 |
| | MSDCNN | 28.3687 | 0.8636 | 0.0594 | 2.8286 | 0.9693 | 0.0017 | 0.0492 | 0.0848 | 0.8706 |
| | TFNet | 32.9410 | 0.9186 | 0.0399 | 1.7121 | 0.9864 | 0.0006 | 0.0411 | 0.0788 | 0.8834 |
| | FusionNet | 23.6423 | 0.7625 | 0.0569 | 4.8699 | 0.9103 | 0.0049 | 0.0473 | 0.1324 | 0.8264 |
| | GPPNN | 28.2210 | 0.8553 | 0.0663 | 2.8315 | 0.9682 | 0.0017 | 0.0624 | 0.0883 | 0.8570 |
| | SRPPNN | 31.3481 | 0.8949 | 0.0452 | 2.0456 | 0.9803 | 0.0009 | 0.0428 | 0.0762 | 0.8843 |
| | PGCU | 29.9009 | 0.8677 | 0.0515 | 2.3806 | 0.9750 | 0.0012 | 0.0598 | 0.0885 | 0.8588 |
| | CMFNet | 34.0810 | 0.9290 | 0.0342 | 1.5132 | 0.9889 | 0.0005 | 0.0414 | 0.0821 | 0.8800 |
| Crops | PNN | 29.2004 | 0.8292 | 0.0752 | 4.0294 | 0.9393 | 0.0015 | 0.0745 | 0.1016 | 0.8330 |
| | PanNet | 30.3834 | 0.8786 | 0.0701 | 3.5639 | 0.9574 | 0.0011 | 0.0698 | 0.1092 | 0.8295 |
| | MSDCNN | 29.1161 | 0.8367 | 0.0772 | 4.0700 | 0.9403 | 0.0014 | 0.0736 | 0.0905 | 0.8435 |
| | TFNet | 33.7983 | 0.9267 | 0.0558 | 2.4056 | 0.9803 | 0.0005 | 0.0722 | 0.1197 | 0.8179 |
| | FusionNet | 24.6057 | 0.7476 | 0.0892 | 7.1907 | 0.8453 | 0.0044 | 0.0789 | 0.0948 | 0.8347 |
| | GPPNN | 28.7492 | 0.8248 | 0.0863 | 4.2515 | 0.9343 | 0.0016 | 0.0868 | 0.0907 | 0.8314 |
| | SRPPNN | 31.9011 | 0.8999 | 0.0643 | 2.9410 | 0.9687 | 0.0008 | 0.0755 | 0.1209 | 0.8139 |
| | PGCU | 30.2371 | 0.8439 | 0.0758 | 3.6139 | 0.9508 | 0.0012 | 0.0832 | 0.1105 | 0.8170 |
| | CMFNet | 35.7272 | 0.9449 | 0.0464 | 1.9383 | 0.9866 | 0.0003 | 0.0692 | 0.1203 | 0.8199 |
| Vegetation | PNN | 26.8214 | 0.7546 | 0.0866 | 5.0817 | 0.9021 | 0.0031 | 0.0617 | 0.1043 | 0.8415 |
| | PanNet | 28.3724 | 0.8320 | 0.0799 | 4.1249 | 0.9344 | 0.0021 | 0.0575 | 0.1055 | 0.8435 |
| | MSDCNN | 27.7013 | 0.8066 | 0.0848 | 4.5168 | 0.9251 | 0.0025 | 0.0601 | 0.1118 | 0.8354 |
| | TFNet | 30.8699 | 0.8802 | 0.0671 | 3.1480 | 0.9582 | 0.0014 | 0.0598 | 0.1134 | 0.8339 |
| | FusionNet | 23.3024 | 0.6834 | 0.1002 | 7.8640 | 0.8062 | 0.0067 | 0.0626 | 0.1165 | 0.8283 |
| | GPPNN | 27.6335 | 0.8078 | 0.0900 | 4.4876 | 0.9247 | 0.0025 | 0.0764 | 0.1188 | 0.8153 |
| | SRPPNN | 29.2823 | 0.8451 | 0.0758 | 3.6886 | 0.9434 | 0.0019 | 0.0587 | 0.1104 | 0.8381 |
| | PGCU | 28.1940 | 0.8030 | 0.0856 | 4.2916 | 0.9290 | 0.0023 | 0.0735 | 0.1089 | 0.8272 |
| | CMFNet | 32.4267 | 0.9045 | 0.0578 | 2.6551 | 0.9683 | 0.0011 | 0.0568 | 0.1173 | 0.8331 |
| Barren | PNN | 27.8755 | 0.7635 | 0.0623 | 3.4420 | 0.9218 | 0.0025 | 0.0601 | 0.0896 | 0.8566 |
| | PanNet | 28.9247 | 0.8170 | 0.0577 | 3.0723 | 0.9361 | 0.0020 | 0.0570 | 0.0834 | 0.8650 |
| | MSDCNN | 28.2446 | 0.7988 | 0.0670 | 3.3001 | 0.9305 | 0.0023 | 0.0600 | 0.0899 | 0.8562 |
| | TFNet | 31.7399 | 0.8595 | 0.0510 | 2.4164 | 0.9532 | 0.0014 | 0.0569 | 0.0870 | 0.8616 |
| | FusionNet | 23.7621 | 0.7005 | 0.0698 | 5.5669 | 0.8335 | 0.0057 | 0.0580 | 0.1411 | 0.8097 |
| | GPPNN | 28.0283 | 0.7931 | 0.0741 | 3.3838 | 0.9284 | 0.0023 | 0.0669 | 0.0935 | 0.8471 |
| | SRPPNN | 30.4227 | 0.8313 | 0.0554 | 2.7118 | 0.9441 | 0.0017 | 0.0566 | 0.0858 | 0.8631 |
| | PGCU | 29.2601 | 0.8011 | 0.0612 | 3.0536 | 0.9349 | 0.0020 | 0.0683 | 0.0957 | 0.8443 |
| | CMFNet | 32.9757 | 0.8848 | 0.0447 | 2.1215 | 0.9632 | 0.0011 | 0.0581 | 0.0955 | 0.8526 |
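The last three columns of the per-scene table are related. QNR is commonly defined as $(1 - D_\lambda)^{\alpha}(1 - D_s)^{\beta}$, where $D_\lambda$ and $D_s$ are the spectral and spatial distortion indices, taken here to be the two columns preceding QNR. The following is a minimal sketch of that relation, assuming the common choice $\alpha = \beta = 1$, which this section does not state. The helper name `qnr` is hypothetical. Since the table reports dataset averages, the product of the averaged distortions only approximates the averaged QNR.

```python
def qnr(d_lambda: float, d_s: float, alpha: float = 1.0, beta: float = 1.0) -> float:
    """Combine spectral (d_lambda) and spatial (d_s) distortion into QNR.

    alpha and beta default to 1, an assumed (common) setting.
    """
    return (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta


# Water / PNN row: averaged distortions 0.0923 and 0.1158, reported QNR 0.8058.
approx = qnr(0.0923, 0.1158)
print(f"recomputed {approx:.4f} vs reported 0.8058")
```

The recomputed value (about 0.803) is close to, but not identical with, the reported 0.8058. This is consistent with QNR having been computed per image and then averaged.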

| Cascading Level | PSNR ↑ | SSIM ↑ | SAM ↓ | ERGAS ↓ | SCC ↑ | MSE ↓ |
|---|---|---|---|---|---|---|
| 1 | 33.0352 | 0.8937 | 0.0579 | 2.7846 | 0.9494 | 10.9749 |
| 2 | 34.3852 | 0.9139 | 0.0516 | 2.4266 | 0.9595 | 9.0428 |
| 3 | 34.4921 | 0.9153 | 0.0511 | 2.3984 | 0.9601 | 8.8862 |

| Cascading Injection | PSNR ↑ | SSIM ↑ | SAM ↓ | ERGAS ↓ | SCC ↑ | MSE ↓ |
|---|---|---|---|---|---|---|
| × | 34.0757 | 0.9102 | 0.0528 | 2.4926 | 0.9568 | 9.5769 |
| √ | 34.4921 | 0.9153 | 0.0511 | 2.3984 | 0.9601 | 8.8862 |

| Block | PSNR ↑ | SSIM ↑ | SAM ↓ | ERGAS ↓ | SCC ↑ | MSE ↓ |
|---|---|---|---|---|---|---|
| ResNet [42] | 33.5597 | 0.9025 | 0.0564 | 2.6369 | 0.9526 | 10.2362 |
| ConvNeXt [52] | 33.8639 | 0.9062 | 0.0546 | 2.5677 | 0.9558 | 9.9013 |
| NAFNet [51] | 34.3511 | 0.9147 | 0.0522 | 2.4590 | 0.9580 | 8.9712 |
| DiffCR [50] | 34.4921 | 0.9153 | 0.0511 | 2.3984 | 0.9601 | 8.8862 |

| Task | PSNR ↑ | SSIM ↑ | SAM ↓ | ERGAS ↓ | SCC ↑ | MSE ↓ |
|---|---|---|---|---|---|---|
| Super-resolution (w/o PAN) | 29.6283 | 0.7656 | 0.3256 | 25.9604 | 0.8205 | 25.3851 |
| Colorization (w/o MS) | 25.1988 | 0.7811 | 0.1686 | 7.9895 | 0.7782 | 50.1688 |
| Pansharpening (MS+PAN) | 34.3852 | 0.9139 | 0.0579 | 2.7846 | 0.9494 | 9.0428 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, S.; Zou, X.; Li, K.; Xing, J.; Cao, T.; Tao, P. Towards Robust Pansharpening: A Large-Scale High-Resolution Multi-Scene Dataset and Novel Approach. Remote Sens. 2024, 16, 2899. https://doi.org/10.3390/rs16162899