A Deep Learning Classification Scheme for PolSAR Image Based on Polarimetric Features

Abstract

1. Introduction

2. Method

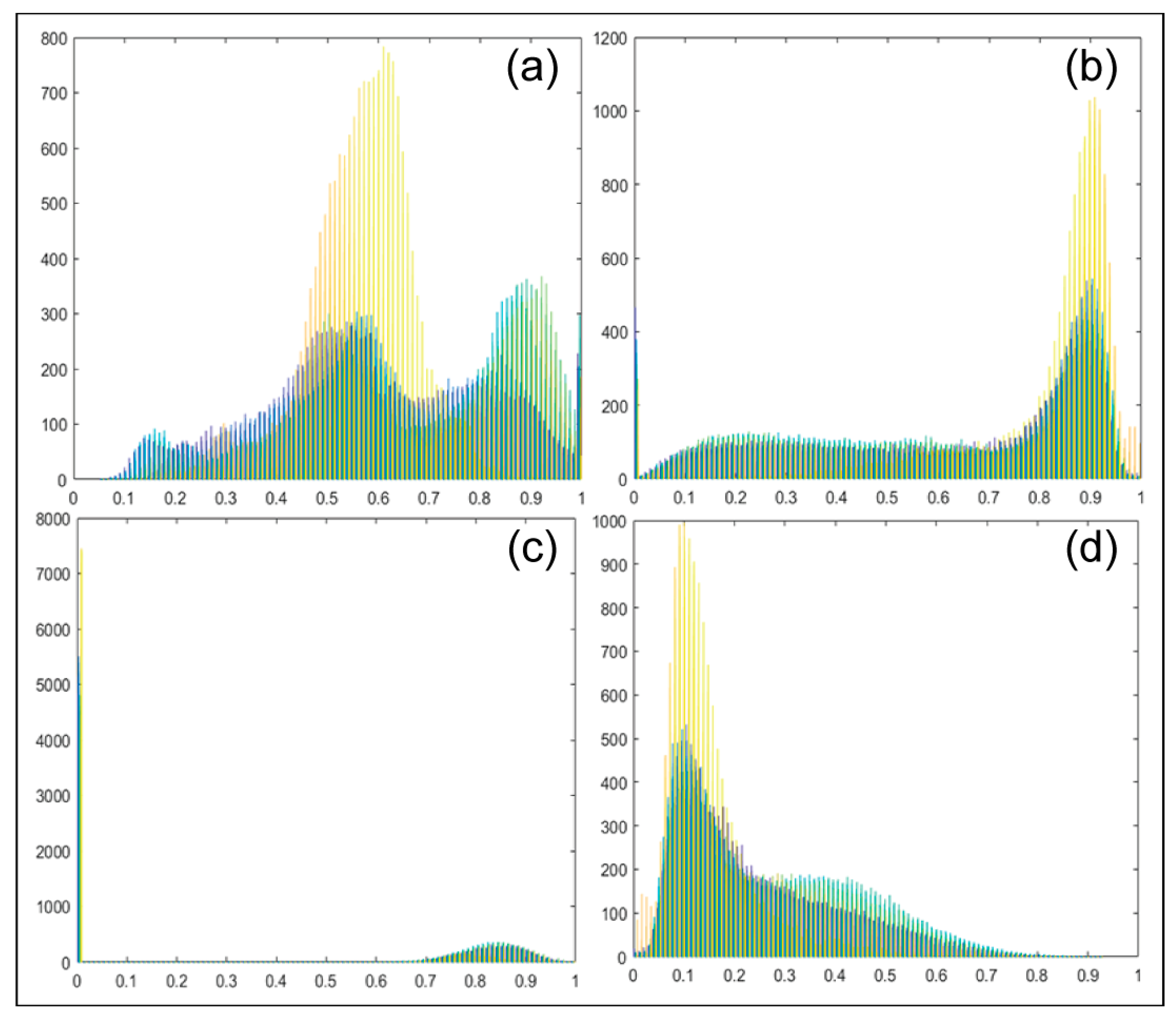

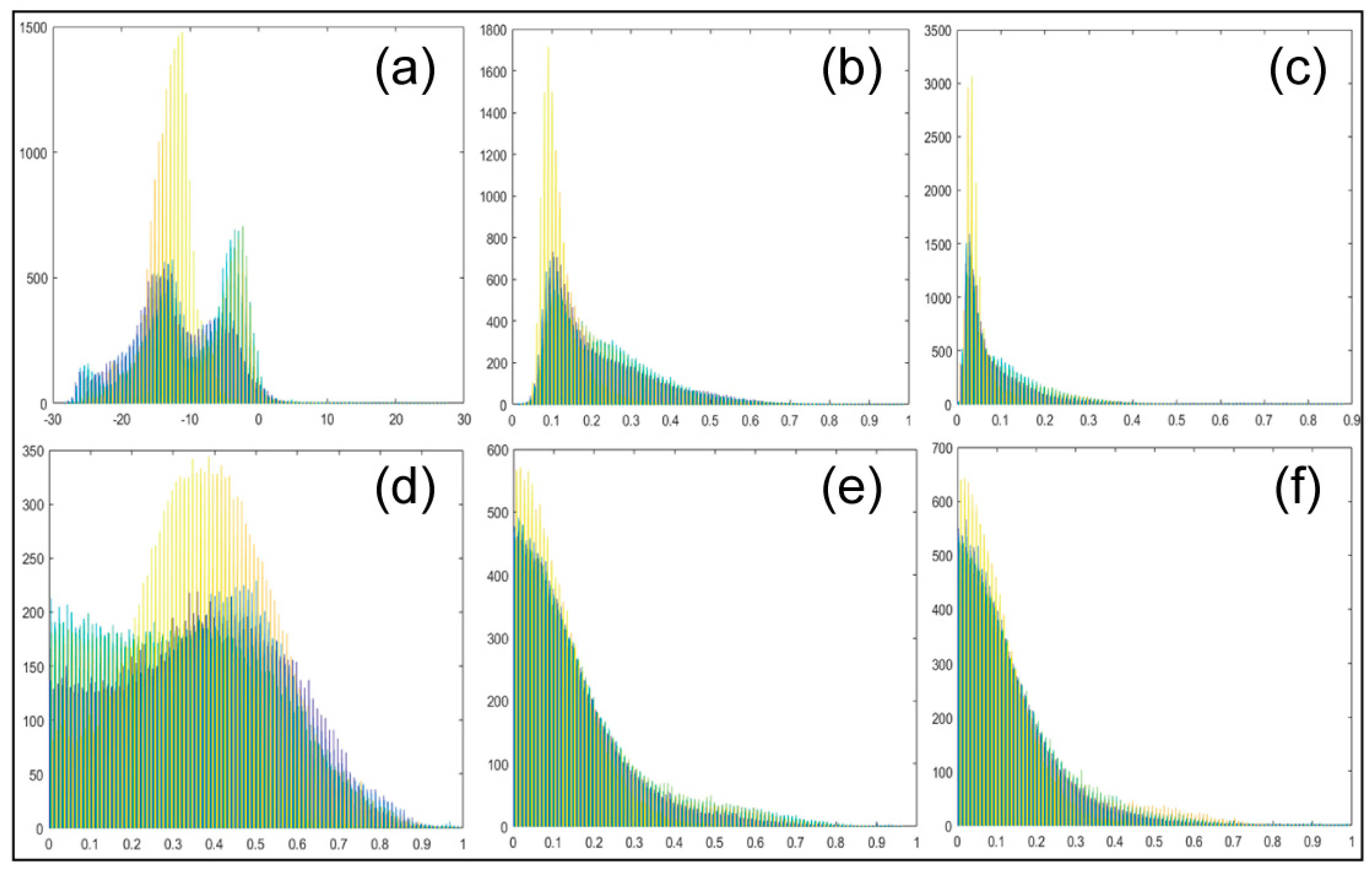

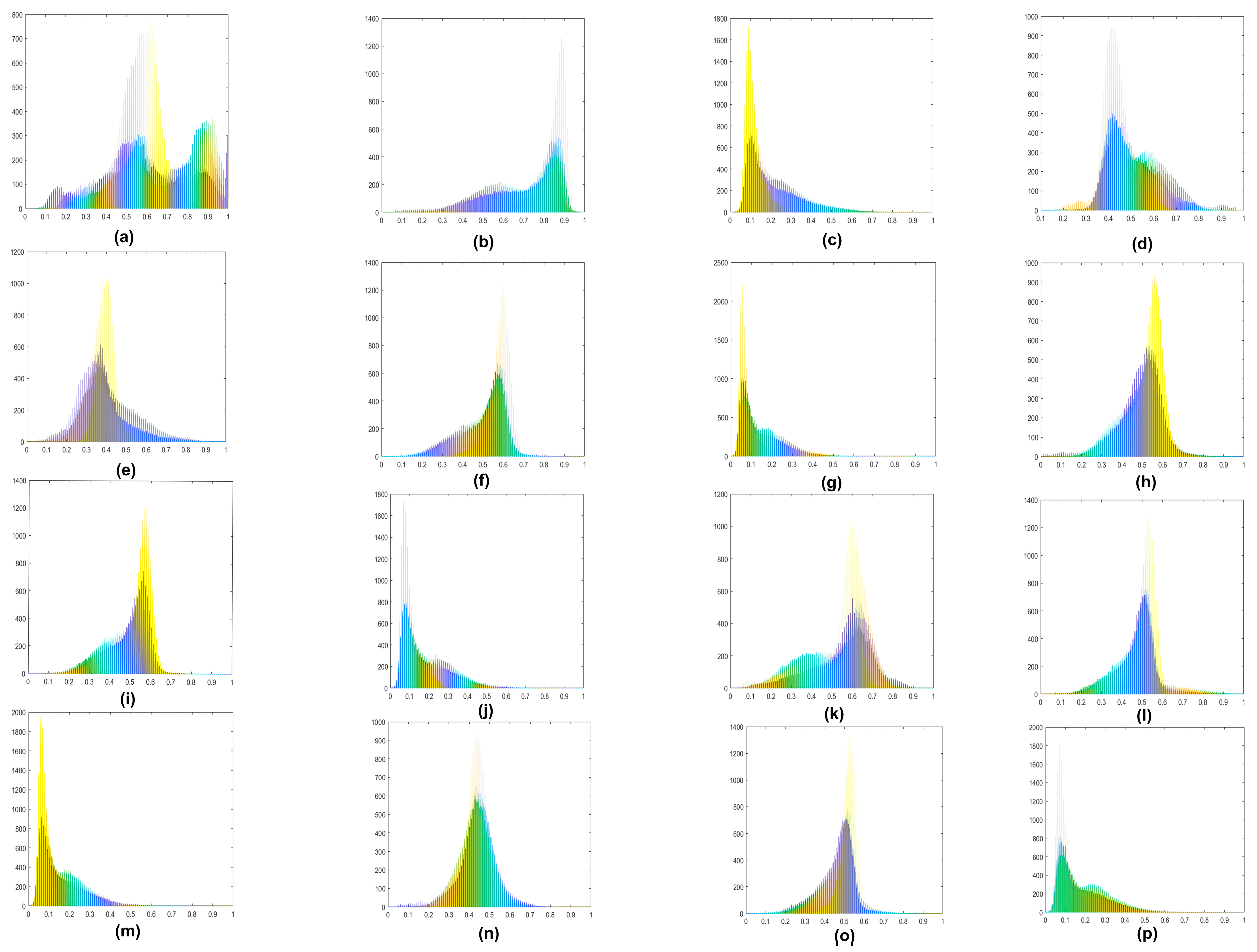

2.1. Polarization Decomposition Method Based on Polarimetric Scattering Features

2.2. Vertical, Horizontal, Left-Handed Circular, Right-Handed Circular Polarization Methods

2.3. Input Feature Normalization and Design of Three Schemes

2.4. Experiment and Pre-Processing

2.5. Classification Process of Polarization Scattering Characteristics Using Deep Learning

| Algorithm 1: A deep learning classification scheme for PolSAR image based on polarimetric features |
|---|
| Input: GF-3 PolSAR images. Output: predicted labels Ytest = {y1, y2, …, ym}. |
| 1: Pre-process the GF-3 PolSAR images. |
| 2: Apply polarimetric decomposition. |
| 3: Extract the polarimetric features. |
| 4: Normalize the features. |
| 5: Build the three input schemes proposed on the basis of previous studies and scattering mechanisms. |
| 6: Randomly select a certain proportion of the labeled samples as training samples (Patch_Xtrain = {Patch_x1, Patch_x2, …, Patch_xn}); the remaining labeled samples are used as validation samples. |
| 7: Input Patch_xi into the CNN. for i < N do: train once; if the model fits well, save the model and break; else if it over-fits or under-fits, adjust the parameters (e.g., learning rate, bias). end for |
| 8: Predict labels: Y = Softmax(Patch_Xtrain). |
| 9: Input the test images to the model and classify the patches of all pixels. |
| 10: Evaluate the method by computing OA, AA, and the Kappa coefficient. |
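Steps 4, 8 and 9 of Algorithm 1 can be sketched in NumPy as follows. This is a minimal sketch under our own assumptions, not the authors' code: per-channel min-max scaling stands in for the unspecified normalization, the patch size of 15 and the mirror padding at image borders are hypothetical choices, and random numbers stand in for both the polarimetric feature cube and the CNN outputs.

```python
import numpy as np

def minmax_normalize(features: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Rescale each channel of an (H, W, C) feature cube to [0, 1] independently."""
    lo = features.min(axis=(0, 1), keepdims=True)
    hi = features.max(axis=(0, 1), keepdims=True)
    return (features - lo) / (hi - lo + eps)

def extract_patches(features: np.ndarray, coords, size: int) -> np.ndarray:
    """Cut a size x size x C patch centred on each (row, col); borders are mirror-padded."""
    if size % 2 == 0:
        raise ValueError("patch size must be odd so that each patch has a centre pixel")
    half = size // 2
    padded = np.pad(features, ((half, half), (half, half), (0, 0)), mode="reflect")
    # Pixel (r, c) of the original cube sits at (r + half, c + half) in the padded cube,
    # so the window starting at (r, c) is centred on it.
    return np.stack([padded[r:r + size, c:c + size, :] for r, c in coords])

def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
cube = minmax_normalize(50.0 * rng.random((64, 64, 16)))  # stand-in for a 16-channel input
coords = [(0, 0), (10, 20), (63, 63)]
patches = extract_patches(cube, coords, size=15)           # hypothetical patch size
print(patches.shape)  # (3, 15, 15, 16)
logits = rng.normal(size=(len(coords), 7))                 # stand-in for CNN outputs, 7 classes
probs = softmax(logits)
pred_labels = probs.argmax(axis=-1)                        # one class index per patch
```

Scaling each channel separately is one common choice because it keeps a wide-range power channel from dominating channels that are already bounded, such as coherence magnitudes.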

3. Experimental and Result Analysis

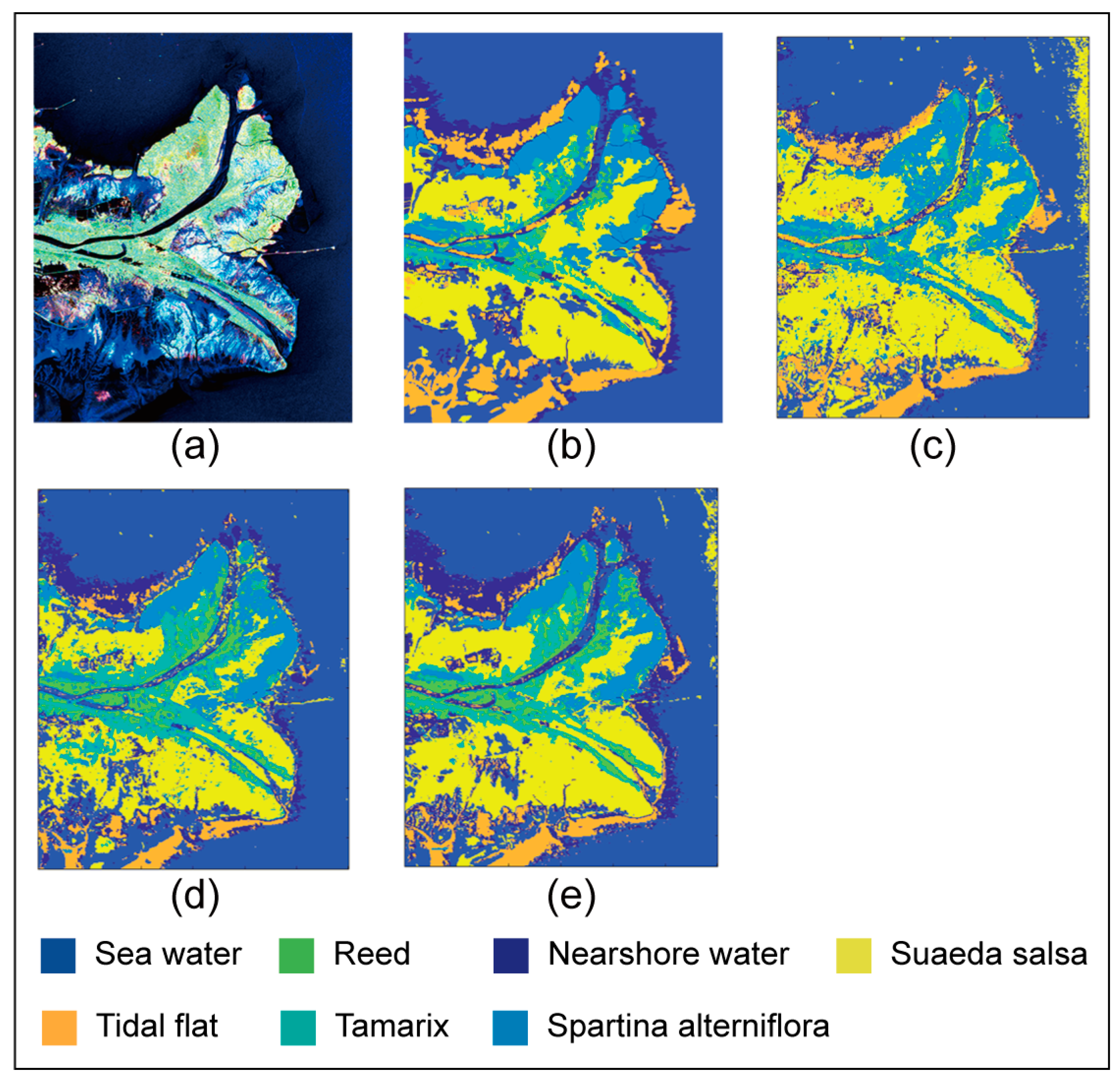

3.1. Study Area and Dataset

3.2. Classification Results of the Yellow River Delta on AlexNet

3.3. Classification Results of the Yellow River Delta on VGG16

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Scheme | Number of Features | Polarization Features |
|---|---|---|
| 1 | 4 | P0, PS, PD, PV |
| 2 | 6 | NonP0, T22, T33, coeT12, coeT13, coeT23 |
| 3 | 16 | P0, T12, T13, T23, H(T12), H(T13), H(T23), L(T12), L(T13), L(T23), V(T12), V(T13), V(T23), R(T12), R(T13), R(T23) |
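The channel lists of the three schemes can be turned into network inputs by stacking per-pixel feature maps in a fixed order. The NumPy sketch below is illustrative only: `SCHEMES`, `power_and_coherence` and `stack_scheme` are hypothetical names, P0 is assumed to be the total power (the trace of the coherency matrix T), and coeTij is assumed to be the normalized correlation magnitude |Tij| / sqrt(Tii Tjj); the paper's exact feature definitions may differ. Scheme 3's second to fourth channels are read as T12, T13 and T23, following the pattern of the H, L, V and R entries.

```python
import numpy as np

# Channel order of the three input schemes, following the scheme table.
SCHEMES = {
    1: ["P0", "PS", "PD", "PV"],
    2: ["NonP0", "T22", "T33", "coeT12", "coeT13", "coeT23"],
    3: ["P0", "T12", "T13", "T23"]
       + [f"{b}({t})" for b in "HLVR" for t in ("T12", "T13", "T23")],
}

def power_and_coherence(T: np.ndarray) -> dict:
    """T: (H, W, 3, 3) complex coherency matrices.
    Returns P0 = trace(T) and coeTij = |Tij| / sqrt(Tii * Tjj) (assumed definitions)."""
    diag = np.real(np.diagonal(T, axis1=-2, axis2=-1))  # (H, W, 3): T11, T22, T33
    feats = {"P0": diag.sum(axis=-1)}
    for i, j in ((0, 1), (0, 2), (1, 2)):
        denom = np.sqrt(diag[..., i] * diag[..., j]) + 1e-12  # guard against zero power
        feats[f"coeT{i + 1}{j + 1}"] = np.abs(T[..., i, j]) / denom
    return feats

def stack_scheme(feature_maps: dict, scheme: int) -> np.ndarray:
    """Stack the (H, W) maps named by a scheme into an (H, W, C) network input."""
    names = SCHEMES[scheme]
    missing = [n for n in names if n not in feature_maps]
    if missing:
        raise KeyError(f"missing feature maps: {missing}")
    return np.stack([feature_maps[n] for n in names], axis=-1)

# Usage on a made-up single-pixel coherency matrix.
T = np.array([[2.0, 0.5, 0.0], [0.5, 1.0, 0.0], [0.0, 0.0, 1.0]], dtype=complex).reshape(1, 1, 3, 3)
feats = power_and_coherence(T)
print(feats["P0"][0, 0], round(float(feats["coeT12"][0, 0]), 4))  # 4.0 0.3536
maps = {name: np.zeros((8, 8)) for names in SCHEMES.values() for name in names}
print([stack_scheme(maps, s).shape for s in (1, 2, 3)])  # [(8, 8, 4), (8, 8, 6), (8, 8, 16)]
```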

| Image / Class (number of labeled samples) | Nearshore Water | Seawater | Spartina Alterniflora | Tamarix | Reed | Tidal Flat | Suaeda Salsa |
|---|---|---|---|---|---|---|---|
| 20210914_1 | 500 | 400 | 1000 | 500 | 500 | 500 | 500 |
| 20210914_2 | 500 | 200 | 0 | 0 | 0 | 500 | 0 |
| 20211013 | 0 | 400 | 0 | 500 | 500 | 0 | 500 |
| Total | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 |
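The per-image counts in the sample table can be checked, and step 6 of Algorithm 1 sketched, with a few lines of NumPy. The split below is stratified (the same proportion is drawn from every class), which is our assumption; the 0.7 training ratio and the `stratified_split` helper are hypothetical, since Algorithm 1 only speaks of "a certain proportion".

```python
import numpy as np

# Labeled samples per class and image, copied from the sample table.
SAMPLES = {
    "20210914_1": [500, 400, 1000, 500, 500, 500, 500],
    "20210914_2": [500, 200, 0, 0, 0, 500, 0],
    "20211013":   [0, 400, 0, 500, 500, 0, 500],
}
CLASSES = ["Nearshore water", "Seawater", "Spartina alterniflora",
           "Tamarix", "Reed", "Tidal flat", "Suaeda salsa"]

totals = np.sum(list(SAMPLES.values()), axis=0)
print(dict(zip(CLASSES, totals.tolist())))  # every class totals 1000

def stratified_split(labels, train_ratio, seed=0):
    """Shuffle each class separately and send the first train_ratio of it to training."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train, val = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        n_train = int(round(train_ratio * idx.size))
        train.append(idx[:n_train])
        val.append(idx[n_train:])
    return np.concatenate(train), np.concatenate(val)

labels = np.repeat(np.arange(len(CLASSES)), totals)  # one class index per labeled sample
train_idx, val_idx = stratified_split(labels, train_ratio=0.7)  # hypothetical ratio
print(len(train_idx), len(val_idx))  # 4900 2100
```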

| Classification accuracy (%) / Input scheme | Scheme 1 | Scheme 2 | Scheme 3 |
|---|---|---|---|
| Nearshore water | 83.4 | 96.8 | 100 |
| Seawater | 98.7 | 96.9 | 99.6 |
| Spartina alterniflora | 87.0 | 96.8 | 93.3 |
| Tamarix | 40.1 | 100 | 100 |
| Reed | 50.4 | 94.5 | 68.5 |
| Tidal flat | 61.8 | 49.3 | 44.6 |
| Suaeda salsa | 98.2 | 50.8 | 96.8 |
| Overall accuracy, independent experiment 1 | 74.23 | 83.59 | 86.11 |
| Overall accuracy, independent experiment 2 | 71.36 | 81.41 | 81.53 |
| Overall accuracy, independent experiment 3 | 70.41 | 77.83 | 77.04 |
| Overall accuracy, independent experiment 4 | 68 | 73.66 | 73.73 |
| Overall accuracy, independent experiment 5 | 67.84 | 68.87 | 71.99 |
| Average overall accuracy | 70.368 | 77.072 | 78.08 |
| Kappa coefficient | 0.6993 | 0.8085 | 0.8380 |
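Step 10 of Algorithm 1 names three metrics. The minimal pure-Python sketch below computes them from a confusion matrix whose rows are reference classes and columns are predictions (an orientation we assume); the 2 x 2 matrix `toy` is made up for illustration. The second half is an arithmetic check, not a claim about the authors' procedure: when every reference class has the same number of test samples, the chance agreement p_e equals 1/K whatever the predictions are, OA equals the mean per-class accuracy, and Kappa reduces to (OA - 1/K) / (1 - 1/K). Applied to the per-class rows of the table above, this gives 74.23, 83.59 and 86.11 for OA and 0.6993, 0.8085 and 0.8380 for Kappa, matching the first-listed overall accuracies and the Kappa row. That is consistent with, but does not prove, those rows describing the first listed experiment on a class-balanced test set.

```python
def overall_accuracy(cm):
    """Fraction of all samples that lie on the diagonal of the confusion matrix."""
    total = sum(map(sum, cm))
    return sum(cm[k][k] for k in range(len(cm))) / total

def average_accuracy(cm):
    """Mean per-class accuracy; rows are reference classes, columns are predictions."""
    return sum(cm[k][k] / sum(cm[k]) for k in range(len(cm))) / len(cm)

def kappa(cm):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    n = len(cm)
    total = sum(map(sum, cm))
    rows = [sum(cm[i]) for i in range(n)]
    cols = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    p_e = sum(r * c for r, c in zip(rows, cols)) / total ** 2
    p_o = overall_accuracy(cm)
    return (p_o - p_e) / (1 - p_e)

toy = [[40, 10], [5, 45]]
print(round(overall_accuracy(toy), 4), round(average_accuracy(toy), 4), round(kappa(toy), 4))  # 0.85 0.85 0.7

# Equal class sizes give p_e = 1/K, so kappa = (OA - 1/K) / (1 - 1/K)
# and OA equals the mean per-class accuracy.
PER_CLASS = {  # per-class accuracies (%) copied from the table above
    "Scheme 1": [83.4, 98.7, 87.0, 40.1, 50.4, 61.8, 98.2],
    "Scheme 2": [96.8, 96.9, 96.8, 100.0, 94.5, 49.3, 50.8],
    "Scheme 3": [100.0, 99.6, 93.3, 100.0, 68.5, 44.6, 96.8],
}
implied = {}
for scheme, acc in PER_CLASS.items():
    k = len(acc)
    oa = sum(acc) / k / 100  # equals OA only if the classes are balanced
    implied[scheme] = (round(100 * oa, 2), round((oa - 1 / k) / (1 - 1 / k), 4))
print(implied)
```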

| Classification accuracy (%) / Input scheme | Scheme 1 | Scheme 2 | Scheme 3 |
|---|---|---|---|
| Nearshore water | 89.3 | 95.7 | 95.6 |
| Seawater | 99.4 | 97.7 | 99.7 |
| Spartina alterniflora | 87.6 | 96.6 | 95.9 |
| Tamarix | 40.2 | 98.5 | 100 |
| Reed | 26.1 | 93.8 | 44.7 |
| Tidal flat | 73.2 | 28.5 | 58.3 |
| Suaeda salsa | 100 | 66.2 | 94.1 |
| Overall accuracy, independent experiment 1 | 73.69 | 82.43 | 84.04 |
| Overall accuracy, independent experiment 2 | 72.8 | 82.21 | 83.57 |
| Overall accuracy, independent experiment 3 | 69.7 | 81.44 | 82.07 |
| Overall accuracy, independent experiment 4 | 68.66 | 79.44 | 81.54 |
| Overall accuracy, independent experiment 5 | 67.6 | 77.53 | 80.11 |
| Average overall accuracy | 70.49 | 80.61 | 82.266 |
| Kappa coefficient | 0.6930 | 0.7950 | 0.8138 |
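The average overall accuracy in the table above is the arithmetic mean of the five independent experiments. The short Python sketch below reproduces it and also reports the sample standard deviation and range, which the table does not list but which help judge whether the differences between schemes are larger than the run-to-run variation. `RUNS` is a hypothetical name holding values copied from the table.

```python
from statistics import fmean, stdev

# Overall accuracies (%) of the five independent experiments, copied from the table above.
RUNS = {
    "Scheme 1": [73.69, 72.8, 69.7, 68.66, 67.6],
    "Scheme 2": [82.43, 82.21, 81.44, 79.44, 77.53],
    "Scheme 3": [84.04, 83.57, 82.07, 81.54, 80.11],
}
summary = {}
for scheme, oa in RUNS.items():
    summary[scheme] = (fmean(oa), stdev(oa), max(oa) - min(oa))
    mean, sd, spread = summary[scheme]
    print(f"{scheme}: mean {mean:.3f}, sample std {sd:.2f}, range {spread:.2f}")
# The means print as 70.490, 80.610 and 82.266, matching the average row.
```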


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Cui, L.; Dong, Z.; An, W. A Deep Learning Classification Scheme for PolSAR Image Based on Polarimetric Features. Remote Sens. 2024, 16, 1676. https://doi.org/10.3390/rs16101676
