Segmentation of Sandplain Lupin Weeds from Morphologically Similar Narrow-Leafed Lupins in the Field

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Field and Data Collection

2.2. Image Data Processing

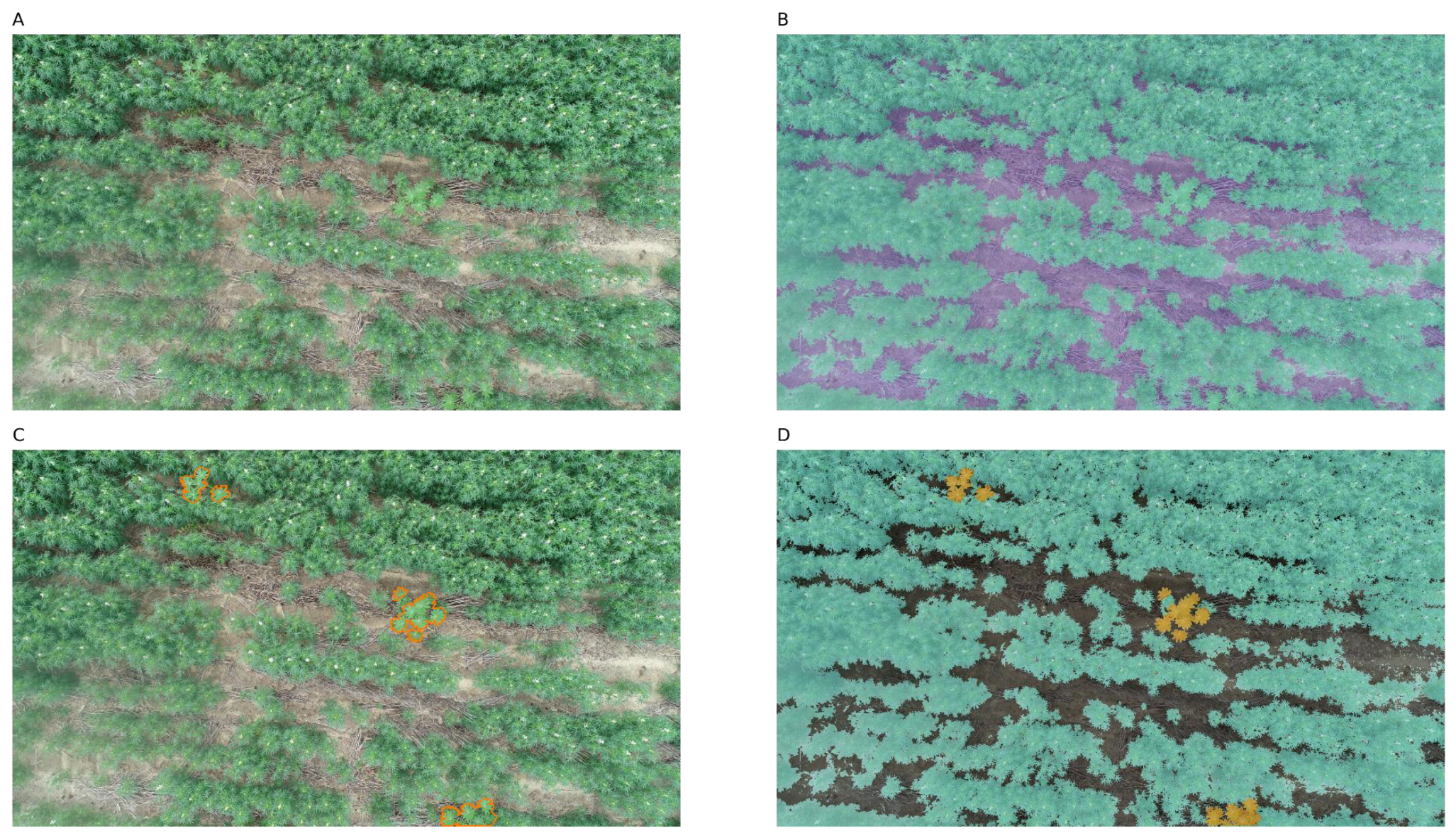

2.3. Bounding Box Labelling and Segmentation Masks

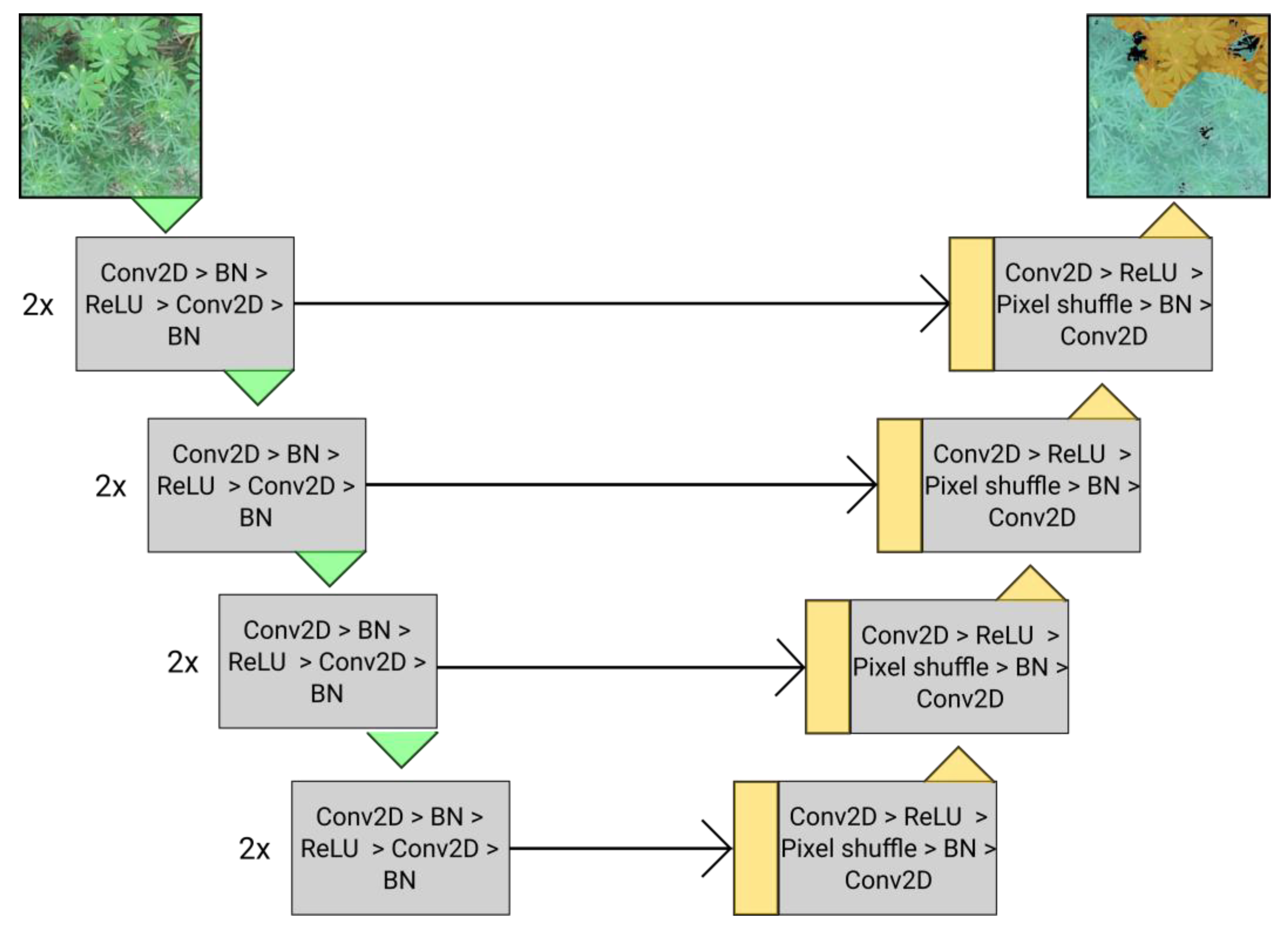

2.4. Segmentation Model Architecture

2.5. Pixel-Wise Evaluation Metrics

2.6. Object-Wise Weed Detection

2.7. Weed Map Construction from Predicted Masks

3. Results

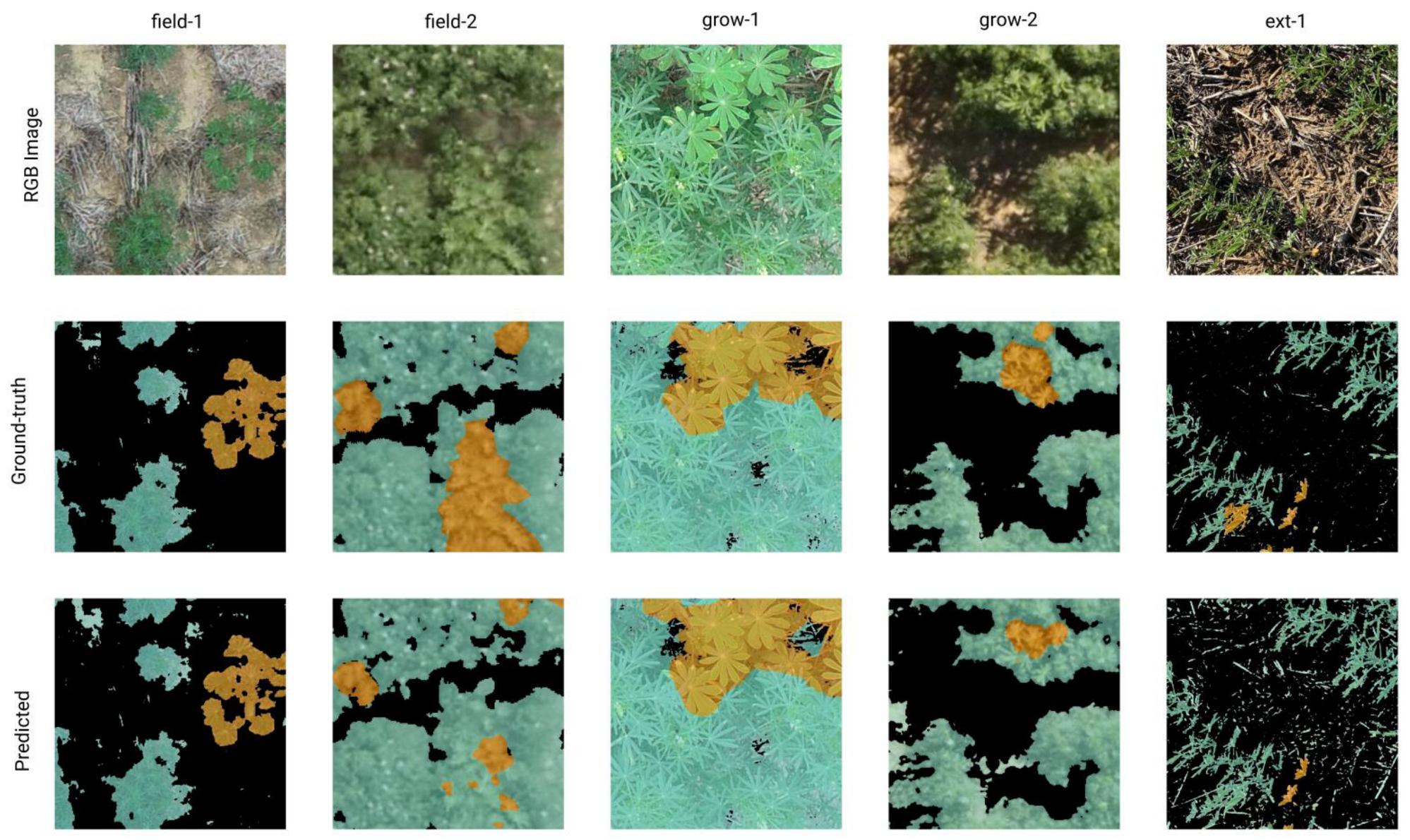

3.1. Segmentation Performance for Sandplain and Narrow-Leafed Lupins

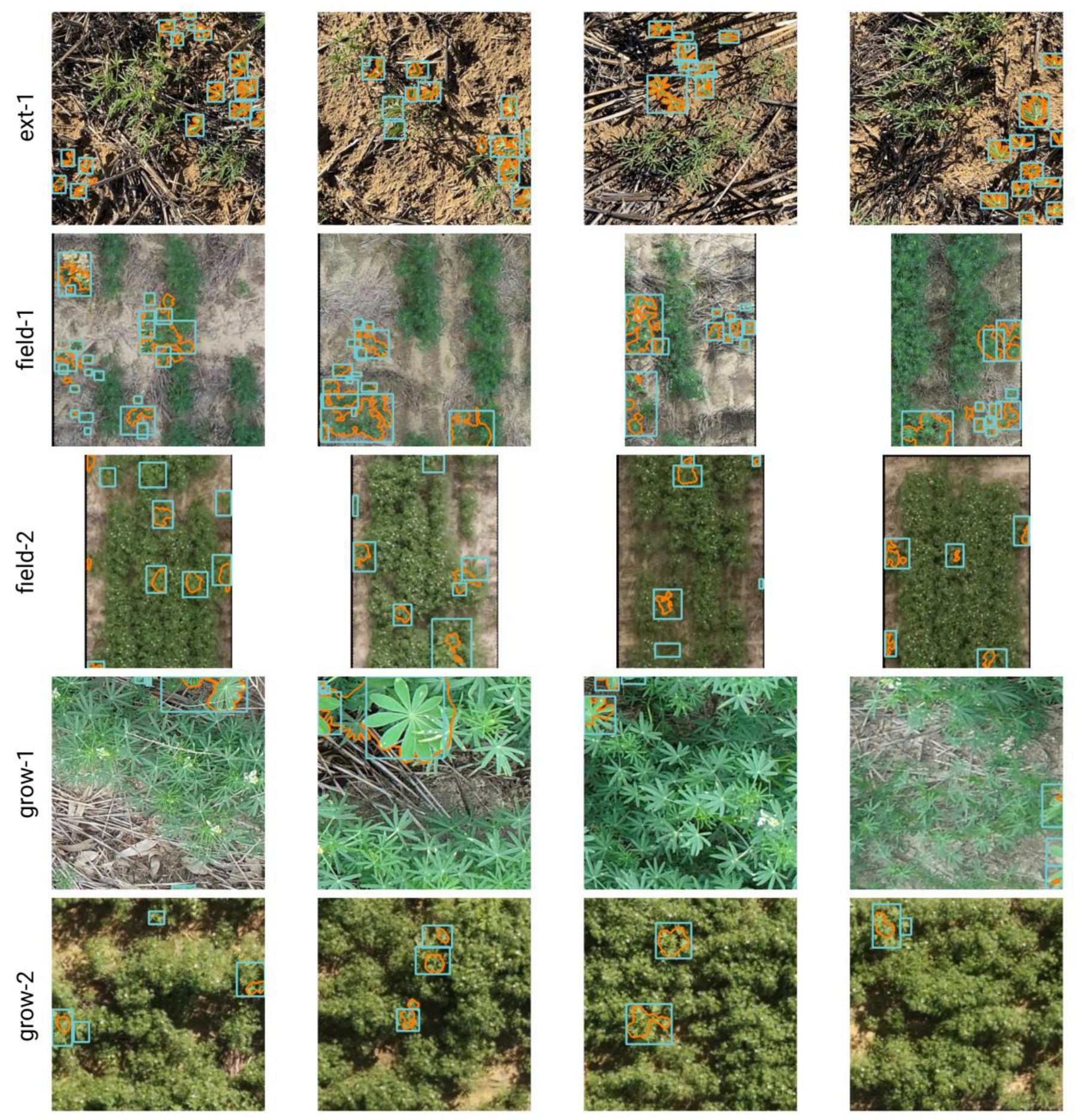

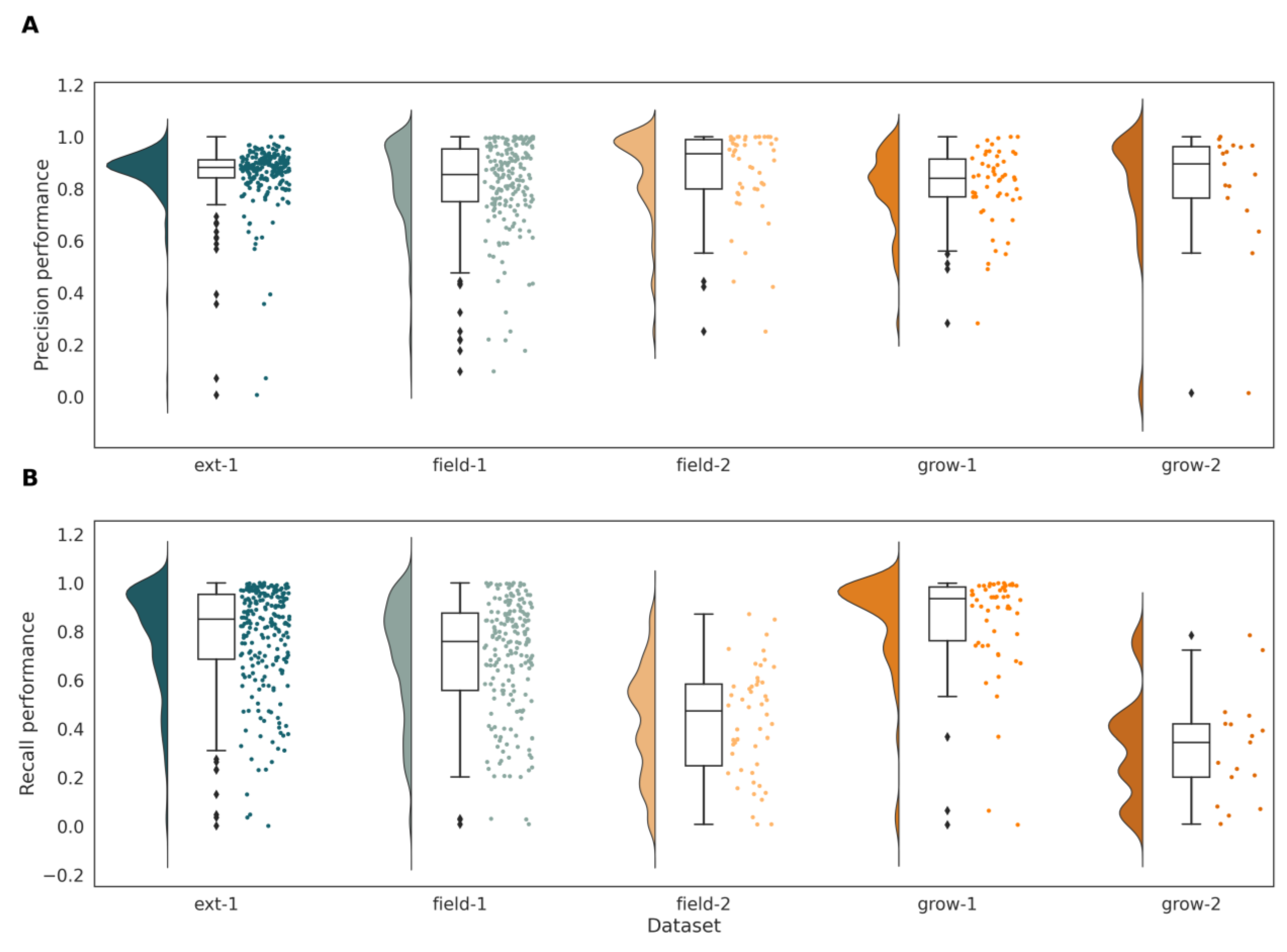

3.2. Target Accuracy for Detecting Individual Sandplain Lupin Weeds

3.3. Effect of Environmental Conditions on Sandplain Lupin Detection

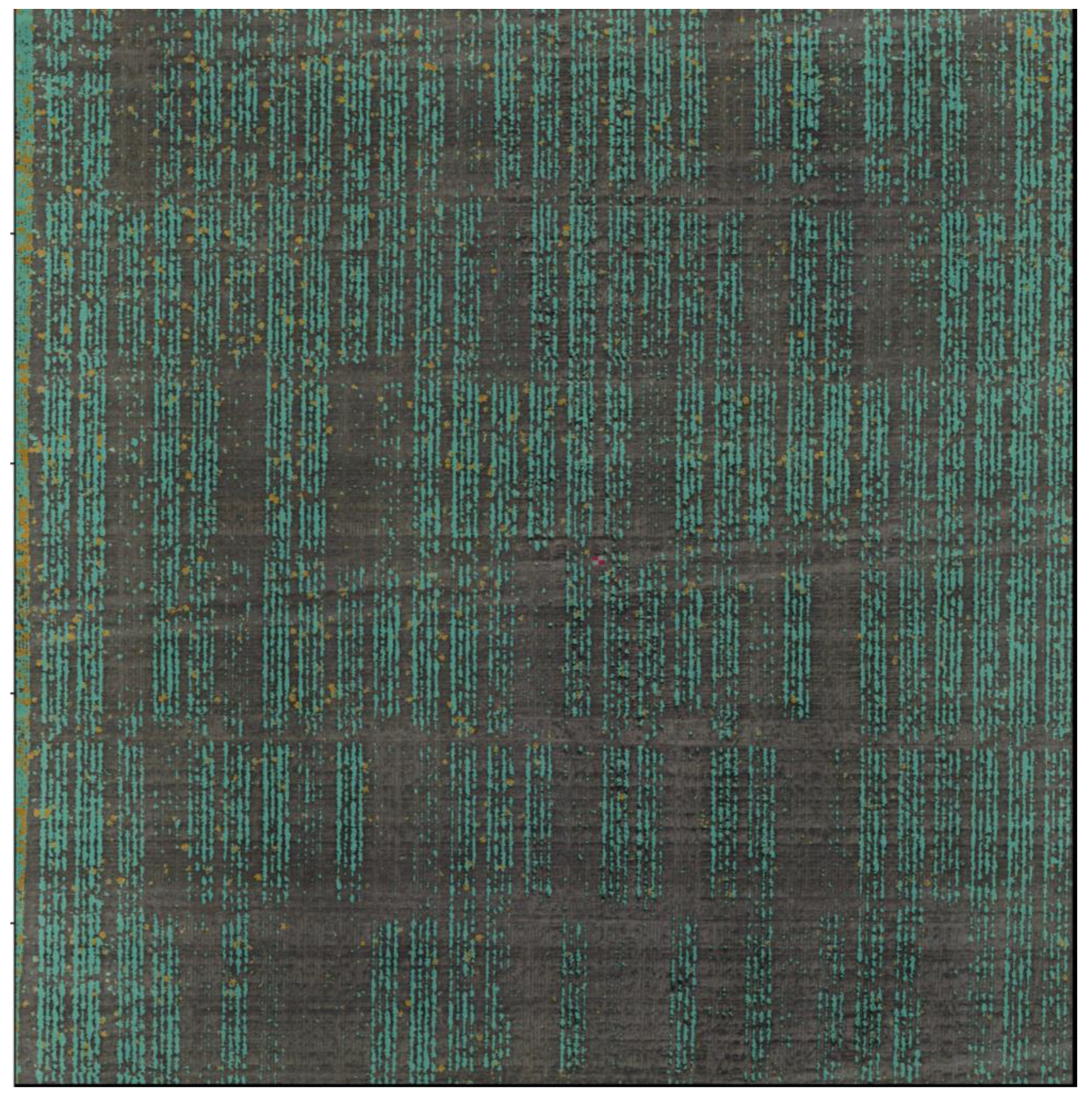

3.4. Weed Mapping Increases Herbicide Use Efficiency

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | Field Type | Platform | Collection Date | Growth Stage | GSD (cm/px) | Flight Height (m) | Total Images | Total Labels |
|---|---|---|---|---|---|---|---|---|
| field-1 | Trial site | UAV | 16 July 2021 | 2–3.3 | 0.27 | 10 | 101 | 1602 |
| field-2 | Trial site | UAV | 11 August 2021 | 3–4 | 0.55 | 20 | 97 | 840 |
| grow-1 | Grower | UAV | 16 July 2021 | 2–3.3 | 0.11 | 4 | 88 | 462 |
| grow-2 | Grower | UAV | 19 August 2021 | 2–4 | 0.55 | 20 | 292 | 207 |
| ext-1 | Grower | Smartphone | 12 July 2019 | 1–2.5 | 0.01 | 1.5 | 217 | 4879 |

| Dataset | Precision (NLL) | Precision (SL) | Precision (Avg) | Recall (NLL) | Recall (SL) | Recall (Avg) | IoU (NLL) | IoU (SL) | IoU (Avg) | Macro F1 (NLL) | Macro F1 (SL) | Macro F1 (Avg) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| field-1 | 0.81 | 0.82 | 0.81 | 0.95 | 0.70 | 0.93 | 0.51 | 0.45 | 0.51 | 0.64 | 0.57 | 0.64 |
| field-2 | 0.89 | 0.82 | 0.88 | 0.91 | 0.43 | 0.89 | 0.51 | 0.26 | 0.50 | 0.58 | 0.37 | 0.57 |
| grow-1 | 0.96 | 0.84 | 0.96 | 0.99 | 0.85 | 0.99 | 0.87 | 0.64 | 0.87 | 0.93 | 0.76 | 0.92 |
| grow-2 | 0.95 | 0.83 | 0.95 | 1.00 | 0.32 | 0.99 | 0.72 | 0.29 | 0.72 | 0.82 | 0.42 | 0.82 |
| ext-1 | 0.68 | 0.88 | 0.69 | 0.97 | 0.78 | 0.97 | 0.41 | 0.54 | 0.42 | 0.56 | 0.67 | 0.57 |
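The per-class metrics in the table above (precision, recall, IoU and F1) can be illustrated with a minimal sketch that computes them from a pair of integer label masks. It assumes the standard confusion-count definitions. The class ids (0 = background, 1 = NLL, 2 = SL), the function names, and the toy masks are hypothetical and are not taken from the paper. How the authors aggregated scores (for example per image versus pooled over a dataset) is not stated in this section, so pooled values from a sketch like this would not necessarily reproduce the table.

```python
import numpy as np

def per_class_metrics(y_true, y_pred, class_ids):
    """Pixel-wise precision, recall, IoU and F1 for each class id.

    y_true and y_pred are integer label masks of the same shape.
    """
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    results = {}
    for c in class_ids:
        tp = int(np.sum((y_true == c) & (y_pred == c)))  # correctly labelled pixels
        fp = int(np.sum((y_true != c) & (y_pred == c)))  # predicted c, truth differs
        fn = int(np.sum((y_true == c) & (y_pred != c)))  # truth c, prediction differs
        results[c] = {
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
            "iou": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
            "f1": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0,
        }
    return results

def macro_f1(metrics):
    """Unweighted mean of the per-class F1 scores."""
    return float(np.mean([m["f1"] for m in metrics.values()]))

# Hypothetical 2x3 masks: 0 = background, 1 = NLL, 2 = SL.
truth = np.array([[1, 1, 2],
                  [1, 2, 2]])
pred = np.array([[1, 1, 1],
                 [1, 2, 0]])

metrics = per_class_metrics(truth, pred, class_ids=(1, 2))
print(metrics[2])          # SL: precision 1.0, recall 1/3, IoU 1/3, F1 0.5
print(macro_f1(metrics))   # mean of 6/7 (NLL) and 0.5 (SL)
```

In the toy example the SL class has perfect precision but low recall, the same pattern the table shows for field-2 and grow-2: the pixels predicted as sandplain lupin are mostly correct, but many true sandplain lupin pixels are missed.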

| Dataset | Number of Sandplain Lupins | Predicted Sandplain Lupins | Percentage Identified (%) | R² |
|---|---|---|---|---|
| field-1 | 737 | 589 | 79.91 | 0.76 |
| field-2 | 143 | 111 | 77.62 | 0.64 |
| grow-1 | 87 | 75 | 86.20 | 0.76 |
| grow-2 | 31 | 23 | 74.19 | 0.73 |
| ext-1 | 938 | 785 | 83.37 | 0.91 |
| Total | 1936 | 1583 | 80.32 | 0.76 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Danilevicz, M.F.; Rocha, R.L.; Batley, J.; Bayer, P.E.; Bennamoun, M.; Edwards, D.; Ashworth, M.B. Segmentation of Sandplain Lupin Weeds from Morphologically Similar Narrow-Leafed Lupins in the Field. Remote Sens. 2023, 15, 1817. https://doi.org/10.3390/rs15071817
