Based on the decentralized PI algorithm, this section proposes a PIDSM for scheduling time-dependent observation tasks in the AEOS constellation. The algorithm can be deployed and executed concurrently on all AEOSs. By exchanging information with its neighbor satellites through ISLs, each AEOS s ∈ S iteratively adds targets to, or removes targets from, its current ordered sequence so that the global fitness is increased while the constraints defined in Section II are met.

4.1. The Decentralized Framework of PI

We start by introducing the “significance value” (respectively, the “marginal significance value”) of a target with regard to the assigned (resp. non-assigned) satellite in the PI framework.

(1) Significance: Assume $g \in a_s$. The significance value $q_s(a_s \ominus g)$ represents the variation of $F(a_s)$ after removing $g$ from $a_s$, and it is formulated as follows:

$$q_s(a_s \ominus g) = F(a_s) - F(a_s \ominus g), \tag{16}$$

where $a_s \ominus g$ is the sequence after removing $g$ from $a_s$. For completeness, we set $q_s(a_s \ominus g) = 0$ for $g \notin a_s$.

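(2) Marginal significance: Assume $g \notin a_s$. The marginal significance value $q_s^*(a_s \oplus g)$ represents the maximal variation of $F(a_s)$ after adding $g$ into $a_s$, and it is formulated as follows:

$$q_s^*(a_s \oplus g) = \max_{k \in \{1, 2, \ldots, |a_s|+1\}} \{F(a_s \oplus_k g) - F(a_s)\}, \tag{17}$$

where $a_s \oplus_k g$ is the target sequence after inserting $g$ into the $k$-th position of $a_s$. If $g \in a_s$, we let $q_s^*(a_s \oplus g)$ tend toward minus infinity, i.e., $q_s^*(a_s \oplus g) \to -\infty$.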
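The two definitions above can be illustrated with a minimal Python sketch. The fitness function `toy_fitness` (a position-discounted sum of rewards) and the reward table are hypothetical stand-ins for the partial fitness F, chosen only so that the order of the sequence matters; they are not the fitness model of this paper. Positions are 0-based in the code, whereas the text counts from 1.

```python
from typing import Callable, List, Sequence, Tuple

Fitness = Callable[[Sequence[str]], float]

# Hypothetical rewards, used only for illustration.
REWARD = {"g1": 4.0, "g2": 2.0, "g3": 8.0}


def toy_fitness(seq: Sequence[str]) -> float:
    """Assumed order-dependent fitness: later targets are worth less."""
    return sum(REWARD[t] * 0.5 ** i for i, t in enumerate(seq))


def significance(F: Fitness, seq: List[str], g: str) -> float:
    """F(a_s) - F(a_s with g removed); 0 if g is not in the sequence."""
    if g not in seq:
        return 0.0
    return F(seq) - F([t for t in seq if t != g])


def marginal_significance(F: Fitness, seq: List[str], g: str) -> Tuple[float, int]:
    """Best gain over all insertion positions, with the 0-based position.

    Returns (-inf, -1) if g is already in the sequence.
    """
    if g in seq:
        return float("-inf"), -1
    base = F(seq)
    best_gain, best_pos = float("-inf"), -1
    for k in range(len(seq) + 1):
        gain = F(seq[:k] + [g] + seq[k:]) - base
        if gain > best_gain:
            best_gain, best_pos = gain, k
    return best_gain, best_pos


print(significance(toy_fitness, ["g1", "g2"], "g1"))
print(marginal_significance(toy_fitness, ["g1", "g2"], "g3"))
```

Under this toy fitness, removing `g1` from `["g1", "g2"]` lowers the fitness from 5 to 2, and inserting `g3` at the front yields the largest gain.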

The basic idea of the PI framework is as follows [34]: each AEOS $s$ transmits the significance value $q_s(a_s \ominus g)$ of target $g \in a_s$ to its neighbor satellite $c$ through ISLs. Then, satellite $c$ compares the received $q_s(a_s \ominus g)$ with the marginal significance value $q_c^*(a_c \oplus g)$ computed based on its own sequence $a_c$. If the criterion in (18) is satisfied (see Proposition 1 later), target $g$ is deleted from $a_s$ and inserted into a proper position of $a_c$ such that the global fitness value $\Upsilon(a)$ is increased. Similarly, the significance of target $g$ is further transmitted to other satellites, and this value is continuously updated. The above process is repeated until (18) is no longer satisfied or $\Upsilon(a)$ cannot be increased by exchanging targets between satellites. Note that the convergence of the PI algorithm is naturally guaranteed, since the global fitness $\Upsilon(a)$ is increased whenever the target assignment is changed and the number of possible assignments is finite.

To facilitate the understanding of the PI procedure, the following conclusion reveals how global fitness is increased by exchanging targets between satellites.

Proposition 1. Given the global fitness value $\Upsilon(a)$ of a solution $a$, two AEOSs $s$ and $c$ with $H[s, c] = 1$, and a target $g \in a_s \setminus a_c$. Then, if

$$q_c^*(a_c \oplus g) > q_s(a_s \ominus g) \tag{18}$$

is satisfied, the global fitness value is increased after removing $g$ from $a_s$ and inserting it into the proper position of $a_c$.

Proof: Let $p_c = \arg\max_{k \in \{1, 2, \ldots, |a_c|+1\}} \{F(a_c \oplus_k g) - F(a_c)\}$. Suppose we remove $g$ from $a_s$ and add it into the $p_c$-th position of $a_c$, resulting in a new solution denoted as $a'$. By (16) and (17), the fitness of $a'$ is $\Upsilon(a') = \Upsilon(a) + q_c^*(a_c \oplus g) - q_s(a_s \ominus g)$. Therefore, the inequality $\Upsilon(a') > \Upsilon(a)$ holds, i.e., the global fitness value increases, exactly when $q_c^*(a_c \oplus g) > q_s(a_s \ominus g)$, which is criterion (18). □

Specifically, we use $F_s$ and $F_c$ to denote the partial fitness values of $s$ and $c$, respectively. According to whether $q_c^*(a_c \oplus g)$ and $q_s(a_s \ominus g)$ are greater or less than zero, there are four cases:

Case 1. $q_s(a_s \ominus g) < 0$ and $q_c^*(a_c \oplus g) > 0$. Then (18) holds naturally, and $F_s$ and $F_c$ are both increased. Hence, the global fitness value $\Upsilon(a')$ is increased.

Case 2. $q_s(a_s \ominus g) \ge 0$ and $q_c^*(a_c \oplus g) > 0$. Then, the satisfaction of (18) implies that the reduction of $F_s$ is less than the increase of $F_c$. Hence, $\Upsilon(a')$ is increased.

Case 3. $q_s(a_s \ominus g) \ge 0$ and $q_c^*(a_c \oplus g) \le 0$. Then, (18) never holds.

Case 4. $q_s(a_s \ominus g) < 0$ and $q_c^*(a_c \oplus g) \le 0$. Then, the satisfaction of (18) implies $|q_c^*(a_c \oplus g)| < |q_s(a_s \ominus g)|$. That is, the increase of $F_s$ is greater than the decrease of $F_c$, and hence $\Upsilon(a')$ is increased correspondingly.
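The exchange described by Proposition 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the partial fitness `F` is a hypothetical position-discounted reward sum, the global fitness is taken as the sum of the partial fitness values, and the names `try_transfer` and `global_fitness` are introduced here for the example. The function moves a target only when the receiver's marginal significance exceeds the sender's significance.

```python
from typing import Dict, List, Sequence

# Hypothetical rewards, used only for illustration.
REWARD = {"g1": 4.0, "g2": 2.0, "g3": 8.0}


def F(seq: Sequence[str]) -> float:
    """Assumed order-dependent partial fitness of one satellite."""
    return sum(REWARD[t] * 0.5 ** i for i, t in enumerate(seq))


def global_fitness(a: Dict[str, List[str]]) -> float:
    """Assumed global fitness: sum of the partial fitness values."""
    return sum(F(seq) for seq in a.values())


def try_transfer(a: Dict[str, List[str]], s: str, c: str, g: str) -> bool:
    """Move g from a[s] to the best position of a[c] if the criterion holds."""
    if g not in a[s] or g in a[c]:
        raise ValueError("g must be in a[s] and not in a[c]")
    # Significance of g for the sender s.
    q_s = F(a[s]) - F([t for t in a[s] if t != g])
    # Marginal significance of g for the receiver c, over all positions.
    base = F(a[c])
    gains = [F(a[c][:k] + [g] + a[c][k:]) - base for k in range(len(a[c]) + 1)]
    q_c_star = max(gains)
    if q_c_star <= q_s:
        return False  # criterion not met: the assignment is left unchanged
    a[s].remove(g)
    a[c].insert(gains.index(q_c_star), g)
    return True


a = {"s": ["g1", "g3"], "c": ["g2"]}
before = global_fitness(a)
moved = try_transfer(a, "s", "c", "g3")
print(moved, before, global_fitness(a), a)
```

In this toy instance the significance of `g3` for `s` is 4 and its marginal significance for `c` is 7, so the transfer is accepted and the global fitness rises by their difference, 3.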

The significance value of a target can be broadcast among AEOSs by using ISLs. According to Proposition 1, based on the received significance value, an AEOS can determine whether to remove a target from, or insert a target into, its current target sequence so as to increase the global fitness value. However, the sparse ISL communication topology makes it difficult to deliver a target's significance value to all satellites, so the system may become trapped in a local optimum [34]. For example, consider a set of AEOSs $S = \{s_1, s_2, s_3\}$ and four targets $G = \{g_1, g_2, g_3, g_4\}$. There are ISLs between $s_1$ and $s_2$ as well as between $s_2$ and $s_3$. As illustrated in Figure 4, the current schedule solution is $a_{s_1} = \{g_1\}$, $a_{s_2} = \{g_2, g_3\}$, and $a_{s_3} = \{g_4\}$. We now assume that (i) $q_{s_2}^*(a_{s_2} \oplus g_1) \le q_{s_1}(a_{s_1} \ominus g_1)$, and (ii) the global fitness will be increased if target $g_1$ is moved from $a_{s_1}$ to $a_{s_3}$. However, since $q_{s_2}^*(a_{s_2} \oplus g_1) \le q_{s_1}(a_{s_1} \ominus g_1)$, $g_1$ cannot be added into $a_{s_2}$ by Proposition 1. Because $s_1$ and $s_3$ are not directly linked, $g_1$ then cannot be added into $a_{s_3}$ either, leading to a local optimum.

To deliver the target significance values to all satellites, the PI framework introduces three message lists $Z_s$, $Q_s$, and $u_s$ stored on each satellite $s$, which are defined as follows:

$Z_s = [Z_{s1}, Z_{s2}, \ldots, Z_{sn}]^T$ is a vector that keeps track of which target is assigned to which satellite. The entry $Z_{sg} = c$ means that $s$ believes that target $g$ is scheduled to satellite $c$; if $s$ deems $g$ to be unassigned, $Z_{sg}$ tends toward infinity, i.e., $Z_{sg} \to \infty$.

$Q_s = [Q_{s1}, Q_{s2}, \ldots, Q_{sn}]^T$ is a vector recording the significance values of all targets. If $Z_{sg} \to \infty$, we set $Q_{sg} \to \infty$.

$u_s = [u_{s1}, u_{s2}, \ldots, u_{sm}]^T$ is a vector where the entry $u_{sc}$ records the time when ($s$ thinks that) satellite $c$ has received the latest message from other satellites. Once a message is passed, the timestamp $u_{sc}$ is updated as follows:

$$u_{sc} = \begin{cases} \tau_r, & \text{if } H[s, c] = 1, \\ \max\{u_{hc} \mid h \in S,\ H[s, h] = 1\}, & \text{otherwise}, \end{cases} \tag{19}$$

where $\tau_r$ is the time at which $s$ receives the message from $c$. That is, if $c$ is a neighbor of $s$, i.e., $H[s, c] = 1$, we have $u_{sc} = \tau_r$; otherwise, $u_{sc}$ is equal to the maximal timestamp $u_{hc}$ over the neighbors $h$ of $s$, i.e., those with $H[s, h] = 1$.
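The timestamp rule just described can be sketched as follows. This is a simplified illustration, not the authors' implementation: satellites are indexed 0 to m-1, `H` is the ISL adjacency matrix, `u[h]` is the timestamp list most recently received from satellite `h`, and the sketch assumes that `s` hears from all of its neighbors at the same receive time `tau_r`. The function name `update_timestamps` is introduced only for this example.

```python
from typing import List


def update_timestamps(s: int, u: List[List[float]], H: List[List[int]],
                      tau_r: float) -> List[float]:
    """Return the new timestamp list of satellite s.

    Neighbors get the receive time tau_r; for any other satellite c, s
    adopts the largest timestamp about c reported by its neighbors.
    """
    m = len(H)
    neighbors = [h for h in range(m) if h != s and H[s][h] == 1]
    new_row = list(u[s])
    for c in range(m):
        if c == s:
            continue
        if H[s][c] == 1:
            new_row[c] = tau_r
        elif neighbors:
            new_row[c] = max(u[h][c] for h in neighbors)
    return new_row


# Line topology s1 - s2 - s3, indexed 0, 1, 2.
H = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
u = [[0.0, 1.0, 0.0],
     [1.0, 0.0, 3.0],
     [0.0, 3.0, 0.0]]
print(update_timestamps(0, u, H, tau_r=5.0))
```

Here satellite 0 has no link to satellite 2, so it learns about satellite 2 only through the timestamp relayed by satellite 1.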

The timestamp list us records the latest message exchange time, and the lists Qs and Zs together represent a schedule solution. These three lists can be continuously updated through local communication with other satellites. In particular, suppose Zsg = c ∈ S. Then, AEOS s believes that at least at time usc, target g is scheduled to satellite c and the significance value of g with regard to c is Qsg. Initially, we set as = ∅, Zsg → ∞, and Qsg = 0 for each AEOS s and every target g.

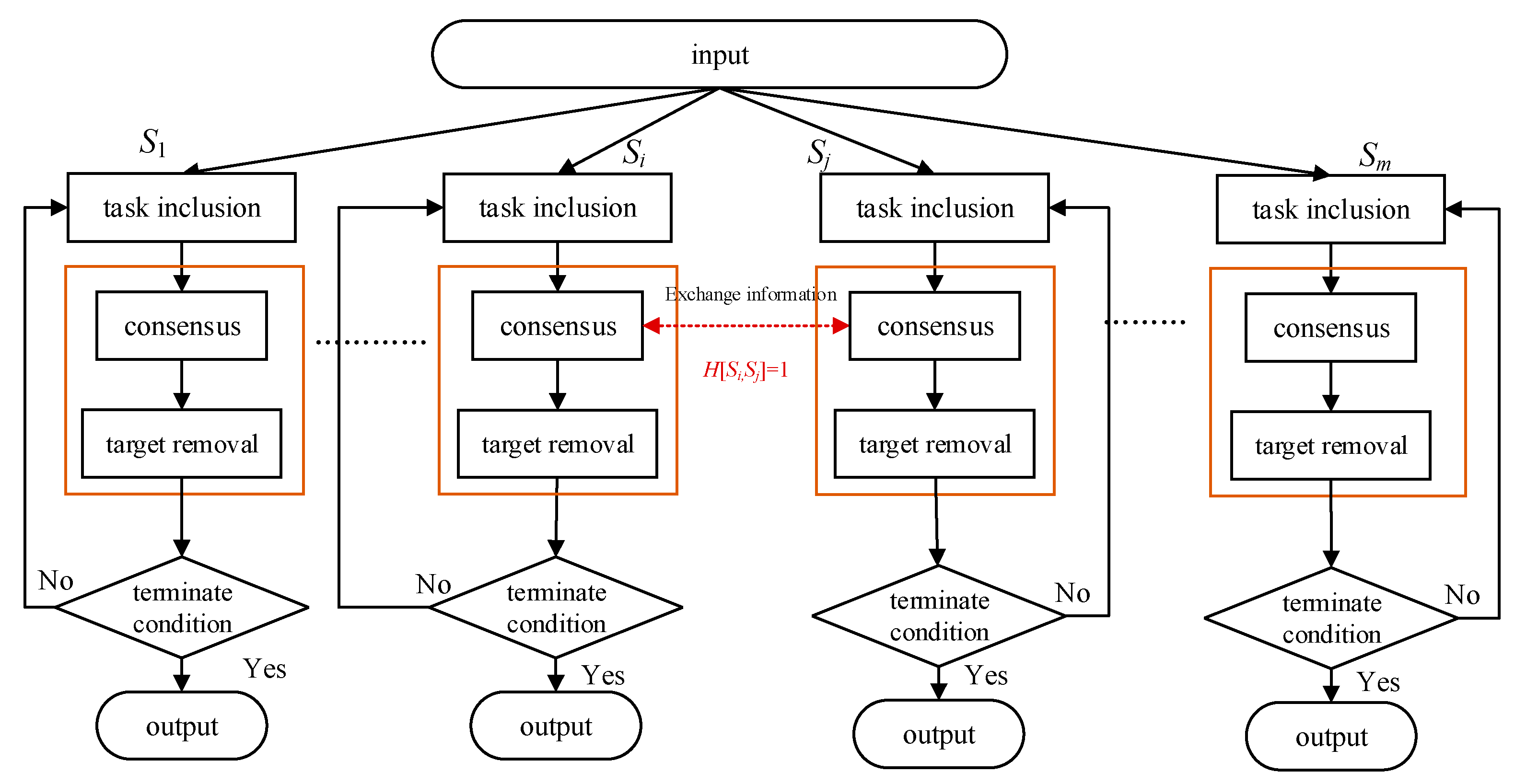

Generally, as shown in Figure 5, the decentralized PI framework contains two phases: target inclusion, and consensus and target removal; both run concurrently on each satellite. Specifically, the target inclusion phase determines whether to add a target to the satellite's current target sequence, and different significance values may result for a specific target at the end of this phase. Thus, the second phase is required, which has two parts: (i) consensus, where a consensus significance value list is reached among all satellites, and (ii) target removal, where each satellite removes the problematic targets from its current sequence. Note that only the consensus part exchanges messages among satellites using ISLs; the other procedures are performed independently. The above two phases are executed iteratively. When no change can be made to the obtained sequences for a period of time, the PI algorithm running on each satellite ends.

4.3. Consensus and Target Removal Phase

Algorithm 4 is executed independently on each satellite, which can lead to a target being allocated to more than one satellite or to the significance value of a target differing between satellites, resulting in conflicts. Thus, the second phase is required to ensure that each target g is scheduled at most once and that a consensus significance value for each target is reached among all satellites. This phase includes two steps: consensus and target removal.

Consensus: By (16), the significance value of target $g$ depends on the order of the sequence to which $g$ is assigned; thus, strong synergies exist between targets. According to the basic idea of the PI algorithm, the significance list is broadcast among satellites through a series of local ISL-based communications, and the significance value of every target is gradually increased. If we merely aimed to find the largest target significance value during communication, the significance of some targets might converge to a nonexistent value (in the sense of not being assigned to any satellite). To avoid this problem, we use the heuristic rules called winning bids proposed in [33] to reach consensus on the significance list. This method is discussed in detail as follows.

By using ISLs, AEOS $s$ sends the message lists $Q_s$, $Z_s$, and $u_s$ to its neighbor $c$ with $H[s, c] = 1$ and receives the corresponding lists $Q_c$, $Z_c$, and $u_c$. Then, $s$ updates the stored messages $Z_{sg}$ and $Q_{sg}$ for each target $g$ according to the rules given in Table 2. There are three actions in Table 2, and the default is the Leave action.

The first two columns of Table 2 record the observer of target $g$ as believed by the sender $c$ and the receiver $s$, respectively. The third column outlines the action taken on $Q_{sg}$ and $Z_{sg}$ by the receiver $s$. Note that once a message is passed, the timestamp list $u_s$ is updated according to (19) to obtain the latest time information.

Table 2. Consensus rules.

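| Sender $c$ Thinks $Z_{cg}$ Is | Receiver $s$ Thinks $Z_{sg}$ Is | Receiver's Action |
|---|---|---|
| $c$ | $s$ | if $Q_{cg} > Q_{sg}$: Update |
| $c$ | $c$ | Update |
| $c$ | $k \notin \{c, s\}$ | if $u_{ck} > u_{sk}$ or $Q_{cg} > Q_{sg}$: Update |
| $c$ | none | Update |
| $s$ | $s$ | Leave |
| $s$ | $c$ | Reset |
| $s$ | $k \notin \{c, s\}$ | if $u_{ck} > u_{sk}$: Reset |
| $s$ | none | Leave |
| $k \notin \{c, s\}$ | $s$ | if $u_{ck} > u_{sk}$ and $Q_{cg} > Q_{sg}$: Update |
| $k \notin \{c, s\}$ | $c$ | if $u_{ck} > u_{sk}$: Update; otherwise: Reset |
| $k \notin \{c, s\}$ | $k$ | if $u_{ck} > u_{sk}$: Update |
| $k \notin \{c, s\}$ | $\beta \notin \{c, s, k\}$ | if $u_{ck} > u_{sk}$ and $u_{c\beta} > u_{s\beta}$: Update; if $u_{ck} > u_{sk}$ and $Q_{cg} > Q_{sg}$: Update; if $u_{c\beta} > u_{s\beta}$ and $u_{sk} > u_{ck}$: Reset |
| $k \notin \{c, s\}$ | none | if $u_{ck} > u_{sk}$: Update |
| none | $s$ | Leave |
| none | $c$ | Update |
| none | $k \notin \{c, s\}$ | if $u_{ck} > u_{sk}$: Update |
| none | none | Leave |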
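The rules of Table 2 can be read as a decision function for a single target. The sketch below is one possible encoding, under assumptions that the text does not spell out: "none" is represented by `None`; Update is assumed to copy the sender's pair (Z, Q) to the receiver; Reset is assumed to mark the target as unassigned with significance 0 (matching the initial values); and a missing timestamp is treated as 0. The function names are hypothetical.

```python
from typing import Dict, Optional, Tuple


def consensus_action(s: str, c: str,
                     z_c: Optional[str], z_s: Optional[str],
                     q_c: float, q_s: float,
                     u_c: Dict[str, float], u_s: Dict[str, float]) -> str:
    """Return 'update', 'reset' or 'leave' for receiver s and sender c."""
    def newer(x: str) -> bool:
        # True if the sender holds fresher information about satellite x.
        return u_c.get(x, 0.0) > u_s.get(x, 0.0)

    if z_c == c:                        # sender believes it observes g itself
        if z_s == s:
            return "update" if q_c > q_s else "leave"
        if z_s == c or z_s is None:
            return "update"
        return "update" if (newer(z_s) or q_c > q_s) else "leave"
    if z_c == s:                        # sender believes the receiver observes g
        if z_s == s or z_s is None:
            return "leave"
        if z_s == c:
            return "reset"
        return "reset" if newer(z_s) else "leave"
    if z_c is None:                     # sender believes g is unassigned
        if z_s == s or z_s is None:
            return "leave"
        if z_s == c:
            return "update"
        return "update" if newer(z_s) else "leave"
    k = z_c                             # sender believes a third satellite k
    if z_s == s:
        return "update" if (newer(k) and q_c > q_s) else "leave"
    if z_s == c:
        return "update" if newer(k) else "reset"
    if z_s == k or z_s is None:
        return "update" if newer(k) else "leave"
    beta = z_s                          # receiver believes a fourth satellite
    if newer(k) and (newer(beta) or q_c > q_s):
        return "update"
    if newer(beta) and u_s.get(k, 0.0) > u_c.get(k, 0.0):
        return "reset"
    return "leave"


def apply_action(action: str, z_c: Optional[str], z_s: Optional[str],
                 q_c: float, q_s: float) -> Tuple[Optional[str], float]:
    """Assumed semantics: update copies the sender's pair, reset clears it."""
    if action == "update":
        return z_c, q_c
    if action == "reset":
        return None, 0.0
    return z_s, q_s


action = consensus_action("s", "c", "c", "s", 5.0, 3.0, {}, {})
print(action, apply_action(action, "c", "s", 5.0, 3.0))
```

Writing the table as nested conditionals makes the default visible: every branch that matches no listed condition falls through to Leave.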

Target removal: Only the local message lists $Q_s$ and $Z_s$ are updated during the above consensus procedure, while the target sequence $a_s$ is not affected. Thus, it is possible that $Z_{sg} \ne s$ for some $s \in S$ and $g \in a_s$ when the consensus is completed. That is, $s$ believes that it does not observe a target that is nevertheless included in its sequence $a_s$. Then, the second step, namely, target removal, is needed to remove these problematic targets $D_e = \{g \in a_s \mid Z_{sg} \ne s\}$ from $a_s$. The removal criterion is given as follows:

$$\max_{g \in D_e} (Q_{sg} - w_{sg}) > 0, \tag{21}$$

where $w_{sg} = F(a_s) - F(a_s \ominus g)$ is the significance value of target $g$ computed based on the current sequence $a_s$.

If (21) is satisfied, we remove from $a_s$ and $D_e$ the target $g_k = \arg\max_{g \in D_e}(Q_{sg} - w_{sg})$, which is associated with the largest objective increase, and set $Q_{sg_k} = 0$. This process is repeated until $D_e$ becomes empty or (21) is no longer met. Finally, if $D_e \ne \emptyset$ but (21) is not satisfied, i.e., some targets are retained in $D_e$, we reset the observer of each remaining target $g$ in $D_e$ to $s$, i.e., $Z_{sg} = s$, and update the significance list as $Q_{sg} = w_{sg}$. The consensus and target removal phase is summarized in Algorithm 5.

Algorithm 5: Consensus and target removal phase running on each satellite.

Input: AEOS $s$, target sequence $a_s$, $Q_s$ and $Z_s$;
Output: New sequence $a_s$, and updated lists $Q_s$ and $Z_s$;
Send $Q_s$, $Z_s$ and $u_s$ to each satellite $c$ with $H[s, c] = 1$;
Receive $Q_c$, $Z_c$ and $u_c$ from each satellite $c$ with $H[s, c] = 1$;
Update $Q_s$, $Z_s$ and $u_s$ according to the rules in Table 2;
Let $D_e = \{g \in a_s \mid Z_{sg} \ne s\}$;
while $D_e \ne \emptyset$ and $\max_{g \in D_e}(Q_{sg} - w_{sg}) > 0$ do
  $g_k = \arg\max_{g \in D_e}(Q_{sg} - w_{sg})$;
  Remove $g_k$ from $a_s$ and $D_e$;
  Reset $Q_{sg_k} = 0$;
end
Let $Q_{sg} = w_{sg}$, $\forall g \in a_s$;
if $D_e \ne \emptyset$ then
  Let $Z_{sg} = s$, $\forall g \in D_e$;
end
Output $a_s$, $Q_s$, $Z_s$;
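The target removal part of Algorithm 5 can be sketched in Python as follows. The message exchange and the Table 2 update are assumed to have happened already, so the function starts from the lists Q and Z after consensus. The fitness `toy_fitness`, the reward table, and the name `remove_targets` are hypothetical and serve only to make the example runnable.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical rewards, used only for illustration.
REWARD = {"g1": 4.0, "g2": 2.0, "g3": 8.0}


def toy_fitness(seq: Sequence[str]) -> float:
    """Assumed order-dependent partial fitness of one satellite."""
    return sum(REWARD[t] * 0.5 ** i for i, t in enumerate(seq))


def remove_targets(s: str, seq: List[str], Q: Dict[str, float],
                   Z: Dict[str, str],
                   F: Callable[[Sequence[str]], float]) -> List[str]:
    """Removal step for satellite s; updates Q and Z in place."""
    seq = list(seq)

    def w(g: str) -> float:
        # Local significance of g in the current (possibly shrunk) sequence.
        return F(seq) - F([t for t in seq if t != g])

    # Targets that s holds although it believes another satellite observes them.
    D = [g for g in seq if Z[g] != s]
    while D:
        g_k = max(D, key=lambda g: Q[g] - w(g))
        if Q[g_k] - w(g_k) <= 0:
            break  # removal criterion no longer met
        seq.remove(g_k)
        D.remove(g_k)
        Q[g_k] = 0.0
    for g in seq:
        Q[g] = w(g)  # refresh significance values of the kept targets
    for g in D:
        Z[g] = s     # targets left in D are claimed back by s
    return seq


Q = {"g1": 3.0, "g2": 5.0, "g3": 1.0}
Z = {"g1": "s1", "g2": "s2", "g3": "s2"}
print(remove_targets("s1", ["g1", "g2", "g3"], Q, Z, toy_fitness), Q, Z)
```

In this toy run, `g2` is removed because the significance reported for it elsewhere exceeds its local value, whereas `g3` is kept and reassigned to `s1` because its local significance is higher than the reported one.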

4.4. Convergence Analysis

The proposed PIDSM works on an iterative optimization principle in which each satellite aims to increase the global fitness at each iteration, and convergence is thereby guaranteed. In particular, the significance values of all targets are exchanged among satellites via local ISL communication, and the global fitness value is increased by recursively adding a target into, or removing a target from, the target sequence of some satellite according to Proposition 1. Meanwhile, the first phase adds as many targets as possible into the target sequence of a satellite, while the second phase ensures that a consensus significance value list can be reached for all satellites at each iteration. When no changes can be made to the obtained solution after executing the first and second phases for a period of time, the whole algorithm has converged.

Formally, the entire procedure of the PIDSM running on AEOS s is expressed in detail as follows.

Step 1: Running Algorithm 4 to include targets. First, add targets to as according to Criterion (20) and update the related information Zs. When no further target can be scheduled to as, recalculate Qsg for each target g in as.

Step 2: Sending the information lists Qs, Zs, and us stored on satellite s to its neighbor c with H[s, c] = 1.

Step 3: Receiving the information lists Qc, Zc, and uc from satellite c with H[s, c] = 1.

Step 4: Conducting the consensus procedure. Based on the received message Qc, Zc, and uc from each neighbor c, update the message Qs and Zs stored on satellite s itself.

Step 5: Removing the problematic targets. According to Criterion (21), remove targets in De = {g ∈ as | Zsg ≠ s} from as and De until De = ∅ or the criterion is no longer satisfied. Then, update Qs and keep each remaining target g in De in as by setting Zsg = s.

Step 6: Repeat Steps 1–5 until no further changes are made to the scheduled solution for a specified period of time.