Human Activity Classification Based on Dual Micro-Motion Signatures Using Interferometric Radar

Abstract

1. Introduction

- Propose a human activity classification method that combines micro-Doppler and interferometric micro-motion signatures with a deep convolutional neural network (DCNN) classifier.

- Analyze the performance of the proposed algorithm on simulated motion capture (MOCAP) data for seven human activity classes and compare it with classifiers based only on micro-Doppler signatures.

- Apply the proposed classification method to real measurement data of humans performing different walking activities to validate its effectiveness.

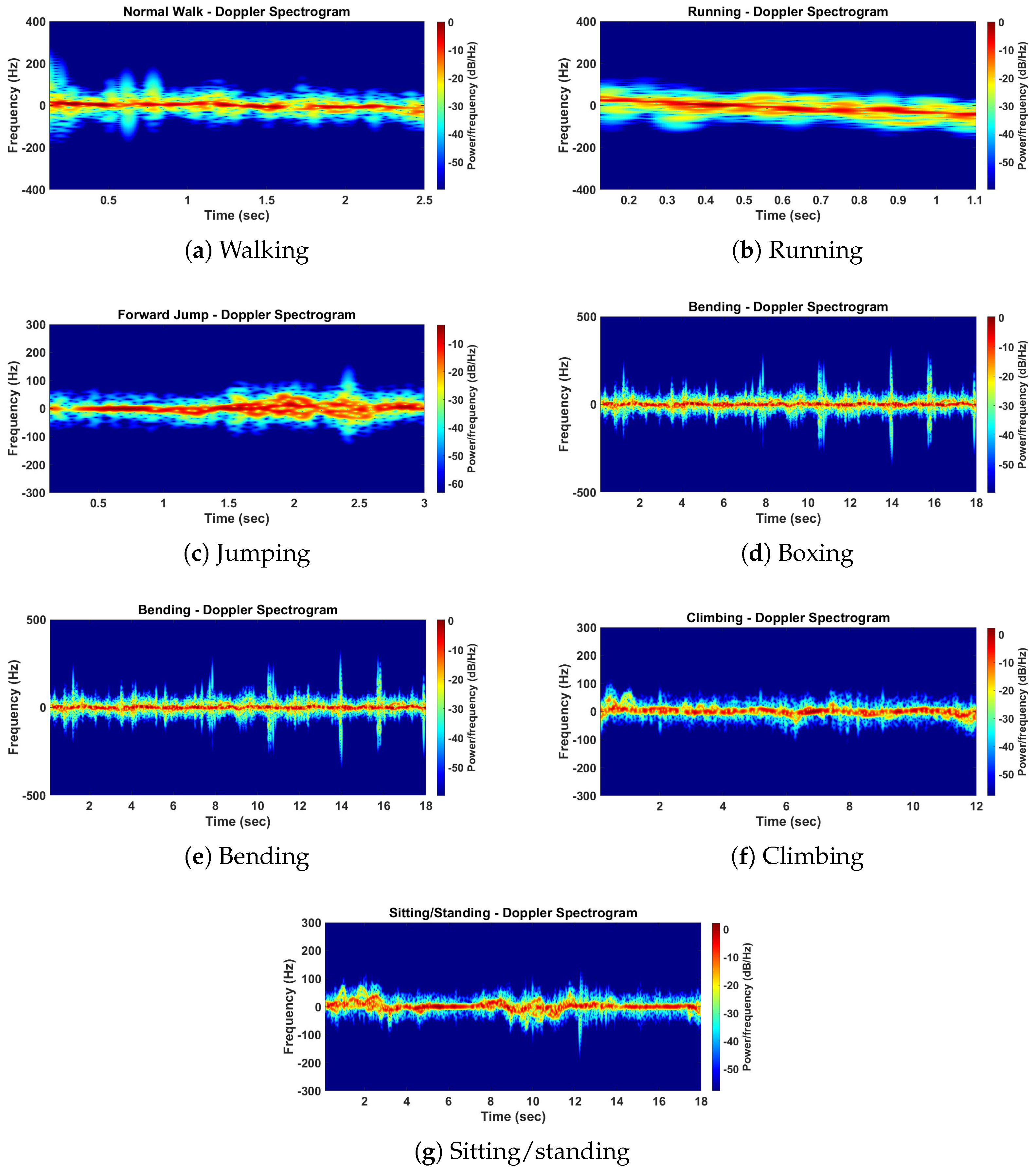

2. Human Activity Classes

3. Radar Return Simulation of Human Activities
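A common way to simulate radar returns from motion capture data, used by Ram and Ling (listed in the references), is to treat each tracked body point as a point scatterer and sum the two-way phase histories, s(t) = Σ_k a_k exp(−j4πR_k(t)/λ). The sketch below is a minimal illustration under stated simplifications: a monostatic radar, constant per-scatterer reflectivity, and no range attenuation, antenna pattern, or occlusion. Whether the paper models any of these effects is not stated here, and the function name `simulate_return` is hypothetical.

```python
import numpy as np

C = 3e8  # speed of light (m/s)

def simulate_return(ranges, fc, amplitudes=None):
    """Slow-time baseband return of a set of point scatterers.

    ranges:     array (num_samples, num_scatterers) of radar-to-scatterer
                distances R_k(t) in metres, e.g. one column per MOCAP marker.
    fc:         carrier frequency in Hz (an assumed parameter).
    amplitudes: optional reflectivity a_k per scatterer (default 1).

    Implements s(t) = sum_k a_k * exp(-j * 4 * pi * R_k(t) / lambda);
    range attenuation, antenna pattern and occlusion are ignored.
    """
    wavelength = C / fc
    ranges = np.asarray(ranges, dtype=float)
    if amplitudes is None:
        amplitudes = np.ones(ranges.shape[1])
    phase = -4.0 * np.pi * ranges / wavelength  # two-way path phase
    return (np.asarray(amplitudes) * np.exp(1j * phase)).sum(axis=1)
```

As a sanity check, a single scatterer receding at 1.5 m/s illuminated at an assumed 10 GHz carrier (λ = 0.03 m) should produce a Doppler tone at −2v/λ = −100 Hz; limbs swinging back and forth produce the time-varying micro-Doppler components on top of the torso line.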

4. Data Processing

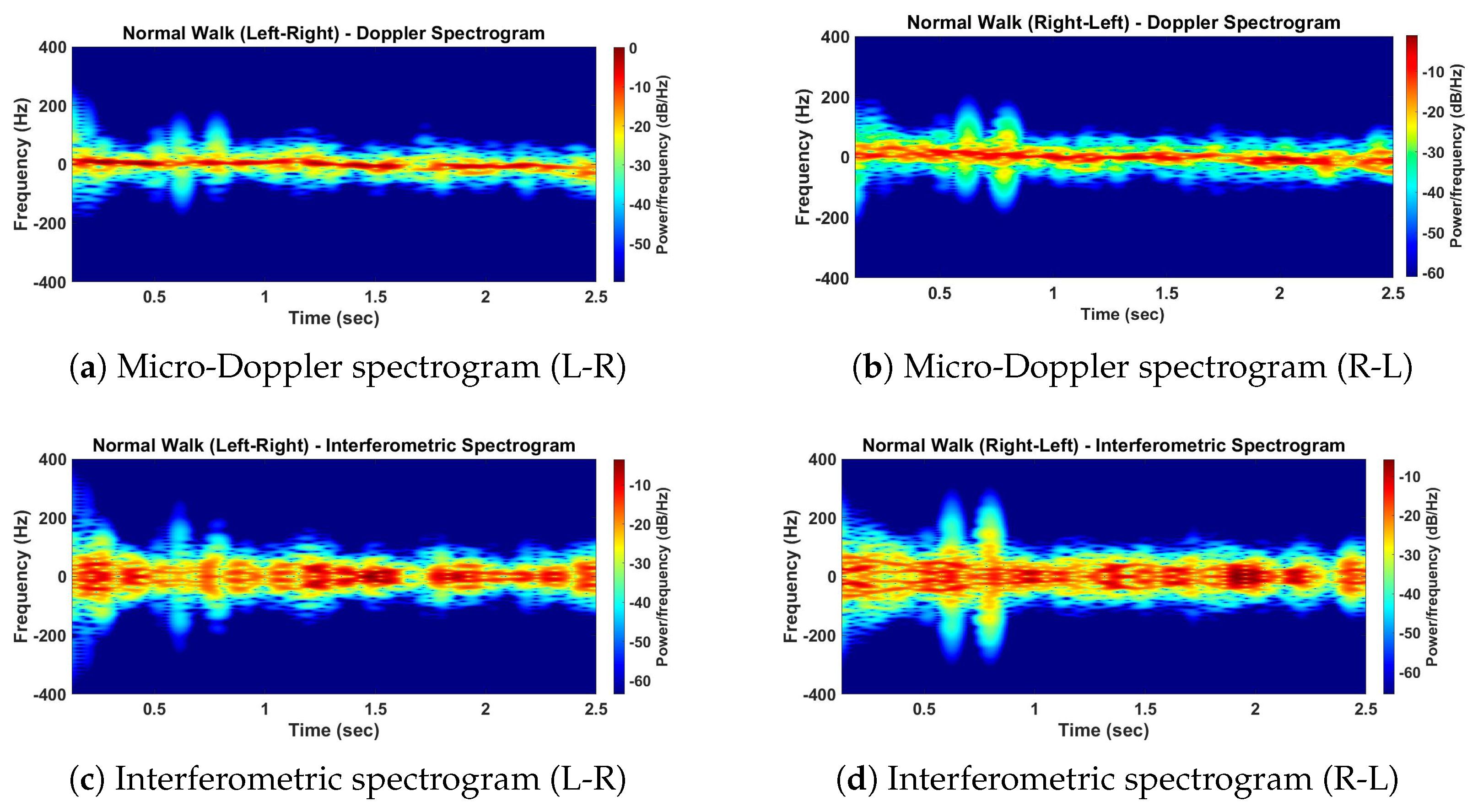

4.1. Interferometric Radar Processing
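Interferometric processing in the style of Nanzer's angular-velocity measurement (listed in the references) multiplies the signal of one receiver by the conjugate of the other. For two receivers separated by a baseline D, the channels differ by the phase 2πD sin θ(t)/λ, where θ is the target angle from broadside. The common Doppler term cancels in the product, and differentiating the remaining phase gives the interferometric frequency f_i = D ω cos θ / λ, with ω = dθ/dt the angular velocity. The sketch below assumes a far-field point target and ideal, noise-free channels; the function names are hypothetical and are not the paper's implementation.

```python
import numpy as np

def interferometric_response(s1, s2):
    """Correlate two receiver channels: r(t) = s1(t) * conj(s2(t)).

    The radial (Doppler) phase common to both channels cancels, leaving the
    phase difference 2*pi*D*sin(theta(t))/lambda set by the target's angle.
    """
    return np.asarray(s1) * np.conj(np.asarray(s2))

def instantaneous_frequency(r, fs):
    """Frequency (Hz) from the phase increment between consecutive samples."""
    return np.angle(r[1:] * np.conj(r[:-1])) * fs / (2.0 * np.pi)

def interferometric_frequency(omega, baseline, wavelength, theta=0.0):
    """Theoretical f_i = D * omega * cos(theta) / lambda for a far-field target."""
    return baseline * omega * np.cos(theta) / wavelength
```

With illustrative values D = 0.3 m, λ = 0.03 m and ω = 0.2 rad/s, a target crossing broadside should give f_i ≈ 2 Hz regardless of its radial Doppler, which is why the interferometric channel carries motion information that the micro-Doppler channel does not.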

4.2. Time-Frequency Analysis
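Both the Doppler channel and the interferometric product can be turned into time-frequency images with a short-time Fourier transform (STFT), the usual tool for micro-motion signatures. The numpy sketch below assumes a Hann window of 128 samples and a hop of 16 samples; the paper's actual window type, length, and overlap are not given in this section, and the function name is hypothetical.

```python
import numpy as np

def spectrogram(x, fs, win_len=128, hop=16):
    """Two-sided power spectrogram |STFT|^2 with a Hann window.

    Returns (freqs, times, S); S has shape (win_len, num_frames) and its rows
    run from -fs/2 to +fs/2, so approaching (positive) and receding (negative)
    micro-motions both appear.
    """
    x = np.asarray(x, dtype=complex)
    window = np.hanning(win_len)
    starts = np.arange(0, len(x) - win_len + 1, hop)
    # Each column is one windowed segment of the slow-time signal.
    frames = np.stack([x[s:s + win_len] * window for s in starts], axis=1)
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=0), axes=0)
    freqs = np.fft.fftshift(np.fft.fftfreq(win_len, d=1.0 / fs))
    times = (starts + win_len / 2) / fs  # centre time of each frame
    return freqs, times, np.abs(spectrum) ** 2
```

A complex signal is kept two-sided on purpose: folding it into a one-sided spectrum would merge limbs moving toward and away from the radar.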

4.3. Micro-Motion Characteristics of Different Human Activities

5. Activity Classification Algorithm

5.1. Deep Convolutional Neural Network (DCNN)

5.2. DCNN Parameter Selection

5.3. DCNN Training Procedure

- Input a mini-batch of n training samples to the CNN.

- Compute the CNN's predicted output with a feed-forward pass, propagating the input through every layer of the network.

- Compute the gradients of the error function with respect to the trainable parameters, namely the filter weights and biases of the convolutional and fully connected layers, using the backpropagation algorithm [42].

- Update the filter weights and biases with the ADAM optimization algorithm [42].
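The four training steps above can be illustrated on a much smaller model. In the sketch below, a single linear softmax layer stands in for the DCNN so that the backpropagated gradients can be written by hand; in the real network the same loop applies, with backpropagation running through the convolutional and pooling layers as well. The cross-entropy error and the Adam constants (β1 = 0.9, β2 = 0.999, ε = 1e-8, the commonly used defaults) are assumptions, not values reported in this section.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train(X, y, num_classes, epochs=50, batch_size=32, lr=1e-2,
          beta1=0.9, beta2=0.999, eps=1e-8, seed=0):
    """Mini-batch training of a linear softmax classifier with Adam."""
    rng = np.random.default_rng(seed)
    W = np.zeros((X.shape[1], num_classes))  # weights
    b = np.zeros(num_classes)                # biases
    params = [W, b]
    m = [np.zeros_like(p) for p in params]   # Adam first-moment estimates
    v = [np.zeros_like(p) for p in params]   # Adam second-moment estimates
    step = 0
    for _ in range(epochs):
        order = rng.permutation(len(X))
        for start in range(0, len(X), batch_size):
            idx = order[start:start + batch_size]    # 1) mini-batch of n samples
            xb, yb = X[idx], y[idx]
            probs = softmax(xb @ W + b)              # 2) feed-forward pass
            # 3) backpropagate the mean cross-entropy error to W and b
            dz = probs.copy()
            dz[np.arange(len(idx)), yb] -= 1.0
            dz /= len(idx)
            grads = [xb.T @ dz, dz.sum(axis=0)]
            step += 1                                # 4) Adam update
            for i, (p, g) in enumerate(zip(params, grads)):
                m[i] = beta1 * m[i] + (1 - beta1) * g
                v[i] = beta2 * v[i] + (1 - beta2) * g ** 2
                m_hat = m[i] / (1 - beta1 ** step)
                v_hat = v[i] / (1 - beta2 ** step)
                p -= lr * m_hat / (np.sqrt(v_hat) + eps)  # in-place update of W or b
    return W, b

def predict(X, W, b):
    return np.argmax(X @ W + b, axis=1)
```

Adam rescales each parameter's step by a running estimate of its gradient magnitude, which is one reason it is a popular default for training CNNs on spectrogram images with very different feature scales.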

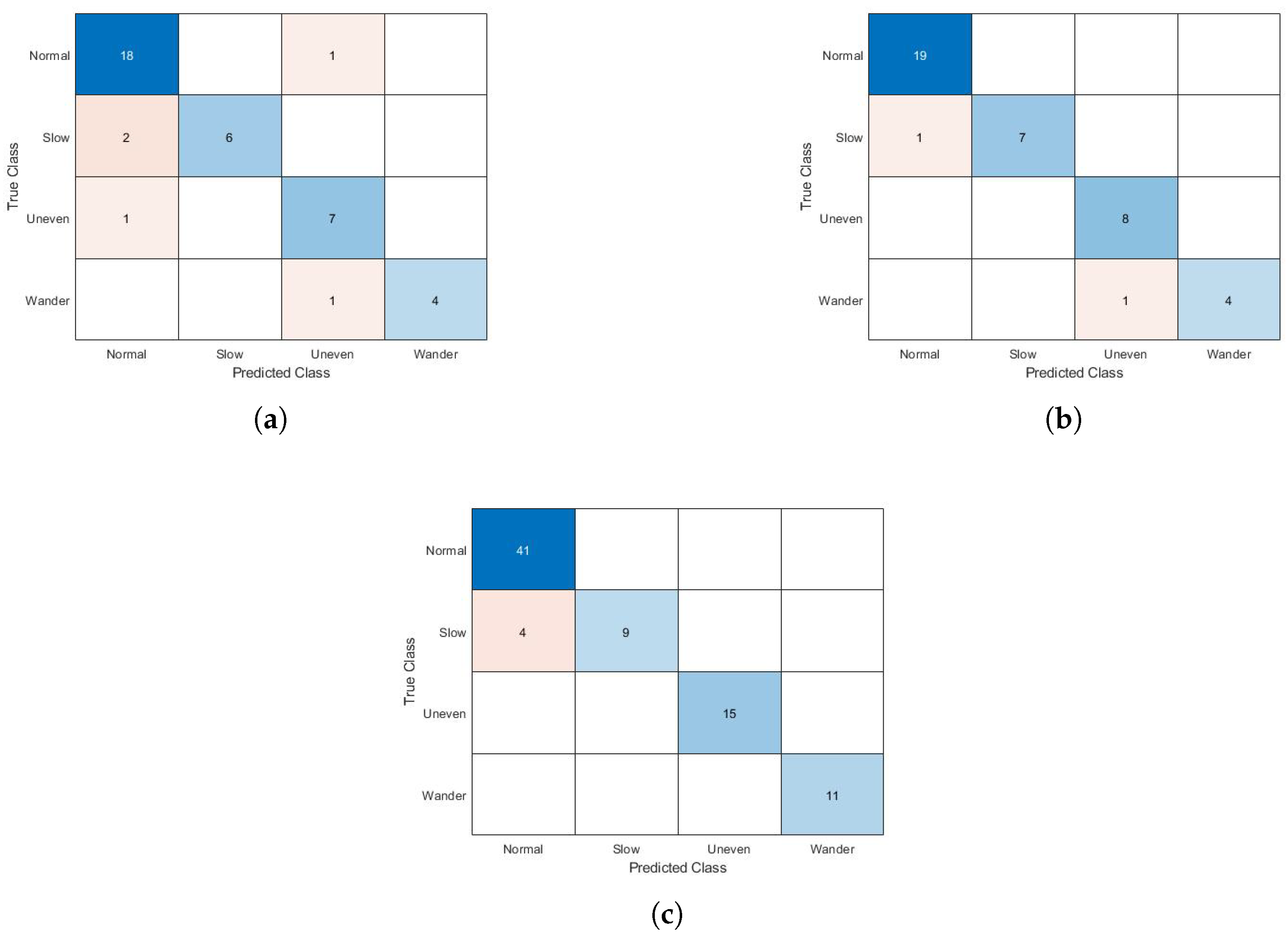

6. Simulation Results

- Micro-Doppler signatures only;

- Interferometric micro-motion signatures only;

- Dual micro-motion signatures.
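The three input configurations differ only in which time-frequency images reach the classifier. This section does not state how the two signatures are fused; the sketch below assumes one common option, stacking the normalized micro-Doppler and interferometric spectrograms as two channels of a single image, with a dB floor of −40 dB chosen for illustration. The function names and the configuration strings are hypothetical.

```python
import numpy as np

def normalize_db(S, floor_db=-40.0):
    """Power spectrogram -> dB relative to its peak, clipped at floor_db, scaled to [0, 1]."""
    S_db = 10.0 * np.log10(np.maximum(S, 1e-12) / S.max())
    S_db = np.maximum(S_db, floor_db)
    return (S_db - floor_db) / (-floor_db)

def build_input(S_doppler, S_interf, config):
    """Channels-first classifier input for one of the three configurations."""
    if config == "doppler":            # micro-Doppler signatures only
        return normalize_db(S_doppler)[None]
    if config == "interferometric":    # interferometric micro-motion signatures only
        return normalize_db(S_interf)[None]
    if config == "dual":               # dual micro-motion signatures
        return np.stack([normalize_db(S_doppler), normalize_db(S_interf)])
    raise ValueError(f"unknown configuration: {config!r}")
```

Keeping the same network and changing only the input channels makes the comparison between configurations isolate the contribution of the interferometric signature.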

6.1. Classification among Seven Human Activities

6.2. Classification among Four Different Walking Patterns

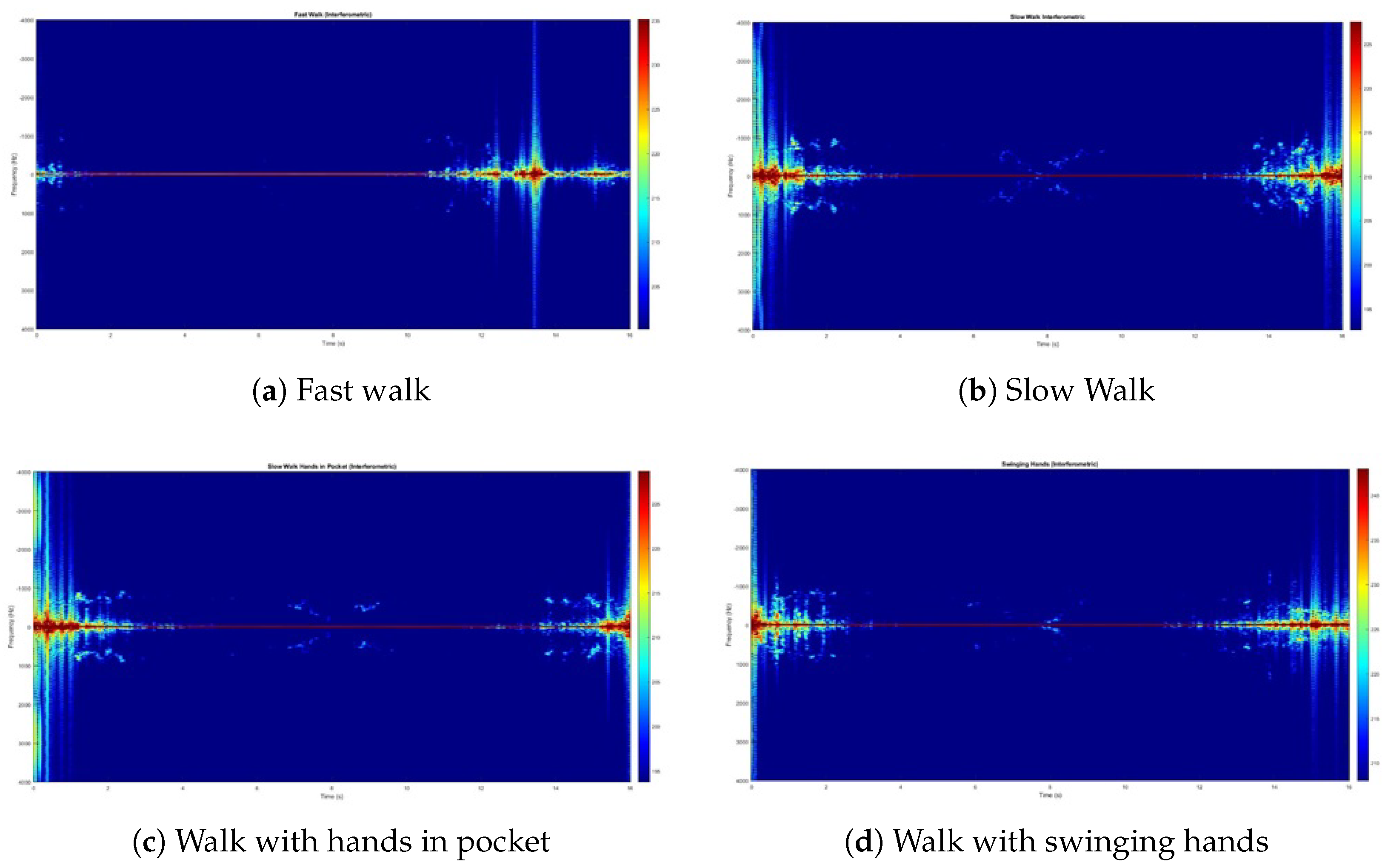

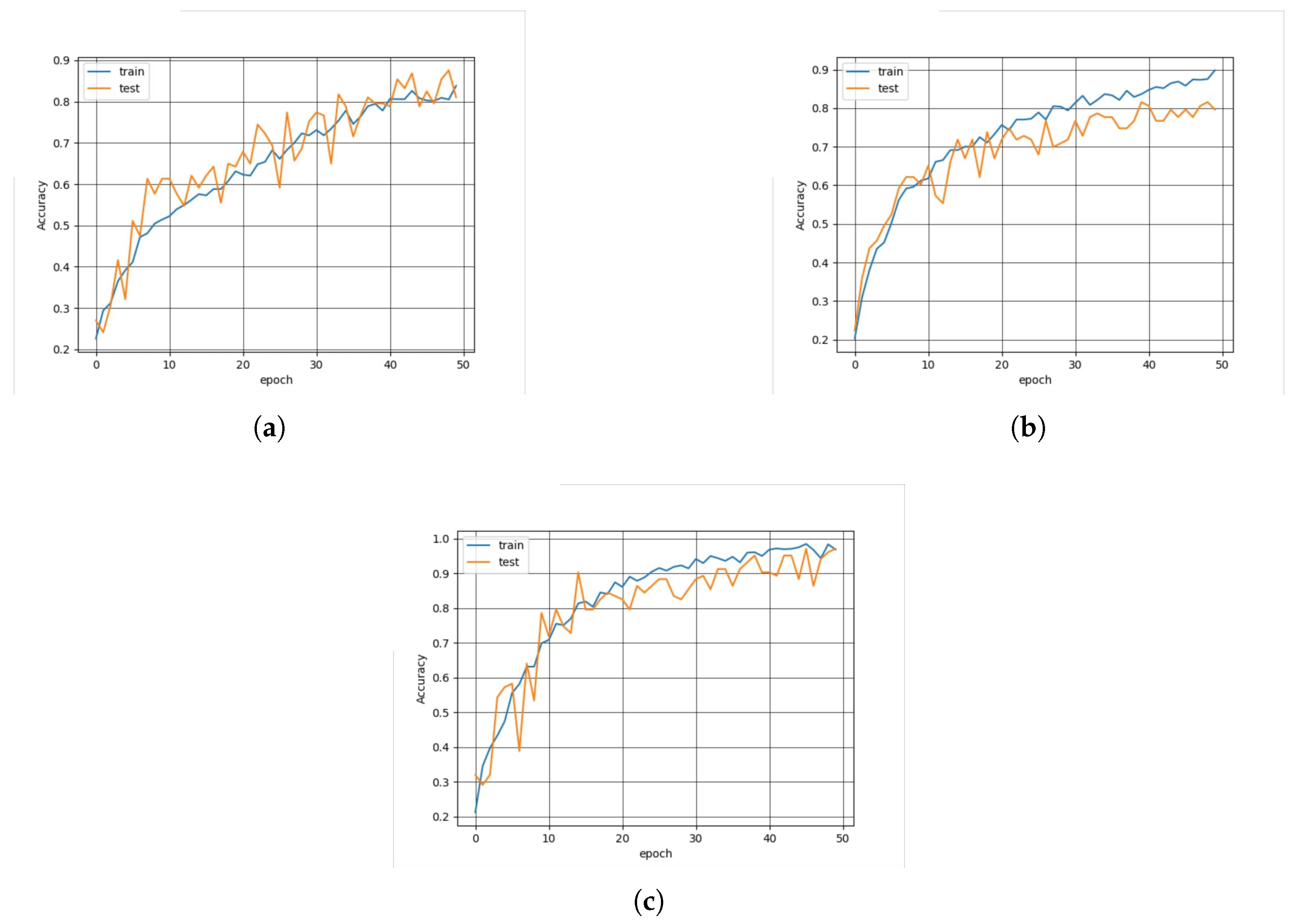

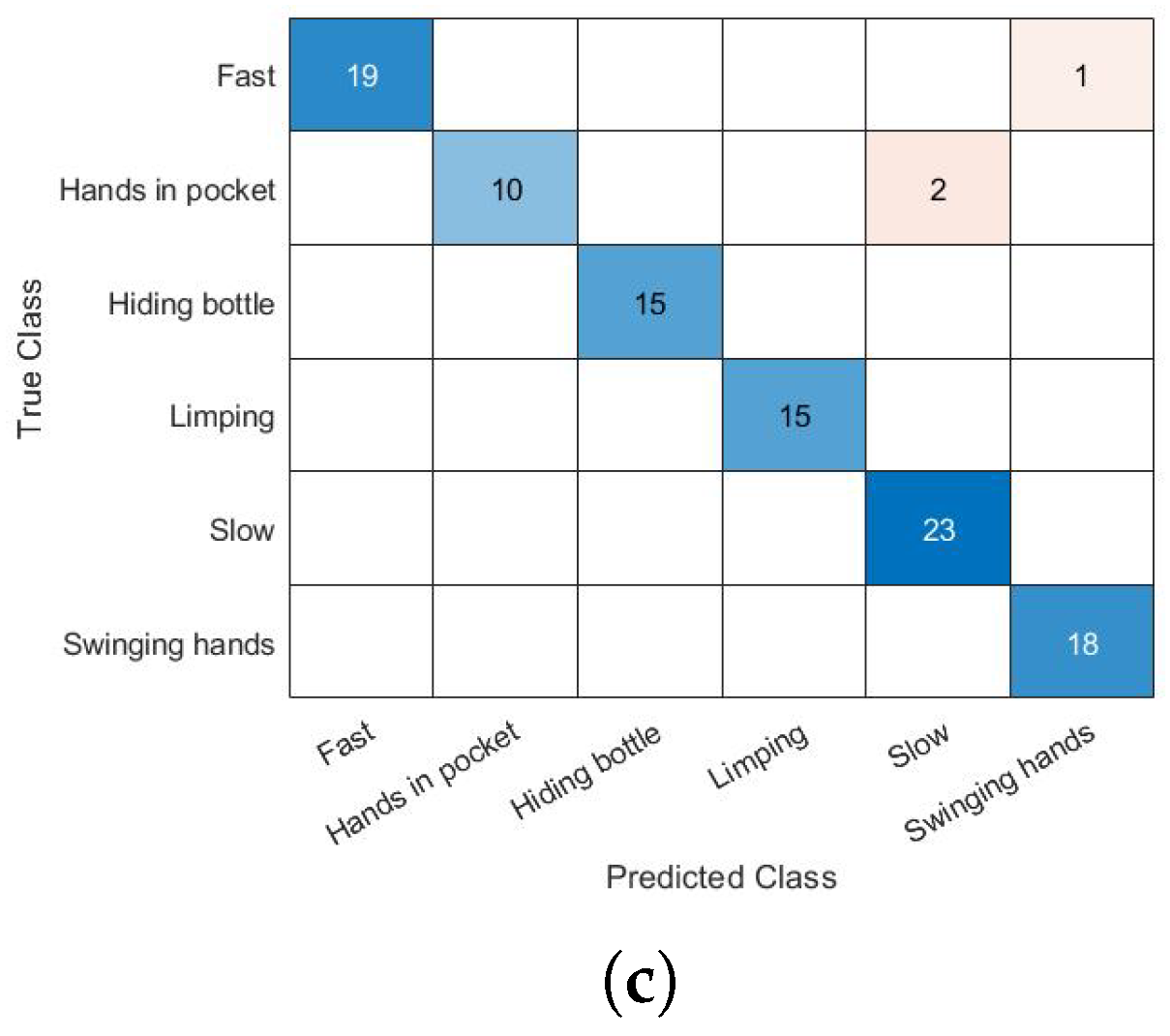

7. Experimental Results

- (1) Fast walk

- (2) Slow walk

- (3) Slow walk with hands in pockets

- (4) Slow walk with swinging hands

- (5) Walk while hiding a bottle

- (6) Walk with a limp

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gupta, A.; Gupta, K.; Gupta, K.; Gupta, K. A Survey on Human Activity Recognition and Classification. In Proceedings of the 2020 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 28–30 July 2020; pp. 915–919.

- Gurbuz, S.Z.; Rahman, M.M.; Kurtoglu, E.; Macks, T.; Fioranelli, F. Cross-Frequency Training with Adversarial Learning for Radar Micro-Doppler Signature Classification. Proc. SPIE 2020, 11408, 58–68.

- Dorp, P.V.; Groen, F.C.A. Human Walking Estimation with Radar. IEE Proc. Radar Sonar Navig. 2003, 150, 356–365.

- Nanzer, J.A. A Review of Microwave Wireless Techniques for Human Presence Detection and Classification. IEEE Trans. Microw. Theory Tech. 2017, 65, 1780–1794.

- Setlur, P.; Amin, M.G.; Ahmad, F. Urban Target Classifications Using Time-Frequency Micro-Doppler Signatures. In Proceedings of the 2007 9th International Symposium on Signal Processing and Its Applications, Sharjah, United Arab Emirates, 12–15 February 2007; pp. 1–4.

- Anderson, M.G.; Rogers, R.L. Micro-Doppler Analysis of Multiple Frequency Continuous Wave Radar Signatures. In Proceedings of the Radar Sensor Technology XI, Orlando, FL, USA, 9–13 April 2007; Volume 6547, pp. 92–101.

- Otero, M. Application of a Continuous Wave Radar for Human Gait Recognition. In Proceedings of the Signal Processing, Sensor Fusion, and Target Recognition XIV, Orlando, FL, USA, 28 March–1 April 2005; Volume 5809, pp. 538–549.

- Bilik, I.; Tabrikian, J.; Cohen, A. GMM-Based Target Classification for Ground Surveillance Doppler Radar. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 267–278.

- Smith, G.E.; Woodbridge, K.; Baker, C.J. Naive Bayesian Radar Micro-Doppler Recognition. In Proceedings of the 2008 International Conference on Radar, Adelaide, Australia, 2–5 September 2008; pp. 111–116.

- Kim, Y.; Ling, H. Human Activity Classification Based on Micro-Doppler Signatures Using a Support Vector Machine. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1328–1337.

- Yao, X.; Shi, X.; Zhou, F. Human Activity Classification Based on Complex-Value Convolutional Neural Network. IEEE Sens. J. 2020, 20, 7169–7180.

- Moulton, M.C.; Bischoff, M.L.; Benton, C.; Petkie, D.T. Micro-Doppler Radar Signatures of Human Activity. In Proceedings of the Millimetre Wave and Terahertz Sensors and Technology III, Toulouse, France, 20–23 September 2010; Volume 7837.

- Tekeli, B.; Gurbuz, S.Z.; Yuksel, M.; Gürbüz, A.C.; Guldogan, M.B. Classification of Human Micro-Doppler in a Radar Network. In Proceedings of the 2013 IEEE Radar Conference (RadarCon13), Ottawa, ON, Canada, 29 April–3 May 2013; pp. 1–6.

- Cornacchia, M.; Ozcan, K.; Zheng, Y.; Velipasalar, S. A Survey on Activity Detection and Classification Using Wearable Sensors. IEEE Sens. J. 2017, 17, 386–403.

- Tahmoush, D. Review of Micro-Doppler Signatures. IET Radar Sonar Navig. 2015, 9, 1140–1146.

- Du, R.; Fan, Y.; Wang, J. Pedestrian and Bicyclist Identification Through Micro-Doppler Signature with Different Approaching Aspect Angles. IEEE Sens. J. 2018, 18, 3827–3835.

- Tahmoush, D.; Silvious, J. Angle, Elevation, PRF, and Illumination in Radar Micro-Doppler for Security Applications. In Proceedings of the 2009 IEEE Antennas and Propagation Society International Symposium, North Charleston, SC, USA, 1–5 June 2009; pp. 1–4.

- Anderson, M.G. Design of Multiple Frequency Continuous Wave Radar Hardware and Micro-Doppler Based Detection and Classification Algorithms. Ph.D. Thesis, The University of Texas, Austin, TX, USA, 2008.

- Hassan, S.; Wang, X.; Ishtiaq, S. Human Gait Classification Based on Convolutional Neural Network using Interferometric Radar. In Proceedings of the 2021 International Conference on Control, Automation and Information Sciences (ICCAIS), Xi’an, China, 14–17 October 2021; pp. 450–456.

- Özcan, M.B.; Gürbüz, S.Z.; Persico, A.R.; Clemente, C.; Soraghan, J. Performance Analysis of Co-Located and Distributed MIMO Radar for Micro-Doppler Classification. In Proceedings of the 2016 European Radar Conference (EuRAD), London, UK, 5–7 October 2016; pp. 85–88.

- Smith, G.E.; Woodbridge, K.; Baker, C.J. Multistatic Micro-Doppler Signature of Personnel. In Proceedings of the 2008 IEEE Radar Conference, Rome, Italy, 26–30 May 2008.

- Fairchild, D.P.; Narayanan, R.M. Multistatic Micro-Doppler Radar for Determining Target Orientation and Activity Classification. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 512–521.

- Qiao, X.; Li, G.; Shan, T.; Tao, R. Human Activity Classification Based on Moving Orientation Determining Using Multistatic Micro-Doppler Radar Signals. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5104415.

- Nanzer, J.A. Millimeter-Wave Interferometric Angular Velocity Detection. IEEE Trans. Microw. Theory Tech. 2010, 58, 4128–4136.

- Ishtiaq, S.; Wang, X.; Hassan, S. Detection and Tracking of Multiple Targets Using Dual-Frequency Interferometric Radar. In Proceedings of the IET International Radar Conference (IET IRC 2020), Online, 4–6 November 2020; pp. 468–475.

- Wang, X.; Li, W.; Chen, V.C. Hand Gesture Recognition Using Radial and Transversal Dual Micro-Motion Features. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 5963–5973.

- Nanzer, J.A. Micro-Motion Signatures in Radar Angular Velocity Measurements. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–4.

- Boulic, R.; Thalmann, N.M.; Thalmann, D. A Global Human Walking Model with Real-Time Kinematic Personification. Vis. Comput. 1990, 6, 344–358.

- Ram, S.S.; Ling, H. Simulation of Human Micro-Doppler Using Computer Animation Data. In Proceedings of the IEEE Radar Conference, Rome, Italy, 26–30 May 2008; pp. 1–6.

- Kolekar, M.H.; Dash, P.D. Hidden Markov Model Based Human Activity Recognition Using Shape and Optical Flow Based Features. In Proceedings of the 2016 IEEE Region 10 Conference (TENCON), Singapore, 22–25 November 2016; pp. 393–397.

- Singh, V.K.; Nevatia, R. Human Action Recognition Using a Dynamic Bayesian Action Network with 2D Part Models. In Proceedings of the Seventh Indian Conference on Computer Vision, Graphics and Image Processing, Chennai, India, 22–25 November 2010; pp. 17–24.

- Milanova, M.; Al-Ali, S.; Manolova, A. Human Action Recognition Using Combined Contour-Based and Silhouette-Based Features and Employing KNN or SVM Classifier. Int. J. Comput. 2015, 9, 37–47.

- Smith, K.A.; Csech, C.; Murdoch, D.; Shaker, G. Gesture Recognition Using mm-Wave Sensor for Human-Car Interface. IEEE Sens. Lett. 2018, 2, 1–4.

- Li, X.; He, Y.; Jing, X. A Survey of Deep Learning-Based Human Activity Recognition in Radar. Remote Sens. 2019, 11, 1068.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups. IEEE Signal Process. Mag. 2012, 29, 82–97.

- Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D Convolutional Neural Networks for Human Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 221–231.

- Deng, L.; Yu, D. Deep Learning: Methods and Applications. Found. Trends Signal Process. 2014, 7, 197–387.

- Amin, M.G.; Erol, B. Understanding Deep Neural Networks Performance for Radar-Based Human Motion Recognition. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 1461–1465.

- Lin, Y.; Le, K.J.; Yang, S.; Fioranelli, F.; Romain, O.; Zhao, Z. Human Activity Classification with Radar: Optimization and Noise Robustness with Iterative Convolutional Neural Networks Followed with Random Forests. IEEE Sens. J. 2018, 18, 9669–9681.

- Kim, Y.; Moon, T. Human Detection and Activity Classification Based on Micro-Doppler Signatures Using Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 8–12.

- Yilmaz, E.; German, B. A Deep Learning Approach to an Airfoil Inverse Design Problem. In Proceedings of the 2018 Multidisciplinary Analysis and Optimization Conference, Atlanta, GA, USA, 25–29 June 2018.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Representations by Back-Propagating Errors. Nature 1986, 323, 533–536.

| Activity | Description |
|---|---|
| Walking | The action of walking in the forward direction with both upper and lower limbs moving. It includes: (i) normal walk, (ii) slow walk, (iii) walk on uneven terrain, and (iv) wander (random walk). |
| Running | The action of running swiftly in the forward direction with both upper and lower limbs moving. It includes: (i) normal run and (ii) jog. |
| Jumping | The action of springing free from the ground into the air using the lower limbs. It includes: (i) simple jump, (ii) high jump, and (iii) forward jump. |
| Punching | The action of striking or hitting with the fists. It includes: (i) simple punch and (ii) boxing. |
| Bending | The action of assuming an angular or curved posture, that is, bending over and picking something up with one hand. |
| Climbing | The action of ascending and then descending, that is, climbing up a ladder and then moving back down. |
| Sitting/standing | The action of changing posture between sitting and standing positions. |

| Motion Type | Convolution Layers | Filters | Pooling Layers | Pooling Type | Neurons | Output of DCNN |
|---|---|---|---|---|---|---|
| Activity class | 04 | C1: 32 (5 × 5), C2: 64 (3 × 3), C3: 128 (3 × 3), C4: 512 (3 × 3) | 04 (P1, P2, P3, P4) | Max (2 × 2) | 18,432 | (i) Walking, (ii) Running, (iii) Jumping, (iv) Punching, (v) Bending, (vi) Climbing, (vii) Sitting/standing |
| Walking patterns | 03 | C1: 32 (5 × 5), C2: 64 (3 × 3), C3: 128 (3 × 3) | 03 (P1, P2, P3) | Max (2 × 2) | 25,088 | (i) Normal, (ii) Slow, (iii) Uneven terrain, (iv) Random |
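The "Neurons" column is consistent with the size of the flattened feature map that enters the fully connected layer. Assuming "same"-padded convolutions (spatial size unchanged) and non-overlapping 2 × 2 max pooling, that size is (H/2^P) × (W/2^P) × C_last, where P is the number of pooling layers and C_last the number of filters in the last convolutional layer. Input spectrograms of 96 × 96 and 112 × 112 reproduce the two table entries (6 × 6 × 512 = 18,432 and 14 × 14 × 128 = 25,088); these input sizes are back-calculated assumptions, not values stated in the paper, and the helper name is hypothetical.

```python
def flattened_size(input_hw, num_pools, last_filters, pool=2):
    """Features entering the fully connected layer.

    Assumes 'same'-padded convolutions, so only the non-overlapping
    pool x pool max-pooling layers shrink the spatial dimensions.
    """
    h, w = input_hw
    for _ in range(num_pools):
        h, w = h // pool, w // pool  # each pooling layer halves H and W
    return h * w * last_filters
```

The same arithmetic is a quick consistency check when choosing the DCNN parameters: the number of pooling layers and the input size jointly fix the width of the first fully connected layer.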

| Description | Configuration 1 | Configuration 2 | Configuration 3 |
|---|---|---|---|
| Classification accuracy | 92% | 94% | 98% |

| Description | Configuration 1 | Configuration 2 | Configuration 3 |
|---|---|---|---|
| Classification accuracy | 90% | 92% | 95% |

| Description | Configuration 1 | Configuration 2 | Configuration 3 |
|---|---|---|---|
| Classification accuracy | 83% | 80% | 90% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hassan, S.; Wang, X.; Ishtiaq, S.; Ullah, N.; Mohammad, A.; Noorwali, A. Human Activity Classification Based on Dual Micro-Motion Signatures Using Interferometric Radar. Remote Sens. 2023, 15, 1752. https://doi.org/10.3390/rs15071752

Hassan S, Wang X, Ishtiaq S, Ullah N, Mohammad A, Noorwali A. Human Activity Classification Based on Dual Micro-Motion Signatures Using Interferometric Radar. Remote Sensing. 2023; 15(7):1752. https://doi.org/10.3390/rs15071752

Chicago/Turabian Style: Hassan, Shahid, Xiangrong Wang, Saima Ishtiaq, Nasim Ullah, Alsharef Mohammad, and Abdulfattah Noorwali. 2023. "Human Activity Classification Based on Dual Micro-Motion Signatures Using Interferometric Radar" Remote Sensing 15, no. 7: 1752. https://doi.org/10.3390/rs15071752

APA Style: Hassan, S., Wang, X., Ishtiaq, S., Ullah, N., Mohammad, A., & Noorwali, A. (2023). Human Activity Classification Based on Dual Micro-Motion Signatures Using Interferometric Radar. Remote Sensing, 15(7), 1752. https://doi.org/10.3390/rs15071752