Machine Learning Models for Approximating Downward Short-Wave Radiation Flux over the Ocean from All-Sky Optical Imagery Based on DASIO Dataset

Abstract

1. Introduction

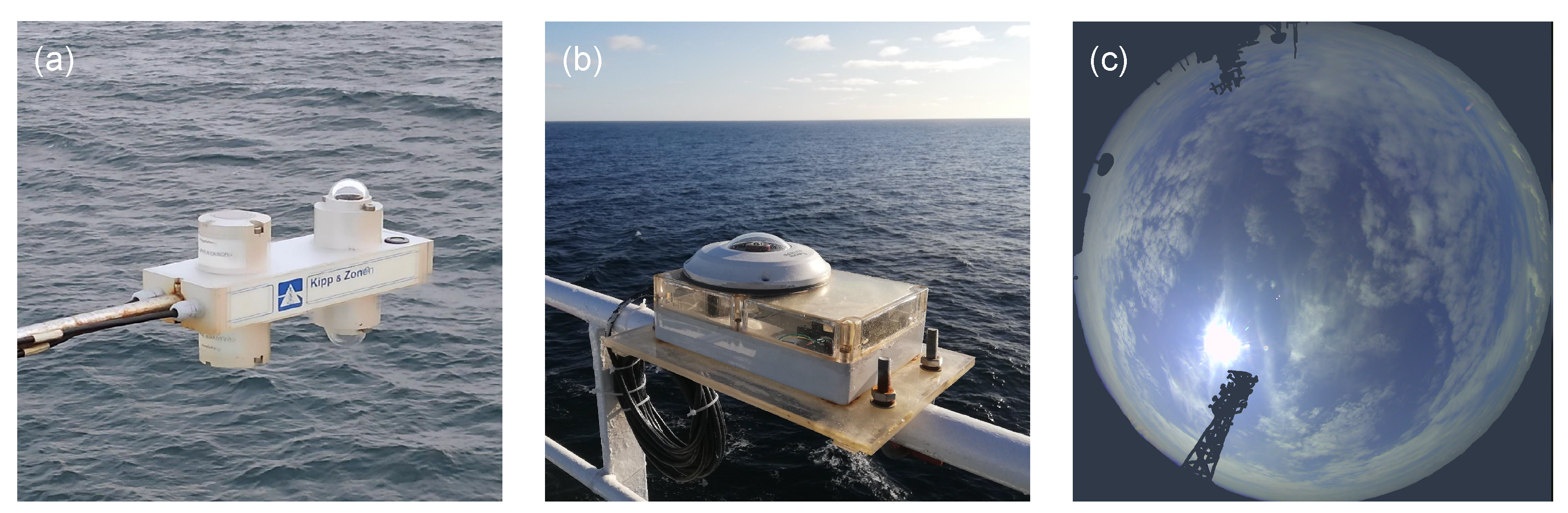

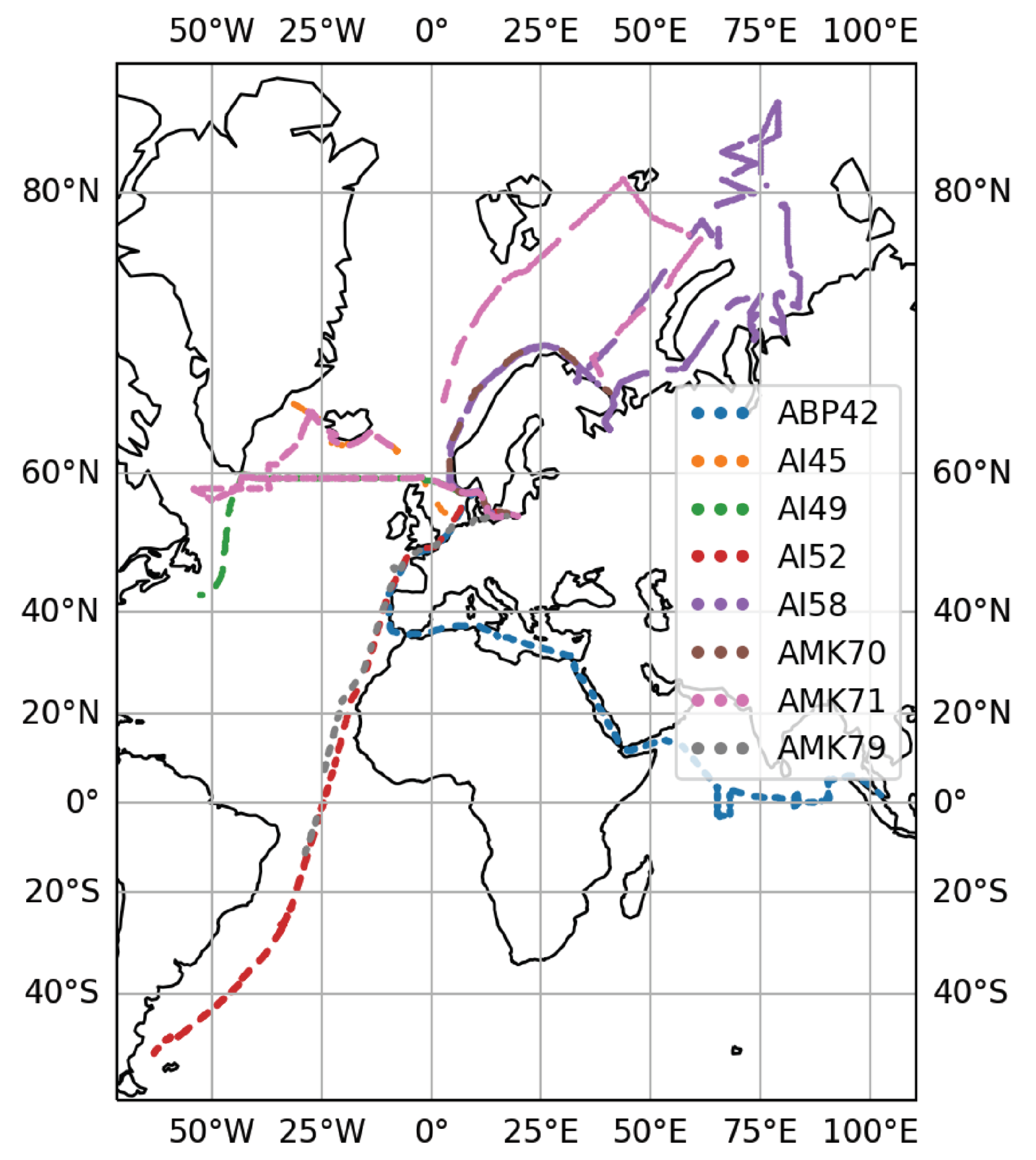

2. Data

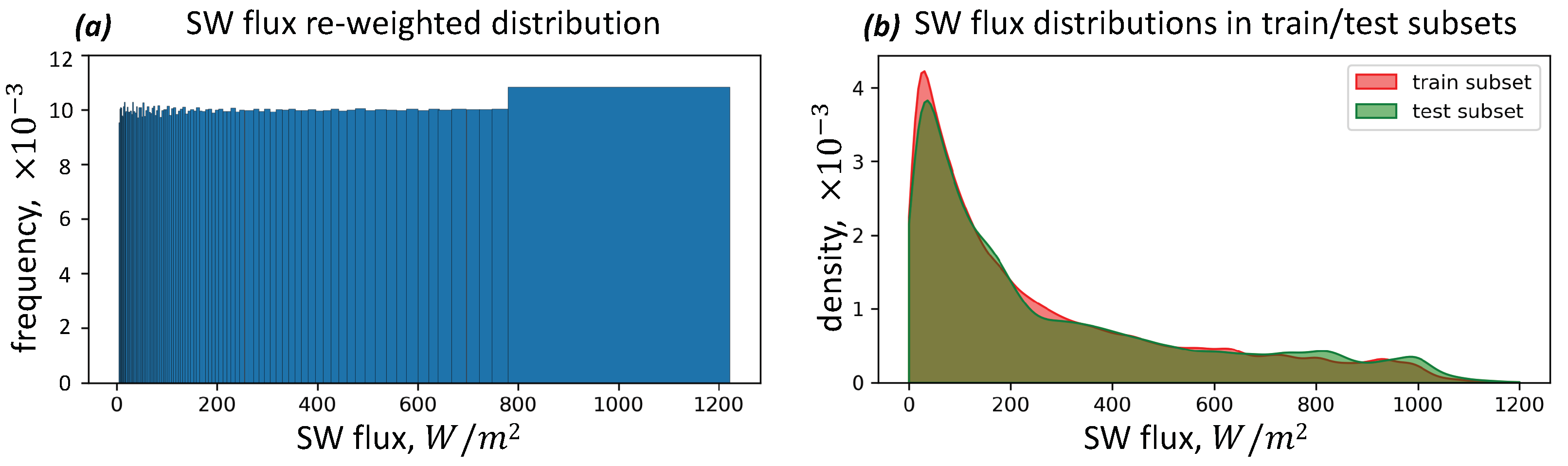

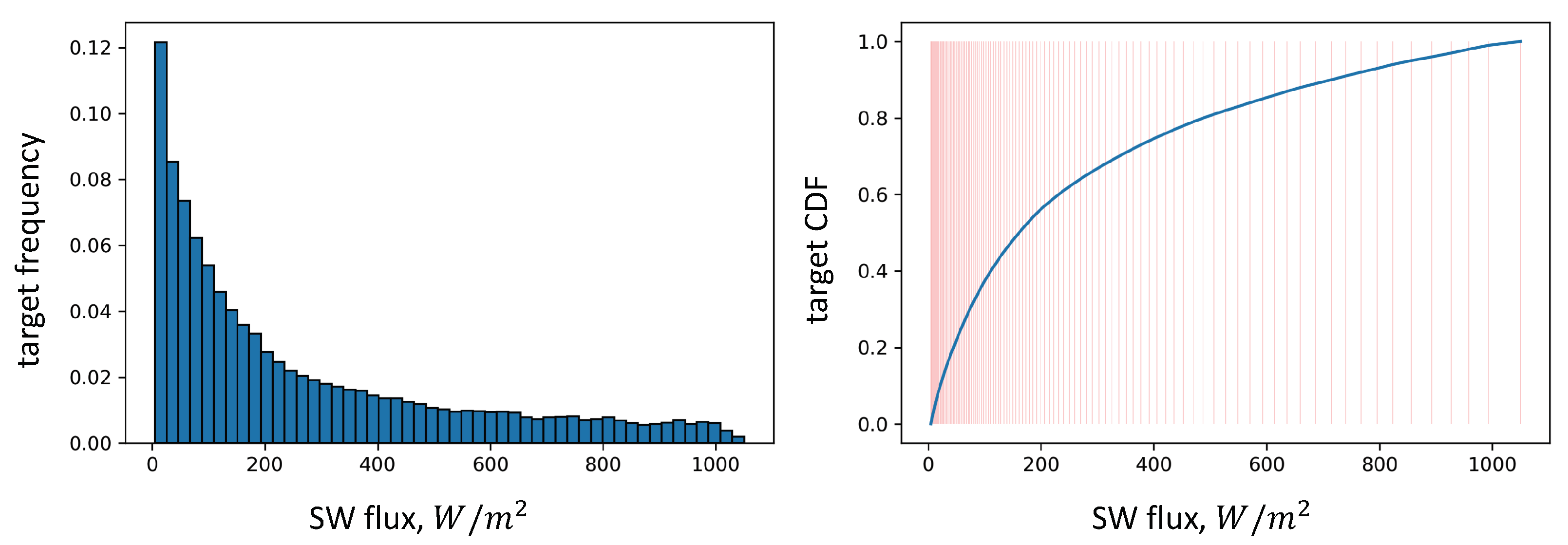

Inverse Target-Frequency (ITF) Re-Weighting of Training Subset
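The heading above names the technique but its details are not given here, so the following is only a minimal sketch of one plausible reading: each training example is weighted inversely to how often its target value occurs. The function name `itf_weights`, the histogram binning, the bin count `n_bins`, and the normalization to a mean weight of 1 are all assumptions for illustration, not the authors' stated procedure.

```python
import numpy as np


def itf_weights(targets, n_bins=20):
    """Weight each example by the inverse frequency of its target bin.

    Hypothetical helper: binning scheme and n_bins are illustrative choices.
    """
    y = np.asarray(targets, dtype=np.float64)
    counts, edges = np.histogram(y, bins=n_bins)
    # Interior edges map every value to a bin index in [0, n_bins - 1],
    # matching the half-open bins used by np.histogram.
    bin_idx = np.digitize(y, edges[1:-1])
    weights = 1.0 / counts[bin_idx]
    # Rescale so that the mean weight equals 1.
    return weights * (len(y) / weights.sum())


# Toy flux sample: 90 low values and 10 rare high values.
flux = np.array([10.0] * 90 + [500.0] * 10)
w = itf_weights(flux)
print(w[0], w[-1])  # rare high-flux examples get the larger weight
```

Weights of this kind could then be passed as `sample_weight` to the `fit` method of scikit-learn regressors such as `RandomForestRegressor`.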

3. Methods

3.1. Feature Engineering

- Maximum and minimum values;

- Sample mean;

- Sample variance;

- Sample skewness;

- Sample kurtosis;

- Sample estimates of the percentiles at 21 levels d.
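The statistics listed above can be sketched in a few lines of NumPy. This is an illustration only: the percentile levels (1, 5, 10, ..., 95, 99) are an assumed set chosen to give 21 values, the per-channel application is an assumption, and `channel_features` is a hypothetical helper name. Skewness and excess kurtosis are computed here from biased central moments, which may differ from the estimators used in the study.

```python
import numpy as np

# Assumed (hypothetical) percentile levels: 1, 5, 10, ..., 95, 99 -> 21 values.
LEVELS = np.concatenate(([1.0], np.arange(5.0, 100.0, 5.0), [99.0]))


def channel_features(values):
    """Return 27 summary statistics for one flattened image channel."""
    x = np.asarray(values, dtype=np.float64).ravel()
    mean = x.mean()
    var = x.var(ddof=1)  # unbiased sample variance
    centered = x - mean
    m2 = np.mean(centered ** 2)  # biased central moments
    m3 = np.mean(centered ** 3)
    m4 = np.mean(centered ** 4)
    skew = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    kurt = m4 / m2 ** 2 - 3.0 if m2 > 0 else 0.0  # excess kurtosis
    percentiles = np.percentile(x, LEVELS)
    head = [x.max(), x.min(), mean, var, skew, kurt]
    return np.concatenate((head, percentiles))


# Synthetic 64 x 64 three-channel image standing in for an all-sky frame.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3))
features = np.concatenate([channel_features(image[..., c]) for c in range(3)])
print(features.shape)  # (81,)
```

Each channel contributes 6 moment-type statistics plus 21 percentiles, so three channels give an 81-element feature vector under these assumptions.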

3.2. Machine Learning Methods

3.2.1. Classic Models

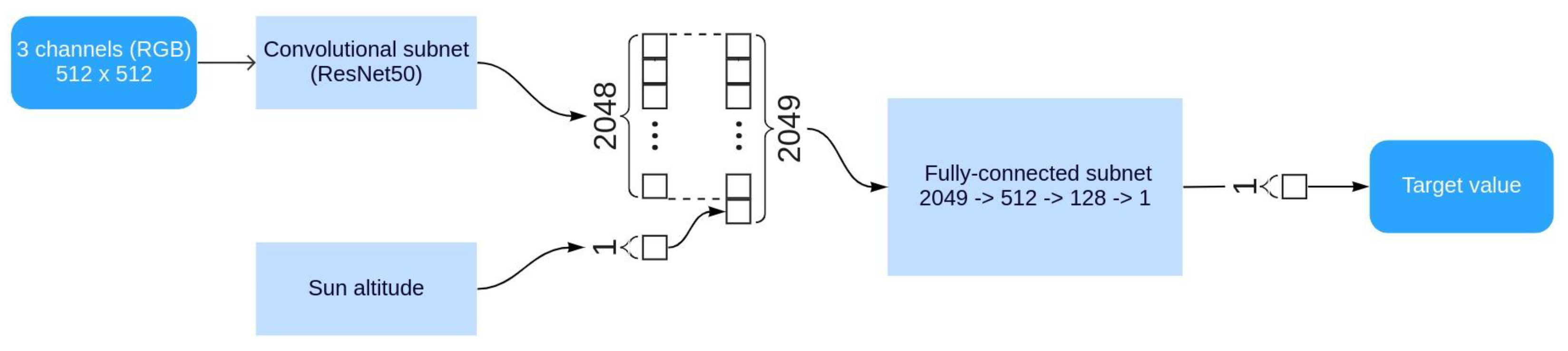

3.2.2. Convolutional Neural Network

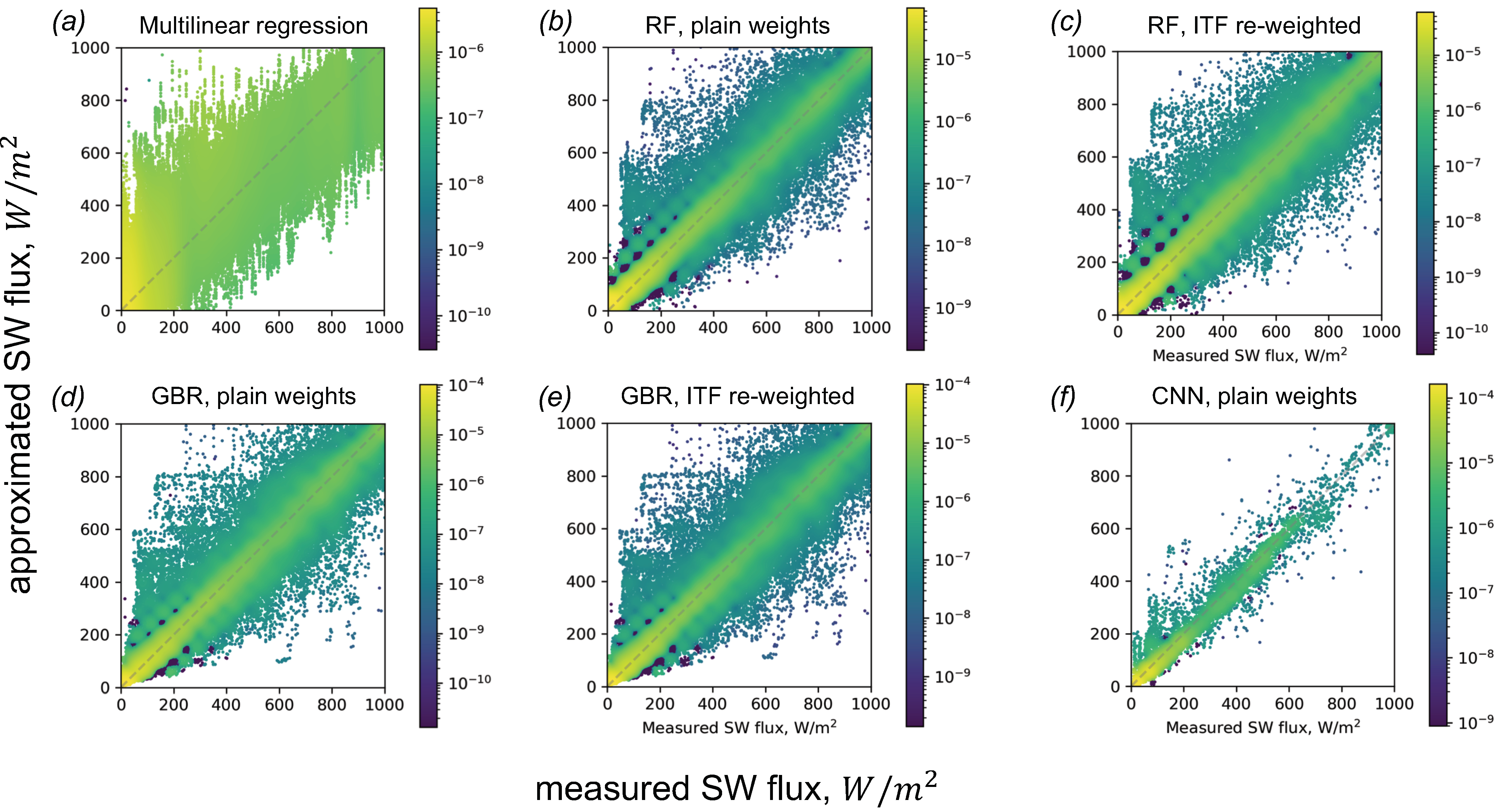

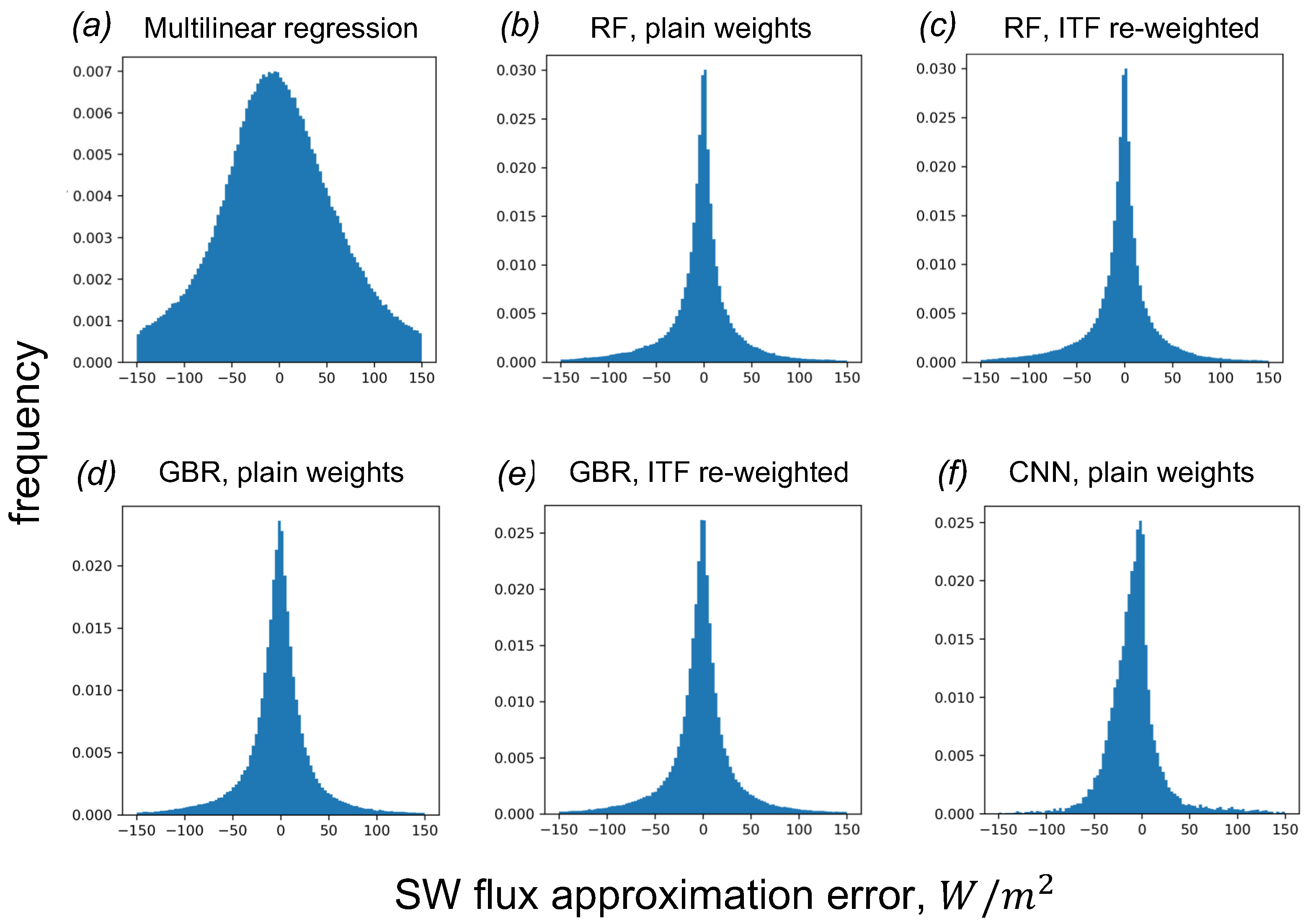

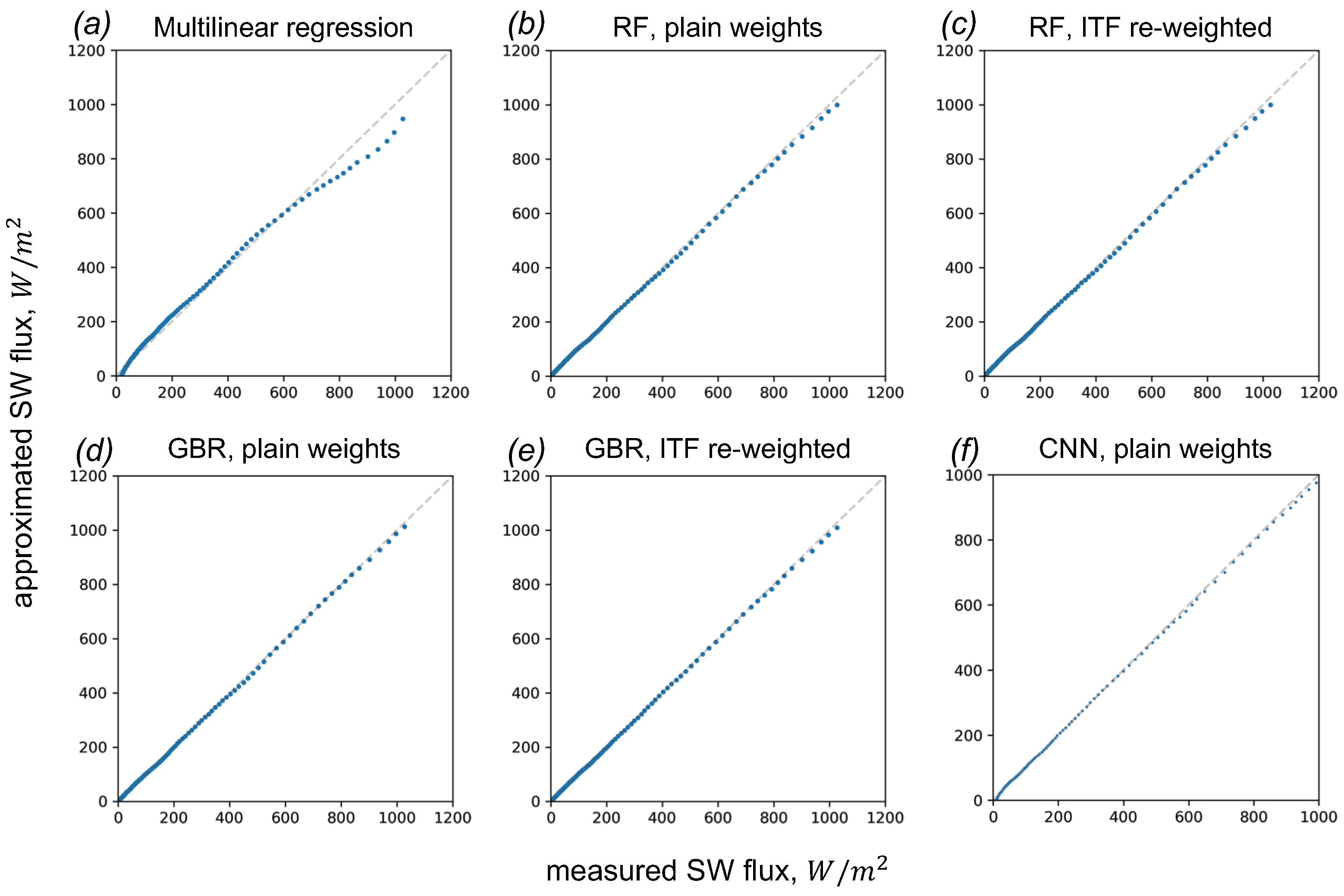

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural network |
| DL | Deep learning |
| ML | Machine learning |
| SW | Short wave |
| LW | Long wave |
| DASIO | Dataset of All-Sky Imagery over the Ocean |
| RF | Random Forests |
| LR | Linear regression |
| MLR | Multilinear regression |
| GBR | Gradient Boosting for Regression |

References

1. Trenberth, K.E.; Fasullo, J.T.; Kiehl, J. Earth’s Global Energy Budget. Bull. Am. Meteorol. Soc. 2009, 90, 311–324.

2. Stephens, G.L.; Li, J.; Wild, M.; Clayson, C.A.; Loeb, N.; Kato, S.; L’Ecuyer, T.; Stackhouse, P.W.; Lebsock, M.; Andrews, T. An update on Earth’s energy balance in light of the latest global observations. Nat. Geosci. 2012, 5, 691–696.

3. Wu, H.; Ying, W. Benchmarking Machine Learning Algorithms for Instantaneous Net Surface Shortwave Radiation Retrieval Using Remote Sensing Data. Remote Sens. 2019, 11, 2520.

4. Cess, R.D.; Nemesure, S.; Dutton, E.G.; Deluisi, J.J.; Potter, G.L.; Morcrette, J.J. The Impact of Clouds on the Shortwave Radiation Budget of the Surface-Atmosphere System: Interfacing Measurements and Models. J. Clim. 1993, 6, 308–316.

5. McFarlane, S.A.; Mather, J.H.; Ackerman, T.P.; Liu, Z. Effect of clouds on the calculated vertical distribution of shortwave absorption in the tropics. J. Geophys. Res. Atmos. 2008, 113, D18203.

6. Lubin, D.; Vogelmann, A.M. The influence of mixed-phase clouds on surface shortwave irradiance during the Arctic spring. J. Geophys. Res. Atmos. 2011, 116, D00T05.

7. Chou, M.D.; Lee, K.T.; Tsay, S.C.; Fu, Q. Parameterization for Cloud long-wave Scattering for Use in Atmospheric Models. J. Clim. 1999, 12, 159–169.

8. Stephens, G.L. Radiation Profiles in Extended Water Clouds. I: Theory. J. Atmos. Sci. 1978, 35, 2111–2122.

9. Aleksandrova, M.; Gulev, S.; Sinitsyn, A. An improvement of parametrization of short-wave radiation at the sea surface on the basis of direct measurements in the Atlantic. Russ. Meteorol. Hydrol. 2007, 32, 245–251.

10. Dobson, F.W.; Smith, S.D. Bulk models of solar radiation at sea. Q. J. R. Meteorol. Soc. 1988, 114, 165–182.

11. Ebtehaj, I.; Soltani, K.; Amiri, A.; Faramarzi, M.; Madramootoo, C.A.; Bonakdari, H. Prognostication of Shortwave Radiation Using an Improved No-Tuned Fast Machine Learning. Sustainability 2021, 13, 8009.

12. Voyant, C.; Notton, G.; Kalogirou, S.; Nivet, M.L.; Paoli, C.; Motte, F.; Fouilloy, A. Machine learning methods for solar radiation forecasting: A review. Renew. Energy 2017, 105, 569–582.

13. Krinitskiy, M.; Aleksandrova, M.; Verezemskaya, P.; Gulev, S.; Sinitsyn, A.; Kovaleva, N.; Gavrikov, A. On the generalization ability of data-driven models in the problem of total cloud cover retrieval. Remote Sens. 2021, 13, 326.

14. Krinitskiy, M.A.; Sinitsyn, A.V. Adaptive algorithm for cloud cover estimation from all-sky images over the sea. Oceanology 2016, 56, 315–319.

15. Liu, S.; Duan, L.; Zhang, Z.; Cao, X.; Durrani, T.S. Multimodal Ground-Based Remote Sensing Cloud Classification via Learning Heterogeneous Deep Features. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7790–7800.

16. Liu, S.; Li, M.; Zhang, Z.; Xiao, B.; Cao, X. Multimodal Ground-Based Cloud Classification Using Joint Fusion Convolutional Neural Network. Remote Sens. 2018, 10, 822.

17. Taravat, A.; Frate, F.D.; Cornaro, C.; Vergari, S. Neural Networks and Support Vector Machine Algorithms for Automatic Cloud Classification of Whole-Sky Ground-Based Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 666–670.

18. Chen, J.; He, T.; Jiang, B.; Liang, S. Estimation of all-sky all-wave daily net radiation at high latitudes from MODIS data. Remote Sens. Environ. 2020, 245, 111842.

19. Lu, N.; Liu, R.; Liu, J.; Liang, S. An algorithm for estimating downward shortwave radiation from GMS 5 visible imagery and its evaluation over China. J. Geophys. Res. Atmos. 2010, 115, 1–15.

20. Pfister, G.; McKenzie, R.; Liley, J.; Thomas, A.; Forgan, B.; Long, C.N. Cloud coverage based on all-sky imaging and its impact on surface solar irradiance. J. Appl. Meteorol. Climatol. 2003, 42, 1421–1434.

21. Tzoumanikas, P.; Nikitidou, E.; Bais, A.; Kazantzidis, A. The effect of clouds on surface solar irradiance, based on data from an all-sky imaging system. Renew. Energy 2016, 95, 314–322.

22. Chen, L.; Yan, G.; Wang, T.; Ren, H.; Calbó, J.; Zhao, J.; McKenzie, R. Estimation of surface shortwave radiation components under all sky conditions: Modeling and sensitivity analysis. Remote Sens. Environ. 2012, 123, 457–469.

23. Kipp & Zonen. CNR 1 Net Radiometer Instruction Manual. Available online: https://www.kippzonen.com/Download/85/Manual-CNR-1-Net-Radiometer-English (accessed on 27 February 2023).

24. Michel, D.; Philipona, R.; Ruckstuhl, C.; Vogt, R.; Vuilleumier, L. Performance and Uncertainty of CNR1 Net Radiometers during a One-Year Field Comparison. J. Atmos. Ocean. Technol. 2008, 25, 442–451.

25. Long, C.; DeLuisi, J. Development of an automated hemispheric sky imager for cloud fraction retrievals. In 10th Symposium on Meteorological Observations and Instrumentation: Proceedings of the 78th AMS Annual Meeting, Phoenix, AZ, USA, 11–16 January 1998; American Meteorological Society (AMS): Boston, MA, USA, 1998; pp. 171–174.

26. Wang, Y.; Liu, D.; Xie, W.; Yang, M.; Gao, Z.; Ling, X.; Huang, Y.; Li, C.; Liu, Y.; Xia, Y. Day and Night Clouds Detection Using a Thermal-Infrared All-Sky-View Camera. Remote Sens. 2021, 13, 1852.

27. Sunil, S.; Padmakumari, B.; Pandithurai, G.; Patil, R.D.; Naidu, C.V. Diurnal (24 h) cycle and seasonal variability of cloud fraction retrieved from a Whole Sky Imager over a complex terrain in the Western Ghats and comparison with MODIS. Atmos. Res. 2021, 248, 105180.

28. Kim, B.Y.; Cha, J.W.; Chang, K.H. Twenty-four-hour cloud cover calculation using a ground-based imager with machine learning. Atmos. Meas. Tech. 2021, 14, 6695–6710.

29. Azhar, M.A.D.M.; Hamid, N.S.A.; Kamil, W.M.A.W.M.; Mohamad, N.S. Daytime Cloud Detection Method Using the All-Sky Imager over PERMATApintar Observatory. Universe 2021, 7, 41.

30. Xie, W.; Liu, D.; Yang, M.; Chen, S.; Wang, B.; Wang, Z.; Xia, Y.; Liu, Y.; Wang, Y.; Zhang, C. SegCloud: A novel cloud image segmentation model using a deep convolutional neural network for ground-based all-sky-view camera observation. Atmos. Meas. Tech. 2020, 13, 1953–1961.

31. Kim, B.Y.; Cha, J.W. Cloud Observation and Cloud Cover Calculation at Nighttime Using the Automatic Cloud Observation System (ACOS) Package. Remote Sens. 2020, 12, 2314.

32. Alonso-Montesinos, J. Real-Time Automatic Cloud Detection Using a Low-Cost Sky Camera. Remote Sens. 2020, 12, 1382.

33. Shi, C.; Zhou, Y.; Qiu, B.; He, J.; Ding, M.; Wei, S. Diurnal and nocturnal cloud segmentation of all-sky imager (ASI) images using enhancement fully convolutional networks. Atmos. Meas. Tech. 2019, 12, 4713–4724.

34. Lothon, M.; Barnéoud, P.; Gabella, O.; Lohou, F.; Derrien, S.; Rondi, S.; Chiriaco, M.; Bastin, S.; Dupont, J.C.; Haeffelin, M.; et al. ELIFAN, an algorithm for the estimation of cloud cover from sky imagers. Atmos. Meas. Tech. 2019, 12, 5519–5534.

35. Liu, S.; Li, M.; Zhang, Z.; Xiao, B.; Durrani, T.S. Multi-Evidence and Multi-Modal Fusion Network for Ground-Based Cloud Recognition. Remote Sens. 2020, 12, 464.

36. Liu, S.; Duan, L.; Zhang, Z.; Cao, X. Hierarchical Multimodal Fusion for Ground-Based Cloud Classification in Weather Station Networks. IEEE Access 2019, 7, 85688–85695.

37. Xiao, Y.; Cao, Z.; Zhuo, W.; Ye, L.; Zhu, L. mCLOUD: A Multiview Visual Feature Extraction Mechanism for Ground-Based Cloud Image Categorization. J. Atmos. Ocean. Technol. 2015, 33, 789–801.

38. Heinle, A.; Macke, A.; Srivastav, A. Automatic cloud classification of whole sky images. Atmos. Meas. Tech. 2010, 3, 557–567.

39. Calbó, J.; Sabburg, J. Feature Extraction from Whole-Sky Ground-Based Images for Cloud-Type Recognition. J. Atmos. Ocean. Technol. 2008, 25, 3–14.

40. Vivotek FE8171V Network Camera User’s Manual; Vivotek Inc.: New Taipei City, Taiwan, 2015; Available online: http://download.vivotek.com/downloadfile/downloads/usersmanuals/fe8171vmanual_en.pdf (accessed on 27 February 2023).

41. Vivotek FE8171V Network Camera Data Sheet; Vivotek Inc.: New Taipei City, Taiwan, 2015; Available online: http://download.vivotek.com/downloadfile/downloads/datasheets/fe8171vdatasheet_en.pdf (accessed on 27 February 2023).

42. He, H.; Garcia, E.A. Learning from Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

43. Branco, P.; Torgo, L.; Ribeiro, R. A Survey of Predictive Modelling under Imbalanced Distributions. arXiv 2015, arXiv:1505.01658.

44. Ibraheem, N.A.; Hasan, M.M.; Khan, R.Z.; Mishra, P.K. Understanding color models: A review. ARPN J. Sci. Technol. 2012, 2, 265–275.

45. Krinitskiy, M. Cloud cover estimation optical package: New facility, algorithms and techniques. AIP Conf. Proc. 2017, 1810, 080009.

46. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

47. Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. Adv. Neural Inf. Process. Syst. 2018, 31, 1–11.

48. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 1–9.

49. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

50. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

51. O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

52. Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A survey on deep transfer learning. In Artificial Neural Networks and Machine Learning—ICANN 2018: Proceedings of the 27th International Conference on Artificial Neural Networks, Rhodes, Greece, 4–7 October 2018; Springer: Cham, Switzerland, 2018; pp. 270–279.

53. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

54. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

55. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

56. Van Rossum, G.; Drake, F.L. Python 3 Reference Manual; CreateSpace: Scotts Valley, CA, USA, 2009.

57. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 8026–8037.

58. Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 25, 120–123.

59. Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-generation Hyperparameter Optimization Framework. arXiv 2019, arXiv:1907.10902.

| Feature | Value, Property, Description |
|---|---|
| Lens | Board lens, Fixed, f = 1.27 mm, F2.8 |
| Field of View | |
| Shutter Time | 1/5 s to 1/32,000 s |
| Image properties | 1920 × 1920 JPEG, 96 DPI, 24 bit color depth |

| Indicator | Value |
|---|---|
| Train subset size | 1,041,734 |
| Test subset size | 350,859 |
| Total size of the dataset | 1,392,593 |
| SW flux mean | 271.0 W/m² |
| SW flux std | 273.0 W/m² |
| SW flux minimum value | 5.0 W/m² |
| SW flux 25% percentile | 59.0 W/m² |
| SW flux 50% percentile | 162.4 W/m² |
| SW flux 75% percentile | 411.0 W/m² |
| SW flux maximum value | 1458.7 W/m² |

| Mission Name | Departure | Destination | Route | No. of Examples (Train/Test Subset) |
|---|---|---|---|---|
| AI45 | 17 September 2014; Reykjavik, Iceland | 25 September 2014; Rotterdam, The Netherlands | Northern Atlantic | 10,050 / 2757 |
| AI49 | 12 June 2015; Gdansk, Poland | 2 July 2015; Halifax, NS, Canada | Northern Atlantic | 49,124 / 16,457 |
| AI-52 | 30 September 2016; Gdansk, Poland | 3 November 2016; Ushuaia, Argentina | Atlantic ocean | 158,908 / 57,622 |
| ABP-42 | 21 January 2017; Singapore | 25 March 2017; Kaliningrad, Russia | Indian ocean, Red sea, Mediterranean sea, Atlantic ocean | 178,354 / 58,025 |
| AMK-70 | 5 October 2017; Arkhangelsk, Russia | 13 October 2017; Kaliningrad, Russia | Northern Atlantic, Arctic ocean | 20,384 / 7322 |
| AMK-71 | 24 June 2018; Kaliningrad, Russia | 13 August 2018; Arkhangelsk, Russia | Northern Atlantic, Arctic ocean | 220,782 / 73,228 |
| AMK-79 | 2 December 2019; Kaliningrad, Russia | 5 January 2020; Montevideo, Uruguay | Atlantic ocean, Arctic ocean | 51,921 / 15,876 |
| AI-58 | 26 July 2021; Kaliningrad, Russia | 6 September 2021; Kaliningrad, Russia | Northern Atlantic, Arctic ocean | 352,211 / 119,572 |

| Model/Study | RMSE, W/m² |
|---|---|
| Multilinear Regression (baseline) | |
| Random Forests, plain weights | |
| Random Forests, ITF re-weighted | |
| Gradient Boosting, plain weights | |
| Gradient Boosting, ITF re-weighted | |
| CNN | |
| Dobson–Smith parameterization [10] | 78.2 (38–116) |
| LVOAMKI parameterization [9] | 61.9 (26–115) |
| ML algorithms on remote sensing data (MODIS) [3] | 51.73–54.04 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Krinitskiy, M.; Koshkina, V.; Borisov, M.; Anikin, N.; Gulev, S.; Artemeva, M. Machine Learning Models for Approximating Downward Short-Wave Radiation Flux over the Ocean from All-Sky Optical Imagery Based on DASIO Dataset. Remote Sens. 2023, 15, 1720. https://doi.org/10.3390/rs15071720
