1. Introduction

In contrast to multispectral sensors, hyperspectral sensors enable hundreds of narrow and contiguous bands to be obtained in order to characterize each pixel in a real scene. They have attracted great attention from researchers in signal and image processing, as hyperspectral images (HSIs) contain valuable spatial and spectral information. Over the past few decades, hyperspectral images have been applied in various areas [1], such as data fusion, unmixing, classification, and target detection [2,3,4,5,6,7].

Anomaly detection (AD) aims to detect points of interest, such as natural materials, man-made targets, and other interferers, and to label each pixel as a target or background. AD is one of the most important research fields in HSI processing [8]. Specifically, it is assumed that the spectral signature of an anomaly differs from those of its surrounding neighbors, which makes it feasible to distinguish anomalies from the background. AD is, in essence, an unsupervised classification problem without prior knowledge of the anomaly or background, which makes detection very challenging.

To date, a large number of AD methods have been proposed for HSIs. They fall into three main categories: deep-learning-based, statistics-based, and geometric-modeling-based methods. Deep-learning-based AD algorithms usually use deep neural networks to mine deep spectral features in HSIs. Since no prior information can be exploited in AD, many researchers train the deep model in an unsupervised way for AD tasks [9,10,11]. However, such deep models still take a long time to train on off-the-shelf HSIs while exhibiting limited generalization ability.

Reed–Xiaoli (RX) [12], a benchmark statistics-based method, assumes that the background follows a Gaussian distribution and accomplishes AD via the generalized likelihood ratio test (GLRT). Specifically, the Mahalanobis distance between the pixel being tested and the surrounding background is calculated to determine whether the pixel is an anomaly. Many RX-based methods have been developed [13,14,15,16,17,18]. For example, global RX (GRX) [13] and local RX (LRX) [14] are two typical versions of this method, which estimate the background statistics from the entire image and from the surrounding neighbors, respectively. These methods rely on the assumption that the background obeys a single distribution, which is difficult to satisfy in real hyperspectral scenes. Hence, the kernel-RX algorithm [15,16] was developed, which employs a kernel function to map the data into a higher-dimensional feature space in order to characterize non-Gaussian distributions.

Geometric-modeling-based methods [19,20,21,22,23,24,25,26,27,28] are another category of AD methods. Representation-based methods [19,20,21] have been successfully applied to AD because they do not need a specific distribution assumption, but they fail to capitalize on the high spectral correlation of HSIs. Thus, robust principal component analysis (RPCA) was proposed, which assumes that the background is represented by a single subspace and aims to recover a low-rank background and separate sparse anomalies from an observed HSI [22,23]. However, since most pixels are mixed pixels, the low-rank representation (LRR)-based model instead assumes that the data lie in multiple subspaces, which has motivated further methods for HSI AD [24,25]. Constructing an accurate background dictionary for LRR is still a challenging task. Linear mixing model (LMM)-based methods [29] have attracted considerable attention in the AD field due to their explicit physical descriptions obtained through background modeling and their ability to enhance the spatial structure [26,27,28]. Here, the background mixed pixels can be linearly represented by pure material signatures, which is normally accomplished by non-negative matrix factorization (NMF) [30]; the data can then be approximately written as the product of two non-negative matrices: an endmember matrix and an abundance matrix. Initially, Qu et al. [26] applied spectral unmixing to the original HSI data and used the obtained abundance maps as the input for LRR. More recently, to enhance the spatial smoothness of the HSI, the authors of [28] proposed an enhanced total variation (ETV) model with an endmember background dictionary (EBD) by applying ETV to the row vectors of the representation coefficient matrix. Both methods achieved promising performance with respect to background modeling using the LMM. However, they are matrix-based methods that reshape the 3D HSI into a 2D matrix and therefore cannot avoid destroying the spatial or spectral structure of the HSI.
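To make the RX baseline described above concrete, the following is a minimal sketch of a global RX detector: every pixel is scored by its squared Mahalanobis distance to the image-wide mean. The function name `global_rx`, the toy data, and the use of a pseudo-inverse for the covariance are assumptions of this sketch and are not taken from [12] or [13].

```python
import numpy as np

def global_rx(hsi):
    """Global RX scores for an (H, W, D) cube: squared Mahalanobis
    distance of each pixel spectrum to the image-wide mean."""
    h, w, d = hsi.shape
    pixels = hsi.reshape(-1, d).astype(float)
    centered = pixels - pixels.mean(axis=0)
    cov = centered.T @ centered / (pixels.shape[0] - 1)
    # Pseudo-inverse guards against a singular covariance (sketch assumption).
    cov_inv = np.linalg.pinv(cov)
    scores = np.einsum("ij,jk,ik->i", centered, cov_inv, centered)
    return scores.reshape(h, w)

# Toy scene: Gaussian background with one implanted anomalous pixel.
rng = np.random.default_rng(0)
cube = rng.normal(size=(20, 20, 8))
cube[5, 7] += 10.0
rx_map = global_rx(cube)
```

A local variant would estimate the mean and covariance from a sliding window around each pixel instead of from the whole image.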

Since an HSI is essentially a cube, the aforementioned matrix-based background modeling methods fail to explore the intrinsic multidimensional structure of HSIs [31]. In comparison, HSI processing based on tensor decomposition can simultaneously preserve the spatial and spectral structural information [32,33]. Recent developments in tensor-based AD methods have heightened the need for inner-structure preservation [34] and the exploration of tensor physical characteristics [35,36,37]. The spectral signatures of the background pixels in the homogeneous regions have a high correlation, resulting in the background having a strong spatial linear correlation and therefore a low-rank characteristic. Conventional low-rank tensor decomposition [38] includes prior-based tensor approximation, canonical polyadic (CP) decomposition, and Tucker decomposition. Recently, several new tensor decomposition models [39,40,41] were proposed to capture the low-rank structural information in a tensor-based manner. Li et al. [35] proposed a prior-based tensor approximation (PTA) method for hyperspectral AD, assuming low rankness of the spectra and piecewise smoothness in the spatial domain. Note that PTA actually operates on matrices and not tensors, as the hyperspectral cube is unfolded into 2D structures. Thus, this approach does not preserve the inner structure of the data. Song et al. [36] proposed an AD algorithm based on endmember extraction and LRR, but the physical meaning of the abundance maps underlying the LMM was not exploited.

In this paper, motivated by the fact that abundance maps possess more distinctive features than raw data, contributing to an accurate separation of anomalies from the background, we propose an abundance tensor regularization procedure with low rankness and smoothness based on sparse unmixing (ATLSS) for hyperspectral AD. With the proper modeling of the physical conditions, an observed third-order tensor HSI can be decomposed into a background tensor, an anomaly tensor, and Gaussian noise. Based on the LMM, the background tensor is approximated as a mode-3 product of an abundance tensor and an endmember matrix to enhance the spatial structure information for the existing mixed pixels. Peng [42] also demonstrated that abundance maps inherit the spatial structure of the original HSI, and it is rational to characterize this property on abundance maps. The spectral signatures of the background pixels in the homogeneous regions have a high correlation, which yields a spatial linear correlation and, therefore, a low-rank property. In [31,43], the authors imposed low rankness on the abundance tensor to effectively capture the HSI’s low-dimensional structure. In our paper, the abundance tensor is characterized by tensor regularization with low rankness through CP decomposition. Moreover, each pixel contains limited materials, and neighboring pixels are constituted by similar materials, which display sparsity and spatial smoothness properties. The anomaly part accounts for a small portion of the whole scene, and each tube-wise fiber (i.e., the spectral bands of each pixel) contains few non-zero values, which indicates the tube-wise sparsity of the anomaly tensor. In a real observed HSI, the spectra are usually corrupted with noise caused by the precision limits of the imaging spectrometer and errors in analog-to-digital conversion. This issue was dealt with by modeling the noise as Gaussian random variables [44,45,46]. Here, Gaussian noise was modeled separately from the anomalies to prevent noise from being confused with anomalies.

The main contributions of this paper can be summarized as follows:

- (1) Because of the low spatial resolution of HSIs, mixed pixels are common and their analysis relies heavily on spectral information, which makes it difficult to differentiate the target of interest from the background. Therefore, in view of the LMM, we propose a completely blind tensor-based model in which the background is decomposed using the mode-3 product of the abundance tensor and endmember matrix, with the spatial structure and spectral information preserved. Moreover, the obtained abundance tensor of the background provides more robust structural information, which is essential for AD performance improvement.

- (2) Considering the distinctive features of the background’s abundance maps, we characterize them by tensor regularization and impose low rankness, smoothness, and sparsity. Specifically, the $\ell_1$-norm is introduced to enforce sparseness, and the total variation (TV) regularizer is adopted to encourage spatial smoothness. Moreover, the typically high correlation between abundance vectors implies a low-rank structure of the abundance maps. In contrast to a rigorous low-rank constraint, soft low-rank regularization is imposed on the background in order to leverage its spatial homogeneity, and its strictness is controlled by scalar parameters. In addition, for the anomaly part of HSI AD, a norm promoting tube-wise sparsity is utilized, since the anomalies account for a small portion of the scene. Gaussian noise is also incorporated into the model to prevent noise from being confused with anomalies.

The experimental results obtained using five different datasets, with extensive metrics and illustrations, demonstrated that the proposed method significantly outperformed the other competing methods.

The remainder of this article is organized as follows. Section 2 introduces the problem formulation and proposed method. In Section 3, we evaluate the performance of the proposed method and compare it with some traditional and state-of-the-art AD methods using five real hyperspectral datasets. Finally, the conclusion and future works are presented in Section 4.

2. Problem Formulation and Proposed Method

In this section, we first introduce the tensor notation and HSI AD based on the background LMM. The proposed ATLSS algorithm is then illustrated. In addition, the overall flowchart of the proposed ATLSS algorithm for hyperspectral AD is shown in Figure 1.

2.1. Tensor Notation and Definition

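This subsection introduces the mathematical notation and tensor preliminaries used to describe the proposed method. We use lowercase bold symbols for vectors, e.g., $\mathbf{x}$, and bold capital letters for matrices, e.g., $\mathbf{X}$. Third-order tensors are denoted by bold Euler script letters, e.g., $\mathcal{X}$. A scalar is written as x.

Definition 1. The dimension of a tensor is called the mode, and $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$ has N modes. Slices are two-dimensional sections of a tensor and are defined by fixing all but two indices. For a third-order tensor $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times I_3}$, $\mathcal{X}(:,:,k)$ is the k-th frontal slice.

Definition 2 (the mode-n unfolding and folding of a tensor). The “unfold” operation along mode-n on an N-mode tensor $\mathcal{X}$ is defined as $\mathrm{unfold}_n(\mathcal{X}) = \mathbf{X}_{(n)} \in \mathbb{R}^{I_n \times \prod_{m \neq n} I_m}$. Its inverse operation is mode-n folding, denoted as $\mathcal{X} = \mathrm{fold}_n(\mathbf{X}_{(n)})$.

Definition 3 (rank-one tensors). An N-way tensor is a rank-one tensor if it can be written as the outer product of N vectors.

Definition 4 (mode-n product). The mode-n product of a tensor $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$ and a matrix $\mathbf{A} \in \mathbb{R}^{J \times I_n}$ is defined as:

$$(\mathcal{X} \times_n \mathbf{A})_{i_1 \cdots i_{n-1}\, j\, i_{n+1} \cdots i_N} = \sum_{i_n=1}^{I_n} x_{i_1 i_2 \cdots i_N}\, a_{j i_n}. \quad (1)$$

Equivalently, the mode-n product can be computed by matrix multiplication:

$$\mathcal{Y} = \mathcal{X} \times_n \mathbf{A} \;\Leftrightarrow\; \mathbf{Y}_{(n)} = \mathbf{A}\mathbf{X}_{(n)}. \quad (2)$$

Definition 5 (CP decomposition). CP decomposition factorizes an N-order tensor $\mathcal{X}$ into a sum of component rank-one tensors as:

$$\mathcal{X} = \sum_{r=1}^{R} \lambda_r\, \mathbf{a}_r^{(1)} \circ \mathbf{a}_r^{(2)} \circ \cdots \circ \mathbf{a}_r^{(N)}, \quad (3)$$

where $\boldsymbol{\lambda} = [\lambda_1, \ldots, \lambda_R]$ is the weight vector and $\mathbf{A}^{(n)} = [\mathbf{a}_1^{(n)}, \ldots, \mathbf{a}_R^{(n)}]$ is the n-th factor matrix. R is the rank of tensor $\mathcal{X}$, i.e., the smallest number of rank-one tensors for which such a sum holds.

2.2. HSI AD Based on Background LMM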

Since an HSI cube can be naturally treated as a third-order tensor, we use a tensor-based representation to avoid spatial and spectral information loss. In such an AD application, an HSI tensor can be decomposed into so-called background and anomaly tensors [47]. However, in real-world applications, the scenes are usually corrupted by noise [45]; hence, in this paper, noise is also added to the model so that it is not confused with the estimated anomaly term, and the model is expressed as follows:

$$\mathcal{Y} = \mathcal{X} + \mathcal{S} + \mathcal{N}, \quad (4)$$

where $\mathcal{Y} \in \mathbb{R}^{H \times W \times D}$ is the observed HSI and $\mathcal{X}$, $\mathcal{S}$, and $\mathcal{N}$, each of the same size as $\mathcal{Y}$, are the background, anomaly, and noise, respectively. H, W, and D represent the height, width, and number of bands of each tensor, respectively. The purpose of AD is to reconstruct an accurate background so that the anomalies can be separated more accurately from the background and noise.

Natural HSI scenes typically contain many mixed pixels, each of which is constituted by more than one material. This has an explicit physical interpretation under the LMM: the spectrum of each pixel of a low-rank background can be expressed as a linear combination of a few pure spectral endmembers. NMF is a natural fit for the LMM, as it decomposes the original data into the product of two low-dimensional non-negative matrices. Under tensor notation, the background tensor is correspondingly approximated by the mode-3 product of a non-negative abundance tensor and a non-negative endmember matrix. Mathematically, inspired by NMF, the background term in Equation (4) takes the following form:

$$\mathcal{X} = \mathcal{A} \times_3 \mathbf{M}, \quad (5)$$

where $\mathcal{A} \in \mathbb{R}^{H \times W \times R}$ is the third-order abundance tensor, $\mathbf{M} \in \mathbb{R}^{D \times R}$ is the endmember matrix, and R is the number of endmembers. The third-order abundance tensor is obtained by reshaping each abundance vector into a frontal slice matrix of dimensions $H \times W$ and then stacking them along the mode-3 direction. The resulting abundance tensor exposes the underlying low-rank structure, allowing it to be characterized more thoroughly.
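The mode-n unfolding and product of Section 2.1 and the LMM background of this subsection can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the helper names (`unfold`, `fold`, `mode_n_product`), the toy sizes, and the random data are hypothetical, and the column ordering of the unfolding follows NumPy's row-major reshape, which may differ from the ordering used in the cited literature (the mode-n product itself is unaffected).

```python
import numpy as np

def unfold(tensor, mode):
    """Mode-n unfolding: result has shape (I_n, product of other dims)."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

def fold(matrix, mode, shape):
    """Inverse of `unfold` for a tensor whose full shape is `shape`."""
    rest = [s for i, s in enumerate(shape) if i != mode]
    return np.moveaxis(matrix.reshape([shape[mode]] + rest), 0, mode)

def mode_n_product(tensor, matrix, mode):
    """Mode-n product computed as a matrix product on the unfolding."""
    out_shape = list(tensor.shape)
    out_shape[mode] = matrix.shape[0]
    return fold(matrix @ unfold(tensor, mode), mode, out_shape)

# Toy LMM background: H x W pixels, D bands, R endmembers (illustrative sizes).
rng = np.random.default_rng(0)
H, W, D, R = 6, 5, 10, 3
abundance = rng.random((H, W, R))     # non-negative abundance tensor
endmembers = rng.random((D, R))       # non-negative endmember matrix

# Mode-3 product (axis index 2): every pixel is a mix of the R endmembers.
background = mode_n_product(abundance, endmembers, mode=2)

# The same model in matrix (NMF-style) form on the pixels-by-bands layout.
background_matrix = abundance.reshape(H * W, R) @ endmembers.T
```

Both forms produce identical pixel spectra; the tensor form simply keeps the two spatial axes intact so that spatial priors can act on the abundance maps directly.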

2.3. Proposed Method

An HSI usually consists of a few materials, so it lies in low-rank subspaces. Moreover, a similar substance is distributed in the adjacent region, which gives the HSI a locally smooth property. Compared to the background, anomalies are distributed randomly; thus, anomalies are often assumed to be sparse. Therefore, in this paper, we modeled the problem based on the assumption that the observed HSI was a superposition of a low-rank background, sparse anomalies, and a noise term.

The LMM assumes that each mixed pixel in the background is a linear combination of only a few endmembers, so the abundance tensor contains many zero entries, i.e., it is sparse. The $\ell_0$-norm directly counts the non-zero components, but minimizing it is an NP-hard problem. The $\ell_1$-norm is therefore introduced to promote the sparsity of the abundance tensor, where the sparsity prior narrows the solution space and yields a more accurate abundance tensor estimate. The anomaly pixels occupy a small proportion of the scene, indicating that the anomaly matrix has a column-sparse property, which is characterized by the $\ell_{2,1}$-norm [48]. Here, we have

; therefore, due to the physical meaning and the definition of the

-norm in [

49], it is reasonable to assume that the tensor anomaly has tube-wise sparsity. In addition, an HSI is usually corrupted by noise, which is assumed to be identically and independently distributed Gaussian random variables [

44]; therefore, the noise was modeled as

to suppress it from being confused with the anomaly. In general, the background model based on the LMM can be rewritten as follows:

where

and

denote the

-norm and

-norm, respectively, and

and

are the trade-off parameters that control the sparsity of the abundance tensor and tube-wise sparsity of the anomaly, respectively.
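For reference, the two sparsity measures discussed above can be written out explicitly. The symbols $\mathcal{A}$ (abundance tensor, $H \times W \times R$) and $\mathcal{S}$ (anomaly tensor, $H \times W \times D$), as well as the subscript used for the tube-wise norm, are notational assumptions made for this illustration; the definitions themselves are the usual entry-wise and group-wise forms.

```latex
\|\mathcal{A}\|_{1} = \sum_{i=1}^{H}\sum_{j=1}^{W}\sum_{r=1}^{R} |a_{ijr}|,
\qquad
\|\mathcal{S}\|_{2,1} = \sum_{i=1}^{H}\sum_{j=1}^{W}
  \Big( \sum_{d=1}^{D} s_{ijd}^{2} \Big)^{1/2}.
```

The first sum penalizes every non-zero abundance individually, whereas the second penalizes whole spectral tubes, so the bands of a pixel are driven to zero together.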

In addition to the single-pixel sparsity, the spatial correlation between neighboring pixels also deserves to be exploited. It is assumed that there is a high correlation among the spectra of the pixels lying in homogeneous regions. To model this property, we imposed a soft low-rank constraint on the abundance tensor of the background.

Assuming that

designates the rank of the abundance tensor, the loss function for HSI AD could be written as:

However, the rank term in (7) is non-convex, which makes the optimization difficult. Referring to [50], we introduced

, which was assumed to be low-rank and represents a low-rank prior. Then, CP decomposition was employed to measure the low rankness of

via the summation of the

rank-one components. Consequently, the CP decomposition of

could be written as:

where

,

, and

are the factor matrices.
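As an illustration of the CP model above, a third-order tensor can be assembled from three factor matrices as a sum of K rank-one terms. The names `cp_to_tensor`, `U1`, `U2`, and `U3`, the sizes, and the value of K are hypothetical and serve only this sketch.

```python
import numpy as np

def cp_to_tensor(factor_1, factor_2, factor_3):
    """Sum of K rank-one tensors; column k of each factor matrix
    supplies the three vectors of the k-th outer product."""
    return np.einsum("ik,jk,lk->ijl", factor_1, factor_2, factor_3)

rng = np.random.default_rng(0)
K = 2                                   # number of rank-one components
U1 = rng.random((6, K))                 # spatial (height) factor
U2 = rng.random((5, K))                 # spatial (width) factor
U3 = rng.random((4, K))                 # abundance-map factor
low_rank = cp_to_tensor(U1, U2, U3)

# The same tensor built explicitly, one outer product at a time.
explicit = sum(
    np.multiply.outer(np.multiply.outer(U1[:, k], U2[:, k]), U3[:, k])
    for k in range(K)
)
```

Because the tensor is a sum of K rank-one terms, every unfolding of it has matrix rank at most K, which is the sense in which a small K encodes low rankness.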

Subsequently, we introduced a new regularization term controlled by a low-rank tensor

to enforce a non-strict constraint on

, as shown in (

9). A very large

value would lead to the mixing of anomalies into the background, which would undermine the low-rank characterization of the background by the regularization term. Therefore, the parameter

aimed to modify the strictness of the low-rank constraint on

. Thus, not only was the non-convex issue addressed, but the small-scale details that were necessary for the background could also be preserved. The function based on (

7) was approximately rewritten as:

where the rank is controlled by

. Then, the model of (

9) could be rewritten as:

Notably, pixels in spatially homogeneous regions are more likely to contain the same materials, which indicates that the fractional abundances of adjacent pixels tend to be similar. Here, the spatial context information of the abundance tensor was characterized by the TV regularizer [51], which encourages a piecewise-smooth structure while preserving distinct edges. The spatial TV norm of the abundance tensor

is defined as [

52]:

Let

indicate the intensity of the voxel

and

and

be the two horizontal and vertical differential operators in the spatial domain, respectively. Then, we have:

The TV-based cost function corresponding to (

10) could be modeled as:

where

is the TV norm and

is a parameter to adjust the strength of the piecewise smoothness.
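A minimal sketch of a spatial TV measure on a third-order tensor is given below. It assumes the anisotropic form (sum of absolute horizontal and vertical first differences over every frontal slice) with no wrap-around at the image border; the exact form and boundary handling used in the paper's definition may differ, and the name `spatial_tv` is hypothetical.

```python
import numpy as np

def spatial_tv(tensor):
    """Anisotropic spatial TV of an (H, W, R) tensor: absolute first
    differences along width and height, summed over all slices."""
    d_h = np.diff(tensor, axis=1)   # horizontal neighbours
    d_v = np.diff(tensor, axis=0)   # vertical neighbours
    return float(np.abs(d_h).sum() + np.abs(d_v).sum())

flat = np.ones((4, 4, 2))           # constant maps: no variation
step = flat.copy()
step[:, 2:, :] = 2.0                # one sharp vertical edge in each slice
```

A constant map has zero TV, while a single clean edge contributes only its height times its jump, which is why TV favors piecewise-smooth abundance maps without blurring edges.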

Similarly to

and

, this could be further rewritten as:

2.4. Optimization Procedure

The optimization problem in (

14) could be solved by ADMM. We needed to introduce three auxiliary variables,

, and

, and then transform it into the following equivalent problem:

The problem in (

15) could be solved by ALM [

53] to minimize the following augmented Lagrangian function:

where

,

,

, and

are the Lagrange multipliers, and

is the penalty parameter. The above problem could be solved by updating one variable while fixing the others. Specifically, in the

-th iteration, where the problem could be divided into several subproblems, the variables were updated as follows:

Update

:

which could be transformed into the following linear system:

Similarly to (

18), we obtained the following solution:

Update :

We combined (

18) and (

20) and computed the result as follows:

By substituting (

13) into (

14), the optimization problem became:

where CP decomposition was solved by an alternating least-squares (ALS) [54] algorithm. Consequently, each factor matrix was calculated through a linear least-squares approach by fixing the other two matrices.

Update

for which the solution is:

Next, we solved the subproblem of

:

where

is the identity tensor;

is a convolution, as defined in (

12), that operates in the spatial domain; and

indicates the adjoint operator of

. Therefore,

could be quickly computed by:

where

and

denote the fast Fourier transform [

55] and its inverse, respectively.
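The FFT-based solve mentioned above relies on the fact that difference operators with periodic boundaries are diagonalized by the Fourier transform. The sketch below solves a generic system of the form (I + w(Dh^T Dh + Dv^T Dv)) x = b on one 2D slice. The periodic boundary assumption, the single weight `w`, and both function names are assumptions of this sketch; the actual right-hand side and scaling in the paper's update are not reproduced here.

```python
import numpy as np

def apply_operator(x, weight):
    """(I + weight * (Dh^T Dh + Dv^T Dv)) x with periodic forward
    differences, written out with shifted copies of x."""
    lap = (4 * x - np.roll(x, 1, 0) - np.roll(x, -1, 0)
                 - np.roll(x, 1, 1) - np.roll(x, -1, 1))
    return x + weight * lap

def solve_with_fft(rhs, weight):
    """Invert the operator above by dividing in the Fourier domain."""
    h, w = rhs.shape
    # Eigenvalues of D^T D for a periodic forward difference of length n.
    eig_v = 2 - 2 * np.cos(2 * np.pi * np.arange(h) / h)
    eig_h = 2 - 2 * np.cos(2 * np.pi * np.arange(w) / w)
    denom = 1 + weight * (eig_v[:, None] + eig_h[None, :])
    return np.real(np.fft.ifft2(np.fft.fft2(rhs) / denom))

# Round trip on random data: build b = Op(x_true), then recover x_true.
rng = np.random.default_rng(0)
x_true = rng.normal(size=(8, 6))
rhs = apply_operator(x_true, weight=0.7)
x_rec = solve_with_fft(rhs, weight=0.7)
```

For a tensor, the same solve would be applied to each frontal slice, at a cost dominated by the FFTs rather than by a dense matrix inversion.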

Update

and

:

which could be solved by a soft-thresholding function. The update rule for

was similar to that of

.

Given

, the closed solution of (

30) could be calculated as:

where

and

.
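The two shrinkage steps referred to above have well-known closed forms, sketched here under the assumption that the sparsity terms are an entry-wise l1 penalty and a tube-wise group penalty. The function names and the threshold argument `tau` are hypothetical, and in the actual updates the threshold would be a ratio of a trade-off parameter to the penalty parameter.

```python
import numpy as np

def soft_threshold(x, tau):
    """Entry-wise shrinkage: proximal operator of tau * l1-norm."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def tubewise_shrink(tensor, tau):
    """Group shrinkage along axis 2: each spectral tube is scaled toward
    zero and removed entirely when its Euclidean norm is below tau."""
    norms = np.sqrt((tensor ** 2).sum(axis=2, keepdims=True))
    scale = np.maximum(1.0 - tau / np.maximum(norms, 1e-12), 0.0)
    return tensor * scale

entries = soft_threshold(np.array([-3.0, 0.5, 2.0]), tau=1.0)

tubes = np.zeros((2, 2, 2))
tubes[0, 0] = [3.0, 4.0]     # strong tube (norm 5): kept, but shrunk
tubes[1, 1] = [0.3, 0.4]     # weak tube (norm 0.5): set to zero
shrunk = tubewise_shrink(tubes, tau=1.0)
```

The group version is what produces tube-wise sparsity: weak pixels are zeroed across all bands at once, while strong pixels keep their spectral shape.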

Update

,

,

,

, and

:

Finally, according to the anomaly , the AD maps could be obtained by . Then, we arrived at an augmented Lagrangian alternating direction method to solve the proposed ATLSS model.
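One common way to turn an estimated anomaly tensor into a 2D detection map is to take the Euclidean norm of each pixel's spectral tube. The sketch below assumes this scoring rule, which is an assumption of this example rather than a confirmed detail of the proposed method; `detection_map` is a hypothetical helper name.

```python
import numpy as np

def detection_map(anomaly):
    """Per-pixel anomaly score for an (H, W, D) anomaly tensor:
    Euclidean norm of each spectral tube."""
    return np.linalg.norm(anomaly, axis=2)

anomaly = np.zeros((4, 4, 3))
anomaly[1, 2] = [3.0, 4.0, 0.0]      # a single anomalous pixel
ad_map = detection_map(anomaly)
```

Thresholding such a map at different levels yields the detection and false-alarm rates used in the ROC analysis.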

2.5. Initialization and Termination Conditions

In the proposed ATLSS solver, the inputs were the observed HSI, the basis matrix, the abundance matrix, and the number of endmembers R. It is worth noting that the initialization of the basis matrix, the abundance matrix, and R influenced the AD results of the proposed method. Hence, we initialized the background by the RX algorithm. Then, HySime [56] was employed to estimate the number of background endmembers R. Afterward, NMF [57] and the related update rules were used to iteratively update the basis matrix and the abundance matrix. The CP rank K was determined by the algorithm referred to in [50] to provide an accurate rank estimation for the proposed method. In general, the iterative process continued until the maximum number of 100 iterations was reached or the residual error criterion was satisfied.
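As an illustration of an NMF initialization of this kind, the sketch below uses the classical multiplicative update rules for the Frobenius-norm objective. Whether [57] uses exactly these rules is an assumption, as are the pixels-by-bands layout, the function name `nmf_multiplicative`, and the fixed iteration count.

```python
import numpy as np

def nmf_multiplicative(Y, rank, n_iter=200, eps=1e-9, seed=0):
    """Approximate Y (pixels x bands) by A @ M with A (pixels x rank)
    and M (rank x bands), both non-negative, via multiplicative updates."""
    rng = np.random.default_rng(seed)
    A = rng.random((Y.shape[0], rank))
    M = rng.random((rank, Y.shape[1]))
    for _ in range(n_iter):
        M *= (A.T @ Y) / (A.T @ A @ M + eps)   # update endmember spectra
        A *= (Y @ M.T) / (A @ M @ M.T + eps)   # update abundances
    return A, M

# Toy background matrix with exactly two non-negative sources.
rng = np.random.default_rng(1)
Y = rng.random((30, 2)) @ rng.random((2, 12))
A_first, M_first = nmf_multiplicative(Y, rank=2, n_iter=1)
A_init, M_init = nmf_multiplicative(Y, rank=2, n_iter=200)
```

The abundance matrix returned here could then be reshaped into an H × W × R tensor to serve as a starting point for the tensor model.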

2.6. Computational Complexity Analysis

Each iteration’s computational cost consisted of updating all the relevant factors. The time complexity of CP decomposition (update ) is ; the time complexity of FFT (update ) is ; the time complexity of the soft threshold operator (update , ) is ; the time complexity of other matrix multiplication operations (update , , , , , , , and ) is . Thus, the time complexity of the proposed method is .

3. Experimental Results and Discussion

In this section, the proposed ATLSS algorithm is applied to five real HSI datasets for AD, and a detailed description of the experiments is provided below. All experiments were run in MATLAB 2016b on a computer with a 64-bit quad-core Intel Xeon 2.40 GHz CPU and 32.0 GB of RAM running Windows 7.

3.1. Experimental Datasets

- (1)

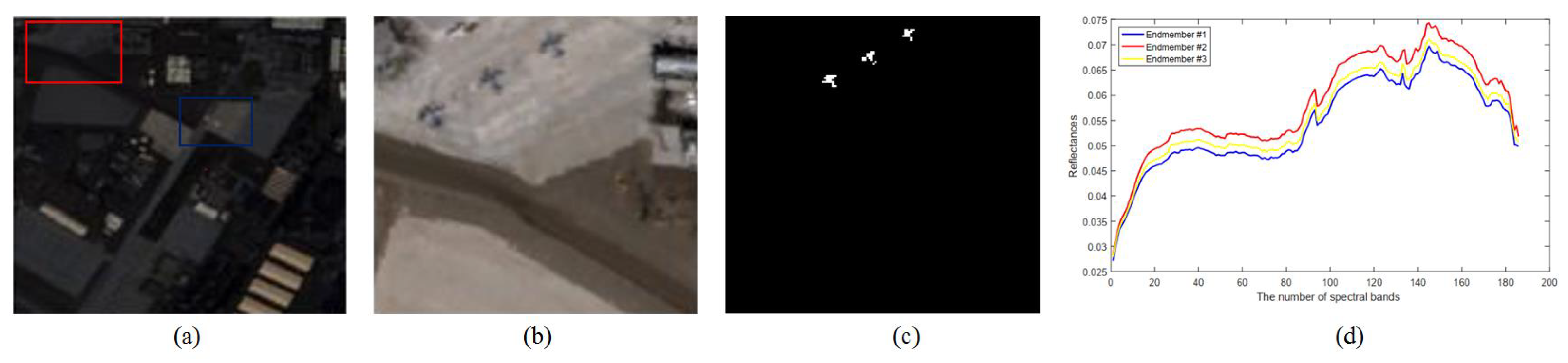

AVIRIS airplane data: The AVIRIS airplane dataset was collected by AVIRIS in San Diego. One hundred and eighty-nine bands were retained, while the water absorption regions; low-SNR bands; and bad bands (1–6, 33–35, 97, 107–113, 153–166, 221–224) were removed. In

Figure 1b, the subimage is named the AVIRIS−1 dataset, and it is located in the top-left corner of the AVIRIS image with a size of

. The anomaly contained in the image was three airplanes, and the ground truth is shown in

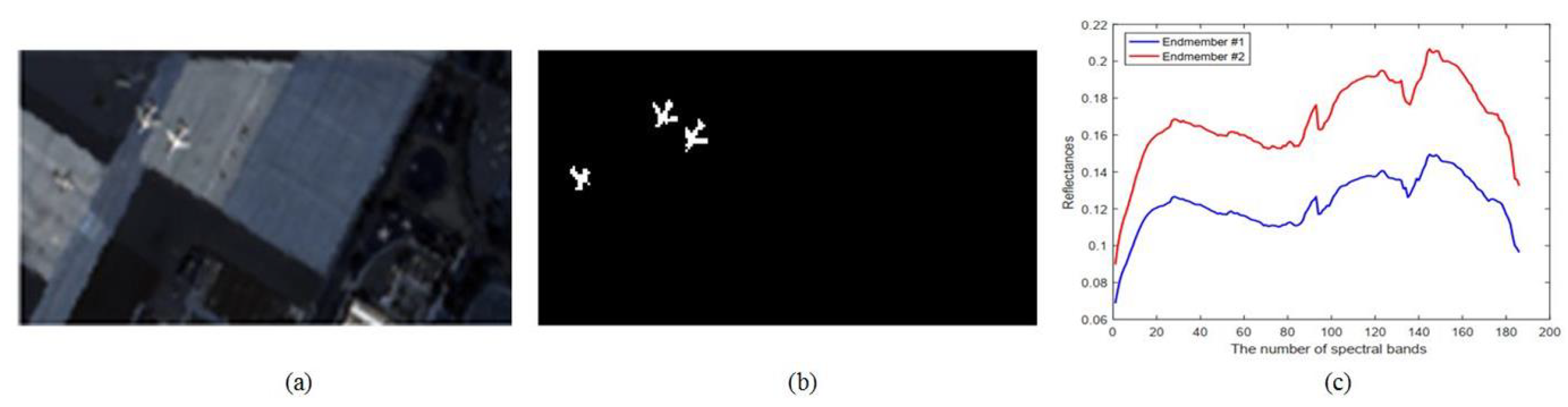

Figure 1c. AVIRIS−2 was located in the center of San Diego, as shown in

Figure 2, and it contained 120 anomaly pixels with a size

.

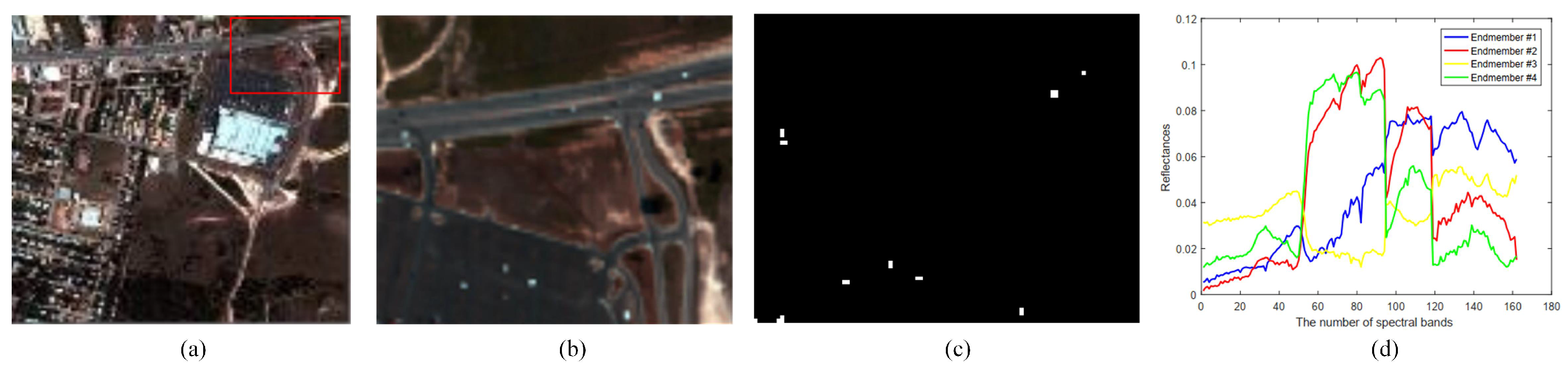

- (2)

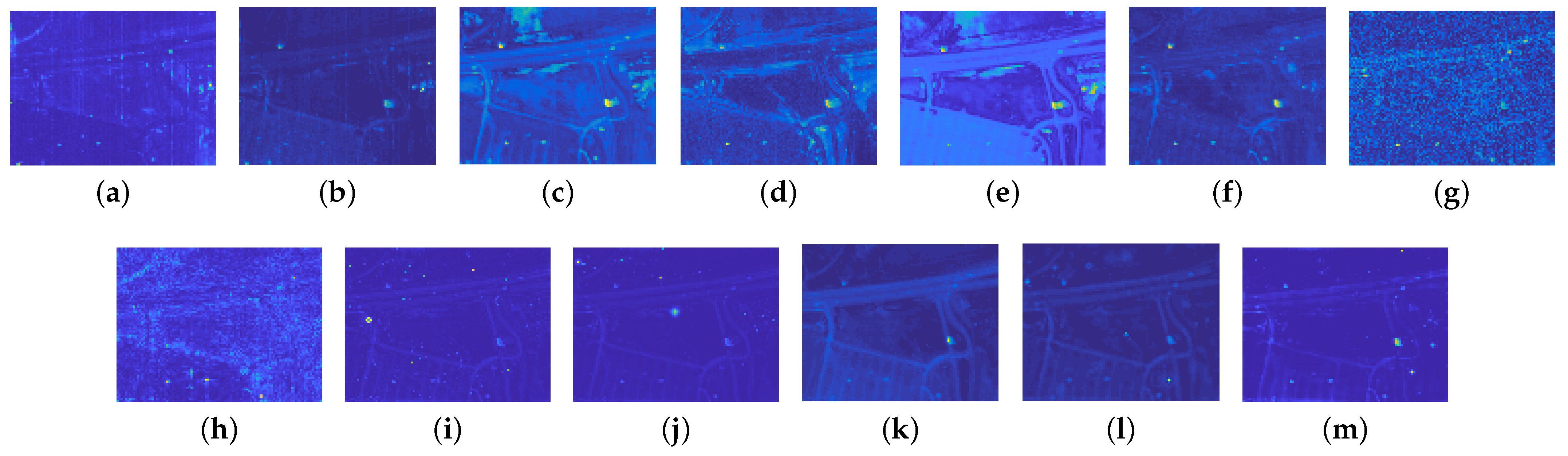

HYDICE data: The real data were collected by the hyperspectral digital imagery collection experiment (HYDICE) sensor, and the original image had a size of

. After removing the low-SNR and water-vapor absorption bands, 162 bands remained. An

subspace was cropped from the top right of the whole image, and the cars and roofs in the image scene were considered anomalies. The false-color image and the corresponding ground truth are shown in

Figure 3.

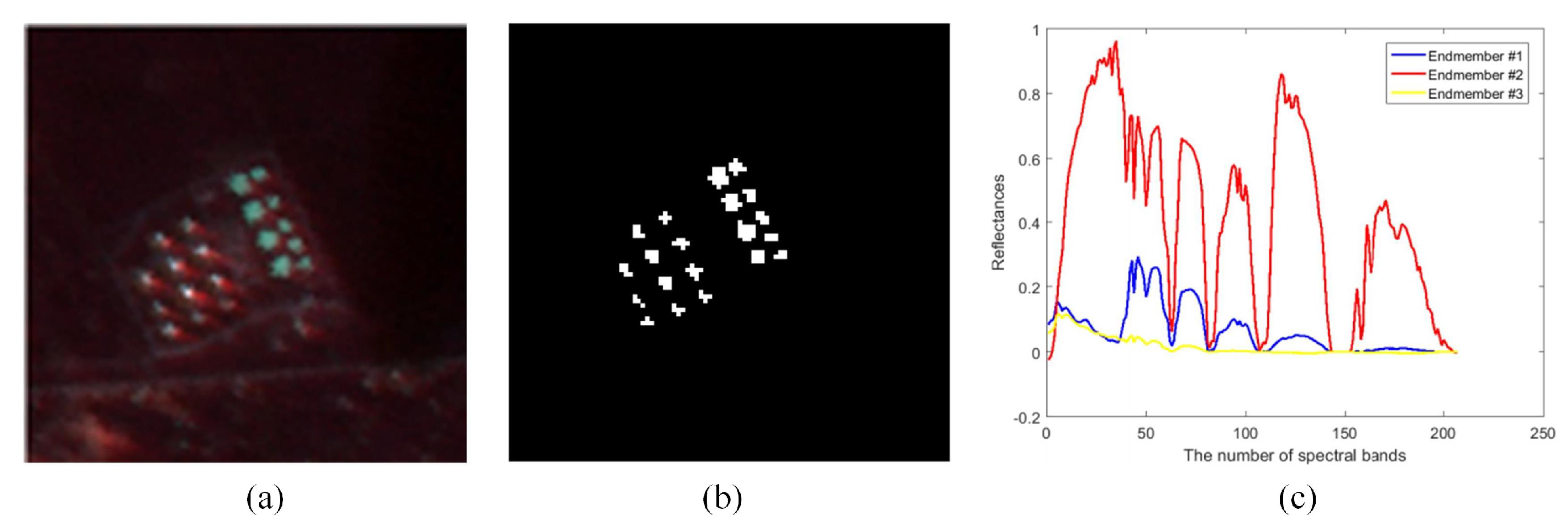

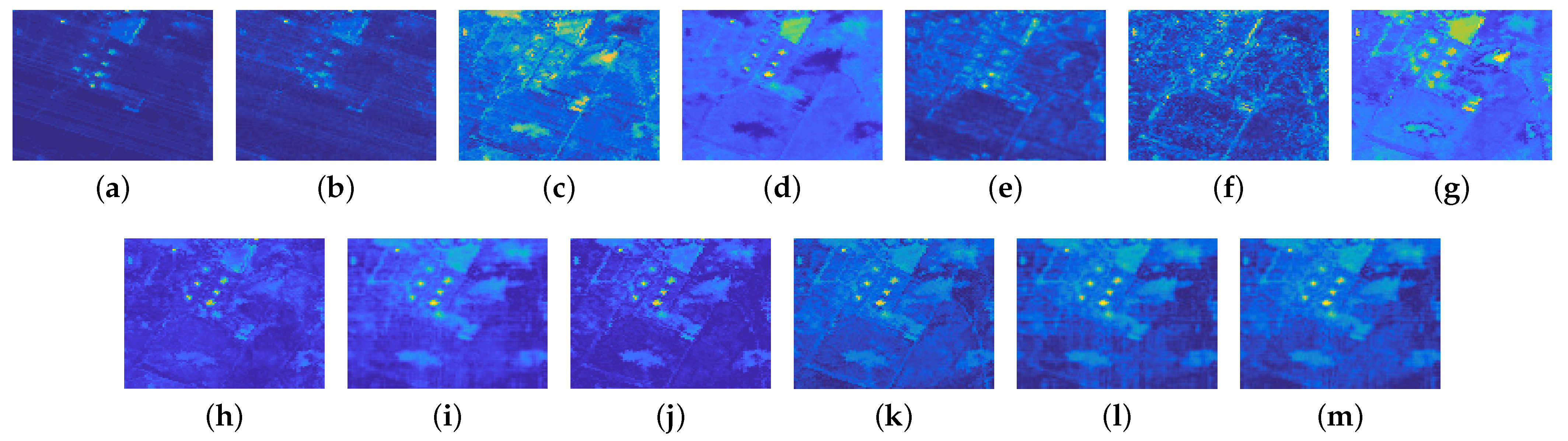

- (3)

Urban (ABU) data: The Urban dataset was collected with AVIRIS sensors and contained five images of five different scenes. In this paper, we selected the Urban−1 and Urban−2 images, captured at different locations on the Texas coast, to perform the experiment. The spatial size of the Urban−1 dataset was

, the number of spectral bands was 204, and its false-color image and the ground truth are presented in

Figure 4a,b. For the Urban−2 dataset, with a size of

,

Figure 5a,b shows the corresponding false-color image and ground truth.

3.2. Evaluation Metrics and Parameter Settings

The AD performance of the proposed ATLSS method is demonstrated in this section. Table 1 shows the formulations of ATLSS and its four degradation models (Dm), annotated as Dm-1, Dm-2, Dm-3, and Dm-4, based on the linear spectral unmixing method. In addition, we chose to compare our models with RX [12], RPCA [22], LRASR [24], GTVLRR [25], GVAE [9], PTA [35], TRPCA [49], and LRTDTV [34]. RX is a statistics-based method in the HSI AD field, and it is the baseline in almost all reference articles. RPCA, LRASR, and GTVLRR are based on matrix modeling. PTA is a tensor-based method, but it is based on matrix operations. GVAE is a deep learning method. TRPCA, LRTDTV, and the proposed ATLSS algorithm are based on tensor modeling. We classified the RX, RPCA, LRASR, GTVLRR, and PTA methods as matrix-based operations, and TRPCA, LRTDTV, Dm-1, Dm-2, Dm-3, Dm-4, and ATLSS as tensor-based operations.

To evaluate the detectors more effectively, the 3D ROC curve [58] was employed, which introduces the threshold $\tau$ in addition to the parameters $P_D$ (detection probability) and $P_F$ (false-alarm probability) used in the 2D ROC curve [59] to specify a third dimension, giving the curve $(P_D, P_F, \tau)$. In addition, the 2D ROC curves $(P_D, P_F)$ and $(P_F, \tau)$ were used to measure the AD result; an efficient detector has a larger $\mathrm{AUC}_{(D,F)}$ value but a smaller $\mathrm{AUC}_{(F,\tau)}$ value, and it is desired that the curves of $(P_D, P_F)$ and $(P_F, \tau)$ are close to the upper-left and lower-left corners of the coordinate axes, respectively. In addition, box and whisker plots were used to evaluate the separability between the anomaly and background. The boxes in the box and whisker plot reflect the distribution range of the detection values of the anomaly and background; that is, a larger gap between the anomaly and background boxes indicates better discrimination by the detector.
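The ROC-based metrics described above can be computed from a detection map and a binary ground truth as sketched below. The names `roc_aucs` and `trapezoid_area`, the number of thresholds, and the min-max normalization of the scores are assumptions of this sketch. The (P_D, P_F) curve is integrated only between the smallest and largest observed false-alarm rates.

```python
import numpy as np

def trapezoid_area(x, y):
    """Area under y(x) by the trapezoidal rule (x sorted ascending)."""
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))

def roc_aucs(scores, truth, n_thresholds=201):
    """Return the areas under the (P_D, P_F), (P_D, tau) and
    (P_F, tau) curves for one detection map."""
    s = scores.ravel().astype(float)
    s = (s - s.min()) / (s.max() - s.min())       # map scores to [0, 1]
    t = truth.ravel().astype(bool)
    taus = np.linspace(0.0, 1.0, n_thresholds)
    p_d = np.array([(s[t] >= tau).mean() for tau in taus])
    p_f = np.array([(s[~t] >= tau).mean() for tau in taus])
    # P_F decreases as tau grows, so reverse both arrays for integration.
    auc_d_f = trapezoid_area(p_f[::-1], p_d[::-1])
    auc_d_tau = trapezoid_area(taus, p_d)
    auc_f_tau = trapezoid_area(taus, p_f)
    return auc_d_f, auc_d_tau, auc_f_tau

# Toy map: two anomalous pixels scored well above a noisy background.
rng = np.random.default_rng(0)
scores = rng.random((10, 10)) * 0.5
truth = np.zeros((10, 10), dtype=bool)
truth[2, 3] = truth[7, 7] = True
scores[truth] = 1.0
auc_d_f, auc_d_tau, auc_f_tau = roc_aucs(scores, truth)
```

In this perfectly separable toy case the first two areas reach their maximum of one, while the third stays small because background scores fall below most thresholds.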

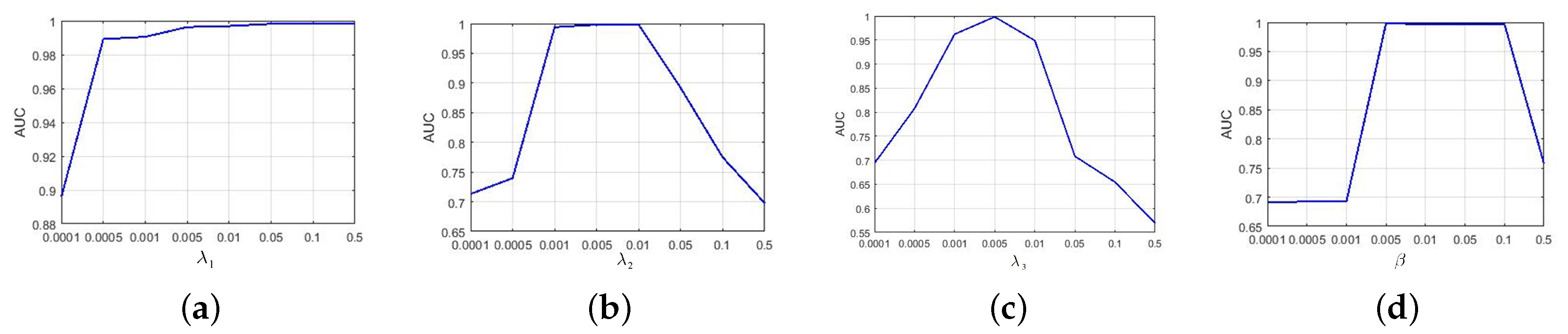

In the proposed ATLSS method, the number of endmembers R was first estimated in the initialization phase using the HySime algorithm, whereas the most significant task was to search for the best set of parameters , which needed to be carefully identified. Moreover, , , , and ranged within the set , respectively, while was selected from . Here, we carefully determined the optimal parameters for the five datasets and for all the algorithms. To demonstrate the contribution of the different parameters to AD, we took the example of the AVIRIS−2 airplane data while changing the parameters , and to illustrate the tuning procedure in detail. Specifically, we fixed , , and and traversed until a favorable result was observed; then, we alternated the other parameters. We applied ATLSS with different parameter settings to the AVIRIS airplane datasets (including AVIRIS−1 and AVIRIS−2), HYDICE dataset, and Urban (ABU) datasets (including Urban−1 and Urban−2) in turn to obtain detection results under the optimal parameter combination.

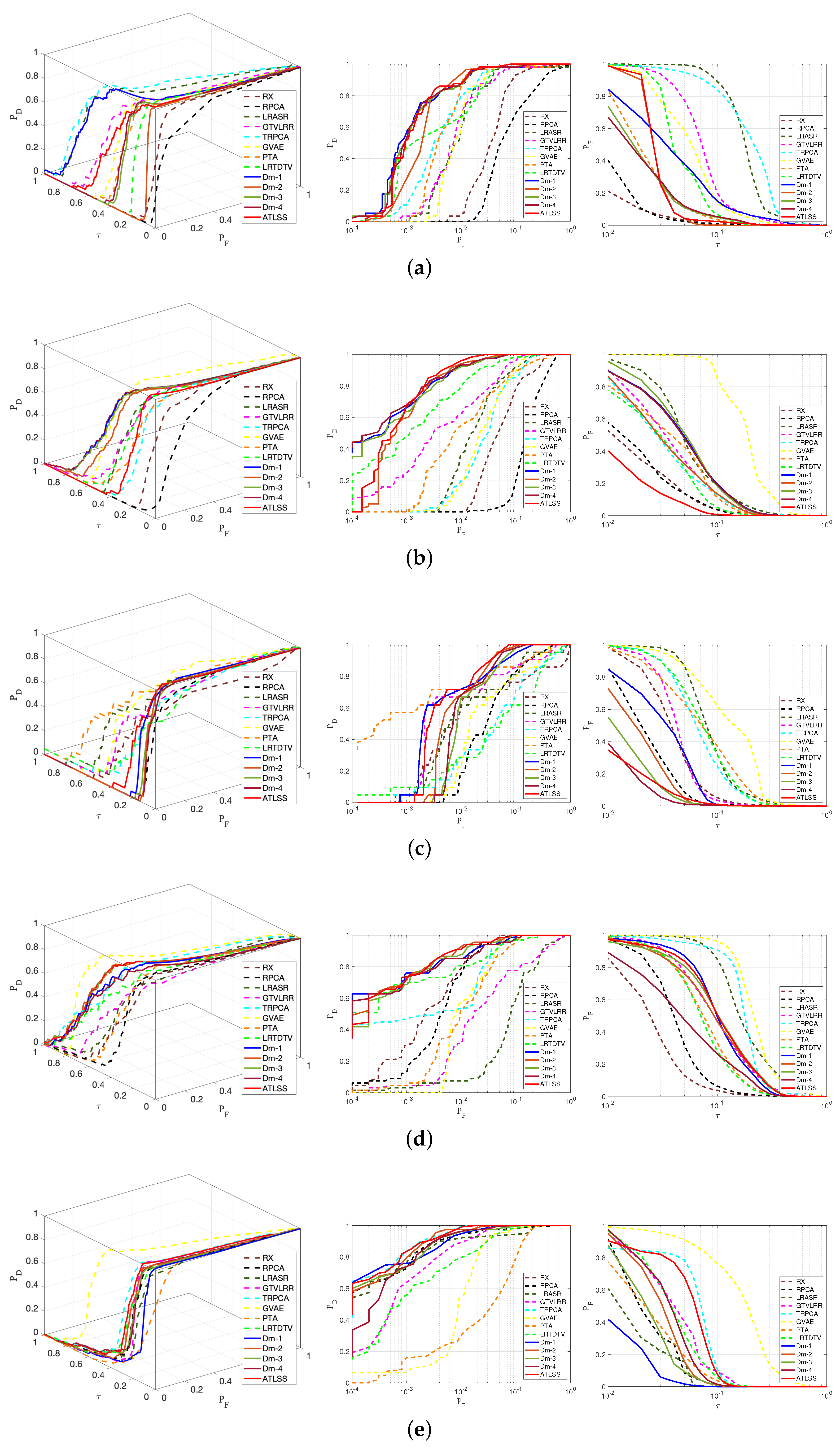

3.3. Detection Performance

We investigated the contribution of the regularization terms, including sparsity regularization, TV regularization, and CP regularization, in the proposed ATLSS method with regard to the accuracy of AD. We referred again to

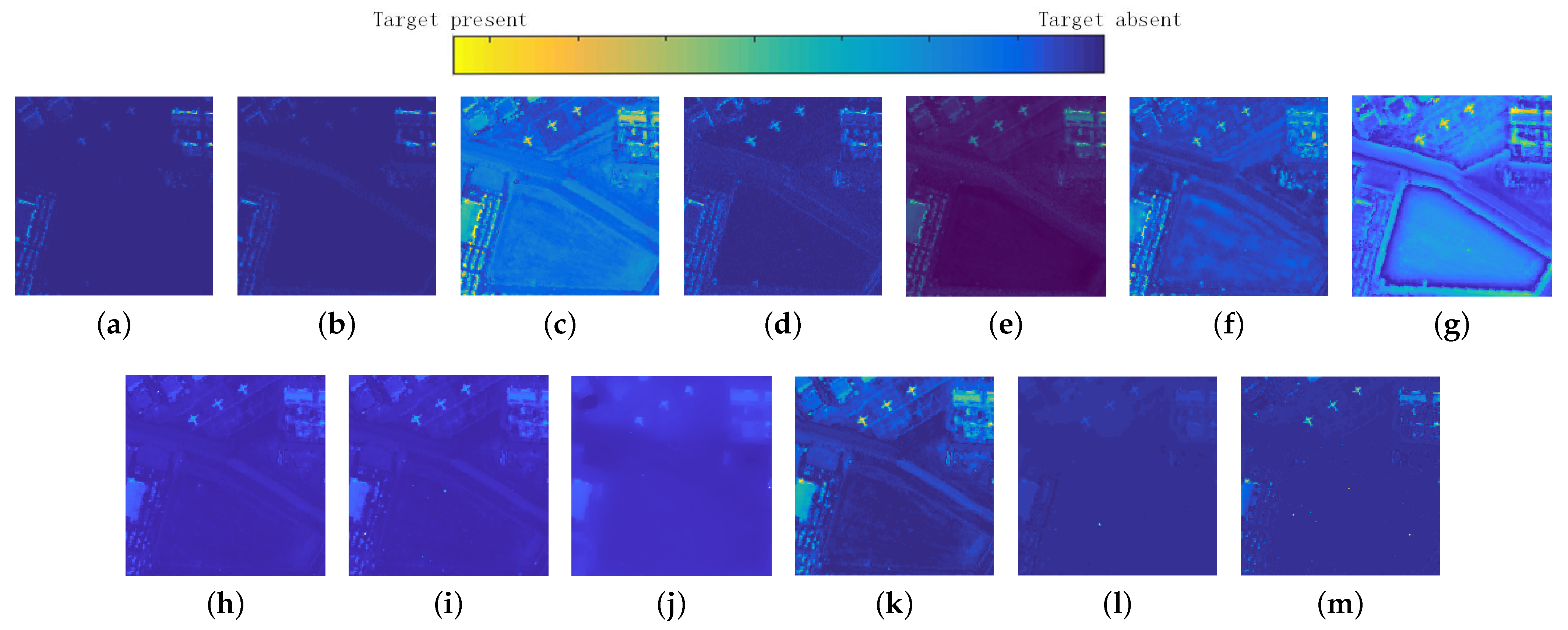

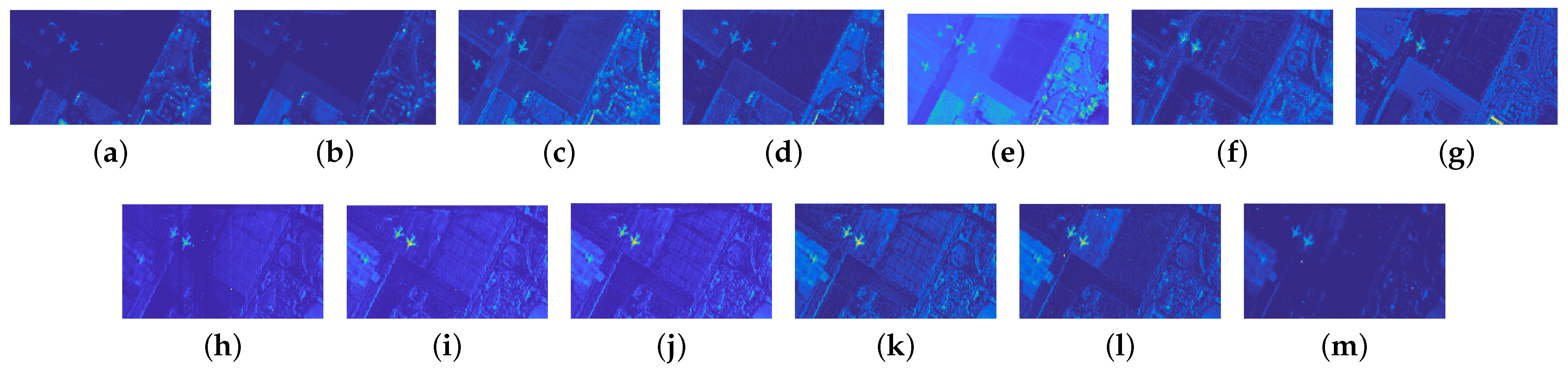

Table 1 for the ATLSS, Dm-1, Dm-2, Dm-3, and Dm-4 models. Furthermore, we compared the performance of ATLSS, including Dm-1, Dm-2, Dm-3, and Dm-4, with that of RX, RPCA, LRASR, GTVLRR, TRPCA, GVAE, PTA, and LRTDTV. The 2D plots of the algorithm detection results comparison for the five datasets are shown sequentially in

Figure 6,

Figure 7,

Figure 8,

Figure 9 and

Figure 10.

Table 2 shows the AUC values of

obtained by different AD algorithms on the five real datasets. Each algorithm ran ten times on each dataset to avoid randomness, and the average AUC values were used.

Figure 11 and

Figure 12 show the corresponding performance curves and box and whisker plots of the different methods under comparison for the five real datasets. We also took the AVIRIS−2 dataset as an example to illustrate the superior performance of the proposed method in detail.

For Dm-1, when the parameter settings were and , all five datasets achieved very good results.

3.3.1. AVIRIS−2

For the AVIRIS−2 dataset, the estimated number of endmembers for the background was two, as shown in

Figure 2c, and the estimated rank was

. In the following two paragraphs, we study (1.a) the effects of the regularization terms and (1.b) the comparison of different anomaly detectors.

(1.a) Effects of the Regularization Terms: After a large number of parameter traversals, the trade-off parameters referenced in the ATLSS algorithm and Dm-2 both achieved their optimal performance, i.e.,

,

,

, and

for ATLSS and

,

, and

for Dm-2.

Figure 13 shows the detection accuracy of ATLSS on the AVIRIS−2 dataset when one parameter varied within a predefined parameter range and the other three trade-off parameters were fixed. In

Figure 13a, it can be observed that when

increased from

to

, the curve showed an upward trend, and the highest detection result was obtained at

, which indicated a positive effect on controlling the sparsity of the abundance tensor.

Table 2 and

Figure 7 provide the certification based on quantitative analysis and visual qualitative analysis. The peak of the curve in

Figure 13b was located at

when

was in the interval

. The increasing curve implied that TV had a positive effect on suppressing noise, while

imposed a smoothness constraint on the abundance tensor, leading to a dramatic decline in the detection results.

was imposed on

to control its low rankness, and

Figure 13c reveals that setting

balanced the low-rank regularization with the most important information but captured small-scale details. The curve first steadily increased and then fell as it deviated from the optimal parameter value, implying that parameter values that were too large led to strict low rankness in

and thus resulted in a significant residual loss in the reconstructed abundance tensor. In

Figure 13d, one can clearly see that the curve was stable when

and exhibited superior performance. Outside this interval, the detection result was sensitive to changes in the parameter, with the detection performance declining in a straight downward trend. When

was set to

, the best performance was achieved.

(1.b) Comparison with Different Anomaly Detectors: Table 2 reports the AUC values of

and

for the different methods under comparison on the five datasets. In

Table 2, the anomaly detection accuracy

of ATLSS, which obtained the highest score among all the methods under comparison when

, is illustrated by the ROC curve in the upper left corner, which also shows the efficiency of the model. The

score was lower than the others, and the

ROC curve is closest to the lower-left corner, as shown in

Figure 11b. ATLSS performed well on the AVIRIS−2 dataset and achieved the best AD accuracy and a low false-alarm rate. The proposed ATLSS model and the degradation models Dm-1, Dm-2, Dm-3, and Dm-4 better separated the anomaly and background, as shown in

Figure 12b. The 2D plots of the detection results are presented in

Figure 7i, showing the low-rank structure imposed by the regularization constraint (i.e., CP decomposition on the introduced low-rank prior term), which granted the abundance tensor adequate flexibility to model fine-scale spatial details with most of its spatial distribution preserved. The exploration of the background tensor enabled more efficient background suppression. Moreover, TV regularization, which smoothed the estimated abundance map, effectively suppressed Gaussian noise. When compared with the degradation models Dm-1, Dm-2, Dm-3, and Dm-4, ATLSS demonstrated better background suppression, as shown in

Figure 7, and the best AD performance, as shown in

Table 2. In

Figure 11b, in addition to the curves of

and

, the 3D ROC curve also comprehensively shows the performance of the proposed ATLSS method.

The methods assumed that the background and the anomaly had a low-rank and sparse property and performed better than the RX method in terms of the AUC value and false-alarm rate, which can be observed from

Table 2 and

Figure 11b. The 2D plots in

Figure 7 also reveal that the background and Gaussian noise were both effectively suppressed; moreover, the anomalous airplanes were more clearly detected by ATLSS than by RX. GTVLRR, PTA, Dm-2, Dm-3, Dm-4, LRTDTV, and ATLSS utilized TV regularization to smooth away the noise while strengthening the outlines of the airplanes. One can observe that GTVLRR performed well in comparison to the other methods. However, the proposed ATLSS method based on tensor decomposition outperformed the other methods in terms of

and the power of the anomaly and background separation.
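For reference, the RX baseline used in this comparison scores each pixel by its Mahalanobis distance to the background statistics. The following is a minimal sketch of a global RX detector, assuming the background mean and covariance are estimated from all pixels of the image; the function name `global_rx` and the use of a pseudo-inverse are illustrative choices, not the implementation used in the experiments.

```python
import numpy as np

def global_rx(cube):
    """Global RX scores for an HSI cube of shape (rows, cols, bands)."""
    rows, cols, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(float)
    # Background statistics estimated from the whole image (global variant).
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    cov = centered.T @ centered / (pixels.shape[0] - 1)
    # The pseudo-inverse guards against a singular covariance matrix.
    cov_inv = np.linalg.pinv(cov)
    # Squared Mahalanobis distance of every pixel to the background mean.
    scores = np.einsum("ij,jk,ik->i", centered, cov_inv, centered)
    return scores.reshape(rows, cols)
```

Pixels with large scores are declared anomalies after thresholding; because the detector relies only on second-order statistics of a Gaussian background model, it does not exploit the low-rank and sparse structure discussed above.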

Table 3 shows the computational times of the ten algorithms for the AVIRIS−2 dataset. We observed that the running time of ATLSS was longer than that of Dm-1, Dm-2, Dm-3, and Dm-4 because of the added regularization terms, but the AD performance improved. The execution time of ATLSS was shorter than that of GTVLRR and TRPCA but longer than that of LRTDTV, whereas the detection result of ATLSS was superior to that of LRTDTV. Compared to RX, RPCA, LRASR, and PTA, the time cost of ATLSS was much higher, because the former are based on matrix operations. As shown in

Table 2, the deep learning method GVAE had a training time/test time of

, incurring not only a high time cost but also a far inferior detection performance compared to our proposed ATLSS model.

3.3.2. AVIRIS−1

For the AVIRIS−1 dataset, the estimated number of endmembers for the background was three, as shown in

Figure 1d, and the estimated rank was

. The best performance was achieved when we set the four parameters as

.

When comparing Dm-1, Dm-2, Dm-3, Dm-4, and ATLSS, we observed that the anomaly included three planes, which are clearly identified in

Figure 6. ATLSS achieved its best performance when we set the four parameters as

. In

Table 2 and

Figure 11a, one can observe that the value of

was the largest for ATLSS, and the curve is in the upper-left corner. The

value of ATLSS was also relatively low. The superior performance of ATLSS can be attributed to the low-rank tensor regularization and TV regularization imposed on the abundance tensor, which efficiently suppressed the background and smoothed away the noise. Among all the compared methods, as shown in

Table 2, the best detection result was also achieved by ATLSS. One can observe that even though RX had the lowest false-alarm rate, the AD accuracy and the separation of the anomaly and background shown in

Figure 11a and

Figure 12a demonstrated that its performance was not very good. Notably, our proposed method was superior to the tensor-based PTA method on the AVIRIS−2 dataset for all measurements.

Figure 11a shows that the ROC curve of ATLSS was closer to the top left than those of all the other methods, and

Table 2 further confirms the strong performance of ATLSS.
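The smoothing effect attributed to TV regularization can be made concrete with a small sketch. It assumes an anisotropic, l1-type spatial TV computed on each abundance map (the exact TV definition is not restated in this section), and the function name `spatial_tv` is hypothetical.

```python
import numpy as np

def spatial_tv(abundance):
    """Anisotropic spatial TV of an abundance tensor of shape (rows, cols, endmembers).

    Sums the absolute differences between vertically and horizontally
    adjacent pixels over all abundance maps.
    """
    abundance = np.asarray(abundance, dtype=float)
    vertical = np.abs(np.diff(abundance, axis=0)).sum()
    horizontal = np.abs(np.diff(abundance, axis=1)).sum()
    return float(vertical + horizontal)
```

A piecewise-smooth abundance map yields a small TV value, whereas isolated noisy pixels increase it, which is why penalizing this quantity suppresses noise while preserving edges.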

3.3.3. HYDICE

For the Urban data, the estimated number of endmembers was

, as shown in

Figure 3d, and the estimated CP rank was

.

Compared to Dm-1, Dm-2, Dm-3, and Dm-4, as shown in

Table 2, the ATLSS method showed a competitive performance when we set the parameters as

; the AD AUC value was the highest; and the false-alarm rate was the lowest. ATLSS was also powerful in separating anomalies and the background, as shown in

Figure 12c. In

Figure 8i, the anomaly was accurately detected; furthermore, the background and the noise were both well-suppressed, which is a benefit of tensor low-rank regularization and TV smoothness for the background abundance tensor. Compared with PTA, the

values of ATLSS, as shown in

Table 2, reflected the fact that abundance maps possess more distinctive features than raw data, which is beneficial for identifying anomalies within a background and achieving outstanding performance. The qualitative and quantitative results are presented in

Table 2 and

Figure 11c, as well as in

Figure 12c. These results were obtained by background low-rank decomposition, which enabled more accurate background reconstruction.
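To illustrate what a CP-type low-rank structure means for a third-order tensor, the sketch below builds a tensor from three factor matrices as a sum of rank-one terms. The function name `cp_reconstruct` and the factor shapes are assumptions made for illustration; the sketch shows only the reconstruction step, not the optimization used to estimate the factors.

```python
import numpy as np

def cp_reconstruct(A, B, C):
    """Build a rank-R CP tensor from factor matrices.

    A: (rows, R), B: (cols, R), C: (endmembers, R).
    Returns the sum over r of the outer product A[:, r] o B[:, r] o C[:, r].
    """
    return np.einsum("ir,jr,kr->ijk", A, B, C)
```

For example, with `R = 2` and factors of shapes `(6, 2)`, `(5, 2)`, and `(4, 2)`, the result is a `6 x 5 x 4` tensor whose unfoldings have rank at most 2, regardless of the spatial size.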

3.3.4. Urban (ABU-1)

For the ABU Urban−1 dataset, the estimated number of endmembers was two, as shown in

Figure 4c, and the estimated CP rank was

.

In contrast with Dm-1, Dm-2, Dm-3, and Dm-4, the ATLSS background model imposed a CP regularization constraint and TV regularization on the abundance tensor, and an excellent AD performance was achieved when the parameters were

, as shown in

Table 2. A higher detection result would lead to a few anomaly pixels being regarded as background pixels, resulting in a lower false-alarm rate. Thus, as shown in

Table 2 and

Figure 11d, the

values of Dm-1, Dm-2, Dm-3, Dm-4, and ATLSS increased gradually, while the

values in

Table 2 also increased. Furthermore, one can observe that the

of RX was the lowest and the

the highest among all the compared methods. However,

Figure 12d shows that ATLSS and its degradation models achieved superior separation between the anomaly and background. In general, the evaluation metrics mentioned above validated that the proposed ATLSS method outperformed the other methods in both qualitative and quantitative aspects.

3.3.5. Urban (ABU-2)

For the ABU Urban−2 dataset, the estimated number of endmembers was three, as shown in

Figure 5c, and the estimated CP rank was

.

We obtained the best performance when we set the parameters as

. As shown in

Table 2, the detection result

of ATLSS was higher than those of Dm-1, Dm-2, Dm-3, and Dm-4. In contrast, the

value of ATLSS was slightly higher than those of the four degradation models. This model suffered from a much higher detection result, which caused a few anomaly pixels to be detected as background pixels. However, its efficient separation between the anomaly and background is shown in

Figure 6e, demonstrating that it is still a competitive AD method. For the Urban (ABU-2) dataset, the results of the tensor-based PTA and ATLSS methods were evenly matched, as shown in

Table 2 and

Figure 11 and

Figure 12. However, it is worth noting that the

value of ATLSS was lower than that of PTA, which demonstrated that the abundance maps possessed more distinctive features than the original data and enabled the more accurate identification of the anomaly and the background; hence, the model achieved outstanding performance.

We evaluated the proposed ATLSS method in extensive comparison experiments on the five datasets and summarize its advantages as follows:

- (1) Effectiveness: The proposed ATLSS method decomposed the background into an abundance tensor and endmember matrix. The structural characteristics of the abundance tensor were fully explored, i.e., the local spatial continuity and the high abundance vector correlations, which contributed to reconstructing a more accurate abundance tensor for the background. The proposed ATLSS model performed excellently compared to its degradation models Dm-1, Dm-2, Dm-3, and Dm-4.

- (2) Performance: Eight comparison algorithms were presented to sufficiently demonstrate the performance of the proposed method. Compared to RX, ATLSS achieved more accurate AD performance thanks to the low-rank and sparse assumptions. The deep learning method GVAE required a long training time while achieving only moderate performance compared with ATLSS. Compared to the tensor-based methods PTA, TRPCA, and LRTDTV, the proposed ATLSS method exploited the abundance tensor’s physical meaning, which provided more distinctive features than the raw data, achieving outstanding AD performance.
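The decomposition of the background into an abundance tensor and an endmember matrix summarized above can be sketched as follows. The sketch assumes a linear mixing model in which the background is the mode-3 product of the abundance tensor with the endmember matrix; the function name `reconstruct_background` and the array shapes are hypothetical.

```python
import numpy as np

def reconstruct_background(abundance, endmembers):
    """Mode-3 product of an abundance tensor with an endmember matrix.

    abundance:  (rows, cols, R) abundance of R endmembers at each pixel.
    endmembers: (bands, R) spectral signature of each endmember.
    Returns a background cube of shape (rows, cols, bands).
    """
    return np.einsum("ijr,br->ijb", abundance, endmembers)
```

Under this model, a pixel whose abundance vector is one-hot reproduces the corresponding endmember spectrum exactly, and an anomaly map can be obtained from the residual between the observed cube and the reconstructed background.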