PMPF: Point-Cloud Multiple-Pixel Fusion-Based 3D Object Detection for Autonomous Driving

Abstract

1. Introduction

- General: PMPF is a plug-and-play method that yields significant improvements when combined with state-of-the-art (SOTA) LiDAR-only detectors.

- Accurate: Detectors that use PMPF for data fusion, such as PV-RCNN and CIA-SSD, achieve higher mAP in all categories on the KITTI test set and effectively reduce false detections.

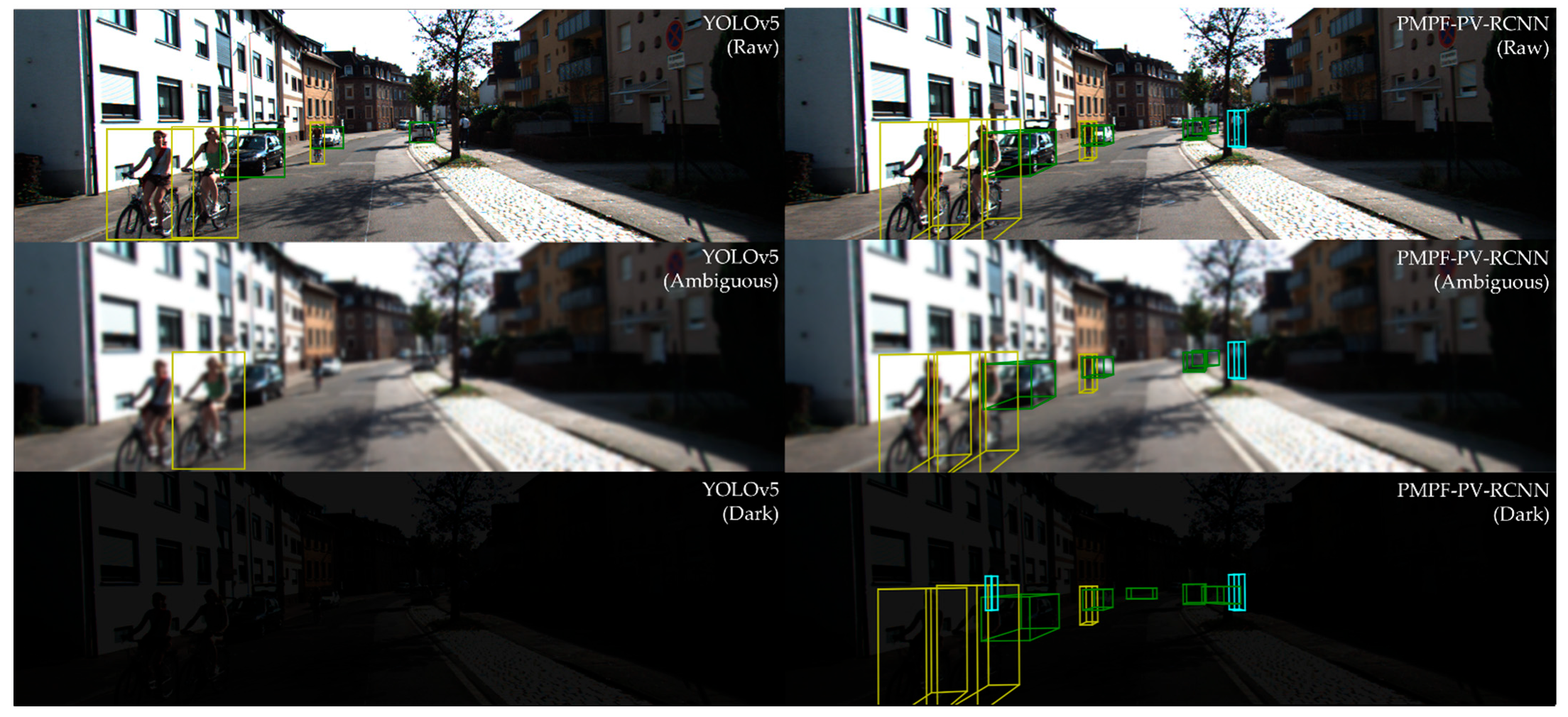

- Robust: PMPF maintains correct detection of key targets even when image quality is low.

- Fast: PMPF adds little latency in the pre-fusion phase and can be applied directly to real-time detectors without sacrificing their real-time performance.

2. Related Works

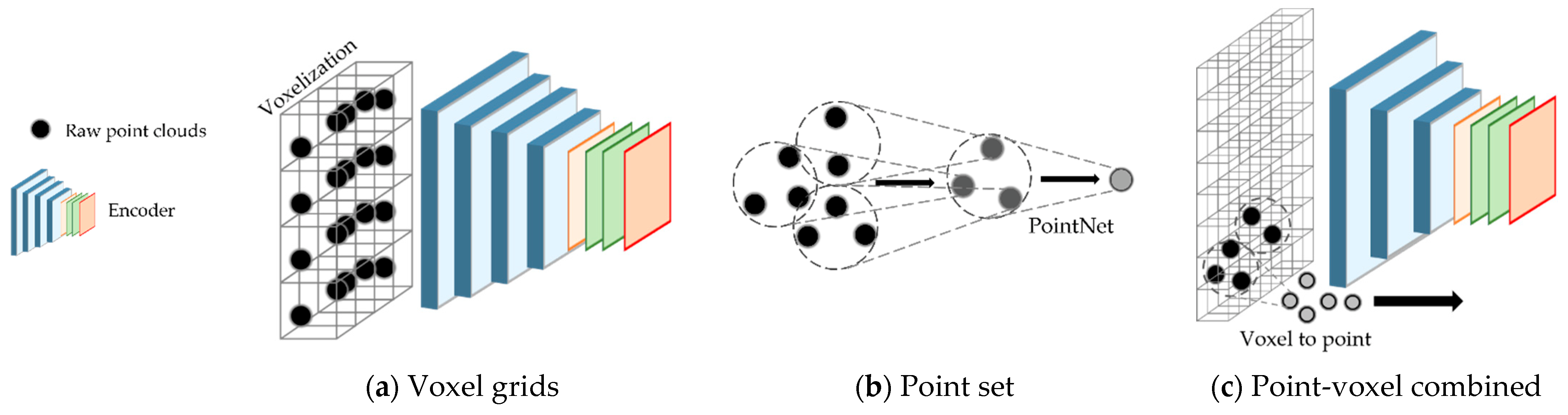

2.1. LiDAR-Only 3D Object Detector

2.2. LiDAR-Camera Fusion-Based 3D Object Detector

3. Method

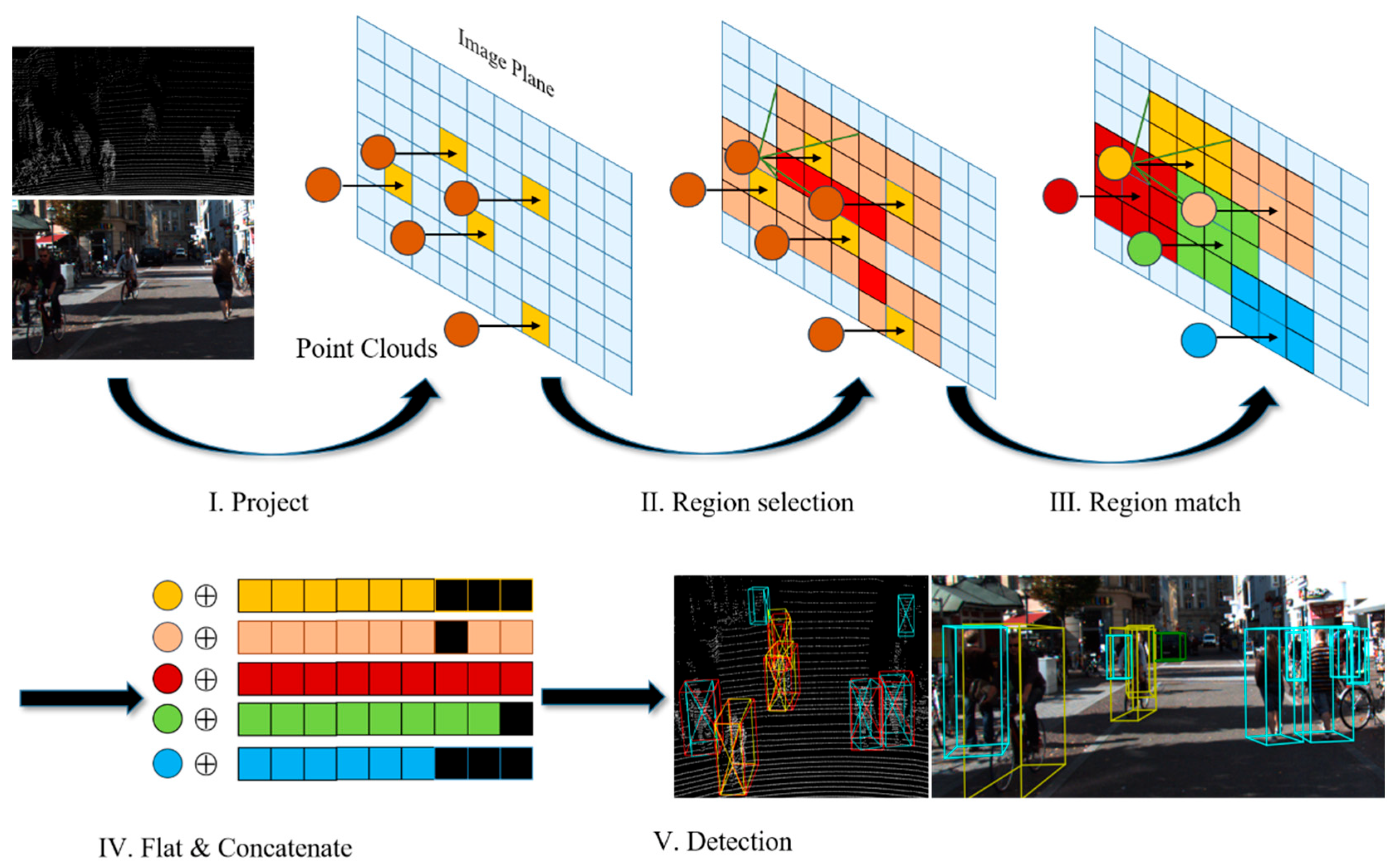

3.1. PMPF Method

| Algorithm 1 Point Cloud-Multiple Pixels Fusion (, , , , ) |
|---|
| Inputs: |
| Point clouds with N points. |
| Image with width W, height H, and 3 channels. |
| Region size, which must be an odd number. |
| Homogeneous transformation matrix. |
| Camera matrix. |
| Output: |
| Fused point clouds. |
| for do |
| end for |
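The projection, region selection, and flatten-and-concatenate steps of Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `fuse_points`, `lidar_to_cam` (a 4 × 4 homogeneous LiDAR-to-camera transform) and `cam` (a 3 × 4 camera projection matrix) are hypothetical, points that project outside the image are dropped, off-image pixels inside a border region are zero-filled, and the region match step (Section 3.1.2) is omitted.

```python
from typing import List, Optional, Sequence, Tuple

Matrix = List[List[float]]
Image = List[List[List[float]]]  # image[v][u] -> [r, g, b]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def project_point(xyz: Sequence[float], lidar_to_cam: Matrix,
                  cam: Matrix) -> Optional[Tuple[int, int]]:
    """Project a 3D LiDAR point to the nearest pixel (u, v); None if behind the camera."""
    p = [[xyz[0]], [xyz[1]], [xyz[2]], [1.0]]    # homogeneous coordinates
    uvw = matmul(cam, matmul(lidar_to_cam, p))  # 3x4 @ (4x4 @ 4x1) -> 3x1
    w = uvw[2][0]
    if w <= 0:
        return None
    return int(round(uvw[0][0] / w)), int(round(uvw[1][0] / w))


def fuse_points(points: Sequence[Sequence[float]], image: Image, k: int,
                lidar_to_cam: Matrix, cam: Matrix) -> List[List[float]]:
    """Append the flattened K x K RGB region around each point's projection."""
    if k < 1 or k % 2 == 0:
        raise ValueError("region size must be a positive odd number")
    h, w = len(image), len(image[0])
    r = k // 2
    fused = []
    for pt in points:                          # pt = [x, y, z, intensity]
        uv = project_point(pt[:3], lidar_to_cam, cam)
        if uv is None or not (0 <= uv[0] < w and 0 <= uv[1] < h):
            continue                           # assumption: drop points outside the image
        u, v = uv
        region: List[float] = []
        for dv in range(-r, r + 1):            # row-major scan of the K x K window
            for du in range(-r, r + 1):
                uu, vv = u + du, v + dv
                if 0 <= uu < w and 0 <= vv < h:
                    region.extend(image[vv][uu])
                else:
                    region.extend([0.0, 0.0, 0.0])  # assumption: zero-fill off-image pixels
        fused.append(list(pt) + region)        # 4 + 3*K*K values per point
    return fused
```

Each fused point keeps its original four values and gains 3·K² RGB values, so a region size of 3 yields 31 features per point.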

3.1.1. Region Selection

3.1.2. Region Match

3.1.3. Flat and Concatenate

3.2. 3D Object Detection

4. Experimental Setup

4.1. Dataset

- Easy: Minimum bounding box height: 40 px; maximum occlusion level: fully visible; maximum truncation: 15%;

- Moderate: Minimum bounding box height: 25 px; maximum occlusion level: partly occluded; maximum truncation: 30%;

- Hard: Minimum bounding box height: 25 px; maximum occlusion level: difficult to see; maximum truncation: 50%.
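The difficulty thresholds above can be expressed as a small classifier. This sketch assumes KITTI's integer occlusion labels (0 = fully visible, 1 = partly occluded, 2 = difficult to see, 3 = unknown) and truncation given as a fraction in [0, 1]; the function name `kitti_difficulty` is hypothetical.

```python
from typing import Optional

# (level, minimum 2D box height in px, maximum occlusion label, maximum truncation)
LEVELS = [
    ("Easy", 40.0, 0, 0.15),
    ("Moderate", 25.0, 1, 0.30),
    ("Hard", 25.0, 2, 0.50),
]


def kitti_difficulty(box_height_px: float, occlusion: int,
                     truncation: float) -> Optional[str]:
    """Return the easiest difficulty level an object satisfies, or None if ignored."""
    for name, min_height, max_occlusion, max_truncation in LEVELS:
        if (box_height_px >= min_height and occlusion <= max_occlusion
                and truncation <= max_truncation):
            return name
    return None  # too small, too occluded, or too truncated for every level
```

In the KITTI evaluation the levels are cumulative: an object that meets the Easy criteria is also counted under Moderate and Hard, which is why returning only the easiest satisfied level is enough to recover all three memberships.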

4.2. PMPF Setup

4.3. 3D Object Detection

5. Result and Analysis

5.1. Quantitative Analysis

5.1.1. KITTI Validation Set

5.1.2. KITTI Test Set

5.1.3. Performance Disadvantage Analysis

- Compared with the LiDAR-only detectors, performance degradation occurred mainly in the car category, whose targets have well-defined geometric features. Because PMPF makes the detector sensitive to texture information, it may reduce the sensitivity of several detectors to targets with explicit geometric features.

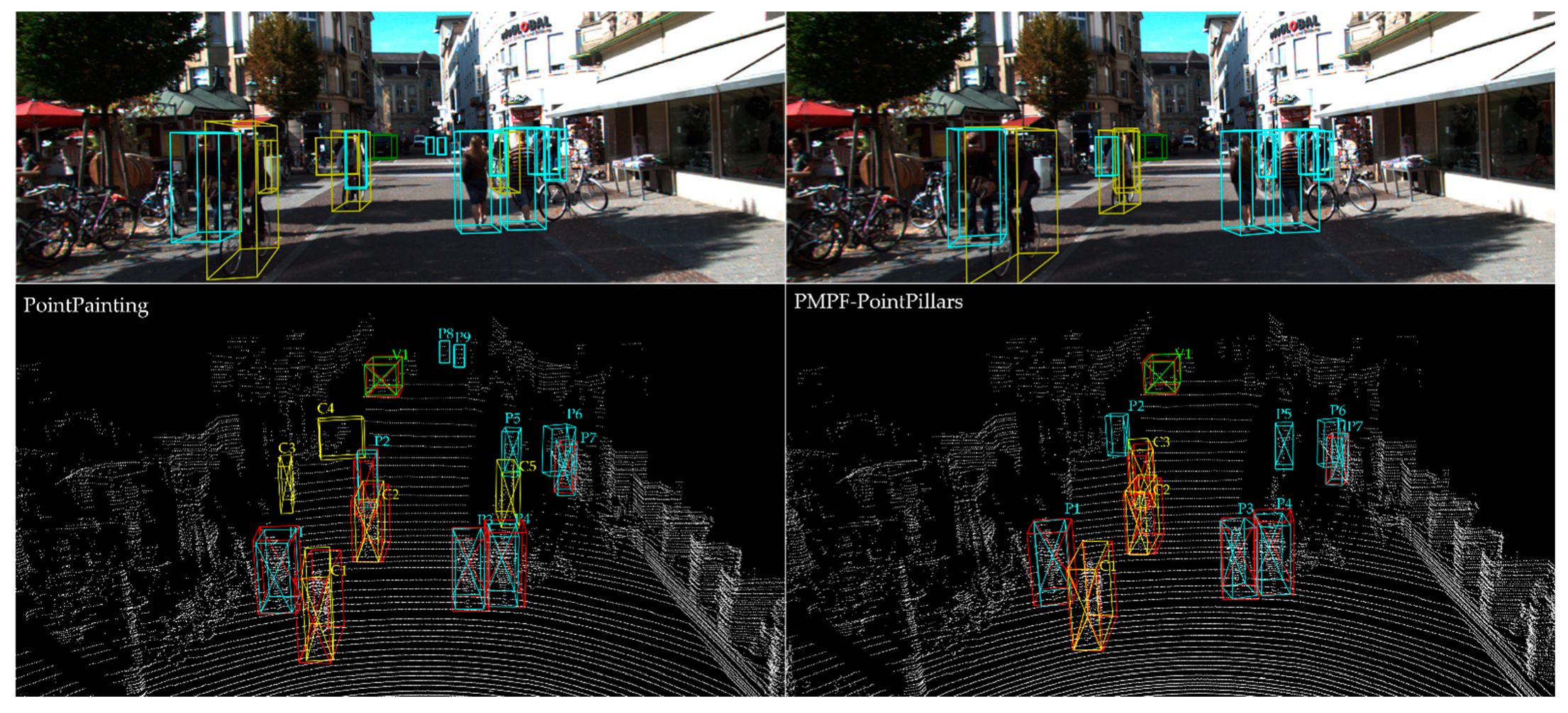

- Compared with the fusion-based models, the performance gap appears mainly in the more difficult pedestrian and cyclist categories. The comparison with PointPainting [9] is discussed in detail in Section 5.2; this section focuses on the gap with F-ConvNet [27] in the cyclist category. F-ConvNet generates region proposals in the image, builds the corresponding frustums in 3D space, extracts features from them, and improves small-target detection through multi-resolution frustum feature fusion. In contrast, PMPF improves the detection of targets with sparse geometric information by directly fusing the multiple pixels matched to each point, which yields a general accuracy gain on small targets over the LiDAR-only detector. However, PMPF is inevitably less sensitive to image content than dedicated 2D perception models.

- Adaptive fusion data selection. PMPF is a sequence-based multimodal fusion model. In the pre-fusion stage, the amount of fused image information could be selected according to how distinct the geometric features are (e.g., point cloud density), using an adaptive mechanism such as self-attention. Dense point clouds would receive few or no pixels and sparse point clouds would receive more; the fused data would then be resampled to a common size before being fed into the detector, so that targets with widely varying densities in the scene are detected accurately.

- Multi-stage fusion strategy. Increasing the fusion of 2D–3D features inside the network would allow texture information to be exploited for more accurate detection.
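The adaptive selection idea in the first bullet can be illustrated with a deliberately simple rule. This is a hypothetical sketch, not part of PMPF: it replaces the self-attention mechanism mentioned above with a fixed neighbor-count threshold, and the names `local_density` and `adaptive_region_sizes`, the 0.5 m radius, and both thresholds are illustrative assumptions. A region size of 0 means that no pixels are fused.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def local_density(points: Sequence[Point], radius: float) -> List[int]:
    """Count the other points within `radius` of each point (brute force, O(N^2))."""
    return [sum(1 for j, q in enumerate(points) if j != i and math.dist(p, q) <= radius)
            for i, p in enumerate(points)]


def adaptive_region_sizes(points: Sequence[Point], radius: float = 0.5,
                          dense: int = 8, sparse: int = 2) -> List[int]:
    """Hypothetical rule: dense -> K = 0 (no pixels), medium -> K = 1, sparse -> K = 3."""
    sizes = []
    for n in local_density(points, radius):
        if n >= dense:
            sizes.append(0)  # geometry already distinctive: skip image data
        elif n >= sparse:
            sizes.append(1)  # fuse only the projected pixel
        else:
            sizes.append(3)  # sparse geometry: fuse a 3 x 3 pixel region
    return sizes
```

Because points would then carry 0, 3, or 27 image values, their features would still need to be padded or resampled to a common length before entering the detector, as the bullet describes.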

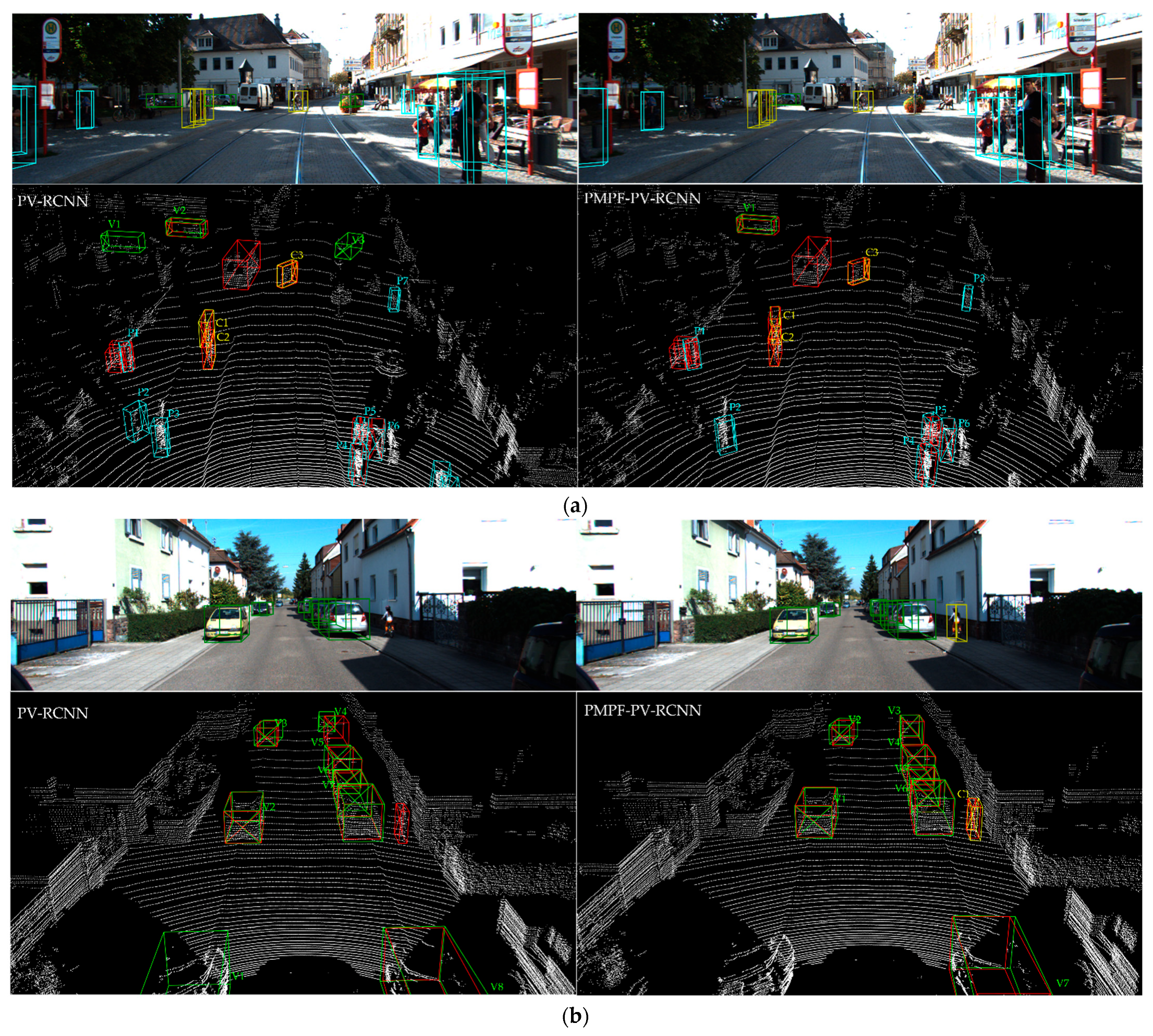

5.2. Qualitative Analysis

5.3. Time Latency

5.4. Ablation Studies

5.4.1. Effect of the Image Quality

5.4.2. Effect of the PMPF Components

- Some of the projected points lie at the edges of the image plane, so their regions cannot be extended in certain directions to obtain pixel information;

- Most of the projected points are densely packed in the horizontal direction, so multiple points repeatedly acquire the same pixels; in this case (region size 3 without region match), the ARR reaches 33.86%.
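The two effects above can be reproduced with a small counting experiment. The formal definitions of AUR and ARR are not given in this section, so the sketch assumes that AUR is the share of image pixels read at least once and ARR is the number of repeated pixel reads relative to the number of distinct pixels read (which allows ARR to exceed 100%, as in the region-size-5 rows of the ablation tables). Windows are clipped at the image border, mirroring the first bullet; `pixel_usage` is a hypothetical helper.

```python
from typing import Iterable, Tuple


def pixel_usage(projections: Iterable[Tuple[int, int]], width: int,
                height: int, k: int) -> Tuple[float, float]:
    """Return (AUR, ARR) in percent under the assumed definitions above."""
    r = k // 2
    acquired = 0    # every in-image pixel read, counting repeats
    unique = set()  # distinct pixels read at least once
    for u, v in projections:
        for dv in range(-r, r + 1):
            for du in range(-r, r + 1):
                uu, vv = u + du, v + dv
                if 0 <= uu < width and 0 <= vv < height:  # clip at the image border
                    acquired += 1
                    unique.add((uu, vv))
    if not unique:
        return 0.0, 0.0
    aur = 100.0 * len(unique) / (width * height)
    arr = 100.0 * (acquired - len(unique)) / len(unique)
    return aur, arr
```

For example, two horizontally adjacent projections at (1, 0) and (2, 0) in a 4 × 3 image with a region size of 3 read 12 pixels but only 8 distinct ones: each window loses its top row at the border, and the two windows overlap, giving an AUR of about 66.7% and an ARR of 50%.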

5.4.3. Effect of the Clustering Conditions and Execution Configuration of K-Means Clustering

6. Conclusions

- PMPF fuses image data into every point, which wastes computation on background points;

- PMPF’s sensitivity to targets with significant geometric features is reduced compared with LiDAR-only detectors;

- PMPF’s accuracy on hard-difficulty targets still lags behind some SOTA fusion-based methods;

- The PMPF method was trained and tested only on public datasets; therefore, factors that affect LiDAR and cameras, such as rain, snow, dust, and illumination conditions, may degrade its generalization performance.

- Segmenting the foreground from the background and performing accurate data fusion only in the foreground, without sacrificing real-time performance;

- Adaptively selecting the fused image information: little or no image fusion for point clouds with significant geometric features, and more image fusion for sparse objects;

- Combining multiple levels of multimodal fusion, such as a deep network that integrates data fusion and feature fusion, to enhance object detection performance;

- Fusing information from additional sensors, such as radar and IMU, to obtain a more accurate and richer environment encoding that improves detector performance;

- Enhancing generalization through data augmentation or training on real-world data.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Duarte, F. Self-Driving Cars: A City Perspective. Sci. Robot. 2019, 4, eaav9843.

- Guo, J.; Kurup, U.; Shah, M. Is It Safe to Drive? An Overview of Factors, Metrics, and Datasets for Driveability Assessment in Autonomous Driving. IEEE Trans. Intell. Transport. Syst. 2020, 21, 3135–3151.

- Bigman, Y.E.; Gray, K. Life and Death Decisions of Autonomous Vehicles. Nature 2020, 579, E1–E2.

- Huang, P.; Cheng, M.; Chen, Y.; Luo, H.; Wang, C.; Li, J. Traffic Sign Occlusion Detection Using Mobile Laser Scanning Point Clouds. IEEE Trans. Intell. Transport. Syst. 2017, 18, 2364–2376.

- Chen, L.; Zou, Q.; Pan, Z.; Lai, D.; Zhu, L.; Hou, Z.; Wang, J.; Cao, D. Surrounding Vehicle Detection Using an FPGA Panoramic Camera and Deep CNNs. IEEE Trans. Intell. Transport. Syst. 2020, 21, 5110–5122.

- Wang, J.-G.; Zhou, L.-B. Traffic Light Recognition With High Dynamic Range Imaging and Deep Learning. IEEE Trans. Intell. Transport. Syst. 2019, 20, 1341–1352.

- Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision Meets Robotics: The KITTI Dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4603–4611.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection From Point Clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12689–12697.

- Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. STD: Sparse-to-Dense 3D Object Detector for Point Cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1951–1960.

- Cui, Y.; Chen, R.; Chu, W.; Chen, L.; Tian, D.; Li, Y.; Cao, D. Deep Learning for Image and Point Cloud Fusion in Autonomous Driving: A Review. IEEE Trans. Intell. Transport. Syst. 2022, 23, 722–739.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

- Zheng, W.; Tang, W.; Chen, S.; Jiang, L.; Fu, C.-W. CIA-SSD: Confident IoU-Aware Single-Stage Object Detector From Point Cloud. Proc. AAAI Conf. Artif. Intell. 2021, 35, 3555–3562.

- Zheng, W.; Tang, W.; Jiang, L.; Fu, C.-W. SE-SSD: Self-Ensembling Single-Stage Object Detector from Point Cloud. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14494–14503.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection from Point Cloud. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

- Shi, S.; Guo, C.; Yang, J.; Li, H. PV-RCNN: The Top-Performing LiDAR-Only Solutions for 3D Detection / 3D Tracking / Domain Adaptation of Waymo Open Dataset Challenges. arXiv 2020, arXiv:2008.12599.

- Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From Points to Parts: 3D Object Detection from Point Cloud with Part-Aware and Part-Aggregation Network. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2647–2664.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Red Hook, NY, USA, 4 December 2017; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Graham, B.; Engelcke, M.; van der Maaten, L. 3D Semantic Segmentation with Submanifold Sparse Convolutional Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232.

- Zhang, J.; Wang, J.; Xu, D.; Li, Y. HCNET: A Point Cloud Object Detection Network Based on Height and Channel Attention. Remote Sens. 2021, 13, 5071.

- Ge, R.; Ding, Z.; Hu, Y.; Wang, Y.; Chen, S.; Huang, L.; Li, Y. AFDet: Anchor Free One Stage 3D Object Detection. arXiv 2020, arXiv:2006.12671.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection From RGB-D Data. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927.

- Wang, Z.; Jia, K. Frustum ConvNet: Sliding Frustums to Aggregate Local Point-Wise Features for Amodal 3D Object Detection. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1742–1749.

- Du, X.; Ang, M.H.; Karaman, S.; Rus, D. A General Pipeline for 3D Detection of Vehicles. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 3194–3200.

- Shin, K.; Kwon, Y.P.; Tomizuka, M. RoarNet: A Robust 3D Object Detection Based on RegiOn Approximation Refinement. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2510–2515.

- Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. IPOD: Intensive Point-Based Object Detector for Point Cloud. arXiv 2018, arXiv:1812.05276.

- Imad, M.; Doukhi, O.; Lee, D.-J. Transfer Learning Based Semantic Segmentation for 3D Object Detection from Point Cloud. Sensors 2021, 21, 3964.

- Huang, T.; Liu, Z.; Chen, X.; Bai, X. EPNet: Enhancing Point Features with Image Semantics for 3D Object Detection. arXiv 2020, arXiv:2007.08856.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915.

- Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D Proposal Generation and Object Detection from View Aggregation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–8.

- Lu, H.; Chen, X.; Zhang, G.; Zhou, Q.; Ma, Y.; Zhao, Y. SCANet: Spatial-Channel Attention Network for 3D Object Detection. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 1992–1996.

- Yuan, Q.; Mohd Shafri, H.Z. Multi-Modal Feature Fusion Network with Adaptive Center Point Detector for Building Instance Extraction. Remote Sens. 2022, 14, 4920.

- Zheng, W.; Xie, H.; Chen, Y.; Roh, J.; Shin, H. PIFNet: 3D Object Detection Using Joint Image and Point Cloud Features for Autonomous Driving. Appl. Sci. 2022, 12, 3686.

- Liu, L.; He, J.; Ren, K.; Xiao, Z.; Hou, Y. A LiDAR–Camera Fusion 3D Object Detection Algorithm. Information 2022, 13, 169.

- Wang, J.; Zhu, M.; Wang, B.; Sun, D.; Wei, H.; Liu, C.; Nie, H. KDA3D: Key-Point Densification and Multi-Attention Guidance for 3D Object Detection. Remote Sens. 2020, 12, 1895.

- Wang, J.; Zhu, M.; Sun, D.; Wang, B.; Gao, W.; Wei, H. MCF3D: Multi-Stage Complementary Fusion for Multi-Sensor 3D Object Detection. IEEE Access 2019, 7, 90801–90814.

- Pang, S.; Morris, D.; Radha, H. CLOCs: Camera-LiDAR Object Candidates Fusion for 3D Object Detection. arXiv 2020, arXiv:2009.00784.

- Liang, M.; Yang, B.; Wang, S.; Urtasun, R. Deep Continuous Fusion for Multi-Sensor 3D Object Detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 641–656.

- Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-Task Multi-Sensor Fusion for 3D Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7345–7353.

- Sindagi, V.A.; Zhou, Y.; Tuzel, O. MVX-Net: Multimodal VoxelNet for 3D Object Detection. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 7276–7282.

- Song, S.; Xiao, J. Sliding Shapes for 3D Object Detection in Depth Images. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 634–651.

- Song, S.; Xiao, J. Deep Sliding Shapes for Amodal 3D Object Detection in RGB-D Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 808–816.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Wang, S.; Suo, S.; Ma, W.-C.; Pokrovsky, A.; Urtasun, R. Deep Parametric Continuous Convolutional Neural Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2589–2597.

- Krishna, K.; Narasimha Murty, M. Genetic K-Means Algorithm. IEEE Trans. Syst. Man Cybern. Part B Cybern. 1999, 29, 433–439.

- Jain, A.K. Data Clustering: 50 Years beyond K-Means. Pattern Recognit. Lett. 2010, 31, 651–666.

- Qian, R.; Lai, X.; Li, X. 3D Object Detection for Autonomous Driving: A Survey. Pattern Recognit. 2022, 130, 108796.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

| Method | RT (ms) | mAP (Mod.) | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars [10] | 16 | 66.96 | 87.22 | 76.95 | 73.52 | 65.37 | 60.66 | 56.51 | 82.29 | 63.26 | 59.82 |
| PMPF-PointPillars | 38 | 69.44 | 86.61 | 76.71 | 75.35 | 70.69 | 66.20 | 60.53 | 82.14 | 65.41 | 58.91 |
| SECOND [13] | 38 | 67.12 | 86.85 | 76.64 | 74.41 | 67.79 | 59.84 | 52.38 | 84.92 | 64.89 | 58.59 |
| PMPF-SECOND | 60 | 68.44 | 88.18 | 78.21 | 76.88 | 69.36 | 60.35 | 54.23 | 85.52 | 66.76 | 63.38 |
| PointRCNN [16] | 100 | 67.01 | 86.75 | 76.06 | 74.30 | 63.29 | 58.32 | 51.59 | 83.68 | 66.67 | 61.92 |
| PMPF-PointRCNN | 122 | 72.26 | 88.58 | 78.43 | 77.93 | 63.90 | 58.53 | 51.49 | 87.25 | 74.46 | 68.28 |
| PV-RCNN [17] | 80 | 70.83 | 91.55 | 83.12 | 78.94 | 63.63 | 56.62 | 52.93 | 89.17 | 72.75 | 68.57 |
| PMPF-PV-RCNN | 102 | 73.52 | 89.16 | 83.66 | 78.82 | 66.59 | 61.82 | 56.94 | 90.79 | 75.08 | 70.88 |
| Part-A2 [18] | 80 | 69.79 | 89.56 | 79.41 | 78.84 | 65.68 | 60.05 | 55.45 | 85.50 | 69.90 | 65.48 |
| PMPF-Part-A2 | 101 | 70.82 | 89.44 | 79.17 | 78.67 | 64.01 | 61.25 | 58.35 | 85.56 | 72.04 | 67.12 |
| CIA-SSD [14] | 31 | - * | 89.89 | 79.63 | 78.65 | - | - | - | - | - | - |
| PMPF-CIA-SSD | 53 | - | 89.66 | 80.95 | 78.90 | - | - | - | - | - | - |
| SE-SSD [15] | 31 | - | 93.19 | 86.12 | 83.31 | - | - | - | - | - | - |
| PMPF-SE-SSD | 53 | - | 94.20 | 85.45 | 83.68 | - | - | - | - | - | - |
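The mAP (Mod.) column in the validation table appears to be the unweighted mean of the three moderate-difficulty class APs (Car, Pedestrian, Cyclist). The following check reproduces it for four rows; the dictionary and function names are illustrative.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple

# Moderate APs (Car, Pedestrian, Cyclist) and reported mAP (Mod.), copied from the table.
ROWS: Dict[str, Tuple[Tuple[float, float, float], float]] = {
    "PointPillars": ((76.95, 60.66, 63.26), 66.96),
    "PMPF-PointPillars": ((76.71, 66.20, 65.41), 69.44),
    "PV-RCNN": ((83.12, 56.62, 72.75), 70.83),
    "PMPF-PV-RCNN": ((83.66, 61.82, 75.08), 73.52),
}


def moderate_map(class_aps: Sequence[float]) -> float:
    """Unweighted mean of per-class moderate APs, rounded to two decimals like the table."""
    return round(mean(class_aps), 2)


for name, (aps, reported) in ROWS.items():
    print(f"{name:18s} computed {moderate_map(aps):.2f}  reported {reported:.2f}")
```

Most rows in the table agree with this formula to within rounding. The PMPF-PointRCNN row is an exception: its moderate APs (78.43, 58.53, 74.46) average to about 70.47 rather than the reported 72.26, which may indicate a transcription error in one of that row's cells.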

| Method | RT (s) | mAP (Mod.) | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars [10] (base) | 0.016 | 66.96 | 87.22 | 76.95 | 73.52 | 65.37 | 60.66 | 56.51 | 82.29 | 63.26 | 59.82 |
| PointPainting [9] | 0.316 | 69.03 | 86.26 | 76.77 | 70.25 | 71.50 | 66.15 | 61.03 | 79.12 | 64.18 | 60.79 |
| PMPF-PointPillars | 0.037 | 69.44 | 86.61 | 76.71 | 75.35 | 70.69 | 66.20 | 60.53 | 82.14 | 65.41 | 58.91 |

| Method | Modality | mAP (Mod.) | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MV3D [33] | L+I | - * | 74.97 | 63.63 | 54.00 | - | - | - | - | - | - |
| AVOD-FPN [34] | L+I | 54.86 | 83.07 | 71.76 | 65.73 | 50.46 | 42.27 | 39.04 | 63.76 | 50.55 | 44.93 |
| F-PointNet [26] | L+I | 59.35 | 82.19 | 69.79 | 50.59 | 50.53 | 42.15 | 38.08 | 72.27 | 56.12 | 49.01 |
| F-ConvNet [27] | L+I | 61.61 | 87.36 | 76.39 | 66.59 | 52.16 | 43.38 | 38.80 | 81.98 | 65.07 | 56.54 |
| PointPainting [9] | L+I | 58.82 | 82.11 | 71.70 | 67.08 | 50.32 | 40.97 | 37.87 | 77.63 | 63.78 | 55.89 |
| SECOND [13] | L | - | 84.65 | 75.96 | 68.71 | - | - | - | - | - | - |
| PointPillars [10] | L | 58.29 | 82.58 | 74.31 | 68.99 | 51.45 | 41.92 | 38.89 | 77.10 | 58.65 | 51.92 |
| PointRCNN [16] | L | 57.94 | 86.96 | 75.64 | 70.70 | 47.98 | 39.37 | 36.01 | 74.96 | 58.82 | 52.53 |
| Part-A2 [18] | L | 61.78 | 87.81 | 78.49 | 73.51 | 53.10 | 43.35 | 40.06 | 79.17 | 63.52 | 56.93 |
| STD [11] | L | 61.25 | 87.95 | 79.71 | 75.09 | 53.29 | 42.47 | 38.35 | 78.69 | 61.59 | 55.30 |
| PV-RCNN [17] | L | 62.81 | 90.25 | 81.43 | 76.82 | 52.17 | 43.29 | 40.29 | 78.60 | 63.71 | 57.65 |
| CIA-SSD [14] | L | - | 89.59 | 80.28 | 72.87 | - | - | - | - | - | - |
| SE-SSD [15] | L | - | 91.49 | 82.54 | 77.15 | - | - | - | - | - | - |
| PMPF-PV-RCNN | L+I | 63.62 | 90.23 | 82.13 | 77.25 | 52.20 | 44.50 | 40.36 | 79.09 | 64.23 | 56.95 |

| Region Size | Projection (ms) | Region Selection (ms) | Region Match (ms), Frame (Region) | Encoding (ms), PointPillars | Total Latency (ms) |
|---|---|---|---|---|---|
| K = 1 | 0.092 | 0.007 | 0.000 (0.000) | 0.051 | 0.150 |
| K = 3 | 0.092 | 0.044 | 20.723 (0.617) | 0.662 | 21.521 |
| K = 5 | 0.092 | 0.097 | 60.031 (1.622) | 1.352 | 61.572 |
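In the latency table, the total for each region size is the sum of the four stage latencies (using the per-frame region match time). The sketch below reproduces that arithmetic and adds the K = 3 overhead to the 16 ms PointPillars runtime; K = 3 is the configuration whose accuracy matches the PMPF-SECOND and PMPF-PV-RCNN rows in the ablation tables. The names are illustrative.

```python
from typing import Dict, Tuple

# Stage latencies in ms: projection, region selection, region match (per frame), encoding.
STAGES: Dict[int, Tuple[float, float, float, float]] = {
    1: (0.092, 0.007, 0.000, 0.051),
    3: (0.092, 0.044, 20.723, 0.662),
    5: (0.092, 0.097, 60.031, 1.352),
}


def total_latency(stages: Tuple[float, ...]) -> float:
    """Sum the per-stage latencies, rounded to the table's three decimal places."""
    return round(sum(stages), 3)


for k, stages in STAGES.items():
    print(f"K = {k}: {total_latency(stages):.3f} ms")

# Add the K = 3 pre-fusion overhead to the 16 ms LiDAR-only PointPillars runtime.
pointpillars_ms = 16.0
print(f"PMPF-PointPillars estimate: {pointpillars_ms + total_latency(STAGES[3]):.1f} ms")
```

The estimate of about 37.5 ms is consistent with the 38 ms reported for PMPF-PointPillars, and the other detectors in the validation table show a similar overhead of roughly 22 ms (for example, 80 ms to 102 ms for PV-RCNN). Region match dominates the cost, which is why latency grows quickly with the region size.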

| Method | Image Quality | mAP (Mod.) | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PV-RCNN | - | 70.83 | 91.55 | 83.12 | 78.94 | 63.06 | 59.45 | 55.02 | 89.48 | 72.46 | 68.86 |
| PMPF-PV-RCNN | Raw | 73.52 | 89.16 | 83.66 | 78.82 | 66.59 | 61.82 | 56.94 | 90.79 | 75.08 | 70.88 |
| | Ambiguous | 73.13 | 89.03 | 83.05 | 78.51 | 66.07 | 61.47 | 55.98 | 89.65 | 74.86 | 69.72 |
| | Dark | 70.71 | 88.62 | 81.04 | 75.95 | 63.02 | 59.13 | 54.48 | 89.17 | 71.98 | 68.41 |

| Module (Input) | Region Size | Region Match | AUR/ARR (%) | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SECOND [13] (Point clouds) | - | - | - | 86.85 | 76.64 | 74.41 | 67.79 | 59.84 | 52.38 | 84.92 | 64.89 | 58.59 |
| PMPF-SECOND (Point clouds + image) | 1 | × | 8.22/1.34 | 86.43 | 76.72 | 73.98 | 68.12 | 59.75 | 51.78 | 85.03 | 64.76 | 59.02 |
| | 3 | × | 55.27/33.86 | 87.93 | 77.96 | 76.59 | 68.95 | 60.55 | 52.26 | 85.34 | 64.98 | 60.35 |
| | 3 | √ | 55.19/29.52 | 88.18 | 78.21 | 76.88 | 69.36 | 60.35 | 54.23 | 85.52 | 66.76 | 63.38 |
| | 5 | × | 85.36/144.03 | 85.29 | 74.35 | 72.01 | 67.51 | 57.26 | 49.18 | 83.52 | 63.35 | 56.25 |
| | 5 | √ | 84.55/80.84 | 87.73 | 76.12 | 74.46 | 69.02 | 60.03 | 53.55 | 85.15 | 65.56 | 57.65 |

| Module (Input) | Region Size | Region Match | AUR/ARR (%) | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PV-RCNN [17] (Point clouds) | - | - | - | 91.55 | 83.12 | 78.94 | 63.06 | 59.45 | 55.02 | 89.48 | 72.46 | 68.86 |
| PMPF-PV-RCNN (Point clouds + image) | 1 | × | 8.22/1.34 | 87.21 | 81.20 | 75.23 | 64.15 | 59.02 | 55.37 | 87.23 | 71.97 | 68.50 |
| | 3 | × | 55.27/33.86 | 88.96 | 81.18 | 76.59 | 65.25 | 61.45 | 56.22 | 88.42 | 74.23 | 69.18 |
| | 3 | √ | 55.19/29.52 | 89.16 | 83.66 | 78.82 | 66.59 | 61.82 | 56.94 | 90.79 | 75.08 | 70.88 |
| | 5 | × | 85.36/144.03 | 84.52 | 79.36 | 76.44 | 63.35 | 61.06 | 55.13 | 88.36 | 74.67 | 69.25 |
| | 5 | √ | 84.55/80.84 | 87.21 | 79.44 | 76.91 | 66.74 | 61.66 | 55.92 | 89.94 | 74.79 | 69.45 |

| Dt. | Dd. | Dr. | Clu. | Iter. | RT (ms) | AUR%/ARR% | mAP Mod. | Car | Pedestrian | Cyclist | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Easy | Mod. | Hard | Easy | Mod. | Hard | Easy | Mod. | Hard | ||||||||

| 55.27/33.86 | 72.29 | 88.96 | 81.18 | 76.59 | 65.25 | 61.45 | 56.22 | 88.42 | 74.23 | 69.18 | ||||||

| √ | 2 | 20 | 20.527 | 55.25/30.04 | 72.95 | 89.03 | 82.54 | 77.93 | 66.45 | 61.43 | 56.21 | 88.12 | 74.87 | 69.35 | ||

| √ | √ | 2 | 20 | 20.561 | 55.21/29.76 | 72.93 | 88.98 | 82.67 | 78.12 | 66.28 | 61.55 | 56.71 | 88.25 | 74.57 | 69.98 | |

| √ | √ | 2 | 20 | 20.533 | 55.23/29.88 | 73.32 | 88.85 | 83.52 | 78.66 | 66.31 | 61.62 | 56.67 | 90.44 | 74.83 | 70.36 | |

| √ | √ | √ | 2 | 20 | 20.723 | 55.19/29.52 | 73.52 | 89.16 | 83.66 | 78.82 | 66.59 | 61.82 | 56.94 | 90.79 | 75.08 | 70.88 |

| √ | √ | √ | 4 | 20 | 22.423 | 30.23/11.54 | 71.87 | 88.12 | 82.65 | 77.16 | 65.21 | 60.05 | 55.63 | 86.15 | 72.92 | 69.25 |

| √ | √ | √ | 2 | 40 | 37.589 | 55.08/28.47 | 73.44 | 89.54 | 83.35 | 79.12 | 66.76 | 61.76 | 56.73 | 90.63 | 75.21 | 71.10 |

| Module (Input) | Region Match | RT (s) | AUR/ARR (%) | mAP (Mod.) | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PMPF-PV-RCNN (Point clouds + semantic map) | × | 0.22 | 54.25/26.68 | 73.81 | 88.95 | 84.05 | 79.36 | 65.89 | 62.04 | 58.03 | 89.73 | 75.36 | 72.02 |
| | √ | 0.24 | 54.20/25.31 | 74.11 | 89.05 | 84.11 | 79.97 | 66.79 | 62.73 | 58.21 | 90.34 | 75.50 | 72.12 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Liu, K.; Bao, H.; Zheng, Y.; Yang, Y. PMPF: Point-Cloud Multiple-Pixel Fusion-Based 3D Object Detection for Autonomous Driving. Remote Sens. 2023, 15, 1580. https://doi.org/10.3390/rs15061580
