Context-Driven Feature-Focusing Network for Semantic Segmentation of High-Resolution Remote Sensing Images

Abstract

1. Introduction

- (1) There is a lack of efficient feature-focusing methods for multi-scale ground objects. HRRSI scenes typically include a wide range of artificial objects and natural elements, such as built-up areas, roads, barren land, impermeable surfaces, and trees, as shown in Figure 1. Buildings in urban environments vary widely in appearance and are typically closely aligned, while buildings in rural regions are simpler and arranged more haphazardly. Urban roads are also generally wider and more complex than rural ones. Moreover, water in urban scenes generally appears as rivers or lakes, whereas rural areas are more likely to contain ponds and ditches. Although combining low- and high-level features [16,17] can improve semantic segmentation performance, most previous studies applied the fused features directly to the entire HRRSI scene, without focusing on ground objects at different scales to further extract multi-scale information from the fused features. Therefore, how to design a feature-focusing extraction method for multi-scale objects still needs to be addressed.

- (2) There is a lack of efficient feature-focusing methods for image context. In DCNN-based semantic segmentation, features at different resolutions contribute differently to extracting ground objects at different scales. Sufficient local context is beneficial for detecting small and densely aligned objects; however, for extensive and regular farmland or other natural elements, local information may be redundant and introduce additional interference into the classification. The importance of features at different scales is therefore not the same for different ground objects, and directly using the fused features for segmentation can degrade the performance of the model.

- (1) A novel feature-focusing (FF) module is proposed. It uses a multi-scale convolution kernel group to focus on multi-scale ground objects in the fused features, extracting multi-scale spatial information at a finer level more efficiently and learning richer multi-scale feature representations.

- (2) A novel context-focusing (CF) module is proposed. It adaptively calculates focus weights based on the image context; the weights enhance the scale features relevant to the image and suppress those irrelevant to it.

- (3) CFFNet is proposed for the semantic segmentation of HRRSIs. The network enhances the multi-scale feature representation capability of the model by focusing on multi-scale features that are associated with the image context. Extensive experiments are conducted on multiclass (e.g., buildings, roads, farmland, and water) RS segmentation. The experimental results show that the proposed method outperforms SOTA methods and demonstrate the rationality and effectiveness of the proposed framework.
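The idea of context-derived focus weights in contribution (2) can be illustrated with a small sketch. It is not the authors' CF module: it assumes that the image context of each scale branch is summarized by global average pooling and that the focus weights come from a softmax across scales, whereas the actual module may use learned layers. The names `global_average`, `softmax`, and `context_focus` are hypothetical, and each per-scale feature is simplified to a single 2-D map of equal size.

```python
import math


def global_average(feature_map):
    """Mean of a 2-D feature map given as a list of rows."""
    total = sum(sum(row) for row in feature_map)
    count = sum(len(row) for row in feature_map)
    return total / count


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    norm = sum(exps)
    return [e / norm for e in exps]


def context_focus(scale_features):
    """Fuse equally sized per-scale maps with context-derived weights.

    Assumption (not from the paper): the context descriptor of a scale is
    its global average, and the focus weights are a softmax over scales.
    """
    descriptors = [global_average(f) for f in scale_features]
    weights = softmax(descriptors)
    rows, cols = len(scale_features[0]), len(scale_features[0][0])
    fused = [
        [sum(w * f[r][c] for w, f in zip(weights, scale_features))
         for c in range(cols)]
        for r in range(rows)
    ]
    return weights, fused


# Two toy scale branches: the second responds more strongly to this image,
# so it receives the larger focus weight.
weights, fused = context_focus([
    [[1.0, 1.0], [1.0, 1.0]],
    [[3.0, 3.0], [3.0, 3.0]],
])
```

Because the weights sum to one, the fused map stays within the range of the inputs; scales with a weak response are suppressed rather than removed.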

2. Materials and Methods

2.1. Related Work

2.1.1. Multi-Scale Feature Fusion-Based Semantic Segmentation Approaches

2.1.2. Attention-Based Semantic Segmentation Approaches

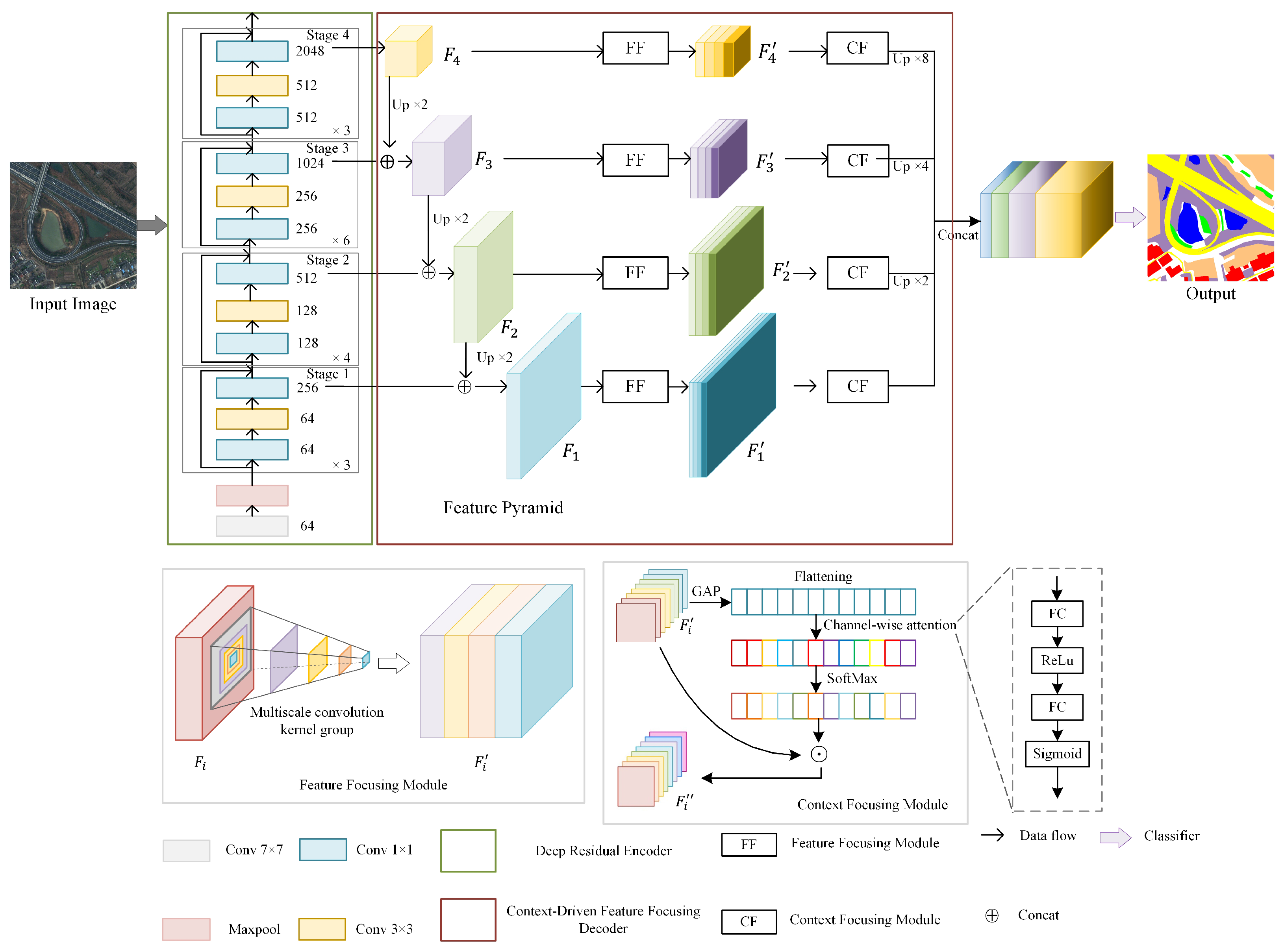

2.2. Methods

2.2.1. Deep Residual Encoder

2.2.2. Context-Driven Feature-Focusing Decoder

- (1) Feature-focusing module

- (2) Context-focusing module

2.2.3. Map Prediction and Loss Function

3. Results and Discussion

3.1. Dataset Description

3.2. Implementation

3.3. Evaluation Metrics
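The result tables in this paper report per-category IoU, mIoU, OA, and Kappa. As a minimal sketch, assuming the conventional confusion-matrix definitions of these metrics (the exact formulas used by the authors are not reproduced here), they could be computed as follows. The function name `segmentation_metrics` and the toy matrix are illustrative only.

```python
def segmentation_metrics(confusion):
    """Return (per-class IoU, mIoU, OA, Kappa) from a confusion matrix.

    confusion[i][j] is the number of pixels whose true class is i and
    whose predicted class is j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    true_counts = [sum(confusion[i]) for i in range(n)]
    pred_counts = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    correct = [confusion[i][i] for i in range(n)]

    iou = []
    for i in range(n):
        union = true_counts[i] + pred_counts[i] - correct[i]
        # Assumption: a class absent from both labels and predictions
        # is scored 0.0 here; other conventions skip such classes.
        iou.append(correct[i] / union if union else 0.0)
    miou = sum(iou) / n

    oa = sum(correct) / total  # overall accuracy
    # Agreement expected by chance, from the marginal distributions.
    expected = sum(t * p for t, p in zip(true_counts, pred_counts)) / total ** 2
    kappa = (oa - expected) / (1 - expected)
    return iou, miou, oa, kappa


# Toy two-class example with 10 pixels.
iou, miou, oa, kappa = segmentation_metrics([[3, 1], [1, 5]])
```

The tables list IoU, mIoU, and OA as percentages, so the fractions returned here would be multiplied by 100 for comparison.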

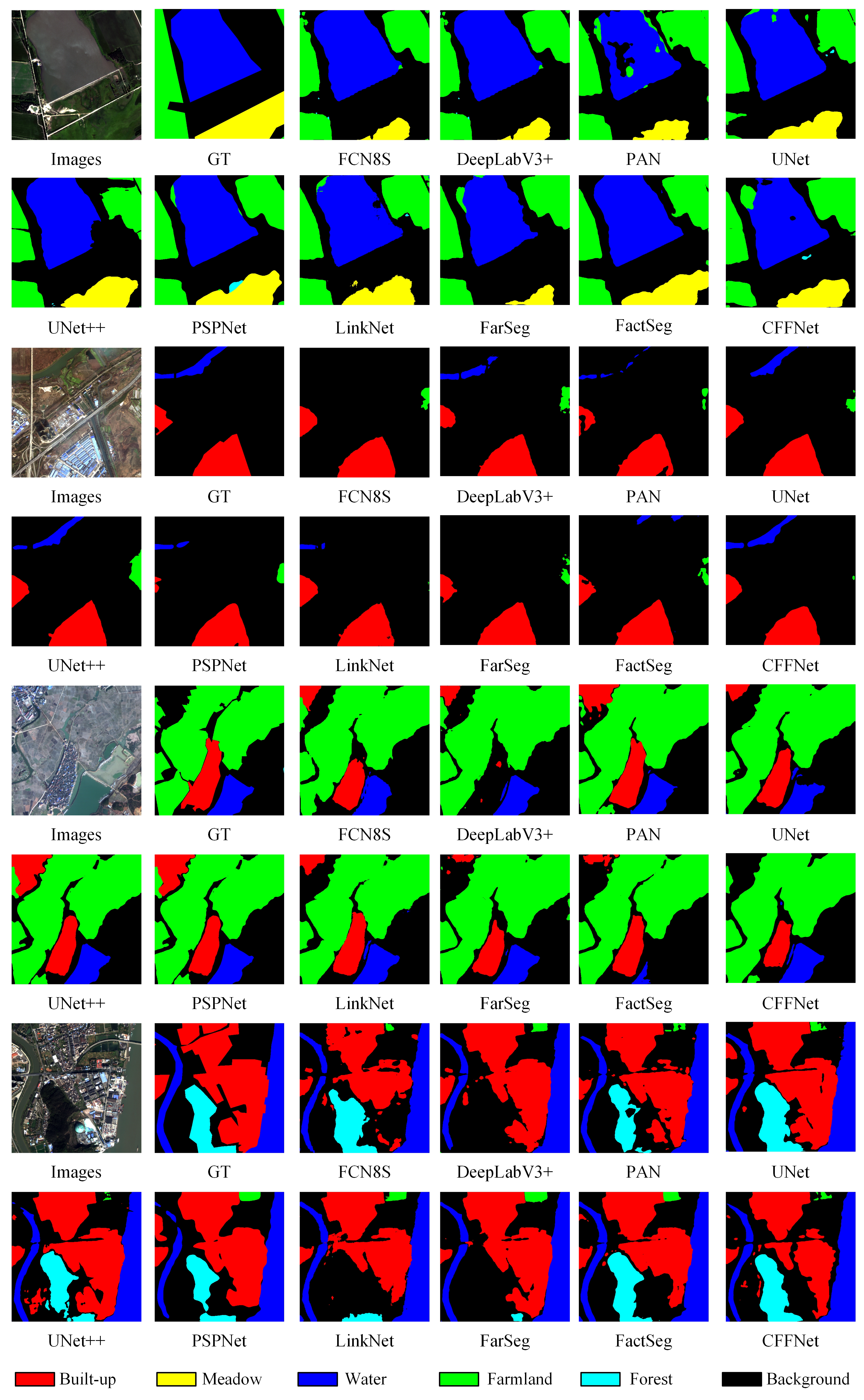

3.4. Experiments on the GID Dataset

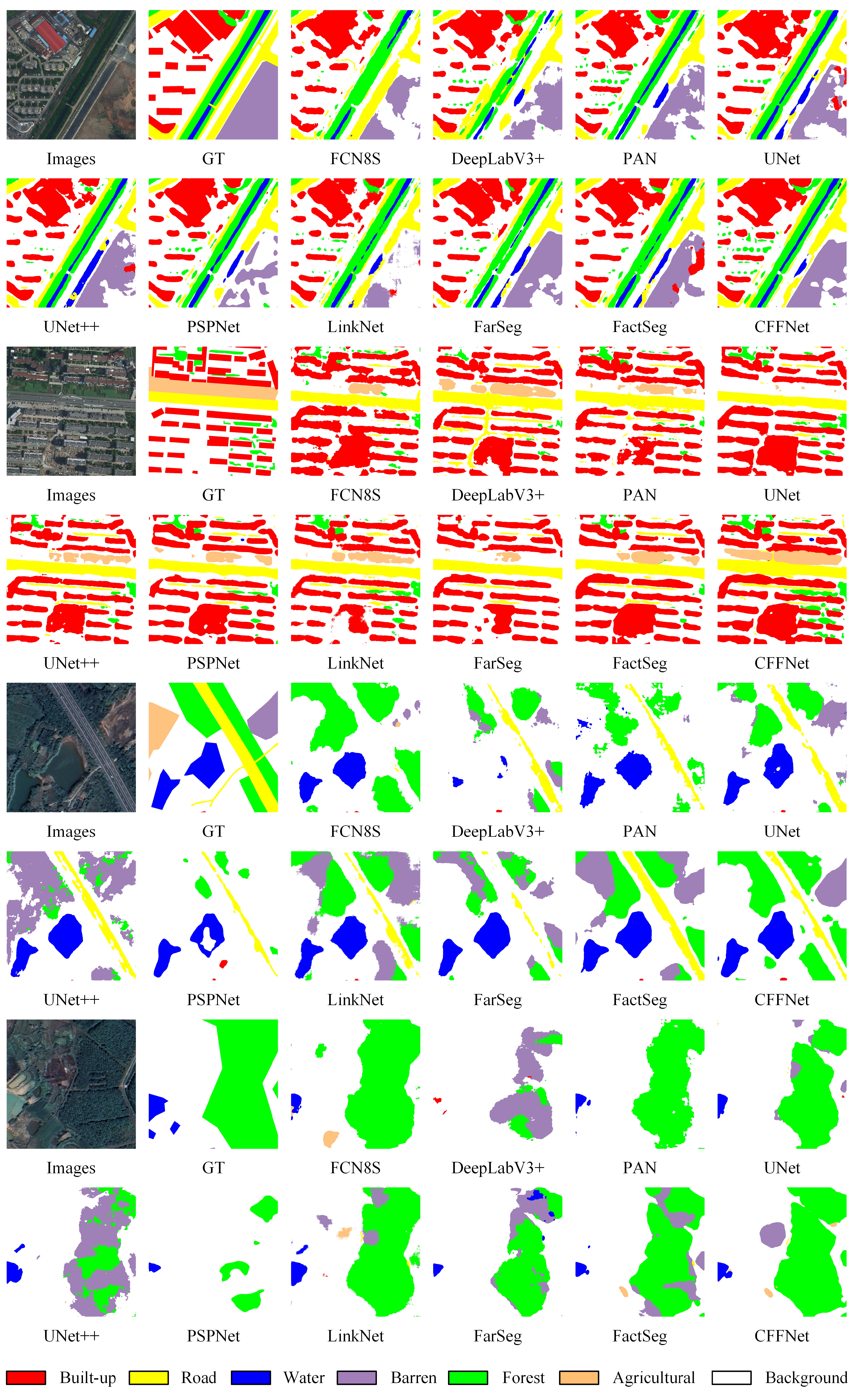

3.5. Experiments on the LoveDA Dataset

- (1) Baseline: The baseline is optimized with CE loss. It uses a modified ResNet50 as the encoder. Its decoder is a multiclass segmentation variant of an FPN, as described in Section 2.2.2, with a 3 × 3 convolution applied after each of F1, F2, F3, and F4.

- (2) Baseline + FF: The baseline with the FF module added, optimized with CE loss. The 3 × 3 convolutions applied after F1, F2, F3, and F4 in the baseline are removed.

- (3) Baseline + CF: The baseline with the CF module added, optimized with CE loss.

- (4) Ours: The full CFFNet framework.
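The baseline and the single-module variants above are optimized with cross-entropy (CE) loss. The sketch below shows the standard pixel-wise softmax CE averaged over an image, on the assumption that no class weighting or ignored label is used; such details are not stated in this list. The function names are hypothetical.

```python
import math


def pixel_cross_entropy(logits, label):
    """CE for one pixel: -log softmax(logits)[label], computed stably."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_norm - logits[label]


def mean_cross_entropy(logit_map, label_map):
    """Average pixel-wise CE over an H x W map.

    logit_map[r][c] is a list of per-class scores for pixel (r, c);
    label_map[r][c] is the integer ground-truth class of that pixel.
    """
    losses = [
        pixel_cross_entropy(logits, label)
        for logit_row, label_row in zip(logit_map, label_map)
        for logits, label in zip(logit_row, label_row)
    ]
    return sum(losses) / len(losses)


# A 1 x 2 image with two classes: one confident correct pixel and one
# undecided pixel (equal scores for both classes).
loss = mean_cross_entropy([[[4.0, 0.0], [0.0, 0.0]]], [[0, 1]])
```

An undecided pixel with K equal scores contributes log K, which is a convenient sanity check when wiring up a training loop.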

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cheng, X.; He, X.; Qiao, M.; Li, P.; Hu, S.; Chang, P.; Tian, Z. Enhanced contextual representation with deep neural networks for land cover classification based on remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102706.

- Wang, L.; Zhang, C.; Li, R.; Duan, C.; Meng, X.; Atkinson, P.M. Scale-Aware Neural Network for Semantic Segmentation of Multi-Resolution Remote Sensing Images. Remote Sens. 2021, 13, 5015.

- Li, R.; Zheng, S.; Duan, C.; Wang, L.; Zhang, C. Land cover classification from remote sensing images based on multi-scale fully convolutional network. Geo-Spat. Inf. Sci. 2022, 25, 1–17.

- Li, N.; Huo, H.; Fang, T. A Novel Texture-Preceded Segmentation Algorithm for High-Resolution Imagery. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2818–2828.

- Vapnik, V.; Chervonenkis, A. A note on one class of perceptrons. Autom. Remote Control 1964, 25, 788–792.

- Pavlov, Y.L. Random Forests. Karelian Cent. Russ. Acad. Sci. Petrozavodsk. 1997, 45, 5–32.

- Lowe, D. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Teichmann, M.; Araujo, A.; Zhu, M.; Sim, J. Detect-to-Retrieve: Efficient Regional Aggregation for Image Search. CoRR 2018, arXiv:1812.01584.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Li, R.; Zheng, S.; Duan, C.; Yang, Y.; Wang, X. Classification of Hyperspectral Image Based on Double-Branch Dual-Attention Mechanism Network. Remote Sens. 2020, 12, 582.

- Shao, Z.; Zhou, W.; Deng, X.; Zhang, M.; Cheng, Q. Multilabel Remote Sensing Image Retrieval Based on Fully Convolutional Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 318–328.

- Gao, L.; Liu, H.; Yang, M.; Chen, L.; Wan, Y.; Xiao, Z.; Qian, Y. STransFuse: Fusing Swin Transformer and Convolutional Neural Network for Remote Sensing Image Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10990–11003.

- Li, R.; Zheng, S.; Zhang, C.; Duan, C.; Su, J.; Wang, L.; Atkinson, P.M. Multiattention Network for Semantic Segmentation of Fine-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Lin, G.; Milan, A.; Shen, C.; Reid, I. RefineNet: Multi-path Refinement Networks for High-Resolution Semantic Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5168–5177.

- Tan, X.; Xiao, Z.; Wan, Q.; Shao, W. Scale Sensitive Neural Network for Road Segmentation in High-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2021, 18, 533–537.

- Li, R.; Wang, L.; Zhang, C.; Duan, C.; Zheng, S. A2-FPN for semantic segmentation of fine-resolution remotely sensed images. Int. J. Remote Sens. 2022, 43, 1131–1155.

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral Segmentation Network for Real-Time Semantic Segmentation. In Proceedings of the Computer Vision–ECCV 2018, Munich, Germany, 10–13 September; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 334–349.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Ronneberger, O. Invited Talk: U-Net Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Bildverarbeitung für die Medizin 2017, Heidelberg, Germany, 12–14 March 2017; Maier-Hein, G., Fritzsche, K.H., Deserno, G., Lehmann, T.M., Handels, H., Tolxdorff, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; p. 3.

- Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. ICNet for Real-Time Semantic Segmentation on High-Resolution Images. In Proceedings of the Computer Vision–ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 418–434.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.W.; Wu, J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. In Proceedings of the ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Proceedings of the 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018; Stoyanov, D., Taylor, Z., Carneiro, G., Syeda-Mahmood, T., Martel, A., Maier-Hein, L., Tavares, J.M.R., Bradley, A., Papa, J.P., Belagiannis, V., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–11.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Zhang, H.; Dana, K.; Shi, J.; Zhang, Z.; Wang, X.; Tyagi, A.; Agrawal, A. Context Encoding for Semantic Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7151–7160.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2015, Montreal, QC, Canada, 8–12 December 2015; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28, p. 28.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems 30, Proceedings of the Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Wu, W.; Zhang, Y.; Wang, D.; Leo, Y. SK-Net: Deep Learning on Point Cloud via End-to-End Discovery of Spatial Keypoints. In Proceedings of the Thirty-Fourth AAAI Conference On Artificial Intelligence, The Thirty-Second Innovative Applications of Artificial Intelligence Conference and the Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, ASSOC Advancement Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision–ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–19.

- Chen, Y.; Kalantidis, Y.; Li, J.; Yan, S.; Feng, J. A(2)-Nets: Double Attention Networks. In Advances in Neural Information Processing Systems 31, (NIPS 2018), Proceedings of the 32nd Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 2–8 December 2018; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., CesaBianchi, N., Garnett, R., Eds.; Curran Publishing: Red Hook, NY, USA, 2018; Volume 31, p. 31.

- Roy, A.G.; Navab, N.; Wachinger, C. Concurrent Spatial and Channel ‘Squeeze & Excitation’ in Fully Convolutional Networks. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2018, Granada, Spain, 16–20 September 2018; Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 421–429.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-Local Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3141–3149.

- Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-Cross Attention for Semantic Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Long Beach, CA, USA, 15–20 June 2019; pp. 603–612.

- Lin, X.; Guo, Y.; Wang, J. Global Correlation Network: End-to-End Joint Multi-Object Detection and Tracking. arXiv 2021, arXiv:2103.12511.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the Computer Vision–ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 833–851.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Nair, V.; Hinton, G.E. Rectified linear units improve Restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Wang, J.; Zheng, Z.; Ma, A.; Lu, X.; Zhong, Y. LoveDA: A Remote Sensing Land-Cover Dataset for Domain Adaptive Semantic Segmentation. arXiv 2021, arXiv:2110.08733.

- Tong, X.Y.; Xia, G.S.; Lu, Q.; Shen, H.; Li, S.; You, S.; Zhang, L. Land-cover classification with high-resolution remote sensing images using transferable deep models. Remote Sens. Environ. 2020, 237, 111322.

- Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid Attention Network for Semantic Segmentation. arXiv 2018, arXiv:1805.10180.

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017.

- Zheng, Z.; Zhong, Y.; Wang, J.; Ma, A. Foreground-Aware Relation Network for Geospatial Object Segmentation in High Spatial Resolution Remote Sensing Imagery. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4095–4104.

- Ma, A.; Wang, J.; Zhong, Y.; Zheng, Z. FactSeg: Foreground Activation-Driven Small Object Semantic Segmentation in Large-Scale Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

| Domain | City | Train | Val | Test |
|---|---|---|---|---|
| Urban | NJ | 976 | 357 | 357 |
| Urban | CZ | 0 | 320 | 320 |
| Urban | WH | 180 | 0 | 143 |
| Rural | NJ | 992 | 320 | 336 |
| Rural | CZ | 0 | 0 | 640 |
| Rural | WH | 374 | 672 | 0 |
| Total | | 2522 | 1669 | 1796 |

| Method | Backbone | Background IoU (%) | Built-up IoU (%) | Farmland IoU (%) | Forest IoU (%) | Meadow IoU (%) | Water IoU (%) | mIoU (%) | OA (%) | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|
| FCN8S | VGG16 | 63.69 | 81.75 | 66.25 | 33.80 | 31.44 | 64.28 | 56.87 | 78.86 | 0.6811 |
| DeepLabV3+ | ResNet50 | 64.71 | 87.93 | 69.03 | 29.83 | 39.26 | 65.31 | 59.34 | 80.47 | 0.7073 |
| PAN | ResNet50 | 65.17 | 82.41 | 67.62 | 32.42 | 39.99 | 65.61 | 58.87 | 80.01 | 0.6987 |
| UNet | ResNet50 | 64.20 | 88.51 | 59.17 | 27.61 | 35.97 | 66.16 | 58.60 | 80.30 | 0.7057 |
| Unet++ | ResNet50 | 64.70 | 82.29 | 68.19 | 29.90 | 37.04 | 66.16 | 58.05 | 79.89 | 0.6982 |
| PSPNet | ResNet50 | 64.20 | 87.18 | 67.98 | 32.19 | 40.45 | 65.38 | 59.56 | 80.05 | 0.7017 |
| LinkNet | ResNet50 | 64.25 | 86.20 | 68.41 | 29.58 | 35.72 | 65.72 | 58.31 | 79.93 | 0.7004 |
| FarSeg | ResNet50 | 64.50 | 85.46 | 69.11 | 30.00 | 37.36 | 65.65 | 58.68 | 80.24 | 0.7042 |
| FactSeg | ResNet50 | 64.40 | 87.68 | 69.00 | 29.29 | 35.69 | 66.07 | 58.69 | 80.29 | 0.7054 |
| Ours | ResNet50 | 65.29 | 88.73 | 68.45 | 33.27 | 36.11 | 66.00 | 59.64 | 80.60 | 0.7082 |

| Method | Backbone | Background IoU (%) | Built-up IoU (%) | Road IoU (%) | Water IoU (%) | Barren IoU (%) | Forest IoU (%) | Agricultural IoU (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|---|
| FCN8S | VGG16 | 42.60 | 49.51 | 48.05 | 73.09 | 11.84 | 43.49 | 58.30 | 46.69 |
| DeepLabV3+ | ResNet50 | 42.97 | 50.88 | 52.02 | 74.36 | 10.40 | 44.21 | 58.53 | 47.62 |
| PAN | ResNet50 | 43.04 | 51.34 | 50.93 | 74.77 | 10.03 | 42.19 | 57.65 | 47.13 |
| UNet | ResNet50 | 43.06 | 52.74 | 52.78 | 73.08 | 10.33 | 43.05 | 59.87 | 47.84 |
| Unet++ | ResNet50 | 42.85 | 52.58 | 52.82 | 74.51 | 11.42 | 44.42 | 58.80 | 48.20 |
| PSPNet | ResNet50 | 44.40 | 52.13 | 53.52 | 76.50 | 9.73 | 44.07 | 57.85 | 48.31 |
| LinkNet | ResNet50 | 43.61 | 52.07 | 52.53 | 76.85 | 12.16 | 45.05 | 57.25 | 48.50 |
| FarSeg | ResNet50 | 43.09 | 51.48 | 53.85 | 76.61 | 9.78 | 43.33 | 58.90 | 48.15 |
| FactSeg | ResNet50 | 42.60 | 53.63 | 52.79 | 76.94 | 16.20 | 42.92 | 57.50 | 48.94 |
| Ours | ResNet50 | 43.16 | 54.13 | 54.25 | 78.70 | 17.14 | 43.35 | 60.65 | 50.20 |

| Method | Backbone | Background IoU (%) | Built-up IoU (%) | Road IoU (%) | Water IoU (%) | Barren IoU (%) | Forest IoU (%) | Agricultural IoU (%) | mIoU (%) | OA (%) | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| FCN8S | VGG16 | 50.67 | 59.48 | 47.20 | 56.20 | 24.72 | 41.64 | 46.82 | 46.68 | 65.72 | 0.5363 |
| DeepLabV3+ | ResNet50 | 51.61 | 61.13 | 51.18 | 57.82 | 23.44 | 37.65 | 48.06 | 47.27 | 66.63 | 0.5411 |
| PAN | ResNet50 | 53.50 | 61.84 | 51.85 | 56.46 | 26.02 | 43.54 | 47.04 | 48.61 | 67.58 | 0.5577 |
| UNet | ResNet50 | 52.69 | 62.89 | 51.69 | 66.33 | 23.84 | 42.42 | 47.89 | 49.68 | 68.05 | 0.5658 |
| Unet++ | ResNet50 | 50.98 | 64.51 | 53.43 | 64.37 | 16.15 | 40.77 | 47.74 | 48.28 | 66.35 | 0.5461 |
| PSPNet | ResNet50 | 52.99 | 64.76 | 50.38 | 65.79 | 21.26 | 40.27 | 47.59 | 49.01 | 68.18 | 0.5632 |
| LinkNet | ResNet50 | 51.05 | 63.44 | 52.03 | 67.59 | 17.47 | 44.79 | 48.80 | 49.31 | 67.28 | 0.5609 |
| FarSeg | ResNet50 | 52.94 | 64.09 | 51.43 | 66.76 | 24.03 | 39.95 | 47.06 | 49.47 | 68.09 | 0.5620 |
| FactSeg | ResNet50 | 50.32 | 62.79 | 51.96 | 66.11 | 20.32 | 44.07 | 47.36 | 48.98 | 66.62 | 0.5513 |
| Ours | ResNet50 | 53.18 | 62.90 | 52.96 | 68.71 | 24.15 | 43.68 | 53.28 | 51.27 | 69.68 | 0.5931 |

| Method | mIoU (%) | OA (%) | Kappa |
|---|---|---|---|
| Baseline | 49.20 | 68.21 | 0.5692 |
| Baseline + FF | 50.01 | 68.95 | 0.5809 |
| Baseline + CF | 48.98 | 68.08 | 0.5685 |
| Ours | 51.27 | 69.68 | 0.5931 |
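The contribution of each module in the ablation table can be read off as a difference from the baseline. The short sketch below only redoes that arithmetic on the values listed in the table; the dictionary layout and the function name `gains_over_baseline` are illustrative choices.

```python
# (mIoU %, OA %, Kappa) for each variant, copied from the ablation table.
ablation = {
    "Baseline": (49.20, 68.21, 0.5692),
    "Baseline + FF": (50.01, 68.95, 0.5809),
    "Baseline + CF": (48.98, 68.08, 0.5685),
    "Ours": (51.27, 69.68, 0.5931),
}


def gains_over_baseline(results, baseline="Baseline"):
    """Return each variant's metric-wise difference from the baseline."""
    base = results[baseline]
    return {
        name: tuple(round(value - ref, 4) for value, ref in zip(values, base))
        for name, values in results.items()
        if name != baseline
    }


gains = gains_over_baseline(ablation)
for name, (d_miou, d_oa, d_kappa) in gains.items():
    print(f"{name}: mIoU {d_miou:+.2f}, OA {d_oa:+.2f}, Kappa {d_kappa:+.4f}")
```

On these numbers, adding FF alone raises mIoU by 0.81 points, adding CF alone lowers it by 0.22 points, and the full framework raises it by 2.07 points, which suggests that in this table the CF module pays off when it operates on the multi-scale features produced by the FF module.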

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tan, X.; Xiao, Z.; Zhang, Y.; Wang, Z.; Qi, X.; Li, D. Context-Driven Feature-Focusing Network for Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sens. 2023, 15, 1348. https://doi.org/10.3390/rs15051348
