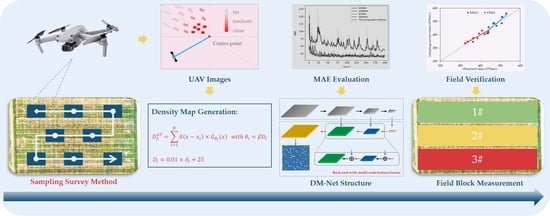

Sampling Survey Method of Wheat Ear Number Based on UAV Images and Density Map Regression Algorithm

Abstract

1. Introduction

2. Materials and Methods

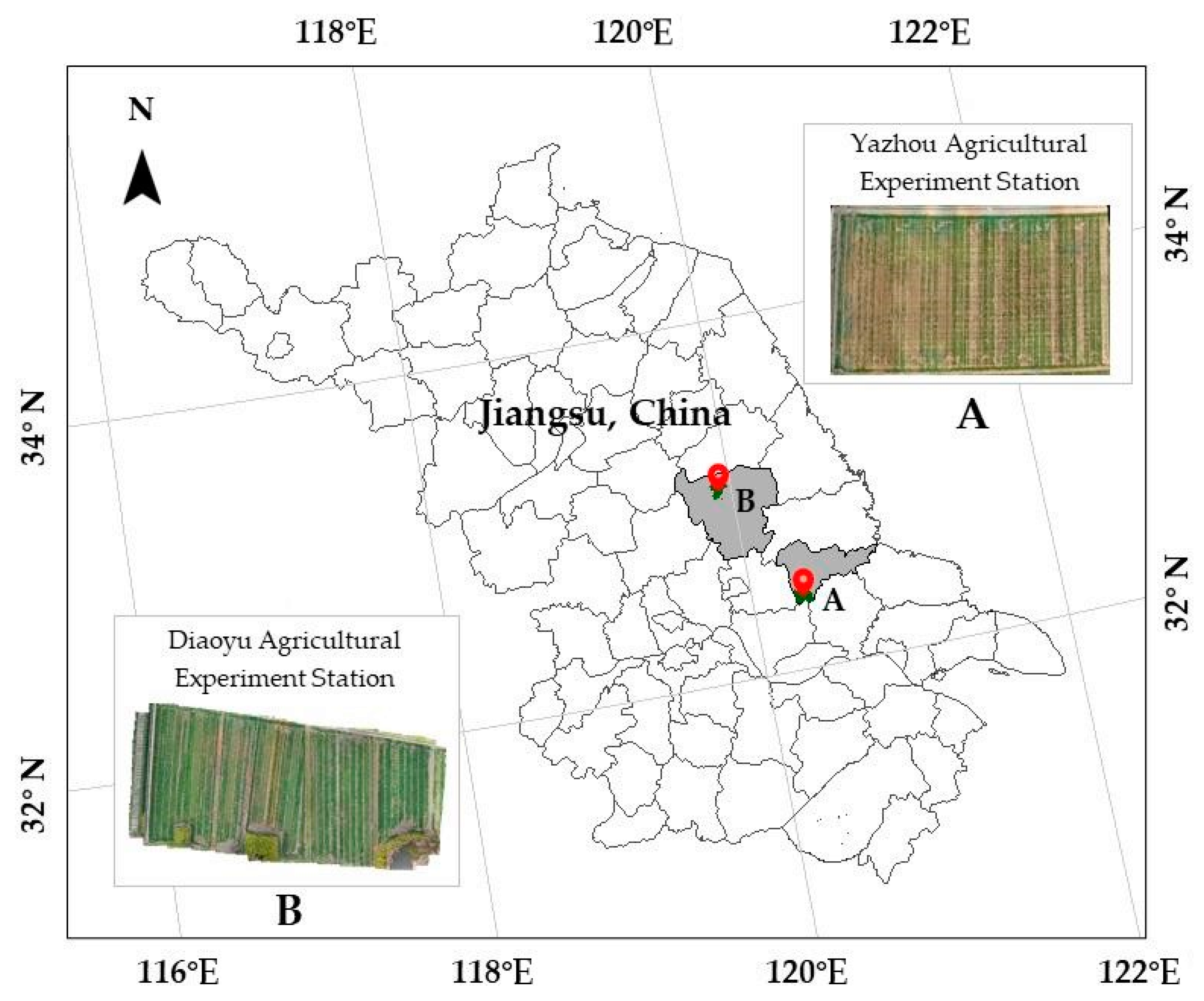

2.1. Experiment

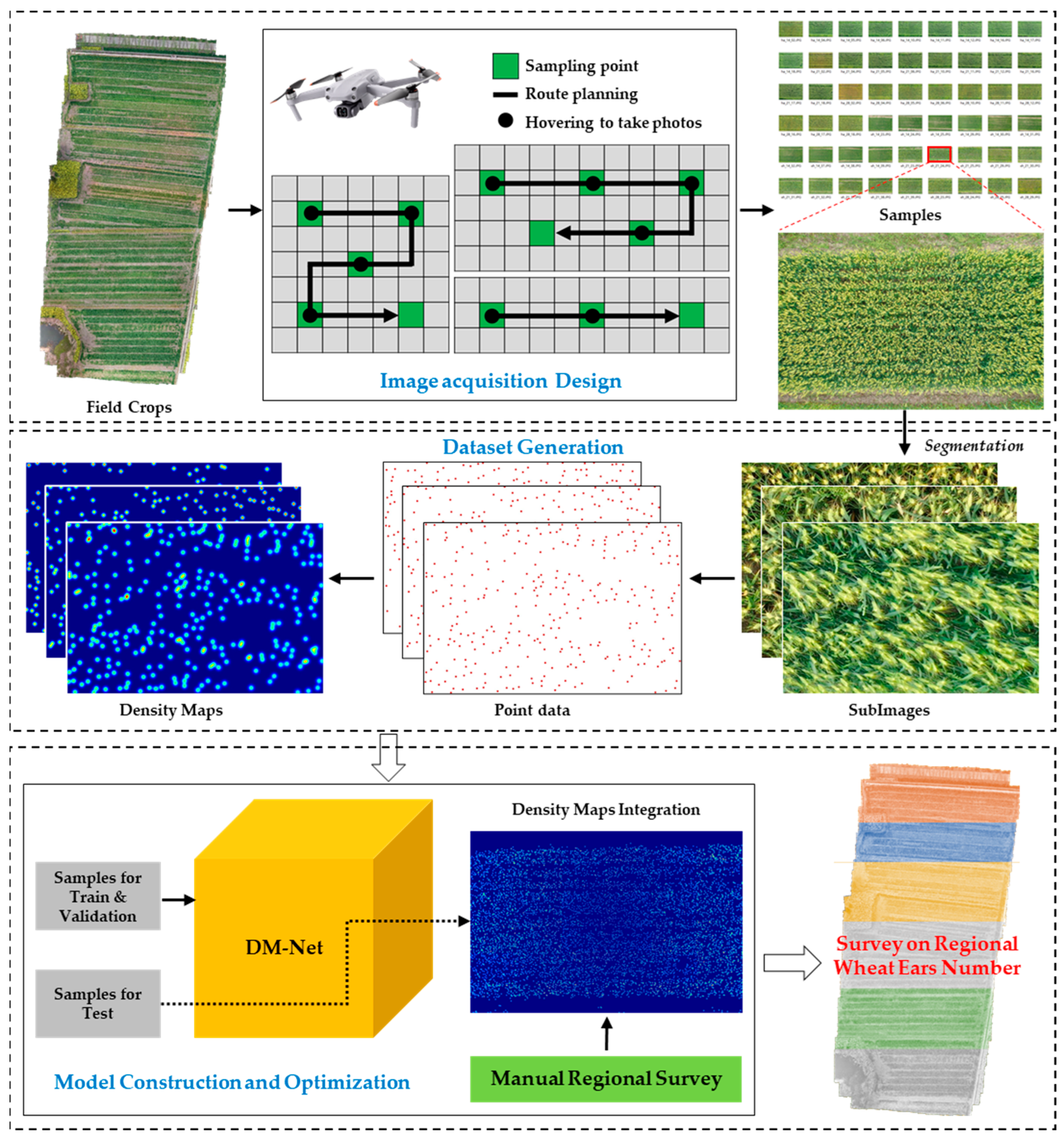

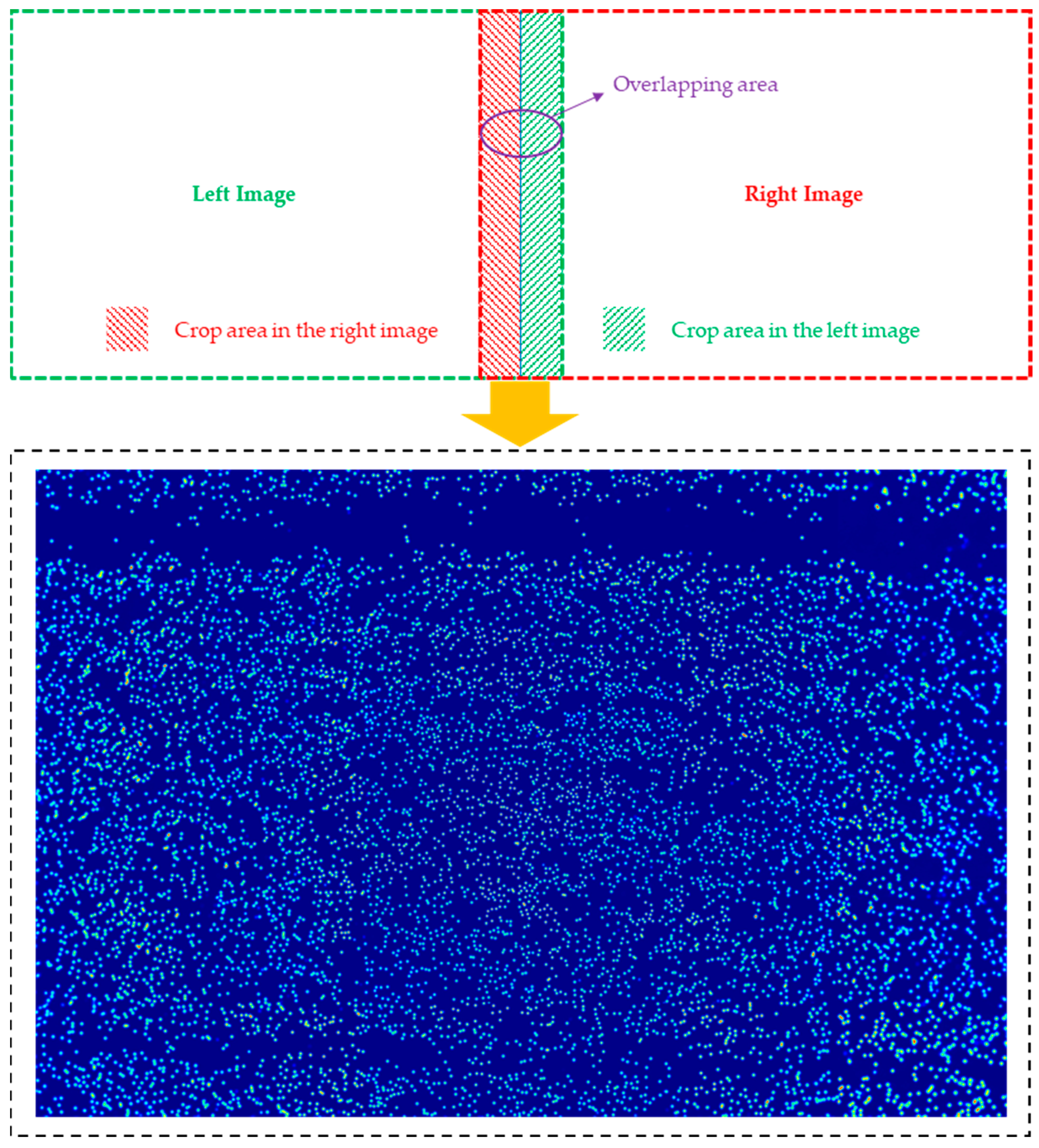

2.2. Sampling Survey Pipeline

2.2.1. Image Acquisition Design

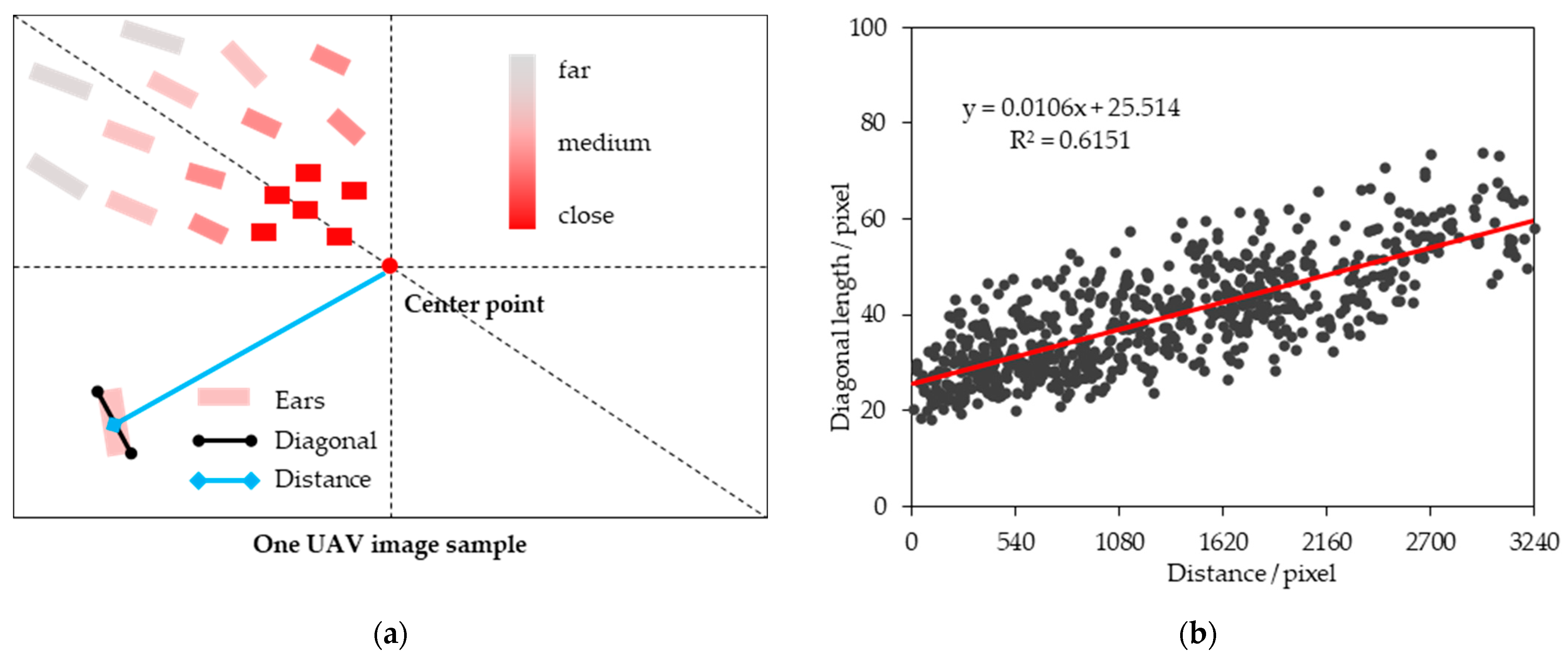

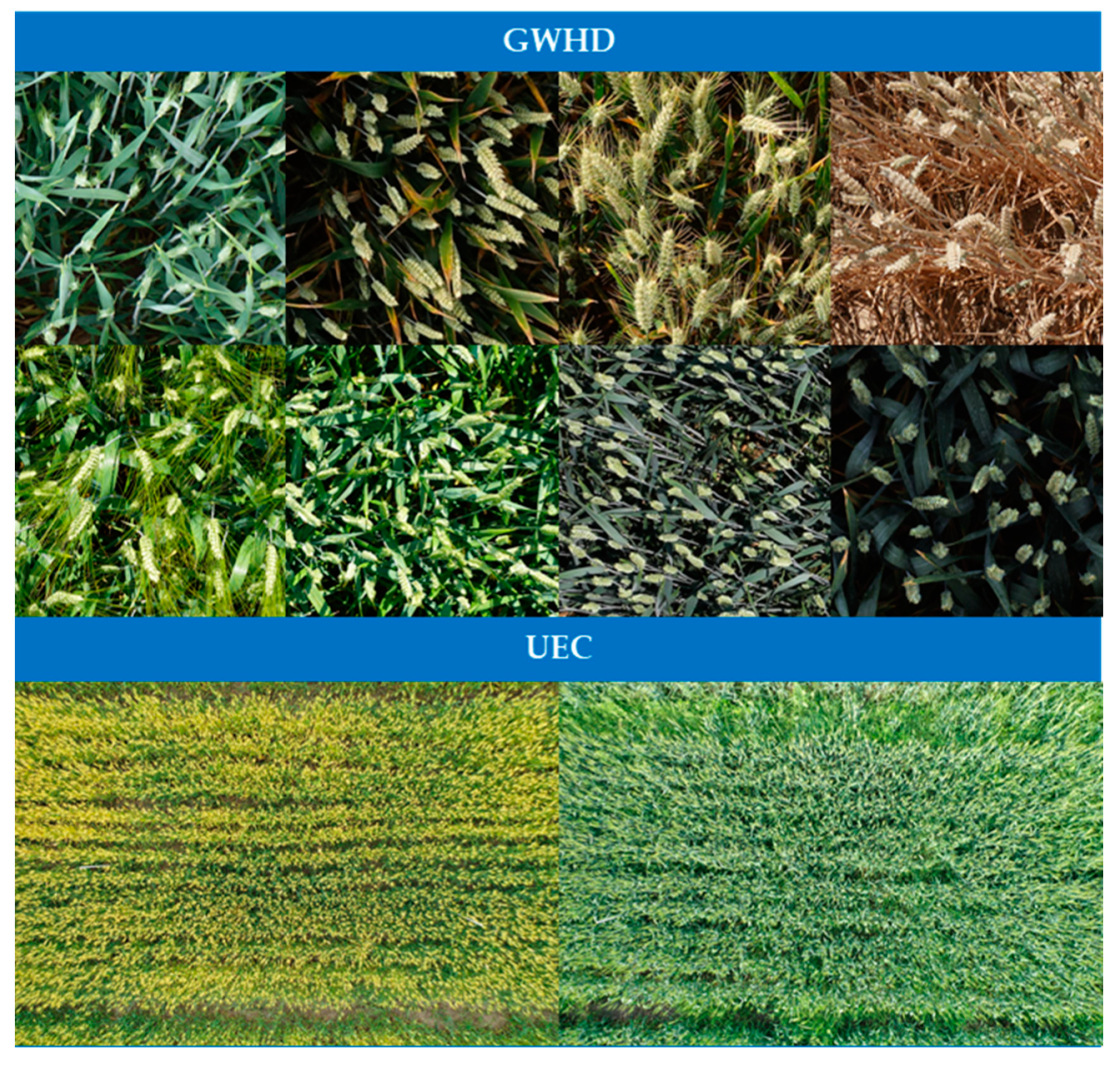

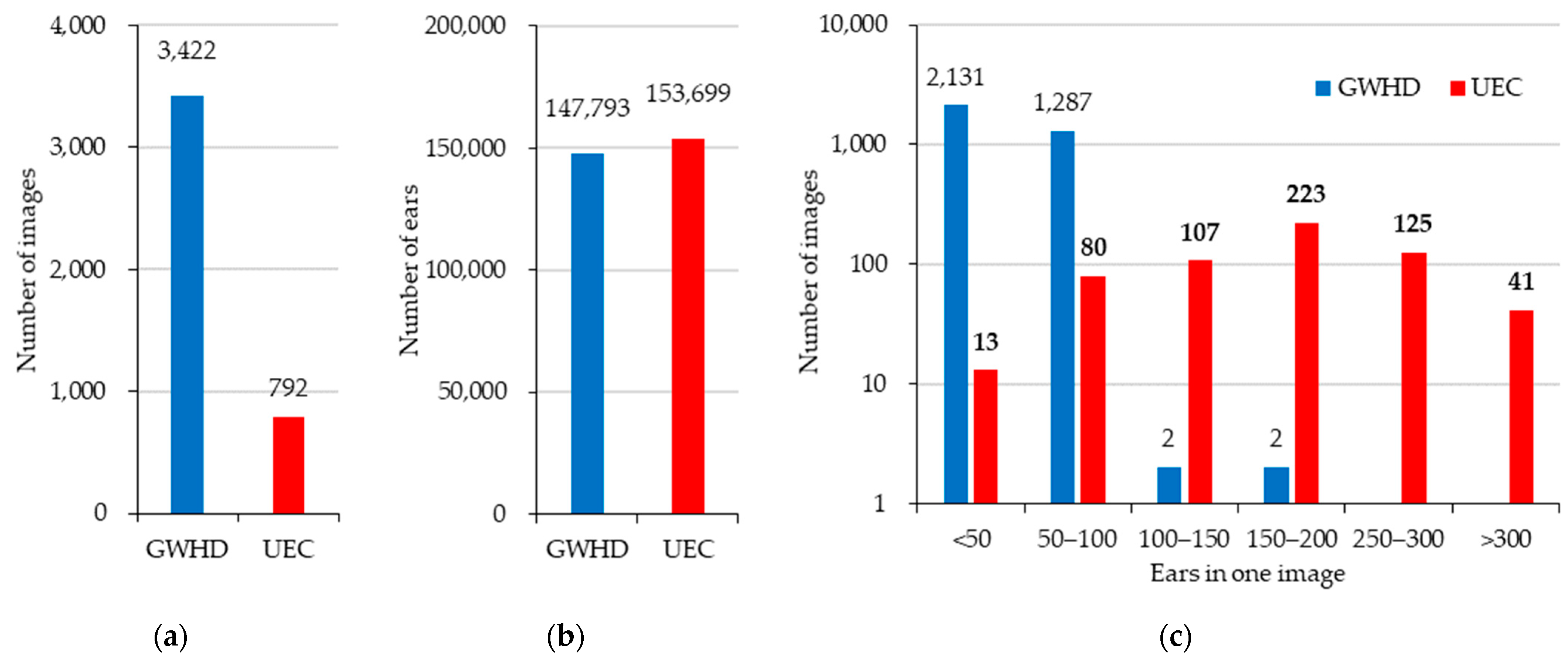

2.2.2. Dataset and Image Labeling

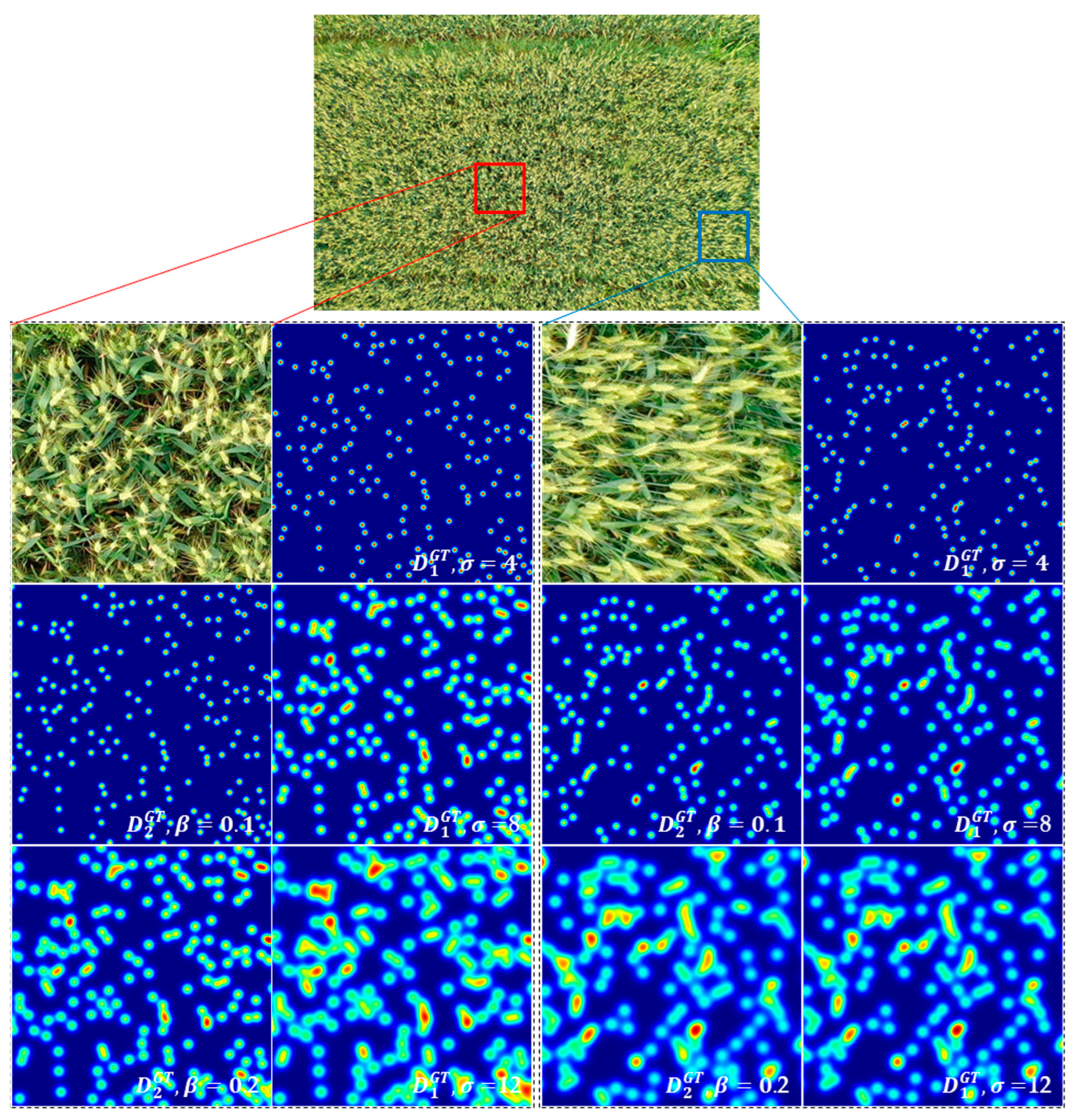

2.2.3. Density Map Generation

2.2.4. The Proposed Method

2.3. Model Training

2.4. Model Evaluation

2.5. Manual Measurement and Evaluation

3. Results

3.1. Density Map Generation

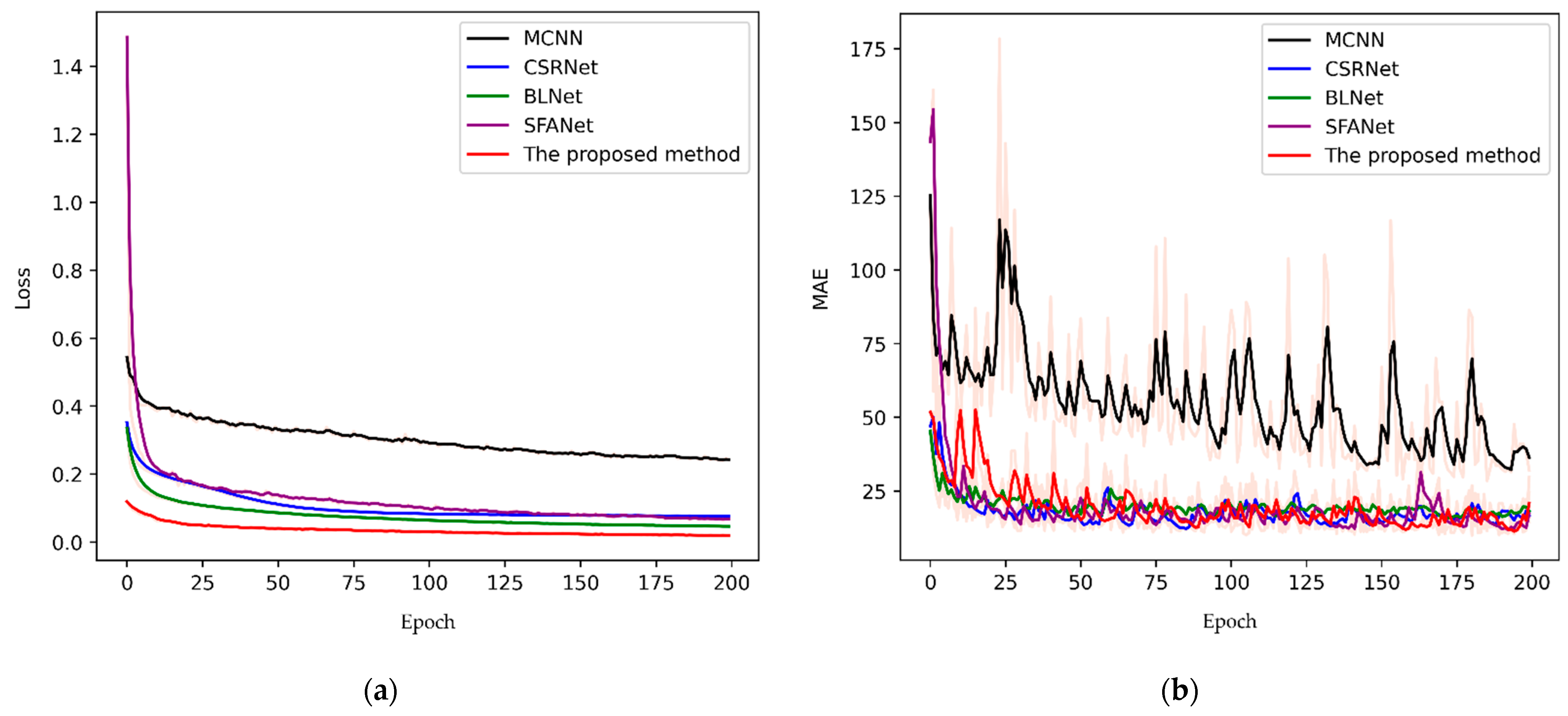

3.2. DM-Net Construction

3.3. The Result of the Sampling Survey

4. Discussion

4.1. The Challenge of UAV Image Datasets

4.2. Advantages in Efficiency

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Eversole, K.; Feuillet, C.; Mayer, K.F.X.; Rogers, J. Slicing the wheat genome. Science 2014, 345, 285–287.

2. Bognár, P.; Kern, A.; Pásztor, S.; Lichtenberger, J.; Koronczay, D.; Ferencz, C. Yield estimation and forecasting for winter wheat in Hungary using time series of MODIS data. Int. J. Remote Sens. 2017, 38, 3394–3414.

3. Slafer, G.A.; Calderini, D.F.; Miralles, D.J. Yield components and compensation in wheat: Opportunities for further increasing yield potential. In Increasing Yield Potential in Wheat: Breaking the Barriers, Proceedings of the Workshop Held in Ciudad Obregon, Sonora, Mexico, 28–30 April 1986; CIMMYT: Mexico City, Mexico, 1996; pp. 101–133.

4. Del Moral, L.F.G.; Rharrabti, Y.; Villegas, D.; Royo, C. Evaluation of grain yield and its components in durum wheat under Mediterranean conditions: An ontogenic approach. Agron. J. 2003, 95, 266–274.

5. Slafer, G.A.; Savin, R.; Sadras, V.O. Coarse and fine regulation of wheat yield components in response to genotype and environment. Field Crops Res. 2014, 157, 71–83.

6. Ferrante, A.; Cartelle, J.; Savin, R.; Slafer, G.A. Yield determination, interplay between major components and yield stability in a traditional and a contemporary wheat across a wide range of environments. Field Crops Res. 2017, 203, 114–127.

7. Prystupa, P.; Savin, R.; Slafer, G.A. Grain number and its relationship with dry matter, N and P in the spikes at heading in response to N × P fertilization in barley. Field Crops Res. 2004, 90, 245–254.

8. Peltonen-Sainio, P.; Kangas, A.; Salo, Y.; Jauhiainen, L. Grain number dominates grain weight in temperate cereal yield determination: Evidence based on 30 years of multi-location trials. Field Crops Res. 2007, 100, 179–188.

9. Jin, X.; Liu, S.; Baret, F.; Hemerlé, M.; Comar, A. Estimates of plant density of wheat crops at emergence from very low altitude UAV imagery. Remote Sens. Environ. 2017, 198, 105–114.

10. Liu, T.; Sun, C.; Wang, L.; Zhong, X.; Zhu, X.; Guo, W. In-field wheatear counting based on image processing technology. Trans. Chin. Soc. Agric. Mach. 2014, 45, 282–290.

11. Fernandez-Gallego, J.A.; Kefauver, S.C.; Gutiérrez, N.A.; Nieto-Taladriz, M.T.; Araus, J.L. Wheat ear counting in-field conditions: High throughput and low-cost approach using RGB images. Plant Methods 2018, 14, 22.

12. Bao, W.; Lin, Z.; Hu, G.; Liang, D.; Huang, L.; Zhang, X. Method for wheat ear counting based on frequency domain decomposition of MSVF-ISCT. Info. Proc. Agric. 2022, 1, 1.

13. Hasan, M.M.; Chopin, J.P.; Laga, H.; Miklavcic, S.J. Detection and analysis of wheat spikes using convolutional neural networks. Plant Methods 2018, 14, 100.

14. Madec, S.; Jin, X.; Lu, H.; Solan, B.D.; Liu, S.; Duyme, F.; Heritier, E.; Baret, F. Ear density estimation from high resolution RGB imagery using deep learning technique. Agric. Forest Meteorol. 2019, 264, 225–234.

15. Sadeghi-Tehran, P.; Virlet, N.; Ampe, E.M.; Reyns, P.; Hawkesford, M.J. DeepCount: In-field automatic quantification of wheat spikes using simple linear iterative clustering and deep convolutional neural networks. Front. Plant Sci. 2019, 10, 1176.

16. Misra, T.; Arora, A.; Marwaha, S.; Chinnusamy, V.; Rao, A.R.; Jain, R.; Sahoo, R.N.; Ray, M.; Kumar, S.; Raju, D.; et al. SpikeSegNet-a deep learning approach utilizing encoder-decoder network with hourglass for spike segmentation and counting in wheat plant from visual imaging. Plant Methods 2020, 16, 40.

17. Xu, C.; Jiang, H.; Yuen, P.; Ahmad, K.Z.; Chen, Y. MHW-PD: A robust rice panicles counting algorithm based on deep learning and multi-scale hybrid window. Comput. Electron. Agric. 2020, 173, 105375.

18. Zhao, J.; Zhang, X.; Yan, J.; Qiu, X.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W. A wheat spike detection method in UAV images based on improved YOLOv5. Remote Sens. 2021, 13, 3095.

19. Hong, Q.; Jiang, L.; Zhang, Z.; Ji, S.; Gu, C.; Mao, W.; Li, W.; Liu, T.; Li, B.; Tan, C. A Lightweight Model for Wheat Ear Fusarium Head Blight Detection Based on RGB Images. Remote Sens. 2022, 14, 3481.

20. Dandrifosse, S.; Ennadifi, E.; Carlier, A.; Gosselin, B.; Dumont, B.; Mercatoris, B. Deep learning for wheat ear segmentation and ear density measurement: From heading to maturity. Comput. Electron. Agric. 2022, 199, 107161.

21. Xiong, H.; Cao, Z.; Lu, H.; Madec, S.; Liu, L.; Shen, C. TasselNetv2: In-field counting of wheat spikes with context-augmented local regression networks. Plant Methods 2019, 15, 150.

22. Wang, D.; Zhang, D.; Yang, G.; Xu, B.; Luo, Y.; Yang, X. SSRNet: In-field counting wheat ears using multi-stage convolutional neural network. IEEE T. Geosci. Remote. 2021, 60, 1–11.

23. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. 2015, 39, 79–87.

24. Pérez-Ortiz, M.; Peña, J.M.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. Selecting patterns and features for between-and within-crop-row weed mapping using UAV-imagery. Expert Syst. Appl. 2016, 47, 85–94.

25. Chang, A.; Jung, J.; Maeda, M.M.; Landivar, J. Crop height monitoring with digital imagery from Unmanned Aerial System (UAS). Comput. Electron. Agric. 2017, 141, 232–237.

26. Schut, A.G.T.; Traore, P.C.S.; Blaes, X.; De By, R.A. Assessing yield and fertilizer response in heterogeneous smallholder fields with UAVs and satellites. Field Crops Res. 2018, 221, 98–107.

27. Li, B.; Huang, H.; Zhang, A.; Liu, P.; Liu, C. Approaches on crowd counting and density estimation: A review. Pattern Anal Applic. 2021, 24, 853–874.

28. Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-image crowd counting via multi-column convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 589–597.

29. Sindagi, V.A.; Patel, V.M. Generating high-quality crowd density maps using contextual pyramid cnns. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1861–1870.

30. Ma, Z.; Wei, X.; Hong, X.; Gong, Y. Bayesian loss for crowd count estimation with point supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6142–6151.

31. Song, Q.; Wang, C.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Wu, J.; Ma, J. To choose or to fuse? Scale selection for crowd counting. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 2576–2583.

32. Roth, L.; Hund, A.; Aasen, H. PhenoFly Planning Tool: Flight planning for high-resolution optical remote sensing with unmanned areal systems. Plant Methods 2018, 14, 116.

33. Li, Y.; Zhang, X.; Chen, D. Csrnet: Dilated convolutional neural networks for understanding the highly congested scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake, UT, USA, 18–23 June 2018; pp. 1091–1100.

34. Zhu, L.; Zhao, Z.; Lu, C.; Lin, Y.; Peng, Y.; Yao, T. Dual path multi-scale fusion networks with attention for crowd counting. arXiv 2019, arXiv:1902.01115.

| Hardware | Configuration | Software | Configuration |
|---|---|---|---|
| CPU | AMD EPYC 7742 64-Core Processor | Language | Python 3.7 |
| GPU | NVIDIA A100 40 GB × 4 | Framework | PyTorch 1.7 |
| RAM | 512 GB | CUDA | CUDA 11.0 |
| Operating system | Ubuntu 20.04 LTS | Monitor | TensorBoardX |

| Methods | MAE | MSE |
|---|---|---|
| MCNN [28] | 28.50 | 36.62 |
| CSRNet [33] | 10.78 | 13.73 |
| BLNet [30] | 12.83 | 16.32 |
| SFANet [34] | 10.04 | 12.83 |
| The proposed method | 9.01 | 11.85 |
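The comparison above can be summarised as the relative error reduction of the proposed method against each baseline. The following is a minimal sketch that only re-uses the MAE and MSE values reported in the table; the helper name `relative_reduction` and the dictionary layout are illustrative assumptions, not part of the original work.

```python
# MAE and MSE values copied from the comparison table above.
results = {
    "MCNN": (28.50, 36.62),
    "CSRNet": (10.78, 13.73),
    "BLNet": (12.83, 16.32),
    "SFANet": (10.04, 12.83),
    "Proposed": (9.01, 11.85),
}


def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` is lower than `baseline` (hypothetical helper)."""
    return 100.0 * (baseline - proposed) / baseline


proposed_mae, proposed_mse = results["Proposed"]

# Relative reduction in MAE and MSE of the proposed method versus each baseline.
reductions = {
    name: (
        round(relative_reduction(mae, proposed_mae), 1),
        round(relative_reduction(mse, proposed_mse), 1),
    )
    for name, (mae, mse) in results.items()
    if name != "Proposed"
}

# Method with the lowest MAE in the table.
best_by_mae = min(results, key=lambda name: results[name][0])

for name, (mae_drop, mse_drop) in reductions.items():
    print(f"{name}: MAE reduced by {mae_drop}%, MSE reduced by {mse_drop}%")
```

Expressing the gap as a percentage makes it easier to see that the margin over the strongest baseline is much smaller than the margin over the weakest one.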

| Kernel Size | MAE | MSE |
|---|---|---|
|  | 10.03 | 13.34 |
|  | 10.74 | 14.25 |
|  | 13.31 | 16.65 |
|  | 9.01 | 11.85 |
|  | 9.97 | 13.00 |
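The kernel size compared above presumably refers to the Gaussian kernel that turns point labels into density maps (Section 2.2.3). As a rough illustration of why the kernel matters, the sketch below builds a density map from point annotations with a fixed, truncated Gaussian kernel. It is an assumption-laden example of the general technique, not the authors' implementation: the function names, the `kernel_size` and `sigma` parameters, and the annotation coordinates are all hypothetical.

```python
import math


def density_map(points, height, width, kernel_size=5, sigma=1.0):
    """Spread each annotated point over a Gaussian window (generic sketch).

    Each point contributes exactly 1 to the map, so the sum of the map
    equals the number of annotated ears.
    """
    half = kernel_size // 2
    dmap = [[0.0] * width for _ in range(height)]
    for row, col in points:
        # Gaussian weights for the in-bounds pixels inside the kernel window.
        weights = {}
        for r in range(max(0, row - half), min(height, row + half + 1)):
            for c in range(max(0, col - half), min(width, col + half + 1)):
                d2 = (r - row) ** 2 + (c - col) ** 2
                weights[(r, c)] = math.exp(-d2 / (2.0 * sigma ** 2))
        total = sum(weights.values())
        # Normalise so that truncation at the image border does not lose mass.
        for (r, c), w in weights.items():
            dmap[r][c] += w / total
    return dmap


def count_from_map(dmap):
    """The predicted count is the integral (sum) of the density map."""
    return sum(sum(row) for row in dmap)


# Hypothetical ear-centre annotations as (row, col) pixel coordinates.
points = [(2, 3), (10, 10), (0, 0)]
dmap = density_map(points, height=16, width=16, kernel_size=5, sigma=1.0)
estimated = count_from_map(dmap)
```

A larger kernel spreads each ear over more pixels, which smooths the regression target but blurs neighbouring ears together; a smaller kernel keeps ears separate but yields a sparser target. This trade-off is one plausible reason the reported error varies with kernel size.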

| Projects | Item | Flight altitude: 2 m | 5 m | 10 m | 20 m | 50 m |
|---|---|---|---|---|---|---|
| Image mosaic | Number of images | Null | 26,327 | 6623 | 1676 | 280 |
|  | Time of image acquisition (min) | Null | 2110.9 | 536.6 | 103.8 | 11.5 |
|  | Time of image mosaic (min) | Null | 7898.1 | 1986.9 | 502.8 | 84.0 |
| Sampling survey | Number of images | 532 | 98 | 22 | 6 | 1 |
|  | Time of image acquisition (min) | 11.1 | 3.9 | 2.6 | 2.4 | 2.3 |
|  | Image resolution (mm/pixel) | 0.3 | 0.8 | 1.6 | 3.2 | 7.9 |
|  | Number of ears in one image | 1000 | 8000 | 40,000 | 136,000 | 880,000 |
|  | Number of ears in one sub-image (1/25) | 40 | 320 | 1600 | 5440 | 35,200 |
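The arithmetic behind the table above can be checked with a few lines of Python. The sketch below re-derives the ears per sub-image from the ears per image (dividing by 25, as the "1/25" label indicates) and compares image acquisition times between the mosaic and sampling survey approaches. All numbers are copied from the table; the variable names are illustrative, and the ratio covers acquisition time only, since model inference time is not listed.

```python
# Values copied from the flight altitude table above.
altitudes_m = [2, 5, 10, 20, 50]
ears_per_image = [1000, 8000, 40000, 136000, 880000]
ears_per_sub_image = [40, 320, 1600, 5440, 35200]

# Each image is split into 25 sub-images (the "1/25" in the table).
SUB_IMAGES_PER_IMAGE = 25
derived_sub_image_counts = [n / SUB_IMAGES_PER_IMAGE for n in ears_per_image]

# Acquisition time in minutes; None marks the "Null" entry at 2 m.
mosaic_acquisition_min = [None, 2110.9, 536.6, 103.8, 11.5]
sampling_acquisition_min = [11.1, 3.9, 2.6, 2.4, 2.3]

# How many times longer mosaic acquisition takes than sampling acquisition.
acquisition_ratio = {
    altitude: mosaic / sampling
    for altitude, mosaic, sampling in zip(
        altitudes_m, mosaic_acquisition_min, sampling_acquisition_min
    )
    if mosaic is not None
}

for altitude, ratio in acquisition_ratio.items():
    print(f"{altitude} m: mosaic acquisition takes {ratio:.1f}x as long")
```

The derived sub-image counts match the last row of the table, and the acquisition ratio shrinks as altitude increases because fewer images are needed to cover the field in both approaches.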

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, W.; Zhong, X.; Lei, C.; Zhao, Y.; Liu, T.; Sun, C.; Guo, W.; Sun, T.; Liu, S. Sampling Survey Method of Wheat Ear Number Based on UAV Images and Density Map Regression Algorithm. Remote Sens. 2023, 15, 1280. https://doi.org/10.3390/rs15051280
