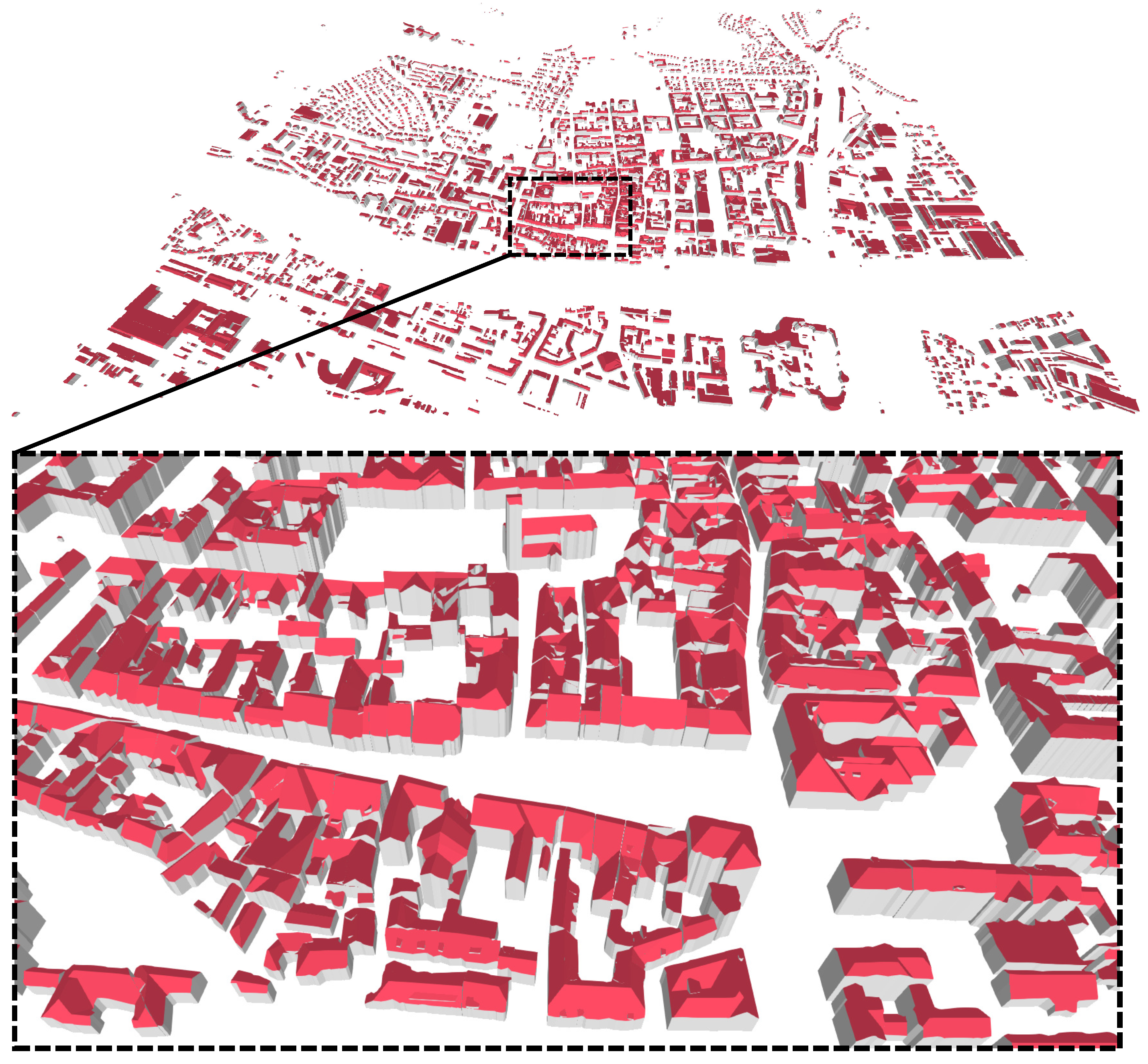

Novel Half-Spaces Based 3D Building Reconstruction Using Airborne LiDAR Data

Abstract

1. Introduction

- A significant improvement is achieved through the division of buildings into smaller parts without height jumps, which we refer to as sub-buildings.

- The division is based on an innovative analysis of half-spaces and height jumps, with a custom search space introduced for height jump detection. Dividing buildings in this way improves the reconstruction of roof details, and the final building models are obtained by merging the sub-buildings.

- The height jumps and building outlines are obtained directly from the point cloud, independently of the roof shape, which minimises processing errors.
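The contributions above build on half-spaces. The following is a minimal sketch of one common half-space convention, assumed here rather than taken from the paper. A half-space is the closed region n·p + d ≤ 0 bounded by a plane, a convex sub-building is the intersection of its half-spaces, and a building is the union of its sub-buildings, which mirrors the idea of merging sub-buildings. The names `HalfSpace`, `box` and `in_building`, and the gable-roof dimensions, are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point3 = Tuple[float, float, float]


@dataclass(frozen=True)
class HalfSpace:
    """Closed half-space {p : nx*x + ny*y + nz*z + d <= 0} (hypothetical convention)."""
    nx: float
    ny: float
    nz: float
    d: float

    def contains(self, p: Point3, eps: float = 1e-9) -> bool:
        x, y, z = p
        return self.nx * x + self.ny * y + self.nz * z + self.d <= eps


def in_sub_building(p: Point3, halfspaces: List[HalfSpace]) -> bool:
    # A convex sub-building is the intersection of its half-spaces.
    return all(h.contains(p) for h in halfspaces)


def in_building(p: Point3, sub_buildings: List[List[HalfSpace]]) -> bool:
    # The merged building is the union of its sub-buildings.
    return any(in_sub_building(p, hs) for hs in sub_buildings)


def box(x0: float, x1: float, y0: float, y1: float, z0: float, z1: float) -> List[HalfSpace]:
    # Six axis-aligned half-spaces; the last one is the top cap z <= z1.
    return [
        HalfSpace(-1, 0, 0, x0), HalfSpace(1, 0, 0, -x1),
        HalfSpace(0, -1, 0, y0), HalfSpace(0, 1, 0, -y1),
        HalfSpace(0, 0, -1, z0), HalfSpace(0, 0, 1, -z1),
    ]


# Hypothetical example: a gable-roofed part (eaves 5 m at y = 0 and y = 6,
# ridge 6.5 m at y = 3) next to a flat annex 3 m high.
walls = box(0, 10, 0, 6, 0, 0)[:5]   # drop the top cap; the roof planes close the part
gable = walls + [
    HalfSpace(0, -0.5, 1, -5),        # z <= 5 + 0.5*y
    HalfSpace(0, 0.5, 1, -8),         # z <= 8 - 0.5*y
]
annex = box(10, 14, 0, 6, 0, 3)
building = [gable, annex]

print(in_building((5, 3, 6.4), building))   # True: just under the ridge
print(in_building((12, 3, 4), building))    # False: above the annex roof
```

Because each sub-building is convex, a roof shape such as a gable only needs one half-space per roof plane; non-convex buildings are handled by the union.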

2. Methodology

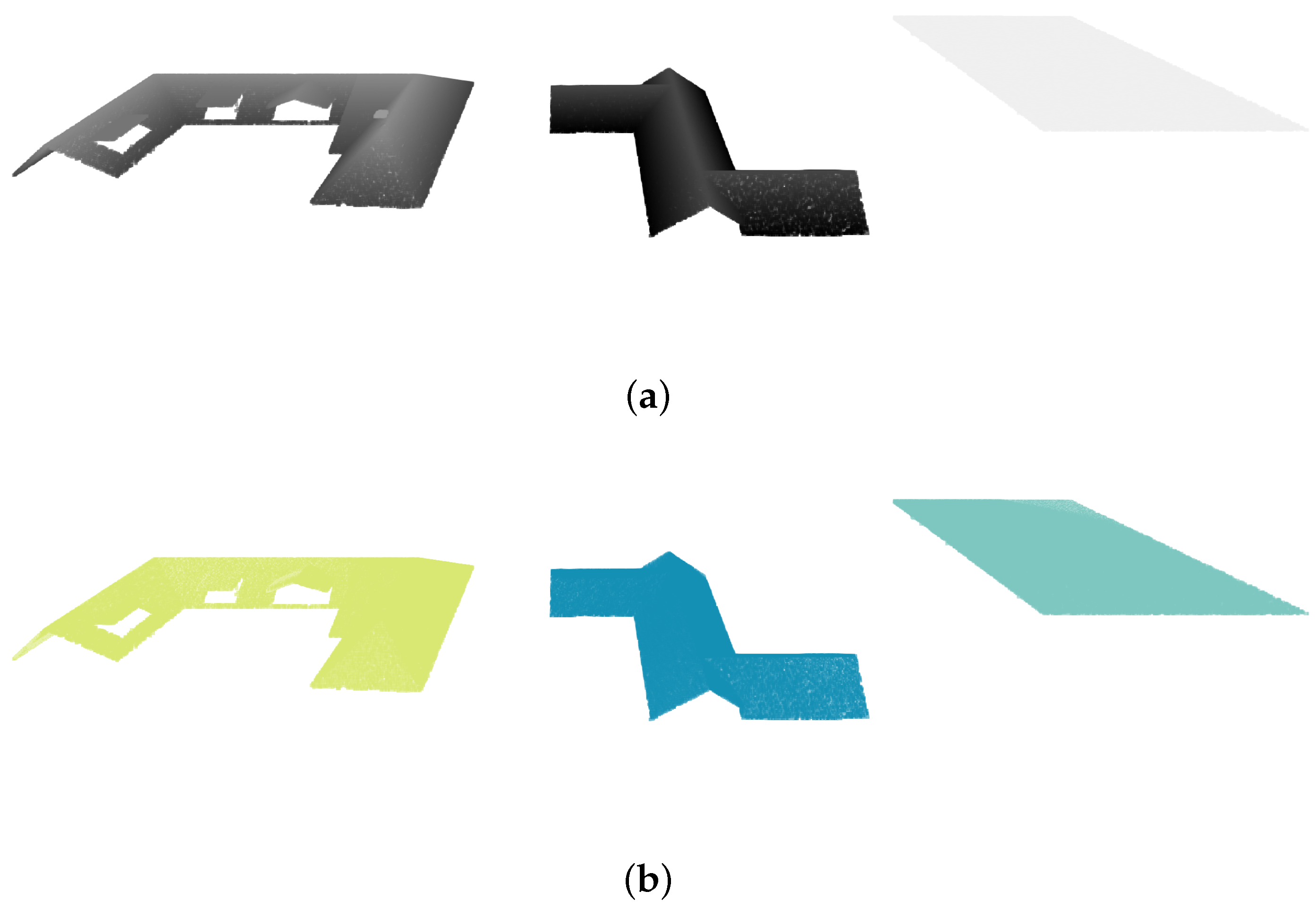

2.1. Data Preprocessing

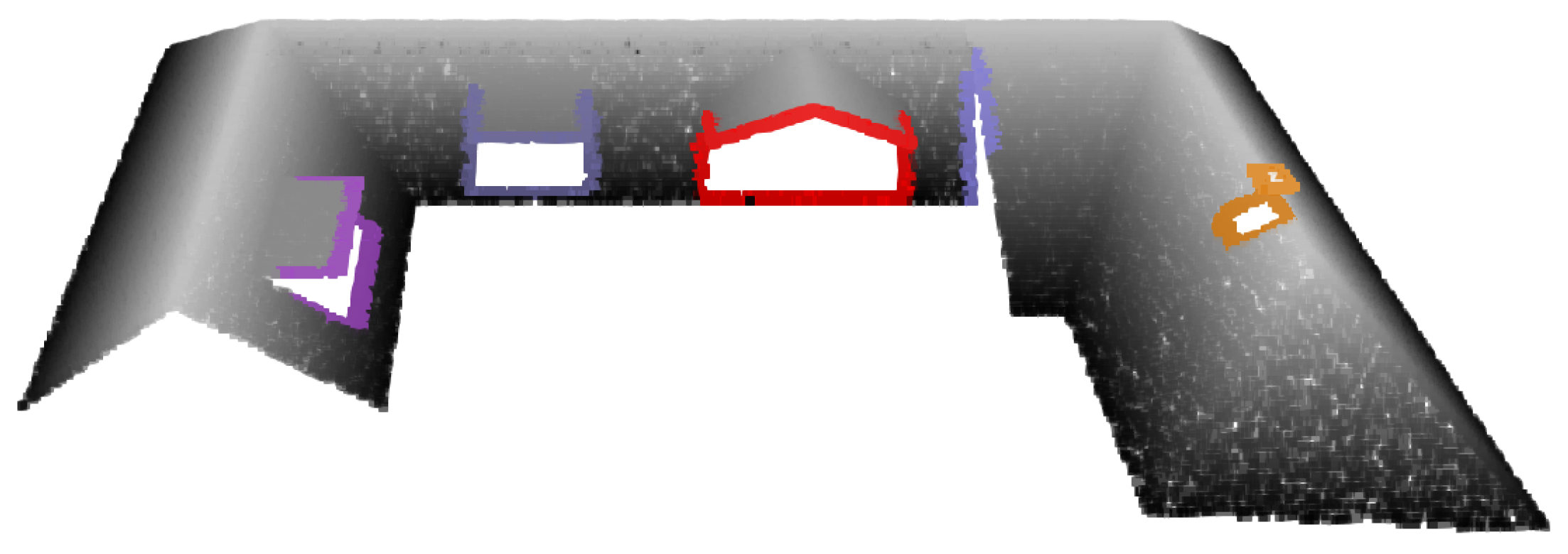

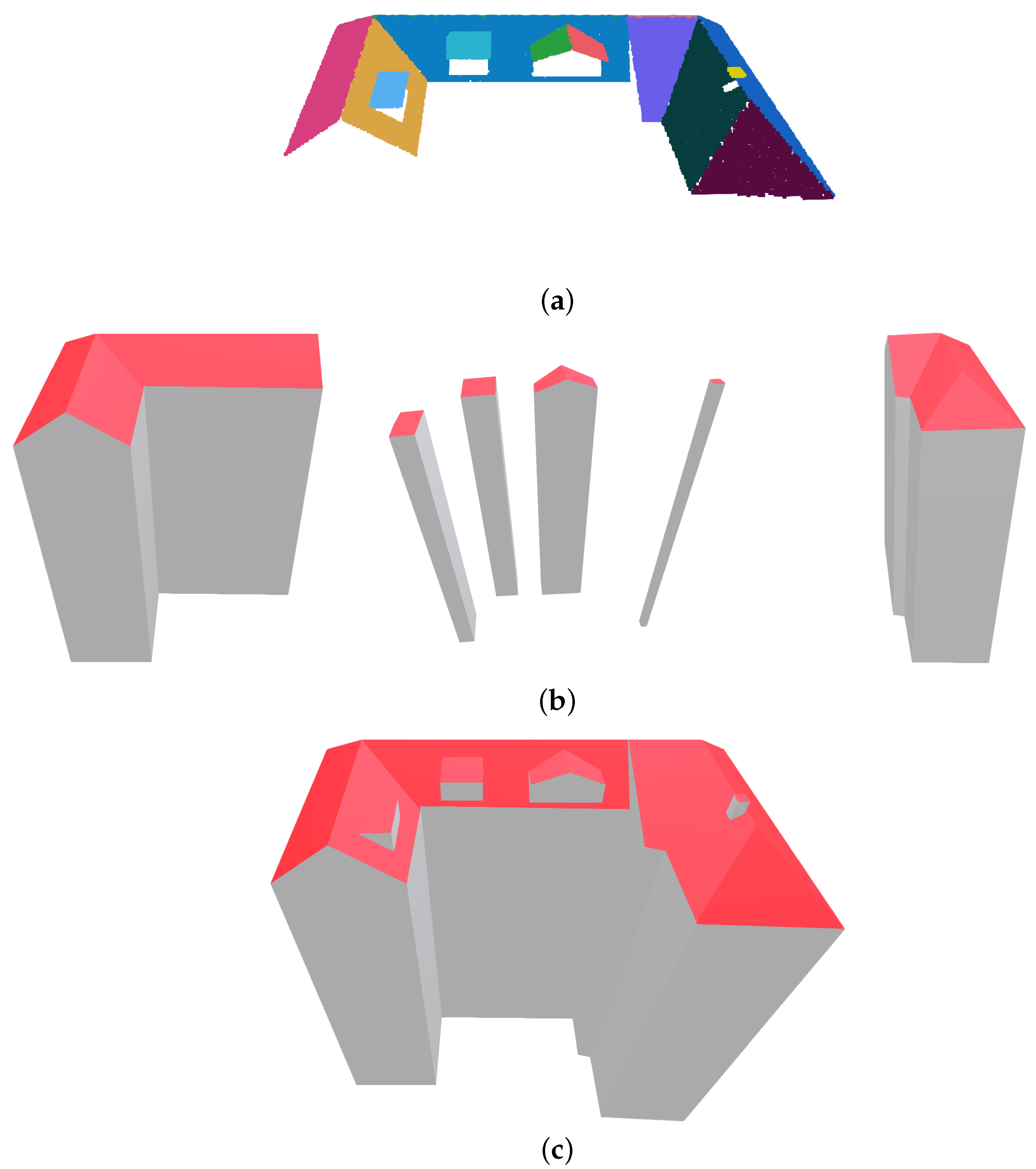
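A common preprocessing step for airborne LiDAR is separating the points of individual buildings. As a minimal sketch of one option (an assumption, not necessarily the authors' procedure), density-based clustering (DBSCAN, Ester et al., 1996) can be run on the xy coordinates. The parameter names `eps` and `min_pts` and their example values are hypothetical, and the brute-force neighbour search is only suitable for small inputs.

```python
import math
from typing import List, Optional, Tuple

Point2 = Tuple[float, float]
NOISE = -1


def region_query(points: List[Point2], i: int, eps: float) -> List[int]:
    # Brute-force search: indices of all points within eps of point i (including i).
    xi, yi = points[i]
    return [j for j, (x, y) in enumerate(points) if math.hypot(x - xi, y - yi) <= eps]


def dbscan(points: List[Point2], eps: float, min_pts: int) -> List[int]:
    """Return a cluster id per point (0, 1, ...) or NOISE (-1)."""
    labels: List[Optional[int]] = [None] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbours = region_query(points, i, eps)
        if len(neighbours) < min_pts:
            labels[i] = NOISE          # may later be relabelled as a border point
            continue
        labels[i] = cluster            # i is a core point: start a new cluster
        seeds = list(neighbours)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster    # border point: joins, but is not expanded
                continue
            if labels[j] is not None:
                continue               # already assigned
            labels[j] = cluster
            j_neighbours = region_query(points, j, eps)
            if len(j_neighbours) >= min_pts:
                seeds.extend(j_neighbours)  # j is also a core point: keep growing
        cluster += 1
    return [NOISE if label is None else label for label in labels]


# Hypothetical usage: two 5x5 point patches 8 m apart and one stray point.
pts = [(0.5 * i, 0.5 * j) for i in range(5) for j in range(5)]
pts += [(10 + 0.5 * i, 0.5 * j) for i in range(5) for j in range(5)]
pts.append((50.0, 50.0))
labels = dbscan(pts, eps=0.6, min_pts=3)
```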

2.2. Building Division
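The introduction describes dividing a building into sub-buildings at height jumps. The following minimal sketch illustrates that idea under assumptions that are not the authors' method: neighbours are found with a fixed xy radius instead of the paper's custom search space, a neighbour pair whose height difference exceeds a hypothetical `jump` threshold is treated as separated by a height jump, and sub-buildings are the connected components of the remaining neighbour graph, found with union-find. The function `split_at_height_jumps` is hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]


def find(parent: List[int], i: int) -> int:
    # Union-find root lookup with path halving.
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i


def split_at_height_jumps(points: List[Point3], radius: float, jump: float) -> List[int]:
    """Points connected without crossing a height jump share a sub-building label."""
    n = len(points)
    parent = list(range(n))
    for i in range(n):
        xi, yi, zi = points[i]
        for j in range(i + 1, n):
            xj, yj, zj = points[j]
            # Link xy neighbours only if their height difference is not a jump.
            if math.hypot(xj - xi, yj - yi) <= radius and abs(zj - zi) <= jump:
                ri, rj = find(parent, i), find(parent, j)
                if ri != rj:
                    parent[rj] = ri
    # Relabel roots as consecutive sub-building ids 0, 1, 2, ... in point order.
    ids: Dict[int, int] = {}
    return [ids.setdefault(find(parent, i), len(ids)) for i in range(n)]


# Hypothetical usage: a 10 m high block adjoining a 4 m high block.
high = [(0.5 * i, 0.5 * j, 10.0) for i in range(4) for j in range(4)]
low = [(2.0 + 0.5 * i, 0.5 * j, 4.0) for i in range(4) for j in range(4)]
labels = split_at_height_jumps(high + low, radius=0.6, jump=1.0)
print(labels[0], labels[-1])  # 0 1
```

The threshold must exceed the height change a sloped roof produces within the search radius (for a 30° roof and a 0.6 m radius, about 0.35 m), otherwise slopes are cut. A height jump that does not run across the whole building leaves both sides connected around its end, so this simple rule would not split such a building.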

2.3. Shaping 3D Building Models

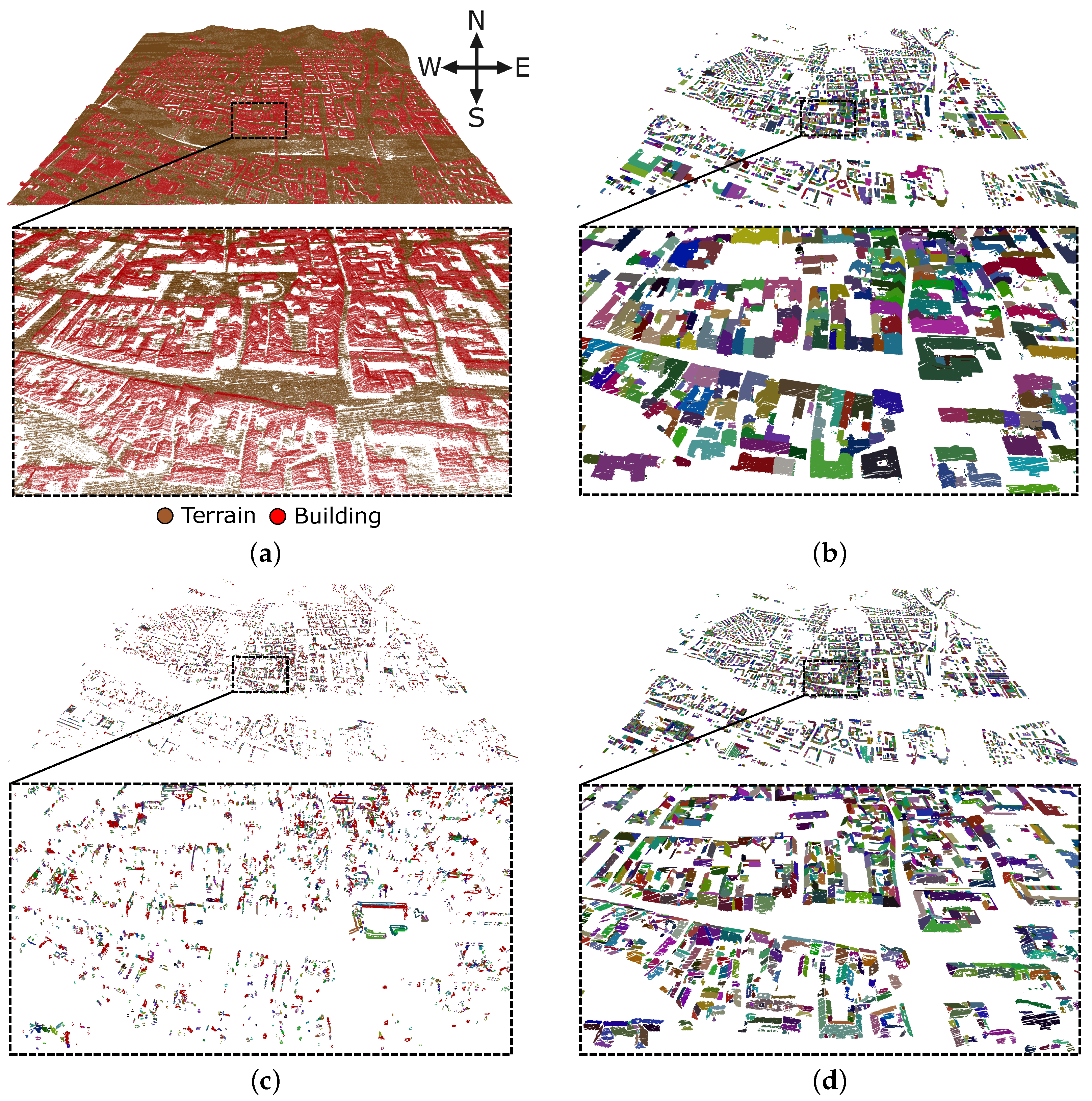
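Shaping the models needs planes that bound each sub-building from above. As a minimal sketch, assuming a segmented roof plane is non-vertical so it can be written as z = a·x + b·y + c, an ordinary least-squares fit solves the 3×3 normal equations (here with Cramer's rule), and the space below the roof is the half-space -a·x - b·y + z - c ≤ 0. The function names are hypothetical and this fit is not necessarily the one the authors use; a robust estimator may be preferable when outliers are present.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def det3(m: Sequence[Sequence[float]]) -> float:
    # Determinant of a 3x3 matrix by cofactor expansion along the first row.
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_roof_plane(points: List[Point3]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c; returns (a, b, c)."""
    n = float(len(points))
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    # Normal equations: A @ [a, b, c] = rhs
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(A)
    if abs(d) < 1e-12:
        raise ValueError("degenerate input: need at least 3 non-collinear points")
    solution = []
    for col in range(3):
        # Cramer's rule: replace column `col` with the right-hand side.
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        solution.append(det3(m) / d)
    a, b, c = solution
    return a, b, c


def roof_half_space(a: float, b: float, c: float) -> Tuple[float, float, float, float]:
    # Coefficients (nx, ny, nz, d) of the region below the roof: -a*x - b*y + z - c <= 0.
    return -a, -b, 1.0, -c


# Hypothetical roof points sampled from the plane z = 5 + 0.5*y.
roof = [(float(x), float(y), 5 + 0.5 * y) for x in range(4) for y in range(4)]
a, b, c = fit_roof_plane(roof)
```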

3. Results and Discussion

3.1. Validation

3.2. Limitations

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | Value |
|---|---|
|  | 0.9 |
|  | 2.0 |
|  | 5 |

| Parameter | Value |
|---|---|
| k | 20 |
|  | 2.5 |
|  | 50 |
| (m) | 2 |

| Metric | Value |
|---|---|
| RMSE (m) | 0.29 |
| Completeness (%) | 85.0 |
| (%) | 96.9 |
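The table reports an RMSE and a completeness score. As a minimal sketch using the standard definitions (an assumption, since the evaluation protocol is not described here), RMSE is the square root of the mean squared height residual, and completeness is the percentage of reference entities that were detected, 100 · TP / (TP + FN). How residuals are sampled and how entities are matched are not shown; the residuals and counts in the example are hypothetical.

```python
import math
from typing import Sequence


def rmse(residuals: Sequence[float]) -> float:
    """Root-mean-square error of height residuals (model minus reference)."""
    if len(residuals) == 0:
        raise ValueError("no residuals")
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def completeness(true_positives: int, false_negatives: int) -> float:
    """Percentage of reference entities found: 100 * TP / (TP + FN)."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("empty reference set")
    return 100.0 * true_positives / total


print(round(rmse([0.3, -0.4]), 4))   # 0.3536
print(completeness(9, 1))            # 90.0
```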

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bizjak, M.; Mongus, D.; Žalik, B.; Lukač, N. Novel Half-Spaces Based 3D Building Reconstruction Using Airborne LiDAR Data. Remote Sens. 2023, 15, 1269. https://doi.org/10.3390/rs15051269
