Former-CR: A Transformer-Based Thick Cloud Removal Method with Optical and SAR Imagery

Abstract

1. Introduction

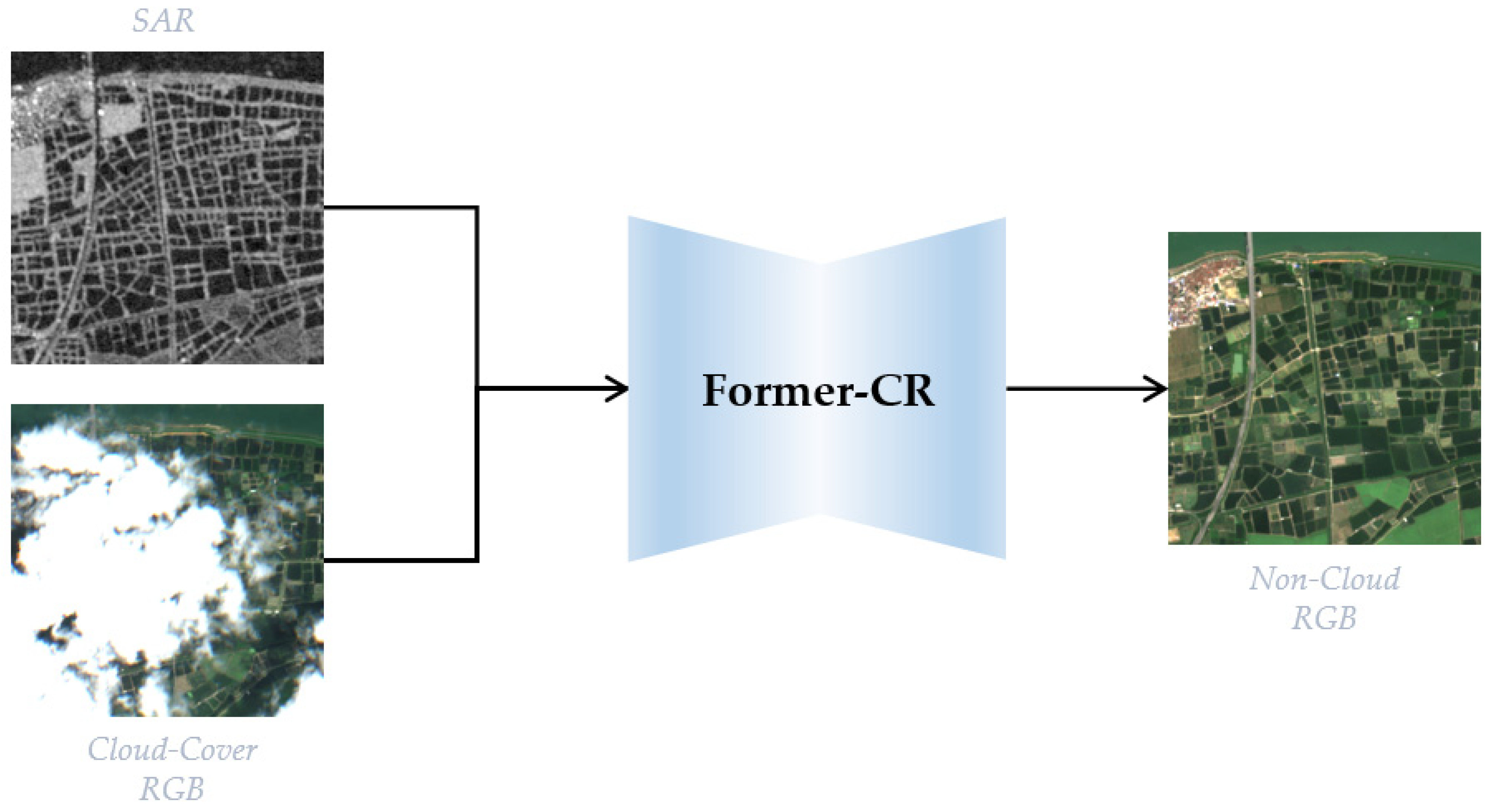

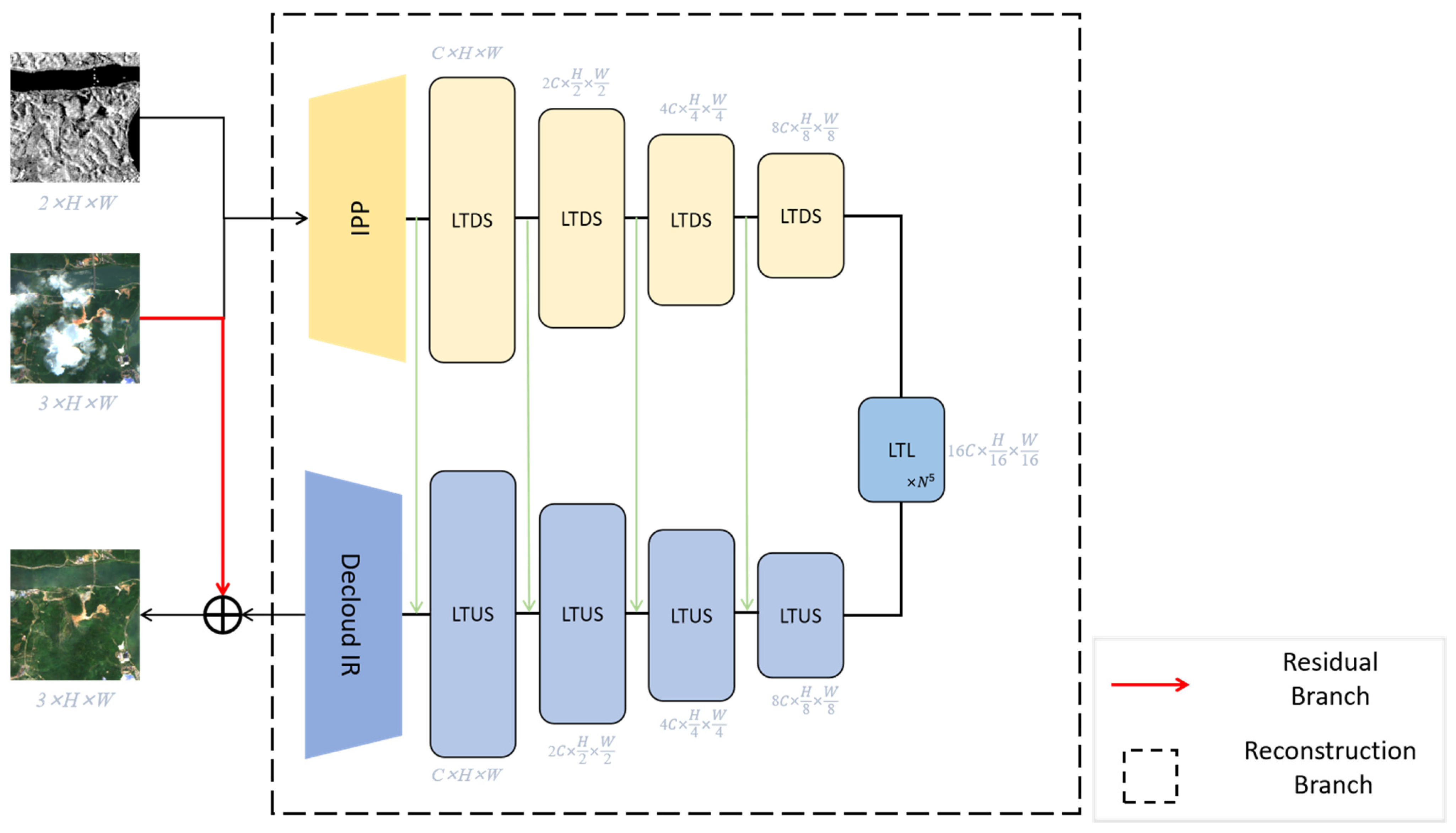

- We design Former-CR, a Transformer-based multisource cloud removal model that recovers cloud-free optical images directly from SAR and cloudy optical images. It combines the reliable texture and structure information of the SAR image with the color information from the cloud-free areas of the optical image to reconstruct a globally consistent cloud-free image.

- We design two modules, IPP and Decloud-IR. IPP makes the model input more flexible and extracts shallow features, while Decloud-IR makes the model output more flexible and better maps features to the output image space. Together, they increase the model’s capacity to process remote sensing images, as well as its flexibility and scalability.

- To improve structural similarity, visual interpretability, and global consistency, we propose a loss function that jointly accounts for all three factors as the optimization objective of our model. Ablation experiments verify its advantage for cloud removal.

2. Related Work

3. Materials and Methods

3.1. Reconstruction Branch

3.1.1. Overall Pipeline

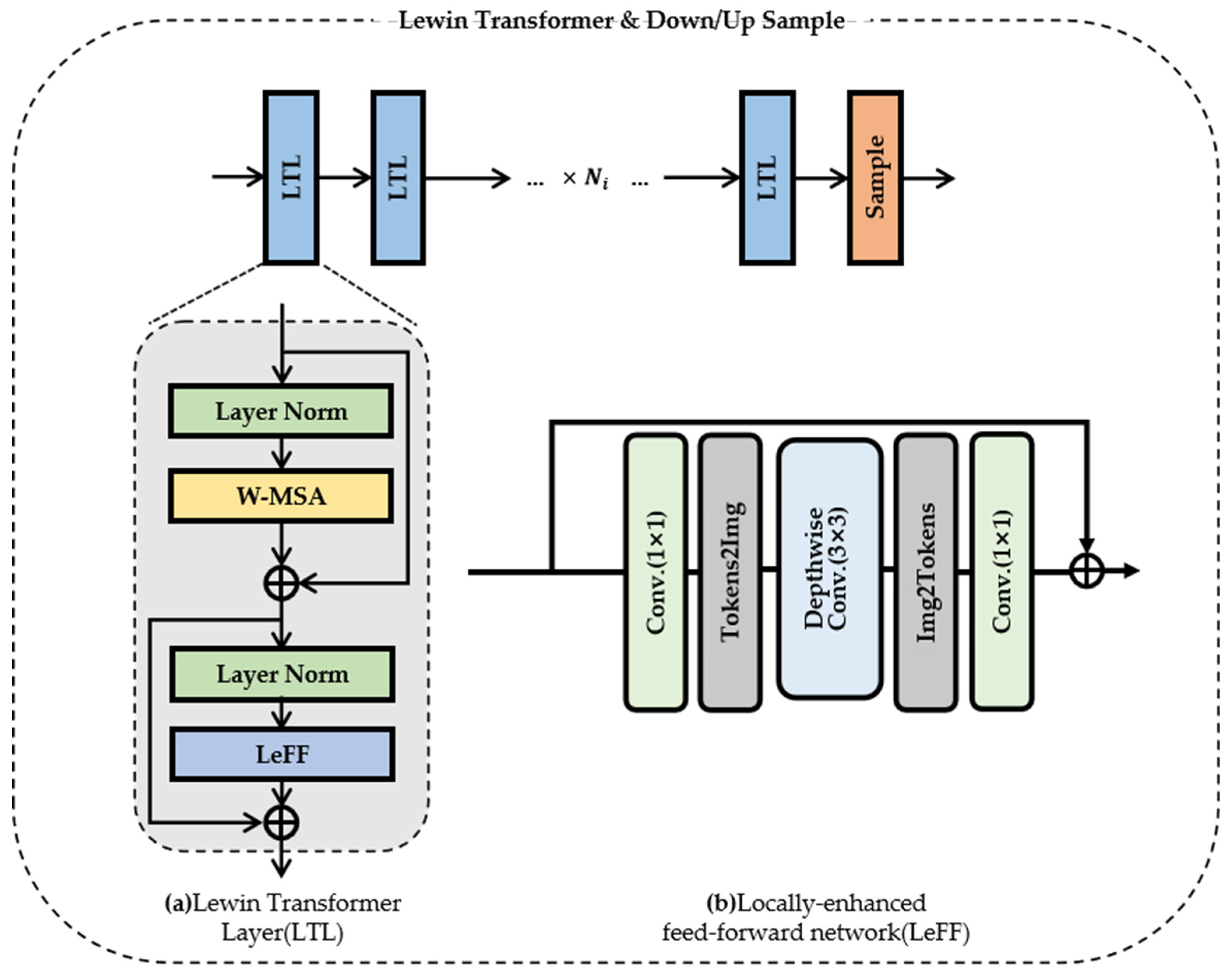

3.1.2. Lewin Transformer and Sample

3.1.3. Decloud-IR

3.2. Residual Branch

- Cloud-free area information retention: The residual branch carries the input cloudy optical image through to the output. This maximizes the reproduction of cloud-free area information and reduces contamination of, and changes to, information outside the cloud-occluded area during reconstruction. This is the most important contribution of the residual branch.

- Faster model convergence: The residual connection placed before the output cloud-free image reduces the difference between the predicted image and the target image. Under the constraint of the loss function, the smaller the loss between the predicted image and the target image, the faster the model converges.

- Prediction stability: When large areas of the input are occluded by thick clouds, little effective information remains in the optical image. Even if the reconstruction branch cannot recover high-quality information for the cloud-covered areas, the residual connection at least preserves the information in cloud-free areas. Therefore, even in the worst case, the model’s output is not much worse than the input cloudy image. Compared with CGAN-based approaches, whose predictions can be unstable or inaccurate when data quality is poor, our model with the residual connection produces notably more stable results.
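
The behavior described in the three points above can be illustrated with a minimal sketch. It assumes that the reconstruction branch predicts an additive correction that is summed pixel by pixel with the cloudy optical input. The function name `apply_residual`, the flat pixel lists, and the sample values are hypothetical and are not taken from the authors' implementation.

```python
from typing import List


def apply_residual(cloudy: List[float], correction: List[float]) -> List[float]:
    """Add the predicted correction to the cloudy input, pixel by pixel.

    Assumption: the residual branch is a plain skip connection, so the
    network only has to learn the difference between input and target.
    """
    if len(cloudy) != len(correction):
        raise ValueError("input and correction must have the same length")
    return [pixel + delta for pixel, delta in zip(cloudy, correction)]


# Hypothetical 3-pixel image: the middle pixel is covered by a bright cloud.
cloudy_pixels = [0.2, 0.9, 0.4]

# A useful reconstruction changes only the cloudy pixel.
restored = apply_residual(cloudy_pixels, [0.0, -0.6, 0.0])

# Worst case: the reconstruction branch contributes nothing,
# so the output falls back to the cloudy input itself.
fallback = apply_residual(cloudy_pixels, [0.0, 0.0, 0.0])
```

In this sketch the cloud-free pixels pass through unchanged whenever the correction is zero there, which mirrors the retention and stability arguments above.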

3.3. Loss Function

3.4. Metric

3.4.1. SSIM
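
As a hedged illustration, the standard SSIM formula can be sketched from global image statistics. This is a simplification: SSIM is usually computed over local windows and averaged, and the exact variant used in the experiments is not stated here. The function name `global_ssim`, the `data_range` parameter, and the constants 0.01 and 0.03 (the commonly used defaults) are assumptions.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], data_range: float = 1.0) -> float:
    """Single-window SSIM computed over all pixels of two images."""
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("inputs must be non-empty and equal in length")
    n = len(x)
    # Stabilizing constants with the commonly used factors 0.01 and 0.03.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) * (a - mu_x) for a in x) / n
    var_y = sum((b - mu_y) * (b - mu_y) for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Identical inputs give a value of 1, and the value decreases as luminance, contrast, or structure diverge.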

3.4.2. PSNR
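
A minimal sketch of the standard PSNR definition, 10·log10(MAX² / MSE), is given below. The function name `psnr` and the assumption that pixel values lie in [0, `data_range`] are illustrative choices, not details taken from the experiments.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in decibels between two images."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("inputs must be non-empty and equal in length")
    # Mean squared error over all pixels.
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(data_range ** 2 / mse)
```

Higher values indicate a prediction closer to the target image.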

3.4.3. MAE
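
The standard mean absolute error can be sketched as follows. The function name `mae` and the flat pixel sequences are illustrative assumptions.

```python
from typing import Sequence


def mae(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error between two images, averaged over all pixels."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("inputs must be non-empty and equal in length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)
```

Lower values indicate a prediction closer to the target image.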

4. Results

4.1. Dataset

4.2. Training Setting

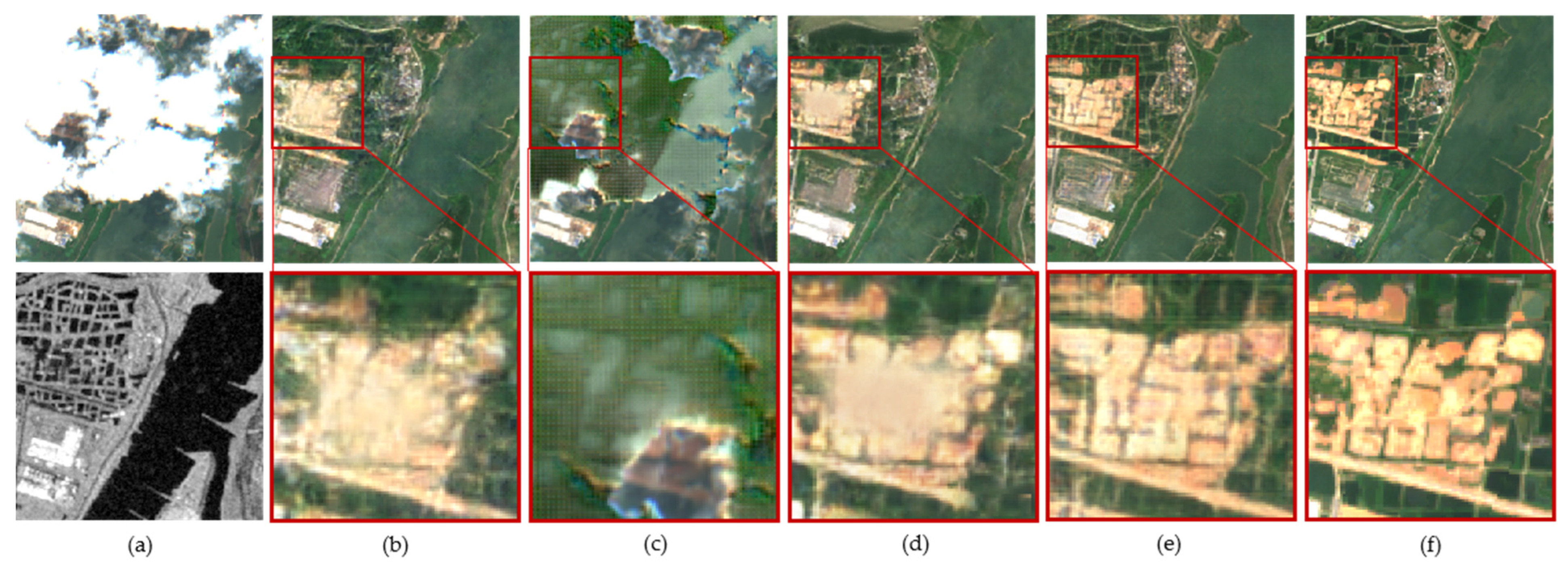

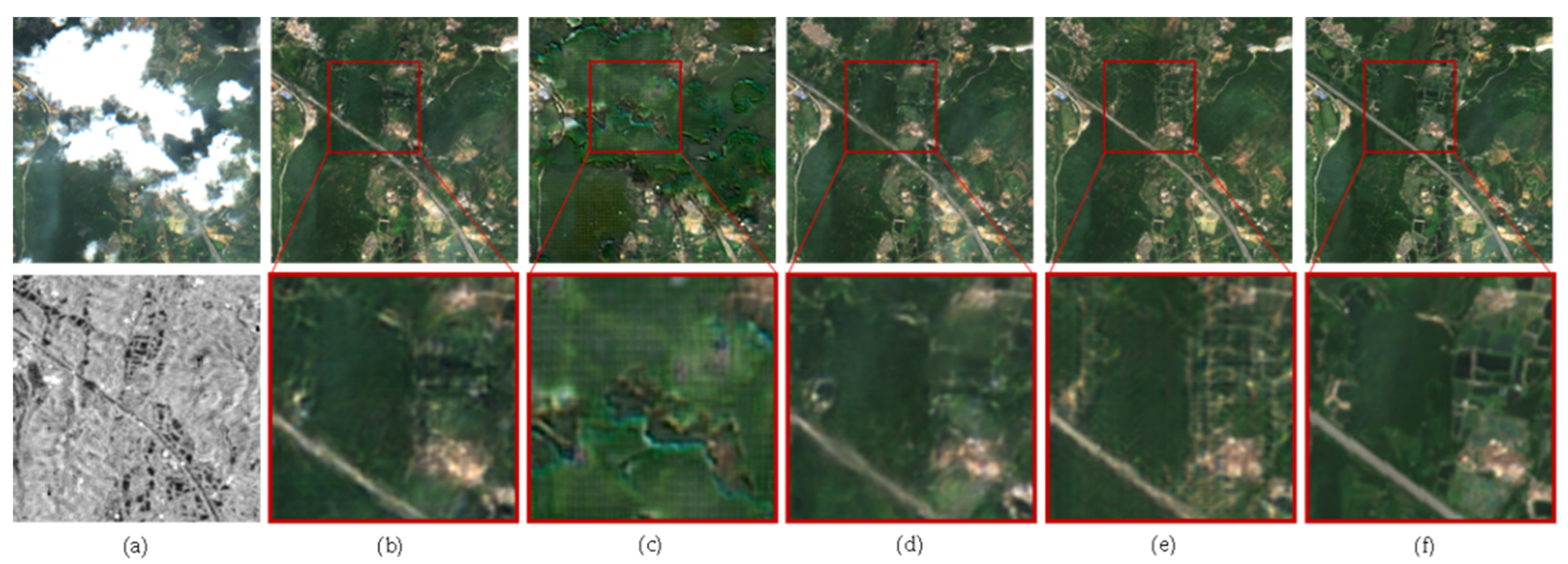

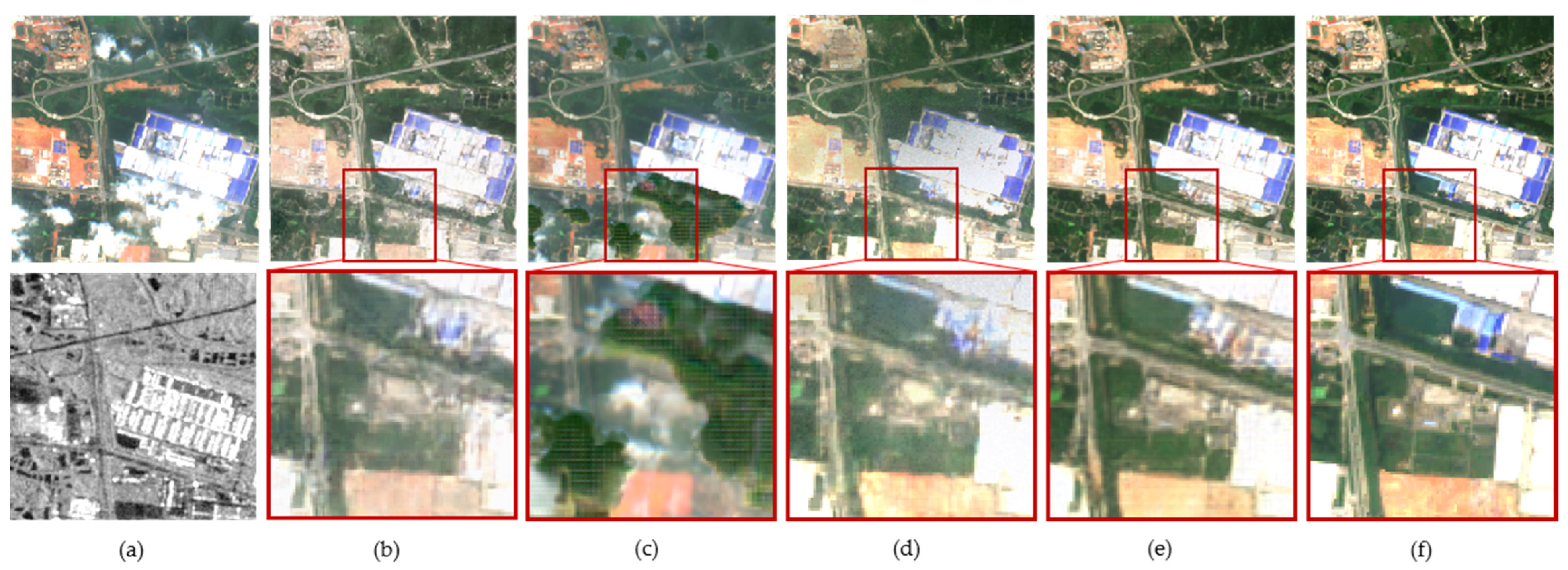

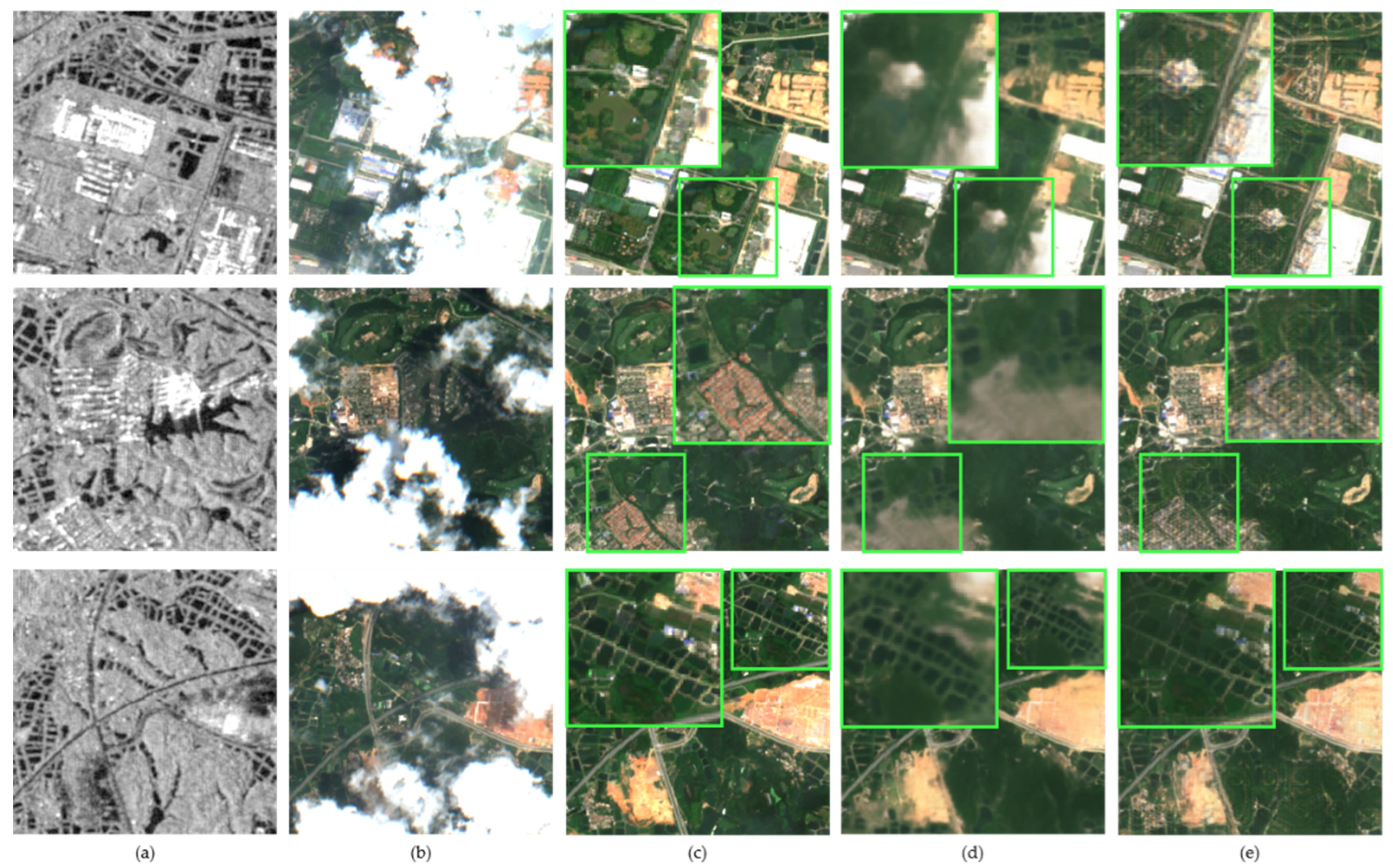

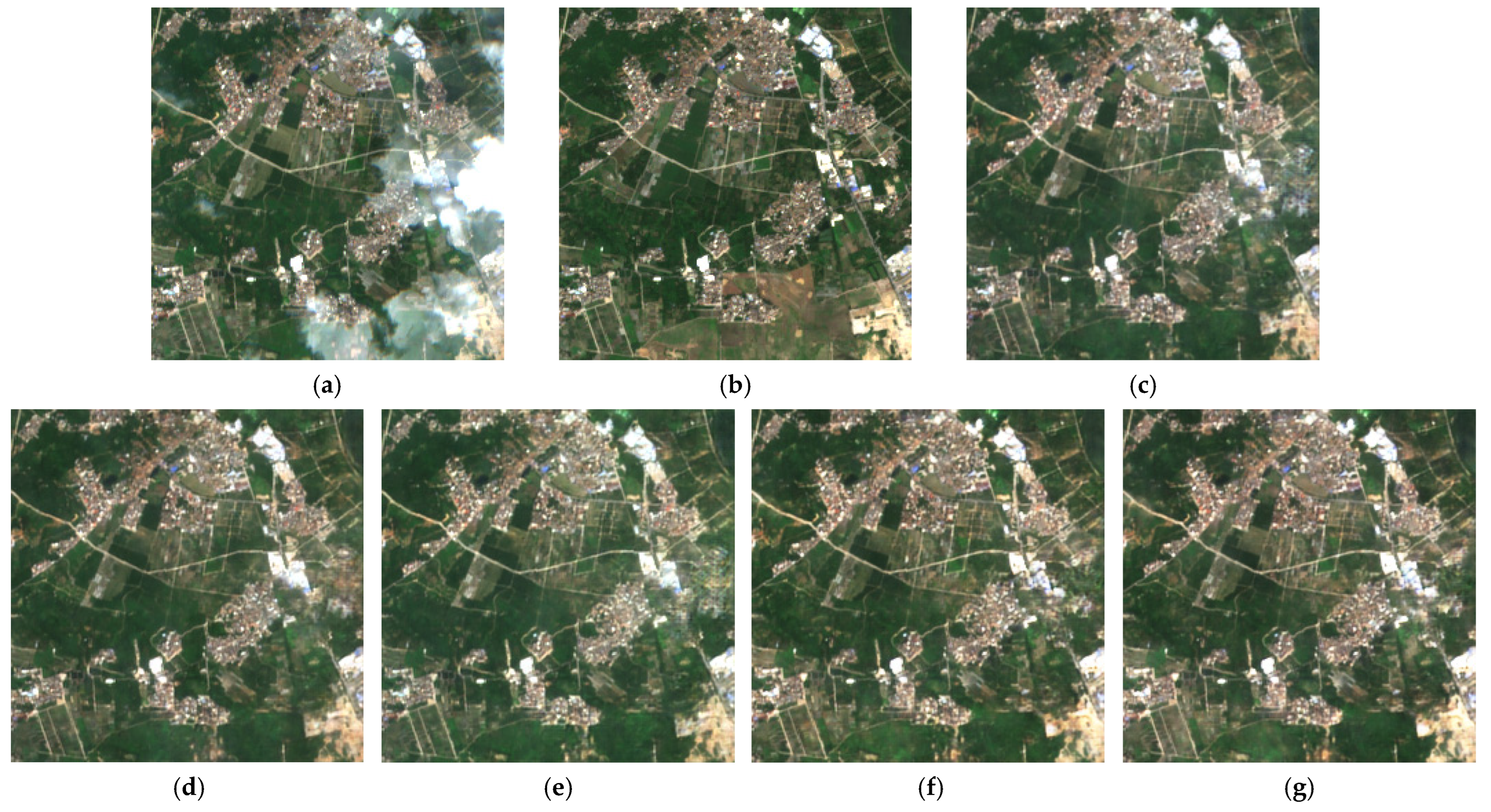

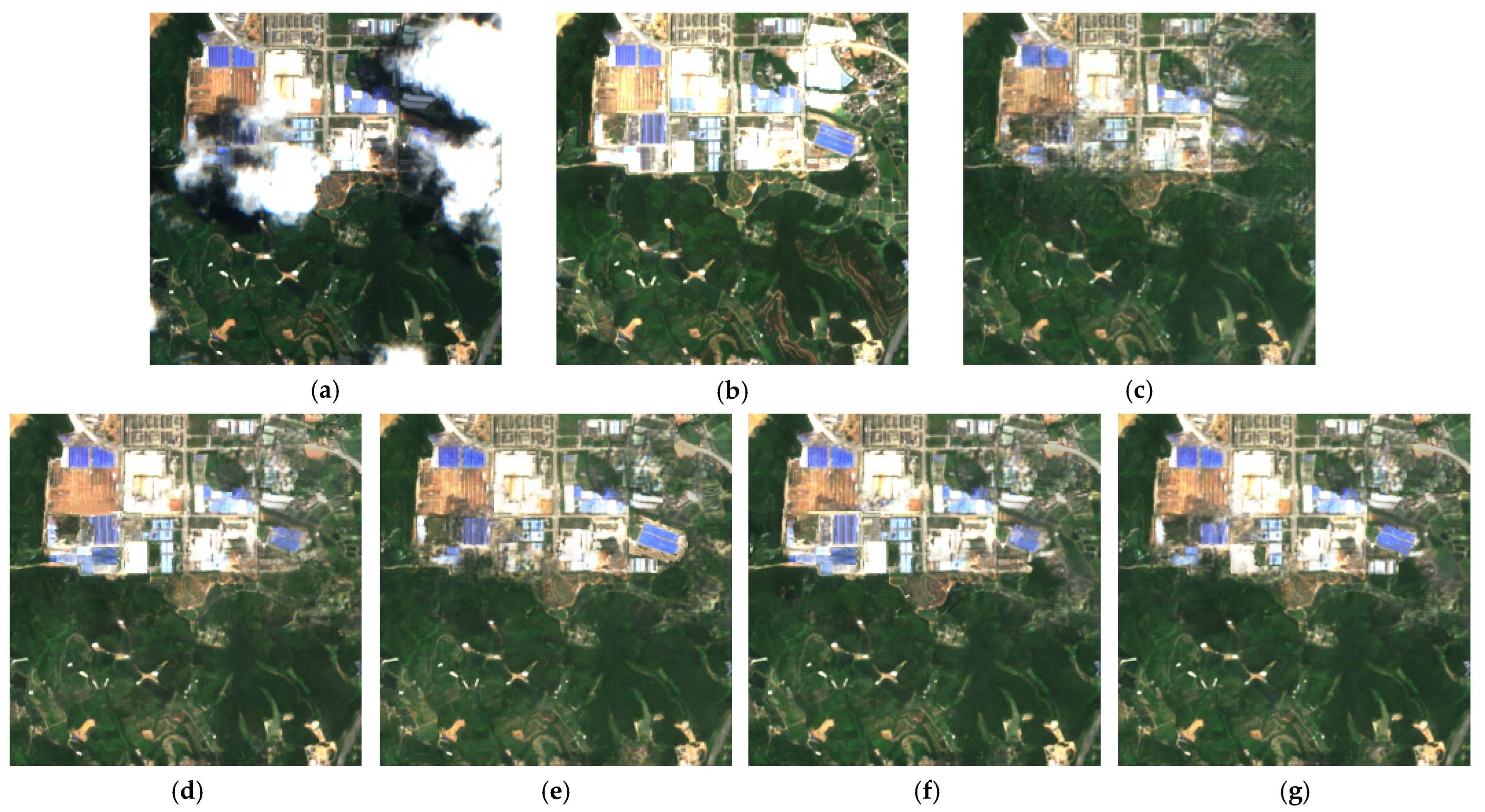

4.3. Experimental Results

4.4. Loss Function Ablation Experiment

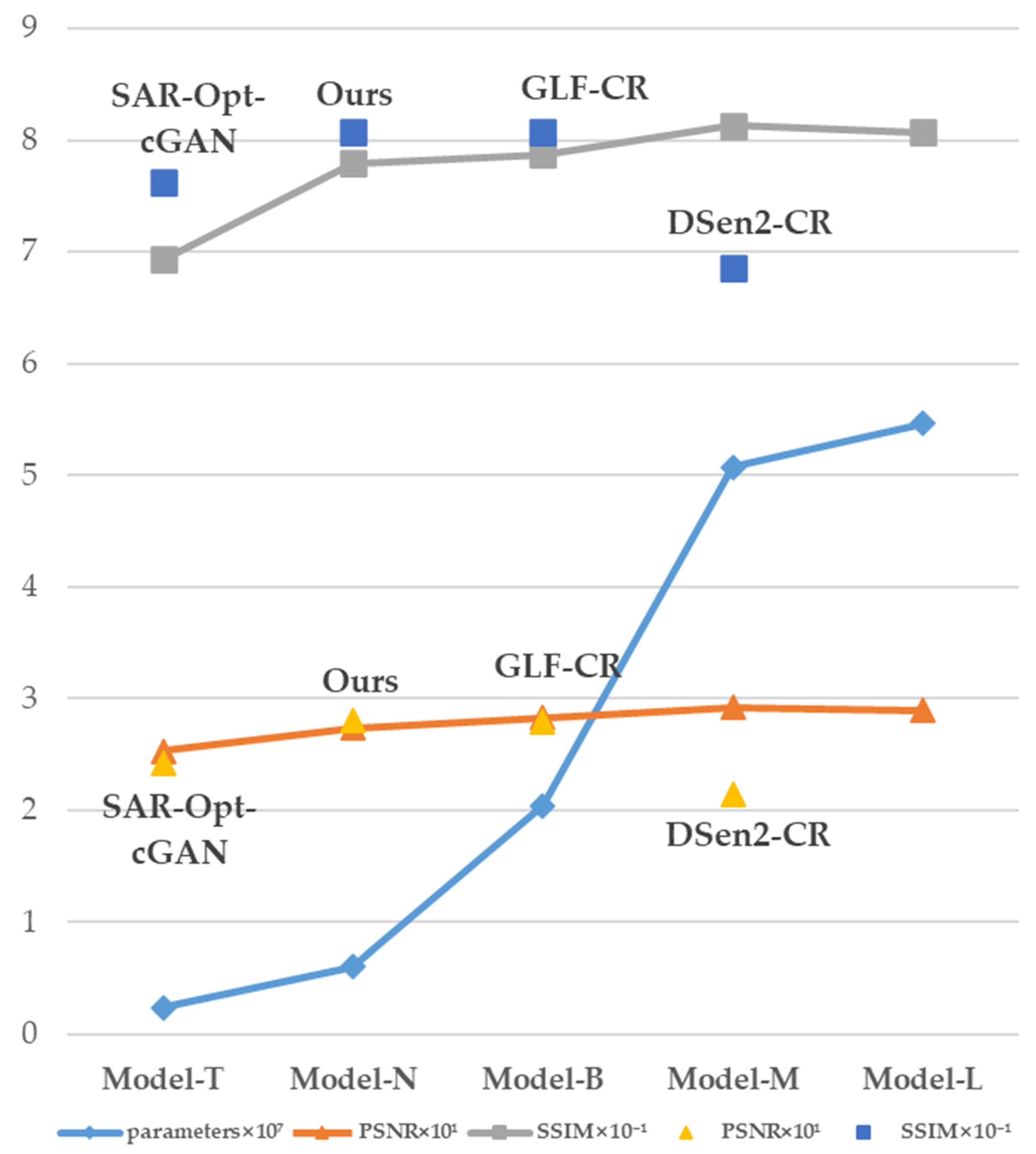

4.5. Parameters Ablation Experiment

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Metric | SAR-Opt-cGAN | DSen2-CR | GLF-CR | Ours |
|---|---|---|---|---|
| SSIM | 0.76237 | 0.6856 | 0.8082 | |
| PSNR | 24.26 | 21.39 | 27.95 | 28.73 |
| MAE | 0.0465 | 0.0753 | 0.0284 | 0.0263 |

| Metric | L1 Loss | Ours |
|---|---|---|
| PSNR | 22.14 | 28.67 |
| L1 | 0.0352 | 0.0283 |
| SSIM | 0.5735 | 0.8103 |

| Model | | |
|---|---|---|
| Model-T | 16 | {1, 1, 1, 1, 1} |
| Model-N | 16 | {2, 2, 2, 2, 4} |
| Model-B | 16 | {4, 4, 4, 4, 4} |
| Model-M | 32 | {1, 2, 4, 8, 4} |
| Model-L | 32 | {4, 4, 4, 4, 4} |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, S.; Wang, J.; Zhang, S. Former-CR: A Transformer-Based Thick Cloud Removal Method with Optical and SAR Imagery. Remote Sens. 2023, 15, 1196. https://doi.org/10.3390/rs15051196
