Learning Domain-Adaptive Landmark Detection-Based Self-Supervised Video Synchronization for Remote Sensing Panorama

Abstract

1. Introduction

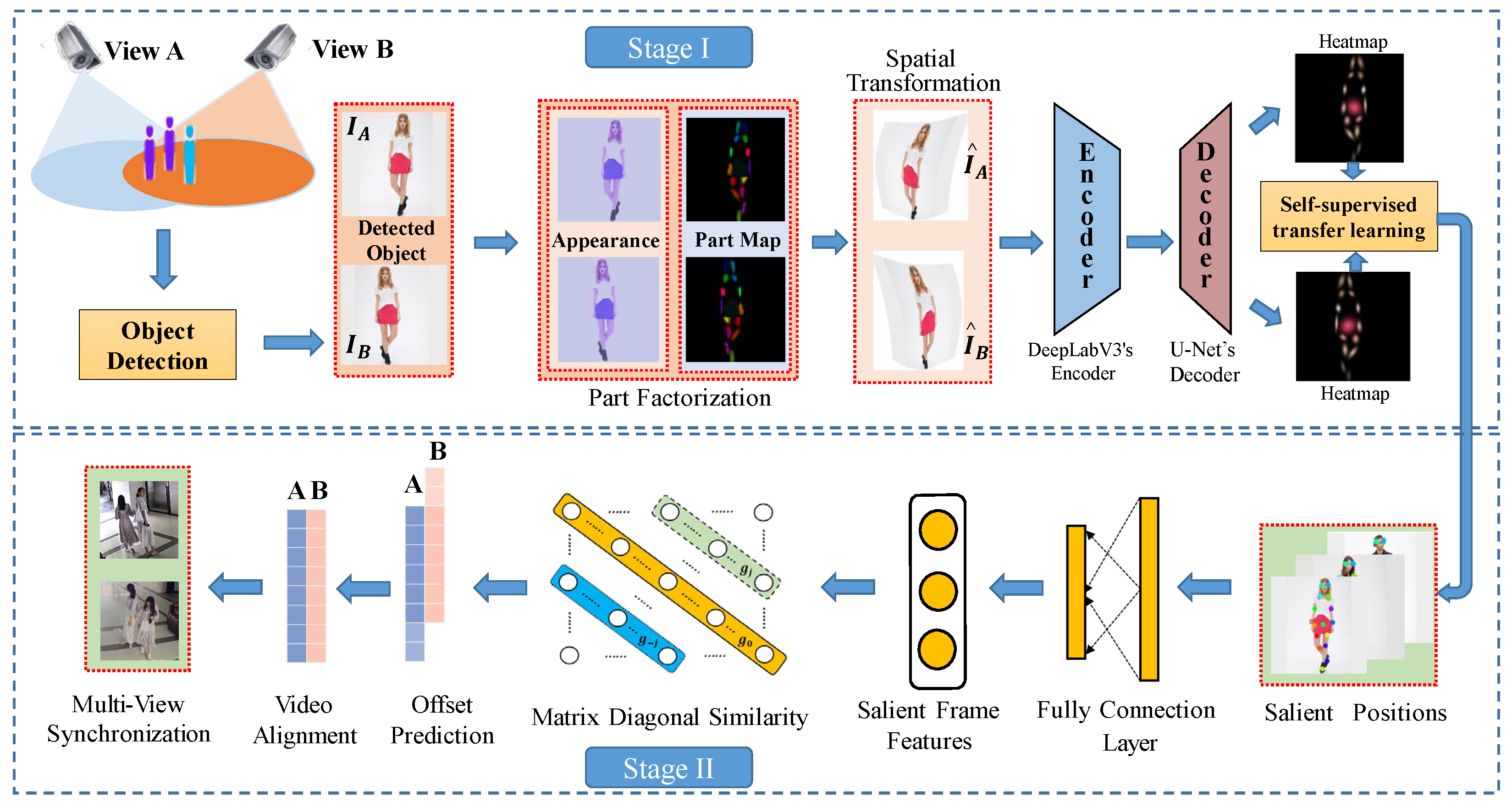

- We propose a self-supervised style transfer solution that decomposes a scene into objects and their parts to learn domain-specific object positions in each frame, which makes it possible to track keypoint locations, such as animal positions and articulated human poses, over time.

- We propose an efficient two-stage method, style transfer and matrix diagonal (STMD), which uses these keypoint locations to train a generalized similarity model that predicts the synchronization offset between two views.

- Experiments on three video-synchronization datasets and an application to UAV remote sensing image mosaicking demonstrate the superiority and generalization of the proposed method across domains.
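The two stages described above can be illustrated with a minimal sketch. This excerpt does not include the method details, so the sketch rests on several assumptions.

- Stage I is assumed to output one part map per landmark. Each map is converted to a sub-pixel position with a spatial soft-argmax, a common choice in unsupervised landmark discovery.
- The learned similarity model of Stage II is replaced by a hand-crafted negative Euclidean distance.
- The predicted offset is taken as the diagonal of the frame-to-frame similarity matrix with the highest mean similarity.

The function names `soft_argmax`, `similarity_matrix` and `diagonal_offset` are hypothetical.

```python
import numpy as np


def soft_argmax(part_maps: np.ndarray) -> np.ndarray:
    """Convert K part maps of shape (K, H, W) into K expected (x, y) pixel positions."""
    k, h, w = part_maps.shape
    flat = part_maps.reshape(k, -1)
    # Spatial softmax: each part map becomes a probability distribution over pixels.
    flat = np.exp(flat - flat.max(axis=1, keepdims=True))
    prob = (flat / flat.sum(axis=1, keepdims=True)).reshape(k, h, w)
    ys, xs = np.mgrid[0:h, 0:w]
    # Expected column (x) and row (y) under each distribution: a sub-pixel estimate.
    x = (prob * xs).sum(axis=(1, 2))
    y = (prob * ys).sum(axis=(1, 2))
    return np.stack([x, y], axis=1)


def similarity_matrix(feat_a: np.ndarray, feat_b: np.ndarray) -> np.ndarray:
    """Hand-crafted stand-in (assumption) for a learned similarity model.

    feat_a: (T_a, D) and feat_b: (T_b, D) per-frame features, e.g. flattened keypoints.
    Returns S of shape (T_a, T_b) with S[i, j] = -||feat_a[i] - feat_b[j]||.
    """
    diff = feat_a[:, None, :] - feat_b[None, :, :]
    return -np.linalg.norm(diff, axis=-1)


def diagonal_offset(sim: np.ndarray, max_offset: int) -> int:
    """Return the offset d whose diagonal S[t, t + d] has the highest mean similarity.

    A positive d means frame t of view A corresponds to frame t + d of view B.
    """
    best_d, best_score = 0, -np.inf
    for d in range(-max_offset, max_offset + 1):
        diag = np.diagonal(sim, offset=d)  # elements S[t, t + d] inside the matrix
        if diag.size == 0:
            continue
        score = diag.mean()
        if score > best_score:
            best_d, best_score = d, score
    return best_d


# Toy usage: two "views" that share the same per-frame features, shifted by 7 frames.
rng = np.random.default_rng(0)
shared = rng.normal(size=(120, 6))   # hypothetical: 3 keypoints x (x, y) per frame
view_a = shared[7:107]               # frame t of A is frame t + 7 of the shared clip
view_b = shared[0:100]               # frame t of B is frame t of the shared clip
S = similarity_matrix(view_a, view_b)
print(diagonal_offset(S, max_offset=20))  # 7
```

Large offsets leave only a few overlapping frames on their diagonal, so their means are noisier. A practical variant would require a minimum overlap before comparing diagonals.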

2. Related Work

2.1. Synchronization Algorithms

2.2. Object Detection and Tracking

2.3. Self-Supervised Methods

3. The Proposed Method

3.1. Stage I: Style Transfer-Based Object Discovery and Tracking

3.2. Stage II: Matrix Diagonal Similarity-Based Classification Framework

4. Experiments

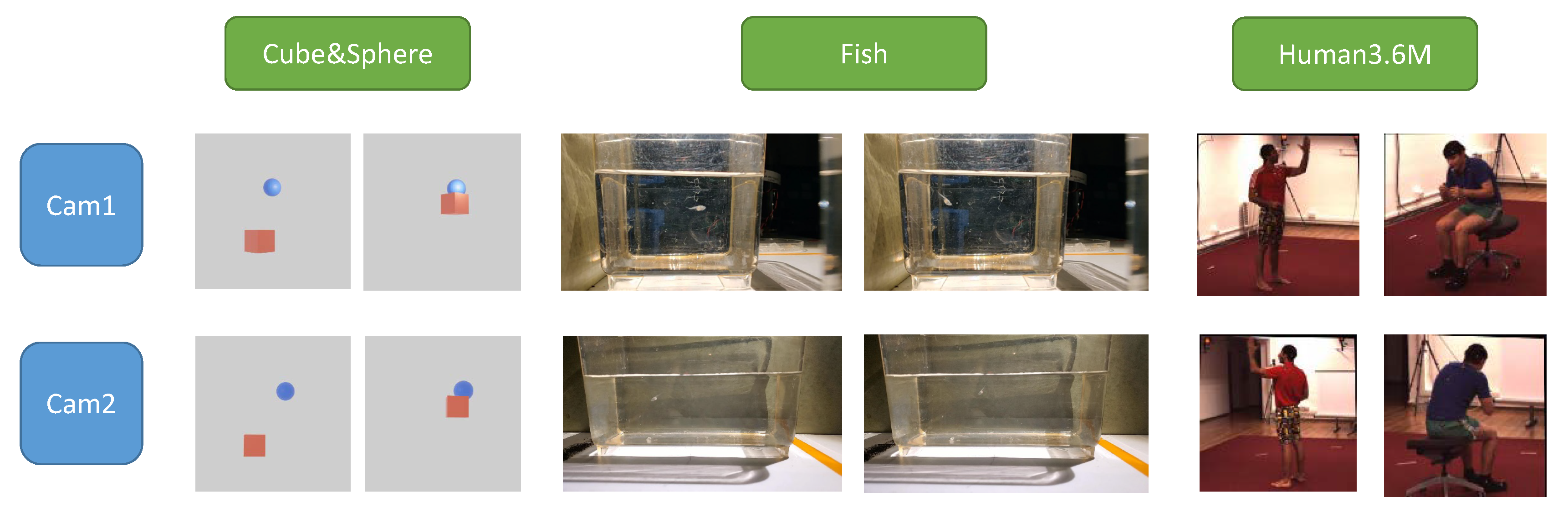

4.1. Datasets

4.2. Metrics

4.3. Experiment Setup

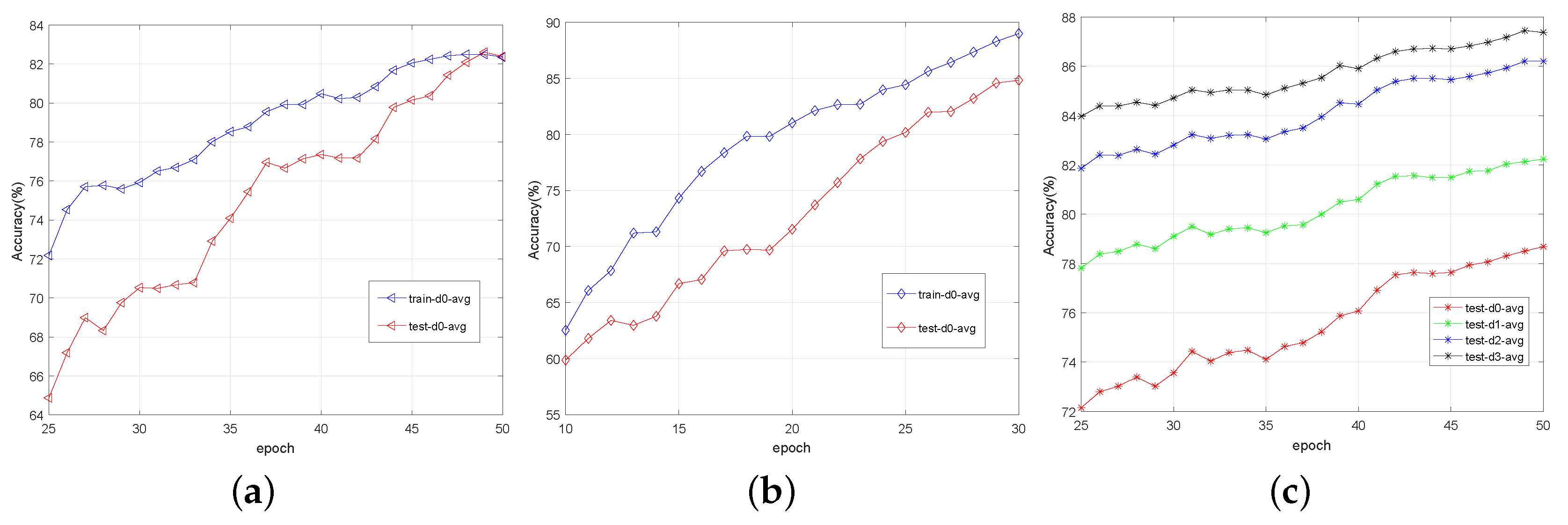

4.4. Results on Cube&Sphere Dataset

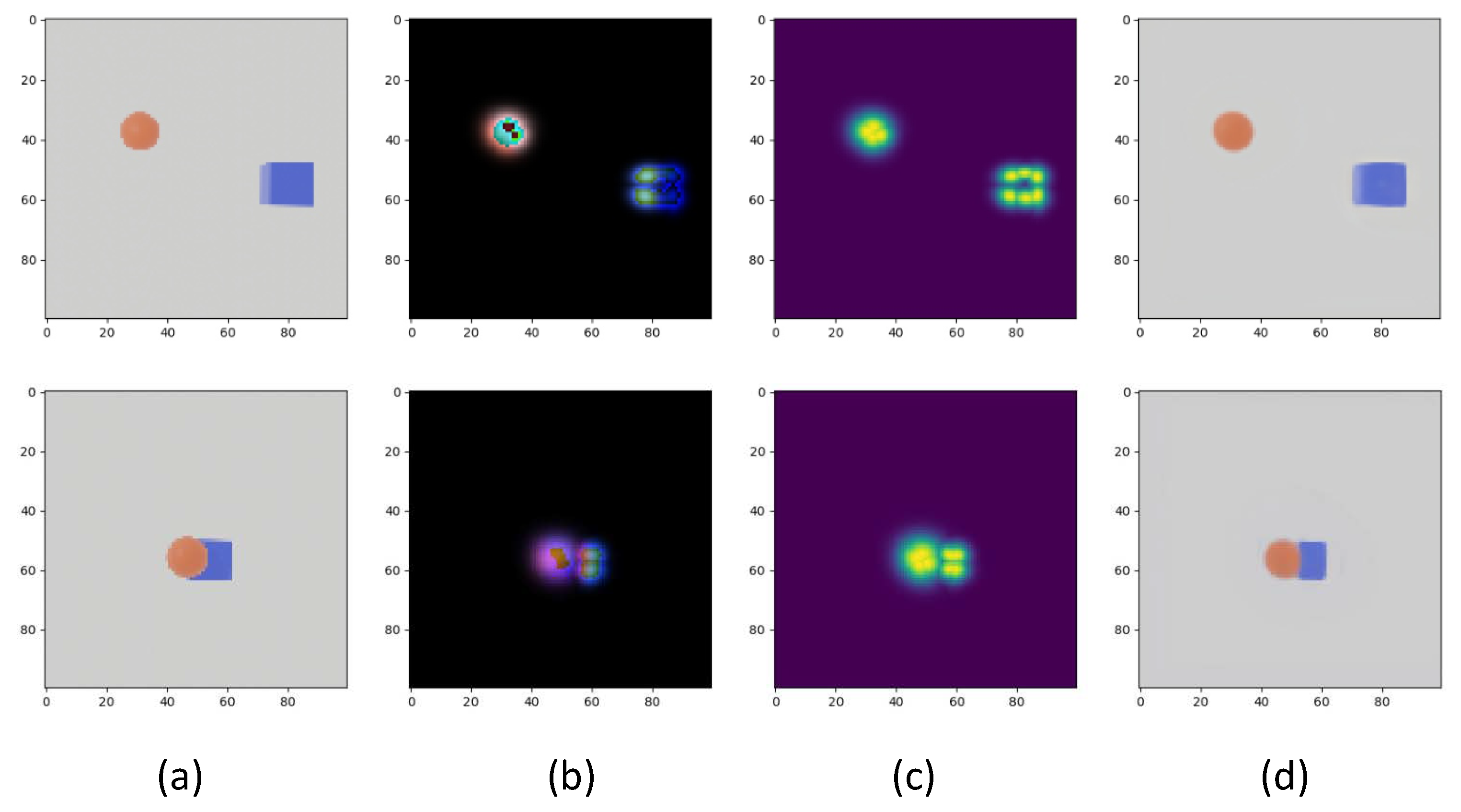

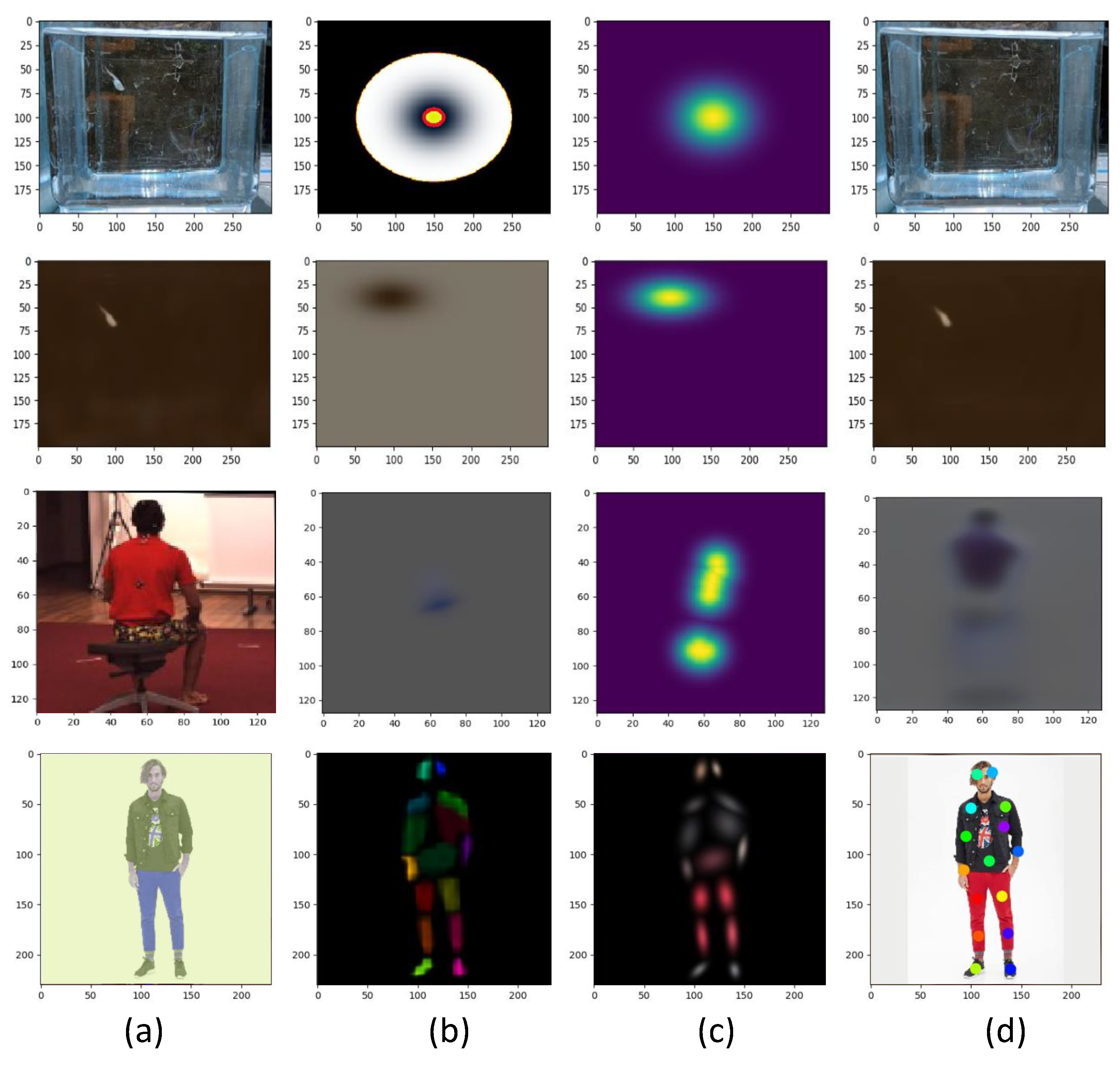

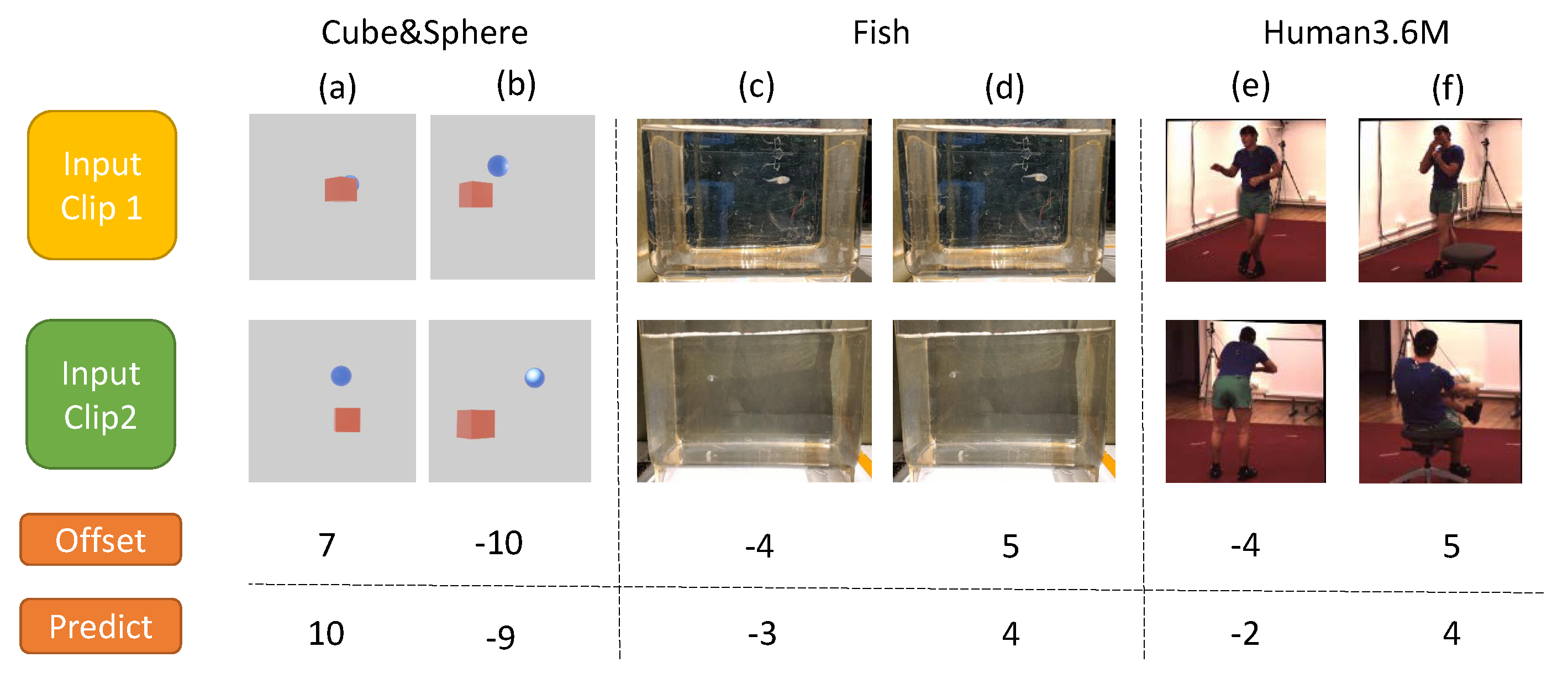

- The SynNet method [26] uses OpenPose [30], which outputs a heatmap for each human body joint. Like our method, it disentangles the image into parts. However, it does not generalize to arbitrary objects because the detector is trained on humans. By contrast, our ST module precisely estimates the salient points of non-human objects, as shown in Figure 4, which demonstrates the better generalization of our self-supervised approach.

- To compare the SynNet architecture and our synchronization network fairly, we also train SynNet with heatmaps generated by ST as input. We call this combination with our self-supervised part maps SynNet+ST. It improves the test-d0 accuracy of SynNet+OpenPose by 10.9 percentage points (from 10.9% to 21.8%). Moreover, our full method attains a much higher offset prediction accuracy (86.1% test-d0 for STMD+MSE). This indicates that operating on explicit 2D positions and their trajectories is better than using discretized heatmaps as input (as in SynNet+ST).

- We also compare against GTpoint+MD and PE.
  - GTpoint+MD is a strong baseline that uses ground-truth 2D coordinates instead of estimated ones to compute the matrix diagonal similarity. These ground-truth positions are the centers of the cube and sphere in Blender, projected onto the image plane.
  - PE applies positional encoding [72] to the ground-truth 2D positions, projecting each 2D point onto sinusoids of different frequencies to obtain a smooth, hierarchical encoding of position. PE therefore encodes the coordinate features before they are fed to MD, whereas STMD uses absolute coordinates.
  
  The proposed STMD+MSE surpasses both by a large margin: 86.1% test-d0, versus 77.1% for GTpoint+MD and 34.3% for PE. This suggests that the absolute positions produced by the ST stage are better inputs to the MD stage than the projected Blender positions or their positional encoding.

- Finally, we compare with baselines at different downsampling rates (ds). The results in Table 1 show that test accuracy increases with the downsampling rate, but so does SynError. Downsampling therefore improves accuracy at the cost of a larger synchronization error. Moreover, STMD outperforms every downsampled variant of the other baselines. Replacing the Blender ground-truth coordinates of GTpoint+MD with the positions estimated by the ST module improves test-d0 by 9.0 percentage points (from 77.1% to 86.1%), which validates the superiority of our method.
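The input representations compared above can be contrasted with a small sketch:

- projecting a 3D object center onto the image plane (as for the GTpoint positions),
- using the resulting absolute 2D coordinates (as in STMD),
- rasterizing them into a discretized Gaussian heatmap (as in SynNet+ST),
- encoding them with sinusoids (as in PE).

Everything specific here is an illustrative assumption, not a value from the paper: the pinhole intrinsics, the OpenCV-style camera convention, the heatmap width `sigma`, the normalization to [-1, 1] and the NeRF-style frequency schedule 2^k·π. Blender's camera looks down its local -Z axis, so a real pipeline would convert coordinates first. All function names are hypothetical.

```python
import numpy as np


def project_pinhole(point_cam, focal, cx, cy):
    """Project a 3D point in camera coordinates (assumed: Z forward, Y down) to pixels."""
    x, y, z = point_cam
    return np.array([focal * x / z + cx, focal * y / z + cy])


def gaussian_heatmap(xy, height, width, sigma=1.5):
    """Rasterize a 2D point into an (H, W) Gaussian heatmap, i.e. a discretized input."""
    ys, xs = np.mgrid[0:height, 0:width]
    d2 = (xs - xy[0]) ** 2 + (ys - xy[1]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))


def positional_encoding(xy, num_freqs=4):
    """Sinusoidal encoding of a 2D point normalized to [-1, 1].

    For each coordinate c, emits sin(2^k * pi * c) for k = 0..F-1, then the matching
    cosines, giving a vector of length 4 * F.
    """
    xy = np.asarray(xy, dtype=float)
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi      # (F,)
    angles = xy[:, None] * freqs[None, :]              # (2, F)
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=1).ravel()


# Hypothetical object center and camera intrinsics for a 32x24 frame.
width, height = 32, 24
center_2d = project_pinhole((0.1, -0.05, 2.0), focal=41.0, cx=16.0, cy=12.0)

# (a) Absolute coordinates: the 2D position itself, here normalized to [-1, 1].
absolute = center_2d / np.array([width, height]) * 2.0 - 1.0

# (b) Discretized heatmap: its argmax only recovers the nearest pixel.
heatmap = gaussian_heatmap(center_2d, height, width)
peak_row, peak_col = np.unravel_index(heatmap.argmax(), heatmap.shape)

# (c) Positional encoding of the normalized position.
encoding = positional_encoding(absolute)

print(int(peak_col), int(peak_row))   # 18 11
print(encoding.shape)                 # (16,)
```

The sub-pixel position (about 18.05, 10.975) survives in the absolute coordinates and in the encoding, but a plain argmax over the heatmap snaps it to pixel (18, 11).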

4.5. Results on Fish Dataset

4.6. Results on Human36M Dataset

4.7. Limitations

4.8. STMD Method for the UAV Remote Sensing Image Mosaic

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Aires, A.S.; Marques Junior, A.; Zanotta, D.C.; Spigolon, A.L.D.; Veronez, M.R.; Gonzaga, L., Jr. Digital Outcrop Model Generation from Hybrid UAV and Panoramic Imaging Systems. Remote Sens. 2022, 14, 3994. [Google Scholar] [CrossRef]

- Zhang, Y.; Mei, X.; Ma, Y.; Jiang, X.; Peng, Z.; Huang, J. Hyperspectral Panoramic Image Stitching Using Robust Matching and Adaptive Bundle Adjustment. Remote Sens. 2022, 14, 4038. [Google Scholar] [CrossRef]

- Han, P.; Ma, C.; Chen, J.; Chen, L.; Bu, S.; Xu, S.; Zhao, Y.; Zhang, C.; Hagino, T. Fast Tree Detection and Counting on UAVs for Sequential Aerial Images with Generating Orthophoto Mosaicing. Remote Sens. 2022, 14, 4113. [Google Scholar] [CrossRef]

- Hwang, Y.S.; Schlüter, S.; Park, S.I.; Um, J.S. Comparative evaluation of mapping accuracy between UAV video versus photo mosaic for the scattered urban photovoltaic panel. Remote Sens. 2021, 13, 2745. [Google Scholar] [CrossRef]

- Wandt, B.; Little, J.J.; Rhodin, H. ElePose: Unsupervised 3D Human Pose Estimation by Predicting Camera Elevation and Learning Normalizing Flows on 2D Poses. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Gholami, M.; Wandt, B.; Rhodin, H.; Ward, R.; Wang, Z.J. AdaptPose: Cross-Dataset Adaptation for 3D Human Pose Estimation by Learnable Motion Generation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Mei, L.; Lai, J.; Xie, X.; Zhu, J.; Chen, J. Illumination-invariance optical flow estimation using weighted regularization transform. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 495–508. [Google Scholar] [CrossRef]

- Mei, L.; Chen, Z.; Lai, J. Geodesic-based probability propagation for efficient optical flow. Electron. Lett. 2018, 54, 758–760. [Google Scholar] [CrossRef]

- Mei, L.; Lai, J.; Feng, Z.; Xie, X. From pedestrian to group retrieval via siamese network and correlation. Neurocomputing 2020, 412, 447–460. [Google Scholar] [CrossRef]

- Mei, L.; Lai, J.; Feng, Z.; Xie, X. Open-World Group Retrieval with Ambiguity Removal: A Benchmark. In Proceedings of the 25th International Conference on Pattern Recognition, Milan, Italy, 10–15 January 2021; pp. 584–591. [Google Scholar]

- Mahmoud, N.; Collins, T.; Hostettler, A.; Soler, L.; Doignon, C.; Montiel, J.M.M. Live tracking and dense reconstruction for handheld monocular endoscopy. IEEE Trans. Med. Imaging 2018, 38, 79–89. [Google Scholar] [CrossRef]

- Zhen, W.; Hu, Y.; Liu, J.; Scherer, S. A joint optimization approach of LiDAR-camera fusion for accurate dense 3-D reconstructions. IEEE Robot. Autom. Lett. 2019, 4, 3585–3592. [Google Scholar] [CrossRef]

- Huang, Y.; Bi, H.; Li, Z.; Mao, T.; Wang, Z. STGAT: Modeling spatial-temporal interactions for human trajectory prediction. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6272–6281. [Google Scholar]

- Sheng, Z.; Xu, Y.; Xue, S.; Li, D. Graph-based spatial-temporal convolutional network for vehicle trajectory prediction in autonomous driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 17654–17665. [Google Scholar] [CrossRef]

- Saini, N.; Price, E.; Tallamraju, R.; Enficiaud, R.; Ludwig, R.; Martinovic, I.; Ahmad, A.; Black, M.J. Markerless outdoor human motion capture using multiple autonomous micro aerial vehicles. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 823–832. [Google Scholar]

- Rhodin, H.; Spörri, J.; Katircioglu, I.; Constantin, V.; Meyer, F.; Müller, E.; Salzmann, M.; Fua, P. Learning monocular 3D human pose estimation from multi-view images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8437–8446. [Google Scholar]

- Sharma, S.; Varigonda, P.T.; Bindal, P.; Sharma, A.; Jain, A. Monocular 3D human pose estimation by generation and ordinal ranking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2325–2334. [Google Scholar]

- Ci, H.; Ma, X.; Wang, C.; Wang, Y. Locally connected network for monocular 3D human pose estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1429–1442. [Google Scholar] [CrossRef]

- Graa, O.; Rekik, I. Multi-view learning-based data proliferator for boosting classification using highly imbalanced classes. J. Neurosci. Methods 2019, 327, 108344. [Google Scholar] [CrossRef]

- Ye, M.; Johns, E.; Handa, A.; Zhang, L.; Pratt, P.; Yang, G.Z. Self-Supervised Siamese Learning on Stereo Image Pairs for Depth Estimation in Robotic Surgery. arXiv 2017, arXiv:1705.08260. [Google Scholar]

- Zhuang, X.; Yang, Z.; Cordes, D. A technical review of canonical correlation analysis for neuroscience applications. Hum. Brain Mapp. 2020, 41, 3807–3833. [Google Scholar] [CrossRef]

- Wang, J.; Fang, Z.; Zhao, H. AlignNet: A Unifying Approach to Audio-Visual Alignment. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision, Snowmass, CO, USA, 1–5 March 2020; pp. 3309–3317. [Google Scholar]

- Wang, O.; Schroers, C.; Zimmer, H.; Gross, M.; Sorkine-Hornung, A. VideoSnapping: Interactive synchronization of multiple videos. ACM Trans. Graph. (TOG) 2014, 33, 1–10. [Google Scholar] [CrossRef]

- Wieschollek, P.; Freeman, I.; Lensch, H.P. Learning robust video synchronization without annotations. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 92–100. [Google Scholar]

- Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 20–36. [Google Scholar]

- Wu, X.; Wu, Z.; Zhang, Y.; Ju, L.; Wang, S. Multi-Video Temporal Synchronization by Matching Pose Features of Shared Moving Subjects. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2729–2738. [Google Scholar]

- Du, Y.; Wang, W.; Wang, L. Hierarchical recurrent neural network for skeleton based action recognition. In Proceedings of the 2015 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1110–1118. [Google Scholar]

- Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. NTU RGB+D: A large scale dataset for 3D human activity analysis. In Proceedings of the 2016 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019. [Google Scholar]

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the 2017 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299. [Google Scholar]

- Cao, Z.; Martinez, G.H.; Simon, T.; Wei, S.E.; Sheikh, Y.A. OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186. [Google Scholar] [CrossRef]

- Lorenz, D.; Bereska, L.; Milbich, T.; Ommer, B. Unsupervised part-based disentangling of object shape and appearance. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10955–10964. [Google Scholar]

- Xu, X.; Dunn, E. Discrete Laplace Operator Estimation for Dynamic 3D Reconstruction. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1548–1557. [Google Scholar]

- Korbar, B. Co-Training of Audio and Video Representations from Self-Supervised Temporal Synchronization. Master’s Thesis, Dartmouth College, Hanover, NH, USA, 2018. [Google Scholar]

- Wang, X.; Shi, J.; Park, H.S.; Wang, Q. Motion-based temporal alignment of independently moving cameras. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 2344–2354. [Google Scholar] [CrossRef]

- Huo, S.; Liu, D.; Li, B.; Ma, S.; Wu, F.; Gao, W. Deep network-based frame extrapolation with reference frame alignment. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 1178–1192. [Google Scholar] [CrossRef]

- Purushwalkam, S.; Ye, T.; Gupta, S.; Gupta, A. Aligning videos in space and time. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 262–278. [Google Scholar]

- Dwibedi, D.; Aytar, Y.; Tompson, J.; Sermanet, P.; Zisserman, A. Temporal cycle-consistency learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1801–1810. [Google Scholar]

- Sermanet, P.; Lynch, C.; Chebotar, Y.; Hsu, J.; Jang, E.; Schaal, S.; Levine, S. Time-contrastive networks: Self-supervised learning from video. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 1134–1141. [Google Scholar]

- Andrew, G.; Arora, R.; Bilmes, J.; Livescu, K. Deep canonical correlation analysis. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1247–1255. [Google Scholar]

- Revaud, J.; Douze, M.; Schmid, C.; Jégou, H. Event retrieval in large video collections with circulant temporal encoding. In Proceedings of the 2013 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2459–2466. [Google Scholar]

- Wu, Y.; Ji, Q. Robust facial landmark detection under significant head poses and occlusion. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3658–3666. [Google Scholar]

- Zhu, S.; Li, C.; Change Loy, C.; Tang, X. Face alignment by coarse-to-fine shape searching. In Proceedings of the 2015 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4998–5006. [Google Scholar]

- Zhang, Z.; Luo, P.; Loy, C.C.; Tang, X. Learning deep representation for face alignment with auxiliary attributes. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 918–930. [Google Scholar] [CrossRef]

- Ranjan, R.; Patel, V.M.; Chellappa, R. HyperFace: A deep multi-task learning framework for face detection, landmark localization, pose estimation, and gender recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 121–135. [Google Scholar] [CrossRef]

- Tompson, J.; Goroshin, R.; Jain, A.; LeCun, Y.; Bregler, C. Efficient object localization using convolutional networks. In Proceedings of the 2015 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 648–656. [Google Scholar]

- Papandreou, G.; Zhu, T.; Chen, L.C.; Gidaris, S.; Tompson, J.; Murphy, K. PersonLab: Person pose estimation and instance segmentation with a bottom-up, part-based, geometric embedding model. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 269–286. [Google Scholar]

- Ning, G.; Pei, J.; Huang, H. LightTrack: A generic framework for online top-down human pose tracking. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 1034–1035. [Google Scholar]

- Burghardt, T.; Ćalić, J. Analysing animal behaviour in wildlife videos using face detection and tracking. IEEE Proc.-Vision Image Signal Process. 2006, 153, 305–312. [Google Scholar] [CrossRef]

- Manning, T.; Somarriba, M.; Roehe, R.; Turner, S.; Wang, H.; Zheng, H.; Kelly, B.; Lynch, J.; Walsh, P. Automated Object Tracking for Animal Behaviour Studies. In Proceedings of the 2019 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), San Diego, CA, USA, 18–21 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1876–1883. [Google Scholar]

- Bonneau, M.; Vayssade, J.A.; Troupe, W.; Arquet, R. Outdoor animal tracking combining neural network and time-lapse cameras. Comput. Electron. Agric. 2020, 168, 105150. [Google Scholar] [CrossRef]

- Vo, M.; Yumer, E.; Sunkavalli, K.; Hadap, S.; Sheikh, Y.; Narasimhan, S.G. Self-supervised multi-view person association and its applications. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2794–2808. [Google Scholar] [CrossRef] [PubMed]

- Jenni, S.; Favaro, P. Self-supervised feature learning by learning to spot artifacts. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2733–2742. [Google Scholar]

- Zhang, R.; Isola, P.; Efros, A.A. Colorful image colorization. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 649–666. [Google Scholar]

- Noroozi, M.; Favaro, P. Unsupervised learning of visual representations by solving jigsaw puzzles. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 69–84. [Google Scholar]

- Pathak, D.; Krahenbuhl, P.; Donahue, J.; Darrell, T.; Efros, A.A. Context encoders: Feature learning by inpainting. In Proceedings of the 2016 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2536–2544. [Google Scholar]

- Wang, X.; He, K.; Gupta, A. Transitive invariance for self-supervised visual representation learning. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Beijing, China, 17–20 September 2017; pp. 1329–1338. [Google Scholar]

- Zhao, R.; Xiong, R.; Ding, Z.; Fan, X.; Zhang, J.; Huang, T. MRDFlow: Unsupervised Optical Flow Estimation Network with Multi-Scale Recurrent Decoder. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4639–4652. [Google Scholar] [CrossRef]

- Sumer, O.; Dencker, T.; Ommer, B. Self-supervised learning of pose embeddings from spatiotemporal relations in videos. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Beijing, China, 17–20 September 2017; pp. 4298–4307. [Google Scholar]

- Gomez, L.; Patel, Y.; Rusiñol, M.; Karatzas, D.; Jawahar, C. Self-supervised learning of visual features through embedding images into text topic spaces. In Proceedings of the 2017 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4230–4239. [Google Scholar]

- Owens, A.; Wu, J.; McDermott, J.H.; Freeman, W.T.; Torralba, A. Ambient sound provides supervision for visual learning. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 801–816. [Google Scholar]

- Crawford, E.; Pineau, J. Spatially invariant unsupervised object detection with convolutional neural networks. In Proceedings of the 2019 AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 3412–3420. [Google Scholar]

- Rhodin, H.; Constantin, V.; Katircioglu, I.; Salzmann, M.; Fua, P. Neural scene decomposition for multi-person motion capture. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7703–7713. [Google Scholar]

- Katircioglu, I.; Rhodin, H.; Constantin, V.; Spörri, J.; Salzmann, M.; Fua, P. Self-supervised Training of Proposal-based Segmentation via Background Prediction. arXiv 2019, arXiv:1907.08051. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the 2015 International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1325–1339. [Google Scholar] [CrossRef]

- Ionescu, C.; Li, F.; Sminchisescu, C. Latent structured models for human pose estimation. In Proceedings of the 2011 IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 2220–2227. [Google Scholar]

- Zhao, R.; Ouyang, W.; Wang, X. Unsupervised salience learning for person re-identification. In Proceedings of the 2013 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3586–3593. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Baraldi, L.; Douze, M.; Cucchiara, R.; Jégou, H. LAMV: Learning to align and match videos with kernelized temporal layers. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7804–7813. [Google Scholar]

- Sitzmann, V.; Martel, J.; Bergman, A.; Lindell, D.; Wetzstein, G. Implicit neural representations with periodic activation functions. Adv. Neural Inf. Process. Syst. 2020, 33, 7462–7473. [Google Scholar]

| Method | test-d0 (%) | test-d1 (%) | test-d2 (%) | test-d3 (%) | SynError ↓ |
|---|---|---|---|---|---|
| SynNet+OpenPose [26] | 10.9 | 31.2 | 50.2 | 68.8 | 0.1389 |
| SynNet+ST | 21.8 | 36.8 | 54.6 | 67.9 | 0.0782 |
| LAMV (ds = 1) [71] | 30.0 | 50.1 | 70.2 | 90.2 | 0.0638 |
| PE [72] | 34.3 | 67.7 | 72.1 | 79.6 | 0.0672 |
| LAMV (ds = 2) | 41.7 | 58.5 | 75.2 | 92.0 | 0.1061 |
| LAMV (ds = 3) | 50.1 | 64.5 | 78.9 | 93.1 | 0.1362 |
| LAMV (ds = 4) | 56.4 | 69.0 | 81.5 | 94.1 | 0.1586 |
| TCC (ds = 1) [37] | 67.1 | 81.8 | 89.6 | 92.9 | 0.0305 |
| GTpoint+MD | 77.1 | 99.0 | 99.4 | 99.5 | 0.0098 |
| TCC (ds = 2) | 80.9 | 88.1 | 91.4 | 92.8 | 0.0505 |
| STMD+MSE | 86.1 | 99.0 | 99.6 | 99.7 | 0.0059 |
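Several claims in the discussion of Table 1 (Cube&Sphere) can be checked directly against the table above. These include the 10.9- and 9.0-point test-d0 gains, the downsampling trend, and STMD+MSE ranking first on both test-d0 and SynError. The values below are copied from that table. The dictionary and the helper `gain` are illustrative only.

```python
# test-d0 accuracy (%) and SynError, copied from Table 1 (Cube&Sphere), in table order.
table1 = {
    "SynNet+OpenPose": (10.9, 0.1389),
    "SynNet+ST": (21.8, 0.0782),
    "LAMV (ds = 1)": (30.0, 0.0638),
    "PE": (34.3, 0.0672),
    "LAMV (ds = 2)": (41.7, 0.1061),
    "LAMV (ds = 3)": (50.1, 0.1362),
    "LAMV (ds = 4)": (56.4, 0.1586),
    "TCC (ds = 1)": (67.1, 0.0305),
    "GTpoint+MD": (77.1, 0.0098),
    "TCC (ds = 2)": (80.9, 0.0505),
    "STMD+MSE": (86.1, 0.0059),
}


def gain(better, worse):
    """Difference in test-d0 accuracy between two rows, in percentage points."""
    return round(table1[better][0] - table1[worse][0], 1)


print(gain("SynNet+ST", "SynNet+OpenPose"))  # 10.9
print(gain("STMD+MSE", "GTpoint+MD"))        # 9.0

# Downsampling: accuracy and SynError both rise with ds (lower SynError is better).
for base in ("LAMV", "TCC"):
    rows = [v for k, v in table1.items() if k.startswith(base)]  # ordered by ds
    accs = [acc for acc, _ in rows]
    errs = [err for _, err in rows]
    print(base, accs == sorted(accs), errs == sorted(errs))  # True True for both
```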

| Method | test-d0 (%) | test-d1 (%) | test-d2 (%) | test-d3 (%) | SynError ↓ |
|---|---|---|---|---|---|
| TCC (ds = 1) [37] | 16.4 | 25.2 | 34.1 | 45.1 | 0.1042 |
| STMD (1) | 18.4 | 33.9 | 38.5 | 47.7 | 0.1581 |
| LAMV (ds = 1) [71] | 21.0 | 35.1 | 49.5 | 64.1 | 0.0864 |
| TCC (ds = 2) | 28.3 | 36.7 | 47.4 | 58.9 | 0.1646 |
| LAMV (ds = 2) | 30.7 | 43.2 | 56.1 | 68.5 | 0.1512 |
| STMD (50) | 34.9 | 64.2 | 64.2 | 70.6 | 0.1248 |
| TCC (ds = 3) | 35.0 | 47.8 | 60.2 | 73.0 | 0.1785 |
| LAMV (ds = 3) | 38.4 | 49.8 | 60.8 | 71.9 | 0.2013 |
| LAMV (ds = 4) | 44.8 | 54.7 | 64.7 | 74.8 | 0.2410 |
| TCC (ds = 4) | 48.7 | 58.1 | 69.4 | 79.7 | 0.1766 |
| STMD (100) | 78.9 | 78.9 | 88.1 | 91.7 | 0.0229 |
| STMD (150) | 88.1 | 92.4 | 92.6 | 92.7 | 0.0119 |
| STMD (190) | 94.5 | 98.1 | 98.3 | 99.1 | 0.0080 |

| Method | test-d0 (%) | test-d1 (%) | test-d2 (%) | test-d3 (%) | SynError ↓ |
|---|---|---|---|---|---|
| SynNet [26] | 14.5 | 23.5 | 38.0 | 47.5 | 0.3038 |
| PE [72] | 20.9 | 40.5 | 55.7 | 66.0 | 0.2055 |
| TCC (ds = 1) [37] | 28.4 | 40.2 | 53.7 | 63.4 | 0.3154 |
| PE (sub) | 31.1 | 45.3 | 59.0 | 73.3 | 0.2000 |
| TCC (ds = 2) | 38.3 | 50.0 | 59.6 | 71.0 | 0.3400 |
| TCC (ds = 3) | 45.6 | 54.4 | 65.3 | 72.5 | 0.3801 |
| TCC (ds = 4) | 51.0 | 61.4 | 68.0 | 75.6 | 1.6402 |
| LAMV (ds = 1) [71] | 54.7 | 60.7 | 66.2 | 69.9 | 0.2226 |
| LAMV (ds = 4) | 62.9 | 64.9 | 66.6 | 69.7 | 1.1553 |
| LAMV (ds = 2) | 66.8 | 70.9 | 74.3 | 77.2 | 0.3763 |
| LAMV (ds = 3) | 67.9 | 70.0 | 72.0 | 73.7 | 0.7385 |
| STMD | 78.3 | 82.6 | 85.7 | 86.3 | 0.0963 |
| STMD (sub) | 88.2 | 93.1 | 93.3 | 94.4 | 0.0485 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mei, L.; He, Y.; Fishani, F.J.; Yu, Y.; Zhang, L.; Rhodin, H. Learning Domain-Adaptive Landmark Detection-Based Self-Supervised Video Synchronization for Remote Sensing Panorama. Remote Sens. 2023, 15, 953. https://doi.org/10.3390/rs15040953
