Multi-Year Crop Type Mapping Using Sentinel-2 Imagery and Deep Semantic Segmentation Algorithm in the Hetao Irrigation District in China

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Image Capture and Processing for Sentinel-2

2.3. Methods

2.3.1. Creation of the Global Separability Index

2.3.2. Data Set Construction Method

2.3.3. U-Net Classification Algorithm

2.3.4. U-Net++ Classification Algorithm

2.3.5. DeepLabv3+ Classification Algorithm

2.3.6. SegFormer Classification Algorithm

2.3.7. Hyperparameter Settings

2.3.8. Precision Evaluation

3. Results

3.1. Feature Screening and Data Set Construction Results

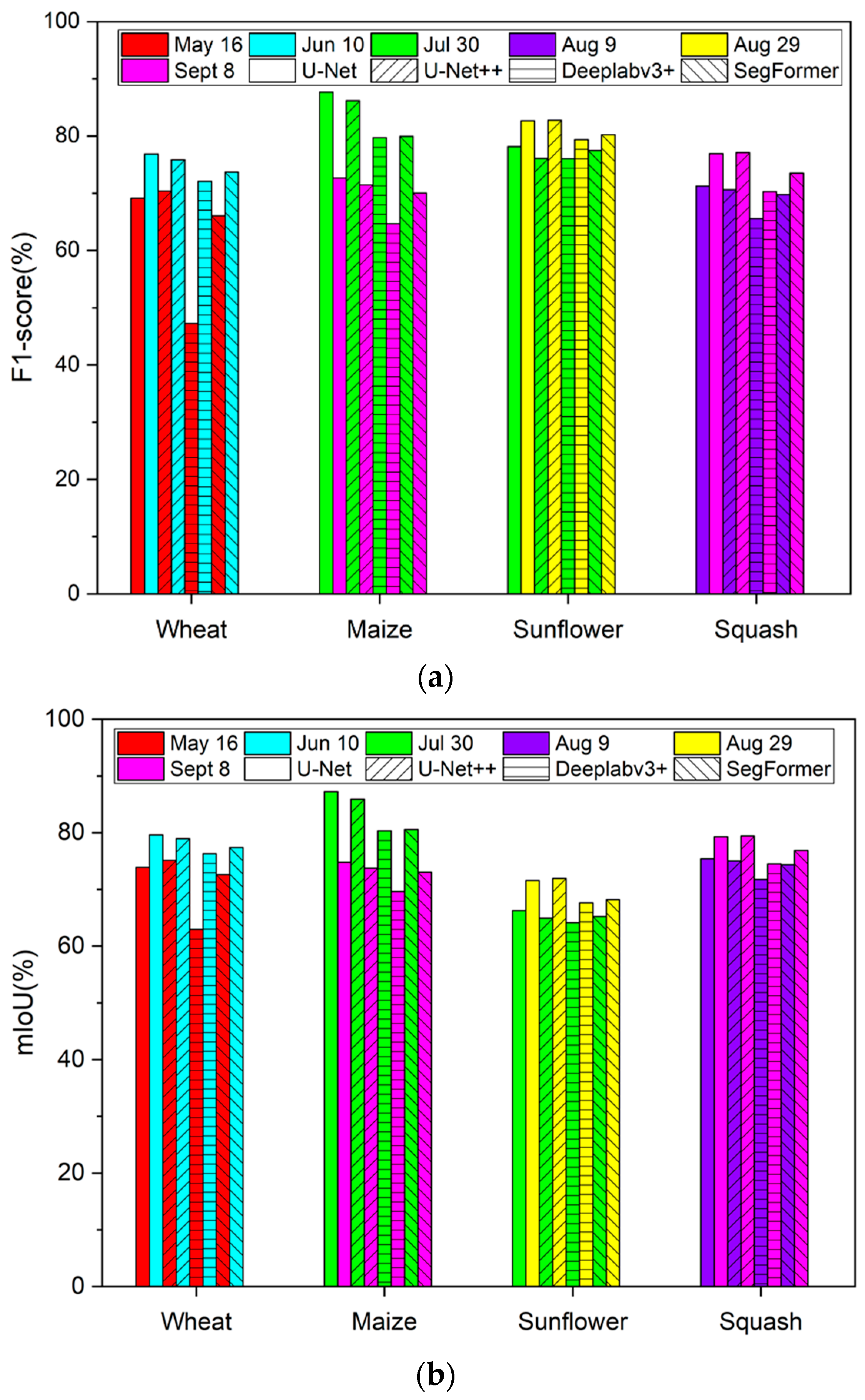

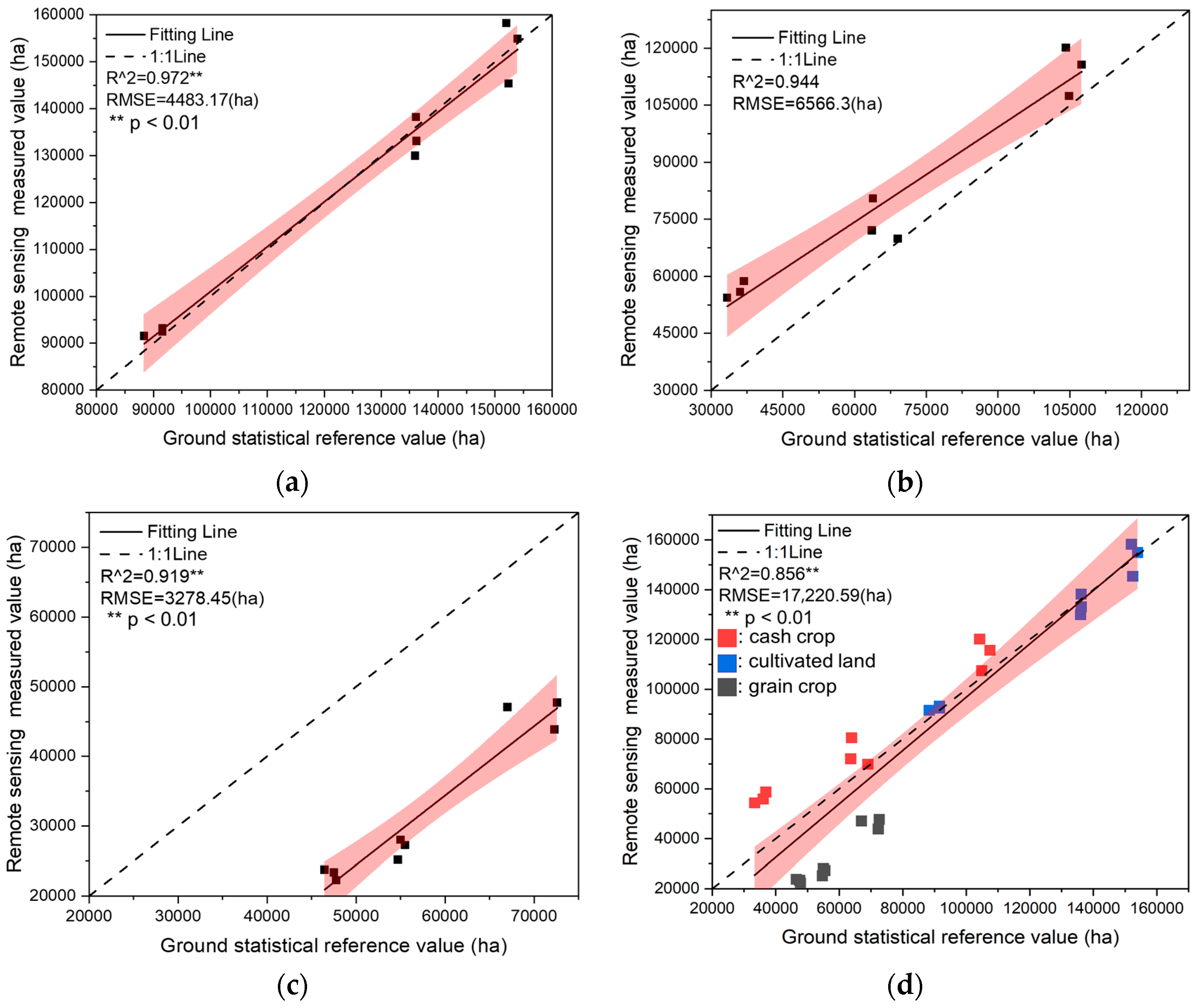

3.2. Mapping Accuracy of Different Algorithms in 2021

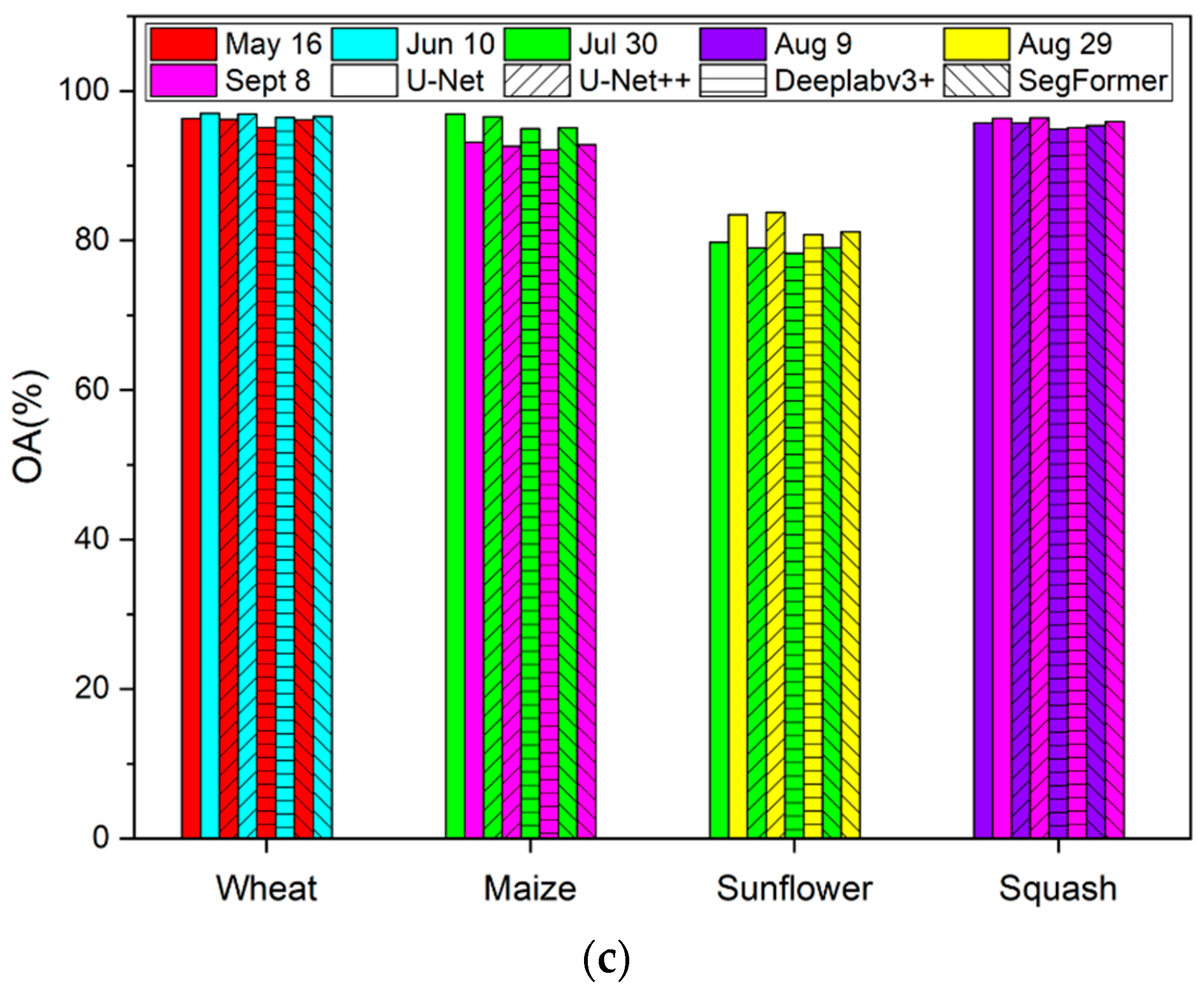

3.3. Mapping Results of the Model in 2020 and 2017–2019

4. Discussion

4.1. Advantages of Constructing the Mapping Model in the Key Period

4.2. Analysis of Adaptability of Different Algorithms to Sentinel-2 Mapping

4.3. Applicability Analysis of the Model to Crop Mapping over Multiple Years

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Distribution of Satellite Images

| Year | Ⅰ * | Ⅱ * | Ⅲ * | Ⅳ * |
|---|---|---|---|---|
| 2021 | Apr 16, May 1, May 16, May 31, Jun 5, Jun 10, Jun 25, Jul 5, Jul 10, Jul 20, Jul 30, Aug 9, Aug 19, Aug 29, Sept 8 | Same as Ⅰ | Same as Ⅰ | Same as Ⅰ |
| 2020 | May 31, Sept 18 | May 31, Sept 18 | Apr 11, May 1, May 16, May 31, Jun 10, Jun 25, Jul 5, Jul 15, Aug 9, Sept 3 | May 31, Sept 18 |
| 2019 | May 30, Aug 18, Sept 2 | Jun 1, Aug 18, Sept 2 | May 22, Aug 15, Sept 4 | May 27, Aug 15, Sept 4 |
| 2018 | May 22, Aug 5, Sept 9 | May 22, Aug 5, Sept 9 | May 22, Aug 5, Sept 9 | May 22, Aug 5, Sept 9 |
| 2017 | May 30, Aug 5, Sept 7 | May 30, Aug 5, Sept 27 | May 27, Aug 5, Sept 24 | May 27, Aug 5, Sept 24 |

| Bands | Level-1C, 30 May, Mean * | Level-1C, 30 May, Std * | Level-1C, 10 Jun, Mean * | Level-1C, 10 Jun, Std * | Level-2A, 30 May, Mean * | Level-2A, 30 May, Std * | Level-2A, 10 Jun, Mean * | Level-2A, 10 Jun, Std * |
|---|---|---|---|---|---|---|---|---|
| B1 | 1751 | 165 | 1759 | 146 | 1139 | 275 | 1146 | 269 |
| B2 | 1897 | 327 | 1849 | 298 | 1642 | 491 | 1678 | 502 |
| B3 | 1888 | 363 | 1981 | 337 | 2093 | 511 | 2144 | 522 |
| B4 | 2344 | 430 | 2409 | 403 | 2639 | 530 | 2681 | 537 |
| B5 | 2541 | 362 | 2517 | 331 | 2781 | 452 | 2805 | 443 |
| B6 | 2801 | 277 | 2845 | 256 | 3014 | 326 | 3053 | 318 |
| B7 | 3065 | 268 | 3074 | 244 | 3214 | 302 | 3220 | 290 |
| B8 | 3052 | 244 | 3063 | 229 | 3422 | 291 | 3437 | 286 |
| B8A | 3279 | 259 | 3239 | 236 | 3342 | 290 | 3388 | 280 |
| B9 | 1550 | 109 | 1659 | 144 | 3279 | 228 | 3304 | 214 |
| B11 | 3839 | 493 | 3772 | 453 | 4164 | 544 | 4130 | 510 |
| B12 | 3220 | 438 | 3180 | 422 | 3655 | 501 | 3607 | 484 |
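The table above compares per-band means and standard deviations of the Level-1C (top-of-atmosphere) and Level-2A (bottom-of-atmosphere) products on two dates. A minimal sketch of how such statistics could be computed is given below, assuming each product has already been read into a NumPy array of shape (bands, rows, cols) holding the scaled integer values (reflectance × 10,000), with 0 as the no-data value. The band order, the function names `band_stats` and `mean_shift`, and the no-data convention are illustrative assumptions, not the authors' code.

```python
import numpy as np

# Assumed band order, following the rows of the table (B10 is not listed there).
BANDS = ["B1", "B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B9", "B11", "B12"]


def band_stats(cube, nodata=0):
    """Return {band: (mean, std)} for a (bands, rows, cols) array, skipping no-data pixels."""
    if cube.shape[0] != len(BANDS):
        raise ValueError(f"expected {len(BANDS)} bands, got {cube.shape[0]}")
    stats = {}
    for name, band in zip(BANDS, cube):
        valid = band[band != nodata].astype(np.float64)  # drop no-data pixels
        stats[name] = (float(valid.mean()), float(valid.std()))
    return stats


def mean_shift(stats_l1c, stats_l2a):
    """Per-band difference of means (Level-2A minus Level-1C), in the same scaled units."""
    return {b: stats_l2a[b][0] - stats_l1c[b][0] for b in BANDS}
```

Applied to the two products over the same area and date, `mean_shift` summarizes how atmospheric correction moves each band; in the table, for example, B1 and B2 decrease while B3 to B12 increase.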

| Year | SA | Wheat (%) | Maize (%) | Sunflower (%) | Squash (%) | Others (%) |
|---|---|---|---|---|---|---|
| 2021 | 1 | 0.37 | 10.49 | 45.53 | 4.74 | 38.86 |
| | 2 | 0.67 | 8.51 | 56.69 | 5.54 | 28.59 |
| | 3 | 1.13 | 26.61 | 40.22 | 7.78 | 24.27 |
| | 4 | 1.27 | 22.41 | 38.99 | 6.32 | 31.00 |
| | 5 | 4.23 | 40.43 | 26.24 | 1.84 | 27.26 |
| | 6 | 1.41 | 28.39 | 27.67 | 1.94 | 40.58 |
| | 7 | 2.41 | 16.62 | 26.71 | 6.61 | 47.65 |
| | 8 | 1.47 | 13.12 | 46.34 | 8.03 | 31.04 |
| | 9 | 0.39 | 24.94 | 45.01 | 4.45 | 25.21 |
| 2020 | 1 | 3.23 | 11.00 | 31.88 | 3.37 | 50.52 |
| | 2 | 2.99 | 20.57 | 33.98 | 1.03 | 41.42 |

| Crops | Key Period | Selected Features | Data Sets |
|---|---|---|---|
| Wheat | May 16, May 31, Jun 5, Jun 10, Jun 25 | B2, B3, B4, B5, B11, B12 | 14,000 |
| Maize | Jul 30, Aug 9, Aug 19, Aug 29, Sept 8 | B2, B3, B4, B5, B11, B12 | 14,000 |
| Sunflower | Jul 30, Aug 9, Aug 19, Aug 29 | B3, B4, B6, B7, B8, B8A | 11,200 |
| Squash | Aug 9, Aug 19, Aug 29, Sept 8 | B2, B3, B4, B5, B11, B12 | 11,200 |
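The table pairs each crop with the image dates of its key period and six selected bands. The sample counts are consistent with 2,800 samples per key-period date (5 × 2,800 = 14,000; 4 × 2,800 = 11,200). This suggests, although the table does not state it, that each date contributes its own six-band patches rather than being stacked into a single multi-temporal input. The sketch below builds a data set under that assumption. The per-date scene layout (`{date: {band: 2-D array}}`), the single label mask per crop, the non-overlapping tiling, the default patch size of 256, and all function names are hypothetical.

```python
import numpy as np

# Key-period dates (2021) and selected bands per crop, copied from the table above.
KEY_PERIODS = {
    "wheat": (["May 16", "May 31", "Jun 5", "Jun 10", "Jun 25"],
              ["B2", "B3", "B4", "B5", "B11", "B12"]),
    "maize": (["Jul 30", "Aug 9", "Aug 19", "Aug 29", "Sept 8"],
              ["B2", "B3", "B4", "B5", "B11", "B12"]),
    "sunflower": (["Jul 30", "Aug 9", "Aug 19", "Aug 29"],
                  ["B3", "B4", "B6", "B7", "B8", "B8A"]),
    "squash": (["Aug 9", "Aug 19", "Aug 29", "Sept 8"],
               ["B2", "B3", "B4", "B5", "B11", "B12"]),
}


def select_bands(scene, bands):
    """Stack the named bands of one scene ({band: 2-D array}) into a (bands, rows, cols) array."""
    return np.stack([scene[b] for b in bands])


def cut_patches(image, mask, size):
    """Cut non-overlapping size x size patches from a (C, H, W) image and its (H, W) mask."""
    _, h, w = image.shape
    return [
        (image[:, r:r + size, c:c + size], mask[r:r + size, c:c + size])
        for r in range(0, h - size + 1, size)
        for c in range(0, w - size + 1, size)
    ]


def build_dataset(scenes, mask, crop, size=256):
    """Pool single-date patches over a crop's key period (hypothetical data layout).

    scenes: {date: {band: 2-D array}}; mask: (H, W) label array for the target crop.
    """
    dates, bands = KEY_PERIODS[crop]
    samples = []
    for date in dates:
        samples.extend(cut_patches(select_bands(scenes[date], bands), mask, size))
    return samples
```

For example, with 64 × 64 scenes and 32-pixel patches, each sunflower date yields four patches, so `build_dataset` returns 16 samples over the four key-period dates. Pooling dates in this way multiplies the number of training samples by the length of the key period while keeping each input at six channels.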

| Crops | Date | Area | F1-score (%) | mIoU (%) | OA (%) | Crops | Date | Area | F1-score (%) | mIoU (%) | OA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Wheat | May 16 | 1 | 71.78 | 66.075 | 97.25 | Maize | Aug 9 | 1 | 73.69 | 75.615 | 93.53 |
| | | 2 | 69.78 | 69.96 | 97.31 | | | 2 | 83.8 | 81.63 | 92.83 |
| | Jun 25 | 1 | 68.26 | 64.35 | 96.93 | | Sept 3 | 1 | 68.92 | 66.48 | 85.14 |
| | | 2 | 79.49 | 82.065 | 98.19 | | | 2 | 71.72 | 69.555 | 86.15 |
| Sunflower | Aug 9 | 1 | 70.52 | 71.68 | 77.33 | Squash | Aug 9 | 1 | 69.61 | 53.595 | 96.32 |
| | | 2 | 76.75 | 75.12 | 82.79 | | | 2 | 71.05 | 80.075 | 98.86 |
| | Sept 3 | 1 | 68.52 | 65.32 | 74.74 | | Sept 3 | 1 | 68.69 | 63.285 | 96.99 |
| | | 2 | 70.32 | 73.75 | 79.69 | | | 2 | 71.31 | 87.61 | 99.27 |
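The accuracy table reports F1-score, mIoU, and overall accuracy (OA) for each crop, date, and area. The formulas are not spelled out here, so the sketch below uses the standard definitions. It assumes binary masks (target crop = 1, everything else = 0), F1 computed for the crop class, and mIoU averaged over the crop and background classes. The paper's exact averaging scheme may differ, and the function names are illustrative.

```python
import numpy as np


def confusion(pred, truth):
    """Return (tp, fp, fn, tn) pixel counts for two binary masks of equal shape."""
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn


def scores(pred, truth):
    """F1 of the crop class, mIoU over crop and background, and OA, all in percent."""
    tp, fp, fn, tn = confusion(pred, truth)
    f1_den = 2 * tp + fp + fn
    f1 = 2 * tp / f1_den if f1_den else 1.0  # no crop in either mask counts as perfect
    iou_crop = tp / (tp + fp + fn) if tp + fp + fn else 1.0
    iou_bg = tn / (tn + fp + fn) if tn + fp + fn else 1.0
    oa = (tp + tn) / (tp + fp + fn + tn)
    return {"F1": 100 * f1, "mIoU": 100 * (iou_crop + iou_bg) / 2, "OA": 100 * oa}
```

OA counts correctly classified background pixels as well, so a crop that covers only a small share of an area can have a high OA alongside a much lower F1-score, as with squash in the table.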

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, G.; Han, W.; Dong, Y.; Zhai, X.; Huang, S.; Ma, W.; Cui, X.; Wang, Y. Multi-Year Crop Type Mapping Using Sentinel-2 Imagery and Deep Semantic Segmentation Algorithm in the Hetao Irrigation District in China. Remote Sens. 2023, 15, 875. https://doi.org/10.3390/rs15040875
