Algorithm–Hardware Co-Optimization and Deployment Method for Field-Programmable Gate-Array-Based Convolutional Neural Network Remote Sensing Image Processing

Abstract

1. Introduction

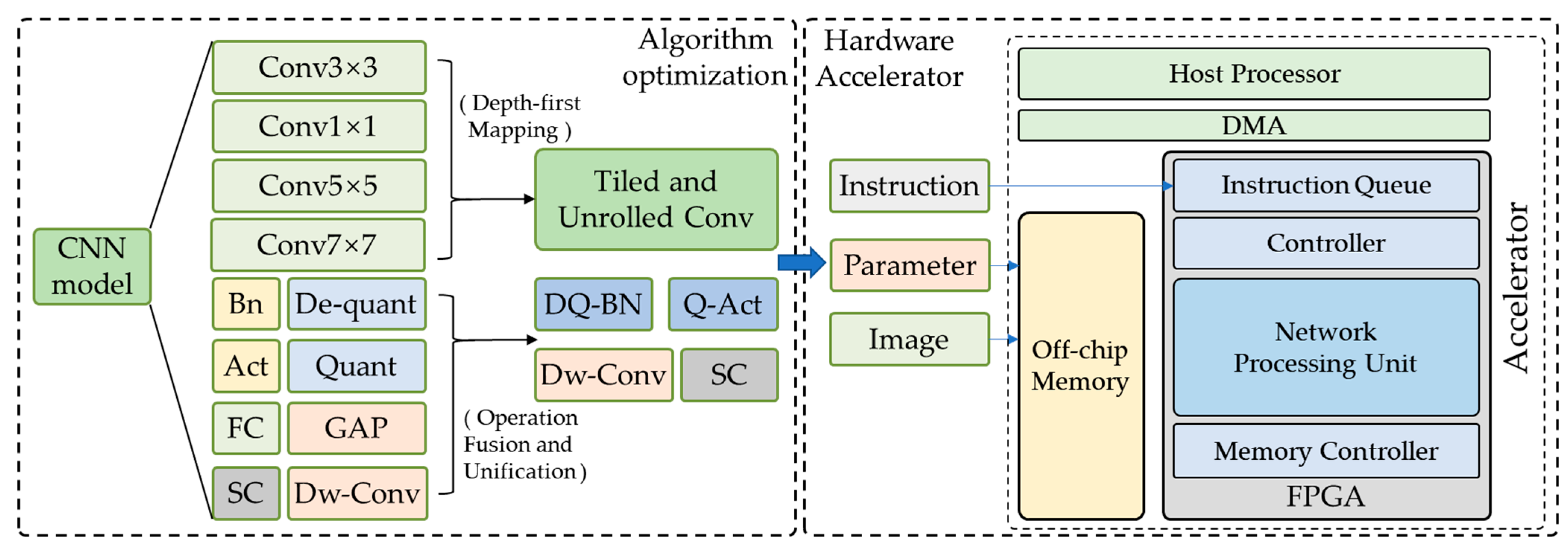

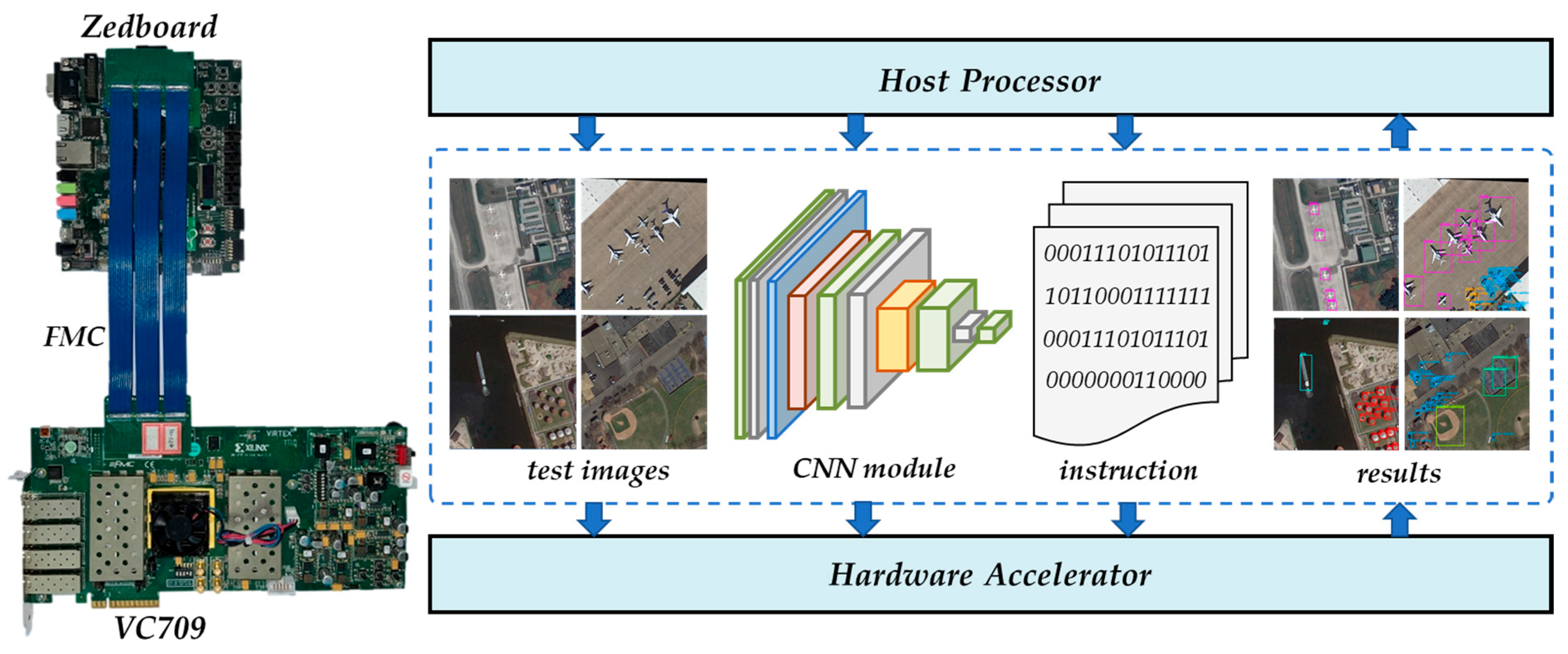

- An algorithm–hardware co-optimization and deployment method for FPGA-based CNN remote sensing image processing is proposed, including a series of hardware-centric model optimization techniques and a versatile FPGA-based CNN accelerator architecture.

- A series of hardware-centric model optimization techniques is proposed, including operation fusion and unification, as well as loop tiling and loop unrolling based on the depth-first mapping technique. These techniques reduce the model's hardware overhead and consequently improve the energy efficiency of the accelerator.

- An FPGA-based CNN accelerator architecture is proposed, comprising a highly parallel and configurable network processing unit. This architecture is specifically designed to accelerate the optimized CNN model effectively. Additionally, a multi-level storage structure is incorporated to enhance data access efficiency for tiled and unrolled models.
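
The operation fusion named in the contributions above can be illustrated with a small sketch. It assumes that one fused pair is a convolution followed by batch normalization, which is a common choice but is not confirmed by the text available here. The function names `fold_batch_norm`, `dot_layer`, and `batch_norm` are hypothetical, and a single output pixel is modelled as a dot product per output channel to keep the example short.

```python
import math


def fold_batch_norm(weights, biases, gamma, beta, mean, var, eps=1e-5):
    """Fold per-channel BN parameters into the preceding layer (assumed fusion).

    weights[o] is the flat list of weights for output channel o.
    Returns fused weights and biases so that BN is no longer a separate step.
    """
    fused_w, fused_b = [], []
    for o in range(len(weights)):
        scale = gamma[o] / math.sqrt(var[o] + eps)
        fused_w.append([w * scale for w in weights[o]])
        fused_b.append((biases[o] - mean[o]) * scale + beta[o])
    return fused_w, fused_b


def dot_layer(x, weights, biases):
    """One output pixel of a convolution: y[o] = sum_i w[o][i] * x[i] + b[o]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time BN applied per output channel."""
    return [g * (v - m) / math.sqrt(s + eps) + bt
            for v, g, bt, m, s in zip(y, gamma, beta, mean, var)]
```

With the folded parameters, `dot_layer(x, fused_w, fused_b)` gives the same result as `batch_norm(dot_layer(x, weights, biases), ...)` up to floating-point rounding, so the hardware needs only one multiply-accumulate pass per layer.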

2. Background

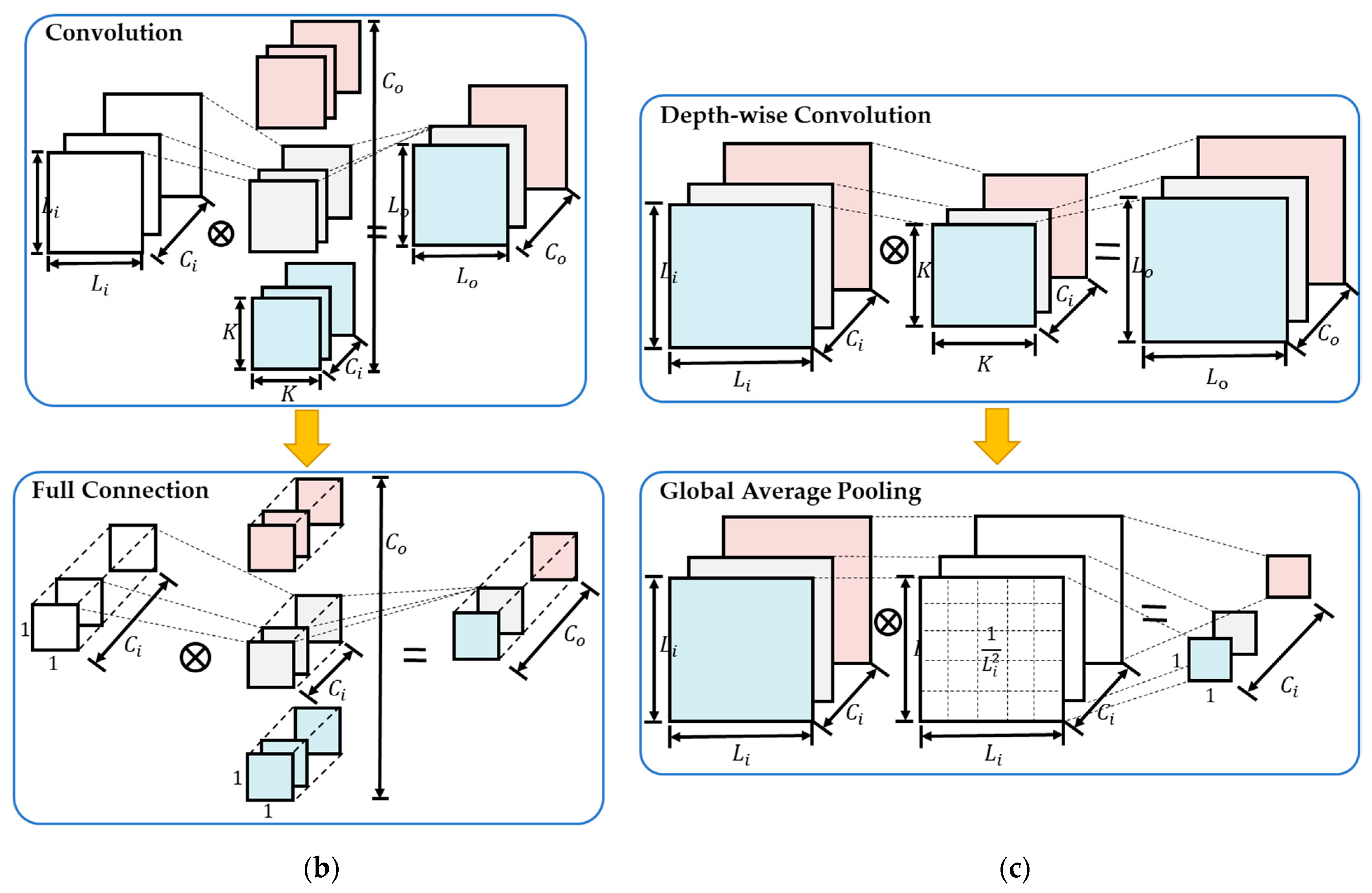

2.1. The Composition of CNNs

2.1.1. Standard Convolution

2.1.2. Depth-Wise Convolution

2.1.3. Batch Normalization

2.1.4. Full Connection and Global Average Pooling

2.1.5. Activation Function

2.1.6. Shortcut

2.2. Quantization

3. Algorithm–Hardware Co-Optimization Method for CNN Models

3.1. Hardware-Centric Optimization

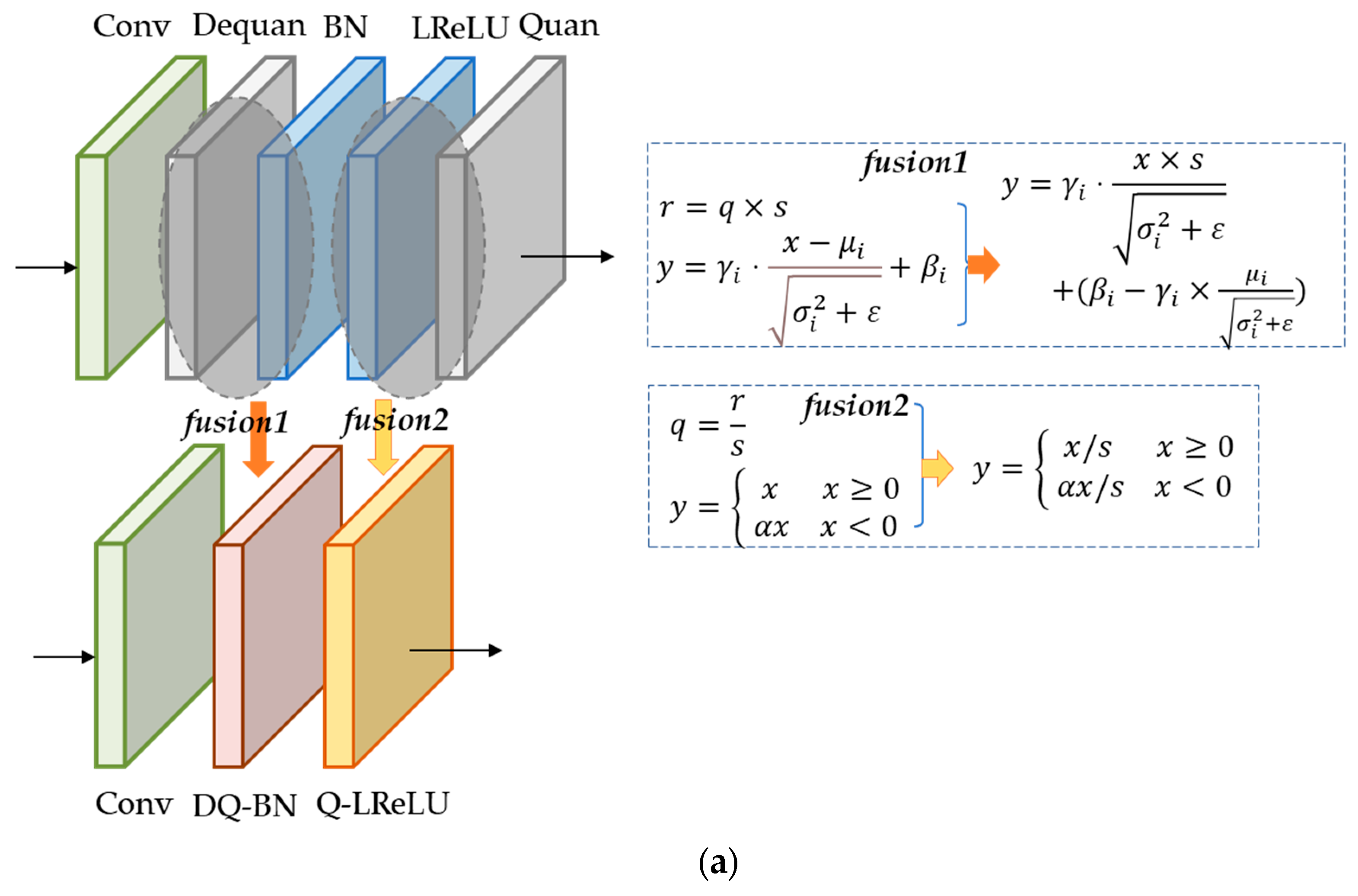

3.1.1. Operation Fusion and Unification

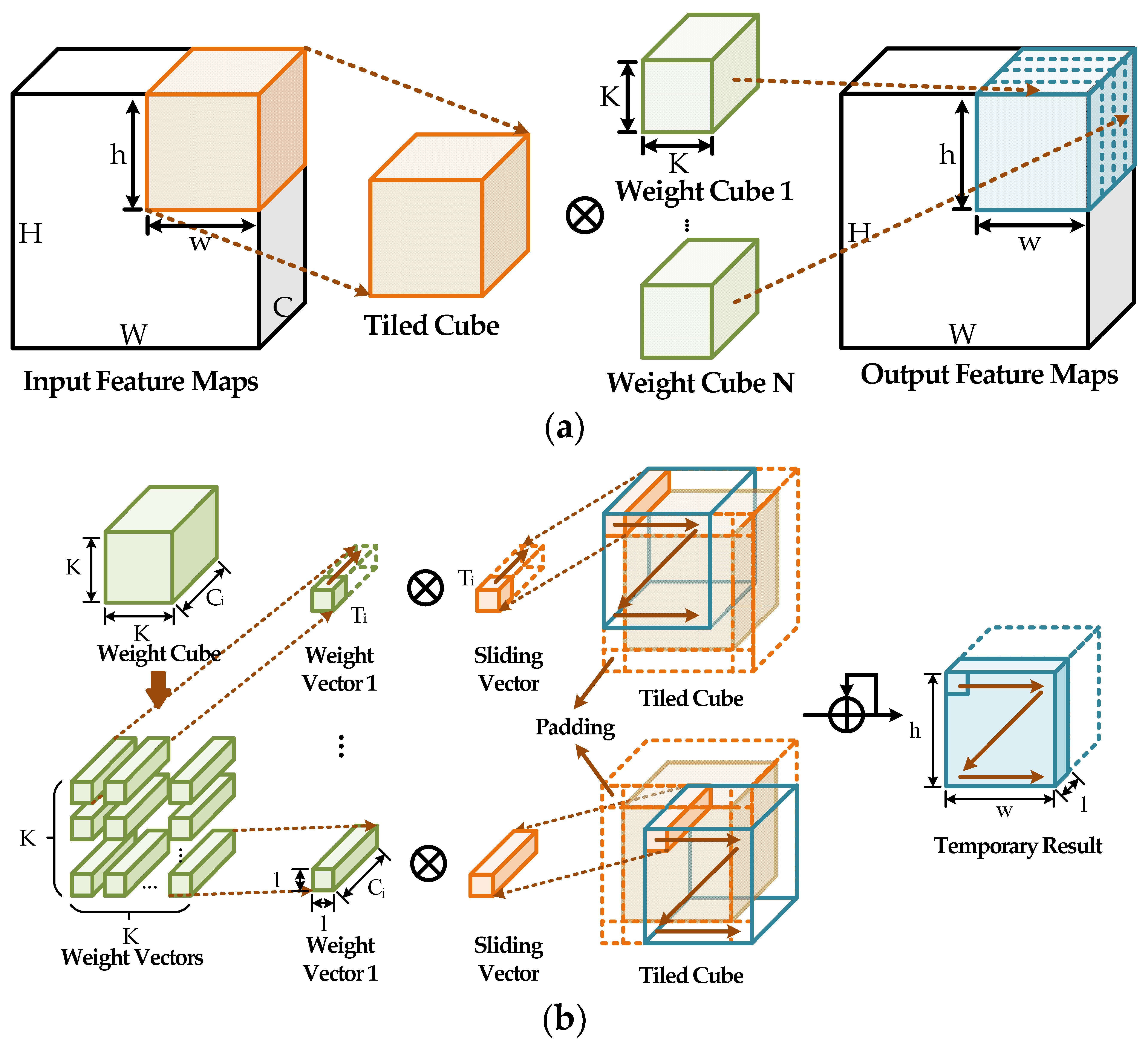

3.1.2. Depth-First Mapping Technique

| Algorithm 1: Standard convolution loops |
|---|
| for ; ; ; do |
| for ; ; ; do |
| for ; ; ; do |
| for ; ; ; do |
| for ; ; ; do |
| for ; ; ; do |
| out_fmap[] += in_fmap[] * weight[] |
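
The loop bounds and index expressions of Algorithm 1 did not survive in this copy, so the following is only a sketch of a standard six-loop convolution and of one way to tile it. The array names `out_fmap`, `in_fmap`, and `weight` come from the listing; the loop order, the index names, the square kernel, the absence of padding, and the tile parameters `tile_m` and `tile_h` are all assumptions made for illustration.

```python
def _out_shape(in_fmap, weight, stride):
    """Output height and width for a square kernel without padding."""
    k = len(weight[0][0])
    out_h = (len(in_fmap[0]) - k) // stride + 1
    out_w = (len(in_fmap[0][0]) - k) // stride + 1
    return k, out_h, out_w


def conv2d_six_loops(in_fmap, weight, stride=1):
    """Six nested loops; in_fmap[c][y][x], weight[m][c][ky][kx]."""
    k, out_h, out_w = _out_shape(in_fmap, weight, stride)
    out_fmap = [[[0] * out_w for _ in range(out_h)] for _ in weight]
    for m in range(len(weight)):                  # output channels
        for c in range(len(in_fmap)):             # input channels
            for oy in range(out_h):               # output rows
                for ox in range(out_w):           # output columns
                    for ky in range(k):           # kernel rows
                        for kx in range(k):       # kernel columns
                            out_fmap[m][oy][ox] += (
                                in_fmap[c][oy * stride + ky][ox * stride + kx]
                                * weight[m][c][ky][kx]
                            )
    return out_fmap


def conv2d_tiled(in_fmap, weight, tile_m=2, tile_h=2, stride=1):
    """Same computation, tiled over output channels and output rows."""
    k, out_h, out_w = _out_shape(in_fmap, weight, stride)
    n_out = len(weight)
    out_fmap = [[[0] * out_w for _ in range(out_h)] for _ in weight]
    for m0 in range(0, n_out, tile_m):            # tile loop: channels
        for y0 in range(0, out_h, tile_h):        # tile loop: rows
            for m in range(m0, min(m0 + tile_m, n_out)):
                for oy in range(y0, min(y0 + tile_h, out_h)):
                    for ox in range(out_w):
                        for c in range(len(in_fmap)):
                            for ky in range(k):
                                for kx in range(k):
                                    out_fmap[m][oy][ox] += (
                                        in_fmap[c][oy * stride + ky][ox * stride + kx]
                                        * weight[m][c][ky][kx]
                                    )
    return out_fmap
```

Both functions produce identical outputs. Tiling changes only the order in which output elements are visited, which is what lets a hardware design work on one block of the output at a time with limited on-chip storage.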

3.2. The Proposed Accelerator Architecture

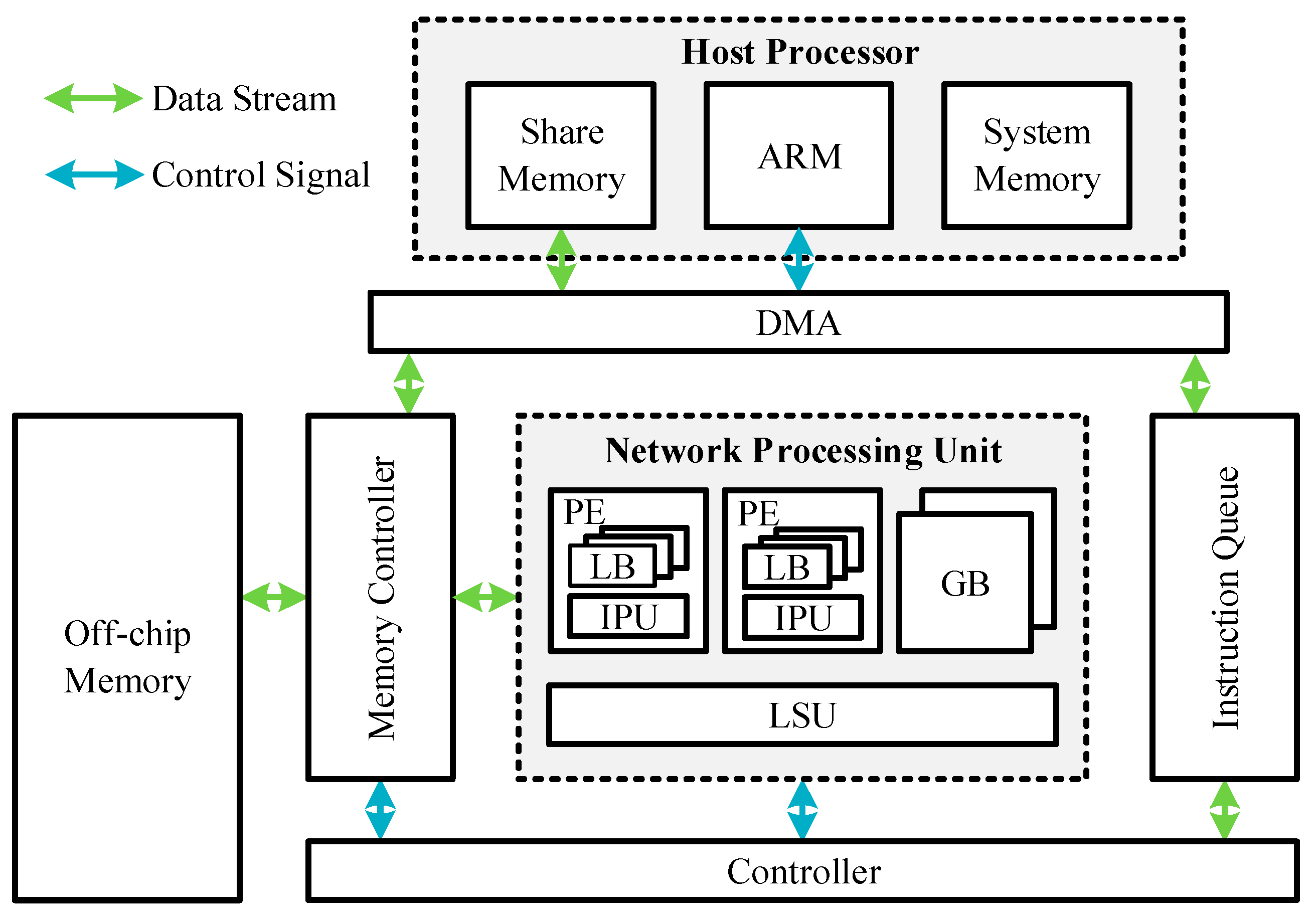

3.2.1. Overall Architecture of the Proposed Accelerator

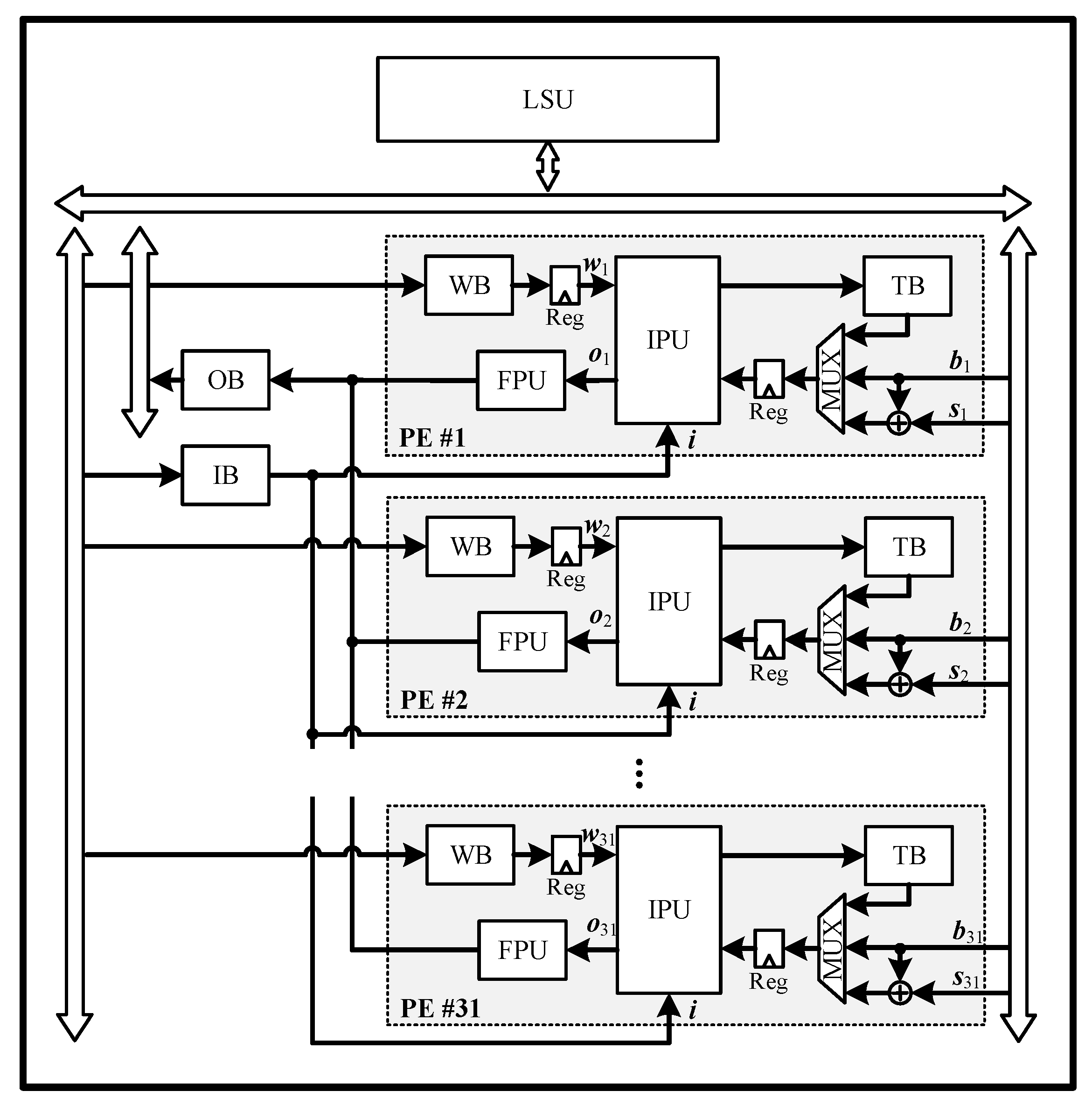

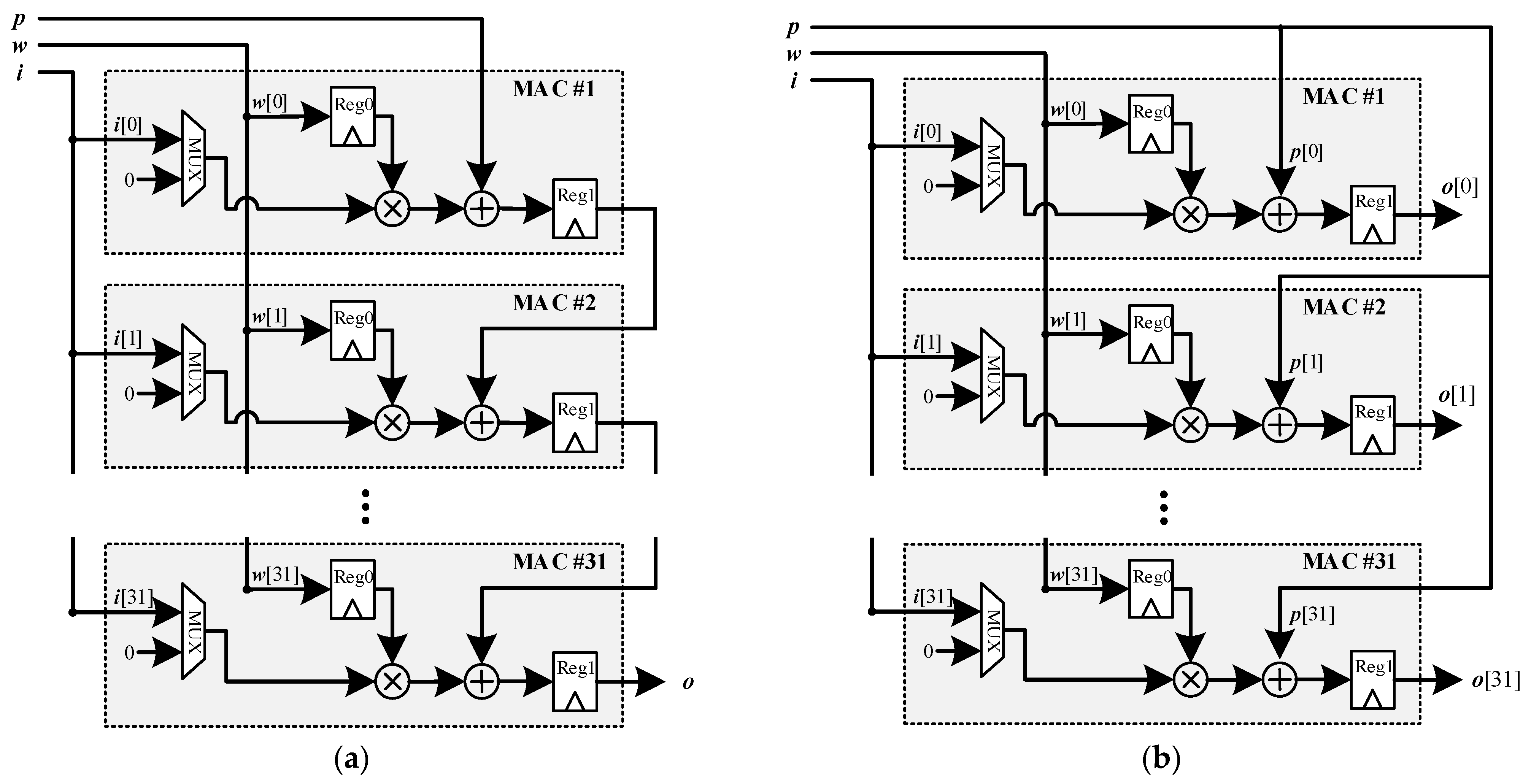

3.2.2. Network Processing Unit

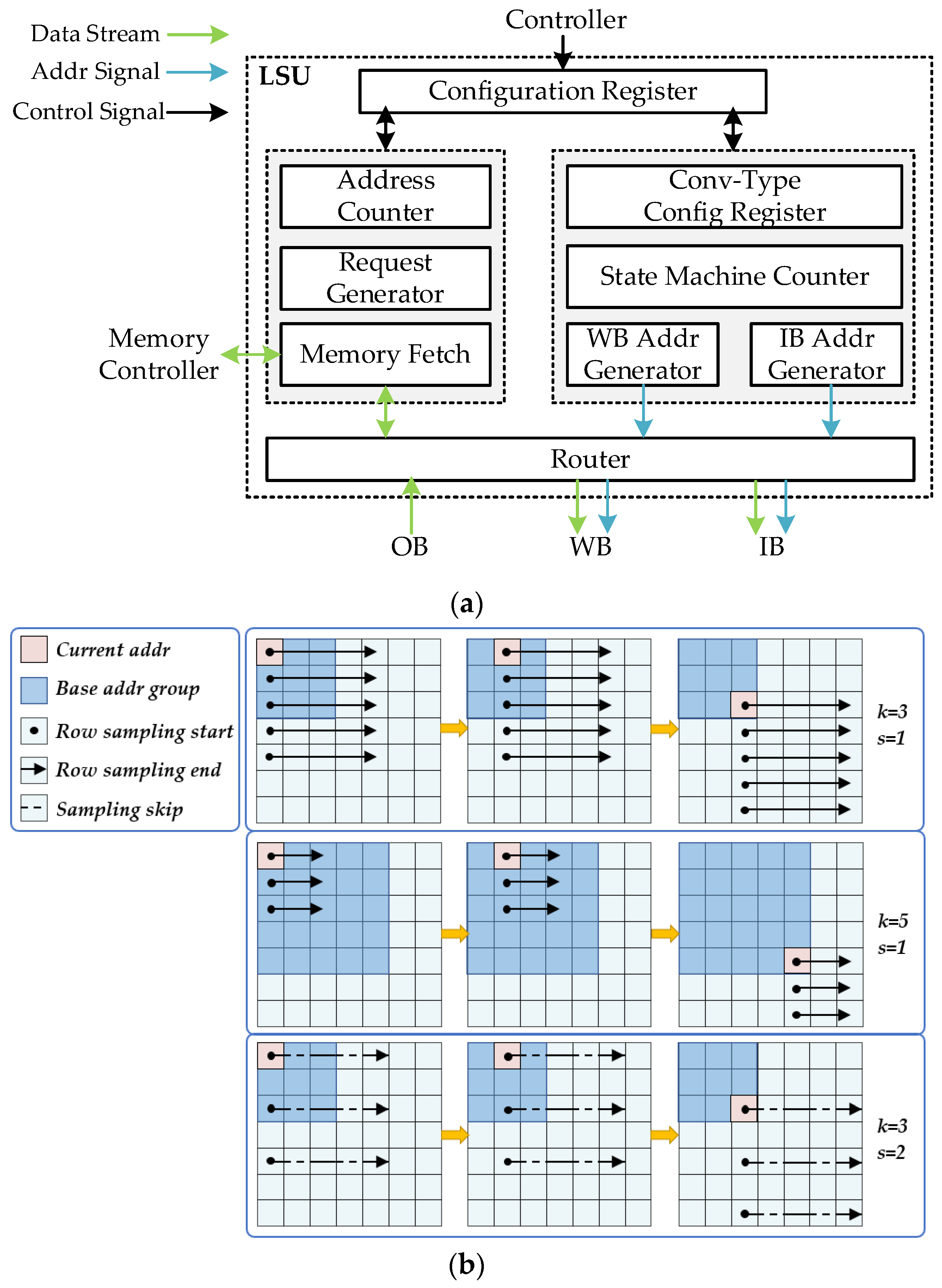

3.2.3. Multi-Level Storage Structure

4. Experiments and Results

4.1. Experimental Settings

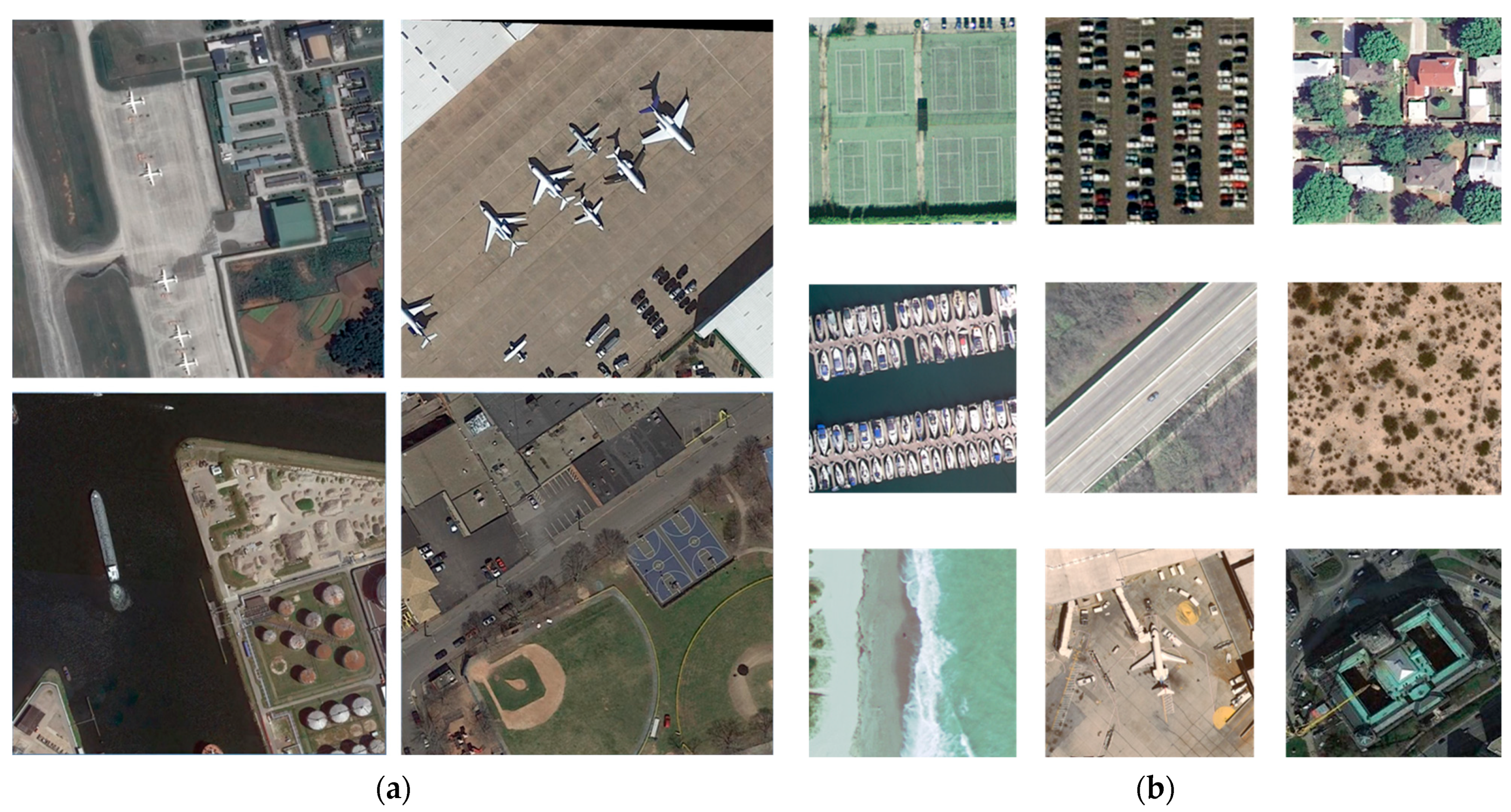

4.1.1. Datasets Description

4.1.2. Experimental Setup

4.1.3. Evaluation Metrics

4.2. Experimental Result

4.3. Performance Comparison

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yan, P.; Liu, X.; Wang, F.; Yue, C.; Wang, X. LOVD: Land Vehicle Detection in Complex Scenes of Optical Remote Sensing Image. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5615113.

2. Zhao, B.; Wang, Q.; Wu, Y.; Cao, Q.; Ran, Q. Target detection model distillation using feature transition and label registration for remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5416–5426.

3. Hou, Y.-E.; Yang, K.; Dang, L.; Liu, Y. Contextual Spatial-Channel Attention Network for Remote Sensing Scene Classification. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6008805.

4. Shi, J.; Liu, W.; Shan, H.; Li, E.; Li, X.; Zhang, L. Remote Sensing Scene Classification Based on Multibranch Fusion Attention Network. IEEE Geosci. Remote Sens. Lett. 2023, 20, 3001505.

5. Du, X.; Song, L.; Lv, Y.; Qin, X. Military Target Detection Method Based on Improved YOLOv5. In Proceedings of the 2022 International Conference on Cyber-Physical Social Intelligence (ICCSI), Nanjing, China, 18–21 November 2022; pp. 53–57.

6. Zheng, Z.; Zhong, Y.; Su, Y.; Ma, A. Domain Adaptation via a Task-Specific Classifier Framework for Remote Sensing Cross-Scene Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5620513.

7. Li, Z.; Wu, Q.; Cheng, B.; Cao, L.; Yang, H. Remote Sensing Image Scene Classification Based on Object Relationship Reasoning CNN. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8000305.

8. Wu, Y.; Guan, X.; Zhao, B.; Ni, L.; Huang, M. Vehicle Detection Based on Adaptive Multi-modal Feature Fusion and Cross-modal Vehicle Index using RGB-T Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8166–8177.

9. Yao, Y.; Zhou, Y.; Yuan, C.; Li, Y.; Zhang, H. On-Board Intelligent Processing for Remote Sensing Images Based on 20KG Micro-Nano Satellite. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 8107–8110.

10. Shivapakash, S.; Jain, H.; Hellwich, O.; Gerfers, F. A Power Efficiency Enhancements of a Multi-Bit Accelerator for Memory Prohibitive Deep Neural Networks. IEEE Open J. Circuits Syst. 2021, 2, 156–169.

11. Pan, Y.; Tang, L.; Jing, D.; Tang, W.; Zhou, S. Efficient and Lightweight Target Recognition for High Resolution Spaceborne SAR Images. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019; pp. 1–4.

12. Zhang, N.; Wei, X.; Chen, H.; Liu, W. FPGA Implementation for CNN-Based Optical Remote Sensing Object Detection. Electronics 2021, 10, 282.

13. Neris, R.; Guerra, R.; López, S.; Sarmiento, R. Performance evaluation of state-of-the-art CNN architectures for the on-board processing of remotely sensed images. In Proceedings of the 2021 XXXVI Conference on Design of Circuits and Integrated Systems (DCIS), Vila do Conde, Portugal, 24–26 November 2021; pp. 1–6.

14. Haut, J.M.; Alcolea, A.; Paoletti, M.E.; Plaza, J.; Resano, J.; Plaza, A. GPU-Friendly Neural Networks for Remote Sensing Scene Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8001005.

15. Behera, T.K.; Bakshi, S.; Nappi, M.; Sa, P.K. Superpixel-Based Multiscale CNN Approach Toward Multiclass Object Segmentation From UAV-Captured Aerial Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 1771–1784.

16. Zhang, N.; Wang, G.; Wang, J.; Chen, H.; Liu, W.; Chen, L. All Adder Neural Networks for On-Board Remote Sensing Scene Classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5607916.

17. Papatheofanous, E.A.; Tziolos, P.; Kalekis, V.; Amrou, T.; Konstantoulakis, G.; Venitourakis, G.; Reisis, D. SoC FPGA Acceleration for Semantic Segmentation of Clouds in Satellite Images. In Proceedings of the 2022 IFIP/IEEE 30th International Conference on Very Large Scale Integration (VLSI-SoC), Patras, Greece, 3–5 October 2022; pp. 1–4.

18. He, W.; Yang, Y.; Mei, S.; Hu, J.; Xu, W.; Hao, S. Configurable 2D-3D CNNs Accelerator for FPGA-Based Hyperspectral Imagery Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 9406–9421.

19. Neris, R.; Rodríguez, A.; Guerra, R.; López, S.; Sarmiento, R. FPGA-Based Implementation of a CNN Architecture for the On-Board Processing of Very High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 3740–3750.

20. Kim, V.H.; Choi, K.K. A Reconfigurable CNN-Based Accelerator Design for Fast and Energy-Efficient Object Detection System on Mobile FPGA. IEEE Access 2023, 11, 59438–59445.

21. Liu, Y.; Dai, Y.; Liu, G.; Yang, J.; Tian, L.; Li, H. Distributed Space Remote Sensing and Multi-satellite Cooperative On-board Processing. In Proceedings of the 2020 International Conference on Sensing, Measurement & Data Analytics in the era of Artificial Intelligence (ICSMD), Xi’an, China, 15–17 October 2020; pp. 551–556.

22. Chen, X.; Ji, J.; Mei, S.; Zhang, Y.; Han, M.; Du, Q. FPGA Based Implementation of Convolutional Neural Network for Hyperspectral Classification. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2451–2454.

23. Li, X.; Cai, K. Method research on ship detection in remote sensing image based on Yolo algorithm. In Proceedings of the 2020 International Conference on Information Science, Parallel and Distributed Systems (ISPDS), Xi’an, China, 14–16 August 2020; pp. 104–108.

24. Liu, S.; Peng, Y.; Liu, L. A Novel Ship Detection Method in Remote Sensing Images via Effective and Efficient PP-YOLO. In Proceedings of the 2021 IEEE International Conference on Sensing, Diagnostics, Prognostics and Control (SDPC), Weihai, China, 13–15 August 2021; pp. 234–239.

25. Xie, T.; Han, W.; Xu, S. OYOLO: An Optimized YOLO Method for Complex Objects in Remote Sensing Image Detection. IEEE Geosci. Remote Sens. Lett. 2023. early access.

26. Wang, N.; Li, B.; Wei, X.; Wang, Y.; Yan, H. Ship Detection in Spaceborne Infrared Image Based on Lightweight CNN and Multisource Feature Cascade Decision. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4324–4339.

27. Kim, J.; Kang, J.-K.; Kim, Y. A Low-Cost Fully Integer-Based CNN Accelerator on FPGA for Real-Time Traffic Sign Recognition. IEEE Access 2022, 10, 84626–84634.

28. Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a Convolutional Neural Network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

29. Ma, Y.; Wang, C. SdcNet: A Computation-Efficient CNN for Object Recognition. In Proceedings of the 2018 IEEE 23rd International Conference on Digital Signal Processing (DSP), Shanghai, China, 19–21 November 2018; pp. 1–5.

30. Urbinati, L.; Casu, M.R. A Reconfigurable Depth-Wise Convolution Module for Heterogeneously Quantized DNNs. In Proceedings of the 2022 IEEE International Symposium on Circuits and Systems (ISCAS), Austin, TX, USA, 27 May 2022–1 June 2022; pp. 128–132.

31. Santurkar, S.; Tsipras, D.; Ilyas, A.; Madry, A. How does batch normalization help optimization? Adv. Neural Inf. Process. Syst. 2018, 31, 1–11.

32. Abdukodirova, M.; Abdullah, S.; Alsadoon, A.; Prasad, P.W.C. Deep learning for ovarian follicle (OF) classification and counting: Displaced rectifier linear unit (DReLU) and network stabilization through batch normalization (BN). In Proceedings of the 2020 5th International Conference on Innovative Technologies in Intelligent Systems and Industrial Applications (CITISIA), Sydney, Australia, 25–27 November 2020; pp. 1–10.

33. Kusumawati, D.; Ilham, A.A.; Achmad, A.; Nurtanio, I. Vgg-16 and Vgg-19 Architecture Models in Lie Detection Using Image Processing. In Proceedings of the 2022 6th International Conference on Information Technology, Information Systems and Electrical Engineering (ICITISEE), Yogyakarta, Indonesia, 13–14 December 2022; pp. 340–345.

34. Bagaskara, A.; Suryanegara, M. Evaluation of VGG-16 and VGG-19 Deep Learning Architecture for Classifying Dementia People. In Proceedings of the 2021 4th International Conference of Computer and Informatics Engineering (IC2IE), Depok, Indonesia, 14–15 September 2021; pp. 1–4.

35. Yang, C.; Yang, Z.; Hou, J.; Su, Y. A Lightweight Full Homomorphic Encryption Scheme on Fully-connected Layer for CNN Hardware Accelerator achieving Security Inference. In Proceedings of the 2021 28th IEEE International Conference on Electronics, Circuits, and Systems (ICECS), Dubai, United Arab Emirates, 28 November–1 December 2021; pp. 1–4.

36. Liu, K.; Kang, G.; Zhang, N.; Hou, B. Breast Cancer Classification Based on Fully-Connected Layer First Convolutional Neural Networks. IEEE Access 2018, 6, 23722–23732.

37. Targ, S.; Almeida, D.; Lyman, K. Resnet in resnet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029.

38. Al-Qizwini, M.; Barjasteh, I.; Al-Qassab, H.; Radha, H. Deep learning algorithm for autonomous driving using googlenet. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 89–96.

39. Williamson, I.A.; Hughes, T.W.; Minkov, M.; Bartlett, B.; Pai, S.; Fan, S. Reprogrammable electro-optic nonlinear activation functions for optical neural networks. IEEE J. Sel. Top. Quantum Electron. 2019, 26, 1–12.

40. Daubechies, I.; DeVore, R.; Foucart, S.; Hanin, B.; Petrova, G. Nonlinear approximation and (deep) ReLU networks. Constr. Approx. 2022, 55, 127–172.

41. Xu, J.; Li, Z.; Du, B.; Zhang, M.; Liu, J. Reluplex made more practical: Leaky ReLU. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7–10 July 2020; pp. 1–7.

42. Zhang, X.; Zou, Y.; Shi, W. Dilated convolution neural network with LeakyReLU for environmental sound classification. In Proceedings of the 2017 22nd International Conference on Digital Signal Processing (DSP), London, UK, 23–25 August 2017; pp. 1–5.

43. Li, B.; He, Y. An improved ResNet based on the adjustable shortcut connections. IEEE Access 2018, 6, 18967–18974.

44. Mohagheghi, S.; Alizadeh, M.; Safavi, S.M.; Foruzan, A.H.; Chen, Y.W. Integration of CNN, CBMIR, and visualization techniques for diagnosis and quantification of covid-19 disease. IEEE J. Biomed. Health Inform. 2021, 25, 1873–1880.

45. Wei, X.; Liu, W.; Chen, L.; Ma, L.; Chen, H.; Zhuang, Y. FPGA-based hybrid-type implementation of quantized neural networks for remote sensing applications. Sensors 2019, 19, 924.

46. Liu, W.; Ma, L.; Wang, J. Detection of multiclass objects in optical remote sensing images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 791–795.

47. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

48. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

49. Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3974–3983.

50. Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

51. Cheng, G.; Xie, X.; Han, J.; Guo, L.; Xia, G.S. Remote sensing image scene classification meets deep learning: Challenges, methods, benchmarks, and opportunities. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3735–3756.

52. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

53. Li, C.; Xu, R.; Lv, Y.; Zhao, Y.; Jing, W. Edge Real-Time Object Detection and DPU-Based Hardware Implementation for Optical Remote Sensing Images. Remote Sens. 2023, 15, 3975.

54. Alwani, M.; Chen, H.; Ferdman, M.; Milder, P. Fused-layer CNN accelerators. In Proceedings of the 2016 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Taipei, Taiwan, 15–19 October 2016; pp. 1–12.

55. Yu, Y.; Wu, C.; Zhao, T.; Wang, K.; He, L. OPU: An FPGA-based overlay processor for convolutional neural networks. IEEE Trans. Very Large Scale Integr. VLSI Syst. 2019, 28, 35–47.

56. Cui, C.; Ge, F.; Li, Z.; Yue, X.; Zhou, F.; Wu, N. Design and Implementation of OpenCL-Based FPGA Accelerator for YOLOv2. In Proceedings of the 2021 IEEE 21st International Conference on Communication Technology (ICCT), Tianjin, China, 13–16 October 2021; pp. 1004–1007.

57. Zhai, J.; Li, B.; Lv, S.; Zhou, Q. FPGA-based vehicle detection and tracking accelerator. Sensors 2023, 23, 2208.

58. Kim, D.; Jeong, S.; Kim, J.Y. Agamotto: A Performance Optimization Framework for CNN Accelerator with Row Stationary Dataflow. IEEE Trans. Circuits Syst. I Regul. Pap. 2023, 70, 2487–2496.

59. Mousouliotis, P.; Tampouratzis, N.; Papaefstathiou, I. SqueezeJet-3: An HLS-based accelerator for edge CNN applications on SoC FPGAs. In Proceedings of the 2023 XXIX International Conference on Information, Communication and Automation Technologies (ICAT), Sarajevo, Bosnia and Herzegovina, 11–14 June 2023; pp. 1–6.

60. Wang, H.; Li, D.; Isshiki, T. Reconfigurable CNN Accelerator Embedded in Instruction Extended RISC-V Core. In Proceedings of the 2023 6th International Conference on Electronics Technology (ICET), Chengdu, China, 12–15 May 2023; pp. 945–954.

61. Jian, T.; Gong, Y.; Zhan, Z.; Shi, R.; Soltani, N.; Wang, Z.; Dy, J.; Chowdhury, K.; Wang, Y.; Ioannidis, S. Radio frequency fingerprinting on the edge. IEEE Trans. Mob. Comput. 2021, 21, 4078–4093.

| Resource | LUT | FF | BRAM | DSP |
|---|---|---|---|---|
| Available in VC709 | 433200 | 866400 | 1470 | 3600 |
| Utilization | 105509 | 282807 | 794 | 832 |
| Utilization rate | 24.36% | 32.64% | 54.01% | 23.11% |
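
The utilization rates in the table follow directly from the two rows above them. As a quick check, the sketch below recomputes them from the tabulated counts; the dictionary and function names are chosen here for illustration only.

```python
# Resource counts copied from the table above.
AVAILABLE = {"LUT": 433200, "FF": 866400, "BRAM": 1470, "DSP": 3600}
USED = {"LUT": 105509, "FF": 282807, "BRAM": 794, "DSP": 832}


def utilization_rate(used, available):
    """Return the percentage of each resource in use, rounded to 2 decimals."""
    return {name: round(100.0 * used[name] / available[name], 2)
            for name in available}


if __name__ == "__main__":
    for name, rate in utilization_rate(USED, AVAILABLE).items():
        print(f"{name}: {rate:.2f}%")
```

The recomputed values agree with the last row of the table, with BRAM the most heavily used resource at just over half of the device.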

| | CPU | CPU | CPU | GPU | GPU | GPU | The Proposed Accelerator | The Proposed Accelerator | The Proposed Accelerator |
|---|---|---|---|---|---|---|---|---|---|
| Device | Intel Xeon E5-2697v4 ¹ | Intel Xeon E5-2697v4 ¹ | Intel Xeon E5-2697v4 ¹ | NVIDIA TITAN Xp ² | NVIDIA TITAN Xp ² | NVIDIA TITAN Xp ² | AMD-Xilinx XC7VLX690T ³ | AMD-Xilinx XC7VLX690T ³ | AMD-Xilinx XC7VLX690T ³ |
| Technology (nm) | 14 | 14 | 14 | 16 | 16 | 16 | 28 | 28 | 28 |
| Frequency (MHz) | 2300 | 2300 | 2300 | 1582 | 1582 | 1582 | 200 | 200 | 200 |
| Power (W) | 145 | 145 | 145 | 250 | 250 | 250 | 14.97 | 14.97 | 14.97 |
| Network | YOLOv2 ⁴ | VGG-16 | ResNet-34 | YOLOv2 ⁴ | VGG-16 | ResNet-34 | YOLOv2 ⁴ | VGG-16 | ResNet-34 |
| Network complexity (GOP) | 379.55 | 30.69 | 7.33 | 379.55 | 30.69 | 7.33 | 379.55 | 30.69 | 7.33 |
| Accuracy (mAP or OA) | 67.50% | 91.93% | 92.87% | 67.50% | 91.93% | 92.87% | 67.30% | 91.90% | 92.81% |
| Processing time (ms) | 7127.0 | 143.7 | 65.3 | 71.9 | 5.3 | 12.0 | 981.4 | 89.1 | 40.2 |
| Throughput (GOPS) | 53.26 | 213.57 | 112.25 | 5278.86 | 5790.57 | 610.83 | 386.74 | 344.44 | 182.34 |
| Energy efficiency (GOPS/W) | 0.37 | 1.47 | 0.77 | 21.16 | 23.16 | 2.45 | 25.83 | 23.01 | 12.18 |
| Relative energy efficiency | | | | | | | | | |
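
The throughput and energy-efficiency rows appear to follow from the other rows of this table: throughput as network complexity divided by processing time, and energy efficiency as throughput divided by power. This relationship is inferred from the tabulated numbers rather than from a stated definition, and the function names below are hypothetical. A minimal sketch using the proposed accelerator's values:

```python
def throughput_gops(complexity_gop, time_ms):
    """Throughput in GOPS: operations (GOP) per second of processing time."""
    return complexity_gop / (time_ms / 1000.0)


def energy_efficiency(throughput, power_w):
    """Energy efficiency in GOPS/W."""
    return throughput / power_w


if __name__ == "__main__":
    power_w = 14.97  # proposed accelerator, from the table above
    # (network, complexity in GOP, processing time in ms), from the table above
    rows = [("YOLOv2", 379.55, 981.4),
            ("VGG-16", 30.69, 89.1),
            ("ResNet-34", 7.33, 40.2)]
    for name, gop, ms in rows:
        tp = throughput_gops(gop, ms)
        print(f"{name}: {tp:.2f} GOPS, {energy_efficiency(tp, power_w):.2f} GOPS/W")
```

Under this reading, the accelerator's lower power draw is what lifts its energy efficiency to the level of the GPU or above it, even though its raw throughput is far lower.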

| | [55] | [56] | [57] | Our Work | [58] | [59] | [60] | Our Work | [61] | Our Work |
|---|---|---|---|---|---|---|---|---|---|---|
| Platform | XC7K325T ¹ | Arria 10 GX ² | ZYNQ 7000 ³ | XC7VLX690T | VCU118 ⁴ | XC7Z020 ⁵ | Alveo-U200 ⁶ | XC7VLX690T | ZCU104 ⁷ | XC7VLX690T |
| Technology (nm) | 28 | 20 | 28 | 28 | 16 | 28 | 16 | 28 | 16 | 28 |
| Frequency (MHz) | 200 | 213 | 209 | 200 | 200 | 200 | 73 | 200 | 200 | 200 |
| Network | YOLOv2 | YOLOv2 | YOLOv3 | YOLOv2 ⁸ | VGG-16 | VGG-16 | VGG-16 | VGG-16 | ResNet-50 | ResNet-34 |
| Quantization | 8-bit | 8-bit | 16-bit | 8-bit | 8-bit | 8-bit | 8-bit | 8-bit | N/A | 8-bit |
| DSPs | 516 | N/A | 294 | 832 | 2286 | 334 | 388 | 832 | N/A | 832 |
| Power (W) | 16.5 | 27.6 | 15.64 | 14.97 | >30 | 3.1 | 3.26 | 14.97 | 14 | 14.97 |
| Throughput (GOPS) | 391 | 248.7 | 115.7 | 386.74 | 402 | 68.66 | 51.0 | 344.44 | 103.2 (51.5) | 182.34 |
| Energy efficiency (GOPS/W) | 23.69 | 9.01 | 7.40 | 25.83 | <13.4 | 22.15 | 15.6 | 23.01 | 7.37 (3.68) | 12.18 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ni, S.; Wei, X.; Zhang, N.; Chen, H. Algorithm–Hardware Co-Optimization and Deployment Method for Field-Programmable Gate-Array-Based Convolutional Neural Network Remote Sensing Image Processing. Remote Sens. 2023, 15, 5784. https://doi.org/10.3390/rs15245784
