Automatic Extraction of the Calving Front of Pine Island Glacier Based on Neural Network

Abstract

1. Introduction

2. Materials and Methods

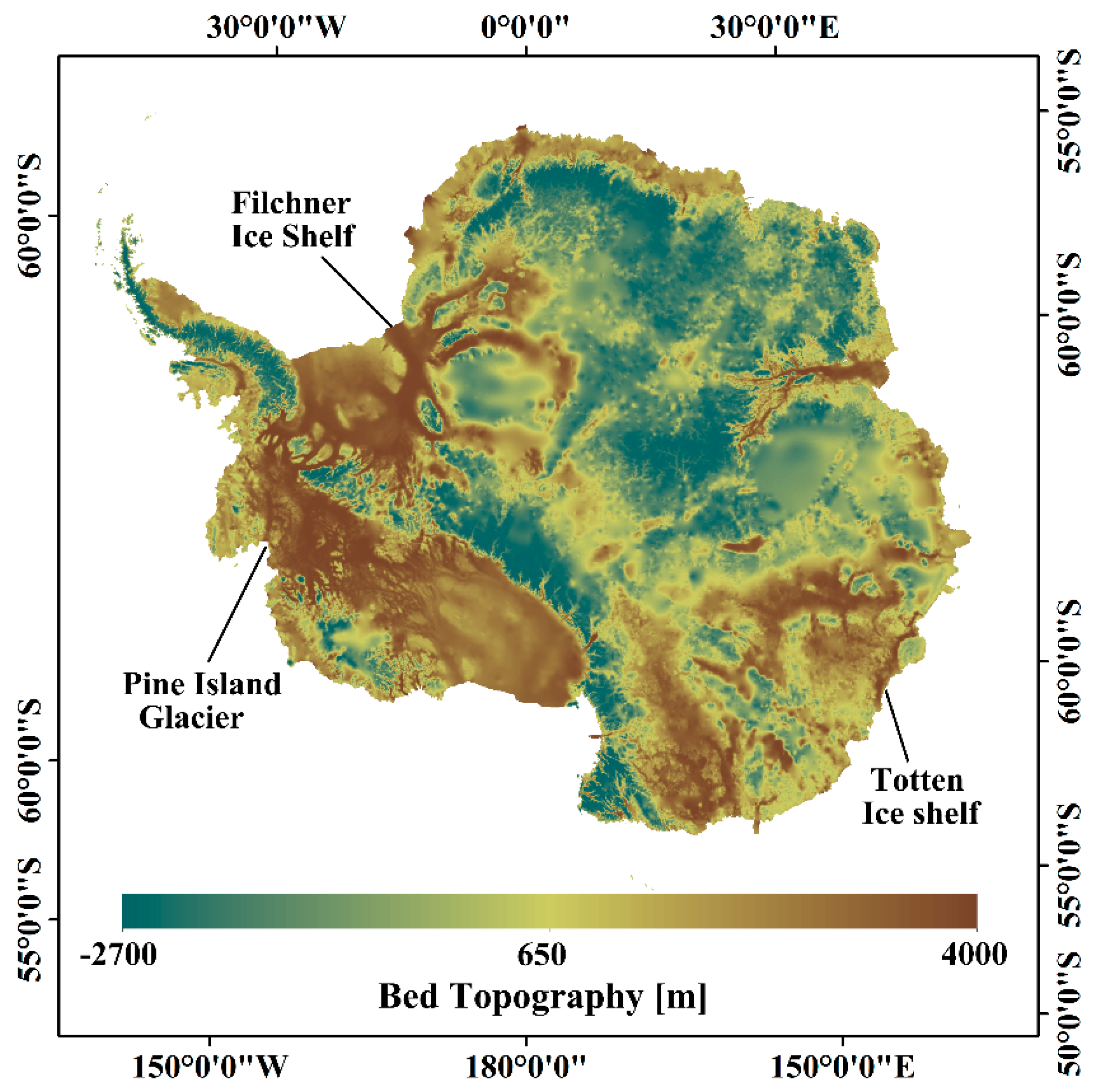

2.1. Study Area

2.2. Input Data

2.3. Methods

2.3.1. Pre-Processing and Data Preparation

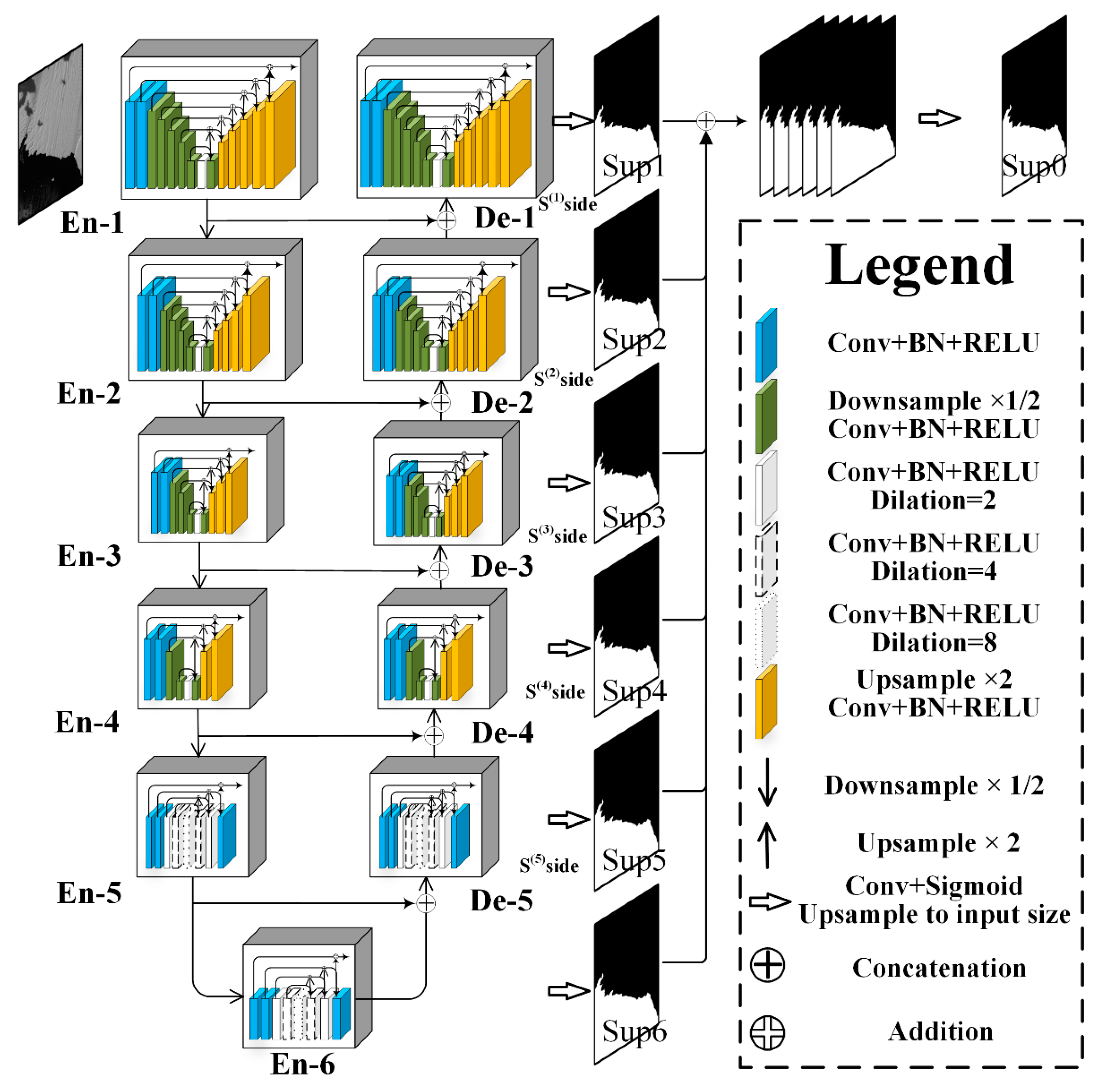

2.3.2. U2-Net Architecture and Post-Processing

2.3.3. Training Loss of U2-Net

2.3.4. Training Strategy

2.3.5. Accuracy Assessment

- (1) Accuracy (AC): An evaluation index that measures how accurately the network performs semantic segmentation. It is defined as the proportion of correctly classified pixels in the classification result. ACmod is computed as [58]:

- (2) Cohen’s Kappa coefficient (k): This index measures the degree of agreement between the manual visual interpretation and the model classification result. The higher the value, the closer the model result is to the reference classification obtained by manual extraction. It is calculated as follows [58]:
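Both indices can be computed from a binary confusion matrix. The sketch below assumes the standard definitions: overall accuracy as (TP + TN) / N, and Cohen's kappa as (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance. The function name `accuracy_and_kappa` and the pixel counts are hypothetical and are not taken from the paper.

```python
import math


def accuracy_and_kappa(tp, fp, fn, tn):
    """Return (overall accuracy, Cohen's kappa) for a binary confusion matrix.

    tp, fp, fn, tn are pixel counts, taking the manual interpretation as reference.
    """
    n = tp + fp + fn + tn
    if n == 0:
        raise ValueError("confusion matrix is empty")

    # Observed agreement: proportion of correctly classified pixels.
    p_o = (tp + tn) / n

    # Agreement expected by chance, from the marginal totals of each class.
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)

    # Kappa is undefined when chance agreement is already perfect.
    kappa = math.nan if p_e == 1 else (p_o - p_e) / (1 - p_e)
    return p_o, kappa


# Hypothetical counts for a 10 x 10 pixel tile (not values from the paper).
acc, kappa = accuracy_and_kappa(tp=45, fp=5, fn=10, tn=40)
print(f"accuracy = {acc:.2f}, kappa = {kappa:.2f}")
# prints: accuracy = 0.85, kappa = 0.70
```

In this example 85% of the pixels are classified correctly, but because half of that agreement would be expected by chance, kappa is only 0.70. This is why kappa separates models that look similar on accuracy alone.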

3. Results and Discussion

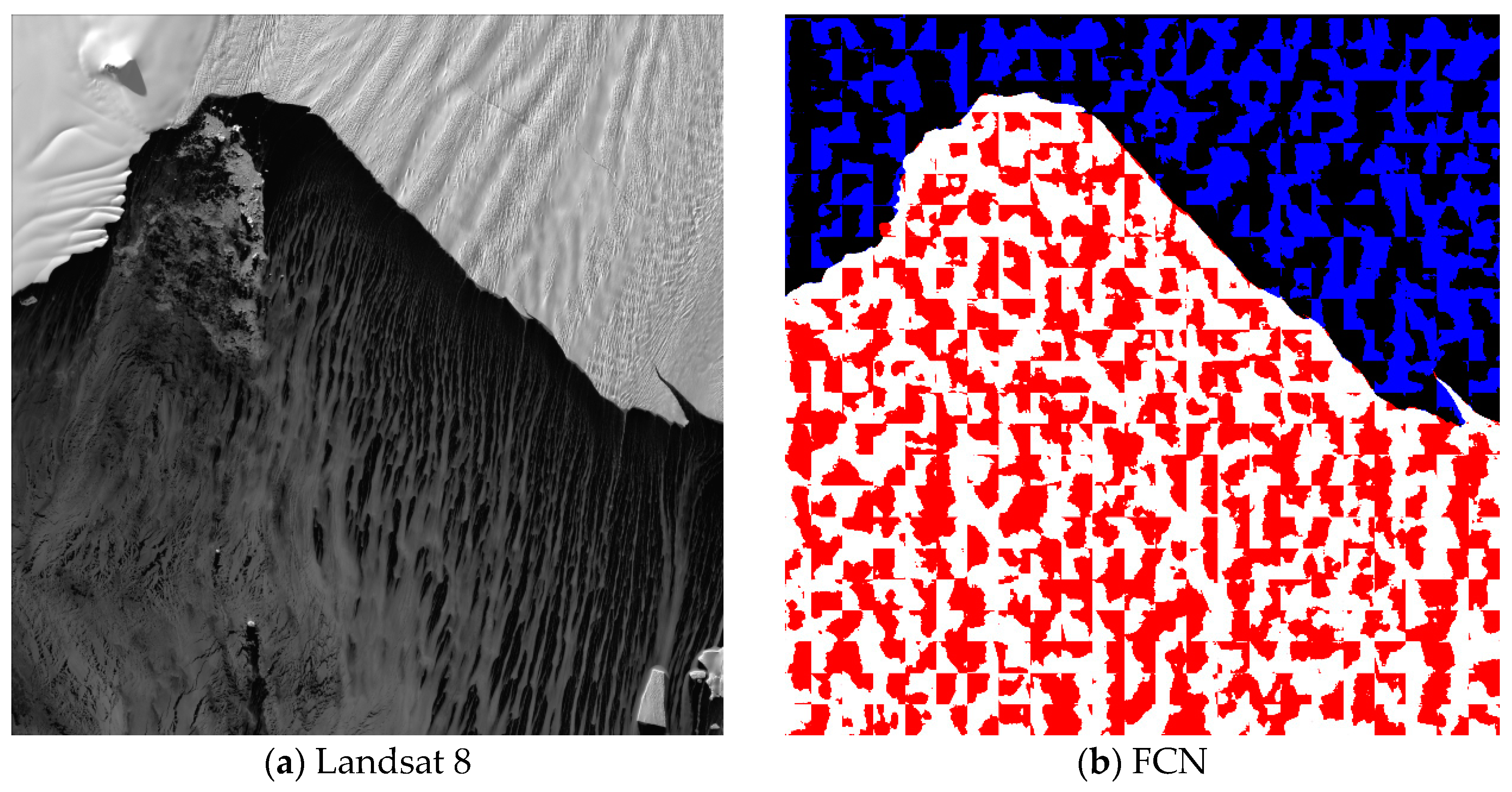

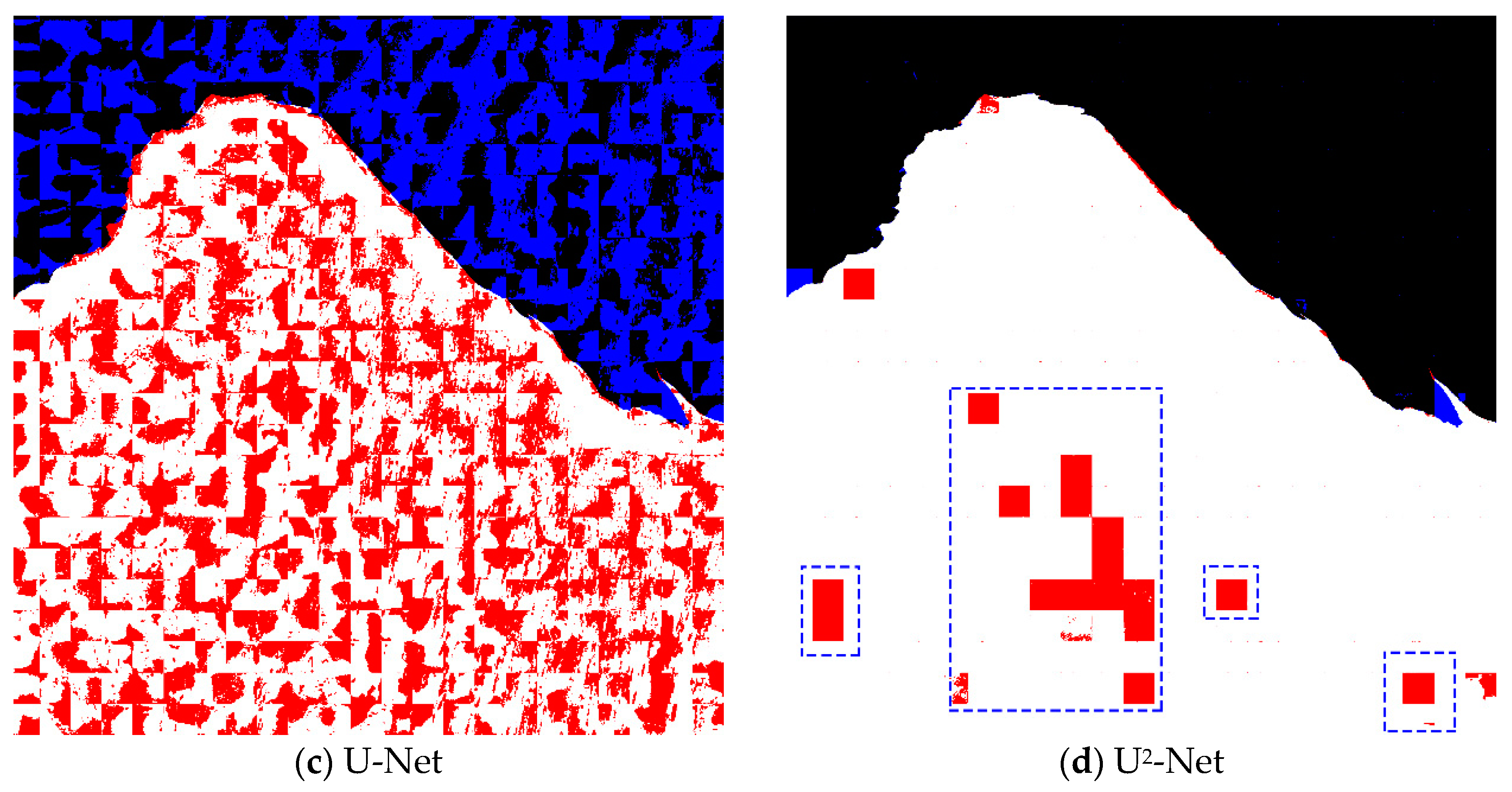

3.1. Comparison of Results

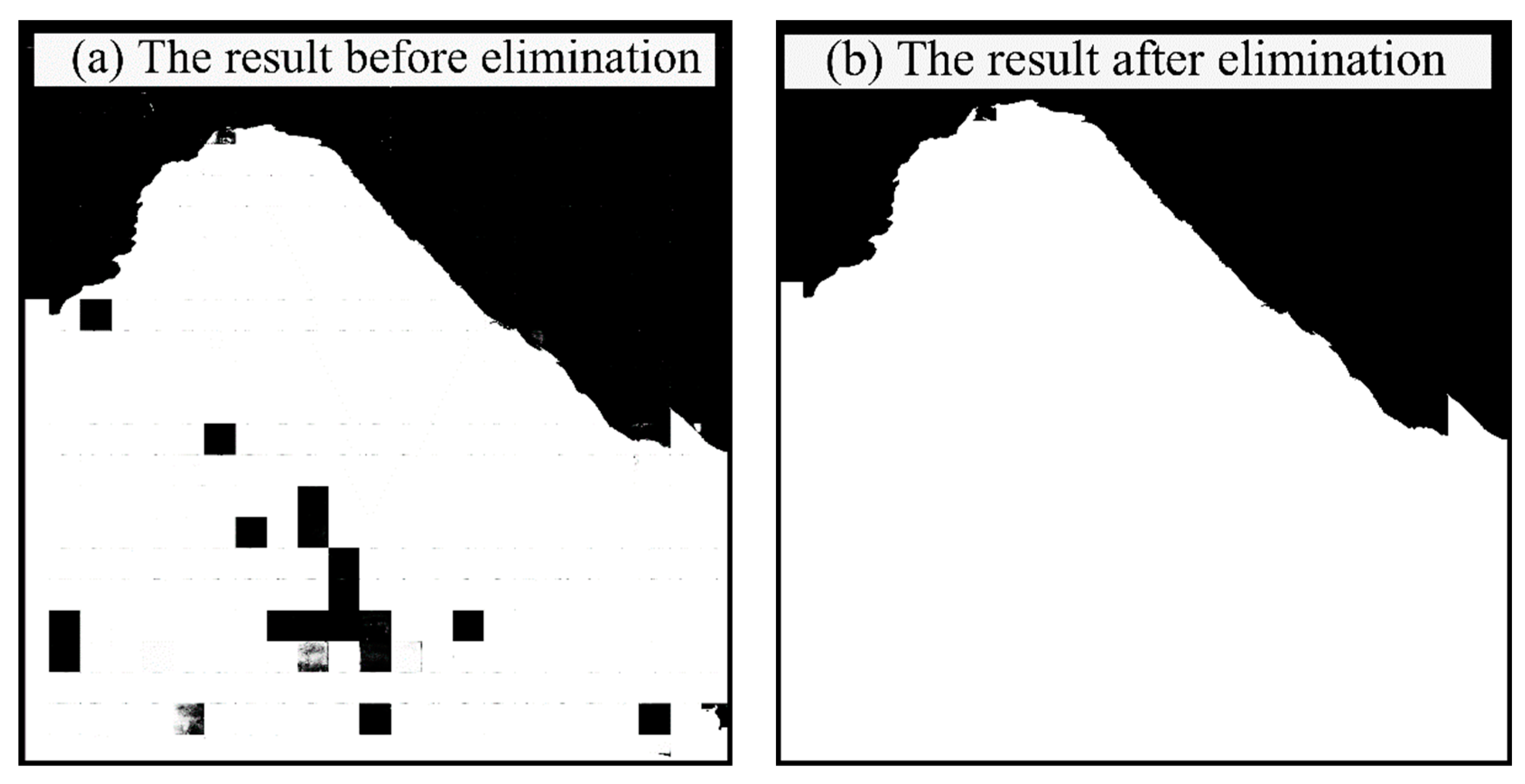

3.2. U2-Net Result Post-Processing and Edge Extraction

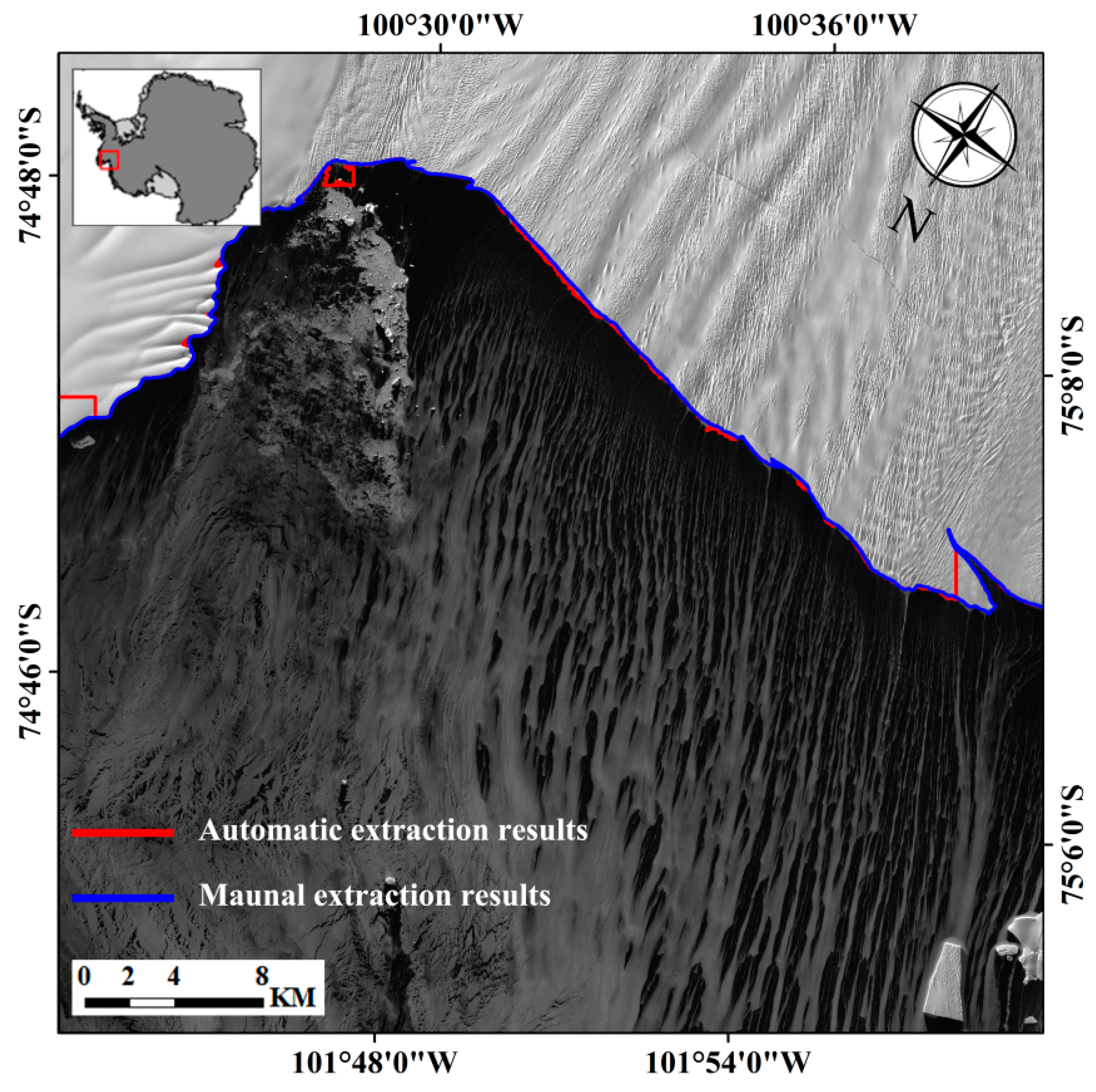

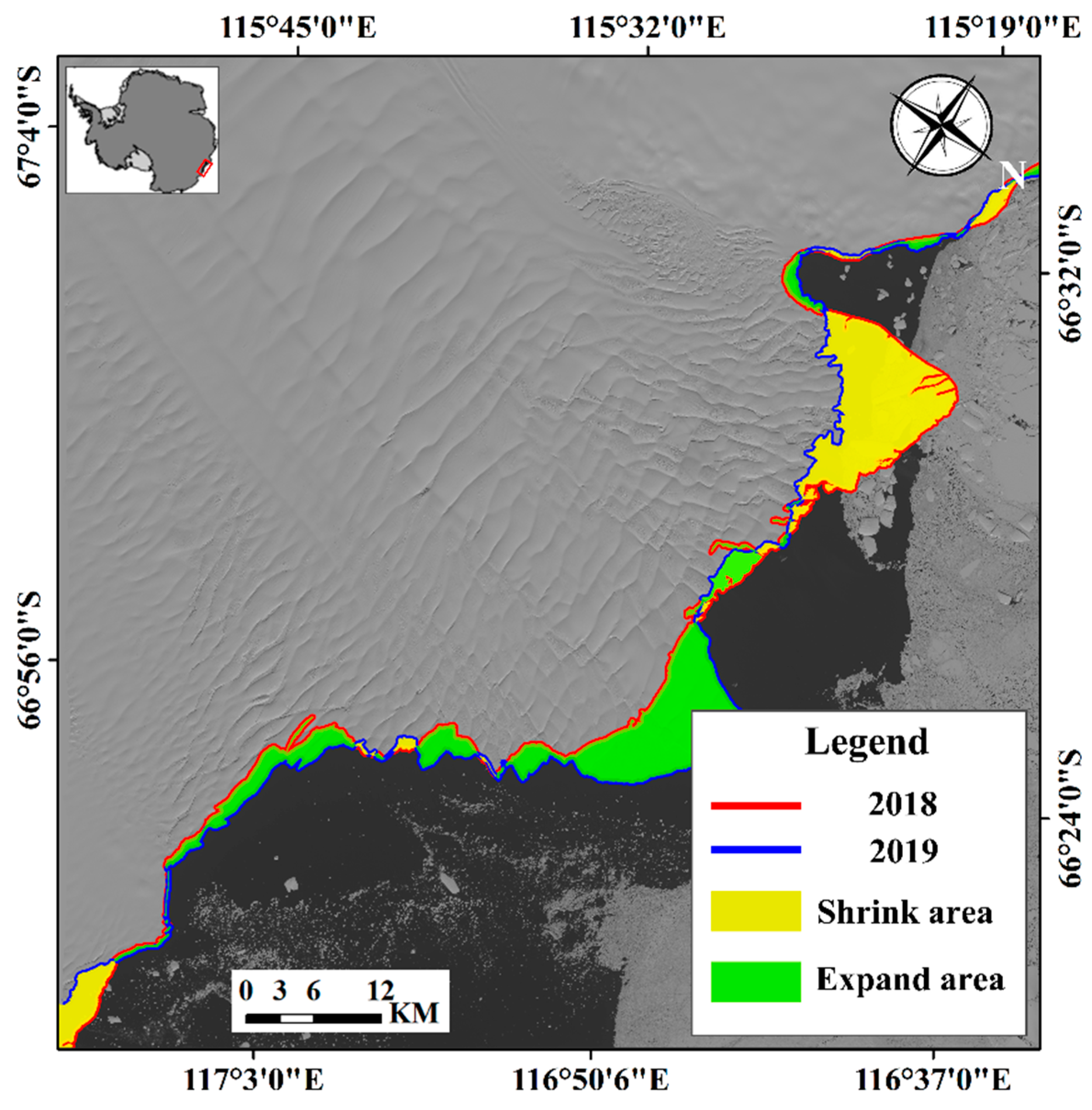

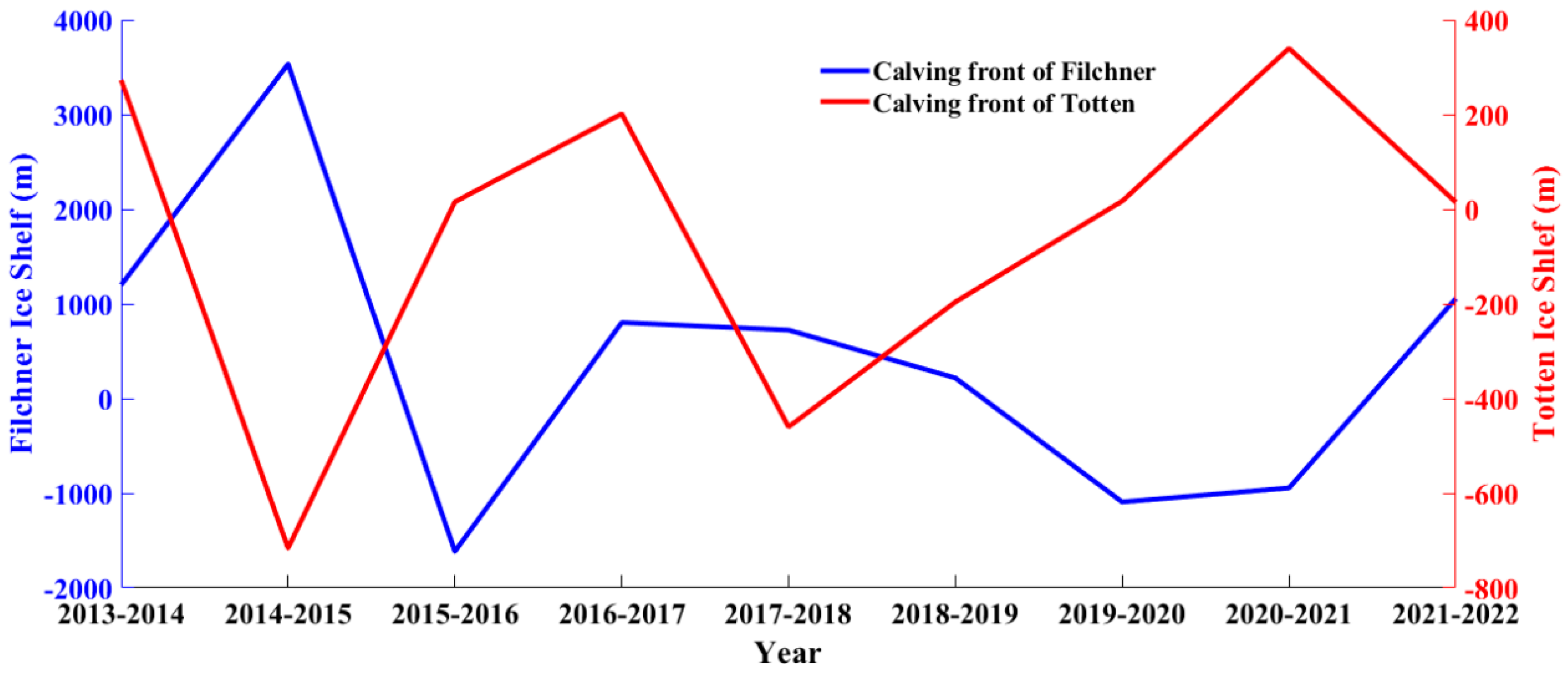

3.3. Model Generalization

4. Conclusions

- (1) Comparing the FCN and U-Net extraction results for the Pine Island Ice Shelf showed that U-Net, whose architecture includes a ‘skip’ operation, effectively integrates low-resolution and high-resolution information. This integration improves both the F1 and IOU metrics: F1 increased by 1.67 percentage points and IOU by 1.75 percentage points;

- (2) Of the three models, U2-Net achieved the highest accuracy. This can be attributed to its ‘U’ structure, which extracts multi-scale features at each stage. In addition, the model uses a multi-supervision algorithm to construct the objective function: both the intermediate feature maps of each stage and the final fused feature map are supervised, which significantly improves classification accuracy. The evaluation indexes of the model were all above 90%, highlighting its suitability for the semantic segmentation of ice shelves and ocean;

- (3) To further analyze the differences between the models in the ice shelf region, their ACmod and k values were compared. The ACmod value of U2-Net was 41.57 percentage points higher than that of U-Net and 41.38 percentage points higher than that of FCN. U2-Net also achieved the highest k value, indicating a distinct advantage in classifying ice shelf regions;

- (4) The U2-Net model generalizes well and was successfully applied to the Totten and Filchner Ice Shelves to extract calving front changes over the past 10 years. The Filchner Ice Shelf changed more markedly than the Totten Ice Shelf: its calving front advanced by 3532.84 m during 2014–2015, whereas the maximum advance of the Totten Ice Shelf, 339.63 m, occurred between 2020 and 2021;

- (5) Comparing the accuracy of each model in extracting the calving front location showed that U2-Net is the most suitable for this purpose. Subsequent research can optimize the U2-Net model to improve the efficiency and accuracy of calving front extraction. The method proposed in this study can also be applied to monitoring similar disasters. For instance, in the natural disasters in India where the sudden acceleration of mountain glaciers forms surface cracks that gradually expand over time, the method can represent such changes numerically, so that the change data can be analyzed to evaluate potential risks.
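The multi-supervision objective mentioned in conclusion (2) can be sketched as a weighted sum of binary cross-entropy terms, one per side output plus one for the fused output. This follows the formulation in the original U2-Net paper by Qin et al.; the unit weights, the helper names `bce` and `deep_supervision_loss`, and the toy values are assumptions made for illustration, not the authors' training code.

```python
import math


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(target)


def deep_supervision_loss(side_preds, fused_pred, target,
                          side_weights=None, fuse_weight=1.0):
    """Sum of weighted BCE losses over every side output and the fused output."""
    if side_weights is None:
        side_weights = [1.0] * len(side_preds)  # assumed: all weights equal to 1
    side_term = sum(w * bce(p, target) for w, p in zip(side_weights, side_preds))
    return side_term + fuse_weight * bce(fused_pred, target)


# Toy example: two pixels (ice = 1, ocean = 0), two side outputs and one fused map.
target = [1, 0]
side_preds = [[0.6, 0.4], [0.8, 0.3]]
fused_pred = [0.9, 0.1]
print(f"total loss = {deep_supervision_loss(side_preds, fused_pred, target):.4f}")
```

Because every side output contributes its own loss term, each decoder stage receives a direct training signal instead of relying only on gradients propagated back from the final map.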

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bondzio, J.H.; Seroussi, H.; Morlighem, M.; Kleiner, T.; Rückamp, M.; Humbert, A.; Larour, E.Y. Modelling calving front dynamics using a level-set method: Application to Jakobshavn Isbræ, West Greenland. Cryosphere 2016, 10, 497–510.

- Rosier, S.H.; Gudmundsson, G.H. Exploring mechanisms responsible for tidal modulation in flow of the Filchner–Ronne Ice Shelf. Cryosphere 2020, 14, 17–37.

- Müller, U.; Sandhäger, H.; Sievers, J.; Blindow, N. Glacio-kinematic analysis of ERS-1/2 SAR data of the Antarctic ice shelf Ekströmisen and the adjoining inland ice sheet. Polarforschung 2000, 67, 15–26.

- Nicholls, K.W.; Østerhus, S.; Makinson, K.; Gammelsrød, T.; Fahrbach, E. Ice-ocean processes over the continental shelf of the southern Weddell Sea, Antarctica: A review. Rev. Geophys. 2009, 47.

- McCormack, F.; Roberts, J.; Gwyther, D.; Morlighem, M.; Pelle, T.; Galton-Fenzi, B.K. The impact of variable ocean temperatures on Totten Glacier stability and discharge. Geophys. Res. Lett. 2021, 48, e2020GL091790.

- Gens, R. Remote sensing of coastlines: Detection, extraction and monitoring. Int. J. Remote Sens. 2010, 31, 1819–1836.

- Furst, J.J.; Durand, G.; Gillet-Chaulet, F.; Tavard, L.; Rankl, M.; Braun, M.; Gagliardini, O. The safety band of Antarctic ice shelves. Nat. Clim. Change 2016, 6, 479–482.

- Sakakibara, D.; Sugiyama, S. Ice-front variations and speed changes of calving glaciers in the Southern Patagonia Icefield from 1984 to 2011. J. Geophys. Res. Earth Surf. 2014, 119, 2541–2554.

- Wuite, J.; Nagler, T.; Gourmelen, N.; Escorihuela, M.J.; Hogg, A.E.; Drinkwater, M.R. Sub-annual calving front migration, area change and calving rates from swath mode CryoSat-2 altimetry, on Filchner-Ronne Ice Shelf, Antarctica. Remote Sens. 2019, 11, 2761.

- Baumhoer, C.A.; Dietz, A.J.; Dech, S.; Kuenzer, C. Remote sensing of Antarctic glacier and ice-shelf front dynamics—A review. Remote Sens. 2018, 10, 1445.

- Mason, D.C.; Davenport, I.J. Accurate and efficient determination of the shoreline in ERS-1 SAR images. IEEE Trans. Geosci. Remote Sens. 1996, 34, 1243–1253.

- Modava, M.; Akbarizadeh, G. Coastline extraction from SAR images using spatial fuzzy clustering and the active contour method. Int. J. Remote Sens. 2017, 38, 355–370.

- Liu, H.; Jezek, K.C. A complete high-resolution coastline of Antarctica extracted from orthorectified Radarsat SAR imagery. Photogramm. Eng. Remote Sens. 2004, 70, 605–616.

- Alonso, M.T.; López-Martínez, C.; Mallorquí, J.J.; Salembier, P. Edge enhancement algorithm based on the wavelet transform for automatic edge detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2010, 49, 222–235.

- Yuan, L.; Xu, X. Adaptive image edge detection algorithm based on canny operator. In Proceedings of the 2015 4th International Conference on Advanced Information Technology and Sensor Application (AITS), Harbin, China, 21–25 August 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 28–31.

- Deng, C.-X.; Wang, G.-B.; Yang, X.-R. Image edge detection algorithm based on improved canny operator. In Proceedings of the 2013 International Conference on Wavelet Analysis and Pattern Recognition, Tianjin, China, 14–17 July 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 168–172.

- Gao, W.; Zhang, X.; Yang, L.; Liu, H. An improved Sobel edge detection. In Proceedings of the 2010 3rd International Conference on Computer Science and Information Technology, Chengdu, China, 9–11 July 2010; IEEE: Piscataway, NJ, USA, 2010; Volume 5, pp. 67–71.

- Chen, G.; Jiang, Z.; Kamruzzaman, M. Radar remote sensing image retrieval algorithm based on improved Sobel operator. J. Vis. Commun. Image Represent. 2020, 71, 102720.

- Seale, A.; Christoffersen, P.; Mugford, R.I.; O’Leary, M. Ocean forcing of the Greenland Ice Sheet: Calving fronts and patterns of retreat identified by automatic satellite monitoring of eastern outlet glaciers. J. Geophys. Res. Earth Surf. 2011, 116.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Ambekar, S.; Tafuro, M.; Ankit, A.; der Mast, D.V.; Alence, M.; Athanasiadis, C. SKDCGN: Source-free Knowledge Distillation of Counterfactual Generative Networks Using cGANs. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 679–693.

- Li, Y.; Zhang, H.; Xue, X.; Jiang, Y.; Shen, Q. Deep learning for remote sensing image classification: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1264.

- Sun, S.; Lu, Z.; Liu, W.; Hu, W.; Li, R. Shipnet for semantic segmentation on vhr maritime imagery. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 23–27 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 6911–6914.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Xu, Y.; Du, J.; Dai, L.-R.; Lee, C.-H. A regression approach to speech enhancement based on deep neural networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 23, 7–19.

- Collobert, R.; Weston, J. A unified architecture for natural language processing: Deep neural networks with multitask learning. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 160–167.

- Liu, H.; Jezek, K. Automated extraction of coastline from satellite imagery by integrating Canny edge detection and locally adaptive thresholding methods. Int. J. Remote Sens. 2004, 25, 937–958.

- Leigh, S.; Wang, Z.; Clausi, D.A. Automated ice–water classification using dual polarization SAR satellite imagery. IEEE Trans. Geosci. Remote Sens. 2013, 52, 5529–5539.

- Kaushik, S.; Singh, T.; Joshi, P.; Dietz, A.J. Automated mapping of glacial lakes using multisource remote sensing data and deep convolutional neural network. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103085.

- Tuckett, P.A.; Ely, J.C.; Sole, A.J.; Lea, J.M.; Livingstone, S.J.; Jones, J.M.; van Wessem, J.M. Automated mapping of the seasonal evolution of surface meltwater and its links to climate on the Amery Ice Shelf, Antarctica. Cryosphere 2021, 15, 5785–5804.

- Mohajerani, Y.; Wood, M.; Velicogna, I.; Rignot, E. Detection of glacier calving margins with convolutional neural networks: A case study. Remote Sens. 2019, 11, 74.

- Zhang, E.; Liu, L.; Huang, L. Automatically delineating the calving front of Jakobshavn Isbræ from multitemporal TerraSAR-X images: A deep learning approach. Cryosphere 2019, 13, 1729–1741.

- Baumhoer, C.A.; Dietz, A.J.; Kneisel, C.; Kuenzer, C. Automated extraction of Antarctic glacier and ice shelf fronts from Sentinel-1 imagery using deep learning. Remote Sens. 2019, 11, 2529.

- Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

- Chen, B.; Liu, Y.; Zhang, Z.; Lu, G.; Kong, A.W.K. Transattunet: Multi-level attention-guided u-net with transformer for medical image segmentation. arXiv 2021, arXiv:2107.05274.

- Siddique, N.; Paheding, S.; Elkin, C.P.; Devabhaktuni, V. U-net and its variants for medical image segmentation: A review of theory and applications. IEEE Access 2021, 9, 82031–82057.

- Yin, X.-X.; Sun, L.; Fu, Y.; Lu, R.; Zhang, Y. U-Net-Based Medical Image Segmentation. J. Healthc. Eng. 2022, 2022, 4189781.

- Wei, X.; Li, X.; Liu, W.; Zhang, L.; Cheng, D.; Ji, H.; Zhang, W.; Yuan, K. Building outline extraction directly using the u2-net semantic segmentation model from high-resolution aerial images and a comparison study. Remote Sens. 2021, 13, 3187.

- Zou, Z.; Chen, C.; Liu, Z.; Zhang, Z.; Liang, J.; Chen, H.; Wang, L. Extraction of Aquaculture Ponds along Coastal Region Using U2-Net Deep Learning Model from Remote Sensing Images. Remote Sens. 2022, 14, 4001.

- Fretwell, P.; Pritchard, H.D.; Vaughan, D.G.; Bamber, J.L.; Barrand, N.E.; Bell, R.; Bianchi, C.; Bingham, R.G.; Blankenship, D.D.; Casassa, G.; et al. Bedmap2: Improved ice bed, surface and thickness datasets for Antarctica. Cryosphere 2013, 7, 375–393.

- Shugar, D.H.; Jacquemart, M.; Shean, D.; Bhushan, S.; Upadhyay, K.; Sattar, A.; Schwanghart, W.; McBride, S.; de Vries, M.V.; Mergili, M.; et al. A massive rock and ice avalanche caused the 2021 disaster at Chamoli, Indian Himalaya. Science 2021, 373, 300.

- Rignot, E.; Vaughan, D.G.; Schmeltz, M.; Dupont, T.; MacAyeal, D. Acceleration of Pine Island and Thwaites glaciers, West Antarctica. Ann. Glaciol. 2002, 34, 189–194.

- Favier, L.; Durand, G.; Cornford, S.L.; Gudmundsson, G.H.; Gagliardini, O.; Gillet-Chaulet, F.; Zwinger, T.; Payne, A.; Le Brocq, A.M. Retreat of Pine Island Glacier controlled by marine ice-sheet instability. Nat. Clim. Change 2014, 4, 117–121.

- Liu, S.; Su, S.; Cheng, Y.; Tong, X.; Li, R. Long-Term Monitoring and Change Analysis of Pine Island Ice Shelf Based on Multi-Source Satellite Observations during 1973–2020. J. Mar. Sci. Eng. 2022, 10, 976.

- Greenbaum, J.S.; Dow, C.; Pelle, T.; Morlighem, M.; Fricker, H.; Adusumilli, S.; Jenkins, A.; Rutishauser, A.; Blankenship, D.; Coleman, R. Antarctic grounding line retreat enhanced by subglacial freshwater discharge. In Proceedings of the Copernicus Meetings, Vienna, Austria, 22–27 May 2022.

- Greenbaum, J.; Blankenship, D.; Young, D.; Richter, T.; Roberts, J.; Aitken, A.; Legresy, B.; Schroeder, D.; Warner, R.; Van Ommen, T. Ocean access to a cavity beneath Totten Glacier in East Antarctica. Nat. Geosci. 2015, 8, 294–298.

- Hoffman, M.J.; Begeman, C.B.; Asay-Davis, X.S.; Comeau, D.; Barthel, A.; Price, S.F.; Wolfe, J.D. Ice-shelf freshwater triggers for the Filchner-Ronne Ice Shelf melt tipping point in a global ocean model. EGUsphere 2023, 2023, 1–29.

- Olinger, S.; Lipovsky, B.P.; Denolle, M.; Crowell, B.W. Tracking the Cracking: A Holistic Analysis of Rapid Ice Shelf Fracture Using Seismology, Geodesy, and Satellite Imagery on the Pine Island Glacier Ice Shelf, West Antarctica. Geophys. Res. Lett. 2022, 49, e2021GL097604.

- Christie, F.; Benham, T.; Batchelor, C.; Rack, W.; Montelli, A.; Dowdeswell, J. Antarctic Ice Front Positions, 1979–2021, Supporting Antarctic Ice-Shelf Advance Driven by Anomalous Atmospheric and Sea-Ice Circulation; Apollo-University of Cambridge Repository: Cambridge, UK, 2022.

- Song, X.Y.; Wang, Z.M.; Liang, J.C.; Zhang, B.J.; Du, Y.; Zeng, Z.L.; Liu, M.L. Automatic Extraction of the Basal Channel Based on Neural Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5013–5023.

- Han, H.; Kim, S.H.; Kim, S. Decadal changes of Campbell Glacier Tongue in East Antarctica from 2010 to 2020 and implications of ice pinning conditions analyzed by optical and SAR datasets. GIScience Remote Sens. 2022, 59, 705–721.

- Tomar, K.S.; Tomar, S.S.; Prasad, A.V.; Luis, A.J. Glacier Dynamics in East Antarctica: A Remote Sensing Perspective. Adv. Remote Sens. Technol. Three Poles 2022, 117–127.

- Dell, R.L.; Banwell, A.F.; Willis, I.C.; Arnold, N.S.; Halberstadt, A.R.W.; Chudley, T.R.; Pritchard, H.D. Supervised classification of slush and ponded water on Antarctic ice shelves using Landsat 8 imagery. J. Glaciol. 2022, 68, 401–414.

- Barsi, J.A.; Lee, K.; Kvaran, G.; Markham, B.L.; Pedelty, J.A. The Spectral Response of the Landsat-8 Operational Land Imager. Remote Sens. 2014, 6, 10232–10251.

- Li, Y.J.; Li, H.; Fan, D.Z.; Li, Z.X.; Ji, S. Improved Sea Ice Image Segmentation Using U-Net and Dataset Augmentation. Appl. Sci. 2023, 13, 9402.

- Perone, C.S.; Calabrese, E.; Cohen-Adad, J. Spinal cord gray matter segmentation using deep dilated convolutions. Sci. Rep. 2018, 8, 5966.

- Muhuri, A.; Gascoin, S.; Menzel, L.; Kostadinov, T.S.; Harpold, A.A.; Sanmiguel-Vallelado, A.; Lopez-Moreno, J.I. Performance Assessment of Optical Satellite-Based Operational Snow Cover Monitoring Algorithms in Forested Landscapes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7159–7178.

- Heidler, K.; Mou, L.C.; Baumhoer, C.; Dietz, A.; Zhu, X.X. HED-UNet: Combined Segmentation and Edge Detection for Monitoring the Antarctic Coastline. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4300514.

- Zhang, E.Z.; Liu, L.; Huang, L.C.; Ng, K.S. An automated, generalized, deep-learning-based method for delineating the calving fronts of Greenland glaciers from multi-sensor remote sensing imagery. Remote Sens. Environ. 2021, 254, 112265.

| Date | Path/Row | Series | Cloud Cover (%) |
|---|---|---|---|
| 20130215 | 185/116 | Landsat7 | 1.00 |
| 20140226 | 185/116 | Landsat8 | 0.00 |
| 20150301 | 185/116 | Landsat8 | 13.02 |
| 20160115 | 185/116 | Landsat8 | 3.67 |
| 20170218 | 185/116 | Landsat8 | 0.08 |
| 20180205 | 185/116 | Landsat8 | 5.80 |
| 20190312 | 185/116 | Landsat8 | 0.54 |
| 20200218 | 186/116 | Landsat8 | 0.04 |
| 20210128 | 185/116 | Landsat8 | 16.80 |
| 20220224 | 185/116 | Landsat8 | 0.14 |
| 20130109 | 102/107 | Landsat7 | 1.00 |
| 20140129 | 101/107 | Landsat8 | 7.40 |
| 20150321 | 101/107 | Landsat8 | 0.14 |
| 20160119 | 101/107 | Landsat8 | 1.73 |
| 20170222 | 101/107 | Landsat8 | 0.00 |
| 20180108 | 101/107 | Landsat8 | 0.00 |
| 20190228 | 101/107 | Landsat8 | 0.00 |
| 20200302 | 101/107 | Landsat8 | 11.64 |
| 20210217 | 101/107 | Landsat8 | 18.90 |
| 20220220 | 101/107 | Landsat8 | 0.00 |

| Index | FCN | U-Net | U2-Net |
|---|---|---|---|
| Learning rate | 0.001 | 0.001 | 0.001 |
| Epoch | 800 | 800 | 800 |
| Batch size | 8 | 8 | 4 |
| Optimizer | SGD (momentum = 0.7) | Adam (betas = (0.9, 0.999), eps = 1 × 10⁻⁸, weight decay = 0) | Adam (betas = (0.9, 0.999), eps = 1 × 10⁻⁸, weight decay = 0) |
| Loss function | Binary Cross Entropy Loss | Binary Cross Entropy Loss | Binary Cross Entropy Loss |

| Model | En_1 | En_2 | En_3 | En_4 | En_5 | En_6 | De_5 | De_4 | De_3 | De_2 | De_1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| U2-Net | I:3 | I:64 | I:128 | I:256 | I:512 | I:512 | I:1024 | I:1024 | I:512 | I:256 | I:128 |
| | M:32 | M:32 | M:64 | M:128 | M:256 | M:256 | M:256 | M:128 | M:64 | M:32 | M:16 |
| | O:64 | O:128 | O:256 | O:512 | O:512 | O:512 | O:512 | O:256 | O:128 | O:64 | O:64 |
| U-Net | I:3 | I:64 | I:128 | I:256 | I:512 | I:512 | I:1024 | I:1024 | I:512 | I:256 | I:128 |
| | M:64 | M:128 | M:256 | M:512 | M:512 | M:512 | M:512 | M:256 | M:128 | M:64 | M:64 |
| | O:64 | O:128 | O:256 | O:512 | O:512 | O:512 | O:512 | O:256 | O:128 | O:64 | O:64 |
| FCN | I:3 | I:64 | I:128 | I:256 | I:512 | I:512 | | | | | |
| | O:64 | O:128 | O:256 | O:512 | O:512 | O:256 | | | | | |

| Model | FCN | U-Net | U2-Net |
|---|---|---|---|
| Precision (%) | 69.04 | 66.97 | 99.43 |
| Recall (%) | 55.17 | 59.47 | 94.54 |
| F1 (%) | 61.33 | 63.00 | 96.93 |
| IOU (%) | 44.23 | 45.98 | 94.04 |
| ACmod | 54.72 | 54.53 | 96.10 |
| k | 8.32 | 4.58 | 91.59 |
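The F1 and IOU rows in the table above can be cross-checked against the precision and recall rows. The sketch below assumes that all four metrics refer to the same class, in which case F1 = 2PR / (P + R) and IoU = F1 / (2 − F1). The function names are illustrative, and the only inputs are the values reported in the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    return 2 * precision * recall / (precision + recall)


def iou_from_f1(f1):
    """IoU implied by F1 for a single class: IoU = F1 / (2 - F1)."""
    return f1 / (2 - f1)


# Precision, recall, F1 and IOU (%) as reported in the table above.
reported = {
    "FCN": (69.04, 55.17, 61.33, 44.23),
    "U-Net": (66.97, 59.47, 63.00, 45.98),
    "U2-Net": (99.43, 94.54, 96.93, 94.04),
}

recomputed = {}
for model, (p, r, f1_rep, iou_rep) in reported.items():
    f1 = f1_score(p / 100, r / 100)
    recomputed[model] = (100 * f1, 100 * iou_from_f1(f1))
    f1_new, iou_new = recomputed[model]
    print(f"{model}: F1 {f1_new:.2f} (reported {f1_rep:.2f}), "
          f"IoU {iou_new:.2f} (reported {iou_rep:.2f})")
```

Under this assumption, the recomputed values agree with the reported ones to within the rounding of the two-decimal inputs.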

| Author | Year | Method | Accuracy | Data |
|---|---|---|---|---|
| Celia A. Baumhoer [34] | 2019 | Modified U-Net | 108 m | Sentinel-1 |
| Yara Mohajerani [32] | 2019 | Modified U-Net | 92.5 m | Landsat 5; Landsat 7; Landsat 8 |
| Enze Zhang [60] | 2021 | Combination of histogram normalization and DRN-DeepLabv3+ | 86 m | Landsat-8; Sentinel-2; Envisat; ALOS-1; TerraSAR-X; Sentinel-1; ALOS-2 |
| Konrad Heidler [59] | 2022 | HED-UNet | 80.5 m | Sentinel-1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, X.; Du, Y.; Guo, J. Automatic Extraction of the Calving Front of Pine Island Glacier Based on Neural Network. Remote Sens. 2023, 15, 5168. https://doi.org/10.3390/rs15215168
