Abstract

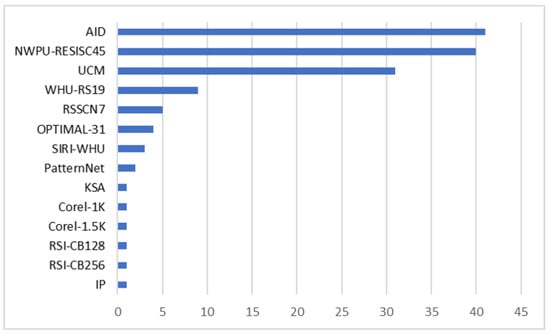

Remote sensing image scene classification with deep learning (DL) is a rapidly growing field that has gained significant attention in the past few years. While previous review papers in this domain have been confined to 2020, an up-to-date review to show the progression of research extending into the present phase is lacking. In this review, we explore the recent articles, providing a thorough classification of approaches into three main categories: Convolutional Neural Network (CNN)-based, Vision Transformer (ViT)-based, and Generative Adversarial Network (GAN)-based architectures. Notably, within the CNN-based category, we further refine the classification based on specific methodologies and techniques employed. In addition, a novel and rigorous meta-analysis is performed to synthesize and analyze the findings from 50 peer-reviewed journal articles to provide valuable insights in this domain, surpassing the scope of existing review articles. Our meta-analysis shows that the most adopted remote sensing scene datasets are AID (41 articles) and NWPU-RESISC45 (40). A notable paradigm shift is seen towards the use of transformer-based models (6) starting from 2021. Furthermore, we critically discuss the findings from the review and meta-analysis, identifying challenges and future opportunities for improvement in this domain. Our up-to-date study serves as an invaluable resource for researchers seeking to contribute to this growing area of research.

1. Introduction

The advancement in Earth observation technology has led to the availability of very high-resolution (VHR) images of the Earth’s surface. With the development of VHR images, it is possible to accurately identify and classify land use and land cover (LULC) [1], and the demands for such tasks are high. Scene classification in remote sensing images aims to categorize image scenes automatically into relevant classes like residential areas, cultivation land, forests, etc. [2], drawing considerable attention. In recent years, the application of scene classification in VHR satellite images is evident in disaster detection [3], land use [4,5,6,7,8,9], and urban planning [10]. The implementation of deep learning (DL) for scene classification is an emerging tendency in a current scenario, with an effort to achieve maximum accuracy. A review and meta-analysis of these defacto methods is valuable to the research community, but is either lacking or not up-to-date. To this end, we explore DL methods on satellite images for scene classification.

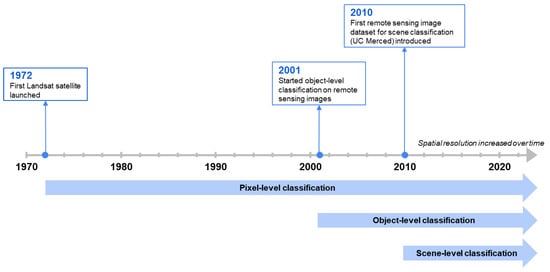

In the early days of remote sensing, the spatial resolution of images was relatively low, resulting in the pixel size equivalent to the object of interest [11]. As a consequence, the studies on remote sensing classification were based on pixel-level [11,12,13]. Subsequently, the increment in spatial resolution reoriented the research to remote sensing classification on the object-level, which produced more enhanced classification than per-pixel analysis [14]. This approach dominated the remote sensing classification domain for decades, including [15,16,17,18,19,20]. However, the perpetual growth of remote sensing images facilitates capturing distinct object classes, rendering traditional pixel-level and object-level methods inadequate for accurate image classification. In such a scenario, scene-level classification became crucial to interpret the global contents of remote sensing images [21]. Thus, numerous experiments can be observed in scene-label analysis over the past few years [22,23,24,25,26]. Figure 1 illustrates the progression of classification approaches from pixel-level to object-level and finally to scene-level.

Figure 1.

Timeline of remote sensing classification approaches. The spatial resolution of images increased over time, resulting in three classification levels: pixel-level, object-level, and scene-level classification.

The preliminary methods for remote sensing scene classification were predominantly relying on low-level features such as texture, color, gradient, and shape. Hand-crafted features like Scale-Invariant Feature Transform (SIFT), Gabor filters, local binary pattern (LBP), color histogram (CH), gray level co-occurrence matrix (GLCM), and histogram of oriented gradients (HOG) were designed to capture specific patterns or information from the low-level features [7,27,28,29,30]. These features are crafted by domain experts and are not learned automatically from data. The methods utilized on low-level features only rely on uniform texture and can not perform on complex scenes. On the other hand, methods on mid-level features extract more complex patterns with clustering, grouping, or segmentation [31,32]. The idea is to acquire local attributes from small regions or patches within an image and encode these local attributes to retrieve the intricate and detailed pattern [33]. The bag-of-visual-words (BoVW) model is a widely used mid-level approach for scene classification in the remote sensing field [34,35,36]. However, the limited representation capability of mid-level approaches has hindered breakthroughs in remote sensing image scene classification.

In recent times, DL models emerged as a solution to address the aforementioned limitations in low-level and mid-level methods. DL architectures implement a range of techniques, including Convolutional Neural Networks (CNNs) [37,38], Vision Transformers (ViT), and Generative Adversarial Networks (GANs), to learn discriminative features for effective feature extraction. For scene classification, the available datasets are grouped into diverse scenes. The DL architectures are either trained on these datasets to obtain the predicted scene class [39], or pretrained DL models are used to obtain derived classes from the same scene classes [40,41], depending upon the application. In the context of remote sensing scene classification, the experiments are mainly focused on achieving optimal scene prediction by implementing DL architectures. CNN architectures like Residual Network (ResNet) [42], AlexNet [43], GoogleNet [44], etc., are commonly used for remote sensing scene classification. Operations like fine-tuning [45], adding low-level and mid-level feature descriptors like LBP [46], BovW [47], etc., and developing novel architectures [33,48] are performed to obtain nearly ideal scene classification results. Furthermore, ViTs and GANs are also used to advance research and applications in this field.

In this paper, a meta-analysis is performed from peer-reviewed research articles that utilized DL-based methods for remote sensing image scene classification. To the best of our knowledge, this meta-analysis is the first of its kind to focus on DL methods in this domain. There are few review papers that evaluate datasets and DL methods [21,49] or include them as a part of a review article [50]. However, these studies provide an overview of experiments performed on DL-based remote sensing scene classification limited to 2020. Therefore, we review this domain as a significant amount of improvement is expected, along with meta-analysis as a novel approach.

The necessity of this research is emphasized by its significance in the domain of remote sensing scene classification using DL. Within this study, we address various insights extracted from this research, including the role of a diverse array of datasets, the notable disparity in accuracy between self-supervised and supervised methods, and the dominance of pretrained CNNs. However, the most crucial revelation is the identification of paradigm shifts that hold transformative implications in this field. This study not only contributes to the current understanding of remote sensing scene classification, but also paves the way for future advancements. These encompass the development of expansive annotated datasets, the further exploration of local–global feature representations within images, the refinement of self-supervised learning techniques to compete with supervised learning, and the pursuit of innovative approaches.

The major contributions of this article are listed below:

- We present an up-to-date review offering insights into remote sensing scene datasets and DL feature extraction methods, encompassing CNN-based, ViT-based and GAN-based approaches, through a comprehensive examination of relevant studies.

- We conduct a novel meta-analysis of 50 peer-reviewed articles in the DL-driven remote sensing scene classification domain. We pinpoint the critical problems and recognize emerging trends and paradigm shifts in this field.

- We identify and discuss key challenges in this domain while also providing valuable insights into future research directions.

2. Understanding Remote Sensing Image Scene and Deep Learning Feature Extraction

2.1. High-Resolution Scene Classification Datasets

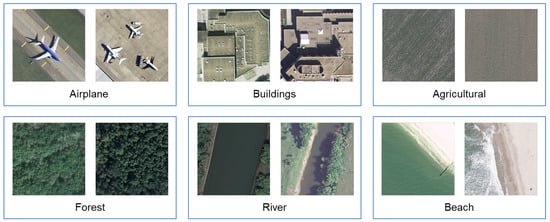

Multiple VHR remote sensing datasets are available for scene classification. The UC Merced Land Use Dataset (UC-Merced or UCM) [51] is a popular dataset obtained from the United States Geological Survey (USGS) National Map Urban Area Imagery collection covering US regions. Some of the classes belonging to this dataset are airplanes, buildings, forests, rivers, agriculture, beach, etc., depicted in Figure 2. The Aerial Image Dataset (AID) [23] is another dataset acquired from Google Earth Imagery with a higher number of images and classes than the UCM dataset. Table 1 lists some widely used datasets for scene recognition in the remote sensing domain. Some of the scene classes are common in multiple datasets. For instance, the “forest” class is included in UCM, WHU-RS19 [52], RSSCN7 [53], and NWPU-RESISC45 [49] datasets. However, there are variations in scene classes among different datasets. In addition, the number of images and their size and resolution of scene datasets varies in respective datasets. Thus, the selection of the dataset depends on the research objectives. Cheng et al. [49] proposed a novel large-scale dataset NWPU-RESISC45 with rich image variations and high within-class diversity and between-class similarity, addressing the problem of small-scale datasets, lack of variations, and diversity. Miao et al. [54] merged UCM, NWPU-RESISC45, and AID datasets to prepare a larger remote-sensing scene dataset for semi-supervised scene classification and attained a similar performance to the state-of-the-art methods. In these VHR datasets, multiple DL architectures have been conducted to obtain optimal accuracy.

Figure 2.

Sample images of some classes from the UCM dataset. In total, there are 21 classes in this dataset.

Table 1.

Scene databases used for scene classification.

2.2. CNN-Based Scene Classification Methods

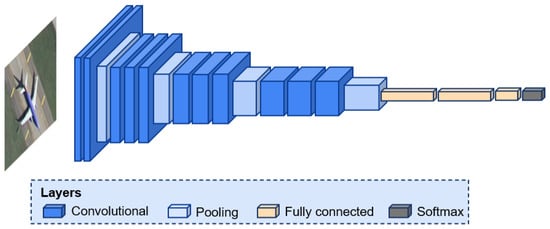

CNNs [60] effectively extract meaningful features from images by utilizing convolutional kernels and hierarchical layers. A typical CNN architecture includes a convolutional layer, pooling layer, Rectified Linear Unit (ReLU) activation layer, and fully connected (FC) layer [61] as shown in Figure 3. The mathematical formula for the output of each filter in a 2D convolutional layer is provided in Equation (1).

where represents the activation function, is the weight associated with the connection between input feature map m and output feature map n, and denotes the bias term associated with output feature map n. The convolution layers learn features from input images, followed by the pooling layer, which reduces computational complexity while retaining multi-scale information. The ReLU activation function introduces non-linearity to the network, allowing for the modeling of complex relationships and enhancing the network’s ability to learn discriminative features. The successive use of convolutional and pooling layers allows the network to learn increasingly complex and abstract features at different scales. The first few initial layers capture low-level features such as edges and textures, while deeper layers learn high-level features and global structures [62]. The FC layers serve as a means of mapping the learned features to the final output space, enabling the network to make predictions based on the extracted information. In a basic CNN structure, the output of an FC layer is fed into either a softmax or a sigmoid activation function for classification tasks. However, the majority of parameters are occupied by an FC layer, increasing the possibility of overfitting. Dropout is implemented to counter this problem [63]. To minimize loss and improve the model’s performance, several optimizers like Adam [64] and stochastic gradient descent (SGD) [65] are prevalent in research.

Figure 3.

A typical CNN architecture. Downsampling operations such as pooling and convolution layers allow the capture of multi-scale information from the input image, finally classifying them with an FC layer.

Multiple approaches have been explored to optimize the feature extraction process for accurate remote sensing scene classification using CNNs. They are sub-divided into two categories: pretrained CNNs and CNNs trained from scratch.

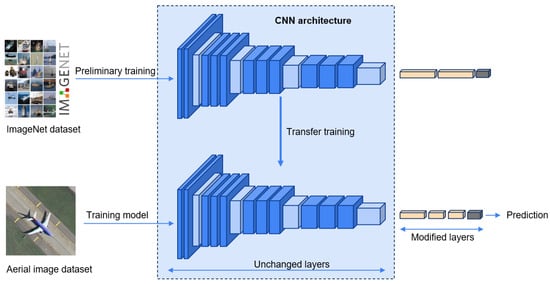

2.2.1. Pretrained CNNs for Feature Extraction

Collecting and annotating data for larger remote sensing scene datasets increases costs and is a laborious task. To address the scarcity of data in the remote sensing domain, researchers often utilize terrestrial image datasets such as ImageNet [66] and PlacesCNN [67], which contain a large number of diverse images from various categories. Wang et al. [68] described the local similarity between remote sensing scene image and natural image scenes. By leveraging pretrained models trained on these datasets, the CNN algorithms can benefit from the learned features and generalize well to remote sensing tasks with limited labeled data. This process is illustrated in Figure 4, showcasing the role of pretrained CNNs.

Figure 4.

Pretrained CNN utilized for feature extraction from the aerial image dataset. The weight parameters of CNN pretrained on the ImageNet dataset are transferred to the new CNN. Top layers are replaced with a custom layer configuration fitting to the target concept.

In 2015, Penatti et al. [30] introduced pretrained CNN on ImageNet into remote sensing scene classification, discovering better classification results for the UCM dataset than low-level descriptors. In a diverse large-scale dataset named NWPU-RESISC45, three popular pretrained CNNs: AlexNet [63], VGG-16 [69] and GoogLeNet [70], improved the performance by 30% minimum compared to handcrafted and unsupervised feature learning methods [49]. NWPU-RESISC45 dataset is ambiguous due to high intra-class diversity and inter-class similarity. Sen et al. [71] adopted a hierarchical approach to mitigate the misclassification. Their method is divided into two levels: (i) all 45 classes are rearranged into 5 main classes (Transportation, Water Areas, Buildings, Constructed Lands, Natural Lands), and (ii) the 45 sub-levels are trained in each class. DenseNet-121 [72] pretrained on ImageNet is used as a feature extractor in both levels. Al et al. [73] combined four scene datasets, namely UCM, AID, NPWU, and PatternNet, to construct a heterogeneous scene dataset. For suitability, the 12 shared classes are filtered to utilize an MB-Net architecture, which is based on pretrained ResNet-50 [74]. MB-Net is designed to capture collective knowledge from three labeled source datasets and perform scene classification on a remaining unlabeled target dataset. Shawky et al. [75] brought a data augmentation strategy for CNN-MLP architecture with Xception [76] as a feature extractor. Sun et al. [77] obtained multi-scale ground objects using multi-level convolutional pyramid semantic fusion (MCPSF) architecture and differentiated intricate scenes consisting of diverse ground objects.

Yu et al. [78] introduced a feature fusion strategy in which CNNs are utilized to extract features from both the original image and a processed image obtained through saliency detection. The extracted features from these two sources are then fused together to produce more discriminative features. Ye et al. [79] proposed parallel multi-stage (PMS) architecture based on the GoogleNet backbone to learn features individually from three hierarchical levels: low-, middle-, and high-level, prior to fusion. Dong et al. [80] integrated a Deep Convolutional Neural Network (DCNN) with Broad Learning System (BLS) [81] for the first time in the remote sensing scene classification domain to extract shallow features and named it FDPResNet. The DCNN implemented ResNet-101 pretrained on ImageNet as a backbone on both shallow and deep features and further fused and passed to the BLS system for classification. CNN architectures for remote sensing scene classification vary in design with the incorporation of additional techniques and methodologies. However, the widely employed approaches include LBP-based, fine-tuning, parameter reduction, and attention mechanism methods.

LBP-based pretrained CNNs: LBP is a widely used robust low-level descriptor for recognizing textures [82]. In 2018, Anwer et al. [46] proposed Tex-Net architecture, which combined an original RGB image with a texture-coded mapped LBP image. The late fusion strategy (Tex-Net-LF) performed better than early fusion (Tex-Net-EF). Later, Yu et al. [83], who previously introduced the two-stream deep fusion framework [78], adopted the same concept to integrate the LBP-coded image as a replacement for the processed image obtained through saliency detection. However, they conducted a combination of previously proposed and new experiments using the LBP-coded mapped image and fused the features together. Huang et al. [84] stated that two-stream architectures solely focus on RGB image stream and overlook texture-containing images. Therefore, CTFCNN architecture based on pretrained CaffeNet [85] extracted three kinds of features: (i) convolutional features from multiple layers, wherein each layer improved bag-of-visual words (iBoVW) method represented discriminating information, (ii) FC features, and (iii) LBP-based FC features. Compared to traditional BoVW [35], the iBoVW coding method achieved rational representation.

Fine-tuned pretrained CNNs: Cheng et al. [49] not only used pretrained CNNs for feature extraction from the NWPU-RESISC45 dataset, they further fine-tuned the increasing learning rate in the last layer to gain better classification results. For the same dataset, Yang et al. [86] fine-tuned parameters utilized on three CNN models: VGG-16 and DenseNet-161 pretrained on ImageNet used as deep-learning classifier training, and feature pyramid network (FPN) [87] pretrained on Microsoft Coco (Common Objects in Context) [88] for deep-learning detector training. The combination of DenseNet+FPN exhibited exceptional performance. Zhang et al. [33] used the hit and trial technique to set the hyperparameters to achieve better accuracy. Petrovska et al. [89] implemented linear learning rate decay, which decreases the learning rate over time, and cyclical learning rates [90]. The improved accuracy utilizing fine-tuning on pretrained CNNs validates the statement made by Castelluccio et al. [91] in 2015.

Parameters reduction: CNN architectures exhibit a substantial amount of parameters, such as VGG-16, which comprises approximately 138 million parameters [92]. The large number of parameters is one of the factors for over-fitting [93,94]. Zhang et al. [95] utilized DenseNet, which is known for its parameter efficiency, with around 7 million parameters. Yu et al. [96] integrated light-weighted CNN MobileNet-v2 [97] with feature fusion bilinear model [98] and termed the architecture as BiMobileNet. BiMobileNet featured a parameter count of 0.86 million, which is six, eleven, and eighty-five times lower than the parameter numbers reported in [83], [45] and [99], respectively, while achieving better accuracy.

Attention mechanism: In the process of extracting features from entire images, it is essential to consider that images contain various objects and features. Therefore, selectively focusing on critical parts and disregarding irrelevant ones becomes crucial. Zhao et al. [100] added a channel-spatial attention [101] module (CBAM) following each residual dense block (RDB) based on DenseNet-101 backbone pretrained on ImageNet. CBAM helps to learn meaningful features in both channel and spatial dimensions [102]. Ji et al. [103] proposed an attention network based on the VGG-VD16 network that localizes discriminative areas in three different scales. The multiscale images are fed to sub-network CNN architectures and further fused for classification. Zhang et al. [104] introduced a multiscale attention network (MSA-Network), where the backbone is ResNet. After each residual block, the multiscale module is integrated to extract multiscale features. The channel and position attention (CPA) module is added after the last multiscale module to extract discriminative regions. Shen et al. [105] incorporated two models, namely ResNet-50 and DenseNet-121, to fulfill the insufficiency of single CNN models. Both models captured low, middle, and high-level features and combined them with a grouping-attention-fusion strategy. Guo et al. [106] proposed a multi-view feature learning network (MVFL) divided into three branches: (i) channel-spatial attention to localize discriminative areas, (ii) triplet metric branch, and (iii) center metric branch to increase interclass distance and decrease intraclass distance. Zhao et al. [107] designed an enhanced attention module (EAM) to enhance the ability to understand more discriminative features. In EAM, two depthwise dilated convolution branches are utilized, each branch having different dilated rates. Dilated convolutions enhance the receptive fields without escalating the parameter count. They effectively capture multiscale contextual information and improve the network’s capacity to learn features. The two branches are merged with depthwise convolutions to decrease the dimensionality. Hu et al. [108] introduced a multilevel inheritance network (MINet), where FPN based on ResNet-50 is adopted to acquire multilayer features. Subsequently, an attention mechanism is employed to augment the expressive capacity of features at each level. For the fusion of features, the feature weights across different levels are computed by leveraging the SENet [109] approach.

2.2.2. CNNs Trained from Scratch

Pretrained CNNs are typically trained on large-scale datasets such as ImageNet, which are not adaptable to the specific characteristics of the target dataset. Modifying pretrained CNNs is inconvenient due to the complexity and compatibility of CNNs. Although pretrained CNNs attained outstanding classification results, Zhang et al. [110] addressed complexity in pretrained CNNs due to the extensive parameter size and implemented a light-weighted CNN MobileNet-v2 with dilated convolution and channel attention. He et al. [111] introduced a skip-connected covariance (SCCov) network, where a skip connection is added along with covariance pooling. The SCCov architecture reduced the parameter number with better scene classification. Zhang et al. [112] proposed a gradient-boosting random convolutional network to assemble various non-pretrained deep neural networks (DNNs). A simplified representation of CNN architectures trained from scratch is illustrated in Figure 3. This CNN is solely based on a dataset to be trained without the involvement of pretrained CNN on a specific dataset.

2.3. Vision Transformer-Based Scene Classification Methods

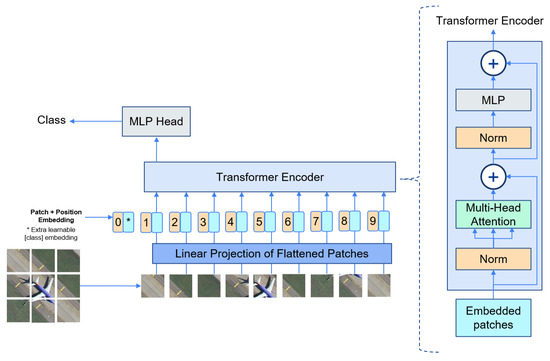

The ViT [113] model can perform image feature extraction without relying on convolutional layers. This model utilizes a transformer architecture [114], initially introduced for natural language processing. In ViT, an input image undergoes partitioning into fixed-size patches, and each patch is then transformed into a continuous vector through a process known as linear embedding. Moreover, position embeddings are added to the patch embeddings to retain positional information. Subsequently, a set of sequential patches (Equation (2)) are fed into the transformer encoder, which consists of alternating layers of Multi-head Self-Attention (MSA) [114] (Equation (3)) and multi-layer perceptron (MLP) (Equation (4)). Layernorm (LN) [115] is implemented prior to both MSA and MLP to reduce training time and stabilize the training process. Residual connections are applied after every layer to improve the performance. The MLP has two layers with a Gaussian Error Linear Unit (GELU) [116] activation function. In the final layer of the encoder, the first element of the sequence is passed to an external head classifier for prediction (Equation (5)). Figure 5 illustrates the ViT architecture for remote sensing scene classification.

where represents the embedding for the class token. denotes the embeddings of different patches flattened from the original images, concatenated with . is a matrix representing patch embeddings where P is patch size, C is the number of channels, and D is the embedding dimension. Positional embedding is added to patches, accounting for positions (including class token), each in a D-dimensional space.

where is the output of the MSA layer, applied after LN to the -th layer’s output (i.e., ), incorporating a residual connection. L represents the total number of layers in the transformer.

where is the output of the MLP layer, applied after LN to the from the -th layer, incorporating a residual connection. L represents the total number of layers in the transformer.

Figure 5.

A ViT architecture. A remote sensing scene image is partitioned into fixed-size patches, each of them linearly embedded, and positional embeddings are added. The resulting sequence of vectors are fed to a transformer encoder for classification.

ViT performs exceptionally well in capturing contextual features. Bazi et al. [117] introduced ViT for remote sensing scene classification and obtained a promising result compared to state-of-the-art CNN-based scene classification methods. Their method involves data augmentation strategies to improve classification accuracy. Furthermore, the network is compressed by pruning to reduce the model size. Bashmal et al. [118] utilized a data-efficient image transformer (DeiT), which is trained by knowledge distillation with a smaller dataset and showed potential results. Bi et al. [119] used the combination of ViT and supervised contrastive learning (CL) [120], named ViT-CL, to increase the robustness of the model by learning more discriminative features. ViT performs exceptionally well in capturing contextual features. However, they face limitations in learning local information. Moreover, their computational complexity is significantly high [121]. Peng et al. [122] addressed the challenge and introduced a local–global interactive ViT (LG-ViT). In LG-ViT architecture, images are partitioned to learn features in two different scales: small and large. ViT blocks learn from both scales to handle the problem of scale variation. In addition, a global-view network is implemented to learn global features from a whole image. The features obtained from the global-view network are embedded with local representation branches, which enhance local–global feature interaction.

CNNs excel at preserving local information but lack the ability to comprehensively capture global contextual features. ViTs are well-suited to learn long-range contextual relations. The hybrid approach of using CNNs and transformers leverages the strengths of both architectures to improve classification performance. Xu et al. [121] integrated ViT and CNN to harness the strength of CNN. In their study, ViT is used to extract rich contextual information, which is transferred to the ResNet-18 architecture. Tang et al. [123] proposed an efficient multiscale transformer and cross-level attention learning (EMTCAL), which also combines CNN with a transformer to extract maximum information. They employed ResNet-34 as a feature extractor in the CNN model. Zhang et al. [124] proposed a remote sensing transformer (TRS) to capture the global context of the image. TRS combines self-attention with ResNet through the Multi-Head Self-Attention layer (MHSA), replacing the conventional 3 × 3 spatial convolutions in the bottleneck. Additionally, the approach incorporates multiple pure transformer encoders, leveraging attention mechanisms to enhance the learning of representations. Wang et al. [125] utilized pretrained Swin Transformer [126] to capture features at multilayer followed by patch merging to concatenate the patches (except in the last block), with these two elements forming the Swin Transformer Block (STB). The multilevel features obtained from STB are eventually merged with the inspired technique [87], then further compressed using convolutions within the adaptive feature compression module because of redundancy in multiple features. Guo et al. [127] integrated Channel-Spatial Attention (CSA) into the ViT [113] and termed the architecture Channel-Spatial Attention Transformers (CSAT). The combined CSAT model accurately acquires and preserves both local and global knowledge.

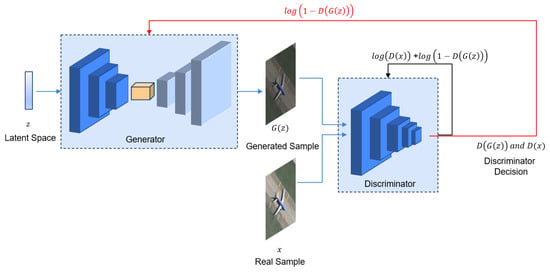

2.4. GAN-Based Scene Classification Methods

Supervised learning methods effectively perform remote sensing scene classification. However, due to limited labeled scene images, Miao et al. merged UCM, NWPU-RESISC45, and AID datasets to create larger remote-sensing scene datasets [54]. Annotating samples manually for labeling scenes is laborious and expensive. GAN [128] can extract meaningful information using unlabeled data. GAN is centered around two models: the generator and the discriminator, illustrated in Figure 6. The generator is trained to create synthetic data that resemble real data, aiming to deceive the discriminator. On the other hand, the discriminator is trained to distinguish between the generated (fake) data and the real data [129]. The overall objective of GAN is to achieve a competitive interplay between these two models, driving the generator to produce increasingly realistic data samples while the discriminator becomes better at detecting the generated data. In Equation (6), the value function describes the training process of GAN as a minimax game.

where the input from the latent space z is provided to the generator G to generate synthetic image G(z). G(z) is further fed to the discriminator D, alongside the real image x. The discriminator predicts both samples as synthetic (0) or real (1) based on its judgment. This process optimizes G and D through a dynamic interplay. G trains to minimize , driving it to create synthetic images that resemble real ones. Simultaneously, D trains to maximize + , refining its ability to distinguish real from synthetic samples.

Figure 6.

A GAN architecture consisting of generator G and discriminator D. G generates synthetic image G(z) from the latent space z. G(z) and real image x are then fed into the D, which is responsible for distinguishing between G(z) and x.

Lin et al. [130] acknowledged the unavailability of sufficient labeled data for remote sensing scene classification, which led them to introduce multiple-layer feature-matching generative adversarial networks (MARTA GANs). MARTA GANs fused mid-level features with global features for learning better representations by a descriptor. Xu et al. [131] replaced ReLU with scaled exponential linear units (SELU) [132] activation, enhancing GAN’s ability to produce high-quality images. Ma et al. [133] addressed that samples generated by GAN are solely used for self-training and introduced a new approach, SiftingGAN, to generate a significant number of authentic labeled samples. Wei et al. [134] introduced multilayer feature fusion Wasserstein GAN (MF-WGANs), where the multi-feature fusion layer is subsequent to the discriminator to learn mid-level and high-level feature information.

In unsupervised learning methods, labeled image scenes remain unexplored. However, leveraging annotations can significantly enhance classification capabilities. Therefore, Yan et al. [135] incorporated semi-supervised learning into GAN, aiming to exploit the benefits of labeled images and enhance classification performance. Miao et al. [54] introduced a semi-supervised representation consistency Siamese network (SS-RCSN), which incorporates Involution-GAN for unsupervised feature learning and a Siamese network to measure the similarity between labeled and unlabeled data in a high-dimensional space. Additionally, representation consistency loss in the Siamese network aids in minimizing the disparities between labeled and unlabeled data.

3. Meta-Analysis

In this section, a meta-analysis is conducted to synthesize and analyze the findings from multiple studies in the domain of DL for remote sensing scene classification. We begin by extracting relevant papers from a collection of peer-reviewed articles in the Scopus database, followed by a meticulous filtering process. Within the selected articles, the meta-analysis encompasses various aspects, including (i) addressing the research problems and utilizing corresponding research techniques, (ii) examining data usage frequency, (iii) exploring data preparation and augmentation methods, (iv) collecting information on the architectures, backbones, frameworks, optimizers, and classifiers involved during training, and (v) comparing architectures used in these articles. From this meta-analysis, we aim to provide valuable insights into the advancements and trends in DL methods for remote sensing scene classification.

3.1. Data Extraction

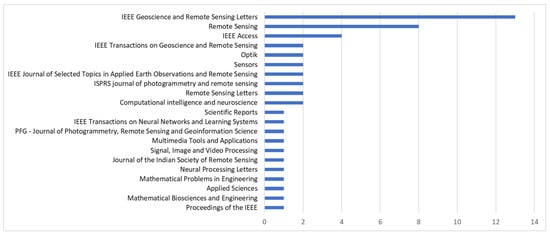

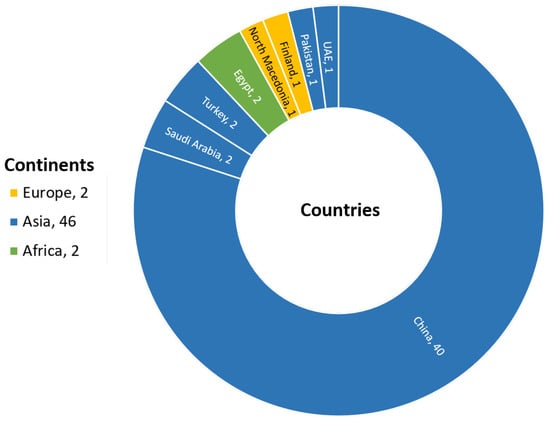

Firstly, articles regarding remote sensing scene classification were identified with a search conducted in the Scopus database. The search query involved article title/keywords/abstracts with terms: [“scene classification”] AND [“aerial image dataset” OR “nwpu-resisc45” OR “whu-rs19” OR “uc merced land used dataset”] AND [[“remote sensing”] OR [“satellite image”]] (search date: 2 May 2023). While using [“scene classification”] AND [[“remote sensing”] OR [“satellite image”]] for the query, Scopus resulted in an irrelevant list of articles without scene-labeled datasets. In addition, adding [“aerial image”] to the following query, [“aerial image”] only referred to the AID dataset in many cases, which restricts the research paper to AID dataset-related papers. Thus, specific datasets were mentioned as they are commonly used, and there is a high probability of utilizing at least one of them in research articles. The articles were limited to journal papers and review papers to exclude conference papers, resulting in 148 peer-reviewed articles. However, our bibliographical analysis is mainly focused on DL-implemented methods, comprehensive utilization of the entire dataset, free from language barriers, and accessible journal papers (including foreign access restriction). As a result, 50 articles were selected for meta-analysis, which attempted to fulfill the interpretation of DL for the remote sensing scene classification context. Figure 7 illustrates the respective journal names along with their counts for the filtered peer-reviewed articles. In Figure 8, a visualization is presented, showcasing the countries and continents associated with the affiliations of the first authors.

Figure 7.

Identified journals with the number of articles. IEEE Geoscience and Remote Sensing Letters has published the highest number of articles from selected reviewed papers for our meta-analysis.

Figure 8.

Countries and continents of first author’s affiliation.

3.2. Research Problem and Utilized Research Techniques

The filtered 50 articles target specific research problems, as depicted in Table 2, with a shared objective of enhancing remote sensing scene classification accuracy. Among the articles, 11 specifically focused on capturing more discriminative regions through the fusion of processed images in [75,78,83], multilayer fusion in [79,80,125,136,137], FC replaced by CapsNet [138] in [33,139], and pairwise comparison in [140]. To focus on key regions, attention mechanism is introduced in [100,103,104,106,107], while the use of classifier-detector is introduced in [86] and multiple instance learning (MIL) [141] in [142]. Additionally, learning local and global information in conjunction is primarily based on transformer-based networks [122,124,127,143,144], while the utilization of the dual-branch network is explored in [145]. Meanwhile, lightweight CNNs are used in [10,96] and DenseNet in [95]. For reducing the effect of high intra-class diversity and high inter-class similarity in the dataset, metric branches [106] and re-organized classes in a two-layer hierarchy [71] are implemented. The utilized techniques on respective research problems are shown in the same Table 2. It is also noticed that [83,106] aims to solve multiple research problems.

Table 2.

Research problems and implemented approaches to improve remote sensing scene classification.

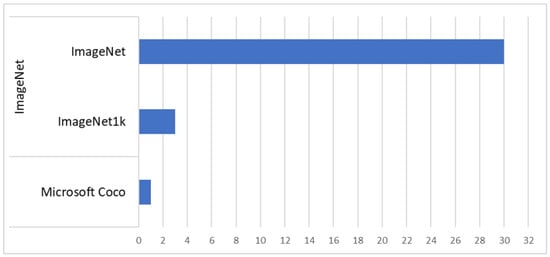

3.3. Dataset Usage Frequency

In common, multiple datasets are used within the same research papers across a set of 50 research articles. Figure 9 illustrates the dataset number used in these papers. The analysis reveals that the AID dataset is the most frequently used, with a count of 41, followed closely by NWPU-RESISC45, with a count of 40. Indian Pines [160] is the only dataset containing hyperspectral images. UCM [51], AID [23], WHU-RS19 [52], NWPU-RESISC45 [49], RSSCN7 [53], PatternNet [55], OPTIMAL-31 [56], KSA [59], SIRI-WHU [57] and RSI-CB [58] contains orginal RGB labeled images. Meanwhile, Corel-1K and Corel-1.5K [161] are terrestrial image datasets used for analysis along with other remote sensing scene datasets in [148]. The links to these remote sensing scene datasets are provided in Appendix A. For pretrained models, the backbones are pretrained on existing datasets. This means that the initial training of the model is performed on large-scale datasets such as ImageNet (33 articles) and COCO (1 article), as shown in Figure 10. The datasets utilized for each study are documented in Appendix B.

Figure 9.

Number count of implemented datasets.

Figure 10.

Number count of datasets used for pretrained backbones. ImageNet1k is a subset of the larger ImageNet dataset consisting of 1000 categories.

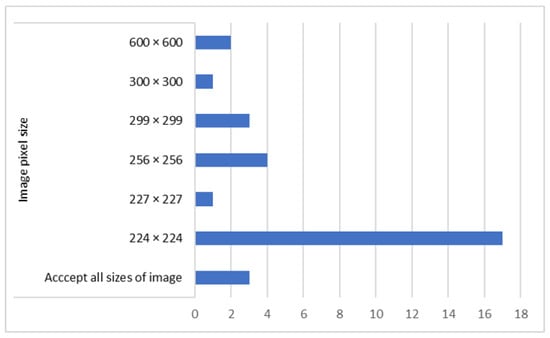

3.4. Data Preparation and Augmentation

Data preparation involves resizing the images to match the required input size. Among the surveyed articles, the majority (17 articles) employ an image size of 224 × 224 pixels as the standard for input in their architecture. However, it is worth noting that a small subset of articles (3 articles) demonstrate the adaptability of architectures by accepting images of varying sizes. This highlights the flexibility of certain DL approaches in accommodating different image dimensions. Figure 11 displays the frequency distribution of image sizes employed in the articles included in the meta-analysis.

Figure 11.

Number count of implemented image pixel size to feed in DL architectures for training and classification.

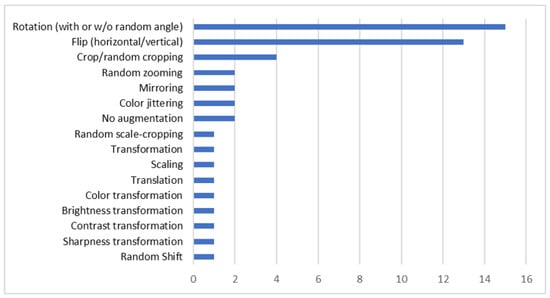

The data augmentation practice is applied with the objective of expanding the dataset [162]. Notably, a total of 20 articles in this study involve the use of data augmentation as a method to enhance their classification accuracy. However, two articles explicitly state that they did not implement any data augmentation technique in their study. Figure 12 shows the distribution of data augmentation techniques implemented among these articles. The most prevalent technique utilized is rotation, with or without a random angle, implemented in 15 articles. Following close behind is the technique of either horizontal or vertical flip, which is used in 13 articles. It is observed that many research studies employ a combination of these data augmentation methods, effectively improving their classification accuracy. More details are provided in Appendix B.

Figure 12.

Number count of implemented data augmentation techniques for expanding datasets.

3.5. Training Details

The training process of a DL architecture involves various aspects, including the training percentage, the chosen architecture, frameworks, implemented optimizers, and hyperparameters. The training percentage is determined based on the size of the dataset, ensuring an appropriate allocation of data for model training. Table 3 presents the most commonly used training percentages for the frequently employed datasets.

Table 3.

Most commonly used training percentages for various datasets.

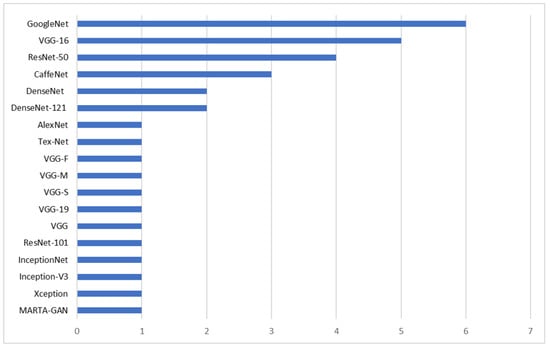

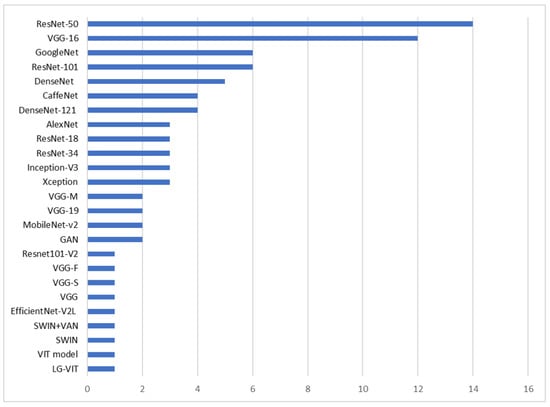

When it comes to selecting architecture, several architectures are frequently utilized in research studies. Among these architectures, GoogleNet is selected six times, indicating its usage in multiple instances. Additionally, other commonly employed architectures include VGG-16, ResNet-50, and CaffeNet. However, it is important to note that researchers also explore and implement novel architectures, showcasing continuous innovation and experimentation within the field of architecture design. Figure 13 provides a visual representation to visually explore the popular DL architectures, excluding the novel ones. Furthermore, in Figure 14, the mapping of all the backbones used in each architecture utilized in our meta-analysis is presented. It is observed that ResNet-50 is the most frequently used backbone, appearing 15 times, followed by VGG-16 (12 times), ResNet-101 (6 times), and CaffeNet (6 times). The DL architectures and backbones used for each study are documented in Appendix B.

Figure 13.

Overview of commonly used architectures. GoogleNet, VGG-16, and ResNet-50 are among the frequently employed models.

Figure 14.

Overview of DL backbones. The most commonly employed ones are ResNet-50 and VGG-16. Some of the research articles share multiple backbones.

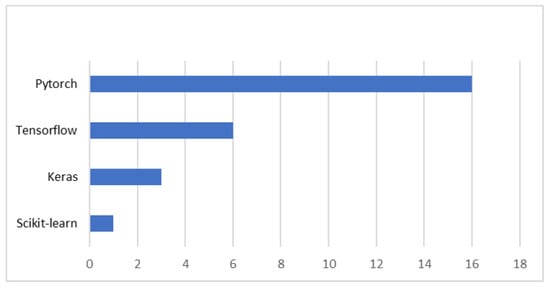

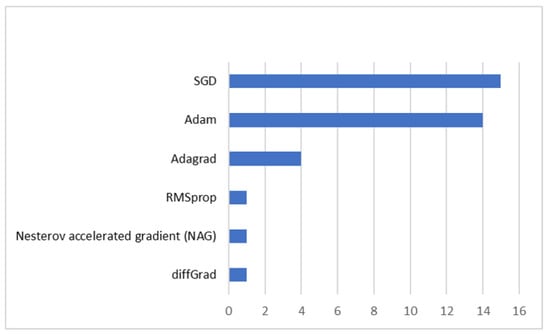

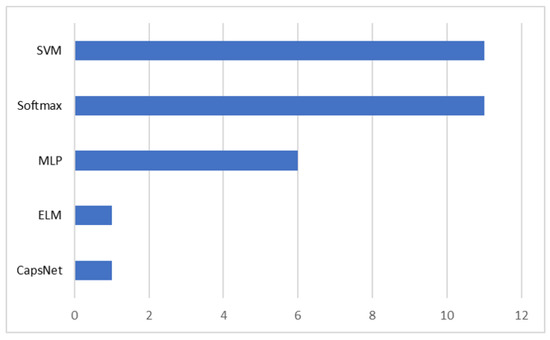

Frameworks in DL architecture for remote sensing scene classification are important for providing a structured platform, flexibility, and efficiency in developing and training DL models. As depicted in Figure 15, PyTorch [163], TensorFlow [164], Keras [165], and scikit-learn [166] are the commonly employed frameworks in this field, with PyTorch being the most frequently used among them. Optimizers are also utilized to adjust the parameters of DL models during training, aiming to minimize the loss function and enhance classification accuracy. The implemented optimizers are Adam, SGD, Adagrad [167], RMSprop [168], Nesterov accelerated gradient (NAG) [169] and diffGrand [170] (see Figure 16). Moreover, among the classifiers used in remote sensing scene classification, support vector machine (SVM) [171] and softmax are the most prevalent, surpassing other classifiers, as depicted in Figure 17. More details are provided in Appendix B.

Figure 15.

Distribution of DL frameworks. PyTorch is the most frequently used framework.

Figure 16.

Distribution of optimizers, indicating that SGD is the most used, closely followed by Adam.

Figure 17.

Distribution of classifiers. SVM and softmax are the most frequently used classifiers.

3.6. Architecture Performance Comparison

The comparison of architectures in this study is centered around the three most commonly used datasets: UCM, AID, and NWPU-RESISC45. All architectures are evaluated using the overall accuracy (OA) metric to facilitate a comprehensive comparison. The expression of OA (in percentage) is presented in Equation (7).

where TP (True Positive) represents the count of correctly classified samples, and Total Samples corresponds to the total number of test images within the dataset. To ensure fairness, the DL architectures trained with specific training rates of 80% for UCM, 50% for AID, and 20% for NWPU-RESISC datasets are selected. Standard deviation values are not considered in the comparison due to their absence in some reviewed papers. Furthermore, some reviewed articles employ multiple architectures or conduct ablation tests to assess the performance of different models. The best-performing architecture is then reviewed and analyzed specifically within the context of these three datasets. Table 4 illustrates the performance of available architectures employed on UCM, AID, and NWPU-RESISIC45.

Table 4.

Performance of DL architectures in three datasets: UCM with 80% training ratio, AID with 50% training ratio, and NWPU-RESISC45 with 20% training ratio.

For the UCM dataset at 80% training data, most architectures achieve over 97% accuracy, except Yan et al. [135], a GAN-based architecture for semi-supervised learning, which achieves 94.05% accuracy. The lowest performing supervised architecture is local and convolutional pyramid-based pooling-stretched (LCPP) [77] model with 97.54% accuracy, which focuses on effectively extracting multiscale feature maps. Notably, the best-performing DL architecture is LG-VIT [122], a transformer-based network that effectively captures both global and local information, achieving 99.93% accuracy. All grains, one scheme AGOS [142] framework also achieves outstanding performance with 99.88% accuracy.

When examining the AID dataset at a 50% training ratio, it is evident that CBAM+RDN [100] with an attention mechanism achieves the highest accuracy among the architectures with 99.08%. TRS [124], a transformer-based network, follows closely as the second-best performer with an accuracy of 98.48%. On the other hand, MF-WGAN [134], an unsupervised learning model, achieves the lowest accuracy (80.35%). LCPP is the lowest-performing supervised architecture with 93.12% classification accuracy.

In the case of the NWPU-RESISC45 dataset trained at 20% data, the Xception-based CNN-MLP [75] architecture achieves the highest accuracy of 97.40%. The Xception-based method, proposed by Shawky et al. [150], which fused the VHR image and saliency map obtained using the saliency algorithm [172], is the second high-performing architecture. Conversely, the fusion of the original VHR image and the processed VHR image with saliency detection using VGG-Net-16 [78] and CaffeNet [78] obtains the lowest accuracy of 83.02% and 83.16%, respectively. Another two-stream architecture that fuses the original image with the mapped LBP image and the original image with the processed image through saliency detection achieves only 87.01% classification accuracy. Table 5 shows the minimum, maximum, and average accuracy values obtained from DL architectures under the same condition as described in Table 4. This information is useful for discussing the importance of the dataset and its utilization in the context of remote sensing scene classification.

Table 5.

Average, minimum, and maximum values obtained from DL architectures in three datasets: UCM with 80% training ratio, AID with 50% training ratio, and NWPU-RESISC45 with 20% training ratio.

4. Discussion

4.1. DL’s Performance Dependency on Datasets

From Table 1, we can observe that the total number of images in UCM, AID, and NWPU-RESISC45 are 2100, 10,000, and 31,500, respectively. The allocation of data for training is determined based on the dataset size and is consistently followed across most of the research articles. This dataset dependency plays a crucial role in the performance of DL methods, as seen in Table 5, where we observe better performance in the UCM dataset. In addition, UCM has a lower number of classes compared to AID and NWPU-RESISC45, indicating higher intra-class diversity and potentially higher inter-class similarity in AID and NWPU-RESISC45. The increment in the training ratio in datasets can boost the performance of DL architectures. Refs. [103,104,105,106,107] used multiple training ratios, commonly used ratios illustrated in Table 3, where the highest training ratio obtained better classification accuracy.

4.2. Low Accuracy in GAN-Based Methods

Unsupervised learning models operate without the need for labeled datasets, whereas semi-supervised learning models do not fully exploit the labeled dataset as they leverage the unlabeled data. In Table 4, it is evident that the unsupervised model MF-WGAN [134] achieved the lowest accuracy of 80.35% on the AID dataset. The difference in accuracy between the unsupervised model and the lowest-performing supervised model is approximately 13%. However, when the training ratio is increased to 80%, the accuracy boosts up to 92.45%. It can be observed that the accuracy of unsupervised learning models increases with a higher training ratio. The same architecture in the NWPU-RESISC45 dataset achieved a lower accuracy of 79.49% accuracy at an 80% training ratio, outperforming another unsupervised architecture named MARTA-GANs [130], which achieved 75.43% accuracy. However, the accuracy of MF-WGAN fell short compared to supervised learning models.

The semi-supervised model based on Marta-GAN [135] achieved the lowest accuracy of 94.05% on the UCM dataset at an 80% training ratio, which is approximately 3.5% lower than the lowest performing supervised learning model of our meta-analysis. The performance of this architecture is also suboptimal on the AID dataset, achieving an accuracy of 83.12% at a 20% training ratio. However, when the training ratio is increased to 80%, the performance improved significantly to 92.78%. This highlights the importance of a higher training ratio for enhancing the performance of semi-supervised models. When SS-RCSN [54] is trained at a 60% training ratio, the accuracy improved up to 98.10%, 94.25%, and 92.03% for the respective UCM, AID, and NWPU-RESISC45 datasets. However, the performance is still lacking compared to the architectures that fully exploit labeled datasets.

From the classification performance, it is understood that semi-supervised learning models are superior to unsupervised learning models because of the utilization of the labeled dataset. However, the classification performance is still lacking compared to supervised learning models. Increasing the training ratio can boost performance to a certain degree, which is contrary to the desired direction. Despite the limitations, GAN is highly useful for minimizing domain shift problems, which occur due to the difference in training and testing datasets [129].

4.3. Shift of Paradigm in Scene Classification Architectures

From Table 4, it is evident that there has been a notable shift in the research focus over time. In the early phase, there was a strong emphasis on fine-tuned and LBP-based architectures, as well as approaches aimed at reducing parameters. Fine-tuned CNNs [86,89] involved modifications to hyper-parameters to optimize performance, while LBP-based CNNs [46,83,84] utilized LBP mapped images to capture texture information for scene classification. Lightweight CNNs [10,96] prioritize parameter reduction for faster performance. These methods, however, often face challenges in effectively classifying complex scenes due to their reliance on processing the entire image as a whole.

Since 2020, there has been a notable shift in research toward the incorporation of attention mechanisms. This approach has the ability to focus on key features or regions within an image to capture relevant information. During our meta-analysis, attention-based CNNs for remote sensing scene classification [100,103,104,105,106,107,108,143] have demonstrated promising performance. CBAM+RDN [100] obtained the highest accuracy of 99.08% on the AID dataset. The minimum accuracies achieved by these architectures are 98.96%, 96.01%, and 93.49% for UCM, AID, and NWPU-RESISC45 datasets, respectively.

Attention-based methods in CNN architectures typically focus on capturing local dependencies while ignoring global information. In recent years, transformer-based methods have gained a significant amount of attention due to their ability to capture both local and global information. By leveraging self-attention mechanisms, transformers can effectively model long-range dependencies and consider the broader context of the input data. Based on our meta-analysis, LG-ViT [122] emerged as the top-performing architecture on the UCM dataset, while TRS [124] achieved the second-best performance on the AID dataset. Furthermore, in the case of the NWPU-RESISC45 dataset, transformer-based methods outperformed LBP-based and fine-tuned methods. This showcases their superiority in capturing both local and global information for effective scene classification.

4.4. Dependency on Pretrained Models

It is evident from our analysis that supervised architectures generally outperform GAN-based architectures, particularly those that prioritize key features or regions within the input data. However, it is important to note that many of these architectures rely on pretrained models, which can provide valuable pre-existing knowledge and improve the performance of the models. The use of pretrained models allows the architectures to leverage the learned representations from large-scale datasets, leading to better generalization and performance on the target datasets. Our meta-analysis indicates that a significant majority (66%) of the reviewed articles stated that they depend on pretrained architectures. In contrast, only a small percentage (6%) confirmed that they trained their architectures from scratch.

Among the 34 articles that stated the use of pretrained models, it is notable that 33 architectures relied on the ImageNet dataset for pretraining. Out of these, three articles specifically utilized the ImageNet1k, a subset of the ImageNet dataset. Conversely, only one article reported the use of the Microsoft Coco dataset for pretraining their architecture. This demonstrates a significant preference for the ImageNet dataset as a source of pretraining DL architectures due to the larger number of available annotated images. The non-pretrained models are GAN-based architectures [134,135] and deep color fusion VGG-based CNN architecture [157] with low accuracy compared to pretrained DL architectures.

Risojevic et al. [173] questioned the necessity of the ImageNet dataset for pretraining, and discovered that we can still derive advantages. However, the dependency on ImageNet for pretraining can be attributed to the scarcity of labeled scene datasets. Researchers have applied various data augmentation approaches to increase the size of the dataset, as shown in Figure 12 of our analysis. Al et al. [73] combined the labeled images from multiple source domains to learn invariant feature representations. This further emphasizes the ongoing need for larger and more comprehensive scene-labeled datasets in the field of remote sensing scene classification. Other than data augmentation, different optimizers, and classifiers are also used to improve the performance of architecture. From our analysis, SGD and Adam are the most used optimization techniques, while SVM and softmax are commonly used for efficient classification.

4.5. Challenges and Future Opportunities

Based on the insights gained from reviewed papers, it is evident that there is always room for improvement in the accuracies obtained from architectures (see Table 4) used for remote sensing scene classification, despite the success obtained in recent years. Through our investigation, we have identified several areas that are expected to be explored in future research to achieve better classification results.

4.5.1. Requirement of Large Annotated Datasets

From Figure 9, it is seen that AID and NWPU-RESISC45 are the most commonly used datasets in remote sensing scene classification research, primarily due to their larger image repositories. Despite their popularity, these datasets are still limited in numbers, leading to the use of pretrained models, often sourced from ImageNet, for transfer learning purposes. However, transfer learning may not always be the optimal solution as pretrained models might not fully adapt to the target domain’s specific characteristics.

A noteworthy approach presented by Miao et al. [54] involves addressing the data scarcity challenge by merging multiple source datasets, thereby increasing the number of available image samples. However, it is essential to consider that different dataset sources may contain uncommon scene classes and are discarded. Moreover, while data augmentation techniques are widely used to enhance the dataset’s diversity and increase sample size, they may have limitations in fully capturing the complexity and variety of real-world scenes. Therefore, the development of large-scale annotated remote sensing scene datasets with a higher number of classes is very promising.

4.5.2. Preservation of Local-Global Features

By learning local features, the model can focus on key information from specific regions or objects within the image. Meanwhile, extracting global features enables the model to understand the overall context and spatial relationships between different portions within the image. The combination of local-global feature representation captures fine-grained details and overall spatial context and can effectively handle diverse and complex scenes. Transformer-based CNN architectures have the capacity to capture and well-preserve both local and global contextual information, with promising results and increased recognition in remote sensing scene classification [124,144] over the past two years. Therefore, it is expected that there will be exploration and utilization in this domain to a greater extent.

4.5.3. Self-Supervised Learning for Scene Classification

Although fully supervised architectures have superior scene classification performance, the data annotation task is laborious and expensive. Exploring different strategies in GAN-based methods presents an opportunity to improve the accuracy of remote sensing scene classification by leveraging unlabeled datasets. By utilizing these unlabeled datasets, the need for manual annotation is reduced, resulting in significant time and cost savings. The GAN-based approach has been utilized [54,134,135] for self-supervised learning. However, the outcome is not as effective as supervised learning. Therefore, it is valuable to continue exploring and developing non-fully supervised models to enhance the performance of remote sensing scene classification.

4.5.4. Learning with Few Data

To avoid data scarcity in remote sensing scene classification, leveraging limited samples can offer a flexible and cost-efficient solution. Quick learning methods such as one-shot [174] and few-shot [175] learning have been explored with the minimal amount of data for scene classification [176,177,178,179]. These methods aim to mimic the human capability to quickly learn and recognize new concepts or classes with very limited examples. However, the classification accuracy is very low, and they struggle to distinguish the scenes as current approaches are far from emulating human-level capabilities. Thus, studies in this domain are expected in the future to focus on further improving these quick learning methods for more accurate and robust remote sensing scene classification.

5. Conclusions

This study provides a comprehensive overview of remote sensing scene classification using DL methods. We categorize DL methods into CNN-based, ViT-based and GAN-based approaches and offer detailed insights into each category. Additionally, our meta-analysis synthesizes and analyzes the findings from 50 peer-reviewed journal articles to show the performance and trends involved in these studies. Findings from our meta-analysis indicate that the AID and NWPU-RESISC45 datasets are widely utilized in the remote sensing scene classification domain. Pretrained networks are extensively adopted as they leverage valuable pre-existing knowledge. Most works utilize commonly used training ratios, and it is observed that an increment in training ratios leads to improved performance. Furthermore, the meta-analysis reveals that supervised learning approaches consistently outperform GAN-based approaches in terms of classification accuracy. This discrepancy arises from the fact that supervised learning methods are explicitly trained to minimize classification errors using labeled data, while GAN-based methods adapt data generation and do not fully exploit the labeled dataset. However, due to the limited images in remote sensing scene datasets, annotating samples becomes expensive and laborious, making self-supervised methods crucial. Moreover, there has been a growing trend in the adoption of attention-based methods since 2020, which focus on key regions or features. In contrast, transformer-based methods gained prominence in 2021 due to their ability to effectively capture and preserve local–global information.

Based on our findings and analysis, we identify several areas for future research and improvement in remote sensing scene classification. This includes the development of large-scale annotated remote sensing scene datasets, further exploration of transformer-based and self-supervised methods, and enhancing DL models’ ability to train effectively with limited samples. Our study provides valuable insights to researchers in the DL-driven remote sensing scene classification domain, aiding them in advancing and developing more effective and accurate solutions to make impactful contributions.

Author Contributions

Conceptualization, A.T. and B.N.; methodology, A.T.; formal analysis, A.T.; investigation, A.T.; data curation, A.T.; writing—original draft preparation, A.T.; writing—review and editing, B.N., J.A. and T.H.; visualization, A.T.; supervision, B.N., J.A. and T.H.; project administration, T.H.; funding acquisition, T.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Research Partnership for Sustainable Development (SATREPS), Japan Science and Technology Agency (JST)/Japan International Cooperation Agency (JICA) for the project “Smart Transport Strategy for Thailand 4.0”, Grant No. JPMJSA1704.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The links to the remote sensing scene datasets mentioned in this paper, and technical details of studies in our meta-analysis are included in Appendix A and Appendix B.

Acknowledgments

This work was partially supported by the Center of Excellence in Urban Mobility Research and Innovation, Faculty of Architecture and Planning, Thammasat University, and Advanced Geospatial Technology Research Unit, SIIT, Thammasat University.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| CNN | Convolutional Neural Network |

| DNN | Deep Neural Network |

| DL | Deep Learning |

| GAN | Generative Adversarial Network |

| VHR | Very High-Resolution |

| LULC | Land Use and Land Cover |

| SIFT | Scale-Invariant Feature Transform |

| LBP | Local Binary Pattern |

| CH | Color Histogram |

| GLCM | Grey Level Co-occurrence Matrix |

| HOG | Histogram of Oriented Gradients |

| BoVW | Bag-of-visual-words |

| ResNet | Residual Network |

| UCM/UC-Merced | UC Merced Land Use Dataset |

| USGS | United States Geological Survey |

| AID | Aerial Image Dataset |

| ReLU | Rectified Linear Unit |

| FC | Fully Connected |

| SGD | Stochastic Gradient Descent |

| MCPSF | Multi-level convolutional pyramid semantic fusion |

| PMS | Parallel Multi-stage |

| DCNN | Deep Convolutional Neural Network |

| BLS | Broad Learning System |

| iBoVW | Improved Bag-of-visual words |

| FPN | Feature Pyramid Network |

| COCO | Common Objects in Context |

| RDB | Residual Dense Network |

| MSA-Network | Multiscale Attention Network |

| CPA | Channel and Position Attention |

| MVFL | Multi-view Feature Learning Network |

| EAM | Enhanced Attention Module |

| MINet | Multilevel Inheritance Network |

| TRS | Remote Sensing Transformer |

| MHSA | Multi-Head Self-Attention |

| STB | Swin Transformer Block |

| CSA | Channel-Spatial Attention |

| ViT | Vision Transformer |

| CSAT | Channel-Spatial Attention Transformers |

| EMTCAL | Efficient Multiscale Transformer and Cross-level Attention learning |

| DeiT | Data-efficient Image Transformer |

| CL | Contrastive Learning |

| LG-ViT | Local–global Interactive ViT |

| SCCov | Skip-connected covariance |

| MSA | Multi-head Self-Attention |

| MLP | Multi-layer Perceptron |

| LN | Layernorm |

| GELU | Gaussian Error Linear Unit |

| MARTA GANs | Multiple-layer Feature-matching Generative Adversarial Networks |

| SELU | Scaled Exponential Linear Unit |

| MF-WGANs | Multilayer Feature Fusion Wasserstein Generative Adversarial Networks |

| MIL | Multiple Instance Learning |

| DCA | Discriminant Correlation Analysis |

| NAG | Nesterov accelerated gradient |

| SVM | Support Vector Machine |

| OA | Overall Accuracy |

| LCPP | Local and Convolutional Pyramid based Pooling-stretched |

| AGOS | All Grains, One Scheme |

Appendix A

Table A1.

Link to remote sensing scene datasets.

Table A1.

Link to remote sensing scene datasets.

| Dataset | Link |

|---|---|

| UCM | http://weegee.vision.ucmerced.edu/datasets/landuse.html (accessed on 24 July 2023) |

| AID | www.lmars.whu.edu.cn/xia/AID-project.html (accessed on 24 July 2023) |

| WHU-RS19 | http://gpcv.whu.edu.cn/data (accessed on 24 July 2023) |

| NWPU-RESISC45 | https://gcheng-nwpu.github.io (accessed on 24 July 2023) |

| PatternNet | https://sites.google.com/view/zhouwx/dataset (accessed on 24 July 2023) |

| OPTIMAL-31 | https://1drv.ms/u/s!Ags4cxbCq3lUguxW3bq0D0wbm1zCDQ (accessed on 24 July 2023) |

| SIRI-WHU | https://figshare.com/articles/dataset/SIRI_WHU_Dataset/8796980 (accessed on 24 July 2023) |

| RSSCN7 | https://sites.google.com/site/qinzoucn/documents (accessed on 24 July 2023) |

| RSI-CB | https://github.com/lehaifeng/RSI-CB (accessed on 24 July 2023) |

| KSA | [59] |

| Corel | https://archive.ics.uci.edu/dataset/119/corel+image+features (accessed on 24 July 2023) |

| IP | https://purr.purdue.edu/publications/1947/1 (accessed on 24 July 2023) |

Appendix B

Table A2.

Details of surveyed papers for the meta-analysis.

Table A2.

Details of surveyed papers for the meta-analysis.

| S.N | Paper Ref. | Datasets Used | Data Preparation | Data Augmentation | Architectures Used | Backbone | Backbones Pre-Trained on | Framework | Optimizer | Classifier |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | [49] | NWPU-RESISC45 | Fine-tuned AlexNet, VGG-16 and GoogLeNet | AlexNet, VGG-16 and GoogLeNet | ImageNet | SVM | ||||

| 2 | [78] | UCM, WHU-RS, AID and NWPU-RESISC45 | Images are resized according to the size of the receptive field of the selected CNN model. | No | CaffeNet, VGG-Net-16 and GoogleNet | CaffeNet, VGG-Net-16 and GoogleNet | ImageNet | |||

| 3 | [79] | UCM and AID | UCM data expands from 2100 to 252,000 images. Each image rotated ±7° from each 90° rotated image (15 rotations for each 90° basic rotation) and expanded to 30 images. Rotations exceeding ±7° range from the 0°, 90°, 180°, and 270°, each image expanded to 120 images. | GoogleNet | GoogleNet | ImageNet | SVM | |||

| 4 | [46] | UCM, WHU-RS19, RSSCN7 and AID | Images are resized to 224 × 224 pixels for both VGG-M and ResNet-50. | Tex-Net | VGG-M and Resnet-50 | ImageNet | SVM | |||

| 5 | [83] | UCM, AID and NWPU-RESISC45 | GoogleNet | GoogleNet | ImageNet | Tensorflow | ELM | |||

| 6 | [146] | UCM, WHU-RS19, AID and NWPU-RESISC45 | Images are resized to meet the input are of each CNN (224 × 224 or 227 × 227). | CaffeNet, GoogLeNet, VGG-F, VGG-S, VGG-M, VGG-16, VGG-19 and ResNet-50 | CaffeNet, GoogLeNet, VGG-F, VGG-S, VGG-M, VGG-16, VGG-19 and ResNet-50 | ImageNet | SVM | |||

| 7 | [73] | UCM, AID, NPWU, PatternNet and a new multiple domain dataset created from four heterogeneous scene datasets | For suitability of datasets suitable for multisource domain adaption, only 12 shared classes are considered. | MB-Net | ResNet-50 | ImageNet | Adam | |||

| 8 | [84] | UCM and AID | Images are resized to 300 × 300 pixels. | Rotation (90°, 180°, and 270°) and flip (horizontal and vertical). Texture images rotation (90°, 180°, and 270°). | CTFCNN | CaffeNet | ImageNet | SVM | ||

| 9 | [95] | UCM, AID, OPTIMAL-31 and NWPU-RESISC45 | Accept image that are different sizes. | Color transformation, brightness transformation, contrast transformation, and sharpness transformation | DenseNet | DenseNet | ImageNet | PyTorch0.4.1 | SGD | Softmax |

| 10 | [33] | UCM, AID and NWPU-RESISC45 | AID dataset images are resized to 256 × 256 pixels. | CNN-CapsNet | VGG-16 and Inception-V3 | ImageNet | Keras | Adam | CapsNet | |

| 11 | [153] | AID and NWPU-RESISC45 | InceptionNet and DenseNet (for heterogeneous used combined) | InceptionNet and DenseNet | MLP | |||||

| 12 | [155] | UCM, NWPU-RESISC45 and SIRI-WHU | Inception-LSTM | Inception-V3 | Adagrad or RMSprop | Softmax | ||||

| 13 | [86] | NWPU-RESISC45, AID and OPTIMAL-31 | Random flips in vertical and horizontal directions, random zooming and random shifts over different image crops. | VGG-16+FPN and DenseNet+FPN | VGG-16, DenseNet and ResNet-101 | VGG-16 and DenseNet-161 pretrained on ImageNet. FPN (ResNet-101) pretrained on Microsoft COCO dataset | ||||

| 14 | [135] | UCM and NWPU-RESISC45 | Flip (horizontal and vertical) and rotation (90°, 180°, 270°) | MARTA-GAN | GAN | Not pretrained | Adam | SVM | ||

| 15 | [147] | UCM, AID and NWPU-RESISC45 | Random-scale cropping with crop ratio set to [0.6, 0.8], and the number of patches cropped from each original image is set to 20. | CaffeNet, VGG-VD16 and GoogLeNet | CaffeNet, VGG-VD16 and GoogLeNet, | Tensorflow | SGD | Softmax | ||

| 16 | [80] | NWPU-RESISC45 | FDPResNet | ResNet-101 | ImageNet | SVM | ||||

| 17 | [96] | UCM, AID and NWPU-RESISC45 | Rotation clockwise (90°, 180°, and 270°), flip (horizontal and vertical) to expand the training data six-fold. | biMobileNet | MobileNet-v2 | ImageNet | PyTorch | SGD | SVM | |

| 18 | [100] | UCM and AID | Images are resized to 224 × 224 pixels. | Rotation (0°, 90°, 180°, and 270°) and mirror operations on these four angles to expand the data to eight times the original data, | RDN + CBAM | DenseNet | ImageNet | PyTorch | Adam | Softmax |

| 19 | [134] | UCM, AID and NWPU-RESISC45 | AID dataset images are resized to 256 × 256 pixels as input for the model. | Expand the UCM dataset with 90° of horizontal and vertical rotation, increasing the training samples to 6800. | MF-WGAN | GAN | Not pretrained | MLP | ||

| 20 | [89] | AID and NWPU-RESISC45 | Images of the datasets are resized according to the requirements of CNN: 224 × 224 for ResNet-50 and DenseNet-121, and 299 × 299 for Inception-V3 and Xception. | Data augmentation used. | ResNet-50, Inception-V3, Xception and DenseNet-121 | ResNet-50, Inception-V3, Xception and DenseNet-121 | ImageNet | SGD | Softmax | |

| 21 | [103] | AID and NWPU-RESISC45 | Images are resized to 224 × 224 to train the attention network. | VGG-VD16 chosen as a attention network, and AlexNet and VGG-VD16 used to learn feature representation obtained from attention network | AlexNet and VGG-VD16 | ImageNet | PyTorch | SGD | ||

| 22 | [75] | UCM, AID and NWPU-RESISC45 | Images are resized to 299 × 299 for the pre-trained CNNs. | Rotation (90° and 180°), zoom (random) and flip (horizontal and vertical) | CNN-MLP | Xception | ImageNet | Adagrad | MLP | |

| 23 | [136] | AID and NWPU-RESISC45 | Images are resized to 224 × 224 pixels before training. | Rotation (90°, 180°, and 270°), flip (horizontally and vertically) and crop (from each side by 0 to 12 pixels). For testing, rotation transformation (90°, 180°, and 270°) to obtain 4 images. | MF2Net | VGG-16 | ImageNet | PyTorch | SGD | Softmax |

| 24 | [104] | UCM, AID and NWPU-RESISC45 | Random rotation, flip, and cropping. | MSA-Network | ResNet-18, ResNet-34, ResNet-50 and ResNet-101 | ImageNet | Tensorflow | Adam | ||

| 25 | [148] | UCM, RSSCN, SIRI-WHU, Corel-1K and Corel-15K | Fine-tuned ResNet-50 | ResNet-50 | ImageNet | SGD | ||||

| 26 | [77] | UCM and AID | Images are resized to 224 × 224 for both datasets. | MCPSF | VGG-19 | ImageNet | Tensorflow | SVM | ||

| 27 | [10] | NWPU-RESISC45 and SIRI-WHU | Accept image that are different sizes. | LW-CNN | VGG-16 | Keras | SGD | Softmax | ||

| 28 | [105] | UCM, WHU-RS, AID and OPTIMAL-31 | Rotation, flip, scaling, and translation. | Dual model architecture includes ResNet-50 and DenseNet-121 fused | ResNet-50 and DenseNet-121 | ImageNet | SGD | |||

| 29 | [157] | UCM, WHU-RS19, RSSCN7, AID and NWPU-RESISC45 | Images are resized to 224 × 224 pixels. | Random crops, horizontal flips, and RGB color jittering | VGG | VGG | Not pretrained | SVM | ||

| 30 | [158] | AID, RSI-CB, IP | PDDE-Net | DenseNet | Keras and Tensorflow | SGD | Softmax | |||

| 31 | [150] | UCM, WHU-RS, AID and NWPU-RESISC45 | Images are resized to 299 × 299 pixels. | Xception | Xception | Python | Adagrad | MLP | ||

| 32 | [106] | AID, NWPU-RESISC45 and UCMerced | Images are resized to 224 × 224 pixels. | MVFLN | AlexNet [17] and VGG-16 [33] | ImageNet | PyTorch | SGD | ||

| 33 | [139] | AID and NWPU-RESISC45 | Images are resized to 224 × 224 pixels. | DS-CapsNet | 4 novel convolution layers used | PyTorch | ||||

| 34 | [124] | UCM, AID, NWPU-RESISC45 and OPTIMAL-31 | Images are resized for UCM, NWPU, and OPTIMAL-31 into 224 × 224 pixels. For AID, images are resized to 600 × 600 pixels. | TRS | ResNet-50 | ImageNet1k | Adam | Softmax | ||

| 35 | [107] | UCM, AID and NWPU-RESISC-45 | Images are resized to 256 × 256 pixels. | GoogLeNet, VGG-16, ResNet-50, and ResNet-101 | GoogLeNet, VGG-16, ResNet-50, and ResNet-101 | ImageNet | NAG | |||

| 36 | [143] | RSSCN7 and WHU-RS19 | Images are resized to 256 × 256 pixels. | CAW | Swin transformer module and VAN module concatenated together | PyTorch 3.7 | Adam | |||

| 37 | [142] | UCM, AID and NWPU-RESISC45 | AGOS | ResNet-50, ResNet-101 and DenseNet-121 | ImageNet | Tensorflow | Adam | |||

| 38 | [125] | AID and NWPU | Images are resized to 224 × 224 pixels. | Random horizontal flipping | Swin Transformer | Swin Transformer | ImageNet | PyTorch | Adam | Softmax |

| 39 | [54] | UCM, AID, NWPU-RESISC45 and merged RS dataset | For labeled data, patches of 224 × 224 pixels are randomly cropped from the original images. | Labelled data: horizontal flipping.Unlabeled data: random cropping, random rotation, and color jitter. | SS_RCSN | ResNet-18 | Adam | |||

| 40 | [140] | AID and NWPU-RESISC45 | Confused images are selected as input pairs for the network. For each batch, 30 classes of images are selected in the AID dataset, 45 classes of images are selected in the NWPU-RESISC45 dataset, and six images are randomly selected for each class. | Random rotation by 30°, and flip (horizontal and vertical). | PCNet | ResNet-50 | PyTorch | SGD | Softmax | |

| 41 | [144] | AID and NWPU-RESISC45 | Images are resized to 256 × 256 pixels. | Random flip and rotation. | CTNet | ResNet-34 and MobileNet_v2 in C-stream, and pretrained VIT model in T-stream | ImageNet1k | PyTorch | SGD | Softmax |

| 42 | [108] | AID, WHU-RS19 and NWPU-RESISC45 | Images are resized to 224 × 224 pixels. | MINet | ResNet-50 | ImageNet | PyTorch | SGD | ||

| 43 | [145] | AID and NWPU | GLDBS | ResNet-18 and ResNet-34 combined | PyTorch | Adam | ||||

| 44 | [149] | AID and NWPU-RESISC45 | Input images are resized to 224 × 224 pixels. | MRHNet | ResNet-50 and ResNet-101 | PyTorch 1.3.1 | diffGrad | |||

| 45 | [71] | NWPU-RESISC45 | Rotation clockwise (90°, 180°, and 270°) and horizontal and vertical refections of the images. | DenseNet-121-Full and Half | DenseNet-121 | ImageNet | ||||

| 46 | [137] | UCM, AID, RSSCN7 and NWPU-RESISC45 | Images are resized to 224 × 224 pixels for all four datasets. | No augmentation. | DFAGCN | VGG-16 | ImageNet | PyTorch framework with PyTorch-Geometric (PYG) is employed for the construction of GCN model | Adam | |

| 47 | [127] | UCM, AID and NWPU-RESISC45 | Images are resized to 224 × 224 pixels. | CSAT | ImageNet1K | PyTorch | Adam | MLP | ||

| 48 | [122] | UCM, AID and NWPU | LG-ViT | LG-ViT | ImageNet | Adam | ||||

| 49 | [159] | AID and NWPU | STAIRS | ResNet-50 | SGD | |||||

| 50 | [151] | UCM, AID, WHU-RS19, PatterNet and KSA | EfficientNet-V2L for global feature extraction and ResNet-101V2 for co-saliency feature extraction and fusion using DCA algorithm | EfficientNet-V2L and ResNet-101V2 | Adagrad | MLP |

References

- Gómez-Chova, L.; Tuia, D.; Moser, G.; Camps-Valls, G. Multimodal classification of remote sensing images: A review and future directions. Proc. IEEE 2015, 103, 1560–1584. [Google Scholar] [CrossRef]

- Li, Y.; Zhu, Z.; Yu, J.G.; Zhang, Y. Learning deep cross-modal embedding networks for zero-shot remote sensing image scene classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10590–10603. [Google Scholar] [CrossRef]

- Cheng, G.; Guo, L.; Zhao, T.; Han, J.; Li, H.; Fang, J. Automatic landslide detection from remote-sensing imagery using a scene classification method based on BoVW and pLSA. Int. J. Remote Sens. 2013, 34, 45–59. [Google Scholar] [CrossRef]

- Othman, E.; Bazi, Y.; Alajlan, N.; Alhichri, H.; Melgani, F. Using convolutional features and a sparse autoencoder for land-use scene classification. Int. J. Remote Sens. 2016, 37, 2149–2167. [Google Scholar] [CrossRef]

- Kunlun, Q.; Xiaochun, Z.; Baiyan, W.; Huayi, W. Sparse coding-based correlaton model for land-use scene classification in high-resolution remote-sensing images. J. Appl. Remote Sens. 2016, 10, 042005. [Google Scholar] [CrossRef]

- Zhao, L.; Tang, P.; Huo, L. A 2-D wavelet decomposition-based bag-of-visual-words model for land-use scene classification. Int. J. Remote Sens. 2014, 35, 2296–2310. [Google Scholar] [CrossRef]

- Chen, C.; Zhang, B.; Su, H.; Li, W.; Wang, L. Land-use scene classification using multi-scale completed local binary patterns. Signal, Image Video Process. 2016, 10, 745–752. [Google Scholar] [CrossRef]

- Weng, Q.; Mao, Z.; Lin, J.; Liao, X. Land-use scene classification based on a CNN using a constrained extreme learning machine. Int. J. Remote Sens. 2018, 39, 6281–6299. [Google Scholar] [CrossRef]