Abstract

The vertical structure of forest ecosystems both influences and reflects ecosystem functioning. Terrestrial laser scanning (TLS) enables the rapid acquisition of 3D forest information and the subsequent reconstruction of vertical structure, providing new support for obtaining forest vertical structure information. We selected artificial forest sample plots in north-central Nanning, Guangxi, China, as the research area and obtained forest sample plot point cloud data through TLS. By accurately capturing the gradient information of the forest vertical structure, we delineated classification boundaries and proposed a method for segmenting complex forest vertical structures based on the Forest-PointNet model, which comprehensively utilizes the spatial and shape features of the point cloud. The method accurately segmented four vertical structure classes in the forest sample plot point cloud data: ground, bushes, trunks, and leaves. With optimal training, the average classification accuracy reached 90.98%. The results indicated that segmentation errors were mainly concentrated at branch intersections in the canopy. Our model demonstrates significant advantages, including effective segmentation of vertical structures, strong generalization ability, and strong feature extraction capability.

1. Introduction

Forest ecosystems, as tree-based ecosystems, continuously exchange energy and materials with other organisms in the biosphere during reproduction [1]. Throughout this process, populations and their environment engage in constant competition and selection, producing two distinct spatial structures of population distribution: the vertical structure and the horizontal structure [2]. The vertical structure of a forest reflects its hierarchy and is typically divided into the trunk layer, bush layer, and ground cover layer. This stratification not only helps conserve precipitation and reduce soil scouring and erosion on the forest floor but also determines the storage of forest biomass and the presence and distribution of forest carbon stocks. Studying the vertical structure of forests is therefore highly significant for enhancing forest biomass and maintaining the stability of forest ecosystems, and it serves as a basis for studying forest succession and the carbon cycle [3]. By segmenting the trunk, ground, and bush components of a forest ecosystem, we can gain a comprehensive understanding of the forest's vertical structure and study the role of each component separately [4], providing theoretical and methodological support for dedicated studies of trunks or bushes [5].

Traditional forest resource surveys rely on ground survey methods to gather information about the vertical structure of forests, but these methods are time-consuming and labor-intensive [6]. Light detection and ranging (LiDAR), an active remote sensing technology, has emerged as an advanced method for extracting forest vertical structure parameters, offering advantages such as high resolution and fast acquisition [7]. LiDAR can obtain information such as target position and height [8] and has been widely used in forest resource surveys and other fields. By platform, LiDAR can be categorized into terrestrial, airborne, and spaceborne LiDAR. Spaceborne and airborne LiDAR can rapidly acquire information about a forest's horizontal structural characteristics over large areas. However, both have significant limitations in capturing the vertical structure of forests, making it difficult to obtain biological information beneath the canopy [9]. Terrestrial laser scanning (TLS), by contrast, scans from the bottom up and can quickly and accurately gather trunk position, diameter at breast height (DBH), tree height, and other vertical structure attributes of the forest [10]. In recent years, TLS has been widely applied in forest resource surveys. For example, Johannes Heinzel et al. used ground-based LiDAR to obtain forest data and identified tree stems after removing leaves and branches [11]. Sun et al. used a Leica ScanStation C10 TLS instrument to scan aspen forest samples and estimate aspen volume [12]. Bingxiao Wu et al. used TLS to collect and separate leaf and trunk data [13]. With the continuous development of LiDAR equipment, numerous studies have used terrestrial LiDAR to analyze forest vertical structure.
However, these studies mainly focus on estimating forest biomass and extracting parameters at the individual tree scale [14]. Given the complexity of forest point cloud data and the diversity and irregularity of ground cover organisms, existing methods for forest vertical structure segmentation mainly focus on canopy analysis, and few methods segment the vertical structure of the whole forest from bottom to top [15,16]. Existing methods are also difficult to apply to complex forest stands [17]. Segmenting complex forest vertical structures using TLS is therefore an urgent problem [17,18].

Traditional machine learning methods have been widely used in forest vertical structure segmentation research and have achieved significant results [18]. However, most of these methods focus solely on segmenting individual trees, a task known as instance segmentation; consequently, they can recognize trees but cannot identify bushes and ground points. In addition, the many studies on wood–leaf separation within trees are mostly limited to the single-tree scale, with minimal research at the forest scale [19,20]. Deep learning, on the other hand, can extract meaningful features from a large pool of sample data without extensive, standardized prior knowledge. It can effectively improve network performance [21] and better predict the classes of other points from the existing data [22]. As deep learning research continues to advance, its application in forest segmentation is becoming increasingly comprehensive, particularly in canopy segmentation and single-tree instance segmentation [23].

Deep learning models for 3D point cloud semantic segmentation fall into two primary types. The first type transforms the raw point cloud into a regular 3D voxel grid or into multiview images [24]. Voxelization divides the original point cloud into voxels of a fixed spatial size from which features are extracted. However, this approach not only loses spatial information but also degrades structural resolution [25].

As a result, a growing number of studies employ the second type, point-based segmentation [25], which extracts information directly from the 3D point cloud [26,27]. Besides improving computational efficiency, this approach fully utilizes the spatial structure of the point cloud. However, it has limitations: the lack of a priori knowledge seriously affects model effectiveness and can result in poor generalization [28,29,30]. It is therefore crucial to enhance the feature diversity of the data.

In this study, we chose forest sample plots in north-central Nanning, Guangxi, China, as the study area. We used TLS to obtain forest point cloud data and comprehensively utilized the spatial location and shape information of the forest point cloud. On this basis, we propose Forest-PointNet, a pre-trained semantic segmentation model designed for forest scenes that segments the vertical structure of complex forests into leaves, trunks, ground, and bushes. This research provides a foundation for studying forest vertical structure in ecology and forestry.

The article is structured as follows. Section 2 introduces the methods and model used for semantic segmentation of point clouds of complex forest vertical structures. Section 3 evaluates and analyzes the experimental results. Section 4 summarizes the method for semantic segmentation of complex forest vertical structures and discusses its shortcomings.

2. Materials and Methods

2.1. Study Area and Sample Plots

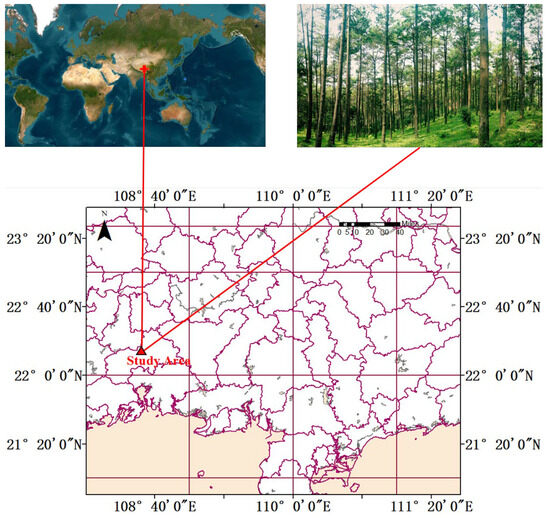

The study area is located in a natural forest in north-central Nanning City, Guangxi Zhuang Autonomous Region, China (22°51′~23°02′N, 108°06′~108°31′E; Figure 1). It lies in a subtropical region with hilly terrain. The main tree species is Eucalyptus grandis, with rich understory vegetation and a distinctive vertical structure. The abundance of vegetation types and high forest cover make this region representative for studying the segmentation of complex forest vertical structures.

Figure 1.

Location of the study area. The red + sign at the top of the image marks the location of the study area, and the detailed coordinate map of the study area is shown below.

Within the area, we selected forest sample plots for data collection where Eucalyptus grandis thrives and the stand has a complex and diverse vertical structure. To ensure high-quality TLS data, factors such as occlusion by trees and instrument range were taken into account, and a multi-station scanning method was used: one scanning station was set up at the center of each sample plot for a panoramic scan, and four additional stations were set up at the corners of the plot. After scanning at all stations, the point clouds were stitched together, converted into a common coordinate system, and exported as LAS files. These LAS files provided the data for studying the segmentation of complex forest vertical structure.
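The stitching and coordinate conversion step can be sketched as follows. This is a minimal illustration rather than the authors' workflow: it assumes each station's scan is an (N, 3) NumPy array and that a 4×4 rigid transform from that station into the plot coordinate system is already known (for example, from target-based registration). The helper `make_transform` and all numbers in the usage are hypothetical, and writing the merged cloud to LAS is left to a LAS library.

```python
import numpy as np


def make_transform(yaw_deg, offset):
    """Hypothetical helper: 4x4 rigid transform = rotation about Z, then translation."""
    a = np.radians(yaw_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a), np.cos(a), 0.0],
                 [0.0, 0.0, 1.0]]
    T[:3, 3] = offset
    return T


def merge_scans(scans, transforms):
    """Map each station's (N, 3) XYZ array into the common plot frame and stack them."""
    merged = []
    for pts, T in zip(scans, transforms):
        T = np.asarray(T, dtype=float)
        if T.shape != (4, 4):
            raise ValueError("expected a 4x4 homogeneous transform")
        homo = np.hstack([pts, np.ones((len(pts), 1))])  # (N, 4) homogeneous coordinates
        merged.append((homo @ T.T)[:, :3])
    return np.vstack(merged)


# Usage: a centre station (identity) and one corner station 10 m east, rotated 90 degrees.
center = np.array([[0.0, 0.0, 0.0], [1.0, 2.0, 3.0]])
corner = np.array([[1.0, 0.0, 0.0]])
plot = merge_scans([center, corner], [np.eye(4), make_transform(90, [10.0, 0.0, 0.0])])
print(plot.shape)
```

In practice the five station transforms would come from the registration software; the sketch only shows how they are applied.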

2.2. Validation Data

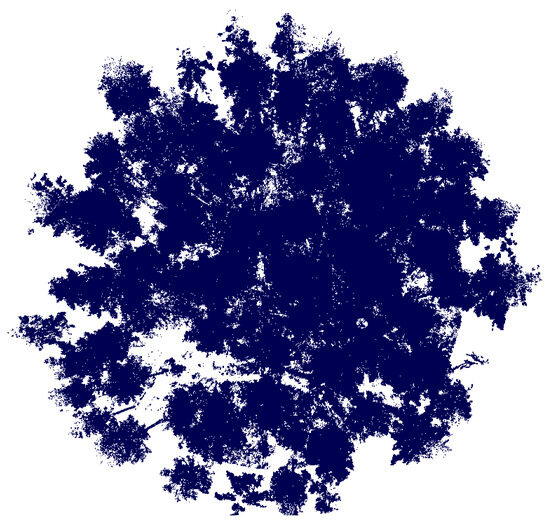

To verify the applicability of our model to forests in other regions, we used a publicly available forest point cloud dataset from the Evo region of Finland [31]; Figure 2 shows where these validation data were collected. The dataset was collected collaboratively by several institutions, including the University of Eastern Finland's Osasto Meteorological Service, the Finnish National Land Survey, and the University of Helsinki, and it served to validate the performance of our model. It comprises point clouds of 80 forest sample plots in the Evo region, acquired with a Leica RTC-360-3D laser scanner. Because the stand structure and area of these plots are broadly the same, we randomly selected one of the 80 plots as the experimental sample plot. Figure 3 shows a top view of the chosen plot.

Figure 2.

Location of the validation dataset. The top of the figure shows the location of the study area on a world map, and the bottom shows its detailed location, with the red coordinate marker indicating the study area.

Figure 3.

Top view of validation dataset sample plots.

2.3. General Methodology

Our method consists of the following main steps. First, the collected forest sample plot point cloud data are preprocessed, including denoising, format conversion, and other operations. From the preprocessed data, the dataset required by the model is prepared, including division into training and test sets and the segmentation and rotation of the point clouds. Data augmentation and expansion are then carried out during dataset processing to obtain the final datasets for training and testing. Next, we supplement the point features with normal vectors computed by a dedicated normal estimation method to obtain the data required for training Forest-PointNet. Forest-PointNet itself is constructed by redesigning the point cloud spatial feature extraction module of the PointNet model. Finally, the training, test, and validation data are input into both Forest-PointNet and PointNet for training and comparative validation. The overall technical route is shown in Figure 4.

Figure 4.

Overall technical route of the method, which consists of four steps: data preprocessing, data preparation, data processing, and semantic segmentation. The four dotted boxes correspond to these four main stages.

2.4. Data Preprocessing

The processing of forest point cloud data involves the following steps: data cleaning, data cropping, data labeling, data expansion, and data format conversion [32].

Because of the complexity of the forest environment at the sample site and the limitations of the LiDAR equipment, the collected point cloud data contained a large amount of irrelevant data [33]. A preliminary cleaning step is therefore necessary: the sample plot point clouds are cropped and denoised to remove incomplete tree point clouds and irrelevant points collected during data acquisition [34].
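The source does not name its denoising algorithm. As one common option, the sketch below applies statistical outlier removal: a point is discarded when its mean distance to its k nearest neighbours exceeds the global mean of that statistic by more than `std_ratio` standard deviations. The values `k=8` and `std_ratio=2.0` are assumptions, and the brute-force neighbour search is only suitable for small clouds.

```python
import numpy as np


def mean_knn_distance(points, k):
    """Mean distance from each point to its k nearest neighbours (brute force, O(N^2))."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt(np.einsum("ijk,ijk->ij", diff, diff))
    np.fill_diagonal(dist, np.inf)               # ignore each point's distance to itself
    nearest = np.sort(dist, axis=1)[:, :k]
    return nearest.mean(axis=1)


def statistical_outlier_removal(points, k=8, std_ratio=2.0):
    """Return the inlier points and a boolean mask of which input points were kept."""
    d = mean_knn_distance(points, k)
    threshold = d.mean() + std_ratio * d.std()   # global cut-off on the mean k-NN distance
    keep = d <= threshold
    return points[keep], keep


# Usage: 200 points in a unit cube plus one isolated point far away.
rng = np.random.default_rng(0)
cloud = np.vstack([rng.uniform(0, 1, size=(200, 3)), [[100.0, 100.0, 100.0]]])
clean, keep = statistical_outlier_removal(cloud)
print(len(cloud), "->", len(clean))
```

For a full plot, the neighbour search would be done with a spatial index (e.g., a KD-tree) instead of the dense distance matrix.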

The labeled training data were not selected completely at random: we selected trunks of trees with various diameters at breast height, bushes of different heights, and canopy sections with different degrees of sparsity, so that the training data are representative of the entire forest sample.

Because there were not enough individual tree data to build a standard point cloud dataset, we manually labeled point cloud data from the forest dataset to create a training dataset. During dataset preparation, a portion of the current forest sample data was segmented to serve as the model's training set. To validate the model's effectiveness, we selected a portion of the forest sample point cloud from the dataset, which was segmented, copied, and spliced; the resulting new forest point cloud, twice the size of the original forest scene, serves as the test dataset.
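One plausible reading of the copying and splicing step is sketched below: a labelled scene is duplicated and the copy is shifted along X so that the two sit side by side, doubling the scene. The shift rule, the `gap` parameter, and the class ids in the usage are assumptions; the source does not say how the copies were placed.

```python
import numpy as np


def splice_copy(points, labels, gap=0.0):
    """Duplicate a labelled (N, 3) scene and place the copy beside the original along X."""
    width = points[:, 0].max() - points[:, 0].min()
    copy = points.copy()
    copy[:, 0] += width + gap                     # move the copy past the original's X extent
    return np.vstack([points, copy]), np.concatenate([labels, labels])


# Usage with a toy two-point scene and hypothetical class ids.
scene = np.array([[0.0, 0.0, 0.0], [2.0, 1.0, 0.5]])
scene_labels = np.array([0, 2])
big, big_labels = splice_copy(scene, scene_labels, gap=1.0)
print(big.shape, big_labels)
```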

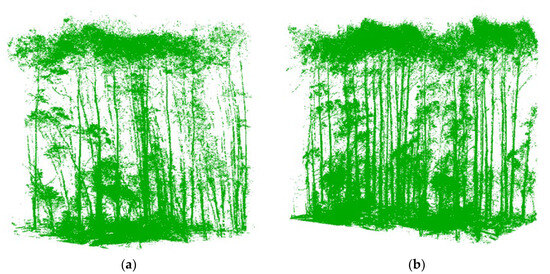

To improve the model's robustness, we randomly selected a portion of the point cloud for translation and rotation. Rotation uses the Euler angle method: the point cloud is rotated about each of the X, Y, and Z axes separately, yielding one rotation matrix per axis, and the overall rotation matrix of the point cloud is obtained by multiplying the three matrices together. Figure 5 shows the original dataset (Figure 5a) and the generated dataset (Figure 5b).
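A sketch of the Euler-angle rotation and translation described above follows. The multiplication order R = Rz · Ry · Rx and the sampling ranges in the hypothetical `augment` helper are assumptions; the source only states that the three per-axis matrices are multiplied together.

```python
import numpy as np


def euler_rotation_matrix(rx, ry, rz):
    """Compose R = Rz @ Ry @ Rx from per-axis angles in radians (order is an assumption)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx


def rotate_and_translate(points, angles, shift):
    """Rotate (N, 3) points about the origin, then translate them by `shift`."""
    R = euler_rotation_matrix(*angles)
    return points @ R.T + np.asarray(shift, dtype=float)


def augment(points, rng, max_angle=np.pi, max_shift=1.0):
    """Hypothetical ranges: uniform angles and shifts drawn independently per axis."""
    angles = rng.uniform(-max_angle, max_angle, size=3)
    shift = rng.uniform(-max_shift, max_shift, size=3)
    return rotate_and_translate(points, angles, shift)


rng = np.random.default_rng(42)
patch = rng.normal(size=(50, 3))
augmented = augment(patch, rng)
```

Because the transform is rigid, distances between points (and hence the shape of trunks or bushes) are unchanged; only pose and position vary.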

Figure 5.

Dataset views: (a) the original dataset; (b) the dataset after augmentation.

Unlike traditional ground point filtering methods based on morphological filtering, this method treats the ground points as one segmentation class alongside the other components of the forest vertical structure when generating the training, validation, and test datasets.

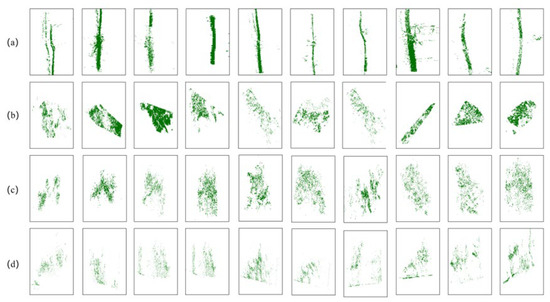

Data annotation comes first. We used CloudCompare (v2.6.3) for annotation, deliberately selecting the parts of the forest dataset with more complex vertical structures. Because semantic segmentation only requires classification by type and subsequent visualization, annotation only involves labeling each point cloud with its category. An annotation example is shown in Figure 6.

Figure 6.

Examples of labeled point clouds: (a) trunk, (b) ground, (c) leaf, and (d) bush.

The dataset is large and complex, with significant occlusion by trees. Labeling requires considerable time and effort because, in a complex forest scene, the trunk point cloud is the only part that can be labeled accurately. Where the segmentation tool in CloudCompare cannot cleanly cut out the leaves, the leaves must be segmented along the contour of the complete leaf point cloud to obtain accurate leaf features. However, slender trunks and branches are easily confused with leaves because of their positions, and such mislabeling introduces meaningless noise into training.

Because bushes cover most of the ground in the complex forest scene, labeled ground points can only be collected from the boundary of the forest. Once the bushes are labeled, the segmentation tool can be used to remove them from the scene and obtain a portion of the ground point cloud.

Because only one-third of the forest was selected for labeling, relatively little data were available for the training dataset, whereas deep learning models such as Forest-PointNet require large amounts of training data to generalize well. We addressed this in two ways. (1) The point clouds obtained from labeling contain different numbers of points, while Forest-PointNet constrains the shape of its input, so the labeled point clouds are downsampled; Figure 7 compares a point cloud before and after downsampling. Voxel downsampling divides the point cloud space into a grid, reducing the number of points while preserving the overall geometry: the points within each voxel are averaged (or weighted) to produce a single new point that replaces them, retaining the key information of the point cloud. (2) The labeled point clouds are translated and rotated to obtain multiple representations of the same point cloud.
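The voxel downsampling in (1) can be sketched as below. It assumes the unweighted centroid of each voxel (the source allows averaging or weighting) and adds a hypothetical `sample_fixed_size` helper that draws exactly 1024 points, the input size stated for Forest-PointNet in Section 2.5. The voxel size is an assumption.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)     # integer voxel index per point
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.ravel()                                 # flat voxel id per point
    n_voxels = inverse.max() + 1
    sums = np.zeros((n_voxels, points.shape[1]))
    np.add.at(sums, inverse, points)                          # accumulate coordinates per voxel
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]


def sample_fixed_size(points, n_points, rng):
    """Hypothetical helper: draw exactly n_points rows (with replacement only if too few)."""
    replace = len(points) < n_points
    idx = rng.choice(len(points), size=n_points, replace=replace)
    return points[idx]


pts = np.array([[0.1, 0.1, 0.1], [0.3, 0.3, 0.3], [1.1, 1.1, 1.1], [1.3, 1.3, 1.3]])
down = voxel_downsample(pts, voxel_size=1.0)
model_input = sample_fixed_size(down, 1024, np.random.default_rng(0))
```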

Figure 7.

Point cloud data before and after downsampling.

Even after the labeled point clouds are translated and rotated, the training dataset remains small. To introduce a controlled degree of perturbation while avoiding overfitting, Gaussian noise is added to the point cloud data.
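A minimal version of the Gaussian noise step, assuming zero-mean noise added independently to each coordinate and clipped to limit extreme displacements. The values `sigma=0.01` and `clip=0.05` are hypothetical (in the cloud's coordinate units) and would need tuning to the plot scale.

```python
import numpy as np


def jitter(points, rng, sigma=0.01, clip=0.05):
    """Add clipped zero-mean Gaussian noise to every coordinate of an (N, 3) array."""
    noise = np.clip(rng.normal(0.0, sigma, size=points.shape), -clip, clip)
    return points + noise


rng = np.random.default_rng(7)
pts = rng.uniform(0, 10, size=(500, 3))
noisy = jitter(pts, rng)
```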

Individual point clouds cannot be fed directly into the model, so after rotation, translation, and noise addition the data are divided, formatted, and processed. The data are split into training and test sets at a ratio of 7:3. Two further datasets are prepared for testing. One consists of preliminarily labeled data kept independent of the training and test sets to prevent data leakage. The other contains unlabeled point cloud data for the entire forest, which the model uses to automatically classify the ground points.
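The 7:3 split can be sketched as a shuffle over whole labelled point-cloud samples, so that points from one sample never appear in both sets. The array layout (samples × points × features) and the fixed `seed` are assumptions.

```python
import numpy as np


def split_train_test(samples, labels, train_ratio=0.7, seed=0):
    """Shuffle whole point-cloud samples and split them train_ratio : (1 - train_ratio)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(samples))
    n_train = int(round(train_ratio * len(samples)))
    train_idx, test_idx = order[:n_train], order[n_train:]
    return (samples[train_idx], labels[train_idx]), (samples[test_idx], labels[test_idx])


samples = np.zeros((10, 1024, 3))              # 10 hypothetical samples of 1024 points
labels = np.arange(10)                         # one id per sample, to check disjointness
(train_x, train_y), (test_x, test_y) = split_train_test(samples, labels)
```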

2.5. Forest-PointNet

Forest-PointNet was built by adapting the PointNet model [33]; its basic architecture is shown in Figure 8. PointNet handles 3D point cloud feature extraction well and pioneered the use of deep learning for processing 3D point cloud information. However, its limited ability to extract local features makes complex scenes difficult to analyze, so PointNet cannot reliably segment the vertical structure of forests in complex forest environments. We therefore modified the structure of PointNet to design the Forest-PointNet model.

Figure 8.

Structure of Forest-PointNet, consisting mainly of a classification network and a segmentation network that are connected by shared weights.

The architecture of Forest-PointNet mainly consists of two transformation matrix prediction networks (T-Nets), three multilayer perceptrons (MLPs), and a max pooling layer. One advantage of PointNet is its ability to cope with rotations of the input point cloud [34,35], which makes it well suited to ground point cloud segmentation in complex forest scenes, where objects appear in many forms and orientations that complicate point cloud identification and feature recognition. Forest-PointNet therefore follows PointNet's spatial transformer network (STN) to handle rotation of the forest point cloud. By learning the positional and attitude information of the point cloud, the STN learns a D × D transformation matrix that best supports classification and segmentation. The core of the STN is the T-Net, one of the key structures that allows PointNet to process points directly. The STN of Forest-PointNet uses two T-Nets: the first aligns the input point cloud before it is processed by the MLPs, and the second aligns the point features after they have been extracted by the MLPs. A regularization term is added to the training loss to constrain the predicted matrix to be close to an orthogonal matrix (Formula (1)).

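$$L_{reg} = \left\lVert I - AA^{T} \right\rVert_F^{2} \tag{1}$$

where A is the feature alignment matrix predicted by the T-Net, I is the identity matrix, and $\lVert \cdot \rVert_F$ denotes the Frobenius norm.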
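PointNet's orthogonality regularizer, the squared Frobenius norm of I − AAᵀ, can be evaluated as below. This NumPy sketch handles a single matrix or averages over a batch; in training it would be written in the deep learning framework and added to the segmentation loss with a weighting factor, which the source does not state.

```python
import numpy as np


def orthogonality_loss(A):
    """||I - A A^T||_F^2 for a (D, D) matrix, or its mean over a (B, D, D) batch."""
    A = np.asarray(A, dtype=float)
    if A.ndim == 2:
        A = A[None]                                  # treat a single matrix as a batch of one
    eye = np.eye(A.shape[-1])
    diff = eye - A @ np.swapaxes(A, -1, -2)          # I - A A^T for every matrix in the batch
    return float(np.mean(np.sum(diff ** 2, axis=(-2, -1))))


theta = 0.4
rotation = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                     [np.sin(theta), np.cos(theta), 0.0],
                     [0.0, 0.0, 1.0]])
print(orthogonality_loss(rotation), orthogonality_loss(2 * np.eye(3)))
```

An orthogonal matrix (such as a pure rotation) incurs no penalty, while a scaling matrix is penalized, which is what keeps the learned feature transform from distorting the features.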

Forest-PointNet samples 1024 points as input, applies input and feature transformations, and then aggregates the features by max pooling; the output is the predicted probability of each category. The model comprises two main modules, a classification network and a segmentation network (Figure 9), which are not independent: the classification network extracts the global features of the input point cloud, and the segmentation network fuses global and local features for feature extraction and classification.
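The interplay between the global (classification) branch and the segmentation branch can be illustrated with an untrained NumPy forward pass. It is a simplified sketch, not Forest-PointNet itself: T-Nets, dropout, and training are omitted, the layer widths (64, 128, 1024, 256, 128) are borrowed from PointNet as assumptions, and the weights are random. It shows the shared per-point MLP, the symmetric max pooling that yields the global feature, and the repetition of that global feature N times before it is concatenated with each point's local feature.

```python
import numpy as np


def init_layers(dims, rng):
    """Random (W, b) pairs for consecutive layer widths, e.g. [3, 64] -> one layer."""
    return [(rng.normal(0, 0.1, (i, o)), np.zeros(o)) for i, o in zip(dims[:-1], dims[1:])]


def shared_mlp(x, layers):
    """Apply the same dense + ReLU layers to every point; x is (N, C_in)."""
    for W, b in layers:
        x = np.maximum(x @ W + b, 0.0)
    return x


def segment_forward(points, rng, n_classes=4, local_dim=64, global_dim=1024):
    local_layers = init_layers([points.shape[1], local_dim], rng)
    global_layers = init_layers([local_dim, 128, global_dim], rng)
    head_layers = init_layers([local_dim + global_dim, 256, 128], rng)
    W_out = rng.normal(0, 0.1, (128, n_classes))

    local = shared_mlp(points, local_layers)              # (N, 64) per-point features
    per_point = shared_mlp(local, global_layers)          # (N, 1024) raised dimensionality
    global_feat = per_point.max(axis=0)                   # max pooling -> (1024,) global feature
    tiled = np.tile(global_feat, (points.shape[0], 1))    # repeat the global feature N times
    fused = np.concatenate([local, tiled], axis=1)        # (N, 64 + 1024)
    logits = shared_mlp(fused, head_layers) @ W_out       # (N, n_classes)
    logits = logits - logits.max(axis=1, keepdims=True)   # numerically stable softmax
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


cloud = np.random.default_rng(1).normal(size=(1024, 3))
probs = segment_forward(cloud, np.random.default_rng(0))
predicted_class = probs.argmax(axis=1)                   # one label per point
```

Because max pooling ignores point order, shuffling the input points only shuffles the per-point outputs.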

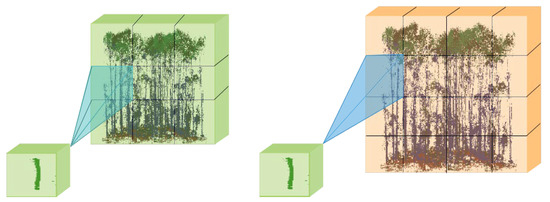

Figure 9.

The left side of the figure shows the search for the features of a single point within the global features; the right side shows the same search after the dimensionality has been raised. The comparison shows that feature matching is more accurate after raising the dimensionality.

Segmenting the vertical structure of complex forests requires separating ground from non-ground points and, consequently, segmenting the ground portion of the point cloud. However, trees grow in complex ways that follow no specific pattern for which standardized a priori knowledge exists. The same is true for bushes, which makes it difficult to separate the trunk and bush layers with general techniques, especially without a priori knowledge.

To address this issue, we took two approaches. First, point cloud normal vectors were added to the features of the labeled point cloud. Normals were estimated with a method based on local surface fitting [35,36]. The surfaces of the trunk, leaf, bush, and ground point clouds are essentially smooth, which satisfies the premise of local surface fitting. Estimation proceeds in two steps. The first step is an initial estimate of the normal vectors by local surface fitting: for each point x in the forest point cloud, find its k nearest neighbors $\mathbf{x}_1, \dots, \mathbf{x}_k$ and fit a local plane P to them in the least-squares sense. Denoting the unit normal vector of P as n, the plane is obtained by solving:

$$P(\mathbf{n}, d) = \underset{\mathbf{n},\, d}{\arg\min} \sum_{i=1}^{k} \left( \mathbf{n} \cdot \mathbf{x}_i - d \right)^2, \quad \lVert \mathbf{n} \rVert = 1 \tag{2}$$

where d is the distance from the coordinate origin O to the plane.

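Next, principal component analysis is applied to the covariance matrix of the neighborhood of x,

$$C = \frac{1}{k} \sum_{i=1}^{k} (\mathbf{x}_i - \bar{\mathbf{x}})(\mathbf{x}_i - \bar{\mathbf{x}})^{T}, \qquad \bar{\mathbf{x}} = \frac{1}{k} \sum_{i=1}^{k} \mathbf{x}_i,$$

where $\mathbf{x}_1, \dots, \mathbf{x}_k$ are the k nearest neighbors of x, to obtain its eigenvectors. Subject to ‖n‖ = 1, the eigenvector corresponding to the smallest eigenvalue is the optimal solution, that is, the normal vector sought.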
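The first estimation step, a least-squares plane fit solved by principal component analysis of each neighbourhood, can be sketched as follows. The neighbourhood size `k=10` is an assumption, the brute-force neighbour search stands in for the spatial index a full plot would need, and the returned normals are deliberately left unoriented, since orientation is handled by the second step.

```python
import numpy as np


def estimate_normals(points, k=10):
    """Unoriented unit normals: eigenvector of the smallest eigenvalue of each
    point's k-neighbourhood covariance matrix."""
    diff = points[:, None, :] - points[None, :, :]
    sq_dist = np.einsum("ijk,ijk->ij", diff, diff)
    nbr = np.argsort(sq_dist, axis=1)[:, :k]          # k nearest, including the point itself
    normals = np.empty_like(points, dtype=float)
    for i, idx in enumerate(nbr):
        q = points[idx] - points[idx].mean(axis=0)    # centre the neighbourhood
        cov = q.T @ q / len(idx)
        eigvals, eigvecs = np.linalg.eigh(cov)        # eigenvalues in ascending order
        normals[i] = eigvecs[:, 0]                    # direction of least variance
    return normals


# Usage: points sampled on the plane z = 0 should get normals of +/-(0, 0, 1).
rng = np.random.default_rng(3)
plane = np.column_stack([rng.uniform(0, 5, 200), rng.uniform(0, 5, 200), np.zeros(200)])
plane_normals = estimate_normals(plane)
```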

The normal vectors obtained by local surface fitting are not consistently oriented and cannot be used directly, so they must be reoriented. An undirected connected graph G is constructed over the points; the initial point of normal propagation, its correct normal direction, and the propagation direction are determined; and the normals of all points are then reoriented along the propagation direction. This yields normal vectors that can be applied correctly, and the final input point cloud data have the format (x, y, z, p).
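The reorientation step can be sketched with the classic minimum-spanning-tree propagation idea: build an undirected k-nearest-neighbour graph, grow a minimum spanning tree (Prim's algorithm) whose edge cost 1 − |nᵢ·nⱼ| favours nearly parallel normals, and flip each normal to agree with its parent as the tree grows. The seed rule (start each connected component at its highest point and point that normal upward), `k=8`, and the brute-force neighbour search are assumptions; the source does not specify how the initial point and direction are chosen.

```python
import heapq

import numpy as np


def orient_normals(points, normals, k=8):
    """Consistently orient unit normals by propagating signs along an MST of the k-NN graph."""
    n = len(points)
    diff = points[:, None, :] - points[None, :, :]
    sq_dist = np.einsum("ijk,ijk->ij", diff, diff)
    np.fill_diagonal(sq_dist, np.inf)
    nbr = np.argsort(sq_dist, axis=1)[:, :k]
    adj = [set() for _ in range(n)]
    for i in range(n):
        for j in nbr[i]:
            adj[i].add(int(j))
            adj[int(j)].add(i)                         # undirected graph G
    out = normals.astype(float).copy()
    visited = np.zeros(n, dtype=bool)
    # Hypothetical seed rule: highest point first, its normal pointing up (+Z).
    for seed in np.argsort(-points[:, 2]):
        seed = int(seed)
        if visited[seed]:
            continue
        if out[seed, 2] < 0:
            out[seed] = -out[seed]
        visited[seed] = True
        heap = [(1.0 - abs(out[seed] @ out[j]), seed, j) for j in adj[seed]]
        heapq.heapify(heap)
        while heap:                                    # Prim's algorithm
            _, parent, child = heapq.heappop(heap)
            if visited[child]:
                continue
            if out[parent] @ out[child] < 0:           # agree with the tree parent
                out[child] = -out[child]
            visited[child] = True
            for j in adj[child]:
                if not visited[j]:
                    heapq.heappush(heap, (1.0 - abs(out[child] @ out[j]), child, j))
    return out


# Usage: a flat 10 x 10 grid whose normals have random signs.
xs, ys = np.meshgrid(np.arange(10.0), np.arange(10.0))
grid = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(100)])
signs = np.random.default_rng(4).choice([-1.0, 1.0], size=100)
raw = np.column_stack([np.zeros(100), np.zeros(100), signs])
fixed = orient_normals(grid, raw)
```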

In addition to the methods above, we enhanced the structure of PointNet to develop Forest-PointNet, whose overall structure is shown in Figure 10. The model is designed specifically for segmenting vertical structures in complex forest scenes. To this end, we exploited the morphological differences between tree trunks and bushes by extracting morphological eigenvalues and representing them as vectors. To strengthen local feature extraction, we improved the local feature extraction network, addressing the classification network's lack of high-dimensional feature extraction capability for the point cloud. The segmentation network establishes a mapping between the features of each point and the global features: by searching the global features for the category that corresponds to the features of a single point, the model identifies the category to which the point belongs. An MLP is used to raise the dimensionality of each point's features during this feature matching, which helps ensure that the category of each point can be determined accurately. The output of the MLP's hidden layer is used directly as input to the output layer, as expressed in Formula (3).

Figure 10.

Forest-PointNet structure diagram showing multiple feature extractors with different structures.

According to Formula (3), the dimension of the input features remains unchanged while the output dimension can be adjusted. To address the lack of information in individual points, the MLP raises the dimensionality of each point and incorporates the features of all inputs, which effectively mitigates this information deficiency.

Raising the dimensionality makes the global features more comprehensive. The global features are then repeated N times and concatenated with the features of the individual points, so that each point's features are matched once against the global features. Figure 9 compares matching with and without raising the dimensionality. However, excessive dimensionality can introduce redundant information and degrade segmentation performance, and too many neurons in a single MLP layer make the model wider and increase the risk of overfitting. To counter this, in addition to widening the output layer, we increased the depth of the hidden layers in the MLP to help mitigate overfitting.

Because the training set is small, the MLP in the segmentation network must be adjusted: random dropout is added after the convolutional layers in the first three layers of the MLP to keep the model from overfitting easily. Dropout is a widely used strategy in deep learning for preventing overfitting: with a specified probability, a portion of the neural network units are temporarily removed from the network, which is equivalent to training a thinner subnetwork sampled from the full network. Two main factors tend to cause overfitting when training Forest-PointNet. First, the training set is small, so the model overfits within fewer epochs. Second, the features extracted for each single point have high dimensionality. Therefore, in Forest-PointNet's segmentation network, the dimensionality of both the single-point and global features is reduced after the MLP, followed by classification. Because the width of the network grows as the dimensionality rises in the segmentation network, making it prone to overfitting, dropout is added to prevent overfitting. Without dropout, layer l + 1 of the network computes:

$$z_i^{(l+1)} = \mathbf{w}_i^{(l+1)} \mathbf{y}^{(l)} + b_i^{(l+1)}, \qquad y_i^{(l+1)} = f\left(z_i^{(l+1)}\right)$$

where $\mathbf{y}^{(l)}$ is the output vector of layer l, $\mathbf{w}_i^{(l+1)}$ and $b_i^{(l+1)}$ are the weights and bias of unit i in layer l + 1, and f is the activation function.

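With dropout, the computation becomes:

$$r_j^{(l)} \sim \mathrm{Bernoulli}(p), \qquad \tilde{\mathbf{y}}^{(l)} = \mathbf{r}^{(l)} \ast \mathbf{y}^{(l)}, \qquad z_i^{(l+1)} = \mathbf{w}_i^{(l+1)} \tilde{\mathbf{y}}^{(l)} + b_i^{(l+1)}, \qquad y_i^{(l+1)} = f\left(z_i^{(l+1)}\right)$$

where the Bernoulli function randomly generates a vector $\mathbf{r}^{(l)}$ of 0s and 1s, each entry being 1 with probability p, and $\ast$ denotes element-wise multiplication. Multiplying the layer output $\mathbf{y}^{(l)}$ by this random vector temporarily switches off the neurons whose entries are 0, which is what distinguishes the network from one without dropout; $\mathbf{w}$, b, and f are the layer weights, biases, and activation function.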
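A NumPy sketch of one fully connected layer with Bernoulli dropout follows. Two assumptions: `keep_prob` plays the role of p, and kept activations are divided by `keep_prob` during training (the "inverted" variant used by TensorFlow's `tf.nn.dropout`), so no rescaling is needed at inference. The classic formulation instead leaves training activations unscaled and multiplies the weights by p at test time; both give the same expected activation. The ReLU activation is also an assumption.

```python
import numpy as np


def dense_with_dropout(y, W, b, keep_prob, rng, training=True):
    """One layer: z = (r * y) W + b, output = ReLU(z), with r_j ~ Bernoulli(keep_prob).

    Inverted dropout: kept activations are divided by keep_prob during training,
    so the same layer can be used unchanged at inference (training=False).
    """
    if training:
        r = rng.binomial(1, keep_prob, size=y.shape)  # random 0/1 mask
        y = y * r / keep_prob
    return np.maximum(y @ W + b, 0.0)


rng = np.random.default_rng(0)
acts = np.ones((1000, 8))
W, b = np.eye(8), np.zeros(8)
train_out = dense_with_dropout(acts, W, b, keep_prob=0.5, rng=rng)
eval_out = dense_with_dropout(acts, W, b, keep_prob=0.5, rng=rng, training=False)
```

With an identity weight matrix, each training output is either 0 (dropped) or 2 (kept and rescaled), and the average stays close to the inference value of 1.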

2.6. Experimental Environment and Parameters

The experiments were conducted on a desktop computer running 64-bit Windows 10 with an Intel Core i5-4590 3.3 GHz processor. Because most experiments involved deep learning, an NVIDIA GeForce RTX 3060 GPU was used; training on the GPU instead of the CPU significantly reduced training time. Code was written in PyCharm 2021 (build 212.5080.64), with TensorFlow-gpu 1.12.0 as the computational framework and Python 3.6.13. Table 1 lists the parameter settings of the Forest-PointNet model.

Table 1.

Experimental model parameter settings.

2.7. Assessment Method

To test the effectiveness of the model, we use the mean intersection over union (MIoU) semantic segmentation metric in addition to the accuracy and loss curves recorded during training and validation. MIoU is the average of the intersection-over-union ratios of all classes in the dataset and is calculated as shown in Formula (7).

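$$\mathrm{MIoU} = \frac{1}{k+1} \sum_{i=0}^{k} \frac{p_{ii}}{\sum_{j=0}^{k} p_{ij} + \sum_{j=0}^{k} p_{ji} - p_{ii}} \tag{7}$$

where k + 1 is the number of classes, $p_{ij}$ denotes the number of points of class i predicted as class j, and $p_{ii}$ therefore denotes the number of points of class i correctly predicted as class i.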
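MIoU can be computed from a confusion matrix whose entry (i, j) counts points of true class i predicted as class j. The sketch assumes integer class ids 0..k and, as a choice the source does not specify, skips classes that appear in neither the ground truth nor the predictions. The class-id mapping in the usage is hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] = number of points of true class i predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def mean_iou(cm):
    """Per-class IoU p_ii / (sum_j p_ij + sum_j p_ji - p_ii) and their mean."""
    tp = np.diag(cm).astype(float)
    union = cm.sum(axis=1) + cm.sum(axis=0) - tp
    iou = np.full(len(tp), np.nan)
    present = union > 0                      # skip classes absent from truth and prediction
    iou[present] = tp[present] / union[present]
    return float(np.nanmean(iou)), iou


# Hypothetical ids: 0 ground, 1 bush, 2 trunk, 3 leaf.
cm = confusion_matrix([0, 0, 1, 1, 2, 3], [0, 1, 1, 1, 2, 3], n_classes=4)
miou, per_class = mean_iou(cm)
```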

Recall, precision, and F1-score are also used to evaluate the model. Recall is the proportion of points that actually belong to a class that are correctly segmented as that class. Precision is the proportion of points segmented as a class that actually belong to it. The F1-score, the harmonic mean of precision and recall, balances under-segmentation and over-segmentation errors, so we use it to represent and compare model accuracy.

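$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where true positive (TP) denotes the number of points correctly segmented into a class, false negative (FN) denotes the number of points of that class that were not detected, and false positive (FP) denotes the number of points that do not belong to the class but were incorrectly segmented into it.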
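Per-class recall, precision, and F1-score follow from a confusion matrix (rows: true class, columns: predicted class): TP is the diagonal, FP the rest of each column, and FN the rest of each row. Reporting 0 when a ratio is undefined is an assumption of this sketch.

```python
import numpy as np


def precision_recall_f1(cm):
    """Per-class precision, recall and F1 from a confusion matrix cm[true, pred]."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as the class but belonging elsewhere
    fn = cm.sum(axis=1) - tp   # belonging to the class but predicted elsewhere

    def safe_div(a, b):
        return np.divide(a, b, out=np.zeros_like(a), where=b > 0)

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return precision, recall, f1


cm = np.array([[1, 1, 0, 0],
               [0, 2, 0, 0],
               [0, 0, 1, 0],
               [0, 0, 0, 1]])
precision, recall, f1 = precision_recall_f1(cm)
```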

3. Results

After the labeled point clouds were segmented and processed with the two data augmentation methods, the dataset used to train the model was obtained. It contains a total of 1,404,928 labeled points in 170 bush, 172 trunk, 175 leaf, and 170 ground point clouds, with approximately 2066 points per point cloud.

The resulting dataset was input into Forest-PointNet for testing and comparison. The same dataset was also used to train PointNet, and the best model weights saved during training were then used for testing. The accuracies obtained were compared, and the results are presented in Table 2. Because many uncontrollable factors influence the training of deep learning models, it is essential to repeat the tests. In this study, 10 training runs were performed with the same parameters on the same desktop computer, and the accuracy results were averaged. In addition, Forest-PointNet was tested on another server running Windows 11 with an NVIDIA GeForce RTX 3070 GPU; the results were similar, with no significant deviation. Table 2 shows that, compared with the PointNet model, the accuracy of the Forest-PointNet model is more stable across all segmentation types. Furthermore, the average accuracy of Forest-PointNet is 4.093% higher than that of the traditional PointNet model, and Forest-PointNet achieves an MIoU of 69.02%.
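The repeated-run protocol described above (identical parameters, accuracies averaged over runs) can be summarized with a small helper such as the one below. It is a hypothetical sketch rather than the authors' evaluation script, and the accuracies in the `__main__` example are placeholders, not values from Table 2. Reporting the standard deviation next to the mean is one way to quantify the run-to-run stability that Table 2 is used to illustrate.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple


def summarize_runs(run_acc: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """Mean and sample standard deviation of per-class accuracy over repeated runs."""
    summary: Dict[str, Tuple[float, float]] = {}
    for cls, values in run_acc.items():
        if len(values) < 2:
            raise ValueError(f"class {cls!r} needs at least two runs")
        summary[cls] = (mean(values), stdev(values))
    return summary


def overall_mean(summary: Dict[str, Tuple[float, float]]) -> float:
    """Average of the per-class mean accuracies."""
    return mean(m for m, _ in summary.values())


if __name__ == "__main__":
    # Placeholder accuracies for three runs; NOT values from Table 2.
    runs = {"ground": [0.90, 0.92, 0.91], "trunk": [0.88, 0.90, 0.89]}
    stats = summarize_runs(runs)
    for cls, (m, s) in stats.items():
        print(f"{cls}: {m:.4f} ± {s:.4f}")
    print(f"overall: {overall_mean(stats):.4f}")
```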

Table 2.

Forest-PointNet vs. PointNet model accuracy comparison.

Figure 11 illustrates the training accuracy and training loss of the Forest-PointNet and PointNet models. For both accuracy and loss, Forest-PointNet converges much faster and its curves change more smoothly. The light-colored regions in the figure fluctuate because, in each iteration, the model learns effective features from a batch of complex samples in order to decide whether each point belongs to a given category. As learning continues, the recognition accuracy on the training samples tends to increase while the training loss tends to decrease, indicating that training steadily optimizes the Forest-PointNet model as a whole. Over 100 epochs with BATCH_SIZE set to 32, the loss fluctuates strongly at first and eventually levels off once the model fits the data. These early fluctuations suggest that the model encounters batches of complex samples, particularly bush and leaf points, whose features are more complex and can hinder convergence. Overall, Forest-PointNet converges faster, fits more smoothly, and appears more robust than PointNet.
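Curves like those in Figure 11 are commonly drawn as a faint raw trace overlaid with a smoothed line. Assuming (the text does not say so) that the light-colored regions are the unsmoothed per-step values, the darker line could be produced by a bias-corrected exponential moving average, similar in spirit to the smoothing slider in TensorBoard. The function name `smooth_curve` and its `weight` parameter are illustrative, not part of the authors' code.

```python
from typing import List, Sequence


def smooth_curve(values: Sequence[float], weight: float = 0.6) -> List[float]:
    """Bias-corrected exponential moving average of a training curve.

    weight must lie in [0, 1); larger values give a smoother curve.
    """
    if not 0.0 <= weight < 1.0:
        raise ValueError("weight must be in [0, 1)")
    smoothed: List[float] = []
    running = 0.0
    for step, value in enumerate(values, start=1):
        running = weight * running + (1.0 - weight) * value
        # Dividing by (1 - weight**step) removes the bias toward the initial 0.0.
        smoothed.append(running / (1.0 - weight ** step))
    return smoothed
```

Setting `weight` to 0 returns the raw curve; values close to 1 smooth more aggressively but lag behind genuine changes such as the early drop in loss.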

Figure 11.

The changes in accuracy and loss function of Forest-PointNet and PointNet. (a,b) show the changes in accuracy of Forest-PointNet and PointNet, respectively; (c,d) show the changes in the loss function of Forest-PointNet and PointNet, respectively.

As shown by the confusion matrix (Figure 12), our Forest-PointNet model can accurately segment all categories, with recognition accuracies above 90% for all types.
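Per-class scores of the kind shown in Figure 12 can be derived directly from a confusion matrix. The NumPy sketch below computes precision, recall, F1, IoU, and MIoU in their conventional forms, with rows as true classes and columns as predicted classes. That orientation, and the assumption that Figure 12 is row-normalized so that its diagonal equals per-class recall, are ours and are not stated in the text.

```python
import numpy as np


def _safe_div(num: np.ndarray, den: np.ndarray) -> np.ndarray:
    """Element-wise num / den, returning 0 where den == 0."""
    out = np.zeros_like(num, dtype=float)
    np.divide(num, den, out=out, where=den > 0)
    return out


def segmentation_metrics(cm) -> dict:
    """Per-class precision, recall, F1 and IoU, plus MIoU and overall accuracy.

    cm[i, j] is the number of points whose true class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp  # true class i, predicted as another class
    fp = cm.sum(axis=0) - tp  # predicted as class i, true class differs
    precision = _safe_div(tp, tp + fp)
    recall = _safe_div(tp, tp + fn)  # diagonal of the row-normalized matrix
    f1 = _safe_div(2 * precision * recall, precision + recall)
    iou = _safe_div(tp, tp + fp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "iou": iou,
        "miou": float(iou.mean()),
        "overall_accuracy": float(tp.sum() / cm.sum()),
    }


def row_normalize(cm) -> np.ndarray:
    """Convert counts to per-row percentages, as confusion-matrix plots usually display."""
    cm = np.asarray(cm, dtype=float)
    return 100.0 * _safe_div(cm, cm.sum(axis=1, keepdims=True))
```

For example, `segmentation_metrics([[8, 2], [1, 9]])` gives recall values of 0.8 and 0.9 and an overall accuracy of 0.85.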

Figure 12.

Confusion matrix image output by Forest-PointNet.

To better demonstrate the model’s segmentation predictions, the output 3D model files were visualized in MeshLab. Figure 13 shows the results from four viewpoints and one random cross-section. The four colors represent the four vertical structures: green for leaves, blue for trunks and branches, red for bushes, and gray for the ground. The model performs well in recognizing leaves, trunks, and bushes, although a portion of the ground points is not recognized.
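A colored output like the one viewed in MeshLab can be produced by writing each predicted label as a per-vertex RGB color. The sketch below writes an ASCII PLY file, a format MeshLab opens directly, using the color scheme described above. The integer label order, the function names, and the four-decimal coordinate precision are hypothetical choices rather than details from the study.

```python
from typing import Sequence, Tuple

# Colour per class, following the scheme described in the text.
# The integer label order (0-3) is a hypothetical assumption.
LABEL_COLORS = {
    0: (128, 128, 128),  # ground: gray
    1: (255, 0, 0),      # bush: red
    2: (0, 0, 255),      # trunk and branches: blue
    3: (0, 255, 0),      # leaf: green
}


def labeled_ply_text(points: Sequence[Tuple[float, float, float]], labels: Sequence[int]) -> str:
    """Build an ASCII PLY document with one RGB colour per point."""
    if len(points) != len(labels):
        raise ValueError("points and labels must have the same length")
    lines = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    for (x, y, z), label in zip(points, labels):
        r, g, b = LABEL_COLORS[label]
        lines.append(f"{x:.4f} {y:.4f} {z:.4f} {r} {g} {b}")
    return "\n".join(lines) + "\n"


def write_labeled_ply(path: str, points, labels) -> None:
    """Write the coloured point cloud to `path` so it can be opened in MeshLab."""
    with open(path, "w", encoding="ascii") as f:
        f.write(labeled_ply_text(points, labels))
```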

Figure 13.

Semantic segmentation of the vertical structure of a forest scene, shown from different perspectives, where black indicates ground points, blue indicates trunk points, green indicates leaf points, and red indicates bush points. (a–e) present the prediction results from the left front, directly above, right front, directly in front, and cross-sectional views.

The prediction results reveal that the model predicts trunks and leaves fairly accurately, particularly leaves located among the branches, and correctly identifies trunks. Bush recognition is also relatively accurate, and most bushes on the ground are correctly recognized.

4. Discussion

4.1. The Advantages of Our Approach

The segmentation of forest vertical structure in TLS data is an essential prerequisite for understanding forest stand structure and estimating biomass [37,38,39]. Machine vision models and image processing techniques are currently widely used for instance and semantic segmentation [40]. However, where spatial information is restricted and point density is high, such as in dense bush areas or at tall growth positions, leaves at low vertical positions may be detected inaccurately. In addition, areas with high tree density may have overlapping canopies and dense foliage, which make it difficult to differentiate trunks from leaves [41,42,43]. Traditional methods may therefore struggle in these situations, resulting in under-segmentation or over-segmentation.

Three-dimensional deep learning models can model data with higher dimensionality and a hierarchical structure. They adopt a high-level abstraction approach [44,45] that allows them to extract effective spatial structural features from large numbers of samples, and their performance improves continuously through training [46]. With advances in deep learning, some studies have also segmented vertical structures using 2D images as input data [47,48,49]. Despite the strong support of machine vision technology [50,51], these studies still lack 3D spatial information about the research target. This paper therefore proposes a new deep learning model, Forest-PointNet, based on the PointNet model, to segment the disorganized, inhomogeneous, and irregular point clouds of forest vertical structure. Based on our preliminary investigation, Forest-PointNet performs vertical structure segmentation directly on scanned data, making it a novel attempt to make full use of the spatial characteristics of the point cloud. In testing, the model performed promisingly, outperforming PointNet in segmenting the vertical structure of forests.
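The property that lets PointNet-style networks consume disordered point clouds directly is a shared per-point transformation followed by a symmetric max-pooling function, as introduced by Qi et al. The NumPy sketch below illustrates only that principle with random, untrained weights (no T-Net alignment, no training); it is not the Forest-PointNet architecture, whose layer configuration is not reproduced here.

```python
import numpy as np


def shared_mlp(points: np.ndarray, weights, biases) -> np.ndarray:
    """Apply the same layers to every point independently: (N, 3) -> (N, C)."""
    h = points
    for w, b in zip(weights, biases):
        h = np.maximum(h @ w + b, 0.0)  # linear layer + ReLU, shared across points
    return h


def global_feature(points: np.ndarray, weights, biases) -> np.ndarray:
    """Max pooling over the point axis: a symmetric, order-independent descriptor."""
    return shared_mlp(points, weights, biases).max(axis=0)


def per_point_features(points: np.ndarray, weights, biases) -> np.ndarray:
    """Concatenate each point's feature with the global feature for per-point labelling."""
    local = shared_mlp(points, weights, biases)
    glob = np.broadcast_to(local.max(axis=0), local.shape)
    return np.concatenate([local, glob], axis=1)
```

Shuffling the rows of `points` leaves `global_feature` unchanged, and the output of `per_point_features` is shuffled in the same way, which is why such a network can label unordered scan points without first imposing an ordering.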

4.2. Comparison with Existing Methods

To assess the segmentation performance of the models more objectively, we performed segmentation and prediction on a publicly available forest point cloud dataset from the Evo region of Finland. We first examined the predictions of the PointNet and Forest-PointNet models separately from a top view (Figure 14). Figure 14a,c show the prediction results of the PointNet model, while Figure 14b,d show those of the Forest-PointNet model. The larger number of bush and ground points labeled blue in Figure 14c suggests that PointNet more often misclassified ground and bush points as trunk points.

Figure 14.

Prediction effect of Forest-PointNet and PointNet models on the test dataset: (a,c) correspond to the prediction effect of the PointNet model, and (b,d) correspond to the prediction effect of the Forest-PointNet model.

Figure 14c,d show the prediction results of the PointNet and Forest-PointNet models, respectively, for the same forest sample data, viewed from the same angle to allow a more objective comparison. Although PointNet accomplishes basic forest vertical structure segmentation, it shows more pronounced over- and under-segmentation at the junctions of canopy branches, incorrectly predicting many leaves as trunks. This produces a considerably larger number of blue markers outside the canopy boundary. Furthermore, some of the bushes in the sample plots, shown in red, are also misclassified as trunks, so more blue markers appear among the bushes. When Forest-PointNet was trained with the same training data and parameters as PointNet, the overall accuracy of its predictions was significantly higher. The minor under- or over-segmentation that remains at certain canopy junctions is mainly attributable to intertwining trees at the sample site, the uneven distribution of trunks, and mutual shading between overlapping canopies, which blur the boundary between trunks and branches and reduce the model’s recognition ability. Overall, with these targeted improvements, Forest-PointNet shows a clear advantage in the forest vertical structure segmentation task.

To evaluate the models more objectively, we also compared our results with those reported for models proposed in other papers, which differ in structure but all perform well in 3D point cloud feature extraction. These values may not represent the absolute performance of the algorithms, because the labeled data and labeling methods differ between studies; nevertheless, they serve as a useful reference for future studies.

Table 3 compares our study with other papers. Note that each study defines its categories differently; for instance, some studies filter out ground and bushes and include only categories such as leaves and trunks. The comparison shows that Forest-PointNet excels in overall accuracy and outperforms the other approaches specifically for bushes and ground points, whereas its leaf segmentation accuracy is comparable to that of the other methods.

Table 3.

Comparison of the precision of each method.

Although the comparison has some limitations, our method obtained more accurate results (Table 3). The comparison also clarifies the differences between methods, which will inform our future research.

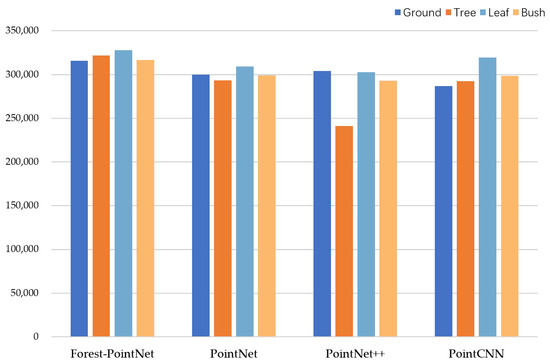

We tested the four models on the first dataset, which contains a total of 361,550 leaf points, 336,806 ground points, 355,352 trunk points, and 351,220 bush points. Figure 15 shows the number of points that each model identified correctly. For all four types, the number of points correctly predicted by Forest-PointNet is higher than the average of the four models. Comparing the correctly recognized points in this way provides a further objective evaluation of model performance.
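The per-class counts above add up to 1,404,928 points, the same total as the labeled dataset described in Section 3, and each class makes up roughly a quarter of the data (about 24.0% to 25.7%), so correct-point counts in Figure 15 are broadly comparable across classes. The sketch below tabulates these shares and shows how the comparison with the four-model average could be computed. The correct-point counts behind Figure 15 are not given in the text, so the helpers take them as inputs, and any values passed to them are placeholders.

```python
from typing import Dict

# Per-class point counts of the first test dataset, as reported in the text.
CLASS_POINTS: Dict[str, int] = {
    "leaf": 361_550,
    "ground": 336_806,
    "trunk": 355_352,
    "bush": 351_220,
}


def class_shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Fraction of all points belonging to each class."""
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.items()}


def correct_rate(correct: Dict[str, int], counts: Dict[str, int]) -> Dict[str, float]:
    """Share of each class's points that a model identified correctly (per-class recall)."""
    return {cls: correct[cls] / counts[cls] for cls in counts}


def beats_model_average(correct_by_model: Dict[str, Dict[str, int]], model: str) -> Dict[str, bool]:
    """For each class, whether `model` exceeds the mean correct count over all models."""
    n_models = len(correct_by_model)
    return {
        cls: correct_by_model[model][cls]
        > sum(counts[cls] for counts in correct_by_model.values()) / n_models
        for cls in correct_by_model[model]
    }
```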

Figure 15.

Number of points correctly predicted by each of the four mainstream models for each of the four forest vertical structure types.

4.3. Limitations and Potential Improvements

Compared with traditional machine learning methods, deep learning methods have certain limitations, including a reliance on large datasets and insufficient accuracy when such data are lacking. However, deep learning also offers several advantages: it eliminates the need to screen features manually and reduces the human and material resources required to analyze them. Moreover, as LiDAR technology continues to advance, larger and more comprehensive point cloud datasets will become available, and the effectiveness of deep learning models can be expected to improve along with data quality.

Forest-PointNet uses one-third of the pure forest dataset as the source of its training data. The training set is then split, rotated, translated, and perturbed with Gaussian noise to create a diverse training set, and the pure forest dataset is expanded by rotation and splicing to generate a new dataset for testing. Evaluation with accuracy and MIoU, and comparison with the traditional PointNet model, show that Forest-PointNet achieves better semantic segmentation results for ground-based (TLS) point clouds of pure forests. However, several areas still require further research and improvement. First, the experimental and test scenarios in this study focused only on forests with ideal growth conditions; because of limited data sources, more complex forest scenarios have not yet been tested. Future studies will need to test forest scenarios with diverse stand structures and adjust the model structure and parameters accordingly. Second, both semantic segmentation and instance segmentation are crucial in forest scene segmentation, but Forest-PointNet currently performs only semantic segmentation. It can therefore differentiate leaves, trunks, bushes, and ground, but cannot separate the trunks and leaves belonging to individual trees. This functionality needs to be developed in future research, as it holds potential for applying LiDAR in forestry research.
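The augmentation steps listed above (splitting, rotating, translating, and adding Gaussian noise) can be sketched with NumPy as follows. The choices here are assumptions, not the authors' settings: rotation is restricted to the vertical axis so that the ground-to-canopy ordering is preserved, translation is horizontal only, and `block_size`, `max_shift`, `sigma`, and `clip` are hypothetical values in the units of the point coordinates.

```python
import numpy as np


def rotate_z(points: np.ndarray, angle: float) -> np.ndarray:
    """Rotate (N, 3) points about the vertical z axis, leaving heights unchanged."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ rot.T


def augment(points, rng, max_shift=0.5, sigma=0.01, clip=0.05):
    """Random z-rotation, horizontal translation and clipped Gaussian jitter.

    max_shift, sigma and clip are hypothetical values (same units as the coordinates).
    """
    out = rotate_z(points, rng.uniform(0.0, 2.0 * np.pi))
    shift = np.append(rng.uniform(-max_shift, max_shift, size=2), 0.0)  # no vertical shift
    noise = np.clip(rng.normal(0.0, sigma, size=out.shape), -clip, clip)
    return out + shift + noise


def split_into_blocks(points, block_size=5.0):
    """Partition a plot into square blocks on the horizontal (x, y) grid."""
    keys = np.floor(points[:, :2] / block_size).astype(int)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.ravel()  # keep 1-D across NumPy versions
    return [points[inverse == k] for k in range(inverse.max() + 1)]
```

A typical call is `augment(block, np.random.default_rng(seed))` applied to each block returned by `split_into_blocks`, producing a new randomized copy every epoch.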

Meanwhile, PointNet-based deep learning networks cannot capture the local structures induced by the metric space in which the points lie, which limits their ability to recognize fine-grained patterns. To address this issue, we propose integrating a state-of-the-art neural network as the backbone. The aim is to improve recognition accuracy and thereby reduce over- and under-segmentation in the parts of trees where trunks and leaves connect.
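One common way to give a network the local structure that plain PointNet lacks is to group each point with its neighbors and encode their relative offsets, as in the set-abstraction layers of PointNet++ (which group by ball query) or in kNN-based graph networks. The brute-force NumPy sketch below uses kNN grouping with a single random shared layer; it illustrates the idea only and is not the backbone the authors intend to adopt, which the text does not name.

```python
import numpy as np


def knn_indices(points: np.ndarray, k: int) -> np.ndarray:
    """Brute-force k nearest neighbours (each point is its own first neighbour).

    Memory is O(N^2), so this is only suitable for small illustrative inputs.
    """
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.einsum("ijk,ijk->ij", diff, diff)
    return np.argsort(dist2, axis=1)[:, :k]


def local_features(points: np.ndarray, weight: np.ndarray, k: int = 8) -> np.ndarray:
    """Encode each point's neighbourhood: shared layer on relative offsets, then max-pool.

    Using offsets (neighbour - centre) makes the feature describe local shape rather
    than absolute position, which a plain per-point PointNet layer cannot capture.
    """
    idx = knn_indices(points, k)                # (N, k)
    offsets = points[idx] - points[:, None, :]  # (N, k, 3)
    h = np.maximum(offsets @ weight, 0.0)       # (N, k, C), ReLU
    return h.max(axis=1)                        # (N, C)
```

Because the features depend only on offsets, translating the whole cloud leaves them unchanged, while the neighborhood encoding can help distinguish local shapes such as a thin branch meeting a cluster of leaves.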

Beyond this, based on the current experimental results, we can only demonstrate that our model works in deciduous broadleaf forests, and further experiments are needed to determine whether it is applicable to coniferous forests.

5. Conclusions

In this paper, we present a deep learning method for recognizing the vertical structure of forests in terrestrial LiDAR scans at the point cloud scale, which achieves an average recognition accuracy of 90.98%. Our approach has several advantages over current approaches to forest semantic segmentation. Compared with machine-learning-based forest vertical structure segmentation methods, deep learning methods do not rely on hand-crafted features, and pre-trained deep learning models generalize better. Compared with methods based on 3D structural reconstruction, deep learning methods extract and learn features directly from the forest point cloud, which yields higher accuracy. Compared with deep learning methods such as PointCNN, PointNet, and PointNet++, our method builds on the strengths of PointNet and improves its local feature extraction capability to a certain extent, raising accuracy by about 4% over PointNet. We combine this with other knowledge to achieve the segmentation of vertical structures. Our study applies deep learning to the semantic segmentation of ground-based (TLS) point clouds in complex forest environments, and we adapted the model specifically for this task, creating the Forest-PointNet deep learning model. Forest-PointNet improves the classification of individual points while retaining the global feature extraction capability of PointNet. With anti-overfitting measures, the model is better suited to semantic segmentation in complex forest scenarios while retaining the important spatial features of forest point clouds. Our results show that Forest-PointNet achieves the best performance in recognizing the four vertical structures: leaves, branches, bushes, and ground. It also performs well on validation point cloud data that include complex forest structures, branch crossings, and branch occlusions.
In conclusion, our proposed deep learning framework directly processes forest point cloud data and successfully performs vertical structure segmentation, even when extensive prior knowledge is not available. After training, the model is well adapted to semantic segmentation in complex forest scenarios.

Author Contributions

Conceptualization, F.C. and Z.M.; methodology, Z.M.; software, Y.D. and Z.M.; validation, Y.D., Z.M. and J.Z.; formal analysis, Y.D.; investigation, Z.M.; resources, F.C.; data curation, Y.D.; writing—original draft preparation, Z.M.; writing—review and editing, F.C., J.Z., F.X., Y.D. and Z.M.; visualization, Y.D.; supervision, F.C.; project administration, F.C. and F.X.; funding acquisition, F.C. and F.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China, grant number 2022YFF1302700; the Emergency Open Competition Project of National Forestry and Grassland Administration, grant number 202303; and the Outstanding Youth Team Project of Central Universities, grant number QNTD202308.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, S.; Wang, T.; Hou, Z.; Gong, Y.; Feng, L.; Ge, J. Harnessing terrestrial laser scanning to predict understory biomass in temperate mixed forests. Ecol. Indic. 2021, 121, 107011.

- Brunner, A.; Houtmeyers, S. Segmentation of conifer tree crowns from terrestrial laser scanning point clouds in mixed stands of Scots pine and Norway spruce. Eur. J. For. Res. 2022, 141, 909–925.

- Itakura, K.; Hosoi, F. Automatic individual tree detection and canopy segmentation from three-dimensional point cloud images obtained from ground-based lidar. J. Agric. Meteorol. 2018, 74, 109–113.

- Wei, H.; Xu, E.; Zhang, J.; Meng, Y.; Wei, J.; Dong, Z.; Li, Z. BushNet: Effective semantic segmentation of bush in large-scale point clouds. Comput. Electron. Agric. 2022, 193, 106653.

- Reitberger, J.; Schnörr, C.; Krzystek, P.; Stilla, U. 3D segmentation of single trees exploiting full waveform LIDAR data. ISPRS J. Photogramm. Remote Sens. 2009, 64, 561–574.

- Briechle, S.; Krzystek, P.; Vosselman, G. Classification of tree species and standing dead trees by fusing uav-based lidar data and multispectral imagery in the 3d deep neural network pointnet++. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 2, 203–210.

- Liu, G.; Wang, J.; Dong, P.; Chen, Y.; Liu, Z. Estimating Individual Tree Height and Diameter at Breast Height (DBH) from Terrestrial Laser Scanning (TLS) Data at Plot Level. Forests 2018, 9, 398.

- Panagiotidis, D.; Abdollahnejad, A.; Slavík, M. Assessment of Stem Volume on Plots Using Terrestrial Laser Scanner: A Precision Forestry Application. Sensors 2021, 21, 301.

- Guo, Z.; Feng, C.C. Using multi-scale and hierarchical deep convolutional features for 3D semantic classification of TLS point clouds. Int. J. Geogr. Inf. Sci. 2020, 34, 661–680.

- Zhang, J.; Wang, J.; Liu, G. Vertical Structure Classification of a Forest Sample Plot Based on Point Cloud Data. J. Indian Soc. Remote Sens. 2020, 48, 1215–1222.

- Zhang, J.; Wang, J.; Dong, P.; Ma, W. Tree stem extraction from TLS point-cloud data of natural forests based on geometric features and DBSCAN. Geocarto Int. 2022, 37, 10392–10406.

- Sun, Y.; Liang, X.; Liang, Z.; Welham, C.; Li, W. Deriving Merchantable Volume in Poplar through a Localized Tapering Function from Non-Destructive Terrestrial Laser Scanning. Forests 2016, 7, 87.

- Wu, B.; Zheng, G.; Chen, Y. An Improved Convolution Neural Network-Based Model for Classifying Foliage and Woody Components from Terrestrial Laser Scanning Data. Remote Sens. 2020, 12, 1010.

- Lin, W.; Fan, W.; Liu, H.; Xu, Y.; Wu, J. Classification of Handheld Laser Scanning Tree Point Cloud Based on Different KNN Algorithms and Random Forest Algorithm. Forests 2021, 12, 292.

- Wang, Y.; Weinacker, H.; Koch, B. A Lidar Point Cloud Based Procedure for Vertical Canopy Structure Analysis And 3D Single Tree Modelling in Forest. Sensors 2008, 8, 3938–3951.

- Lu, X.; Guo, Q.; Li, W.; Flanagan, J. A bottom-up approach to segment individual deciduous trees using leaf-off lidar point cloud data. ISPRS J. Photogramm. Remote Sens. 2014, 94, 1–12.

- Seidel, D.; Albert, K.; Fehrmann, L.; Ammer, C. The potential of terrestrial laser scanning for the estimation of understory biomass in coppice-with-standard systems. Biomass-Bioenergy 2012, 47, 20–25.

- Beyene, S.M.; Hussin, Y.A.; Kloosterman, H.E.; Ismail, M.H. Forest Inventory and Aboveground Biomass Estimation with Terrestrial LiDAR in the Tropical Forest of Malaysia. Can. J. Remote Sens. 2020, 46, 130–145.

- Wang, D. Unsupervised semantic and instance segmentation of forest point clouds. ISPRS J. Photogramm. Remote Sens. 2020, 165, 86–97.

- Liu, L.; Pang, Y.; Li, Z.; Si, L.; Liao, S. Combining Airborne and Terrestrial Laser Scanning Technologies to Measure Forest Understorey Volume. Forests 2017, 8, 111.

- Pu, L.; Xv, J.; Deng, F. An Automatic Method for Tree Species Point Cloud Segmentation Based on Deep Learning. J. Indian Soc. Remote Sens. 2021, 49, 2163–2172.

- Wang, L.; Huang, Y.; Hou, Y.; Zhang, S.; Shan, J. Graph attention convolution for point cloud semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10296–10305.

- Gao, X.-Y.; Wang, Y.-Z.; Zhang, C.-X.; Lu, J.-Q. Multi-Head Self-Attention for 3D Point Cloud Classification. IEEE Access 2021, 9, 18137–18147.

- Vanian, V.; Zamanakos, G.; Pratikakis, I. Improving performance of deep learning models for 3D point cloud semantic segmentation via attention mechanisms. Comput. Graph. 2022, 106, 277–287.

- Zhang, J.; Zhao, X.; Chen, Z.; Lu, Z. A Review of Deep Learning-Based Semantic Segmentation for Point Cloud. IEEE Access 2019, 7, 179118–179133.

- Chen, X.; Jiang, K.; Zhu, Y.; Wang, X.; Yun, T. Individual Tree Crown Segmentation Directly from UAV-Borne LiDAR Data Using the PointNet of Deep Learning. Forests 2021, 12, 131.

- Liu, B.; Chen, S.; Huang, H.; Tian, X. Tree Species Classification of Backpack Laser Scanning Data Using the PointNet++ Point Cloud Deep Learning Method. Remote Sens. 2022, 14, 3809.

- Jing, Z.; Guan, H.; Zhao, P.; Li, D.; Yu, Y.; Zang, Y.; Wang, H.; Li, J. Multispectral LiDAR point cloud classification using SE-PointNet++. Remote Sens. 2021, 13, 2516.

- Komori, J.; Hotta, K. AB-PointNet for 3D point cloud recognition. In Proceedings of the 2019 Digital Image Computing: Techniques and Applications (DICTA), Perth, Australia, 2–4 December 2019; pp. 1–6.

- Aoki, Y.; Goforth, H.; Srivatsan, R.A.; Lucey, S. PointNetLK: Robust & efficient point cloud registration using PointNet. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7163–7172.

- Yrttimaa, T.; Vastaranta, M.; Saarinen, N.; Kankare, V.; Luoma, V.; Hyyppä, J.; University of Eastern Finland. Terrestrial Laser Scanning Point Clouds from Evo Test Site, 80 Sample Plots, Autumn 2021; Metsätieteiden osasto, Version 1; University of Eastern Finland: Joensuu, Finland, 2022.

- Fu, H.; Li, H.; Dong, Y.; Xu, F.; Chen, F. Segmenting Individual Tree from TLS Point Clouds Using Improved DBSCAN. Forests 2022, 13, 566.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Wagers, S.; Castilla, G.; Filiatrault, M.; Sanchez-Azofeifa, G.A. Using TLS-Measured Tree Attributes to Estimate Aboveground Biomass in Small Black Spruce Trees. Forests 2021, 12, 1521.

- Sanchez, J.; Denis, F.; Coeurjolly, D.; Dupont, F.; Trassoudaine, L.; Checchin, P. Robust normal vector estimation in 3D point clouds through iterative principal component analysis. ISPRS J. Photogramm. Remote Sens. 2020, 163, 18–35.

- Mitra, N.J.; Nguyen, A. Estimating surface normals in noisy point cloud data. In Proceedings of the Nineteenth Annual Symposium on Computational Geometry, San Diego, CA, USA, 8–10 June 2003; pp. 322–328.

- Xu, D.; Wang, H.; Xu, W.; Luan, Z.; Xu, X. LiDAR Applications to Estimate Forest Biomass at Individual Tree Scale: Opportunities, Challenges and Future Perspectives. Forests 2021, 12, 550.

- Nurunnabi, A.; Teferle, F.N.; Li, J.; Lindenbergh, R.C.; Parvaz, S. Investigation of pointnet for semantic segmentation of large-scale outdoor point clouds. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 46, 397–404.

- Calders, K.; Newnham, G.; Burt, A.; Murphy, S.; Raumonen, P.; Herold, M.; Culvenor, D.; Avitabile, V.; Disney, M.; Armston, J.; et al. Nondestructive estimates of above-ground biomass using terrestrial laser scanning. Methods Ecol. Evol. 2014, 6, 198–208.

- Tchapmi, L.P.; Choy, C.B.; Armeni, I.; Gwak, J.; Savarese, S. SEGCloud: Semantic segmentation of 3d point clouds. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 537–547.

- Cabo, C.; Ordóñez, C.; Sánchez-Lasheras, F.; Roca-Pardiñas, J.; de Cos-Juez, J. Multiscale Supervised Classification of Point Clouds with Urban and Forest Applications. Sensors 2019, 19, 4523.

- Liu, M.; Han, Z.; Chen, Y.; Liu, Z.; Han, Y. Tree species classification of LiDAR data based on 3D deep learning. Measurement 2021, 177, 109301.

- Wan, P.; Shao, J.; Jin, S.; Wang, T.; Yang, S.; Yan, G.; Zhang, W. A novel and efficient method for wood–leaf separation from terrestrial laser scanning point clouds at the forest plot level. Methods Ecol. Evol. 2021, 12, 2473–2486.

- Ferrara, R.; Virdis, S.G.; Ventura, A.; Ghisu, T.; Duce, P.; Pellizzaro, G. An automated approach for wood-leaf separation from terrestrial LIDAR point clouds using the density based clustering algorithm DBSCAN. Agric. For. Meteorol. 2018, 262, 434–444.

- Han, T.; Sánchez-Azofeifa, G.A. A Deep Learning Time Series Approach for Leaf and Wood Classification from Terrestrial LiDAR Point Clouds. Remote Sens. 2022, 14, 3157.

- Abd Rahman, M.Z.; Gorte, B.G.H.; Bucksch, A.K. A new method for individual tree delineation and undergrowth removal from high resolution airborne LiDAR. In Proceedings of the ISPRS Workshop Laserscanning 2009, Paris, France, 1–2 September 2009; 38, pp. 283–288.

- Wang, D.; Hollaus, M.; Pfeifer, N. Feasibility of machine learning methods for separating wood and leaf points from terrestrial laser scanning data. In Proceedings of the ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences, Wuhan, China, 18–22 September 2017; pp. 157–164.

- Hui, Z.; Jin, S.; Xia, Y.; Wang, L.; Ziggah, Y.Y.; Cheng, P. Wood and leaf separation from terrestrial LiDAR point clouds based on mode points evolution. ISPRS J. Photogramm. Remote Sens. 2021, 178, 219–239.

- Xi, Z.; Hopkinson, C.; Rood, S.B.; Peddle, D.R. See the forest and the trees: Effective machine and deep learning algorithms for wood filtering and tree species classification from terrestrial laser scanning. ISPRS J. Photogramm. Remote Sens. 2020, 168, 1–16.

- Dechesne, C.; Mallet, C.; Le Bris, A.; Gouet-Brunet, V. Semantic segmentation of forest stands of pure species combining airborne lidar data and very high resolution multispectral imagery. ISPRS J. Photogramm. Remote Sens. 2017, 126, 129–145.

- Kaijaluoto, R.; Kukko, A.; El Issaoui, A.; Hyyppä, J.; Kaartinen, H. Semantic segmentation of point cloud data using raw laser scanner measurements and deep neural networks. ISPRS Open J. Photogramm. Remote Sens. 2021, 3, 100011.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).