EddyDet: A Deep Framework for Oceanic Eddy Detection in Synthetic Aperture Radar Images

Abstract

1. Introduction

2. Dataset

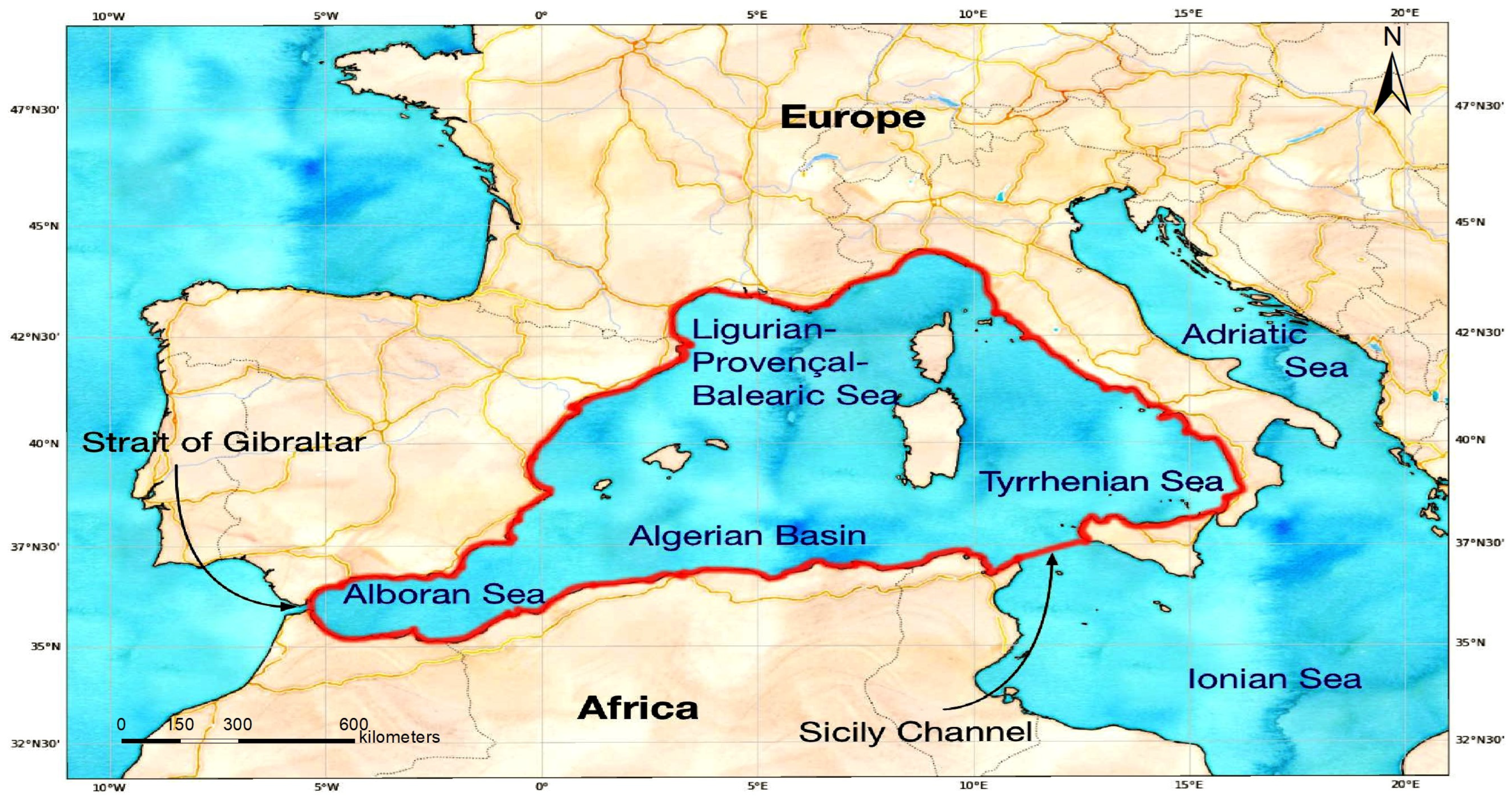

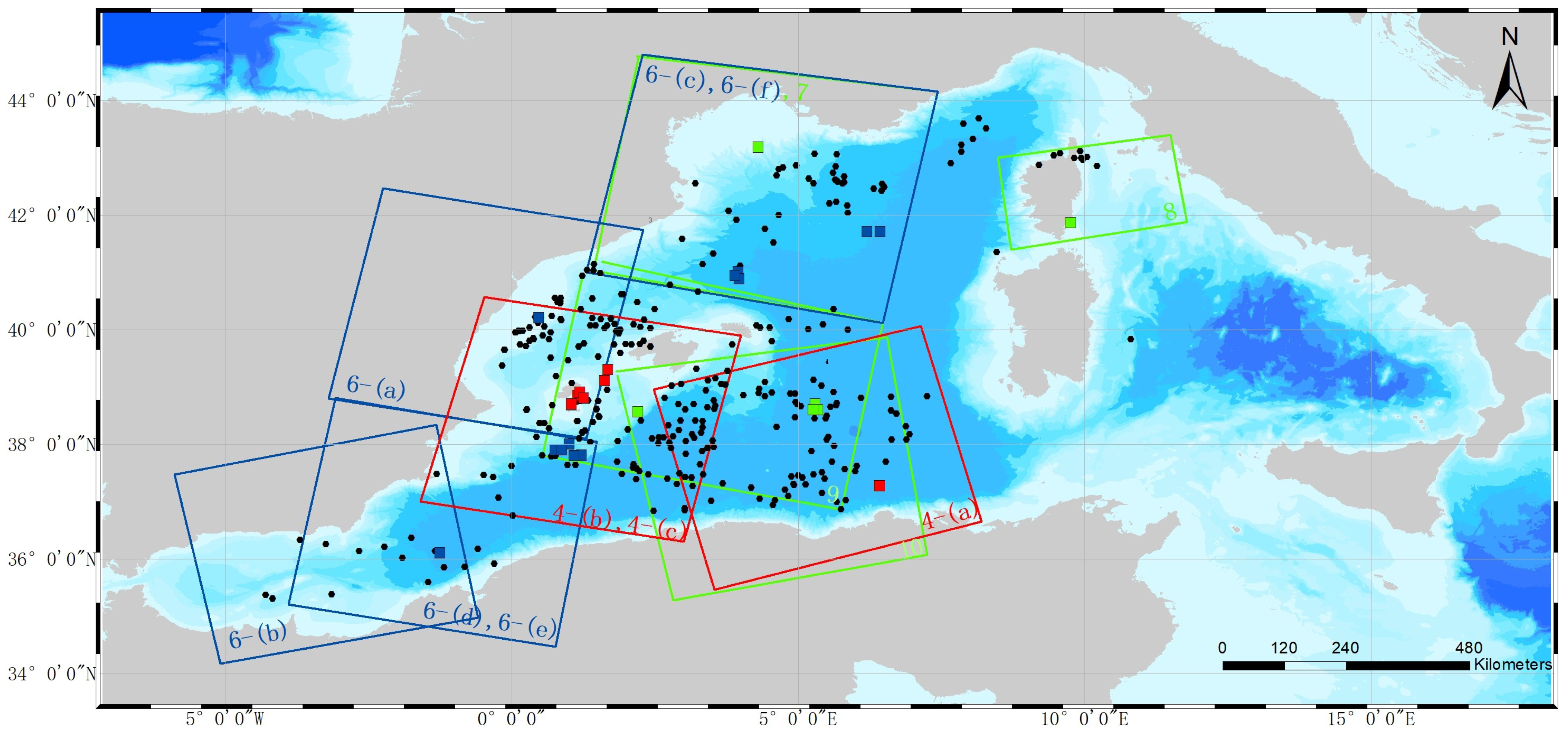

2.1. Data Collection

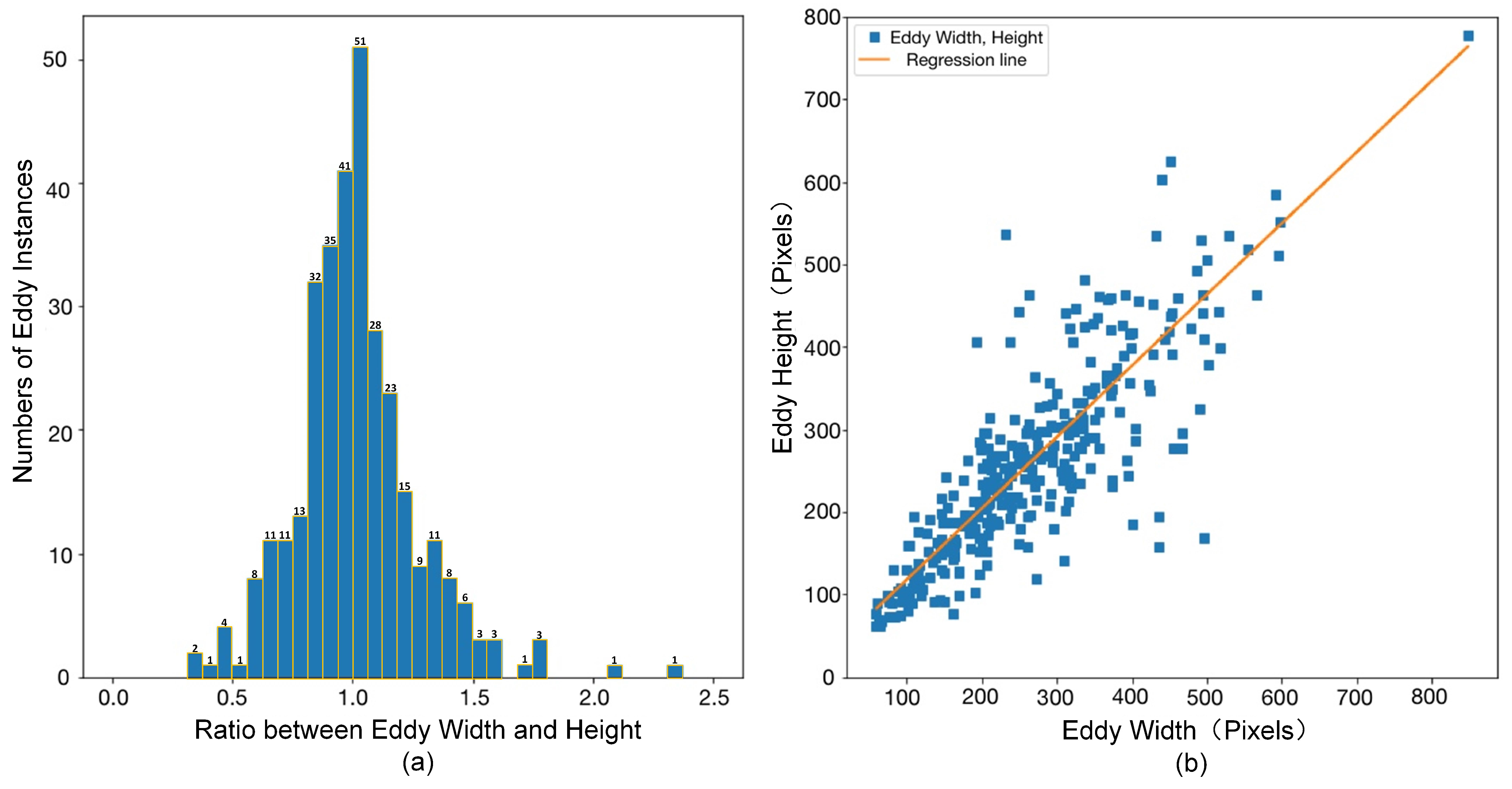

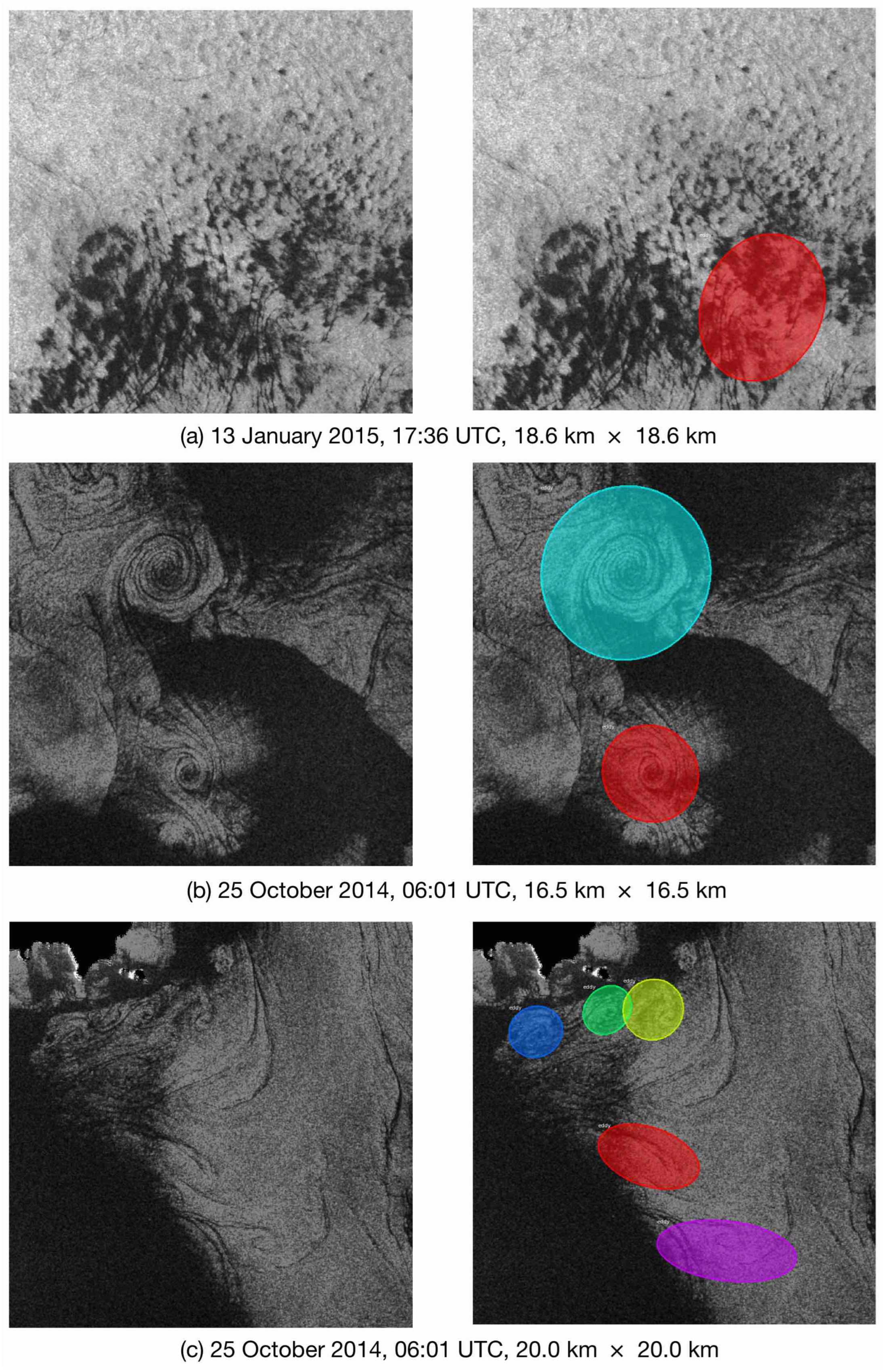

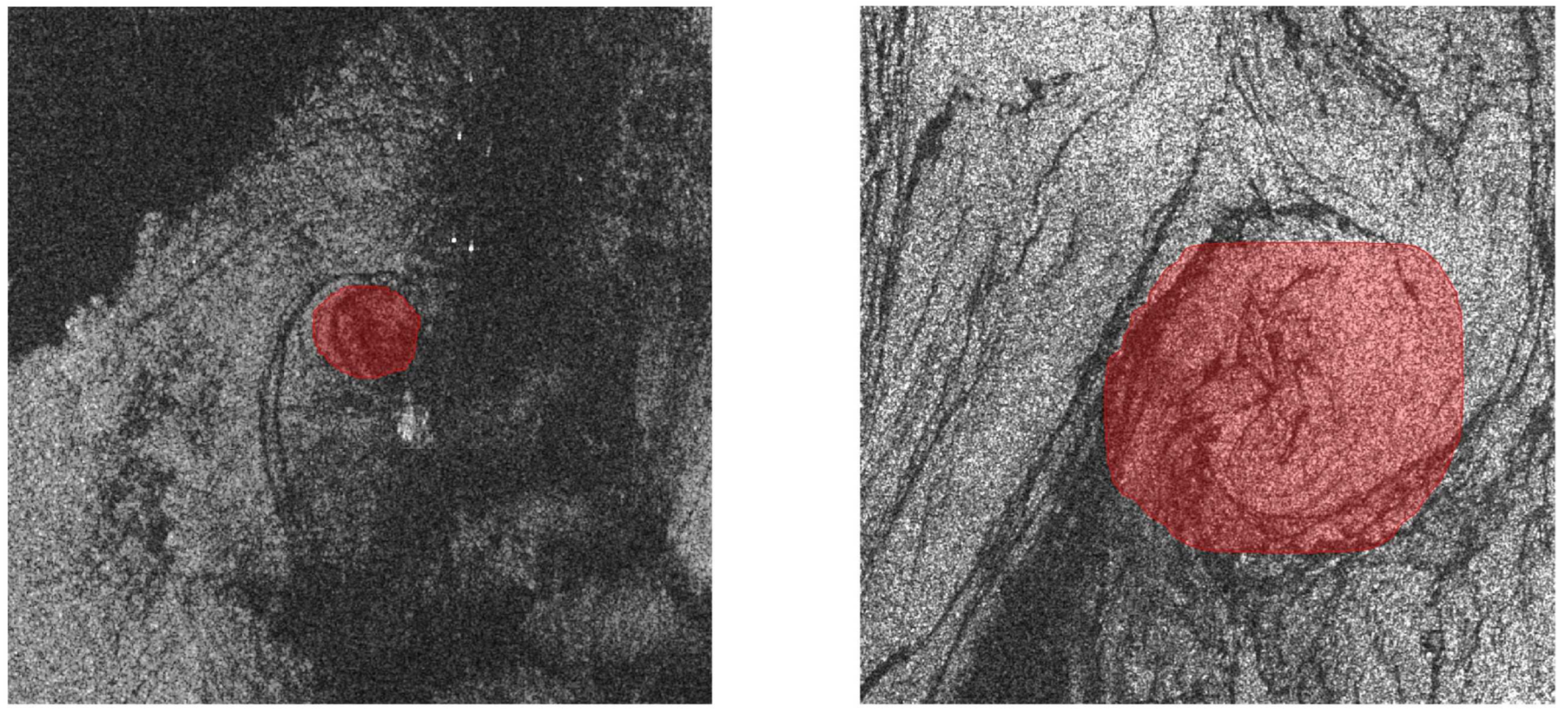

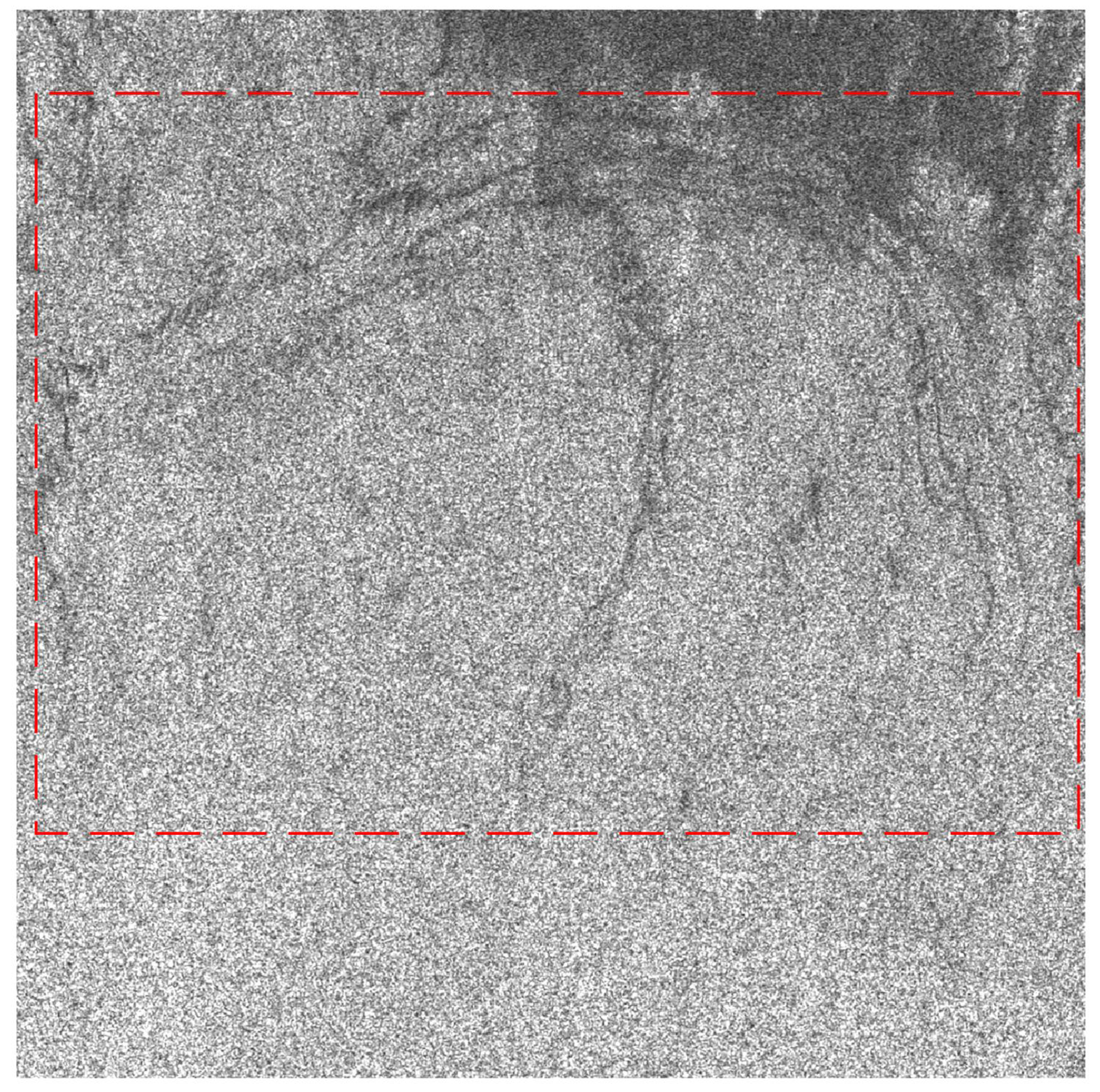

2.2. Dataset Construction

3. Methodology

3.1. System Architecture

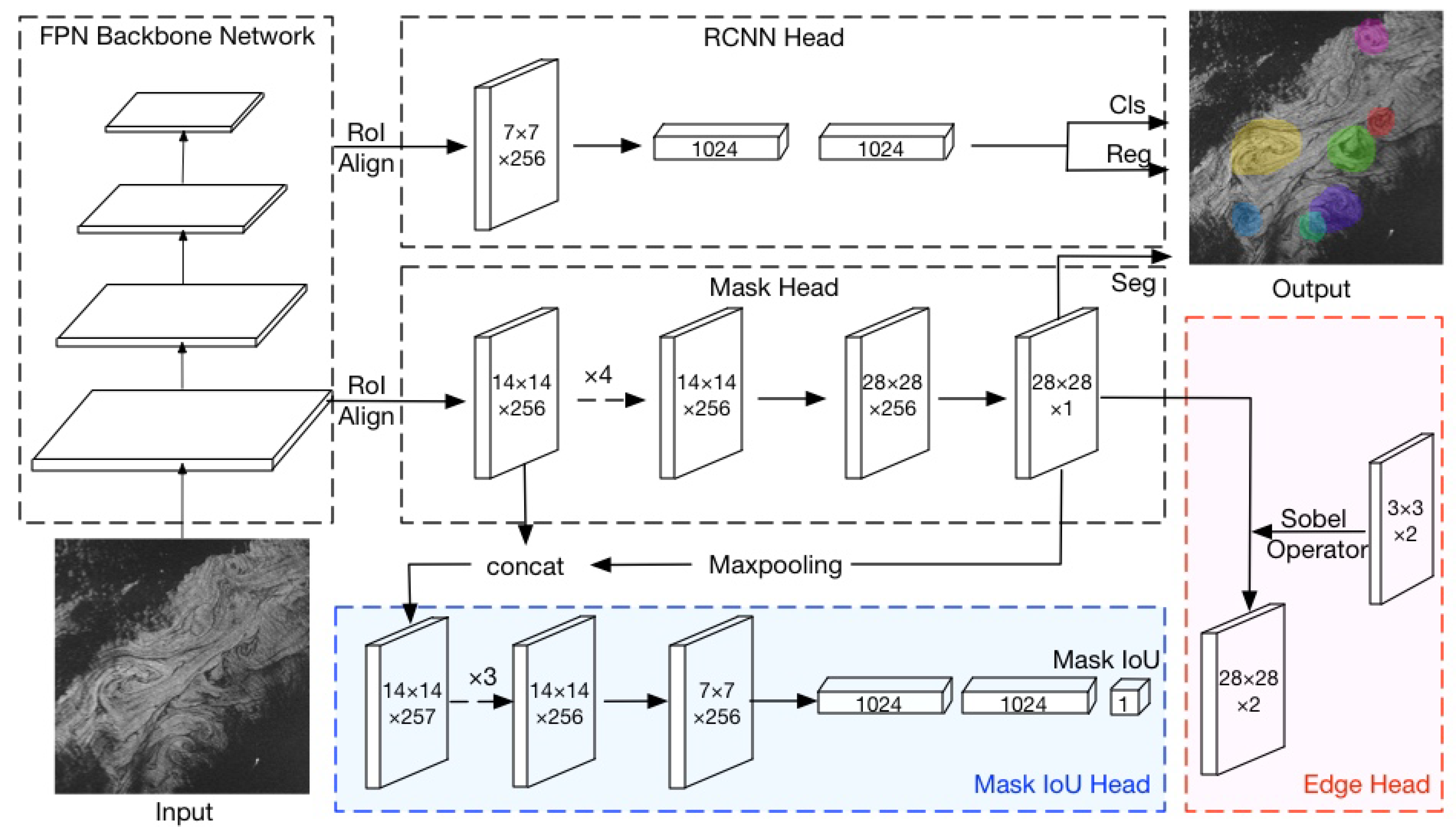

3.1.1. The Overall Architecture

3.1.2. The Edge Head

3.1.3. The Mask IoU Head

3.2. Evaluation Metrics

3.3. Experimental Setup

3.4. Quantitative Results

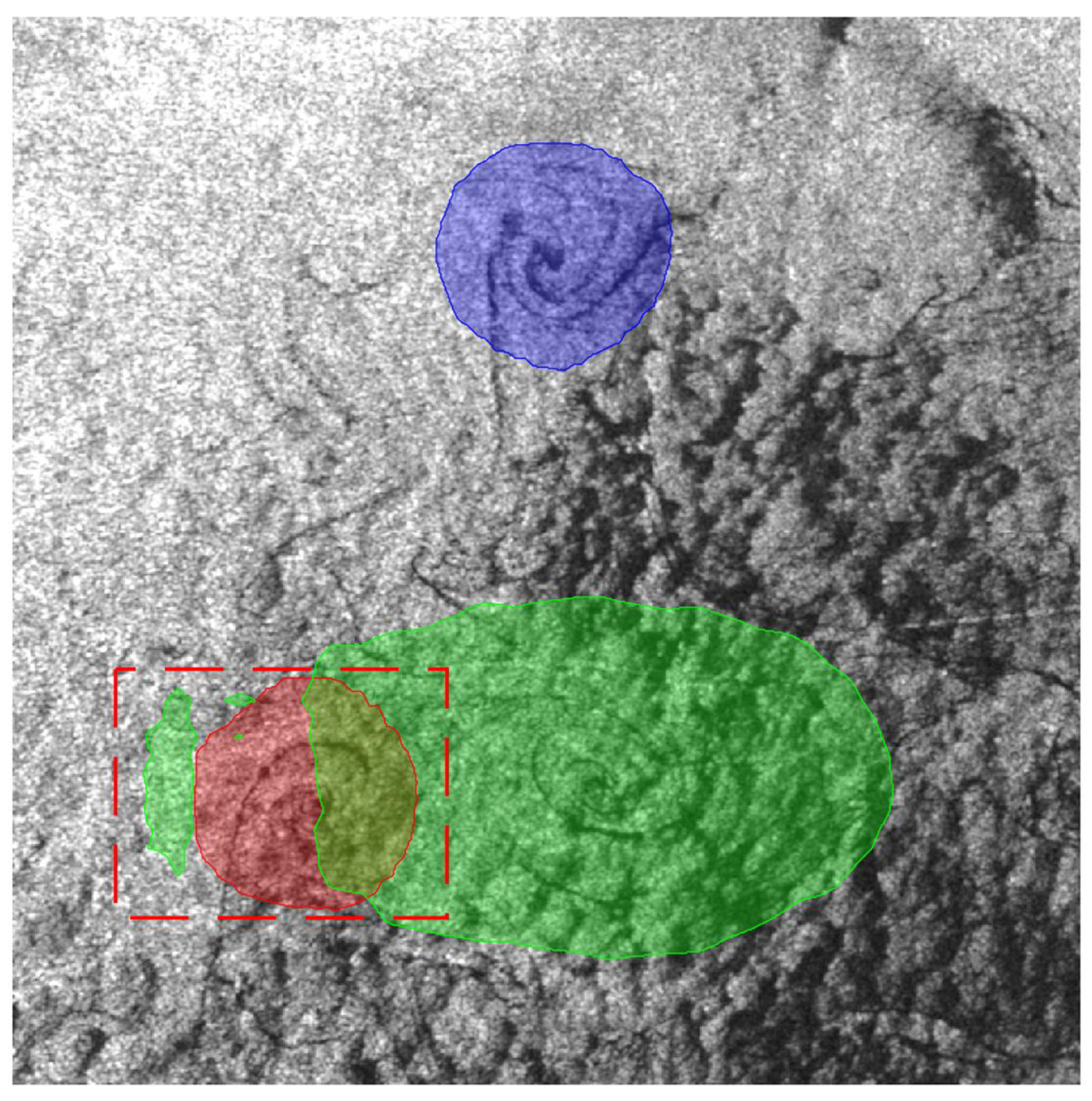

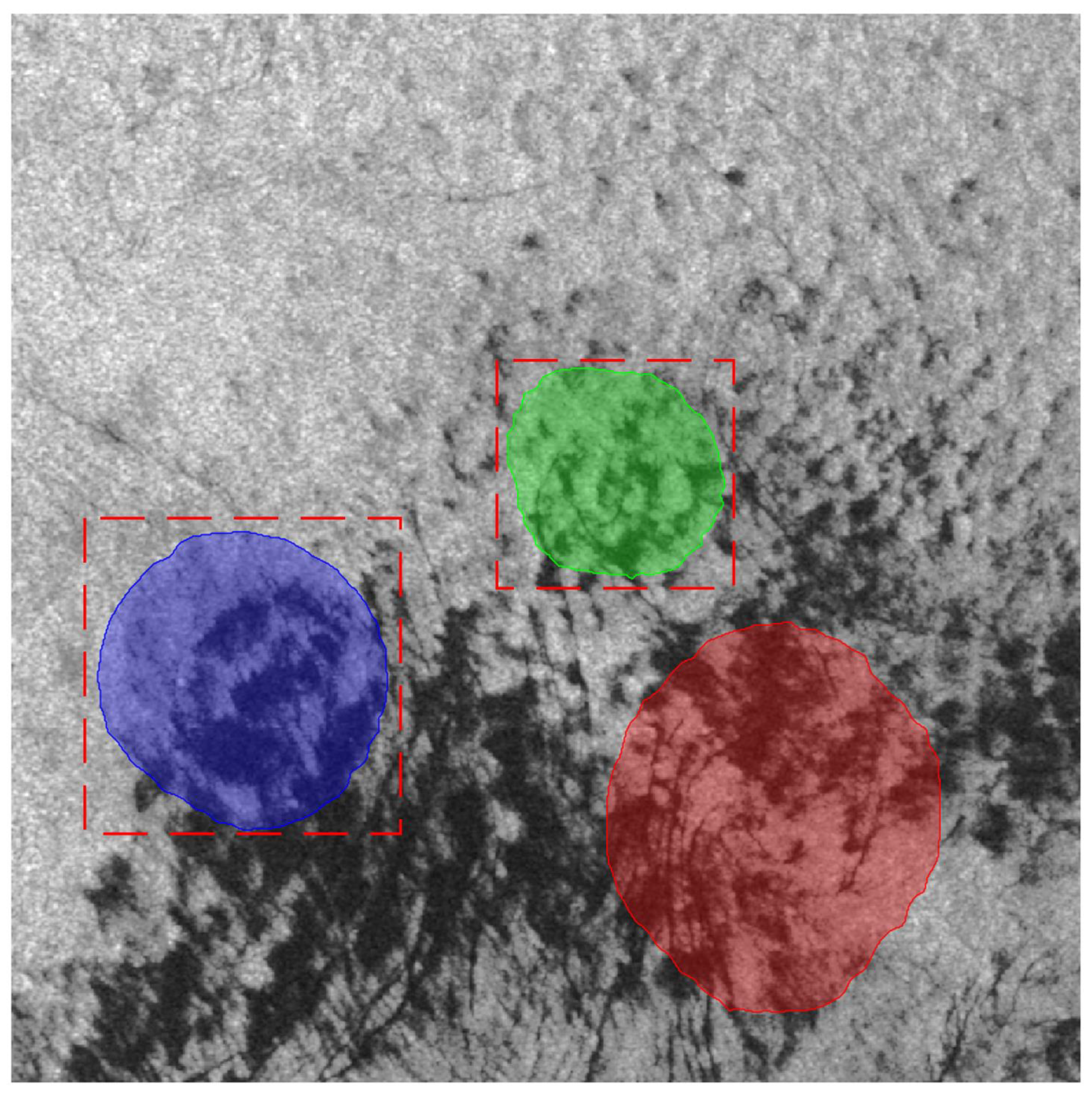

3.5. Visualization Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Methods | | | |
|---|---|---|---|
| Mask RCNN | 18.7 | 35.6 | 20.1 |
| Mask RCNN and Edge Enhancement | 21.0 | 38.5 | 22.2 |
| Mask RCNN and only Mask IoU Head | 23.3 | 45.0 | 25.1 |
| Mask-ES-RCNN | 24.8 | 48.5 | 27.1 |
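
The "Mask IoU Head" named in the table above is not described in the surviving text. As a rough illustration only, and assuming it follows the usual mask-scoring idea of regressing the intersection over union (IoU) between a predicted mask and its ground-truth mask, the quantity such a head would be trained to predict can be sketched as below. The function name `mask_iou` and the 0.5 binarization threshold are assumptions made for this example, not details taken from the paper.

```python
# Illustrative sketch: IoU between a predicted soft mask and a binary
# ground-truth mask. The name `mask_iou` and the 0.5 threshold are
# assumptions for this example, not the paper's implementation.

def mask_iou(pred, target, threshold=0.5):
    """Return the IoU of a thresholded soft mask and a binary mask.

    `pred` and `target` are equally sized 2D lists; `pred` holds
    probabilities in [0, 1], `target` holds 0/1 labels.
    """
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            p_bin = p > threshold   # binarize the prediction
            t_bin = t > 0           # ground truth is already binary
            intersection += int(p_bin and t_bin)
            union += int(p_bin or t_bin)
    # Two empty masks have no defined overlap; report 0.0 by convention.
    return intersection / union if union else 0.0


if __name__ == "__main__":
    pred = [[0.9, 0.8], [0.2, 0.6]]
    target = [[1, 0], [0, 1]]
    print(mask_iou(pred, target))
```

A score of this kind rates mask quality separately from classification confidence, which is one plausible reading of why the rows with a Mask IoU Head score higher in the table.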

| Backbone | Mask IoU Head | Laplace Head | Sobel Head | | | |
|---|---|---|---|---|---|---|
| ResNet-50-FPN | × | × | × | 18.7 | 35.6 | 20.1 |
| ResNet-50-FPN | × | ✓ | × | 22.9 | 44.9 | 24.8 |
| ResNet-50-FPN | × | × | ✓ | 23.6 | 46.7 | 25.9 |
| ResNet-50-FPN | ✓ | × | ✓ | 24.8 | 48.5 | 27.1 |
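
The ablation above distinguishes a "Laplace Head" from a "Sobel Head", but the surviving text does not say how they differ. As a hedged sketch, assuming the names refer to the standard 3×3 Laplacian and Sobel filters applied to a binary instance mask to obtain an edge map, the two operators can be compared as follows. The kernels are the textbook ones; the helper names `filter3x3`, `sobel_edges`, and `laplace_edges` are hypothetical and not taken from the paper.

```python
# Illustrative sketch: edge maps from a binary mask with the textbook
# Sobel and Laplacian kernels. All helper names are hypothetical; this
# is not the paper's edge head.

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
LAPLACE = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]


def filter3x3(image, kernel):
    """Cross-correlate a 2D list with a 3x3 kernel, zero-padding the border."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            total = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        total += kernel[dr + 1][dc + 1] * image[rr][cc]
            out[r][c] = total
    return out


def sobel_edges(mask):
    """Mark pixels where either Sobel gradient component is non-zero."""
    gx = filter3x3(mask, SOBEL_X)
    gy = filter3x3(mask, SOBEL_Y)
    return [[int(x != 0 or y != 0) for x, y in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]


def laplace_edges(mask):
    """Mark pixels where the 4-neighbour Laplacian response is non-zero."""
    lap = filter3x3(mask, LAPLACE)
    return [[int(v != 0) for v in row] for row in lap]


if __name__ == "__main__":
    # A filled 3x3 square inside a 5x5 image.
    mask = [[1 if 1 <= r <= 3 and 1 <= c <= 3 else 0 for c in range(5)]
            for r in range(5)]
    for name, edges in (("sobel", sobel_edges(mask)),
                        ("laplace", laplace_edges(mask))):
        print(name)
        for row in edges:
            print(row)
```

On this toy square both operators leave the centre pixel unmarked. The Sobel response also fires on background pixels diagonal to the corners, which the 4-neighbour Laplacian misses, so the Sobel variant gives a slightly thicker boundary.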

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, D.; Gade, M.; Wang, W.; Zhou, H. EddyDet: A Deep Framework for Oceanic Eddy Detection in Synthetic Aperture Radar Images. Remote Sens. 2023, 15, 4752. https://doi.org/10.3390/rs15194752
