Boosting SAR Aircraft Detection Performance with Multi-Stage Domain Adaptation Training

Abstract

1. Introduction

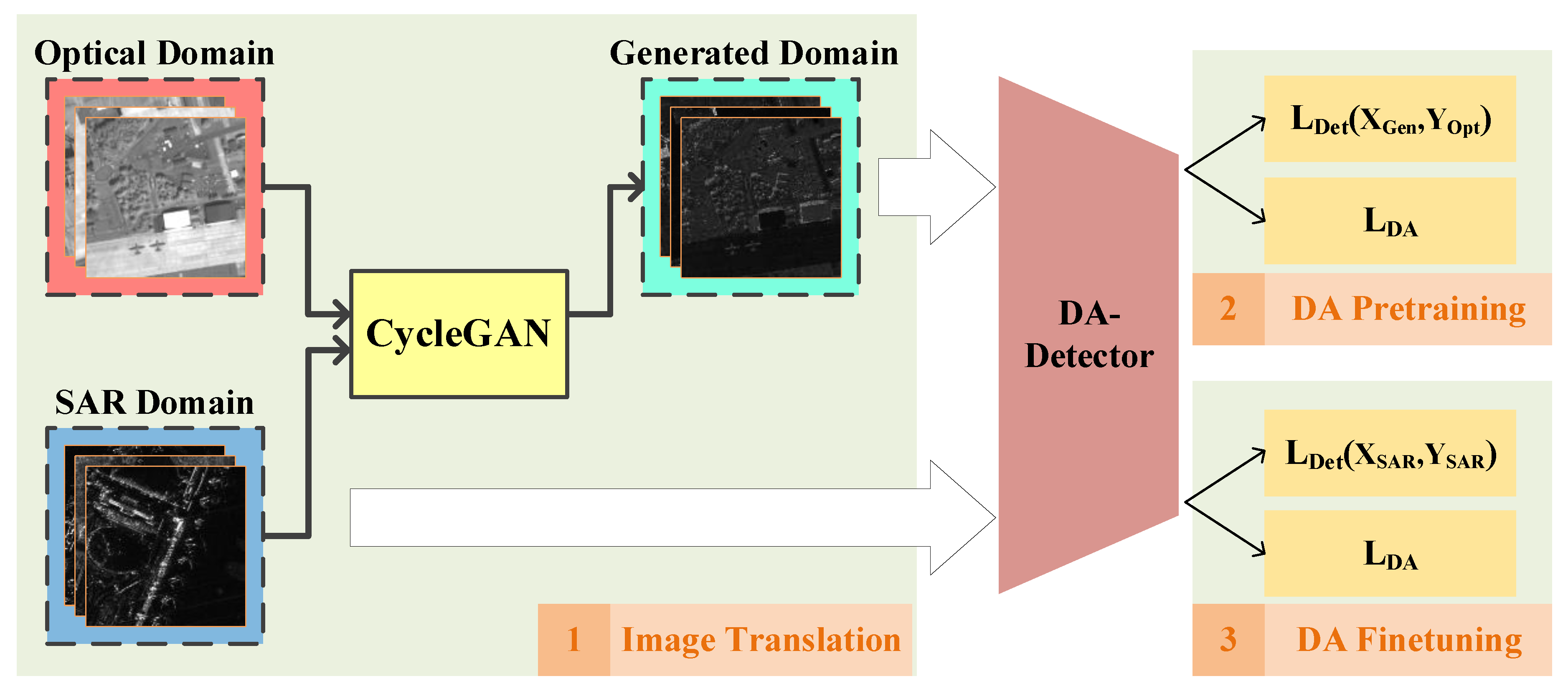

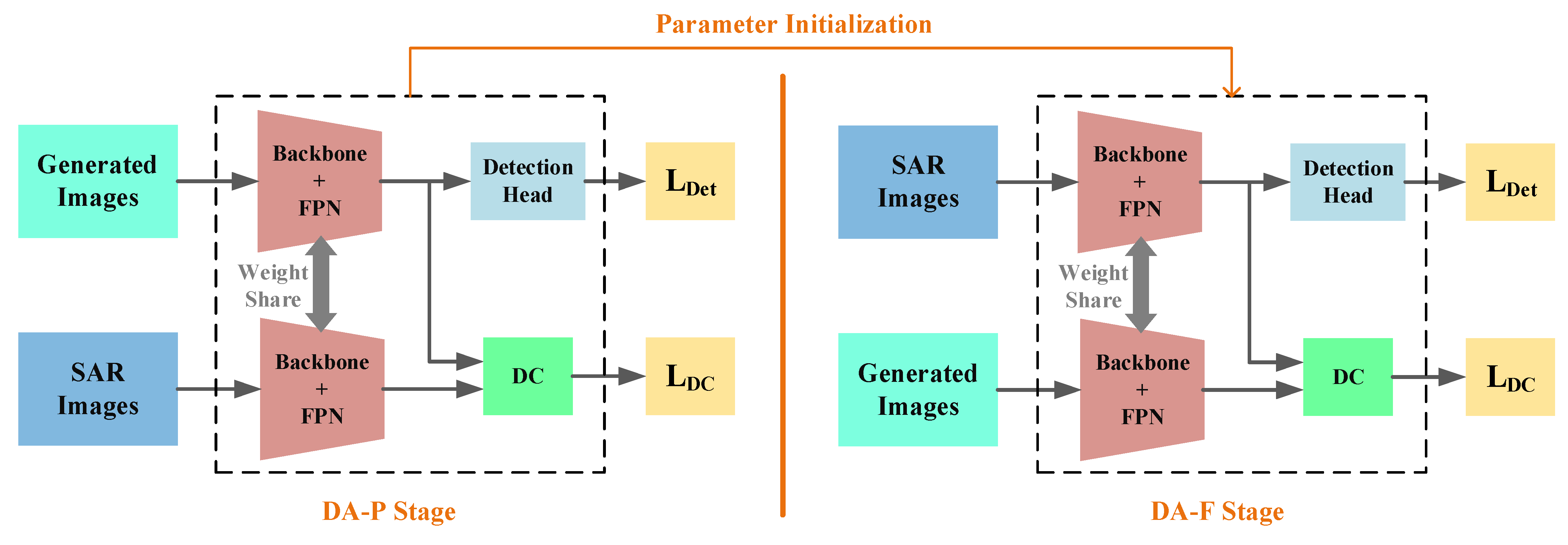

- This paper proposes a multi-stage domain adaptation training (MDAT) framework. Training proceeds in three stages, namely image translation (IT), domain adaptive pretraining (DA-P), and domain adaptive finetuning (DA-F), which gradually reduce the discrepancy between the optical and SAR domains. To the best of our knowledge, this is the first work that focuses on improving SAR aircraft detection performance by efficiently transferring knowledge from optical images.

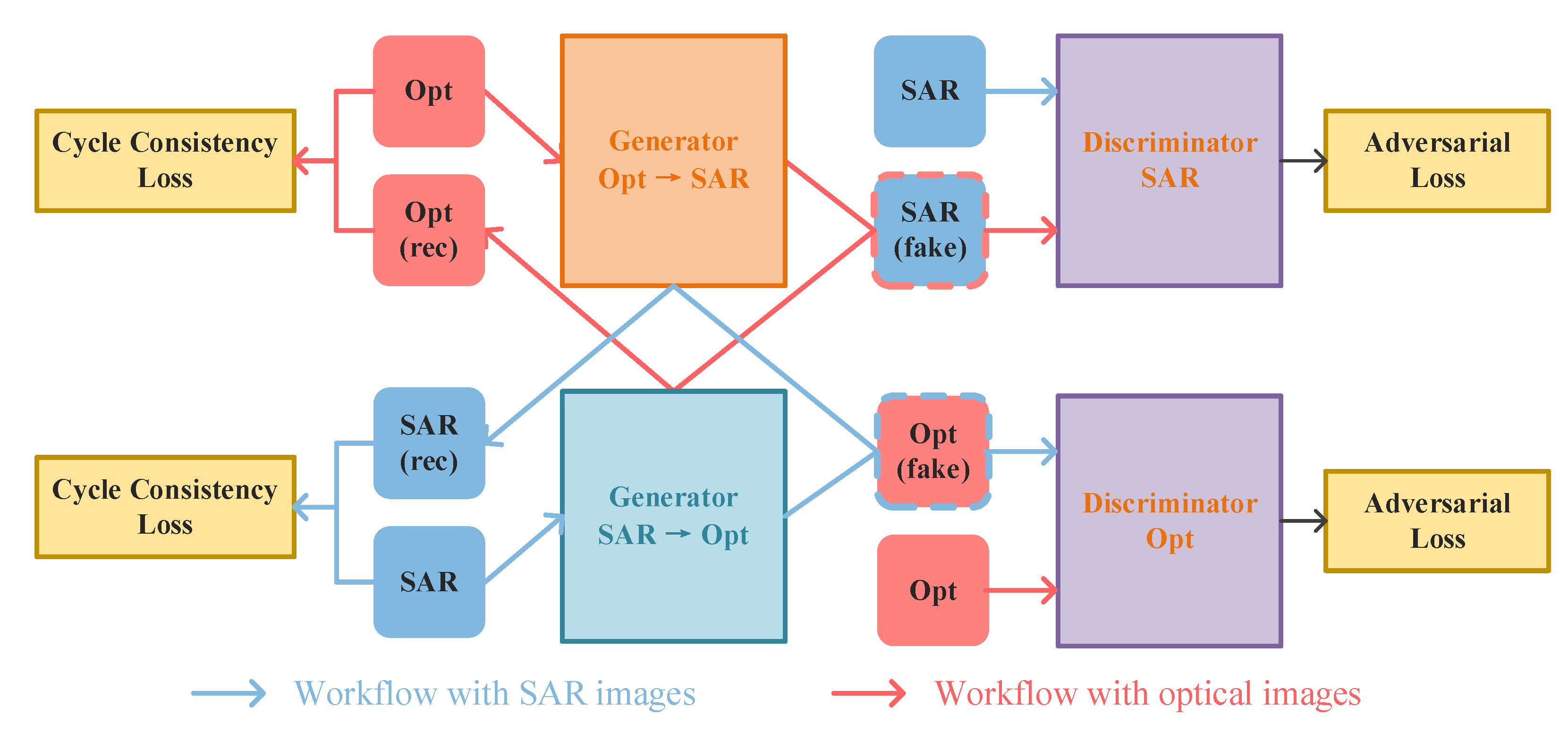

- To reduce the global-level image divergence between the optical and SAR domains, CycleGAN [38] is adopted in the first stage to perform image-level domain adaptation. Translating optical images that contain aircraft targets into corresponding SAR-style images effectively reduces the overall image divergence.

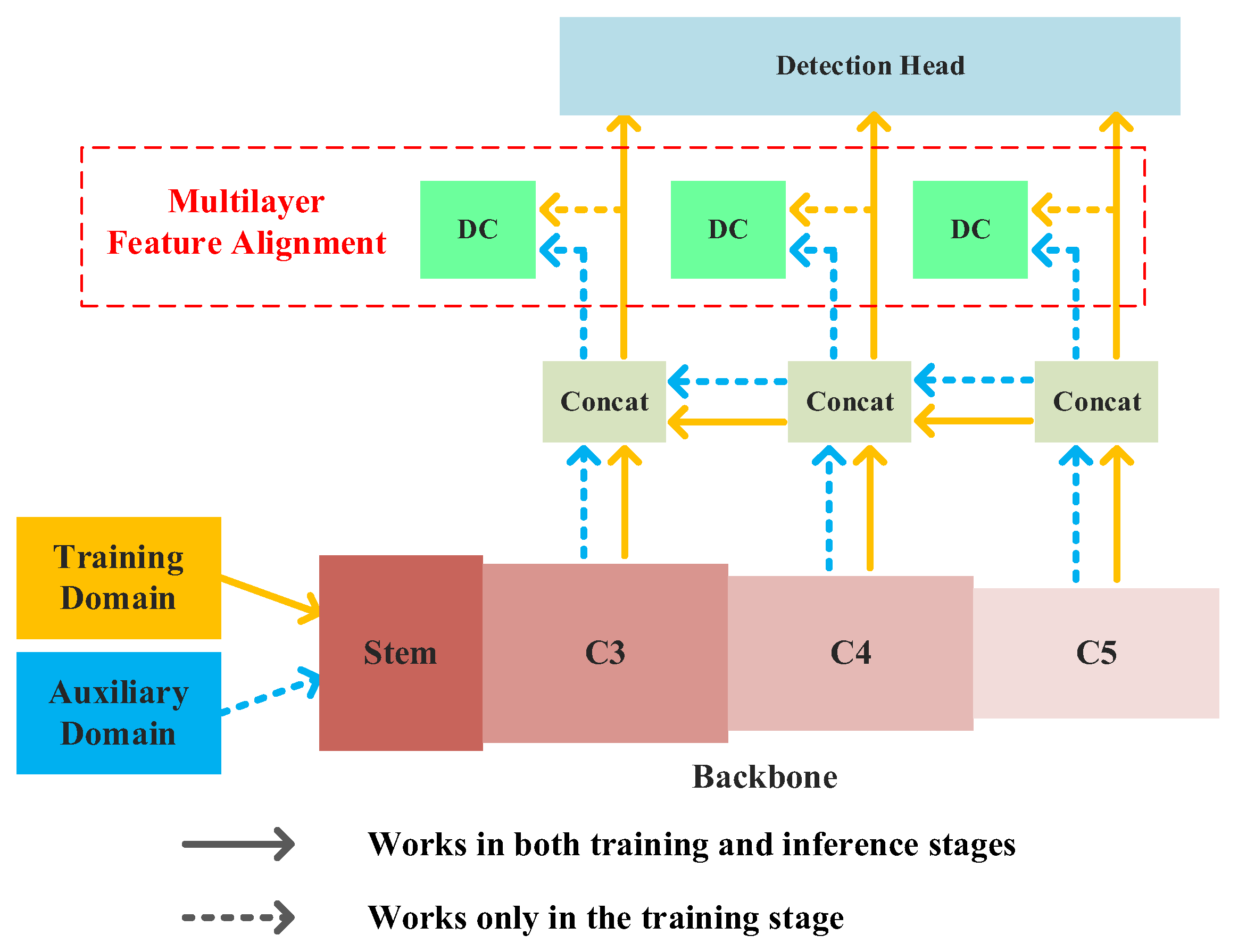

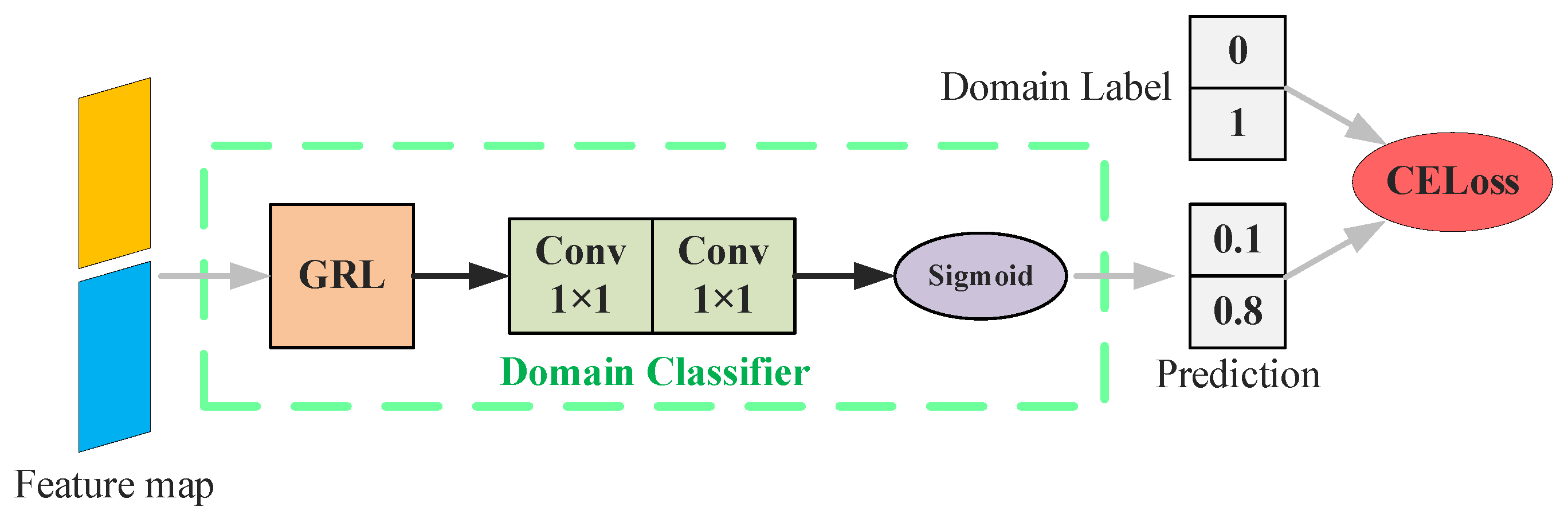

- Additionally, multilayer feature alignment is designed to further reduce local-level divergences. With domain adversarial learning applied in both the pretraining and finetuning stages, the detector extracts domain-invariant features and learns generic aircraft characteristics, which improves the transfer effect and increases SAR aircraft detection accuracy.
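
Domain adversarial learning of the kind mentioned in the last contribution is commonly realized with a gradient reversal layer (GRL), as in the domain-adversarial training work by Ganin and Lempitsky listed in the references. The following is a minimal, hand-differentiated sketch on a toy scalar model, not the paper's implementation: the one-weight feature extractor `w`, the one-weight domain classifier `v`, and the reversal strength `lam` are hypothetical names chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def domain_loss_and_grads(w, v, x, domain_label, lam):
    """One-sample domain-adversarial step on a toy scalar model (assumed setup).

    feature      f = w * x
    domain prob  p = sigmoid(v * f)
    loss         = binary cross-entropy(p, domain_label)
    """
    f = w * x
    p = sigmoid(v * f)
    eps = 1e-12  # guards log(0)
    loss = -(domain_label * math.log(p + eps)
             + (1 - domain_label) * math.log(1 - p + eps))

    dloss_dlogit = p - domain_label      # derivative of BCE w.r.t. the logit
    grad_v = dloss_dlogit * f            # classifier weight: ordinary gradient
    grad_w_plain = dloss_dlogit * v * x  # gradient that would reach w without a GRL
    # GRL: identity in the forward pass, gradient scaled by -lam in the backward pass.
    grad_w_reversed = -lam * grad_w_plain
    return loss, grad_v, grad_w_plain, grad_w_reversed


loss, grad_v, grad_w, grad_w_rev = domain_loss_and_grads(0.8, 1.5, 2.0, 1, 1.0)
# A gradient-descent step on w with the reversed gradient raises the domain loss,
# i.e. the feature becomes harder to assign to a domain.
loss_after_w_step = domain_loss_and_grads(0.8 - 0.1 * grad_w_rev, 1.5, 2.0, 1, 1.0)[0]
# A gradient-descent step on v with the ordinary gradient lowers it.
loss_after_v_step = domain_loss_and_grads(0.8, 1.5 - 0.1 * grad_v, 2.0, 1, 1.0)[0]
print(loss, loss_after_w_step, loss_after_v_step)
```

The two updates pull in opposite directions: the domain classifier learns to separate optical-style from SAR features, while the reversed gradient pushes the feature extractor toward features the classifier cannot separate.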

2. Materials and Methods

2.1. Structure of Detection Networks

2.1.1. Faster RCNN

2.1.2. YOLOv3

2.2. Overall Framework of MDAT

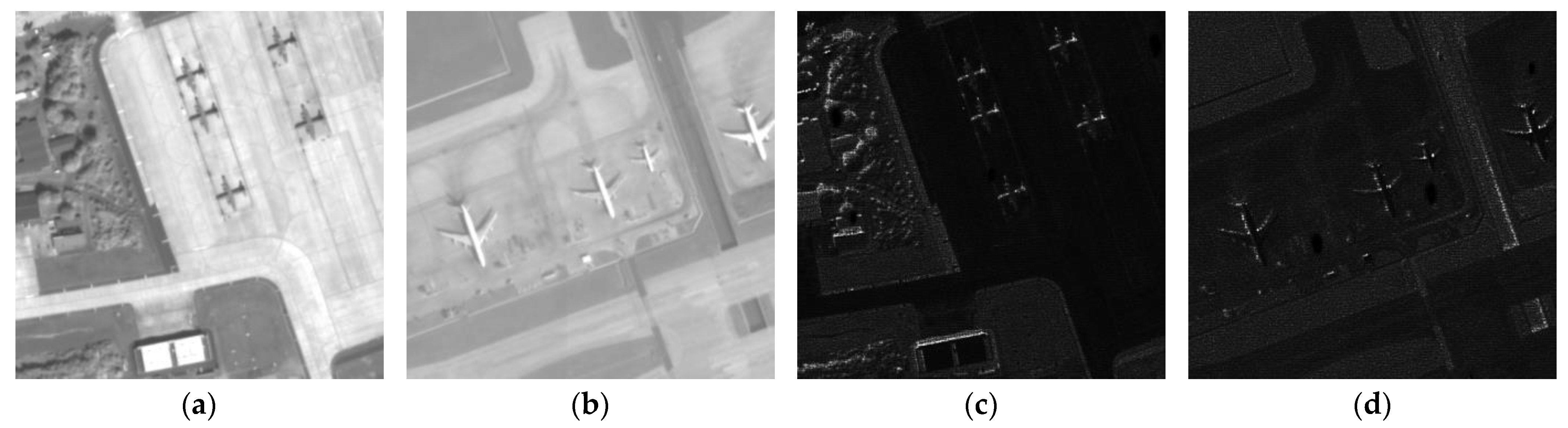

2.3. Global-Level Domain Adaptation with Image Translation
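
As a hedged illustration of the image-translation stage, the cycle-consistency term that CycleGAN [38] adds to its adversarial losses can be sketched as follows. This is a toy version under stated assumptions: images are flat lists of pixel values, and the "generators" `g_opt2sar` and `f_sar2opt` are simple hypothetical functions standing in for trained networks. The adversarial losses of the full CycleGAN objective are omitted.

```python
def l1_mean(a, b):
    """Mean absolute difference between two equally long pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(g_opt2sar, f_sar2opt, optical, sar):
    """L1 cycle loss: F(G(x)) should recover x, and G(F(y)) should recover y."""
    forward = l1_mean([f_sar2opt(g_opt2sar(x)) for x in optical], optical)
    backward = l1_mean([g_opt2sar(f_sar2opt(y)) for y in sar], sar)
    return forward + backward


# Toy stand-ins for the two generators (hypothetical, for illustration only).
def g(x):            # optical -> SAR-style
    return 0.5 * x + 0.1


def f_exact(y):      # exact inverse of g
    return (y - 0.1) / 0.5


def f_identity(y):   # a mapping that does not invert g
    return y


optical_pixels = [0.2, 0.6, 1.0]
sar_pixels = [0.1, 0.3, 0.5]

consistent = cycle_consistency_loss(g, f_exact, optical_pixels, sar_pixels)
inconsistent = cycle_consistency_loss(g, f_identity, optical_pixels, sar_pixels)
print(consistent, inconsistent)
```

When the two mappings invert each other the loss is (numerically) zero; a pair that does not round-trip is penalized, which is what allows translation to be learned from unpaired optical and SAR images.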

2.4. Local-Level Domain Adaptation with Multilayer Feature Alignment

3. Experiment and Parameters Evaluation

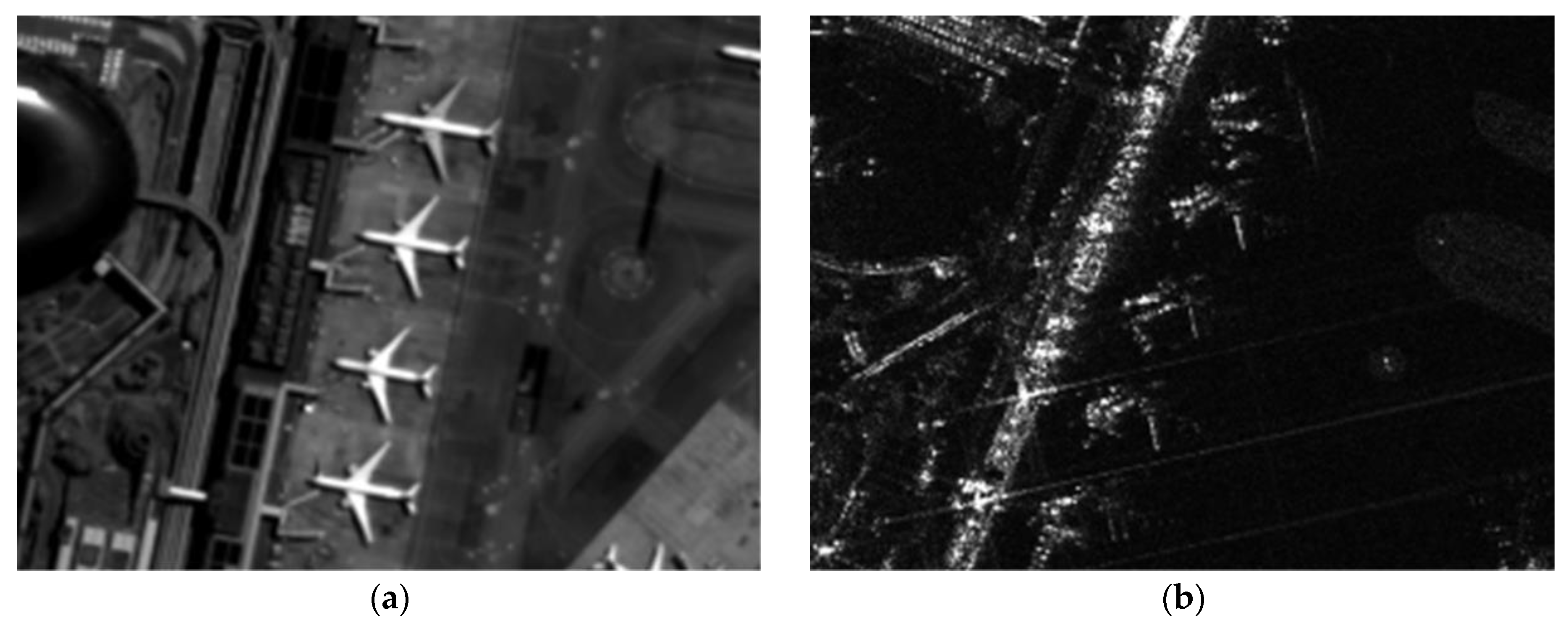

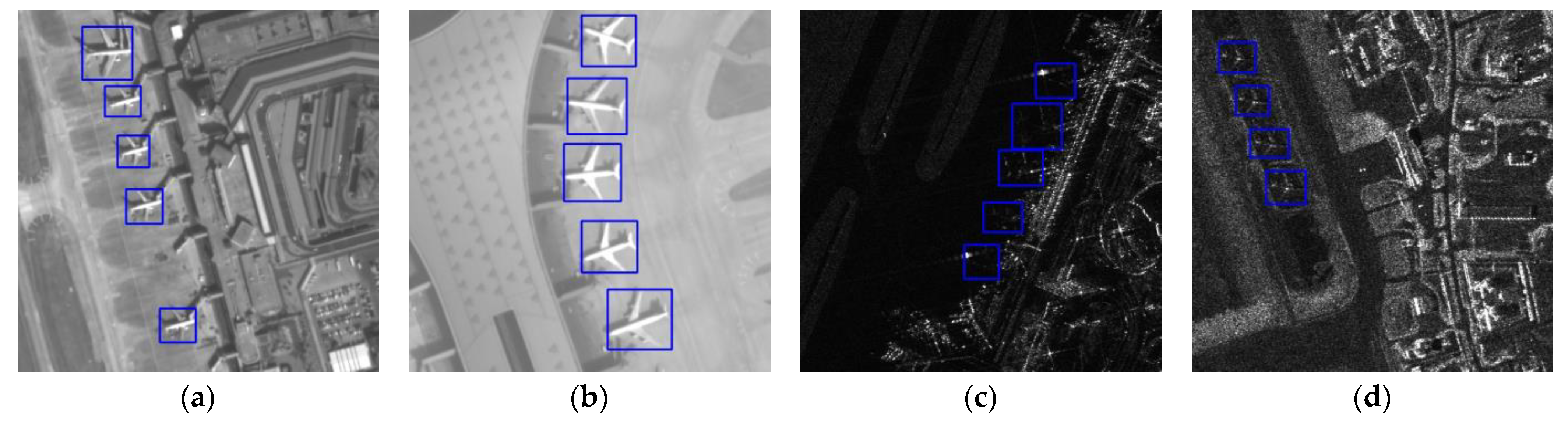

3.1. Datasets

3.2. Evaluation Metrics
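
The results tables report P, R, F1, AP, and AP50. Assuming AP50 follows the usual detection convention, in which a predicted box counts as a true positive when its intersection over union (IoU) with a ground-truth box is at least 0.5, the matching criterion can be sketched as below. The box format `(x1, y1, x2, y2)` and the helper names are assumptions made for this example.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A prediction matches a ground-truth box when IoU >= threshold."""
    return iou(pred_box, gt_box) >= threshold


gt = (0, 0, 10, 10)
print(is_true_positive((2, 0, 12, 10), gt))  # IoU = 80 / 120, above 0.5
print(is_true_positive((5, 0, 15, 10), gt))  # IoU = 50 / 150, below 0.5
```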

3.3. Implementation Setting

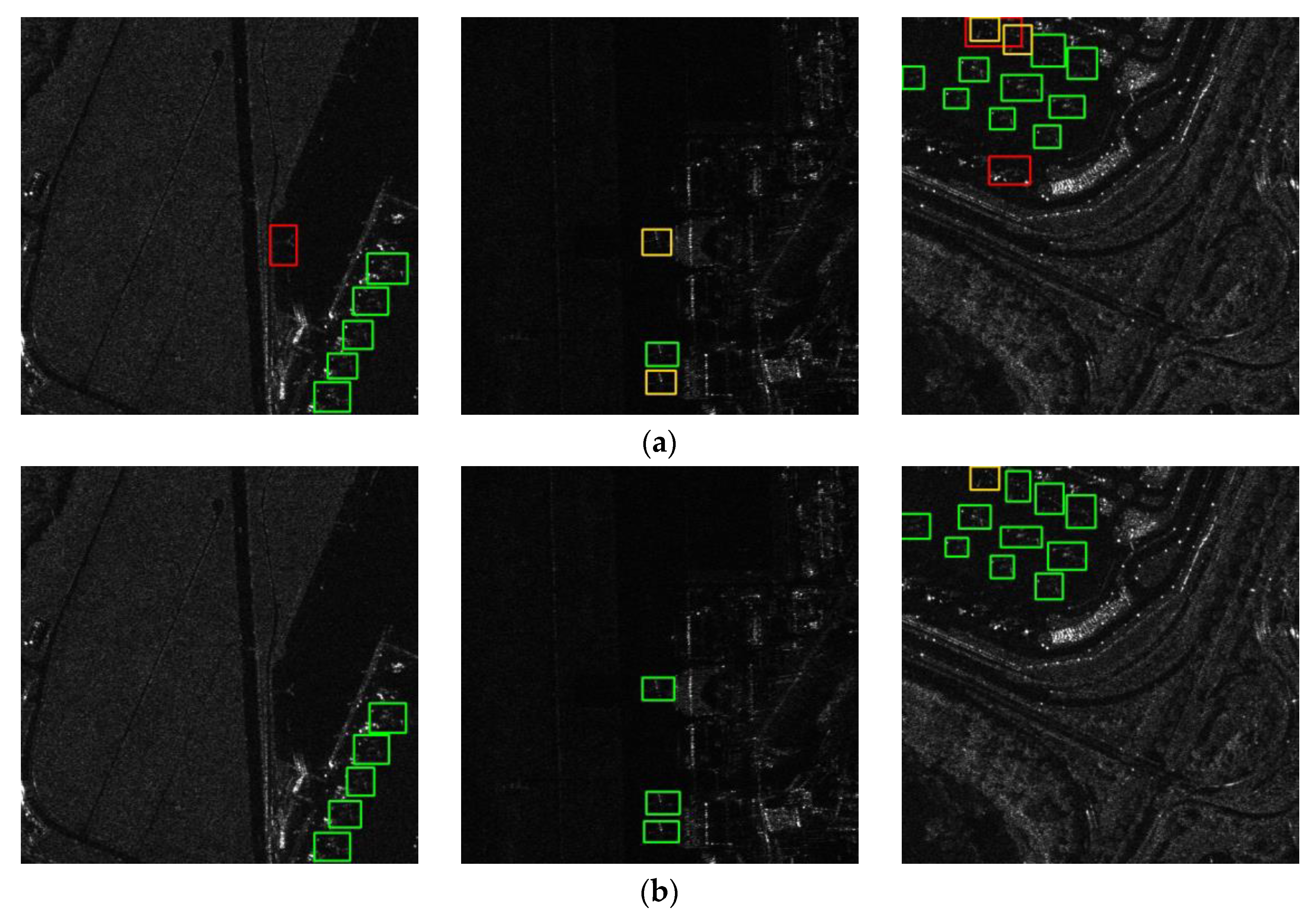

4. Results

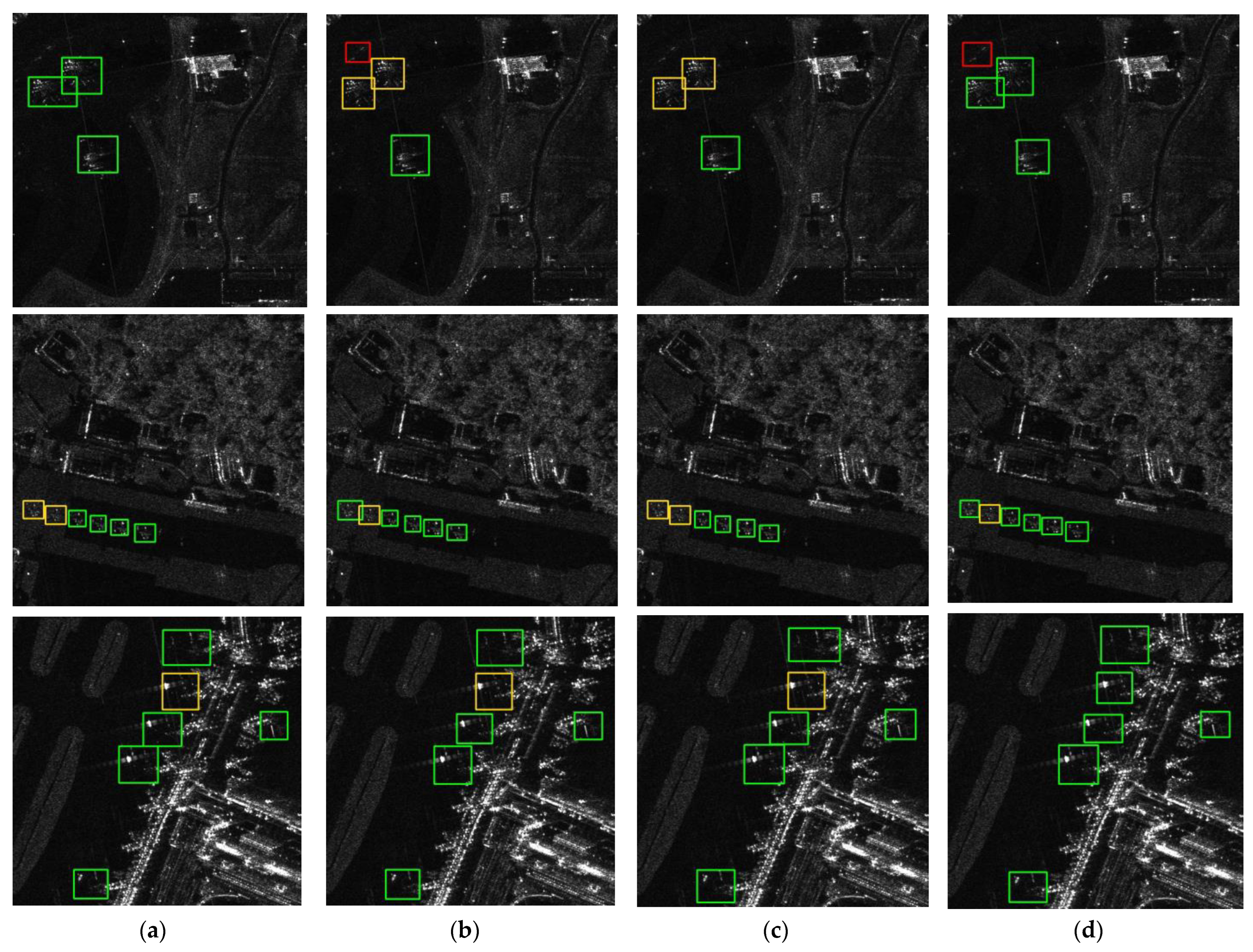

4.1. Comparison with Other Methods

4.2. Ablation Study

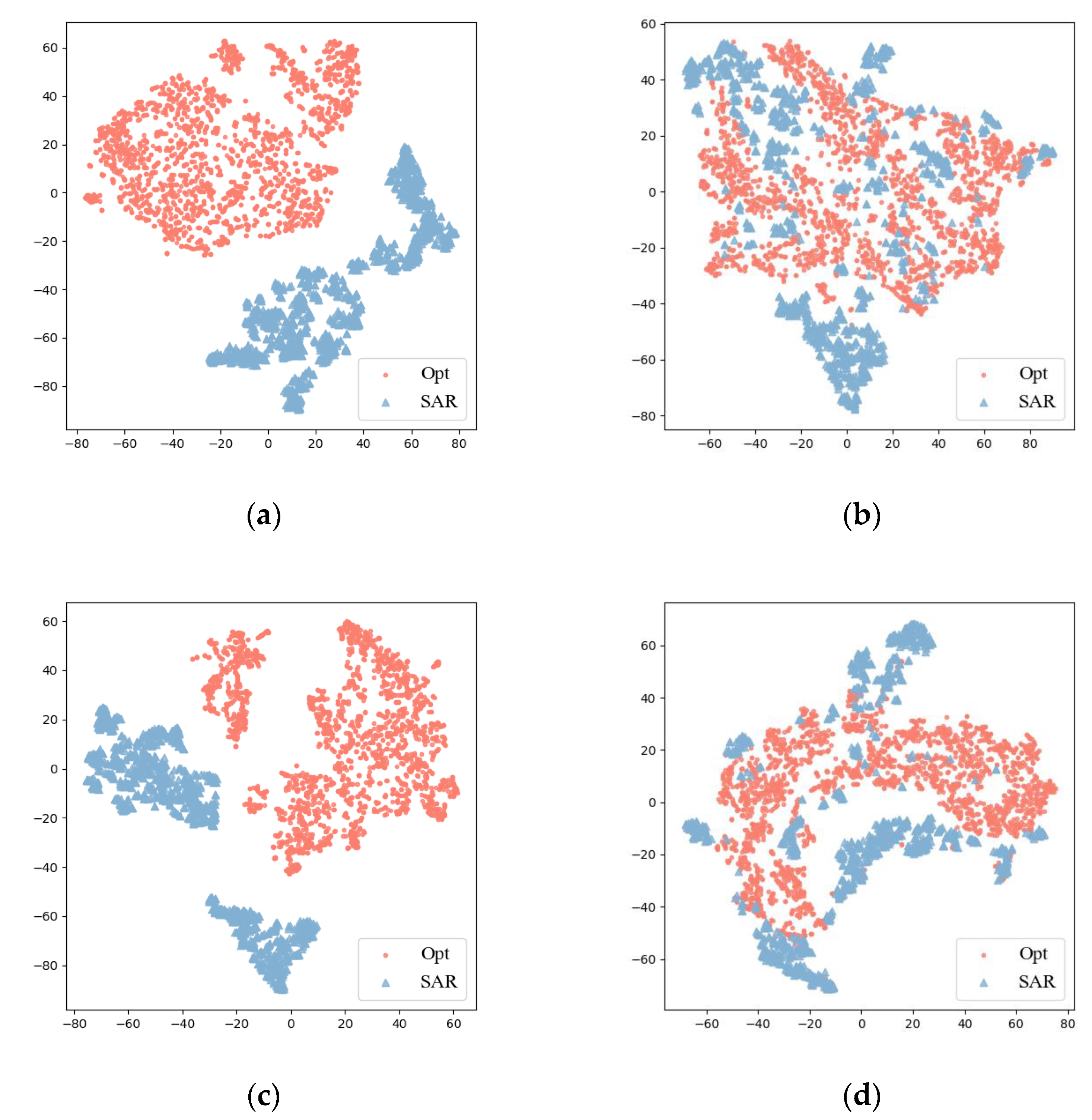

4.3. Effect of Domain Adaptation

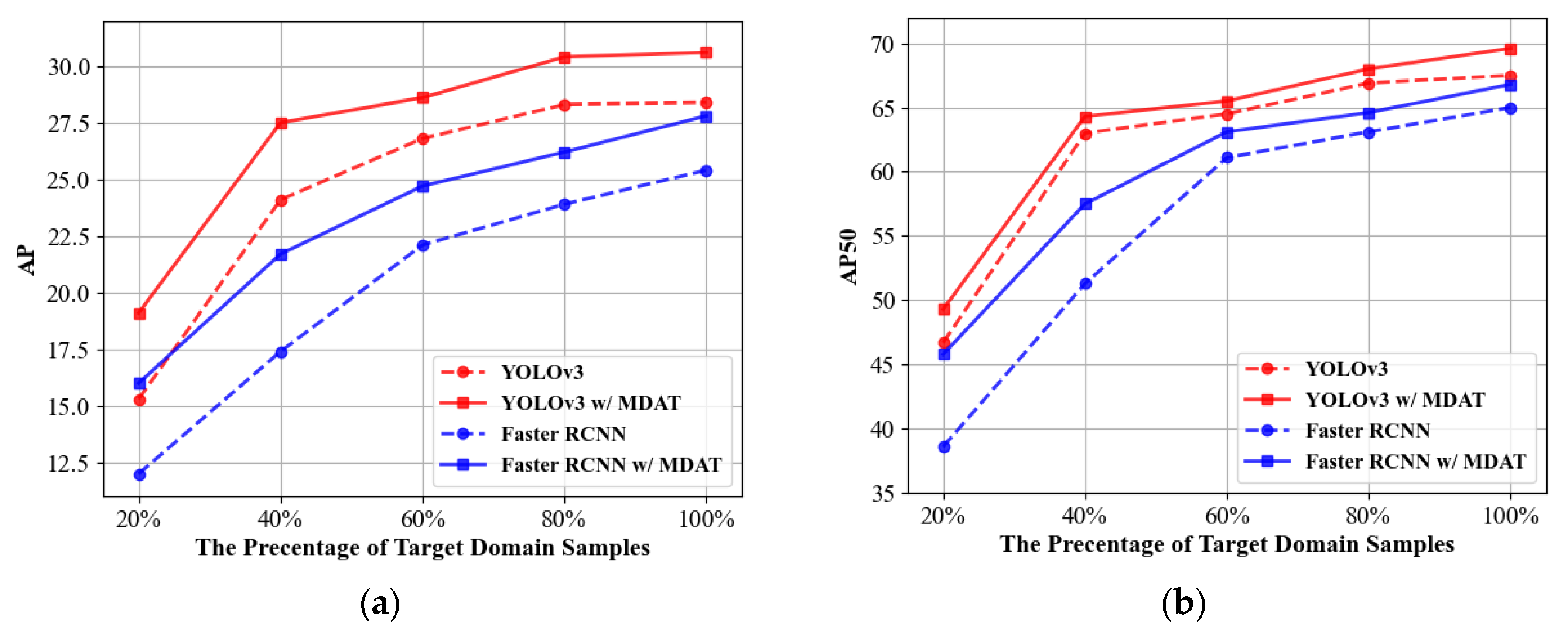

4.4. Analysis of Training Sample Scale

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Novak, L.M.; Halversen, S.D.; Owirka, G.J.; Hiett, M. Effects of Polarization and Resolution on SAR ATR. IEEE Trans. Aerosp. Electron. Syst. 1997, 33, 102–116.

- Nunziata, F.; Migliaccio, M.; Brown, C.E. Reflection Symmetry for Polarimetric Observation of Man-Made Metallic Targets at Sea. IEEE J. Ocean. Eng. 2012, 37, 384–394.

- Dellinger, F.; Delon, J.; Gousseau, Y.; Michel, J.; Tupin, F. SAR-SIFT: A SIFT-like Algorithm for SAR Images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 453–466.

- Zhai, L.; Li, Y.; Su, Y. Inshore Ship Detection via Saliency and Context Information in High-Resolution SAR Images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1870–1874.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Bi, H.; Deng, J.; Yang, T.; Wang, J.; Wang, L. CNN-Based Target Detection and Classification When Sparse SAR Image Dataset Is Available. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6815–6826.

- Zou, B.; Qin, J.; Zhang, L. Vehicle Detection Based on Semantic-Context Enhancement for High-Resolution SAR Images in Complex Background. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4503905.

- Feng, Y.; Chen, J.; Huang, Z.; Wan, H.; Xia, R.; Wu, B. A Lightweight Position-Enhanced Anchor-Free Algorithm for SAR Ship Detection. Remote Sens. 2022, 14, 1908.

- Yu, W.; Wang, Z.; Li, J.; Luo, Y.; Yu, Z. A Lightweight Network Based on One-Level Feature for Ship Detection in SAR Images. Remote Sens. 2022, 14, 3321.

- Sun, Z.; Dai, M.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. An Anchor-Free Detection Method for Ship Targets in High-Resolution SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7799–7816.

- Chen, L.; Weng, T.; Xing, J.; Pan, Z.; Yuan, Z.; Xing, X.; Zhang, P. A New Deep Learning Network for Automatic Bridge Detection from SAR Images Based on Balanced and Attention Mechanism. Remote Sens. 2020, 12, 441.

- He, C.; Tu, M.; Xiong, D.; Tu, F.; Liao, M. A Component-Based Multi-Layer Parallel Network for Airplane Detection in SAR Imagery. Remote Sens. 2018, 10, 1016.

- Diao, W.; Dou, F.; Fu, K.; Sun, X. Aircraft Detection in SAR Images Using Saliency Based Location Regression Network. In Proceedings of the IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2334–2337.

- Wang, J.; Xiao, H.; Chen, L.; Xing, J.; Pan, Z.; Luo, R.; Cai, X. Integrating Weighted Feature Fusion and the Spatial Attention Module with Convolutional Neural Networks for Automatic Aircraft Detection from SAR Images. Remote Sens. 2021, 13, 910.

- Guo, Q.; Wang, H.; Xu, F. Scattering Enhanced Attention Pyramid Network for Aircraft Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7570–7587.

- Zhao, Y.; Zhao, L.; Li, C.; Kuang, G. Pyramid Attention Dilated Network for Aircraft Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2021, 18, 662–666.

- Kang, Y.; Wang, Z.; Fu, J.; Sun, X.; Fu, K. SFR-Net: Scattering Feature Relation Network for Aircraft Detection in Complex SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5218317.

- Chen, L.; Luo, R.; Xing, J.; Li, Z.; Yuan, Z.; Cai, X. Geospatial Transformer Is What You Need for Aircraft Detection in SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5225715.

- Li, J.; Qu, C.; Shao, J. Ship Detection in SAR Images Based on an Improved Faster R-CNN. In Proceedings of the SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017; pp. 1–6.

- Bao, W.; Huang, M.; Zhang, Y.; Xu, Y.; Liu, X.; Xiang, X. Boosting Ship Detection in SAR Images with Complementary Pretraining Techniques. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8941–8954.

- Ganin, Y.; Lempitsky, V. Unsupervised Domain Adaptation by Backpropagation. In Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6–11 July 2015; Volume 2, pp. 1180–1189.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-Adversarial Training of Neural Networks. J. Mach. Learn. Res. 2016, 17, 2096–2030.

- Chen, Y.; Li, W.; Sakaridis, C.; Dai, D.; Van Gool, L. Domain Adaptive Faster R-CNN for Object Detection in the Wild. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3339–3348.

- Saito, K.; Ushiku, Y.; Harada, T.; Saenko, K. Strong-Weak Distribution Alignment for Adaptive Object Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6949–6958.

- Rostami, M.; Kolouri, S.; Eaton, E.; Kim, K. Deep Transfer Learning for Few-Shot SAR Image Classification. Remote Sens. 2019, 11, 1374.

- Chen, Z.; Zhao, L.; He, Q.; Kuang, G. Pixel-Level and Feature-Level Domain Adaptation for Heterogeneous SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4515205.

- Song, Y.; Li, J.; Gao, P.; Li, L.; Tian, T.; Tian, J. Two-Stage Cross-Modality Transfer Learning Method for Military-Civilian SAR Ship Recognition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4506405.

- Shi, Y.; Du, L.; Guo, Y.; Du, Y. Unsupervised Domain Adaptation Based on Progressive Transfer for Ship Detection: From Optical to SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5230317.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2242–2251.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open MMLab Detection Toolbox and Benchmark. arXiv 2019, arXiv:1906.07155.

- Yu, W.; Wang, Z.; Li, J.; Wang, Y.; Yu, Z. Unsupervised Aircraft Detection in SAR Images with Image-Level Domain Adaption from Optical Images. In Proceedings of the International Conference on Computer Vision and Pattern Analysis (ICCPA), Hangzhou, China, 31 March–2 April 2023.

- Li, C.; Du, D.; Zhang, L.; Wen, L.; Luo, T.; Wu, Y.; Zhu, P. Spatial Attention Pyramid Network for Unsupervised Domain Adaptation. In Proceedings of the Computer Vision – ECCV, Glasgow, UK, 23–28 August 2020; Volume 12358 LNCS, pp. 481–497.

- Zheng, Y.; Huang, D.; Liu, S.; Wang, Y. Cross-Domain Object Detection through Coarse-to-Fine Feature Adaptation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13763–13772.

- Fan, Q.; Zhuo, W.; Tai, Y.-W. Few-Shot Object Detection with Attention-RPN and Multi-Relation Detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4013–4022.

- Vibashan, V.S.; Gupta, V.; Oza, P.; Sindagi, V.A.; Patel, V.M. MEGA-CDA: Memory Guided Attention for Category-Aware Unsupervised Domain Adaptive Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 4516–4526.

- Xu, C.; Zheng, X.; Lu, X. Multi-Level Alignment Network for Cross-Domain Ship Detection. Remote Sens. 2022, 14, 2389.

| Domain | Imaging Platform | Imaging Mode | Resolution | Chip Num. (All) (Train/Test) | Chip Num. (Filtered) (Train/Test) | Aircraft Num. (Train/Test) |
|---|---|---|---|---|---|---|
| Source | Gaofen-2 | Panchromatic | 0.8 m | 2180/- | 648/- | 2083/- |
| Target | Gaofen-3 | Spotlight | 1.0 m | 1120/912 | 300/189 | 1282/697 |

| Detector | Method | P | R | F1 | AP | AP50 |
|---|---|---|---|---|---|---|
| Faster RCNN | DANN | 0.7718 | 0.6987 | 0.7334 | 26.3 | 63.0 |
| Faster RCNN | DAF | 0.7438 | 0.7374 | 0.7406 | 26.6 | 65.0 |
| Faster RCNN | SWDA | 0.7646 | 0.7131 | 0.7380 | 27.7 | 65.5 |
| Faster RCNN | MDAT | 0.7555 | 0.7403 | 0.7478 | 27.8 | 66.8 |
| YOLOv3 | DANN | 0.7682 | 0.7274 | 0.7472 | 29.8 | 66.5 |
| YOLOv3 | MDAT | 0.7836 | 0.7690 | 0.7762 | 31.0 | 69.6 |
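
The F1 column in the table above can be cross-checked from the P and R columns, since F1 is the harmonic mean of precision and recall. The short check below uses only the values printed in the table; the tuple layout and the function name `f1_score` are choices made for this example.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (detector, method, P, R, reported F1), copied from the comparison table.
rows = [
    ("Faster RCNN", "DANN", 0.7718, 0.6987, 0.7334),
    ("Faster RCNN", "DAF", 0.7438, 0.7374, 0.7406),
    ("Faster RCNN", "SWDA", 0.7646, 0.7131, 0.7380),
    ("Faster RCNN", "MDAT", 0.7555, 0.7403, 0.7478),
    ("YOLOv3", "DANN", 0.7682, 0.7274, 0.7472),
    ("YOLOv3", "MDAT", 0.7836, 0.7690, 0.7762),
]

for detector, method, p, r, reported in rows:
    computed = f1_score(p, r)
    # Reported values are rounded to four decimals, so allow a small tolerance.
    agrees = abs(computed - reported) < 1e-4
    print(f"{detector:12s} {method:5s} F1={computed:.4f} reported={reported:.4f} ok={agrees}")
```

All six rows agree with the reported F1 to within rounding.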

| P-F | IT | DA-P | DA-F | Faster RCNN AP | Faster RCNN AP50 | YOLOv3 AP | YOLOv3 AP50 |
|---|---|---|---|---|---|---|---|
| | | | | 25.4 | 65.0 | 28.4 | 67.5 |
| √ | | | | 25.9 | 61.5 | 28.8 | 65.5 |
| √ | √ | | | 26.7 | 64.4 | 30.0 | 67.2 |
| √ | √ | √ | | 27.2 | 65.8 | 30.6 | 68.0 |
| √ | √ | √ | √ | 27.8 | 66.8 | 31.0 | 69.6 |

| Training Percentage | Baseline AP | Baseline AP50 | Proposed Method AP | Proposed Method AP50 |
|---|---|---|---|---|
| 20% | 12.0 | 38.6 | 16.0 | 45.8 |
| 40% | 17.4 | 51.3 | 21.7 | 57.5 |
| 60% | 22.1 | 61.1 | 24.7 | 63.1 |
| 80% | 23.9 | 63.1 | 26.2 | 64.6 |
| 100% | 25.4 | 65.0 | 27.8 | 66.8 |

| Training Percentage | Baseline AP | Baseline AP50 | Proposed Method AP | Proposed Method AP50 |
|---|---|---|---|---|
| 20% | 15.3 | 46.7 | 19.1 | 49.3 |
| 40% | 24.1 | 63.0 | 27.5 | 64.3 |
| 60% | 26.8 | 64.5 | 28.6 | 65.5 |
| 80% | 28.3 | 66.9 | 30.4 | 68.0 |
| 100% | 28.4 | 67.5 | 30.6 | 69.6 |
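
To make the trend in the two training-percentage tables easier to read, the AP gain of the proposed method over the baseline can be computed per training percentage. The sketch below copies the AP columns from the tables above; the names `first_table`, `second_table`, and `ap_gains` are labels invented for this example, since the tables carry no captions here.

```python
# training percentage -> (baseline AP, proposed-method AP), from the two tables above
first_table = {20: (12.0, 16.0), 40: (17.4, 21.7), 60: (22.1, 24.7),
               80: (23.9, 26.2), 100: (25.4, 27.8)}
second_table = {20: (15.3, 19.1), 40: (24.1, 27.5), 60: (26.8, 28.6),
                80: (28.3, 30.4), 100: (28.4, 30.6)}


def ap_gains(table):
    """Absolute AP gain (proposed minus baseline), rounded to one decimal."""
    return {pct: round(proposed - baseline, 1)
            for pct, (baseline, proposed) in table.items()}


print(ap_gains(first_table))   # {20: 4.0, 40: 4.3, 60: 2.6, 80: 2.3, 100: 2.4}
print(ap_gains(second_table))  # {20: 3.8, 40: 3.4, 60: 1.8, 80: 2.1, 100: 2.2}
```

In both tables the AP gain is largest at the 20% and 40% settings, that is, when the fewest labeled SAR training samples are available.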

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, W.; Li, J.; Wang, Z.; Yu, Z. Boosting SAR Aircraft Detection Performance with Multi-Stage Domain Adaptation Training. Remote Sens. 2023, 15, 4614. https://doi.org/10.3390/rs15184614
