Statistical Downscaling of SEVIRI Land Surface Temperature to WRF Near-Surface Air Temperature Using a Deep Learning Model

Abstract

1. Introduction

1.1. Motivation and Context

1.2. State of the Art

1.3. Application

1.4. Proposed Approach

2. Methodology

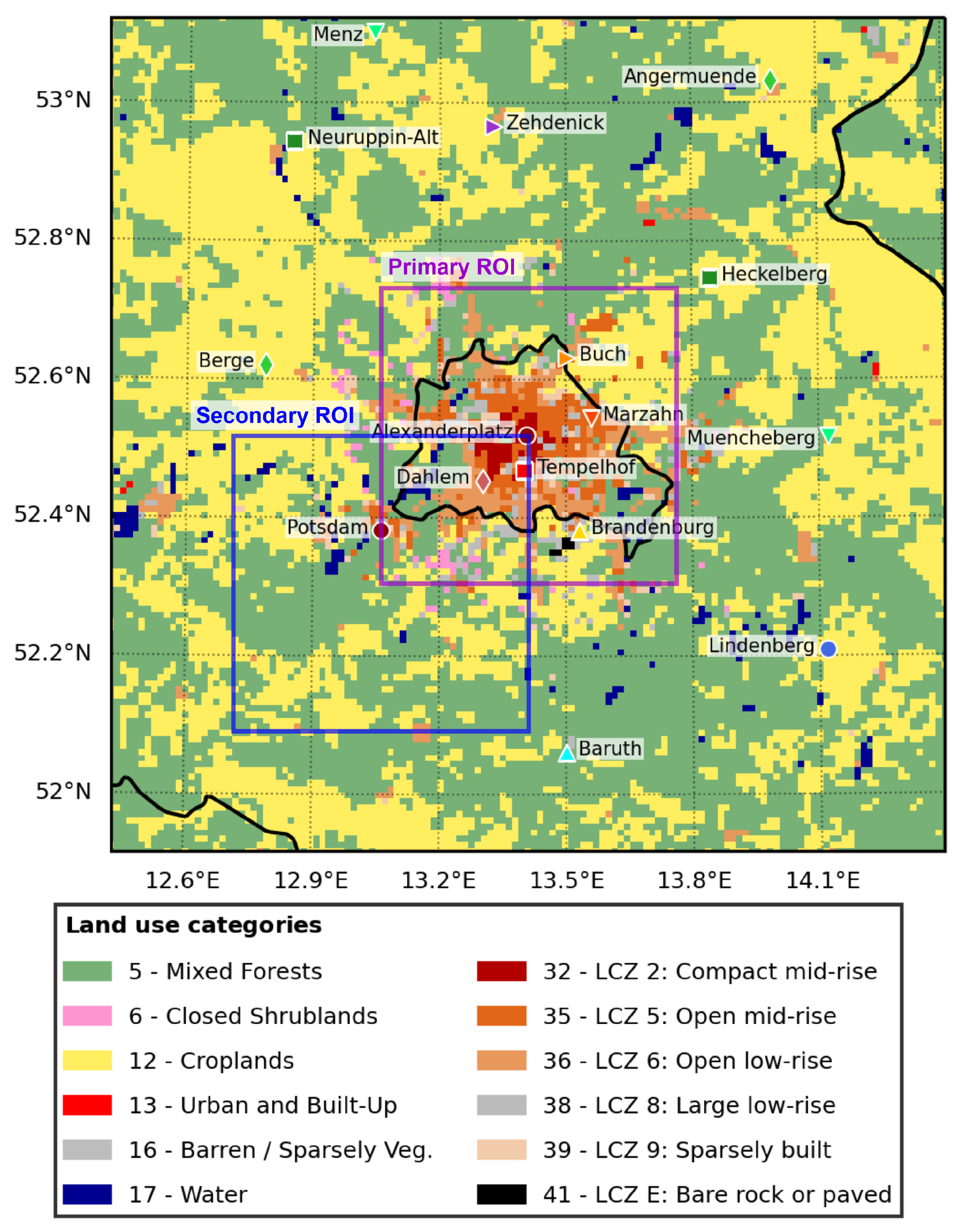

2.1. Test Case

2.2. The Predictor: SEVIRI Land Surface Temperature

2.3. The Predictand: WRF Near-Surface Air Temperature

2.4. Data Preprocessing

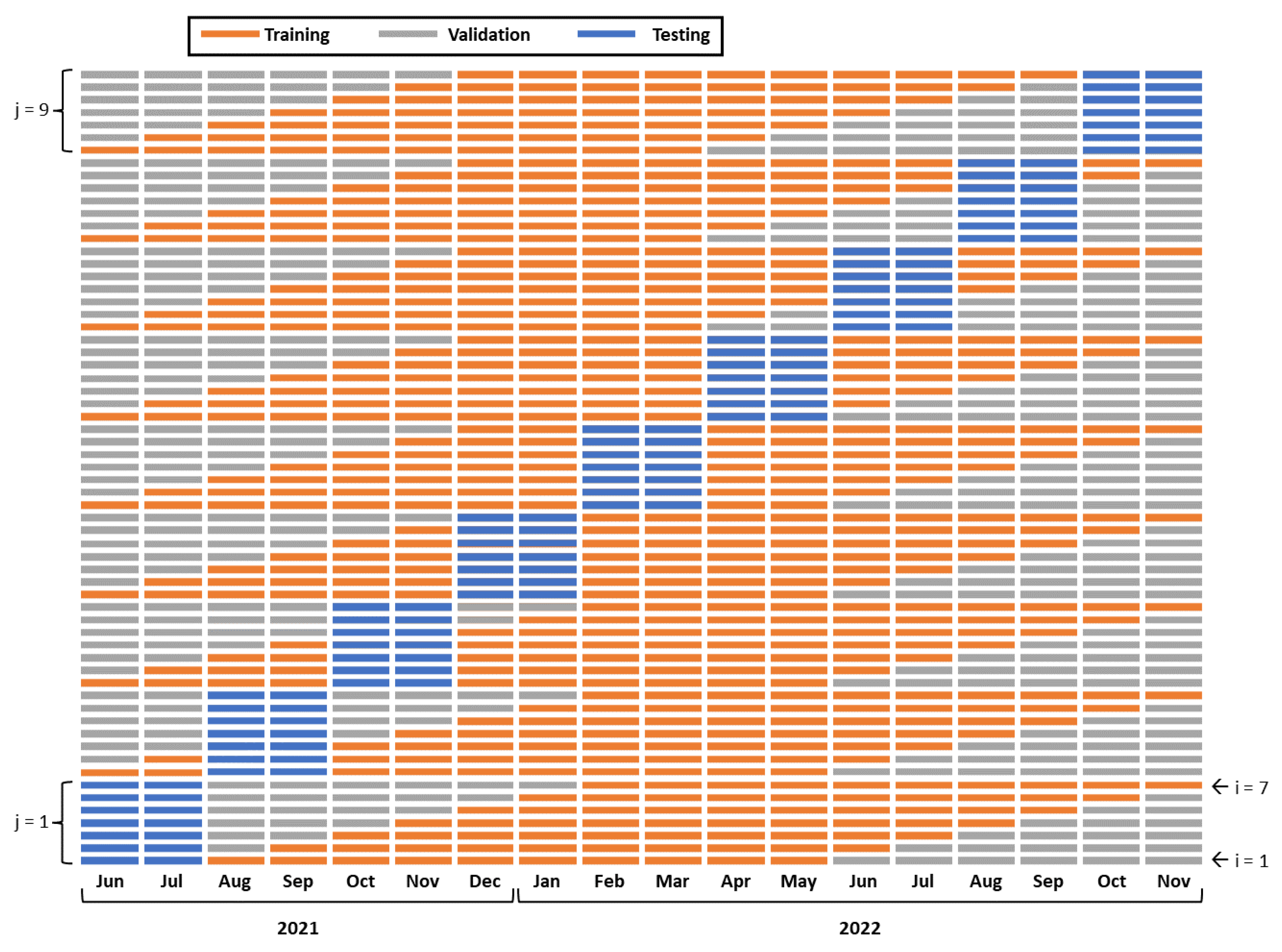

2.5. Data Splitting

2.6. Statistical Downscaling Models

2.6.1. Linear Regression Model

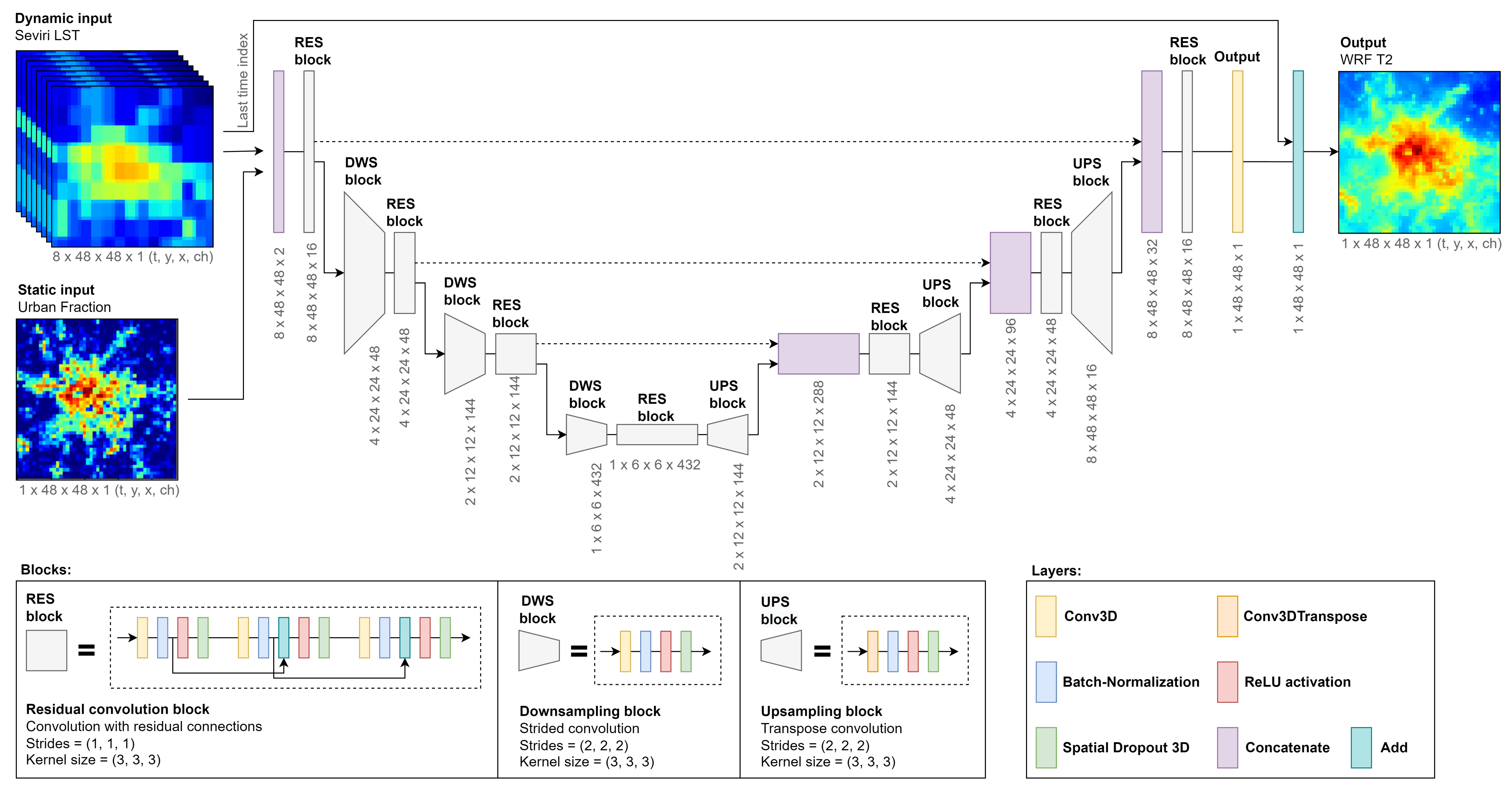

2.6.2. Deep Learning Model

3. Results and Discussion

3.1. Input Uncertainty

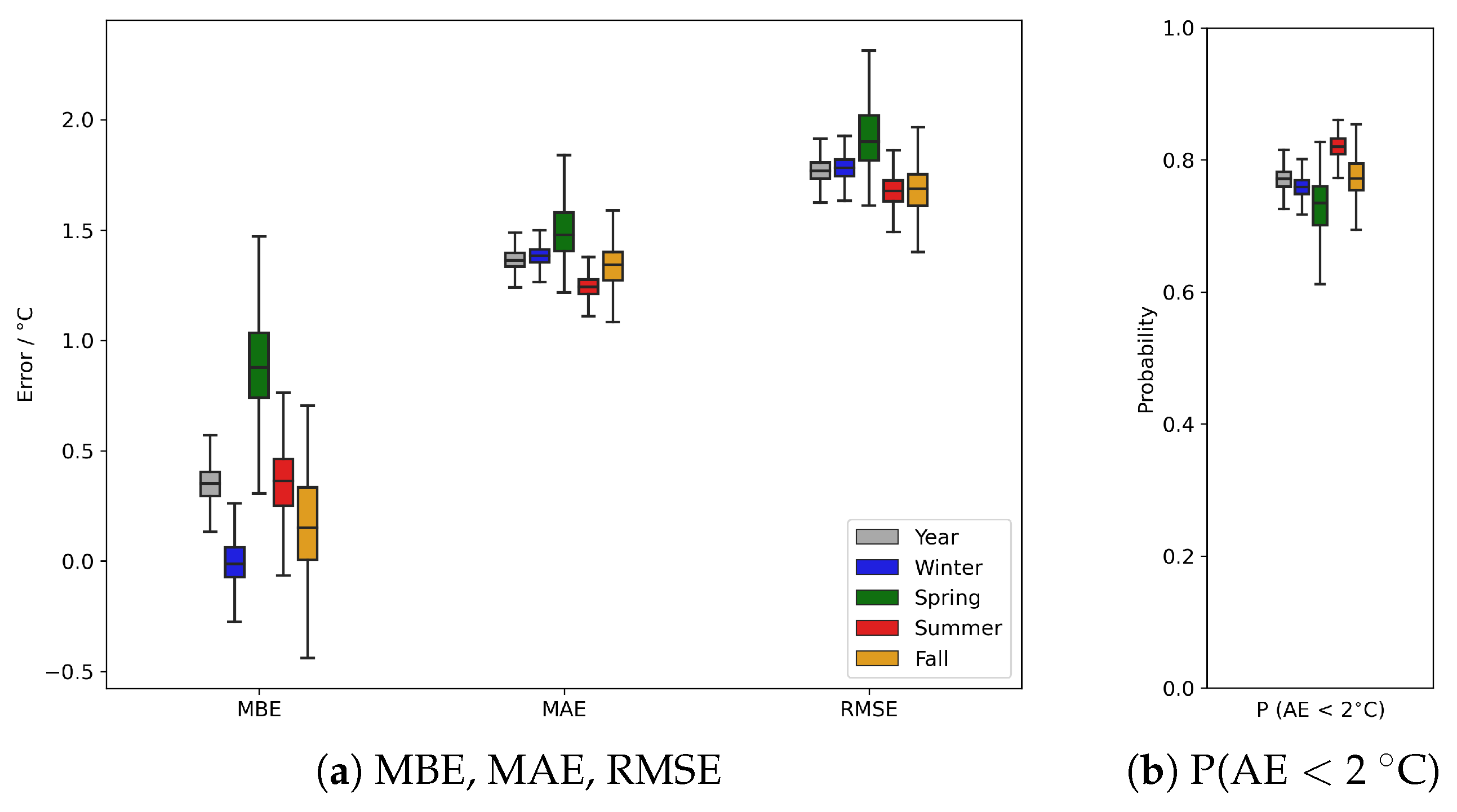

3.2. Overall Model Error and Accuracy Metrics

- Mean bias error:

- Mean absolute error:

- Root mean square error:

- R-squared:

- Peak signal-to-noise ratio:

- P(AE < 2 °C), the probability of getting an absolute error (AE) that is smaller than 2 °C:
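The scalar metrics listed above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function name `evaluation_metrics`, the sign convention for the bias (prediction minus reference), the use of the coefficient of determination for R-squared, and the use of the reference data range as the PSNR peak value are all assumptions made for this example.

```python
import numpy as np

def evaluation_metrics(y_true, y_pred, threshold=2.0):
    """Scalar error metrics between reference and predicted temperatures (deg C)."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    err = y_pred - y_true                      # assumed sign: prediction minus reference
    abs_err = np.abs(err)
    mse = np.mean(err ** 2)
    ss_res = np.sum(err ** 2)                  # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    data_range = y_true.max() - y_true.min()   # assumed peak value for PSNR
    return {
        "MBE": err.mean(),
        "MAE": abs_err.mean(),
        "RMSE": np.sqrt(mse),
        "R2": 1.0 - ss_res / ss_tot,           # coefficient of determination (assumed)
        "PSNR": 10.0 * np.log10(data_range ** 2 / mse),
        "P(AE<2C)": np.mean(abs_err < threshold),
    }

# Hypothetical toy values, for illustration only
metrics = evaluation_metrics([0.0, 10.0, 20.0, 30.0], [1.0, 10.0, 19.0, 33.0])
```

Other conventions (for example, squared Pearson correlation for R-squared, or a fixed peak value for PSNR) would change the corresponding entries, so the definitions should be checked against the full text before comparing numbers.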

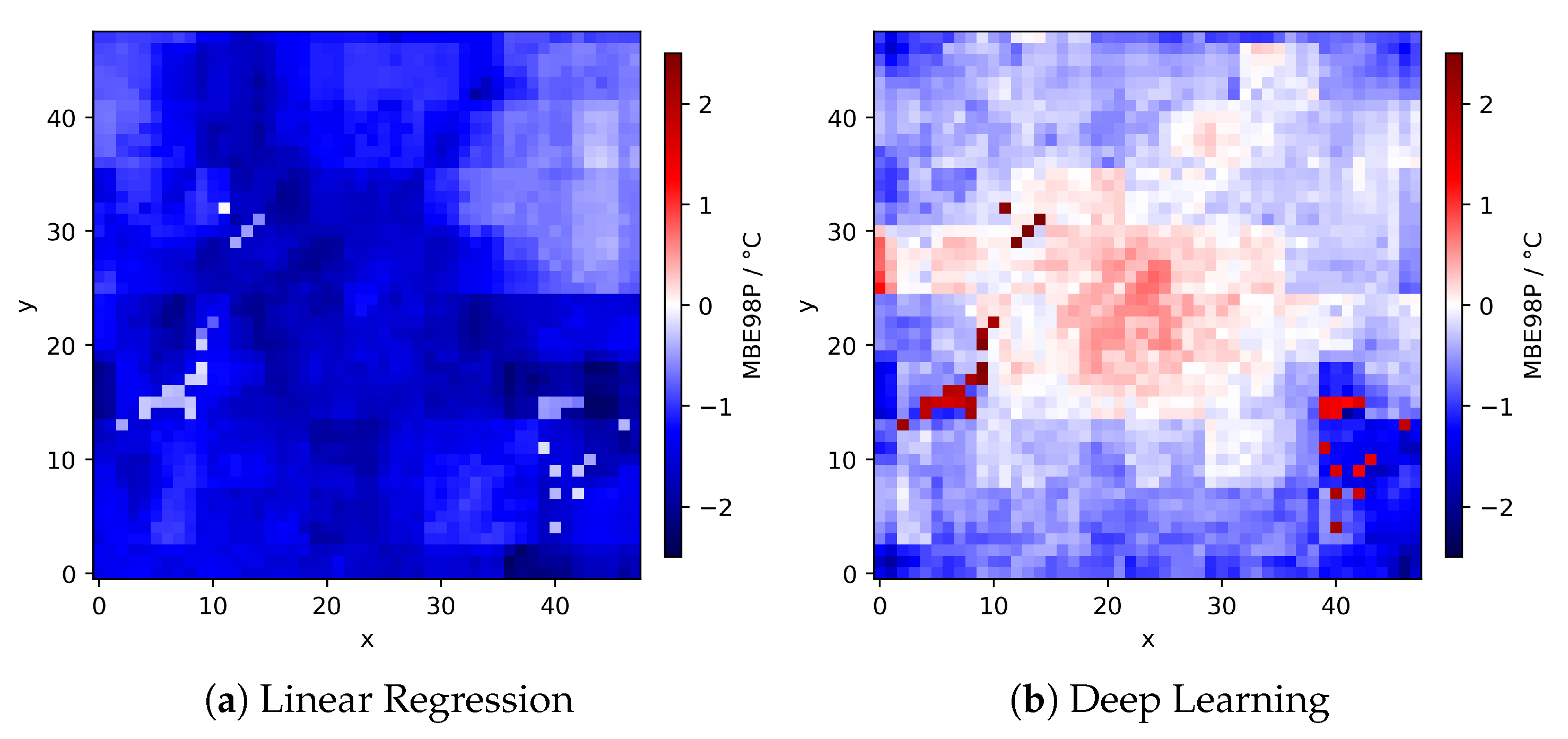

- Mean bias error map:

- Mean absolute error map:

- Root mean square error map:
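The error maps differ from the scalar metrics only in the axis over which the errors are aggregated: time is averaged out, and one value remains per grid cell. The sketch below assumes arrays laid out as (time, y, x) and the same prediction-minus-reference sign convention; the function name `error_maps` is hypothetical.

```python
import numpy as np

def error_maps(y_true, y_pred):
    """Per-pixel error maps from arrays shaped (time, y, x)."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return {
        "MBE": err.mean(axis=0),                   # mean bias per grid cell
        "MAE": np.abs(err).mean(axis=0),           # mean absolute error per grid cell
        "RMSE": np.sqrt((err ** 2).mean(axis=0)),  # root mean square error per grid cell
    }

# Hypothetical toy stack: 2 time steps on a 1 x 2 grid
reference = np.zeros((2, 1, 2))
prediction = np.array([[[1.0, -2.0]], [[3.0, 2.0]]])
maps = error_maps(reference, prediction)
```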

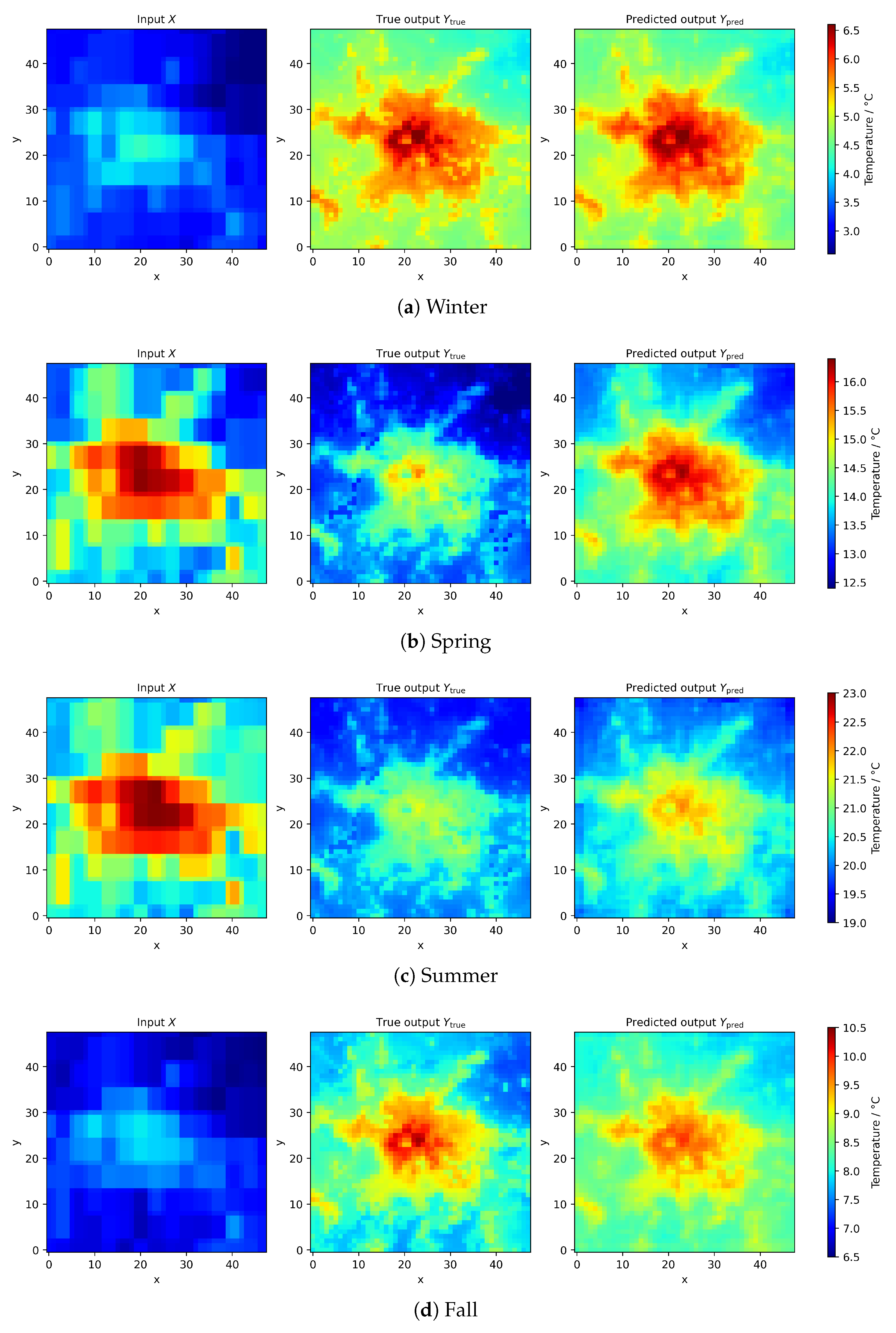

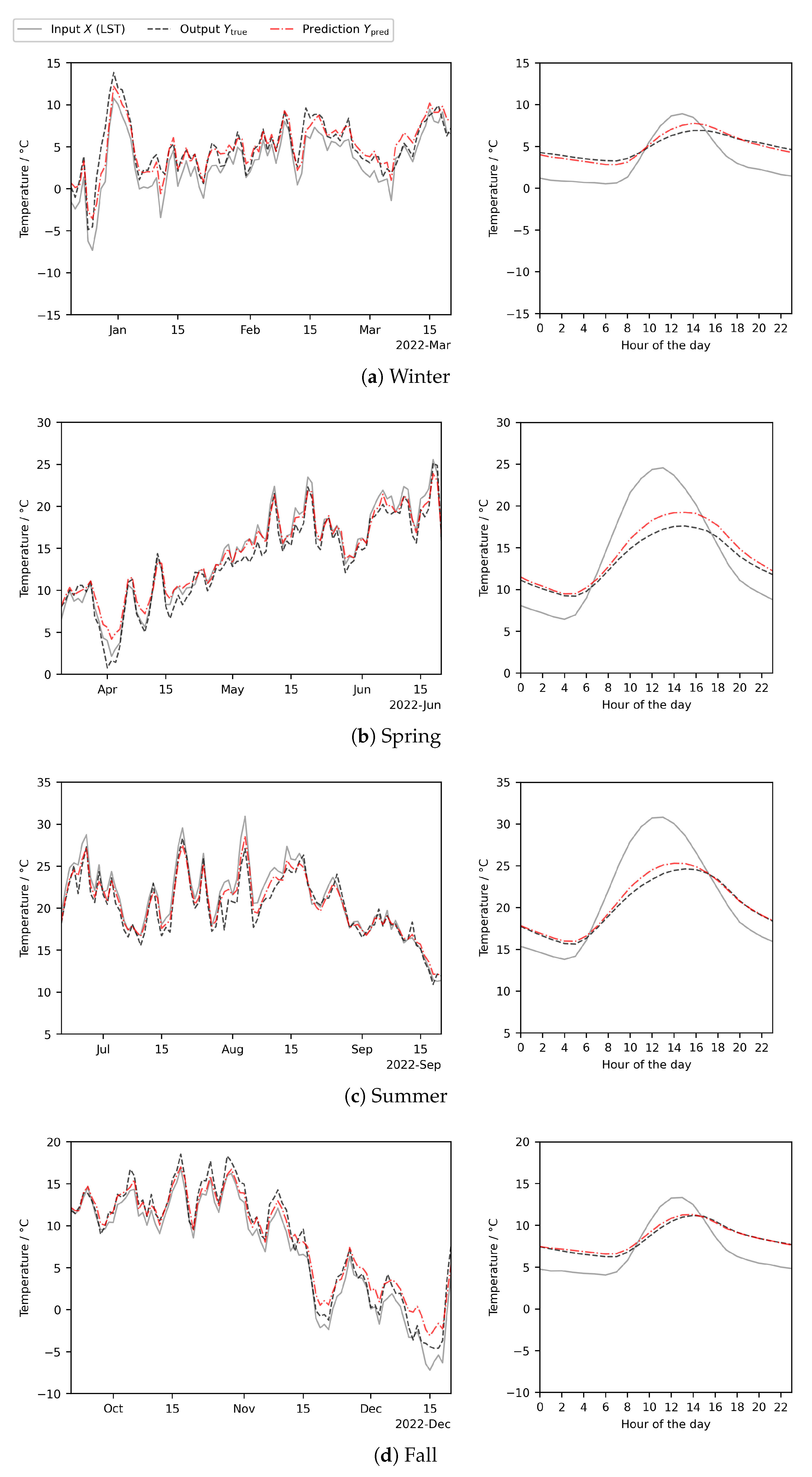

3.3. Model Evaluation

3.4. Seasonal Variability of the Model Performance

3.5. Generalizability of the Model

4. Conclusions and Outlook

4.1. Conclusions

- The proposed application is original: nowcasting of high-resolution near-surface air temperature (WRF emulation) in near real time, using high-frequency but low-resolution SEVIRI LST images as input;

- The proposed deep learning model implements a novel space-time approach, where multiple time steps are fed into the model through 3D convolution layers;

- Clear superiority of the proposed deep learning model is demonstrated based on a comprehensive set of evaluation metrics, in comparison to a finely tuned multiple linear regression benchmark;

- Generalizability is established by applying the deep learning model to domains that were mostly unseen during training.
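To illustrate what feeding multiple time steps through a 3D convolution means, the following framework-free NumPy sketch slides a single kernel over a (time, y, x) stack, so that each output value mixes neighbouring pixels and neighbouring time steps. It is not the authors' network: the function name `conv3d_valid`, the kernel size, and the toy input are assumptions, and a real layer would add channels, padding, a bias term, and learned weights.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv3d_valid(stack, kernel):
    """Cross-correlate a (time, y, x) stack with a (kt, ky, kx) kernel, no padding."""
    stack = np.asarray(stack, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    # windows has shape (T-kt+1, Y-ky+1, X-kx+1, kt, ky, kx)
    windows = sliding_window_view(stack, kernel.shape)
    # Weighted sum over each space-time window
    return np.einsum("tyxijk,ijk->tyx", windows, kernel)

# Hypothetical input: 4 consecutive 5 x 5 images, averaged by a 3 x 3 x 3 kernel
images = np.arange(4 * 5 * 5, dtype=float).reshape(4, 5, 5)
smoothed = conv3d_valid(images, np.full((3, 3, 3), 1.0 / 27.0))
```

With no padding, a stack of 4 time steps and a temporal kernel extent of 3 yields 2 output time steps, which shows how the temporal dimension is consumed alongside the spatial ones.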

4.2. Outlook

- Conduct the analysis over an extended period (several years) in order to reduce the bias;

- Use a separate model for each season: The seasonal variations of the model error, presumably attributable in large part to the seasonal variability of the input error, call for a clear differentiation between the seasons;

- Explore more effective ways to incorporate daily/seasonal patterns into the model: Fourier harmonics, etc.;

- Investigate other deep learning model architectures: convolutional LSTM, GAN, etc.;

- Include additional regularizers in the loss function: the ability to enforce physical conservation laws would be ideal in this context;

- Systematically optimize hyper-parameters: For instance, the regularization coefficients in the loss function;

- Use, as input to the deep learning model, further gridded static data maps to characterize anthropogenic heat, topography, land cover, and urban morphology (to the extent such data is also taken into account in the WRF simulation): For instance, road density (e.g., from OpenStreetMap), population density, average building height, roughness length, land/water mask, NDVI (Normalized Difference Vegetation Index), terrain elevation, etc.;

- Add LST data from non-geostationary satellites: MODIS, ASTER, etc.;

- Apply to short-term forecasting: with minimal modifications, use the model to forecast near-surface air temperature a few hours ahead; this forward-looking capability will enable us to cover the short latency that currently exists in the availability of the SEVIRI LST data.
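As one possible reading of the Fourier-harmonics item above, daily and seasonal cycles could be encoded as sine/cosine pairs and supplied to the model as extra inputs. The sketch below is only an illustration of that idea: the function name `fourier_time_features`, the choice of 24 h and 365.25 d periods, and the number of harmonics are assumptions, not settings taken from the study.

```python
import numpy as np

def fourier_time_features(hours_since_start, n_harmonics=2):
    """Sine/cosine harmonics of the daily and annual cycles for each timestamp."""
    t = np.asarray(hours_since_start, dtype=float)
    periods = {"day": 24.0, "year": 24.0 * 365.25}  # period lengths in hours (assumed)
    columns = []
    for period in periods.values():
        for k in range(1, n_harmonics + 1):
            phase = 2.0 * np.pi * k * t / period
            columns.append(np.sin(phase))
            columns.append(np.cos(phase))
    # One row per timestamp, 2 * n_harmonics columns per cycle
    return np.stack(columns, axis=-1)

# Hypothetical timestamps at 0 h, 6 h and 12 h after the start of the record
features = fourier_time_features([0.0, 6.0, 12.0], n_harmonics=1)
```

Because the features are smooth and periodic, 23:00 and 00:00 end up close together, which a raw hour-of-day integer would not achieve.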

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| WRF Parameter | d01 | d02 | d03 |
|---|---|---|---|
| Lateral grid spacing | 9 km | 3 km | 1 km |
| Starting index in parent grid | 1 | 36 | 37 |
| Number of lateral grid points | 110 | 118 | 136 |
| Vertical first full layer thickness | 10 m | 10 m | 10 m |
| Number of vertical grid points | 61 | 61 | 61 |

| Metric | MLR Model | DL Model |
|---|---|---|
| MBE | 0.195 °C | 0.350 °C |
| MBE98P (98th percentile) | −1.47 °C | −0.430 °C |
| MAE | 1.49 °C | 1.36 °C |
| MAE98P (98th percentile) | 1.99 °C | 1.80 °C |
| RMSE | 1.91 °C | 1.77 °C |
| RMSE98P (98th percentile) | 2.50 °C | 2.24 °C |
| R² | 0.944 | 0.952 |
| PSNR | 28.4 | 29.0 |
| SSIM | 0.969 | 0.977 |
| P(AE < 2 °C) | 0.721 | 0.770 |

| Metric | Winter | Spring | Summer | Fall |
|---|---|---|---|---|
| MBE (°C) | −0.004 | 0.886 | 0.349 | 0.159 |
| MAE (°C) | 1.38 | 1.5 | 1.25 | 1.34 |
| RMSE (°C) | 1.78 | 1.92 | 1.68 | 1.68 |
| R² | 0.791 | 0.905 | 0.890 | 0.941 |
| PSNR | 29 | 28.3 | 29.4 | 29.5 |
| SSIM | 0.982 | 0.974 | 0.978 | 0.976 |
| P(AE < 2 °C) | 0.759 | 0.728 | 0.819 | 0.774 |

| Metric | Primary ROI | SW ROI | SE ROI | NW ROI | NE ROI |
|---|---|---|---|---|---|
| MBE (°C) | 0.350 | 0.607 | 0.625 | 0.454 | 0.471 |
| MBE98P (°C) | −0.430 | −0.575 | −0.604 | −0.881 | −0.825 |
| MAE (°C) | 1.36 | 1.54 | 1.53 | 1.46 | 1.47 |
| MAE98P (°C) | 1.80 | 1.68 | 1.83 | 1.75 | 1.86 |
| RMSE (°C) | 1.77 | 1.98 | 1.96 | 1.88 | 1.89 |
| RMSE98P (°C) | 2.24 | 2.12 | 2.31 | 2.21 | 2.39 |
| R² | 0.952 | 0.940 | 0.943 | 0.947 | 0.947 |
| PSNR | 29.0 | 28.0 | 28.1 | 28.5 | 28.4 |
| SSIM | 0.977 | 0.977 | 0.977 | 0.974 | 0.974 |
| P(AE < 2 °C) | 0.770 | 0.711 | 0.718 | 0.739 | 0.739 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Afshari, A.; Vogel, J.; Chockalingam, G. Statistical Downscaling of SEVIRI Land Surface Temperature to WRF Near-Surface Air Temperature Using a Deep Learning Model. Remote Sens. 2023, 15, 4447. https://doi.org/10.3390/rs15184447
