Identifying and Monitoring Gardens in Urban Areas Using Aerial and Satellite Imagery

Abstract

1. Introduction

2. Materials and Methods

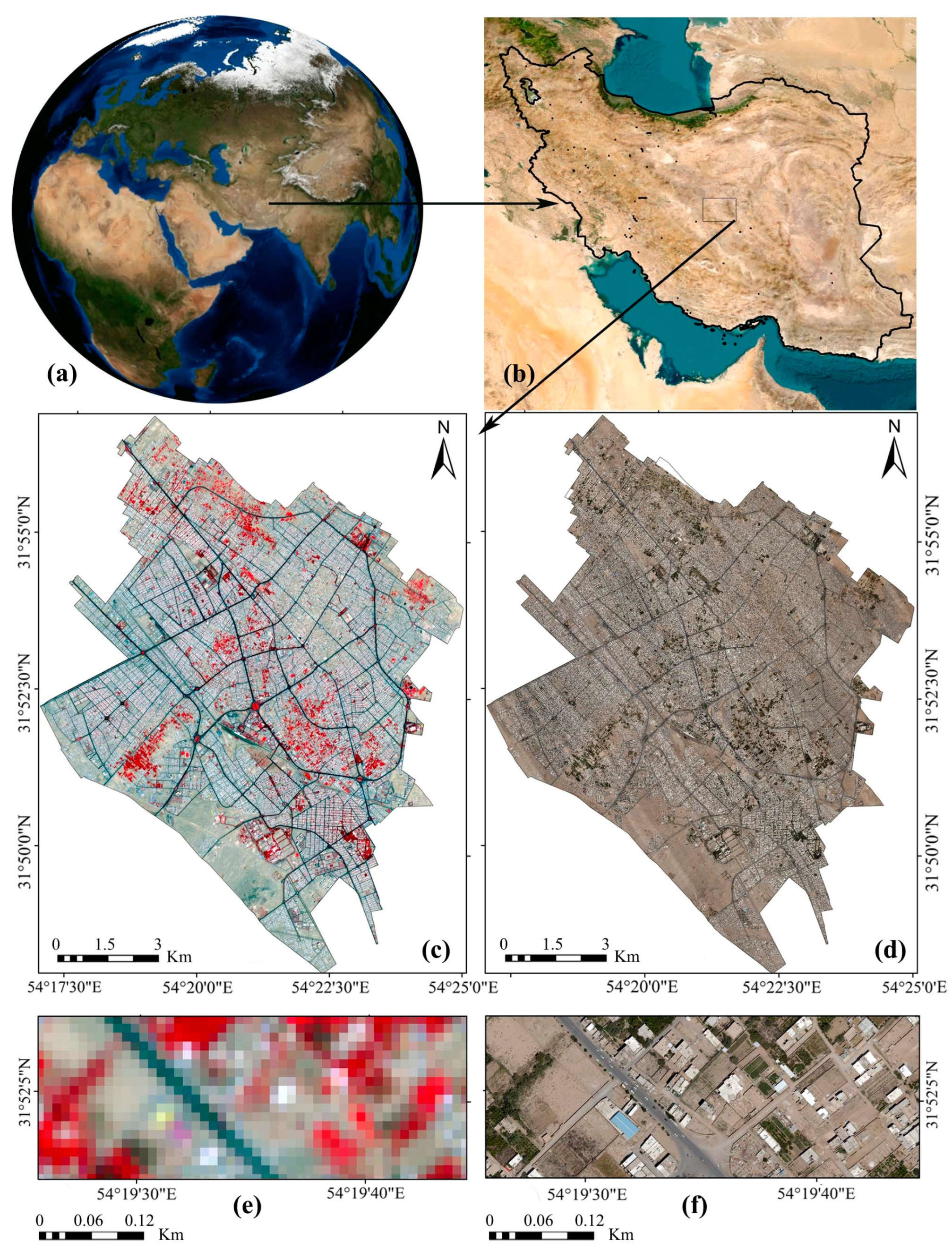

2.1. Study Region

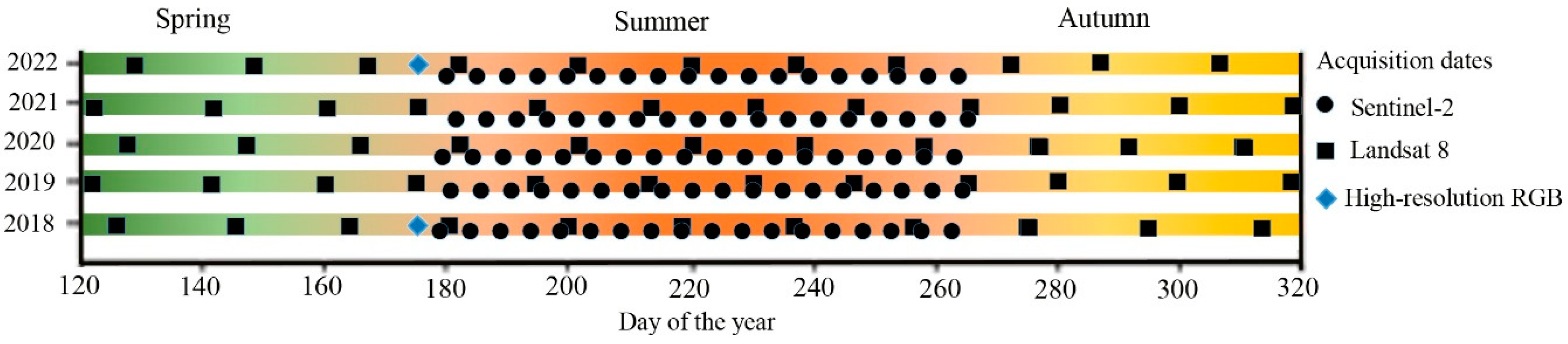

2.2. Datasets and Preprocessing

2.3. Methods

2.3.1. Sentinel-2 and Aerial Images Fusion

The GS method involves the following steps:

- (1) Simulating a PAN image from the spectral bands with low spatial resolution.
- (2) Applying the GS transformation to the simulated PAN image and the spectral bands, using the simulated PAN band as the first band.
- (3) Replacing the first GS band with the high spatial resolution PAN band.
- (4) Applying the inverse GS transformation to obtain the pan-sharpened spectral bands.
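The four GS steps can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the Sentinel-2 bands are assumed to be already resampled to the aerial image grid, the aerial image is assumed to act as the high-resolution PAN band, and the simulated PAN is taken as the equal-weight mean of the spectral bands (one common choice). The function name `gs_pansharpen` is hypothetical.

```python
import numpy as np


def gs_pansharpen(ms_up: np.ndarray, pan: np.ndarray) -> np.ndarray:
    """Gram-Schmidt pan-sharpening (illustrative sketch).

    ms_up: (bands, H, W) low-resolution spectral bands resampled to the PAN grid.
    pan:   (H, W) high-resolution band (assumed here to be the aerial image).
    """
    n = ms_up.shape[0]
    X = ms_up.reshape(n, -1).astype(np.float64)
    p = pan.reshape(-1).astype(np.float64)
    mu = X.mean(axis=1)

    # Step 1: simulate a low-resolution PAN band (assumed: equal-weight band mean).
    sim = X.mean(axis=0)

    # Step 2: forward GS transform with the simulated PAN as the first component.
    comps = [sim - sim.mean()]
    phi = np.zeros((n, n))  # phi[k, j]: projection coefficient of band k on component j
    for k in range(n):
        centred = X[k] - mu[k]
        resid = centred.copy()
        for j in range(k + 1):
            g = comps[j]
            phi[k, j] = np.dot(centred, g) / np.dot(g, g)
            resid -= phi[k, j] * g
        comps.append(resid)

    # Step 3: replace the first component by the PAN band, rescaled to the
    # standard deviation of that component (both have zero mean).
    comps[0] = (p - p.mean()) / p.std() * comps[0].std()

    # Step 4: inverse GS transform back to the spectral bands.
    out = np.empty_like(X)
    for k in range(n):
        out[k] = mu[k] + comps[k + 1] + sum(phi[k, j] * comps[j] for j in range(k + 1))
    return out.reshape(ms_up.shape)
```

Passing the simulated PAN itself as `pan` returns the input bands unchanged, which is a quick check that the forward and inverse transforms are consistent; band means are preserved in every case because all GS components have zero mean.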

The HPF method involves the following steps:

- (1) Applying a high-pass filter (HPF) to the high spatial resolution PAN image.
- (2) Adding the filtered image to each band of the MS image, using a weighting factor based on the standard deviation of the MS bands.
- (3) Matching the histogram of the fused image to that of the original multispectral image.
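A comparable sketch of the three HPF steps is given below. Several details are assumptions, not taken from the text: the high-pass component is computed as PAN minus a square box-filtered (mean) version of itself, the weight for band k is σ(MS_k)/σ(HPF)·m with a hypothetical modulation factor m, and step (3) uses rank-based (cumulative frequency) histogram matching. The kernel size and m are illustrative values, not the study's settings, and `box_lowpass`, `match_histogram` and `hpf_fusion` are hypothetical names.

```python
import numpy as np


def box_lowpass(img: np.ndarray, size: int) -> np.ndarray:
    """Mean filter with an odd square kernel and reflected borders."""
    pad = size // 2
    padded = np.pad(img, pad, mode="reflect")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return windows.mean(axis=(-2, -1))


def match_histogram(src: np.ndarray, ref: np.ndarray) -> np.ndarray:
    """Map src values onto ref values that have the same cumulative frequency."""
    _, s_idx, s_counts = np.unique(src.ravel(), return_inverse=True, return_counts=True)
    r_vals, r_counts = np.unique(ref.ravel(), return_counts=True)
    s_cdf = np.cumsum(s_counts) / src.size
    r_cdf = np.cumsum(r_counts) / ref.size
    return np.interp(s_cdf, r_cdf, r_vals)[s_idx].reshape(src.shape)


def hpf_fusion(ms_up: np.ndarray, pan: np.ndarray, kernel: int = 5, m: float = 0.5) -> np.ndarray:
    """HPF fusion sketch. ms_up: (bands, H, W) on the PAN grid; pan: (H, W)."""
    pan = pan.astype(np.float64)
    # Step 1: high-pass component = PAN minus its low-pass (box-filtered) version.
    hp = pan - box_lowpass(pan, kernel)
    fused = np.empty(ms_up.shape, dtype=np.float64)
    for k, band in enumerate(ms_up.astype(np.float64)):
        # Step 2: add the detail with a weight tied to the band's standard deviation
        # (m is an assumed modulation factor, not a value reported in the study).
        weight = band.std() / hp.std() * m
        # Step 3: match the fused band's histogram to the original band.
        fused[k] = match_histogram(band + weight * hp, band)
    return fused
```

Because of step (3), each fused band keeps the value distribution of its source band, while the spatial arrangement of those values follows the added high-frequency detail.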

2.3.2. Classification of Images Using Object-Oriented Methods

2.3.3. Estimation of Vegetation Index

2.3.4. Identification of New Construction in Garden Areas

2.3.5. Investigating the Effect of Destruction of Gardens on the LST

3. Results

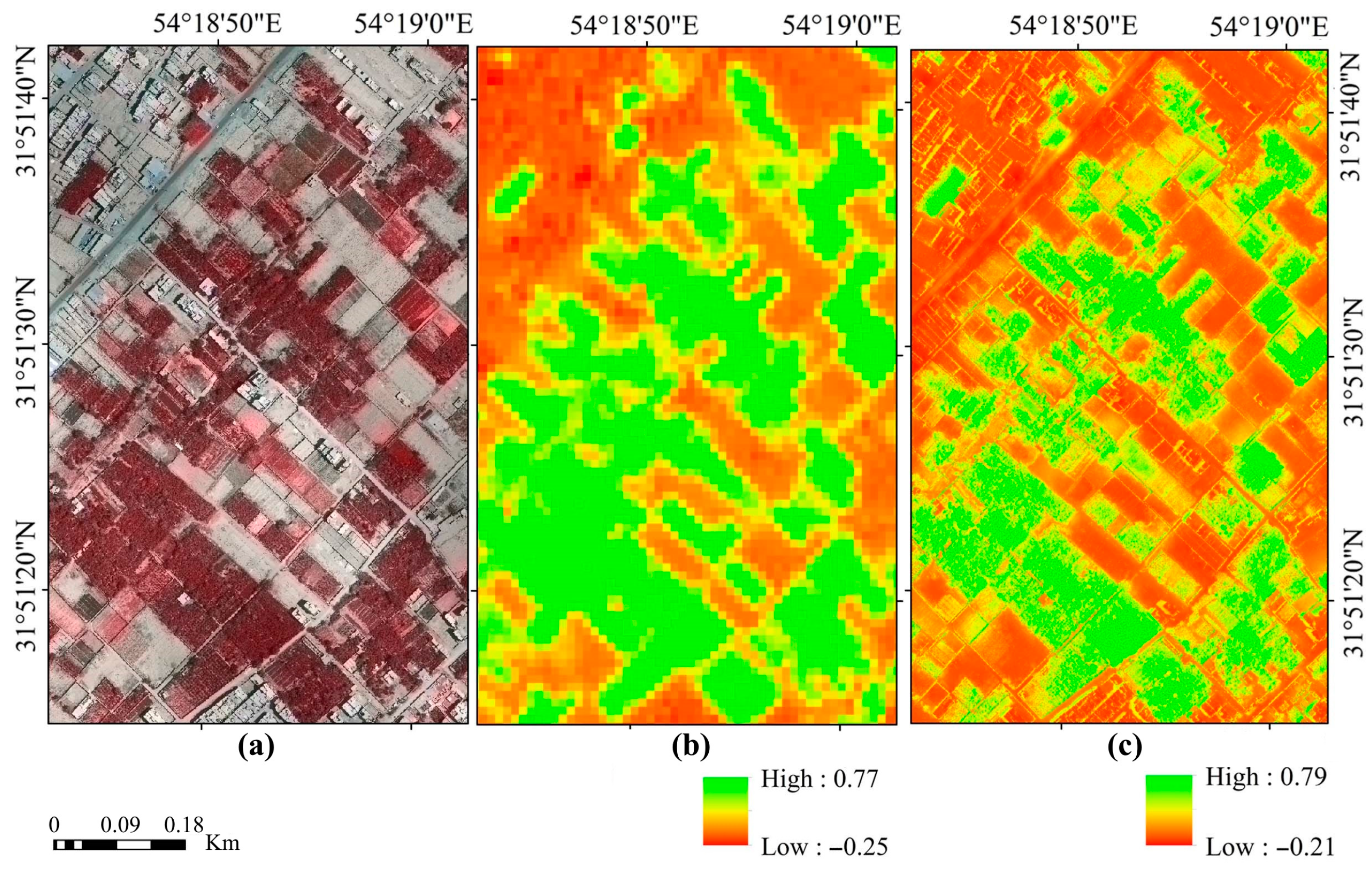

3.1. Comparison of Fusion Methods

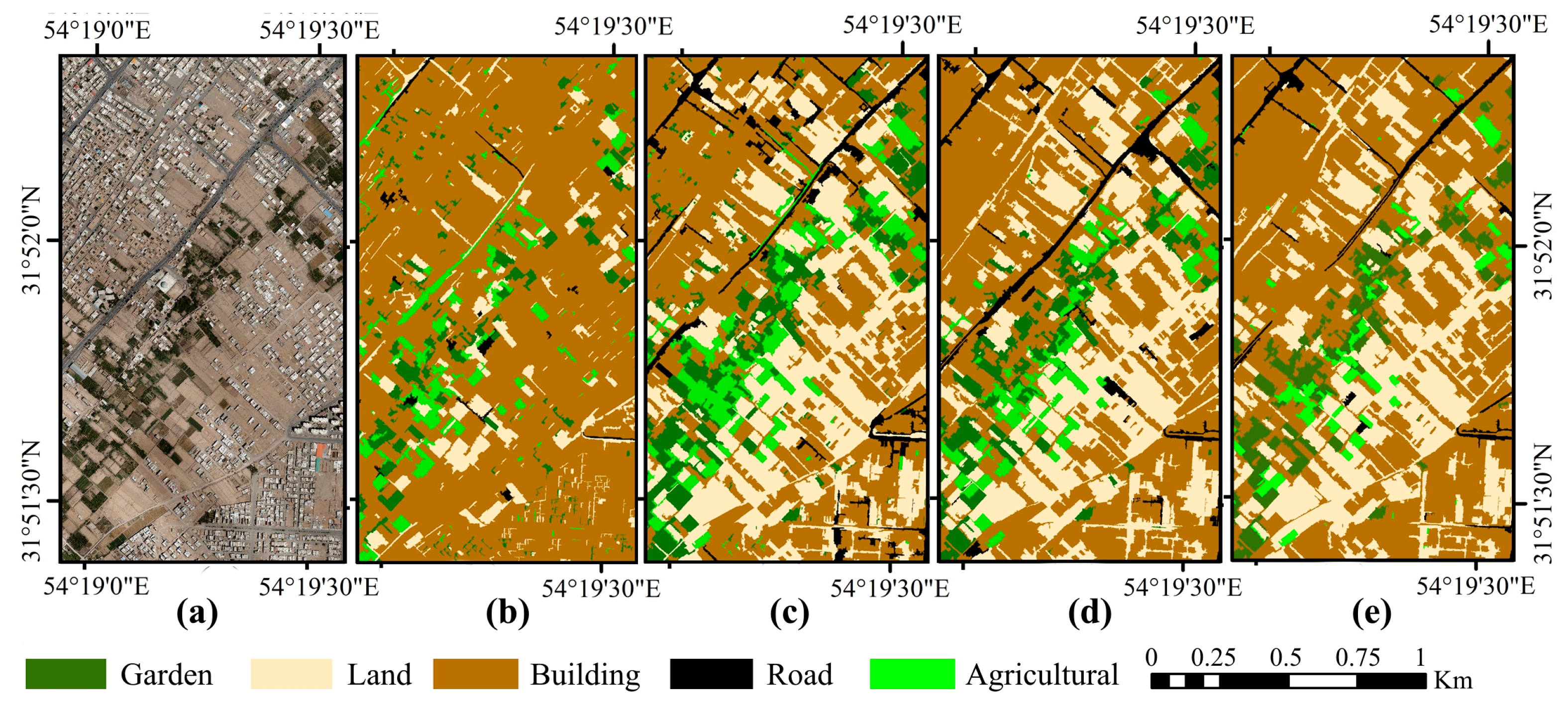

3.2. Comparison of Object-Oriented Classification Methods

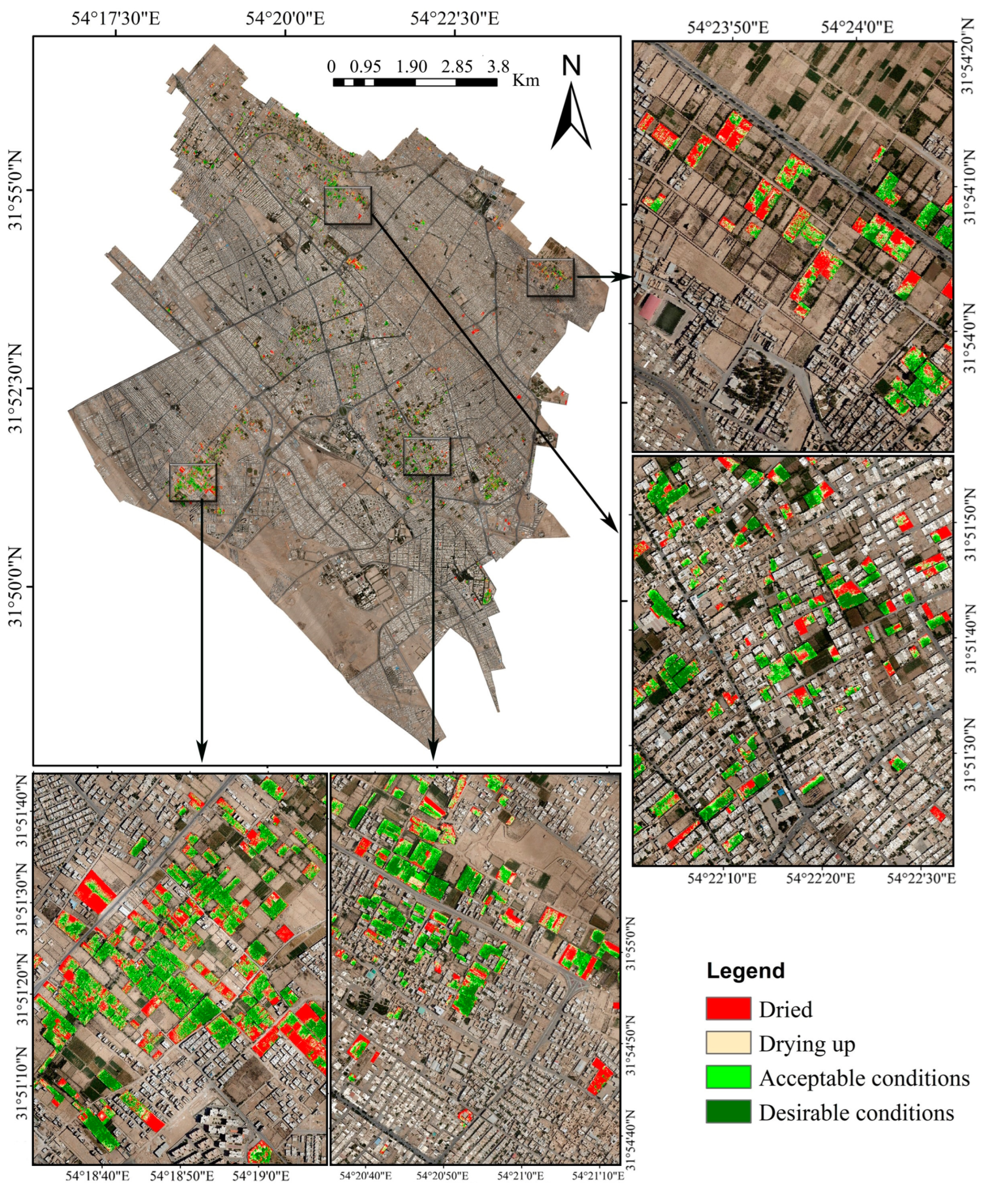

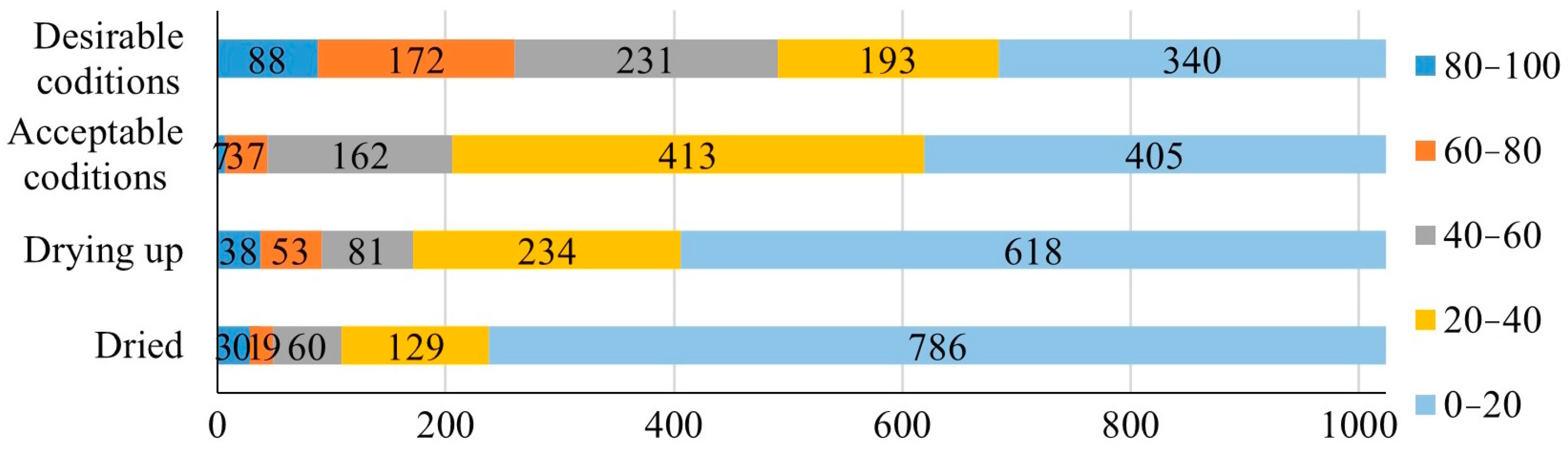

3.3. Monitoring the State of Vegetation in Gardens

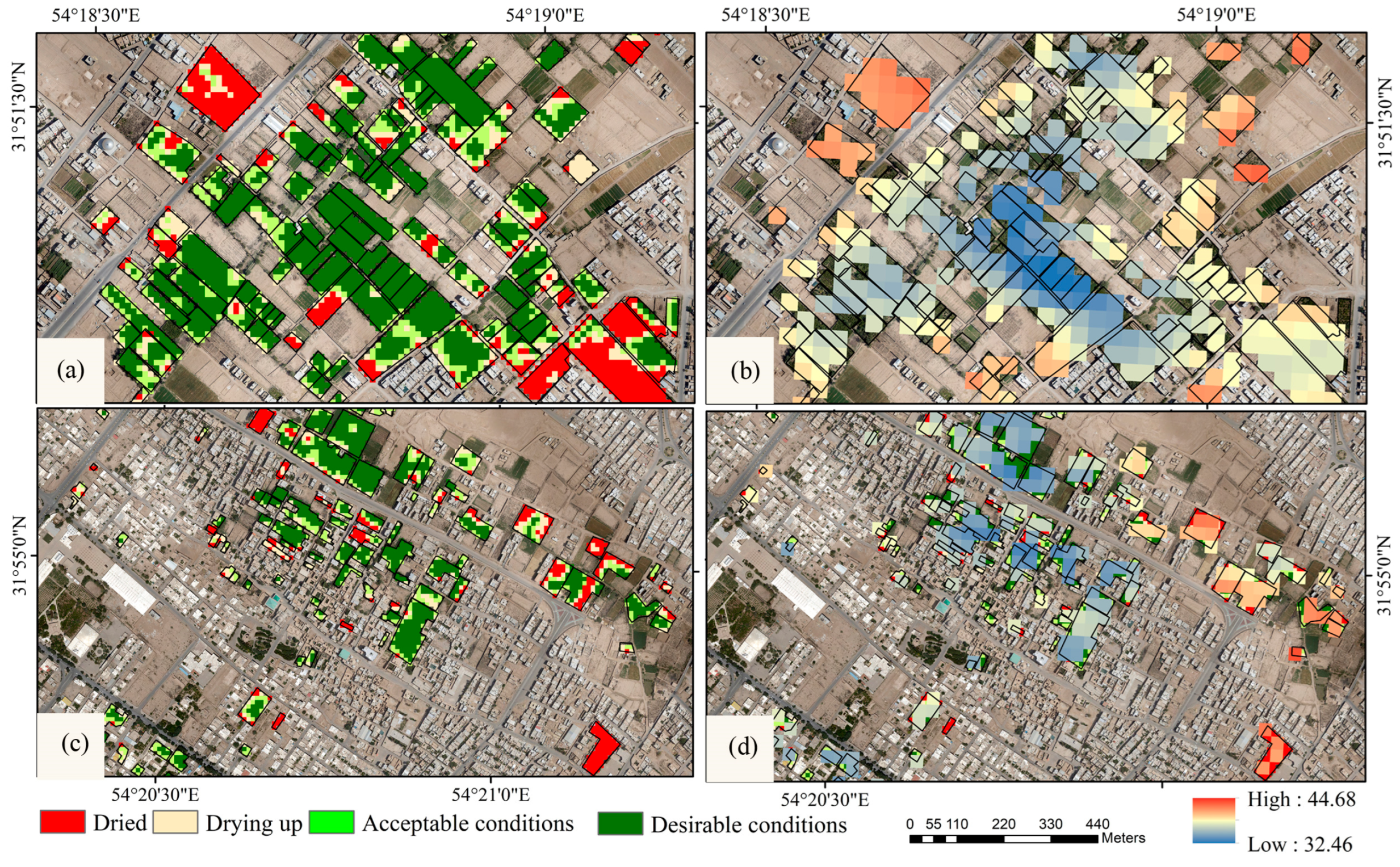

3.4. Identification of New Construction in Gardens

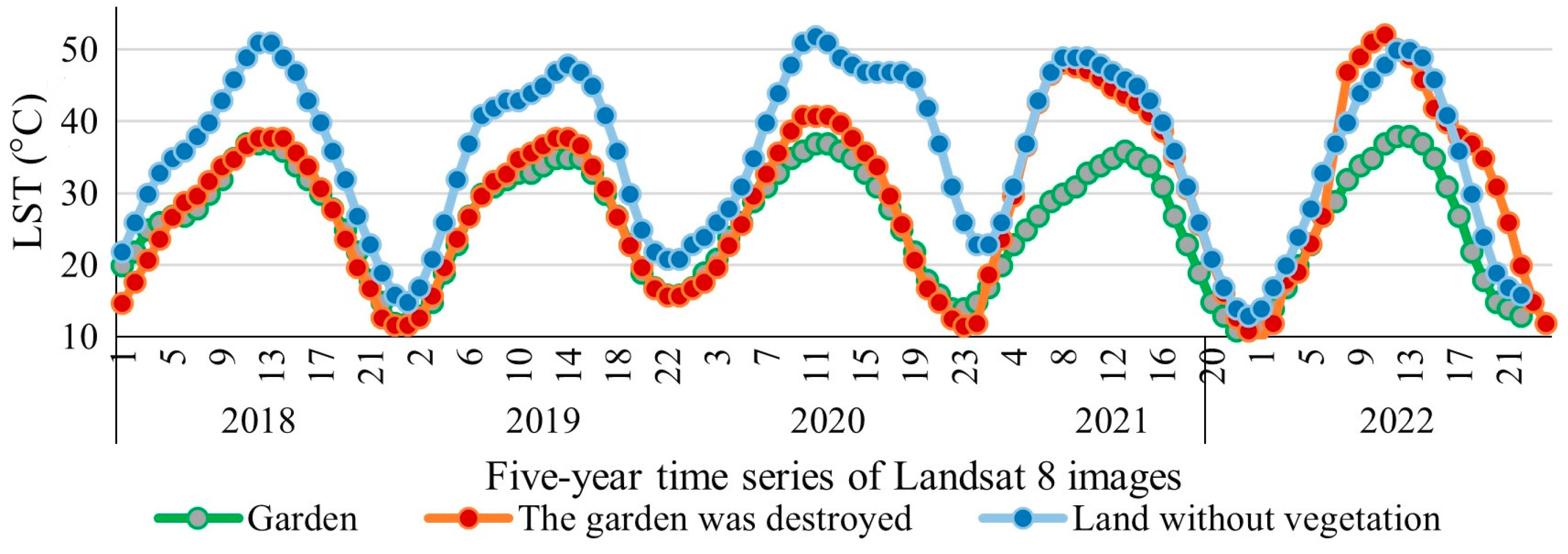

3.5. Investigating the Effect of Garden Destruction on the LST

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Coefficient | C0 | C1 | C2 | C3 | C4 | C5 | C6 |
|---|---|---|---|---|---|---|---|
| Value | −0.268 | 1.378 | 0.183 | 54.300 | −2.238 | −129.200 | 16.400 |
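Reading "/" as a decimal separator, these seven coefficients fit the common split-window form LST = Ti + c1(Ti − Tj) + c2(Ti − Tj)² + c0 + (c3 + c4·W)(1 − ε) + (c5 + c6·W)·Δε, where Ti and Tj are the brightness temperatures (K) of two adjacent thermal bands, ε and Δε are the mean and the difference of their emissivities, and W is the total atmospheric water vapour (g/cm²). The sketch below assumes this form and a sensor pair such as Landsat 8 TIRS bands 10 and 11; neither is stated in the surviving text, and `split_window_lst` is a hypothetical name.

```python
import numpy as np

# Coefficients c0..c6 from the table, with decimal separators normalised.
C = (-0.268, 1.378, 0.183, 54.300, -2.238, -129.200, 16.400)


def split_window_lst(ti, tj, ei, ej, w):
    """Split-window land surface temperature (K), assuming the form described above.

    ti, tj: brightness temperatures (K) of the two thermal bands
    ei, ej: land surface emissivities of the two bands
    w:      total atmospheric water vapour (g/cm^2)
    Accepts scalars or NumPy arrays of matching shape.
    """
    c0, c1, c2, c3, c4, c5, c6 = C
    ti = np.asarray(ti, dtype=np.float64)
    tj = np.asarray(tj, dtype=np.float64)
    ei = np.asarray(ei, dtype=np.float64)
    ej = np.asarray(ej, dtype=np.float64)
    dt = ti - tj                 # brightness temperature difference
    e_mean = (ei + ej) / 2.0     # mean emissivity
    de = ei - ej                 # emissivity difference
    return (ti + c1 * dt + c2 * dt ** 2 + c0
            + (c3 + c4 * w) * (1.0 - e_mean)
            + (c5 + c6 * w) * de)
```

For identical band temperatures and unit emissivities all correction terms vanish, so the result reduces to Ti + c0, which is a convenient check when wiring the function into a processing chain.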

| Metric | Brovey | IHS | Ehlers | HPF | GS | PRM | CN | NNDiffuse | HSC | PSC |
|---|---|---|---|---|---|---|---|---|---|---|
| CC | 0.92 | 0.97 | 0.97 | 0.88 | 0.97 | 0.94 | 0.86 | 0.96 | 0.91 | 0.96 |
| RMSE | 14.61 | 13.5 | 12.3 | 15.1 | 13.5 | 13.82 | 17.5 | 13.8 | 18.37 | 13.79 |
| ERGAS | 1.97 | 1.87 | 1.73 | 2.39 | 1.73 | 2.16 | 2.61 | 1.95 | 2.37 | 1.98 |
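The three quality measures in the fusion comparison can be computed as below. The definitions are common ones and are assumed rather than taken from the text: CC is the per-band Pearson correlation averaged over bands, RMSE is taken over all bands and pixels, and ERGAS = 100·(h/l)·sqrt(mean over k of (RMSE_k/μ_k)²), where h/l is the ratio of the high- to low-resolution pixel size and μ_k is the mean of reference band k. The reference is whichever image the fused product is compared against (for example, the original spectral bands on the same grid); `fusion_metrics` is a hypothetical name.

```python
import numpy as np


def fusion_metrics(ref: np.ndarray, fused: np.ndarray, ratio: float) -> dict:
    """CC, RMSE and ERGAS between a reference and a fused image, both (bands, H, W).

    ratio: high-resolution pixel size divided by low-resolution pixel size (h/l).
    """
    r = ref.reshape(ref.shape[0], -1).astype(np.float64)
    f = fused.reshape(fused.shape[0], -1).astype(np.float64)
    # Mean of the per-band Pearson correlation coefficients.
    cc = float(np.mean([np.corrcoef(rb, fb)[0, 1] for rb, fb in zip(r, f)]))
    # Root-mean-square error over all bands and pixels.
    rmse = float(np.sqrt(np.mean((r - f) ** 2)))
    # ERGAS: band-wise RMSE relative to the band mean, scaled by the resolution ratio.
    rmse_k = np.sqrt(np.mean((r - f) ** 2, axis=1))
    ergas = float(100.0 * ratio * np.sqrt(np.mean((rmse_k / r.mean(axis=1)) ** 2)))
    return {"CC": cc, "RMSE": rmse, "ERGAS": ergas}
```

Higher CC and lower RMSE and ERGAS indicate a fused product that stays closer to the reference, which is the reading applied to the table.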

| Method | Kappa Coefficient | Overall Accuracy |
|---|---|---|
| SVM | 89% | 86.2 |
| Bayes | 58% | 51.3 |
| KNN | 76% | 81.4 |
| RF | 87% | 83.1 |
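Overall accuracy and Cohen's kappa are both derived from the confusion matrix of the validation samples. In this minimal sketch (the function name `oa_and_kappa` is hypothetical), OA is the observed agreement p_o, the diagonal total divided by the number of samples, and κ = (p_o − p_e)/(1 − p_e), where p_e is the agreement expected by chance from the row and column totals.

```python
import numpy as np


def oa_and_kappa(cm) -> tuple:
    """Overall accuracy and Cohen's kappa from a square confusion matrix."""
    cm = np.asarray(cm, dtype=np.float64)
    n = cm.sum()
    p_o = np.trace(cm) / n                          # observed agreement = overall accuracy
    p_e = cm.sum(axis=0) @ cm.sum(axis=1) / n ** 2  # agreement expected by chance
    return float(p_o), float((p_o - p_e) / (1.0 - p_e))
```

For example, a two-class matrix [[45, 5], [10, 40]] gives OA = 0.85 and κ = 0.7, since the marginals yield p_e = 0.5.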

| NDVI | Name | Class |
|---|---|---|
| > 0.4 | Desirable conditions | Very Good |
| 0.3–0.4 | Acceptable conditions | Good |
| 0.2–0.3 | Drying up | Poor |
| < 0.2 | Dried | Very Poor |
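NDVI is (NIR − Red)/(NIR + Red), and the four condition classes above can be assigned with fixed thresholds. In this sketch the inputs are assumed to be surface reflectance (for Sentinel-2, B8 as NIR and B4 as Red would be the usual choice), values falling exactly on 0.2, 0.3 or 0.4 are assumed to go to the upper class, and pixels where NIR + Red = 0 are set to 0. The names `ndvi` and `ndvi_condition` are hypothetical.

```python
import numpy as np

# Class labels from the NDVI table, ordered from lowest to highest NDVI.
LABELS = np.array(["Very Poor", "Poor", "Good", "Very Good"])


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); pixels with NIR + Red == 0 are set to 0."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    total = nir + red
    return np.divide(nir - red, total, out=np.zeros_like(total), where=total != 0)


def ndvi_condition(values):
    """Map NDVI to the classes <0.2, 0.2-0.3, 0.3-0.4 and >0.4 (boundaries go up)."""
    return LABELS[np.digitize(values, [0.2, 0.3, 0.4])]
```

Applied per pixel to each acquisition date, this yields class maps whose changes over time can be tracked for the garden parcels.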

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Arabi Aliabad, F.; Ghafarian Malamiri, H.; Sarsangi, A.; Sekertekin, A.; Ghaderpour, E. Identifying and Monitoring Gardens in Urban Areas Using Aerial and Satellite Imagery. Remote Sens. 2023, 15, 4053. https://doi.org/10.3390/rs15164053
