1. Introduction

Airborne synthetic aperture radar (SAR) is a valuable tool for remote sensing and mapping, providing high-resolution two-dimensional (2-D) images that improve detection performance [1,2,3,4]. Compared with traditional optical remote sensing, airborne SAR can operate during the day, at night, and in adverse weather conditions, making it a flexible and reliable monitoring technology [5,6,7]. Recent advances in unmanned aerial vehicle (UAV) technology have led to the development of micro-SAR devices that can be mounted on drones, offering ease of operation and deployment and low cost, particularly on lightweight drones. UAV SAR can be used in hazardous conditions, such as during natural disasters or fires, to reduce the risks for rescue personnel [8,9,10,11,12].

A stable flight status is crucial for all kinds of airborne SAR systems to synthesize the Doppler bandwidth effectively. However, motion-induced error can compromise both resolution and overall system performance [13,14,15,16]. In practice, flight paths are often nonlinear due to atmospheric airflow, and the resulting motion error significantly affects the Doppler characteristics of the echo data, including the Doppler centroid and the Doppler chirp rate, which determine the azimuth position and the depth of field, respectively. These effects manifest as residual range cell migration (RCM) and nonlinear phase error (NPE) of the target [17,18,19,20]. As a result, motion error cannot be neglected in airborne SAR image processing.

For stable aircraft, such as transport planes, the impact of motion error on SAR performance is generally negligible [21,22,23]. For drones, however, atmospheric turbulence causes significantly larger motion-induced errors than for manned aircraft [24]. Moreover, due to payload limitations, drone SARs typically operate at higher frequencies, whose shorter wavelengths reduce the size and weight of the RF devices but make the echo phase more sensitive to motion error [25]. In addition, compared with traditional airborne SAR systems, drone SAR systems have a shorter detection range and therefore require a larger antenna pitch angle to cover the ground scene. This results in significant spatial variability of the motion error, which can greatly degrade imaging quality, particularly in high-resolution applications.
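The wavelength dependence can be illustrated with the standard two-way relation φ = 4πΔR/λ between a line-of-sight range deviation ΔR and the resulting phase error. The following Python sketch is illustrative only. The carrier frequencies and the 1 cm deviation are assumed example values, not parameters of any particular system.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def two_way_phase_error(delta_r_m: float, freq_hz: float) -> float:
    """Two-way (monostatic) phase error in radians for a line-of-sight range deviation."""
    wavelength = C / freq_hz
    return 4.0 * math.pi * delta_r_m / wavelength


# Same assumed 1 cm line-of-sight deviation at three example carrier frequencies.
for label, freq in [("10 GHz", 10e9), ("15 GHz", 15e9), ("35 GHz", 35e9)]:
    phi = two_way_phase_error(0.01, freq)
    print(f"{label}: {phi:.2f} rad ({math.degrees(phi):.0f} deg)")
```

Because the phase error scales linearly with the carrier frequency, the same trajectory deviation produces 3.5 times more phase error at 35 GHz than at 10 GHz.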

With the continuous improvement of radar resolution, the demand for accurate SAR motion compensation has grown [26]. Currently, the measurement accuracy of inertial navigation systems (INS) or global positioning systems (GPS) [27] often cannot meet the requirements of high-resolution motion compensation in UAV SAR systems. This limitation restricts the use of motion compensation algorithms based on navigation information. Consequently, motion compensation is a critical factor in obtaining high-resolution images with UAV SAR systems. Previous research has primarily focused on error properties and estimation methods, such as the phase gradient autofocus (PGA) technique for spotlight mode [28] and motion compensation methods for stripmap mode [29]. However, these approaches have limitations in scenarios with extremely small angles of incidence. Therefore, autofocus approaches are recommended for implementing motion compensation in drone SAR systems, particularly in close-range scenarios with extremely small angles of incidence, and further research is needed on high-resolution imaging and motion compensation algorithms tailored to such scenarios.

Various studies have investigated the SAR autofocus problem, with phase gradient autofocus (PGA) being one of the most well-known techniques [30,31]. Qualification PGA (QPGA) reduces the required number of salient points in two dimensions, and increasing the number of salient points can improve the precision of phase gradient estimation and the robustness of the algorithm [32]. Different weighting strategies have been proposed to mitigate the influence of features with a low signal-to-clutter ratio (SCR) in phase gradient estimation and to enhance the contribution of high-quality features. However, most existing algorithms were designed to compensate for spatially invariant motion error and do not address spatial variation within the scene, which may limit their practical performance. At large elevation angles, the observation distance to the target is short, i.e., the slant range is smaller than at small elevation angles, so the impact of motion error on the echo signals is also significant. Therefore, a more precise and accurate motion compensation method is urgently needed. Moreover, because of spatial variation, the change in slant range along the range dimension is smaller at large elevation angles than at small elevation angles, while the imaged targets are located in different range cells of the scene; this leads to increased range spatial variation in the echo. As a consequence, existing algorithms that consider only low-order spatial variation fail to address this issue adequately, and a more accurate higher-order autofocus algorithm is necessary.

This study proposes a motion compensation (MOCO) algorithm for UAV SAR systems that addresses practical issues, including range-variant motion error and PGA failure. It first establishes a geometric model of the system and analyzes the potential issues related to motion error and insufficient scattering points. It then proposes a MOCO algorithm based on an improved phase-weighted estimation PGA algorithm, which estimates both spatially invariant and spatially variant phase errors and performs full-aperture phase stitching. Finally, a combined autofocus method is proposed to address the issue of insufficient strong scattering points in the scene: it selects the autofocus method according to the proportion of strong scattering points and sets a threshold to improve the spatially variant performance of MOCO. Experimental results show that the proposed method has wider applicability and higher imaging precision than traditional methods.

In summary, the main contribution of this work is a MOCO strategy designed for high-resolution UAV SAR imaging at extremely small incident angles. Its core is a statistical threshold selection, which yields a combined autofocus method applicable to arbitrary imaging scenes. By selecting the appropriate processing method, the proposed approach overcomes the poor performance of traditional methods when the imaging scene contains few strong scattering points. Meanwhile, to ensure MOCO accuracy when the incident angle is extremely small, an improved PGA algorithm that accounts for high-order phase errors is utilized, which further enhances the robustness and effectiveness of the proposed approach.

The rest of this paper is organized as follows. Section 2 analyzes the airborne SAR motion error both geometrically and mathematically. In Section 3, an improved combined MOCO approach based on spatial variation, consisting of three parts (i.e., a statistical threshold selection, an improved phase-weighted estimation PGA algorithm, and an auxiliary algorithm), is presented in detail. Section 4 provides the experimental results, including simulation and real data processing, and Section 5 presents the conclusions summarizing the main findings.

2. Modeling

2.1. Geometric Model

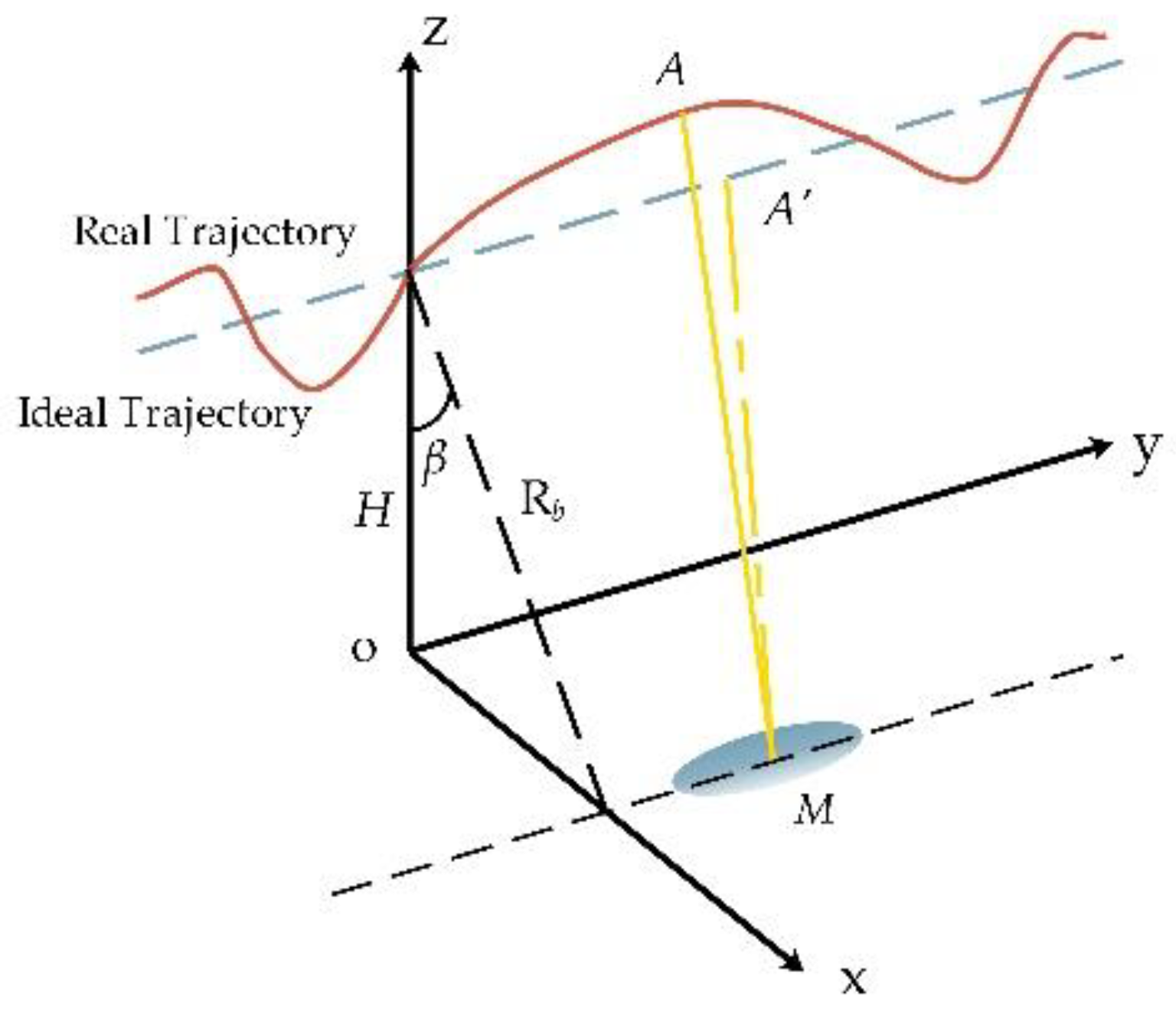

The geometric model of airborne SAR imaging with motion error caused by atmospheric turbulence is shown in Figure 1.

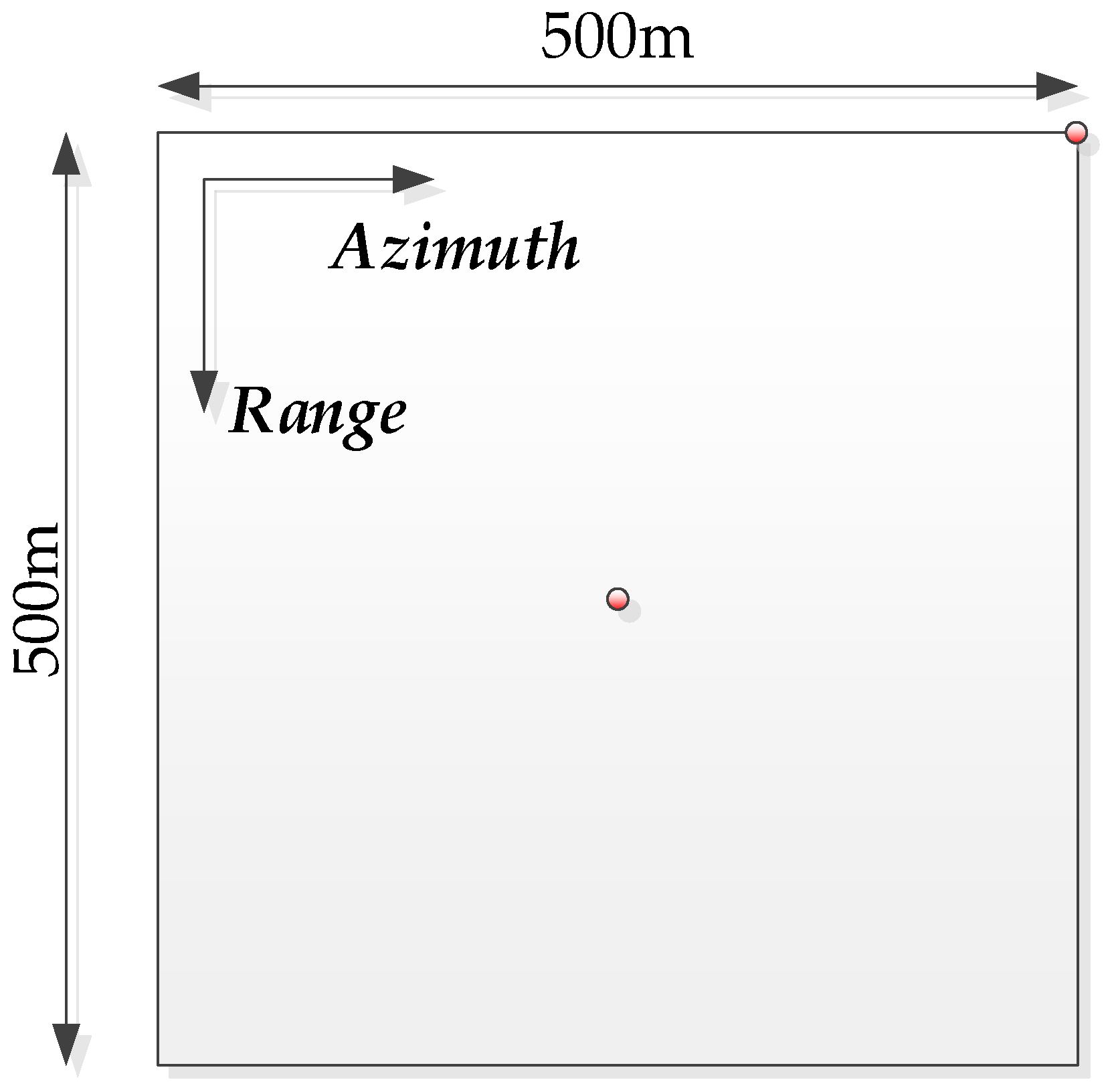

In a right-handed spatial coordinate system, the origin is set at the ground projection of the synthetic aperture center. The platform is assumed to fly along the azimuth (along-track) axis at a constant velocity and altitude, and the area of interest on the ground lies in the right field of view of the aircraft. For an arbitrary point target in the imaging scene, the closest range is its slant range at closest approach, and the corresponding incident angle is the angle between this line of sight and the vertical.

It is almost impossible for an aircraft to maintain a constant attitude. Unstable motion introduces uncertain errors that cause the real flight path to deviate from the expected path, shown in Figure 1 by the red solid line and the blue dashed line, respectively. For an arbitrary platform position on the real path and the corresponding position on the expected path, the instantaneous positions can be expressed in the spatial coordinates (x, y, z) as functions of the slow time and the imaging velocity, and the location of the point target is defined in the same coordinate system. Thus, the instantaneous range from the real platform position to the target can be expressed as follows:

where $t$ is the azimuth slow time and the remaining quantities follow from the platform and target coordinates defined above. Meanwhile, Equation (1) can be expanded by the Taylor series as

Thus, the instantaneous range, expressed in terms of the azimuth and range dimensions, is decomposed into three components, which are explained in detail as follows:

The first component denotes the slant range of the target at the aperture center and determines the range position of the target in the SAR image;

The second component mainly depends on the motion status along the azimuth (flight) axis and varies with the azimuth position of the target. This term determines the azimuthal position of the target in the SAR image. In practice, motion error along the azimuth axis may distort the linear Doppler centroid and further shift the result. In addition to phase error, the irregular interval between chirps caused by motion error along the azimuth axis results in nonuniform azimuth sampling;

The third component, affected by the cross-track (horizontal) and vertical motion, is called the cross-path error and is the main component that must be compensated during image processing. This is because the velocity components along these two axes change as the platform approaches the target, thereby degrading the Doppler parameters, such as the Doppler centroid and the Doppler chirp rate, so that the result is shifted and defocused. Moreover, this component is spatially variant, leading to extra range cell migration (RCM) and nonlinear phase error (NPE). As a result, it has a great impact on processing.

By means of Equation (2), the range history error between the real and the expected trajectories can be expressed as

where the position error along the azimuth (flight) axis at any moment appears as a separate term.

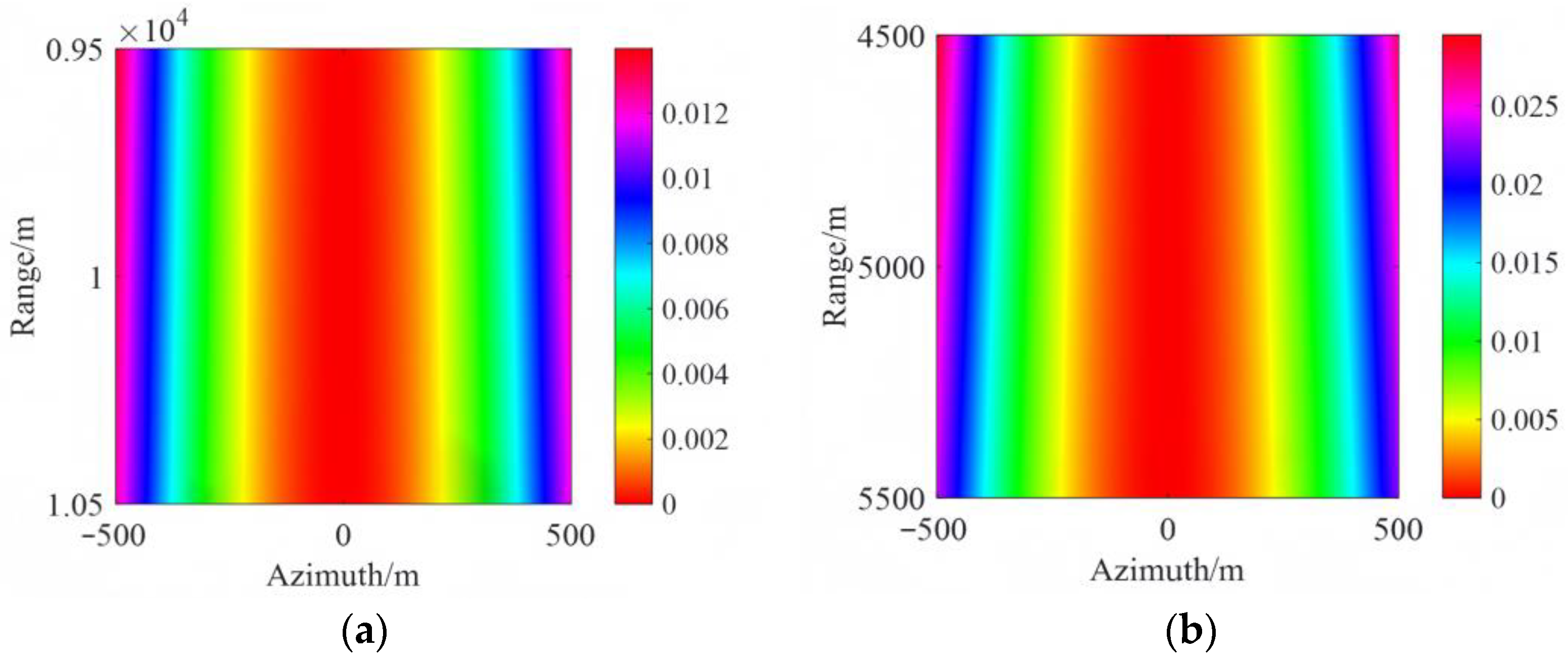

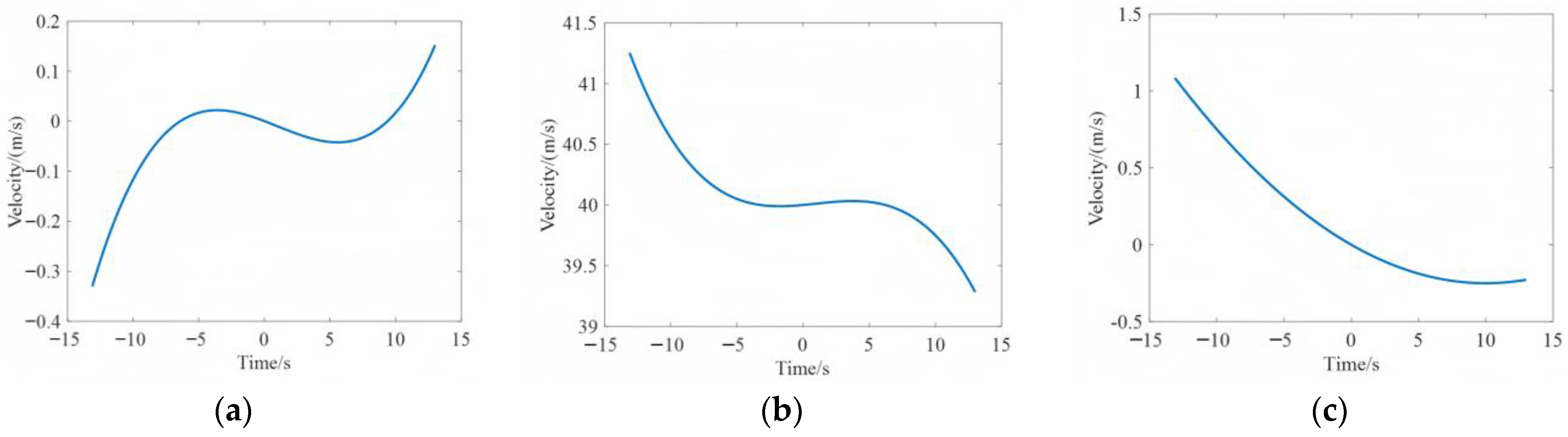

Figure 2 shows, for a typical UAV SAR application, the range error in the range and azimuth directions caused by the azimuth-axis position error for different reference ranges, where the velocity deviation along the azimuth axis varies from −1 m/s to 1 m/s over the entire aperture. This range error is clearly too small to cause defocusing, even in high-resolution cases, and can therefore be ignored.

Hence, Equation (3) can be simplified as

However, the simplified range error is determined not only by the cross-track and vertical motion errors but also by the incident angle of the target. This implies that the range error in Equation (4) is cross-coupled and spatially variant.
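To see why the simplified range error depends on the incident angle, consider a commonly used first-order approximation for flat ground. A cross-track platform displacement Δy toward the illuminated side and a vertical displacement Δz change the range to a target at incident angle θ by ΔR ≈ −Δy sin θ + Δz cos θ. This relation and its sign conventions are assumptions for illustration and may differ from the exact form of Equation (4). The Python sketch compares the approximation with the exact geometric range change. The altitude and deviations are assumed values.

```python
import math


def range_error_exact(dy: float, dz: float, y_p: float, h: float) -> float:
    """Exact range change to a ground target at cross-track offset y_p when the
    platform (nominal altitude h) is displaced by dy toward the target side and dz upward."""
    r_nominal = math.hypot(y_p, h)
    r_real = math.hypot(y_p - dy, h + dz)
    return r_real - r_nominal


def range_error_linear(dy: float, dz: float, theta: float) -> float:
    """First-order approximation dR = -dy*sin(theta) + dz*cos(theta) (assumed model)."""
    return -dy * math.sin(theta) + dz * math.cos(theta)


h = 300.0          # assumed flight altitude, m
dy, dz = 0.2, 0.1  # assumed cross-track and vertical deviations at one instant, m
for y_p in (50.0, 200.0, 600.0):
    theta = math.atan2(y_p, h)  # incident angle measured from the vertical
    exact = range_error_exact(dy, dz, y_p, h)
    approx = range_error_linear(dy, dz, theta)
    print(f"theta={math.degrees(theta):5.1f} deg  exact={exact:+.4f} m  approx={approx:+.4f} m")
```

The same platform deviation produces a different range error for each target. This illustrates the cross-coupled, spatially variant behavior described above.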

2.2. Spatially Variant Error Analysis

Before developing motion compensation algorithms, it is essential to analyze the spatially variant error that results from motion error. In UAV applications, this error is more pronounced than in traditional airborne SAR imaging. The disparity can be attributed to the large antenna pitch angle required to cover the ground scene, given the short detection range imposed by the limited detection power.

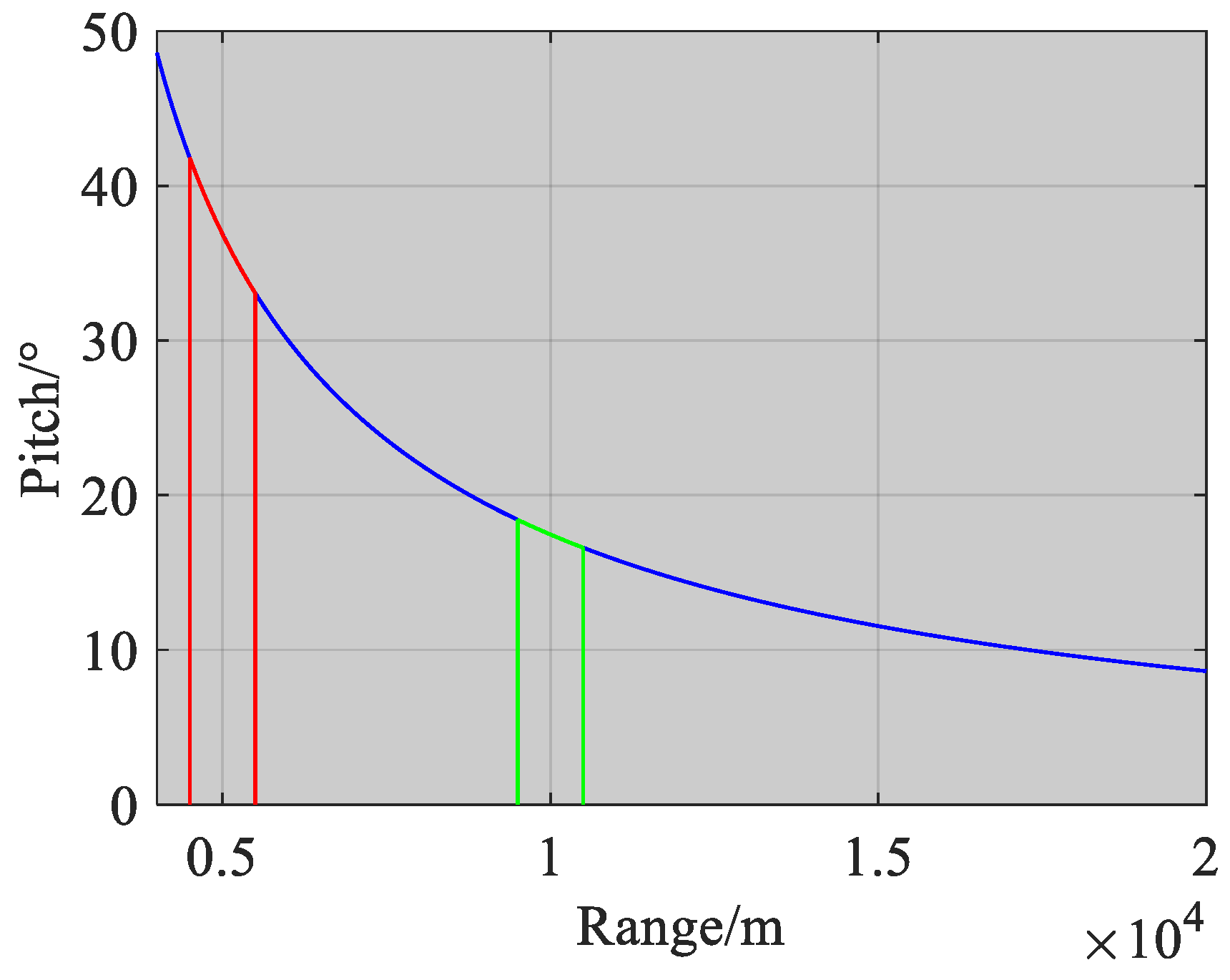

Figure 3 shows the relationship between antenna pitch and slant range. Generally, when the phase error resulting from spatially variant error exceeds a given tolerance, it has a noticeable impact on the imaging process; we refer to such ranges as the “near” detection area. Conversely, when the phase error within the scene is below this tolerance, its impact on imaging can be disregarded, and such ranges are termed the “far” detection area.

In Figure 3, the red and green labels indicate the near and far detection areas, respectively. Although the red and green detection areas have the same extent, the rate of change in the red (near) area is evidently much greater than that in the green (far) area under a large elevation angle. This indicates that the phase error induced by spatially variant error in the far detection area can be disregarded.

To simplify the analysis, let the reference range vector denote the vector from the platform to the center of the area of interest at the antenna phase center (APC), and let the target range vector denote the vector from the platform to the target, which can be expressed as

where the offset vector is the range vector from the target to the scene center, i.e., the spatially variant part of the target range vector relative to the center.

To further analyze the spatial variation induced by motion error, the two range terms in Equation (6) can be expanded by the Taylor series as

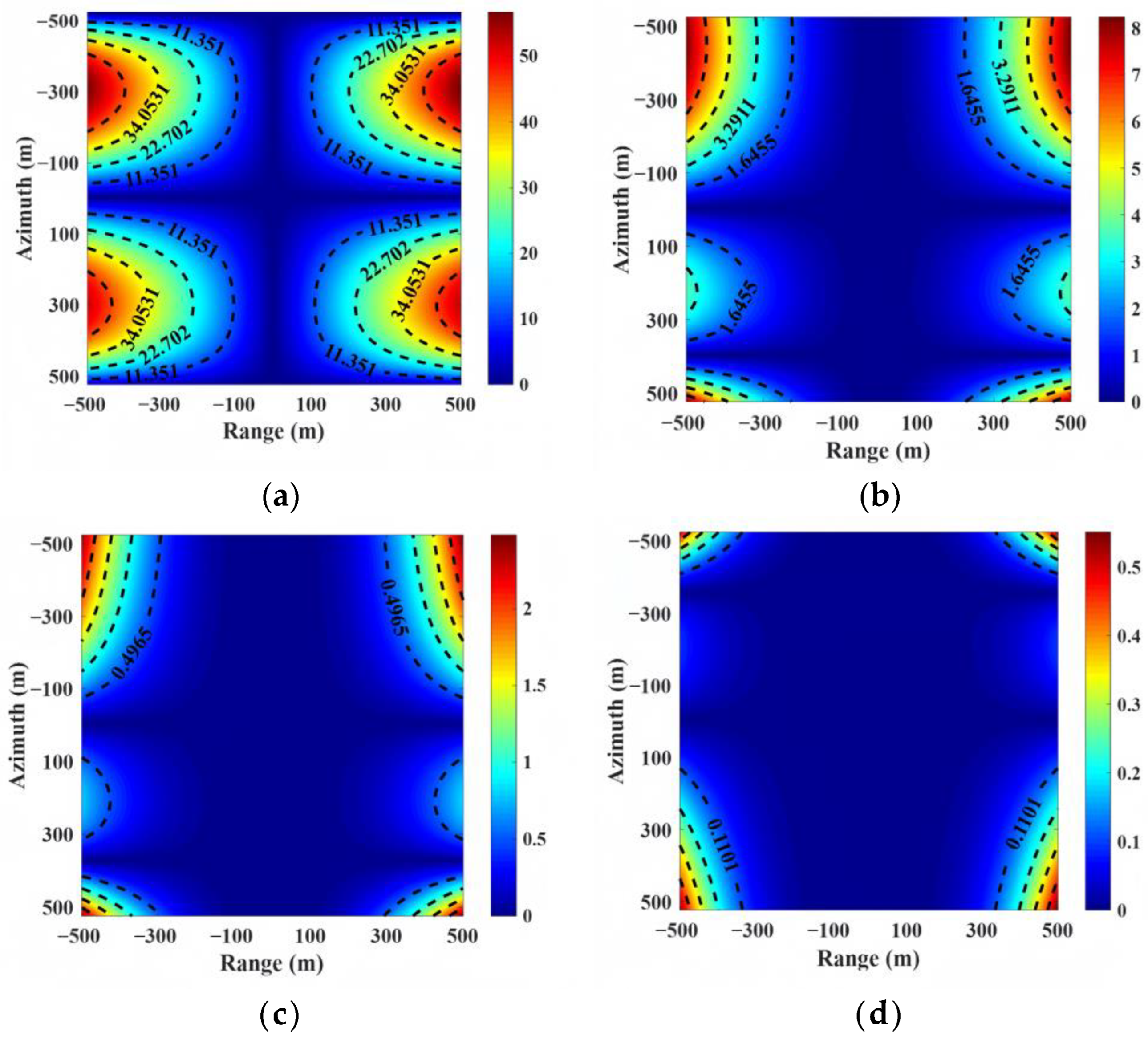

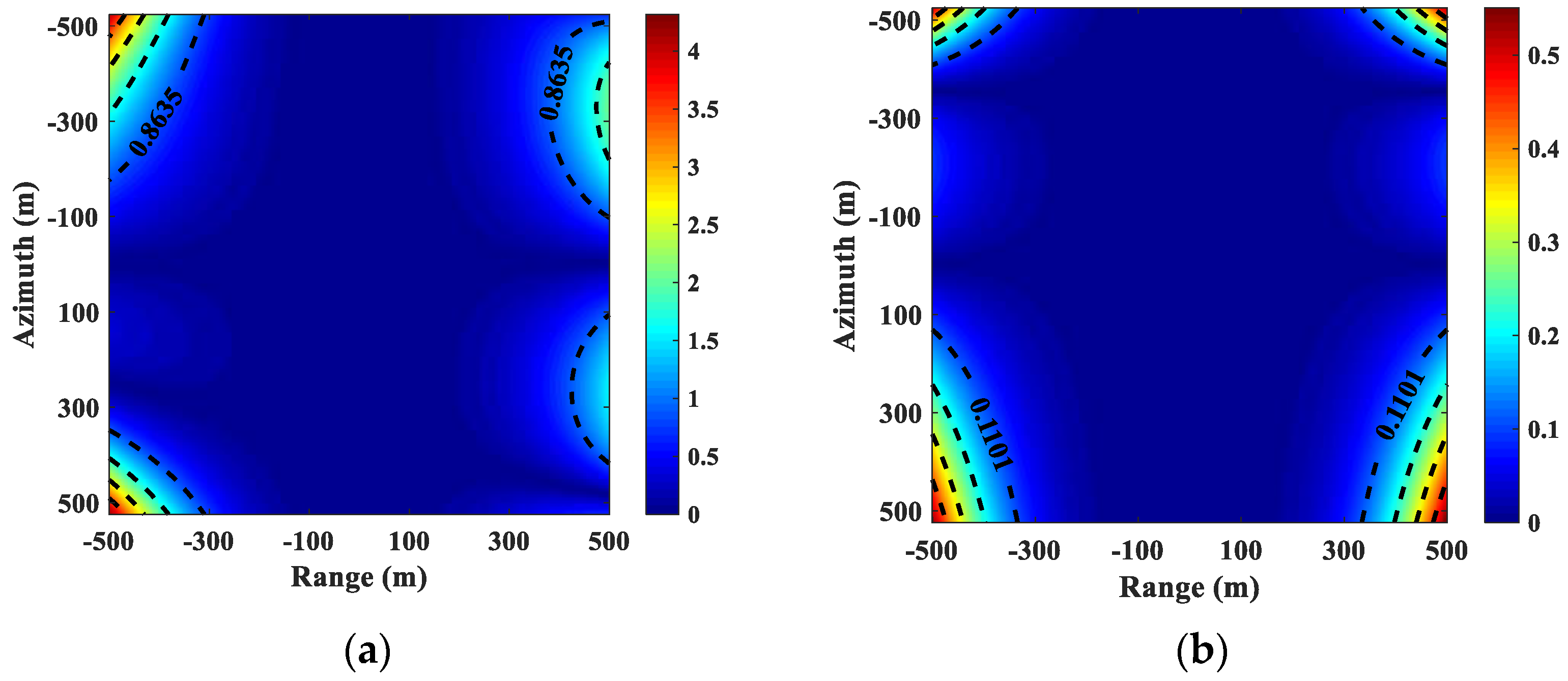

Figure 4a,b display the spatially variant error in the azimuth and range directions for different reference ranges based on Equation (6), where the velocity deviations along the cross-track and vertical axes vary from −1 m/s to 1 m/s over the whole aperture. The spatially variant error clearly increases as the reference range decreases.
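The trend in Figure 4 can be reproduced qualitatively with the first-order cross-track model ΔR ≈ −Δy sin θ + Δz cos θ. This model is an illustrative assumption, not Equation (6) itself. The sketch evaluates, at one slow-time instant, the two-way phase difference between the scene edges and the scene center for a fixed ground swath. It does this once near the platform and once far from it. The altitude, swath width, wavelength, and deviations are all assumed values.

```python
import math


def range_error_linear(dy: float, dz: float, theta: float) -> float:
    """First-order cross-track range error (assumed model)."""
    return -dy * math.sin(theta) + dz * math.cos(theta)


def spatial_variation_phase(y_center, half_width, h, dy, dz, wavelength):
    """Largest two-way phase difference (rad) between the range error at the swath
    edges (y_center +/- half_width) and at the swath center."""
    theta_c = math.atan2(y_center, h)
    dr_center = range_error_linear(dy, dz, theta_c)
    worst = 0.0
    for y in (y_center - half_width, y_center + half_width):
        dr_edge = range_error_linear(dy, dz, math.atan2(y, h))
        worst = max(worst, abs(dr_edge - dr_center))
    return 4.0 * math.pi * worst / wavelength


h, half_width = 300.0, 50.0  # assumed altitude and half swath, m
dy, dz = 0.2, 0.1            # assumed deviations at one instant, m
wavelength = 0.02            # assumed wavelength (about 15 GHz), m
near = spatial_variation_phase(100.0, half_width, h, dy, dz, wavelength)
far = spatial_variation_phase(800.0, half_width, h, dy, dz, wavelength)
print(f"near swath: {near:.2f} rad, far swath: {far:.2f} rad")
```

With these assumed numbers, the near swath shows a phase variation several times larger than the far swath. This is consistent with the statement that the spatially variant error grows as the reference range decreases.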

The effects of spatial variation caused by motion error in UAV SAR are more pronounced than in conventional airborne SAR platforms, particularly in high-resolution applications, and thus cannot be overlooked.

2.3. Discussion of PGA Performance

Autofocus techniques are essential for improving the depth of field in practical airborne SAR processing. Because of system noise, the NPE caused by motion error cannot be fully compensated using motion sensors. Additionally, high-frequency errors associated with fine-scale variations in the motion trajectory cannot be accurately measured by INS or GPS. Among nonparametric autofocus methods, PGA is widely used in airborne SAR systems owing to its excellent performance. The critical step in PGA is to obtain the NPE from the estimated phase error gradient.

For further analysis, assume that the data of the $n$-th range cell after windowing and shifting are given, together with their inverse Fourier transform (IFT) and the scatterer-dependent phase function. The linear unbiased minimum variance (LUMV) estimate of the phase error gradient is then given by [27]:

where the estimator is a summation over range cells involving the complex conjugate of the range-cell data and the first-order derivatives of the data and of the associated phase functions. Based on Equation (7), the NPE can approach its true value through iterative correction. However, there are two weaknesses:
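For reference, the commonly used form of the LUMV phase-gradient kernel is φ̇(m) = Σ_n Im{g_n*(m) ġ_n(m)} / Σ_n |g_n(m)|², where g_n(m) is the n-th shifted and windowed range cell in the azimuth phase-history domain. Equation (7) may differ in detail, for example in how the scatterer-dependent phase is handled. The NumPy sketch therefore illustrates only the generic estimator. It applies trapezoidal integration and then removes the linear phase term, which only shifts the image. The synthetic data are assumed and noise-free.

```python
import numpy as np


def lumv_phase_gradient(g: np.ndarray) -> np.ndarray:
    """Phase-error gradient per azimuth sample from g with shape
    (num_range_cells, num_azimuth): shifted, windowed cells after azimuth IFT."""
    dg = np.gradient(g, axis=1)  # first-order derivative along slow time
    num = np.sum(np.imag(np.conj(g) * dg), axis=0)
    den = np.sum(np.abs(g) ** 2, axis=0)
    return num / den


def detrend_linear(phi: np.ndarray) -> np.ndarray:
    """Remove constant and linear phase terms (they only shift the image)."""
    m = np.arange(phi.size)
    return phi - np.polyval(np.polyfit(m, phi, 1), m)


def integrate_gradient(grad: np.ndarray) -> np.ndarray:
    """Trapezoidal integration of the gradient, followed by linear detrending."""
    phi = np.concatenate(([0.0], np.cumsum(0.5 * (grad[1:] + grad[:-1]))))
    return detrend_linear(phi)


# Noise-free example: 8 range cells share one phase error but have
# different complex reflectivities (assumed synthetic data).
rng = np.random.default_rng(0)
m = np.arange(256)
true_phi = 3.0 * ((m - 128) / 128) ** 2 + 0.5 * np.sin(2 * np.pi * m / 64)
amps = rng.normal(size=8) + 1j * rng.normal(size=8)
g = amps[:, None] * np.exp(1j * true_phi)[None, :]
est_phi = integrate_gradient(lumv_phase_gradient(g))
print("max error (rad):", np.max(np.abs(est_phi - detrend_linear(true_phi))))
```

Because the numerator and denominator are both summed over range cells, cells with stronger returns automatically receive more weight. The per-cell reflectivities cancel in this noise-free example.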

PGA is a discrete point-type autofocus algorithm that averages over a number of samples (i.e., range cells) and therefore neglects range-dependent error during processing. However, according to Equation (4) and the analysis in Section 2.2, the range-variant error becomes more prominent with a larger antenna pitch than with a smaller pitch. Therefore, the traditional PGA may not be suitable for such scenarios.

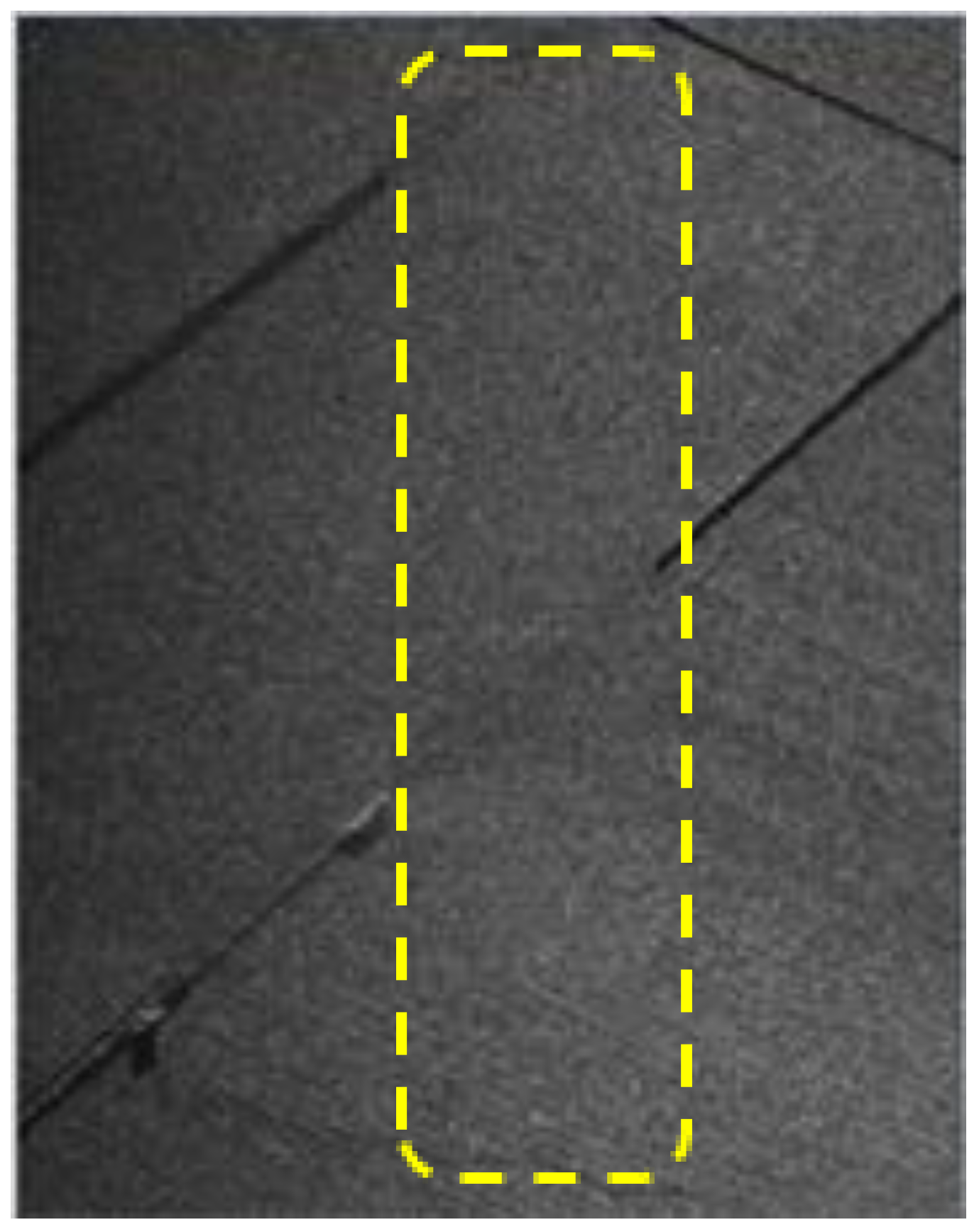

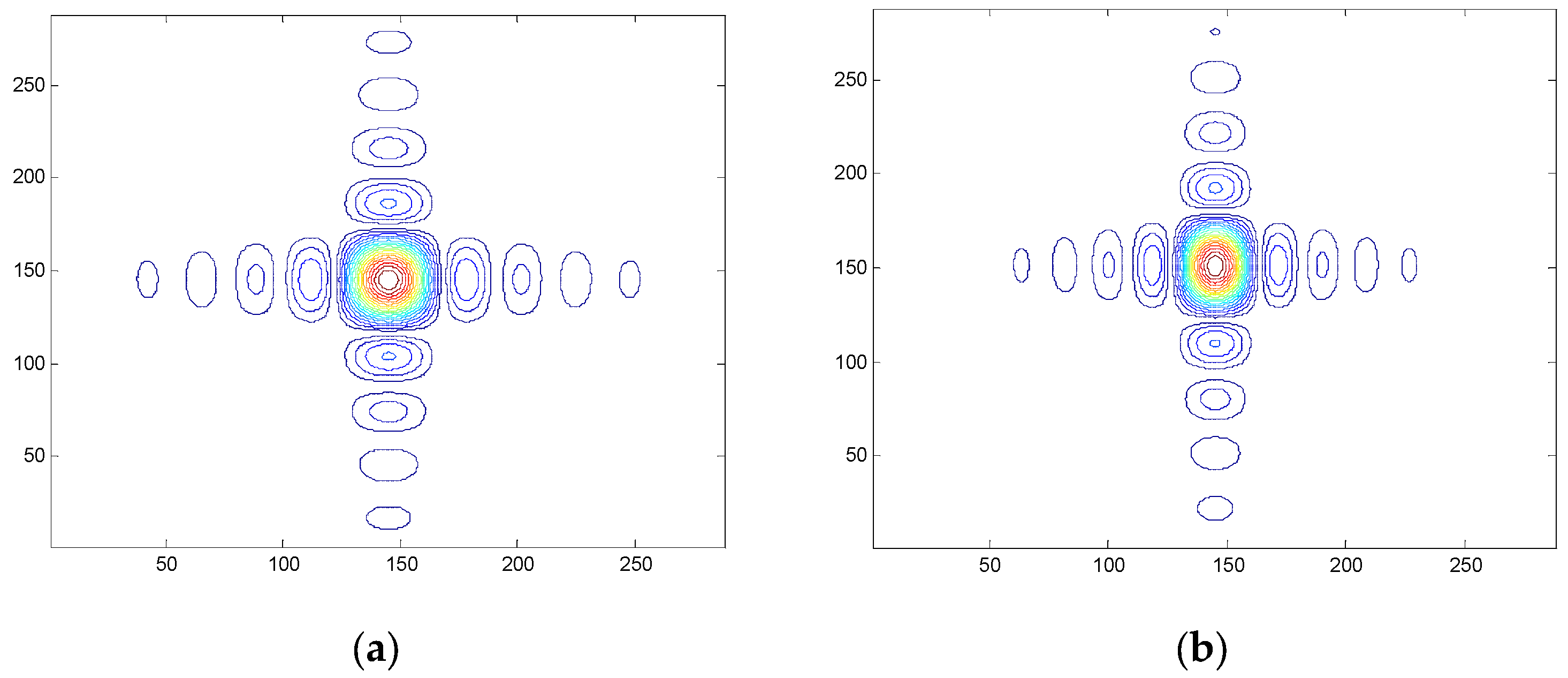

Moreover, classic PGA relies on selecting strong range cells as samples to ensure accuracy, which implies that its ability to focus an image depends on the presence of dominant reflectors. This approach therefore lacks robustness, especially for featureless areas. As depicted in Figure 5, the area within the yellow dashed box represents a scene with few strong scattering points. In most drone SAR applications, it is impossible to obtain enough features from the small area of interest. Therefore, the performance of PGA should be improved.

3. Approach

In this section, we propose a combined autofocus approach that leverages statistical techniques to address the limitations of existing autofocus methods. Our proposed approach utilizes a brightness-counting method to obtain clearer and higher-quality images. To further enhance the stability and quality of the algorithm, we introduce a statistical threshold.

3.1. Statistical Threshold Selection

As previously discussed, the accurate selection of strong scattering points is crucial for the successful implementation of PGA. However, in practice, weak or non-existent scattering points are often selected, which may result in the inability to properly determine suitable scattering points and reduce image accuracy. Thus, it is imperative to improve the performance of selecting strong scattering points to enhance the quality of images.

The brightness of a SAR image is typically represented by the intensity of its scattering points after mean quantization. To quantify the intensity of each point accurately, we define a statistical threshold based on the intensity histogram. Specifically, the image brightness is quantized into gray levels ranging from 0 to a maximum value h, which is determined by the specific situation. We then analyze the intensity distribution of the points in the image and derive the probability density function of this distribution.

To identify the differences in probability density functions of target strength distribution for different scenarios, statistical analysis was performed on the target strength distribution of various scenes.

Assume that a SAR image has $m$ azimuth samples and $n$ range samples. The intensity value of each pixel is first computed and quantized to its mean value. In this paper, the maximum gray level $h$ is set to 255, and the number of pixels whose intensity falls in the $k$-th interval is denoted as $N_k$. Therefore:

$$\sum_{k=0}^{h} N_k = m\,n$$

Then, the probability density function $p(k)$ of the pixel intensity distribution is:

$$p(k)=\frac{N_k}{m\,n},\qquad k=0,1,\dots,h$$

Additionally, the corresponding threshold statistic of the image can be calculated from the obtained probability density function, i.e.:

$$\rho=\sum_{k=0}^{k_0} p(k)$$

where $k_0$ can be regarded as the intensity demarcation level of the image, which is chosen according to the specific circumstances of the SAR image analysis. According to Equation (10), if the proportion $\rho$ is smaller than a preset value $p$, the image contains an abundance of strong scatterers, and compensation is performed with the improved phase-weighted estimation PGA algorithm. Conversely, if the proportion is larger than or equal to $p$, compensation is performed with the auxiliary algorithm.
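A minimal sketch of the selection rule follows. It rests on several stated assumptions. The magnitude is quantized to h + 1 = 256 gray levels by normalizing to the image maximum; the mean-based quantization in the text may differ. The statistic of Equation (10) is read as the cumulative proportion of pixels at or below k_0. The values of k_0 and p, and the helper names `intensity_pdf` and `select_autofocus`, are hypothetical.

```python
import numpy as np


def intensity_pdf(image: np.ndarray, h: int = 255) -> np.ndarray:
    """Histogram-based PDF p(k), k = 0..h, of the quantized pixel intensity."""
    mag = np.abs(image).astype(float)
    # Assumed quantization: scale so that the brightest pixel maps to level h.
    levels = np.clip(np.round(mag / mag.max() * h), 0, h).astype(int)
    counts = np.bincount(levels.ravel(), minlength=h + 1)  # N_k
    return counts / levels.size  # p(k) = N_k / (m * n)


def select_autofocus(image: np.ndarray, k0: int, p: float, h: int = 255) -> str:
    """Return which branch of the combined autofocus to use (hypothetical helper)."""
    proportion = intensity_pdf(image, h)[: k0 + 1].sum()  # pixels at or below k0
    return "improved_pga" if proportion < p else "auxiliary"


rng = np.random.default_rng(1)
textured = rng.uniform(0.0, 1.0, size=(64, 64))  # many bright pixels
featureless = np.full((64, 64), 0.01)
featureless[32, 32] = 1.0                          # a single strong point
print(select_autofocus(textured, k0=50, p=0.9))
print(select_autofocus(featureless, k0=50, p=0.9))
```

In practice, k_0 and p would have to be tuned to the sensor and scene type, as the text indicates.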

Unlike the traditional algorithm, the imaging method with the threshold selection addresses the challenge of poor performance of the traditional PGA algorithm, which heavily relies on the selection of strong scatterers in the image. This threshold-based imaging method offers improved performance and can be applied to a wider range of scenarios.

In this method, the imaging process is enhanced by considering the count of all scattering points in the scene and analyzing the brightness distribution. From this analysis, the proportion of strong scatterers in the image can be obtained. By comparing this proportion with the threshold value, different compensation strategies are selected to achieve high-resolution imaging. In practice, the method improves the imaging performance of the traditional autofocus algorithm, as verified in the real-data experiment in Section 4.

3.2. Improved Phase-Weighted Estimation PGA

The analysis of motion error at large elevation angles shows that the phase error resulting from the spatial variation of the motion error cannot be ignored. The conventional PGA algorithm, which assumes that motion error does not vary spatially with range, is therefore unsuitable for imaging blind spots at large elevation angles. In this section, we propose an improved phase-weighted estimation PGA algorithm and a novel MOCO method based on it. To estimate the spatially variant error, range cells with high contrast against the background are selected.

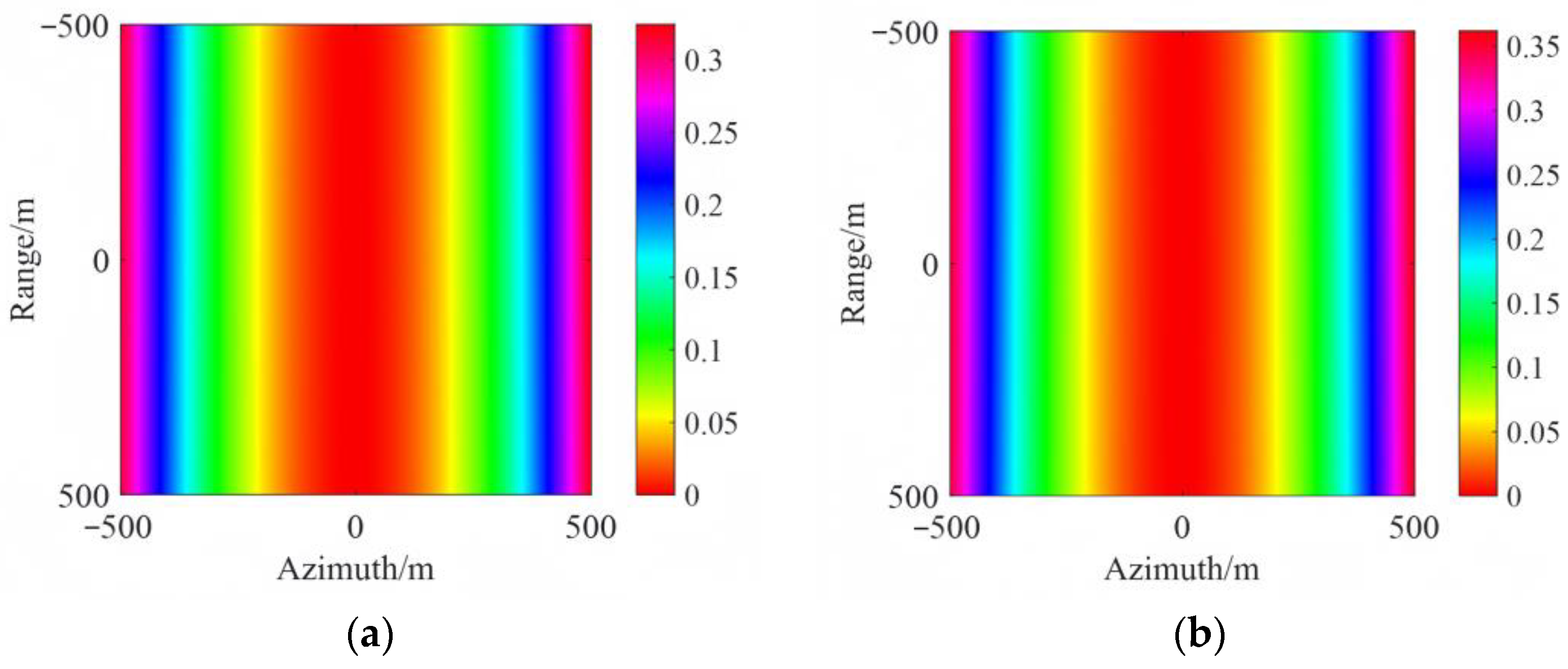

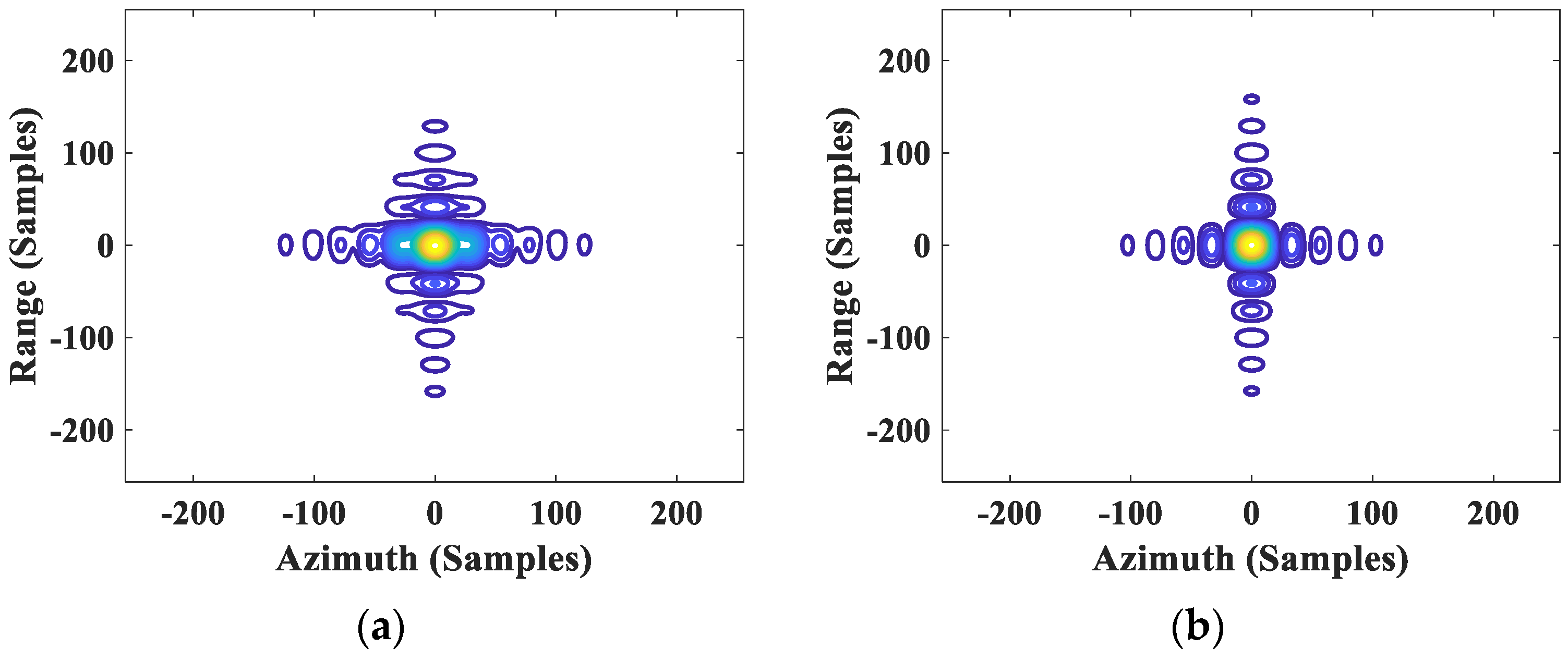

To acquire the phase error, a spatially variant range matrix is first constructed for least squares estimation. At large elevation angles, the impact of the higher-order terms of the range spatial variation must be analyzed. By substituting Equation (6) into Equation (4), we establish a relationship between motion error and range that includes spatial variation terms from the first to the fourth order. Using the simulation parameters listed in Table 1, Figure 6 illustrates the phase error caused by range spatial variation up to the fourth order; the contour values in Figure 6 are phase errors. In general, motion errors can be ignored when the resulting phase error is below the tolerance threshold. The simulation results show that the phase errors of the first- to third-order range spatial variation significantly exceed this threshold, whereas that of the fourth-order range spatial variation is considerably smaller. In other words, the phase errors from the first- to third-order range spatial variation cannot be neglected.

Consequently, the phase error can be modeled as a third-order polynomial with respect to range, i.e.:

$$\phi_e(t,\Delta r)=a_0(t)+a_1(t)\,\Delta r+a_2(t)\,\Delta r^{2}+a_3(t)\,\Delta r^{3}$$

where $t$ represents the azimuth slow time and $\Delta r$ represents the difference between the slant range of an arbitrary target in the scene and the slant range of the scene center; $a_0(t)$, $a_1(t)$, $a_2(t)$, and $a_3(t)$ represent the constant, first-order, second-order, and third-order coefficients of the phase error, respectively. Because of the motion error, the phase error function is spatially variant in range.

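Once the expression of the phase error is obtained, a weighted least squares estimate of the coefficients $a_0(t)$, $a_1(t)$, $a_2(t)$, and $a_3(t)$ can be formulated as:

$$\hat{\mathbf{A}}=\begin{bmatrix}\hat{\dot{\mathbf{a}}}_0\\ \hat{\dot{\mathbf{a}}}_1\\ \hat{\dot{\mathbf{a}}}_2\\ \hat{\dot{\mathbf{a}}}_3\end{bmatrix}=\left(\mathbf{R}^{\mathsf{T}}\mathbf{W}\mathbf{R}\right)^{-1}\mathbf{R}^{\mathsf{T}}\mathbf{W}\,\dot{\boldsymbol{\Phi}}$$

where $\hat{\dot{\mathbf{a}}}_0$, $\hat{\dot{\mathbf{a}}}_1$, $\hat{\dot{\mathbf{a}}}_2$, and $\hat{\dot{\mathbf{a}}}_3$ are the gradient estimates of $a_0(t)$, $a_1(t)$, $a_2(t)$, and $a_3(t)$, respectively, each a row vector over the $L$ azimuth samples ($L$ being the azimuth length of the sample), and $\mathbf{W}=\operatorname{diag}(w_1,\dots,w_K)$ denotes the contrast weighting matrix between target and background, where $w_k$ is the contrast within the $k$-th of the $K$ selected range cells. $\mathbf{R}$ denotes the matrix of the spatially variant range, which can be expressed as:

$$\mathbf{R}=\begin{bmatrix}1&\Delta r_1&\Delta r_1^{2}&\Delta r_1^{3}\\ \vdots&\vdots&\vdots&\vdots\\ 1&\Delta r_K&\Delta r_K^{2}&\Delta r_K^{3}\end{bmatrix}$$

where $\Delta r_k$ is the range offset of the $k$-th selected cell relative to the scene center. The phase gradient estimation matrix of the selected samples is expressed as:

$$\dot{\boldsymbol{\Phi}}=\begin{bmatrix}\dot{\boldsymbol{\phi}}_1\\ \vdots\\ \dot{\boldsymbol{\phi}}_K\end{bmatrix}$$

where $\dot{\boldsymbol{\phi}}_k$ is the phase gradient estimate of the $k$-th sample cell.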
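The weighted least-squares step can be sketched in NumPy as follows. Several elements are assumptions for illustration: the function name, the normalization of Δr (added only to improve numerical conditioning), and the synthetic data. In practice, the per-cell gradients would come from the PGA estimation stage.

```python
import numpy as np


def wls_poly_gradients(dr, grad, contrast, order=3):
    """Weighted least-squares fit of phase-gradient polynomial coefficients.

    dr:       (K,) range offsets of the selected cells from the scene center
    grad:     (K, L) phase-gradient estimates, one row per selected cell
    contrast: (K,) target-to-background contrast, used as the weights w_k
    Returns an (order + 1, L) array; row i is the gradient of a_i(t).
    """
    scale = np.max(np.abs(dr))  # normalize dr to keep R well conditioned
    r = np.vander(dr / scale, order + 1, increasing=True)  # columns 1, dr, dr^2, ...
    sw = np.sqrt(contrast)[:, None]  # sqrt(W) applied to both sides
    coef, *_ = np.linalg.lstsq(sw * r, sw * grad, rcond=None)
    return coef / scale ** np.arange(order + 1)[:, None]  # undo the normalization


# Synthetic check: gradients generated exactly from known coefficients.
rng = np.random.default_rng(2)
K, L = 12, 50
dr = np.linspace(-100.0, 100.0, K)
true = rng.normal(size=(4, L)) * np.array([1.0, 1e-2, 1e-4, 1e-6])[:, None]
grad = np.vander(dr, 4, increasing=True) @ true
contrast = rng.uniform(1.0, 10.0, size=K)
est = wls_poly_gradients(dr, grad, contrast)
print("max abs error:", np.max(np.abs(est - true)))
```

Scaling each row of R and of the gradient matrix by the square root of its weight turns the weighted problem into an ordinary least-squares problem. This gives the same solution as the closed form with (RᵀWR)⁻¹ but avoids forming that inverse explicitly.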

The phase gradient estimation above assumes that the phase error is spatially invariant within each range sub-aperture and estimates the phase gradient in each sub-aperture with high precision through maximum likelihood estimation (MLE). The polynomial coefficients are then estimated by least squares, which yields the range spatially variant phase error. Based on the improved PGA algorithm, the corresponding MOCO method for high-resolution SAR can achieve both phase and envelope compensation while accurately compensating for spatially variant error.

An overlapped sub-aperture strategy is recommended in real data processing to enhance the accuracy and robustness of the improved phase-weighted estimation PGA algorithm. With this strategy, the improved PGA algorithm can achieve highly accurate phase error estimation with high operational efficiency and robustness.
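One simple way to realize full-aperture phase stitching with overlapped sub-apertures is sketched here. It assumes each sub-aperture estimate is known only up to a constant and a linear phase term, which PGA cannot observe. The sketch fits that offset in the overlap with the already-stitched result and averages the overlapping samples. The function name and this particular alignment rule are illustrative assumptions, not necessarily the procedure used in this paper.

```python
import numpy as np


def stitch_subapertures(segments, starts, total_len):
    """Stitch sub-aperture phase estimates into one full-aperture phase.

    segments:  list of 1-D arrays, each known up to a constant + linear term
    starts:    start index of each segment; consecutive segments must overlap
               by at least two samples
    total_len: length of the full aperture
    """
    full = np.full(total_len, np.nan)
    full[starts[0]:starts[0] + len(segments[0])] = segments[0]
    for seg, s in zip(segments[1:], starts[1:]):
        idx = np.arange(s, s + len(seg))
        current = full[idx]
        overlap = ~np.isnan(current)
        # Fit the constant + linear offset between the stitched result and seg.
        coef = np.polyfit(idx[overlap], current[overlap] - seg[overlap], 1)
        aligned = seg + np.polyval(coef, idx)
        full[idx] = np.where(overlap, 0.5 * (current + aligned), aligned)
    return full


# Synthetic check: 50 % overlapped sub-apertures with random linear offsets.
rng = np.random.default_rng(3)
n = np.arange(300)
true_phi = 2.0 * ((n - 150) / 150) ** 2 + 0.3 * np.sin(2 * np.pi * n / 80)
starts = [0, 50, 100, 150, 200]
segments = [true_phi[s:s + 100] + rng.normal() + 1e-2 * rng.normal() * np.arange(100)
            for s in starts]
stitched = stitch_subapertures(segments, starts, 300)
residual = stitched - true_phi
trend = np.polyval(np.polyfit(n, residual, 1), n)
print("max deviation after linear detrend:", np.max(np.abs(residual - trend)))
```

The stitched phase inherits the constant and linear offset of the first sub-aperture. This is harmless, because those terms only shift the image.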

3.3. Auxiliary Algorithm

In this subsection, an auxiliary algorithm based on the quadratic phase error model [33,34] is designed to address the failure of the PGA algorithm when too few features are available for accurate phase error estimation. This auxiliary algorithm can effectively compensate for this deficiency with high estimation accuracy.

Unlike the PGA algorithm, the auxiliary algorithm is independent of the number of features. It is based on a parametric model and can effectively estimate the Doppler modulation frequency while accurately compensating for quadratic phase error. Moreover, it yields better focusing of scattering points when the motion error is small. The auxiliary algorithm achieves high-quality results with low computational complexity, as it requires only operations such as FFT, IFFT, correlation, and complex multiplication [35,36,37]. Additionally, the algorithm does not require multiple iterations and adapts easily to various imaging scenarios. The proposed method is also robust in estimating the Doppler rate parameter.
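The text does not name the parametric estimator. As an assumed stand-in, the sketch uses the classic map-drift idea, which relies only on FFTs, correlation, and complex multiplication. A residual quadratic phase π k t² shifts the spectra of the two aperture halves in opposite directions by ±kT/4, so the measured shift Δf between them gives k = 2Δf/T. The function name, the zero-padding factor, and the single-point-target test signal are illustrative assumptions.

```python
import numpy as np


def map_drift_rate(sig: np.ndarray, prf: float, pad: int = 8) -> float:
    """Estimate a residual Doppler-rate error k (Hz/s) from azimuth samples
    of the form exp(j*pi*k*t^2) (times a scene response), sampled at prf."""
    n = len(sig) // 2
    nfft = pad * n  # zero-padding refines the frequency grid
    s1 = np.abs(np.fft.fft(sig[:n], nfft))       # spectrum of first half-aperture
    s2 = np.abs(np.fft.fft(sig[n:2 * n], nfft))  # spectrum of second half-aperture
    # Circular cross-correlation of the two magnitude spectra via FFT.
    xc = np.fft.ifft(np.fft.fft(s2) * np.conj(np.fft.fft(s1))).real
    lag = int(np.argmax(xc))
    if lag > nfft // 2:
        lag -= nfft  # map to a signed shift
    delta_f = lag * prf / nfft  # spectral shift between the halves, Hz
    aperture_time = 2 * n / prf  # T, s
    return 2.0 * delta_f / aperture_time  # k = 2 * delta_f / T


prf, num = 1000.0, 1024
t = (np.arange(num) - num / 2) / prf
k_true = 40.0  # assumed residual Doppler-rate error, Hz/s
k_est = map_drift_rate(np.exp(1j * np.pi * k_true * t ** 2), prf)
print(f"true {k_true} Hz/s, estimated {k_est:.2f} Hz/s")
```

In practice, such an estimate would be averaged over many range bins and evaluated per sub-aperture. This produces the Doppler-rate history that the subsequent integration step converts into phase.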

Assuming that the residual phase error is spatially invariant, the algorithm extracts the instantaneous Doppler rates within each sub-aperture, which are then integrated twice to obtain the phase function of the complete aperture. The specific algorithm is described as follows:

Given the estimated spatially invariant phase error and the spatially invariant range migration, the phase function of the coarse compensation is:

The change in the azimuth modulation frequency (Doppler rate) can be obtained using the auxiliary algorithm. Since integrating the frequency modulation rate twice with respect to time yields the phase, the phase error can be obtained by integrating this variation twice. Note that the first integration yields the instantaneous frequency, whose constant part corresponds to a linear phase term; removing it helps prevent a linear offset of the image position. The phase error can then be expressed as:

where the expression depends on the number of points in the azimuth direction and on the frequency shift. Through this method, the spatially invariant phase error can be accurately compensated even if the inertial navigation system (INS) fails.
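The double-integration step can be sketched as follows. The sketch assumes the auxiliary algorithm delivers a Doppler-rate deviation Δk_a (Hz/s) for every azimuth sample at a known PRF. The first cumulative sum gives the instantaneous frequency, whose mean is removed to suppress the linear phase term (an image shift). The second cumulative sum gives the phase. Rectangle-rule integration and the function name are illustrative choices.

```python
import numpy as np


def phase_from_doppler_rate(delta_ka: np.ndarray, prf: float) -> np.ndarray:
    """Integrate a Doppler-rate deviation (Hz/s) twice to obtain a phase error (rad)."""
    dt = 1.0 / prf
    freq = np.cumsum(delta_ka) * dt             # first integration: frequency, Hz
    freq -= freq.mean()                         # drop constant frequency -> no image shift
    phase = 2.0 * np.pi * np.cumsum(freq) * dt  # second integration: phase, rad
    return phase - phase.mean()                 # a constant phase does not affect focus


prf = 1000.0
delta_ka = np.full(1024, 5.0)  # assumed constant Doppler-rate error of 5 Hz/s
phi = phase_from_doppler_rate(delta_ka, prf)
# A constant Doppler-rate error integrates to a quadratic phase whose second
# derivative is 2*pi*delta_ka.
print(np.diff(phi, 2)[:3] * prf ** 2)
```

Compensation would then multiply the azimuth data by exp(−jφ). The sign depends on the convention used for the estimated Doppler-rate deviation.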

3.4. Flowchart of Imaging Approach

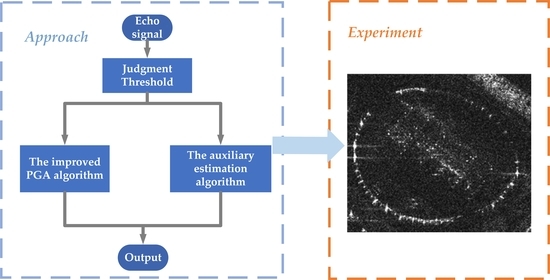

For complex scenes, the conventional autofocus algorithm based on the image criterion may not adequately account for the spatial variability of motion error and may fail to meet focus requirements. To address these issues, a threshold-based combined imaging algorithm for high-resolution SAR is proposed.

Drawing upon the derivation in the preceding section, a statistical threshold is selected to design a composite autofocus algorithm with a broader scope of application. This approach enables the use of the PGA algorithm in scenes where the image’s brightness features exceed a certain threshold. In contrast, for scenes where distinct features are not present and the image’s brightness falls below a designated threshold, such as deserts and grasslands, an auxiliary algorithm can be utilized for imaging.

The flowchart of the combined autofocus algorithm proposed in this paper is shown in Figure 7. This combined focusing approach ensures both sufficient focusing accuracy in scenes with an abundance of strong points and stable focusing in scenes with sparse scatterers. Additionally, this approach has a wider range of applications and higher stability.