Deep Learning Approach for Paddy Field Detection Using Labeled Aerial Images: The Case of Detecting and Staging Paddy Fields in Central and Southern Taiwan

Abstract

1. Introduction

1.1. Purpose

1.2. Related Work

1.3. Contribution

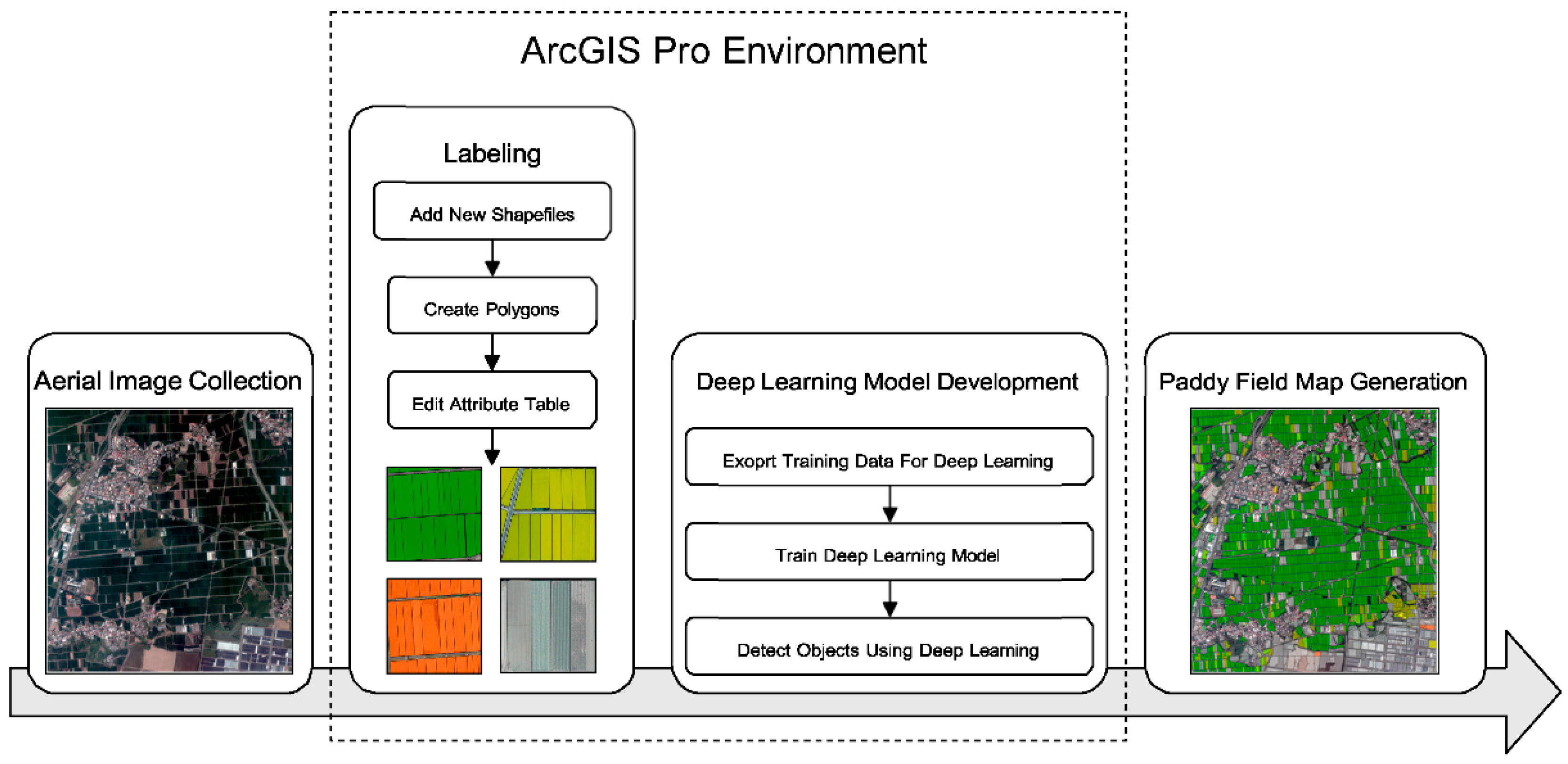

2. Materials and Methods

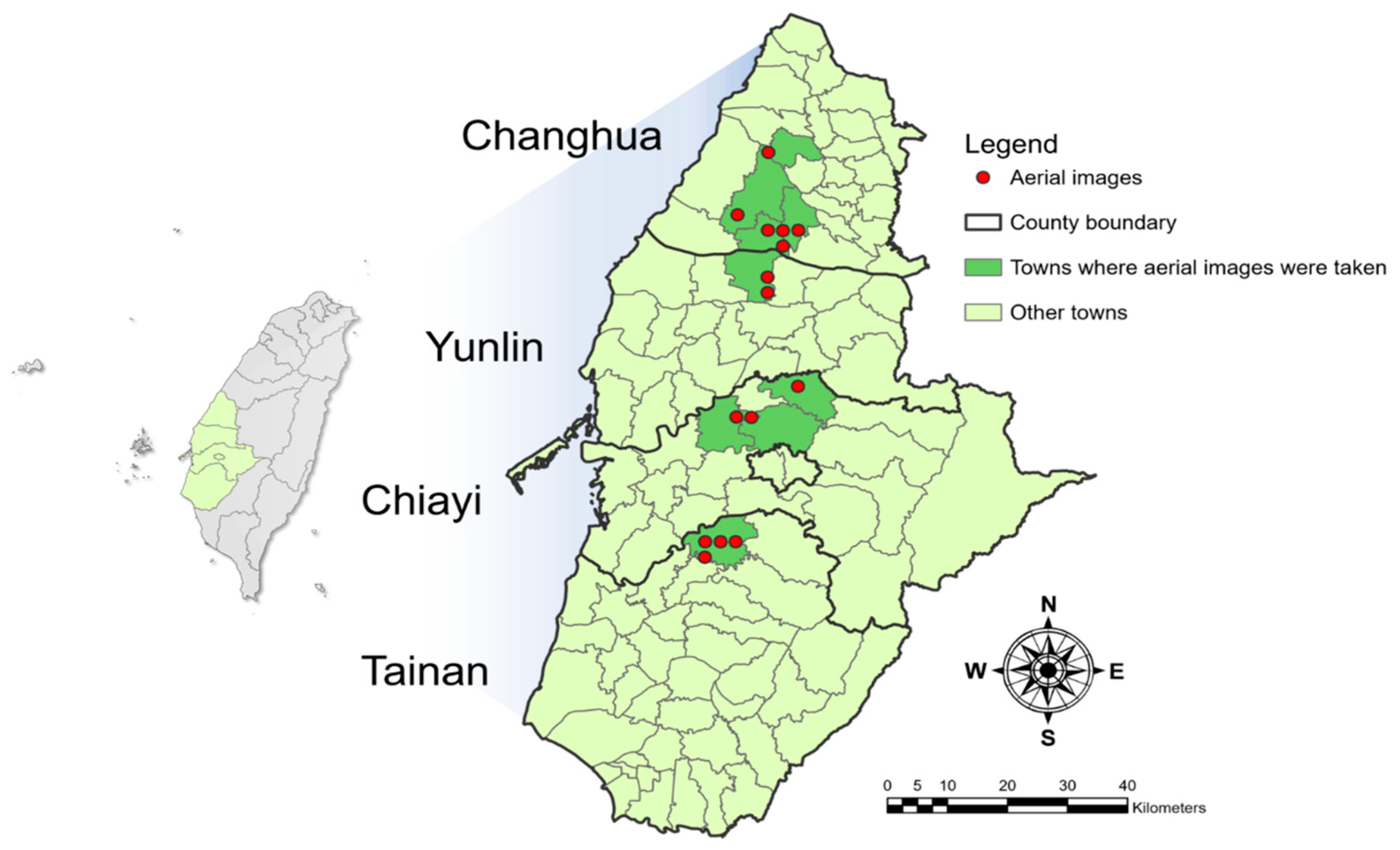

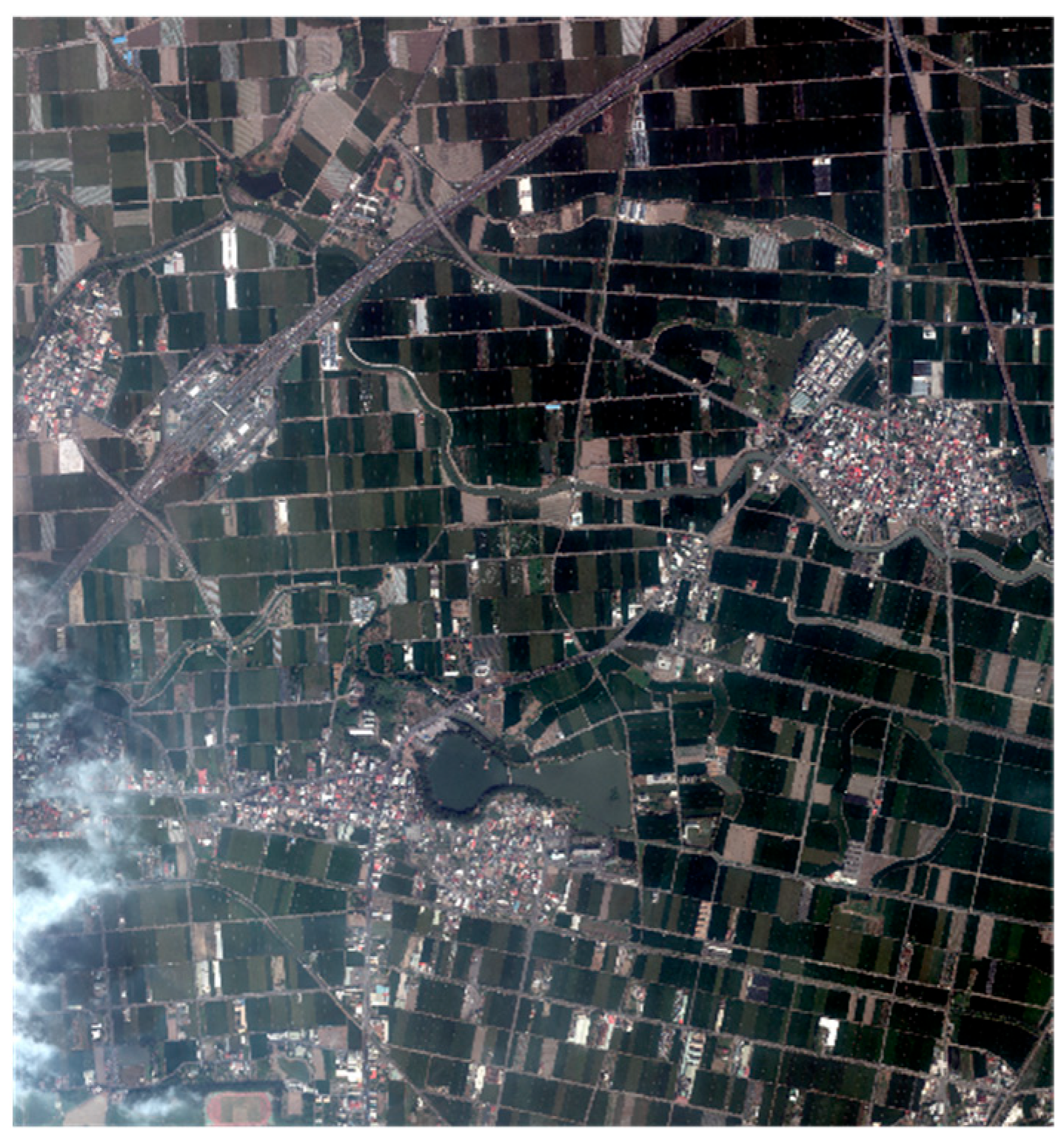

2.1. Aerial Images

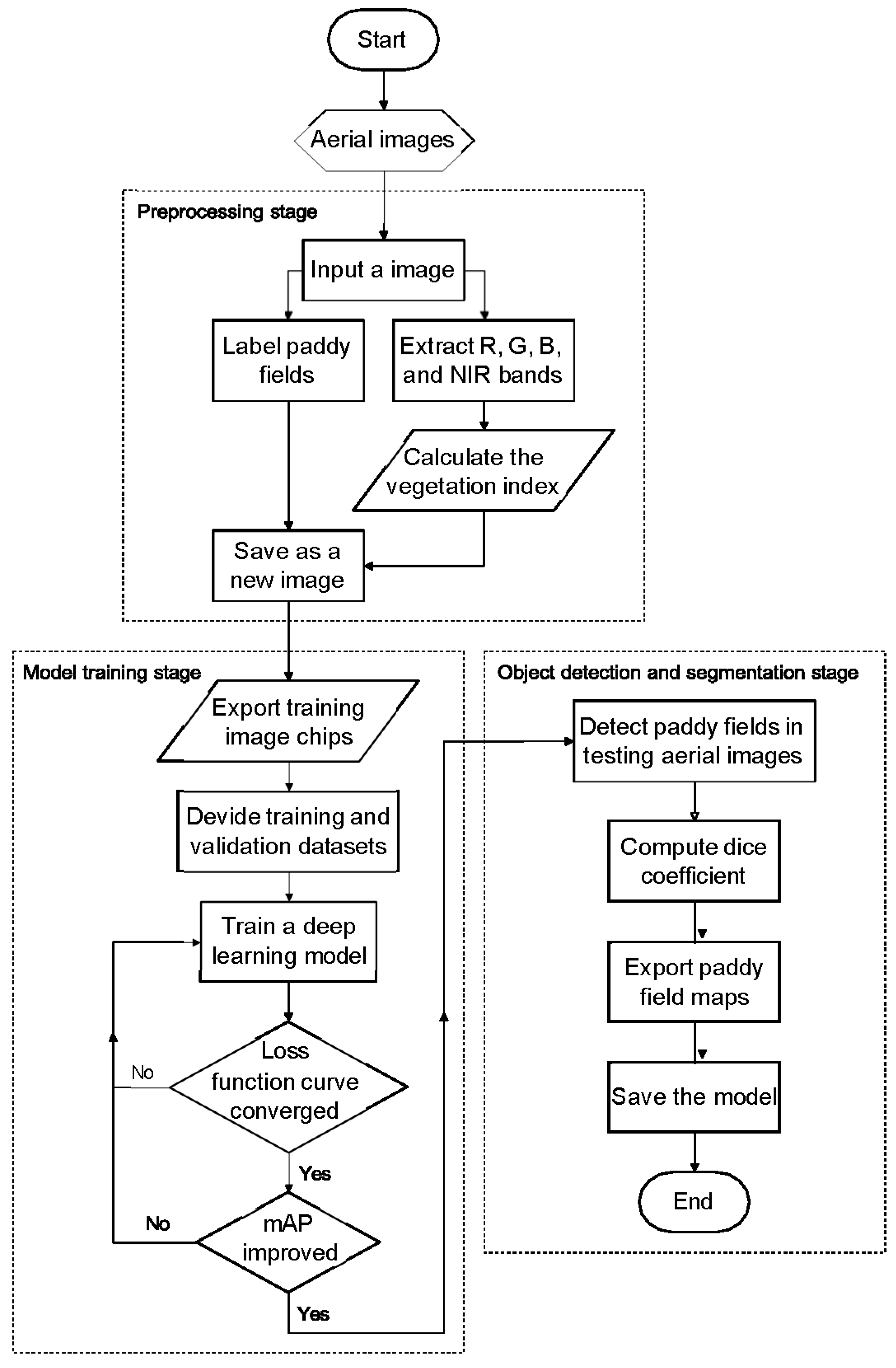

2.2. Image Preprocessing

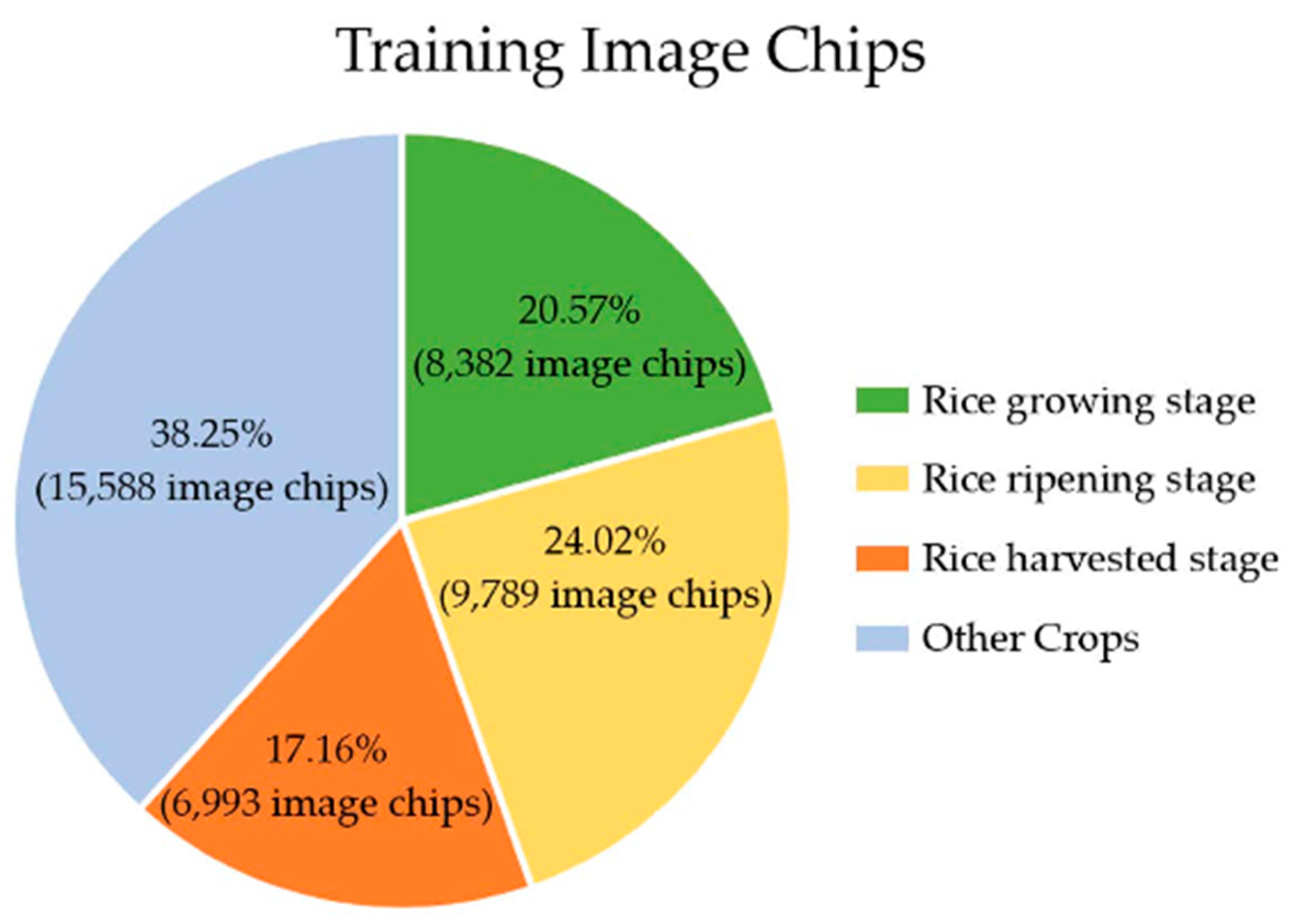

2.3. Dataset

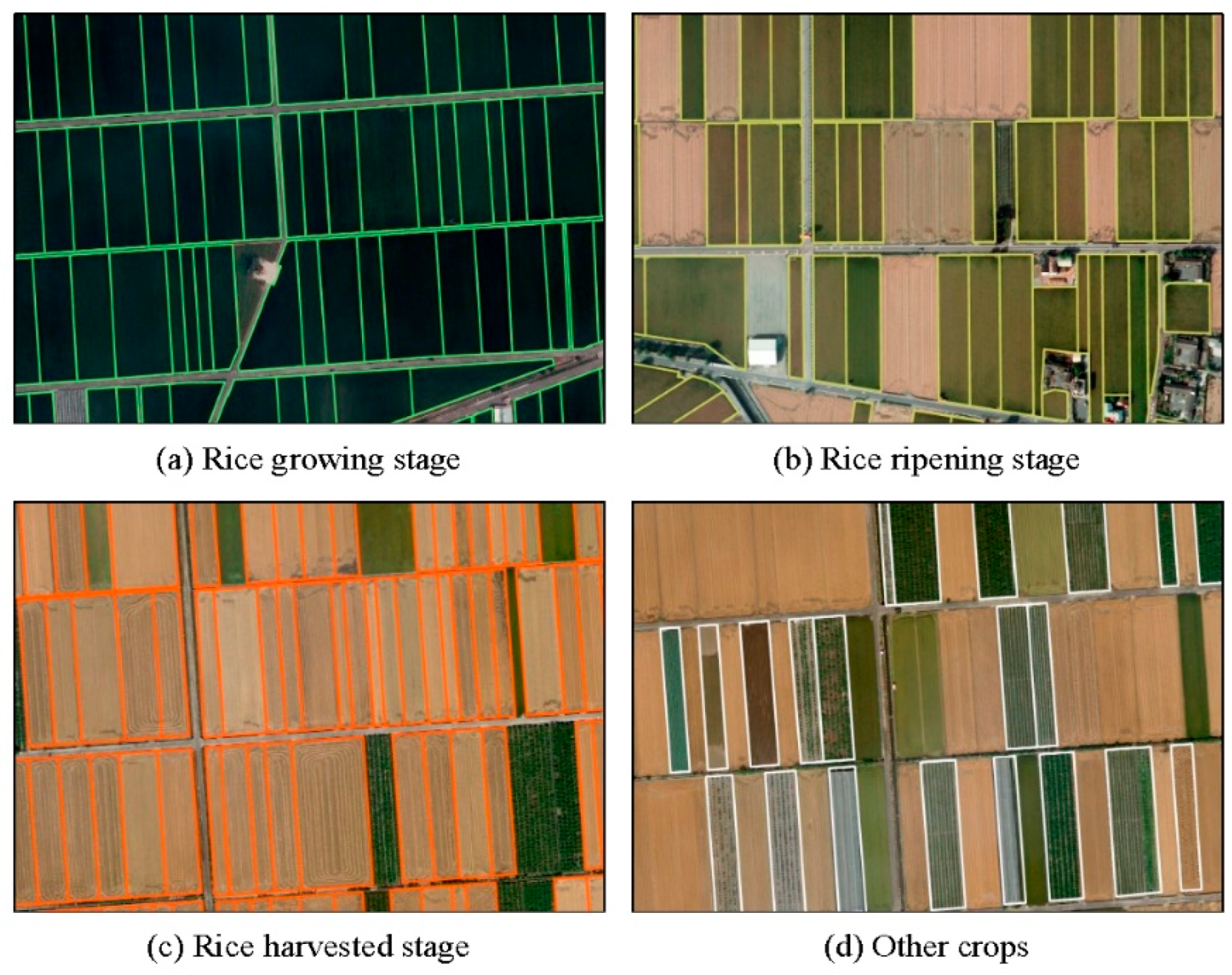

2.4. Labeled Categories

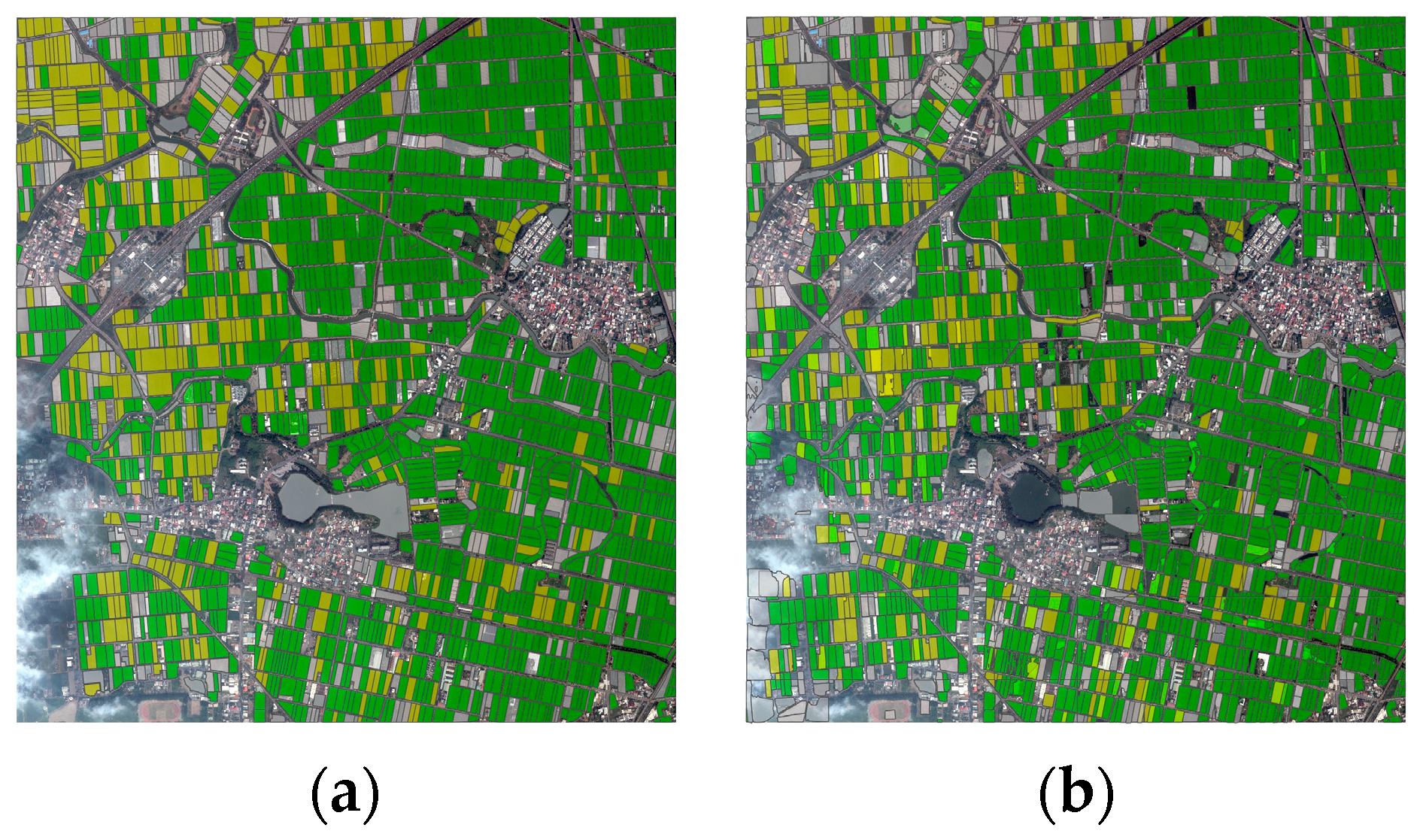

2.4.1. Rice Growing Stage

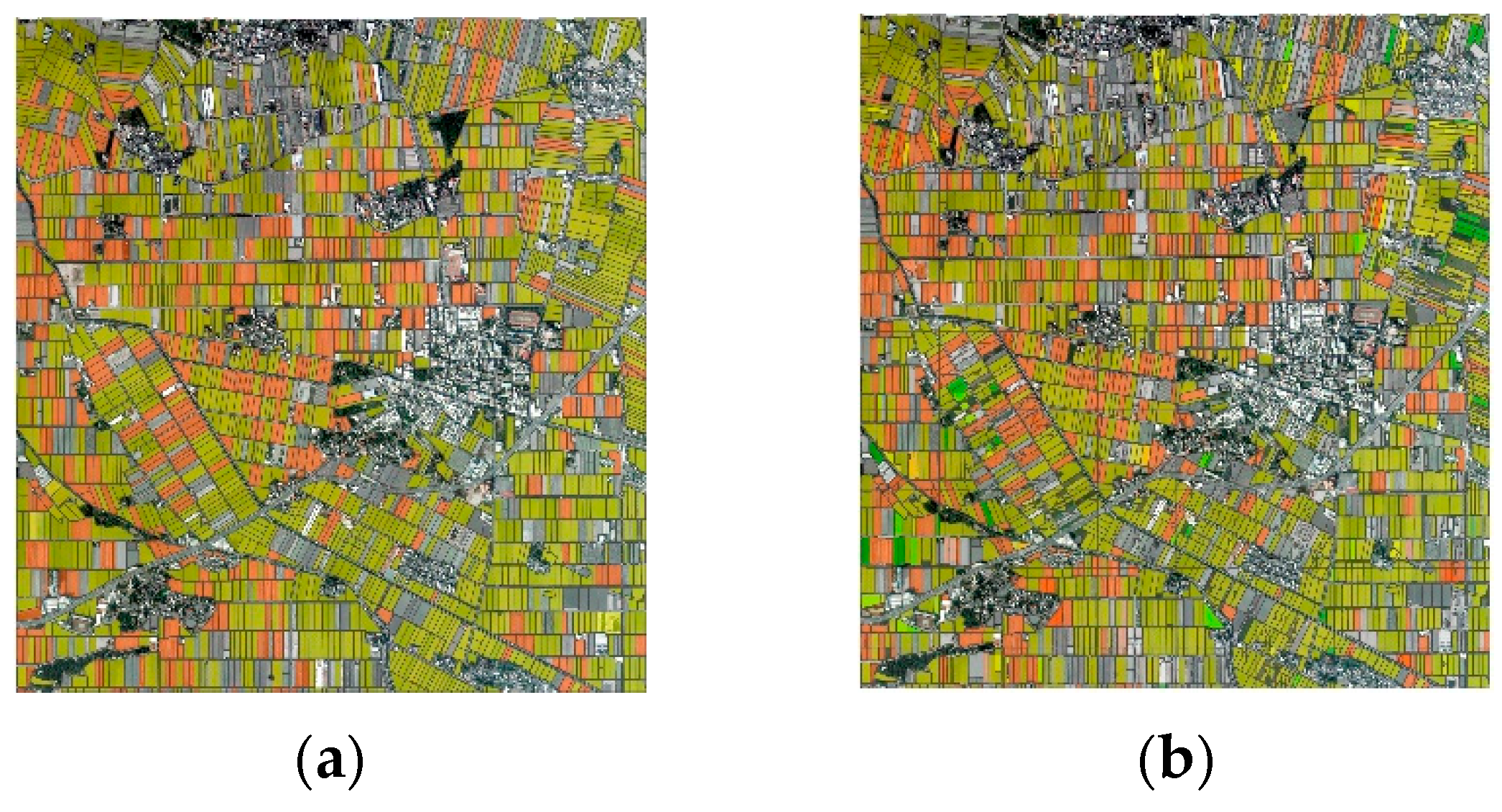

2.4.2. Rice Ripening Stage

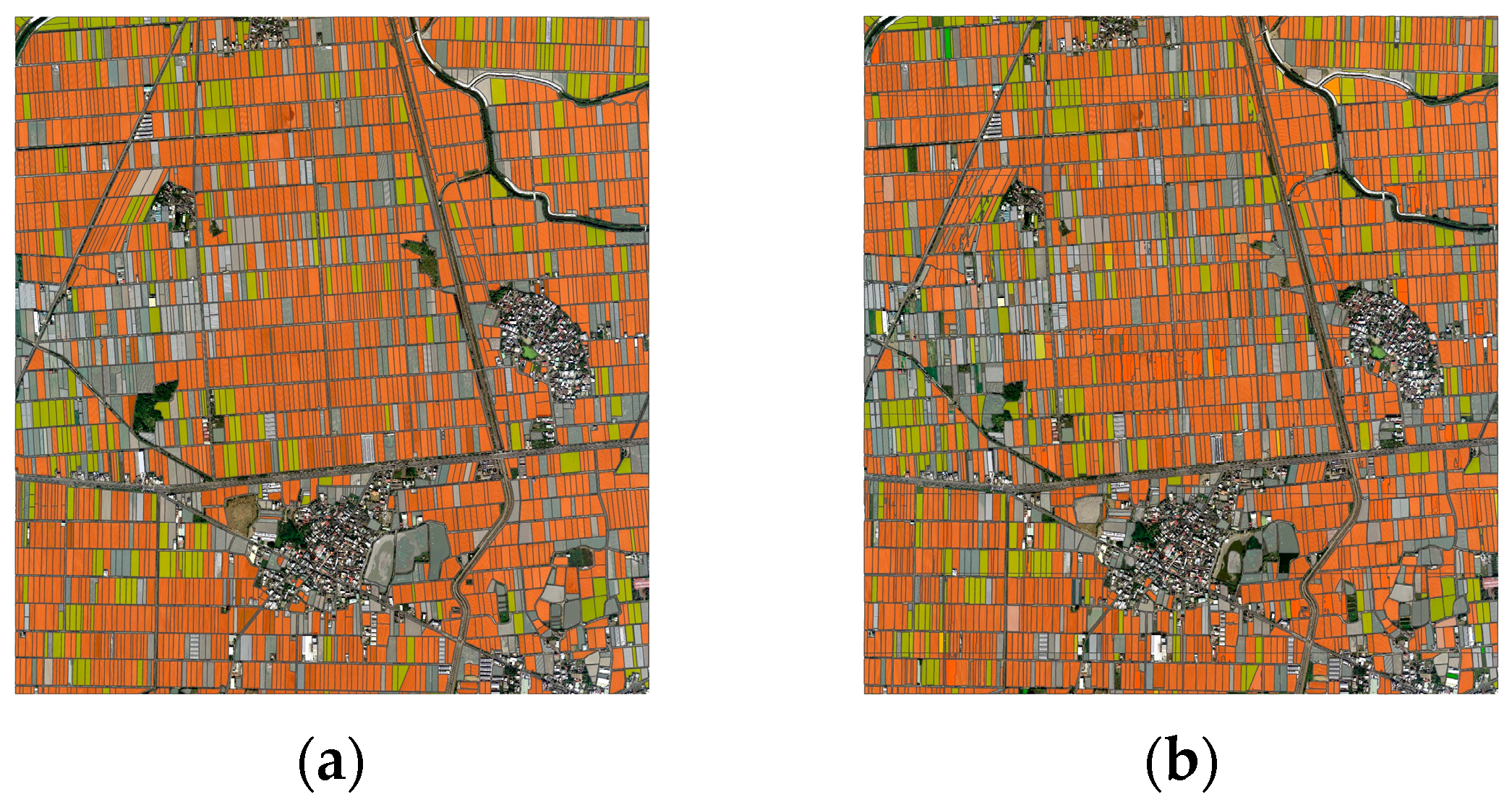

2.4.3. Rice Harvested Stage

2.4.4. Other Crops

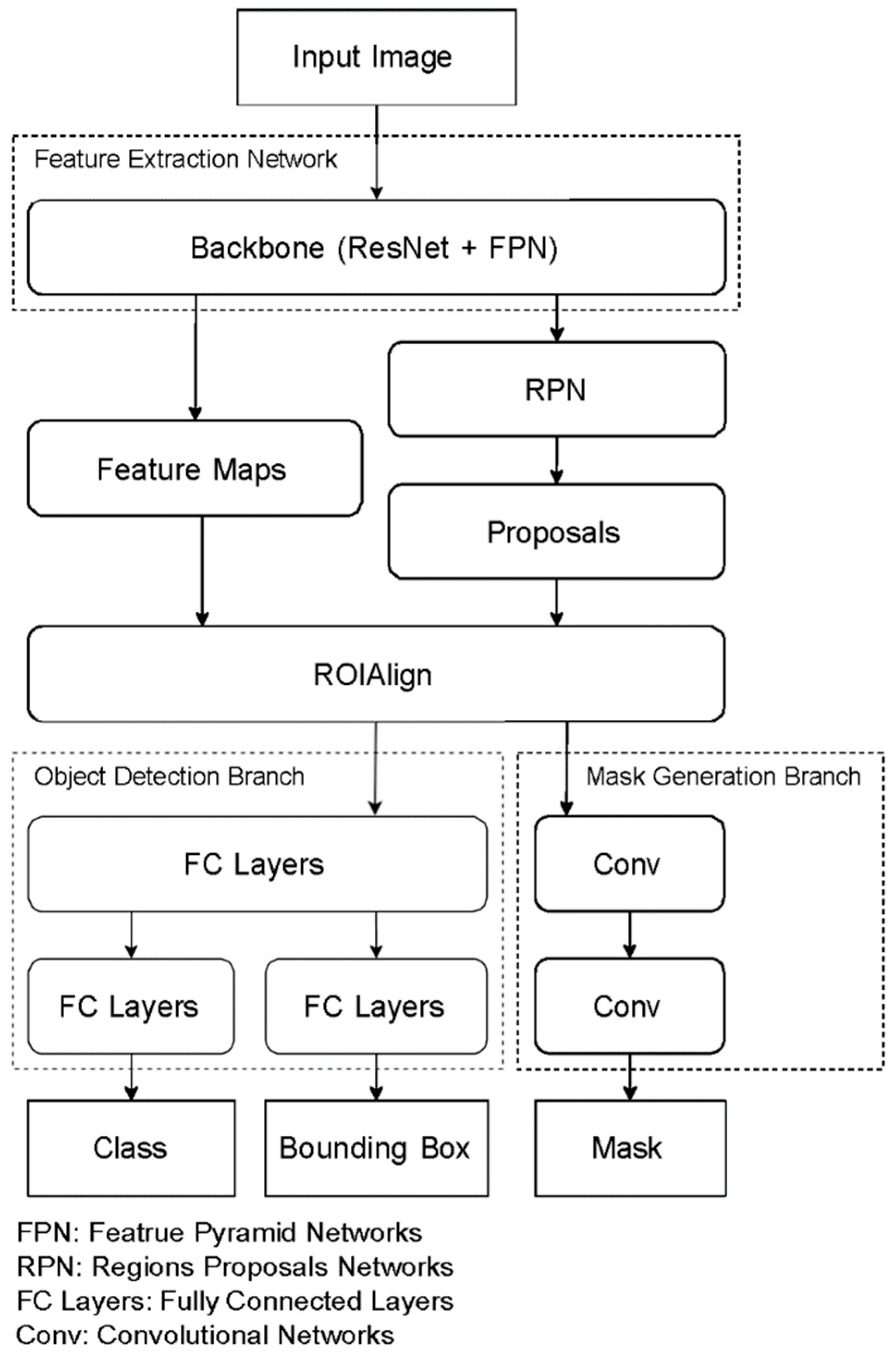

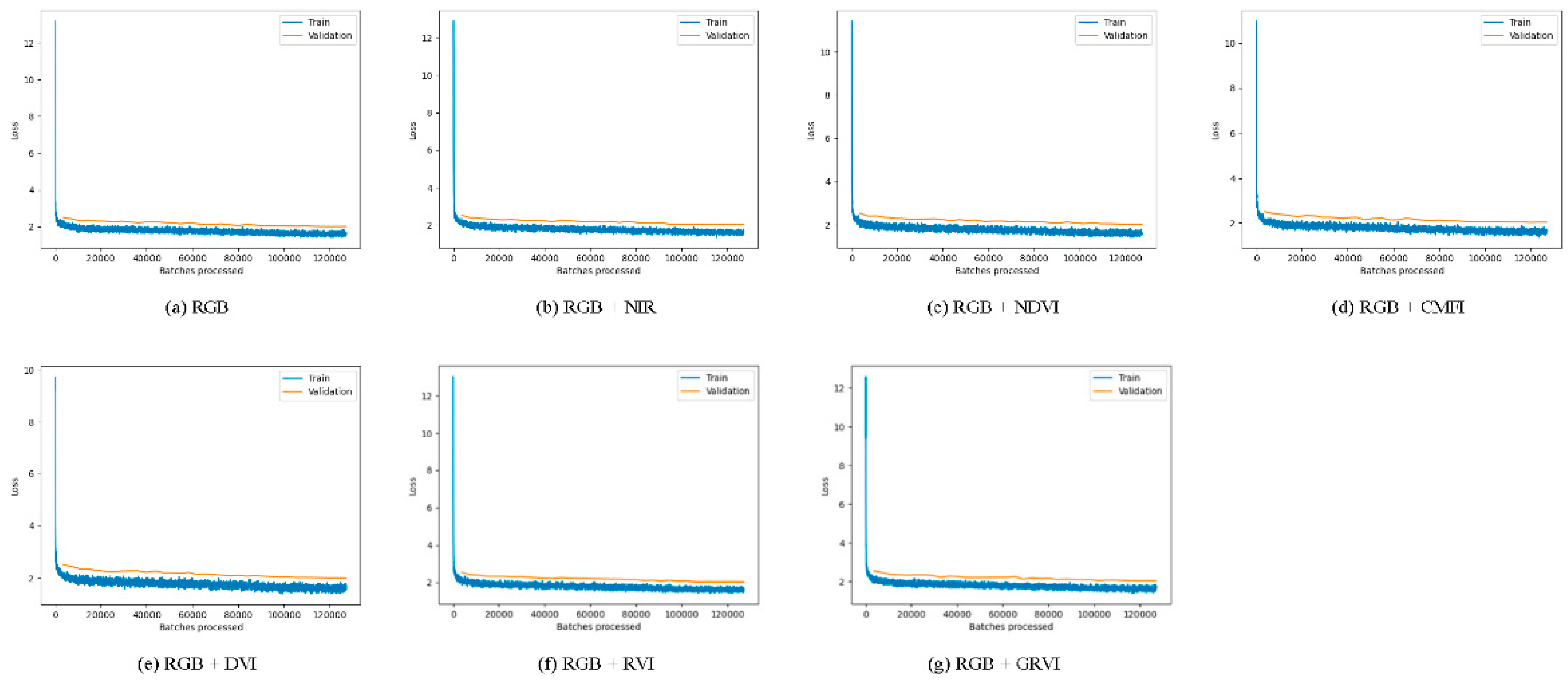

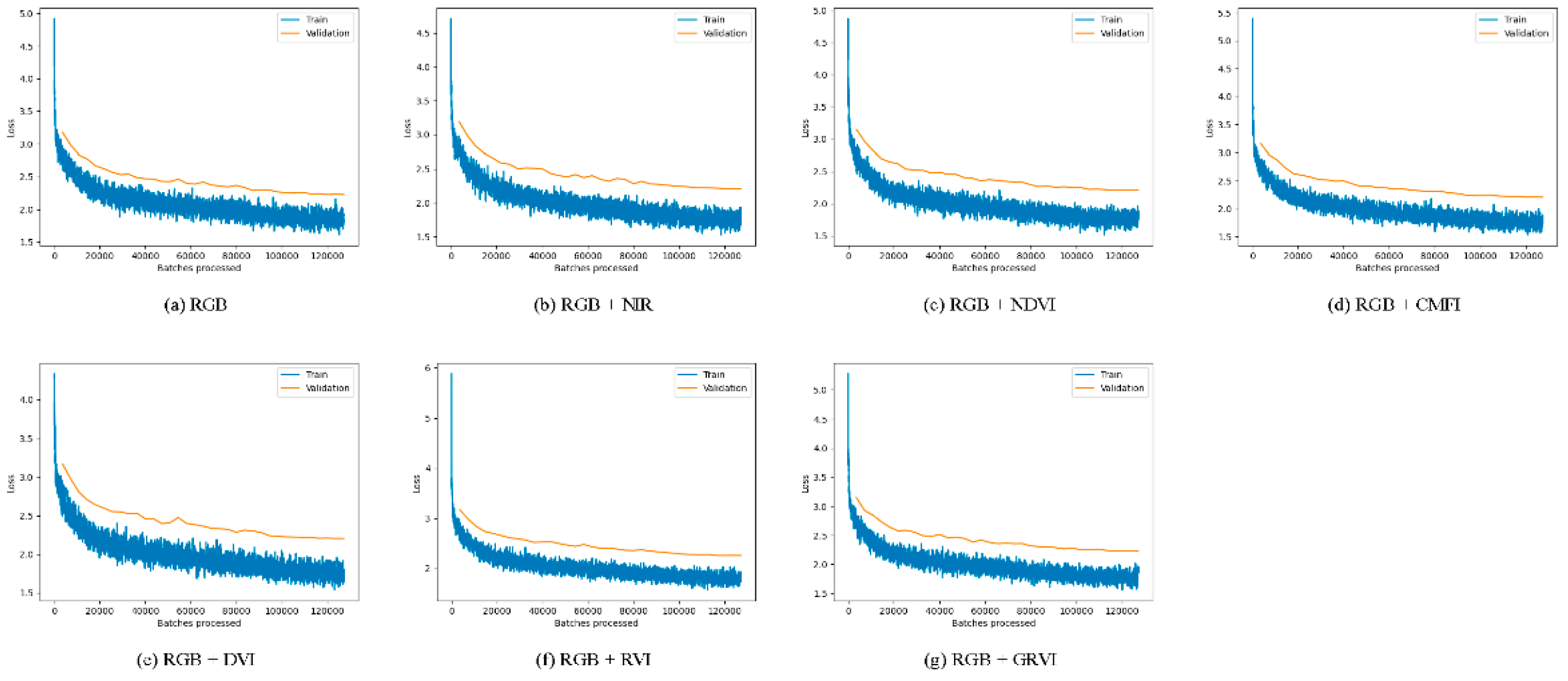

2.5. Mask RCNN

2.6. Equipment

2.7. Evaluation Metrics

3. Results

3.1. Rice Growing Stage

3.2. Rice Ripening Stage

3.3. Rice Harvested Stage

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AP | Average precision |
| B | Blue |
| COA | Council of Agriculture |
| CMFI | Cropping management factor index |
| CNN | Convolutional neural network |
| DCNN | Deep convolutional neural network |
| DOTA | Dataset for object detection in aerial images |
| DVI | Difference vegetation index |
| Esri | Environmental Systems Research Institute, Inc. |
| FN | False negative |
| FCN | Fully convolutional networks |
| FP | False positive |
| FPN | Feature pyramid networks |
| G | Green |
| GIS | Geographic information system |
| GLCM | Gray-level co-occurrence matrix |
| GRVI | Green-red vegetation index |
| iSAID | Instance segmentation in aerial images dataset |
| LULC | Land use and land cover |
| mAP | Mean average precision |
| NDVI | Normalized difference vegetation index |
| NIR | Near-infrared |
| R | Red |
| RCNN | Region-based convolutional network |
| RPN | Region proposal network |
| ROI | Region of interest |
| RVI | Ratio vegetation index |
| RF | Random forest |
| SVM | Support vector machine |
| TARI | Taiwan Agricultural Research Institute |
| TN | True negative |
| TP | True positive |

| Comparison Items | Existing Research | Proposed Study |
|---|---|---|
| Training tool | Not discussed | ArcGIS Pro |
| Band used | RGB + NDVI | RGB + Optimal vegetation index |
| Model type | Object detection | Instance segmentation |
| Phenological stage | Not discussed | Multiple (growing, ripening, harvested) |
| Application environment | Not discussed | ArcGIS Pro |

| Item | Value |
|---|---|
| Image size | 11,460 × 12,260 pixels |
| Horizontal resolution | 96 dpi |
| Vertical resolution | 96 dpi |
| Ground resolution | 0.25 m |
| Bands | R, G, B, NIR |
| Total number of images | 17 |

| County | Township | Number of Aerial Images |
|---|---|---|
| Changhua | Puyan, Erling, Pitou, Zhutang | 7 |
| Yunlin | Erlun | 2 |
| Chiayi | Dalin, Minxiong, Xingang | 3 |
| Tainan | Houbi | 5 |

| Vegetation Index | Usage | Formula |
|---|---|---|
| NDVI | General index for detecting vegetation cover | (NIR − R)/(NIR + R) |
| CMFI | Similar to NDVI, but rescales NDVI to the range 0–1 | (1 − NDVI)/2 |
| DVI | Detects high-density vegetation cover | NIR − R |
| RVI | Sensitive to the difference between soil and vegetation cover | R/NIR |
| GRVI | Detects the relationship between vegetation cover and seasonal changes | (G − R)/(G + R) |
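
The formulas in the table above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' preprocessing code: the function name `vegetation_indices` and the small `eps` guard against division by zero are assumptions that do not appear in the source.

```python
import numpy as np


def vegetation_indices(r, g, nir, eps=1e-9):
    """Compute the five indices from the table for R, G, and NIR band arrays.

    `eps` is a hypothetical guard against zero denominators; it is not part
    of the formulas in the table.
    """
    # Cast to float so that integer pixel values cannot wrap around on subtraction.
    r, g, nir = (np.asarray(band, dtype=np.float64) for band in (r, g, nir))
    ndvi = (nir - r) / (nir + r + eps)
    return {
        "NDVI": ndvi,                      # (NIR - R)/(NIR + R)
        "CMFI": (1.0 - ndvi) / 2.0,        # (1 - NDVI)/2, maps [-1, 1] to [0, 1]
        "DVI": nir - r,                    # NIR - R
        "RVI": r / (nir + eps),            # R/NIR, as defined in the table
        "GRVI": (g - r) / (g + r + eps),   # (G - R)/(G + R)
    }


# Example: one pixel with R = 0.1, G = 0.2, NIR = 0.5
indices = vegetation_indices([0.1], [0.2], [0.5])
```

Each returned array has the same shape as the input bands, so an index could be stacked with the RGB bands as a fourth channel, which is how the "RGB + index" band combinations in the results tables are assumed to be built.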

| Category | Land Cover Characteristic |
|---|---|
| Rice growing stage | Green vegetation |
| Rice ripening stage | Yellow vegetation |
| Rice harvested stage | Bare soil and scorch marks caused by burning straw |
| Other crops | Greenhouses, fruit tree orchards, melon sheds, and other types of farmland whose planting densities and appearances set them apart from paddy fields |

| Parameter | Value |
|---|---|
| Chip size | 550 × 550 pixels |
| Backbone | ResNet-50/ResNet-101 |
| Batch size | 4 |
| Epochs | 35 |
| Learning rate | 0.0005 |
| Validation ratio | 20% |
| Pretrained weights | False |
| Early stopping | True |
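
To make the parameter table concrete, the sketch below collects the listed values in a small configuration object and derives two quantities from them: the ground extent of one chip and the number of chips per aerial image. The class and function names are hypothetical, the 0.25 m ground resolution and the 11,460 × 12,260 pixel image size are taken from the image specification table, and the non-overlapping tiling is an assumption, since the source does not state the stride used when exporting chips.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Values copied from the parameter table; the field names are illustrative."""
    chip_size: int = 550             # pixels per chip side
    backbone: str = "ResNet-50"      # the table lists ResNet-50 and ResNet-101
    batch_size: int = 4
    epochs: int = 35
    learning_rate: float = 0.0005
    validation_ratio: float = 0.20
    pretrained: bool = False
    early_stopping: bool = True


def chip_ground_size_m(cfg, ground_resolution_m=0.25):
    """Side length of one chip on the ground, in metres."""
    return cfg.chip_size * ground_resolution_m


def chips_per_image(cfg, side_a_px=11460, side_b_px=12260):
    """Whole chips per image, ASSUMING non-overlapping tiles (stride = chip size)."""
    return (side_a_px // cfg.chip_size) * (side_b_px // cfg.chip_size)


cfg = TrainingConfig()
print(chip_ground_size_m(cfg))  # 137.5
print(chips_per_image(cfg))     # 440
```

Under these assumptions each chip covers 137.5 m × 137.5 m; an overlapping stride would yield more chips per image than the 440 computed here.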

| Equipment | Specifications |
|---|---|
| CPU | Intel(R) Core(TM) i7-9700KF CPU @ 3.60 GHz (8 cores) |
| RAM | 64 GB |
| GPU | NVIDIA GeForce RTX 2080 Ti |
| Software | ArcGIS Pro 3.0.2 |
| Libraries | Python 3.9.12, PyTorch 1.8.2, TensorFlow 2.7, Scikit-learn 1.0.2, Scikit-image 0.17.2, Fast.ai 1.0.63 |

| Backbone | Band Used | Rice Growing | Rice Ripening | Rice Harvested | Other Crops | mAP |
|---|---|---|---|---|---|---|
| ResNet-50 | RGB | 68.29 | 79.53 | 78.21 | 66.69 | 73.18 |
| ResNet-50 | RGB + NIR | 73.78 | 76.55 | 79.18 | 65.72 | 73.81 (2) † |
| ResNet-50 | RGB + NDVI | 72.63 | 76.58 | 78.81 | 65.07 | 73.27 |
| ResNet-50 | RGB + CMFI | 67.52 | 76.47 | 78.15 | 65.87 | 72.00 |
| ResNet-50 | RGB + DVI | 73.93 | 77.01 | 78.52 | 66.56 | 74.01 (1) † |
| ResNet-50 | RGB + RVI | 68.77 | 74.71 | 78.88 | 65.12 | 71.87 |
| ResNet-50 | RGB + GRVI | 71.11 | 78.91 | 79.07 | 65.77 | 73.72 (3) † |
| ResNet-101 | RGB | 67.36 | 77.82 | 75.23 | 61.36 | 70.44 |
| ResNet-101 | RGB + NIR | 71.05 | 73.88 | 75.62 | 61.94 | 70.62 |
| ResNet-101 | RGB + NDVI | 70.51 | 75.48 | 76.09 | 61.77 | 70.96 |
| ResNet-101 | RGB + CMFI | 68.64 | 74.15 | 76.52 | 62.44 | 70.44 |
| ResNet-101 | RGB + DVI | 72.17 | 75.07 | 74.79 | 61.56 | 70.90 |
| ResNet-101 | RGB + RVI | 69.16 | 73.25 | 75.92 | 62.56 | 70.22 |
| ResNet-101 | RGB + GRVI | 70.67 | 75.43 | 75.14 | 61.82 | 70.77 |
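
The mAP column in the table above appears to be the unweighted mean of the four per-class AP values (three rice stages plus other crops). This reading is inferred from the numbers rather than stated in the text, so the following is a sketch for checking it against the ResNet-50 rows; the names `AP_RESNET50`, `mean_ap`, and `rank_by_map` are illustrative.

```python
# Per-class AP for the ResNet-50 rows, in the order
# (growing, ripening, harvested, other crops), copied from the table.
AP_RESNET50 = {
    "RGB":        (68.29, 79.53, 78.21, 66.69),
    "RGB + NIR":  (73.78, 76.55, 79.18, 65.72),
    "RGB + NDVI": (72.63, 76.58, 78.81, 65.07),
    "RGB + CMFI": (67.52, 76.47, 78.15, 65.87),
    "RGB + DVI":  (73.93, 77.01, 78.52, 66.56),
    "RGB + RVI":  (68.77, 74.71, 78.88, 65.12),
    "RGB + GRVI": (71.11, 78.91, 79.07, 65.77),
}


def mean_ap(per_class_ap):
    """Unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


def rank_by_map(table):
    """Band combinations sorted from highest to lowest mAP."""
    return sorted(table, key=lambda band: mean_ap(table[band]), reverse=True)


for band in rank_by_map(AP_RESNET50)[:3]:
    print(f"{band}: {mean_ap(AP_RESNET50[band]):.2f}")
```

Under this assumption the three highest-ranked combinations are RGB + DVI, RGB + NIR, and RGB + GRVI, which agrees with the rows marked (1), (2), and (3) in the table.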

Test aerial image: Houbi, Tainan

| Backbone | Band Used | Growing (62%) † | Ripening (19%) | Harvested | Other Crops (19%) | Average |
|---|---|---|---|---|---|---|
| ResNet-50 | RGB | 90.44 | 74.09 | - | 66.95 | 77.16 |
| ResNet-50 | RGB + NIR | 91.42 | 75.04 | - | 71.41 | 79.29 |
| ResNet-50 | RGB + NDVI | 91.95 | 75.01 | - | 65.48 | 77.48 |
| ResNet-50 | RGB + CMFI | 92.66 | 77.27 | - | 68.84 | 79.59 ‡ |
| ResNet-50 | RGB + DVI | 91.23 | 76.46 | - | 69.91 | 79.20 |
| ResNet-50 | RGB + RVI | 91.86 | 72.45 | - | 67.37 | 77.23 |
| ResNet-50 | RGB + GRVI | 91.56 | 76.96 | - | 68.11 | 78.88 |
| ResNet-101 | RGB | 89.92 | 81.61 | - | 66.18 | 79.24 |
| ResNet-101 | RGB + NIR | 88.65 | 69.77 | - | 68.72 | 75.71 |
| ResNet-101 | RGB + NDVI | 89.39 | 75.63 | - | 68.59 | 77.87 |
| ResNet-101 | RGB + CMFI | 87.77 | 67.08 | - | 74.28 | 76.38 |
| ResNet-101 | RGB + DVI | 91.91 | 77.92 | - | 66.35 | 78.73 |
| ResNet-101 | RGB + RVI | 90.52 | 75.68 | - | 69.06 | 78.42 |
| ResNet-101 | RGB + GRVI | 90.29 | 70.36 | - | 67.35 | 76.00 |

Test aerial image: Zhutang, Changhua

| Backbone | Band Used | Growing | Ripening (56%) † | Harvested (22%) † | Other Crops (22%) † | Average |
|---|---|---|---|---|---|---|
| ResNet-50 | RGB | - | 92.20 | 90.47 | 78.30 | 86.99 |
| ResNet-50 | RGB + NIR | - | 93.77 | 93.25 | 82.11 | 89.71 ‡ |
| ResNet-50 | RGB + NDVI | - | 92.16 | 92.55 | 80.52 | 88.41 |
| ResNet-50 | RGB + CMFI | - | 93.95 | 92.59 | 81.40 | 89.31 |
| ResNet-50 | RGB + DVI | - | 94.69 | 91.87 | 80.83 | 89.13 |
| ResNet-50 | RGB + RVI | - | 94.53 | 91.22 | 82.49 | 89.41 |
| ResNet-50 | RGB + GRVI | - | 93.99 | 92.30 | 80.61 | 88.97 |
| ResNet-101 | RGB | - | 87.29 | 92.81 | 77.52 | 85.87 |
| ResNet-101 | RGB + NIR | - | 91.74 | 91.57 | 80.21 | 87.84 |
| ResNet-101 | RGB + NDVI | - | 91.79 | 90.11 | 79.28 | 87.06 |
| ResNet-101 | RGB + CMFI | - | 92.92 | 91.57 | 80.76 | 88.42 |
| ResNet-101 | RGB + DVI | - | 92.04 | 90.18 | 79.57 | 87.26 |
| ResNet-101 | RGB + RVI | - | 92.53 | 91.92 | 81.07 | 88.51 |
| ResNet-101 | RGB + GRVI | - | 91.71 | 91.60 | 78.99 | 87.43 |

Test aerial image: Xingang, Chiayi

| Backbone | Band Used | Growing | Ripening (11%) † | Harvested (65%) † | Other Crops (24%) † | Average |
|---|---|---|---|---|---|---|
| ResNet-50 | RGB | - | 85.87 | 94.50 | 79.42 | 86.60 |
| ResNet-50 | RGB + NIR | - | 32.60 | 93.62 | 68.16 | 64.80 |
| ResNet-50 | RGB + NDVI | - | 75.61 | 93.98 | 77.15 | 82.25 |
| ResNet-50 | RGB + CMFI | - | 88.06 | 93.85 | 76.88 | 86.26 |
| ResNet-50 | RGB + DVI | - | 43.67 | 91.12 | 68.50 | 67.76 |
| ResNet-50 | RGB + RVI | - | 55.27 | 93.44 | 68.24 | 72.31 |
| ResNet-50 | RGB + GRVI | - | 89.78 | 94.42 | 79.63 | 87.94 ‡ |
| ResNet-101 | RGB | - | 74.70 | 93.13 | 73.55 | 80.46 |
| ResNet-101 | RGB + NIR | - | 17.01 | 91.83 | 68.92 | 59.26 |
| ResNet-101 | RGB + NDVI | - | 75.36 | 93.08 | 73.72 | 80.72 |
| ResNet-101 | RGB + CMFI | - | 63.03 | 92.87 | 69.19 | 75.03 |
| ResNet-101 | RGB + DVI | - | 48.45 | 91.68 | 72.46 | 70.87 |
| ResNet-101 | RGB + RVI | - | 50.88 | 92.21 | 67.44 | 70.18 |
| ResNet-101 | RGB + GRVI | - | 86.14 | 93.33 | 75.39 | 84.96 |

| Rice Phenological Stage | Backbone | Band Combination |
|---|---|---|
| Growing | ResNet-50 | RGB + CMFI |
| Ripening | ResNet-50 | RGB + NIR |
| Harvested | ResNet-50 | RGB + GRVI |
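
The summary table above is consistent with picking, for each test aerial image, the band combination with the highest Average value (the rows marked ‡) and attributing it to the stage that dominates that image. The sketch below reproduces that selection from the ResNet-50 rows of the three test tables. The pairing of each image with its dominant stage (Houbi 62% growing, Zhutang 56% ripening, Xingang 65% harvested) is read from the column headers, and the selection rule itself is an assumption about how the summary was derived.

```python
# Average AP per band combination for ResNet-50, copied from the three test tables.
TEST_AVERAGES = {
    "Growing": {      # Houbi, Tainan (62% growing)
        "RGB": 77.16, "RGB + NIR": 79.29, "RGB + NDVI": 77.48,
        "RGB + CMFI": 79.59, "RGB + DVI": 79.20, "RGB + RVI": 77.23,
        "RGB + GRVI": 78.88,
    },
    "Ripening": {     # Zhutang, Changhua (56% ripening)
        "RGB": 86.99, "RGB + NIR": 89.71, "RGB + NDVI": 88.41,
        "RGB + CMFI": 89.31, "RGB + DVI": 89.13, "RGB + RVI": 89.41,
        "RGB + GRVI": 88.97,
    },
    "Harvested": {    # Xingang, Chiayi (65% harvested)
        "RGB": 86.60, "RGB + NIR": 64.80, "RGB + NDVI": 82.25,
        "RGB + CMFI": 86.26, "RGB + DVI": 67.76, "RGB + RVI": 72.31,
        "RGB + GRVI": 87.94,
    },
}


def best_band(averages):
    """Return the band combination with the highest average AP."""
    return max(averages, key=averages.get)


best = {stage: best_band(avg) for stage, avg in TEST_AVERAGES.items()}
print(best)
```

Only ResNet-50 values are included because, in all three test tables, the highest ResNet-101 average is lower than the highest ResNet-50 average.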

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chou, Y.-S.; Chou, C.-Y. Deep Learning Approach for Paddy Field Detection Using Labeled Aerial Images: The Case of Detecting and Staging Paddy Fields in Central and Southern Taiwan. Remote Sens. 2023, 15, 3575. https://doi.org/10.3390/rs15143575
