Removing Moving Objects without Registration from 3D LiDAR Data Using Range Flow Coupled with IMU Measurements

Abstract

1. Introduction

| Methods | Relying on Registration | Using Training Labels | Using GPUs |
|---|---|---|---|
| ERASOR [2] | ✔ | ✘ | ✘ |
| Peopleremover [4] | ✔ | ✘ | ✘ |
| Mapless [9] | ✔ | ✘ | ✘ |
| Removert [10] | ✔ | ✘ | ✘ |
| LMNet [1] | ✘ | ✔ | ✔ |
| MOTS [14] | ✘ | ✘ | ✔ |
| FlowNet3D [18] | ✘ | ✔ | ✔ |
| SLIM [19] | ✘ | ✘ | ✔ |
| Proposed | ✘ | ✘ | ✘ |

- (1) Proposing a novel method: We introduce a method that removes moving objects without registration by combining range geometric constraints with IMU measurements in the 3D point cloud domain.

- (2) Dataset evaluation: We evaluate the proposed method on three datasets, and the experimental results show that it outperforms the baseline methods in mIoU. Importantly, it achieves this without relying on accurate LiDAR registration or expensive annotations, which makes it well suited for integration with widely accessible SLAM systems.

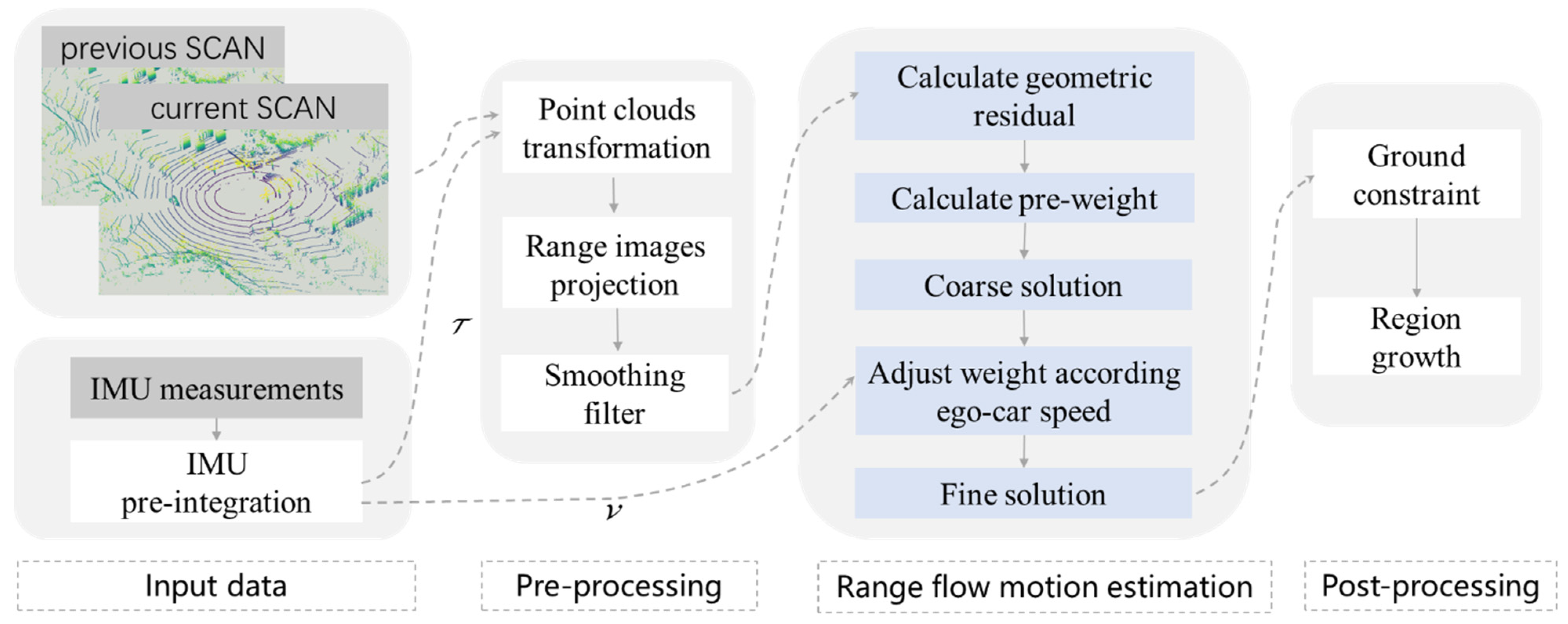

2. Methods

Algorithm 1: Moving Objects Removal without Registration

Input: the LiDAR scans; the prior pose between two scans from IMU measurements; the current speed of the ego-car from IMU measurements.

Pre-processing:

1. Coarsely align one scan to the other based on the prior transformation.
2. Perform range image projection and apply smoothing on the scans.

Range flow estimation:

3. Compute the geometric constraint residual for each point using the range images.
4. Compute the pre-weight of each point.
5. Obtain a coarse solution of the range flow by minimizing the loss function.
6. Adjust the weight of each point by incorporating the ego-car speed.
7. Obtain a fine solution by recomputing Step 6 with the adjusted weight.
8. Segment points belonging to moving objects according to the fine range flow.

Post-processing:

9. Perform ground removal and region growth to improve the segmentation.
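Step 2 of Algorithm 1 projects each scan onto a range image. The sketch below shows one common way to do this, a spherical projection with NumPy. It is illustrative only and is not taken from the article: the function name `project_to_range_image`, the image size, and the vertical field of view (roughly that of a 32-beam sensor) are all assumptions, and the article's smoothing filter is not included.

```python
import numpy as np


def project_to_range_image(points, height=32, width=1024,
                           fov_up_deg=15.0, fov_down_deg=-25.0):
    """Project an (N, 3) point cloud onto a (height, width) range image.

    Assumed conventions (not from the article): x forward, y left, z up;
    pixels without a return hold 0.0; when several points fall into the
    same pixel, the smallest range is kept.
    """
    points = np.asarray(points, dtype=np.float64)
    ranges = np.linalg.norm(points, axis=1)
    valid = ranges > 0.0                      # drop points at the origin
    points, ranges = points[valid], ranges[valid]

    yaw = np.arctan2(points[:, 1], points[:, 0])   # azimuth in [-pi, pi]
    pitch = np.arcsin(points[:, 2] / ranges)       # elevation angle

    fov_up = np.radians(fov_up_deg)
    fov = fov_up - np.radians(fov_down_deg)

    # Normalised image coordinates in [0, 1].
    u = 0.5 * (1.0 - yaw / np.pi)   # column coordinate
    v = (fov_up - pitch) / fov      # row coordinate, row 0 is the top beam

    cols = np.clip(np.floor(u * width), 0, width - 1).astype(np.int64)
    rows = np.clip(np.floor(v * height), 0, height - 1).astype(np.int64)

    # Keep the nearest return per pixel; unbuffered minimum handles
    # repeated (row, col) pairs correctly.
    image = np.full((height, width), np.inf)
    np.minimum.at(image, (rows, cols), ranges)
    image[np.isinf(image)] = 0.0
    return image


# Two points along the same ray: only the nearer one survives.
demo = project_to_range_image([[10.0, 0.0, 1.0], [20.0, 0.0, 2.0]])
```

Keeping the nearest return per pixel is a design choice of this sketch; it favors foreground surfaces when the sensor resolution exceeds the image resolution.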

2.1. IMU Measurements

2.2. Range Image Representation

2.3. Smoothing Filter

2.4. Range Flow Estimation

2.4.1. 3D Range Flow Representation

2.4.2. Coarse Estimation of Range Flow

2.4.3. Fine Estimation with Weight Adjustment

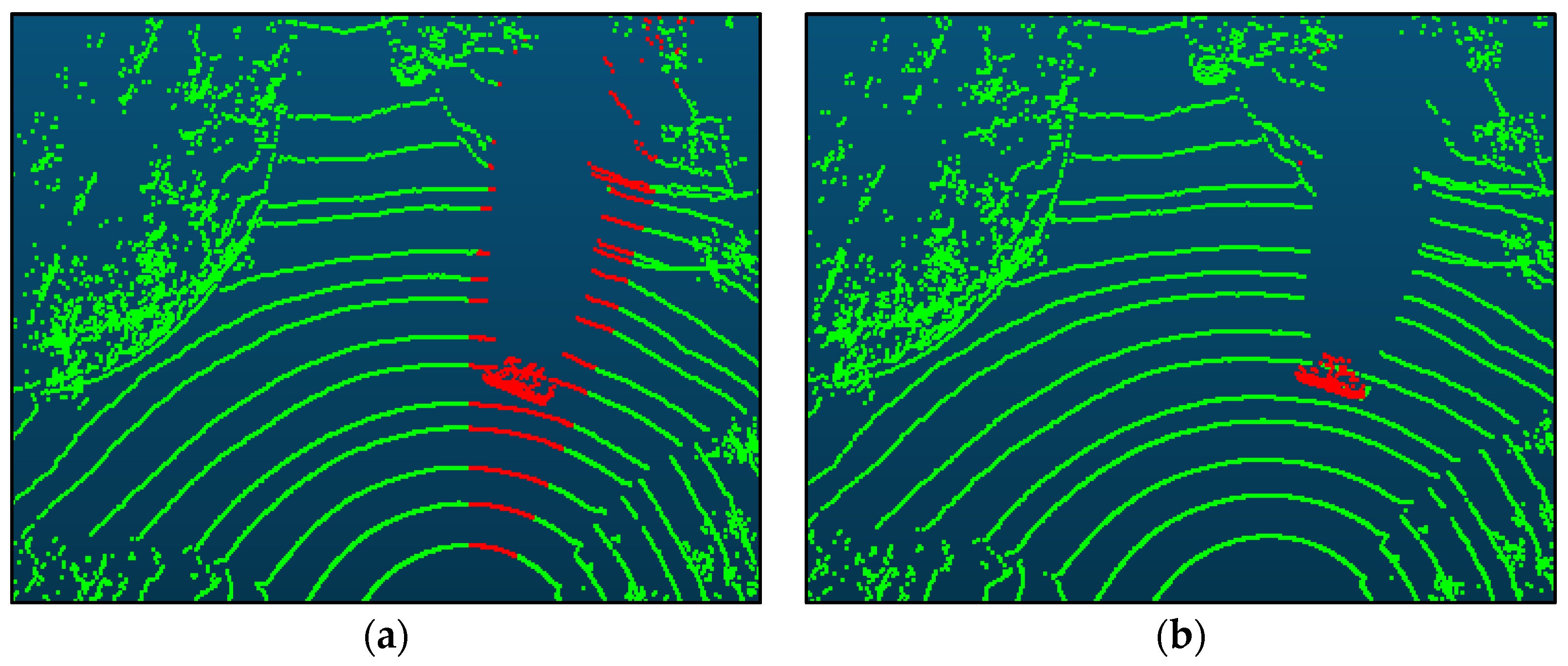

2.5. Ground Constraint and Region Growth

3. Datasets

4. Metric
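The result tables in this article report mIoU (%). As a rough illustration, the sketch below computes the intersection over union of the moving class for one scan, assuming the common definition TP / (TP + FP + FN) and boolean per-point labels. The function name `moving_iou` and the label encoding are assumptions, and how the article averages over scans or sequences is not specified here.

```python
import numpy as np


def moving_iou(pred_moving, true_moving):
    """IoU of the 'moving' class from boolean per-point labels.

    Assumed encoding (hypothetical): True marks a point labelled as moving.
    Returns NaN when neither prediction nor ground truth has moving points.
    """
    pred = np.asarray(pred_moving, dtype=bool)
    truth = np.asarray(true_moving, dtype=bool)
    tp = np.count_nonzero(pred & truth)    # moving, predicted moving
    fp = np.count_nonzero(pred & ~truth)   # static, predicted moving
    fn = np.count_nonzero(~pred & truth)   # moving, predicted static
    denom = tp + fp + fn
    return tp / denom if denom else float("nan")


# One true positive, one false positive, one false negative.
example_iou = moving_iou([1, 1, 0, 0], [1, 0, 1, 0])
```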

5. Performance Evaluation

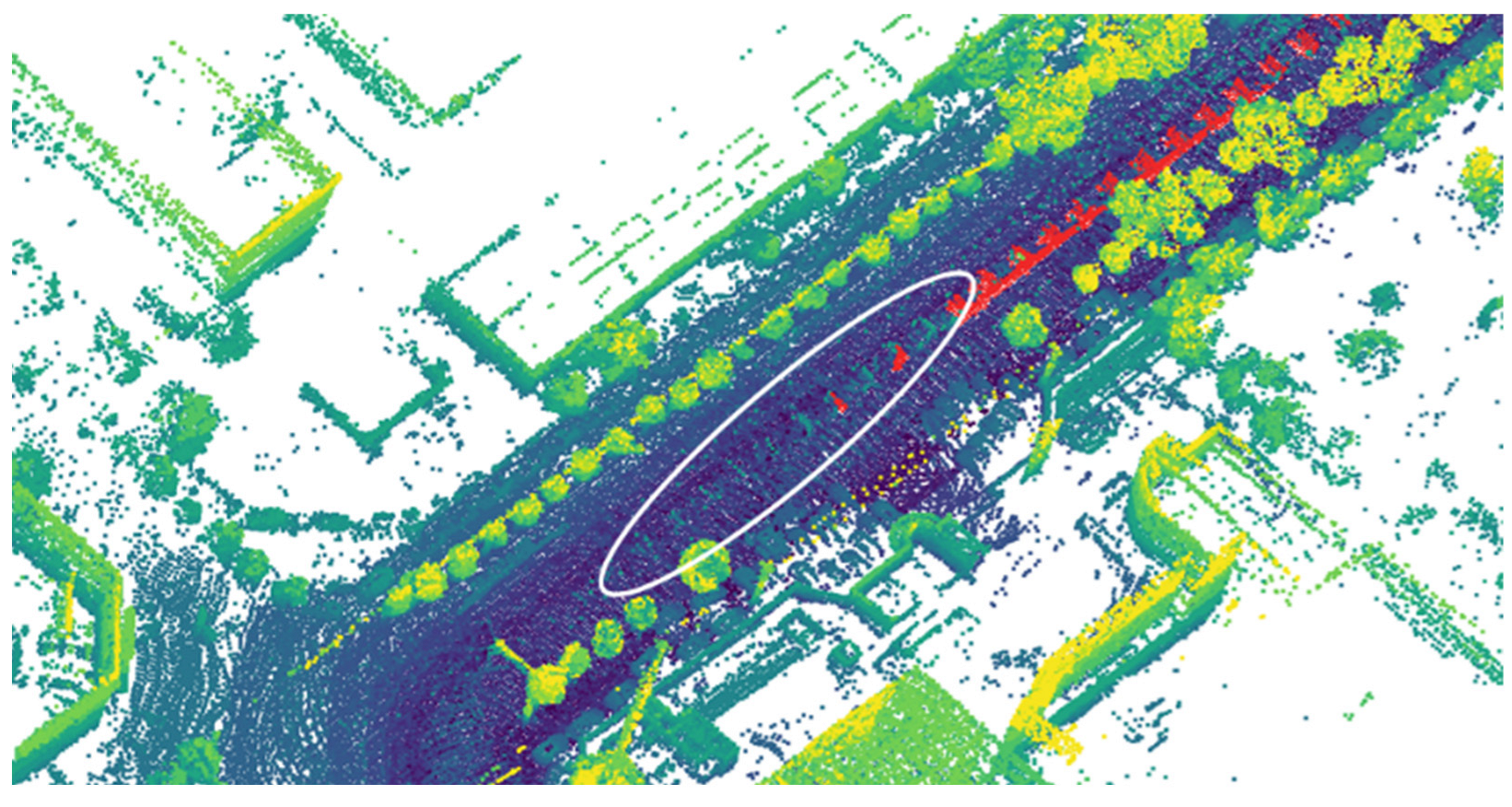

5.1. Performance Comparison on Different Datasets

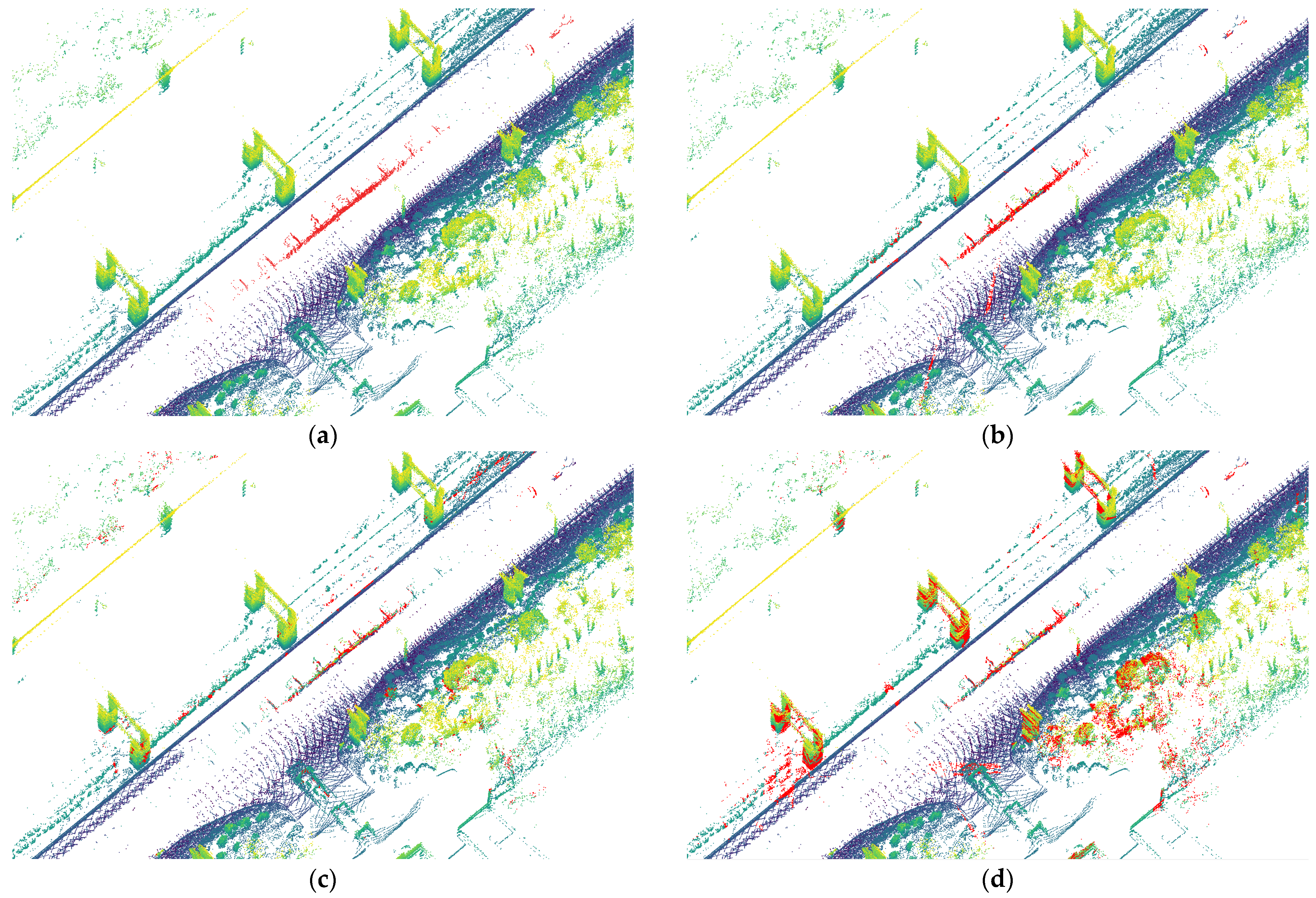

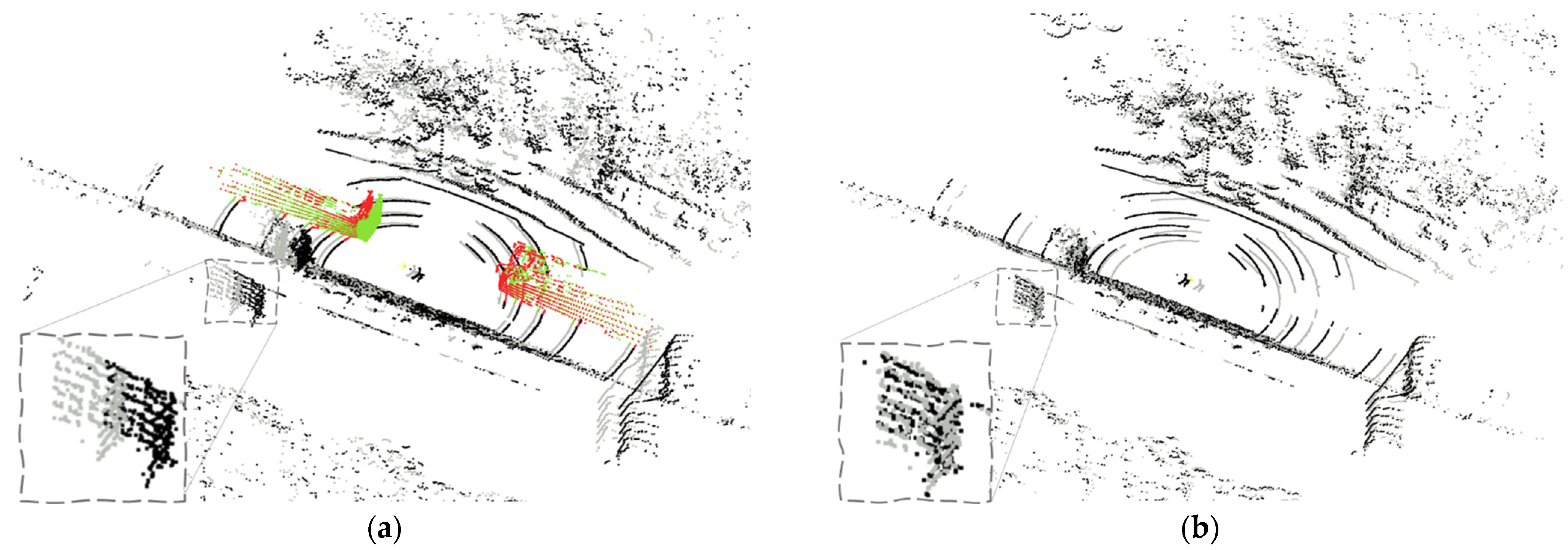

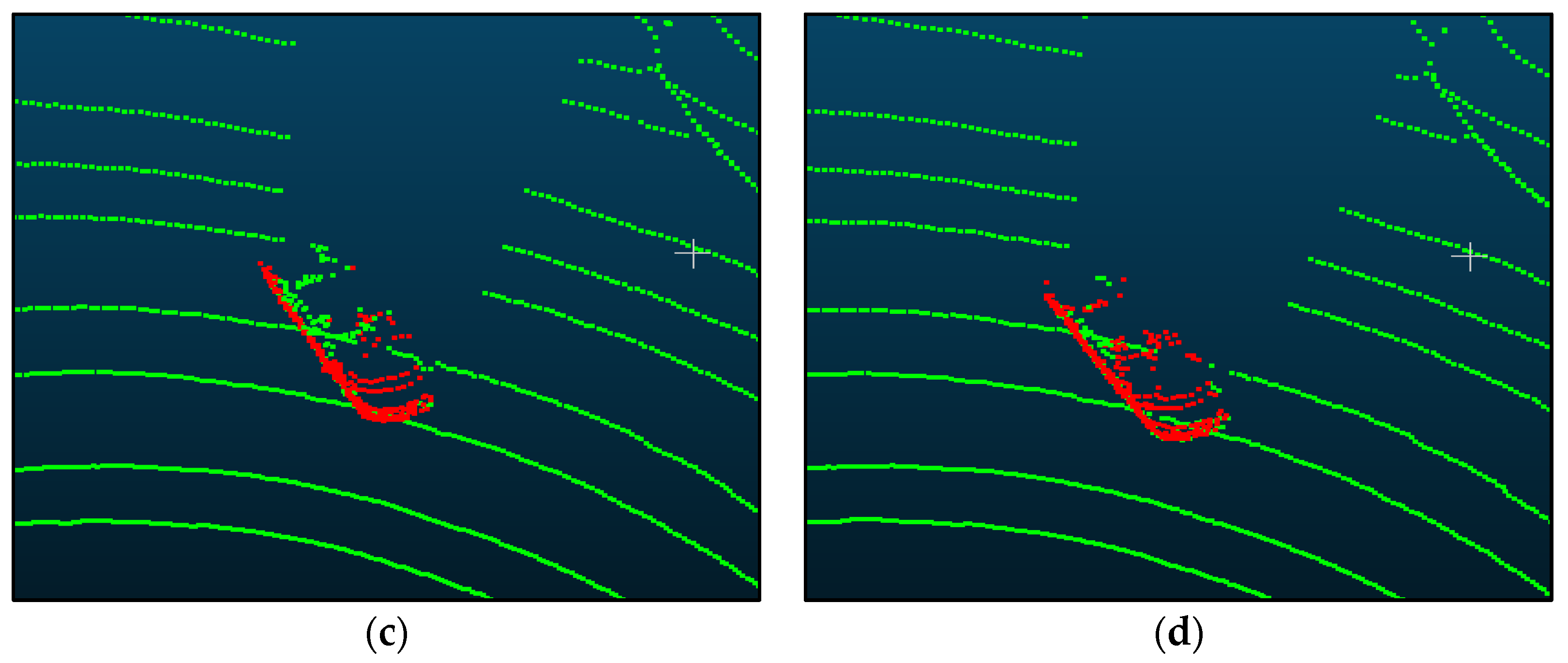

5.2. Performance in the Scenarios of LiDAR Registration Failure

5.3. Ablation Experiment

5.4. Runtime

5.5. Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chen, X.; Li, S.; Mersch, B.; Wiesmann, L.; Gall, J.; Behley, J.; Stachniss, C. Moving Object Segmentation in 3D LiDAR Data: A Learning-Based Approach Exploiting Sequential Data. IEEE Robot. Autom. Lett. 2021, 6, 6529–6536.

2. Lim, H.; Hwang, S.; Myung, H. ERASOR: Egocentric Ratio of Pseudo Occupancy-Based Dynamic Object Removal for Static 3D Point Cloud Map Building. IEEE Robot. Autom. Lett. 2021, 6, 2272–2279.

3. Pomerleau, F.; Krusi, P.; Colas, F.; Furgale, P.; Siegwart, R. Long-Term 3D Map Maintenance in Dynamic Environments. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–5 June 2014; pp. 3712–3719.

4. Schauer, J.; Nuchter, A. The Peopleremover—Removing Dynamic Objects from 3-D Point Cloud Data by Traversing a Voxel Occupancy Grid. IEEE Robot. Autom. Lett. 2018, 3, 1679–1686.

5. Moosmann, F.; Fraichard, T. Motion Estimation from Range Images in Dynamic Outdoor Scenes. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 142–147.

6. Pagad, S.; Agarwal, D.; Narayanan, S.; Rangan, K.; Kim, H.; Yalla, G. Robust Method for Removing Dynamic Objects from Point Clouds. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10765–10771.

7. Fan, T.; Shen, B.; Chen, H.; Zhang, W.; Pan, J. DynamicFilter: An Online Dynamic Objects Removal Framework for Highly Dynamic Environments. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022.

8. Fu, H.; Xue, H.; Xie, G. MapCleaner: Efficiently Removing Moving Objects from Point Cloud Maps in Autonomous Driving Scenarios. Remote Sens. 2022, 14, 4496.

9. Yoon, D.; Tang, T.; Barfoot, T. Mapless Online Detection of Dynamic Objects in 3D Lidar. In Proceedings of the 2019 16th Conference on Computer and Robot Vision (CRV), Kingston, QC, Canada, 29–31 May 2019; pp. 113–120.

10. Kim, G.; Kim, A. Remove, Then Revert: Static Point Cloud Map Construction Using Multiresolution Range Images. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10758–10765.

11. Kim, J.; Woo, J.; Im, S. RVMOS: Range-View Moving Object Segmentation Leveraged by Semantic and Motion Features. IEEE Robot. Autom. Lett. 2022, 7, 8044–8051.

12. Mersch, B.; Chen, X.; Vizzo, I.; Nunes, L.; Behley, J.; Stachniss, C. Receding Moving Object Segmentation in 3D LiDAR Data Using Sparse 4D Convolutions. IEEE Robot. Autom. Lett. 2022, 7, 7503–7510.

13. Pfreundschuh, P.; Hendrikx, H.F.C.; Reijgwart, V.; Dube, R.; Siegwart, R.; Cramariuc, A. Dynamic Object Aware LiDAR SLAM Based on Automatic Generation of Training Data. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 11641–11647.

14. Kreutz, T.; Muhlhauser, M.; Guinea, A.S. Unsupervised 4D LiDAR Moving Object Segmentation in Stationary Settings with Multivariate Occupancy Time Series. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–7 January 2023; pp. 1644–1653.

15. Sun, J.; Dai, Y.; Zhang, X.; Xu, J.; Ai, R.; Gu, W.; Chen, X. Efficient Spatial-Temporal Information Fusion for LiDAR-Based 3D Moving Object Segmentation. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 11456–11463.

16. Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep Learning for 3D Point Clouds: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4338–4364.

17. Wang, Z.; Li, S.; Howard-Jenkins, H.; Prisacariu, V.; Chen, M. FlowNet3D++: Geometric Losses for Deep Scene Flow Estimation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass, CO, USA, 3–8 January 2020; pp. 91–98.

18. Liu, X.; Qi, C.R.; Guibas, L.J. FlowNet3D: Learning Scene Flow in 3D Point Clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 529–537.

19. Baur, S.A.; Emmerichs, D.J.; Moosmann, F.; Pinggera, P.; Ommer, B.; Geiger, A. SLIM: Self-Supervised LiDAR Scene Flow and Motion Segmentation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 13106–13116.

20. Shan, T.; Englot, B. LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

21. Dewan, A.; Caselitz, T.; Tipaldi, G.D.; Burgard, W. Rigid Scene Flow for 3D LiDAR Scans. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 1765–1770.

22. Noorwali, S. Range Flow: New Algorithm Design and Quantitative and Qualitative Analysis. Ph.D. Thesis, The University of Western Ontario, London, ON, Canada, 2020.

23. Ahmad, N.; Ghazilla, R.A.R.; Khairi, N.M.; Kasi, V. Reviews on Various Inertial Measurement Unit (IMU) Sensor Applications. IJSPS 2013, 1, 256–262.

24. Sun, R.; Wang, J.; Cheng, Q.; Mao, Y.; Ochieng, W.Y. A New IMU-Aided Multiple GNSS Fault Detection and Exclusion Algorithm for Integrated Navigation in Urban Environments. GPS Solut. 2021, 25, 147.

25. Sukkarieh, S.; Nebot, E.M.; Durrant-Whyte, H.F. A High Integrity IMU/GPS Navigation Loop for Autonomous Land Vehicle Applications. IEEE Trans. Robot. Autom. 1999, 15, 572–578.

26. Bruhn, A.; Weickert, J.; Schnörr, C. Lucas/Kanade Meets Horn/Schunck: Combining Local and Global Optic Flow Methods. Int. J. Comput. Vis. 2005, 61, 211–231.

27. Cui, J.; Zhang, F.; Feng, D.; Li, C.; Li, F.; Tian, Q. An Improved SLAM Based on RK-VIF: Vision and Inertial Information Fusion via Runge-Kutta Method. Def. Technol. 2023, 21, 133–146.

28. Bogoslavskyi, I.; Stachniss, C. Efficient Online Segmentation for Sparse 3D Laser Scans. PFG 2017, 85, 41–52.

29. Velodyne. Velodyne VLP-32C User Manual. Available online: http://www.velodynelidar.com (accessed on 20 May 2023).

30. Spies, H.; Jähne, B.; Barron, J.L. Range Flow Estimation. Comput. Vis. Image Underst. 2002, 85, 209–231.

31. Jaimez, M.; Monroy, J.G.; Gonzalez-Jimenez, J. Planar Odometry from a Radial Laser Scanner. A Range Flow-Based Approach. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 4479–4485.

32. Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9296–9306.

| Methods | SemanticKITTI mIoU (%) | City1 mIoU (%) | City2 mIoU (%) | Average mIoU (%) |
|---|---|---|---|---|
| Peopleremover [4] | 41.2 | 22.3 | 33.1 | 32.2 |
| Removert [10] | 47.2 | 40.5 | 41.9 | 43.2 |
| Our method | 48.1 | 44.2 | 45.0 | 45.8 |
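The Average column above is consistent with an unweighted mean of the three per-dataset mIoU values, rounded to one decimal place. The short check below reproduces it from the table; the variable names are illustrative, and the assumption that each dataset is weighted equally is inferred from the numbers rather than stated in the table.

```python
# Per-dataset mIoU (%) copied from the table: SemanticKITTI, City1, City2.
miou_by_method = {
    "Peopleremover": (41.2, 22.3, 33.1),
    "Removert": (47.2, 40.5, 41.9),
    "Our method": (48.1, 44.2, 45.0),
}

# Unweighted mean over the three datasets, rounded like the table.
average_miou = {
    method: round(sum(values) / len(values), 1)
    for method, values in miou_by_method.items()
}

for method, avg in average_miou.items():
    print(f"{method}: {avg:.1f}")
```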

| Methods | mIoU (%) |
|---|---|
| Full pipeline | 44.2 |
| w/o weight adjustment | 38.7 |
| w/o ground constraint | 42.0 |
| w/o region growth | 43.6 |
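To read the ablation table as contributions, one can subtract each ablated score from the full-pipeline score. The sketch below does that arithmetic on the table values; the variable names are illustrative, and treating the difference as the contribution of a component is a simplification that ignores interactions between components.

```python
# mIoU (%) values copied from the ablation table.
full_pipeline = 44.2
without_component = {
    "weight adjustment": 38.7,
    "ground constraint": 42.0,
    "region growth": 43.6,
}

# Drop in mIoU (percentage points) when each component is removed.
miou_drop = {
    name: round(full_pipeline - score, 1)
    for name, score in without_component.items()
}

# Components ordered from largest to smallest drop.
ranked = sorted(miou_drop, key=miou_drop.get, reverse=True)
```

On these numbers, removing the weight adjustment costs the most (5.5 points), followed by the ground constraint (2.2) and region growth (0.6).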

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cai, Y.; Li, B.; Zhou, J.; Zhang, H.; Cao, Y. Removing Moving Objects without Registration from 3D LiDAR Data Using Range Flow Coupled with IMU Measurements. Remote Sens. 2023, 15, 3390. https://doi.org/10.3390/rs15133390
