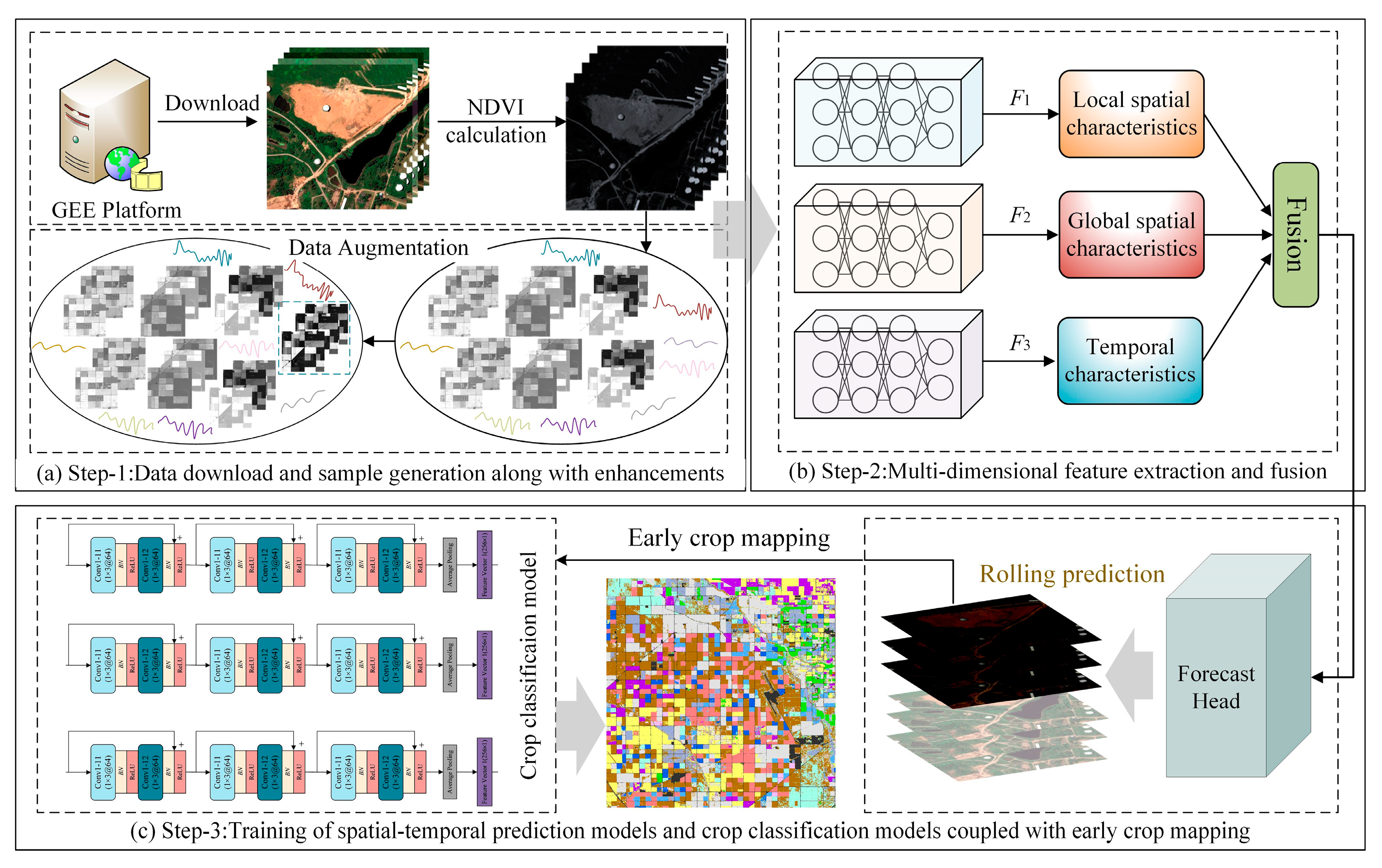

2.2. Local–Global–Temporal Multi-Modal Feature Exploration and Fusion

To effectively extract robust spatial–temporal evolution features of crops from remote sensing data, we designed an integrated multi-modal feature extraction network architecture.

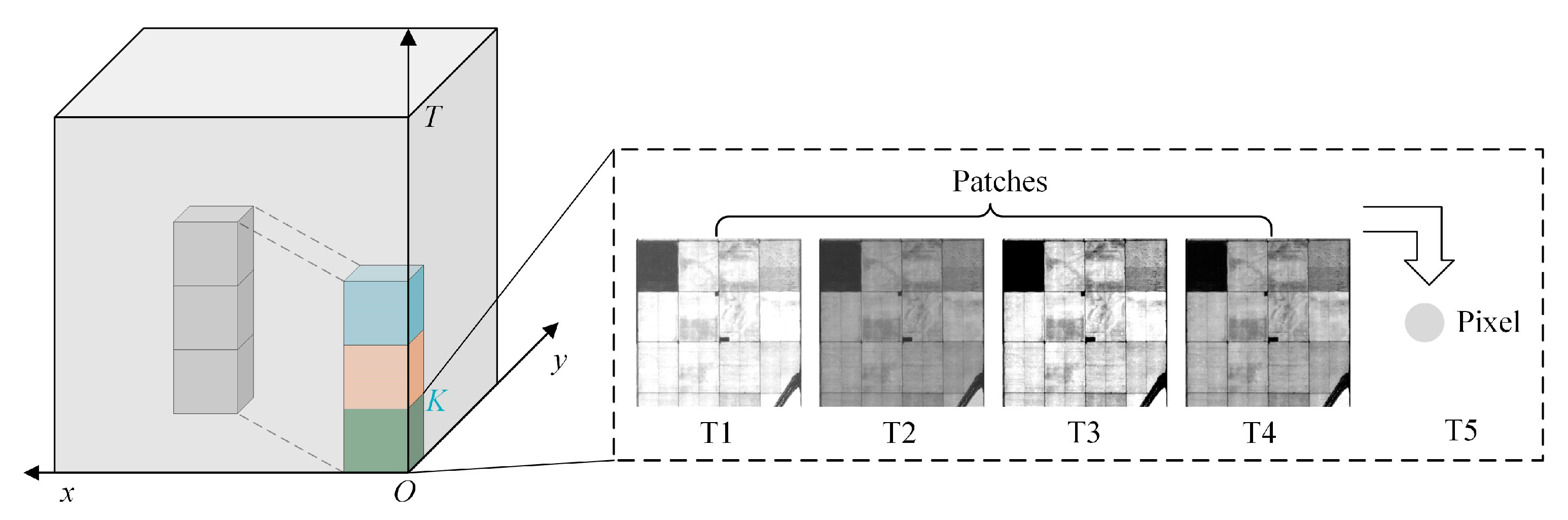

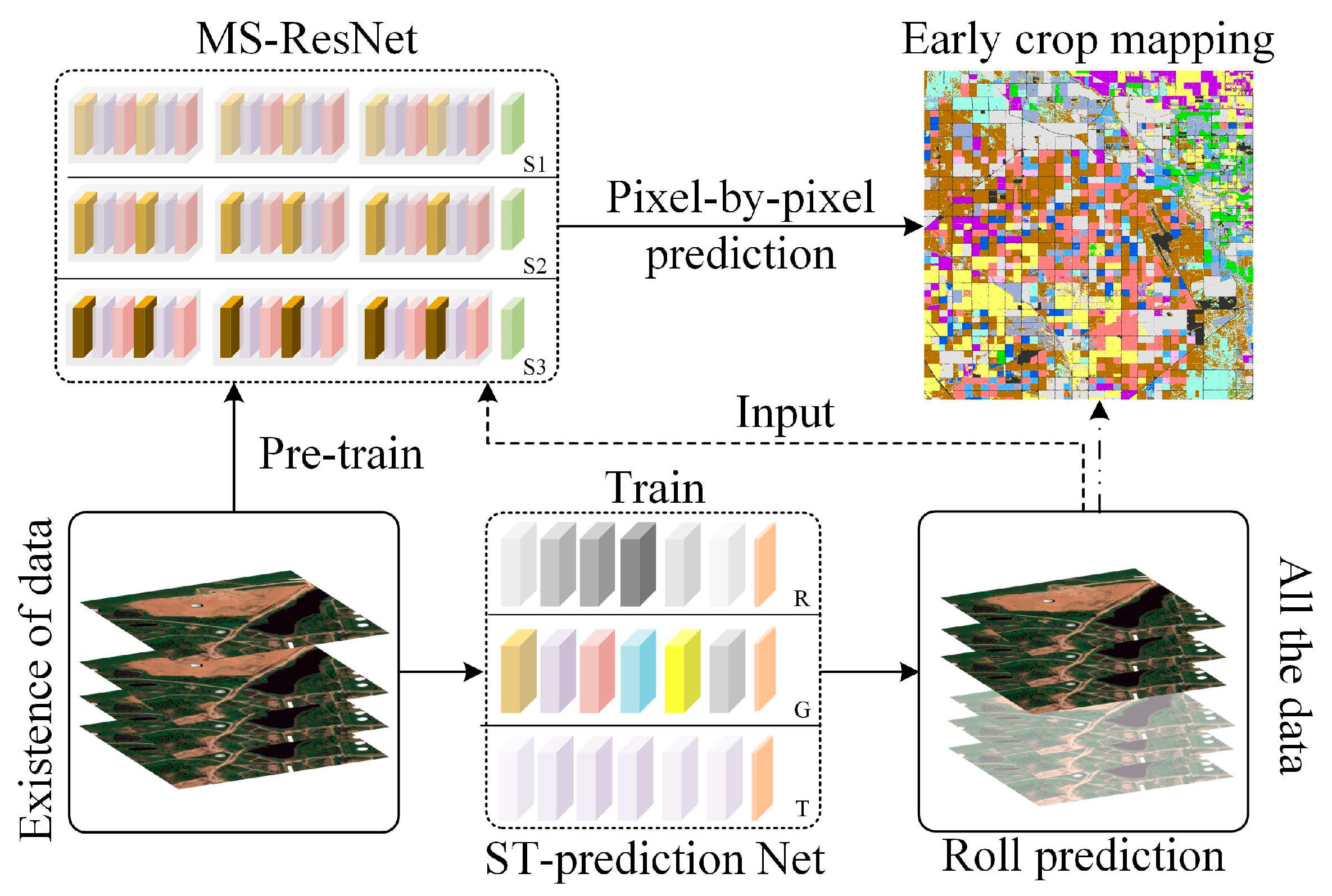

Figure 2 illustrates the proposed network architecture. The model takes randomly cropped spatial–temporal patches as input samples and exploits the recursive characteristics of remote sensing time series to generate future data. By combining local, global, and temporal features, the model explores the spatial–temporal evolution patterns of crops from complementary perspectives, which supports a more faithful simulation of future observations. Specifically, when an input sample $x \in \mathbb{R}^{H \times W \times T}$ (where $H$ and $W$ represent the height and width of the input patch, and $T$ represents the length of the temporal dimension) enters the feature extraction network, separate backbone networks extract the features of each modality.

For local-scale features, the model uses a shallow depthwise separable convolution (DSC) network as the feature extractor. Compared with commonly used backbones [34,35], the DSC network requires fewer trainable parameters while still extracting features efficiently. This stage consists of two steps: convolution over time (DT) and convolution over pixels (PC). In the DT step, $n$ ($n = T$) two-dimensional $3 \times 3$ convolution kernels slide over the different temporal nodes, one kernel per node, yielding a feature $F_{DT}$ with the same dimensions as the input sample:

$$F_{DT} = \mathrm{DT}(x), \quad F_{DT} \in \mathbb{R}^{H \times W \times T}$$

Then, $C$ convolution kernels of size $1 \times 1 \times T$ aggregate the features of each pixel of $F_{DT}$, resulting in the local-scale feature $F_1$:

$$F_1 = \mathrm{PC}(F_{DT}) = \mathrm{PC}(\mathrm{DT}(x)), \quad F_1 \in \mathbb{R}^{H \times W \times C}$$

where $\mathrm{DT}(\cdot)$ and $\mathrm{PC}(\cdot)$ represent the two convolution operations. This branch extracts local texture features from the sample, and the depth of its output feature is set to $C$.
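The two DSC steps can be illustrated with a minimal NumPy sketch. It assumes zero "same" padding (so that the DT output keeps the $H \times W \times T$ shape stated above), omits biases and activations, and uses random weights and hypothetical sizes; the function names are illustrative and not taken from the authors' code. The last function compares the weight count of a standard $3 \times 3$ convolution producing $C$ channels with that of the DT + PC pair, which is where the parameter savings come from.

```python
import numpy as np


def depthwise_conv_over_time(x, kernels):
    """DT step: one 3x3 kernel per temporal node (n = T), zero 'same' padding.

    x: (H, W, T) patch; kernels: (T, 3, 3). Returns an (H, W, T) feature.
    """
    H, W, T = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((H, W, T))
    for t in range(T):  # each temporal slice is filtered only by its own kernel
        for dy in range(3):
            for dx in range(3):
                out[:, :, t] += kernels[t, dy, dx] * padded[dy:dy + H, dx:dx + W, t]
    return out


def pointwise_conv_over_pixels(f, weights):
    """PC step: C kernels of size 1x1xT aggregate the T values of each pixel.

    f: (H, W, T); weights: (T, C). Returns an (H, W, C) feature.
    """
    return f @ weights  # matrix product over the last (temporal) axis


def count_weights(T, C, k=3):
    """Weights (biases ignored) of a standard k x k conv vs. the DT + PC pair."""
    return k * k * T * C, k * k * T + T * C


rng = np.random.default_rng(0)
H, W, T, C = 5, 5, 12, 16  # hypothetical patch size, series length and depth C
x = rng.normal(size=(H, W, T))
F_dt = depthwise_conv_over_time(x, rng.normal(size=(T, 3, 3)))
F1 = pointwise_conv_over_pixels(F_dt, rng.normal(size=(T, C)))
print(F_dt.shape, F1.shape)  # (5, 5, 12) (5, 5, 16)
print(count_weights(T, C))   # (1728, 300)
```

With the hypothetical sizes $T = 12$ and $C = 16$, the separable pair needs $9 \cdot 12 + 12 \cdot 16 = 300$ weights, versus $9 \cdot 12 \cdot 16 = 1728$ for a standard convolution.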

To extract global-scale spatial features, we use a Transformer as the feature extraction backbone. The input sample $x$ is first reshaped and flattened into a two-dimensional matrix $x_p$, and a two-dimensional convolution layer then projects its feature dimension to $D$, resulting in the embedded feature $x_e$. To preserve the order of the sequence within the sample while keeping the computational cost low, the backbone retains the original 1-D position embedding module: a fixed position vector $p$ is added to $x_e$ to obtain a feature $z_0 = x_e + p$ of the same dimension. Finally, $z_0$ is fed into the stacked encoders, where the attention mechanism produces the corresponding weights. As shown in Figure 2, each encoder layer consists of a Multi-Head Self-Attention (MHSA) module, an MLP module, and three Layer Norm (LN) layers, and the MLP module consists of two fully connected layers with a GELU activation between them. The entire process of obtaining the final global spatial feature representation $F_2$ from $z_0$ through the encoder can be expressed as follows:

$$
\begin{aligned}
z'_l &= \mathrm{MHSA}\left(\mathrm{LN}(z_{l-1})\right) + z_{l-1}, \\
z_l &= \mathrm{MLP}\left(\mathrm{LN}(z'_l)\right) + z'_l, \\
F_2 &= \mathrm{LN}(z_L), \\
\mathrm{MHSA}(Q, K, V) &= \mathrm{Concat}(H_1, \dots, H_h)\, W^{O}, \\
H_i &= \mathrm{Attention}\left(Q W_i^{Q}, K W_i^{K}, V W_i^{V}\right) = \mathrm{softmax}\left(\frac{Q W_i^{Q} \left(K W_i^{K}\right)^{\top}}{\sqrt{d_k}}\right) V W_i^{V}
\end{aligned}
$$

In this equation, $l = 1, \dots, L$ indexes the encoder layers, $L$ is the number of stacked layers, $h$ is the number of heads, and $d_k$ is the dimension of each head. $Q$, $K$, and $V$ represent the projections of the input feature vectors into different feature spaces, which are used to compute similarities and output vectors. $W_i^{Q}$, $W_i^{K}$, $W_i^{V}$, and $W^{O}$ represent the corresponding projection parameters, while $H_i$ denotes the $i$-th head of the attention mechanism.
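To make the encoder equations concrete, the following NumPy sketch runs a toy forward pass. Several details are assumptions rather than the authors' configuration: each pixel is treated as one token with its $T$ temporal values as features, the 2-D convolution projection is replaced by an equivalent per-token linear map (a $1 \times 1$ convolution), the fixed position vector $p$ uses the sinusoidal encoding, LN has no learnable scale or shift, biases are omitted, GELU uses its tanh approximation, and all weights are random.

```python
import numpy as np


def layer_norm(z, eps=1e-6):
    """LN over the feature axis (learnable scale and shift omitted)."""
    mu = z.mean(axis=-1, keepdims=True)
    return (z - mu) / np.sqrt(z.var(axis=-1, keepdims=True) + eps)


def gelu(z):
    """GELU, tanh approximation."""
    return 0.5 * z * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (z + 0.044715 * z ** 3)))


def softmax(a):
    e = np.exp(a - a.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def mhsa(z, Wq, Wk, Wv, Wo):
    """Multi-head self-attention. Wq/Wk/Wv: (h, D, d_k); Wo: (h * d_k, D)."""
    heads = []
    for i in range(Wq.shape[0]):
        Q, K, V = z @ Wq[i], z @ Wk[i], z @ Wv[i]
        A = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))  # scaled dot-product weights
        heads.append(A @ V)                          # head H_i
    return np.concatenate(heads, axis=-1) @ Wo


def encoder_layer(z, p):
    z = z + mhsa(layer_norm(z), p["Wq"], p["Wk"], p["Wv"], p["Wo"])  # z'_l
    return z + gelu(layer_norm(z) @ p["W1"]) @ p["W2"]                # z_l


def init_layer(rng, D, h, hidden, s=0.1):
    d_k = D // h
    shapes = {"Wq": (h, D, d_k), "Wk": (h, D, d_k), "Wv": (h, D, d_k),
              "Wo": (h * d_k, D), "W1": (D, hidden), "W2": (hidden, D)}
    return {name: rng.normal(0.0, s, shape) for name, shape in shapes.items()}


def sinusoidal_positions(n, D):
    """Fixed 1-D position vectors p (sinusoidal variant; D must be even)."""
    angles = np.arange(n)[:, None] / 10000 ** (np.arange(0, D, 2)[None, :] / D)
    pe = np.zeros((n, D))
    pe[:, 0::2], pe[:, 1::2] = np.sin(angles), np.cos(angles)
    return pe


rng = np.random.default_rng(0)
H, W, T, D, n_heads, L = 5, 5, 12, 32, 4, 2  # hypothetical sizes
x = rng.normal(size=(H, W, T))
x_p = x.reshape(H * W, T)                  # flatten: one token per pixel
x_e = x_p @ rng.normal(0.0, 0.1, (T, D))   # project the feature dimension to D
z = x_e + sinusoidal_positions(H * W, D)   # z_0 = x_e + p
for _ in range(L):
    z = encoder_layer(z, init_layer(rng, D, n_heads, 4 * D))
F2 = layer_norm(z)                         # F_2 = LN(z_L)
print(F2.shape)  # (25, 32)
```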

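Finally, to extract temporal features from the samples, a set of LSTM layers is integrated into the framework. Specifically, the input sample $x$ is passed sequentially through interconnected LSTM cells, allowing the model to iteratively learn the temporal patterns within the data. Within each cell, the flow and propagation of information are controlled by the forget gate ($f_t$), the input gate ($i_t$), and the output gate ($o_t$), while the cell state ($C_t$) captures and stores temporal features. In summary, the process of obtaining the temporal feature $F_3$ with the LSTM feature extraction backbone can be represented by the following equations:

$$
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right), \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right), \\
\tilde{C}_t &= \tanh\left(W_C [h_{t-1}, x_t] + b_C\right), \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t, \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right), \\
h_t &= o_t \odot \tanh(C_t), \qquad F_3 = h_T
\end{aligned}
$$

where $h_t$ denotes the hidden state of the cell at the current time step $t$, $x_t$ is the input at the $t$-th temporal node, $\sigma$ is the sigmoid function, $W$ and $b$ are learnable weights and biases, and $\odot$ denotes element-wise multiplication.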
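The gate equations can be traced with a minimal NumPy LSTM. In this sketch, each input $x_t$ is the flattened $H \times W$ slice of the patch at time $t$, the four gate weight matrices are stacked into one array, the sizes and weights are hypothetical, and taking the last hidden state $h_T$ as $F_3$ is an assumption.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def lstm_forward(xs, W, b, hidden):
    """Run one LSTM layer over xs (T, d_in); return all hidden states (T, hidden).

    W: (4 * hidden, hidden + d_in) stacks the forget, input, candidate and
    output weights; b: (4 * hidden,) stacks the matching biases.
    """
    h, c = np.zeros(hidden), np.zeros(hidden)
    states = []
    for x_t in xs:
        a = W @ np.concatenate([h, x_t]) + b          # W [h_{t-1}, x_t] + b
        f = sigmoid(a[:hidden])                        # forget gate f_t
        i = sigmoid(a[hidden:2 * hidden])              # input gate i_t
        c_tilde = np.tanh(a[2 * hidden:3 * hidden])    # candidate state
        o = sigmoid(a[3 * hidden:])                    # output gate o_t
        c = f * c + i * c_tilde                        # cell state C_t
        h = o * np.tanh(c)                             # hidden state h_t
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
height, width, T, hidden = 5, 5, 12, 16  # hypothetical sizes
x = rng.normal(size=(height, width, T))
xs = x.reshape(height * width, T).T      # row t is the flattened slice x_t
W = rng.normal(0.0, 0.1, (4 * hidden, hidden + height * width))
b = np.zeros(4 * hidden)
hs = lstm_forward(xs, W, b, hidden)
F3 = hs[-1]                              # h_T taken as F_3 (assumption)
print(hs.shape, F3.shape)  # (12, 16) (16,)
```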

Once the local–global–temporal features are extracted, we concatenate all resulting feature vectors into a fused feature vector. To strengthen the coupling between the fused features, we design a simple feature aggregation module that applies a one-dimensional convolution followed by a ReLU activation to the concatenated feature, resulting in the final fused feature $U$, as shown in Equation (7):

$$U = \mathrm{ReLU}\left(\mathrm{Conv1D}\left(\mathrm{Concat}(F_1, F_2, F_3)\right)\right) \tag{7}$$
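Equation (7) can be sketched in NumPy as below. The feature shapes are hypothetical outputs of the three branches, and flattening each feature before concatenation, a single input channel, a kernel size of 3, four output channels, and no padding are all assumptions made for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv1d(seq, kernels, bias):
    """Single-input-channel 1-D convolution without padding.

    seq: (n,); kernels: (c_out, k); bias: (c_out,). Returns (c_out, n - k + 1).
    """
    windows = sliding_window_view(seq, kernels.shape[1])  # (n - k + 1, k)
    return kernels @ windows.T + bias[:, None]


def fuse(F1, F2, F3, kernels, bias):
    """U = ReLU(Conv1D(Concat(F1, F2, F3))), with each feature flattened first."""
    fused = np.concatenate([F1.ravel(), F2.ravel(), F3.ravel()])
    return np.maximum(conv1d(fused, kernels, bias), 0.0)


rng = np.random.default_rng(0)
F1 = rng.normal(size=(5, 5, 16))   # local feature (hypothetical shape)
F2 = rng.normal(size=(25, 32))     # global feature (hypothetical shape)
F3 = rng.normal(size=(16,))        # temporal feature (hypothetical shape)
U = fuse(F1, F2, F3, rng.normal(size=(4, 3)), np.zeros(4))
print(U.shape)  # (4, 1214)
```

Concatenating $400 + 800 + 16 = 1216$ values and applying a size-3 kernel without padding leaves 1214 positions per output channel.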

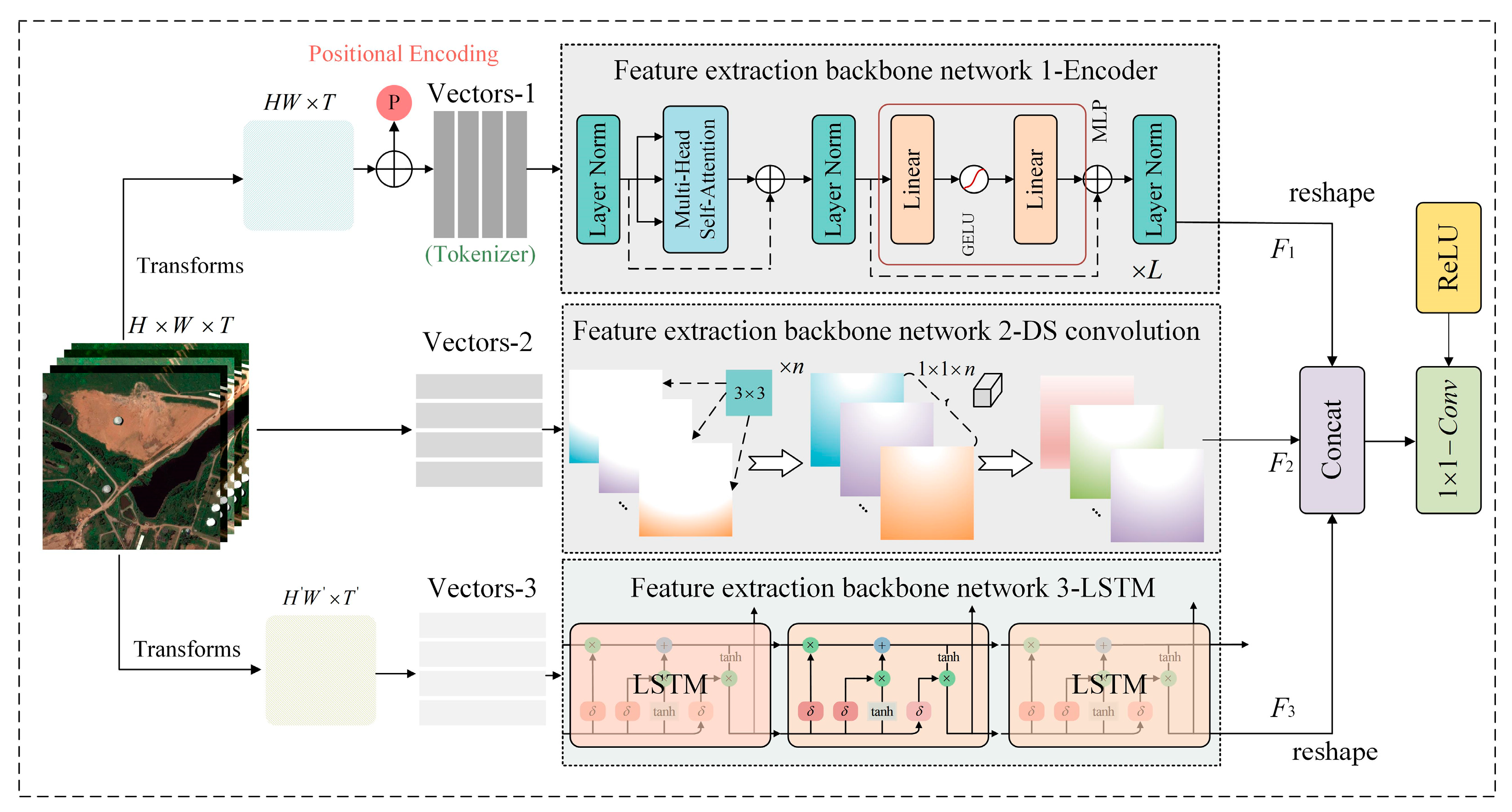

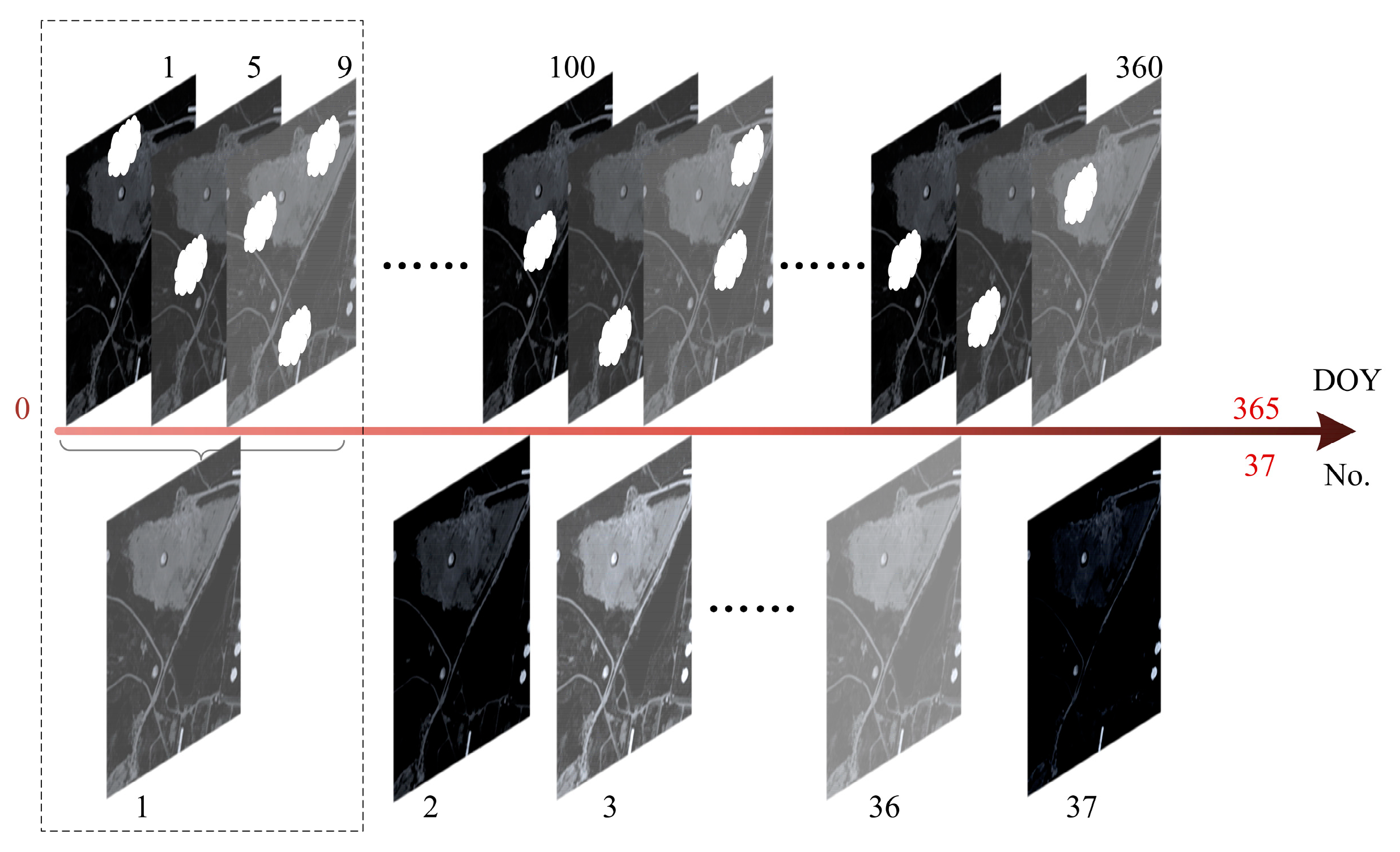

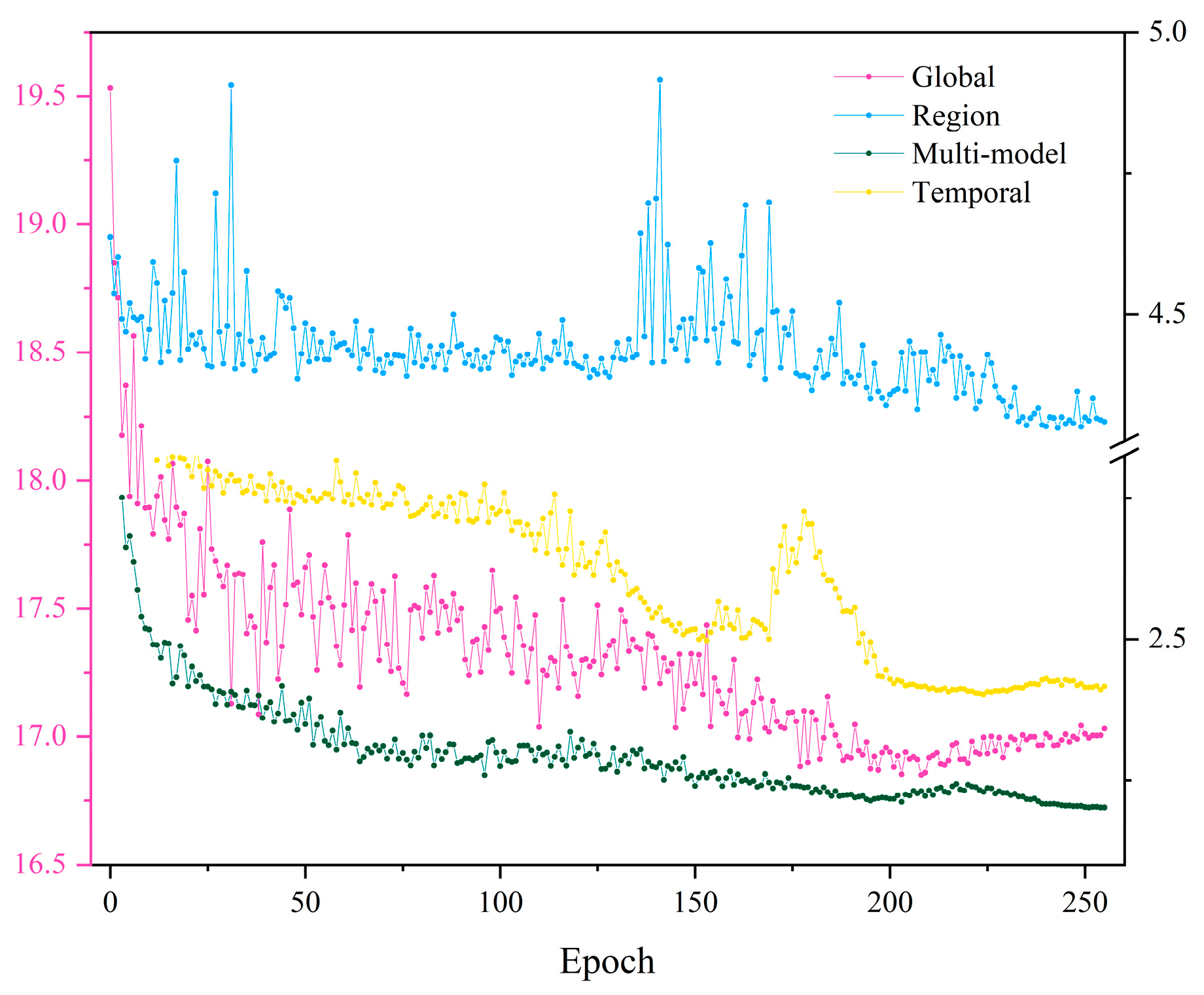

Rather than performing simple time-series prediction, we apply a rolling training strategy along the temporal axis with representative samples, so that the model learns robust spatial–temporal features that characterize complex remote sensing scenes. The training process is shown in Figure 3.

Assume that an input sample can be represented as $x = \{x_1, x_2, \dots, x_T\}$, where $x_i$ denotes the two-dimensional spatial data at the $i$-th time node. We set a fixed temporal length $K$, so that each sliding input contains the two-dimensional spatial data of $K$ time nodes, denoted as $\{x_1, x_2, \dots, x_K\}$. At the same time, the center pixel value of the data at node $K+1$ serves as the target label for the model. Once the current training step is finished, the window slides forward by one time node within the batch of samples to obtain the second training sample, $\{x_2, x_3, \dots, x_{K+1}\}$, with the center pixel value of node $K+2$ as the new target label. Training the model in this way not only enables rolling prediction of future data over an arbitrary number of steps, but also frees the spatial–temporal prediction model from the constraint of the initial length of the input time series.
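The sample construction can be sketched in plain Python as a generator over sliding windows. The frames are nested lists standing in for the two-dimensional data $x_i$, `rolling_samples` is a hypothetical helper name, and the toy frames are filled with their time index so the targets are easy to check. Under this scheme, a series of length $T$ yields $T - K$ window/target pairs.

```python
def rolling_samples(series, K):
    """Yield (input window, target) pairs by sliding a length-K window in time.

    series: list of T two-dimensional frames (lists of rows), x_1 ... x_T.
    The target for window {x_j, ..., x_{j+K-1}} is the center pixel of x_{j+K}.
    """
    for j in range(len(series) - K):
        window = series[j:j + K]
        nxt = series[j + K]
        target = nxt[len(nxt) // 2][len(nxt[0]) // 2]  # center pixel
        yield window, target


# Toy 3x3 frames whose values all equal the (1-based) time index; T = 6.
frames = [[[t] * 3 for _ in range(3)] for t in range(1, 7)]
pairs = list(rolling_samples(frames, K=3))
print(len(pairs))                        # 3
print([target for _, target in pairs])   # [4, 5, 6]
```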

Finally, the model feeds the aggregated feature $U$ into a fully connected layer to predict the corresponding future pixel values. The root mean square error (RMSE) is used as the loss function to optimize and guide the training process. The RMSE is calculated as in Equation (8):

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{y}_i - y_i\right)^2} \tag{8}$$

where $\hat{y}_i$ and $y_i$ represent the predicted and true values, respectively, and $N$ denotes the number of samples.
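As a quick check of Equation (8), the sketch below computes the RMSE in plain Python; the numbers are toy values, not results from this study. For predictions $(3, -3)$ against true values $(0, 0)$, both squared errors are 9, their mean is 9, and the RMSE is 3.

```python
import math


def rmse(y_pred, y_true):
    """Root mean square error over N paired values, as in Equation (8)."""
    if not y_true or len(y_pred) != len(y_true):
        raise ValueError("inputs must be non-empty and have equal length")
    n = len(y_true)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(y_pred, y_true)) / n)


print(rmse([3.0, -3.0], [0.0, 0.0]))  # 3.0
```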

2.3. Spatial–Temporal Prediction for Early Crop Mapping

The spatial–temporal prediction module described in the previous section is used to obtain future remote sensing data during the early growth stage of crops. Compared with existing early crop mapping methods [17,18,19], the STPM framework forecasts crop growth patterns step by step from the currently available remote sensing data and performs early crop type mapping at the same time. The corresponding processing flow is shown in Figure 4.
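The step-by-step forecasting followed by classification can be illustrated with a plain Python sketch. It simplifies heavily: the series holds one scalar value per time node instead of a spatial–temporal patch, the predictor is a hypothetical linear-trend extrapolator standing in for the trained prediction network, and the classifier is a hypothetical threshold rule standing in for the crop identification model. All function names are illustrative.

```python
from typing import Callable, List, Sequence


def rolling_forecast(observed: Sequence[float], K: int, steps: int,
                     predict: Callable[[Sequence[float]], float]) -> List[float]:
    """Forecast `steps` future values, each from the latest K values.

    Every prediction is appended to the series and reused as input for the
    next step, so the horizon is not bounded by the observed length.
    """
    series = list(observed)
    if len(series) < K:
        raise ValueError("need at least K observed values")
    forecasts = []
    for _ in range(steps):
        nxt = predict(series[-K:])
        forecasts.append(nxt)
        series.append(nxt)
    return forecasts


def early_map(observed, K, steps, predict, classify):
    """Classify the observed series extended by its rolling forecast."""
    return classify(list(observed) + rolling_forecast(observed, K, steps, predict))


def linear_trend(window):
    """Hypothetical predictor: extrapolate the mean slope of the window."""
    return window[-1] + (window[-1] - window[0]) / (len(window) - 1)


def peak_rule(series):
    """Hypothetical classifier: label by the seasonal peak value."""
    return "crop A" if max(series) > 0.75 else "crop B"


values = [0.20, 0.30, 0.40, 0.50]  # toy observed values for one pixel
print(rolling_forecast(values, K=3, steps=3, predict=linear_trend))
print(peak_rule(values))  # crop B
print(early_map(values, K=3, steps=3, predict=linear_trend, classify=peak_rule))  # crop A
```

With these toy values, classifying only the four observed values gives "crop B", whereas the series extended by three forecasts (approximately 0.6, 0.7, and 0.8) is classified as "crop A". This only demonstrates the data flow, not the behavior of the actual models.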

Because the crop type identification module and the spatial–temporal prediction module are trained in inherently different ways, a universal and transferable crop identification model must be pre-trained. To account for the inter-annual variability of crop growth and obtain a robust classifier, we trained the crop identification model on a large number of time-series crop samples from past years. Among existing time-series classification models, the Multi-Scale 1D ResNet (MS-ResNet) [36] was chosen as the base model for the crop identification module because of its small number of network parameters, superior crop recognition ability, and transferability. MS-ResNet extracts features at different temporal scales from the input vector using multiple one-dimensional convolution kernels of different sizes, and then fuses all scale features for the subsequent crop classification task. Assuming that the kernel sizes of the multi-scale convolution layer are $k_1, k_2, \dots, k_m$ and the corresponding strides are $s_1, s_2, \dots, s_m$, the process of extracting the multi-scale features $F_{ms}$ can be represented as follows:

$$
\begin{aligned}
F_i &= \mathrm{ReLU}\left(\mathrm{BN}\left(\mathrm{Conv1D}_{k_i, s_i}(x)\right)\right), \quad i = 1, \dots, m, \\
F_{ms} &= \mathrm{Concat}(F_1, F_2, \dots, F_m)
\end{aligned}
$$

Throughout model training, the cross-entropy loss was adopted, as formulated in Equation (11):

$$\mathcal{L}_{CE} = -\sum_{c=1}^{M} y_c \log\left(\hat{y}_c\right) \tag{11}$$

Here, ReLU represents the activation function, BN stands for a Batch Normalization layer, $M$ is the number of crop classes, and $y_c$ and $\hat{y}_c$ denote the true crop label and the predicted result of the model for class $c$, respectively.
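A minimal NumPy sketch of the multi-scale feature extraction and the cross-entropy loss follows. The kernel sizes, strides, channel counts, batch size, and number of classes are hypothetical; BN is computed from batch statistics without learnable parameters; and global average pooling of each branch before concatenation is an assumption, since branches with different kernel sizes and strides produce sequences of different lengths. The residual blocks of MS-ResNet are not reproduced.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv1d(x, w, stride):
    """Valid 1-D convolution. x: (B, c_in, n); w: (c_out, c_in, k) -> (B, c_out, m)."""
    k = w.shape[-1]
    win = sliding_window_view(x, k, axis=2)[:, :, ::stride, :]  # (B, c_in, m, k)
    return np.einsum("bcmk,ock->bom", win, w)


def batch_norm(a, eps=1e-5):
    """Per-channel normalization over batch and time (learnable affine omitted)."""
    mu = a.mean(axis=(0, 2), keepdims=True)
    var = a.var(axis=(0, 2), keepdims=True)
    return (a - mu) / np.sqrt(var + eps)


def multi_scale_features(x, weights, strides):
    """F_i = ReLU(BN(Conv1D_{k_i, s_i}(x))), pooled over time and concatenated."""
    pooled = [np.maximum(batch_norm(conv1d(x, w, s)), 0.0).mean(axis=2)
              for w, s in zip(weights, strides)]
    return np.concatenate(pooled, axis=1)  # (B, sum of branch channels)


def cross_entropy(logits, labels):
    """Mean of -log softmax(logits)[y] over the batch."""
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


rng = np.random.default_rng(0)
B, bands, T, n_classes = 8, 4, 24, 3          # hypothetical sizes
kernel_sizes, strides = (3, 5, 7), (1, 1, 2)  # hypothetical k_i and s_i
x = rng.normal(size=(B, bands, T))
weights = [rng.normal(0.0, 0.1, (16, bands, k)) for k in kernel_sizes]
feats = multi_scale_features(x, weights, strides)  # (8, 48)
logits = feats @ rng.normal(0.0, 0.1, (feats.shape[1], n_classes))
labels = rng.integers(0, n_classes, size=B)
print(feats.shape, cross_entropy(logits, labels))
```

With all-zero logits over three classes, the loss equals $\ln 3$, which is a convenient sanity check for the implementation.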

Finally, let $\{x_1, x_2, \dots, x_t\}$ denote the time-series remote sensing data available in the current year and $\{\hat{x}_{t+1}, \hat{x}_{t+2}, \dots\}$ denote the future time-series data to be predicted. The final mapping result obtained through the proposed early crop mapping framework can then be represented as follows: