Abstract

Nonnegative matrix factorization (NMF) and its numerous variants have been extensively studied and used in hyperspectral unmixing (HU). With the aid of a designed deep structure, deep NMF-based methods demonstrate advantages in exploring the hierarchical features of complex data. However, noise corruption, which commonly exists in hyperspectral data, severely degrades the unmixing performance of deep NMF-based methods. In this study, we propose an ℓ2,1 norm-based robust deep nonnegative matrix factorization (ℓ2,1-RDNMF) for HU, which incorporates an ℓ2,1 norm into both stages of the deep structure to achieve robustness. The multiplicative update rules of ℓ2,1-RDNMF are derived. The effectiveness of the presented method is verified in experiments using both synthetic and real data.

1. Introduction

Hyperspectral unmixing (HU) is a key means of making full use of remotely sensed hyperspectral image (HSI) data; it aims to separate mixed pixels into a set of constituent endmembers and the corresponding abundance maps. The classical linear mixing model (LMM) has been widely employed in HU because of its practicality and clear physical meaning. Owing to the nonnegativity constraints in the LMM, NMF is well suited to extracting intuitive and interpretable representations and has been commonly applied to HU problems [1]. However, owing to its nonconvexity, NMF is prone to getting stuck in local minima; it is also sensitive to noisy samples [2].

To constrain the solution space and produce better results, a branch of NMF-based methods with additional constraints has been proposed [3,4,5,6]. Robust NMF methods have also been investigated to address the problem of noise corruption [7,8,9]. The ℓ2,1 norm, first introduced in [10], has been successfully used in a number of applications to deal with noise and outliers [11,12,13,14]. By integrating an ℓ2,1 norm, Ma et al. [15] presented a robust sparse HU method (RSU) with an ℓ2,1 norm-based loss function that achieves robustness to noise and outliers. In [16], a robust version of NMF employing an ℓ2,1 norm in the objective function (ℓ2,1-RNMF) is presented, which handles outliers and noise more effectively than conventional NMF. As a nonlinear similarity metric, correntropy has also been introduced to formulate robust NMF methods. To mitigate the corruption caused by noisy bands, Wang et al. present a correntropy-based NMF (CENMF) that assigns band weights adaptively [17]. Considering that noise intensities vary across pixels, Li et al. further utilize the correntropy metric to propose a robust spectral–spatial sparsity-regularized NMF (CSsRS-NMF) [18]. In [19,20], a truncation operation is used in the cost function to promote robustness to outliers. Peng et al. propose a self-paced NMF (SpNMF) that uses a self-paced regularizer to learn adaptive weights and mitigate the effect of noisy atoms (i.e., row-wise bands or column-wise pixels) [21].

Despite their broad and successful application in HU, NMF-based methods still leave room for improvement in unmixing performance. In general, most existing NMF-based methods perform a single-layer decomposition, which limits their HU performance on complicated and highly mixed hyperspectral data. With the development and enormous success of neural networks [22,23], researchers have increasingly focused on developing NMF-based approaches with multi-layer structures. Rajabi et al. [24] apply multilayer NMF (MLNMF) [25] to hyperspectral unmixing, decomposing the data matrix and the resulting coefficient matrices sequentially. Nevertheless, MLNMF only involves a series of successive single-layer NMFs. In the multi-layer structure of MLNMF, the later layers provide no feedback to the earlier layers [26], which means that MLNMF does not fully exploit the hierarchical power of the deep scheme. Deep NMF (DNMF) [27] makes a key advance by introducing a global objective function and a fine-tuning step that propagates information in the reverse direction. In this manner, the overall reconstruction error of the model can be reduced, and better factorization results can be obtained. In the recent literature, several variants of DNMF have been studied to further improve the quality of solutions [26,28,29]. In these variants, various regularizations and constraints are integrated to restrict the solution space and lead to better results. Deep orthogonal NMF [26] is a variant that imposes an orthogonality constraint on one of its factor matrices. Fang et al. [30] proposed a sparsity-constrained deep NMF method for HU that applies a sparsity regularizer to the abundance matrix of each layer. In [31], a sparsity regularizer and a total variation (TV) regularizer are introduced to form a sparsity-constrained deep NMF with total variation (SDNMF-TV) for HU.

In general, most deep NMFs and their variants are based on an objective function given by the squared Euclidean distance, which is sensitive to noise. In real applications, HSIs are commonly contaminated by various types of noise, including Gaussian noise, dead pixels, and impulse noise, which dramatically degrade unmixing performance [32]. To address this problem, metrics with robust properties, such as the ℓ2,1 norm [10] and the correntropy-induced metric (CIM) [33], can be considered to enhance the robustness of deep NMF-based methods.

This study introduces an ℓ2,1 norm-based robust deep nonnegative matrix factorization (ℓ2,1-RDNMF) method for HU. To strengthen the robustness of DNMF when exploring hierarchical features, an ℓ2,1 norm is incorporated into the designed deep NMF structure. The proposed ℓ2,1-RDNMF consists of a pretraining stage and a fine-tuning stage, both of which adopt an ℓ2,1 norm in the objective function. The pretraining stage performs a succession of forward decompositions to pretrain the layer-wise factors. The fine-tuning stage then refines these factors by optimizing a global ℓ2,1 norm-based objective function. The superiority of our method is demonstrated through experimental results on synthetic and real data. The major contributions of our article are summarized as follows: (1) We first propose a robust deep NMF unmixing model based on the ℓ2,1 norm, which consists of two stages: a pretraining stage and a fine-tuning stage. (2) We derive the multiplicative update rules for both stages of the proposed model and explain the robustness mechanism of the ℓ2,1 norm from the viewpoint of dynamically updated weights.

2. Methodology

2.1. Linear Mixing Model

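In the classical LMM, the spectrum of each mixed pixel in an HSI is assumed to be a linear combination of endmember spectra weighted by the corresponding abundances. For an HSI with $N$ pixels and $K$ bands, the LMM can be formulated as

$$\mathbf{X} = \mathbf{A}\mathbf{S} + \mathbf{E}, \tag{1}$$

where $\mathbf{X} \in \mathbb{R}^{K \times N}$ is the observed data matrix, $\mathbf{A} \in \mathbb{R}^{K \times P}$ represents the endmembers ($P$ being the number of endmembers), $\mathbf{S} \in \mathbb{R}^{P \times N}$ represents the abundances, and $\mathbf{E} \in \mathbb{R}^{K \times N}$ denotes the residue term. The abundance nonnegativity constraint (ANC) and the abundance sum-to-one constraint (ASC) are two priors generally imposed on the LMM, which can be expressed as

$$\mathbf{S} \geq \mathbf{0}, \qquad \mathbf{1}_P^{\top}\mathbf{S} = \mathbf{1}_N^{\top}. \tag{2}$$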
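As a concrete illustration of the LMM and its two abundance constraints, the minimal NumPy sketch below simulates noise-free mixed pixels. It is not the data generator used in this work: the helper name `simulate_lmm`, the random endmember matrix, and the flat Dirichlet prior on abundances are assumptions, chosen because every Dirichlet sample is nonnegative and sums to one, so ANC and ASC hold by construction.

```python
import numpy as np


def simulate_lmm(A, n_pixels, seed=None):
    """Return noise-free data X = A S and abundances S obeying ANC and ASC.

    Assumption (illustration only): abundance vectors are drawn from a flat
    Dirichlet distribution, so every column of S is nonnegative and sums to one.
    """
    rng = np.random.default_rng(seed)
    n_endmembers = A.shape[1]
    # rng.dirichlet returns shape (n_pixels, n_endmembers); transpose to P x N.
    S = rng.dirichlet(np.ones(n_endmembers), size=n_pixels).T
    X = A @ S  # K x N data matrix (LMM with zero residue)
    return X, S


# Hypothetical sizes: K = 224 bands, P = 5 endmembers, N = 100 pixels.
A = np.random.default_rng(0).uniform(0.0, 1.0, size=(224, 5))
X, S = simulate_lmm(A, n_pixels=100, seed=1)
print(X.shape, S.shape)  # (224, 100) (5, 100)
```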

2.2. DNMF

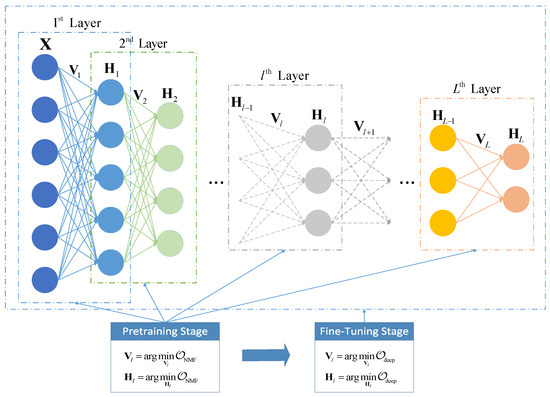

NMF is a widely used method for addressing the linear unmixing problem, which learns the endmember matrix and the abundance matrix in a single-layer manner. As a deep variant of NMF, deep NMF integrates a multi-layer network structure and a fine-tuning step to explore hierarchical features with hidden information and further enhance unmixing performance. As shown in Figure 1, DNMF consists of the following two stages:

Figure 1.

Schematic diagram of deep NMF.

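(1) Pretraining Stage: The task of this stage is to pretrain the layers by performing basic NMF successively in each layer. Specifically, the data matrix $\mathbf{X}$ is factorized into $\mathbf{A}_1$ and $\mathbf{S}_1$ in the first layer. Then, $\mathbf{S}_1$ is factorized into $\mathbf{A}_2$ and $\mathbf{S}_2$ in the second layer. The same procedure is repeated until the $L$-th (last) layer is finished. In this stage, the objective function for each layer is given by

$$\min_{\mathbf{A}_l \geq \mathbf{0},\, \mathbf{S}_l \geq \mathbf{0}} C_l = \frac{1}{2}\left\| \mathbf{S}_{l-1} - \mathbf{A}_l\mathbf{S}_l \right\|_F^2, \tag{3}$$

with $l = 1, 2, \ldots, L$. In the first layer (i.e., $l = 1$), we have $\mathbf{S}_0 = \mathbf{X}$. The multiplicative update rules for (3) are provided in the classical NMF algorithm [34], i.e.,

$$\mathbf{A}_l \leftarrow \mathbf{A}_l \odot \left(\mathbf{S}_{l-1}\mathbf{S}_l^{\top}\right) \oslash \left(\mathbf{A}_l\mathbf{S}_l\mathbf{S}_l^{\top}\right), \tag{4}$$

$$\mathbf{S}_l \leftarrow \mathbf{S}_l \odot \left(\mathbf{A}_l^{\top}\mathbf{S}_{l-1}\right) \oslash \left(\mathbf{A}_l^{\top}\mathbf{A}_l\mathbf{S}_l\right), \tag{5}$$

where $\odot$ and $\oslash$ denote element-wise multiplication and division, respectively.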
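The layer-wise pretraining with the classical multiplicative updates can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' implementation: random positive initialization replaces VCA-FCLS, the inner ranks and iteration count are hypothetical, and a small constant `EPS` is added to each denominator to avoid division by zero.

```python
import numpy as np

EPS = 1e-12  # keeps the element-wise divisions finite


def nmf_mu(V, rank, n_iter=200, seed=None):
    """Multiplicative updates for min 0.5 * ||V - A S||_F^2 with A, S >= 0."""
    rng = np.random.default_rng(seed)
    # Random positive initialization (VCA-FCLS is used in the paper instead).
    A = rng.uniform(0.1, 1.0, size=(V.shape[0], rank))
    S = rng.uniform(0.1, 1.0, size=(rank, V.shape[1]))
    for _ in range(n_iter):
        A *= (V @ S.T) / (A @ S @ S.T + EPS)  # Eq. (4)
        S *= (A.T @ V) / (A.T @ A @ S + EPS)  # Eq. (5)
    return A, S


def pretrain(X, ranks, n_iter=200, seed=0):
    """Layer-wise pretraining S_{l-1} ~= A_l S_l for l = 1..L, with S_0 = X."""
    As, Ss = [], []
    V = X
    for l, rank in enumerate(ranks):
        A_l, S_l = nmf_mu(V, rank, n_iter=n_iter, seed=seed + l)
        As.append(A_l)
        Ss.append(S_l)
        V = S_l  # the next layer factorizes this layer's coefficient matrix
    return As, Ss


rng = np.random.default_rng(0)
X = rng.uniform(size=(50, 5)) @ rng.dirichlet(np.ones(5), size=200).T
As, Ss = pretrain(X, ranks=[20, 10, 5])  # hypothetical inner ranks
```

Each layer is fitted in isolation here; nothing in this stage lets a later layer correct an earlier one, which is what the fine-tuning stage adds.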

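(2) Fine-Tuning Stage: In this stage, the hierarchical network structure is considered as a whole in order to minimize the overall reconstruction error. The factors of all layers obtained in the pretraining stage are fine-tuned with the following objective function:

$$\min_{\mathbf{A}_1, \ldots, \mathbf{A}_L,\, \mathbf{S}_L \geq \mathbf{0}} C = \frac{1}{2}\left\| \mathbf{X} - \mathbf{A}_1\mathbf{A}_2\cdots\mathbf{A}_L\mathbf{S}_L \right\|_F^2. \tag{6}$$

In fact, in the fine-tuning stage, $\mathbf{S}_l$ ($l = 1, \ldots, L-1$) acts as an intermediate variable and does not appear in (6). Only the variables $\mathbf{A}_1, \ldots, \mathbf{A}_L$ and $\mathbf{S}_L$ need to be updated alternately. By differentiating (6) with respect to $\mathbf{A}_l$ and $\mathbf{S}_L$, we have

$$\frac{\partial C}{\partial \mathbf{A}_l} = \boldsymbol{\Psi}_{l-1}^{\top}\boldsymbol{\Psi}_{l-1}\mathbf{A}_l\boldsymbol{\Phi}_{l+1}\boldsymbol{\Phi}_{l+1}^{\top} - \boldsymbol{\Psi}_{l-1}^{\top}\mathbf{X}\boldsymbol{\Phi}_{l+1}^{\top}, \tag{7}$$

$$\boldsymbol{\Psi}_{l-1} = \mathbf{A}_1\mathbf{A}_2\cdots\mathbf{A}_{l-1} \quad (\boldsymbol{\Psi}_0 = \mathbf{I}), \tag{8}$$

$$\boldsymbol{\Phi}_{l+1} = \mathbf{A}_{l+1}\mathbf{A}_{l+2}\cdots\mathbf{A}_L\mathbf{S}_L \quad (\boldsymbol{\Phi}_{L+1} = \mathbf{S}_L), \tag{9}$$

$$\frac{\partial C}{\partial \mathbf{S}_L} = \boldsymbol{\Psi}_L^{\top}\boldsymbol{\Psi}_L\mathbf{S}_L - \boldsymbol{\Psi}_L^{\top}\mathbf{X}, \tag{10}$$

$$\boldsymbol{\Psi}_L = \mathbf{A}_1\mathbf{A}_2\cdots\mathbf{A}_L. \tag{11}$$

After selecting appropriate step sizes, the update rules for $\mathbf{A}_l$ and $\mathbf{S}_L$ can be derived through the gradient descent (GD) method in the following multiplicative forms:

$$\mathbf{A}_l \leftarrow \mathbf{A}_l \odot \left(\boldsymbol{\Psi}_{l-1}^{\top}\mathbf{X}\boldsymbol{\Phi}_{l+1}^{\top}\right) \oslash \left(\boldsymbol{\Psi}_{l-1}^{\top}\boldsymbol{\Psi}_{l-1}\mathbf{A}_l\boldsymbol{\Phi}_{l+1}\boldsymbol{\Phi}_{l+1}^{\top}\right), \tag{12}$$

$$\mathbf{S}_L \leftarrow \mathbf{S}_L \odot \left(\boldsymbol{\Psi}_L^{\top}\mathbf{X}\right) \oslash \left(\boldsymbol{\Psi}_L^{\top}\boldsymbol{\Psi}_L\mathbf{S}_L\right). \tag{13}$$

On the basis of the optimized factors, the final endmember matrix and abundance matrix are calculated as follows:

$$\mathbf{A} = \mathbf{A}_1\mathbf{A}_2\cdots\mathbf{A}_L, \tag{14}$$

$$\mathbf{S} = \mathbf{S}_L. \tag{15}$$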
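A minimal NumPy sketch of the DNMF fine-tuning updates (12) and (13), followed by the final products (14) and (15), is given below. It is an illustration only: random positive matrices stand in for the pretrained factors, and `dnmf_finetune`, `prod`, and `loss` are hypothetical helper names.

```python
import numpy as np
from functools import reduce

EPS = 1e-12  # keeps the element-wise divisions finite


def prod(mats):
    """Left-to-right matrix product of a non-empty list of matrices."""
    return reduce(np.matmul, mats)


def dnmf_finetune(X, As, S_L, n_iter=100):
    """Jointly refine all layers on 0.5 * ||X - A_1 ... A_L S_L||_F^2."""
    As = [A.copy() for A in As]
    S_L = S_L.copy()
    for _ in range(n_iter):
        for l in range(len(As)):
            # Psi_{l-1} = A_1 ... A_{l-1}, the identity for the first layer.
            Psi = prod(As[:l]) if l > 0 else np.eye(X.shape[0])
            # Phi_{l+1} = A_{l+1} ... A_L S_L.
            Phi = prod(As[l + 1:] + [S_L])
            As[l] *= (Psi.T @ X @ Phi.T) / (Psi.T @ Psi @ As[l] @ Phi @ Phi.T + EPS)  # Eq. (12)
        Psi_L = prod(As)
        S_L *= (Psi_L.T @ X) / (Psi_L.T @ Psi_L @ S_L + EPS)  # Eq. (13)
    return prod(As), S_L  # A = A_1 ... A_L and S = S_L, Eqs. (14)-(15)


def loss(X, As, S_L):
    return 0.5 * np.linalg.norm(X - prod(As + [S_L])) ** 2


rng = np.random.default_rng(0)
X = rng.uniform(size=(50, 5)) @ rng.dirichlet(np.ones(5), size=200).T
# Hypothetical positive starting factors standing in for pretrained ones.
As0 = [rng.uniform(0.1, 1.0, size=s) for s in [(50, 20), (20, 10), (10, 5)]]
S0 = rng.uniform(0.1, 1.0, size=(5, 200))
A, S = dnmf_finetune(X, As0, S0)
```

Because every layer is refit against the full product, errors introduced in early layers can be corrected by later updates, which is the feedback that MLNMF lacks.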

2.3. Proposed ℓ2,1-RDNMF

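In real-world scenarios, HSIs frequently suffer from various kinds of interference, including Gaussian noise, impulse noise, and dead pixels. The presence of such complicated noise severely degrades the performance of DNMF and may cause the HU task to fail. To promote the robustness of the deep NMF method, we present a robust deep NMF unmixing model that applies an ℓ2,1 norm to both the pretraining and fine-tuning stages. The proposed unmixing model also consists of two stages:

$$\min_{\mathbf{A}_l \geq \mathbf{0},\, \mathbf{S}_l \geq \mathbf{0}} \mathcal{O}_l = \left\| \mathbf{S}_{l-1} - \mathbf{A}_l\mathbf{S}_l \right\|_{2,1}, \quad l = 1, \ldots, L, \tag{16}$$

$$\min_{\mathbf{A}_1, \ldots, \mathbf{A}_L,\, \mathbf{S}_L \geq \mathbf{0}} \mathcal{O} = \left\| \mathbf{X} - \mathbf{A}_1\mathbf{A}_2\cdots\mathbf{A}_L\mathbf{S}_L \right\|_{2,1}, \tag{17}$$

where $\mathcal{O}_l$ and $\mathcal{O}$ are the objective functions of the pretraining stage and fine-tuning stage, respectively, and $\|\cdot\|_{2,1}$ is the so-called ℓ2,1 norm, which is defined as follows:

$$\left\| \mathbf{E} \right\|_{2,1} = \sum_{n=1}^{N} \left\| \mathbf{e}_n \right\|_2 = \sum_{n=1}^{N} \sqrt{\sum_{k=1}^{K} e_{kn}^2}, \tag{18}$$

where $\mathbf{e}_n$ and $e_{kn}$ denote the $n$-th column and the $(k,n)$-th element of the residue term $\mathbf{E}$, respectively.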
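The ℓ2,1 norm sums the Euclidean norms of the residue columns, so each pixel's residue contributes linearly rather than quadratically. The short NumPy check below (with a hypothetical helper `l21_norm`) contrasts it with the squared Frobenius norm when one column is inflated tenfold, mimicking a grossly corrupted pixel.

```python
import numpy as np


def l21_norm(E):
    """Sum over columns (pixels) of the column-wise Euclidean norms."""
    return float(np.sum(np.linalg.norm(E, axis=0)))


E = np.array([[3.0, 0.0],
              [4.0, 1.0]])
print(l21_norm(E))               # 6.0, i.e. 5 + 1
print(float(np.sum(E ** 2)))     # 26.0, the squared Frobenius norm

# Inflate the first column (pixel) tenfold, as a gross outlier would.
E_out = E.copy()
E_out[:, 0] *= 10.0
print(l21_norm(E_out))           # 51.0: grows linearly with the outlier
print(float(np.sum(E_out ** 2))) # 2501.0: grows quadratically
```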

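For the pretraining stage, the gradients of $\mathcal{O}_l$ with respect to $\mathbf{A}_l$ and $\mathbf{S}_l$ are calculated as follows:

$$\frac{\partial \mathcal{O}_l}{\partial \mathbf{A}_l} = \mathbf{A}_l\mathbf{S}_l\mathbf{D}_l\mathbf{S}_l^{\top} - \mathbf{S}_{l-1}\mathbf{D}_l\mathbf{S}_l^{\top}, \tag{19}$$

$$\frac{\partial \mathcal{O}_l}{\partial \mathbf{S}_l} = \mathbf{A}_l^{\top}\mathbf{A}_l\mathbf{S}_l\mathbf{D}_l - \mathbf{A}_l^{\top}\mathbf{S}_{l-1}\mathbf{D}_l, \tag{20}$$

where $\mathbf{D}_l$ is an $N \times N$ diagonal matrix whose diagonal components are defined as

$$\left(\mathbf{D}_l\right)_{nn} = \frac{1}{\left\| \mathbf{s}_{l-1,n} - \mathbf{A}_l\mathbf{s}_{l,n} \right\|_2}, \tag{21}$$

with $\mathbf{s}_{l,n}$ denoting the $n$-th column of $\mathbf{S}_l$. On the basis of (19) and (20), by selecting appropriate step sizes, the update rules for $\mathbf{A}_l$ and $\mathbf{S}_l$ can be derived through the gradient descent (GD) method in the following multiplicative forms:

$$\mathbf{A}_l \leftarrow \mathbf{A}_l \odot \left(\mathbf{S}_{l-1}\mathbf{D}_l\mathbf{S}_l^{\top}\right) \oslash \left(\mathbf{A}_l\mathbf{S}_l\mathbf{D}_l\mathbf{S}_l^{\top}\right), \tag{22}$$

$$\mathbf{S}_l \leftarrow \mathbf{S}_l \odot \left(\mathbf{A}_l^{\top}\mathbf{S}_{l-1}\mathbf{D}_l\right) \oslash \left(\mathbf{A}_l^{\top}\mathbf{A}_l\mathbf{S}_l\mathbf{D}_l\right). \tag{23}$$

In the pretraining stage, the layer-wise factors are pretrained by the update rules given in (22) and (23).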
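The weighted pretraining updates (21)–(23) can be sketched for a single layer as follows. Assumptions: random positive initialization instead of VCA-FCLS, a fixed iteration count instead of a convergence test, and the cap of 100 on the weights described in Section 2.4; `l21_nmf_layer` and `l21_weights` are hypothetical names. Right-multiplying by the diagonal weight matrix is implemented as column-wise scaling (`M * d`), which avoids forming an N × N matrix.

```python
import numpy as np

EPS = 1e-12
D_MAX = 100.0  # cap on the diagonal weights (Section 2.4)


def l21_weights(R):
    """Diagonal of D: 1 / ||r_n||_2 for each residue column, capped at D_MAX."""
    return np.minimum(1.0 / np.maximum(np.linalg.norm(R, axis=0), EPS), D_MAX)


def l21_nmf_layer(V, rank, n_iter=300, seed=None):
    """One pretraining layer: min ||V - A S||_{2,1} with A, S >= 0."""
    rng = np.random.default_rng(seed)
    A = rng.uniform(0.1, 1.0, size=(V.shape[0], rank))  # paper: VCA-FCLS
    S = rng.uniform(0.1, 1.0, size=(rank, V.shape[1]))
    history = []
    for _ in range(n_iter):
        R = V - A @ S
        history.append(float(np.linalg.norm(R, axis=0).sum()))
        d = l21_weights(R)  # Eq. (21); M @ diag(d) is computed as M * d
        A *= ((V * d) @ S.T) / (((A @ S) * d) @ S.T + EPS)  # Eq. (22)
        S *= (A.T @ (V * d)) / (A.T @ ((A @ S) * d) + EPS)  # Eq. (23)
    return A, S, history


rng = np.random.default_rng(0)
V = rng.uniform(size=(30, 4)) @ rng.dirichlet(np.ones(4), size=150).T
V[:, :5] += rng.uniform(0.0, 5.0, size=(30, 5))  # five grossly corrupted pixels
A, S, history = l21_nmf_layer(V, rank=4, seed=1)
```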

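For the fine-tuning stage, the objective function given in (17) can be rewritten with respect to $\mathbf{A}_l$ as

$$\mathcal{O} = \left\| \mathbf{X} - \boldsymbol{\Psi}_{l-1}\mathbf{A}_l\boldsymbol{\Phi}_{l+1} \right\|_{2,1}, \tag{24}$$

where $\boldsymbol{\Psi}_{l-1}$ and $\boldsymbol{\Phi}_{l+1}$ are defined in (8) and (9). The gradient of $\mathcal{O}$ with respect to $\mathbf{A}_l$ is calculated as follows:

$$\frac{\partial \mathcal{O}}{\partial \mathbf{A}_l} = \boldsymbol{\Psi}_{l-1}^{\top}\boldsymbol{\Psi}_{l-1}\mathbf{A}_l\boldsymbol{\Phi}_{l+1}\mathbf{D}\boldsymbol{\Phi}_{l+1}^{\top} - \boldsymbol{\Psi}_{l-1}^{\top}\mathbf{X}\mathbf{D}\boldsymbol{\Phi}_{l+1}^{\top}, \tag{25}$$

where $\mathbf{D}$ is an $N \times N$ diagonal matrix whose diagonal components are defined as

$$\mathbf{D}_{nn} = \frac{1}{\left\| \mathbf{x}_n - \mathbf{A}_1\mathbf{A}_2\cdots\mathbf{A}_L\mathbf{s}_{L,n} \right\|_2}, \tag{26}$$

with $\mathbf{x}_n$ and $\mathbf{s}_{L,n}$ denoting the $n$-th columns of $\mathbf{X}$ and $\mathbf{S}_L$. Further, the gradient of $\mathcal{O}$ with respect to $\mathbf{S}_L$ is calculated as follows:

$$\frac{\partial \mathcal{O}}{\partial \mathbf{S}_L} = \boldsymbol{\Psi}_L^{\top}\boldsymbol{\Psi}_L\mathbf{S}_L\mathbf{D} - \boldsymbol{\Psi}_L^{\top}\mathbf{X}\mathbf{D}, \tag{27}$$

where $\boldsymbol{\Psi}_L = \mathbf{A}_1\mathbf{A}_2\cdots\mathbf{A}_L$. Similar to (12) and (13), by selecting appropriate step sizes, the update rules for (17) can be derived in the following multiplicative forms:

$$\mathbf{A}_l \leftarrow \mathbf{A}_l \odot \left(\boldsymbol{\Psi}_{l-1}^{\top}\mathbf{X}\mathbf{D}\boldsymbol{\Phi}_{l+1}^{\top}\right) \oslash \left(\boldsymbol{\Psi}_{l-1}^{\top}\boldsymbol{\Psi}_{l-1}\mathbf{A}_l\boldsymbol{\Phi}_{l+1}\mathbf{D}\boldsymbol{\Phi}_{l+1}^{\top}\right), \tag{28}$$

$$\mathbf{S}_L \leftarrow \mathbf{S}_L \odot \left(\boldsymbol{\Psi}_L^{\top}\mathbf{X}\mathbf{D}\right) \oslash \left(\boldsymbol{\Psi}_L^{\top}\boldsymbol{\Psi}_L\mathbf{S}_L\mathbf{D}\right). \tag{29}$$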

The final results of the proposed ℓ2,1-RDNMF are calculated by (14) and (15). It can be observed from (21) and (26) that the data samples in ℓ2,1-RDNMF are assigned adaptive weights that are inversely proportional to the reconstruction error of the column in which they are located. With this robust formulation, the influence of columns with large errors caused by high noise intensities is reduced in the updating process. Therefore, ℓ2,1-RDNMF is robust to column-wise noisy samples (pixels). Algorithm 1 presents the overall process of ℓ2,1-RDNMF.
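The weighting mechanism described above can be checked numerically. In the sketch below (synthetic residues, hypothetical helper `l21_weights`), each weight is the reciprocal of its column's residue norm, capped at 100 as in Section 2.4, so a heavily corrupted pixel receives a tiny weight. A consequence visible in the weighted gradients is that every uncapped weighted residue column has unit length: each pixel's residue enters the update with the same magnitude, whereas under the squared Frobenius loss its influence grows with the size of the residue.

```python
import numpy as np


def l21_weights(R, d_max=100.0, eps=1e-12):
    """d_n = 1 / ||r_n||_2 for each residue column, capped at d_max."""
    return np.minimum(1.0 / np.maximum(np.linalg.norm(R, axis=0), eps), d_max)


rng = np.random.default_rng(0)
R = 0.01 * rng.standard_normal((224, 6))  # small residues of "clean" pixels
R[:, 3] += rng.standard_normal(224)       # pixel 3 is heavily corrupted
d = l21_weights(R)

print(np.round(d, 2))                              # pixel 3 gets by far the smallest weight
print(np.round(np.linalg.norm(R * d, axis=0), 6))  # every weighted residue has unit norm
```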

| Algorithm 1 Proposed ℓ2,1-RDNMF |
|---|
| Input: HSI data $\mathbf{X}$; number of layers $L$; inner ranks of the $L$ layers. |
| Output: Endmembers $\mathbf{A}$; abundances $\mathbf{S}$. |
| Pretraining stage: |
| 1: for $l = 1, \ldots, L$ do |
| 2: Obtain initial $\mathbf{A}_l$ and $\mathbf{S}_l$ by VCA-FCLS. |
| 3: repeat |
| 4: Compute $\mathbf{D}_l$ using Equation (21). |
| 5: Update $\mathbf{A}_l$ by Equation (22). |
| 6: Update $\mathbf{S}_l$ by Equation (23). |
| 7: until convergence |
| 8: end for |
| Fine-tuning stage: |
| 9: repeat |
| 10: for $l = 1, \ldots, L$ do |
| 11: Compute $\boldsymbol{\Psi}_{l-1}$ using Equation (8). |
| 12: Compute $\boldsymbol{\Phi}_{l+1}$ using Equation (9). |
| 13: Compute $\mathbf{D}$ using Equation (26). |
| 14: Update $\mathbf{A}_l$ by Equation (28). |
| 15: end for |
| 16: Update $\mathbf{S}_L$ by Equation (29). |
| 17: until the stopping criterion is satisfied |
| 18: Compute $\mathbf{A}$ using Equation (14). |
| 19: Compute $\mathbf{S}$ using Equation (15). |
| 20: return $\mathbf{A}$ and $\mathbf{S}$ |
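The fine-tuning stage of Algorithm 1 (lines 9–19) can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' code: random positive matrices replace the pretrained factors, the weights are refreshed once more before the S_L update (a detail Algorithm 1 leaves open), the weight cap of 100 follows Section 2.4, and the stopping test uses the relative change of the objective with a hypothetical tolerance `tol`; that relative-change form is itself an assumption.

```python
import numpy as np
from functools import reduce

EPS = 1e-12
D_MAX = 100.0  # weight cap (Section 2.4)


def l21_weights(R):
    """Diagonal of D: 1 / ||r_n||_2 for each residue column, capped at D_MAX."""
    return np.minimum(1.0 / np.maximum(np.linalg.norm(R, axis=0), EPS), D_MAX)


def l21_objective(X, A_prod, S_L):
    return float(np.linalg.norm(X - A_prod @ S_L, axis=0).sum())


def l21_finetune(X, As, S_L, max_iter=500, tol=1e-6):
    """Fine-tuning for min ||X - A_1 ... A_L S_L||_{2,1} (Algorithm 1, lines 9-19)."""
    As = [A.copy() for A in As]
    S_L = S_L.copy()
    history = [l21_objective(X, reduce(np.matmul, As), S_L)]
    for _ in range(max_iter):
        for l in range(len(As)):
            Psi = reduce(np.matmul, As[:l]) if l > 0 else np.eye(X.shape[0])  # Eq. (8)
            Phi = reduce(np.matmul, As[l + 1:] + [S_L])                       # Eq. (9)
            d = l21_weights(X - Psi @ As[l] @ Phi)                            # Eq. (26)
            num = Psi.T @ (X * d) @ Phi.T                 # X D is computed as X * d
            den = Psi.T @ Psi @ As[l] @ (Phi * d) @ Phi.T + EPS
            As[l] *= num / den                                                # Eq. (28)
        Psi_L = reduce(np.matmul, As)
        d = l21_weights(X - Psi_L @ S_L)  # refreshed after the last A_l update
        S_L *= (Psi_L.T @ (X * d)) / (Psi_L.T @ Psi_L @ (S_L * d) + EPS)     # Eq. (29)
        history.append(l21_objective(X, Psi_L, S_L))
        # Assumed stopping test: relative change of the objective below tol.
        if abs(history[-2] - history[-1]) < tol * history[-2]:
            break
    return reduce(np.matmul, As), S_L, history  # Eqs. (14) and (15)


rng = np.random.default_rng(0)
X = rng.uniform(size=(30, 4)) @ rng.dirichlet(np.ones(4), size=100).T
As0 = [rng.uniform(0.1, 1.0, size=(30, 8)), rng.uniform(0.1, 1.0, size=(8, 4))]
S0 = rng.uniform(0.1, 1.0, size=(4, 100))
A, S, history = l21_finetune(X, As0, S0, max_iter=200)
```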

2.4. Implementation Issues

Vertex component analysis (VCA) [35] is utilized to initialize $\mathbf{A}_l$, and the fully constrained least squares (FCLS) algorithm [36] is utilized to initialize $\mathbf{S}_l$. To prevent pixels with near-zero residues from dominating the objective function, the maximum value in $\mathbf{D}_l$ and $\mathbf{D}$ is limited to 100. As for the stopping criterion, the algorithm stops when the number of iterations reaches a preset maximum $T_{\max}$ or the relative error falls below a tolerance $\varepsilon$; $T_{\max}$ is set to 500 in this paper. Furthermore, the parameters of the multi-layer structure in deep NMF-based methods are determined empirically with reference to [31]. Experiment 1 shows that the best performance is achieved when the number of layers $L$ of the deep NMF structure is set to 3; therefore, $L$ is set to 3 in the remaining experiments.

3. Experiments

To verify the effectiveness of our method, several experiments are carried out on both synthetic and real HSI data. Seven HU methods, i.e., VCA-FCLS [35,36], ℓ2,1-RNMF [16], DNMF [27], SDNMF-TV [31], CSsRS-NMF [18], MLNMF [25], and CANMF-TV [33], are compared with the proposed ℓ2,1-RDNMF. Two commonly used metrics, spectral angle distance (SAD) and root-mean-square error (RMSE), are used to quantify the quality of the unmixing results [2].
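SAD and RMSE can be computed along the lines of the sketch below. The definitions follow common usage (SAD in radians between matched spectra; RMSE over all abundance entries), and the exact normalization in [2] may differ. Because NMF recovers endmembers in arbitrary order, estimated endmembers must first be matched to the reference ones; the brute-force matcher `match_endmembers` is a hypothetical helper that is practical only for a handful of endmembers. For larger sets, such as the 12 minerals considered on Cuprite, an assignment solver such as SciPy's `linear_sum_assignment` would be the usual choice.

```python
import itertools

import numpy as np


def sad(a, b):
    """Spectral angle distance (radians) between two spectra."""
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))  # clip guards rounding


def rmse(S_true, S_est):
    """Root-mean-square error over all abundance entries."""
    return float(np.sqrt(np.mean((S_true - S_est) ** 2)))


def match_endmembers(A_true, A_est):
    """Permutation p minimizing the total SAD between A_true[:, i] and A_est[:, p[i]]."""
    P = A_true.shape[1]
    best = min(itertools.permutations(range(P)),
               key=lambda p: sum(sad(A_true[:, i], A_est[:, p[i]]) for i in range(P)))
    return list(best)


rng = np.random.default_rng(0)
A_true = rng.uniform(size=(224, 3))
A_est = A_true[:, [2, 0, 1]]             # same spectra, shuffled order
perm = match_endmembers(A_true, A_est)   # [1, 2, 0]
mean_sad = np.mean([sad(A_true[:, i], A_est[:, perm[i]]) for i in range(3)])
```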

3.1. Experiments on Synthetic Data

The synthetic data are generated following the methodology provided in [2]. The endmember spectra are randomly selected from the United States Geological Survey (USGS) digital spectral library [37]. The generated synthetic data have 100 × 100 pixels and 224 bands. To better simulate real scenarios, the synthetic data are contaminated by complex noise that may combine dead pixels, Gaussian noise, and impulse noise. When Gaussian noise is added, the signal-to-noise ratio (SNR) of each pixel is drawn at random from a distribution centered at $\mu$ with spread $\sigma$, following [18]. To establish a reliable comparison, the reported results are averaged over 20 independent runs of each method. With the number of iterations set to 500, the time consumption of the proposed ℓ2,1-RDNMF and the compared methods is presented in Table 1.
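One way to generate pixel-dependent Gaussian noise is sketched below. It rests on assumptions that may not match [18]: each pixel's SNR (in dB) is drawn from a normal distribution with mean `mu` and standard deviation `sigma`, and the SNR of a pixel is defined as its signal energy divided by the expected noise energy. The helper `add_pixelwise_gaussian_noise` is hypothetical, and the unusually large band count in the usage is chosen only so that the empirical check is tight.

```python
import numpy as np


def add_pixelwise_gaussian_noise(X, mu, sigma, seed=None):
    """Add zero-mean Gaussian noise with a different SNR (dB) for every pixel.

    Assumption: SNR_n ~ Normal(mu, sigma) and
    SNR_n = 10 * log10(||x_n||^2 / E||noise_n||^2).
    """
    rng = np.random.default_rng(seed)
    K, N = X.shape
    snr_db = rng.normal(mu, sigma, size=N)
    signal_power = np.sum(X ** 2, axis=0) / K           # mean power per band
    noise_std = np.sqrt(signal_power / 10.0 ** (snr_db / 10.0))
    noise = rng.standard_normal((K, N)) * noise_std     # column n scaled by noise_std[n]
    return X + noise, snr_db


X = np.random.default_rng(0).uniform(size=(4000, 50))
Y, snr_db = add_pixelwise_gaussian_noise(X, mu=25.0, sigma=5.0, seed=1)
empirical = 10 * np.log10(np.sum(X ** 2, axis=0) / np.sum((Y - X) ** 2, axis=0))
```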

Table 1.

Time consumption of the proposed ℓ2,1-RDNMF and the compared methods.

3.1.1. Experiment 1 (Investigation of the Number of Layers)

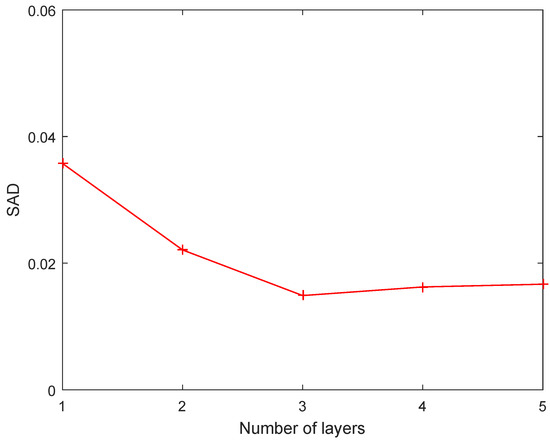

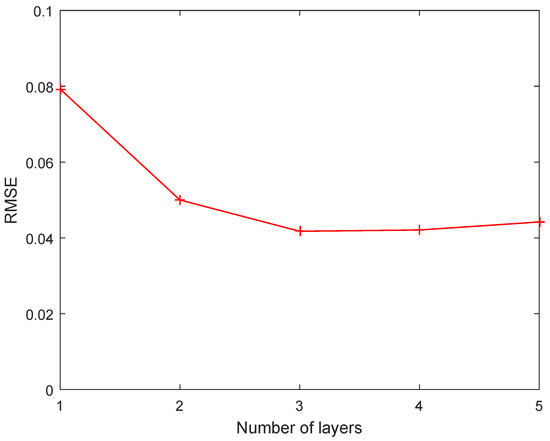

The number of layers is a key parameter of a deep NMF-based method: it determines the depth of the multi-layer structure and influences the unmixing performance. This experiment investigates the impact of different numbers of layers on the unmixing performance, with the number of layers L varied over a range of values. Figure 2 and Figure 3 display the SAD and RMSE results of ℓ2,1-RDNMF under different settings of L. The two metrics show a similar trend as L varies. As expected, the multi-layer structure (L > 1) outperforms the single-layer structure (L = 1). The metrics for both endmembers and abundances gradually improve as L increases and then degrade when L is larger than 3. In other words, the best performance is achieved with a three-layer structure, which is therefore adopted in the rest of the experiments.

Figure 2.

SAD results of the proposed ℓ2,1-RDNMF with different numbers of layers.

Figure 3.

RMSE results of the proposed ℓ2,1-RDNMF with different numbers of layers.

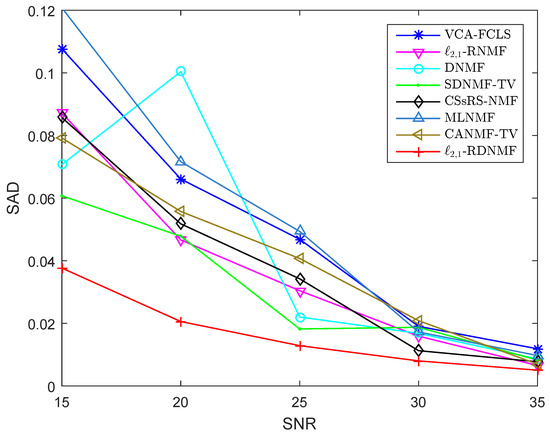

3.1.2. Experiment 2 (Investigation of Noise Intensities)

To verify the proposed method’s effectiveness, synthetic HSI data with various noise intensities are used to assess the algorithms’ performance. The central SNR level $\mu$ is set to 15, 20, 25, 30, or 35 to simulate various noise intensities, and the spread $\sigma$ is set to 5. Figure 4 and Figure 5 show the SAD and RMSE results of the algorithms as the noise level varies. When the noise is weak (high $\mu$), all algorithms perform well and at a comparable level. As $\mu$ decreases, the performance of all methods degrades, as anticipated. However, the proposed ℓ2,1-RDNMF obtains clearly lower SAD and RMSE values, which validates its robustness to various noise levels.

Figure 4.

SAD results of different methods on data simulated with various intensities of Gaussian noise.

Figure 5.

RMSE results of different methods on data simulated with various intensities of Gaussian noise.

3.1.3. Experiment 3 (Investigation of Noise Types)

The algorithms are also investigated on synthetic data corrupted by various forms of mixed noise, which better simulates the complicated mixed noise found in real scenarios. The mixed noise is a combination of dead pixels, Gaussian noise, and impulse noise. For the dead pixels, 0.5% of the pixels are randomly selected to be dead pixels. For the Gaussian noise, the central SNR level $\mu$ is set to 30. For the impulse noise, bands 30–40 are corrupted with a noise density of 0.05. Table 2 and Table 3 report the SAD and RMSE results of the algorithms under four types of noise: Gaussian noise; Gaussian noise and impulse noise; Gaussian noise and dead pixels; and Gaussian noise, impulse noise, and dead pixels. The results show that ℓ2,1-RDNMF achieves the best unmixing performance in terms of both endmembers and abundances under all four noise situations, which further confirms its robustness to complicated mixed noise.
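The mixed-noise settings of this experiment can be simulated along the lines below. Assumptions: a dead pixel is modeled as a pixel that is zero in every band, impulse noise is modeled as salt-and-pepper noise that sets corrupted entries to the minimum or maximum value of the data, and the 1-based bands 30–40 map to 0-based indices 29–39. The helper names are hypothetical, and random data stand in for the synthetic HSI.

```python
import numpy as np


def add_dead_pixels(X, fraction=0.005, seed=None):
    """Zero out a random subset of pixels (columns) in all bands (assumed model)."""
    rng = np.random.default_rng(seed)
    Y = X.copy()
    n_dead = int(round(fraction * X.shape[1]))
    dead = rng.choice(X.shape[1], size=n_dead, replace=False)
    Y[:, dead] = 0.0
    return Y, dead


def add_impulse_noise(X, bands, density=0.05, seed=None):
    """Salt-and-pepper noise on the given 0-based band indices (assumed model)."""
    rng = np.random.default_rng(seed)
    Y = X.copy()
    low, high = X.min(), X.max()
    for b in bands:
        hit = rng.random(X.shape[1]) < density  # pixels corrupted in band b
        salt = rng.random(X.shape[1]) < 0.5     # half salt, half pepper
        Y[b, hit & salt] = high
        Y[b, hit & ~salt] = low
    return Y


X = np.random.default_rng(0).uniform(0.1, 0.9, size=(224, 100 * 100))
# Bands 30-40 (1-based, inclusive) are indices 29..39 (0-based).
Y = add_impulse_noise(X, bands=range(29, 40), seed=1)
Y, dead = add_dead_pixels(Y, fraction=0.005, seed=2)
```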

Table 2.

SADs of different methods on synthetic data simulated with various types of noise. The best results are marked in bold.

Table 3.

RMSEs of different methods on synthetic data simulated with various types of noise. The best results are marked in bold.

3.2. Experiments on Real Data

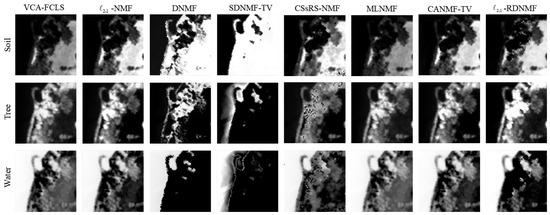

3.2.1. Samson Dataset

The first real HSI dataset used in our experiments is the Samson dataset [38], which contains 156 bands covering the spectral range of 401–889 nm. As shown in Figure 6, the subscene used consists of 95 × 95 pixels. According to previous studies [31,39], three targets exist in the subscene: water, trees, and soil. Table 4 presents the SAD results for the three targets obtained by the proposed ℓ2,1-RDNMF and the comparative methods. As the results show, ℓ2,1-RDNMF outperforms the compared methods on all three targets and in terms of the mean value. Since ground-truth abundances for the real dataset are unavailable, Figure 7 displays only the abundance maps estimated by the proposed ℓ2,1-RDNMF. These maps are consistent with the reference maps presented in previous works [31,39].

Figure 6.

The Samson dataset used in the experiment.

Table 4.

The SADs of algorithms on the Samson dataset. The best results are marked in bold.

Figure 7.

Abundance maps estimated by ℓ2,1-RDNMF on the Samson dataset.

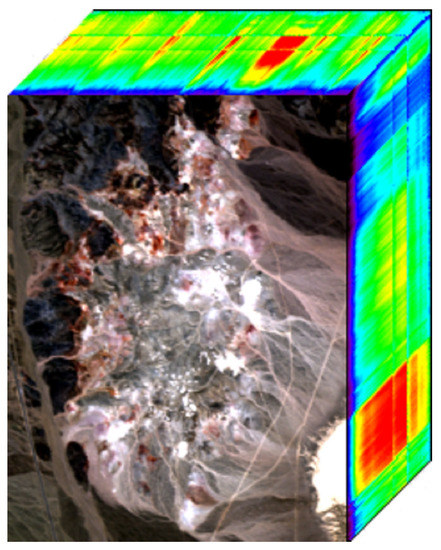

3.2.2. Cuprite Dataset

The second scene used in the experiment is the Cuprite dataset, collected by the AVIRIS sensor over the Cuprite mining district in Nevada, USA. As shown in Figure 8, the subimage used contains 250 × 191 pixels and 224 bands. After badly degraded bands (1–2, 76, 104–113, 148–167, and 221–224) are removed, 188 bands remain for the experiment. Table 5 presents the SAD results of the proposed ℓ2,1-RDNMF and the comparative methods on the Cuprite dataset. It can be observed that ℓ2,1-RDNMF achieves the best performance for several minerals, including Andradite, Dumortierite, Kaolinite #2, Muscovite, Nontronite, and Pyrope, as well as in terms of the mean value. Additionally, Figure 9 presents the abundance maps estimated by ℓ2,1-RDNMF.

Figure 8.

The Cuprite dataset used in the experiment.

Table 5.

The SADs of algorithms on the Cuprite dataset. The best results are marked in bold.

Figure 9.

Abundance maps estimated by ℓ2,1-RDNMF on the Cuprite dataset. (a) Alunite. (b) Andradite. (c) Buddingtonite. (d) Dumortierite. (e) Kaolinite #1. (f) Kaolinite #2. (g) Muscovite. (h) Montmorillonite. (i) Nontronite. (j) Pyrope. (k) Sphene. (l) Chalcedony.

4. Conclusions

In this study, we propose an ℓ2,1 norm-based robust deep NMF method (ℓ2,1-RDNMF) for HU. The ℓ2,1 norm is incorporated into both stages of the designed deep structure to achieve robustness to noise corruption in HU. Experiments on synthetic data simulated with various noise intensities and types demonstrate that the proposed method is robust to complex noise. Experiments on real data further verify the effectiveness of our method.

Author Contributions

Writing, R.H.; software, R.H.; methodology, H.J.; validation, C.X.; funding acquisition, X.L.; project administration, S.C., C.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant No. 62171404, in part by the Joint Fund of the Ministry of Education of China under Grant No. 8091B022120, in part by the Zhejiang Provincial Natural Science Foundation of China under Grant No. LQ23F020003, and in part by the Natural Science Foundation of Wenzhou under Grant No. G20220007.

Data Availability Statement

Data are available upon reasonable request to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Feng, X.R.; Li, H.C.; Wang, R.; Du, Q.; Jia, X.; Plaza, A.J. Hyperspectral unmixing based on nonnegative matrix factorization: A comprehensive review. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2022, 15, 4414–4436.

2. Qian, Y.; Jia, S.; Zhou, J.; Robles-Kelly, A. Hyperspectral unmixing via L1/2 sparsity-constrained nonnegative matrix factorization. IEEE Trans. Geosci. Remote Sens. 2011, 49, 4282–4297.

3. Huck, A.; Guillaume, M.; Blanc-Talon, J. Minimum dispersion constrained nonnegative matrix factorization to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2590–2602.

4. Lu, X.; Wu, H.; Yuan, Y.; Yan, P.; Li, X. Manifold regularized sparse NMF for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2815–2826.

5. Wang, N.; Du, B.; Zhang, L. An endmember dissimilarity constrained non-negative matrix factorization method for hyperspectral unmixing. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 6, 554–569.

6. Lei, T.; Zhou, J.; Qian, Y.; Xiao, B.; Gao, Y. Nonnegative-matrix-factorization-based hyperspectral unmixing with partially known endmembers. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6531–6544.

7. Févotte, C.; Dobigeon, N. Nonlinear hyperspectral unmixing with robust nonnegative matrix factorization. IEEE Trans. Image Process. 2015, 24, 4810–4819.

8. Li, C.; Ma, Y.; Mei, X.; Liu, C.; Ma, J. Hyperspectral unmixing with robust collaborative sparse regression. Remote Sens. 2016, 8, 588.

9. He, W.; Zhang, H.; Zhang, L. Sparsity-regularized robust non-negative matrix factorization for hyperspectral unmixing. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 9, 4267–4279.

10. Ding, C.; Zhou, D.; He, X.; Zha, H. R1-PCA: Rotational invariant L1-norm principal component analysis for robust subspace factorization. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 281–288.

11. Nie, F.; Huang, H.; Cai, X.; Ding, C.H. Efficient and robust feature selection via joint ℓ2,1-norms minimization. In Advances in Neural Information Processing Systems 23 (NIPS 2010); Morgan Kaufmann: Vancouver, BC, Canada, 2010; pp. 1813–1821.

12. Huang, H.; Ding, C. Robust tensor factorization using R1 norm. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 1–8.

13. Ren, C.X.; Dai, D.Q.; Yan, H. Robust classification using ℓ2,1-norm based regression model. Pattern Recognit. 2012, 45, 2708–2718.

14. Yang, S.; Hou, C.; Zhang, C.; Wu, Y. Robust non-negative matrix factorization via joint sparse and graph regularization for transfer learning. Neur. Comput. Appl. 2013, 23, 541–559.

15. Ma, Y.; Li, C.; Mei, X.; Liu, C.; Ma, J. Robust sparse hyperspectral unmixing with ℓ2,1 norm. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1227–1239.

16. Kong, D.; Ding, C.; Huang, H. Robust nonnegative matrix factorization using L21-norm. In Proceedings of the 20th ACM International Conference on Information and Knowledge Management, Glasgow, UK, 24–28 October 2011; ACM: New York, NY, USA, 2011; pp. 673–682.

17. Wang, Y.; Pan, C.; Xiang, S.; Zhu, F. Robust hyperspectral unmixing with correntropy-based metric. IEEE Trans. Image Process. 2015, 24, 4027–4040.

18. Li, X.; Huang, R.; Zhao, L. Correntropy-based spatial-spectral robust sparsity-regularized hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1453–1471.

19. Wang, H.; Yang, W.; Guan, N. Cauchy sparse NMF with manifold regularization: A robust method for hyperspectral unmixing. Knowl.-Based Syst. 2019, 184, 104898.

20. Huang, R.; Li, X.; Zhao, L. Spectral-spatial robust nonnegative matrix factorization for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8235–8254.

21. Peng, J.; Zhou, Y.; Sun, W.; Du, Q.; Xia, L. Self-paced nonnegative matrix factorization for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1501–1515.

22. Zheng, Q.; Zhao, P.; Li, Y.; Wang, H.; Yang, Y. Spectrum interference-based two-level data augmentation method in deep learning for automatic modulation classification. Neur. Comput. Appl. 2021, 33, 7723–7745.

23. Zhao, M.; Liu, Q.; Jha, A.; Deng, R.; Yao, T.; Mahadevan-Jansen, A.; Tyska, M.J.; Millis, B.A.; Huo, Y. VoxelEmbed: 3D instance segmentation and tracking with voxel embedding based deep learning. In Proceedings of the Machine Learning in Medical Imaging: 12th International Workshop, MLMI 2021, Held in Conjunction with MICCAI 2021, Strasbourg, France, 27 September 2021, Proceedings 12; Springer: Berlin/Heidelberg, Germany, 2021; pp. 437–446.

24. Rajabi, R.; Ghassemian, H. Spectral unmixing of hyperspectral imagery using multilayer NMF. IEEE Geosci. Remote Sens. Lett. 2014, 12, 38–42.

25. Cichocki, A.; Zdunek, R. Multilayer nonnegative matrix factorisation. Electron. Lett. 2006, 42, 947–948.

26. De Handschutter, P.; Gillis, N.; Siebert, X. A survey on deep matrix factorizations. Comput. Sci. Rev. 2021, 42, 100423.

27. Trigeorgis, G.; Bousmalis, K.; Zafeiriou, S.; Schuller, B.W. A deep matrix factorization method for learning attribute representations. IEEE Trans. Patt. Anal. Mach. Intell. 2016, 39, 417–429.

28. Chen, W.S.; Zeng, Q.; Pan, B. A survey of deep nonnegative matrix factorization. Neurocomputing 2022, 491, 305–320.

29. Sun, J.; Kong, Q.; Xu, Z. Deep alternating non-negative matrix factorisation. Knowl.-Based Syst. 2022, 251, 109210.

30. Fang, H.; Li, A.; Xu, H.; Wang, T. Sparsity-constrained deep nonnegative matrix factorization for hyperspectral unmixing. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1105–1109.

31. Feng, X.R.; Li, H.C.; Li, J.; Du, Q.; Plaza, A.; Emery, W.J. Hyperspectral unmixing using sparsity-constrained deep nonnegative matrix factorization with total variation. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6245–6257.

32. Huang, R.; Li, X.; Fang, Y.; Cao, Z.; Xia, C. Robust Hyperspectral Unmixing with Practical Learning-Based Hyperspectral Image Denoising. Remote Sens. 2023, 15, 1058.

33. Feng, X.R.; Li, H.C.; Liu, S.; Zhang, H. Correntropy-based autoencoder-like NMF with total variation for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2022, 19, 1–5.

34. Lee, D.D.; Seung, H.S. Algorithms for non-negative matrix factorization. In Advances in Neural Information Processing Systems 13 (NIPS 2000); Morgan Kaufmann: Denver, CO, USA, 2001; pp. 556–562.

35. Nascimento, J.M.; Dias, J.M. Vertex component analysis: A fast algorithm to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 898–910.

36. Heinz, D.C.; Chang, C.I. Fully constrained least squares linear spectral mixture analysis method for material quantification in hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 529–545.

37. Clark, R.N.; Swayze, G.A.; Gallagher, A.J.; King, T.V.; Calvin, W.M. The US Geological Survey, Digital Spectral Library: Version 1 (0.2 to 3.0 μm); U.S. Geological Survey Open-File Report; The US Geological Survey: Denver, CO, USA, 1993.

38. Zhu, F. Hyperspectral Unmixing Datasets & Ground Truths. 2014. Available online: http://www.escience.cn/people/feiyunZHU/Dataset_GT.html (accessed on 10 March 2019).

39. Zhu, F.; Wang, Y.; Fan, B.; Xiang, S.; Meng, G.; Pan, C. Spectral unmixing via data-guided sparsity. IEEE Trans. Image Process. 2014, 23, 5412–5427.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).