1. Introduction

Space-based infrared (IR) detection systems are not restricted by geography or atmosphere and are well concealed, so they can effectively detect and identify space targets such as missiles and launch vehicles [1,2,3]. High-precision extraction of velocity features is a key technology of space-based IR detection systems and an important prerequisite for space target recognition. Research on the velocity features of space IR targets has garnered extensive attention in various application areas, including space debris monitoring [4], space kill assessment [5], and target tracking [6]. Owing to factors such as design cost and weight control, different targets show relative differences in speed and direction before and after release, and these differences provide a reference for target classification and recognition. Because of the long detection distance and the low signal-to-noise ratio, targets are difficult to detect on the image plane. Researchers have therefore conducted many theoretical analyses and simulations on the detection and recognition of dim moving targets against deep space backgrounds [7,8,9,10,11]. Satellites in specific orbits can use passive IR sensor observations to obtain Line-of-Sight (LOS) measurements from the sensor to the target. Combined with the coordinate transformation relationships, the position and velocity of the observed target can then be obtained [12].

Commonly used velocity estimation methods combine prior knowledge of the space target dynamics model with a batch processing algorithm or a filtering algorithm to estimate the target motion. Batch processing methods linearize the nonlinear functions around the initial estimate using a Taylor series expansion and then solve them iteratively [13]. However, the solution process is unstable and computationally complex. The most classic filtering algorithm is the Extended Kalman Filter (EKF). Sandip [14] used the EKF to estimate the position and velocity of the target and found that the EKF had a significant advantage in calculating velocity. The EKF handles the nonlinear filtering problem through linearization at relatively low computational cost. However, it is prone to divergence when the nonlinearity is strong and the amount of data is limited. The Unscented Kalman Filter (UKF) [15,16] and the Cubature Kalman Filter (CKF) [17,18] were therefore proposed. Huang et al. introduced a variance statistical function into the UKF to calculate the residual weight in real time, which improved the sensitivity of the system to target maneuvering [19]. Cui et al. [20] proposed an adaptive high-order CKF algorithm to address the loss of CKF velocity estimation accuracy when the system state changes abruptly. In summary, the above filtering methods assume that the motion model of the space object is known and aim to balance computational efficiency and estimation accuracy under this model. Their effectiveness depends largely on the accuracy of the established motion model and on the selection of the initial state.

In actual detection, however, unknown targets are usually uncooperative, and no prior information about their structure or motion parameters is available. In this case, the aforementioned velocity estimation methods are not applicable. In recent years, some scholars have used geometric methods [21,22,23] to locate space targets. These methods do not need a motion model for the space target; they rely only on the rotation relationship between the space target and its IR imaging position, so the computational load is small and suitable for onboard processing. Given the relationships among position, velocity, and time, it is also feasible to use these methods to estimate velocity. Limited by detector performance, long-distance objects appear as small dots on the imaging plane. In the absence of prior information, single-satellite observation yields only angular information, so the distance between the target and the detector, and hence the accurate position and velocity of the target, cannot be obtained. The geometric method therefore usually requires two or more satellites to observe the target simultaneously.

There are many error sources in a space-based detection system, and they seriously affect the target positioning results [12,24]. How accurately the positioning information is fitted directly determines the accuracy of velocity estimation. Commonly used fitting methods are the Weighted Moving Average (WMA) [25], Least Mean Square (LMS) [26], the Savitzky–Golay filter [27], locally weighted regression (LWR) [28], and variants of these methods. WMA and its variants are used in various fields to estimate models and predict future events [29,30], but they respond slowly to sudden and fast-changing data, are sensitive to weight selection, and smooth poorly. LMS iteratively adjusts the filter weights to minimize the mean square of the prediction error [26]. However, LMS converges slowly and requires high signal correlation. The effectiveness of the classic Savitzky–Golay filter depends on the correct choice of polynomial degree; an unsuitable degree may lead to overfitting or to loss of detail. Ochieng et al. proposed an improved Adaptive Savitzky–Golay filter (ASG), which automatically adjusts its parameters by dynamically modifying the smoothing results to ensure high-precision feature extraction [31]. The LWR method adapts to the distribution and density of the data points and retains the local characteristics and nonlinear trends of the data. It is widely used in fields such as automatic driving [32], software engineering quantity estimation [33], and computed tomography [34].

Building on previous research, this study uses IR detectors mounted on multiple satellites separated by a certain distance, combined with robust locally weighted regression, to extract the velocity characteristics of dim space targets. The method does not need to assume the motion state of the space object, and it is more accurate and stable than the other methods considered. The three main contributions of this study are as follows:

(1) A multi-satellite observation model under space-based IR conditions is established, which solves the problem of insufficient information obtained by single-satellite observation.

(2) A robust locally weighted regression method is proposed for velocity estimation, which can accurately and quickly calculate the target velocity.

(3) The impacts of satellite position measurement error, satellite attitude angle measurement error, sensor pointing error, and pixel coordinate extraction error on velocity estimation accuracy are analyzed.

The remainder of this paper is organized as follows: In Section 2, the space-based IR multi-satellite observation model is established in detail; Section 3 presents a velocity estimation method based on robust locally weighted regression; Section 4 provides the simulation experiment results and an analysis of the impact factors; and, finally, Section 5 provides the conclusions.

2. Space-Based IR Multi-Satellite Observation Model

When a single IR detector observes a target, only the angular information of the target can be obtained by combining the coordinates of the imaging point with the optical system parameters. Thus, the greatest estimation uncertainty always lies along the LOS direction, and the target position and velocity cannot be accurately obtained [35]. Moreover, due to the limitations of single-satellite observation, the continuity of the observation is usually unsatisfactory. Using multiple IR detectors mounted on different satellites overcomes these limitations when extracting the velocity characteristics of space targets [36]. This section presents the complete derivation of the space-based multi-satellite observation model. First, the overall space target observation model is derived; next, the position of the target IR imaging point is extracted using the gray-weighted centroid method; finally, the multi-satellite cross-positioning model is obtained.

2.1. Space Target Observation Model

Observing a space target amounts to solving a projection problem: the position of the target in three-dimensional space is related to its position on the two-dimensional image plane through the full-link projection transformation between the target and the sensor [37]. Solving for the target position requires establishing several coordinate frames and the conversions between them. As shown in Figure 1, the process involves four coordinate transformations of the target position vector. The specific transformations are as follows.

Assume that the position vectors of the space target and the satellite in the Earth-Centered Inertial (ECI) coordinate frame (

) are

and

, the latitude angle is

, the orbital inclination angle is

, and the Right Ascension of the Ascending Node (RAAN) is

. According to the coordinate rotation adjustment relationship, the position vector

of the space target in the satellite orbital coordinate frame (

) can be calculated:

where

is the matrix 2-norm;

represents the transition matrix from the ECI coordinate frame to the satellite orbit coordinate frame;

denotes the rotation matrix; and

refers to the coordinate axis adjustment matrix, expressed as follows.

The three-axis attitude angles of the observation satellite relative to the satellite orbit coordinate frame are roll angle

, pitch angle

, and yaw angle

. According to the rotation of the satellite attitude, the target position vector

under the satellite body coordinate frame (

) can be obtained as:

where

is the transition matrix from the satellite orbital coordinate frame to the satellite body coordinate frame. When the three attitude angles are all 0,

.

Upon rotating the position vector

by the azimuth angle

around the

axis, and then rotating it by the elevation angle

around the

axis, the position vector

in the sensor coordinate frame (

) is obtained:

where

is the transition matrix from the satellite body coordinate frame to the sensor coordinate frame.

The Z-axes of the sensor coordinate frame and the image plane coordinate frame coincide. Assume that the component length of each coordinate axis of the target in the sensor coordinate frame is

. According to the principle of lens imaging, the object and image are conjugate. Therefore, the imaging point coordinates are

where

is the focal length, and

represents the component length of the target on the

axis. The coordinate value of the imaging point is rounded and translated to obtain the target pixel coordinate

:

where

represents the pixel size,

is the rounding operation, and

and

denote the number of pixels in the rows and columns of the image plane, respectively.

By combining Equation (1) to Equation (9), the overall observation model of the target projected from the three-dimensional space to the image plane position can be obtained:

where

is the formula for transformation from the sensor coordinate frame to the image plane coordinate frame (

).
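The last step of this chain, from the sensor coordinate frame to pixel coordinates, can be sketched as follows. This is a minimal illustration under stated assumptions rather than the exact formulas of this section: the function name `project_to_pixel`, the sign convention of the image axes, and the placement of the optical axis at the center of the array are all hypothetical choices.

```python
import numpy as np

def project_to_pixel(p_sensor, focal_length, pixel_size, n_rows, n_cols):
    """Project a sensor-frame point to integer pixel coordinates.

    Assumptions (not taken from the paper): the optical axis is +Z,
    it pierces the array at its center, and image axes are not flipped.
    """
    x_s, y_s, z_s = (float(c) for c in p_sensor)
    if z_s <= 0.0:
        raise ValueError("target must lie in front of the sensor (z > 0)")
    # Pinhole (conjugate object/image) relation: scale by f / z.
    x_img = focal_length * x_s / z_s
    y_img = focal_length * y_s / z_s
    # Convert to pixel units, round, and shift the origin to the array corner.
    col = int(np.round(x_img / pixel_size)) + n_cols // 2
    row = int(np.round(y_img / pixel_size)) + n_rows // 2
    return row, col
```

With a 0.5 m focal length and 25 µm pixels, for example, a target offset by one part in a thousand of its range along the sensor X-axis lands 20 pixels from the array center.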

2.2. Target IR Image Plane Extraction

Ideally, the target is imaged as a dispersed spot on the IR sensor. During a long exposure, however, a fast-moving space target is imaged as a strip source whose orientation depends on its direction of motion. To describe this, the angle-dependent anisotropic Gaussian spread function [38] is introduced as follows:

where coordinates

and

are the positions of the target center and the distributed pixels,

represents the rotation angle of the strip target image,

refers to the maximal energy, and

and

are the horizontal and vertical spread parameters of the dispersed spot, respectively. The imaging of an ideal target and a space moving target is shown in Figure 2.
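A rotated anisotropic Gaussian of this kind can be sketched in a few lines. The parameterization below (rotation of the pixel offsets by the strip angle, followed by independent spreads along the rotated axes) is the common textbook form and is assumed here; the function and argument names are illustrative only.

```python
import numpy as np

def anisotropic_gaussian(shape, center, theta, peak, sigma_x, sigma_y):
    """Render a rotated anisotropic Gaussian spot (assumed standard form).

    shape   : (rows, cols) of the image
    center  : (x0, y0) target center in pixel coordinates (x = column, y = row)
    theta   : rotation angle of the strip, in radians
    peak    : maximal energy at the center
    sigma_x : spread along the strip direction
    sigma_y : spread across the strip direction
    """
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]].astype(float)
    dx, dy = xs - center[0], ys - center[1]
    # Rotate the pixel offsets into the strip-aligned frame.
    u = dx * np.cos(theta) + dy * np.sin(theta)
    v = -dx * np.sin(theta) + dy * np.cos(theta)
    return peak * np.exp(-0.5 * ((u / sigma_x) ** 2 + (v / sigma_y) ** 2))
```

Setting `sigma_x` equal to `sigma_y` recovers the ideal circular spot, while `sigma_x` larger than `sigma_y` produces a strip oriented at angle `theta`.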

Due to point spread and other reasons, the energy of the target imaging point is dispersed to the surrounding pixels, which affects the accurate pixel coordinates of the target imaging point [39,40]. The target imaging region is divided into rectangular regions. Assuming that the X-axis coordinate range of the region is

and the Y-axis coordinate range is

, the pixel coordinates of the target imaging point

are expressed as

When the centroid method is used, , is the gray value of the point in the rectangular region, and represents the average gray value of background and noise. When the gray-weighted centroid method is used, . When the threshold centroid method is employed, , and is the set threshold. The gray-weighted centroid method is widely used in engineering because of its simple calculation and high positioning accuracy, and the fact that no new parameters are introduced. Therefore, this study used the gray-weighted centroid method to extract the pixel coordinates of the target imaging point.
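As an illustration of centroid extraction over a rectangular region, the sketch below computes an intensity-weighted centroid. The exact weight definitions of the three variants are not reproduced here, so the weight used (gray value minus an optional background level, clipped at zero) is an assumption, as are the function and argument names.

```python
import numpy as np

def weighted_centroid(window, x0=0, y0=0, background=0.0):
    """Sub-pixel centroid of a rectangular gray-level window.

    window     : 2-D array of gray values (rows = Y, columns = X)
    x0, y0     : pixel coordinates of the window's top-left corner
    background : assumed mean gray level of background and noise
    The weight (gray value minus background, floored at 0) is an
    illustrative choice, not necessarily the paper's definition.
    """
    w = np.clip(np.asarray(window, dtype=float) - background, 0.0, None)
    total = w.sum()
    if total == 0.0:
        raise ValueError("window contains no signal above the background")
    ys, xs = np.mgrid[0:w.shape[0], 0:w.shape[1]]
    cx = x0 + (xs * w).sum() / total
    cy = y0 + (ys * w).sum() / total
    return cx, cy
```

Because the weights are the pixel energies themselves, the estimate shifts smoothly toward the brighter side of the spot and therefore resolves the target position below the pixel level.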

2.3. Multi-Satellite Cross-Positioning

If the space target is in the satellite position (

, Equation (1) can be converted to

, that is,

. Combined with the formulae in

Section 2.1, the target position vector

in the sensor coordinate frame satisfies

If

, then

is the overall transition matrix from the sensor coordinate frame to the ECI coordinate frame. Combined with the results of the image plane position extraction and Equation (9), the target coordinates in the image plane coordinate frame can be obtained:

The target unit direction vector in the sensor coordinate frame is

The unit direction vector is converted to the ECI coordinate frame using , and is the unit LOS vector of the target in the ECI coordinate frame.

Single-satellite observation can only provide angular information about the space target; to accurately determine the target position, at least two satellites are needed. In this study, the principle of multi-satellite cross-positioning was used to determine the three-dimensional position of space targets from angle-only information, as shown in Figure 3. According to the geometric relationship between the target and the IR detector in the ECI coordinate frame, the following equations are established:

where

is the number of satellites that simultaneously observe the target, and

represents the distance between the target position vector

and the satellite position vector

. Multiplying the two ends of the equation by

gives

The above equations are expanded in three dimensions:

Equation (18) is the target positioning equation. By combining the positioning equations of

satellites, the equation can be written as

The above equation is simplified as

Since there are three unknowns and the number of equations is

, the system is overdetermined and in general has no exact solution when

≥ 2. By using the least squares method to estimate the optimal solution, the target spatial position

in the ECI coordinate frame can be obtained:
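The least squares step can be sketched numerically. The formulation below is one common way to eliminate the unknown ranges (projecting onto the plane orthogonal to each LOS vector) and is assumed for illustration; it is not necessarily the same elimination as in the positioning equations of this section, although both lead to a linear least squares problem in the three position unknowns. The function name `cross_position` is hypothetical.

```python
import numpy as np

def cross_position(sat_positions, los_units):
    """Least squares target position from angle-only observations.

    sat_positions : iterable of satellite position vectors (ECI), shape (3,)
    los_units     : iterable of LOS vectors (ECI) from each satellite to the target
    For each satellite, (I - l l^T)(r - s) = 0 states that the target r lies
    on the line through s with direction l; stacking these gives A r = b.
    """
    rows, rhs = [], []
    for s, l in zip(sat_positions, los_units):
        s = np.asarray(s, dtype=float)
        l = np.asarray(l, dtype=float)
        l = l / np.linalg.norm(l)
        proj = np.eye(3) - np.outer(l, l)   # projector orthogonal to the LOS
        rows.append(proj)
        rhs.append(proj @ s)
    if len(rows) < 2:
        raise ValueError("at least two satellites are required")
    a = np.vstack(rows)
    b = np.concatenate(rhs)
    r, *_ = np.linalg.lstsq(a, b, rcond=None)
    return r
```

With noise-free LOS vectors the lines intersect and the solution is exact; with measurement errors the estimate is the point that minimizes the sum of squared perpendicular distances to the observation lines.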

3. Velocity Estimation Based on Robust Locally Weighted Regression

If the spatial positions obtained under multi-satellite observation are used directly to solve for the velocity, large errors result, for two reasons: (1) The least squares method estimates the position at each sampling point independently, so the estimates at consecutive sampling points are prone to inconsistent errors, which seriously affects the calculation of instantaneous velocity. (2) There are many error sources that affect the target positioning accuracy in the space-based IR surveillance system, which lead to a large number of outliers in the target position. This section takes both factors into account, proposes a velocity estimation method based on robust locally weighted regression, and analyzes the velocity estimation accuracy.

3.1. Locally Weighted Regression

Locally weighted regression (LWR) is a non-parametric regression method that assigns a weight to each observation and uses weighted least squares to perform polynomial regression fitting [28]. Unlike ordinary linear regression, LWR does not fit the entire data set globally but fits the data around each point to be predicted, thereby capturing local features and variations more accurately. After obtaining the target position for multiple frames of images, a three-axis position sequence

,

, and

is formed. The LWR method is used to perform regression on the position sequence, and

,

, and

are obtained.

Take

as an example. Select an appropriate window length

, and take the serial number point

corresponding to

as the center to form a serial number segment

. Standardize the serial number segment in the window:

where

. The numerical weights within the window are determined by the following cubic weighting function:

Use the least squares method to calculate the estimated value of the regression coefficient

for each observation point

with weight

. The obtained

is the fitting value at

, and the regression equation is

3.2. Robust Locally Weighted Regression

Robust locally weighted regression (RLWR) is an LWR-based method designed to deal with data sets that contain outliers or abnormal points. For such data sets, RLWR can fit the data more accurately and improve the robustness of the regression. Unlike the LWR method in Section 3.1, the weight function used in RLWR is relatively insensitive to outliers, and its weighting function is defined as

Unlike the cubic weight function in Equation (23), the quadratic power in Equation (25) reduces the surrounding weights more slowly and has a more balanced impact on adjacent data. This reduces volatility during the robust iterations and yields more stable results.

After obtaining the regression Equation (24) using the LWR method, the robustness enhancement process begins. Let

be the residual of the fitting value, and

be the median value of

. The weight correction coefficient is defined as

The corrected weight then replaces the original weight at each point, and p-order polynomial fitting is performed by the least squares method to calculate the new fitting value. The robust enhancement process is repeated a set number of times; the final fitting value is the robust locally weighted fitting value.

Traditional LWR is sensitive to outliers: it assigns higher weights to neighboring points that are closer to the target point regardless of their residuals, so an outlier near the target point can cause significant deviations in the fitting result. RLWR introduces a correction coefficient for the weights. During the iterations, the weights are continuously corrected by updating the correction coefficients, which improves the robustness of the algorithm.

All the above steps are performed for all the position points to obtain the processed

. In this study, the window length was 50, the polynomial degree was 1, and the number of iterations was 2. Detailed parameter analysis is described in Section 4.3.1.
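The procedure of Sections 3.1 and 3.2 can be sketched for a single position axis as follows. This is a minimal illustration that assumes the classical forms of the two weight functions, the tricube $(1-|u|^3)^3$ for the distance weights and the bisquare $(1-u^2)^2$ for the correction coefficients, with residuals scaled by six times the median absolute residual. These forms, the handling of the window at the ends of the sequence, and the function names are assumptions, not a reproduction of Equations (22) to (26).

```python
import numpy as np

def tricube(u):
    u = np.abs(u)
    return np.where(u < 1.0, (1.0 - u ** 3) ** 3, 0.0)

def bisquare(u):
    u = np.abs(u)
    return np.where(u < 1.0, (1.0 - u ** 2) ** 2, 0.0)

def rlwr(y, window=50, degree=1, iterations=2):
    """Robust locally weighted regression of an evenly sampled sequence.

    Runs one plain LWR pass followed by `iterations` robust passes and
    returns the fitted value at every sample.
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    t = np.arange(n, dtype=float)
    half = window // 2
    delta = np.ones(n)              # correction coefficients (1 on the first pass)
    fitted = np.empty(n)
    for _ in range(iterations + 1):
        for i in range(n):
            start, stop = max(0, i - half), min(n, i + half + 1)
            idx = np.arange(start, stop)
            # Standardized index distance; half + 1 keeps edge weights nonzero.
            u = (t[idx] - t[i]) / (half + 1)
            w = tricube(u) * delta[idx]
            # np.polyfit weights the unsquared residuals, hence the sqrt.
            coeffs = np.polyfit(t[idx] - t[i], y[idx], degree, w=np.sqrt(w))
            fitted[i] = coeffs[-1]  # value of the local polynomial at offset 0
        resid = y - fitted
        s = np.median(np.abs(resid))
        if s == 0.0:
            break                   # perfect fit, nothing left to down-weight
        delta = bisquare(resid / (6.0 * s))
    return fitted
```

Applying `rlwr` separately to the three axis sequences gives the smoothed positions from which the velocity is computed. The double loop is written for clarity rather than speed.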

3.3. Velocity Estimation and Accuracy Analysis

Taking the velocity calculation interval as

, the velocity of the target is

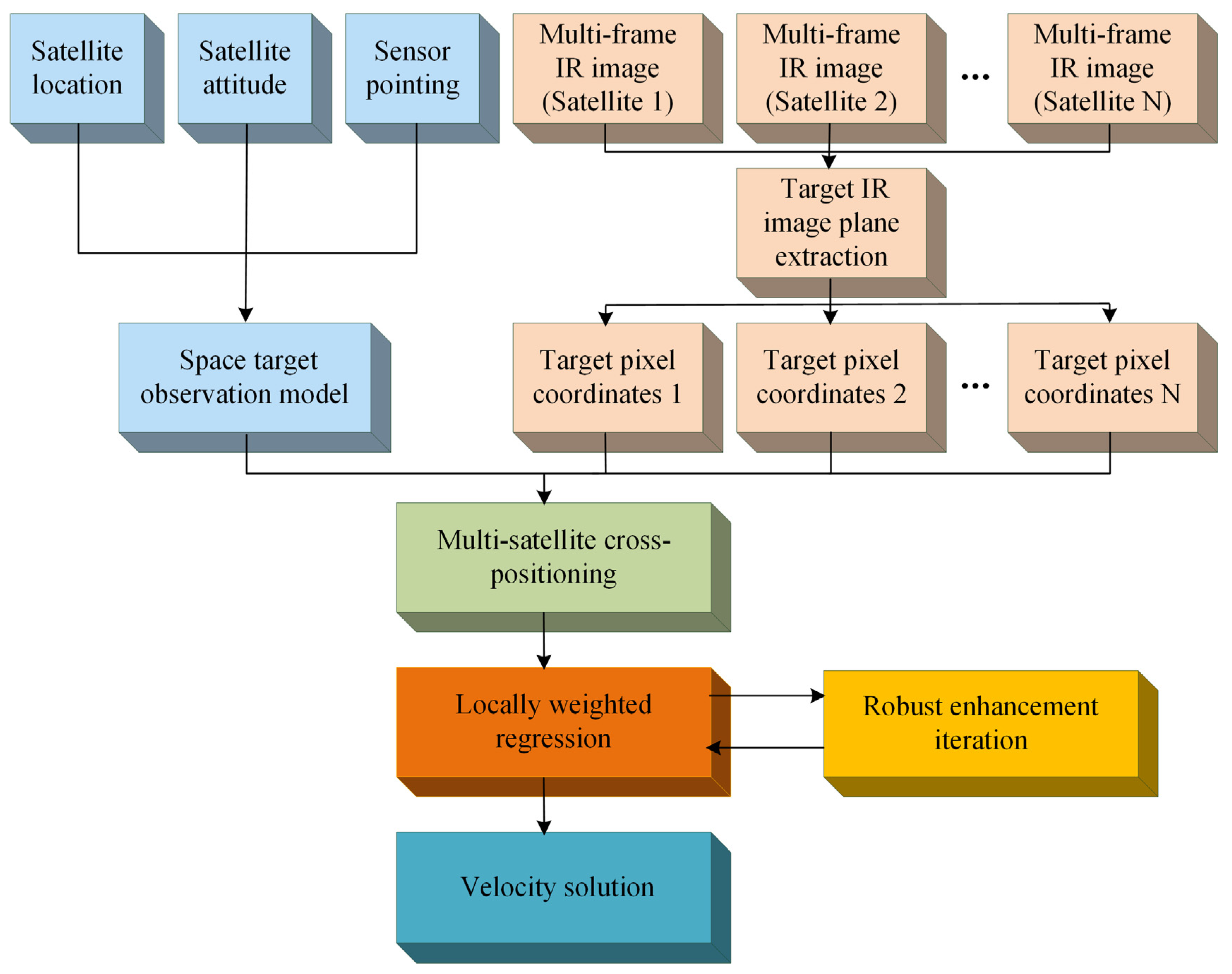
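Differencing the smoothed positions over the velocity calculation interval can be sketched as below. A simple forward difference over a whole number of samples is assumed here purely for illustration; the differencing scheme and the names `finite_difference_velocity`, `dt`, and `step` are hypothetical.

```python
import numpy as np

def finite_difference_velocity(positions, dt, step=1):
    """Velocity from a smoothed position sequence by forward differencing.

    positions : array of shape (N, 3), smoothed ECI positions per frame
    dt        : sampling period between consecutive frames
    step      : number of frames spanned by the velocity calculation interval
    Returns the velocity components, shape (N - step, 3), and the speed.
    """
    positions = np.asarray(positions, dtype=float)
    velocity = (positions[step:] - positions[:-step]) / (step * dt)
    speed = np.linalg.norm(velocity, axis=1)
    return velocity, speed
```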

The flowchart of the proposed space IR target velocity estimation method is shown in Figure 4. The whole technical process is mainly divided into two parts: (1) Establish the space target observation model, extract the pixel coordinates of the target on different satellite IR image planes, and use multi-satellite cross-positioning to solve the target spatial position; (2) Perform locally weighted regressions of multiple robust enhancement iterations on the target spatial position and, finally, solve for the velocity.

The velocity estimation accuracy is mainly affected by the target measurement error, which is composed of the satellite position error, satellite attitude angle error, sensor pointing error, and target pixel coordinate extraction error [12,41], as can be seen from Figure 4. Assume that the measurement errors of the satellite position, satellite attitude angles, sensor pointing, and pixel coordinate extraction all follow normal distributions with a mean of 0 and standard deviations of

,

,

, and

, respectively. First, these error values are added to the true values, and then, the proposed method is used to calculate the target velocity. Finally, the calculated velocity is compared with the true velocity to obtain the velocity estimation error. The velocity estimation error model obtained using the Monte Carlo method is as follows:

where

is the real velocity of the target;

represents the velocity estimation result after adding random errors to each input; and

denotes the number of Monte Carlo simulations.

The velocity estimation accuracy during the observation phase is evaluated using the Mean Absolute Error (MAE) and Root Mean Square Error (RMSE). The estimation results of the velocity components in the X-axis, Y-axis, and Z-axis directions and the overall velocity are evaluated separately. The calculation formulas are as follows:

where

represents the number of observation points.
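The two metrics follow their standard definitions and can be computed as in the sketch below, which evaluates them along the observation axis so that per-axis components and the overall velocity can be passed in the same way. The function names are illustrative.

```python
import numpy as np

def mae(estimate, truth, axis=0):
    """Mean Absolute Error over the observation points."""
    diff = np.asarray(estimate, dtype=float) - np.asarray(truth, dtype=float)
    return np.mean(np.abs(diff), axis=axis)

def rmse(estimate, truth, axis=0):
    """Root Mean Square Error over the observation points."""
    diff = np.asarray(estimate, dtype=float) - np.asarray(truth, dtype=float)
    return np.sqrt(np.mean(diff ** 2, axis=axis))
```

For an `(N, 3)` array of velocity components, both functions return one value per axis; for a length-`N` array of speeds they return a single value.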

5. Discussion

The proposed method significantly improves the velocity estimation accuracy of space IR dim targets in different scenarios, which we believe is related to two characteristics.

(1) Multi-satellite observation. Satellite position and attitude are known quantities that are easily obtained in real time in space-based IR surveillance systems, and they form an important basis for velocity estimation based on physical principles. Multi-satellite cooperation is conducive to long-term and wide-area observation of moving dim targets. As mentioned in Section 4.3.3, increasing the number of observation satellites effectively mitigates the loss of estimation accuracy caused by measurement errors.

(2) Robust locally weighted regression. The target position vectors obtained by multi-satellite cross-positioning contain a large number of outliers. In the process of robust enhancement, a weighting function is introduced to reduce the weight of sampling points that are far away, so that slightly abnormal points are smoothed out. Iteratively modifying the weights improves the robustness of the algorithm. In addition, local fitting preserves the nonlinear relationships in the data, which helps capture their characteristics and regularities and benefits the establishment of complex models.

This research focuses on the velocity estimation of space IR dim targets, and has achieved certain results. However, there are still some shortcomings in the research work:

(1) Our research object is the space IR dim moving target. However, collecting enough real-world data for comprehensive experiments and analyses is very challenging, involving many limitations and huge costs. Therefore, we adopted a Monte Carlo (MC)-based random error generation method in the experimental design. This method can simulate the noise, uncertainty, and variation of the actual environment, and it is highly flexible and controllable. By using the MC method to generate a series of different error cases, we can comprehensively evaluate the performance of the proposed method. It should be pointed out that simulated data have certain limitations. Despite our efforts to design and generate realistic data, it is still difficult to fully simulate complex real-world environments. In future research, we will further consider validation based on real-world data.

(2) Although the proposed method performs well, there is still room for improvement in its running time. Follow-up research should seek optimizations that reduce the consumption of computing resources so that the method can be more readily deployed onboard satellites.

(3) This paper only briefly discusses the influence of the number of satellites on velocity estimation, but the variable orbits and complex parameters of satellites in orbit will inevitably affect velocity estimation. Therefore, the influence of satellite parameters needs to be further explored in the future to meet the application requirements in more scenarios.