Abstract

Hyperspectral feature extraction is one of the most popular topics in the remote sensing community. However, most hyperspectral feature extraction methods are based on region-based local information descriptors while neglecting the correlation and dependencies of different homogeneous regions. To alleviate this issue, this paper proposes a multi-view structural feature extraction method to furnish a complete characterization of the spectral–spatial structures of different objects, which consists of the following key steps. First, the spectral dimension of the original image is reduced with the minimum noise fraction (MNF) method, and relative total variation is exploited to extract the local structural feature from the dimension-reduced data. Then, with the help of a superpixel segmentation technique, the nonlocal structural features from intra-view and inter-view are constructed by considering the intra- and inter-similarities of superpixels. Finally, the local and nonlocal structural features are merged to form the final image features for classification. Experiments on several real hyperspectral datasets indicate that the proposed method outperforms other state-of-the-art classification methods in terms of visual performance and objective results, especially when the number of training samples is limited.

1. Introduction

A hyperspectral image (HSI) records hundreds of contiguous spectral channels, and thus provides a unique ability to identify different types of materials. Owing to this merit, hyperspectral imaging has been extensively applied in various fields, such as land cover mapping [1,2], object detection [3,4], and environment monitoring [5,6]. Over the past few years, hyperspectral image classification has made great progress because of its significance in mineral mapping, urban investigation, and precision agriculture. Nevertheless, the object spectrum is usually affected by the imaging equipment and imaging environment, resulting in high spectral mixture among different land covers.

To alleviate this problem, hyperspectral feature extraction methods have been widely studied to improve the class discrimination among different land covers. Some representative manifold learning tools [7,8,9,10] have been successfully used as feature extractors for HSIs, such as principal component analysis (PCA) [8], minimum noise fraction (MNF) [10], and independent component analysis (ICA) [9]. However, most of these techniques only utilize the spectral information of different objects, and thus fail to achieve satisfactory classification performance.

To fully exploit the spectral and spatial characteristics in HSIs, a large number of spectral–spatial feature extraction techniques have been developed [11,12,13,14,15]. For instance, Marpu et al. developed attribute profiles (APs) to extract discriminative features of HSIs by using morphological operations [12]. Mura et al. studied extended morphological attribute profiles (EMAP) to characterize HSIs by using a series of morphological attribute filters [13]. Kang et al. developed an edge-preserving filtering method for hyperspectral feature extraction to remove low-contrast details [14]. Duan et al. modeled a hyperspectral image as a linear combination of a structural profile and texture information, in which the structural profile was used as the spatial feature [15]. After that, many improved approaches have also been studied for classification of HSIs, such as ensemble learning [16,17], semi-supervised learning [18,19], and active learning [20,21]. For instance, in [17], a random feature ensemble method was proposed by using ICA and edge-preserving filtering to boost the classification accuracy of HSIs. In [18], a rolling guidance filter-based semi-supervised classification method was developed in which an extended label propagation technique was utilized to expand the training set.

In addition, with the development of deep learning, many deep models have been applied to extract the high-order semantic features of HSIs. For example, Chen et al. presented a 3D convolutional neural network (CNN)-based feature extraction technique in which dropout and regularization were adopted to prevent overfitting for class imbalance [22]. Liu et al. proposed a Siamese CNN method with a margin ranking loss function to improve the discrimination of different objects [23]. Liu et al. designed a mixed deep feature extraction technique by combining the pixel frequency spectrum features obtained by fast Fourier transform and spatial features. Lately, various improved versions have also been investigated to increase the classification accuracy [24,25,26,27,28]. For example, Hang et al. designed an attention-guided CNN method to extract spectral–spatial information, in which a spectral attention module and a spatial attention module were considered to capture the spectral and spatial information, respectively [26]. Hong et al. improved the transformer network to characterize the sequence attributes of spectral information, where a cross-layer skip connection was used to fuse spatial features from different layers [27].

To better characterize spectral–spatial information, the multi-view technique, which aims to reveal the data characteristics from diverse aspects and provide multiple features for model learning, has been applied in hyperspectral feature extraction [20,29,30]. In more detail, the raw data are first transformed into different views (e.g., attributes, feature subsets), and then the complementary information of all views is integrated to achieve a more accurate classification result. For example, in [20], Li et al. proposed a multi-view active learning method for hyperspectral image classification, where the subpixel-level, pixel-level, and superpixel-level information were jointly used to achieve a better identification ability. Xu et al. proposed multi-view attribute components for classification of HSIs, in which an intensity-based query scheme was used to expand the training set [29]. In general, these methods can increase the classification performance because of the multi-view strategy. However, most of them only utilize the local neighboring information without considering the correlation of pixels in the nonlocal region. Based on the above analysis, it is necessary to develop a novel multi-view method to further boost the classification performance by jointly using the dependencies of pixels in the local and nonlocal regions.

In this work, we propose a novel multi-view structural feature extraction method, which consists of several key steps. First, the spectral dimension of the original data is reduced to increase the computational efficiency. Then, three structural features from different views are constructed to characterize varying land covers from different aspects. Finally, the different types of features are merged to increase the discrimination of different land covers, and the fused feature is fed into a spectral classifier to obtain the classification results. Experiments are performed on three benchmark datasets to quantitatively and qualitatively validate the effectiveness of the proposed method. The experimental results verify that the proposed feature extraction method can significantly outperform other state-of-the-art feature extractors. More importantly, our method obtains promising classification performance compared with other approaches in the case of limited training samples.

2. Method

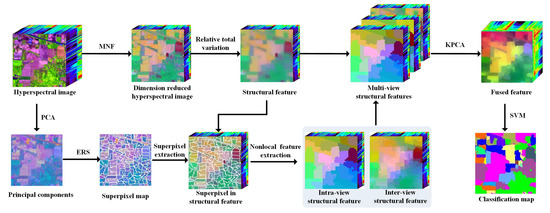

Figure 1 shows the flowchart of the multi-view structural feature extraction method, which consists of three key steps. First, the spectral dimension of the raw data is reduced with the MNF method. Then, the multi-view structural features, i.e., the local, intra-view, and inter-view structural features, are constructed based on the correlation of pixels in the local and nonlocal regions. Finally, the multi-view features are fused with the kernel PCA (KPCA) method, and the fused feature is fed into the support vector machine (SVM) classifier to obtain the classification map.

Figure 1.

The flow chart of the proposed multi-view structural feature extraction method.

2.1. Dimension Reduction

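To decrease the computing time and the influence of image noise, the minimum noise fraction (MNF) [31] is first exploited to decrease the spectral dimension of the original data. Specifically, let $\mathbf{X}$ denote the raw data. The MNF seeks a transform matrix $\mathbf{W}$ that maximizes the signal-to-noise ratio of the transformed data:

$$\mathbf{Y} = \mathbf{W}^{\mathsf{T}} \mathbf{X} \tag{1}$$

where $\mathbf{Y}$ is the dimension-reduced data, and $\mathbf{W}$ is the transform matrix, which can be estimated as

$$\mathbf{W} = \arg\max_{\mathbf{W}} \frac{\mathbf{W}^{\mathsf{T}} \boldsymbol{\Sigma}_{X} \mathbf{W}}{\mathbf{W}^{\mathsf{T}} \boldsymbol{\Sigma}_{n} \mathbf{W}} \tag{2}$$

where $\mathbf{X}$ is regarded as a linear combination of the uncorrelated signal $\mathbf{X}_{s}$ and noise $\mathbf{X}_{n}$, and $\boldsymbol{\Sigma}_{X} = \boldsymbol{\Sigma}_{s} + \boldsymbol{\Sigma}_{n}$, in which $\boldsymbol{\Sigma}_{s}$ and $\boldsymbol{\Sigma}_{n}$ denote the covariance matrices of $\mathbf{X}_{s}$ and $\mathbf{X}_{n}$. In this work, the first L components are preserved for the following feature extraction.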
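As a rough illustration of the dimension reduction step, the following NumPy sketch computes an MNF-style transform by whitening an estimated noise covariance and then diagonalizing the data covariance. The function name `mnf` and the shift-difference noise estimate are assumptions made for this example; the paper does not state how the noise covariance is estimated.

```python
import numpy as np

def mnf(cube, n_components):
    """MNF-style reduction of a cube with shape (rows, cols, bands)."""
    cube = np.asarray(cube, dtype=float)
    rows, cols, bands = cube.shape
    X = cube.reshape(-1, bands)
    X = X - X.mean(axis=0)

    # Assumption: noise is estimated from differences of horizontal neighbors.
    noise = (cube[:, 1:, :] - cube[:, :-1, :]).reshape(-1, bands) / np.sqrt(2.0)
    cov_noise = np.cov(noise, rowvar=False)
    cov_data = np.cov(X, rowvar=False)

    # Whiten the noise so that whiten.T @ cov_noise @ whiten is the identity.
    evals_n, evecs_n = np.linalg.eigh(cov_noise)
    whiten = evecs_n / np.sqrt(np.maximum(evals_n, 1e-12))

    # Eigenvectors of the noise-whitened data covariance,
    # ordered by decreasing data-to-noise variance ratio.
    evals, evecs = np.linalg.eigh(whiten.T @ cov_data @ whiten)
    order = np.argsort(evals)[::-1][:n_components]
    transform = whiten @ evecs[:, order]
    reduced = (X @ transform).reshape(rows, cols, n_components)
    return reduced, transform
```

Calling `mnf(cube, 30)` would keep 30 components, matching the value of L selected later in the parameter analysis.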

2.2. Multi-View Feature Generation

Since the imaging scene contains different types of land covers with different spatial sizes, a single structural feature cannot comprehensively characterize the spatial information of diverse objects. To alleviate this problem, a multi-view structural feature extraction method is proposed. Specifically, three different types of structural features are generated, namely the local, intra-view, and inter-view structural features.

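(1) Local structural feature: The local structural feature aims to remove useless details (e.g., image noise and texture) and preserve the intrinsic spectral–spatial information. Specifically, a relative total variation technique [32] is performed on the dimension-reduced data $\mathbf{Y}$ to construct the local structural feature $\mathbf{F}^{1}$, which is expressed as

$$\mathbf{F}^{1} = \arg\min_{\mathbf{F}} \sum_{i=1}^{T} \left[ (F_i - Y_i)^2 + \lambda \left( \frac{\mathcal{D}_x(i)}{\mathcal{L}_x(i) + \varepsilon} + \frac{\mathcal{D}_y(i)}{\mathcal{L}_y(i) + \varepsilon} \right) \right] \tag{3}$$

where T represents the total number of pixels, $\mathbf{F}$ stands for the desired structural feature, $\lambda$ denotes a smoothing weight, and $\varepsilon$ is adopted to prevent division by zero. $\mathcal{D}_x$, $\mathcal{D}_y$ and $\mathcal{L}_x$, $\mathcal{L}_y$ indicate the windowed variations in the two directions. Equation (3) is solved as described in [32].

$$\mathcal{D}_x(i) = \sum_{j \in R(i)} g_{i,j} \left| (\partial_x \mathbf{F})_j \right|, \qquad \mathcal{L}_x(i) = \left| \sum_{j \in R(i)} g_{i,j} (\partial_x \mathbf{F})_j \right| \tag{4}$$

where $\partial_x$ and $\partial_y$ stand for the partial derivatives in the two directions ($\mathcal{D}_y$ and $\mathcal{L}_y$ are defined analogously with $\partial_y$), the sums measure the spatial similarity within a local window $R(i)$, and $g_{i,j}$ is a weight.

$$g_{i,j} \propto \exp\left( -\frac{(x_i - x_j)^2 + (y_i - y_j)^2}{2\sigma^2} \right) \tag{5}$$

where $\sigma$ denotes the window size.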

(2) Intra-view structural feature: The intra-view structural feature aims to reduce the spectral difference of pixels belonging to the same land cover and to increase the spectral purity in homogeneous regions. To extract it, an entropy rate superpixel (ERS) segmentation method [33] is first adopted to obtain the homogeneous regions of the same object. In more detail, PCA is first conducted on the local structural feature to obtain the first three components, and then the ERS segmentation scheme is utilized to obtain a 2D superpixel map.

The number of superpixels, T, is determined from three quantities: L, which is empirically selected in this work; the number of nonzero pixels in the edge map obtained by applying a Canny filter to the base image; and N, the total number of pixels in the base image. Based on the position indexes of the pixels in each 2D superpixel, the corresponding 3D superpixels are obtained.

Then, mean filtering is conducted on each 3D superpixel to calculate its average value. Finally, the pixels in each superpixel are assigned this average value to obtain the intra-view structural feature.
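The averaging step described above can be sketched as follows. The superpixel label map is assumed to be given (for example, produced by an ERS implementation, which is not included here), with labels numbered from 0. The function name `intra_view_feature` is hypothetical.

```python
import numpy as np

def intra_view_feature(features, labels):
    """Replace every pixel by the mean of its superpixel.

    features: (rows, cols, bands) array; labels: (rows, cols) integer map
    with superpixel ids 0..n-1 (assumed to come from a prior segmentation).
    """
    rows, cols, bands = features.shape
    flat = features.reshape(-1, bands).astype(float)
    lab = labels.ravel()
    n = int(lab.max()) + 1

    counts = np.bincount(lab, minlength=n)
    sums = np.zeros((n, bands))
    np.add.at(sums, lab, flat)  # accumulate the spectra of each superpixel
    means = sums / np.maximum(counts, 1)[:, None]

    # Broadcast each superpixel mean back to its pixels.
    return means[lab].reshape(rows, cols, bands), means
```

The second return value holds one mean spectrum per superpixel, which is convenient for any later step that operates on superpixels rather than pixels.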

(3) Inter-view structural feature: The intra-view structural feature is able to reduce the difference of pixels within each superpixel. However, the correlations between pixels of different superpixels are not considered. Thus, we construct an inter-view structural feature to improve the discrimination of different objects. Specifically, for the ith superpixel, a weighted mean operation is performed on its neighboring superpixels.

The obtained value is then assigned to all pixels in the ith superpixel to produce the inter-view structural feature.
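A minimal sketch of the weighted mean over neighboring superpixels is given below. The weighting is an assumption of this example: a Gaussian similarity between superpixel mean spectra with a hypothetical `bandwidth` parameter, restricted to superpixels that share a border. The weights actually used by the method may differ.

```python
import numpy as np

def inter_view_feature(means, labels, bandwidth=1.0):
    """Weighted mean over adjacent superpixels.

    means: (n_superpixels, bands) superpixel averages;
    labels: (rows, cols) integer map with ids 0..n_superpixels-1.
    """
    n = means.shape[0]
    adjacent = np.zeros((n, n), dtype=bool)
    # Two superpixels are neighbors if they share a horizontal or vertical border.
    pairs = ((labels[:, :-1], labels[:, 1:]), (labels[:-1, :], labels[1:, :]))
    for a, b in pairs:
        mask = a != b
        adjacent[a[mask], b[mask]] = True
        adjacent[b[mask], a[mask]] = True

    # Assumed weights: Gaussian similarity between superpixel mean spectra.
    dist2 = ((means[:, None, :] - means[None, :, :]) ** 2).sum(axis=2)
    weights = np.exp(-dist2 / (2.0 * bandwidth ** 2)) * adjacent

    totals = weights.sum(axis=1, keepdims=True)
    # Superpixels without neighbors keep their own mean.
    fused = np.where(totals > 0, weights @ means / np.maximum(totals, 1e-12), means)
    return fused[labels], fused
```

Here the current superpixel is excluded from its own weighted mean, following the wording "neighboring superpixels of the current superpixel"; including it would be an equally plausible reading.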

2.3. Feature Fusion

To make full use of the multi-view structural features, the KPCA technique [34] is used to merge the three types of features. Specifically, the three structural features are first stacked together. Then, the stacked data are projected into a high-dimensional space by using a Gaussian kernel function. Finally, the fused feature is calculated from the Gram matrix of the stacked data. In this paper, K-dimensional features are preserved in the fused feature. Once the fused feature is obtained, a spectral classifier, i.e., SVM, is used to examine the classification performance. To clearly show the whole procedure of the proposed method, Algorithm 1 presents pseudocode summarizing the key steps of our method.

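| Algorithm 1 Multi-view structural feature extraction |
| --- |
| Input: hyperspectral image I |
| Output: hyperspectral image feature |
| 1. Reduce the spectral dimension of I with MNF and keep the first L components (Section 2.1). |
| 2. Apply relative total variation to the dimension-reduced data to obtain the local structural feature (Section 2.2). |
| 3. Segment the PCA components of the local structural feature with ERS, and average each superpixel to obtain the intra-view structural feature. |
| 4. Compute a weighted mean over the neighboring superpixels of each superpixel to obtain the inter-view structural feature. |
| 5. Stack the three features and fuse them with KPCA, keeping K components (Section 2.3). |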
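The fusion step of Section 2.3 can be sketched with a plain NumPy kernel PCA, shown below. The function name `kpca_fuse` and the default kernel width `gamma` are assumptions for illustration. The sketch builds the full Gram matrix, so it is only practical for small inputs; a real image would need subsampling or an approximate solver.

```python
import numpy as np

def kpca_fuse(features, n_components, gamma=None):
    """Stack feature cubes and project them with Gaussian-kernel PCA.

    features: list of (rows, cols, bands_i) arrays with equal rows and cols.
    Returns an array of shape (rows, cols, n_components).
    """
    rows, cols = features[0].shape[:2]
    X = np.concatenate([f.reshape(rows * cols, -1) for f in features], axis=1)
    X = X.astype(float)
    n = X.shape[0]
    if gamma is None:
        gamma = 1.0 / X.shape[1]  # assumed default kernel width

    # Gaussian (RBF) Gram matrix from pairwise squared distances.
    sq = (X ** 2).sum(axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    K = np.exp(-gamma * dist2)

    # Center the Gram matrix in the kernel feature space.
    one = np.full((n, n), 1.0 / n)
    Kc = K - one @ K - K @ one + one @ K @ one

    evals, evecs = np.linalg.eigh(Kc)
    order = np.argsort(evals)[::-1][:n_components]
    evals, evecs = np.maximum(evals[order], 1e-12), evecs[:, order]
    # Projection of each sample onto the leading kernel principal axes.
    Z = evecs * np.sqrt(evals)
    return Z.reshape(rows, cols, n_components)
```

With the settings reported in the parameter analysis, `n_components` would be 20. The fused array can then be reshaped to one row per pixel and passed to any SVM implementation.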

3. Experiments

3.1. Experimental Setup

(1) Datasets: In the experimental section, three hyperspectral datasets, i.e., Indian Pines, Salinas, and Honghu, are used to examine the classification performance of the proposed feature extraction method. All these datasets are collected from a public hyperspectral database.

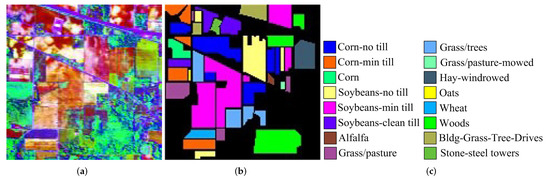

The Indian Pines dataset was obtained by the Airborne Visible Infrared Imaging Spectrometer (AVIRIS) sensor over the Indian Pines test scene in northwestern Indiana, and is available online (http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 10 January 2022)). This image is composed of 220 spectral bands spanning from 0.4 to 2.5 µm. The spatial size is 145 × 145 pixels with a spatial resolution of 20 m. Twenty water absorption channels (No. 104–108, 150–163, and 220) are discarded before the experiments. Figure 2a presents the false color composite image. Figure 2b shows the ground truth, which contains 16 different land covers. Figure 2c gives the class names.

Figure 2.

Indian Pines dataset. (a) False color composite. (b) Ground truth. (c) Label name.

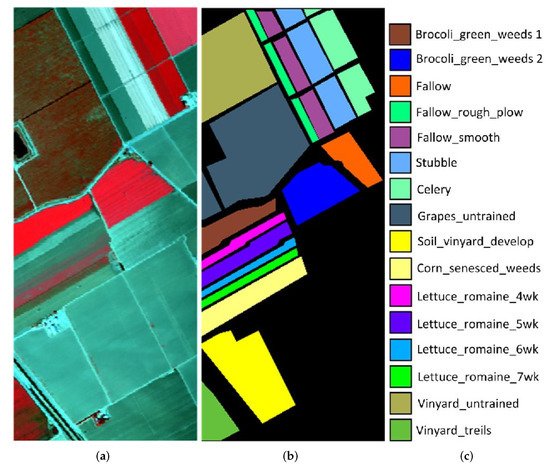

The Salinas dataset was collected by the AVIRIS sensor over Salinas Valley, California, USA, and is available online (http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 10 January 2022)). This image consists of 224 spectral channels with a spatial size of 512 × 217 pixels. The spatial resolution is 3.7 m. This scene is an agricultural region, which includes different types of crops, such as vegetables, bare soils, and vineyard fields. Twenty spectral bands (No. 108–112, 154–167, and 224) are discarded before the following experiments. Figure 3a gives the false color composite. Figure 3b displays the ground truth, which consists of 16 different land covers. Figure 3c shows the class names.

Figure 3.

Salinas dataset. (a) False color composite. (b) Ground truth. (c) Label name.

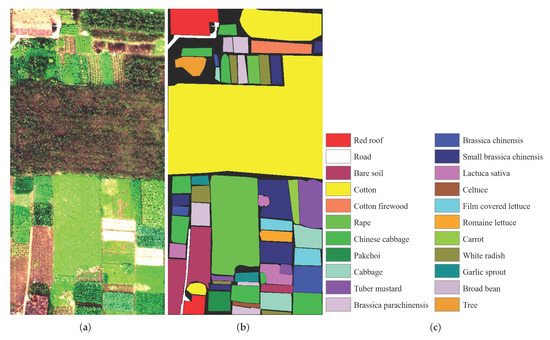

The Honghu dataset was captured by a 17 mm focal length Headwall Nano-Hyperspec imaging sensor over Honghu City, Hubei province, China, and is available online (http://rsidea.whu.edu.cn/e-resource_WHUHi_sharing.htm (accessed on 10 January 2022)). This image consists of 270 spectral channels ranging from 0.4 to 1.0 µm. The spatial size is 940 × 475 pixels with a spatial resolution of 0.043 m. This scene is a complex agricultural area, including various crops and different cultivars of the same crop. Figure 4 gives the false color composite, ground truth, and class names. Table 1 presents the training and test samples of all datasets used in the following experiments.

Figure 4.

Honghu dataset. (a) False color composite. (b) Ground truth. (c) Label name.

Table 1.

The numbers of training and test samples. The colors represent different land covers in the classification map.

(2) Evaluation Indexes: To quantitatively calculate the classification accuracies of all considered techniques, four extensively used objective indexes [35,36,37], i.e., class accuracy (CA), overall accuracy (OA), average accuracy (AA), and Kappa coefficient, are used. The definitions of all objective indexes are shown as follows:

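(1) CA: CA calculates the percentage of correctly classified pixels of each class in the total number of pixels of that class.

$$\mathrm{CA}_i = \frac{M_{ii}}{\sum_{j=1}^{C} M_{ij}}$$

where $\mathbf{M}$ is the confusion matrix obtained by comparing the ground truth with the predicted result, whose entry $M_{ij}$ counts the samples of class i assigned to class j, and C is the total number of categories.

(2) OA: OA assesses the proportion of correctly identified samples to all samples.

$$\mathrm{OA} = \frac{1}{N} \sum_{i=1}^{C} M_{ii}$$

where N is the total number of labeled samples, $\mathbf{M}$ is the confusion matrix, and C is the total number of categories.

(3) AA: AA represents the mean of the per-class percentages of correctly identified samples.

$$\mathrm{AA} = \frac{1}{C} \sum_{i=1}^{C} \frac{M_{ii}}{\sum_{j=1}^{C} M_{ij}}$$

where C is the total number of categories, and $\mathbf{M}$ is the confusion matrix.

(4) Kappa: The Kappa coefficient denotes the interrater reliability for categorical variables.

$$\mathrm{Kappa} = \frac{N \sum_{i=1}^{C} M_{ii} - \sum_{i=1}^{C} \left( \sum_{j=1}^{C} M_{ij} \right) \left( \sum_{j=1}^{C} M_{ji} \right)}{N^{2} - \sum_{i=1}^{C} \left( \sum_{j=1}^{C} M_{ij} \right) \left( \sum_{j=1}^{C} M_{ji} \right)}$$

where N is the total number of labeled samples, $\mathbf{M}$ is the confusion matrix, and C is the total number of categories.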
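The four indexes can be computed directly from a confusion matrix, as in the short sketch below. It assumes the convention that rows hold the true classes and columns the predicted classes; the function name `classification_scores` is hypothetical.

```python
import numpy as np

def classification_scores(confusion):
    """Return (CA per class, OA, AA, Kappa) from a confusion matrix.

    Assumed convention: confusion[i, j] counts class-i samples predicted as class j.
    """
    M = np.asarray(confusion, dtype=float)
    n = M.sum()
    ca = np.diag(M) / M.sum(axis=1)   # per-class accuracy
    oa = np.trace(M) / n              # overall accuracy
    aa = ca.mean()                    # average of the class accuracies
    # Agreement expected by chance, from the row and column marginals.
    expected = (M.sum(axis=1) * M.sum(axis=0)).sum() / n ** 2
    kappa = (oa - expected) / (1.0 - expected)
    return ca, oa, aa, kappa
```

For the two-class matrix `[[8, 2], [1, 9]]`, this gives class accuracies of 0.8 and 0.9, OA = AA = 0.85, and a Kappa of 0.7, since the chance agreement is 0.5.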

3.2. Classification Results

To examine the effectiveness of the proposed feature extraction method, several state-of-the-art hyperspectral classification methods are selected as competitors, including (1) the spectral classifier, i.e., SVM on the original image (SVM) [38]; (2) the feature extraction methods, i.e., the image fusion and recursive filtering (IFRF) [14], the extended morphological attribute profiles (EMAP) [13], multi-scale total variation (MSTV) [15], the PCA-based edge-preserving features (PCAEPFs) [8]; (3) the spectral–spatial classification methods, i.e., the superpixel-based classification via multiple kernels (SCMK) [39] and the generalized tensor regression approach (GTR) [40]. These methods are adopted because they are either highly cited publications in the remote sensing field or are recently proposed classification methods with state-of-the-art classification performance on several hyperspectral datasets. For all considered approaches, the default parameters follow the corresponding publications for a fair comparison.

3.2.1. Indian Pines Dataset

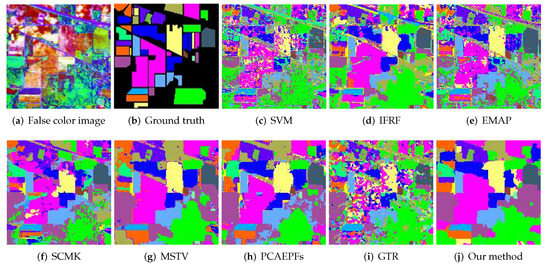

The first experiment is performed on the Indian Pines dataset, in which 1% of the labeled samples are randomly selected from the reference image for training (see Table 1). Figure 5 presents the classification maps of all considered methods on the Indian Pines dataset. As shown in this figure, the SVM method yields a very noisy classification result, exposing the disadvantage of a spectral classifier that does not consider spatial information. By removing image details and preserving the strong edge structures, the IFRF method greatly improves the classification result over the spectral classifier. However, there are still obvious misclassified pixels around the boundaries. For the EMAP method, some homogeneous regions still contain noise-like mislabels in the resulting map. For the SCMK method, some obvious misclassifications appear at the edges and corners. The main reason is that the homogeneous regions belonging to the same object cannot be accurately segmented. The MSTV method effectively removes the noisy labels. However, it tends to yield an oversmoothed classification map. The PCAEPFs method significantly boosts the classification performance with respect to the IFRF method, since a multi-scale feature extraction strategy is adopted. However, some small objects fail to be well preserved in the classification map. The GTR method yields spot-like misclassification results since the tensor regression technique cannot fit the spectral curves of different objects well. By contrast, the proposed method obtains a better visual map by integrating multi-view spectral–spatial structural features, in which the edges of the classification map are more consistent with the real scene.

Figure 5.

Classification results of all considered approaches on the Indian Pines dataset. (a) False color image. (b) Ground truth. (c) SVM [38], OA = 55.30%. (d) IFRF [14], OA = 70.09%. (e) EMAP [13], OA = 67.70%. (f) SCMK [39], OA = 71.20%. (g) MSTV [15], OA = 88.25%. (h) PCAEPFs [8], OA = 85.58%. (i) GTR [40], OA = 63.25%. (j) Our method, OA = 90.32%.

For objective comparison, Table 2 lists the objective results of different approaches, including CA, OA, AA, and Kappa. It is easy to observe that the proposed method is superior to all compared approaches in terms of OA, AA, and Kappa. For instance, the OA value increases from 53% for the SVM method on the original data to 90% for the proposed method. Moreover, the proposed feature extraction approach yields the highest classification accuracies for ten classes. This experiment illustrates that the proposed feature extraction method is more effective than the other approaches.

Table 2.

Classification accuracies of all methods on Indian Pines dataset. The bold denotes the best classification accuracy.

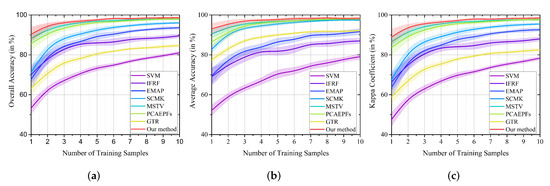

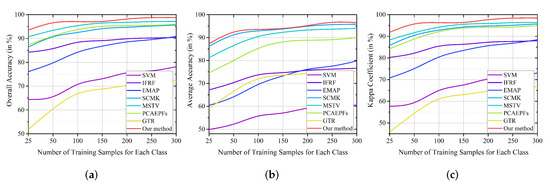

Furthermore, the influence of the number of training samples on all classification methods is discussed. Different proportions of samples, varying from 1% to 10%, are randomly chosen from the reference data to construct the training set. Figure 6 shows the trend of all studied methods with different numbers of training samples. It is easy to see that the classification performance of all methods tends to improve when the number of training samples increases. In addition, the proposed method achieves promising performance with respect to other methods, especially when the number of training samples is limited.

Figure 6.

Classification performance of different approaches on the Indian Pines with different numbers of training samples. (a) OA. (b) AA. (c) Kappa. The widths of the line areas are the standard deviation of accuracies produced in ten experiments.
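The random selection of training samples used in these experiments can be sketched as follows. Two choices are assumptions of this example rather than statements from the paper: sampling is stratified per class, and label 0 marks unlabeled pixels. The function name `split_per_class` is hypothetical.

```python
import numpy as np

def split_per_class(ground_truth, fraction, rng):
    """Return flat indices of training and test pixels.

    Assumptions: label 0 means unlabeled, and the given fraction is drawn
    independently from every class, with at least one sample per class.
    """
    gt = np.asarray(ground_truth).ravel()
    train = []
    for c in np.unique(gt[gt > 0]):
        idx = np.flatnonzero(gt == c)
        k = max(1, int(round(fraction * idx.size)))
        train.append(rng.choice(idx, size=k, replace=False))
    train = np.sort(np.concatenate(train))
    # All remaining labeled pixels form the test set.
    test = np.setdiff1d(np.flatnonzero(gt > 0), train)
    return train, test
```

Repeating the split with ten different seeds, for example `np.random.default_rng(seed)` for `seed` in `range(10)`, and collecting the mean and standard deviation of each index would produce the kind of shaded curves described in the caption of Figure 6.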

3.2.2. Salinas Dataset

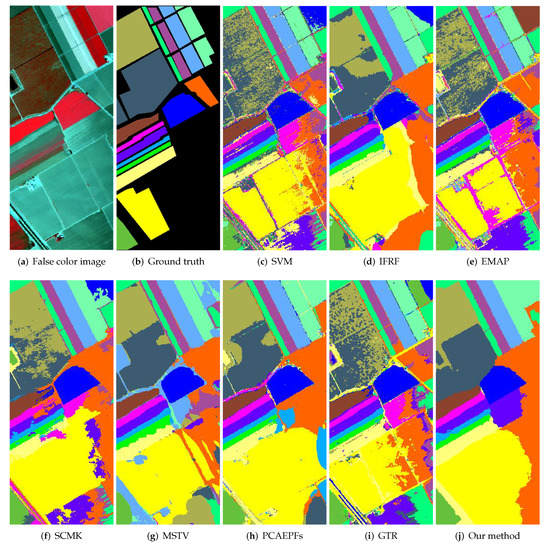

The second experiment is conducted on the Salinas dataset, in which five samples per class are randomly selected from the reference image to constitute the training set (see Table 1). Figure 7 shows the visual maps of all studied approaches. We can easily observe that the SVM method produces a noisy classification map. The reason is that spatial priors are not considered in the spectral classifier. The IFRF method removes the noisy labels in some homogeneous regions. However, there are still serious misclassifications, such as in the Grapes_untrained and Vinyard_treils classes. The EMAP method also yields a salt-and-pepper appearance since it is a pixel-level feature extraction method. For the SCMK method, some regions are misclassified into other classes due to inaccurate segmentation. The MSTV method produces an oversmoothed map. The main reason is that the feature extraction process removes the spatial information of land covers with low reflectivity. The PCAEPFs method produces noisy labels at the edges and boundaries. The GTR method yields a seriously misclassified map since the tensor regression model fails to distinguish similar spectral curves. Different from the other methods, the proposed method provides the best visual classification effect in removing noisy labels and preserving the boundaries of different classes.

Figure 7.

Classification results of all considered approaches on the Salinas dataset. (a) False color image. (b) Ground truth. (c) SVM [38], OA = 80.08%. (d) IFRF [14], OA = 90.67%. (e) EMAP [13], OA = 85.54%. (f) SCMK [39], OA = 88.70%. (g) MSTV [15], OA = 94.46%. (h) PCAEPFs [8], OA = 95.12%. (i) GTR [40], OA = 85.59%. (j) Our method, OA = 98.13%.

Furthermore, Table 3 also verifies the effectiveness of the proposed method. Likewise, the proposed method obtains the highest classification accuracies with regard to OA, AA, and Kappa compared to the other studied techniques. In addition, the influence of different numbers of training samples is presented in Figure 8. The number of training samples for each class varies from 5 to 50. It is shown that increasing the training size is beneficial to the classification performance of all methods. Moreover, the accuracy of our method is consistently higher than that of the other classification approaches.

Table 3.

Classification performance of all methods on Salinas dataset. The bold denotes the best classification accuracy.

Figure 8.

Classification performance of different approaches on the Salinas with different numbers of training samples. (a) OA. (b) AA. (c) Kappa. The widths of the line areas are the standard deviation of accuracies produced in ten experiments.

3.2.3. Honghu Dataset

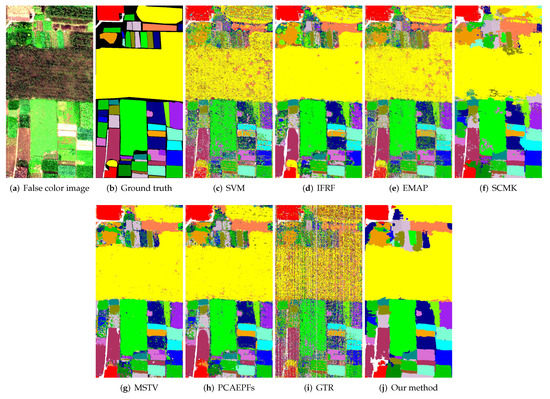

The third experiment is performed on a complex crop scene with 22 crop classes, i.e., the Honghu dataset, in which the benchmark training and test samples (http://rsidea.whu.edu.cn/e-resource_WHUHi_sharing.htm (accessed on 10 January 2022)) are adopted. The classification results of all methods are shown in Figure 9. Similarly, the SVM method produces a very noisy visual map since only the spectral information is used. The IFRF method greatly alleviates this problem by applying image filtering to the raw data, obtaining a better classification result than the SVM method. The EMAP method also obtains a noisy classification map. The reason is that this feature extraction method operates in a pixel-wise manner. For the SCMK method, the classification map has a small number of misclassified labels for some classes. The MSTV method obtains a better classification map due to its multi-scale technique. However, misclassifications still appear at the edges of different classes. The PCAEPFs method yields a similar classification effect to the MSTV method. For the GTR method, the classification result suffers from serious misclassification for this complex scene when the number of training samples is limited. By contrast, the proposed method yields the best visual map with respect to the other studied approaches, since multi-view structural features are effectively merged to yield a complete characterization of different objects.

Figure 9.

Classification results of all considered approaches on the Honghu dataset. (a) False color image. (b) Ground truth. (c) SVM [38], OA = 64.43%. (d) IFRF [14], OA = 84.23%. (e) EMAP [13], OA = 76.11%. (f) SCMK [39], OA = 86.41%. (g) MSTV [15], OA = 90.74%. (h) PCAEPFs [8], OA = 87.37%. (i) GTR [40], OA = 51.87%. (j) Our method, OA = 94.01%.

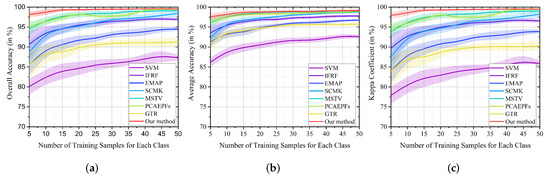

Furthermore, the objective results obtained by all considered approaches are listed in Table 4. It is obvious from Table 4 that our method still provides the highest classification accuracies concerning OA, AA, and Kappa coefficient. In addition, Figure 10 gives the OA, AA, and Kappa coefficient of all studied approaches as functions of the amount of training samples from 25 to 300. It should be mentioned that the training samples follow the benchmark dataset (http://rsidea.whu.edu.cn/e-resource_WHUHi_sharing.htm (accessed on 10 January 2022)). It is found that the classification accuracies of all methods tend to improve when the training size increases, and our method still produces the highest OA, AA, and Kappa coefficient.

Table 4.

Classification performance of all methods on Honghu dataset. The bold denotes the best classification accuracy.

Figure 10.

Classification performance of different approaches on the Honghu with different numbers of training samples. (a) OA. (b) AA. (c) Kappa.

4. Discussion

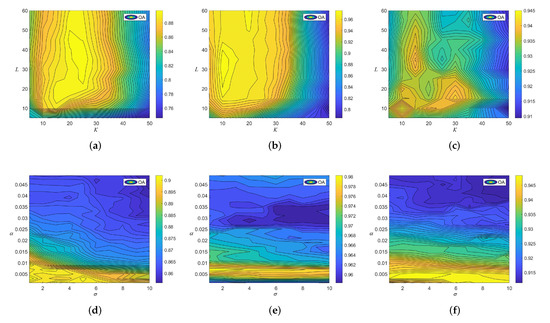

4.1. The Influence of Different Parameters

In this part, the influence of different free parameters, i.e., the reduced dimension L, the number of fused features K, the smoothing weight $\lambda$, and the window size $\sigma$, on the classification accuracy of our method is analyzed. An experiment is performed on the three datasets with the training sets listed in Table 1. When L and K are discussed, $\lambda$ and $\sigma$ are fixed as 0.005 and 3, respectively. Similarly, when $\lambda$ and $\sigma$ are analyzed, L and K are set as 30 and 20, respectively. Figure 11 presents the OA of the proposed method with different parameter settings. It can be observed that the proposed method yields satisfactory classification accuracy for all datasets when L and K are set to 30 and 20, respectively. Moreover, when L and K are relatively small, the classification performance tends to decrease, since the limited number of features cannot represent the spectral–spatial information in HSIs well. Figure 11d–f shows the influence of different $\lambda$ and $\sigma$. It is shown that when $\lambda$ and $\sigma$ increase, the classification performance of the proposed method decreases. The reason is that the structural feature extraction technique smooths out the spatial structures of HSIs. When $\lambda$ and $\sigma$ are set to 0.005 and 3, respectively, the proposed method obtains the highest classification accuracy. Based on this observation, L, K, $\lambda$, and $\sigma$ are set as 30, 20, 0.005, and 3, respectively.

Figure 11.

The influence of different parameters in the proposed method. The first row shows the influence of the reduced dimension L and the number of fused features K. The second row shows the influence of the smoothing parameter $\lambda$ and the window size $\sigma$. (a) Indian Pines dataset; (b) Salinas dataset; (c) Honghu dataset; (d) Indian Pines dataset; (e) Salinas dataset; (f) Honghu dataset.

4.2. The Influence of Three Different Views

In this subsection, the influence of the three different views on the classification accuracy is investigated. An experiment is conducted on the Indian Pines dataset. The classification performance obtained by the proposed framework with different views is shown in Table 5. It can be seen that the inter-view feature achieves the best classification performance among the three individual views. Furthermore, the combination of two different views outperforms each individual view in terms of classification accuracy. Overall, when the three types of features are combined, the proposed method provides the highest classification accuracy. The reason is that the three views carry complementary information, which can be jointly utilized to improve the classification performance.

Table 5.

Classification performance of three different views. The bold denotes the best classification performance.

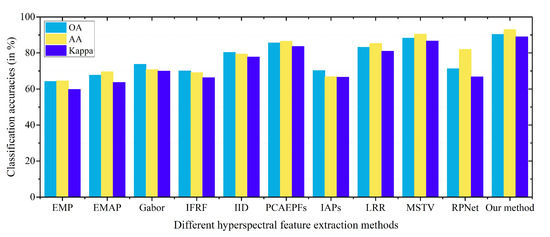

4.3. Effect of Different Hyperspectral Feature Methods

To demonstrate the advantage of the proposed feature extraction method, several widely used feature extraction methods for HSIs are selected as competitors, including extended morphological profiles (EMP) [41], extended morphological attribute profiles (EMAP) [13], Gabor filtering (Gabor) [42], image fusion and recursive filtering (IFRF) [14], intrinsic image decomposition (IID) [43], PCA-based edge-preserving filters (PCAEPFs) [8], invariant attribute profiles (IAPs) [44], low rank representation (LRR) [45], multi-scale total variation (MSTV) [15], and random patches network (RPNet) [46]. An experiment is performed on the Indian Pines dataset with the 1% training samples listed in Table 1. The classification accuracy of all considered approaches is shown in Figure 12. The EMP and EMAP methods only yield around 60% classification accuracy when training samples are scarce. The classification performance obtained by the edge-preserving filtering-based feature extraction methods, such as IFRF and PCAEPFs, also tends to decrease when training samples are scarce. The RPNet-based deep feature extraction method also fails to achieve satisfactory performance. By contrast, the proposed feature extraction method obtains the highest classification performance among all feature extraction techniques for the three indexes, which further illustrates that the proposed method can better characterize the spectral–spatial information than other methods by fusing local and nonlocal multi-view structural features.

Figure 12.

Classification accuracies of different hyperspectral feature extraction techniques on the Indian Pines dataset.
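For readers who want to reproduce this kind of comparison, the following is a minimal sketch of how accuracy indexes can be computed from reference and predicted labels. It assumes that the three indexes are overall accuracy (OA), average accuracy (AA), and the Kappa coefficient, which is the usual choice in HSI classification but is an assumption here; the function names `confusion_matrix` and `accuracy_indexes` are hypothetical and are not taken from the authors' code.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """Count matrix: rows index the reference class, columns the predicted class."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy_indexes(cm: List[List[int]]) -> Tuple[float, float, float]:
    """Return (OA, AA, Kappa) for a square confusion matrix."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(cm[i]) for i in range(n)]                       # reference counts
    col_sums = [sum(cm[j][i] for j in range(n)) for i in range(n)]  # predicted counts

    # Overall accuracy: fraction of all test samples labeled correctly.
    oa = sum(cm[i][i] for i in range(n)) / total

    # Class accuracy (CA) per class, then its mean (AA).
    # Classes without reference samples are skipped (a choice made for this sketch).
    ca = [cm[i][i] / row_sums[i] for i in range(n) if row_sums[i] > 0]
    aa = sum(ca) / len(ca)

    # Kappa: agreement corrected for the agreement expected by chance.
    pe = sum(row_sums[i] * col_sums[i] for i in range(n)) / (total * total)
    kappa = (oa - pe) / (1.0 - pe) if pe < 1.0 else 0.0  # degenerate case set to 0 here
    return oa, aa, kappa


# Toy example with three classes and ten test samples.
cm = confusion_matrix([0, 0, 0, 0, 1, 1, 1, 1, 2, 2],
                      [0, 0, 0, 1, 1, 1, 2, 2, 2, 2], 3)
oa, aa, kappa = accuracy_indexes(cm)
print(f"OA={oa:.3f}  AA={aa:.3f}  Kappa={kappa:.3f}")
```

In this toy example, OA is 0.70 while AA is 0.75, because AA weights every class equally regardless of its size. This difference matters on datasets with strongly unbalanced classes.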

4.4. Computing Time

The computing time of all considered techniques on all datasets is provided in Table 6. All experiments are run on a laptop with 8 GB of RAM and a 2.6 GHz processor using Matlab 2018. We can observe from Table 6 that as the spatial and spectral dimensions of the HSIs increase, the computing time of all methods tends to increase. Furthermore, the computing time of our method is quite competitive among all considered approaches (taking the Indian Pines dataset as an example, the running time of our method is around 5.36 s). The GTR method is the fastest, as it is a regression model.

Table 6.

The computing time of all considered approaches on all datasets. Bold denotes the best computing efficiency.

5. Conclusions

In this work, a multi-view structural feature extraction method is developed for hyperspectral image classification, which consists of three key steps. First, the number of spectral bands of the raw data is reduced. Then, the local structural feature, intra-view structural feature, and inter-view structural feature are constructed to characterize the spectral–spatial information of diverse ground objects. Finally, the KPCA technique is exploited to merge the multi-view structural features, and the fused feature is fed to a spectral classifier to obtain the classification map. Our experimental results on three datasets reveal that the proposed feature extraction method consistently outperforms other state-of-the-art classification methods, even when the number of training samples is limited. Furthermore, compared with 10 other representative feature extraction methods, our method still produces the highest classification performance.
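The fusion step summarized above can be illustrated with a small NumPy sketch. It stacks the per-pixel feature vectors of the views and projects them with kernel PCA. This is not the authors' implementation: the RBF kernel, the default `gamma`, and the names `rbf_kernel` and `kpca_fuse` are assumptions made for illustration, and the random arrays merely stand in for real structural features.

```python
import numpy as np


def rbf_kernel(X: np.ndarray, gamma: float) -> np.ndarray:
    """Pairwise RBF kernel exp(-gamma * ||x_i - x_j||^2) between rows of X."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    return np.exp(-gamma * np.maximum(d2, 0.0))  # clip tiny negative distances


def kpca_fuse(views, n_components: int, gamma=None) -> np.ndarray:
    """Stack several (n_pixels, n_features) views and project them with kernel PCA."""
    X = np.hstack(views)                      # (n_pixels, total number of features)
    if gamma is None:
        gamma = 1.0 / X.shape[1]              # assumed default, not from the paper
    K = rbf_kernel(X, gamma)

    # Center the kernel matrix in feature space.
    n = K.shape[0]
    one = np.full((n, n), 1.0 / n)
    Kc = K - one @ K - K @ one + one @ K @ one
    Kc = 0.5 * (Kc + Kc.T)                    # remove rounding asymmetry

    # Leading eigenpairs of the centered kernel matrix.
    vals, vecs = np.linalg.eigh(Kc)
    order = np.argsort(vals)[::-1][:n_components]
    vals, vecs = vals[order], vecs[:, order]

    # Projection of each sample onto component k equals sqrt(lambda_k) * v_k.
    return vecs * np.sqrt(np.maximum(vals, 0.0))


# Stand-in data: three views for 50 pixels with 4, 3, and 5 features each.
rng = np.random.default_rng(0)
local_f = rng.normal(size=(50, 4))
intra_f = rng.normal(size=(50, 3))
inter_f = rng.normal(size=(50, 5))
fused = kpca_fuse([local_f, intra_f, inter_f], n_components=6)
print(fused.shape)
```

The fused array would then be passed to a pixel-wise classifier such as an SVM. Note that this sketch builds a full n × n kernel matrix, so for a real scene it would have to be run on a subset of pixels or replaced by an approximate solver.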

Author Contributions

N.L. performed the experiments and wrote the draft. P.D. provided some comments and carefully revised the presentation. H.X. modified the presentation of this work. L.C. checked the grammar of this work. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Key Laboratory of Mine Water Resource Utilization of Anhui Higher Education Institutes, Suzhou University, under grant KMWRU202107, in part by the Key Natural Science Project of the Anhui Provincial Education Department under grant KJ2021ZD0137, in part by the Key Natural Science Project of the Anhui Provincial Education Department under grant KJ2020A0733, in part by the Top talent project of colleges and universities in Anhui Province under grant gxbjZD43, and in part by the Collaborative Innovation Center—cloud computing industry under grant 4199106.

Data Availability Statement

The Indian Pines and Salinas datasets are freely available from this site (http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 10 January 2022)). The Honghu dataset is freely available from this site (http://rsidea.whu.edu.cn/e-resource_WHUHi_sharing.htm (accessed on 10 January 2022)).

Acknowledgments

We would like to thank Y. Zhong from Wuhan University for sharing the Honghu dataset.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| MNF | Minimum noise fraction |
| HSI | Hyperspectral image |
| PCA | Principal component analysis |
| ICA | Independent component analysis |
| APs | Attribute profiles |
| EMAP | Extended morphological attribute profiles |
| CNN | Convolutional neural network |
| ERS | Entropy rate superpixel |
| KPCA | Kernel PCA |
| SVM | Support vector machine |
| AVIRIS | Airborne Visible Infrared Imaging Spectrometer |
| CA | Class accuracy |
| OA | Overall accuracy |
| AA | Average accuracy |
| IFRF | Image fusion and recursive filtering |
| SCMK | Superpixel-based classification via multiple kernels |
| MSTV | Multi-scale total variation |
| PCAEPFs | PCA-based edge-preserving features |
| GTR | Generalized tensor regression |
| EMP | Extended morphological profiles |
| Gabor | Gabor filtering |
| IID | Intrinsic image decomposition |
| IAPs | Invariant attribute profiles |
| LRR | Low rank representation |
| RPNet | Random patches network |

References

- Rasti, B.; Hong, D.; Hang, R.; Ghamisi, P.; Kang, X.; Chanussot, J.; Benediktsson, J.A. Feature Extraction for Hyperspectral Imagery: The Evolution From Shallow to Deep: Overview and Toolbox. IEEE Geosci. Remote Sens. Mag. 2020, 8, 60–88.

- Duan, P.; Lai, J.; Ghamisi, P.; Kang, X.; Jackisch, R.; Kang, J.; Gloaguen, R. Component Decomposition-Based Hyperspectral Resolution Enhancement for Mineral Mapping. Remote Sens. 2020, 12, 2903.

- Liang, J.; Zhou, J.; Tong, L.; Bai, X.; Wang, B. Material Based Salient Object Detection from Hyperspectral Images. Pattern Recognit. 2018, 76, 476–490.

- Li, S.; Zhang, K.; Duan, P.; Kang, X. Hyperspectral Anomaly Detection With Kernel Isolation Forest. IEEE Trans. Geosci. Remote Sens. 2020, 58, 319–329.

- Duan, P.; Lai, J.; Kang, J.; Kang, X.; Ghamisi, P.; Li, S. Texture-Aware Total Variation-Based Removal of Sun Glint in Hyperspectral Images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 359–372.

- Stuart, M.B.; McGonigle, A.J.S.; Willmott, J.R. Hyperspectral Imaging in Environmental Monitoring: A Review of Recent Developments and Technological Advances in Compact Field Deployable Systems. Sensors 2019, 19, 3071.

- Prasad, S.; Bruce, L.M. Limitations of Principal Components Analysis for Hyperspectral Target Recognition. IEEE Geosci. Remote Sens. Lett. 2008, 5, 625–629.

- Kang, X.; Xiang, X.; Li, S.; Benediktsson, J.A. PCA-Based Edge-Preserving Features for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7140–7151.

- Wang, J.; Chang, C.I. Independent Component Analysis-Based Dimensionality Reduction with Applications in Hyperspectral Image Analysis. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1586–1600.

- Gao, L.; Zhao, B.; Jia, X.; Liao, W.; Zhang, B. Optimized Kernel Minimum Noise Fraction Transformation for Hyperspectral Image Classification. Remote Sens. 2017, 9, 548.

- Zhang, Y.; Kang, X.; Li, S.; Duan, P.; Benediktsson, J.A. Feature Extraction from Hyperspectral Images using Learned Edge Structures. Remote Sens. Lett. 2019, 10, 244–253.

- Marpu, P.R.; Pedergnana, M.; Dalla Mura, M.; Benediktsson, J.A.; Bruzzone, L. Automatic Generation of Standard Deviation Attribute Profiles for Spectral–Spatial Classification of Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2013, 10, 293–297.

- Dalla Mura, M.; Villa, A.; Benediktsson, J.A.; Chanussot, J.; Bruzzone, L. Classification of Hyperspectral Images by Using Extended Morphological Attribute Profiles and Independent Component Analysis. IEEE Geosci. Remote Sens. Lett. 2011, 8, 542–546.

- Kang, X.; Li, S.; Benediktsson, J.A. Feature Extraction of Hyperspectral Images With Image Fusion and Recursive Filtering. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3742–3752.

- Duan, P.; Kang, X.; Li, S.; Ghamisi, P. Noise-Robust Hyperspectral Image Classification via Multi-Scale Total Variation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1948–1962.

- Duan, P.; Ghamisi, P.; Kang, X.; Rasti, B.; Li, S.; Gloaguen, R. Fusion of Dual Spatial Information for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7726–7738.

- Xia, J.; Bombrun, L.; Adalı, T.; Berthoumieu, Y.; Germain, C. Spectral–Spatial Classification of Hyperspectral Images Using ICA and Edge-Preserving Filter via an Ensemble Strategy. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4971–4982.

- Cui, B.; Xie, X.; Hao, S.; Cui, J.; Lu, Y. Semi-Supervised Classification of Hyperspectral Images Based on Extended Label Propagation and Rolling Guidance Filtering. Remote Sens. 2018, 10, 515.

- Sellars, P.; Aviles-Rivero, A.I.; Schönlieb, C.B. Superpixel Contracted Graph-Based Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4180–4193.

- Li, Y.; Lu, T.; Li, S. Subpixel-Pixel-Superpixel-Based Multiview Active Learning for Hyperspectral Images Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4976–4988.

- Li, Q.; Zheng, B.; Yang, Y. Spectral-Spatial Active Learning With Structure Density for Hyperspectral Classification. IEEE Access 2021, 9, 61793–61806.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Liu, B.; Yu, X.; Zhang, P.; Yu, A.; Fu, Q.; Wei, X. Supervised Deep Feature Extraction for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1909–1921.

- Kang, X.; Zhuo, B.; Duan, P. Dual-Path Network-Based Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2019, 16, 447–451.

- Xie, Z.; Hu, J.; Kang, X.; Duan, P.; Li, S. Multi-Layer Global Spectral-Spatial Attention Network for Wetland Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 58, 3232–3245.

- Hang, R.; Li, Z.; Liu, Q.; Ghamisi, P.; Bhattacharyya, S.S. Hyperspectral Image Classification With Attention-Aided CNNs. IEEE Trans. Geosci. Remote Sens. 2021, 59, 2281–2293.

- Hong, D.; Han, Z.; Yao, J.; Gao, L.; Zhang, B.; Plaza, A.; Chanussot, J. SpectralFormer: Rethinking Hyperspectral Image Classification with Transformers. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Duan, P.; Xie, Z.; Kang, X.; Li, S. Self-Supervised Learning-Based Oil Spill Detection of Hyperspectral Images. Sci. China Technol. Sci. 2022, 65, 793–801.

- Xu, X.; Li, J.; Li, S. Multiview Intensity-Based Active Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 669–680.

- Zhou, X.; Prasad, S.; Crawford, M.M. Wavelet-Domain Multiview Active Learning for Spatial-Spectral Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4047–4059.

- Green, A.; Berman, M.; Switzer, P.; Craig, M. A Transformation for Ordering Multispectral Data in terms of Image Quality with Implications for Noise Removal. IEEE Trans. Geosci. Remote Sens. 1988, 26, 65–74.

- Xu, L.; Yan, Q.; Xia, Y.; Jia, J. Structure Extraction from Texture via Relative Total Variation. ACM Trans. Graph. 2012, 31, 1–10.

- Liu, M.Y.; Tuzel, O.; Ramalingam, S.; Chellappa, R. Entropy-Rate Clustering: Cluster Analysis via Maximizing a Submodular Function Subject to a Matroid Constraint. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 99–112.

- Schölkopf, B.; Smola, A.; Müller, K.R. Kernel Principal Component Analysis. In Artificial Neural Networks—ICANN’97, Proceedings of the 7th International Conference, Lausanne, Switzerland, 8–10 October 1997 Proceedings; Gerstner, W., Germond, A., Hasler, M., Nicoud, J.D., Eds.; Springer: Berlin/Heidelberg, Germany, 1997; pp. 583–588.

- Duan, P.; Kang, X.; Li, S.; Ghamisi, P.; Benediktsson, J.A. Fusion of Multiple Edge-Preserving Operations for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 10336–10349.

- Duan, P.; Kang, X.; Li, S.; Ghamisi, P. Multichannel Pulse-Coupled Neural Network-Based Hyperspectral Image Visualization. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2444–2456.

- Hang, R.; Liu, Q.; Hong, D.; Ghamisi, P. Cascaded Recurrent Neural Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5384–5394.

- Melgani, F.; Bruzzone, L. Classification of Hyperspectral Remote Sensing Images with Support Vector Machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Fang, L.; Li, S.; Duan, W.; Ren, J.; Benediktsson, J.A. Classification of Hyperspectral Images by Exploiting Spectral–Spatial Information of Superpixel via Multiple Kernels. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6663–6674.

- Liu, J.; Wu, Z.; Xiao, L.; Sun, J.; Yan, H. Generalized Tensor Regression for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1244–1258.

- Benediktsson, J.; Palmason, J.; Sveinsson, J. Classification of Hyperspectral Data from Urban Areas Based on Extended Morphological Profiles. IEEE Trans. Geosci. Remote Sens. 2005, 43, 480–491.

- He, L.; Li, J.; Plaza, A.; Li, Y. Discriminative Low-Rank Gabor Filtering for Spectral–Spatial Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1381–1395.

- Kang, X.; Li, S.; Fang, L.; Benediktsson, J.A. Intrinsic Image Decomposition for Feature Extraction of Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2241–2253.

- Hong, D.; Wu, X.; Ghamisi, P.; Chanussot, J.; Yokoya, N.; Zhu, X.X. Invariant Attribute Profiles: A Spatial-Frequency Joint Feature Extractor for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3791–3808.

- Rasti, B.; Ulfarsson, M.O.; Sveinsson, J.R. Hyperspectral Feature Extraction Using Total Variation Component Analysis. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6976–6985.

- Xu, Y.; Du, B.; Zhang, F.; Zhang, L. Hyperspectral Image Classification via a Random Patches Network. ISPRS J. Photogramm. Remote Sens. 2018, 142, 344–357.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).