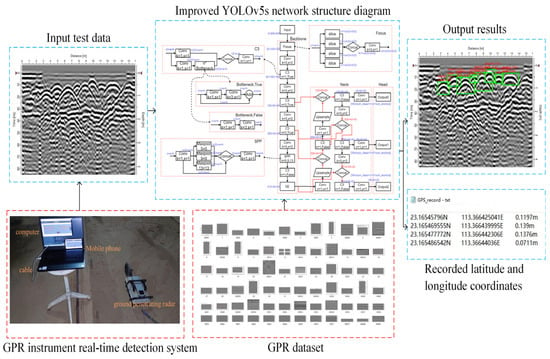

Application of an Improved YOLOv5 Algorithm in Real-Time Detection of Foreign Objects by Ground Penetrating Radar

Abstract

1. Introduction

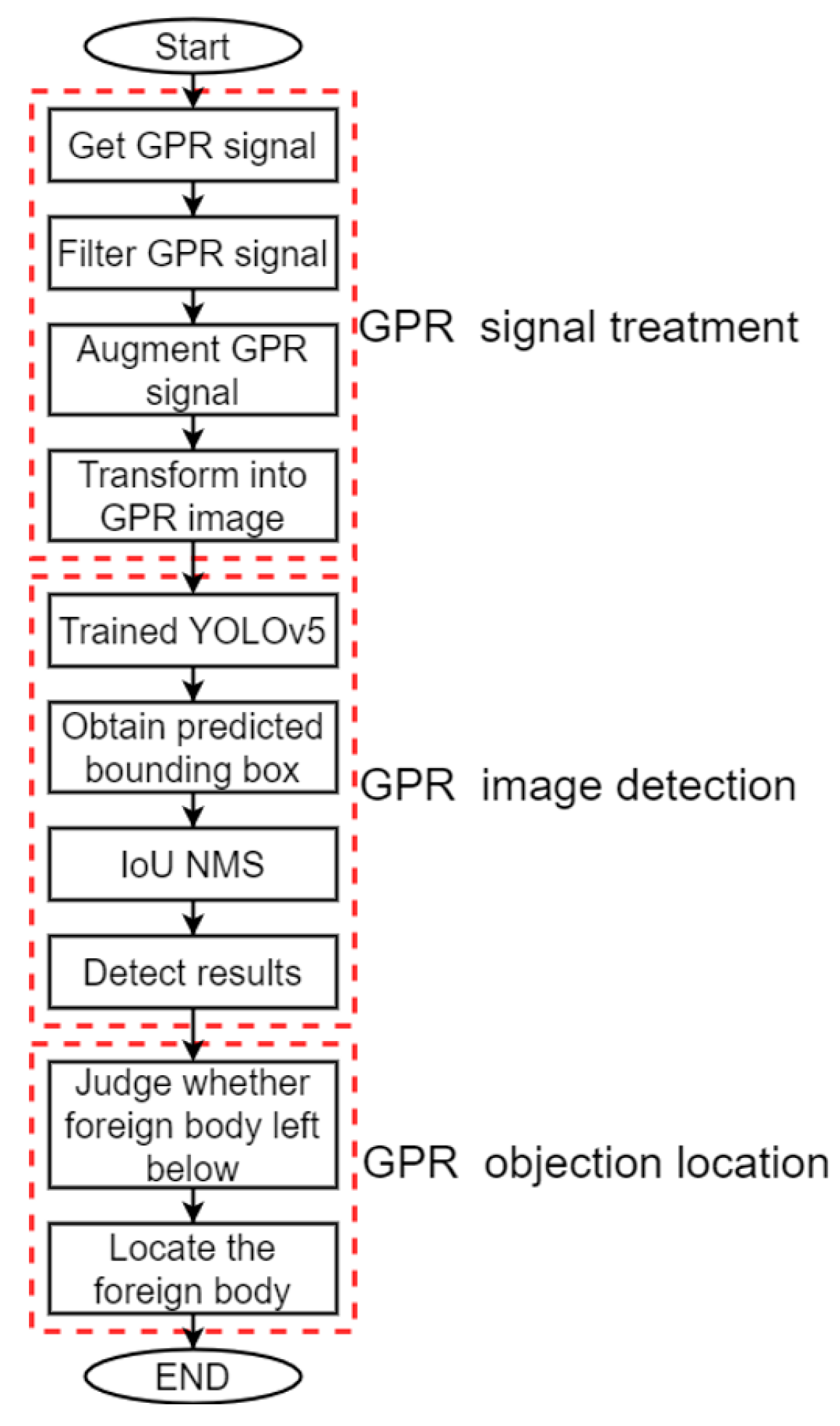

2. Methodology

2.1. Signal Acquisition

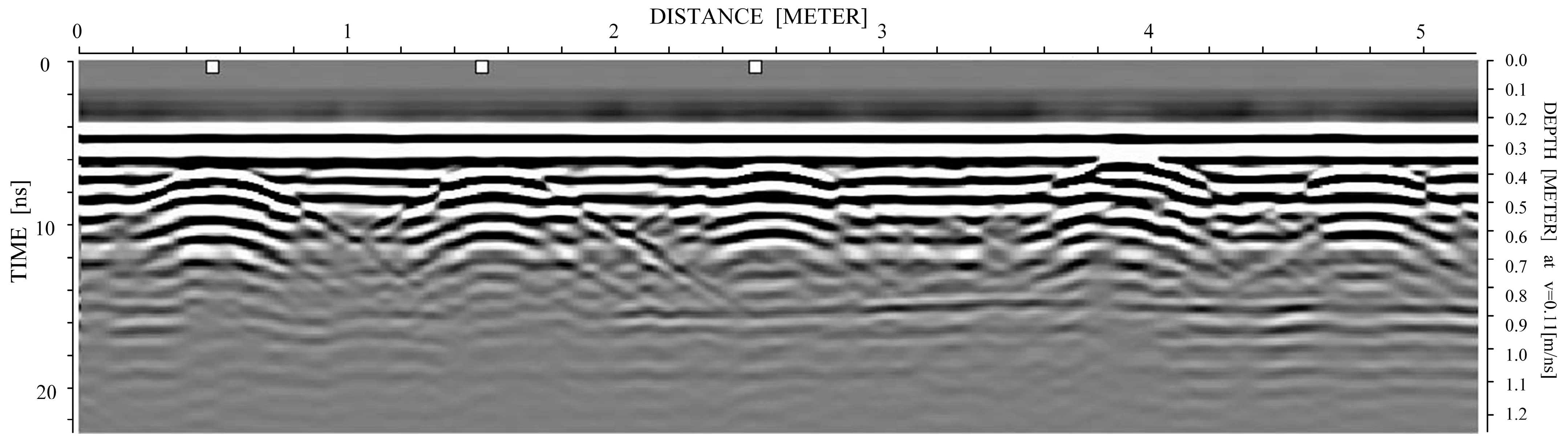

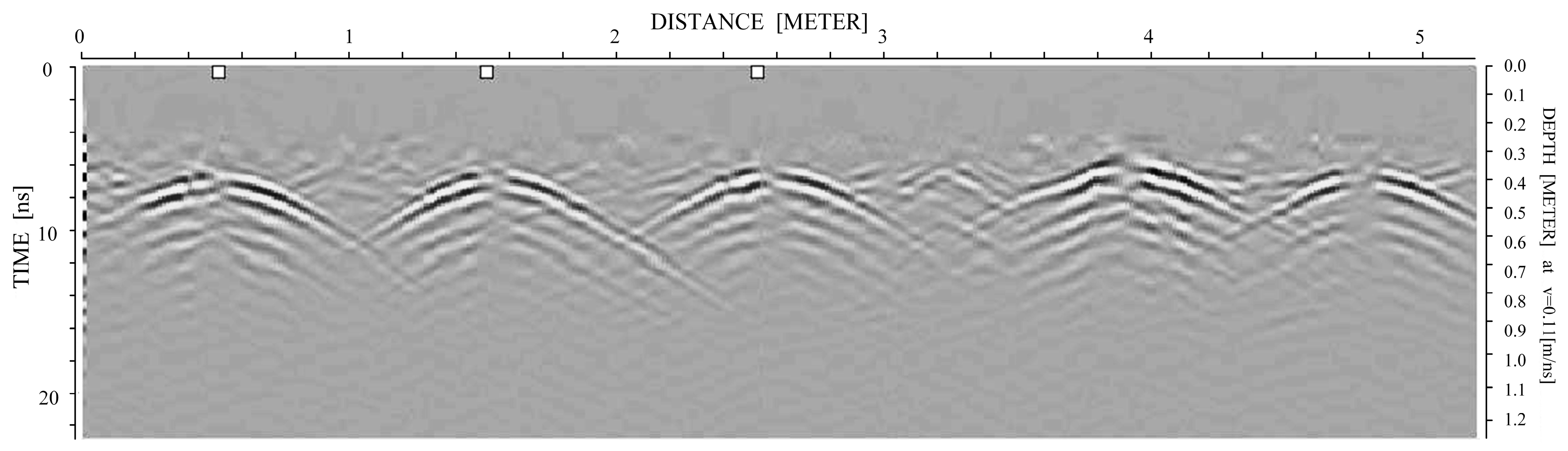

2.1.1. Time Zero Correction

2.1.2. Filtering and Gain

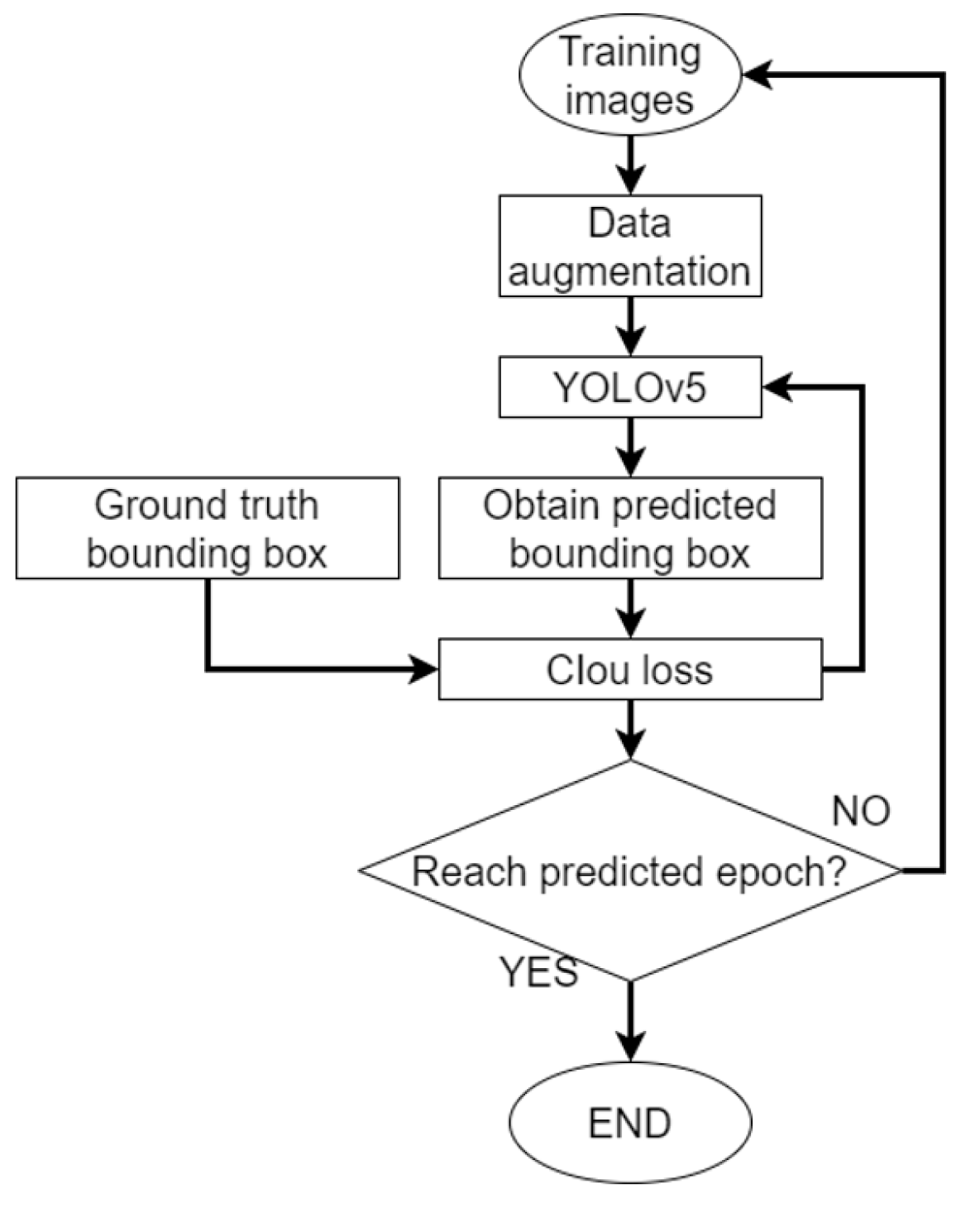

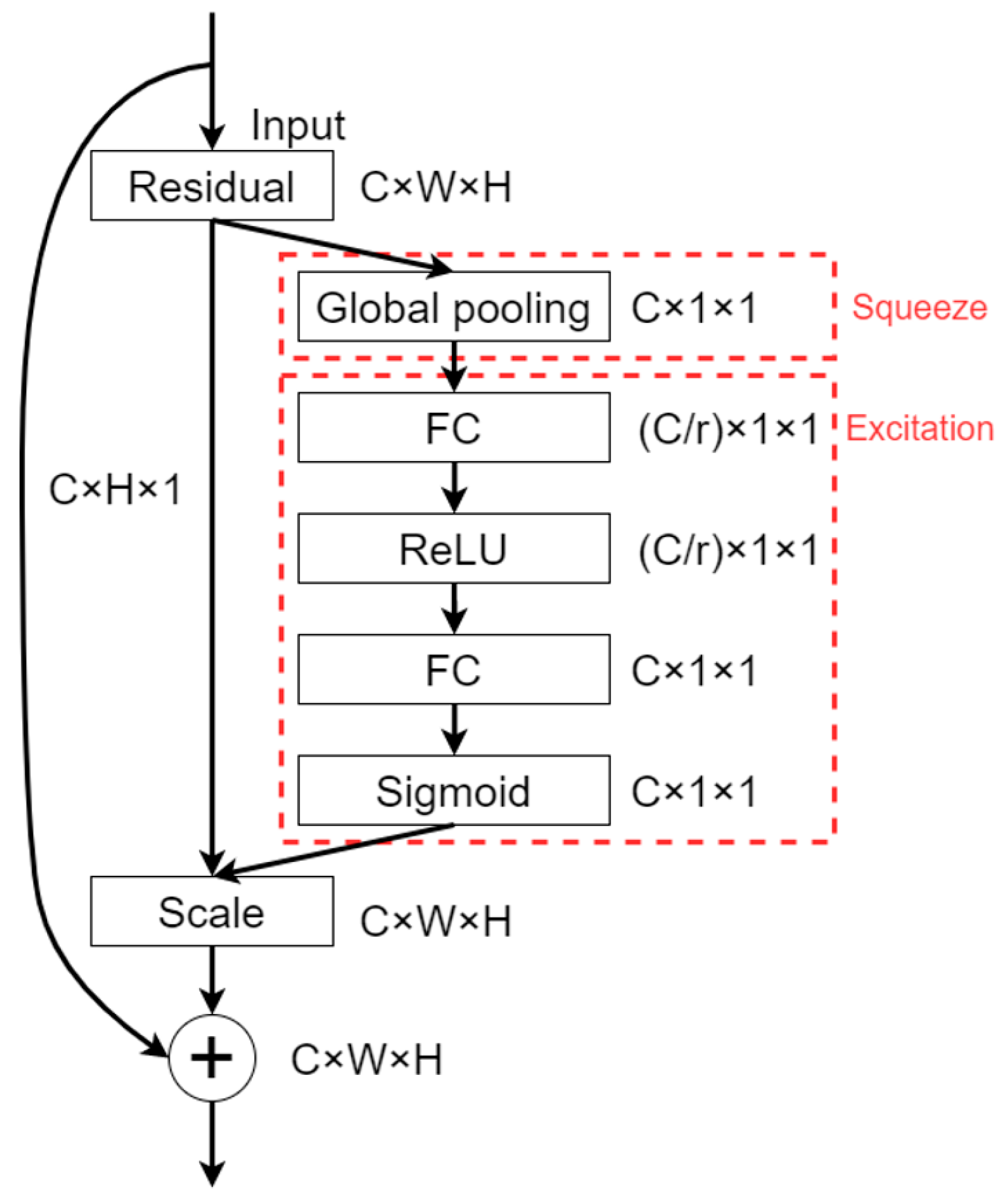

2.2. Improving the YOLOv5 Network Structure

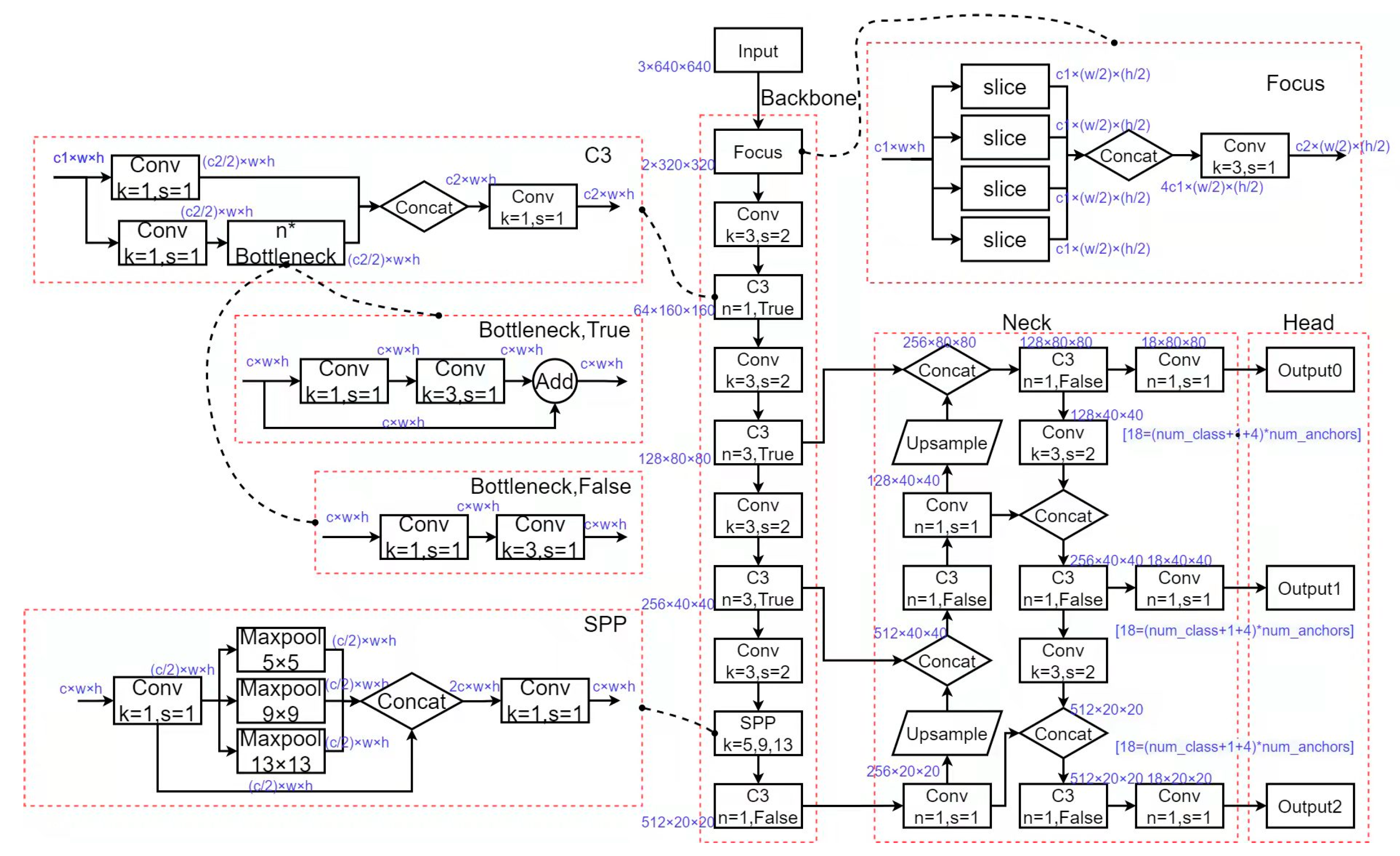

2.2.1. YOLOv5s Architecture

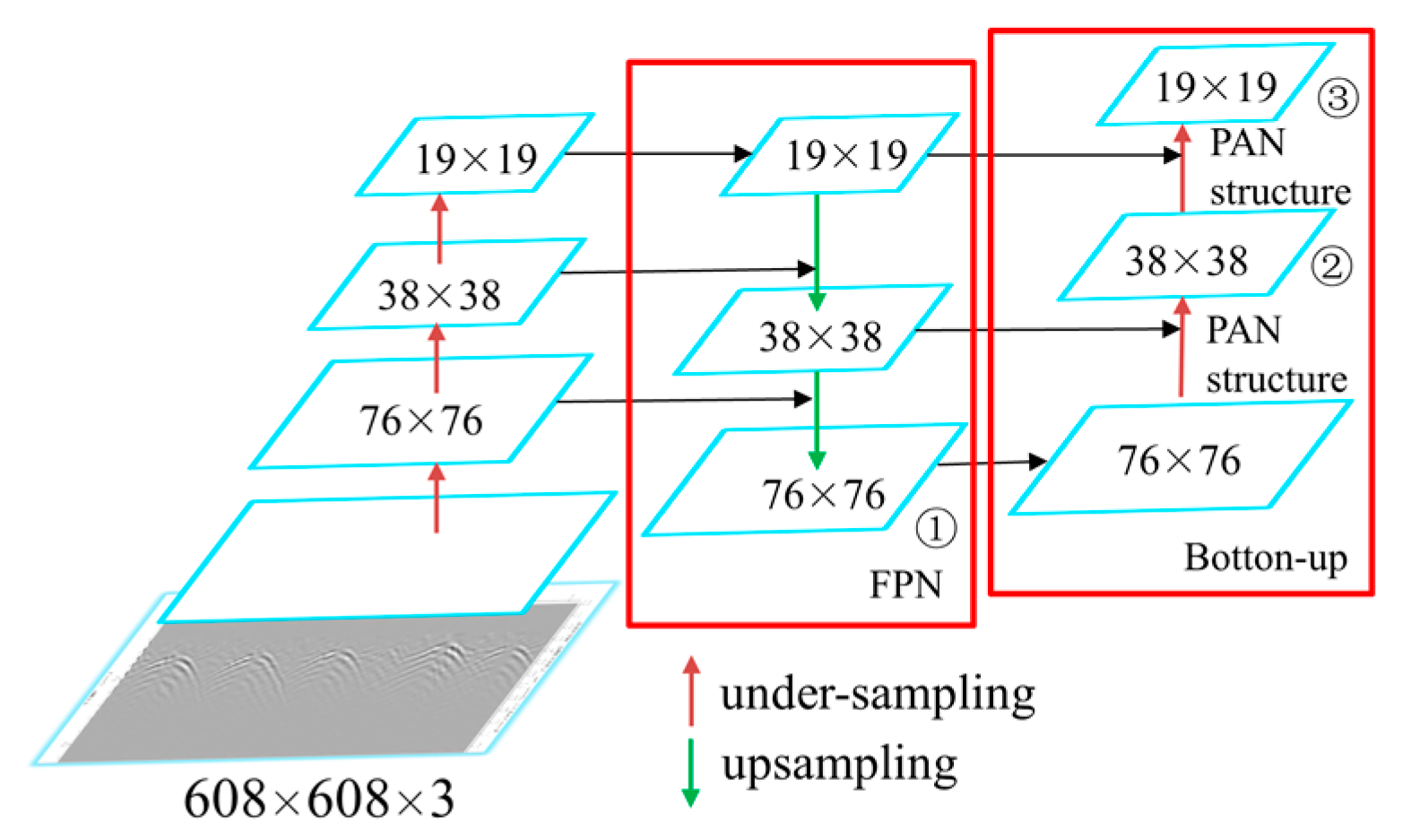

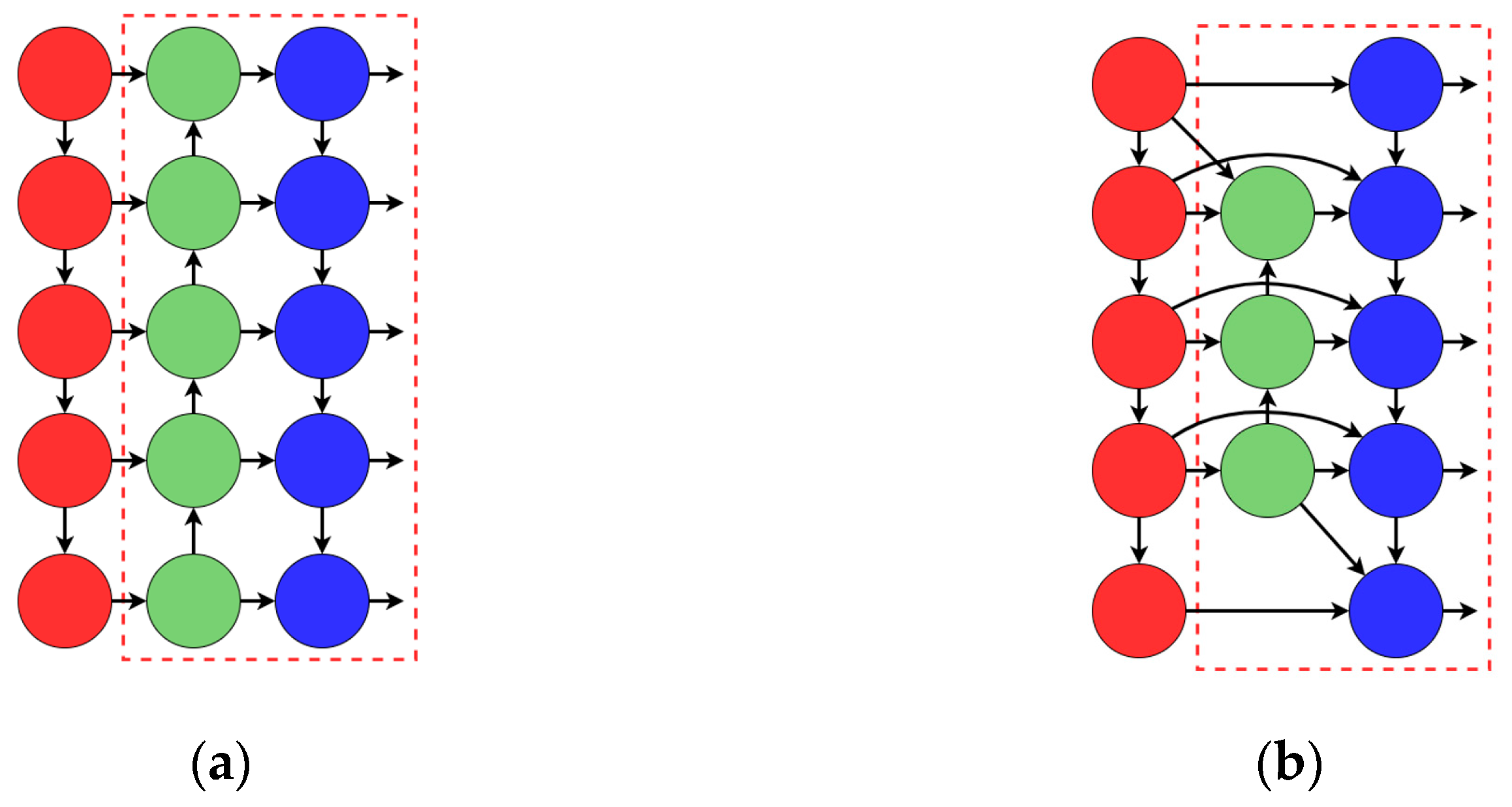

2.2.2. Improving the YOLOv5s Network Structure

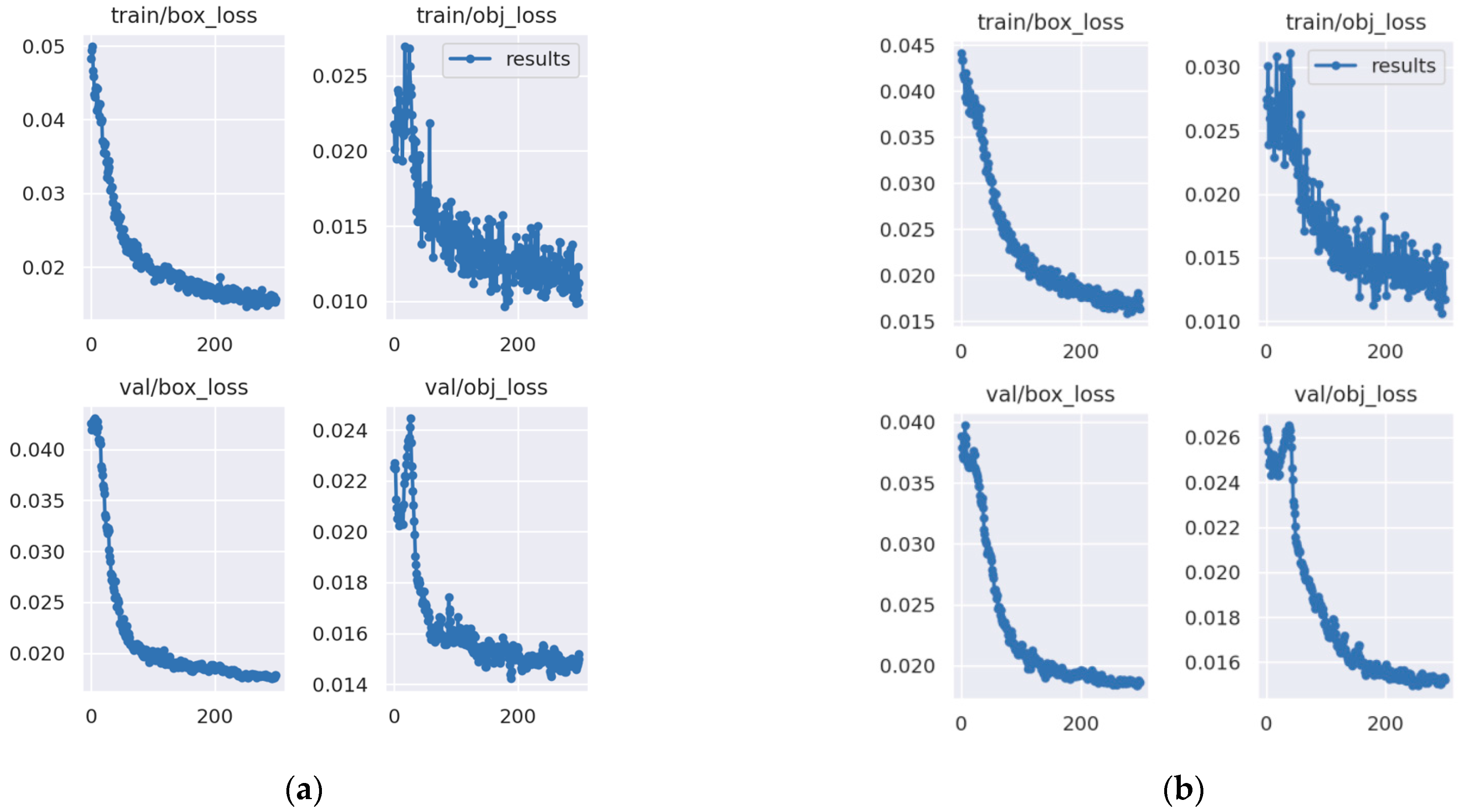

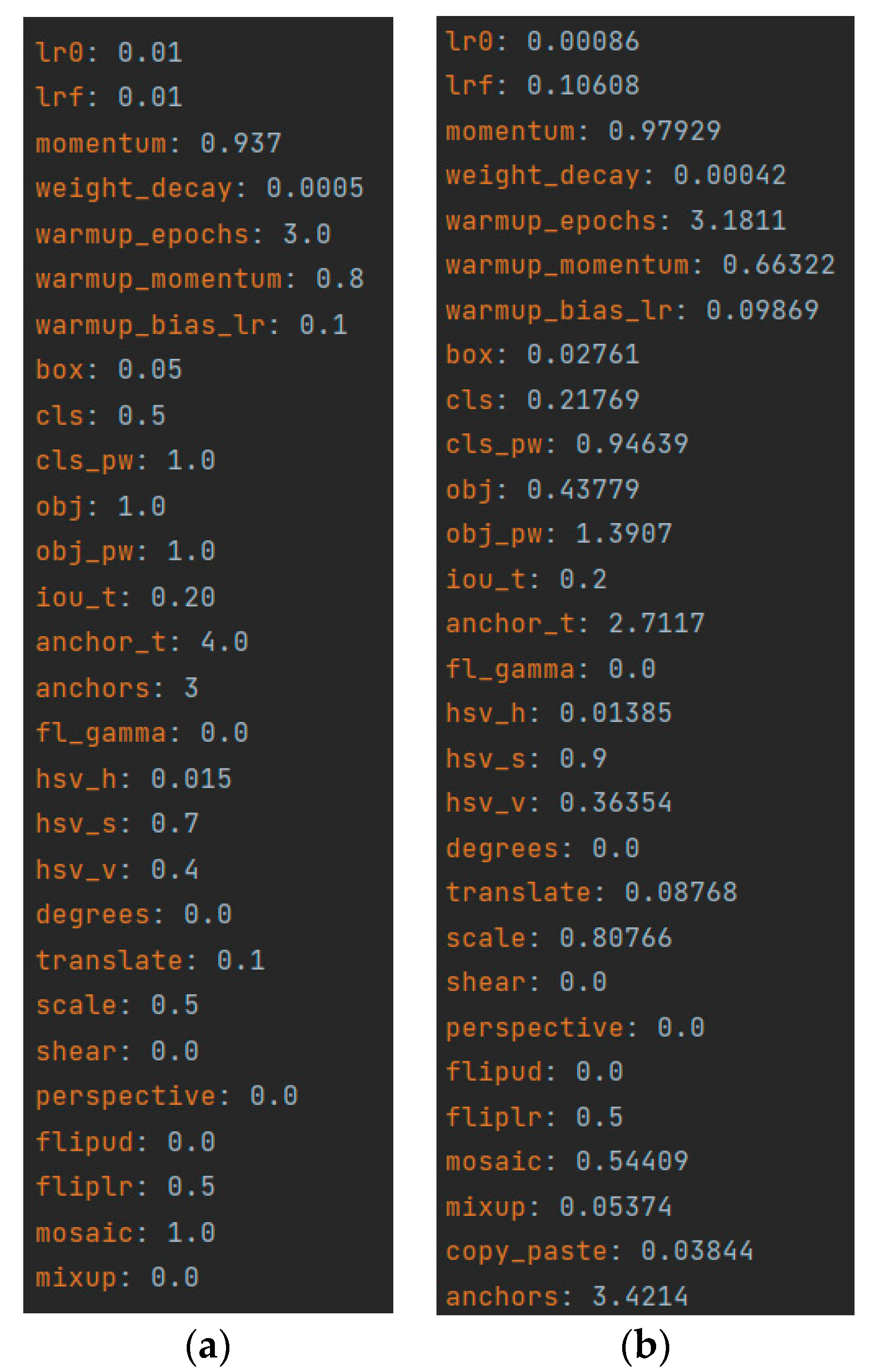

2.2.3. Data Augmentation

2.2.4. Improved Prediction Box Regression Loss Function

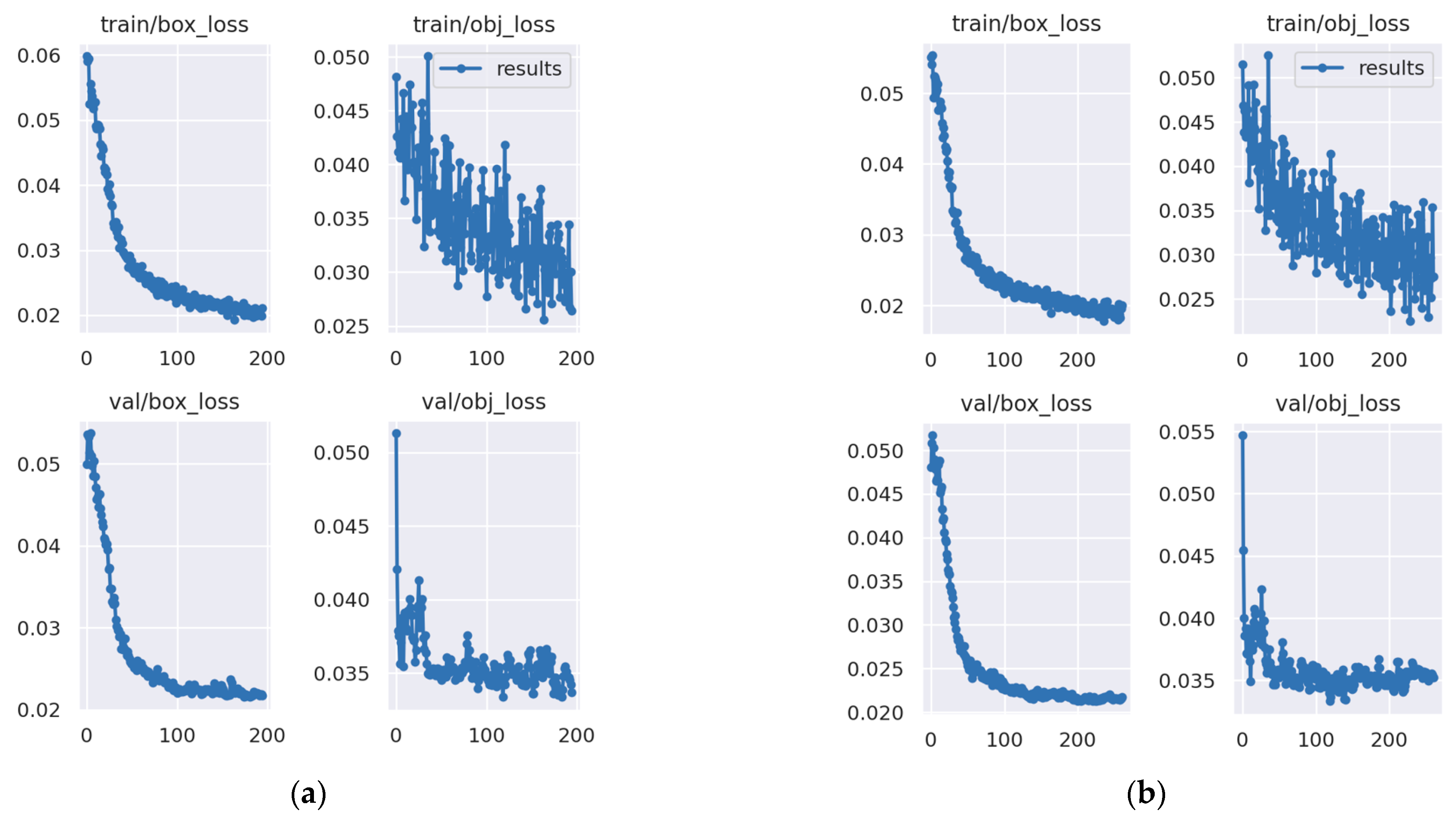

2.2.5. Ablation Experiment

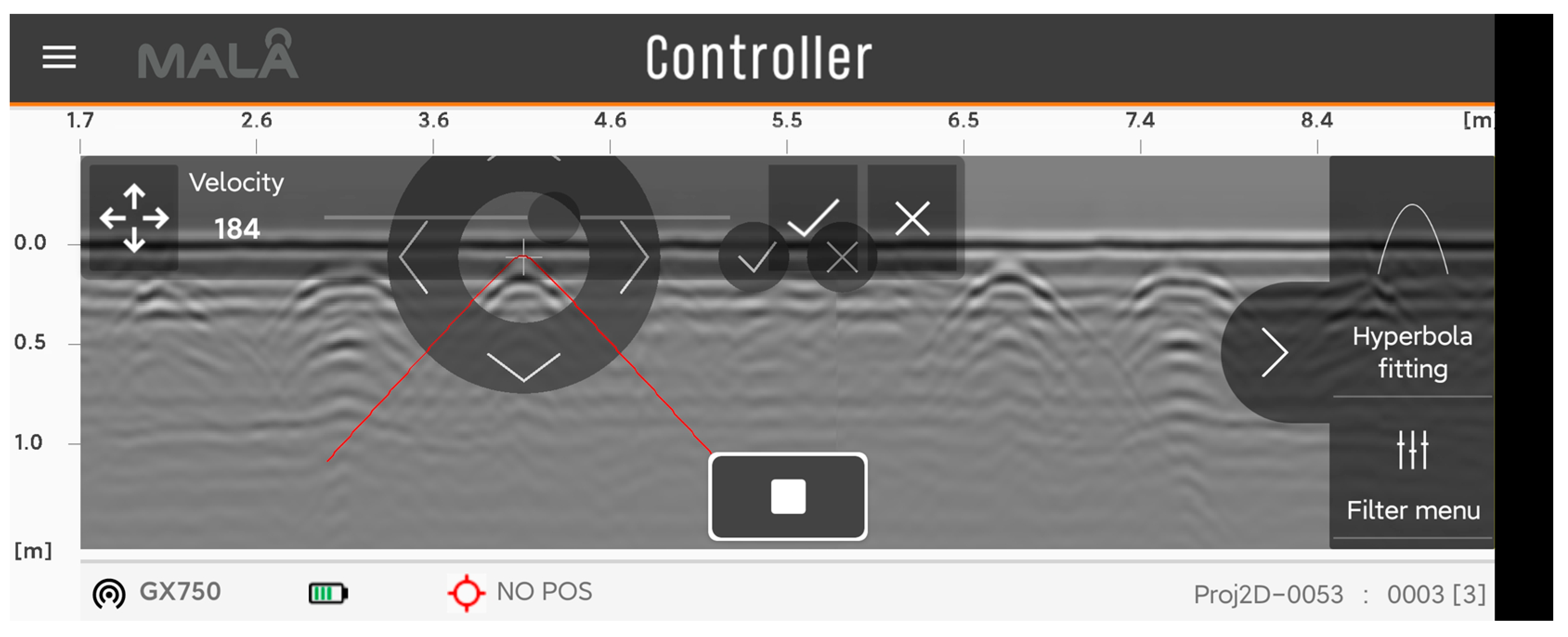

2.3. Ground Penetrating Radar Target Precise Positioning

2.3.1. Obtaining a GPS Signal

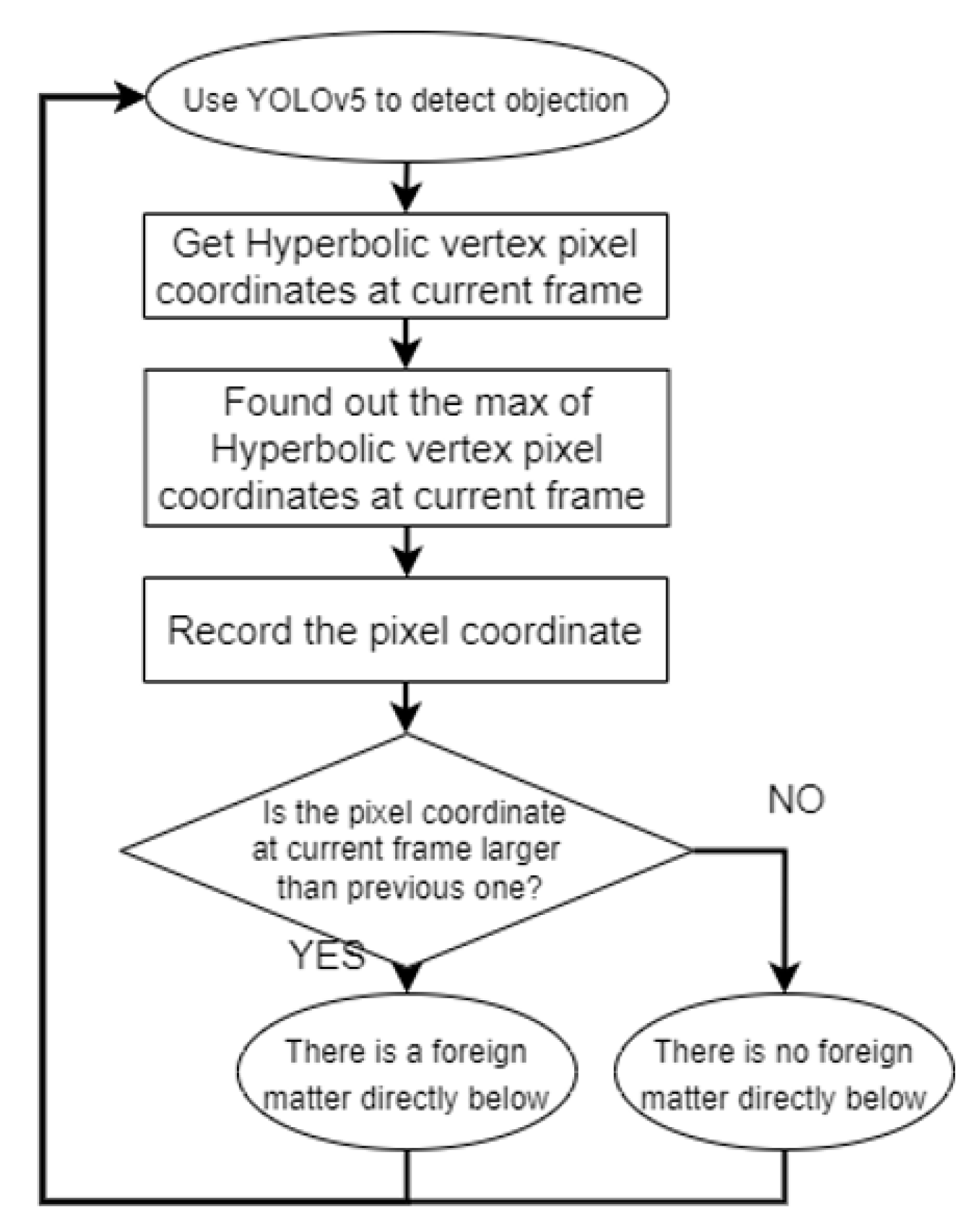

2.3.2. Determining Whether There Is a Foreign Object Directly Below

2.3.3. World Coordinate Transformation

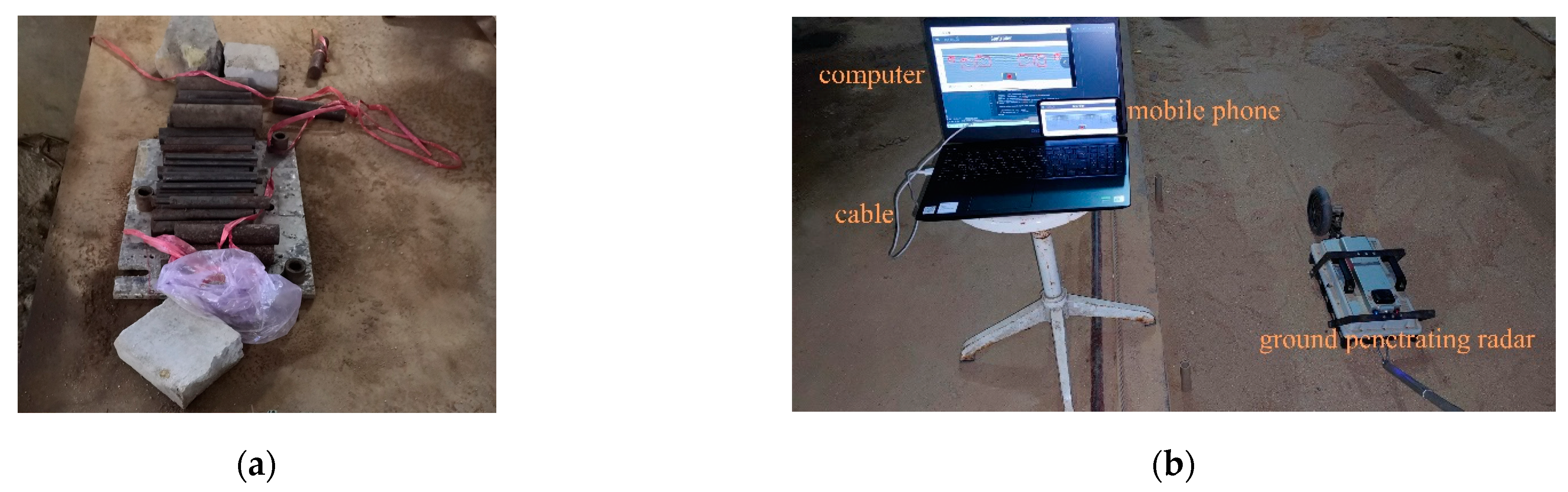

2.4. Ground Penetrating Radar Experiment

3. Results and Discussion

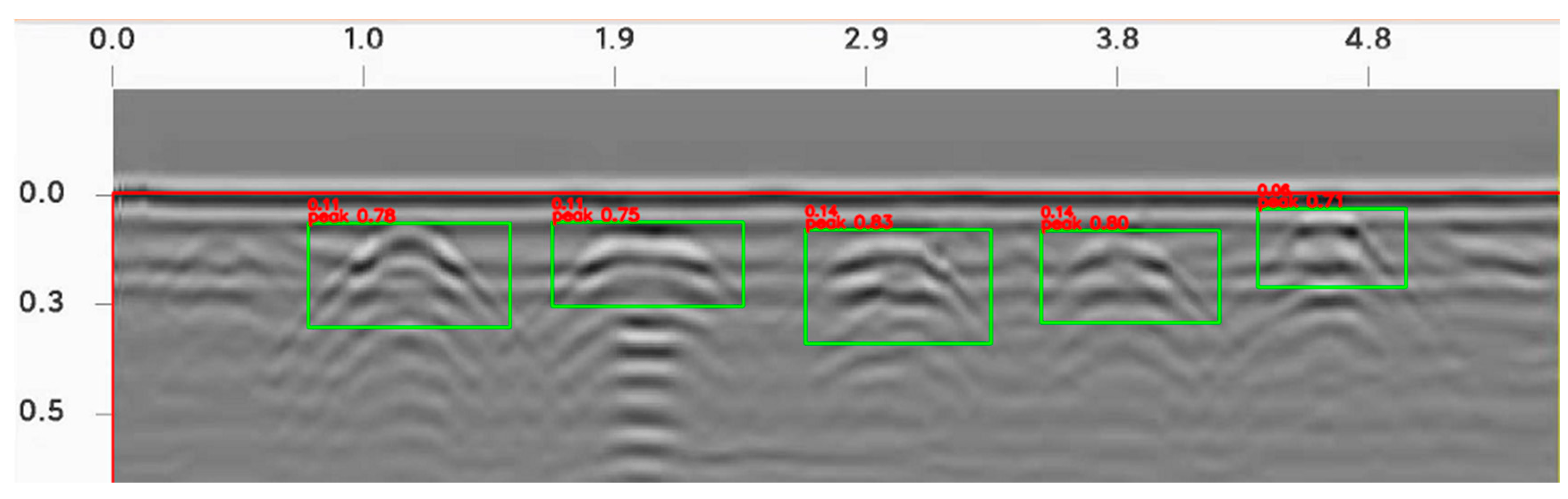

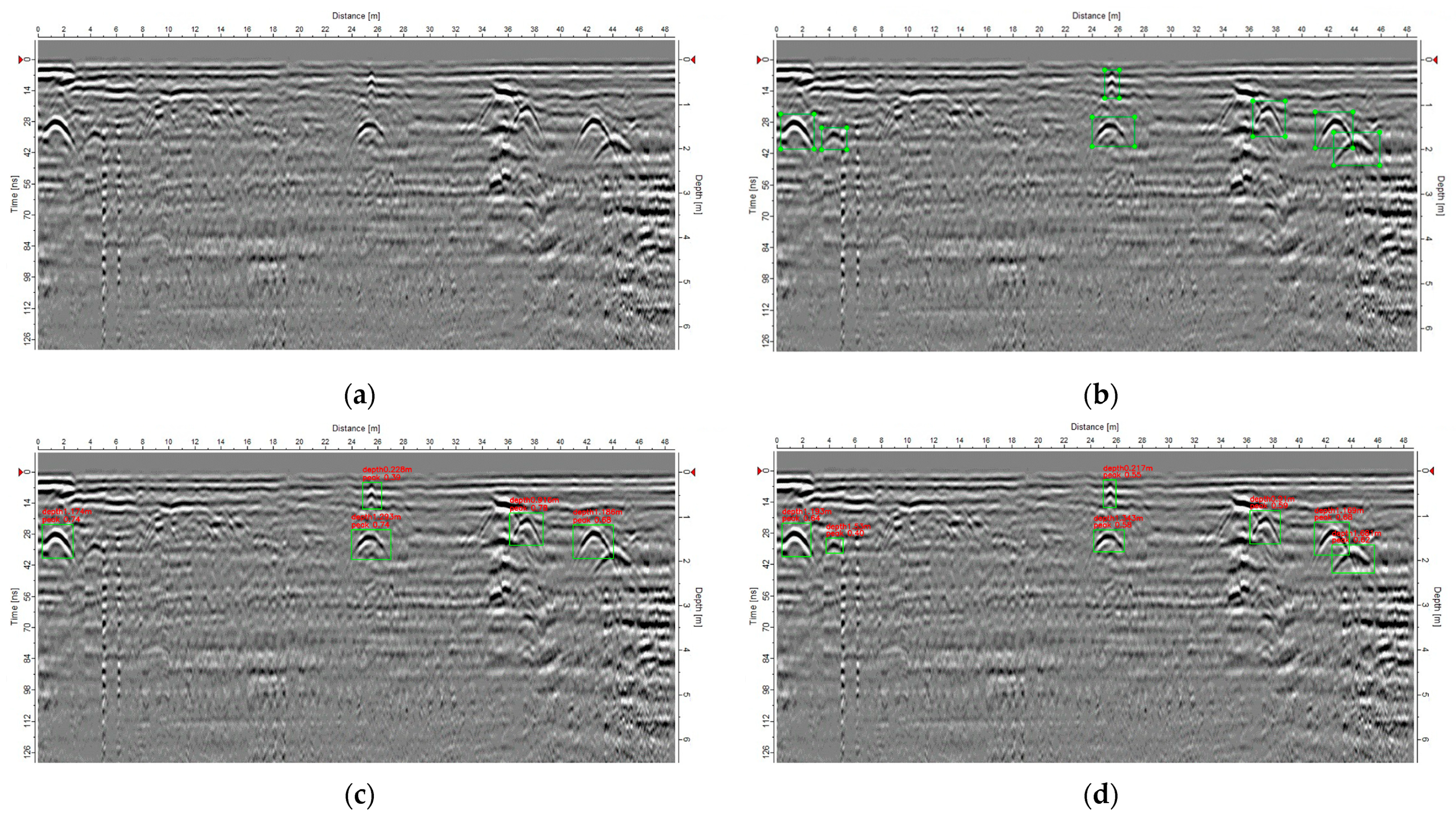

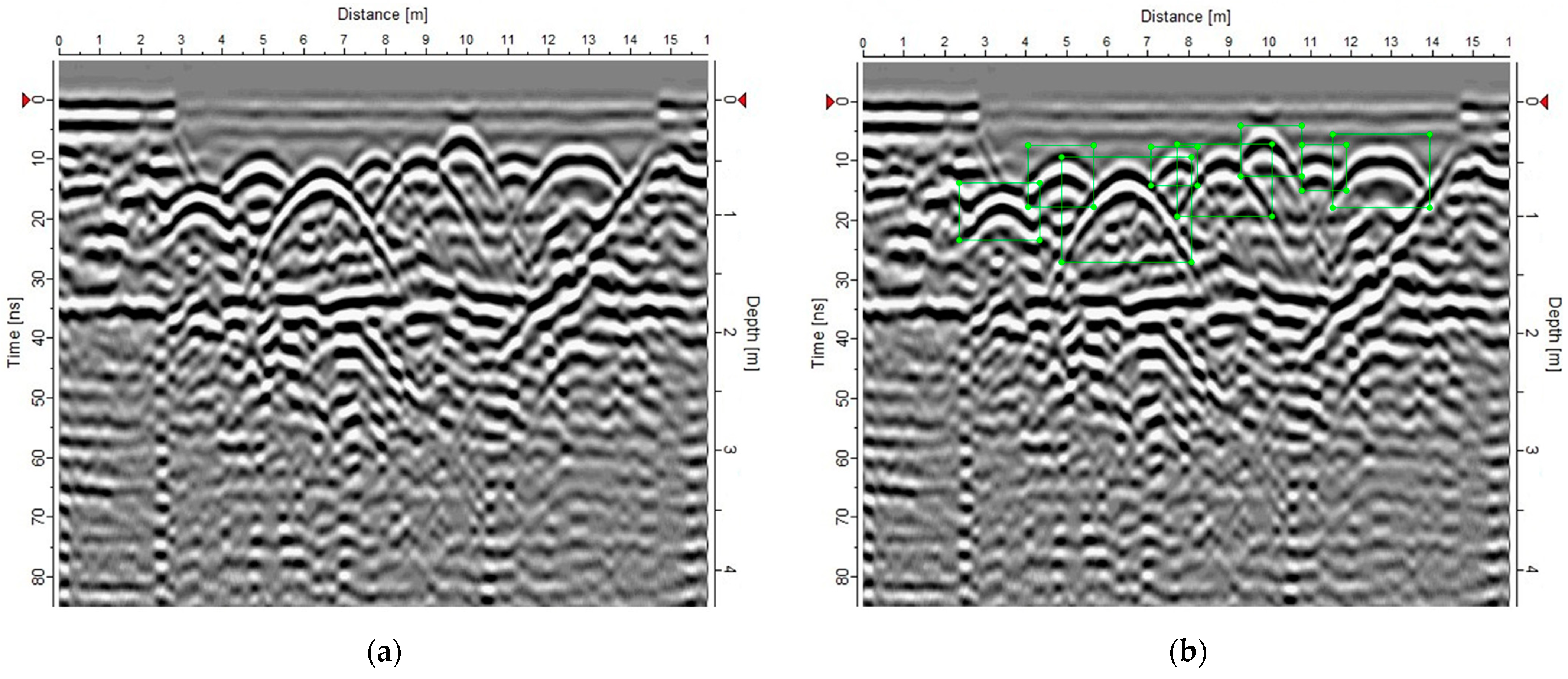

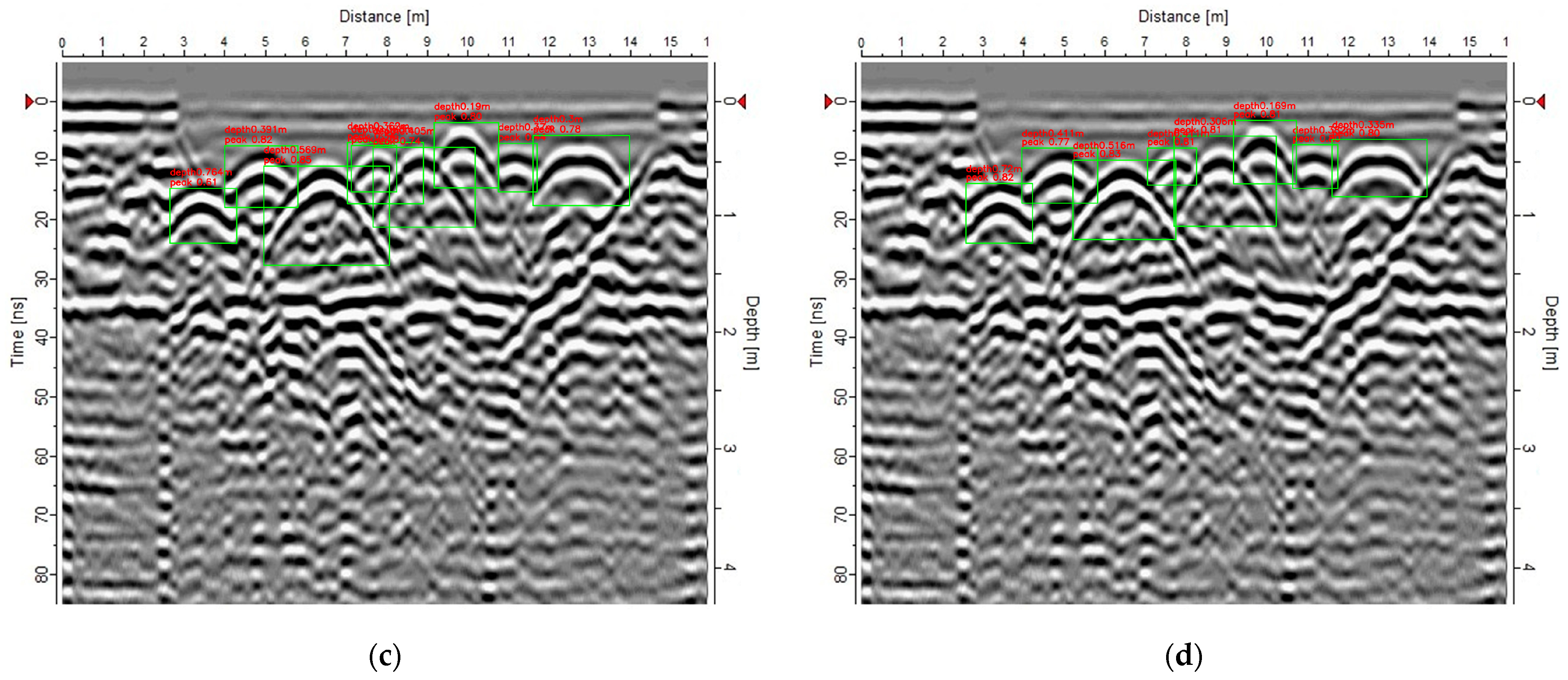

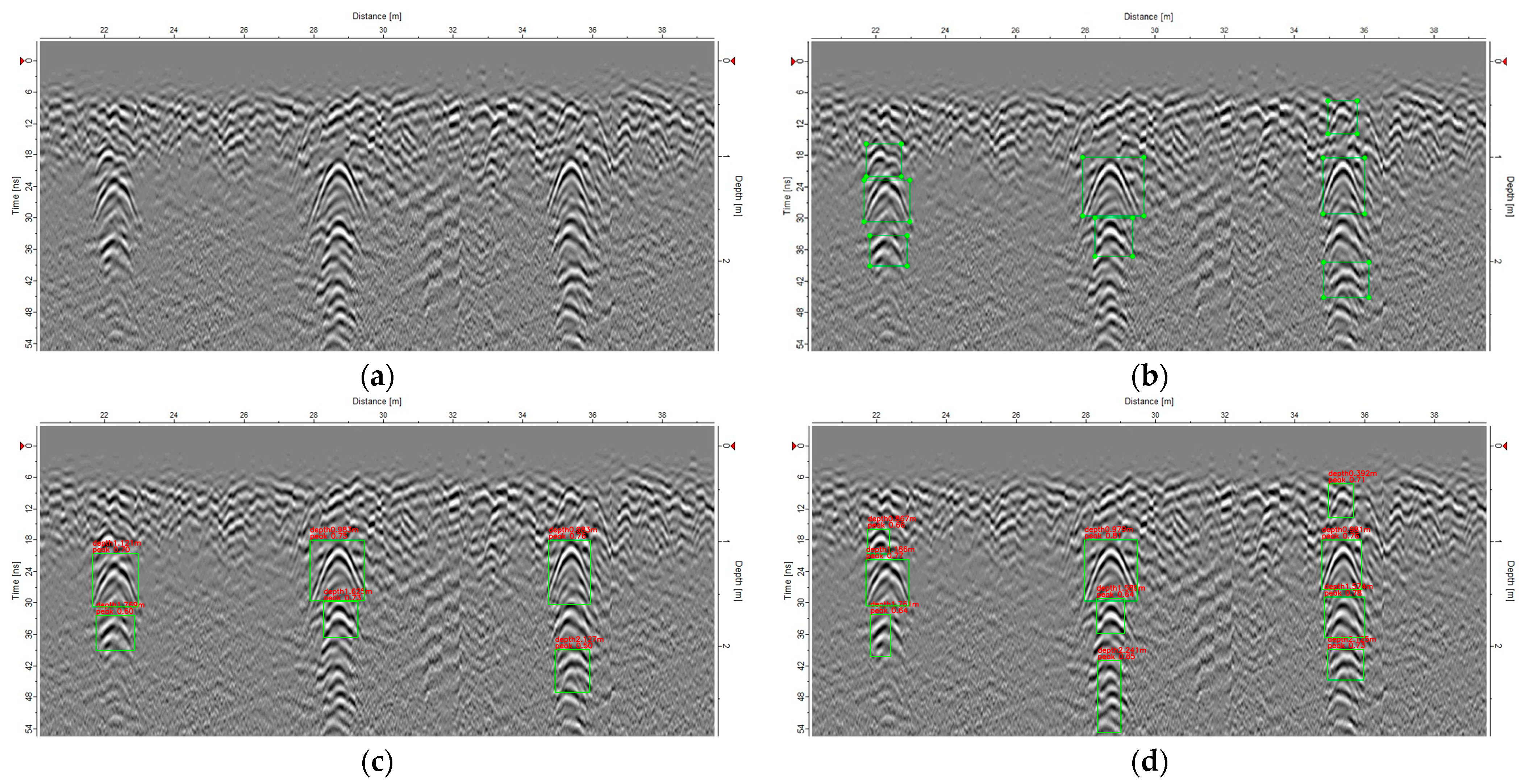

3.1. Detection Effect

3.2. Model Comparison

3.2.1. Model Evaluation Metrics

3.2.2. Detector Comparison

4. Conclusions

- (1) We improved the YOLOv5 network structure by replacing FPN+PANet with BiFPN, adding a detection layer for smaller targets, replacing the GIoU loss function with the CIoU loss function, and adding copy-paste augmentation to the data augmentation methods. The improved model was more accurate and more robust than the unmodified model, with lower false detection and missed detection rates.

- (2) After detecting a target, we used the regression equation and the supervision function to compensate for the positioning error caused by the signal delay of the ground penetrating radar receiver. As a result, when a foreign object is detected in the underground soil, its global longitude, latitude, and burial depth are known in real time, which is convenient for subsequent processing on an unmanned farm.

- (3) We solved the problem of unsatisfactory GPR image quality that arises when images are obtained as screenshots from MALA’s mobile phone software, which are easily affected by the screen resolution of the mobile phone. After obtaining the ground penetrating radar signal, we filtered out the direct-wave interference and enhanced the filtered signal to obtain a better ground penetrating radar image, which is convenient for subsequent target detection.
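The direct-wave filtering and signal enhancement summarized in conclusion (3) can be illustrated with a minimal sketch. It assumes mean-trace subtraction (a common background-removal technique) and a linear time-varying gain; the conclusions do not say which filter or gain function was actually used, so `remove_direct_wave`, `apply_linear_gain`, and `max_gain` are hypothetical names chosen for illustration only.

```python
import numpy as np


def remove_direct_wave(bscan: np.ndarray) -> np.ndarray:
    """Subtract the mean trace from every trace (assumed background removal).

    bscan has shape (n_samples, n_traces): rows are time samples,
    columns are traces recorded along the survey line.
    """
    # The direct wave arrives at the same time in every trace, so it
    # dominates the per-sample mean across traces and cancels on subtraction.
    mean_trace = bscan.mean(axis=1, keepdims=True)
    return bscan - mean_trace


def apply_linear_gain(bscan: np.ndarray, max_gain: float = 5.0) -> np.ndarray:
    """Amplify later (deeper) samples with a gain ramp from 1 to max_gain."""
    n_samples = bscan.shape[0]
    gain = np.linspace(1.0, max_gain, n_samples)[:, None]
    return bscan * gain


# Synthetic B-scan: a strong horizontal band (direct wave) plus one weak reflector.
n_samples, n_traces = 64, 32
raw = np.zeros((n_samples, n_traces))
raw[5:8, :] = 10.0      # direct wave, identical in every trace
raw[40, 16] += 1.0      # weak buried target

filtered = remove_direct_wave(raw)
enhanced = apply_linear_gain(filtered)

# Location of the strongest remaining response after filtering and gain.
peak = np.unravel_index(np.argmax(np.abs(enhanced)), enhanced.shape)
print("strongest response at (sample, trace):", peak)
```

In this synthetic case the horizontal band is removed entirely and the weak reflector becomes the strongest response. Real radargrams may need a windowed mean or a different gain law.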

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Loss function | IoU 0.5 | IoU 0.6 | IoU 0.7 | IoU 0.8 | IoU 0.9 | IoU 0.95 |
|---|---|---|---|---|---|---|
| GIoU | 0.609 | 0.598 | 0.567 | 0.484 | 0.311 | 0.227 |
| CIoU | 0.653 | 0.643 | 0.616 | 0.546 | 0.382 | 0.264 |
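To make the difference between the two loss functions compared above concrete, the following sketch computes IoU, GIoU, and CIoU for a pair of axis-aligned boxes using the standard published definitions. The function name `box_metrics` and the `(x1, y1, x2, y2)` box format are assumptions for illustration, not the authors' implementation; the corresponding losses are `1 - GIoU` and `1 - CIoU`.

```python
import math


def box_metrics(a, b):
    """Return (IoU, GIoU, CIoU) for two boxes given as (x1, y1, x2, y2).

    Assumes both boxes have positive width and height.
    """
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b

    # Intersection and union areas.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union

    # Smallest box enclosing both inputs.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    enclose = cw * ch

    # GIoU penalizes the empty part of the enclosing box.
    giou = iou - (enclose - union) / enclose

    # CIoU penalizes center distance and aspect-ratio mismatch instead.
    rho2 = ((ax1 + ax2) / 2 - (bx1 + bx2) / 2) ** 2 \
         + ((ay1 + ay2) / 2 - (by1 + by2) / 2) ** 2
    c2 = cw ** 2 + ch ** 2
    v = (4 / math.pi ** 2) * (
        math.atan((bx2 - bx1) / (by2 - by1)) - math.atan((ax2 - ax1) / (ay2 - ay1))
    ) ** 2
    alpha = v / (1 - iou + v) if v > 0 else 0.0
    ciou = iou - rho2 / c2 - alpha * v
    return iou, giou, ciou


# A small box nested in the corner of a larger one: GIoU equals IoU here,
# while CIoU is lower because the centers do not coincide.
iou, giou, ciou = box_metrics((0, 0, 4, 4), (0, 0, 2, 2))
print(iou, giou, ciou)
```

When one box lies fully inside the other, the enclosing box equals the union, so the GIoU penalty vanishes and only the CIoU center-distance term still distinguishes a centered prediction from an off-center one.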

| None | BiFPN | SE | Data Augmentation | CIoU | P | R | mAP |

|---|---|---|---|---|---|---|---|

| √ | 0.860 | 0.494 | 0.610 | ||||

| √ | √ | 0.757 | 0.682 | 0.732 | |||

| √ | 0.783 | 0.542 | 0.609 | ||||

| √ | √ | 0.776 | 0.599 | 0.653 | |||

| √ | √ | √ | √ | 0.826 | 0.680 | 0.757 |

| Metric | Fast RCNN | SSD | YOLOv5 | Ours |
|---|---|---|---|---|
| P | 0.558 | 0.905 | 0.86 | 0.826 |
| R | 0.762 | 0.473 | 0.496 | 0.68 |
| AP | 0.731 | 0.656 | 0.63 | 0.757 |
| F1 | 0.64 | 0.62 | 0.68 | 0.73 |
| Inference time | 56.2 ms | 8.14 ms | 9.5 ms | 9.1 ms |
| Weight | 108 MB | 90.6 MB | 13.6 MB | 16.1 MB |
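The F1 row in the comparison above can be related to the P and R rows with a short sketch, assuming the standard definition of F1 as the harmonic mean of precision and recall. The helper name `f1_score` is hypothetical.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# SSD column of the comparison table: P = 0.905, R = 0.473.
ssd_f1 = f1_score(0.905, 0.473)
print(round(ssd_f1, 2))  # 0.62, matching the tabulated F1 for SSD
```

Because the harmonic mean is pulled toward the smaller of the two values, a detector with high precision but low recall still receives a modest F1.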

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qiu, Z.; Zhao, Z.; Chen, S.; Zeng, J.; Huang, Y.; Xiang, B. Application of an Improved YOLOv5 Algorithm in Real-Time Detection of Foreign Objects by Ground Penetrating Radar. Remote Sens. 2022, 14, 1895. https://doi.org/10.3390/rs14081895
