Earth Observation Mission of a 6U CubeSat with a 5-Meter Resolution for Wildfire Image Classification Using Convolution Neural Network Approach

Abstract

1. Introduction

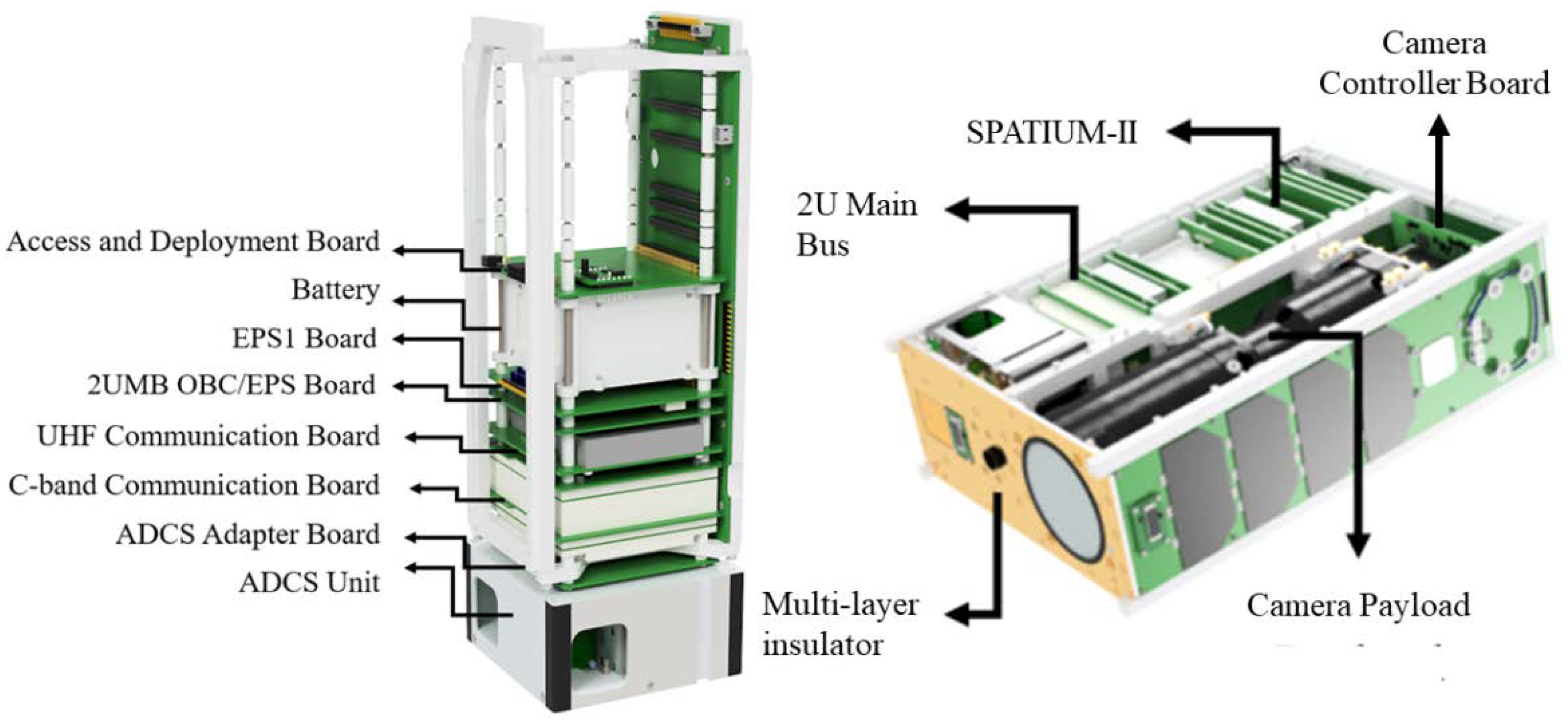

2. KITSUNE Satellite

2.1. Overview

2.2. Mission and System Requirements

- The ground resolution should be finer than 6 m/pixel, with a ground swath of approximately 20 km;

- The overall payload should be able to fit within a volume of 90.0 mm × 90.0 mm × 327.5 mm;

- The camera sensor should have a pixel size larger than 3.0 µm, a global shutter, and a shutter speed shorter than 1/3200 s. In addition, it should be able to capture six consecutive images at approximately 1 frame per second;

- The camera controller board (CCB) should capture images and transfer them for C-band downlink when requested by uplink commands. The downlink may run either in real-time mode or in a downlink mode that reads stored images from the C-band flash memory;

- The camera sensor should capture RGB images with JPG compression (>90%) with correct colors;

- The power consumption of the overall mission should be less than 10.0 Wh per orbit, and the in-rush current should be less than the overcurrent protection settings of the EPS;

- The mission should be operable through uplink commands from both the UHF ground station (GS) and the C-band mobile GS. In addition, images and telemetry should be received by the UHF GS and the C-band main GS;

- The satellite should point the camera and the C-band Tx antenna with approximately 0.25° accuracy in target-pointing and nadir-pointing modes using the attitude determination and control system (ADCS);

- The electronics should survive a total ionizing dose of approximately 200.0 Gy. In addition, they should operate within a temperature range of −20.0 °C to +50.0 °C, while the temperature difference for the lens components should stay between −5.0 °C and +5.0 °C.
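The resolution and swath requirement can be sanity-checked with the pinhole-camera relation GSD = H · p / f. The sketch below is only a rough illustration: the 300 mm focal length comes from the hardware specification table and the 3.0 µm pixel pitch is the lower bound from the requirement, while the 400 km altitude and the function names are assumptions introduced here, not values stated in this section.

```python
import math


def ground_sampling_distance(altitude_m: float,
                             pixel_pitch_m: float,
                             focal_length_m: float) -> float:
    """Nadir ground sampling distance (m/pixel) from the pinhole relation."""
    return altitude_m * pixel_pitch_m / focal_length_m


def pixels_for_swath(swath_m: float, gsd_m: float) -> int:
    """Minimum number of across-track pixels needed to cover the swath."""
    return math.ceil(swath_m / gsd_m)


ALTITUDE_M = 400e3        # ASSUMPTION: illustrative orbit altitude
PIXEL_PITCH_M = 3.0e-6    # lower bound on pixel size from the requirement
FOCAL_LENGTH_M = 0.300    # focal length from the specification table
SWATH_M = 20e3            # required ground swath
MAX_GSD_M = 6.0           # required ground resolution limit

gsd = ground_sampling_distance(ALTITUDE_M, PIXEL_PITCH_M, FOCAL_LENGTH_M)
print(f"GSD: {gsd:.2f} m/pixel, requirement met: {gsd < MAX_GSD_M}")
print(f"Pixels needed at the 6 m limit: {pixels_for_swath(SWATH_M, MAX_GSD_M)}")
```

Because the GSD scales linearly with altitude and pixel pitch, a larger pixel or a higher orbit coarsens the resolution in direct proportion, which is why the requirement fixes the pixel size and the swath together.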

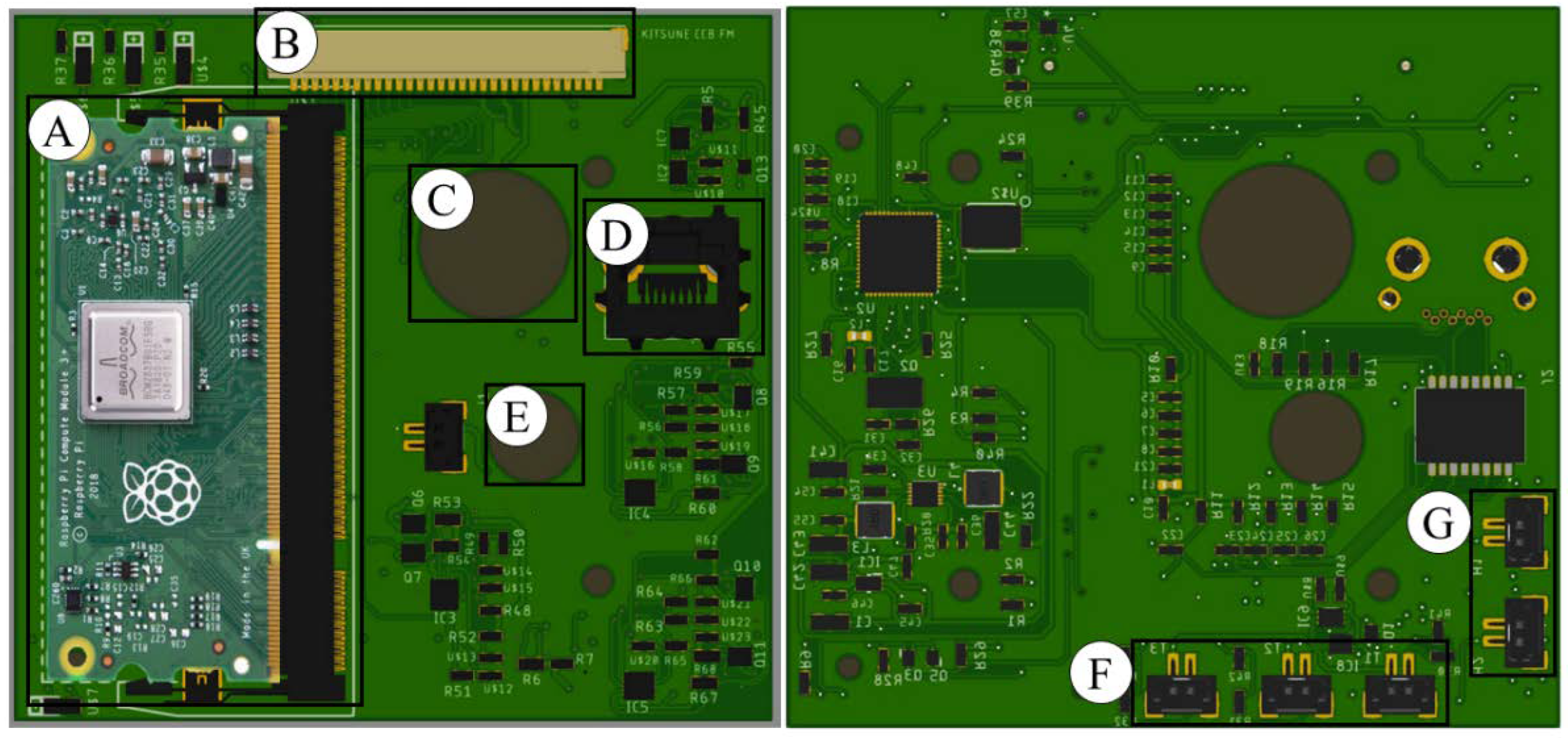

2.3. Hardware

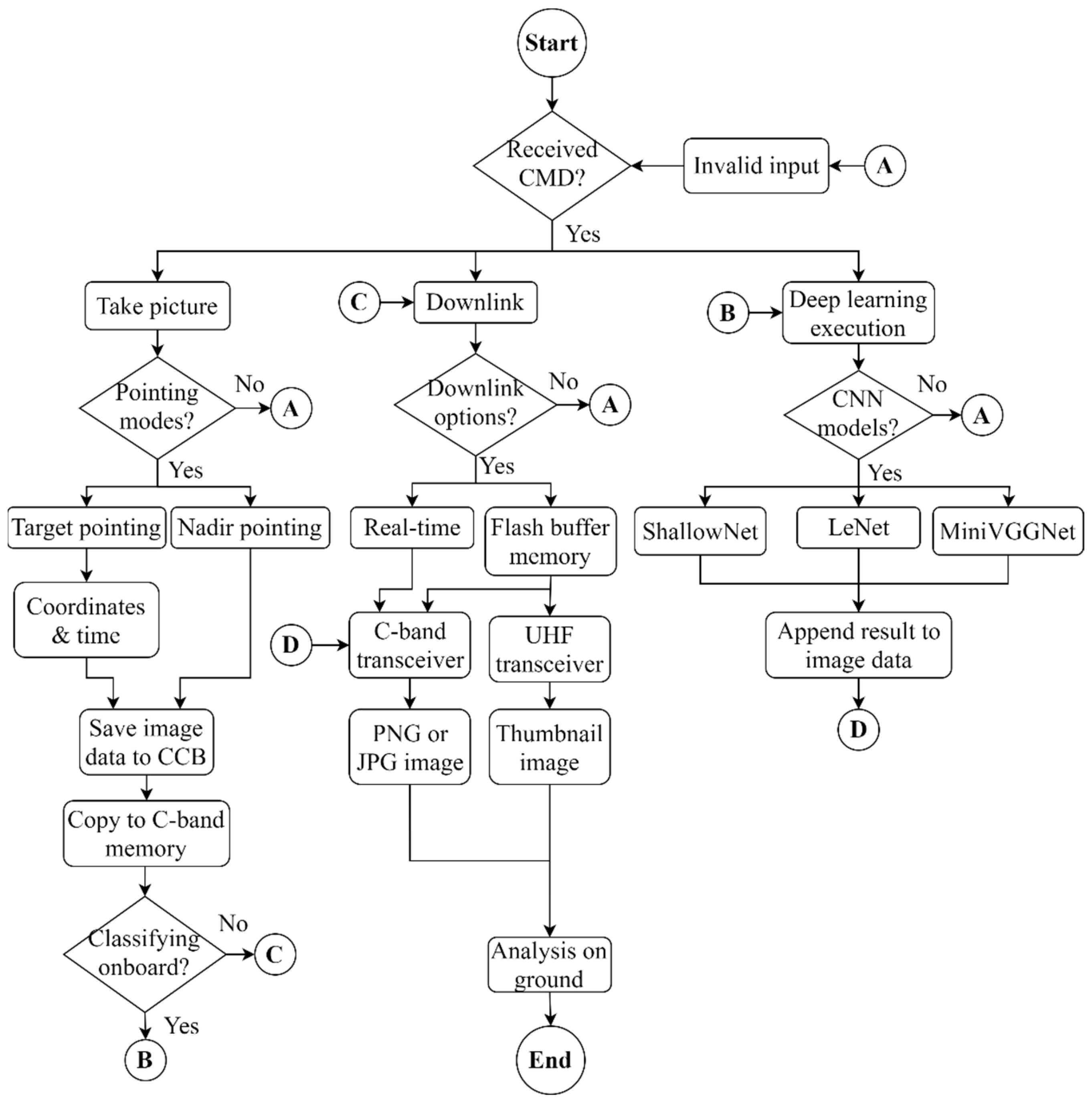

2.4. Software

2.4.1. Camera Controller Board

2.4.2. Ground Station Software

3. Methods

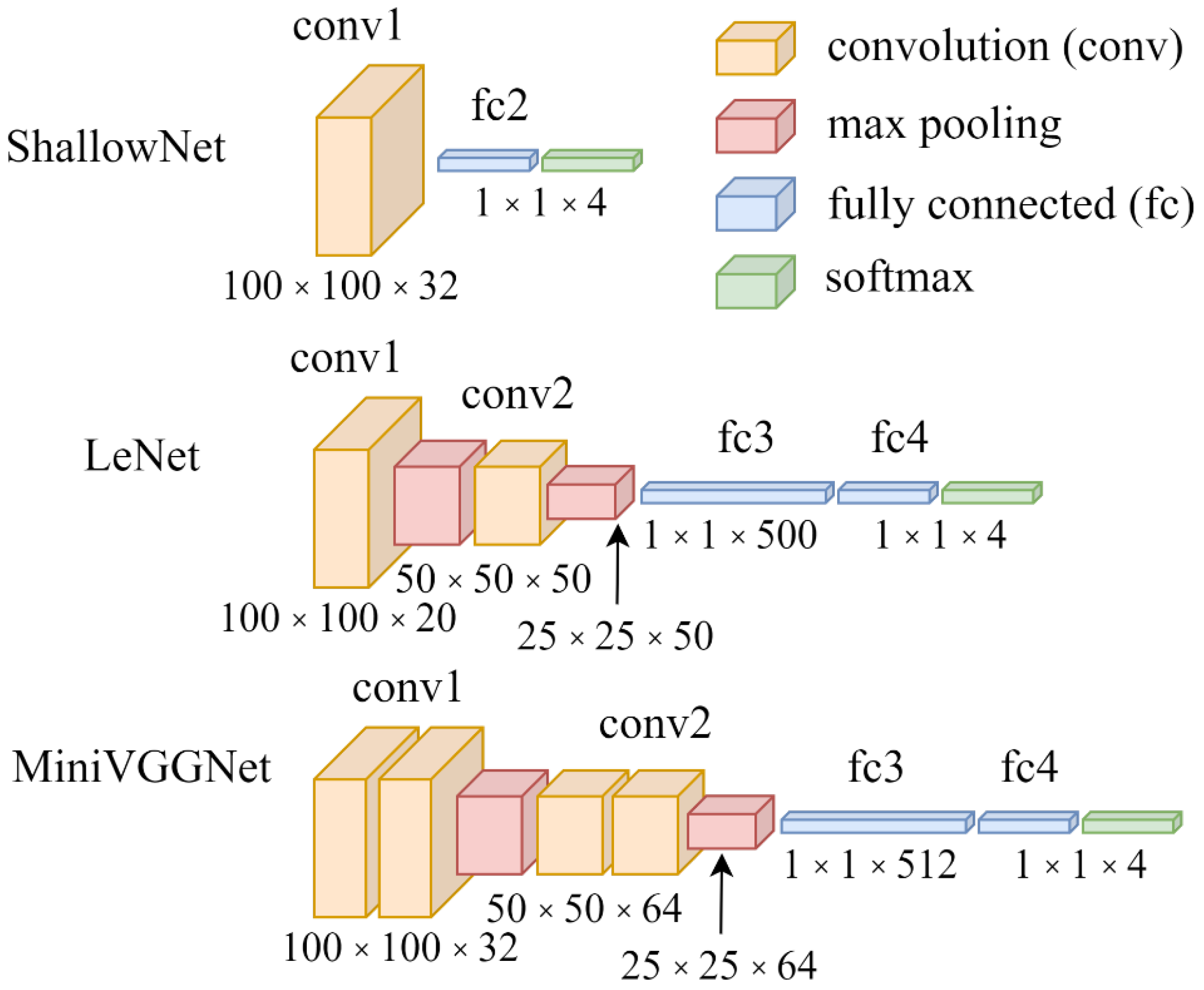

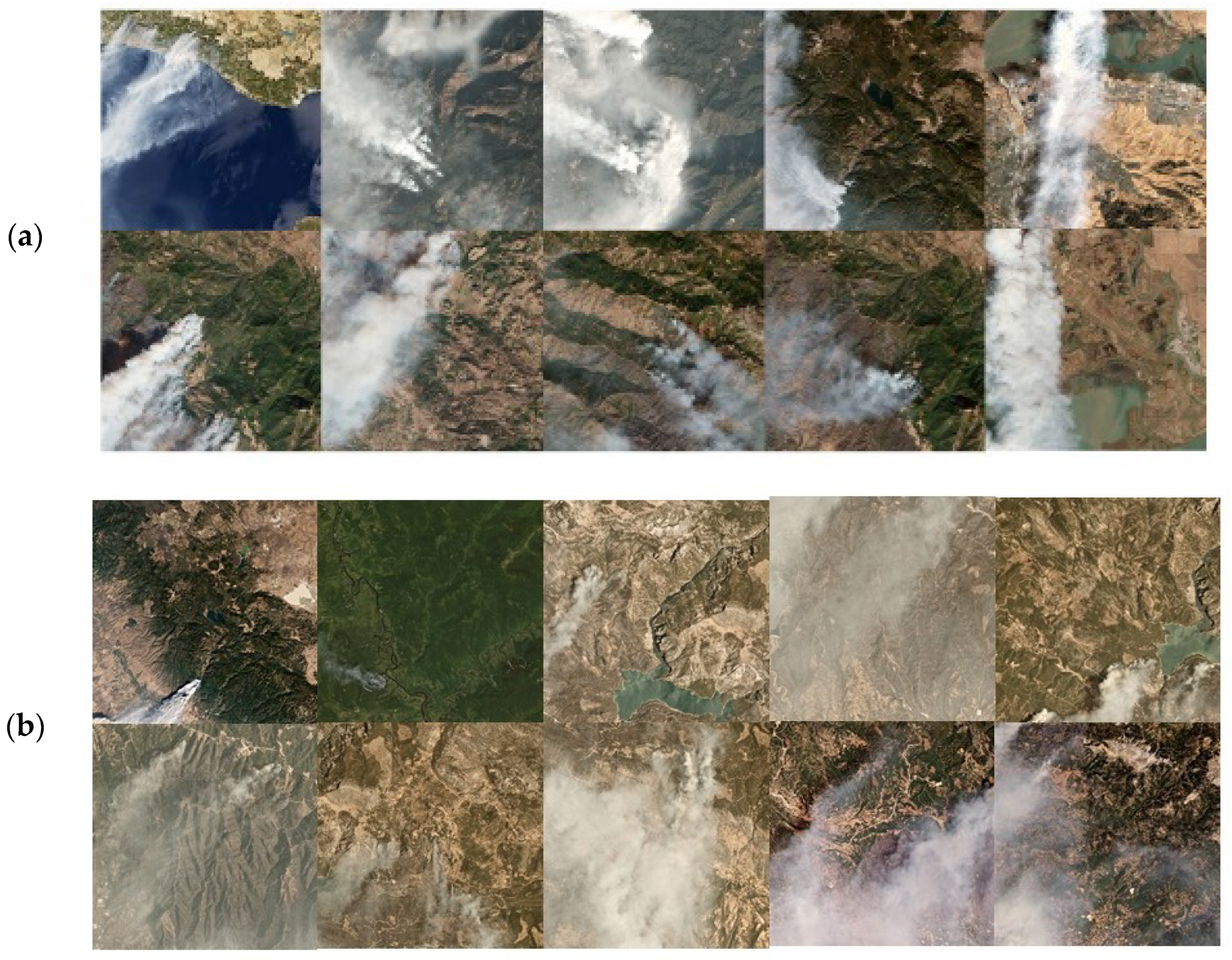

3.1. Wildfire Image Classification

3.2. Functional Test

4. Results

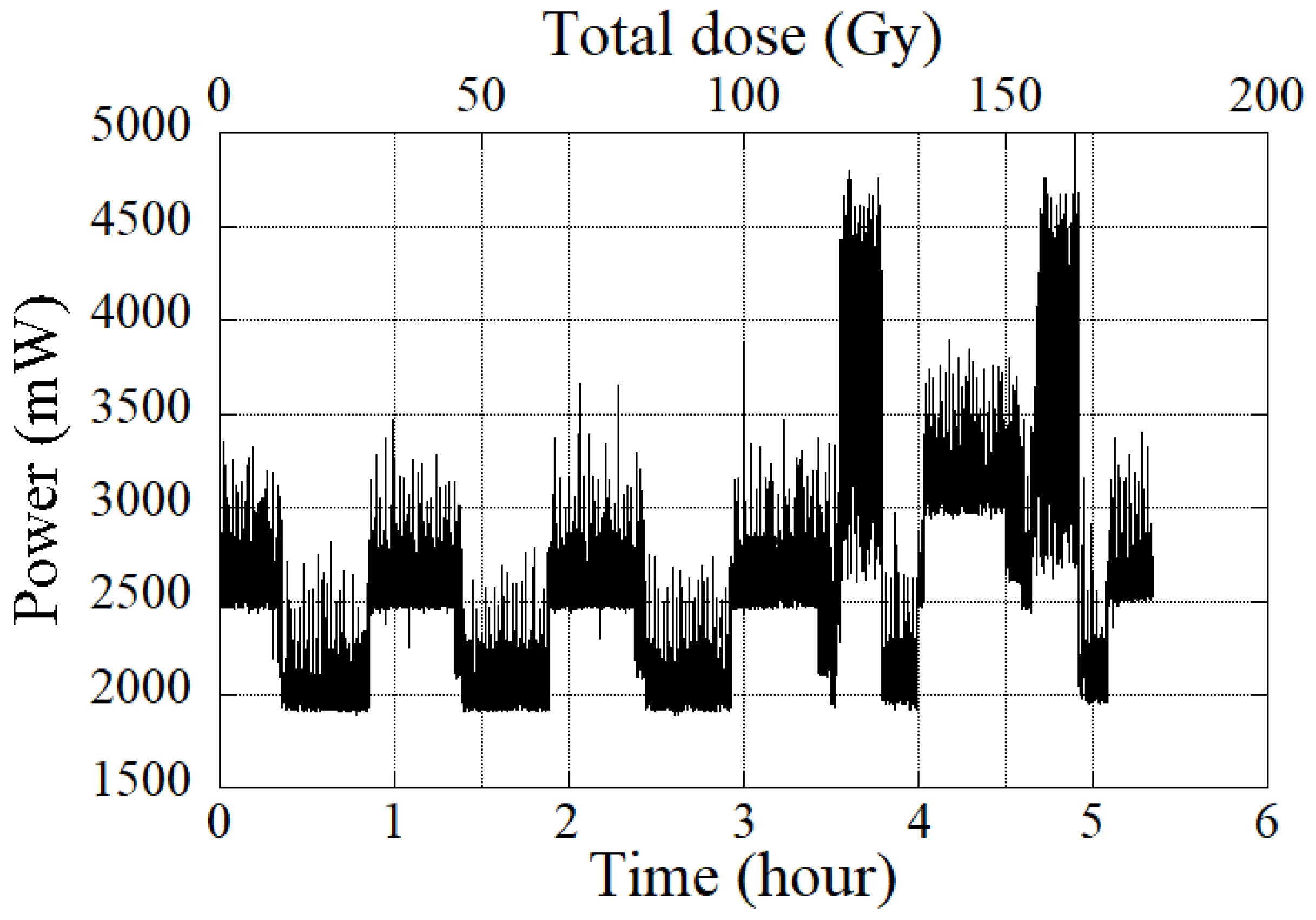

4.1. Total Ionizing Dose (TID) Radiation Test

4.2. Thermal Vacuum Test (TVT)

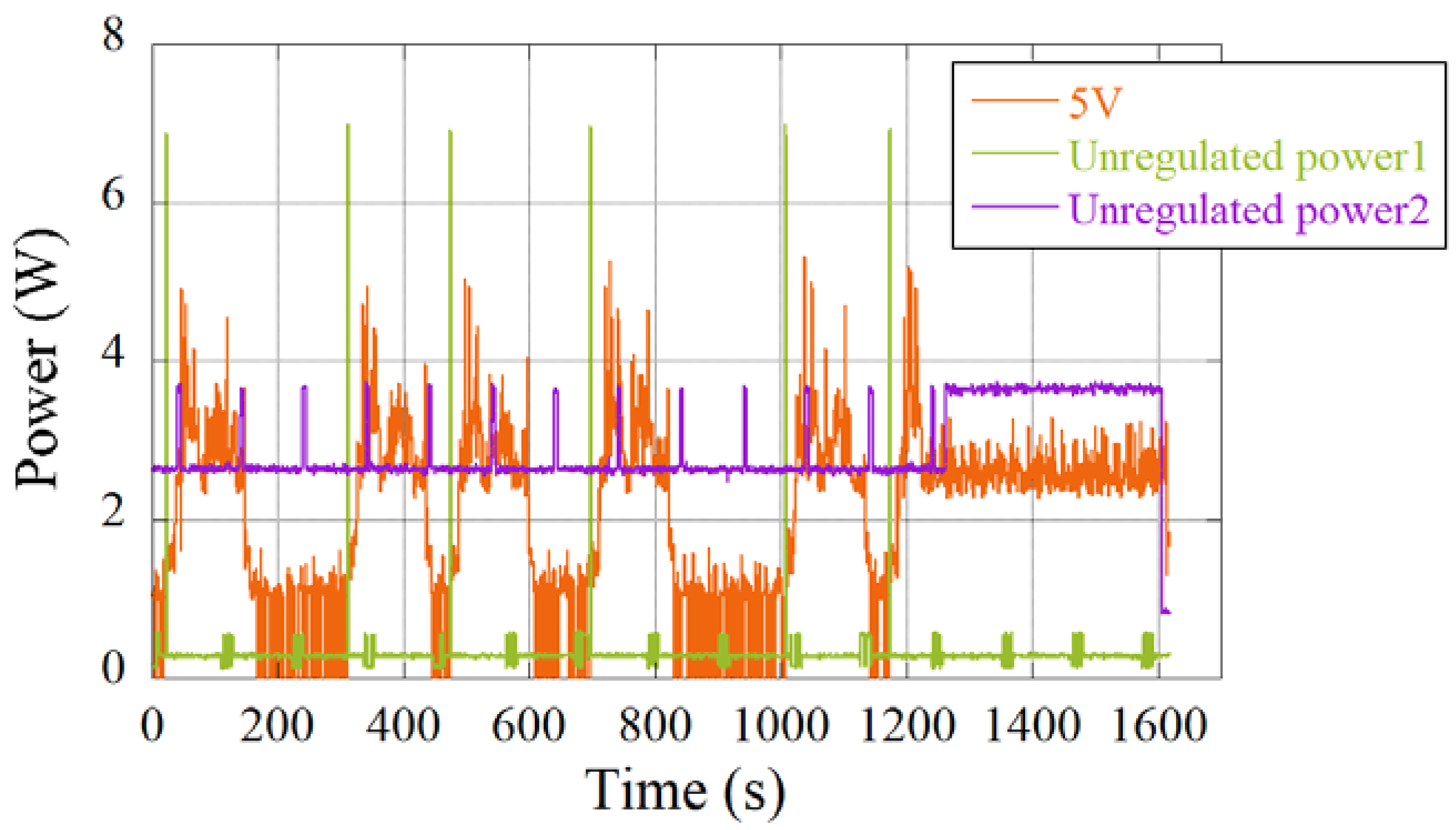

4.3. Long-Duration Operation Test (LDOT)

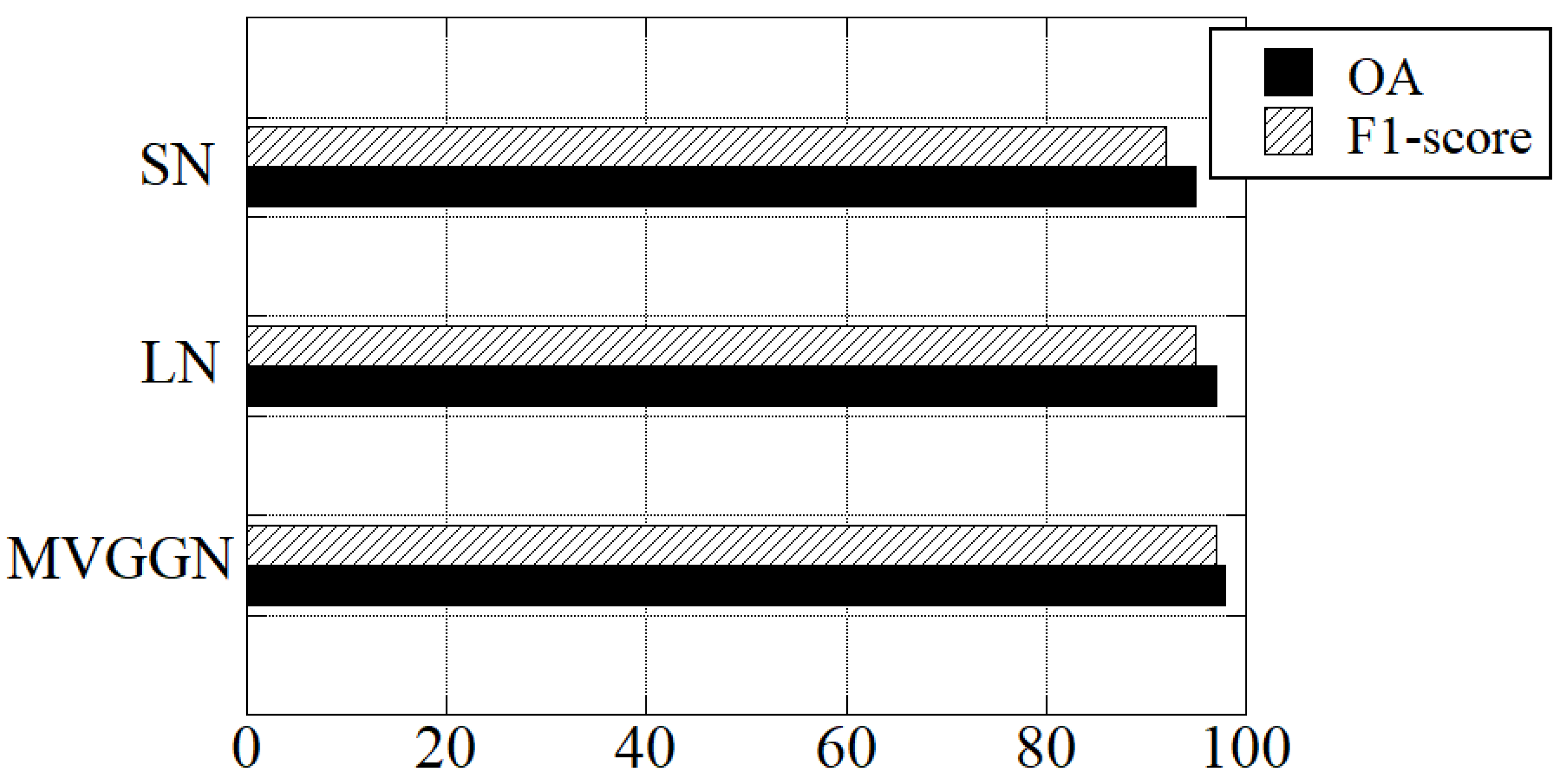

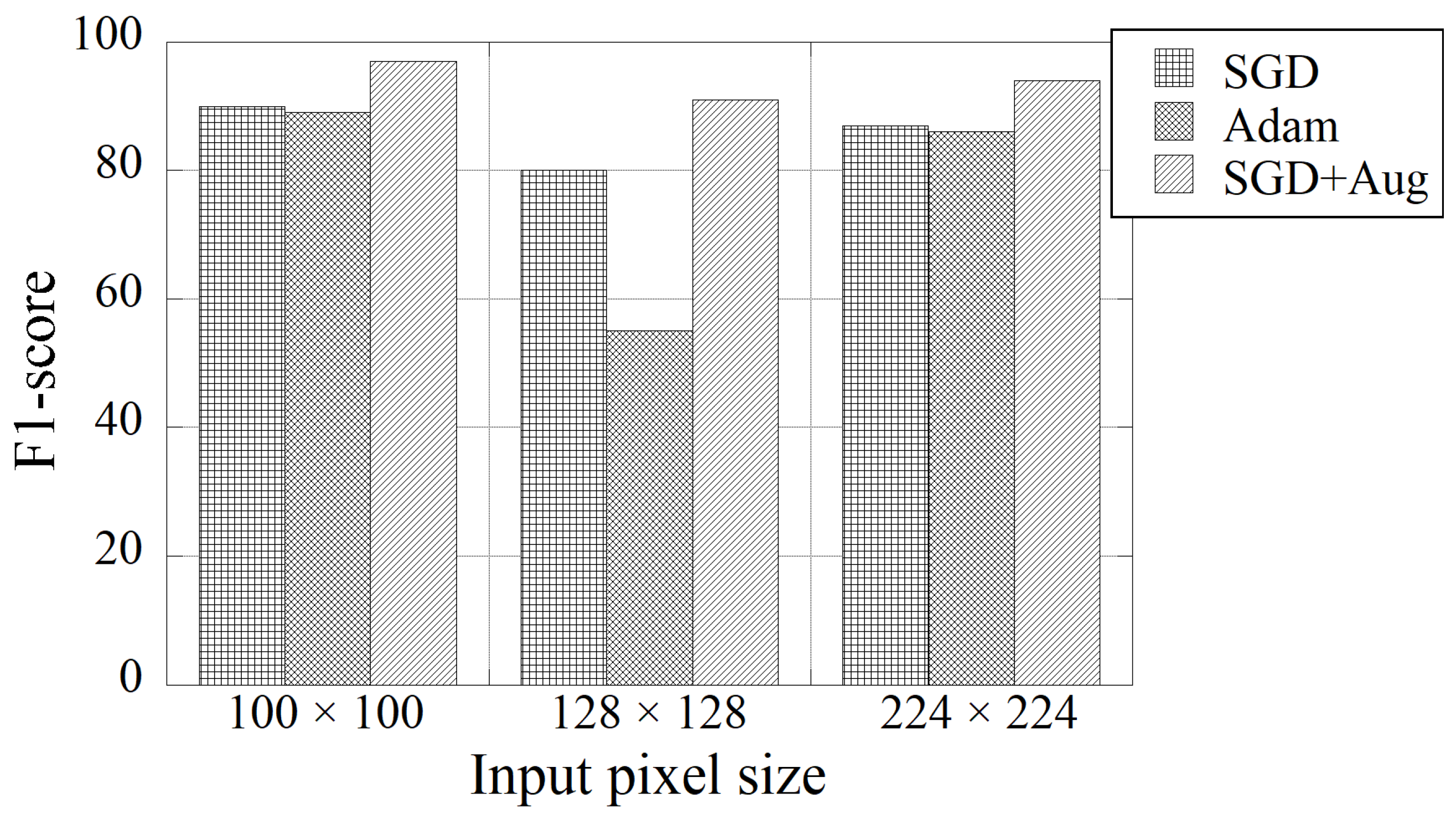

4.4. Convolution Neural Network for Wildfire Dataset

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Item | Information |
|---|---|
| Sensor | |
| Number of pixels | 31.4 million pixels |
| Sensor type | CMOS |
| Shutter method | Global shutter |
| Shutter speed | 30 μs to 10.0 s |
| Interface | Ethernet |
| Data transmission speed | 10 Mbps |
| Power supply | +12.0 V |
| Camera controller board | |
| Model | Customized board with Raspberry Pi Compute Module 3+ |
| Operating system | GNU/Linux Ubuntu distribution version 18.04 |
| CPU | ARMv8, 1.2 GHz |
| Memory | 32 GB (flash), 1 GB (RAM) |
| Image capturing speed | 0.42–8.75 frames per second (depending on image resolution) |
| Interface | Ethernet (camera), USB (programming), UART (OBC and C-band board) |
| Power supply | +5.0 V |
| Optics | |
| Focal length | 300 mm |
| Temperature control | Active control and multi-layer insulator |
| Heaters | Polyimide heaters |
| Heater power supply | 7.4–8.4 V (unregulated power line) |
| Temperature sensors | Radial glass thermistor (G10K3976) |

| Pass | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Purpose | Camera capture | Downlink thumbnails and JPG image | Downlink PNG image via C-band | Deep-learning execution |
| 12 V | + | − | − | − |
| 5 V | + | + | − | + |
| Unregulated power 1 | − | + | + | − |
| Unregulated power 2 | − | + | + | − |
| Total duration (s) | 1400 | 130 | 512 | 137 |
| Peak power (W) | 18.40 | 20.10 | 23.14 | 5.31 |
| Energy consumption (Wh) | 2.90 | 2.81 | 2.33 | 0.10 |
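The per-pass energy figures can be compared against the mission requirement of less than 10.0 Wh per orbit. The following is a minimal sketch of that check; it assumes each pass falls within a single orbit, and it also evaluates the pessimistic case in which all four passes are charged to one orbit. The variable and function names are illustrative.

```python
ORBIT_ENERGY_BUDGET_WH = 10.0  # mission requirement: < 10.0 Wh per orbit

# Energy consumption per pass (Wh), copied from the table above.
PASS_ENERGY_WH = {1: 2.90, 2: 2.81, 3: 2.33, 4: 0.10}


def within_budget(energy_wh: float,
                  budget_wh: float = ORBIT_ENERGY_BUDGET_WH) -> bool:
    """True if the consumed energy stays strictly below the budget."""
    return energy_wh < budget_wh


def margin_wh(energy_wh: float,
              budget_wh: float = ORBIT_ENERGY_BUDGET_WH) -> float:
    """Remaining energy margin in Wh (positive means under budget)."""
    return budget_wh - energy_wh


for pass_id, energy in PASS_ENERGY_WH.items():
    print(f"Pass {pass_id}: {energy:.2f} Wh, "
          f"margin {margin_wh(energy):.2f} Wh, ok={within_budget(energy)}")

# ASSUMPTION: worst case, all four passes counted against a single orbit.
total_wh = sum(PASS_ENERGY_WH.values())
print(f"All passes combined: {total_wh:.2f} Wh, ok={within_budget(total_wh)}")
```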

| CNN model | True label (rows) / predicted label (columns) | Cloud | Land | Sea | Wildfire |
|---|---|---|---|---|---|
| ShallowNet | Cloud | 228 (94.6%) | 4 (1.6%) | 0 (0%) | 9 (3.7%) |
| | Land | 2 (0.8%) | 250 (97.3%) | 3 (1.2%) | 2 (0.8%) |
| | Sea | 0 (0%) | 4 (1.5%) | 260 (97.4%) | 3 (1.1%) |
| | Wildfire | 4 (1.7%) | 21 (8.9%) | 0 (0%) | 210 (89.3%) |
| LeNet | Cloud | 236 (97.9%) | 2 (0.8%) | 0 (0%) | 3 (1.2%) |
| | Land | 3 (1.2%) | 252 (98.1%) | 0 (0%) | 2 (0.8%) |
| | Sea | 0 (0%) | 1 (0.4%) | 266 (99.6%) | 0 (0%) |
| | Wildfire | 2 (0.9%) | 15 (6.4%) | 0 (0%) | 218 (92.8%) |
| MiniVGGNet | Cloud | 233 (96.7%) | 8 (3.3%) | 0 (0%) | 0 (0%) |
| | Land | 1 (0.4%) | 256 (99.6%) | 0 (0%) | 0 (0%) |
| | Sea | 0 (0%) | 0 (0%) | 267 (100%) | 0 (0%) |
| | Wildfire | 1 (0.4%) | 13 (5.5%) | 0 (0%) | 221 (94.0%) |
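Summary metrics can be derived directly from the confusion-matrix counts above. The sketch below computes overall accuracy, plus recall and precision for the wildfire class, treating rows as true labels and columns as predicted labels (consistent with the row-wise percentages in the table). The helper names are illustrative and are not taken from the authors' code.

```python
CLASSES = ["Cloud", "Land", "Sea", "Wildfire"]

# Rows: true label; columns: predicted label (counts from the table above).
CONFUSION = {
    "ShallowNet": [[228, 4, 0, 9], [2, 250, 3, 2],
                   [0, 4, 260, 3], [4, 21, 0, 210]],
    "LeNet": [[236, 2, 0, 3], [3, 252, 0, 2],
              [0, 1, 266, 0], [2, 15, 0, 218]],
    "MiniVGGNet": [[233, 8, 0, 0], [1, 256, 0, 0],
                   [0, 0, 267, 0], [1, 13, 0, 221]],
}


def accuracy(matrix):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def recall(matrix, cls):
    """Correct predictions for a class divided by its true sample count."""
    return matrix[cls][cls] / sum(matrix[cls])


def precision(matrix, cls):
    """Correct predictions for a class divided by all predictions of it."""
    return matrix[cls][cls] / sum(row[cls] for row in matrix)


WILDFIRE = CLASSES.index("Wildfire")
for name, matrix in CONFUSION.items():
    print(f"{name}: accuracy={accuracy(matrix):.3f}, "
          f"wildfire recall={recall(matrix, WILDFIRE):.3f}, "
          f"wildfire precision={precision(matrix, WILDFIRE):.3f}")
```

For all three models, the largest off-diagonal wildfire count is in the Land column, so wildfire recall is limited mainly by wildfire images being labeled as land.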

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Azami, M.H.b.; Orger, N.C.; Schulz, V.H.; Oshiro, T.; Cho, M. Earth Observation Mission of a 6U CubeSat with a 5-Meter Resolution for Wildfire Image Classification Using Convolution Neural Network Approach. Remote Sens. 2022, 14, 1874. https://doi.org/10.3390/rs14081874
