Performance and the Optimal Integration of Sentinel-1/2 Time-Series Features for Crop Classification in Northern Mongolia

Abstract

1. Introduction

- (1) Which satellite combination is best for crop-type classification in northern Mongolia?

- (2) What are the suitable time windows for crop classification in northern Mongolia?

- (3) What is the most effective and sensitive metric for crop classification?

2. Study Area and Data

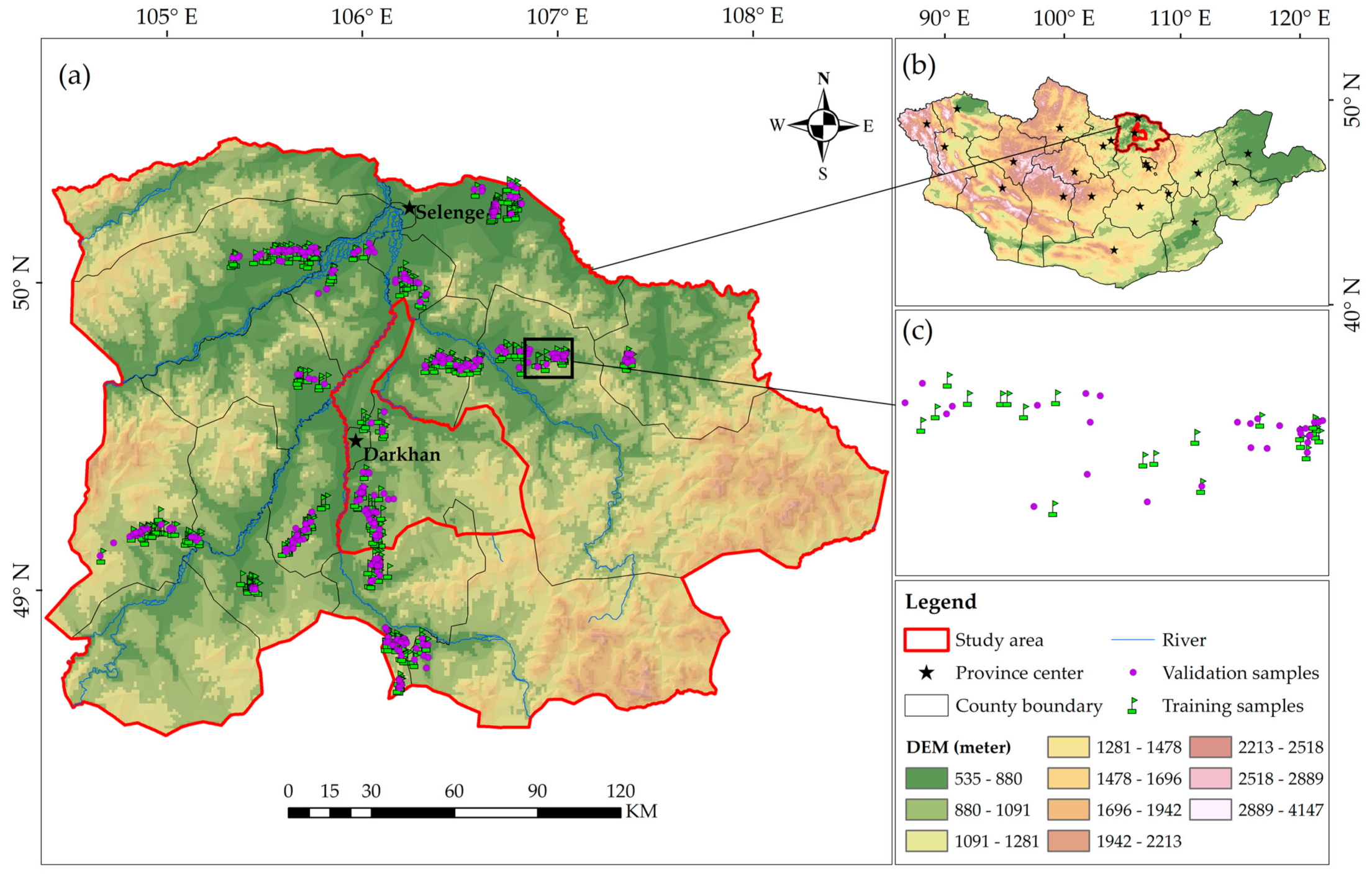

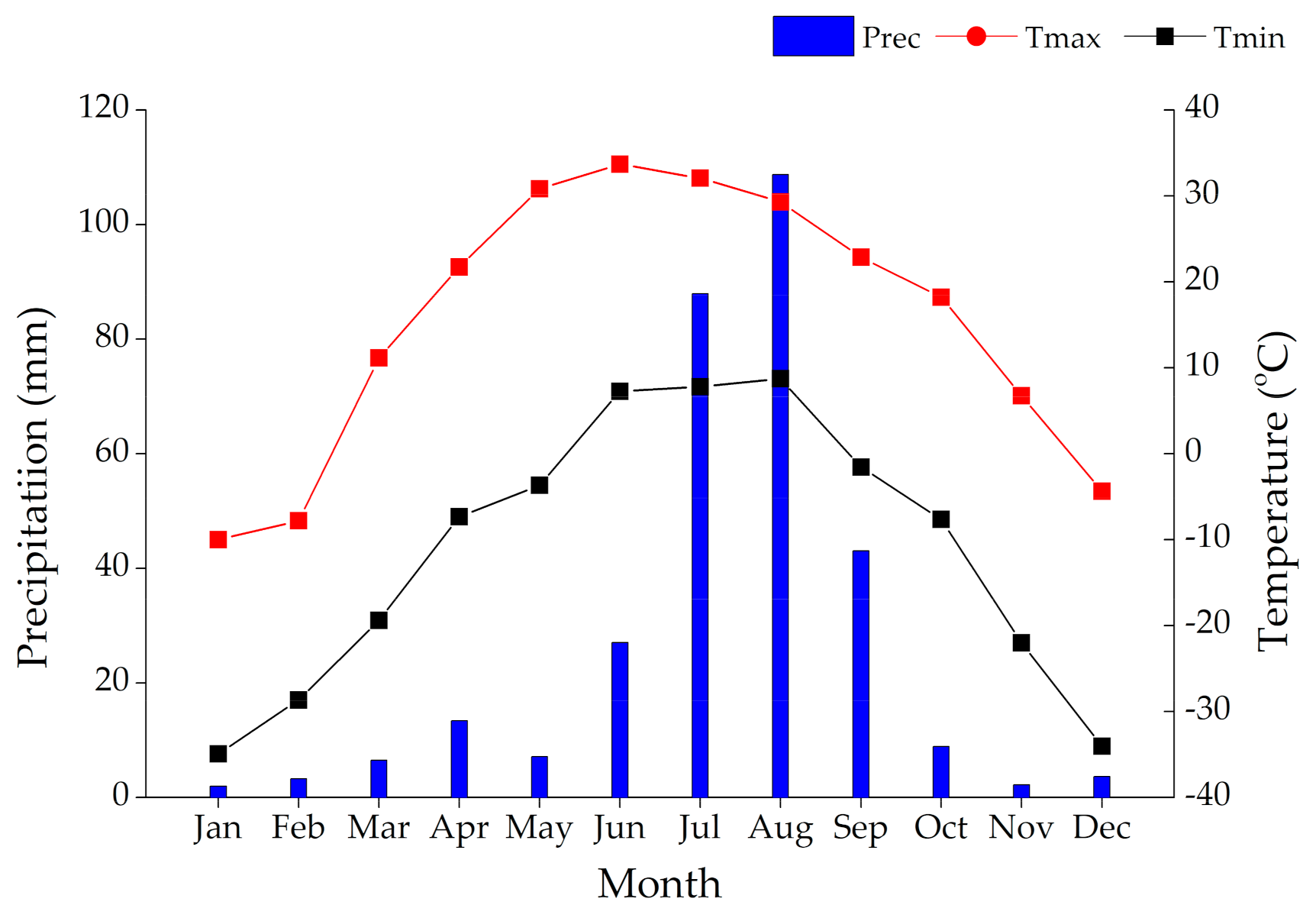

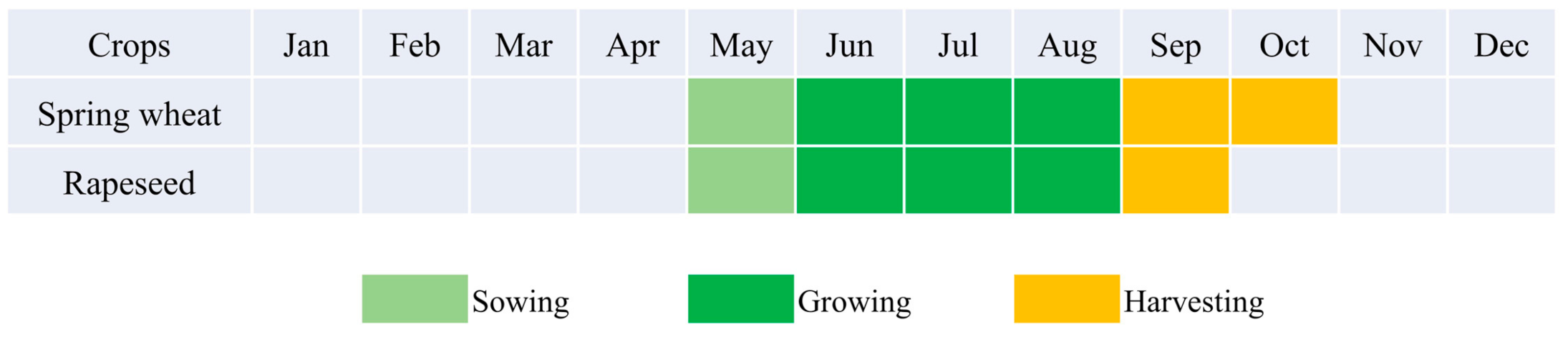

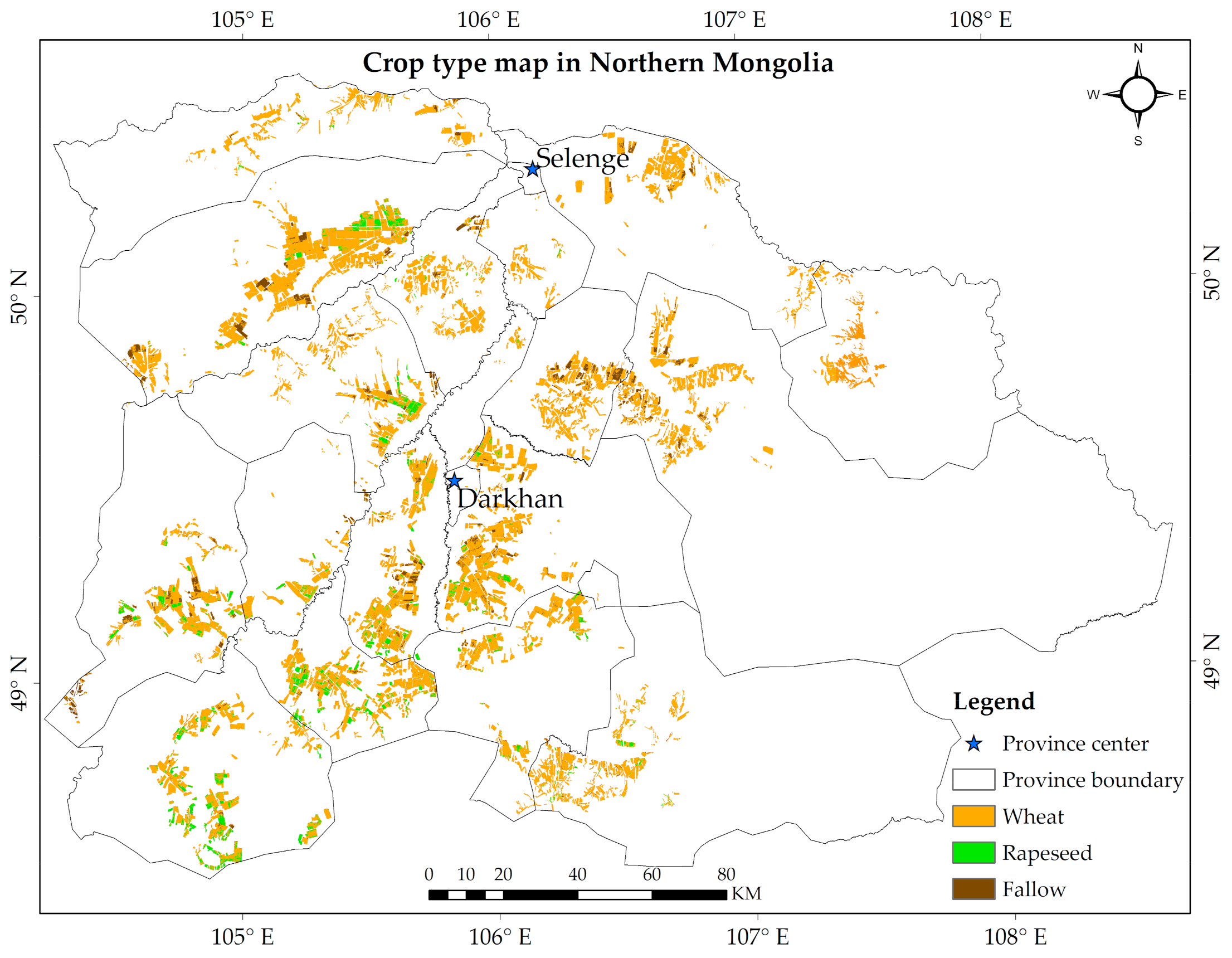

2.1. Study Area

2.2. Collection of Crop Reference Data

2.3. Satellite Data Processing

2.3.1. Sentinel-2 Data

- (1) Cloud-free observations

- (2) Time-series reconstruction of the metrics
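
The two Sentinel-2 steps listed above can be illustrated with a minimal Python sketch. It is not the authors' implementation. It assumes that each observation already carries a boolean cloud flag, and it leaves open how that flag is derived. Flagged observations are discarded, and the remaining values are linearly interpolated onto the 10-day grid (DOY 130 to 300) used in the Appendix A tables. Linear interpolation and the function name `reconstruct` are illustrative choices only. Smoothing filters are a common alternative.

```python
from bisect import bisect_left


def reconstruct(doys, values, cloudy, grid):
    """Drop cloudy observations, then linearly interpolate onto `grid`.

    doys, values, cloudy: parallel lists (day of year, metric value, cloud flag).
    grid: target days of year, e.g. range(130, 301, 10).
    Grid points outside the clear-sky range take the nearest clear value.
    """
    clear = sorted((d, v) for d, v, c in zip(doys, values, cloudy) if not c)
    if not clear:
        raise ValueError("no cloud-free observations")
    xs = [d for d, _ in clear]
    ys = [v for _, v in clear]
    out = []
    for g in grid:
        i = bisect_left(xs, g)  # first clear observation with DOY >= g
        if i == 0:
            out.append(ys[0])  # before the first clear observation
        elif i == len(xs):
            out.append(ys[-1])  # after the last clear observation
        else:
            x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
            out.append(y0 + (y1 - y0) * (g - x0) / (x1 - x0))
    return out


# Hypothetical NDVI-like series; the DOY 136 value is cloud-contaminated
doys = [125, 131, 136, 141, 146]
values = [0.15, 0.18, 0.05, 0.22, 0.25]
cloudy = [False, False, True, False, False]
print(reconstruct(doys, values, cloudy, [130, 140]))
```

At the series ends, the sketch holds the nearest clear value constant. This is a conservative choice: it avoids extrapolating a trend past the last cloud-free observation.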

2.3.2. Sentinel-1 Data

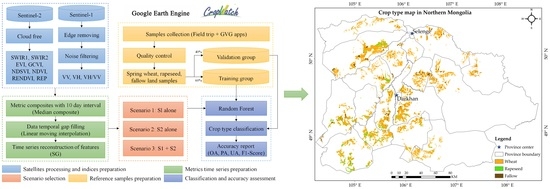

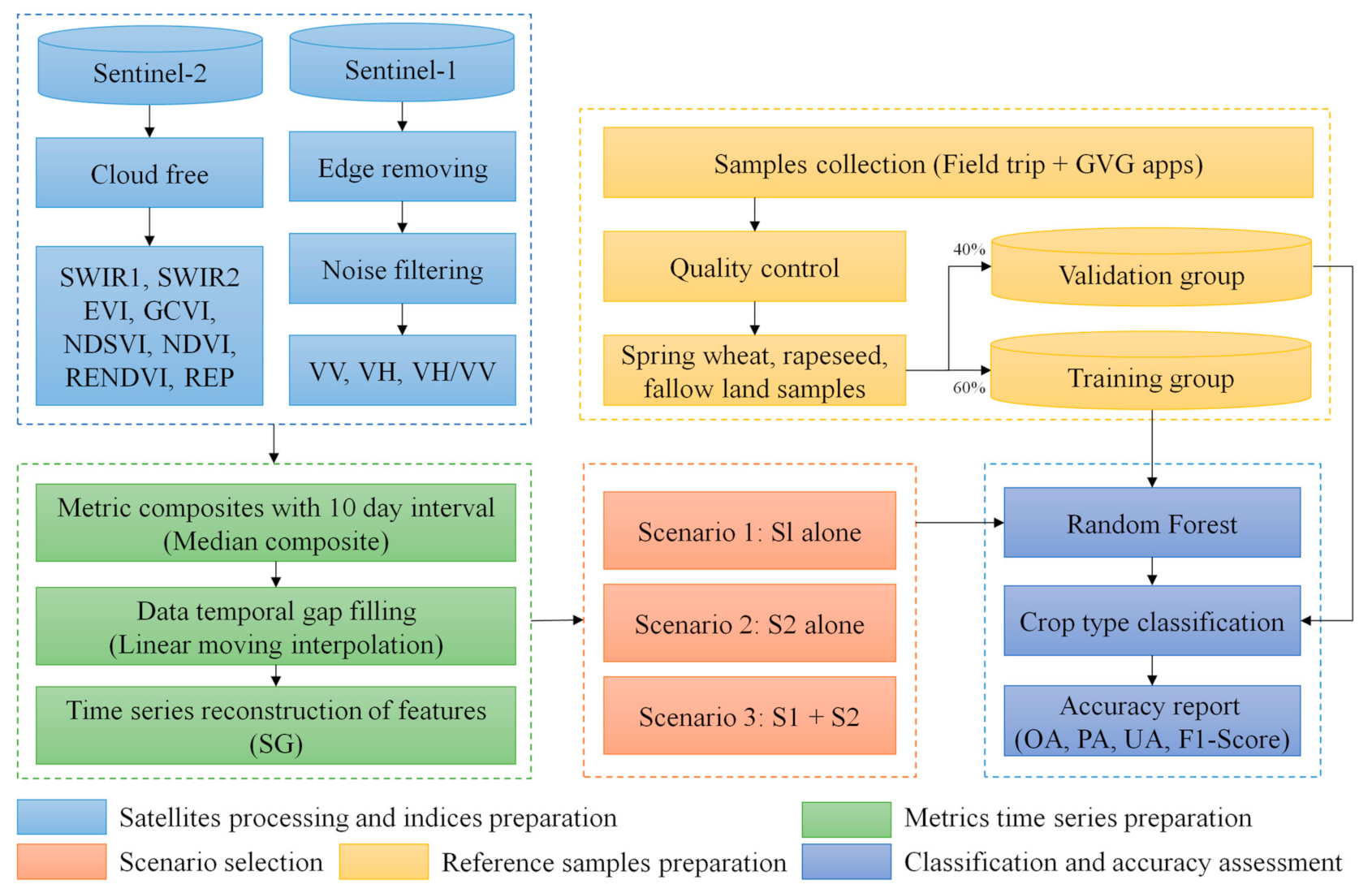
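
The Sentinel-1 features compared later (VV, VH and the VH/VV ratio) are usually handled in decibels. The sketch below shows the conversion and one way to form the cross-polarization ratio. This section does not say whether the ratio was computed from linear or from dB values, so that choice is an assumption here. The sketch forms the ratio in linear power and reports it in dB. This is identical to subtracting the dB values, because 10·log10(VH/VV) = VH_dB − VV_dB. The input values are hypothetical.

```python
import math


def to_db(linear):
    """Convert linear backscatter (sigma0, a power quantity) to decibels."""
    return 10.0 * math.log10(linear)


def to_linear(db):
    """Convert decibels back to linear power."""
    return 10.0 ** (db / 10.0)


def vh_vv_ratio_db(vh_db, vv_db):
    """Cross-polarization ratio VH/VV, formed in linear power and returned in dB."""
    return to_db(to_linear(vh_db) / to_linear(vv_db))


vh, vv = -18.0, -11.0  # hypothetical backscatter values in dB
print(round(vh_vv_ratio_db(vh, vv), 6))  # -7.0
```

Some studies instead divide the dB values directly. That yields a different quantity, so the convention should be stated alongside any reported VH/VV results.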

3. Methodology

3.1. Satellite Processing and Index Preparation
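
The full list of indices is not given in this section. NDVI is used below only as a familiar example of a Sentinel-2 index. For Sentinel-2 MSI, the NIR band is B8 and the red band is B4. NDVI is a normalized ratio, so a scale factor shared by both bands (for example, reflectance stored as integers × 10000) cancels, provided that no additive offset is applied. The reflectance values are hypothetical.

```python
def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); for Sentinel-2, NIR is B8 and Red is B4."""
    denom = nir + red
    if denom == 0:
        return float("nan")  # undefined when both reflectances are zero
    return (nir - red) / denom


# Hypothetical surface reflectances stored as integers scaled by 10000
print(ndvi(3000, 1000))  # 0.5
```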

3.2. Preparation of Metric Time Series

3.3. Scenario Selection
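
The Appendix A tables report accuracy for three scenarios (S1, S2 and S1 + S2) at 10-day steps. The sketch below shows one way to assemble a parcel's feature vector for a given scenario and date. It assumes a cumulative time window, so that each date uses every grid date from the start of the series up to that date. This assumption and the names `build_features`, `VH` and `NDVI` are hypothetical illustrations, not a description of the authors' code.

```python
def build_features(s1, s2, scenario, cutoff_doy):
    """Concatenate time-series features for one parcel.

    s1, s2: {feature_name: {doy: value}} for Sentinel-1 and Sentinel-2 metrics.
    scenario: "S1", "S2" or "S1+S2".
    cutoff_doy: keep dates up to and including this day of year
        (assumed cumulative window starting at the first grid date).
    Returns (names, values) in a stable order: source, feature name, DOY.
    """
    sources = {"S1": [s1], "S2": [s2], "S1+S2": [s1, s2]}[scenario]
    names, values = [], []
    for source in sources:
        for feature in sorted(source):
            for doy in sorted(source[feature]):
                if doy <= cutoff_doy:
                    names.append(f"{feature}_{doy}")
                    values.append(source[feature][doy])
    return names, values


# Hypothetical parcel with one SAR and one optical metric
s1 = {"VH": {130: -20.1, 140: -19.5, 150: -18.7}}
s2 = {"NDVI": {130: 0.15, 140: 0.21, 150: 0.34}}
print(build_features(s1, s2, "S1+S2", 140))
```

A fixed feature order matters because the classifier sees feature vectors by position. Every parcel must therefore be flattened in the same order.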

3.4. Preparation of Reference Samples
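
The reference classes are strongly imbalanced: the validation confusion matrix contains 168 spring wheat, 32 rapeseed and 8 fallow-land samples. A stratified split keeps each class's share similar in the training and validation sets. The split ratio is not given in this section, so the 30% test fraction and the fixed seed below are purely assumptions. The function name is hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.3, seed=42):
    """Return (train_idx, test_idx), splitting each class separately.

    test_fraction=0.3 is a hypothetical choice, not taken from the paper.
    """
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    rng = random.Random(seed)  # fixed seed for reproducibility
    train, test = [], []
    for label in sorted(by_class):
        idx = by_class[label][:]
        rng.shuffle(idx)
        n_test = round(len(idx) * test_fraction)
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return sorted(train), sorted(test)


labels = ["spring wheat"] * 10 + ["rapeseed"] * 10
train, test = stratified_split(labels)
print(len(train), len(test))  # 14 6
```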

3.5. Classification and Accuracy Assessment

3.5.1. Classifier: Random Forest
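
The software and Random Forest settings used in the study are not given in this section. As a stand-in, the sketch below applies scikit-learn's `RandomForestClassifier` to synthetic two-feature data. The feature values, class means and `n_estimators=100` are hypothetical and do not represent real crop signatures. The fitted model also exposes impurity-based feature importances, which are one common way to express a feature's weight contribution.

```python
import random

from sklearn.ensemble import RandomForestClassifier

rng = random.Random(0)


def synthetic_sample(label):
    """Hypothetical two-feature sample; the class means are made up for illustration."""
    if label == "spring wheat":
        return [rng.gauss(0.75, 0.05), rng.gauss(-16.0, 1.0)]
    return [rng.gauss(0.55, 0.05), rng.gauss(-19.0, 1.0)]


labels = ["spring wheat"] * 60 + ["rapeseed"] * 60
X = [synthetic_sample(label) for label in labels]

# n_estimators and random_state are illustrative settings, not the study's.
clf = RandomForestClassifier(n_estimators=100, random_state=0)
clf.fit(X, labels)

print(clf.predict([[0.78, -15.5], [0.52, -19.5]]).tolist())
# Impurity-based importances, normalised to sum to 1
print([round(float(w), 3) for w in clf.feature_importances_])
```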

3.5.2. Accuracy Assessment

4. Results

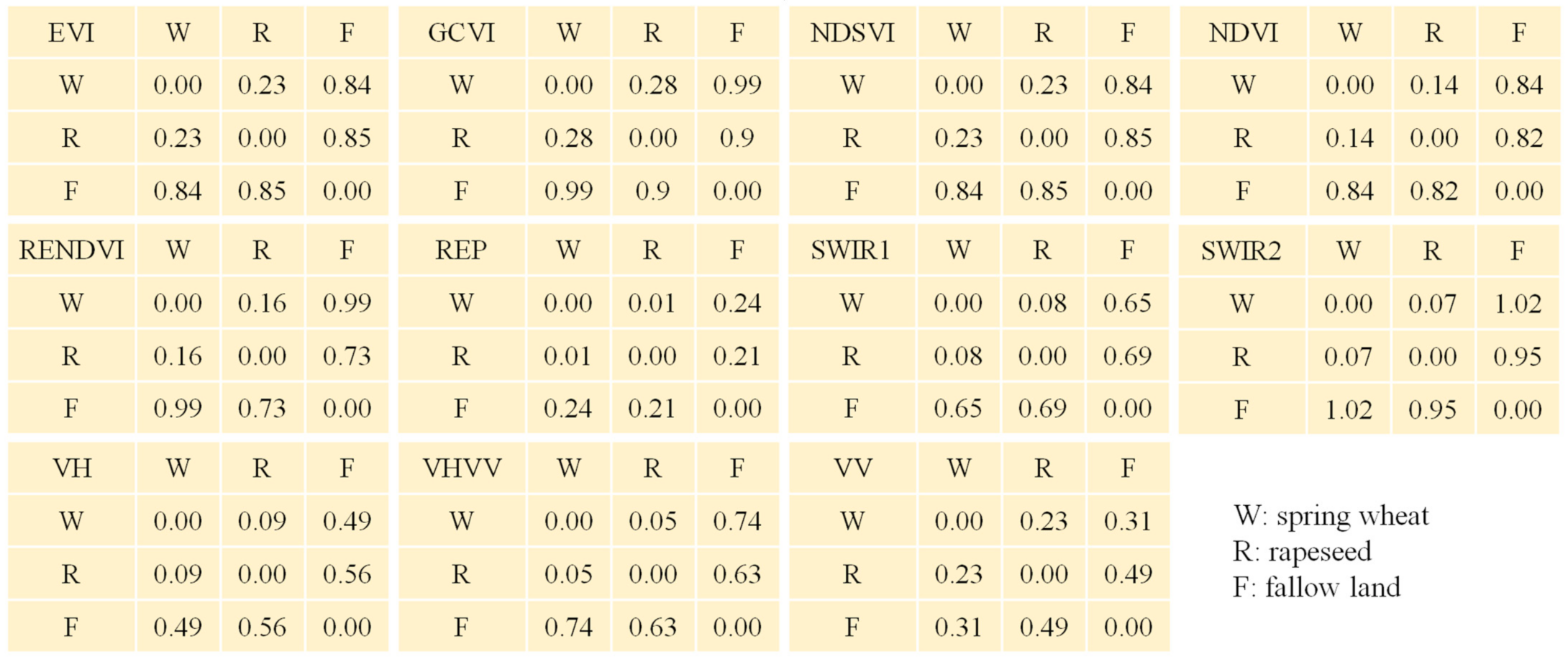

4.1. Temporal Profiles of the Satellite Metrics for Crops

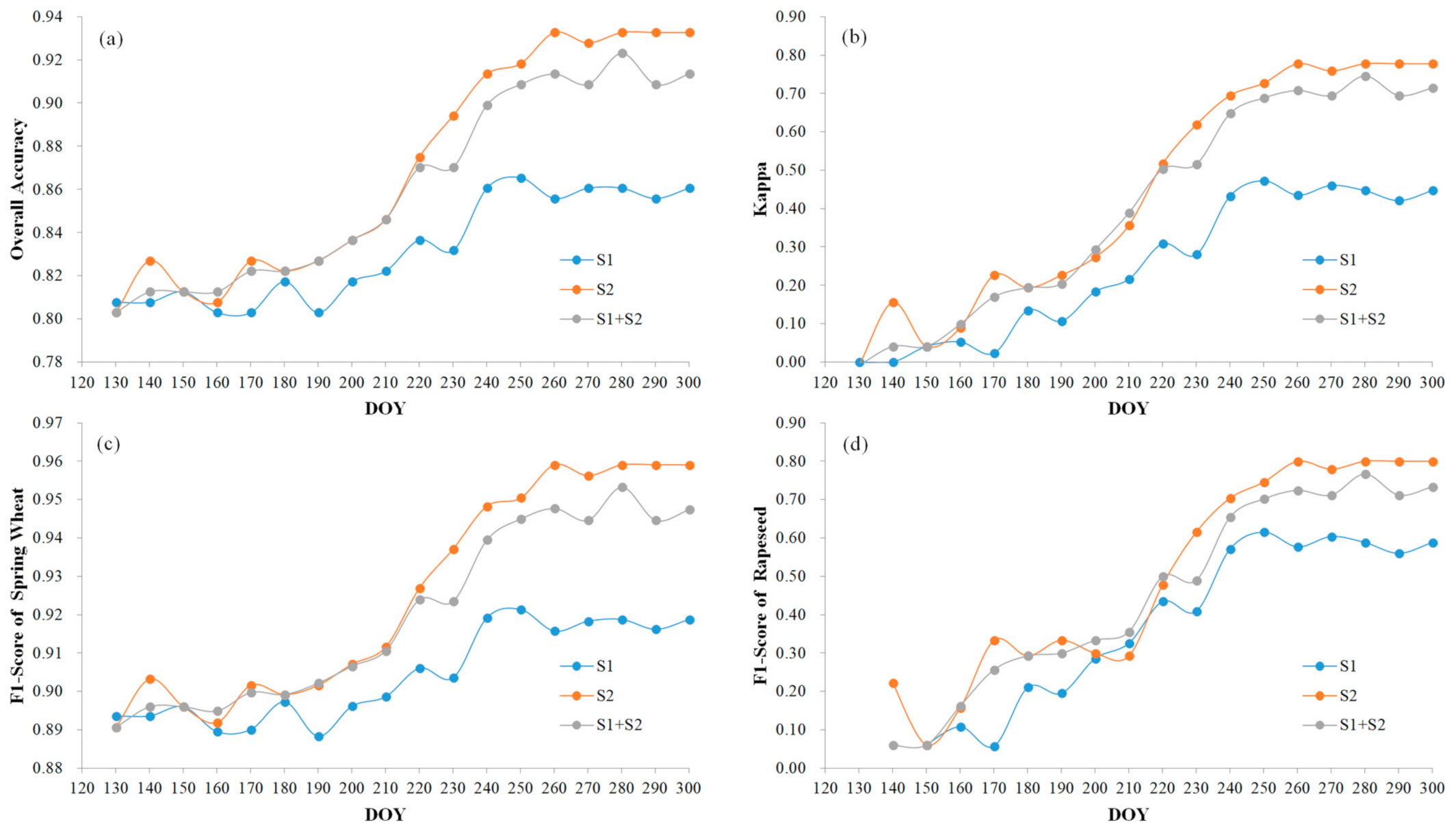

4.2. Accuracy Assessment for Three Scenarios

4.3. Classification Results

4.3.1. Accuracy Assessment

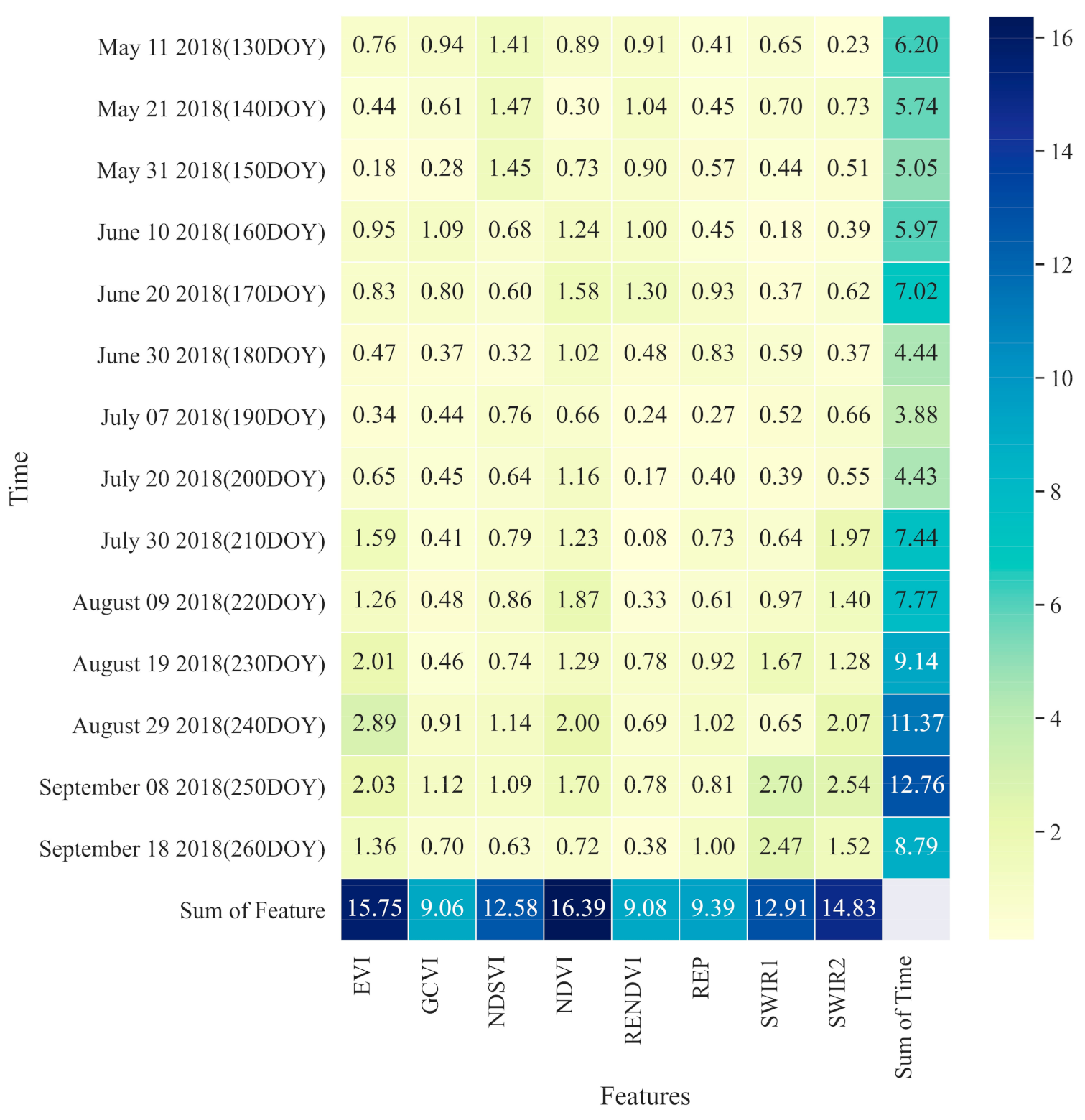

4.3.2. Weight Contribution

4.3.3. Classification Results

5. Discussion

5.1. Classification Accuracy Compared with Other Studies

5.2. Impact of SAR Information on Crop-Type Classification

5.3. Comparison of Percentile Composites with Time-Series Reconstruction

5.4. Shortcomings

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Date | DOY | OA (S1) | OA (S2) | OA (S1 + S2) | Kappa (S1) | Kappa (S2) | Kappa (S1 + S2) |
|---|---|---|---|---|---|---|---|
| 11 May 2018 | 130 | 0.81 | 0.80 | 0.80 | 0.00 | −0.01 | −0.01 |
| 21 May 2018 | 140 | 0.81 | 0.83 | 0.81 | 0.00 | 0.16 | 0.04 |
| 31 May 2018 | 150 | 0.81 | 0.81 | 0.81 | 0.04 | 0.04 | 0.04 |
| 10 June 2018 | 160 | 0.80 | 0.81 | 0.81 | 0.05 | 0.09 | 0.10 |
| 20 June 2018 | 170 | 0.80 | 0.83 | 0.82 | 0.02 | 0.23 | 0.17 |
| 30 June 2018 | 180 | 0.82 | 0.82 | 0.82 | 0.13 | 0.19 | 0.19 |
| 10 July 2018 | 190 | 0.80 | 0.83 | 0.83 | 0.11 | 0.23 | 0.20 |
| 20 July 2018 | 200 | 0.82 | 0.84 | 0.84 | 0.18 | 0.27 | 0.29 |
| 30 July 2018 | 210 | 0.82 | 0.85 | 0.85 | 0.22 | 0.36 | 0.39 |
| 9 August 2018 | 220 | 0.84 | 0.88 | 0.87 | 0.31 | 0.52 | 0.50 |
| 19 August 2018 | 230 | 0.83 | 0.89 | 0.87 | 0.28 | 0.62 | 0.52 |
| 29 August 2018 | 240 | 0.86 | 0.91 | 0.90 | 0.43 | 0.69 | 0.65 |
| 8 September 2018 | 250 | 0.87 | 0.92 | 0.91 | 0.47 | 0.73 | 0.69 |
| 18 September 2018 | 260 | 0.86 | 0.93 | 0.91 | 0.43 | 0.78 | 0.71 |
| 28 September 2018 | 270 | 0.86 | 0.93 | 0.91 | 0.46 | 0.76 | 0.69 |
| 8 October 2018 | 280 | 0.86 | 0.93 | 0.92 | 0.45 | 0.78 | 0.75 |
| 18 October 2018 | 290 | 0.86 | 0.93 | 0.91 | 0.42 | 0.78 | 0.69 |
| 28 October 2018 | 300 | 0.86 | 0.93 | 0.91 | 0.45 | 0.78 | 0.71 |
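
Research question (2) asks for suitable time windows. One simple way to read the table above is to find the earliest date at which each scenario's kappa reaches a chosen level. The 0.70 threshold below is an arbitrary illustration, not a criterion taken from the paper. The kappa values are copied from the table.

```python
# Kappa values copied from the Appendix A table above (one value per DOY).
DOYS = list(range(130, 301, 10))
KAPPA = {
    "S1": [0.00, 0.00, 0.04, 0.05, 0.02, 0.13, 0.11, 0.18, 0.22,
           0.31, 0.28, 0.43, 0.47, 0.43, 0.46, 0.45, 0.42, 0.45],
    "S2": [-0.01, 0.16, 0.04, 0.09, 0.23, 0.19, 0.23, 0.27, 0.36,
           0.52, 0.62, 0.69, 0.73, 0.78, 0.76, 0.78, 0.78, 0.78],
    "S1+S2": [-0.01, 0.04, 0.04, 0.10, 0.17, 0.19, 0.20, 0.29, 0.39,
              0.50, 0.52, 0.65, 0.69, 0.71, 0.69, 0.75, 0.69, 0.71],
}


def earliest_doy(values, threshold):
    """First DOY whose kappa reaches `threshold`, or None if it never does."""
    for doy, k in zip(DOYS, values):
        if k >= threshold:
            return doy
    return None


for name, values in KAPPA.items():
    print(name, earliest_doy(values, 0.70))
# S1 None
# S2 250
# S1+S2 260
```

With this illustrative threshold, S2 alone reaches it at DOY 250 (8 September) and S1 + S2 reaches it at DOY 260, while S1 alone never does.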

| Date | DOY | F1 Spring Wheat (S1) | F1 Spring Wheat (S2) | F1 Spring Wheat (S1 + S2) | F1 Rapeseed (S1) | F1 Rapeseed (S2) | F1 Rapeseed (S1 + S2) |
|---|---|---|---|---|---|---|---|
| 11 May 2018 | 130 | 0.89 | 0.89 | 0.89 | | | |
| 21 May 2018 | 140 | 0.89 | 0.90 | 0.90 | 0.22 | 0.06 | |
| 31 May 2018 | 150 | 0.90 | 0.90 | 0.90 | 0.06 | 0.06 | 0.06 |
| 10 June 2018 | 160 | 0.89 | 0.89 | 0.89 | 0.11 | 0.16 | 0.16 |
| 20 June 2018 | 170 | 0.89 | 0.90 | 0.90 | 0.06 | 0.33 | 0.26 |
| 30 June 2018 | 180 | 0.90 | 0.90 | 0.90 | 0.21 | 0.29 | 0.29 |
| 10 July 2018 | 190 | 0.89 | 0.90 | 0.90 | 0.20 | 0.33 | 0.30 |
| 20 July 2018 | 200 | 0.90 | 0.91 | 0.91 | 0.29 | 0.30 | 0.33 |
| 30 July 2018 | 210 | 0.90 | 0.91 | 0.91 | 0.33 | 0.29 | 0.36 |
| 9 August 2018 | 220 | 0.91 | 0.93 | 0.92 | 0.43 | 0.48 | 0.50 |
| 19 August 2018 | 230 | 0.90 | 0.94 | 0.92 | 0.41 | 0.62 | 0.49 |
| 29 August 2018 | 240 | 0.92 | 0.95 | 0.94 | 0.57 | 0.70 | 0.65 |
| 8 September 2018 | 250 | 0.92 | 0.95 | 0.94 | 0.62 | 0.75 | 0.70 |
| 18 September 2018 | 260 | 0.92 | 0.96 | 0.95 | 0.58 | 0.80 | 0.72 |
| 28 September 2018 | 270 | 0.92 | 0.96 | 0.94 | 0.60 | 0.78 | 0.71 |
| 8 October 2018 | 280 | 0.92 | 0.96 | 0.95 | 0.59 | 0.80 | 0.77 |
| 18 October 2018 | 290 | 0.92 | 0.96 | 0.94 | 0.56 | 0.80 | 0.71 |
| 28 October 2018 | 300 | 0.92 | 0.96 | 0.95 | 0.59 | 0.80 | 0.73 |


| Reference / Classified | Spring Wheat | Rapeseed | Fallow Land | PA |
|---|---|---|---|---|
| Spring wheat | 164 | 4 | 0 | 0.98 |
| Rapeseed | 8 | 24 | 0 | 0.75 |
| Fallow land | 2 | 0 | 6 | 0.75 |
| UA | 0.94 | 0.86 | 1.00 | OA = 0.93, Kappa = 0.78 |

PA: producer's accuracy; UA: user's accuracy; OA: overall accuracy.
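
The accuracy figures in the confusion matrix above can be checked directly. In this matrix, rows are reference classes and columns are classified classes. PA is therefore computed row-wise and UA column-wise. Kappa compares the observed agreement (OA) with the agreement expected by chance, p_e = Σ(row total × column total) / N², using κ = (OA − p_e) / (1 − p_e). The function name below is illustrative.

```python
def accuracy_metrics(cm, classes):
    """OA, Cohen's kappa and per-class (PA, UA, F1) from a square confusion matrix.

    Rows are reference classes and columns are classified classes.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    diag = [cm[i][i] for i in range(k)]
    row_tot = [sum(cm[i]) for i in range(k)]
    col_tot = [sum(cm[r][j] for r in range(k)) for j in range(k)]
    oa = sum(diag) / n
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2  # chance agreement
    kappa = (oa - pe) / (1 - pe)
    per_class = {}
    for i, name in enumerate(classes):
        pa = diag[i] / row_tot[i]  # producer's accuracy (recall)
        ua = diag[i] / col_tot[i]  # user's accuracy (precision)
        f1 = 2 * diag[i] / (row_tot[i] + col_tot[i])  # harmonic mean of PA and UA
        per_class[name] = (round(pa, 2), round(ua, 2), round(f1, 2))
    return round(oa, 2), round(kappa, 2), per_class


cm = [[164, 4, 0],
      [8, 24, 0],
      [2, 0, 6]]
oa, kappa, per_class = accuracy_metrics(cm, ["Spring wheat", "Rapeseed", "Fallow land"])
print(oa, kappa)  # 0.93 0.78
print(per_class)
```

The recomputed F1 scores (0.96 for spring wheat and 0.80 for rapeseed), together with OA = 0.93 and kappa = 0.78, agree with the S2 row of the feature-combination table.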

| Indices | OA | Kappa | F1-Score (Spring Wheat) | F1-Score (Rapeseed) |
|---|---|---|---|---|
| S2 + VV | 0.91 | 0.69 | 0.94 | 0.71 |
| S2 + VH | 0.92 | 0.72 | 0.95 | 0.74 |
| S2 + VH/VV | 0.92 | 0.74 | 0.95 | 0.76 |
| S2 + VH + VV | 0.91 | 0.69 | 0.94 | 0.70 |
| S2 + VH + VH/VV | 0.92 | 0.73 | 0.94 | 0.70 |
| S2 + VV + VH/VV | 0.91 | 0.71 | 0.95 | 0.72 |
| S2 + VV + VH + VH/VV | 0.91 | 0.69 | 0.94 | 0.71 |
| S2 | 0.93 | 0.78 | 0.96 | 0.80 |
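
The table above can be ranked programmatically to see how each added SAR feature changes performance relative to S2 alone. The sketch ranks the combinations by kappa and breaks ties with the rapeseed F1 score. Using rapeseed as the tie-breaker is an illustrative choice, motivated by rapeseed being the harder minority class. The values are copied from the table.

```python
# (OA, Kappa, F1 spring wheat, F1 rapeseed) copied from the table above.
RESULTS = {
    "S2 + VV": (0.91, 0.69, 0.94, 0.71),
    "S2 + VH": (0.92, 0.72, 0.95, 0.74),
    "S2 + VH/VV": (0.92, 0.74, 0.95, 0.76),
    "S2 + VH + VV": (0.91, 0.69, 0.94, 0.70),
    "S2 + VH + VH/VV": (0.92, 0.73, 0.94, 0.70),
    "S2 + VV + VH/VV": (0.91, 0.71, 0.95, 0.72),
    "S2 + VV + VH + VH/VV": (0.91, 0.69, 0.94, 0.71),
    "S2": (0.93, 0.78, 0.96, 0.80),
}


def rank(results):
    """Sort combinations by kappa, breaking ties with the rapeseed F1 score."""
    return sorted(results, key=lambda name: (results[name][1], results[name][3]), reverse=True)


ranking = rank(RESULTS)
print(ranking[:3])  # ['S2', 'S2 + VH/VV', 'S2 + VH + VH/VV']
baseline = RESULTS["S2"][1]
for name in ranking[1:]:
    print(f"{name}: kappa change vs. S2 = {RESULTS[name][1] - baseline:+.2f}")
```

Every SAR combination in this table has a lower kappa than S2 alone. Among them, VH/VV comes closest.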

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


MDPI and ACS Style: Tuvdendorj, B.; Zeng, H.; Wu, B.; Elnashar, A.; Zhang, M.; Tian, F.; Nabil, M.; Nanzad, L.; Bulkhbai, A.; Natsagdorj, N. Performance and the Optimal Integration of Sentinel-1/2 Time-Series Features for Crop Classification in Northern Mongolia. Remote Sens. 2022, 14, 1830. https://doi.org/10.3390/rs14081830

AMA Style: Tuvdendorj B, Zeng H, Wu B, Elnashar A, Zhang M, Tian F, Nabil M, Nanzad L, Bulkhbai A, Natsagdorj N. Performance and the Optimal Integration of Sentinel-1/2 Time-Series Features for Crop Classification in Northern Mongolia. Remote Sensing. 2022; 14(8):1830. https://doi.org/10.3390/rs14081830

Chicago/Turabian Style: Tuvdendorj, Battsetseg, Hongwei Zeng, Bingfang Wu, Abdelrazek Elnashar, Miao Zhang, Fuyou Tian, Mohsen Nabil, Lkhagvadorj Nanzad, Amanjol Bulkhbai, and Natsagsuren Natsagdorj. 2022. "Performance and the Optimal Integration of Sentinel-1/2 Time-Series Features for Crop Classification in Northern Mongolia" Remote Sensing 14, no. 8: 1830. https://doi.org/10.3390/rs14081830

APA Style: Tuvdendorj, B., Zeng, H., Wu, B., Elnashar, A., Zhang, M., Tian, F., Nabil, M., Nanzad, L., Bulkhbai, A., & Natsagdorj, N. (2022). Performance and the Optimal Integration of Sentinel-1/2 Time-Series Features for Crop Classification in Northern Mongolia. Remote Sensing, 14(8), 1830. https://doi.org/10.3390/rs14081830